Abstract

Blackbody radiation, emitted from a furnace and described by a Planck spectrum, contains (on average) an entropy of 3.9 ± 2.5 bits per photon. Since normal physical burning is a unitary process, this amount of entropy is compensated by the same amount of “hidden information” in correlations between the photons. The importance of this result lies in the subsequent extension of this argument to the Hawking radiation from black holes, demonstrating that the assumption of unitarity leads to a perfectly reasonable entropy/information budget for the evaporation process. In order to carry out this calculation, we adopt a variant of the “average subsystem” approach, but consider a tripartite pure system that includes the influence of the rest of the universe, and which allows “young” black holes to still have a non-zero entropy, which we identify with the standard Bekenstein entropy.

1. Introduction

Hawking’s 1976 calculation [1] of the thermal emission from a black hole is often interpreted in terms of Clausius entropy, indicating that, starting from a star in some unknown pure state, after it collapses to a black hole and subsequently evaporates, the system will (at the final stage) be in a mixed state, with a corresponding loss of information. This argument gave rise to the so-called black hole information paradox, and many very different proposals have been mooted to resolve it. For example, the information might be irremediably lost, or stored in remnants or baby universes, or one might have to appeal to new physical phenomena such as firewalls, fuzzballs, gravastars, etc. Most current ideas are based on preserving unitarity, as in standard quantum mechanics, but in some situations this assumption gives rise to non-standard physical effects, as in the case of the Page proposal [2], which motivated, in part, the idea of firewalls [3].

In order to obtain a better understanding of this problem, we first consider a standard unitary process, ordinary thermodynamic burning, in order to quantify exactly the entropy exchanged between the burning matter and the electromagnetic field, which (given unitarity) must be compensated by information hidden in correlations between the photons involved in the process [4]. We use this quite standard result as a starting point to understand what happens to the entropy/information budget in general relativistic black hole evaporation. In this context, we have constructed a specific model in which there is no paradoxical behaviour [5]. We therefore argue that the evaporation process may be relatively benign.

2. Entropy/Information in Blackbody Radiation

We begin by considering the standard thermodynamic process of burning matter, where it is well known that the underlying theory is unitary, at least as long as one uses standard quantum mechanics. Unitarity implies strict conservation of the von Neumann entropy. We will use this fact to correctly understand the entropy budget when we calculate the entropy associated with the blackbody radiation (see footnote 1).

The application of standard statistical mechanics to a furnace with a small hole leads to the notion of blackbody radiation. The reasoning that then gives rise to the Planck spectrum implies some coarse graining (that is, we choose to measure some aspects of the emitted photons and ignore others). In this process, every photon that escapes from the furnace transfers an amount of entropy to the radiation field given by

$$S = \frac{E}{T} = \frac{\hbar\omega}{T}, \tag{1}$$

where $E = \hbar\omega$ is the energy of the photon and $T$ is the temperature of the furnace. This is simply the Clausius definition of entropy. For convenience, from now on, this single-photon entropy will be measured in bits, converted from “physical” entropy by means of the relation

$$\hat S_2 = \frac{S}{k_B \ln 2}. \tag{2}$$

Now take into account the effect of coarse graining on the entropy, considering it in terms of the von Neumann entropy, which is conserved under the evolution of the system. The information concealed in the correlations by the coarse graining process is simply

$$I_{\text{correlations}} = S_{\text{coarse grained}} - S_{\text{before coarse graining}}. \tag{3}$$

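After these preliminary definitions, the next step is to calculate the average energy per photon in blackbody radiation (using the Planck distribution). We see

$$\langle E \rangle = \hbar\langle\omega\rangle = \frac{\pi^4}{30\,\zeta(3)}\, k_B T, \tag{4}$$

where $\zeta(n)$ is the Riemann zeta function. From this expression, it is straightforward to calculate, using the definitions of Equations (1) and (2), the average entropy per blackbody photon. We find

$$\langle \hat S_2 \rangle = \frac{\langle E\rangle}{k_B T \ln 2} = \frac{\pi^4}{30\,\zeta(3)\ln 2}\ \text{bits/photon} \approx 3.897\ \text{bits/photon}. \tag{5}$$

The standard deviation (simply coming from the fact that the Planck spectrum has a finite width) is

$$\sigma_{\hat S_2} = \frac{1}{\ln 2}\sqrt{\frac{12\,\zeta(5)}{\zeta(3)} - \left(\frac{\pi^4}{30\,\zeta(3)}\right)^{2}}\ \text{bits/photon} \approx 2.522\ \text{bits/photon}. \tag{6}$$

Overall, the average entropy per photon in blackbody radiation is [4]:

$$\langle \hat S_2 \rangle = 3.897 \pm 2.522\ \text{bits/photon}. \tag{7}$$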

This expression is relevant when the only thing that we know about the photon is that it was emitted as part of some blackbody spectrum from a furnace at some (possibly unknown) temperature. The result depends only on the shape of the Planck spectrum, the Clausius notion of entropy, and quite ordinary thermodynamic reasoning.
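The numbers above can be checked with a few lines of Python. The following is a minimal sketch, not the authors' own calculation: it assumes that the average is taken over the Planck photon-number distribution $x^2/(e^x-1)$ with $x = E/(k_B T)$, and the helper names `zeta` and `entropy_per_photon_bits` are hypothetical.

```python
from math import log, pi, sqrt


def zeta(s: float, terms: int = 100_000) -> float:
    """Riemann zeta function for s > 1, by direct summation of the series."""
    return sum(k ** -s for k in range(1, terms + 1))


def entropy_per_photon_bits() -> tuple[float, float]:
    """Mean and standard deviation of E / (k_B T ln 2) over a Planck spectrum."""
    # Moments of x = E / (k_B T), weighted by the photon number density
    # x^2 / (e^x - 1):  <x^n> = Gamma(n + 3) zeta(n + 3) / (Gamma(3) zeta(3)).
    mean_x = pi ** 4 / (30.0 * zeta(3))   # uses zeta(4) = pi^4 / 90
    mean_x2 = 12.0 * zeta(5) / zeta(3)    # Gamma(5) / Gamma(3) = 12
    std_x = sqrt(mean_x2 - mean_x ** 2)
    # Dividing by ln 2 converts nats to bits.
    return mean_x / log(2), std_x / log(2)


mean_bits, std_bits = entropy_per_photon_bits()
print(f"entropy per photon: {mean_bits:.3f} +/- {std_bits:.3f} bits")
```

Note that the temperature drops out entirely, which is why the result applies to a furnace at any (possibly unknown) temperature.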

Since we know that the underlying physics is unitary, this entropy must be compensated with an equal quantity of information. That information would be hidden in the photon–photon correlations that we did not take into account in our coarse graining procedure. The fact that even a standard unitary burning process has a precisely quantifiable entropy/information budget should not really come as a surprise, but it certainly does not seem to be a well-appreciated facet of quantum statistical mechanics.

3. Hawking Evaporation of Black Holes

We now apply the previous result to the entropy budget associated with the evaporation of a general relativistic black hole. In order to develop our study, we assume the existence of trapping or apparent horizons, which are enough for the Hawking radiation to exist [6,7], but which also allow the information to escape from the interior of the black hole. (Event horizons are not necessary for Hawking evaporation, and simply lead to unnecessary confusion.) Moreover, we take the evaporation process to be complete and the whole process to be unitary; we shall then check whether this assumption is consistent, and whether the information comes out throughout the Hawking radiation process. Specifically, the question is: how is entropy encoded in this process? Does the information emerge continuously or only at late stages of the evaporation process? (For instance, very late stages of the evaporation process, when the black hole mass, curvature, and Hawking temperature all become Planck scale, might best be viewed as a particle cascade; see for instance [8].) In order to understand the entropy/information budget, we first calculate the classical thermodynamic entropy and then the quantum (entanglement) entropy, in order to compare and contrast them.

3.1. Thermodynamic Clausius Entropy in the Hawking Flux

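The Bekenstein entropy lost by a Schwarzschild black hole (per emitted quantum) is, in magnitude,

$$\left|\frac{\mathrm{d}S_{\text{Bekenstein}}}{\mathrm{d}N}\right| = \frac{\mathrm{d}S_{\text{Bekenstein}}}{\mathrm{d}M}\left|\frac{\mathrm{d}M}{\mathrm{d}N}\right| = \frac{8\pi k_B G M}{\hbar c}\,\frac{\hbar\langle\omega\rangle}{c^{2}} = \frac{\hbar\langle\omega\rangle}{T_H} = \frac{\pi^4}{30\,\zeta(3)}\,k_B, \tag{8}$$

where $M$ is the black hole mass, $N$ is the number of emitted quanta, $S_{\text{Bekenstein}} = 4\pi k_B G M^2/(\hbar c)$, and $T_H = \hbar c^3/(8\pi G M k_B)$ is the Hawking temperature. The last step treats the Hawking flux as a Planck spectrum at temperature $T_H$.

In a similar way, the Clausius entropy gain of the external radiation field (per emitted quantum), that is, the entropy gain of the Hawking flux, is

$$\frac{\mathrm{d}S_{\text{Clausius}}}{\mathrm{d}N} = \frac{\mathrm{d}E/\mathrm{d}N}{T_H} = \frac{\hbar\langle\omega\rangle}{T_H} = \frac{\pi^4}{30\,\zeta(3)}\,k_B. \tag{9}$$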

Both entropies are the same (as they certainly should be), and their measure in bits is exactly the quantity that we calculated previously in the standard thermodynamic case [in Equation (7)], approximately 3.9 bits per emitted quantum. So, it can be seen that throughout the whole evaporation process, semi-classically, we have:

$$S_{\text{Bekenstein}}(t) + S_{\text{Clausius}}(t) = S_{\text{Bekenstein},0}. \tag{10}$$

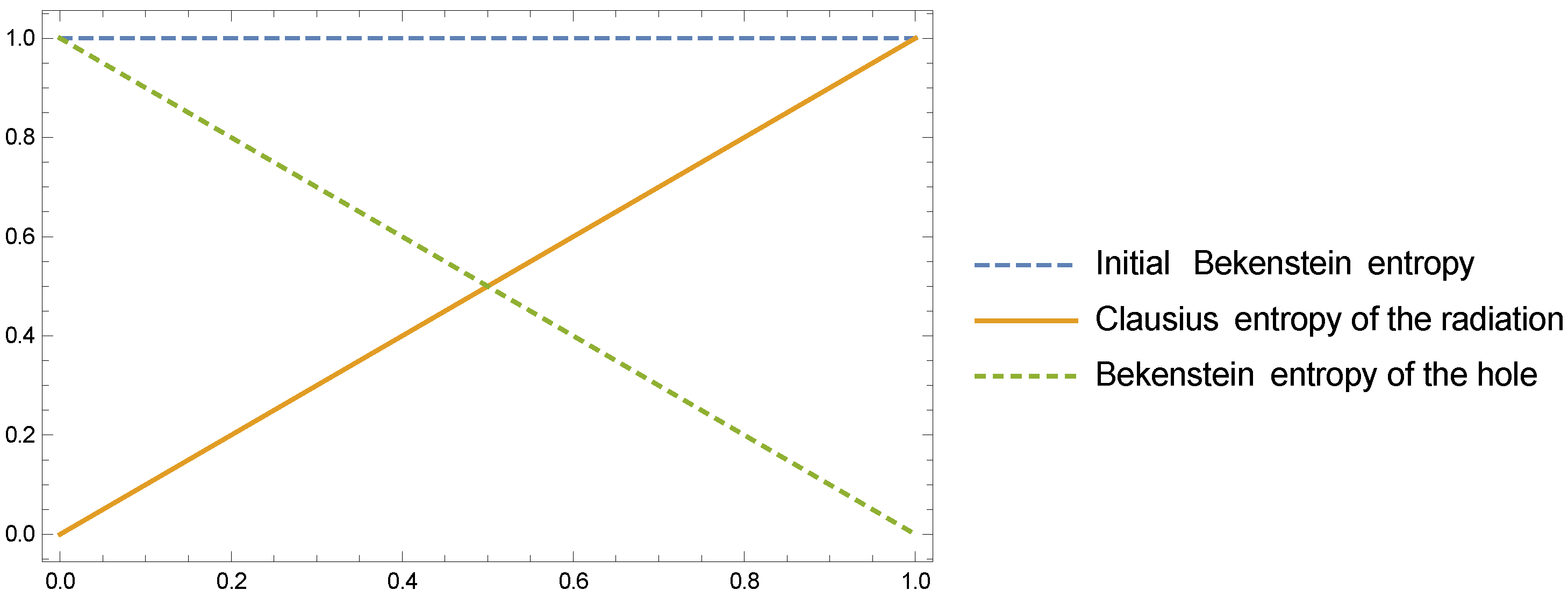

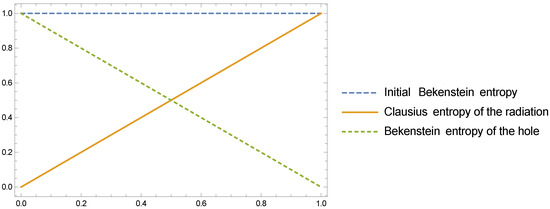

This is represented in Figure 1, showing that semi-classically everything holds together very nicely. (This should not be at all surprising since the normalization constant in the Bekenstein entropy was originally derived by integrating up the Clausius entropy in the Hawking flux.)

Figure 1.

Clausius (thermodynamic) entropy balance: As the black hole Bekenstein entropy (defined in terms of the area of the horizon) decreases, the Clausius entropy of the radiation increases, to keep total entropy constant and equal to the initial Bekenstein entropy.
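The balance shown in Figure 1 rests on the identity $\mathrm{d}S_{\text{Bekenstein}} = \mathrm{d}E/T_H$ for a Schwarzschild black hole. The sketch below checks this numerically for one illustrative mass. It assumes the standard Schwarzschild expressions for the Bekenstein entropy and Hawking temperature and uses CODATA SI constants; the function names and the choice of a solar-mass black hole are illustrative assumptions.

```python
from math import pi

HBAR = 1.054571817e-34  # reduced Planck constant, J s
C = 2.99792458e8        # speed of light, m / s
G = 6.67430e-11         # Newton constant, m^3 kg^-1 s^-2
K_B = 1.380649e-23      # Boltzmann constant, J / K


def hawking_temperature(mass_kg: float) -> float:
    """Hawking temperature of a Schwarzschild black hole, in kelvin."""
    return HBAR * C ** 3 / (8.0 * pi * G * mass_kg * K_B)


def bekenstein_entropy(mass_kg: float) -> float:
    """Bekenstein entropy of a Schwarzschild black hole, in J / K."""
    return 4.0 * pi * K_B * G * mass_kg ** 2 / (HBAR * C)


def entropy_change_per_unit_energy(mass_kg: float, rel_step: float = 1e-6) -> float:
    """Central finite difference of S_Bekenstein with respect to E = M c^2."""
    dm = rel_step * mass_kg
    ds = bekenstein_entropy(mass_kg + dm) - bekenstein_entropy(mass_kg - dm)
    return ds / (2.0 * dm * C ** 2)


SOLAR_MASS = 1.989e30  # kg, illustrative choice
ratio = entropy_change_per_unit_energy(SOLAR_MASS) * hawking_temperature(SOLAR_MASS)
print(f"T_H * dS_Bekenstein/dE = {ratio:.9f}")
```

A ratio of one means that every joule radiated away removes exactly as much Bekenstein entropy from the hole as the Clausius entropy it deposits in the radiation field.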

3.2. Entanglement Entropy in the Hawking Flux

In order to calculate the entanglement (von Neumann) entropy, we have adopted a variant of a method developed by Page [9] that allows us to calculate the average subsystem entropy of multipartite systems. We take the Hilbert space of the total system to split as the tensor product of the Hilbert spaces of two subsystems A and B (for now considering only a bipartite system), $\mathcal{H}_{AB} = \mathcal{H}_A \otimes \mathcal{H}_B$, and also take the total system, with density matrix $\rho_{AB}$, to be in a pure state. We can then define the subsystem density matrix of A as $\rho_A = \mathrm{tr}_B(\rho_{AB})$, and $\rho_B = \mathrm{tr}_A(\rho_{AB})$ for subsystem B. Each subsystem has an associated von Neumann entropy, $\hat S_A = -\mathrm{tr}(\rho_A \ln \rho_A)$, and similarly $\hat S_B = -\mathrm{tr}(\rho_B \ln \rho_B)$. Indeed, because the total system is in a pure state, $\hat S_A = \hat S_B$.

Taking a uniform average over all possible pure states of the total system (see footnote 2), the central result that Page obtained was that the average subsystem entropy satisfies

$$\langle \hat S_A \rangle = \langle \hat S_B \rangle \lesssim \ln \min\{n_A, n_B\}, \tag{11}$$

where $n_A$ and $n_B$ are the dimensions of the Hilbert spaces corresponding to each subsystem, and $\min\{n_A, n_B\}$ is the smaller of the two dimensions. Therefore, the subsystem entropy is very close to its maximum value, so that each subsystem is very close to being in a maximally mixed state. By combining the exact result derived by Sen [10] with the discussion carried out in reference [5], we can provide a strict limit on this entropy, given by

$$\ln \min\{n_A, n_B\} - \tfrac{1}{2} < \langle \hat S_A \rangle \le \ln \min\{n_A, n_B\}, \tag{12}$$

so the average subsystem entropy is within $\tfrac{1}{2}$ nat of its maximum possible value.
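The closeness of the average subsystem entropy to its maximum can be illustrated numerically. The sketch below assumes the closed form conjectured by Page and proved by Sen, $\langle \hat S_{m,n}\rangle = \sum_{k=n+1}^{mn} 1/k - (m-1)/(2n)$ for $m \le n$, and evaluates it by brute-force summation; the function name and the sample dimensions are illustrative choices.

```python
from math import log


def average_subsystem_entropy(n_a: int, n_b: int) -> float:
    """Average entropy (in nats) of either subsystem of a random pure state."""
    m, n = min(n_a, n_b), max(n_a, n_b)
    harmonic_tail = sum(1.0 / k for k in range(n + 1, m * n + 1))
    return harmonic_tail - (m - 1) / (2.0 * n)


for n_a, n_b in [(2, 2), (4, 64), (16, 16), (32, 8)]:
    s = average_subsystem_entropy(n_a, n_b)
    s_max = log(min(n_a, n_b))
    print(f"n_A={n_a:3d} n_B={n_b:3d}  <S>={s:.4f}  "
          f"ln(min)={s_max:.4f}  deficit={s_max - s:.4f}")
```

The deficit is largest when the two subsystems have equal dimension, where it approaches half a nat from below, and it shrinks rapidly once one subsystem is much larger than the other.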

4. Bipartite Entanglement

The specific model considered by Page [2] was a global system comprised of one subsystem that corresponds to the Hawking radiation and another subsystem that corresponds to the black hole, for which the Hilbert spaces are given, respectively, by $\mathcal{H}_R$ and $\mathcal{H}_B$, with dimensions $n_R$ and $n_B$. In this bipartite system, initially, before the evaporation of the black hole starts, there is not yet any Hawking radiation. Then, the Hilbert space $\mathcal{H}_R$ is trivial, but the Hilbert space $\mathcal{H}_B$ is enormous. (However, note that one has to assume that the total system is in a pure state to apply Page’s argument.) As the subsystem entropy is governed by the minimum Hilbert space dimension, one has $\langle \hat S_0 \rangle = \ln n_{R0} = \ln 1 = 0$, where the subscript $0$ indicates the initial state, $t = 0$.

Conversely, once the evaporation is completed, there will be no black hole, so its Hilbert space is trivial, and it is the Hawking radiation subsystem that has an enormous Hilbert space dimension, giving $\langle \hat S_\infty \rangle = \ln n_{B\infty} = \ln 1 = 0$, where now the subscript $\infty$ indicates the final state, considered when $t \to \infty$.

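In order to calculate the entropy at intermediate stages, it is necessary to use the fact that the evolution is unitary, so that the total Hilbert space dimension is constant, $n_B(t)\, n_R(t) = n_{B0} = n_{R\infty}$, where $n_B$ and $n_R$ are the Hilbert space dimensions of the black hole and Hawking radiation subsystems. Under these conditions, the average subsystem entropy is given in terms of

$$\langle \hat S_{n_B, n_R} \rangle \lesssim \ln \min\{n_B, n_R\} = \ln \min\left\{n_B, \frac{n_{B0}}{n_B}\right\}. \tag{13}$$

It is easy to find the maximum value of the average subsystem entropy, which is reached when $n_B = n_R = \sqrt{n_{B0}}$, at which stage it takes the value

$$\langle \hat S_{n_B, n_R} \rangle \lesssim \ln \sqrt{n_{B0}} = \tfrac{1}{2} \ln n_{B0}. \tag{14}$$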

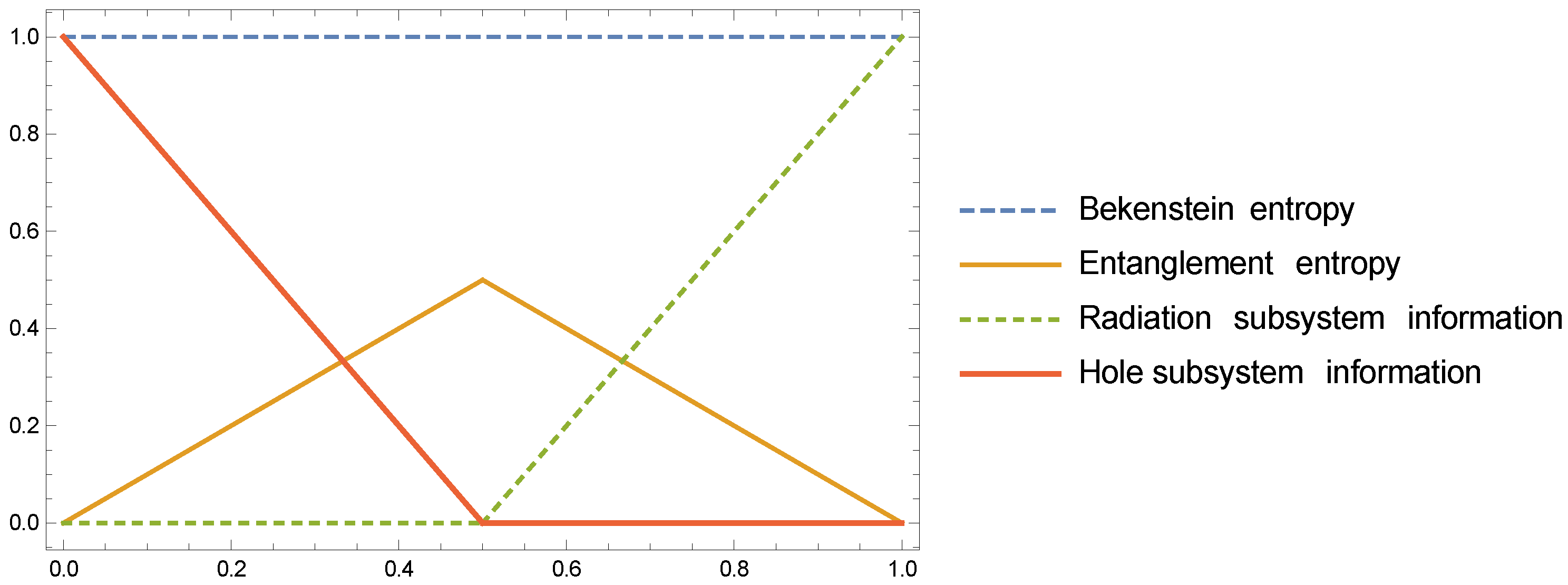

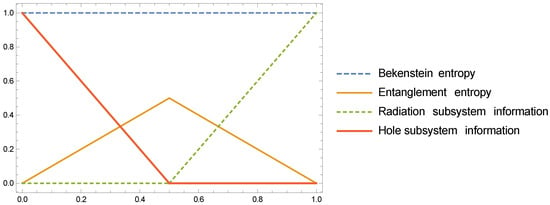

The time at which the black hole has lost half of its entropy is called the “Page time”. The evolution of this subsystem entropy is shown in Figure 2. The so-called “Page curve” [2] is the “entanglement entropy” curve.

Figure 2.

Page curves for entanglement entropy and asymmetric subsystem information: These are derived under the “average subsystem” assumption applied to a pure-state bipartite system consisting only of (black hole) plus the (Hawking radiation).
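A toy version of the Page curve can be generated directly from the average subsystem entropy. The sketch below is an illustration under stated assumptions rather than the construction used for Figure 2: it models the system as a hypothetical register of 16 qubits that are handed over one at a time from the black hole to the radiation (so that $n_B n_R = 2^{16}$ throughout), and it uses the Page/Sen closed form for the average entropy.

```python
from math import log


def average_subsystem_entropy(n_a: int, n_b: int) -> float:
    """Page/Sen average entropy (in nats) for a random bipartite pure state."""
    m, n = min(n_a, n_b), max(n_a, n_b)
    return sum(1.0 / k for k in range(n + 1, m * n + 1)) - (m - 1) / (2.0 * n)


TOTAL_QUBITS = 16  # hypothetical toy size: n_B * n_R = 2**16 at every step

page_curve = []
for emitted in range(TOTAL_QUBITS + 1):
    n_r = 2 ** emitted                    # radiation Hilbert space dimension
    n_b = 2 ** (TOTAL_QUBITS - emitted)   # black hole Hilbert space dimension
    page_curve.append(average_subsystem_entropy(n_b, n_r))

# Step at which the entanglement entropy peaks (the toy "Page time").
page_step = max(range(len(page_curve)), key=page_curve.__getitem__)
half_max = 0.5 * TOTAL_QUBITS * log(2)

for emitted, s in enumerate(page_curve):
    print(f"emitted qubits = {emitted:2d}   <S> = {s:.4f} nats")
print(f"peak at step {page_step}, (1/2) ln n_B0 = {half_max:.4f} nats")
```

The curve starts and ends at zero and peaks when the two Hilbert spaces have equal dimension, with a peak value that lies within half a nat of $\tfrac{1}{2}\ln n_{B0}$.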

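Page also calculated the (averaged) asymmetric subsystem information, given by the expressions [2]

$$\langle \hat I_R \rangle = \ln n_R - \langle \hat S_R \rangle, \qquad \langle \hat I_B \rangle = \ln n_B - \langle \hat S_B \rangle, \tag{15}$$

which are also represented in Figure 2. These are the curves labelled “radiation subsystem information” and “hole subsystem information”. In order to get a better understanding of the information budget, we have calculated the mutual information of the subsystems, given by

$$\hat I_{B:R} = \hat S_B + \hat S_R - \hat S_{BR}. \tag{16}$$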

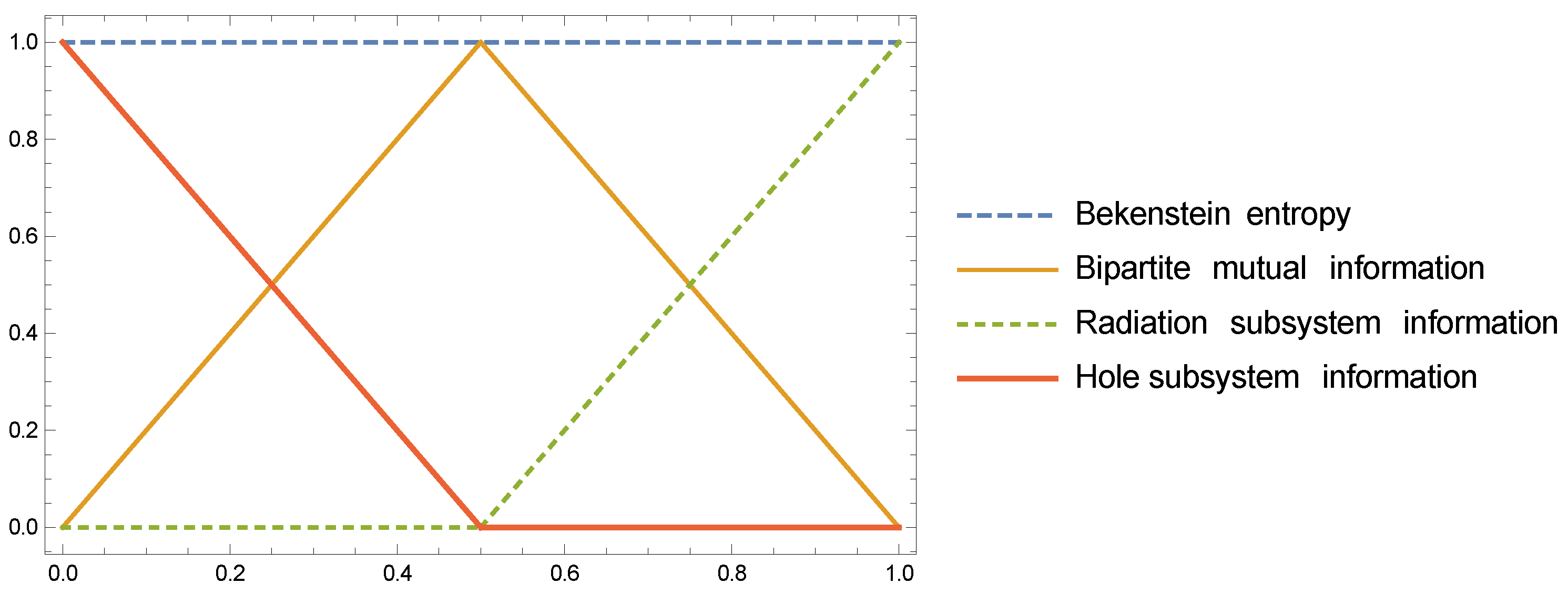

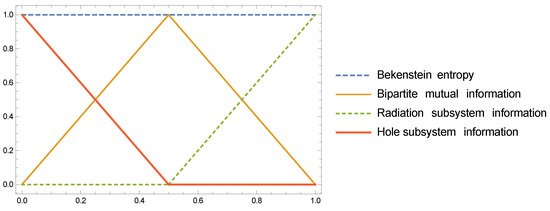

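In this bipartite system, where the total state is pure (so that $\hat S_{BR} = 0$ and $\hat S_B = \hat S_R$), the mutual information can be expressed as

$$\hat I_{B:R} = \hat S_B + \hat S_R = 2 \hat S_B = 2 \hat S_R. \tag{17}$$

We have found that when we apply the “average subsystem” process to the mutual information, and combine it with the asymmetric subsystem information, the following “sum rule” is satisfied:

$$\langle \hat I_{B:R} \rangle + \langle \hat I_B \rangle + \langle \hat I_R \rangle = \ln (n_B\, n_R) = \ln n_{B0}. \tag{18}$$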

This sum rule is represented in Figure 3.

Figure 3.

Modified Page curves for the bipartite mutual information and the asymmetric subsystem information: These are derived under the “average subsystem” assumption applied to a pure-state bipartite system consisting only of (black hole) plus the (Hawking radiation).
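The bipartite information budget can be tabulated in the same toy setting. The sketch below assumes Page's definition of subsystem information as the deficit $\ln n - \langle\hat S\rangle$, the pure-state form $2\langle\hat S\rangle$ of the mutual information, and a hypothetical 12-qubit register with $n_B n_R = 2^{12}$; it is meant only to show how the three contributions trade off while their sum stays fixed.

```python
from math import log


def average_subsystem_entropy(n_a: int, n_b: int) -> float:
    """Page/Sen average entropy (in nats) for a random bipartite pure state."""
    m, n = min(n_a, n_b), max(n_a, n_b)
    return sum(1.0 / k for k in range(n + 1, m * n + 1)) - (m - 1) / (2.0 * n)


TOTAL_QUBITS = 12  # hypothetical toy size
ln_total = TOTAL_QUBITS * log(2)

budget = []  # rows of (hole information, radiation information, mutual information)
for emitted in range(TOTAL_QUBITS + 1):
    n_r, n_b = 2 ** emitted, 2 ** (TOTAL_QUBITS - emitted)
    s = average_subsystem_entropy(n_b, n_r)
    info_hole = log(n_b) - s        # information still in the hole subsystem
    info_radiation = log(n_r) - s   # information in the radiation subsystem
    mutual = 2.0 * s                # pure state: I = S_B + S_R = 2 S
    budget.append((info_hole, info_radiation, mutual))
    print(f"{emitted:2d}  I_B={info_hole:7.4f}  I_R={info_radiation:7.4f}  "
          f"I_B:R={mutual:7.4f}  sum={info_hole + info_radiation + mutual:.4f}")
```

Before the Page time the radiation subsystem information is nearly zero and the budget is shared between the hole and the correlations; after the Page time the roles of hole and radiation are exchanged.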

The Page curve underlies much of the present discussion about the “information paradox”. The main result implies that the black hole subsystem is maximally entangled with the radiation subsystem. However, at the same time, if we sub-divide the Hawking radiation subsystem into early and late radiation (respectively, before and after the Page time), these two subsystems would also be maximally entangled with each other, and also with the black hole subsystem. The problem is that, due to the monogamy of entanglement, this is not possible, and this was one of the motivations for the proposal of firewalls [3]. Nevertheless, we argue that this model misses much of the relevant physics.

There are some unacceptable aspects of the standard argument. For instance, the standard argument assumes that the initial black hole (after formation but before any radiation is emitted) is in a pure state, so that the initial subsystem entropy vanishes. That assertion is in tension with the idea of relating the initial von Neumann entropy to the Bekenstein entropy of the black hole. That the Bekenstein entropy is a coarse-grained von Neumann entropy characterizing the number of ways in which the black hole could have formed is an old idea going back to the 1970s. Quantitatively, this idea was first formalized by Bombelli et al. [11], and a few years later was independently explored by Srednicki [12], both groups calculating the scaling of entanglement entropy with area (see also the reviews [13,14], and the recent article on coarse graining [15]). We propose that these problematic issues may be related to the consideration of an over-simplified (black hole) + (radiation) “closed box” system that ignores the environment. That is, we argue that we should instead consider a tripartite system, in which we explicitly add the rest of the universe (the environment), expecting physically more reasonable behaviour for the entropy budget.

5. Tripartite Entanglement

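We now consider a tripartite system consisting of three subsystems: the black hole (B), the Hawking radiation (R), and the rest of the universe, or environment (E). The Hilbert space now splits in the form $\mathcal{H}_{BRE} = \mathcal{H}_B \otimes \mathcal{H}_R \otimes \mathcal{H}_E$. Since we assume that the entire universe is in a pure state ($\hat S_{BRE} = 0$; see footnote 3), the entropies of the subsystems are related by $\hat S_B = \hat S_{RE}$, $\hat S_R = \hat S_{BE}$, and $\hat S_E = \hat S_{BR}$. In this case, the initial average subsystem entropies (before the evaporation starts, when $n_{R0} = 1$) are $\langle \hat S_{B0} \rangle \approx \ln n_{B0}$ (for a sufficiently large environment) and $\langle \hat S_{R0} \rangle = 0$. It is important to realise that after black hole formation but before evaporation starts one has $\langle \hat S_{B0} \rangle = \hat S_{\text{Bekenstein},0} \neq 0$.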

Starting from any stellar object, collapse and horizon formation (be it an apparent horizon, trapping horizon, event horizon, or some notion of approximate horizon) is an extremely dramatic coarse-graining process. We emphasize (since we have seen that this point causes considerable confusion) that the end result of the collapse process is that the entropy of the newly formed black hole is the Bekenstein entropy associated with the horizon. Indeed, the Bekenstein entropy is the entropy associated with all possible ways in which the black hole could have been formed, not the entropy of the original stellar object that underwent collapse. That stellar entropy is unknown and unknowable after black hole formation, this merely being one side effect of the “no hair” theorems.

(Indeed, if one denies the applicability of Bekenstein entropy to the newly created black hole, then it is absolutely no surprise that one rapidly ties oneself up in logical knots when considering the Hawking emission process.)

More precisely: One can appeal to Bekenstein’s original papers to get (entropy) ∝ (area), and then fix the normalization constant using Hawking’s original papers [16,17]. Alternatively, if one insists on working only with von Neumann entropy, then one can use Srednicki’s calculation showing that generically (entropy) ∝ (area) for any surface we cannot look behind [12], and again fix the normalization constant using Hawking’s original papers [17]. (See also the calculations by Bombelli et al. of the von Neumann entropy implied by the existence of a horizon [11].)

The final entropies, when the black hole has completely evaporated ($n_{B\infty} = 1$), are $\langle \hat S_{B\infty} \rangle = 0$ and $\langle \hat S_{R\infty} \rangle \approx \ln n_{R\infty}$. We assume that the evolution is unitary, so the total Hilbert space is preserved. The environment does not participate directly in the evaporation process; its role is merely to allow the initial black hole to have a non-zero entropy. After the initial time $t = 0$, the environment evolves separately, that is, the unitary time evolution operator is the tensor product of a unitary operator corresponding to the environment and another unitary operator corresponding to the black hole and Hawking radiation subsystems, $U_{BRE}(t) = U_{BR}(t) \otimes U_E(t)$. Thus, the total Hilbert space of the environment and the total Hilbert space of the other two subsystems are independently preserved during the evaporation process, and we can express the conservation of the Hilbert space dimension as $n_B(t)\, n_R(t) = n_{B0} = n_{R\infty}$ and $n_E(t) = n_{E0}$.

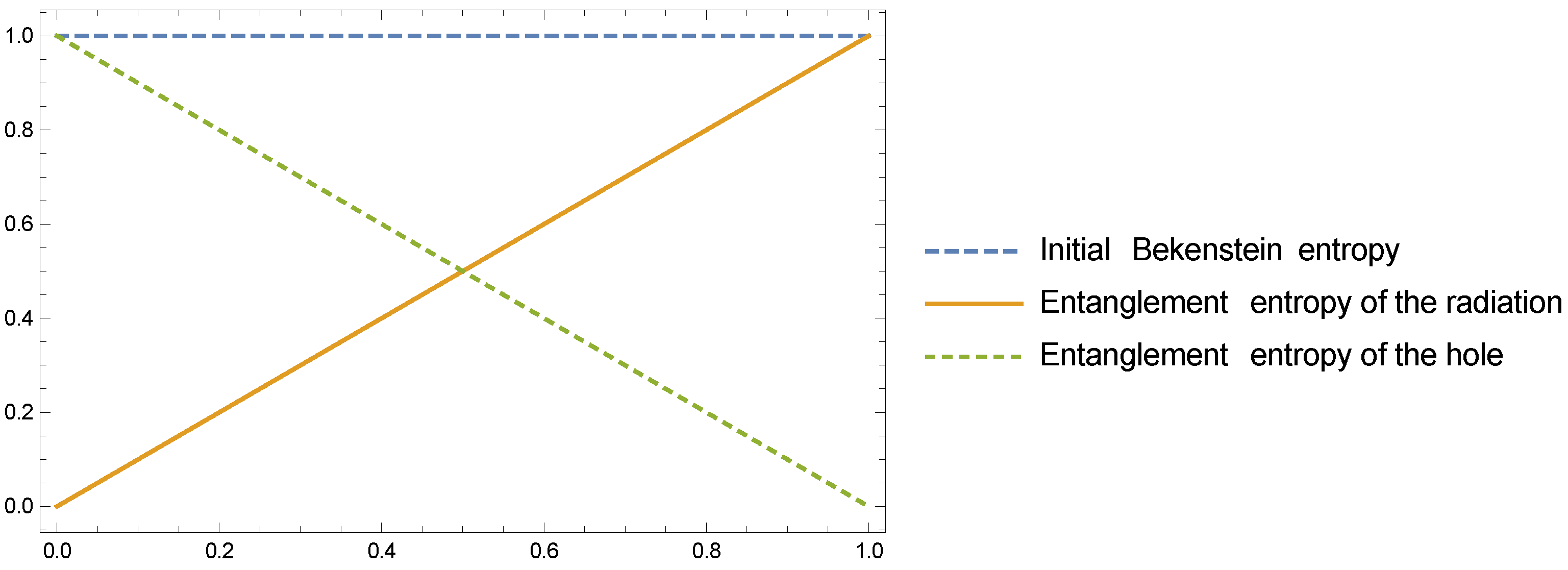

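We have computed the average entropy of the black hole and Hawking radiation subsystems, taking as an additional assumption that (throughout the evolution) the Bekenstein entropy can be interpreted as the entanglement entropy of the black hole,

$$\hat S_{\text{Bekenstein}}(t) = \langle \hat S_B(t) \rangle \approx \ln n_B(t), \tag{19}$$

$$\langle \hat S_R(t) \rangle \approx \ln n_R(t), \tag{20}$$

where, for an environment with $n_E \ge n_{B0}$, each average lies within $\tfrac{1}{2}$ nat of the stated logarithm by the strict bound on the average subsystem entropy.

We have also obtained the sum of both averaged entropies. After some calculation,

$$\ln n_{B0} - 1 < \langle \hat S_B \rangle + \langle \hat S_R \rangle \le \ln (n_B\, n_R) = \ln n_{B0} = \hat S_{\text{Bekenstein},0}. \tag{21}$$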

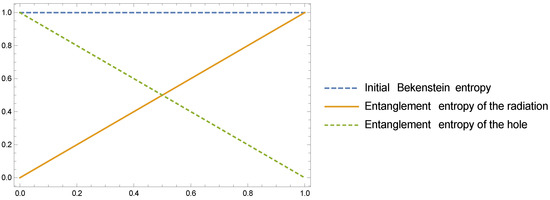

This sum rule is represented in Figure 4. In the same way, we have obtained the average entropy of the environment subsystem. It is important to note that this entropy corresponds only to that part of the universe which is entangled with the other subsystems; it is not the entropy of the rest of the universe. Calculation yields, for $n_E \ge n_{B0}$,

$$\ln n_{B0} - \tfrac{1}{2} < \langle \hat S_E \rangle = \langle \hat S_{BR} \rangle \le \ln n_{B0}. \tag{22}$$

Figure 4.

Tripartite quantum (von Neumann) entropy balance: Under the “average subsystem” assumption, now applied to a pure-state tripartite system consisting of (black hole) plus (Hawking radiation) plus (rest of universe), the quantum (von Neumann) analysis reproduces the Clausius (thermodynamic) analysis. As the black hole Bekenstein entropy decreases, the entanglement entropy of the radiation increases, to keep total entropy approximately constant, at least to within 1 nat. In the limit where the environment (rest of universe) becomes arbitrarily large, the correspondence is exact.

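In this tripartite system, the mutual information between the Hawking radiation subsystem and the black hole subsystem is more interesting, and is given by the expression

$$\hat I_{B:R} = \hat S_B + \hat S_R - \hat S_{BR} = \hat S_B + \hat S_R - \hat S_E. \tag{23}$$

It is possible to calculate the average mutual information, and one finally finds [5] that it is always less than $\tfrac{1}{2}$ nat during the whole evaporation process,

$$0 \le \langle \hat I_{B:R} \rangle < \tfrac{1}{2}. \tag{24}$$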

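It is also interesting to note that if the environment becomes arbitrarily large ($n_E \to \infty$), which is certainly possible in this tripartite system without any loss of generality, then the previous sum in Equation (21) becomes exact,

$$\langle \hat S_B \rangle + \langle \hat S_R \rangle = \ln n_{B0} = \hat S_{\text{Bekenstein},0}, \tag{25}$$

and the mutual information is also exactly zero [5],

$$\langle \hat I_{B:R} \rangle = 0. \tag{26}$$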
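The tripartite budget can be explored with the same closed form, applied to each bipartition of the pure (black hole) + (radiation) + (environment) state. The sketch below is a toy illustration under stated assumptions: a hypothetical 6-qubit (black hole) + (radiation) register, an environment of either 6 or 10 qubits, and the Page/Sen average entropy for each subsystem against its complement. The function `tripartite_budget` is not taken from the source.

```python
from math import log


def average_subsystem_entropy(n_a: int, n_b: int) -> float:
    """Page/Sen average entropy (in nats) for a random bipartite pure state."""
    m, n = min(n_a, n_b), max(n_a, n_b)
    return sum(1.0 / k for k in range(n + 1, m * n + 1)) - (m - 1) / (2.0 * n)


def tripartite_budget(total_qubits: int, env_qubits: int) -> list[tuple[float, float]]:
    """Return (<S_B> + <S_R>, <I_B:R>) at each step of a toy evaporation."""
    n_e = 2 ** env_qubits
    rows = []
    for emitted in range(total_qubits + 1):
        n_r, n_b = 2 ** emitted, 2 ** (total_qubits - emitted)
        s_b = average_subsystem_entropy(n_b, n_r * n_e)  # hole vs. the rest
        s_r = average_subsystem_entropy(n_r, n_b * n_e)  # radiation vs. the rest
        s_e = average_subsystem_entropy(n_e, n_b * n_r)  # environment, equals <S_BR>
        rows.append((s_b + s_r, s_b + s_r - s_e))
    return rows


TOTAL_QUBITS = 6  # hypothetical toy size: n_B * n_R = 2**6
ln_total = TOTAL_QUBITS * log(2)

for env_qubits in (6, 10):
    rows = tripartite_budget(TOTAL_QUBITS, env_qubits)
    worst_deficit = max(ln_total - total for total, _ in rows)
    worst_mutual = max(mutual for _, mutual in rows)
    print(f"env qubits = {env_qubits:2d}: max deficit of <S_B>+<S_R> = "
          f"{worst_deficit:.4f} nat, max <I_B:R> = {worst_mutual:.4f} nat")
```

In this toy model both the deficit of the entropy sum and the mutual information shrink as the environment grows, in line with the limiting statements above.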

6. Discussion

First of all, we have obtained the numerical value of the entropy per photon emitted in blackbody radiation, which, because the process is unitary, must be compensated by an equal amount of “hidden information” in the correlations. As is well known, there is no “information puzzle” in a standard thermodynamic process, but we note that due to the coarse graining [15], a specifically quantifiable amount of entropy/information is nevertheless exchanged in the process [4].

From this starting point, we have applied these ideas to general relativistic black holes, calculating both the classical thermodynamic entropy and the Bekenstein entropy, and seeing that they compensate perfectly. Having calculated the classical entropy, we then calculated the quantum (entanglement) entropy, considering a model based on a tripartite system. The result obtained is completely in agreement with the classical expected results, at least to within 1 nat. In contrast, the result previously obtained by Page, by considering a bipartite model that does not interact with the environment, gives rise to physics that is not well understood.

From our analysis, it can be seen that although we perceive an amount of entanglement entropy (a loss of information) when we restrict attention to any particular subsystem, there exists a complementary amount of entropy/information that is encoded in the correlations between the subsystems. Then, assuming the unitarity of the evolution of the (black hole) + (Hawking radiation) subsystem, and working within the standard Page-like average-subsystem framework, we showed that there seems to be no pressing need for any unusual physical effect to enter into the process. This implies a continuous purification of the Hawking radiation, and could lead to a completely non-controversial and quite standard physical picture for the evaporation of a black hole. (Here, we are taking into account only the semiclassical process of Hawking radiation, until a deeper understanding of the underlying micro-physics of quantum gravity phenomena might be found.)

Acknowledgments

A.A.-S. is supported by the grant GACR-14-37086G of the Czech Science Foundation. M.V. is supported by the Marsden fund, administered by the Royal Society of New Zealand.

Author Contributions

Both authors contributed equally to this article.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Hawking, S.W. Breakdown of predictability in gravitational collapse. Phys. Rev. D 1976, 14, 2460–2473.

2. Page, D.N. Information in black hole radiation. Phys. Rev. Lett. 1993, 71, 3743–3746.

3. Almheiri, A.; Marolf, D.; Polchinski, J.; Sully, J. Black holes: Complementarity or firewalls? J. High Energy Phys. 2013, 2013, 62.

4. Alonso-Serrano, A.; Visser, M. On burning a lump of coal. Phys. Lett. B 2016, 757, 383–386.

5. Alonso-Serrano, A.; Visser, M. Entropy/information flux in Hawking radiation. arXiv 2015, arXiv:1512.01890.

6. Visser, M. Hawking radiation without black hole entropy. Phys. Rev. Lett. 1998, 80, 3436–3439.

7. Barceló, C.; Liberati, S.; Sonego, S.; Visser, M. Hawking-like radiation does not require a trapped region. Phys. Rev. Lett. 2006, 97, 171301.

8. Visser, M. Hawking radiation: A particle physics perspective. Mod. Phys. Lett. A 1993, 8, 1661–1670.

9. Page, D.N. Average entropy of a subsystem. Phys. Rev. Lett. 1993, 71, 1291–1294.

10. Sen, S. Average entropy of a quantum subsystem. Phys. Rev. Lett. 1996, 77, 1–3.

11. Bombelli, L.; Koul, R.K.; Lee, J.; Sorkin, R.D. A quantum source of entropy for black holes. Phys. Rev. D 1986, 34, 373–383.

12. Srednicki, M. Entropy and area. Phys. Rev. Lett. 1993, 71, 666–669.

13. Eisert, J.; Cramer, M.; Plenio, M.B. Area laws for the entanglement entropy - A review. Rev. Mod. Phys. 2010, 82, 277–306.

14. Das, S.; Shankaranarayanan, S.; Sur, S. Black hole entropy from entanglement: A review. arXiv 2009, arXiv:0806.0402.

15. Alonso-Serrano, A.; Visser, M. Coarse graining Shannon and von Neumann entropies. Entropy 2017, 19, 207.

16. Bekenstein, J.D. Black holes and entropy. Phys. Rev. D 1973, 7, 2333–2346.

17. Hawking, S.W. Black hole explosions. Nature 1974, 248, 30–31.

| 1. | There are alternative non-extensive generalizations of the classical Clausius entropy—such as the Tsallis entropy. Classically, the Tsallis entropy generalizes the Shannon entropy, and in a quantum situation it generalizes the von Neumann entropy. However, considerations of the Tsallis entropy lie far beyond the scope of the present article—for now, we are trying to understand the Hawking entropy budget using the standard tool of von Neumann entropy. |

| 2. | This uniform averaging process always makes sense mathematically; when applied to black holes, this mathematical process is a “stand in” for the only partially known physics that thermalizes both the Hawking radiation and the evolving black hole. Our initial input assumptions along these lines are certainly no stronger than those used in deriving the Page curve. |

| 3. | If the universe is not in a pure state, the fix is simple: Divide the universe into observable and unobservable sectors. Take the entire universe to be a pure state and trace over the unobservable portion of the universe; the observable part of the universe is then described by a density matrix. Discard the unobservable part of the universe (it is merely a spectator) and work with the black hole, the Hawking radiation, and the rest of the observable universe (environment). The same argument applies, and we see that for all practical purposes we might as well (without loss of generality) assume the universe is in a pure state. |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).