A Comprehensive Pattern Recognition Neural Network for Collision Classification Using Force Sensor Signals

Abstract

1. Introduction

- (1) A high-performance, high-accuracy classifier based on a pattern recognition neural network (PRNN) is proposed for classifying human–robot contacts.

- (2) The proposed classifier requires only a few inputs: the external joint torques of a 2-DOF manipulator and the force sensor signal, all of which were estimated in our previous work [14].

- (3) To achieve high classification accuracy, the proposed PRNN is trained with the scaled conjugate gradient backpropagation algorithm, which has a superlinear convergence rate on most problems and is faster and more effective than standard backpropagation. The training objective is to minimize the cross-entropy, ideally to zero: the smaller the cross-entropy, the better the classifier.

- (4) The generalization ability of the classifier is evaluated under conditions different from those used for training.

- (5) The same methodology is repeated with a PRNN trained by a different algorithm, Levenberg–Marquardt (LM). This PRNN-LM is then compared with the proposed PRNN trained by the scaled conjugate gradient backpropagation algorithm.

- (6) The proposed approaches (PRNN and PRNN-LM) are compared with previously published ones to demonstrate their advantages.
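The scaled conjugate gradient (SCG) algorithm referred to in item (3) is due to Møller [29]. The following is a minimal NumPy sketch that follows our reading of the steps in that paper: a finite-difference estimate of the Hessian-vector product, a Levenberg–Marquardt-style scaling parameter `lam` that keeps the curvature estimate positive, and a comparison parameter that decides whether a step is accepted. It is an illustration under assumptions, not MATLAB's `trainscg`: it minimizes a small quadratic rather than the PRNN loss, and the defaults `sigma0`, `lam`, `max_iter`, and `tol` are our own choices.

```python
import numpy as np


def scg(f, grad, w, sigma0=1e-4, lam=1e-6, max_iter=200, tol=1e-6):
    """Minimize f with a scaled conjugate gradient loop (sketch after Moller, 1993)."""
    w = np.asarray(w, dtype=float).copy()
    n = w.size
    r = -grad(w)        # steepest-descent direction
    p = r.copy()        # current search direction
    lam_bar = 0.0
    success = True
    delta = 0.0
    for k in range(1, max_iter + 1):
        if np.linalg.norm(r) < tol:
            break
        p2 = p @ p
        if success:
            # Second-order information from a finite difference of gradients.
            sigma = sigma0 / np.sqrt(p2)
            s = (grad(w + sigma * p) - grad(w)) / sigma
            delta = p @ s
        # Scale the curvature estimate with the regularization term.
        delta += (lam - lam_bar) * p2
        if delta <= 0:
            # Force the curvature estimate to be positive.
            lam_bar = 2.0 * (lam - delta / p2)
            delta = -delta + lam * p2
            lam = lam_bar
        mu = p @ r
        alpha = mu / delta
        w_try = w + alpha * p
        # Ratio of actual to predicted reduction of f.
        big_delta = 2.0 * delta * (f(w) - f(w_try)) / mu**2
        if big_delta >= 0:
            w = w_try
            r_new = -grad(w)
            lam_bar = 0.0
            success = True
            if k % n == 0:
                p = r_new.copy()  # periodic restart
            else:
                beta = (r_new @ r_new - r_new @ r) / mu
                p = r_new + beta * p
            r = r_new
            if big_delta >= 0.75:
                lam /= 4.0
        else:
            lam_bar = lam
            success = False
        if big_delta < 0.25:
            lam += delta * (1.0 - big_delta) / p2
    return w


if __name__ == "__main__":
    A = np.array([[4.0, 1.0], [1.0, 3.0]])
    b = np.array([1.0, 2.0])
    w_opt = scg(lambda v: 0.5 * v @ A @ v - b @ v, lambda v: A @ v - b, np.zeros(2))
    print(w_opt, np.linalg.solve(A, b))  # both close to [1/11, 7/11]
```

On this two-variable quadratic the loop reaches the minimizer in two accepted steps, as expected of a conjugate gradient method. For a PRNN, `f` would be the training cross-entropy and `grad` its backpropagated gradient with respect to the flattened weights.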

2. Materials and Methods

- (1) Designing the PRNN based on the signals estimated in [14]. In this step, the structure of the proposed PRNN is presented: the input, hidden, and output layers are described in detail, and the number of inputs is determined.

- (2) Training the designed PRNN using the scaled conjugate gradient backpropagation algorithm. In this stage, part of the data is used for training. Trial and error is used to find the best number of hidden neurons, the best activation functions for the hidden and output layers, and the best number of epochs (iterations), which together lead to a high-performing PRNN. Best performance means a very small (near-zero) cross-entropy, a measure of classification performance based on probability and error theory. The smaller the cross-entropy, the better the classifier; therefore, the main aim is to minimize it.

- (3) Testing and validating the trained PRNN on data not used for training. In this stage, the remaining parts of the data are used to test and validate the trained PRNN, demonstrating its performance under different conditions and data. If the trained PRNN performs well in this step, it is ready for classification; otherwise, the training process in step (2) is repeated until high performance is achieved.

- (4) Evaluating the effectiveness of the trained PRNN in classifying the signals. In this stage, the effectiveness (%) of the PRNN is determined for the training, validation, and testing cases. In addition, all the collected data are used together to test the trained PRNN, and its effectiveness (%) is determined.

- (5) Repeating the previous steps with a PRNN trained by another algorithm, the Levenberg–Marquardt (LM) technique, giving the PRNN-LM. Its effectiveness is calculated in all stages: (1) training, (2) testing, (3) validation, and (4) testing with all data. The PRNN and the PRNN-LM are then compared.

- (6) Comparing the PRNN and PRNN-LM classifiers with previously published classifiers in terms of the method used, the inputs used during design, applicability to real robots, and effectiveness (%).
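Steps (3) and (4) report an effectiveness (%) for each data split and for all data together. The text does not give a formula, so the sketch below assumes effectiveness means the percentage of correctly classified samples. The collision detection rate is an extra, hypothetical metric included because collision samples are a small minority of the data (for example, 1073 of the 37,961 training samples in the reported split), so overall accuracy alone can look high even if many collisions are missed. The label value `1` for "collision" is also an assumption.

```python
import numpy as np


def effectiveness(y_true, y_pred):
    """Percentage of samples whose predicted class equals the target class (assumed definition)."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    return 100.0 * np.mean(y_true == y_pred)


def collision_rate(y_true, y_pred, collision_label=1):
    """Percentage of true collision samples that were classified as collisions (hypothetical metric)."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    mask = y_true == collision_label
    return 100.0 * np.mean(y_pred[mask] == collision_label)


def report(splits):
    """Effectiveness per split; `splits` maps a name to (targets, predictions)."""
    return {name: effectiveness(t, p) for name, (t, p) in splits.items()}


if __name__ == "__main__":
    t = [1, 0, 0, 1]
    p = [1, 0, 1, 1]
    print(report({"testing": (t, p)}), collision_rate(t, p))
```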

3. Results

3.1. Experimental Work

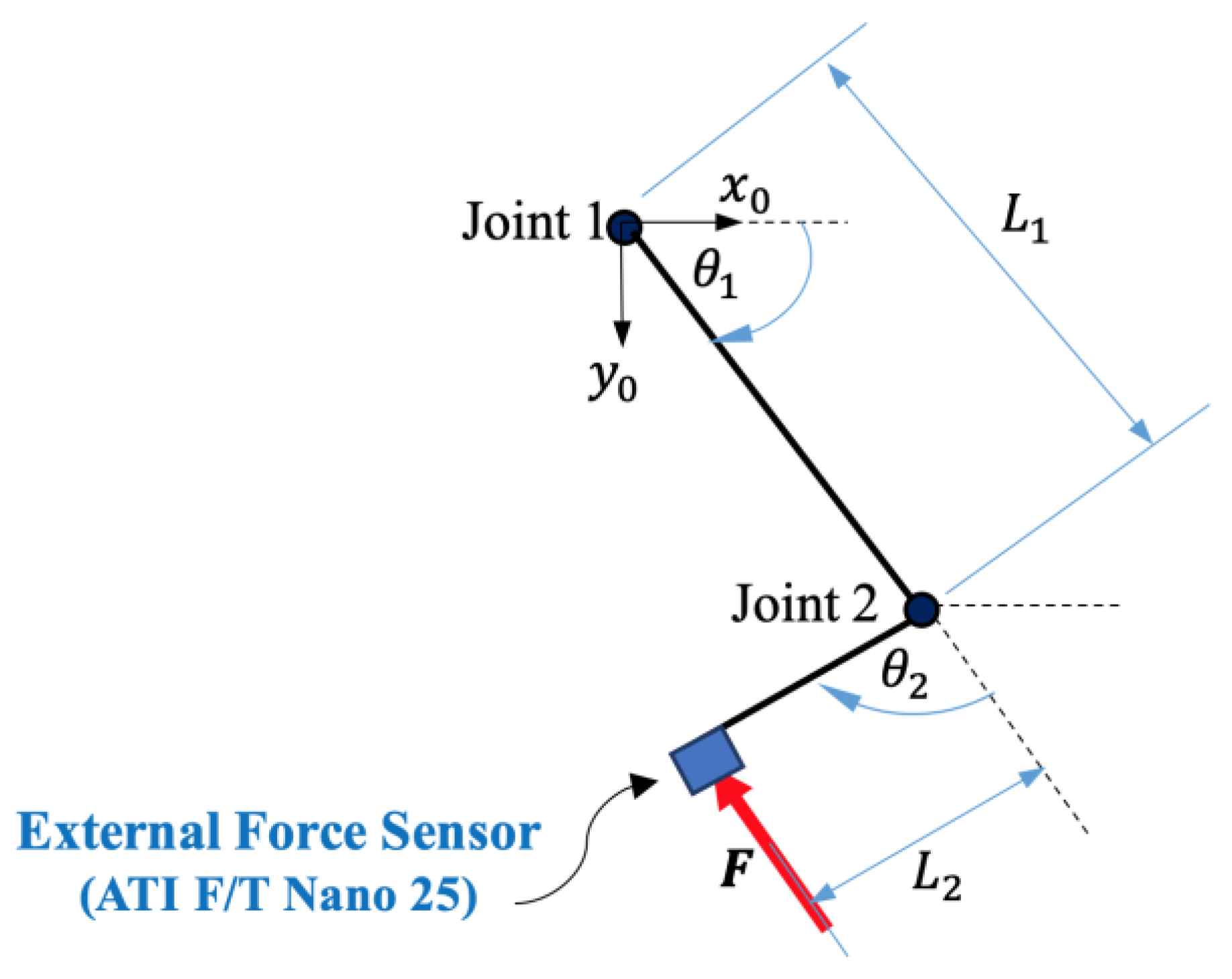

- The first layer is the input layer, and it contains three inputs: the estimated external force sensor signal and the estimated external torque of joints 1 and 2 of the 2-DOF manipulator. These inputs were obtained from [14].

- The second layer is the nonlinear hidden layer, which uses a hyperbolic tangent (tanh) activation function. After trying many different numbers of hidden neurons, the best number was found to be 120, as discussed at the end of this section. High performance of the network means a very small (near-zero) cross-entropy, which measures classification performance using probability and error theory: the higher the predicted probability of the correct class, the lower the cross-entropy [27]. Cross-entropy is also the loss used to adjust the model weights during training. The main purpose is to minimize it; the smaller the cross-entropy, the better the classifier, and a perfect model has a cross-entropy loss of zero [28].

- The third layer is the output layer, and it uses a SoftMax activation function. It contains two outputs representing two cases: collision and no collision.
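The three-layer structure described above (three inputs, 120 tanh hidden neurons, two SoftMax outputs) can be sketched as a NumPy forward pass together with a mean cross-entropy. The layer sizes come from the text; the random initialization, the one-hot target encoding, and averaging the loss over samples are our assumptions. MATLAB may normalize cross-entropy differently, so values from this sketch are not directly comparable to the reported 0.0697.

```python
import numpy as np


def init_prnn(n_in=3, n_hidden=120, n_out=2, seed=0):
    """Random weights for a 3-120-2 network (initialization scheme is an assumption)."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0.0, 1.0 / np.sqrt(n_in), (n_hidden, n_in)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, 1.0 / np.sqrt(n_hidden), (n_out, n_hidden)),
        "b2": np.zeros(n_out),
    }


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def forward(params, X):
    """X has one row per sample: [force signal, torque of joint 1, torque of joint 2]."""
    H = np.tanh(X @ params["W1"].T + params["b1"])     # nonlinear hidden layer
    return softmax(H @ params["W2"].T + params["b2"])  # [P(collision), P(no collision)]


def cross_entropy(P, T, eps=1e-12):
    """Mean over samples of -sum_k T_k * log(P_k); T is one-hot."""
    return float(-np.mean(np.sum(T * np.log(P + eps), axis=1)))
```

A confident, correct prediction gives a cross-entropy near zero, while an uninformative prediction of 0.5 for each class gives ln 2 ≈ 0.693, which matches the description that a higher probability for the correct class yields a lower cross-entropy.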

3.2. Experimental Results

- The number of hidden neurons is 120.

- The number of epochs (iterations) is 51. High performance (the smallest cross-entropy) is achieved after this number of iterations, at which point training is stopped.

- The smallest obtained cross-entropy is 0.069678.

- The training time is 12 s, which is very short. However, training time is not critical here because training is carried out offline; the main aim is to obtain a high-performing classifier.
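The text states that training stops once the smallest cross-entropy is reached but does not give the exact stopping rule. One common rule, and the default in MATLAB's toolbox as we understand it, monitors the validation loss and stops after a fixed number of consecutive epochs without improvement. The sketch below assumes that rule with `max_fail = 6`; the function name and the example losses are hypothetical.

```python
def early_stop(val_losses, max_fail=6):
    """Return (best_epoch, stop_epoch), both 1-based, for a validation-check stopping rule."""
    best = float("inf")
    best_epoch = 0
    fails = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best, best_epoch, fails = loss, epoch, 0  # improvement resets the counter
        else:
            fails += 1
            if fails >= max_fail:
                return best_epoch, epoch
    return best_epoch, len(val_losses)


if __name__ == "__main__":
    losses = [0.5, 0.4, 0.3, 0.31, 0.32, 0.33, 0.34, 0.35, 0.36, 0.37]
    print(early_stop(losses))  # best at epoch 3, stop at epoch 9
```

Under this rule the weights kept are those from the best epoch, not the last one.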

4. Discussion and Comparisons

4.1. PRNN Trained by Levenberg–Marquardt Algorithm (PRNN-LM)

4.2. Comparison with Other Previous Works

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- [1] Ballesteros, J.; Pastor, F.; Gómez-De-Gabriel, J.M.; Gandarias, J.M.; García-Cerezo, A.J.; Urdiales, C. Proprioceptive Estimation of Forces Using Underactuated Fingers for Robot-Initiated pHRI. Sensors 2020, 20, 2863.

- [2] Sharkawy, A.-N.; Koustoumpardis, P.N. Human–Robot Interaction: A Review and Analysis on Variable Admittance Control, Safety, and Perspectives. Machines 2022, 10, 591.

- [3] Dimeas, F.; Avendaño-Valencia, L.D.; Aspragathos, N. Human–robot collision detection and identification based on fuzzy and time series modelling. Robotica 2014, 33, 1886–1898.

- [4] Lu, S.; Chung, J.H.; Velinsky, S.A. Human-Robot Collision Detection and Identification Based on Wrist and Base Force/Torque Sensors. In Proceedings of the 2005 IEEE International Conference on Robotics and Automation, Barcelona, Spain, 18–22 June 2005; pp. 796–801.

- [5] Sharkawy, A.-N.; Aspragathos, N. Human-Robot Collision Detection Based on Neural Networks. Int. J. Mech. Eng. Robot. Res. 2018, 7, 150–157.

- [6] Sharkawy, A.-N.; Koustoumpardis, P.N.; Aspragathos, N.A. Manipulator Collision Detection and Collided Link Identification Based on Neural Networks. In Advances in Service and Industrial Robotics. RAAD 2018. Mechanisms and Machine Science; Nikos, A., Panagiotis, K., Vassilis, M., Eds.; Springer: Cham, Switzerland, 2018; pp. 3–12.

- [7] Sharkawy, A.-N.; Koustoumpardis, P.N.; Aspragathos, N. Human–robot collisions detection for safe human–robot interaction using one multi-input–output neural network. Soft Comput. 2020, 24, 6687–6719.

- [8] Sharkawy, A.-N.; Koustoumpardis, P.N.; Aspragathos, N. Neural Network Design for Manipulator Collision Detection Based Only on the Joint Position Sensors. Robotica 2020, 38, 1737–1755.

- [9] Sharkawy, A.-N.; Ali, M.M. NARX Neural Network for Safe Human–Robot Collaboration Using Only Joint Position Sensor. Logistics 2022, 6, 75.

- [10] Mahmoud, K.H.; Sharkawy, A.-N.; Abdel-Jaber, G.T. Development of safety method for a 3-DOF industrial robot based on recurrent neural network. J. Eng. Appl. Sci. 2023, 70, 1–20.

- [11] Briquet-Kerestedjian, N.; Wahrburg, A.; Grossard, M.; Makarov, M.; Rodriguez-Ayerbe, P. Using Neural Networks for Classifying Human-Robot Contact Situations. In Proceedings of the 2019 18th European Control Conference, ECC 2019, EUCA, Naples, Italy, 25–28 June 2019; pp. 3279–3285.

- [12] Franzel, F.; Eiband, T.; Lee, D. Detection of Collaboration and Collision Events during Contact Task Execution. In Proceedings of the IEEE-RAS International Conference on Humanoid Robots, Munich, Germany, 19–21 July 2021; pp. 376–383.

- [13] Abu Al-Haija, Q.; Al-Saraireh, J. Asymmetric Identification Model for Human-Robot Contacts via Supervised Learning. Symmetry 2022, 14, 591.

- [14] Sharkawy, A.; Mousa, H.M. Signals estimation of force sensor attached at manipulator end-effector based on artificial neural network. In Handbook of Nanosensors: Materials and Technological Applications; Ali, G.A.M., Chong, K.F., Makhlouf, A.S.H., Eds.; Springer Nature: Berlin/Heidelberg, Germany, 2023; pp. 1–25.

- [15] Schmidhuber, J. Deep Learning in Neural Networks: An Overview. Neural Netw. 2015, 61, 85–117.

- [16] Haykin, S. Neural Networks and Learning Machines, 3rd ed.; Pearson: London, UK, 2009.

- [17] Sharkawy, A.-N. Principle of Neural Network and its Main Types: Review. J. Adv. Appl. Comput. Math. 2020, 7, 8–19.

- [18] Chen, S.-C.; Lin, S.-W.; Tseng, T.-Y.; Lin, H.-C. Optimization of Back-Propagation Network Using Simulated Annealing Approach. In Proceedings of the 2006 IEEE International Conference on Systems, Man and Cybernetics, Taipei, Taiwan, 8–11 October 2006; pp. 2819–2824.

- [19] Sassi, M.A.; Otis, M.J.-D.; Campeau-Lecours, A. Active stability observer using artificial neural network for intuitive physical human–robot interaction. Int. J. Adv. Robot. Syst. 2017, 14, 1–16.

- [20] De Momi, E.; Kranendonk, L.; Valenti, M.; Enayati, N.; Ferrigno, G. A Neural Network-Based Approach for Trajectory Planning in Robot–Human Handover Tasks. Front. Robot. AI 2016, 3, 1–10.

- [21] Sharkawy, A.-N.; Koustoumpardis, P.N.; Aspragathos, N. A recurrent neural network for variable admittance control in human–robot cooperation: Simultaneously and online adjustment of the virtual damping and Inertia parameters. Int. J. Intell. Robot. Appl. 2020, 4, 441–464.

- [22] Rad, A.B.; Bui, T.W.; Li, Y.; Wong, Y.K. A new on-line PID tuning method using neural networks. In Proceedings of the IFAC Digital Control: Past, Present and Future of PID Control, Terrassa, Spain, 5–7 April 2000; Volume 33, pp. 443–448.

- [23] Elbelady, S.A.; Fawaz, H.E.; Aziz, A.M.A. Online Self Tuning PID Control Using Neural Network for Tracking Control of a Pneumatic Cylinder Using Pulse Width Modulation Piloted Digital Valves. Int. J. Mech. Mechatron. Eng. IJMME-IJENS 2016, 16, 123–136.

- [24] Hernández-Alvarado, R.; García-Valdovinos, L.G.; Salgado-Jiménez, T.; Gómez-Espinosa, A.; Fonseca-Navarro, F. Neural Network-Based Self-Tuning PID Control for Underwater Vehicles. Sensors 2016, 16, 1429.

- [25] Xie, T.; Yu, H.; Wilamowski, B. Comparison between Traditional Neural Networks and Radial Basis Function Networks. In Proceedings of the 2011 IEEE International Symposium on Industrial Electronics, Gdansk, Poland, 27–30 June 2011; pp. 1194–1199.

- [26] Jeatrakul, P.; Wong, K.W. Comparing the performance of different neural networks for binary classification problems. In Proceedings of the 2009 Eighth International Symposium on Natural Language Processing, Bangkok, Thailand, 20–22 October 2009; pp. 111–115.

- [27] Bhardwaj, A. What is Cross Entropy? Published in Towards Data Science. 2020. Available online: https://towardsdatascience.com/what-is-cross-entropy-3bdb04c13616 (accessed on 3 November 2020).

- [28] Koech, K.E. Cross-Entropy Loss Function. Published in Towards Data Science. 2020. Available online: https://towardsdatascience.com/cross-entropy-loss-function-f38c4ec8643e (accessed on 2 October 2020).

- [29] Møller, M.F. A scaled conjugate gradient algorithm for fast supervised learning. Neural Netw. 1993, 6, 525–533.

- [30] Nayak, S. Scaled Conjugate Gradient Backpropagation Algorithm for Selection of Industrial Robots. Int. J. Comput. Appl. 2017, 6, 92–101.

- [31] Gill, P.E.; Murray, W.; Wright, M.H. Practical Optimization; Emerald Group Publishing Limited: Bingley, UK, 1982.

- [32] Hestenes, M.R. Conjugate Direction Methods in Optimization; Springer: New York, NY, USA, 1980.

| Parameter | External Force Sensor (N) | External Torque of Joint 1 (Nm) | External Torque of Joint 2 (Nm) |
|---|---|---|---|
| Mean of absolute value | 0.4362 | 0.1830 | 0.0710 |
| Maximum of absolute value | 20.3771 | 10.7715 | 3.3552 |
| Minimum of absolute value | 4.1624 × 10⁻⁶ | 1.3568 × 10⁻⁵ | 2.2087 × 10⁻⁶ |
| Standard deviation of absolute value | 1.5284 | 0.7845 | 0.2474 |
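All four statistics in the table above are computed on absolute signal values. A minimal sketch, assuming the sample standard deviation (N − 1 denominator, as in MATLAB's default `std`) was used; the function name is hypothetical:

```python
import numpy as np


def abs_stats(signal):
    """Mean, maximum, minimum, and sample standard deviation of |signal|."""
    a = np.abs(np.asarray(signal, dtype=float))
    return {
        "mean": a.mean(),
        "max": a.max(),
        "min": a.min(),
        "std": a.std(ddof=1),  # ddof=1: divide by N - 1 (assumed convention)
    }


if __name__ == "__main__":
    print(abs_stats([-1.0, 2.0, -3.0]))
```

Applied separately to the estimated force signal and to each estimated joint torque, this yields one column of the table per signal.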

| Parameter | Value |
|---|---|
| Number of layers | Three layers: input, hidden, and output layers |
| Number of inputs | Two inputs: the positions of joints 1 and 2 |
| Activation function of hidden layer | Tanh (hyperbolic tangent); the hidden layer is nonlinear |
| Number of hidden neurons | 60 |
| Number of outputs | Three outputs: the force sensor signal and the external torques of joints 1 and 2 |
| Activation function of output layer | Linear |
| Training algorithm | Levenberg–Marquardt (LM) |
| Total collected samples | 54,229 samples |
| Number of training samples | 46,095 samples |
| Number of testing samples | 5423 samples |
| Number of validation samples | 2711 samples |
| Software for training process | MATLAB |
| Processor used for training | Intel(R) Core(TM) i5-8250U CPU @ 1.60 GHz |
| Training time | 18 min and 26 s |
| Number of epochs/iterations used | 1000 |
| Criterion considered for the training | Obtaining the smallest mean-squared error (MSE); the smaller the MSE, the more accurate the network's estimates |
| MSE obtained | 0.06666 |
| Regression obtained from training | R = 0.96618 |
| Regression obtained from testing | R = 0.97292 |
| Regression obtained from validation | R = 0.97582 |
| Average absolute approximation error from testing | Force sensor signal: 0.1512 N; external torque of joint 1: 0.0605 Nm; external torque of joint 2: 0.0267 Nm |
| Effectiveness | The results proved that the MLFFNN is efficient and estimates the signals correctly |
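The training criterion (MSE), the regression coefficient R, and the average absolute error per output in the table above can be computed as in the sketch below. We assume R is the Pearson correlation between targets and network outputs pooled over all outputs; the table does not say how R was aggregated, so this is an assumption, as is the function name.

```python
import numpy as np


def regression_metrics(targets, outputs):
    """Return (MSE, pooled Pearson R, mean absolute error per output column)."""
    t = np.asarray(targets, dtype=float)
    y = np.asarray(outputs, dtype=float)
    mse = np.mean((t - y) ** 2)
    r = np.corrcoef(t.ravel(), y.ravel())[0, 1]  # correlation over all outputs together
    mae_per_output = np.mean(np.abs(t - y), axis=0)
    return mse, r, mae_per_output


if __name__ == "__main__":
    t = [[1.0, 0.0], [2.0, 1.0], [3.0, 2.0]]
    y = [[1.0, 0.0], [2.0, 1.0], [3.0, 3.0]]
    print(regression_metrics(t, y))
```

With three output columns (force signal and two joint torques), `mae_per_output` gives one average absolute error per signal, matching the layout of the table row.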

| Process | Number of Samples | Samples with Collision | Samples without Collision |
|---|---|---|---|
| Training | 37,961 | 1073 | 36,888 |
| Validation | 8134 | 215 | 7919 |
| Testing | 8134 | 216 | 7918 |
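The split sizes above correspond to a 70/15/15 division of the 54,229 samples. The sketch below reproduces those counts under the assumption that the validation and test counts are rounded and the remainder goes to training, which is how we understand MATLAB's `dividerand` to behave. The random permutation and the seed are illustrative only; the actual sample assignment in the paper is not known.

```python
import math

import numpy as np


def split_sizes(n, val_ratio=0.15, test_ratio=0.15):
    """Round validation/test counts half-up; training gets the remainder (assumed rule)."""
    n_val = math.floor(val_ratio * n + 0.5)
    n_test = math.floor(test_ratio * n + 0.5)
    return n - n_val - n_test, n_val, n_test


def random_split(n, val_ratio=0.15, test_ratio=0.15, seed=0):
    """Disjoint, sorted index arrays (train, val, test) from one random permutation."""
    n_train, n_val, n_test = split_sizes(n, val_ratio, test_ratio)
    perm = np.random.default_rng(seed).permutation(n)
    val = np.sort(perm[:n_val])
    test = np.sort(perm[n_val:n_val + n_test])
    train = np.sort(perm[n_val + n_test:])
    return train, val, test


if __name__ == "__main__":
    print(split_sizes(54229))  # (37961, 8134, 8134), matching the table
```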

| Hidden neurons | 20 | 40 | 60 | 80 | 100 | 120 | 140 | 160 |
|---|---|---|---|---|---|---|---|---|
| Resulting cross-entropy (main parameter used to check the performance of the designed PRNN during training) | 0.4851 | 0.3654 | 0.3281 | 0.1342 | 0.0895 | 0.0697 (best case) | 0.0701 | 0.0723 |
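Selecting the hidden-layer size from the sweep above amounts to taking the size with the lowest cross-entropy. The sketch uses the values reported in the table; in a full sweep each entry would come from a separate training run, which is not reproduced here.

```python
# Cross-entropy reported for each hidden-layer size in the table above.
REPORTED = {20: 0.4851, 40: 0.3654, 60: 0.3281, 80: 0.1342,
            100: 0.0895, 120: 0.0697, 140: 0.0701, 160: 0.0723}


def best_hidden_size(results):
    """Return the hidden-layer size whose cross-entropy is smallest."""
    return min(results, key=results.get)


if __name__ == "__main__":
    print(best_hidden_size(REPORTED))
```

The values also show that adding neurons beyond 120 slightly increases the cross-entropy, so the larger networks bring no benefit here.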

| Process | Number of Samples | Samples with Collisions | Samples without Collisions |
|---|---|---|---|
| Training | 37,961 | 1017 | 36,944 |
| Validation | 8134 | 227 | 7909 |
| Testing | 8134 | 242 | 7892 |

| Researcher | Method Used | Inputs Used | Application |
|---|---|---|---|
| Briquet-Kerestedjian et al. [11] | Artificial neural network (NN) | Many inputs, including a window of M samples based on the actual joint speeds and the load joint torques. | This approach requires the robot's proprietary torque sensors; therefore, it is restricted to collaborative robots. |
| Franzel et al. [12] | Support vector machine (SVM) | All sensor readings, such as the position and orientation of the end-effector, the external torque of each joint, the wrench measured by an external sensor, and the joint angles. An external force/torque sensor is required. | Because no proprietary torque sensor is needed in the robot, the method can be applied to any robot. |
| Al-Haija and Al-Saraireh [13] | Ensemble-based bagged trees (EBT) | Six inputs, including data from a 140-millisecond time-lapse sensor, the motor torque, the external torque, and the joint position and velocity. | This approach requires the robot's proprietary torque sensors; therefore, it is restricted to collaborative robots. |
| The current work | PRNN/PRNN-LM | Three inputs: the estimated external force sensor signal and the estimated external torques of joints 1 and 2. | Because no proprietary torque sensor is needed in the robot, the method can be applied to any robot. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sharkawy, A.-N.; Ma’arif, A.; Furizal; Sekhar, R.; Shah, P. A Comprehensive Pattern Recognition Neural Network for Collision Classification Using Force Sensor Signals. Robotics 2023, 12, 124. https://doi.org/10.3390/robotics12050124

Sharkawy A-N, Ma’arif A, Furizal, Sekhar R, Shah P. A Comprehensive Pattern Recognition Neural Network for Collision Classification Using Force Sensor Signals. Robotics. 2023; 12(5):124. https://doi.org/10.3390/robotics12050124

Chicago/Turabian Style: Sharkawy, Abdel-Nasser, Alfian Ma’arif, Furizal, Ravi Sekhar, and Pritesh Shah. 2023. "A Comprehensive Pattern Recognition Neural Network for Collision Classification Using Force Sensor Signals" Robotics 12, no. 5: 124. https://doi.org/10.3390/robotics12050124

APA Style: Sharkawy, A.-N., Ma’arif, A., Furizal, Sekhar, R., & Shah, P. (2023). A Comprehensive Pattern Recognition Neural Network for Collision Classification Using Force Sensor Signals. Robotics, 12(5), 124. https://doi.org/10.3390/robotics12050124