A Semiautonomous Control Strategy Based on Computer Vision for a Hand–Wrist Prosthesis

Abstract

1. Introduction

2. Materials and Methods

2.1. Proposed Approach

2.1.1. EMG Signal Classifier

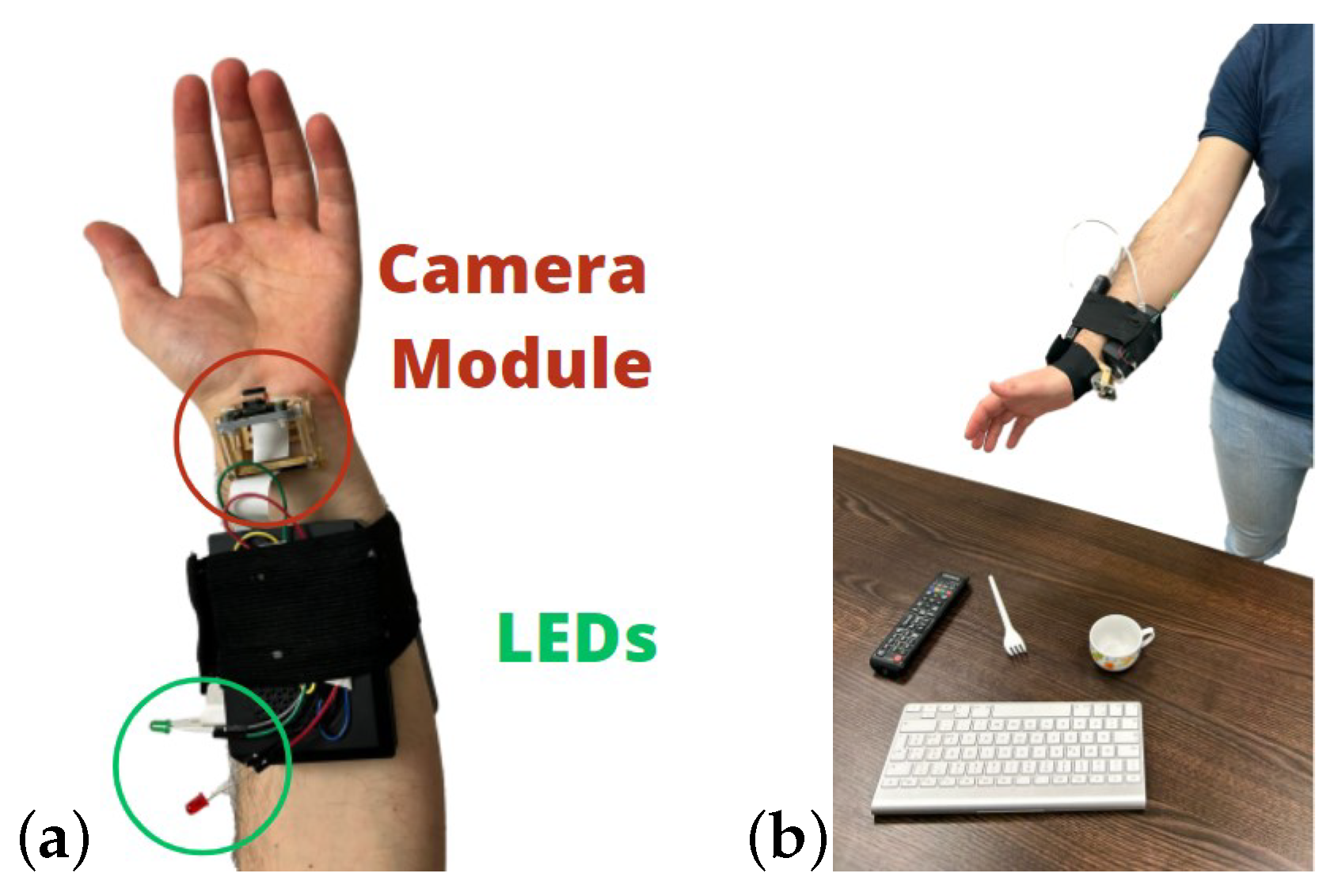

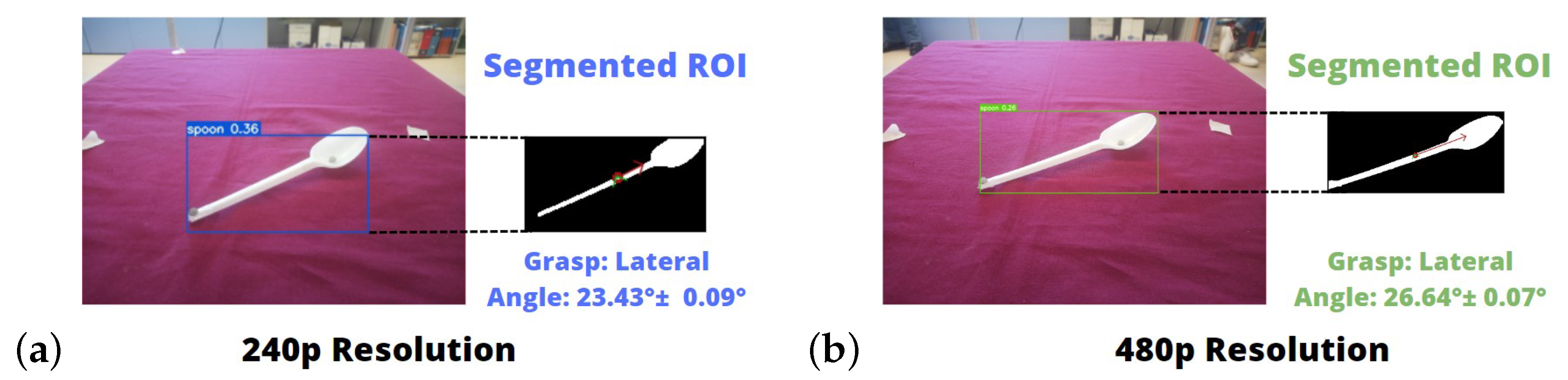

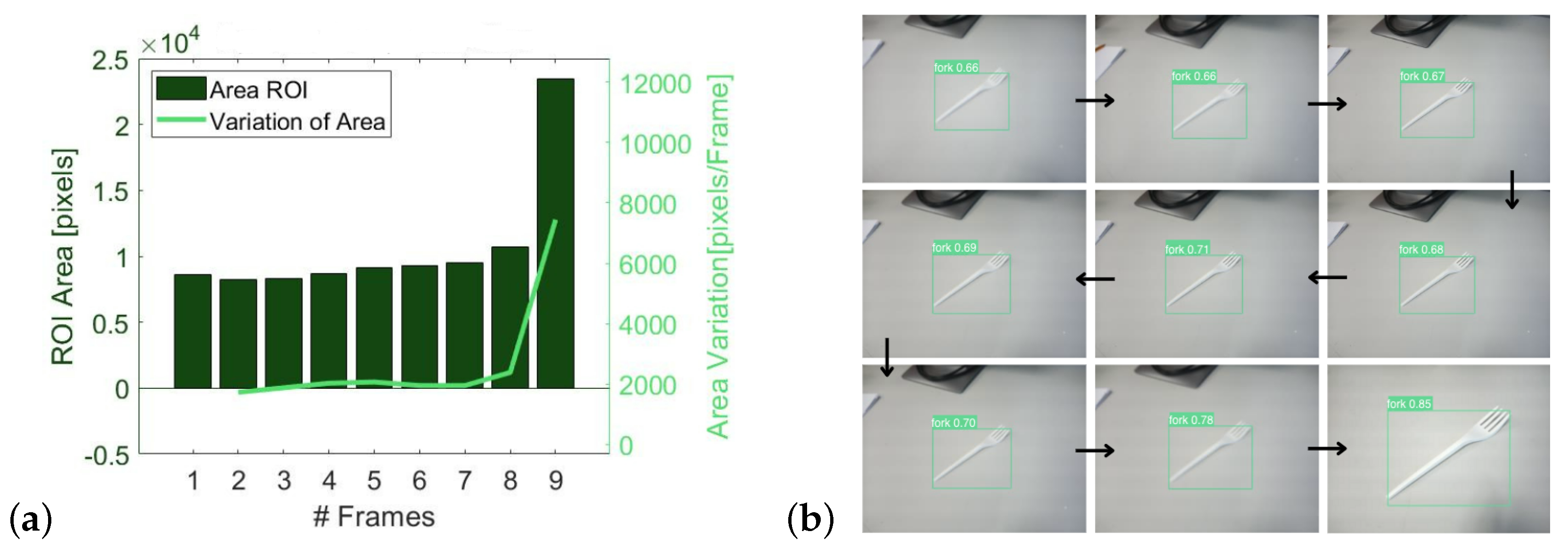

2.1.2. Computer Vision System (CVS)

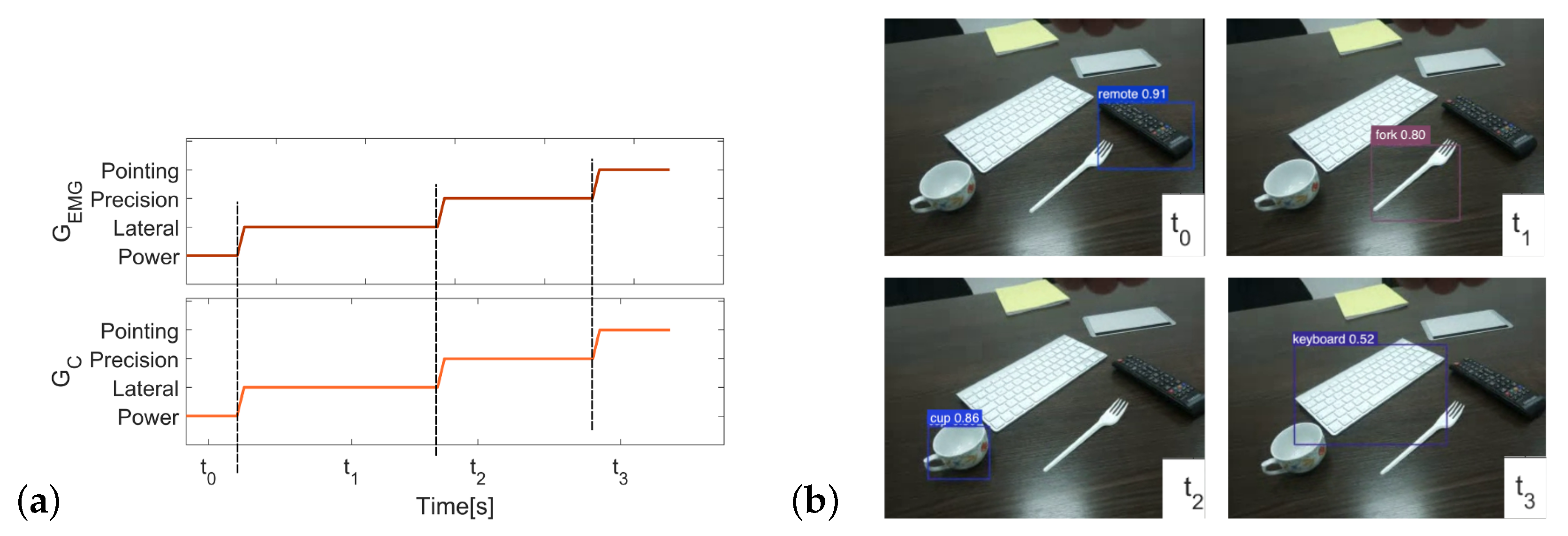

2.1.3. Vision-Based Hand–Wrist Control Strategy

- If there are no objects detected in the scene, then the control algorithm returns the Rest gesture. This information is given back to the user via visual feedback, as explained in Section 2.1.4.

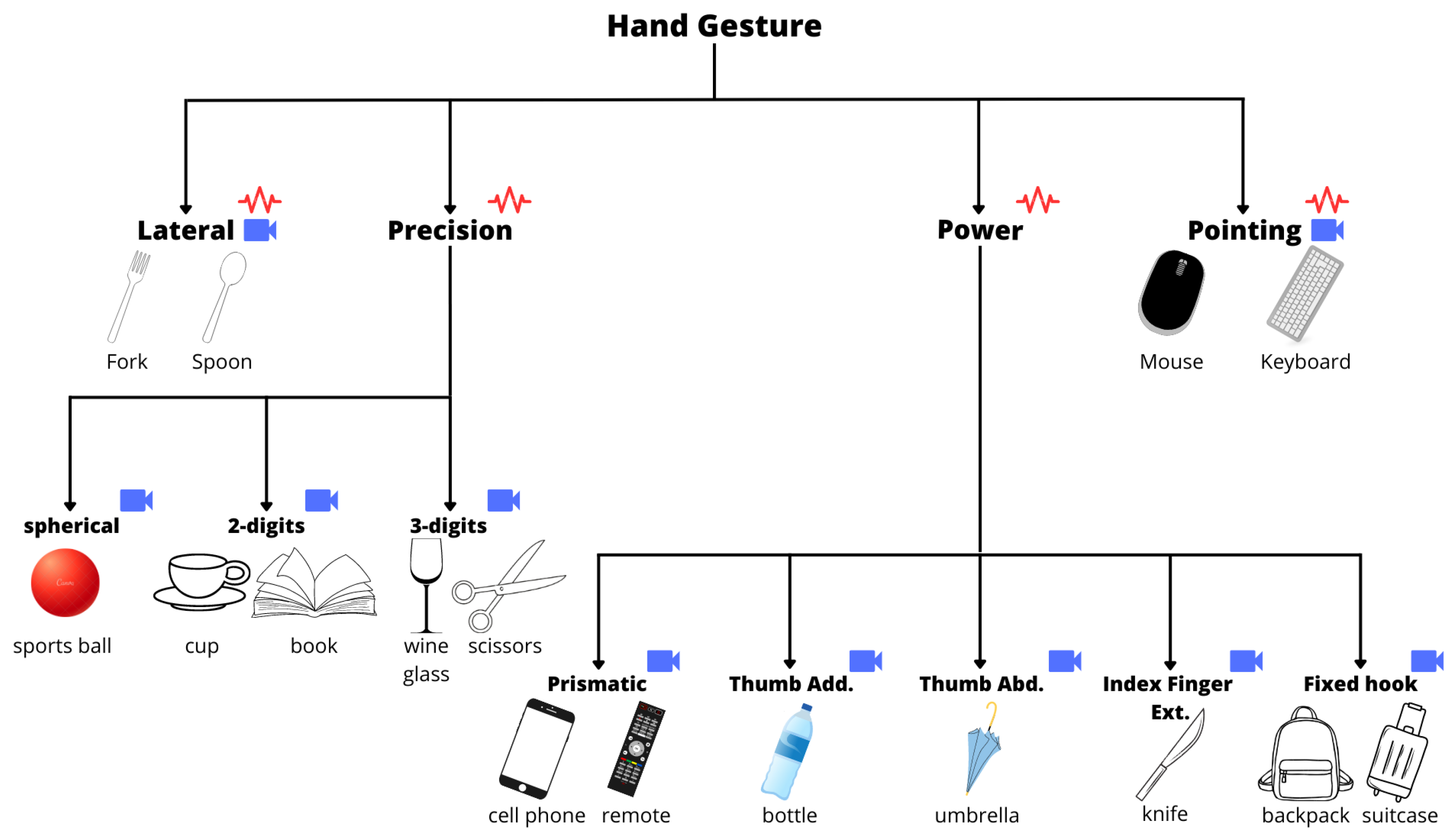

- If the output of the EMG classifier is not coherent with any of the hand gestures associated with the detected objects, then priority is given to the visual information: the output of the Grasp Selection module is the hand gesture corresponding to the object detected with the highest confidence level. The correspondence between objects and grasps is shown in Figure 2.

- If the output of the EMG classifier is coherent with the grasp associated with at least one object in the scene, then the objects that do not belong to the class recognized by the EMG classifier are removed from the list of detections. The number of remaining objects is variable and depends on how many of them can be grasped with the hand gesture obtained from the EMG classifier. If more than one object remains, only the objects framed in the central portion of the scene are considered, on the assumption that the object the user wants to grab, and therefore the one towards which the prosthetic hand will move, is framed in the central region of the image [29]. The number of objects in the central portion is also variable; if there is more than one, the object recognized with the highest confidence level is selected.
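The three rules above can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' implementation: the `Detection` fields, the grasp names used in the usage example, the size of the central window (`half_width`), and the fallback applied when several coherent objects exist but none is central are all assumptions introduced here, since the text does not specify them.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

REST = "Rest"  # gesture returned when the scene contains no detected object


@dataclass(frozen=True)
class Detection:
    """Hypothetical container for one detected object (names are assumptions)."""
    label: str         # object class reported by the detector
    grasp: str         # hand gesture associated with that object class
    confidence: float  # detector confidence in [0, 1]
    cx: float          # bounding-box centre, normalised to [0, 1]
    cy: float


def in_central_region(det: Detection, half_width: float = 0.25) -> bool:
    """True if the box centre lies in a square window around the image centre.

    The window size is an assumption; the text only says "central portion".
    """
    return abs(det.cx - 0.5) <= half_width and abs(det.cy - 0.5) <= half_width


def select_grasp(
    emg_grasp: str,
    detections: List[Detection],
    half_width: float = 0.25,
) -> Tuple[str, Optional[Detection]]:
    """Return the selected gesture and the object it refers to (if any)."""
    # Rule 1: no objects in the scene -> Rest gesture.
    if not detections:
        return REST, None

    # Objects whose associated grasp matches the EMG classifier output.
    coherent = [d for d in detections if d.grasp == emg_grasp]

    # Rule 2: no coherence -> vision has priority, take the most confident object.
    if not coherent:
        best = max(detections, key=lambda d: d.confidence)
        return best.grasp, best

    # Rule 3: coherence -> discard the other classes; if several objects remain,
    # restrict to the central region, then pick the highest confidence.
    candidates = coherent
    if len(coherent) > 1:
        central = [d for d in coherent if in_central_region(d, half_width)]
        # Assumption: if no coherent object is central, keep all coherent ones.
        candidates = central or coherent
    best = max(candidates, key=lambda d: d.confidence)
    return best.grasp, best


if __name__ == "__main__":
    mug = Detection("mug", "Power", 0.60, 0.5, 0.5)        # central
    bottle = Detection("bottle", "Power", 0.95, 0.9, 0.5)  # off-centre
    pen = Detection("pen", "Pinch", 0.90, 0.1, 0.1)
    print(select_grasp("Power", [mug, bottle, pen]))
```

In the usage example the EMG output is coherent with two objects; the off-centre one is discarded despite its higher confidence, so the central object is selected. Returning the selected object together with the gesture is a design choice of this sketch, made so that later stages could use the same detection.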

2.1.4. Visual Feedback

2.2. Experimental Validation

2.2.1. Experimental Setup

2.2.2. Experimental Protocol

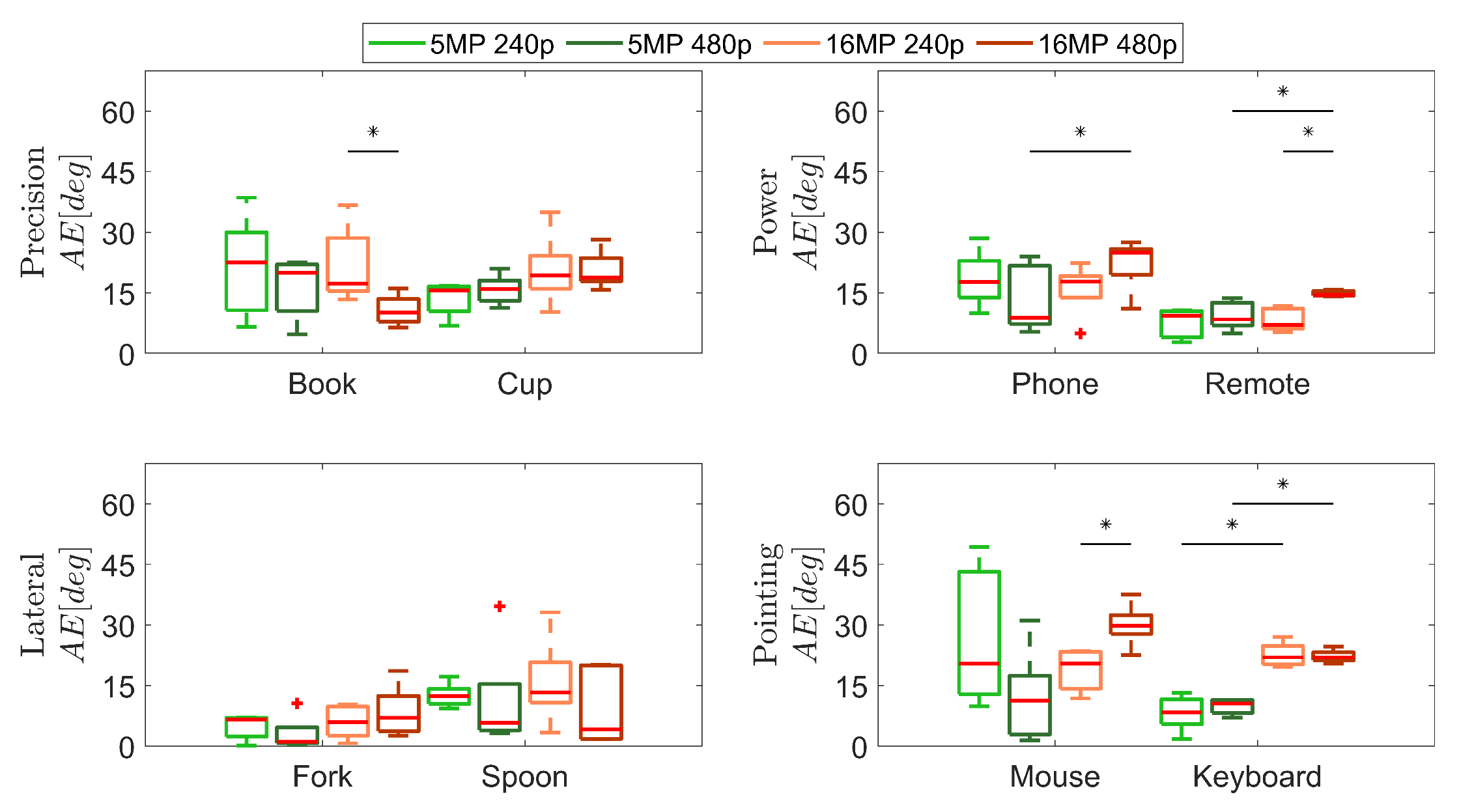

2.2.3. Key Performance Indicators (KPIs)

2.2.4. Statistical Analysis

3. Results and Discussions

4. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DoF | Degrees of Freedom |
| EMG | Electromyography |
| SCS | Semiautonomous Control Strategy |
| CVS | Computer Vision System |
| CNN | Convolutional Neural Network |
| P/S | Pronation/Supination |
| SBC | Single-Board Computer |
| COCO | Common Objects in Context |
| ROI | Region Of Interest |
| PCA | Principal Component Analysis |
| PC | Principal Component |
| VNC | Virtual Network Computing |
| KPI | Key Performance Indicator |
| AOC | Accuracy in Object Classification |
| AGC | Accuracy in Grasp Classification |
| AE | Angular Error |
| AES | Angular Estimation Stability |
| AF | Analysis Frequency |
| FPS | Frames Per Second |
| OA | Occlusion Area |
| SR | Success Rate |

References

1. Yamamoto, M.; Chung, K.C.; Sterbenz, J.; Shauver, M.J.; Tanaka, H.; Nakamura, T.; Oba, J.; Chin, T.; Hirata, H. Cross-sectional international multicenter study on quality of life and reasons for abandonment of upper limb prostheses. Plast. Reconstr. Surg. Glob. Open 2019, 7, e2205.

2. Tamantini, C.; Cordella, F.; Lauretti, C.; Zollo, L. The WGD—A dataset of assembly line working gestures for ergonomic analysis and work-related injuries prevention. Sensors 2021, 21, 7600.

3. Jang, C.H.; Yang, H.S.; Yang, H.E.; Lee, S.Y.; Kwon, J.W.; Yun, B.D.; Choi, J.Y.; Kim, S.N.; Jeong, H.W. A survey on activities of daily living and occupations of upper extremity amputees. Ann. Rehabilit. Med. 2011, 35, 907–921.

4. Smail, L.C.; Neal, C.; Wilkins, C.; Packham, T.L. Comfort and function remain key factors in upper limb prosthetic abandonment: Findings of a scoping review. Disabil. Rehabilit. Assist. Technol. 2021, 16, 821–830.

5. Igual, C.; Pardo, L.A., Jr.; Hahne, J.M.; Igual, J. Myoelectric control for upper limb prostheses. Electronics 2019, 8, 1244.

6. Roche, A.D.; Rehbaum, H.; Farina, D.; Aszmann, O.C. Prosthetic myoelectric control strategies: A clinical perspective. Curr. Surg. Rep. 2014, 2, 44.

7. Atzori, M.; Cognolato, M.; Müller, H. Deep learning with convolutional neural networks applied to electromyography data: A resource for the classification of movements for prosthetic hands. Front. Neurorobot. 2016, 10, 9.

8. Hahne, J.M.; Schweisfurth, M.A.; Koppe, M.; Farina, D. Simultaneous control of multiple functions of bionic hand prostheses: Performance and robustness in end users. Sci. Robot. 2018, 3, eaat3630.

9. Leone, F.; Gentile, C.; Cordella, F.; Gruppioni, E.; Guglielmelli, E.; Zollo, L. A parallel classification strategy to simultaneous control elbow, wrist, and hand movements. J. NeuroEng. Rehabilit. 2022, 19, 10.

10. Yadav, D.; Veer, K. Recent trends and challenges of surface electromyography in prosthetic applications. Biomed. Eng. Lett. 2023, 13, 353–373.

11. Zhang, Q.; Zhu, J. The Application of EMG and Machine Learning in Human Machine Interface. In Proceedings of the 2nd International Conference on Bioinformatics and Intelligent Computing, Harbin, China, 21–23 January 2022; pp. 465–469.

12. Tomovic, R.; Boni, G. An adaptive artificial hand. IRE Trans. Autom. Control 1962, 7, 3–10.

13. Stefanelli, E.; Cordella, F.; Gentile, C.; Zollo, L. Hand Prosthesis Sensorimotor Control Inspired by the Human Somatosensory System. Robotics 2023, 12, 136.

14. Dosen, S.; Cipriani, C.; Kostić, M.; Controzzi, M.; Carrozza, M.C.; Popović, D. Cognitive vision system for control of dexterous prosthetic hands: Experimental evaluation. J. Neuroeng. Rehabilit. 2010, 7, 42.

15. Došen, S.; Popović, D.B. Transradial prosthesis: Artificial vision for control of prehension. Artif. Organs 2011, 35, 37–48.

16. Castro, M.N.; Dosen, S. Continuous Semi-autonomous Prosthesis Control Using a Depth Sensor on the Hand. Front. Neurorobot. 2022, 16, 814973.

17. Ghazaei, G.; Alameer, A.; Degenaar, P.; Morgan, G.; Nazarpour, K. Deep learning-based artificial vision for grasp classification in myoelectric hands. J. Neural Eng. 2017, 14, 036025.

18. Dhillon, A.; Verma, G.K. Convolutional neural network: A review of models, methodologies and applications to object detection. Prog. Artif. Intell. 2020, 9, 85–112.

19. Weiner, P.; Starke, J.; Rader, S.; Hundhausen, F.; Asfour, T. Designing Prosthetic Hands With Embodied Intelligence: The KIT Prosthetic Hands. Front. Neurorobot. 2022, 16, 815716.

20. Perera, D.M.; Madusanka, D. Vision-EMG Fusion Method for Real-time Grasping Pattern Classification System. In Proceedings of the 2021 Moratuwa Engineering Research Conference, Moratuwa, Sri Lanka, 27–29 July 2021; pp. 585–590.

21. Cognolato, M.; Atzori, M.; Gassert, R.; Müller, H. Improving robotic hand prosthesis control with eye tracking and computer vision: A multimodal approach based on the visuomotor behavior of grasping. Front. Artif. Intell. 2022, 4, 744476.

22. Deshmukh, S.; Khatik, V.; Saxena, A. Robust Fusion Model for Handling EMG and Computer Vision Data in Prosthetic Hand Control. IEEE Sens. Lett. 2023, 7, 6004804.

23. Cordella, F.; Di Corato, F.; Loianno, G.; Siciliano, B.; Zollo, L. Robust pose estimation algorithm for wrist motion tracking. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 3746–3751.

24. Boshlyakov, A.A.; Ermakov, A.S. Development of a Vision System for an Intelligent Robotic Hand Prosthesis Using Neural Network Technology. In Proceedings of the ITM Web of Conference EDP Sciences, Moscow, Russia, 28–29 November 2020; Volume 35, p. 04006.

25. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

26. Phadtare, M.; Choudhari, V.; Pedram, R.; Vartak, S. Comparison between YOLO and SSD mobile net for object detection in a surveillance drone. Int. J. Sci. Res. Eng. Manag. 2021, 5, b822–b827.

27. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the Computer Vision—ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

28. Feix, T.; Romero, J.; Schmiedmayer, H.B.; Dollar, A.M.; Kragic, D. The grasp taxonomy of human grasp types. IEEE Trans. Hum.-Mach. Syst. 2015, 46, 66–77.

29. Flanagan, J.R.; Terao, Y.; Johansson, R.S. Gaze behavior when reaching to remembered targets. J. Neurophysiol. 2008, 100, 1533–1543.

30. Otsu, N. A Threshold Selection Method from Gray-Level Histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

31. Tamantini, C.; Lapresa, M.; Cordella, F.; Scotto di Luzio, F.; Lauretti, C.; Zollo, L. A robot-aided rehabilitation platform for occupational therapy with real objects. In Proceedings of the Converging Clinical and Engineering Research on Neurorehabilitation IV: 5th ICNR2020, Vigo, Spain, 13–16 October 2022; pp. 851–855.

32. Gardner, M.; Woodward, R.; Vaidyanathan, R.; Bürdet, E.; Khoo, B.C. An unobtrusive vision system to reduce the cognitive burden of hand prosthesis control. In Proceedings of the 13th ICARCV, Kunming, China, 6–9 December 2004; pp. 1279–1284.

33. DeGol, J.; Akhtar, A.; Manja, B.; Bretl, T. Automatic grasp selection using a camera in a hand prosthesis. In Proceedings of the 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 431–434.

34. Castro, M.C.F.; Pinheiro, W.C.; Rigolin, G. A Hybrid 3D Printed Hand Prosthesis Prototype Based on sEMG and a Fully Embedded Computer Vision System. Front. Neurorobot. 2022, 15, 751282.

35. Devi, M.A.; Udupa, G.; Sreedharan, P. A novel underactuated multi-fingered soft robotic hand for prosthetic application. Robot. Auton. Syst. 2018, 100, 267–277.

36. Sun, Y.; Liu, Y.; Pancheri, F.; Lueth, T.C. Larg: A lightweight robotic gripper with 3-d topology optimized adaptive fingers. IEEE/ASME Trans. Mechatronics 2022, 27, 2026–2034.

| Camera Model | Arducam 16 MP | Joy-It Wide Angle |
|---|---|---|
| Sensor | Sony IMX519 | OV5647 |
| Resolution [pixels] (Static Images) | 16 MP (4656 × 3496) | 5 MP (2592 × 1944) |
| Resolution [pixels] (Video) | 1080p30, 720p60, 640 × 480p90 | 1080p30, 960p45, 720p60, 640 × 480p90 |
| Field of View (FoV) | 80° | 160° |
| Autofocus | ✓ | N.A. |
| Dimensions [mm] | 25 × 23.86 × 9 | 25 × 24 × 18 |
| Weight [g] | 3 | 5 |
| Cost | 33.95 € | 20.17 € |

| Camera Configuration | KPI 1 (AOC) | KPI 1 (AGC) | KPI 2 (AE) [deg] | KPI 3 (AES) [deg] | KPI 4 (AF) [FPS] |
|---|---|---|---|---|---|
| 5 MP 240p | 97.80% | 98.15% | 14.17 ± 10.53 | 0.70 | 2.02 ± 0.13 |
| 5 MP 480p | 97.35% | 97.55% | 11.40 ± 8.12 | 0.38 | 0.87 ± 0.13 |
| 16 MP 240p | 97.85% | 99.80% | 16.26 ± 8.62 | 0.20 | 2.07 ± 0.15 |
| 16 MP 480p | 99.35% | 99.55% | 17.35 ± 8.80 | 0.34 | 0.88 ± 0.17 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cirelli, G.; Tamantini, C.; Cordella, L.P.; Cordella, F. A Semiautonomous Control Strategy Based on Computer Vision for a Hand–Wrist Prosthesis. Robotics 2023, 12, 152. https://doi.org/10.3390/robotics12060152
