Got It? Comparative Ergonomic Evaluation of Robotic Object Handover for Visually Impaired and Sighted Users

Abstract

1. Introduction

1.1. Robotic Object Handover

1.2. Accessibility of Human–Robot Handover

| Handover Phase | | General User Requirements | BVI User Requirements |
|---|---|---|---|
| Throughout all phases | | apply emergent coordination arising from the interplay between perception and action [6], e.g., active, coordinating gaze behavior [13] | design the interface as close as possible to the norm [19]; coordinate handovers without eye contact [26]; allow only one partner to move at a time [28] |
| Prehandover Phase | Inaction | | use verbal welcoming and introduction of the robot by name to help locate the robot and establish a social connection with it [26] |
| | Initiation | initiate the handover through a handover request or a task that naturally involves an object handover [6]; use planned coordination by forming a shared representation of, e.g., which object will be handed over, handover location, and what task the receiver will subsequently perform with the object [6] | support obtaining order information by a Voice User Interface [26] |
| | Preparation | when planning a grasp, pay attention to object shape, object function and user safety [7], object orientation [8], situation, such as dynamics [5], and the subsequent task the receiver performs after handover [6]; let the robot check for human’s eye contact as it approaches [14] | plan object orientation and grasping along human conventions [11]; provide verbal information about the type, orientation, and position of the object, along with potential sources of harm when approaching [28]; actively provide ongoing status updates or enable retrieval of this information through voice commands at any given time [26,27] |
| Physical Handover Phase | Physical Exchange | let the orientation of delivered objects adhere to the social conventions observed in everyday human handovers [8,9], particularly covering hazardous surfaces and orienting hazardous parts of the object away from the receiver when dealing with potentially hazardous items [11] | provide direct and indirect handover modalities [12,28]; provide haptic support for better orientation during midair handovers [28] (e.g., airflow); allow handover processes to be slowed down for more careful execution [29]; let users actively control the process themselves, e.g., to confirm when they have safely grasped the object before the robot opens its gripper [26] |
| | Performance | | let the robot return to a known base position to avoid becoming a dangerous obstacle after handover [26] |

1.3. Methods for Evaluating Robot-to-Human Handover

1.4. Research Objectives and Paper Structure

- Overall usability:

- Effectiveness:

- Efficiency:

- User Experience and Satisfaction:

2. Materials and Methods

2.1. Sample

2.2. Materials and Context of Use

2.3. Design, Procedures and Measures

2.4. Data Analysis

3. Results

3.1. Overall Usability

3.2. Effectiveness

3.2.1. Success Rate

3.2.2. Joint Action Failure Rate

3.2.3. Robotic Failure Rate

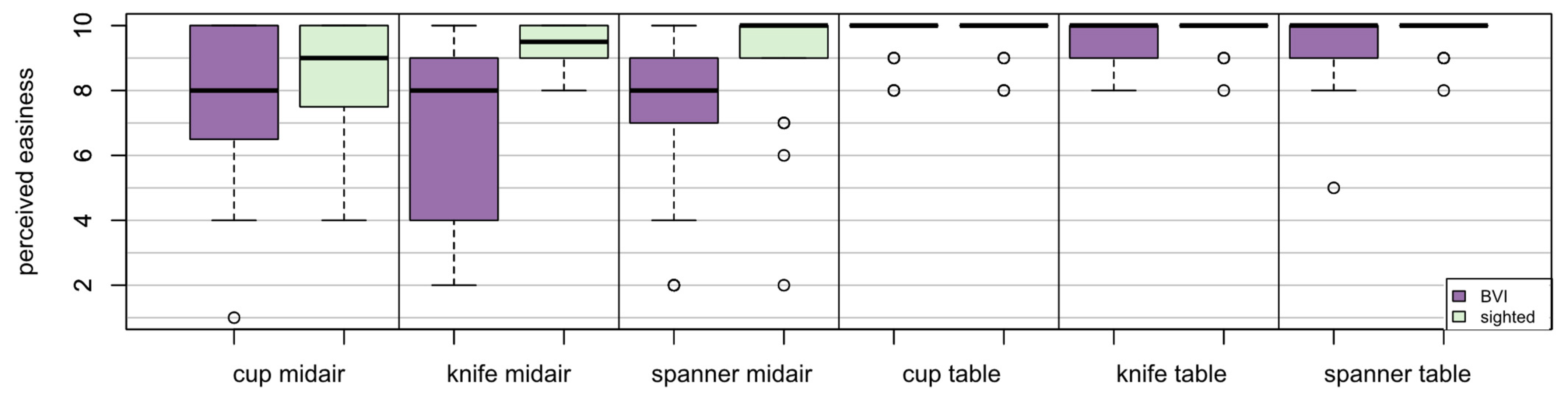

3.2.4. Perceived Easiness

3.3. Efficiency

3.3.1. Task Completion Time

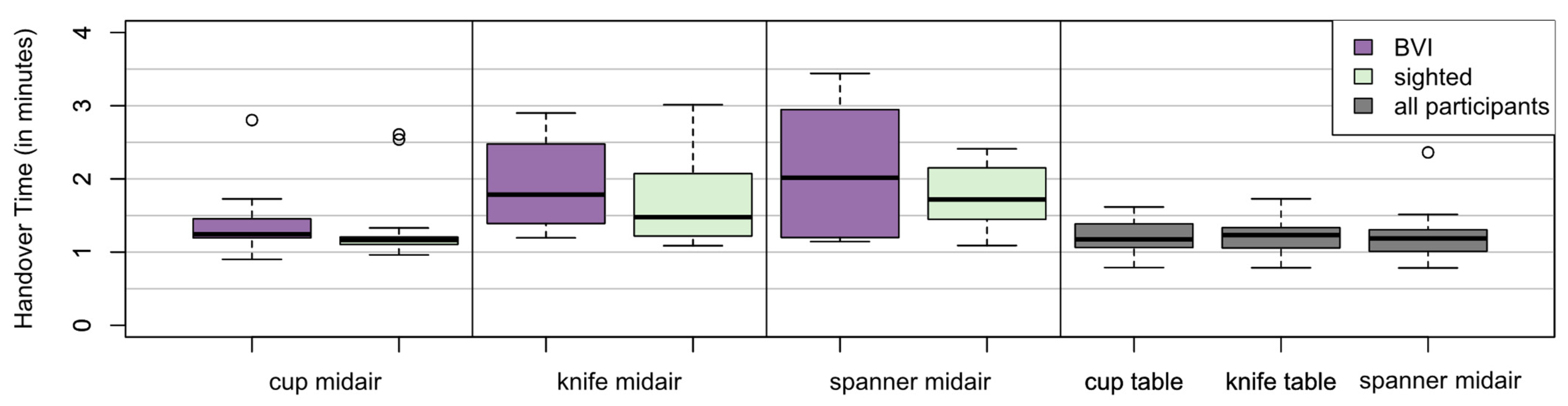

3.3.2. Handover Time

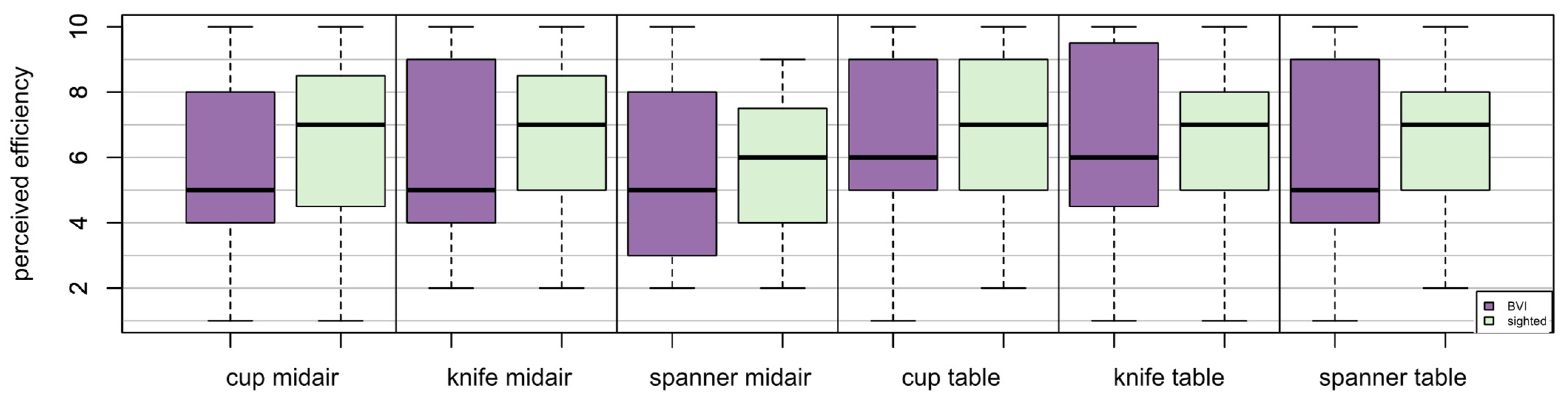

3.3.3. Perceived Efficiency

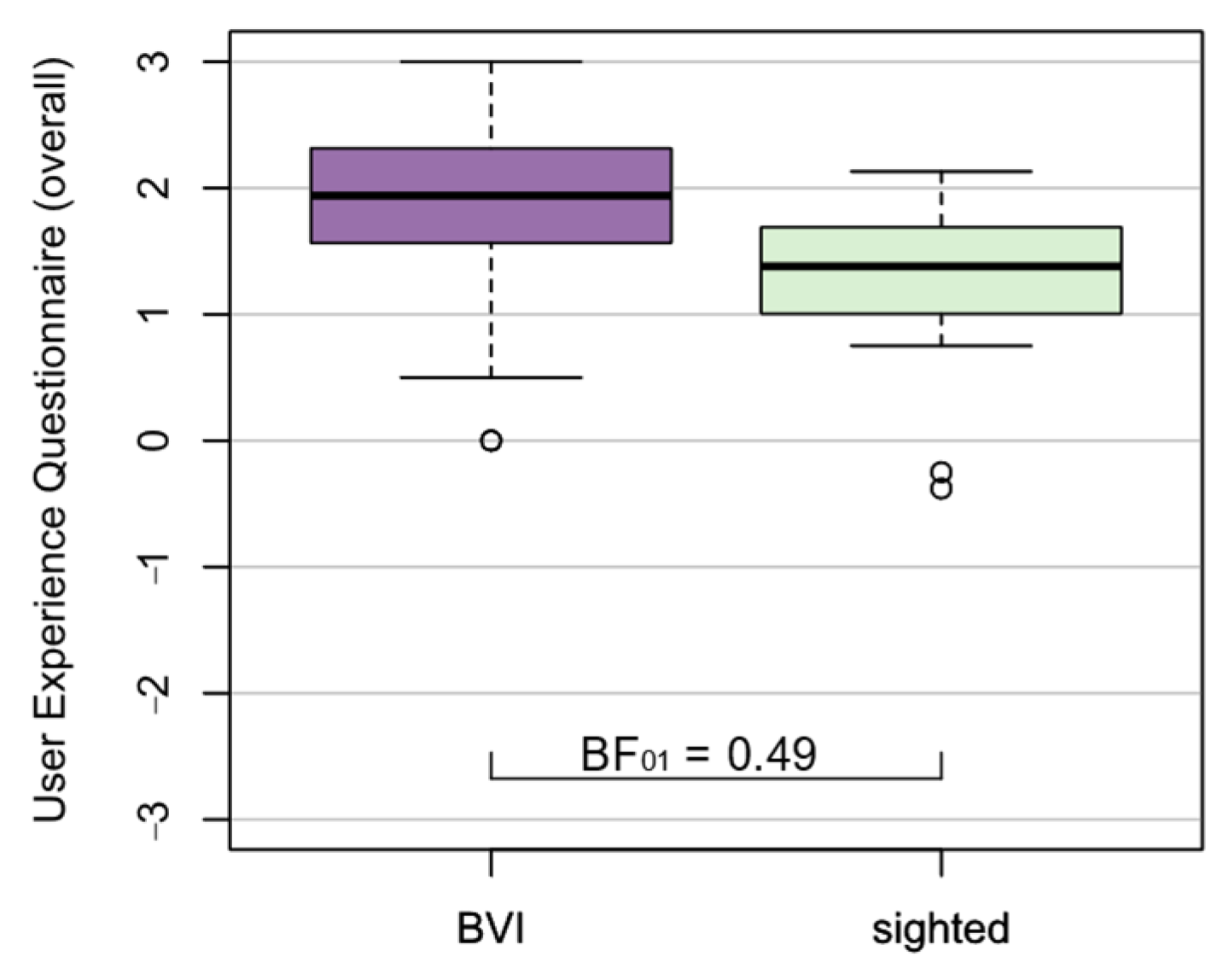

3.4. User Experience and Satisfaction

3.5. Relationship between Dependent Variables

4. Discussion

4.1. Overall Usability

4.2. Effectiveness

4.3. Efficiency

4.4. User Experience and Satisfaction

4.5. Relationship between Dependent Variables

4.6. Limitations and Future Research

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Overview on Descriptive Statistics for all Dependent Variables

| Variable | BVI M (SD) | Sighted M (SD) |
|---|---|---|
| Overall | | |
| SUS (scale range 0 to 100) | 70.25 (16.68) | 72.50 (11.47) |
| Effectiveness | | |
| success rate | 94.55% | 100% |
| joint action failure rate | 1.81% | 0% |
| robotic failure rate | 3.63% | 0% |
| perceived easiness (scale range 1 to 10) 1,2 | 7.95 (2.83) | 9.25 (1.43) |
| Efficiency | | |
| Task completion time 1,2 (in minutes), midair | 5.75 (0.96) | 5.78 (1.10) |
| Task completion time 1,2 (in minutes), on table | 4.58 (0.66) | 4.92 (1.03) |
| Handover time 1,2,3 (in minutes), midair | 1.78 (0.71) | 1.56 (0.51) |
| perceived efficiency (scale range 1 to 10) 1,2 | 5.93 (2.87) | 6.36 (2.39) |
| Satisfaction/User Experience | | |
| Objective preference for modality (midair vs. table) | 81.7% vs. 18.3% | 79.3% vs. 20.7% |
| UEQ-S overall (scale range −3 to 3) | 1.79 (0.81) | 1.27 (0.67) |
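The SUS values in the table above are reported on a 0 to 100 scale. As a minimal sketch, assuming the standard scoring described by Brooke [33] (ten items rated from 1 to 5, odd-numbered items positively worded, even-numbered items negatively worded), one questionnaire could be converted to that scale as shown below. The function name `sus_score` and the example responses are hypothetical and are not taken from the study data.

```python
def sus_score(responses):
    """Score one SUS questionnaire (10 items, each rated 1..5) on a 0..100 scale."""
    if len(responses) != 10:
        raise ValueError("SUS expects exactly 10 item responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for index, rating in enumerate(responses):
        if index % 2 == 0:
            # Items 1, 3, 5, 7, 9 (positively worded): contribution is rating - 1.
            total += rating - 1
        else:
            # Items 2, 4, 6, 8, 10 (negatively worded): contribution is 5 - rating.
            total += 5 - rating
    # The summed contributions (0..40) are rescaled to 0..100.
    return total * 2.5


# Hypothetical responses of a single participant, for illustration only.
example_responses = [4, 2, 4, 2, 4, 2, 4, 2, 4, 2]
print(sus_score(example_responses))
```

Group means such as those reported above would then be averages of these per-participant scores.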

References

1. IAPB. International Agency for the Prevention of Blindness’s Vision Atlas. Available online: https://www.iapb.org/learn/vision-atlas/magnitude-and-projections/ (accessed on 11 December 2023).
2. Bhowmick, A.; Hazarika, S.M. An insight into assistive technology for the visually impaired and blind people: State-of-the-art and future trends. J. Multimodal User Interfaces 2017, 11, 149–172.
3. Mack, K.; McDonnell, E.; Jain, D.; Lu Wang, L.; Froehlich, J.E.; Findlater, L. What Do We Mean by “Accessibility Research”? In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; Kitamura, Y., Quigley, A., Isbister, K., Igarashi, T., Bjørn, P., Drucker, S., Eds.; ACM: New York, NY, USA, 2021; pp. 1–18.
4. Babel, F.; Kraus, J.; Baumann, M. Findings from a Qualitative Field Study with an Autonomous Robot in Public: Exploration of User Reactions and Conflicts. Int. J. Soc. Robot. 2022, 14, 1625–1655.
5. Kupcsik, A.; Hsu, D.; Lee, W.S. Learning Dynamic Robot-to-Human Object Handover from Human Feedback. In Robotics Research; Bicchi, A., Burgard, W., Eds.; Springer Proceedings in Advanced Robotics; Springer International Publishing: Cham, Switzerland, 2018; pp. 161–176.
6. Ortenzi, V.; Cosgun, A.; Pardi, T.; Chan, W.P.; Croft, E.; Kulic, D. Object Handovers: A Review for Robotics. IEEE Trans. Robot. 2021, 37, 1855–1873.
7. Kim, J.; Park, J.; Hwang, Y.K.; Lee, M. Three handover methods in esteem etiquettes using dual arms and hands of home-service robot. In Proceedings of the ICARA 2004: Proceedings of the Second International Conference on Autonomous Robots and Agents, Palmerston North, New Zealand, 13–15 December 2004; Mukhopadhyay, S.C., Sen Gupta, G., Eds.; Institute of Information Sciences and Technology, Massey University: Palmerston North, New Zealand, 2004; pp. 34–39.
8. Cakmak, M.; Srinivasa, S.S.; Lee, M.K.; Forlizzi, J.; Kiesler, S. Human preferences for robot-human hand-over configurations. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 1986–1993.
9. Aleotti, J.; Micelli, V.; Caselli, S. An Affordance Sensitive System for Robot to Human Object Handover. Int. J. Soc. Robot. 2014, 6, 653–666.
10. Haddadin, S.; Albu-Schaffer, A.; Haddadin, F.; Rosmann, J.; Hirzinger, G. Study on Soft-Tissue Injury in Robotics. IEEE Robot. Automat. Mag. 2011, 18, 20–34.
11. Langer, D.; Legler, F.; Krusche, S.; Bdiwi, M.; Palige, S.; Bullinger, A.C. Greif zu: Entwicklung einer Greifstrategie für robotergestützte Objektübergaben mit und ohne Sichtkontakt. Z. Arb. Wiss. 2023, 30, 297–316.
12. Choi, Y.S.; Chen, T.; Jain, A.; Anderson, C.; Glass, J.D.; Kemp, C.C. Hand it over or set it down: A user study of object delivery with an assistive mobile manipulator. In Proceedings of the RO-MAN 2009: The 18th IEEE International Symposium on Robot and Human Interactive Communication, Toyama, Japan, 27 September–2 October 2009; pp. 736–743.
13. Cochet, H.; Guidetti, M. Contribution of Developmental Psychology to the Study of Social Interactions: Some Factors in Play, Joint Attention and Joint Action and Implications for Robotics. Front. Psychol. 2018, 9, 1992.
14. Strabala, K.W.; Lee, M.K.; Dragan, A.D.; Forlizzi, J.L.; Srinivasa, S.; Cakmak, M.; Micelli, V. Towards Seamless Human-Robot Handovers. J. Hum.-Robot. Interact. 2013, 2, 112–132.
15. Bdiwi, M. Integrated Sensors System for Human Safety during Cooperating with Industrial Robots for Handing-over and Assembling Tasks. Procedia CIRP 2014, 23, 65–70.
16. Boucher, J.-D.; Pattacini, U.; Lelong, A.; Bailly, G.; Elisei, F.; Fagel, S.; Dominey, P.F.; Ventre-Dominey, J. I Reach Faster When I See You Look: Gaze Effects in Human-Human and Human-Robot Face-to-Face Cooperation. Front. Neurorobot. 2012, 6, 3.
17. Prada, M.; Remazeilles, A.; Koene, A.; Endo, S. Implementation and experimental validation of Dynamic Movement Primitives for object handover. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Palmer House Hilton, Chicago, IL, USA, 14–18 September 2014; pp. 2146–2153.
18. Newell, A.F.; Gregor, P.; Morgan, M.; Pullin, G.; Macaulay, C. User-Sensitive Inclusive Design. Univ. Access Inf. Soc. 2011, 10, 235–243.
19. Costa, D.; Duarte, C. Alternative modalities for visually impaired users to control smart TVs. Multimed. Tools Appl. 2020, 79, 31931–31955.
20. Da Guia Torres Silva, M.; Albuquerque, E.A.Y.; Goncalves, L.M.G. A Systematic Review on the Application of Educational Robotics to Children with Learning Disability. In Proceedings of the 2022 Latin American Robotics Symposium (LARS), 2022 Brazilian Symposium on Robotics (SBR), and 2022 Workshop on Robotics in Education (WRE), São Bernardo do Campo, Brazil, 18–21 October 2022; pp. 448–453.
21. Kyrarini, M.; Lygerakis, F.; Rajavenkatanarayanan, A.; Sevastopoulos, C.; Nambiappan, H.R.; Chaitanya, K.K.; Babu, A.R.; Mathew, J.; Makedon, F. A Survey of Robots in Healthcare. Technologies 2021, 9, 8.
22. Morgan, A.A.; Abdi, J.; Syed, M.A.Q.; Kohen, G.E.; Barlow, P.; Vizcaychipi, M.P. Robots in Healthcare: A Scoping Review. Curr. Robot. Rep. 2022, 3, 271–280.
23. Tsui, K.M.; Dalphond, J.M.; Brooks, D.J.; Medvedev, M.S.; McCann, E.; Allspaw, J.; Kontak, D.; Yanco, H.A. Accessible Human-Robot Interaction for Telepresence Robots: A Case Study. Paladyn. J. Behav. Robot. 2015, 6, 000010151520150001.
24. Bonani, M.; Oliveira, R.; Correia, F.; Rodrigues, A.; Guerreiro, T.; Paiva, A. What My Eyes Can’t See, A Robot Can Show Me. In Proceedings of the 20th International ACM SIGACCESS Conference on Computers and Accessibility, Galway, Ireland, 22–24 October 2018; Hwang, F., Ed.; ACM: New York, NY, USA, 2018; pp. 15–27.
25. Qbilat, M.; Iglesias, A.; Belpaeme, T. A Proposal of Accessibility Guidelines for Human-Robot Interaction. Electronics 2021, 10, 561.
26. Langer, D.; Legler, F.; Kotsch, P.; Dettmann, A.; Bullinger, A.C. I Let Go Now! Towards a Voice-User Interface for Handovers between Robots and Users with Full and Impaired Sight. Robotics 2022, 11, 112.
27. Leporini, B.; Buzzi, M. Home Automation for an Independent Living. In Proceedings of the 15th International Web for All Conference, Lyon, France, 23–25 April 2018; ACM: New York, NY, USA, 2018; pp. 1–9.
28. Walde, P.; Langer, D.; Legler, F.; Goy, A.; Dittrich, F.; Bullinger, A.C. Interaction Strategies for Handing Over Objects to Blind People. In Proceedings of the Annual Meeting of the Human Factors and Ergonomics Society Europe Chapter, Nantes, France, 2–4 October 2019; Available online: https://www.hfes-europe.org/wp-content/uploads/2019/10/Walde2019poster.pdf (accessed on 11 December 2023).
29. Käppler, M.; Deml, B.; Stein, T.; Nagl, J.; Steingrebe, H. The Importance of Feedback for Object Hand-Overs Between Human and Robot. In Human Interaction, Emerging Technologies and Future Applications III; Ahram, T., Taiar, R., Langlois, K., Choplin, A., Eds.; Advances in Intelligent Systems and Computing; Springer International Publishing: Cham, Switzerland, 2021; pp. 29–35.
30. Brulé, E.; Tomlinson, B.J.; Metatla, O.; Jouffrais, C.; Serrano, M. Review of Quantitative Empirical Evaluations of Technology for People with Visual Impairments. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; Bernhaupt, R., Mueller, F., Verweij, D., Andres, J., McGrenere, J., Cockburn, A., Avellino, I., Goguey, A., Bjørn, P., Zhao, S., et al., Eds.; ACM: New York, NY, USA, 2020; pp. 1–14.
31. Nelles, J.; Kwee-Meier, S.T.; Mertens, A. Evaluation Metrics Regarding Human Well-Being and System Performance in Human-Robot Interaction: A Literature Review. In Proceedings of the 20th Congress of the International Ergonomics Association (IEA 2018), Florence, Italy, 26–30 August 2018; Bagnara, S., Tartaglia, R., Albolino, S., Alexander, T., Fujita, Y., Eds.; Advances in Intelligent Systems and Computing; Springer International Publishing: Cham, Switzerland, 2019; pp. 124–135.
32. ISO 9241-11:2018; ISO International Organization for Standardization. DIN EN ISO 9241-11:2018-11, Ergonomie der Mensch-System-Interaktion - Teil 11: Gebrauchstauglichkeit: Begriffe und Konzepte (ISO 9241-11:2018). Deutsche Fassung EN ISO 9241-11:2018; Beuth Verlag GmbH: Berlin, Germany, 2018.
33. Brooke, J. SUS: A ‘Quick and Dirty’ Usability Scale. In Usability Evaluation in Industry; Jordan, P.W., Thomas, B., Weerdmeester, B.A., McClelland, I.L., Eds.; Taylor and Francis: Bristol, PA, USA; London, UK, 1996; pp. 189–194.
34. Bangor, A.; Kortum, P.T.; Miller, J.T. An Empirical Evaluation of the System Usability Scale. Int. J. Hum.-Comput. Interact. 2008, 24, 574–594.
35. Lewis, J.R. The System Usability Scale: Past, Present, and Future. Int. J. Hum.-Comput. Interact. 2018, 34, 577–590.
36. ISO 9241-210:2019; ISO International Organization for Standardization. DIN EN ISO 9241-210:2020-03, Ergonomie der Mensch-System-Interaktion - Teil 210: Menschzentrierte Gestaltung Interaktiver Systeme (ISO 9241-210:2019). Deutsche Fassung EN ISO 9241-210:2019; Beuth Verlag GmbH: Berlin, Germany, 2019.
37. Hassenzahl, M. The Effect of Perceived Hedonic Quality on Product Appealingness. Int. J. Hum.-Comput. Interact. 2001, 13, 481–499.
38. Laugwitz, B.; Held, T.; Schrepp, M. Construction and Evaluation of a User Experience Questionnaire. In HCI and Usability for Education and Work; Holzinger, A., Ed.; Lecture Notes in Computer Science; Springer Berlin Heidelberg: Berlin/Heidelberg, Germany, 2008; pp. 63–76.
39. Universal Robots. Die Zukunft ist Kollaborierend. 2016. Available online: https://www.universal-robots.com/de/download-center/#/cb-series/ur10 (accessed on 8 December 2021).
40. Schrepp, M.; Hinderks, A.; Thomaschewski, J. Design and Evaluation of a Short Version of the User Experience Questionnaire (UEQ-S). Int. J. Interact. Multimed. Artif. Intell. 2017, 4, 103.
41. R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2022; Available online: https://www.R-project.org/ (accessed on 19 September 2022).
42. Morey, R.D.; Rouder, J.N. BayesFactor: Computation of Bayes Factors for Common Designs. R Package Version 0.9.12-4.2. 2018. Available online: https://CRAN.R-project.org/package=BayesFactor (accessed on 19 September 2022).
43. Stekhoven, D.J.; Bühlmann, P. MissForest–non-parametric missing value imputation for mixed-type data. Bioinformatics 2012, 28, 112–118.
44. Platias, C.; Petasis, G. A Comparison of Machine Learning Methods for Data Imputation. In Proceedings of the 11th Hellenic Conference on Artificial Intelligence, Athens, Greece, 2–4 September 2020; Spyropoulos, C., Varlamis, I., Androutsopoulos, I., Malakasiotis, P., Eds.; ACM: New York, NY, USA, 2020; pp. 150–159.
45. Stekhoven, D.J. missForest: Nonparametric Missing Value Imputation using Random Forest. R Package Version 1.5 (2022-04-14). Available online: https://cran.r-project.org/web/packages/missForest/index.html (accessed on 19 September 2022).

| Subcomponent of Usability According to ISO 9241-11:2018 [32] | Metrics in ISO Norm | Metrics in [6] |
|---|---|---|
| Effectiveness: “accuracy and completeness with which users achieve specified goals” (p. 18) | objective: success rate, number of errors | objective: task performance, success rate 1, errors occurred |
| | subjective: perceived level of target achievement | subjective: robot relative contribution |
| Efficiency: “resources used in relation to the results achieved” (p. 19), e.g., time, effort, costs, materials | objective: task completion time, physical effort | objective: task performance, (receiver’s) task completion time 1, interaction force, total handover time 1, reaction time, fluency (concurrent activity vs. idle time) |
| | subjective: perceived time, perceived effort | subjective: workload (NASA-TLX), HR-fluency 1 |
| Satisfaction: “extent to which the user’s physical, cognitive and emotional responses that result from the use of a system […] meet the user’s needs and expectations” (p. 20) | objective: repeated use of a system (long-term) | objective: task performance, psycho-physiological measures |
| | subjective: satisfaction with task completion, overall impression of the system | subjective: improvement preference |
| | | additional concepts: trust in the robot 1, working alliance 1, positive teammate traits, safety |

| Variable | s/o 1 | Measure | Description | Unit | Time Assessed |
|---|---|---|---|---|---|
| Overall usability | s | SUS | System Usability Scale (SUS) [33], 10 items | from 1 = strong disagreement to 5 = strong agreement | End of study |
| Effectiveness | o | success rate | ratio of successful trials (participants successfully received the object independent of their grasping behavior) and the total number of trials (see Section 2.3) | Percent | Across all trials |
| | | joint action failure rate | number of unsuccessful trials caused by failures during joint actions of the human and the robot divided by the total number of trials (see Section 2.3) | Percent | Across all trials |
| | | robotic failure rate | number of unsuccessful trials caused by robotic failures divided by the total number of trials (see Section 2.3) | Percent | Across all trials |
| | s | perceived easiness 2 | single item ‘getting the object was easy’ [17] | from 1 = strongly disagree to 10 = strongly agree | After each trial 2 |
| Efficiency | o | task completion time | the time period between the robot asking for participants’ order and the robot arriving at home position again after handing over the object | Minutes | Each trial 2 |
| | | handover time | the time period between the robot requesting handover readiness of participants and the final opening of the robotic gripper | Minutes | Each trial 2 |
| | s | perceived efficiency | single item ‘the duration of the object handover was acceptable’ | from 1 = strongly disagree to 10 = strongly agree | After each trial 2 |
| User Experience | s | UEQ-S | User Experience Questionnaire [38] in its short version [40], 8 items | semantic differential rated on a 7-point scale (scale range −3 to 3) | End of study |
| Satisfaction | o | Objective preference for modality | proportion of chosen handovers with modality direct handover ‘midair’ and indirect handover ‘on table’ during block 1 | | First 3 trials |
| | s | qualitative feedback | Open questions | - | End of study |
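The three objective effectiveness measures defined in the table above are all ratios over the total number of trials. A minimal sketch of that computation follows; the outcome labels, the function name `effectiveness_rates`, and the example data are hypothetical, since the study's actual coding scheme and raw data are not given here.

```python
from collections import Counter

# Hypothetical outcome labels for a single trial (an assumption for illustration).
SUCCESS = "success"
JOINT_ACTION_FAILURE = "joint_action_failure"
ROBOTIC_FAILURE = "robotic_failure"


def effectiveness_rates(outcomes):
    """Return success, joint action failure and robotic failure rates in percent."""
    if not outcomes:
        raise ValueError("at least one trial is required")
    counts = Counter(outcomes)
    total = len(outcomes)
    # Each rate is the number of trials with that outcome divided by all trials.
    return {
        label: 100.0 * counts[label] / total
        for label in (SUCCESS, JOINT_ACTION_FAILURE, ROBOTIC_FAILURE)
    }


# Made-up example: 20 trials, 18 successful, one failure of each kind.
example_trials = [SUCCESS] * 18 + [JOINT_ACTION_FAILURE, ROBOTIC_FAILURE]
example_rates = effectiveness_rates(example_trials)
print(example_rates)
```

Because every trial is assigned exactly one outcome in this sketch, the three rates add up to 100%.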

| Variable | Handover Modality | Object | BVI M (SD) | Sighted M (SD) | BF01 |
|---|---|---|---|---|---|
| success rate | midair | cup | 100% | 100% | - |
| | | knife | 90% | 100% | 1.06 ± 0.01% |
| | | spanner | 89% | 100% | 1.05 ± 0.01% |
| | on table | cup | 100% | 100% | - |
| | | knife | 94% | 100% | 1.37 ± 0.00% |
| | | spanner | 94% | 100% | 1.37 ± 0.00% |
| joint action failure rate 1 | midair | cup | - | - | - |
| | | knife | 5% | - | - |
| | | spanner | 5% | - | - |
| | on table | cup | - | - | - |
| | | knife | - | - | - |
| | | spanner | - | - | - |
| robotic failure rate 1 | midair | cup | - | - | - |
| | | knife | 5% | - | - |
| | | spanner | 5% | - | - |
| | on table | cup | - | - | - |
| | | knife | 6% | - | - |
| | | spanner | 6% | - | - |
| perceived easiness 2 (scale range 1 to 10) | midair | cup | 7.75 (2.40) | 8.16 (2.17) | 1.71 ± 0.0% |
| | | knife | 6.35 (3.35) | 9.35 (0.75) | 0.06 ± 0.0% |
| | | spanner | 6.58 (3.34) | 8.84 (2.06) | 0.67 ± 0.0% |
| | on table | cup | 9.65 (0.70) | 9.68 (0.67) | 1.80 ± 0.0% |
| | | knife | 9.06 (2.19) | 9.78 (0.55) | 1.40 ± 0.0% |
| | | spanner | 8.82 (2.40) | 9.72 (0.58) | 1.26 ± 0.0% |
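The BF01 column in the table above expresses how much more likely the data are under the null hypothesis (presumably no difference between the BVI and sighted groups) than under the alternative, so values below 1 point toward a group difference. The sketch below shows one way to read such values. The evidence labels (anecdotal below 3, moderate from 3 to 10, strong above 10) are a commonly used convention assumed here for illustration, not thresholds stated in this article, and `describe_bf01` is a hypothetical helper name.

```python
def describe_bf01(bf01):
    """Return (favored hypothesis, evidence label, ratio in the favored direction)."""
    if bf01 <= 0:
        raise ValueError("a Bayes factor must be positive")
    # Express the evidence as a ratio >= 1 in the direction it points.
    if bf01 >= 1:
        favored, ratio = "H0", bf01
    else:
        favored, ratio = "H1", 1.0 / bf01  # BF10 is the reciprocal of BF01
    # Assumed conventional cut-offs; not taken from the article.
    if ratio < 3:
        label = "anecdotal"
    elif ratio <= 10:
        label = "moderate"
    else:
        label = "strong"
    return favored, label, ratio


# BF01 values for perceived easiness in the midair condition, taken from the table above.
for name, bf01 in [("cup", 1.71), ("knife", 0.06), ("spanner", 0.67)]:
    favored, label, ratio = describe_bf01(bf01)
    print(f"midair, {name}: BF01 = {bf01} -> {label} evidence for {favored} (ratio {ratio:.2f})")
```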

| Variable | Handover Modality | Object | BVI M (SD) | Sighted M (SD) | BF01 |
|---|---|---|---|---|---|
| task completion time 1 (in minutes) | midair | cup | 5.09 (0.73) | 5.17 (0.69) | 1.76 ± 0.0% |
| | | knife | 5.98 (0.76) | 5.97 (1.36) | 1.82 ± 0.0% |
| | | spanner | 6.29 (0.97) | 6.19 (0.87) | 1.74 ± 0.0% |
| | on table | cup | 4.31 (0.51) | 4.89 (1.23) | 0.82 ± 0.0% |
| | | knife | 4.67 (0.66) | 4.92 (0.87) | 1.43 ± 0.0% |
| | | spanner | 4.78 (0.73) | 4.96 (0.98) | 1.62 ± 0.0% |
| handover time 1,2 (in minutes) | midair | cup | 1.39 (0.40) | 1.31 (0.47) | 1.65 ± 0.0% |
| | | knife | 1.93 (0.62) | 1.66 (0.55) | 0.71 ± 0.0% |
| | | spanner | 2.07 (0.90) | 1.72 (0.43) | 0.79 ± 0.0% |
| perceived efficiency 1 (scale 1 to 10) | midair | cup | 5.85 (2.66) | 6.21 (2.74) | 1.77 ± 0.0% |
| | | knife | 5.65 (2.96) | 6.50 (2.40) | 1.65 ± 0.0% |
| | | spanner | 5.47 (3.04) | 5.74 (2.16) | 1.80 ± 0.0% |
| | on table | cup | 6.41 (2.85) | 6.63 (2.52) | 1.78 ± 0.0% |
| | | knife | 6.41 (2.85) | 6.28 (2.47) | 1.77 ± 0.0% |
| | | spanner | 5.88 (3.12) | 6.83 (2.20) | 1.56 ± 0.0% |

| Relationship | Repeated Measures Correlation 1 | Kendall’s Tau | z | p-Value |
|---|---|---|---|---|
| overall usability and specific measures | | | | |
| SUS × success rate 2 | - | −0.006 | −0.045 | 0.964 |
| SUS × UEQ-S overall | - | 0.302 | 2.61 | <0.001 |
| objective and subjective measures | | | | |
| effectiveness: | | | | |
| success rate × perceived easiness 2 | 0.544 | - | 8.33 | <0.001 |
| efficiency: | | | | |
| task completion time × perceived efficiency 2 | −0.242 | - | 3.41 | <0.001 |
| handover time × perceived efficiency 2 | −0.191 | - | 2.69 | 0.007 |
| human idle time × perceived efficiency 2 | 0.076 | - | 1.06 | 0.287 |
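The table above reports Kendall's tau for the relations involving the SUS. As a minimal sketch of how such a rank correlation is obtained, the function below computes the tau-b variant, which corrects for ties. The source does not state which variant was used, so tau-b is an assumption here, and the function name and example scores are hypothetical rather than study data.

```python
from itertools import combinations
from math import sqrt


def kendall_tau_b(x, y):
    """Kendall's tau-b for two equally long sequences of numbers."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    if len(x) < 2:
        raise ValueError("at least two observations are required")
    concordant = discordant = tied_x_only = tied_y_only = 0
    # Compare every pair of observations once.
    for (x1, y1), (x2, y2) in combinations(zip(x, y), 2):
        dx, dy = x1 - x2, y1 - y2
        if dx == 0 and dy == 0:
            continue  # tied on both variables: enters neither count
        if dx == 0:
            tied_x_only += 1
        elif dy == 0:
            tied_y_only += 1
        elif dx * dy > 0:
            concordant += 1  # both variables order the pair the same way
        else:
            discordant += 1  # the variables order the pair in opposite ways
    denominator = sqrt(
        (concordant + discordant + tied_x_only)
        * (concordant + discordant + tied_y_only)
    )
    if denominator == 0:
        raise ValueError("tau-b is undefined when one variable is constant")
    return (concordant - discordant) / denominator


# Hypothetical per-participant scores, for illustration only.
sus_scores = [62.5, 70.0, 77.5, 85.0, 90.0]
ueq_scores = [0.9, 1.4, 1.2, 2.0, 2.3]
print(kendall_tau_b(sus_scores, ueq_scores))
```

The pairwise loop is quadratic in the number of observations, which is unproblematic for sample sizes typical of user studies.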

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Langer, D.; Legler, F.; Diekmann, P.; Dettmann, A.; Glende, S.; Bullinger, A.C. Got It? Comparative Ergonomic Evaluation of Robotic Object Handover for Visually Impaired and Sighted Users. Robotics 2024, 13, 43. https://doi.org/10.3390/robotics13030043
