Hand Teleoperation with Combined Kinaesthetic and Tactile Feedback: A Full Upper Limb Exoskeleton Interface Enhanced by Tactile Linear Actuators

Abstract

1. Introduction

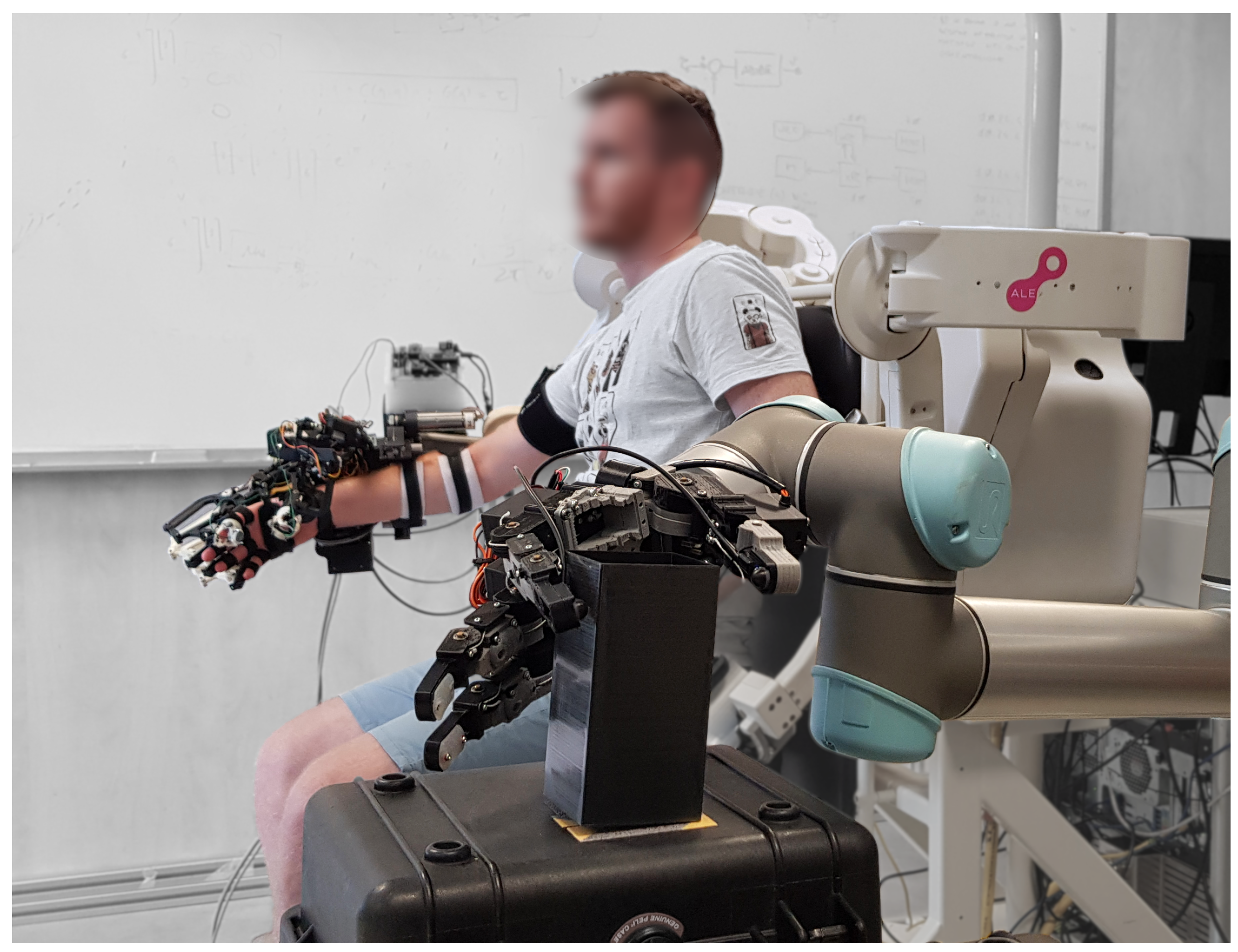

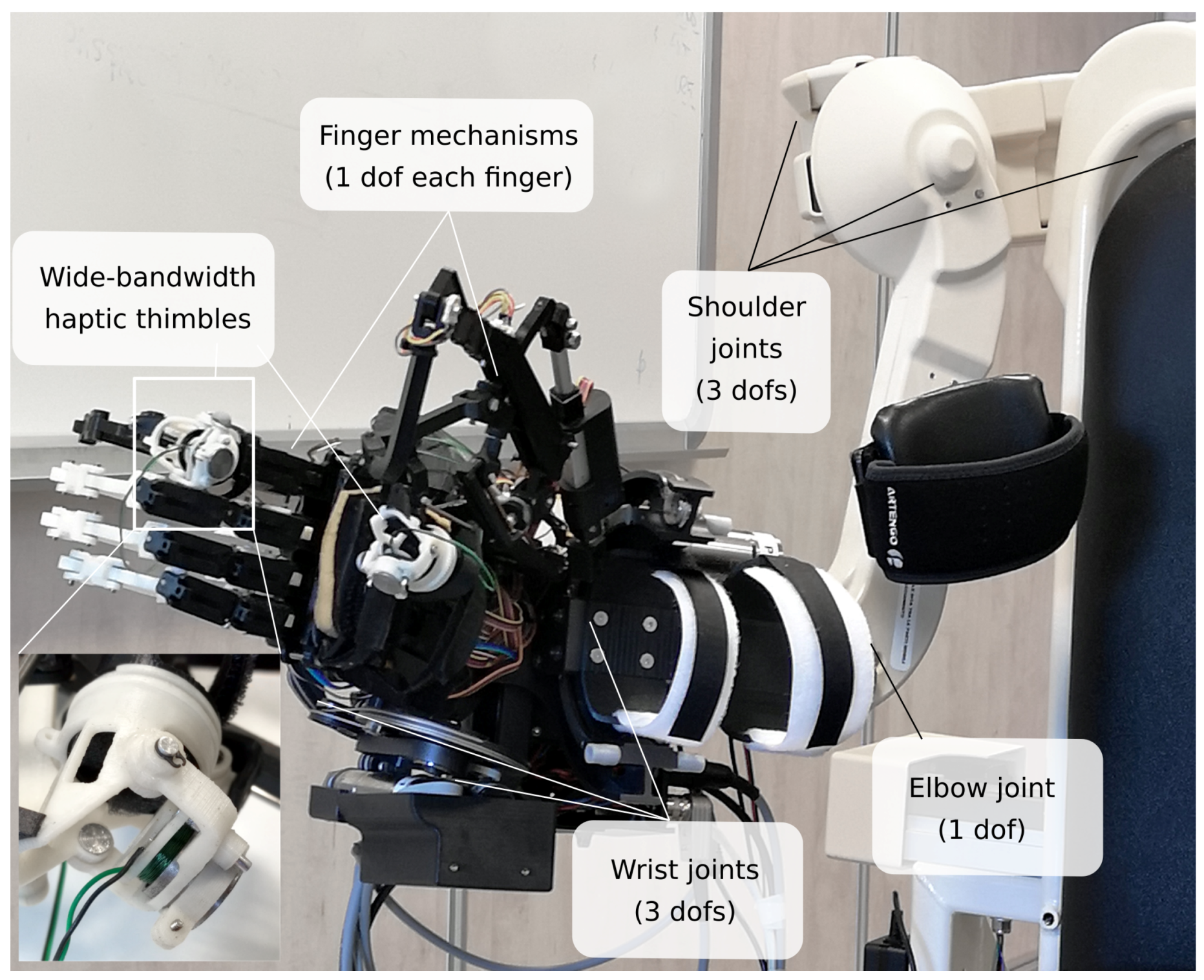

- The proposed system is a full upper limb exoskeleton whose hand module provides both kinaesthetic force feedback at the fingertips and wide-bandwidth cutaneous force feedback through dedicated linear tactile actuators. On the follower side, sensitive force sensors at the fingertips supply clean contact events and force-modulated signals that drive this feedback (Figure 1).

- The full arm exoskeleton allows the hand exoskeleton to be used transparently, providing tracking, gravity compensation, and dynamic compensation over a large upper limb workspace. It also opens the possibility (not implemented in this setup) of delivering full kinaesthetic force feedback at each segment of the upper limb.

- Using a hand exoskeleton in place of a haptic glove allows larger and heavier mechanisms to be used to transmit forces properly to the fingers, as well as heavier actuators capable of more intense, wide-bandwidth tactile feedback. In this paper, the consistency and richness of the resulting haptic feedback are evaluated by measuring the interaction forces during a pick-and-place teleoperation task.
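
The division of labour described above, with sustained grip forces rendered kinaesthetically by the hand exoskeleton and fast contact cues rendered by the tactile actuators, can be illustrated with a minimal Python sketch. It is not the authors' controller: the first-order filter, the gains, the cutoff used in the usage example, and the helper names (`FirstOrderLowPass`, `split_feedback`) are hypothetical. Only the 40 N and 0.5 N force limits come from the specification tables of this article.

```python
import math
from dataclasses import dataclass

# Force limits taken from the specification tables of this article.
HAND_EXO_MAX_FORCE_N = 40.0  # max. continuous force of the hand exoskeleton motor
THIMBLE_MAX_FORCE_N = 0.5    # max. continuous force of the tactile thimble


@dataclass
class FirstOrderLowPass:
    """Discrete first-order (RC) low-pass filter; hypothetical helper."""
    cutoff_hz: float
    sample_hz: float
    state: float = 0.0

    def step(self, x: float) -> float:
        dt = 1.0 / self.sample_hz
        rc = 1.0 / (2.0 * math.pi * self.cutoff_hz)
        alpha = dt / (rc + dt)  # smoothing factor in (0, 1)
        self.state += alpha * (x - self.state)
        return self.state


def clamp(x: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, x))


def split_feedback(force_n: float, lp: FirstOrderLowPass,
                   kin_gain: float = 1.0, tac_gain: float = 0.05):
    """Route one follower fingertip force sample to both feedback channels.

    The low-pass component drives the hand exoskeleton (kinaesthetic);
    the high-pass residual, which is large at contact onset, plus a scaled
    copy of the sustained force drives the tactile actuator. Assuming the
    actuator can only press on the fingertip, the tactile command is kept >= 0.
    Gains are illustrative assumptions, not values from the article.
    """
    low = lp.step(force_n)
    high = force_n - low
    kinaesthetic_n = clamp(kin_gain * low, 0.0, HAND_EXO_MAX_FORCE_N)
    tactile_n = clamp(tac_gain * low + high, 0.0, THIMBLE_MAX_FORCE_N)
    return kinaesthetic_n, tactile_n
```

Fed a constant 4 N at 1 kHz through a 5 Hz filter, the first sample saturates the tactile channel at 0.5 N (the contact onset) while the kinaesthetic channel only begins to ramp up; once the filter has settled, the kinaesthetic command sits at 4 N and the tactile command at 0.2 N.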

2. Materials and Methods

2.1. The Exoskeleton Interface

2.1.1. The ALEX Arm Exoskeleton Module

2.1.2. The WRES Wrist Exoskeleton Module

2.1.3. The Hand Exoskeleton Module

2.1.4. The Tactile Feedback Module

3. Teleoperation Experimental Setup

3.1. Follower Robotic System

3.2. Teleoperation Setup

3.3. Experimental Procedure

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| ALEX Arm-Exo Specifications | |
|---|---|
| Mass of moving parts | ≅3 kg |
| Number of actuated DoF | 4 |
| Payload | 5 kg |
| Interaction torque in transparency mode: shoulder joints | ≅0.25 N·m |
| Interaction torque in transparency mode: elbow joint | ≅0.12 N·m |
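
In transparency mode the arm exoskeleton must cancel its own weight so that the residual interaction torques stay as small as those listed above. The feedforward gravity term can be sketched for a simplified planar two-link model in a vertical plane. ALEX actually actuates four DoF, and the link masses, lengths, and centre-of-mass distances used with this sketch are hypothetical values, not ALEX parameters; velocity-dependent (dynamic) compensation is also omitted.

```python
import math
from dataclasses import dataclass

G = 9.81  # gravitational acceleration, m/s^2


@dataclass(frozen=True)
class Link:
    mass_kg: float   # link mass (hypothetical)
    length_m: float  # joint-to-joint length (hypothetical)
    com_m: float     # distance from proximal joint to centre of mass (hypothetical)


def gravity_torques(q_shoulder: float, q_elbow: float, upper: Link, fore: Link):
    """Gravity torques (N·m) of a planar two-link arm in a vertical plane.

    q_shoulder is measured from the horizontal, q_elbow relative to the upper
    arm, both in radians. Adding these torques to the joint command cancels
    the static weight of the simplified linkage.
    """
    c1 = math.cos(q_shoulder)
    c12 = math.cos(q_shoulder + q_elbow)
    tau_elbow = fore.mass_kg * G * fore.com_m * c12
    tau_shoulder = (upper.mass_kg * upper.com_m + fore.mass_kg * upper.length_m) * G * c1 + tau_elbow
    return tau_shoulder, tau_elbow
```

With the arm held horizontal the shoulder must support both links, whereas with the arm pointing straight up the gravity torques vanish, which is why a constant offset cannot replace a pose-dependent compensation term.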

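| WRES Wrist-Exo Specifications | |
|---|---|
| Device mass | ≅0.29 kg |
| Range of motion: pronation/supination (PS) | 146° |
| Range of motion: flexion/extension (FE) | 75° |
| Range of motion: radial/ulnar deviation (RU) | 4° |
| Payload (continuous torque) | 1.62 N·m |
| Interaction torque in transparency mode: PS | ≤0.8 N·m at 60°/s |
| Interaction torque in transparency mode: FE | ≤0.3 N·m at 200°/s |
| Interaction torque in transparency mode: RU | ≤0.3 N·m at 60°/s |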

| Hand Exoskeleton Specifications | |
|---|---|
| Device mass | ≅0.35 kg |
| Motor gear ratio | 35:1 |
| Motor stroke | 50 mm |
| Max. continuous motor force | 40 N |
| Max. velocity | 32 mm/s |

| Haptic Thimble Specifications | |
|---|---|
| Dimensions | 66 × 35 × 38 mm |
| Mass | ≅0.03 kg |
| Max. continuous force | 0.5 N |
| Bandwidth | 0–250 Hz |
| Stroke | 4 mm |
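
The 0–250 Hz bandwidth is what lets the thimble reproduce short contact events rather than only slowly varying pressure. One common way to exploit it, borrowed from event-based haptics and not necessarily the rendering used in this work, is to play a brief decaying sinusoid at contact onset. The sketch below assumes the cue frequency, decay constant, and sample rate, and only borrows the 0.5 N and 250 Hz limits from the table; the function name `contact_transient` is hypothetical. Because the actuator presses on the fingertip pad, such a zero-mean cue would presumably be added to the sustained force command rather than sent on its own.

```python
import math

THIMBLE_MAX_FORCE_N = 0.5     # max. continuous force (spec table)
THIMBLE_BANDWIDTH_HZ = 250.0  # upper edge of the actuator bandwidth (spec table)


def contact_transient(amplitude_n: float, freq_hz: float, decay_s: float,
                      sample_hz: float, duration_s: float) -> list:
    """Samples of a decaying sinusoid used as a contact-onset cue (hypothetical).

    Rejects cue frequencies above the actuator bandwidth and sample rates
    below the Nyquist rate, and caps the amplitude at the rated force.
    """
    if freq_hz > THIMBLE_BANDWIDTH_HZ:
        raise ValueError("cue frequency exceeds the actuator bandwidth")
    if sample_hz <= 2.0 * freq_hz:
        raise ValueError("sample rate must exceed twice the cue frequency")
    amplitude_n = min(amplitude_n, THIMBLE_MAX_FORCE_N)
    n = int(round(duration_s * sample_hz))
    return [
        amplitude_n
        * math.exp(-(i / sample_hz) / decay_s)              # exponential envelope
        * math.sin(2.0 * math.pi * freq_hz * i / sample_hz)  # carrier inside the bandwidth
        for i in range(n)
    ]
```

Under these assumptions, a 150 Hz cue with a 10 ms decay sampled at 5 kHz yields 250 samples over 50 ms, and its envelope has fallen to under 1% of the initial value by the last sample.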


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Leonardis, D.; Gabardi, M.; Marcheschi, S.; Barsotti, M.; Porcini, F.; Chiaradia, D.; Frisoli, A. Hand Teleoperation with Combined Kinaesthetic and Tactile Feedback: A Full Upper Limb Exoskeleton Interface Enhanced by Tactile Linear Actuators. Robotics 2024, 13, 119. https://doi.org/10.3390/robotics13080119
