An ANFIS-Based Strategy for Autonomous Robot Collision-Free Navigation in Dynamic Environments

Abstract

1. Introduction

- Development of a novel control strategy that uses Adaptive Neuro-Fuzzy Inference System (ANFIS) models to simplify the decision-making process and thereby improve the system’s computational efficiency.

- Use of four ANFIS controllers (left- and right-motor controllers for both target tracking and obstacle avoidance), designed to minimize the rule sets of the corresponding fuzzy controllers while maintaining navigation performance.

- Efficient navigation in obstacle-cluttered environments through a simplified rule structure.

- Integration of a path-planning algorithm that determines optimal trajectories, improving the efficiency and effectiveness of autonomous robot navigation.

2. Kinematics of a Two-Wheel Differential Drive Robot

3. Strategy for Path Planning

3.1. Path Planning Concepts

3.2. The Bump-Surface Concept

4. Basic Concepts of the Adaptive Neuro-Fuzzy Inference System (ANFIS)

5. Designing the ANFIS Controllers for the Automated Guided Vehicle

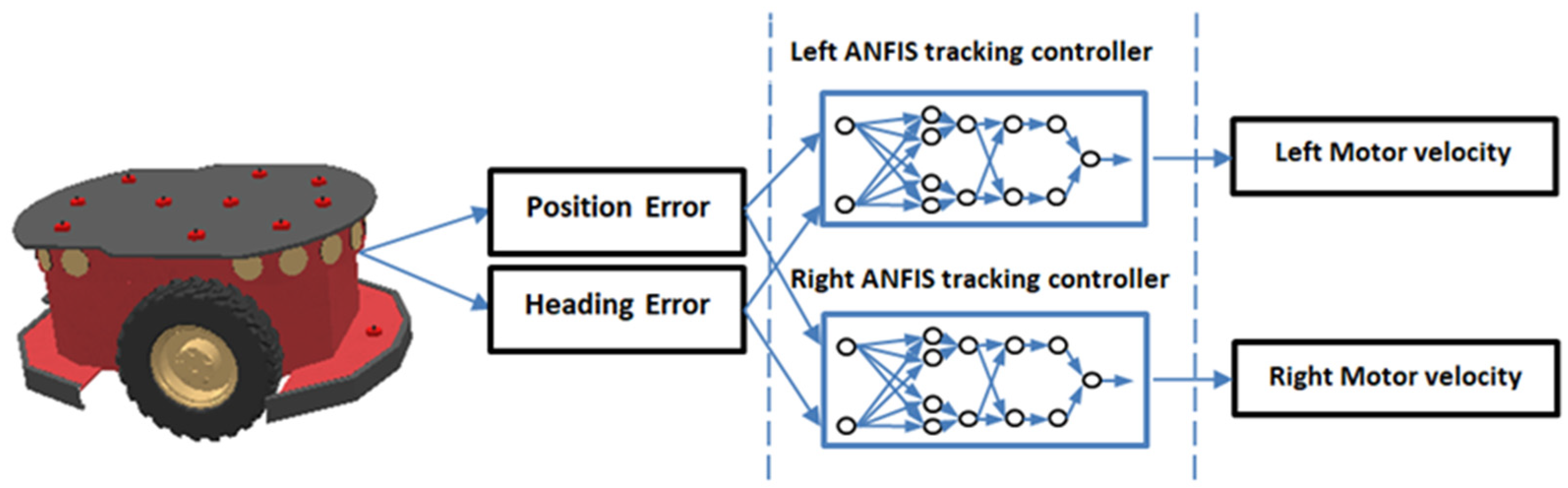

5.1. ANFIS Tracking Controllers

5.1.1. Data Set for ANFIS Tracking Controllers

Position error:

- ‘small’ ranges from 0 to 0.13, indicating that the robot is very close to the target.

- ‘medium’ ranges from 0.05 to 0.3, indicating a moderate distance from the target.

- ‘large’ ranges from 0.15 to 1, indicating that the robot is far from the target.

Heading error:

- ‘negative big’ ranges from −1 to −0.3, indicating a significant deviation in the negative direction.

- ‘negative small’ ranges from −0.55 to −0.07, indicating a moderate negative deviation.

- ‘zero’ ranges from −0.15 to 0.15, indicating negligible deviation from the desired heading.

- ‘positive small’ ranges from 0.07 to 0.55, indicating a moderate positive deviation.

- ‘positive big’ ranges from 0.3 to 1, indicating a significant deviation in the positive direction.

Motor velocity (right and left motors):

- ‘slow’ ranges from 0 to 0.4, representing low motor speeds.

- ‘medium’ ranges from 0.27 to 0.6, representing intermediate motor speeds.

- ‘fast’ ranges from 0.47 to 1, representing high motor speeds.
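The ranges above specify each linguistic term only by its support. A minimal fuzzification sketch follows, assuming membership-function shapes that the text does not state: inner terms are taken as triangles peaking at the midpoint of their support, and the first and last terms of each variable as shoulders that keep full membership toward the end of the range. The dictionary names and the function `fuzzify` are illustrative only.

```python
# Supports (lower, upper) of each linguistic term, copied from the lists above.
# Dict order matters: terms are listed from the lowest to the highest range.
POSITION_ERROR = {"small": (0.0, 0.13), "medium": (0.05, 0.3), "large": (0.15, 1.0)}
HEADING_ERROR = {
    "negative big": (-1.0, -0.3),
    "negative small": (-0.55, -0.07),
    "zero": (-0.15, 0.15),
    "positive small": (0.07, 0.55),
    "positive big": (0.3, 1.0),
}
MOTOR_VELOCITY = {"slow": (0.0, 0.4), "medium": (0.27, 0.6), "fast": (0.47, 1.0)}


def fuzzify(x, terms):
    """Return the membership degree of crisp input x in every term.

    ASSUMPTION: inner terms are triangles peaking at the midpoint of their
    support; the first and last terms are shoulders equal to 1 at the ends
    of the range. The paper's actual membership shapes may differ.
    """
    names = list(terms)
    degrees = {}
    for i, name in enumerate(names):
        a, c = terms[name]
        if i == 0:  # left shoulder: 1 at or below a, falling to 0 at c
            mu = 1.0 if x <= a else max(0.0, (c - x) / (c - a))
        elif i == len(names) - 1:  # right shoulder: 0 at a, rising to 1 at c
            mu = 1.0 if x >= c else max(0.0, (x - a) / (c - a))
        else:  # triangle with apex at the midpoint b of the support
            b = (a + c) / 2.0
            if x <= a or x >= c:
                mu = 0.0
            elif x <= b:
                mu = (x - a) / (b - a)
            else:
                mu = (c - x) / (c - b)
        degrees[name] = mu
    return degrees
```

Under these assumed shapes, a position error of 0.1 belongs to ‘small’ with a degree of about 0.23 and to ‘medium’ with a degree of 0.4, while a heading error of 0 belongs fully to ‘zero’.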

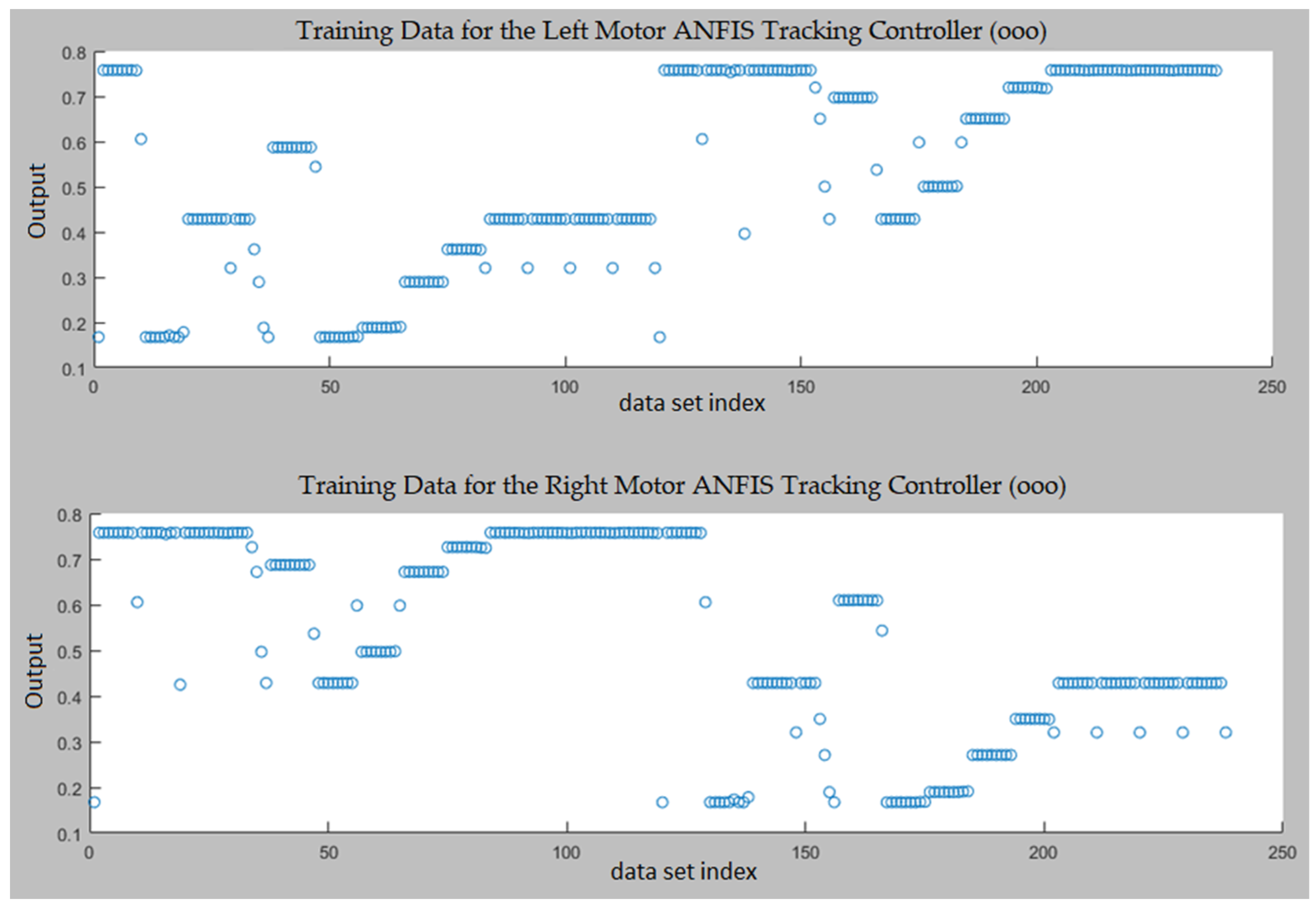

5.1.2. Training ANFIS for Tracking Controllers (Left and Right)

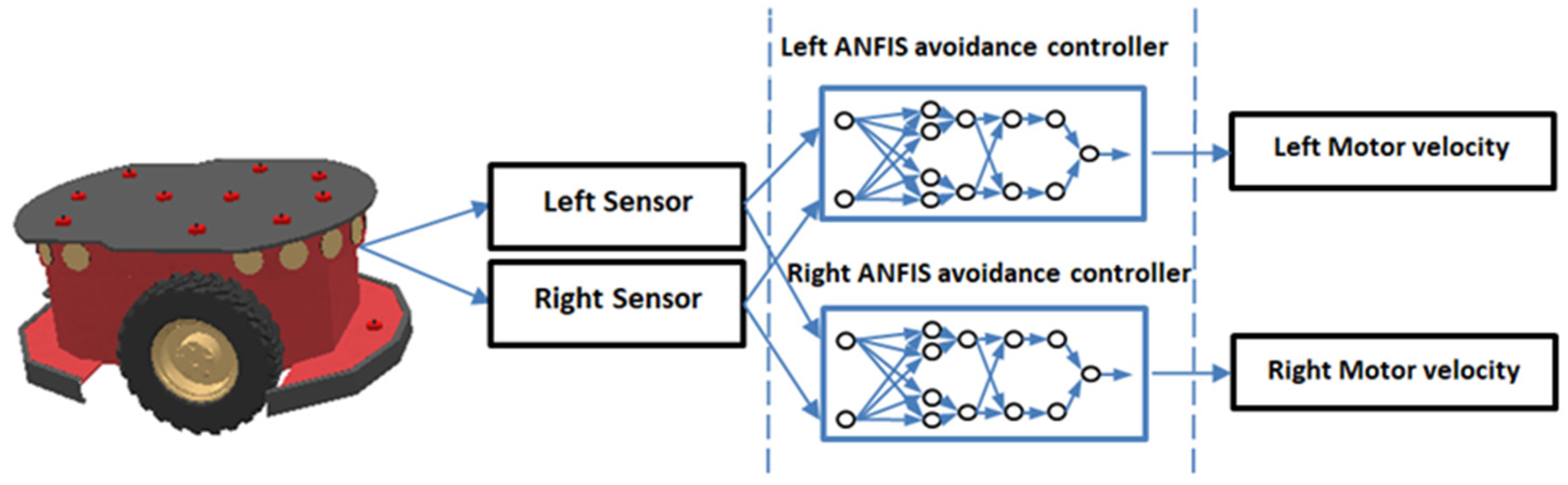

5.2. ANFIS Avoidance Controllers

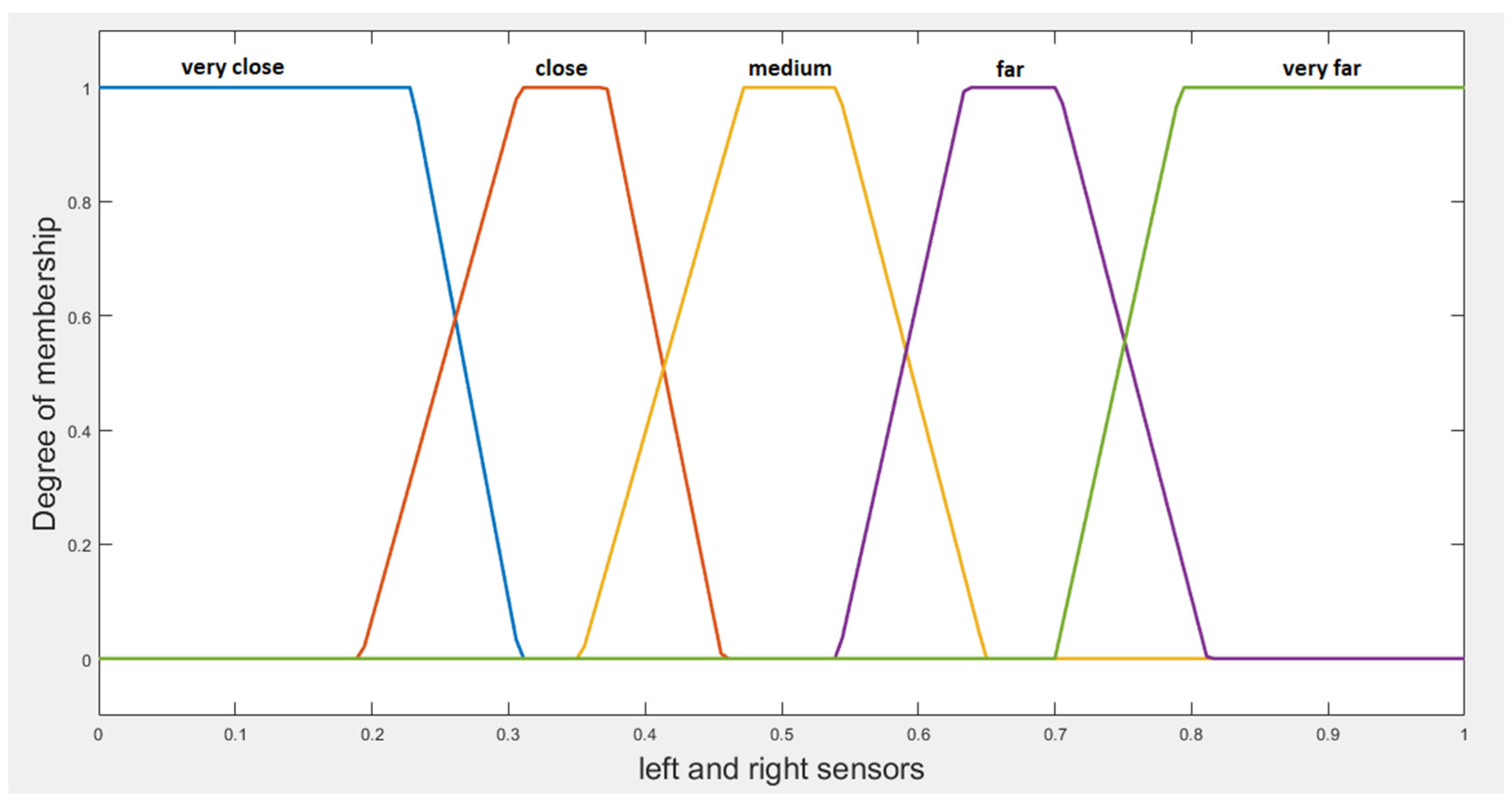

5.2.1. Data Set for ANFIS Avoidance Controllers

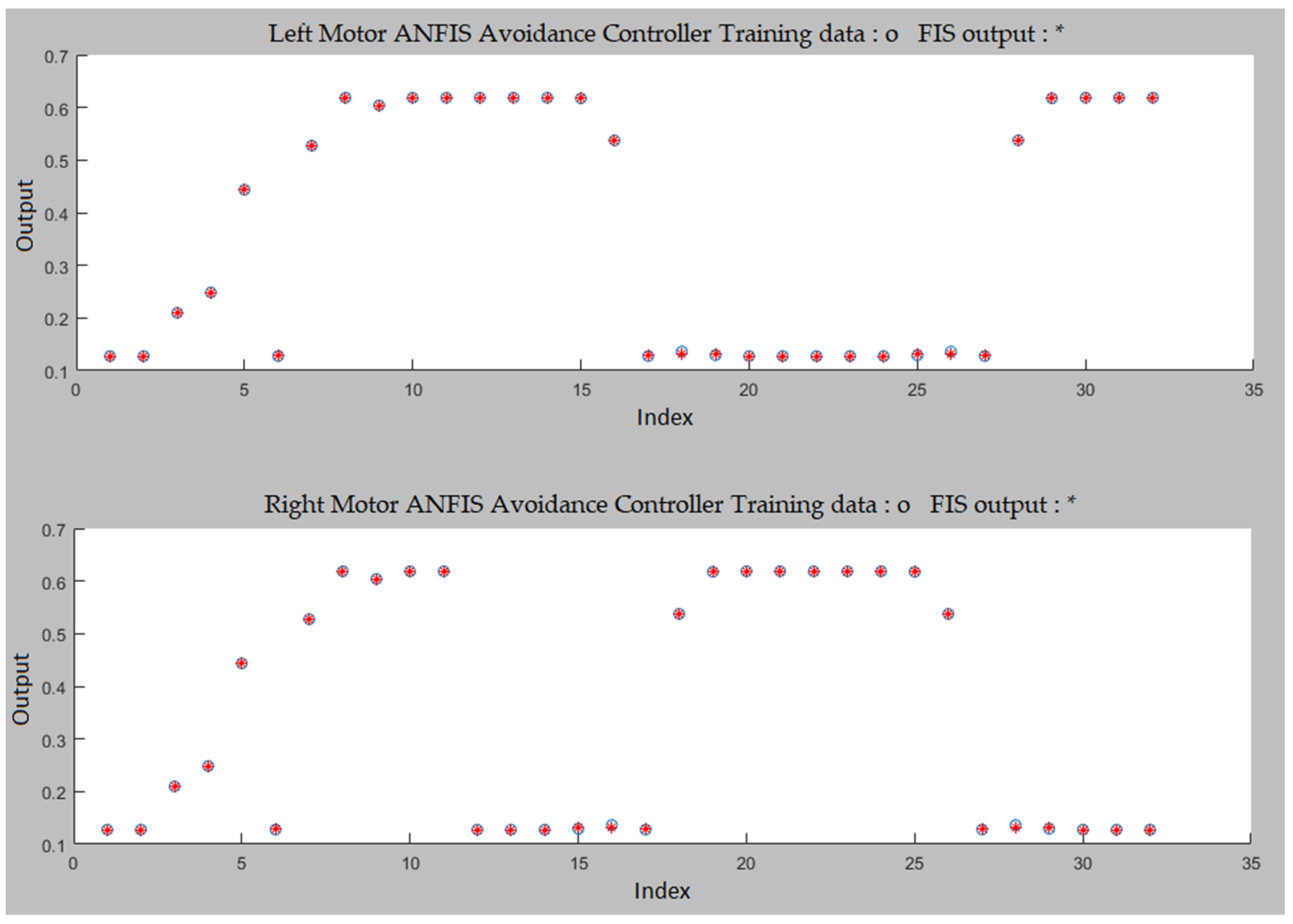

5.2.2. Training ANFIS for Avoidance Controllers (Left and Right)

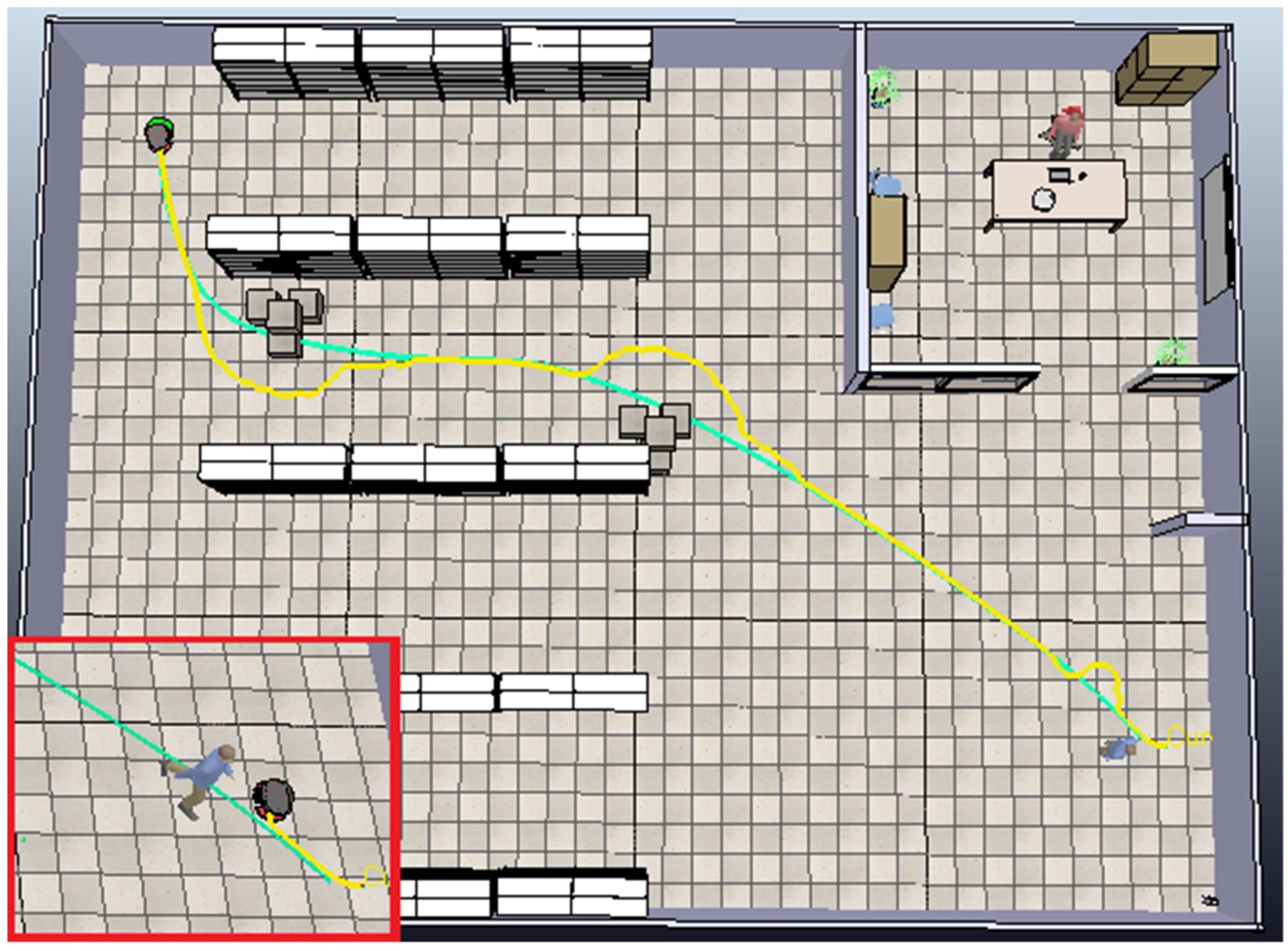

6. Simulation Experiments

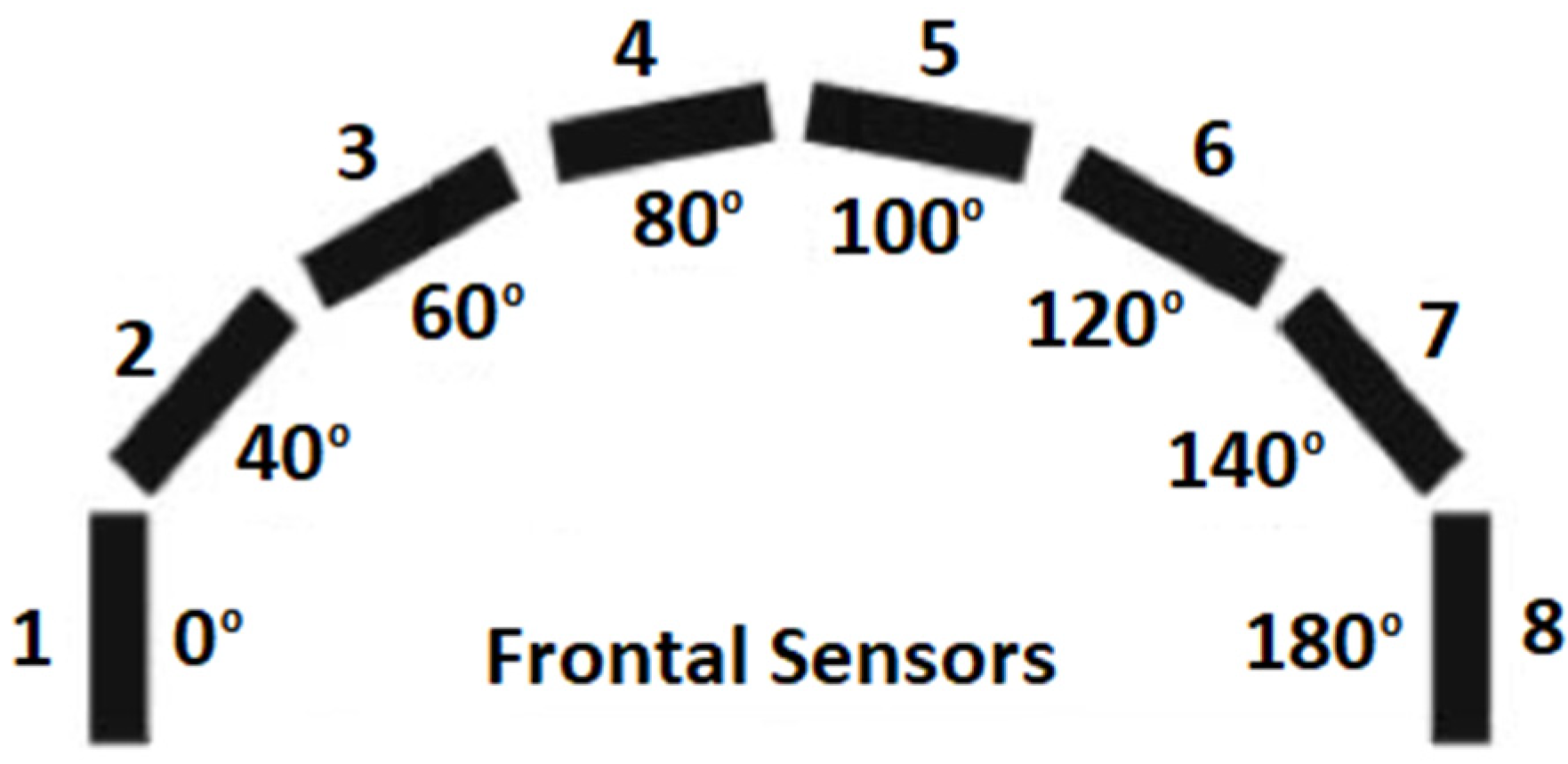

6.1. Robot System and Sensor Arrangement

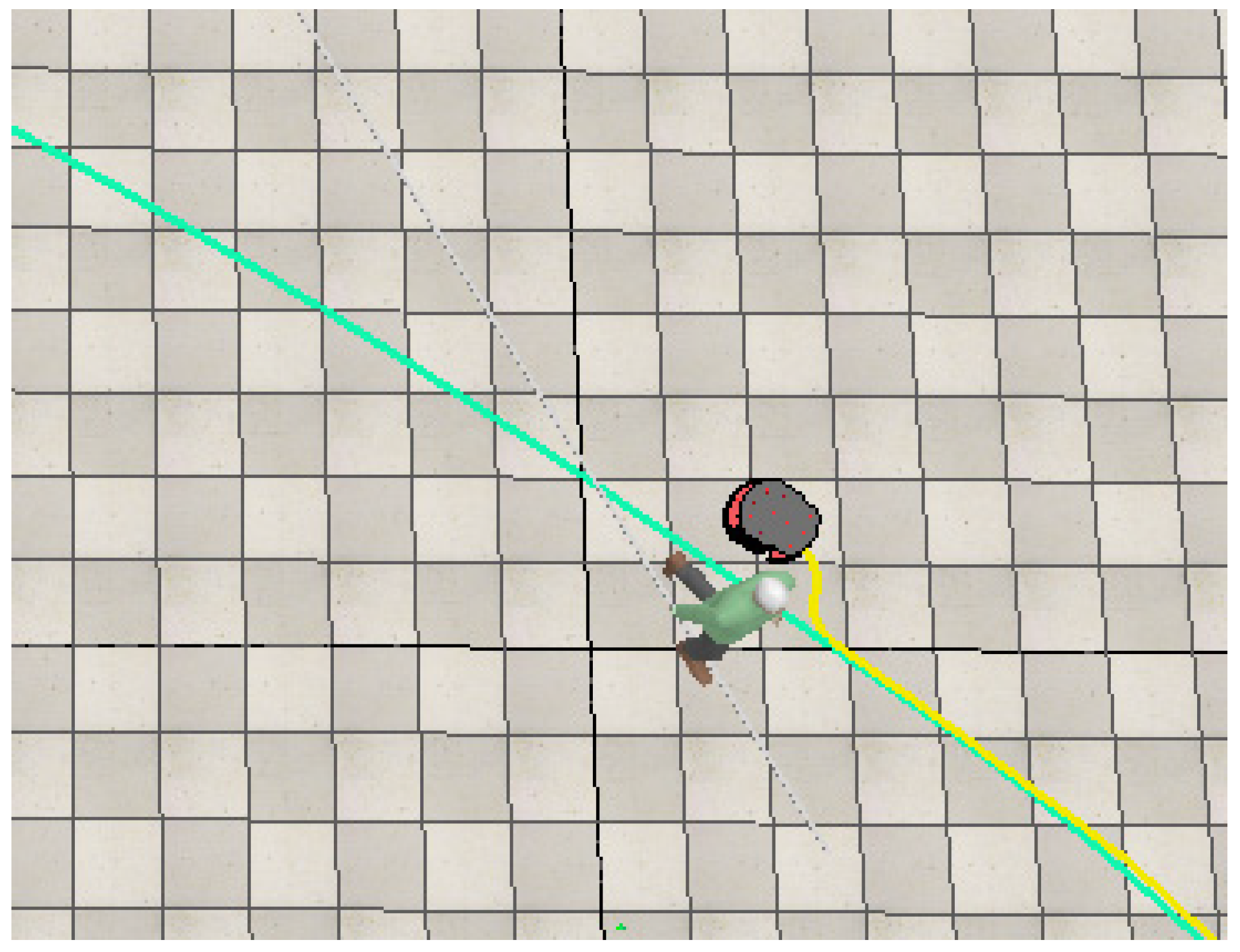

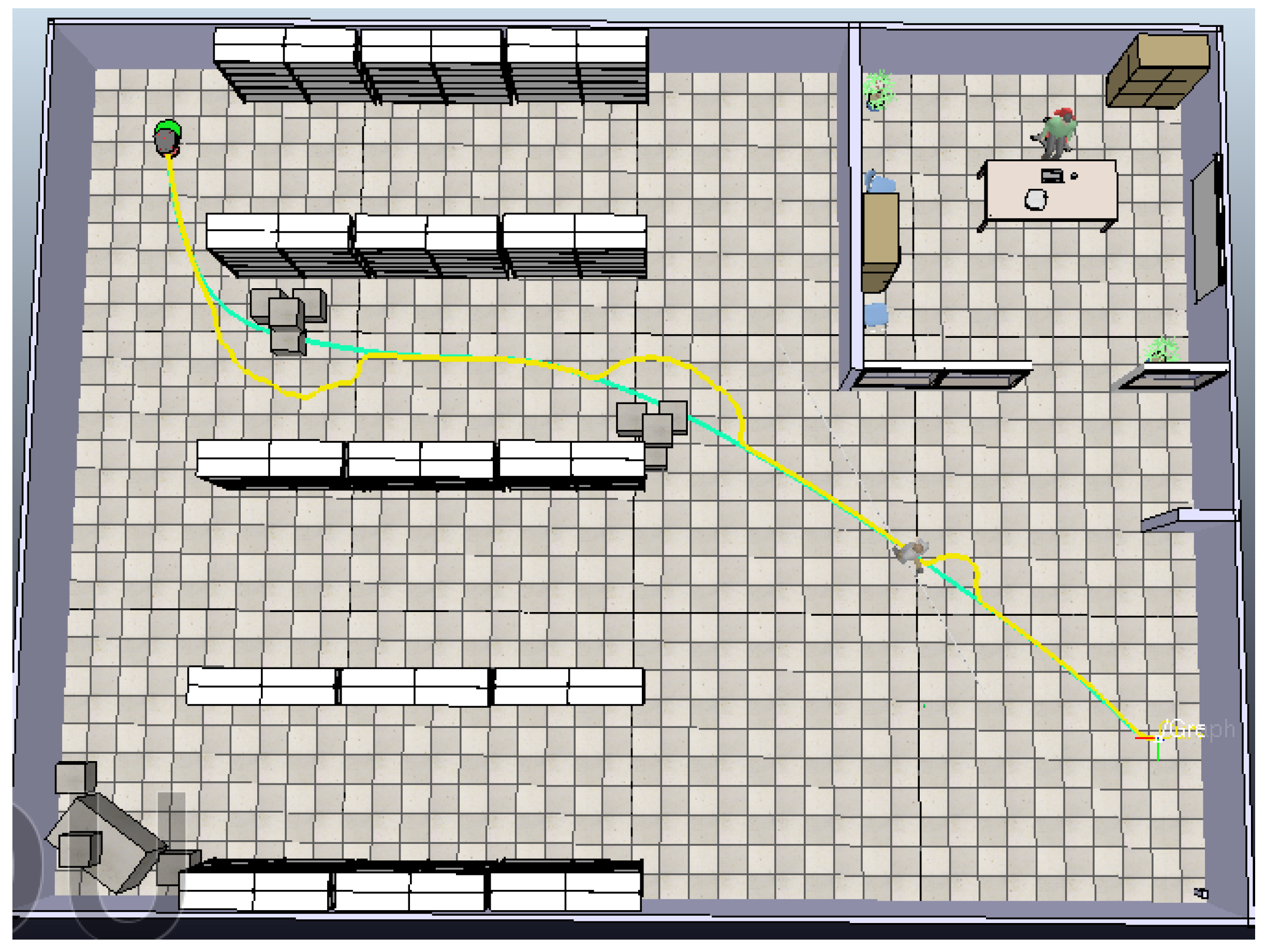

6.2. Simulation Results

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Rule | Position Error | Heading Error | Right Motor Velocity | Left Motor Velocity |
|---|---|---|---|---|
| 1 | large | negative big | medium | fast |
| 2 | large | negative small | slow | medium |
| 3 | large | zero | fast | fast |
| 4 | large | positive small | medium | slow |
| 5 | large | positive big | fast | medium |
| 6 | medium | negative big | medium | fast |
| 7 | medium | negative small | slow | medium |
| 8 | medium | zero | fast | fast |
| 9 | medium | positive small | medium | slow |
| 10 | medium | positive big | fast | medium |
| 11 | small | negative big | slow | fast |
| 12 | small | negative small | slow | fast |
| 13 | small | zero | slow | slow |
| 14 | small | positive small | fast | slow |
| 15 | small | positive big | fast | slow |
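The tracking rule table above maps each combination of position error and heading error to a pair of motor-velocity terms. A minimal sketch of how such a rule base can be evaluated follows, assuming the membership degrees of both inputs are already known (for example, from the ranges listed in Section 5.1.1). Each rule fires with the minimum of its two input degrees (min t-norm), and the crisp outputs are the firing-strength-weighted mean of representative output values. The representative values (midpoints of the ‘slow’, ‘medium’ and ‘fast’ ranges) and the function name `infer` are our assumptions. This is a plain fuzzy rule evaluation, not the trained ANFIS, whose consequent parameters are learned from data.

```python
# Tracking rule base transcribed from the rule table above:
# (position error, heading error) -> (right motor velocity, left motor velocity)
TRACKING_RULES = {
    ("large", "negative big"): ("medium", "fast"),
    ("large", "negative small"): ("slow", "medium"),
    ("large", "zero"): ("fast", "fast"),
    ("large", "positive small"): ("medium", "slow"),
    ("large", "positive big"): ("fast", "medium"),
    ("medium", "negative big"): ("medium", "fast"),
    ("medium", "negative small"): ("slow", "medium"),
    ("medium", "zero"): ("fast", "fast"),
    ("medium", "positive small"): ("medium", "slow"),
    ("medium", "positive big"): ("fast", "medium"),
    ("small", "negative big"): ("slow", "fast"),
    ("small", "negative small"): ("slow", "fast"),
    ("small", "zero"): ("slow", "slow"),
    ("small", "positive small"): ("fast", "slow"),
    ("small", "positive big"): ("fast", "slow"),
}

# ASSUMPTION: each output term is summarized by the midpoint of its support
# (slow 0-0.4, medium 0.27-0.6, fast 0.47-1).
OUTPUT_CENTERS = {"slow": 0.2, "medium": 0.435, "fast": 0.735}


def infer(pos_degrees, head_degrees):
    """Fire every rule and return crisp (right, left) motor commands.

    Firing strength uses the min t-norm; defuzzification is a center-average
    (weighted mean). Both are illustrative choices, not the paper's method.
    """
    num_right = num_left = total = 0.0
    for (pos, head), (right, left) in TRACKING_RULES.items():
        w = min(pos_degrees.get(pos, 0.0), head_degrees.get(head, 0.0))
        num_right += w * OUTPUT_CENTERS[right]
        num_left += w * OUTPUT_CENTERS[left]
        total += w
    if total == 0.0:
        raise ValueError("no rule fired for these membership degrees")
    return num_right / total, num_left / total
```

For example, a ‘large’ position error with a heading error that is half ‘zero’ and half ‘positive small’ fires rules 3 and 4 equally, giving about 0.585 for the right motor and 0.4675 for the left motor.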

| Position Error | Heading Error | Expected Right Motor Velocity | Expected Left Motor Velocity |
|---|---|---|---|
| 0.9 | 0 | 0.7578 | 0.7578 |
| 0.8 | 0 | 0.7578 | 0.7578 |
| 0.7 | 0 | 0.7578 | 0.7578 |
| 0.6 | 0 | 0.7578 | 0.7578 |
| 0.5 | 0 | 0.7578 | 0.7578 |
| 0.4 | 0 | 0.7578 | 0.7578 |
| 0.3 | 0 | 0.7578 | 0.7578 |
| 0.2 | 0 | 0.7572 | 0.7572 |
| 0.1 | 0 | 0.6055 | 0.6055 |
| 0 | 0.9 | 0.7578 | 0.1674 |
| 0 | 0.8 | 0.7578 | 0.1674 |
| 0 | 0.7 | 0.7578 | 0.1674 |
| 0 | 0.6 | 0.7578 | 0.1674 |
| 0 | 0.5 | 0.7578 | 0.1674 |
| 0 | 0.4 | 0.7546 | 0.1713 |
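The expected velocities above can be read through the kinematics of a two-wheel differential-drive robot (Section 2), assumed here to take the standard form: forward speed v = (v_R + v_L)/2 and yaw rate ω = (v_R - v_L)/b, where b is the distance between the wheels. The sketch below applies these relations and one forward-Euler pose update. The track width of 0.5 m and the reuse of the normalized table values as wheel speeds in m/s are hypothetical, chosen only for illustration.

```python
import math


def body_velocities(v_right, v_left, track_width):
    """Differential-drive kinematics: wheel speeds -> (forward speed, yaw rate).

    v = (v_R + v_L) / 2 and omega = (v_R - v_L) / track_width, so a faster
    right wheel gives a positive (counterclockwise) yaw rate.
    """
    v = (v_right + v_left) / 2.0
    omega = (v_right - v_left) / track_width
    return v, omega


def step_pose(x, y, theta, v, omega, dt):
    """Advance the pose (x, y, theta) by one forward-Euler step of length dt."""
    return (
        x + v * math.cos(theta) * dt,
        y + v * math.sin(theta) * dt,
        theta + omega * dt,
    )


# HYPOTHETICAL track width (m); the normalized table values are reused as
# wheel speeds (m/s) purely for illustration.
TRACK_WIDTH = 0.5

# Zero heading error: equal commands, straight-line motion.
print(body_velocities(0.7578, 0.7578, TRACK_WIDTH))
# Heading error 0.9: right wheel much faster than the left, so the robot turns.
print(body_velocities(0.7578, 0.1674, TRACK_WIDTH))
```

With these assumptions, zero heading error gives pure forward motion (ω = 0), while a heading error of 0.9 gives v ≈ 0.46 and ω ≈ 1.18 rad/s, a counterclockwise turn under the usual sign convention.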

| Rule | Left Sensor | Right Sensor | Right Motor Velocity | Left Motor Velocity |
|---|---|---|---|---|
| 1 | very close | very close | slow | slow |
| 2 | very close | close | slow | fast |
| 3 | very close | medium | slow | fast |
| 4 | very close | far | slow | fast |
| 5 | very close | very far | slow | fast |
| 6 | close | very close | fast | slow |
| 7 | close | close | slow | slow |
| 8 | close | medium | slow | fast |
| 9 | close | far | slow | fast |
| 10 | close | very far | slow | fast |
| 11 | medium | very close | fast | slow |
| 12 | medium | close | fast | slow |
| 13 | medium | medium | slow | slow |
| 14 | medium | far | slow | fast |
| 15 | medium | very far | slow | fast |
| 16 | far | very close | fast | slow |
| 17 | far | close | fast | slow |
| 18 | far | medium | fast | slow |
| 19 | far | far | fast | fast |
| 20 | far | very far | slow | fast |
| 21 | very far | very close | fast | slow |
| 22 | very far | close | fast | slow |
| 23 | very far | medium | fast | slow |
| 24 | very far | far | fast | slow |
| 25 | very far | very far | fast | fast |
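Read row by row, the avoidance rule table follows a regular pattern. It is mirror-symmetric in the two sensors. Whenever one side reads nearer, the wheel on that side is set to ‘fast’ and the opposite wheel to ‘slow’, which turns the robot away from the nearer side. With equal readings, the robot drives fast only when both sides are at least ‘far’. The sketch below transcribes the 25 rules and checks this compact restatement against them. The ordering of the levels and the function name `compact_rule` are our own labels, not the paper's.

```python
# Ordered linguistic levels of the distance-sensor inputs (nearest first).
LEVELS = ["very close", "close", "medium", "far", "very far"]

# Avoidance rule base transcribed from the table above:
# (left sensor, right sensor) -> (right motor velocity, left motor velocity)
AVOIDANCE_RULES = {
    ("very close", "very close"): ("slow", "slow"),
    ("very close", "close"): ("slow", "fast"),
    ("very close", "medium"): ("slow", "fast"),
    ("very close", "far"): ("slow", "fast"),
    ("very close", "very far"): ("slow", "fast"),
    ("close", "very close"): ("fast", "slow"),
    ("close", "close"): ("slow", "slow"),
    ("close", "medium"): ("slow", "fast"),
    ("close", "far"): ("slow", "fast"),
    ("close", "very far"): ("slow", "fast"),
    ("medium", "very close"): ("fast", "slow"),
    ("medium", "close"): ("fast", "slow"),
    ("medium", "medium"): ("slow", "slow"),
    ("medium", "far"): ("slow", "fast"),
    ("medium", "very far"): ("slow", "fast"),
    ("far", "very close"): ("fast", "slow"),
    ("far", "close"): ("fast", "slow"),
    ("far", "medium"): ("fast", "slow"),
    ("far", "far"): ("fast", "fast"),
    ("far", "very far"): ("slow", "fast"),
    ("very far", "very close"): ("fast", "slow"),
    ("very far", "close"): ("fast", "slow"),
    ("very far", "medium"): ("fast", "slow"),
    ("very far", "far"): ("fast", "slow"),
    ("very far", "very far"): ("fast", "fast"),
}


def compact_rule(left, right):
    """Compact restatement of the table (our reading, not the paper's rule form).

    Returns (right motor velocity, left motor velocity).
    """
    i, j = LEVELS.index(left), LEVELS.index(right)
    if i < j:  # left side nearer: left wheel fast, right wheel slow -> turn right
        return ("slow", "fast")
    if i > j:  # right side nearer: right wheel fast, left wheel slow -> turn left
        return ("fast", "slow")
    # Equal readings: drive fast only when both sides are at least 'far'.
    return ("fast", "fast") if i >= LEVELS.index("far") else ("slow", "slow")


if __name__ == "__main__":
    mismatches = [k for k, v in AVOIDANCE_RULES.items() if compact_rule(*k) != v]
    print(mismatches)  # prints [] for the transcribed table
```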

| Left Sensor | Right Sensor | Expected Right Motor Velocity | Expected Left Motor Velocity |
|---|---|---|---|
| 0 | 1 | 0.6186 | 0.1276 |
| 0.1 | 0.9 | 0.6186 | 0.1276 |
| 0.2 | 0.8 | 0.6186 | 0.1276 |
| 0.3 | 0.7 | 0.6175 | 0.1292 |
| 0.4 | 0.6 | 0.5377 | 0.1364 |
| 0.5 | 0.5 | 0.1276 | 0.1276 |
| 0.6 | 0.4 | 0.1363 | 0.5377 |
| 0.7 | 0.3 | 0.1292 | 0.6175 |
| 0.8 | 0.2 | 0.1276 | 0.6186 |
| 0.9 | 0.1 | 0.1276 | 0.6186 |
| 1 | 0 | 0.1276 | 0.6186 |
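The test inputs above are swept symmetrically (the left and right sensor readings always sum to 1), so each row has a mirror row with the two sensors swapped. A mirror-symmetric controller, as the avoidance rule table is, would swap the two motor outputs between such rows. The sketch below measures the largest departure from that symmetry using only the transcribed values; the helper name `max_mirror_deviation` is hypothetical.

```python
# Avoidance test inputs and expected outputs, transcribed from the table above:
# (left sensor, right sensor, expected right motor, expected left motor)
ROWS = [
    (0.0, 1.0, 0.6186, 0.1276),
    (0.1, 0.9, 0.6186, 0.1276),
    (0.2, 0.8, 0.6186, 0.1276),
    (0.3, 0.7, 0.6175, 0.1292),
    (0.4, 0.6, 0.5377, 0.1364),
    (0.5, 0.5, 0.1276, 0.1276),
    (0.6, 0.4, 0.1363, 0.5377),
    (0.7, 0.3, 0.1292, 0.6175),
    (0.8, 0.2, 0.1276, 0.6186),
    (0.9, 0.1, 0.1276, 0.6186),
    (1.0, 0.0, 0.1276, 0.6186),
]


def max_mirror_deviation(rows):
    """Largest gap between each row's outputs and its mirror row's swapped outputs.

    A mirror-symmetric controller maps (l, r) -> (vR, vL) and (r, l) -> (vL, vR).
    Because the rows are ordered as a symmetric sweep, the mirror of row i is
    row len(rows) - 1 - i.
    """
    worst = 0.0
    for i, (left, right, v_r, v_l) in enumerate(rows):
        m_left, m_right, m_vr, m_vl = rows[len(rows) - 1 - i]
        if (m_left, m_right) != (right, left):
            raise ValueError("rows are not ordered as a mirror-symmetric sweep")
        worst = max(worst, abs(v_r - m_vl), abs(v_l - m_vr))
    return worst


print(max_mirror_deviation(ROWS))
```

For the tabulated values the largest deviation is about 1e-4, coming from the pair 0.1364 and 0.1363, so the reported outputs are mirror-symmetric to within the last reported digit.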

| Strategy | Time to Reach the End Point (s) | Number of Turns | Number of Rules for Tracking Controller | Number of Rules for Avoidance Controller |
|---|---|---|---|---|
| ANFIS | 71.4 | 13 | 9 | 16 |
| Fuzzy system | 73.2 | 18 | 15 | 25 |
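The comparison table can be summarized as relative reductions of the ANFIS strategy with respect to the fuzzy system. The fuzzy-system rule counts equal the full grids of the rule tables above (3 × 5 = 15 tracking rules and 5 × 5 = 25 avoidance rules). A short sketch of the arithmetic follows; the helper name `percent_reduction` is ours.

```python
# Figures copied from the comparison table above.
ANFIS = {"time_s": 71.4, "turns": 13, "tracking_rules": 9, "avoidance_rules": 16}
FUZZY = {"time_s": 73.2, "turns": 18, "tracking_rules": 15, "avoidance_rules": 25}


def percent_reduction(new, old):
    """Relative reduction of `new` with respect to `old`, in percent."""
    return 100.0 * (old - new) / old


for key in FUZZY:
    print(f"{key}: {percent_reduction(ANFIS[key], FUZZY[key]):.1f}% lower with ANFIS")
```

It prints reductions of about 2.5% in time to reach the end point, 27.8% in the number of turns, 40.0% in tracking rules and 36.0% in avoidance rules.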

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Stavrinidis, S.; Zacharia, P. An ANFIS-Based Strategy for Autonomous Robot Collision-Free Navigation in Dynamic Environments. Robotics 2024, 13, 124. https://doi.org/10.3390/robotics13080124
