Abstract

This paper presents a wavelet-based image-guided tracking control system for unmanned multirotor aerial vehicles in the presence of uncertainty. The visual signals for the visual tracking process are derived from wavelet coefficients. The design uses a multiresolution interaction matrix, built from the half-resolution approximation and detail images, to relate the time variation of the wavelet coefficients to the velocity of the aerial vehicle used by the controller. In contrast to classical visual tracking techniques, the wavelet-based method does not require an image processing task. The proposed design is evaluated on a virtual quadrotor aerial vehicle to demonstrate the effectiveness of the wavelet-based visual tracking system, without an image processing unit, in the presence of uncertainty.

1. Introduction

Over the past years, there has been tremendous research interest in the development of autonomous flight tracking systems for small-scale multirotor miniature aerial vehicles, which may be due to their wide variety of civilian and military applications. A literature review of control designs in this area can be found in [1], and surveys on small-scale aerial vehicles can be found in [2,3,4]. Several textbooks have also been published in this area, for example [5,6,7,8], and the most recent results can be found in [9,10]. Image-guided tracking control design for these vehicles has also been studied by researchers and industry, for example [11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30]. In classical vision-based tracking control design, one usually uses visual/geometrical information, for example image points, lines, and circles obtained from one or multiple cameras, to minimize the error between a set of actual and reference measurements. These vision-based tracking designs need to process images, which involves feature extraction and matching tasks over time. The goal of image processing is to identify and match the geometrical features in the image segments [28,31]. This segmentation process is computationally expensive and slows down the tracking process significantly, and integrating additional algorithms slows down the tracking convergence further. To deal with these problems, a new type of image-based tracking method has recently been proposed; results in this area can be traced from [31,32,33,34,35,36]. The authors in [31] showed that vision-based control can be designed without an image processing step. In [32,33], the authors presented visual tracking mechanisms using the pure photometric image signal. The authors in [34] developed visual tracking approaches based on the image gradient, and an entropy-based visual tracking technique was introduced in [35].
Recently, the authors in [36] designed visual tracking schemes for automatic positioning under a scanning electron microscope by using the Fourier transform. These methods can be used to relax the requirement for an image processing system while ensuring accurate visual tracking in the absence of uncertainty. More recently, the authors in [37] applied a wavelet-based visual tracking system to rigid robot manipulators, and the authors in [38] presented a wavelet-based tracking system for an aerial vehicle. However, that design and analysis assumed that the vision and vehicle systems are free from uncertainty. Moreover, it does not provide a convergence analysis of the closed-loop system formed by the visual and vehicle tracking control algorithms. In our view, the visual tracking process and control algorithms of many of the above methods may deteriorate significantly in the presence of uncertainty associated with visual/image processing and modeling errors, control inputs, and other external disturbances, including the flying environment.

This paper presents a wavelet-based image-guided tracking control system for multirotor aerial vehicles in the presence of uncertainty. The wavelet-based approach can also be used in other areas such as corner detection, pattern recognition, filtering, economic data analysis, data compression, compressed sensing, and temperature analysis. In contrast with the Fourier transform, the wavelet transform represents the image in both the spatial (time) and frequency domains. The proposed design uses a multiresolution wavelet transform (MWT) to develop an image-guided tracking control algorithm for the multirotor aerial vehicle, in which the half-resolution approximation image obtained from the MWT serves as the visual signal. The design derives spatial-derivative wavelet coefficients from the detail wavelet coefficients; these are used to compute multiresolution interaction matrices that relate the time variation of the wavelet coefficients to the spatial velocity of the vehicle. The half-resolution-based design provides automatic filtering of the low and high frequencies in the image that generally correspond to image noise. Such filtering allows noise-free and redundant visual signals to be selected, giving a more accurate and stable visual tracking control system. The proposed design is extensively evaluated on a virtual quadrotor aerial vehicle platform with the half-resolution and detail-image-based MWT in the presence of uncertainty. The tests are conducted under nominal conditions and with different coefficient resolutions to identify those that best improve the controller behavior in terms of convergence, robustness, and accuracy. The evaluation results show that the MWT-based design provides accuracy and efficiency without image processing tasks in the presence of uncertainty.

The paper is organized as follows: Section 2 reviews the basics of the MWT and the dynamical model of the system, and also presents the design and analysis of the MWT-based tracking control system for a multirotor aerial vehicle. Section 3 presents the design synthesis and test results on a quadrotor aerial vehicle system. Finally, Section 4 provides concluding remarks.

2. Wavelet-Based Visual Tracking System Design for UAV

Visual tracking systems have been extensively studied by researchers in the computer vision and image processing communities. Such a system allows the vehicle to perceive the surrounding environment, make decisions, and react to changes, relying on an interdisciplinary research paradigm that includes computer vision, image processing, and control systems. Classical visual tracking usually involves an image processing task to detect and match geometrical features in the image. This task affects tracking performance significantly, as it requires high computational effort and slows down the tracking control system. To deal with this problem, this work focuses on the design and development of a wavelet-based visual tracking system. First, we briefly review the basics of the multiresolution wavelet transform. Then, we introduce a wavelet-based visual tracking system by developing a model and a visual control strategy. Finally, a dynamical model and a visual tracking system for a quadrotor unmanned aerial vehicle are presented.

2.1. Multiresolution Wavelet Transform (MWT)

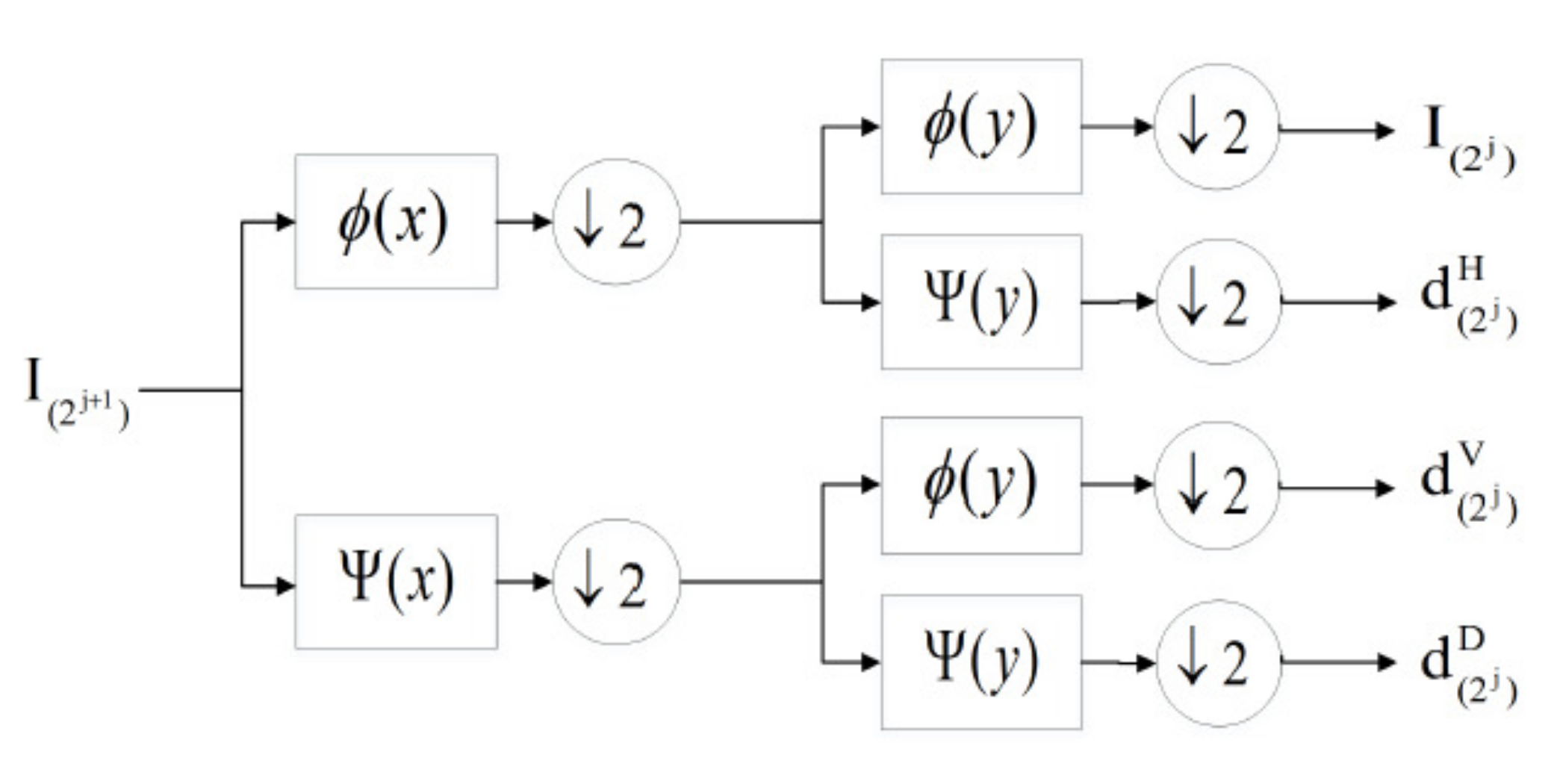

The wavelet transform is a mathematical tool that represents signals in both the time and frequency domains [39]. Here, it is used to decompose the original full-resolution image into a half-resolution approximation image and horizontal, vertical, and diagonal detail images [38,40,41,42].

Consider a 2D signal and a wavelet function; their inner product defines the general wavelet transform as

For the MWT, two functions must first be defined: a scaling function and a mother wavelet function. The scaling function can be modeled as a low-pass filter with fourth-order Daubechies coefficients, and the mother wavelet can be modeled as a high-pass filter with different coefficients [43]. The MWT is designed by defining four combinations of these functions, which generate four different subsignals. The four combined signals can be designed as

where ∘ denotes the composition of functions and the down-sampling operator halves the resolution; a shorthand notation for this operator is used in the remainder of this paper. Finally, the original full-resolution image is decomposed into four subsignals through its inner product with each of the four combinations as

to achieve the approximation image at half resolution, and

to achieve the horizontal, vertical, and diagonal details.
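As a reading aid, the standard separable construction of the four 2D combinations (Mallat's multiresolution framework) can be written as follows. The symbols $\varphi$ (1D scaling function), $\psi$ (1D mother wavelet), $h$ and $g$ (the corresponding low-pass and high-pass filters), $f$ (the image, indexed by row $m$ and column $n$), and the labels $A, H, V, D$ are assumed notation and may differ from the notation used in this section.

$$
\begin{aligned}
\varphi^{A}(x,y) &= \varphi(x)\,\varphi(y), &\qquad \psi^{H}(x,y) &= \varphi(x)\,\psi(y),\\
\psi^{V}(x,y) &= \psi(x)\,\varphi(y), &\qquad \psi^{D}(x,y) &= \psi(x)\,\psi(y),
\end{aligned}
$$

$$
\begin{aligned}
A[m,n] &= \sum_{k,l} h[k]\,h[l]\,f[2m+k,\,2n+l], &\quad H[m,n] &= \sum_{k,l} g[k]\,h[l]\,f[2m+k,\,2n+l],\\
V[m,n] &= \sum_{k,l} h[k]\,g[l]\,f[2m+k,\,2n+l], &\quad D[m,n] &= \sum_{k,l} g[k]\,g[l]\,f[2m+k,\,2n+l].
\end{aligned}
$$

The factor 2 in the indices is the down-sampling that produces the half-resolution subimages. $H$ applies the high-pass filter along the vertical direction, so it responds to horizontal edges, which is why it is called the horizontal detail.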

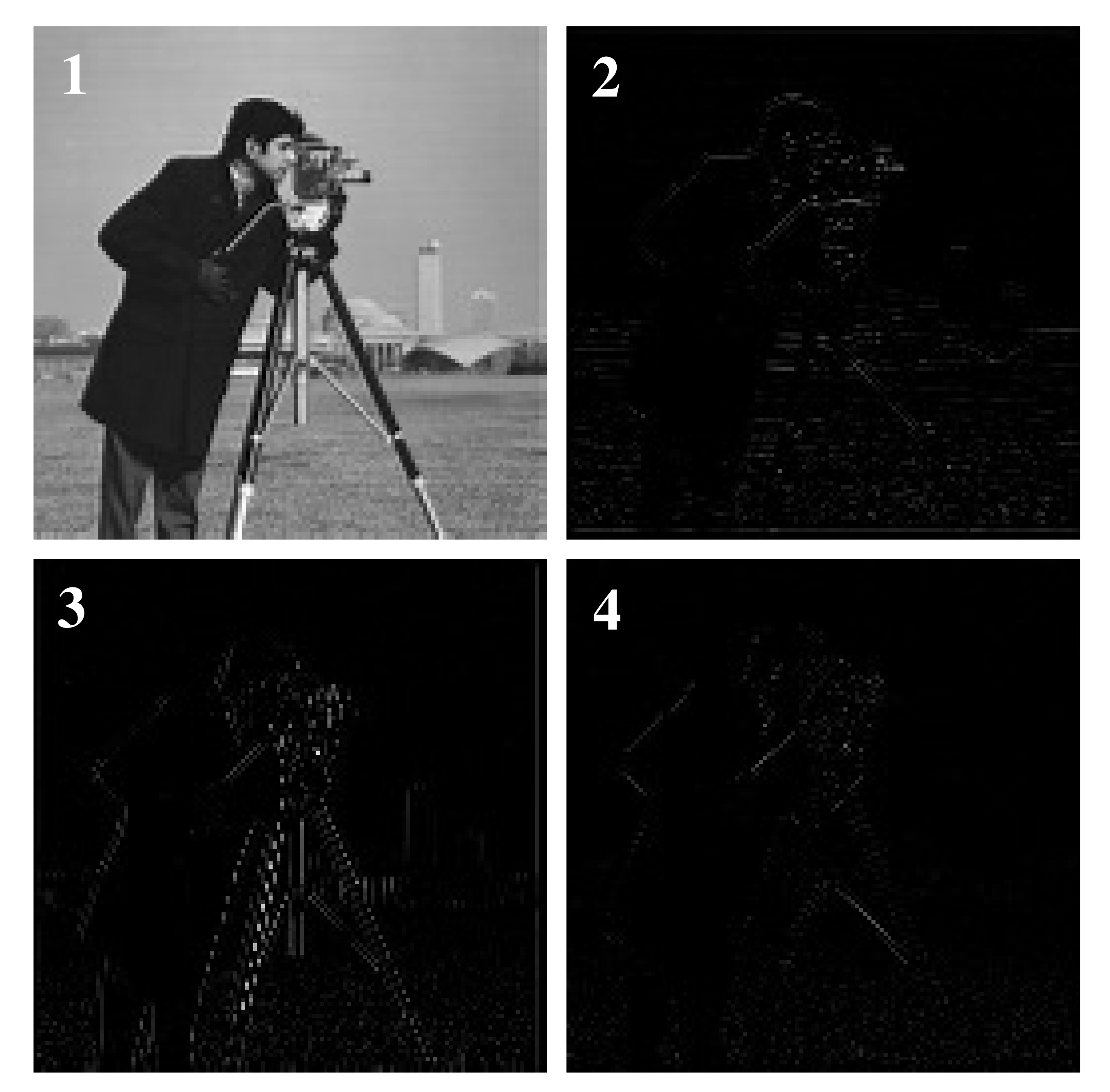

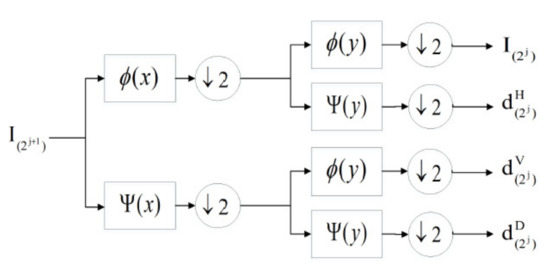

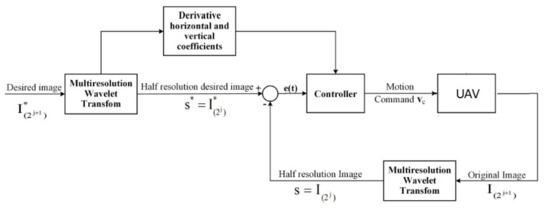

For better understanding, Equations (6) and (7) are summarized in Figure 1. As an example, Figure 3 illustrates the result of applying low-pass and high-pass filters along the row and column directions to the image given in Figure 2.

Figure 1.

Multiresolution wavelet transform.

Figure 2.

Original image.

Figure 3.

MWT approximation image, horizontal details, vertical details, and diagonal details.
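The decomposition illustrated in Figures 1 to 3 can be sketched in a few lines of NumPy. This is a minimal, hypothetical implementation that assumes "fourth-order Daubechies coefficients" means the four-tap Daubechies filter (often called db2) and uses periodic boundary extension; the paper does not state its boundary handling, and the names `analyze_1d` and `mwt_level` are ours. In practice a wavelet library would normally be used instead.

```python
import numpy as np

# Four-coefficient Daubechies low-pass (scaling) filter, often called "db2" (assumption).
_S3 = np.sqrt(3.0)
H_LOW = np.array([1 + _S3, 3 + _S3, 3 - _S3, 1 - _S3]) / (4 * np.sqrt(2.0))
# Quadrature-mirror high-pass (wavelet) filter: g[k] = (-1)^k h[3 - k].
G_HIGH = np.array([H_LOW[3], -H_LOW[2], H_LOW[1], -H_LOW[0]])


def analyze_1d(x, filt, axis):
    """Filter along `axis` with periodic extension, keeping every second sample.

    Computes out[m] = sum_k filt[k] * x[(2m + k) mod n] along the chosen axis.
    """
    n = x.shape[axis]
    even = np.arange(0, n, 2)
    out = np.zeros_like(np.take(x, even, axis=axis), dtype=float)
    for k, c in enumerate(filt):
        # np.roll(x, -k)[i] == x[(i + k) mod n]
        out += c * np.take(np.roll(x, -k, axis=axis), even, axis=axis)
    return out


def mwt_level(image):
    """One MWT level: (approximation, horizontal, vertical, diagonal) at half resolution."""
    img = np.asarray(image, dtype=float)
    lo = analyze_1d(img, H_LOW, axis=1)   # low-pass along each row (x direction)
    hi = analyze_1d(img, G_HIGH, axis=1)  # high-pass along each row
    approx = analyze_1d(lo, H_LOW, axis=0)
    horizontal = analyze_1d(lo, G_HIGH, axis=0)  # high-pass along columns: horizontal edges
    vertical = analyze_1d(hi, H_LOW, axis=0)     # high-pass along rows: vertical edges
    diagonal = analyze_1d(hi, G_HIGH, axis=0)
    return approx, horizontal, vertical, diagonal
```

For a constant image the three detail images vanish, and because the periodized filter bank is orthogonal, the total energy of the four subimages equals that of the input image.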

2.2. MWT Based Interaction Matrix Modeling

Before developing a wavelet-based autonomous visual tracking system, we first relate the change in camera position to the change in features. The relationship between the camera motion and the corresponding variation of the detected features can be described by the following model

where the interaction matrix (or feature Jacobian) links the change in camera position to the change in features, and the camera velocity vector contains the instantaneous linear and angular velocities. In the wavelet-based design, the half-resolution approximation image is used as the visual signal; specifically, the luminance at each image location is considered to be the new feature. For that purpose, a new interaction matrix related to the luminance must be estimated, as given by the following equation

Now, consider a 3D point in the world projected onto the image plane. The observed variation may be caused by either object motion or camera motion, and the relative motion between two images can be estimated using optical flow [44]. Since (9) requires finding the change in luminance, one can express the luminance in the following form

If, at time t, the normalized coordinates of the point coincide with x, then Equation (10) can be written as

where the spatial gradient of the luminance and the 2D velocity of the point appear. Under the luminance constancy assumption, the total time derivative of the luminance is zero, and Equation (10) becomes

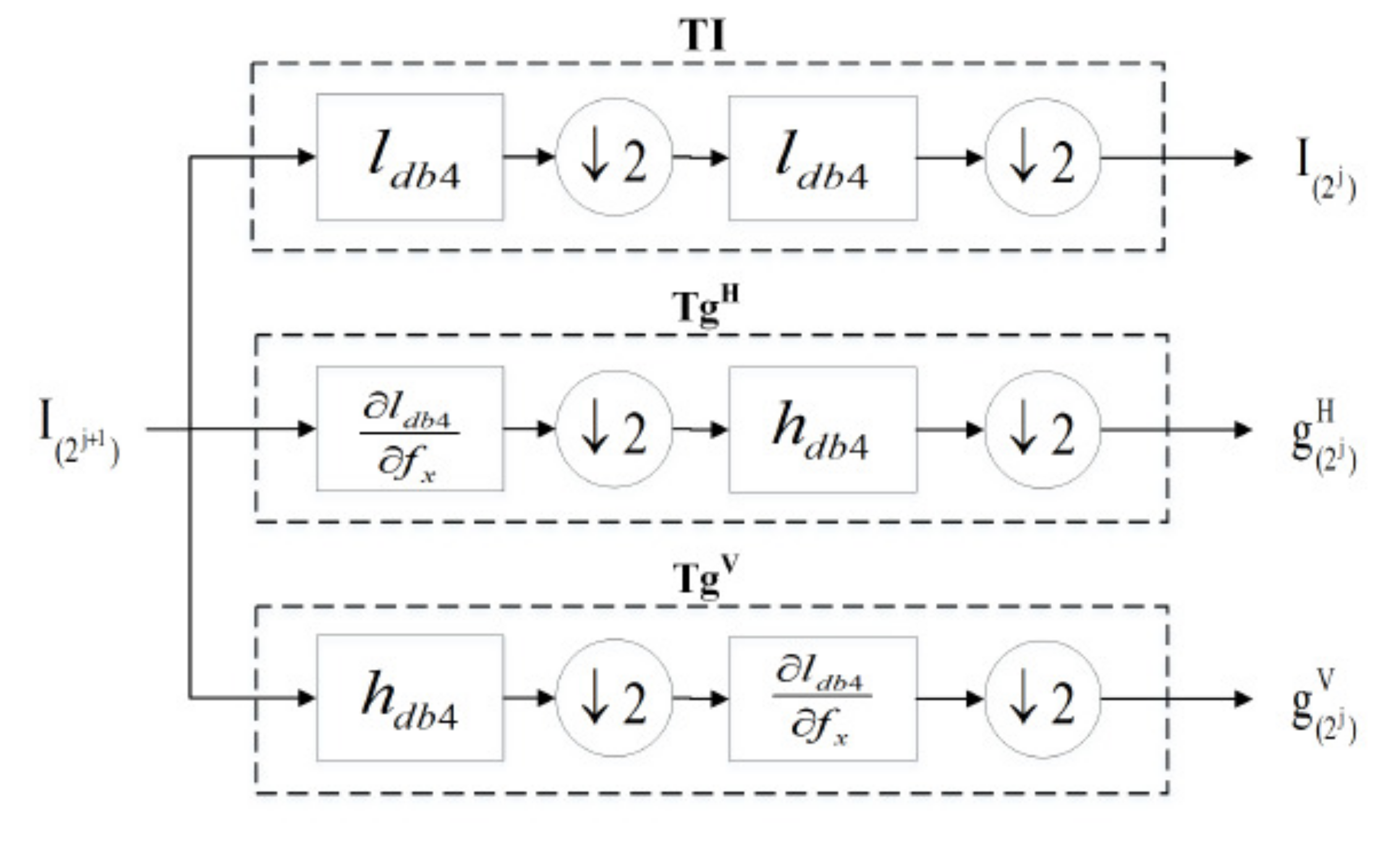

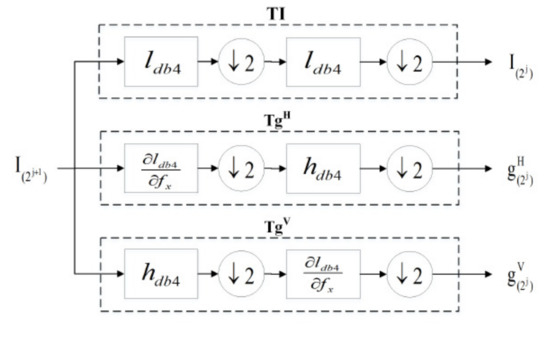

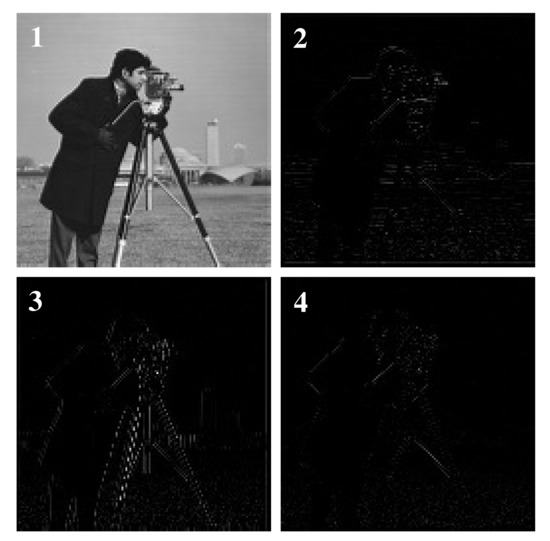

For simplicity, the following two functions are introduced

where the two functions are the derivative horizontal and vertical details, as illustrated in Figure 4. Substituting (6) and (14) into (13), one can write

Figure 4.

MWT showing the derivative horizontal and vertical details.
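For reference, the standard photometric derivation that this subsection adapts can be written as follows. The symbols ($I$ for luminance, $\mathbf{x} = (x, y)$ for normalized image coordinates, $Z$ for the depth of the point, $\mathbf{v}$ for the camera velocity, and the point interaction rows $\mathbf{L}_x$, $\mathbf{L}_y$) are assumed notation from the classical visual servoing literature, not necessarily the paper's own, and $Z$ is taken as known.

$$
I(\mathbf{x} + \dot{\mathbf{x}}\,dt,\; t + dt) = I(\mathbf{x}, t)
\;\;\Rightarrow\;\;
\nabla I^{\top}\dot{\mathbf{x}} + \frac{\partial I}{\partial t} = 0
$$

$$
\dot{\mathbf{x}} = \begin{bmatrix}\mathbf{L}_x\\ \mathbf{L}_y\end{bmatrix}\mathbf{v}
\;\;\Rightarrow\;\;
\frac{\partial I}{\partial t} = -\big(\nabla I_x\,\mathbf{L}_x + \nabla I_y\,\mathbf{L}_y\big)\,\mathbf{v} = \mathbf{L}_I\,\mathbf{v}
$$

$$
\mathbf{L}_x = \begin{bmatrix} -\tfrac{1}{Z} & 0 & \tfrac{x}{Z} & xy & -(1+x^2) & y \end{bmatrix},\qquad
\mathbf{L}_y = \begin{bmatrix} 0 & -\tfrac{1}{Z} & \tfrac{y}{Z} & 1+y^2 & -xy & -x \end{bmatrix}
$$

In the wavelet-based version described here, $I$ is replaced by the half-resolution approximation image and $(\nabla I_x, \nabla I_y)$ by the derivative details (denoted $D_x, D_y$ in this sketch), which gives one row $-(D_x\mathbf{L}_x + D_y\mathbf{L}_y)$ per approximation coefficient.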

Using the approximation image as the feature, we have

Now, using the relation between the change in features and the camera velocity for a 2D feature, one can write

Equation (18) can be simplified as

which defines the new multiresolution interaction matrix.
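A minimal sketch of how such a multiresolution interaction matrix can be assembled, one row per approximation coefficient, is given below. It assumes the photometric form $-(D_x\mathbf{L}_x + D_y\mathbf{L}_y)$ with the classical point interaction rows, normalized image coordinates, and a constant, known depth `Z` for every point; `grad_x` and `grad_y` stand for the derivative details that play the role of the spatial gradient. The function names are hypothetical, and in the test `np.gradient` of a small image is only a stand-in for the derivative details.

```python
import numpy as np


def point_interaction_rows(x, y, Z):
    """Classical interaction rows L_x and L_y for normalized points (x, y) at depth Z."""
    zeros, ones = np.zeros_like(x), np.ones_like(x)
    Lx = np.stack([-ones / Z, zeros, x / Z, x * y, -(1.0 + x ** 2), y], axis=-1)
    Ly = np.stack([zeros, -ones / Z, y / Z, 1.0 + y ** 2, -x * y, -x], axis=-1)
    return Lx, Ly


def multiresolution_interaction_matrix(grad_x, grad_y, x, y, Z):
    """Stack one row per coefficient: L = -(grad_x * L_x + grad_y * L_y), shape (N, 6).

    grad_x, grad_y: derivative details standing in for the spatial gradient (assumption).
    x, y: normalized image coordinates of each coefficient; Z: constant depth (assumption).
    """
    Lx, Ly = point_interaction_rows(np.ravel(x).astype(float), np.ravel(y).astype(float), Z)
    gx = np.ravel(grad_x)[:, None]
    gy = np.ravel(grad_y)[:, None]
    return -(gx * Lx + gy * Ly)
```

Each row links the rate of change of one coefficient to the six camera velocity components, so an approximation image of N coefficients yields an N x 6 matrix.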

2.3. Visual Tracking Control System Design and Stability Analysis

Our aim is now to develop the wavelet-based visual tracking system, whose goal is to generate velocities that decrease the error exponentially. In the wavelet-based design, the tracking error at each iteration is the difference between the current and the desired approximation images, expressed as

Then, the relationship between the camera movement and the corresponding variation of detected features can be described by the following model

Similarly, a model that maps the camera velocity to the variation of the error through the newly designed multiresolution interaction matrix can be described as

To ensure exponential convergence of the tracking error, an image-guided, wavelet-based velocity tracking controller can be designed as

where the Moore–Penrose pseudoinverse of the multiresolution interaction matrix is used. If the interaction matrix has full rank, the product of its pseudoinverse and the matrix is the identity, which implies that the corresponding signals are bounded and driven to their minimum values. When the interaction matrix has full rank and is square, it is invertible, so the existence of the velocity control can be ensured. In practice, however, the interaction matrix or its pseudoinverse is usually estimated or approximated, both to reduce the computational complexity and because their precise values may not be available. As a result, the image-guided, wavelet-based autonomous visual tracking control law takes the following form

where the approximated pseudoinverse of the multiresolution interaction matrix is used; the approximation reduces the computational complexity. For a particular task, the interaction matrix is computed at the desired location, and the result is used for all iterations. To achieve smooth convergence of the tracking task, the Levenberg–Marquardt optimization technique [9] is additionally used, so that the optimized visual tracking control law can be written in the following form

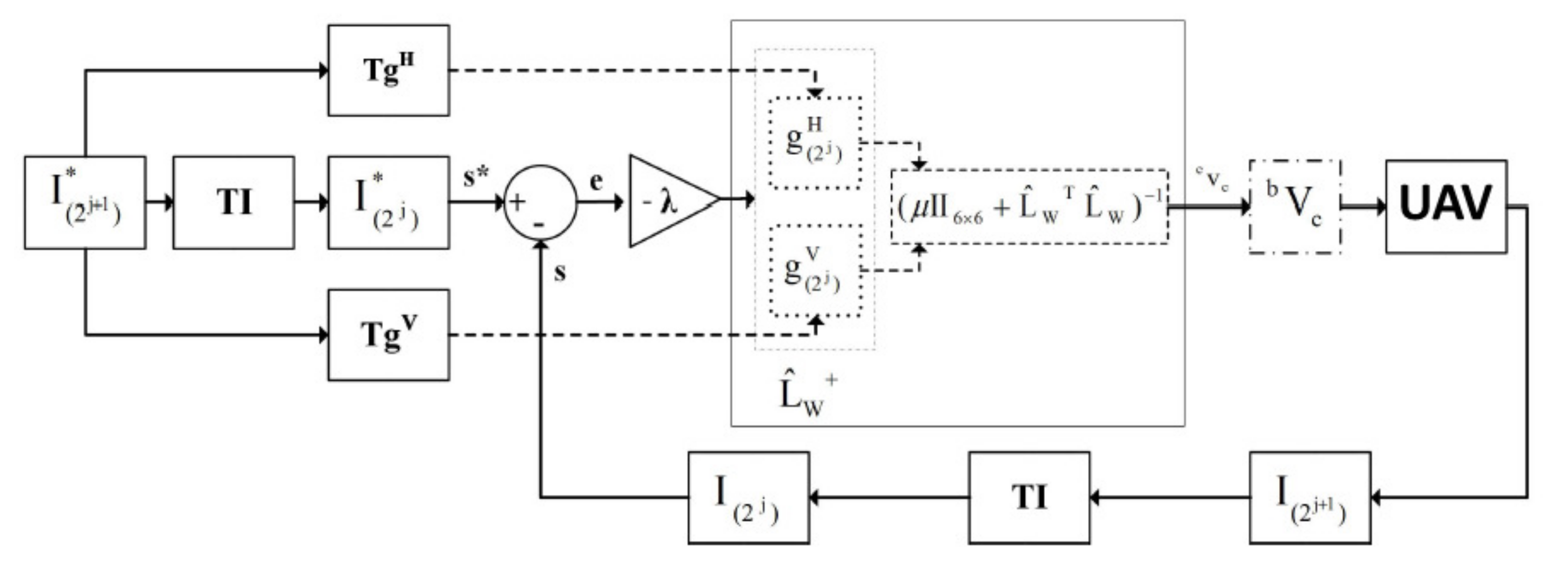

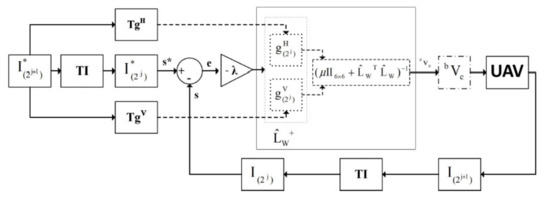

The final block diagram of the multiresolution wavelet-based visual tracking control system is illustrated in detail in Figure 5. It shows how the half-resolution approximation image is used as the visual signal and how the derivative horizontal and vertical details are used to construct the new multiresolution interaction matrix before it enters the optimized tracking control law. The signals shown in Figure 5 are illustrated in Figure 4. Based on the above analysis, the linear and angular velocity tracking error signals of the camera are bounded and converge exponentially to zero. Since the velocity tracking error signals are bounded, the linear and angular position tracking error signals are also bounded and converge exponentially to zero.
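The exponential convergence stated above can be checked with a short derivation under simplifying assumptions: the interaction matrix $\mathbf{L}_e \in \mathbb{R}^{N\times 6}$ is constant and has full column rank (so that $\mathbf{L}_e^{+}\mathbf{L}_e = \mathbf{I}_6$), and the exact pseudoinverse is used. The symbols $\mathbf{e}$, $\mathbf{v}$, $\lambda$, and $\mathbf{L}_e$ are assumed notation.

$$
\dot{\mathbf{e}} = \mathbf{L}_e\mathbf{v}, \qquad \mathbf{v} = -\lambda\,\mathbf{L}_e^{+}\mathbf{e}
\;\;\Rightarrow\;\;
\frac{d}{dt}\big(\mathbf{L}_e^{+}\mathbf{e}\big) = \mathbf{L}_e^{+}\dot{\mathbf{e}} = -\lambda\,\mathbf{L}_e^{+}\mathbf{L}_e\mathbf{L}_e^{+}\mathbf{e} = -\lambda\,\mathbf{L}_e^{+}\mathbf{e}
$$

$$
\Rightarrow\;\; \mathbf{v}(t) = -\lambda\,\mathbf{L}_e^{+}\mathbf{e}(t) = e^{-\lambda t}\,\mathbf{v}(0).
$$

The camera velocity therefore decays exponentially. Because $\mathbf{L}_e\mathbf{L}_e^{+}$ is only a rank-6 projection when $N > 6$, the full error $\mathbf{e}$ is guaranteed to decay in the same way only if it lies in the range of $\mathbf{L}_e$; otherwise the law can stop where $\mathbf{L}_e^{+}\mathbf{e} = \mathbf{0}$, as is usual for image-based schemes with many redundant features.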

Figure 5.

Detailed block diagram of wavelet based visual tracking system.
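The pseudoinverse and Levenberg–Marquardt control laws of this subsection can be sketched as follows, together with a discrete-time closed loop that integrates the error model with a constant interaction matrix. The function names, the gains, and the damped form $-\lambda(\mathbf{H} + \mu\,\mathrm{diag}(\mathbf{H}))^{-1}\mathbf{L}^{\top}\mathbf{e}$ with $\mathbf{H} = \mathbf{L}^{\top}\mathbf{L}$ (the form commonly used in photometric visual servoing) are assumptions, not necessarily the exact law used in the paper.

```python
import numpy as np


def pinv_control(L, e, lam):
    """Classical law v = -lam * pinv(L) @ e, returning the 6D camera velocity."""
    return -lam * np.linalg.pinv(L) @ e


def lm_control(L, e, lam, mu):
    """Levenberg-Marquardt-damped law v = -lam * (H + mu * diag(H))^-1 L^T e."""
    H = L.T @ L
    return -lam * np.linalg.solve(H + mu * np.diag(np.diag(H)), L.T @ e)


def simulate(L, e0, lam=0.5, mu=0.0, dt=0.1, steps=100, law="pinv"):
    """Explicit Euler integration of e_dot = L v; returns the error norm per iteration."""
    e = np.array(e0, dtype=float)
    norms = [np.linalg.norm(e)]
    for _ in range(steps):
        v = pinv_control(L, e, lam) if law == "pinv" else lm_control(L, e, lam, mu)
        e = e + dt * (L @ v)
        norms.append(np.linalg.norm(e))
    return np.array(norms)
```

With `mu = 0` the damped law reduces to the pseudoinverse law for a full-rank matrix; a larger `mu` shortens the step along poorly conditioned directions, which is the mechanism behind the smoother convergence mentioned above. When the initial error lies in the range of `L`, each pseudoinverse step scales the error by exactly `1 - lam * dt`.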

2.4. Dynamical Model for UAV

Let us now introduce the dynamical model of the quadrotor aerial vehicle using the Newton–Euler formulation. In the presence of uncertainty, the dynamical model of the vehicle can be written as [10,38]

where the model parameters denote the mass and moments of inertia of the vehicle; the position and the rotational angles define the roll, pitch, and yaw, respectively; the thruster input force vector and the control inputs for the roll, pitch, and yaw moments act on the vehicle; g is the gravitational acceleration; l denotes the distance from the center of mass of the vehicle to each rotor; and the lumped uncertainty terms account for mass, inertia, aerodynamic friction, and other external disturbances, including the flying environment. The dynamical model of the quadrotor aerial vehicle can also be written in state-space form as [10]

with the state variables and input terms defined accordingly. The proposed design and stability analysis are based on the following assumptions: (i) the attitude angles are bounded; (ii) the six lumped uncertainty terms are continuous and bounded by known constants belonging to compact sets.
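To make the structure of such a model concrete, the sketch below implements a commonly used Newton–Euler quadrotor model with additive lumped uncertainty and one explicit Euler step of its state-space form. The exact model of this subsection is not reproduced here, so the gyroscopic coupling terms, the placement of the arm length `l` (roll and pitch moments only), and the placeholder values in `QuadParams` are assumptions.

```python
import numpy as np
from dataclasses import dataclass


@dataclass
class QuadParams:
    # Placeholder values for illustration only (not the paper's parameters).
    m: float = 1.0    # mass (kg)
    Ix: float = 0.01  # moments of inertia (kg m^2)
    Iy: float = 0.01
    Iz: float = 0.02
    l: float = 0.2    # arm length (m)
    g: float = 9.81   # gravitational acceleration (m/s^2)


def quad_accelerations(state, u, p, d=None):
    """Linear and angular accelerations of a common Newton-Euler quadrotor model.

    state = [x, y, z, phi, theta, psi, dx, dy, dz, dphi, dtheta, dpsi]
    u = [u1 (total thrust), u2, u3, u4 (roll, pitch, yaw moments)]
    d = six lumped uncertainty terms added to the accelerations (zeros if None).
    """
    d = np.zeros(6) if d is None else np.asarray(d, dtype=float)
    phi, theta, psi = state[3:6]
    dphi, dtheta, dpsi = state[9:12]
    u1, u2, u3, u4 = u
    cphi, sphi = np.cos(phi), np.sin(phi)
    cth, sth = np.cos(theta), np.sin(theta)
    cpsi, spsi = np.cos(psi), np.sin(psi)
    acc = np.array([
        (cphi * sth * cpsi + sphi * spsi) * u1 / p.m,
        (cphi * sth * spsi - sphi * cpsi) * u1 / p.m,
        cphi * cth * u1 / p.m - p.g,
        dtheta * dpsi * (p.Iy - p.Iz) / p.Ix + p.l * u2 / p.Ix,
        dphi * dpsi * (p.Iz - p.Ix) / p.Iy + p.l * u3 / p.Iy,
        dphi * dtheta * (p.Ix - p.Iy) / p.Iz + u4 / p.Iz,
    ])
    return acc + d


def euler_step(state, u, p, dt, d=None):
    """One explicit Euler step of the 12-state model."""
    state = np.asarray(state, dtype=float)
    deriv = np.concatenate([state[6:], quad_accelerations(state, u, p, d)])
    return state + dt * deriv
```

At hover (level attitude, thrust equal to `m * g`, no moments, no uncertainty) all accelerations vanish, and a positive pitch angle tilts the thrust vector toward the positive x axis.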

2.5. Tracking Control System Design and Stability Analysis

Let us now develop a wavelet-based visual flight tracking control system for the attitude, altitude, and virtual position dynamics of the quadrotor aerial vehicle. For simplicity, the position and velocity states of the vehicle, as well as those of the camera, are defined in stacked vector form. In our analysis, the goal is to show that the states of the vehicle converge asymptotically to the desired camera states, provided that the position and velocity states of the camera are bounded and converge exponentially to zero.

In the design and analysis, the model parameters of the vehicle are assumed to be constant, and the bounds of the disturbances are known and lie within given compact sets. Then, because of these two assumptions, it is possible to develop a control algorithm such that the visual tracking system ensures the boundedness of the position and velocity states of the vehicle, provided that the desired position and velocity of the camera are bounded, as derived in the previous subsection. The design considers the attitude dynamics to be fully actuated and linearized by decoupling the first three terms in model (27). It also assumes that the attitude dynamics are faster than the translational dynamics. We now introduce the following visual tracking control algorithm for the thruster input

where the desired linear velocity of the camera is generated by the z component of the camera velocity vector, and the desired thrust in the z direction is applied to the vehicle. The goal now is to develop wavelet-based image-guided attitude control laws for the roll, pitch, and yaw moments of the quadrotor. The roll and pitch control laws are used to control the translation along the x and y axes. To design these laws, the desired roll, pitch, and yaw angles and their angular rates are required. The desired angular rates of the roll, pitch, and yaw angles are obtained from the fourth, fifth, and sixth components of the camera velocity, and the desired roll and pitch angles are obtained from the following relationship

where the virtual input algorithms are designed as

with the corresponding design parameters. Then, the attitude control algorithms for the rolling, pitching, and yawing moments can be designed as

with the corresponding design parameters. To show tracking error convergence with controllers (30)–(33), we first derive the tracking error model in the following state-space form

where the error states and system matrices are defined accordingly. The Lyapunov method is used to show the convergence of the closed-loop system. To this end, a Lyapunov function candidate is chosen with positive definite weighting. In view of the two assumptions and using a standard bounding inequality, the time derivative of the Lyapunov function along the closed-loop tracking error models formulated by (30)–(36) can be bounded from above. Hence, there exist control design parameters such that the visual tracking control algorithms (30)–(33) ensure that the position and velocity states of the vehicle are bounded and asymptotically stable, provided that the position and velocity of the camera are bounded and converge exponentially to zero, as derived in the previous subsection. Consequently, all error signals in the closed-loop tracking error systems are bounded and asymptotically stable in the Lyapunov sense.
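The desired roll and pitch angles are typically recovered by inverting the horizontal thrust directions of the translational model. Assuming the virtual inputs are the normalized direction components $u_x = \cos\phi\sin\theta\cos\psi + \sin\phi\sin\psi$ and $u_y = \cos\phi\sin\theta\sin\psi - \sin\phi\cos\psi$ of a common quadrotor model, one has $u_x\sin\psi - u_y\cos\psi = \sin\phi$ and $u_x\cos\psi + u_y\sin\psi = \cos\phi\sin\theta$, which gives the inversion below. The paper's relationship may scale or define the virtual inputs differently, and the function names are hypothetical; the inversion is valid while the attitude angles stay within $(-\pi/2, \pi/2)$, in line with assumption (i).

```python
import numpy as np


def desired_roll_pitch(ux, uy, psi_d):
    """Desired roll and pitch from normalized horizontal thrust directions (assumed model)."""
    s_phi = ux * np.sin(psi_d) - uy * np.cos(psi_d)          # equals sin(phi)
    phi_d = np.arcsin(np.clip(s_phi, -1.0, 1.0))
    s_theta = (ux * np.cos(psi_d) + uy * np.sin(psi_d)) / np.cos(phi_d)  # sin(theta)
    theta_d = np.arcsin(np.clip(s_theta, -1.0, 1.0))
    return phi_d, theta_d


def thrust_directions(phi, theta, psi):
    """Forward map from attitude to the normalized horizontal thrust directions."""
    ux = np.cos(phi) * np.sin(theta) * np.cos(psi) + np.sin(phi) * np.sin(psi)
    uy = np.cos(phi) * np.sin(theta) * np.sin(psi) - np.sin(phi) * np.cos(psi)
    return ux, uy
```

Feeding the output of `thrust_directions` back into `desired_roll_pitch` with the same yaw angle recovers the original roll and pitch angles.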

3. Design Synthesis and Test Results

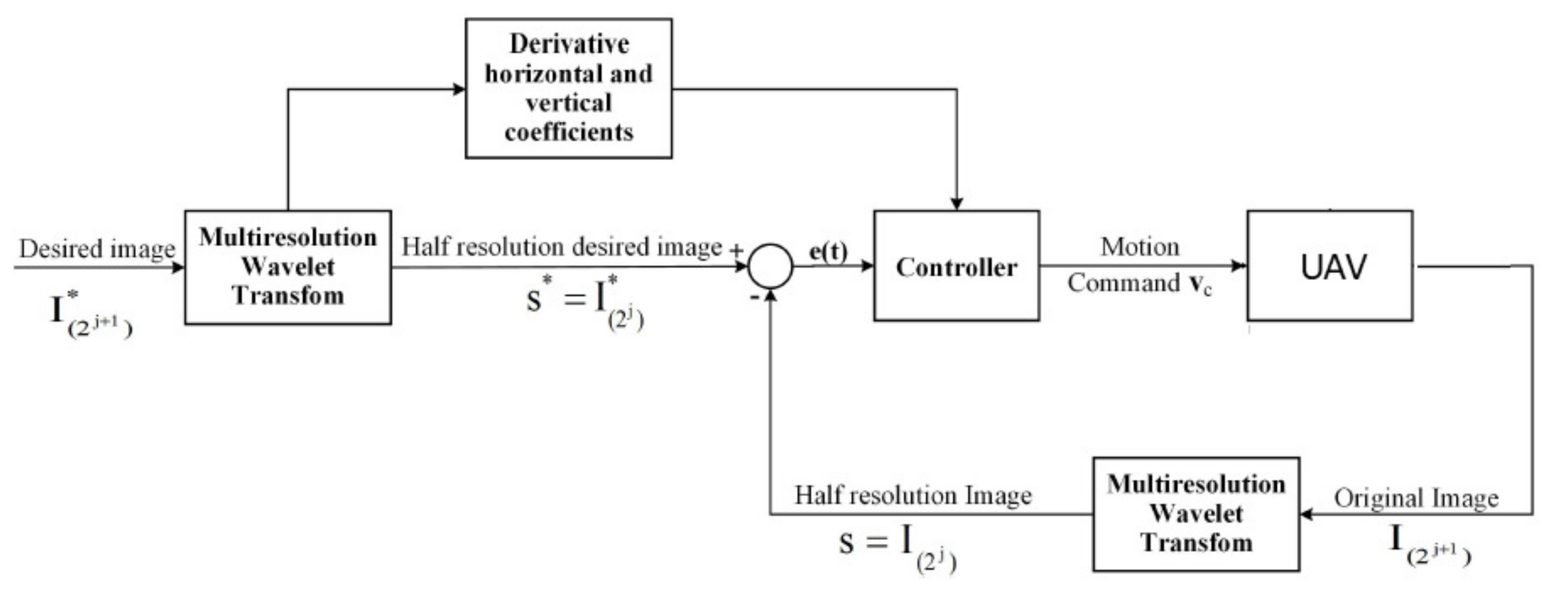

This section presents the test results of the proposed wavelet-based visual tracking system on a quadrotor aerial vehicle. The implementation block diagram of the wavelet-based autonomous visual tracking system is depicted in Figure 6.

Figure 6.

Implementation block diagram of the wavelet based visual tracking system.

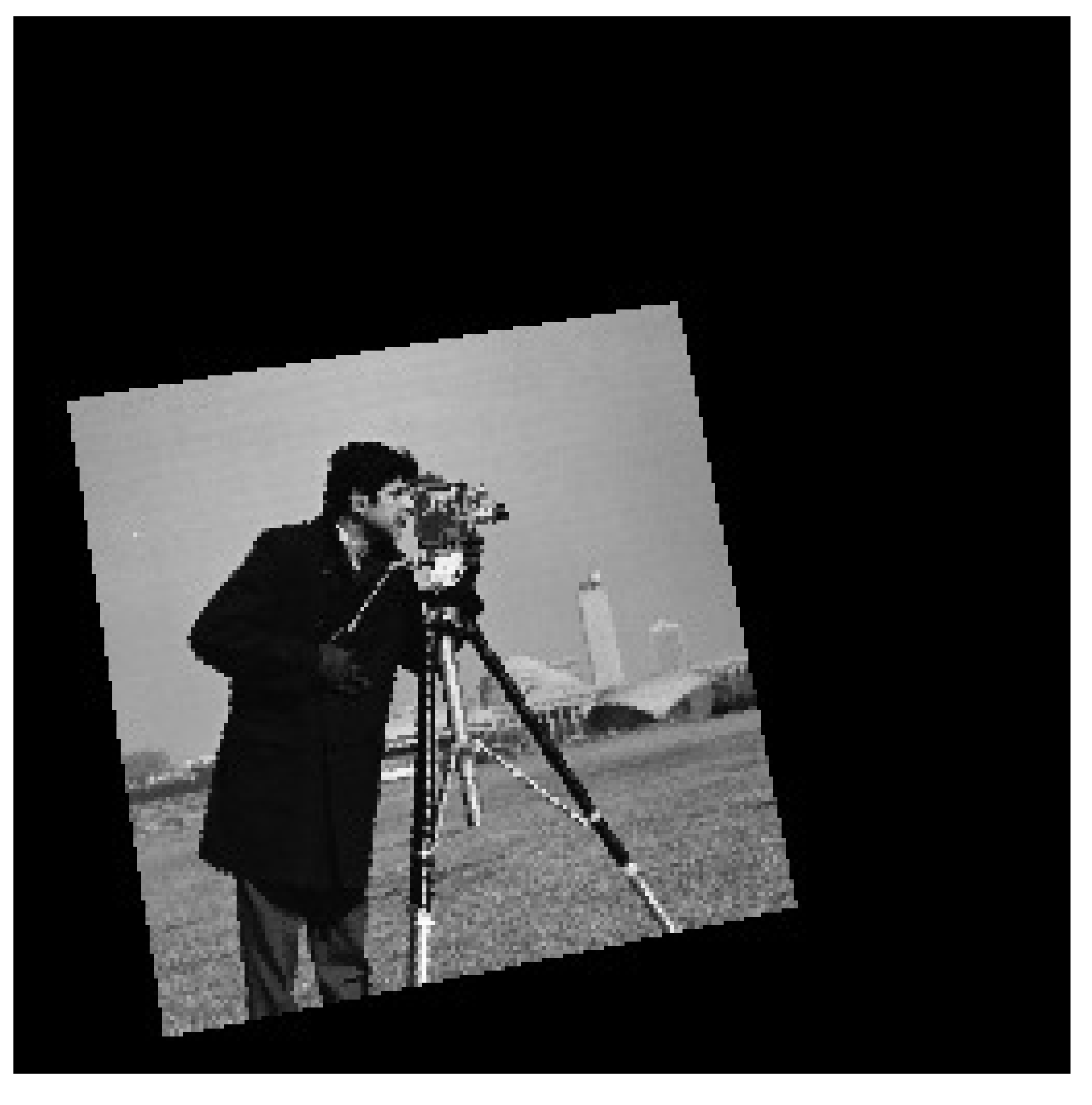

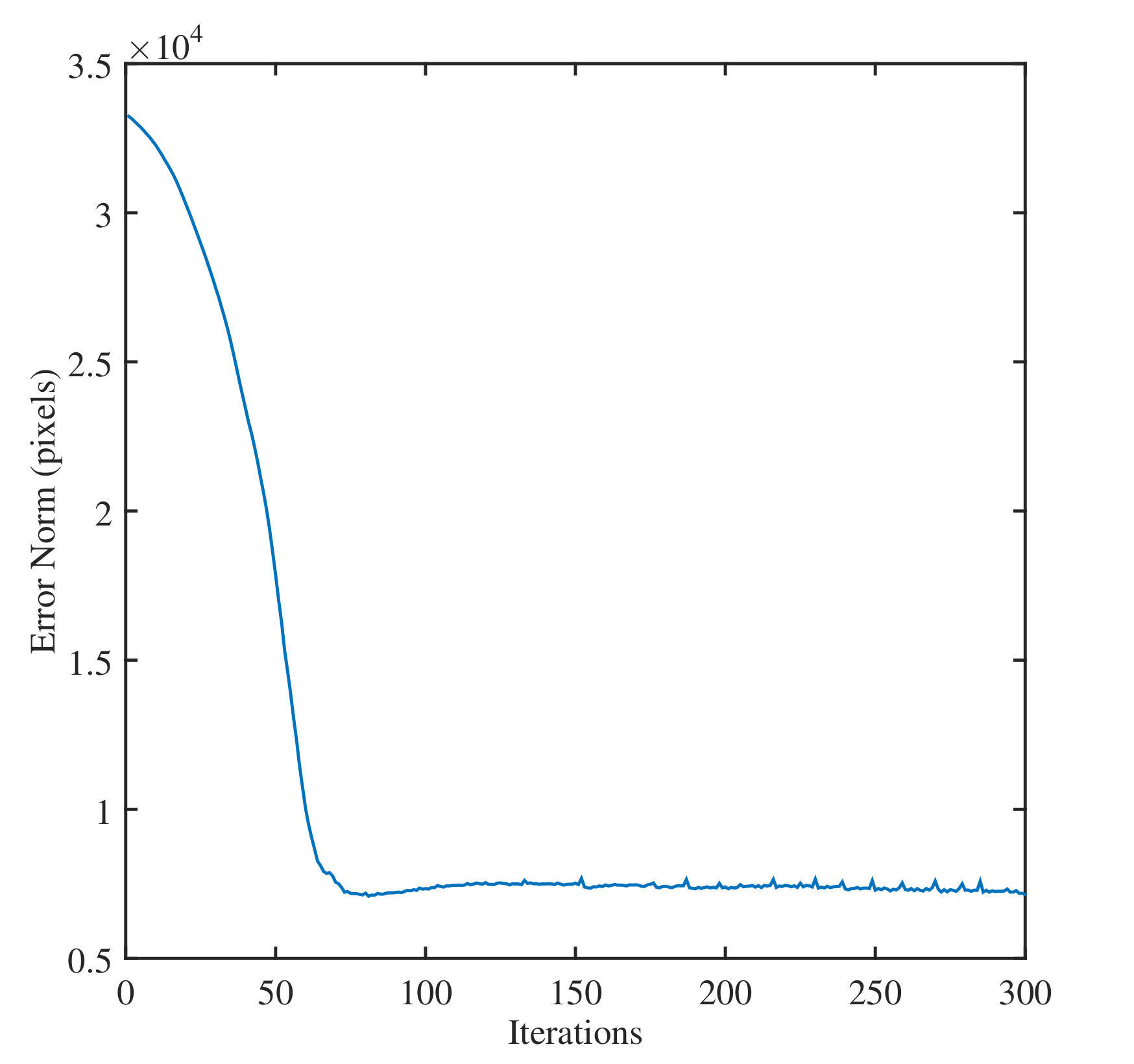

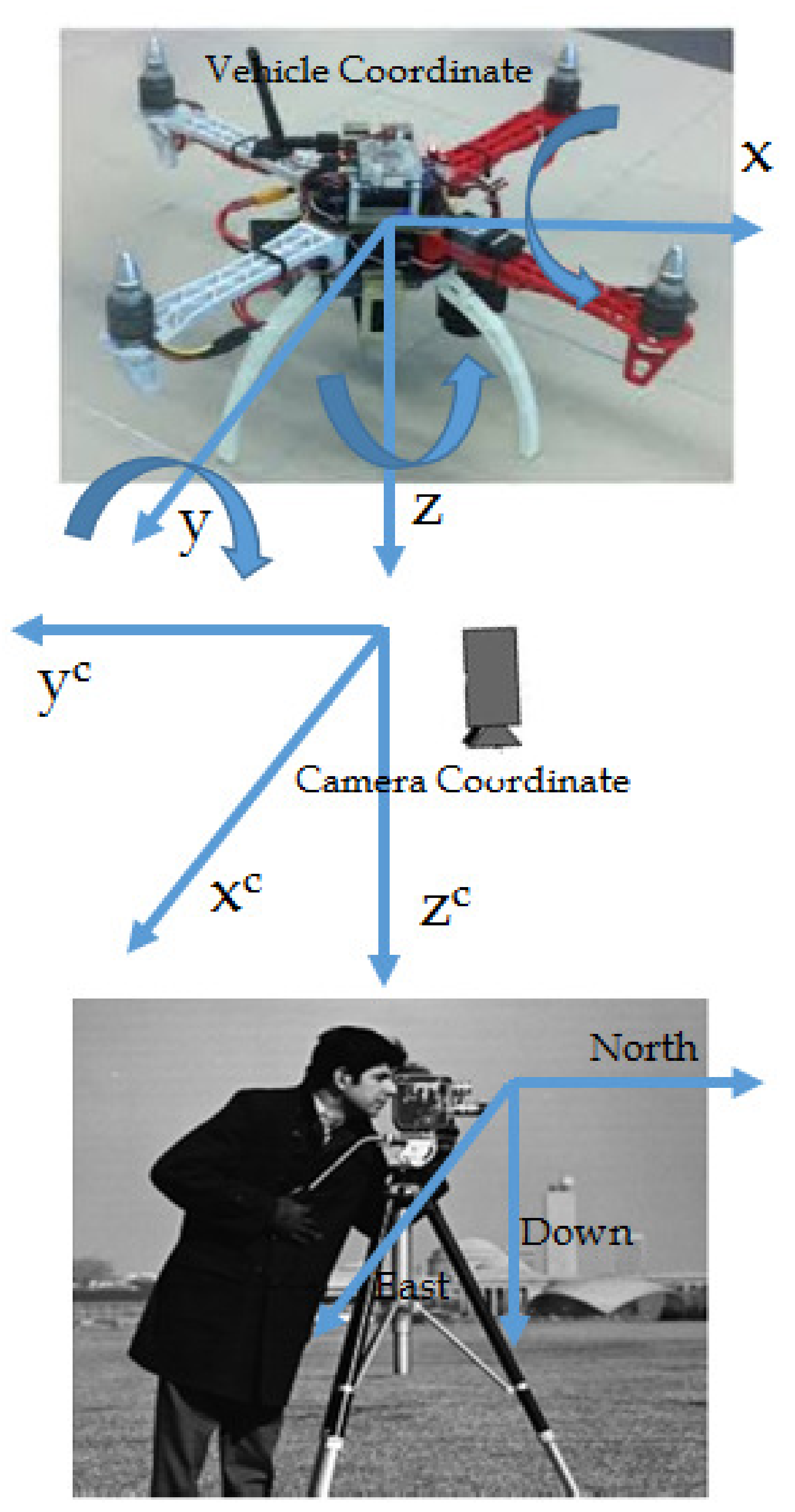

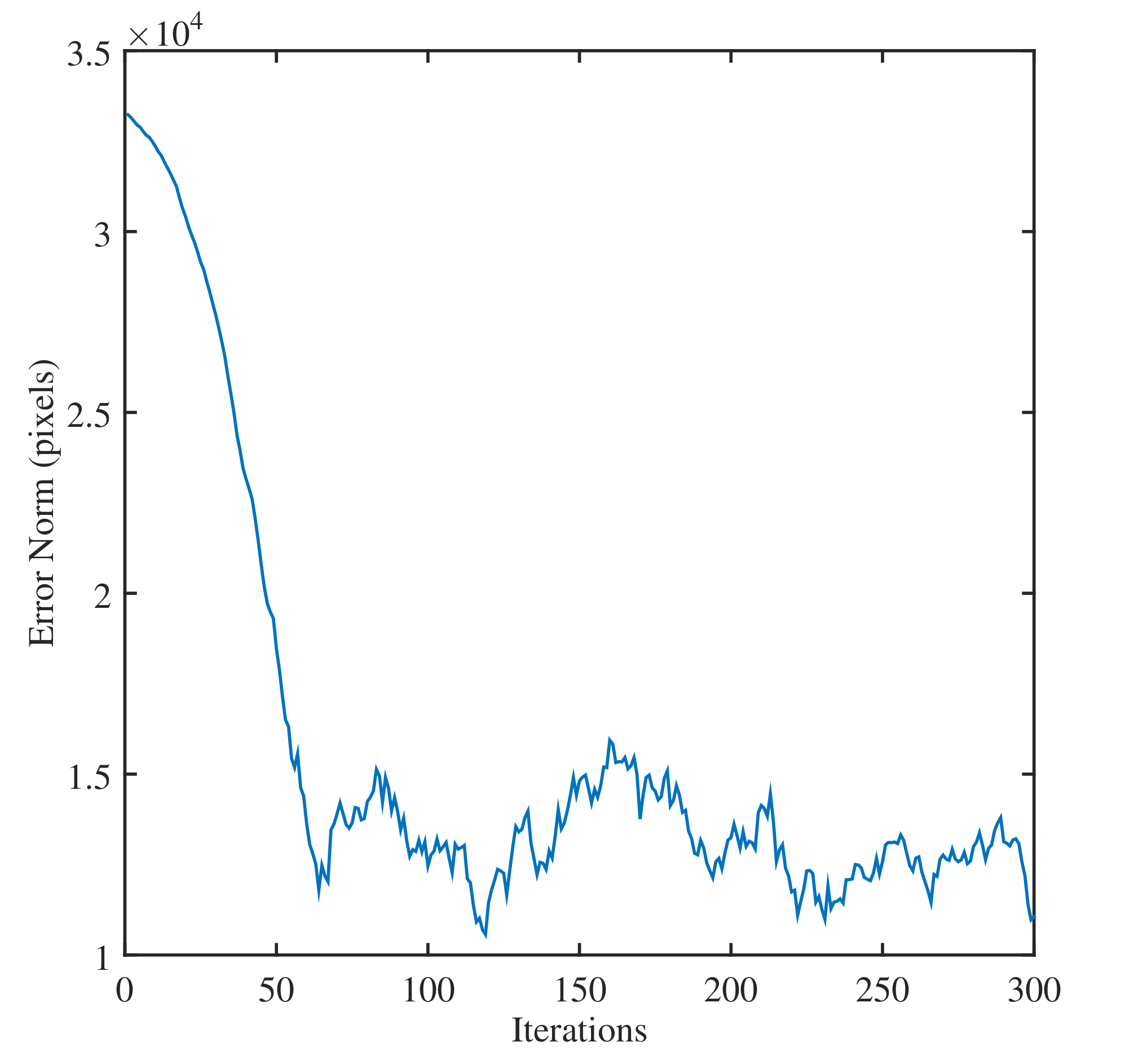

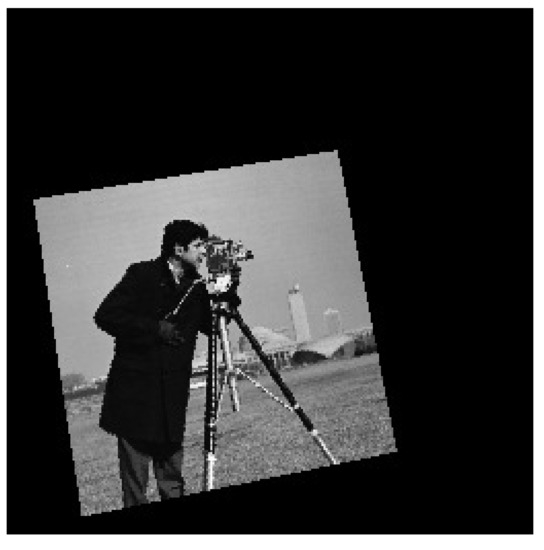

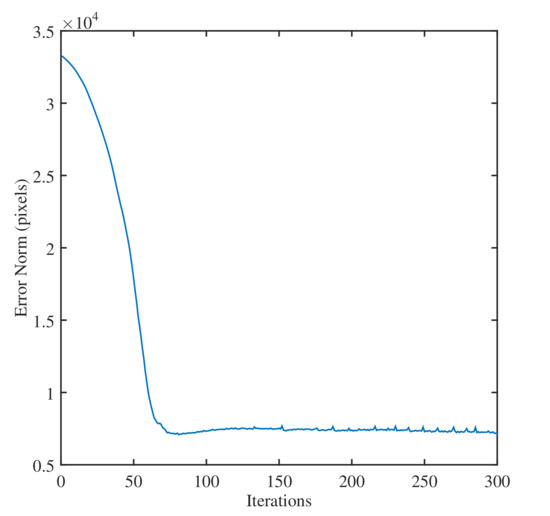

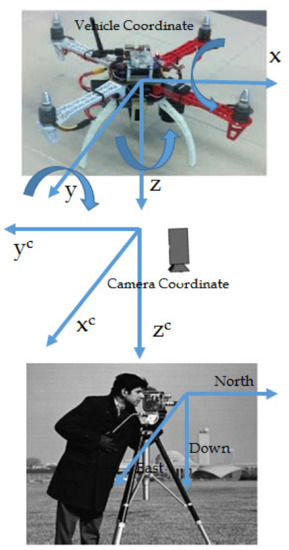

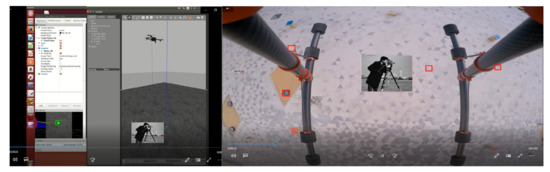

The test is conducted on a virtual quadrotor aerial vehicle system. The vehicle is equipped with a downward-facing camera attached at its center. In our test, a known image is used as the reference target object, from which the 3D position of the vehicle is estimated. The target is aligned so that its axes are parallel to the local North-East plane axes. Before the test, the camera is calibrated to obtain its intrinsic parameters. The focal length and sensor size are selected as (mm) and m). Figure 7 shows the reference image decomposed into approximation, horizontal, vertical, and diagonal coefficients. For our test, the width and height of the image are selected as and pixels. The initial linear position and rotation of the vehicle and camera are defined as (m), (m), (m), , , and . The initial camera image is shown in Figure 8, and Figure 9 depicts the initial image together with the reference image. Using the proposed MWT-based visual tracking design, the final image is shown in Figure 10. Figure 11 presents the error image between the final image and the reference image, and the error norm of the MWT-based visual tracking system is depicted in Figure 12. The parameters of the evaluated quadrotor aerial vehicle are selected as , , , (kg), (m), (m/s), and .
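The calibration step converts the focal length and sensor size into pixel units, so that pixel positions can be expressed in the normalized coordinates used by the interaction matrix. The sketch below assumes an ideal pinhole camera with no skew, no lens distortion, and the principal point at the image center; the function names and the numbers in the test are placeholders, since the paper's values are not recoverable here.

```python
import numpy as np


def intrinsic_matrix(f_mm, sensor_w_mm, sensor_h_mm, width_px, height_px):
    """Pinhole intrinsics from focal length and sensor size (no skew, centered principal point)."""
    fx = f_mm * width_px / sensor_w_mm   # focal length in pixels along x
    fy = f_mm * height_px / sensor_h_mm  # focal length in pixels along y
    return np.array([[fx, 0.0, width_px / 2.0],
                     [0.0, fy, height_px / 2.0],
                     [0.0, 0.0, 1.0]])


def pixels_to_normalized(u, v, K):
    """Map pixel coordinates (u, v) to normalized image coordinates (x, y), ignoring distortion."""
    return (u - K[0, 2]) / K[0, 0], (v - K[1, 2]) / K[1, 1]
```

For example, with an 8 mm lens on a 6.4 mm x 4.8 mm sensor imaged at 640 x 480 pixels (placeholder values), the focal length is 800 pixels in both directions and the image center maps to the normalized origin.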

Figure 7.

The wavelet decomposition of the reference image approximation-horizontal, vertical, and diagonal coefficients [38] (© 2018 IEEE).

Figure 8.

The initial image used in our test [38] (© 2018 IEEE).

Figure 9.

The initial and reference image [38] (© 2018 IEEE).

Figure 10.

The final image based on using MWT based visual tracking system [38] (© 2018 IEEE).

Figure 11.

The final image difference with respect to the reference image [38] (© 2018 IEEE).

Figure 12.

The profile of the error norm of the wavelet based visual tracking system [38] (© 2018 IEEE).

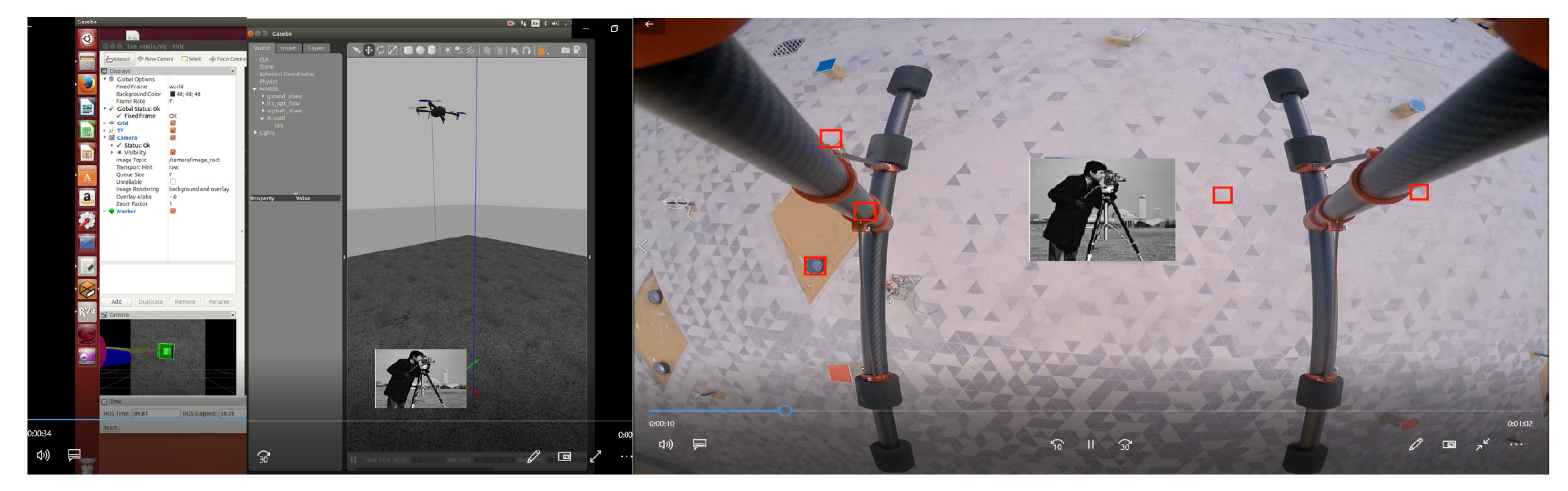

The controller design parameters for the aerial vehicle are selected as , , , , , , and . The parameters of the visual tracking controller are selected as and . The sampling time for all tests is chosen as (s). In our test, the camera position with respect to the vehicle is assumed to be known and constant. Figure 13 depicts the coordinate systems of the vehicle, camera, and target object, and Figure 14 presents the image of the real scene of the flying vehicle interacting with the target object. To test the wavelet-based design on a quadrotor vehicle, we first generate the vehicle's position matrix with respect to the ground by using a homogeneous transformation matrix.

Figure 13.

The coordinate systems of the vehicle, camera, and target object.

Figure 14.

The image of the real scene of the flying vehicle interacting with target object.

The vehicle's position and orientation states are used to obtain the homogeneous transformation matrix, whose rotation and translation elements are defined accordingly.
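The frame bookkeeping of this step can be sketched as follows: a homogeneous transformation is built from the vehicle's position and roll-pitch-yaw angles, composed with a constant vehicle-to-camera offset, and a 6 x 6 velocity twist transformation maps a camera twist to the vehicle frame. The Z-Y-X rotation convention, the 10 cm camera offset in the test, and the use of the standard twist transformation for the camera-to-vehicle velocity mapping are assumptions; the paper's matrices are not reproduced here.

```python
import numpy as np


def rotation_zyx(phi, theta, psi):
    """R = Rz(psi) @ Ry(theta) @ Rx(phi), the roll-pitch-yaw convention assumed here."""
    cf, sf = np.cos(phi), np.sin(phi)
    ct, st = np.cos(theta), np.sin(theta)
    cp, sp = np.cos(psi), np.sin(psi)
    Rx = np.array([[1.0, 0.0, 0.0], [0.0, cf, -sf], [0.0, sf, cf]])
    Ry = np.array([[ct, 0.0, st], [0.0, 1.0, 0.0], [-st, 0.0, ct]])
    Rz = np.array([[cp, -sp, 0.0], [sp, cp, 0.0], [0.0, 0.0, 1.0]])
    return Rz @ Ry @ Rx


def homogeneous(R, p):
    """4 x 4 homogeneous transformation from rotation R and translation p."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = p
    return T


def skew(t):
    """Matrix such that skew(t) @ w == np.cross(t, w)."""
    return np.array([[0.0, -t[2], t[1]], [t[2], 0.0, -t[0]], [-t[1], t[0], 0.0]])


def twist_transform(T):
    """6 x 6 map of a twist (v, w) from frame b to frame a, where T is the pose of b in a."""
    R, t = T[:3, :3], T[:3, 3]
    V = np.zeros((6, 6))
    V[:3, :3] = R
    V[:3, 3:] = skew(t) @ R
    V[3:, 3:] = R
    return V
```

The camera pose with respect to the ground follows as `T_gc = T_gv @ T_vc`, and `twist_transform(T_vc) @ v_camera` expresses a camera velocity as the velocity of the rigidly attached vehicle. For instance, a camera 10 cm below the vehicle center rotating about its x axis implies a vehicle-center translation along y.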

Applying the known constant transformation matrix, the camera position with respect to the ground can also be calculated. The desired velocity signals are obtained from the camera velocities by using a transformation matrix linking the camera to the vehicle. Then, the visual tracking control laws with the virtual control inputs are implemented to drive the quadrotor to the desired position and velocity signals of the attached camera. The tests are conducted for four cases. In the first case, the vehicle dynamics and inputs are assumed to be free from uncertainty. In the second case, the vehicle inputs are subjected to unknown random noise uncertainty.

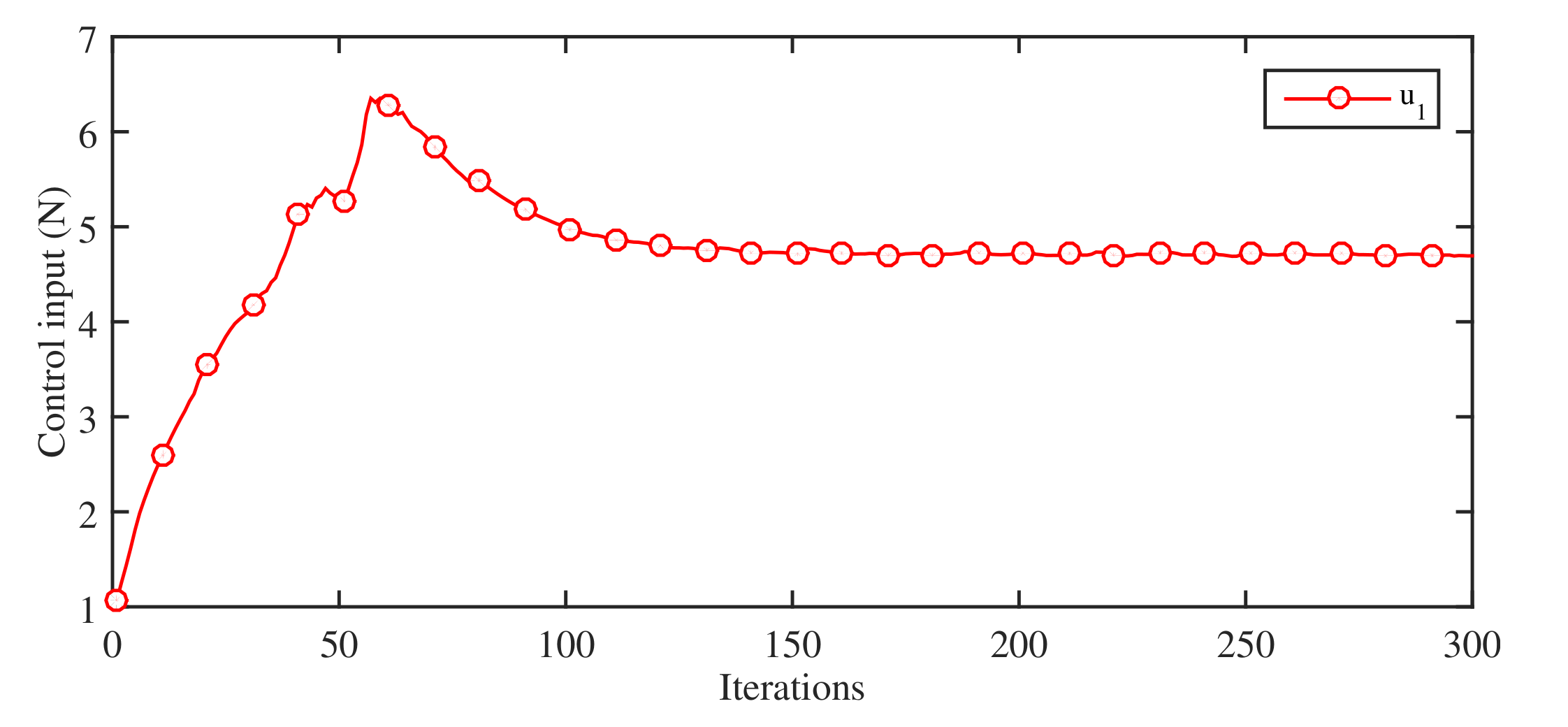

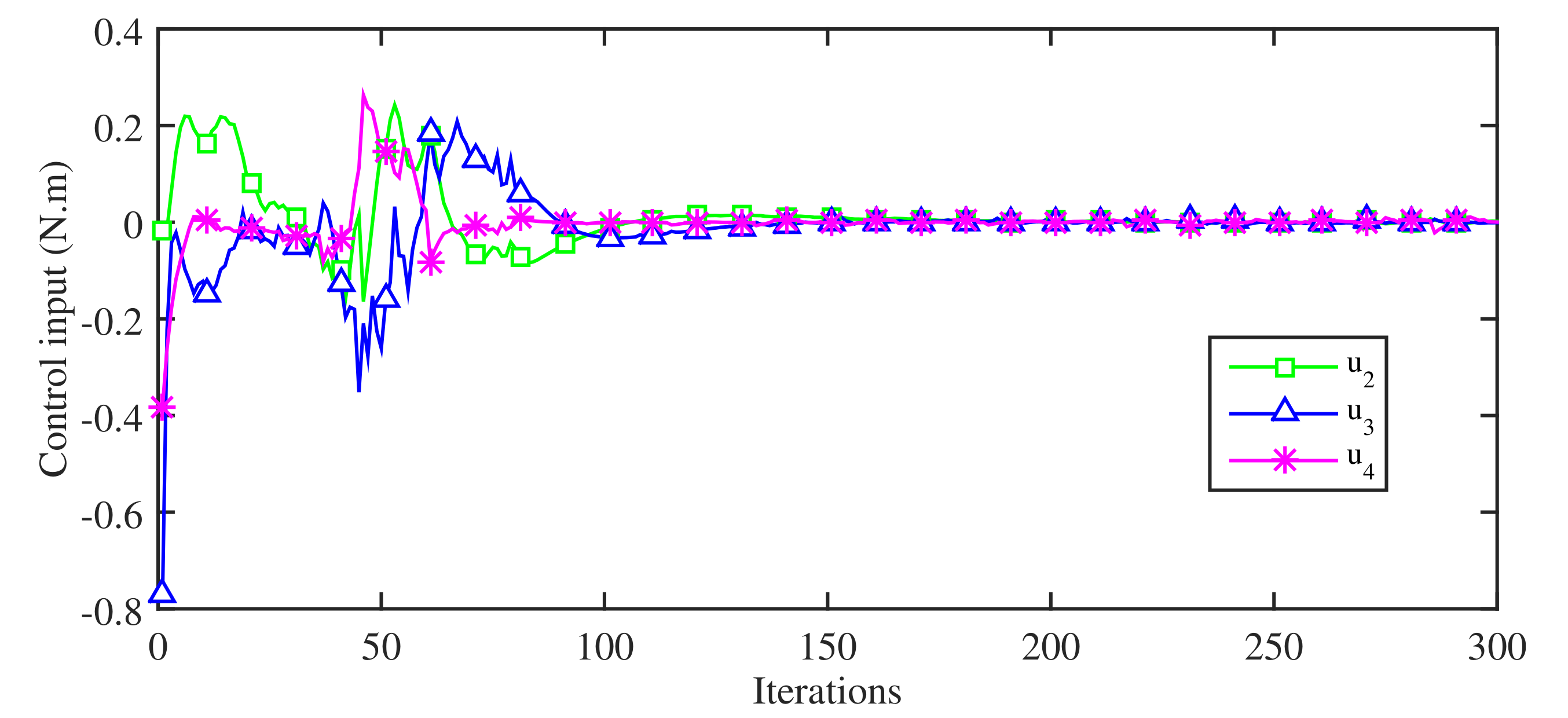

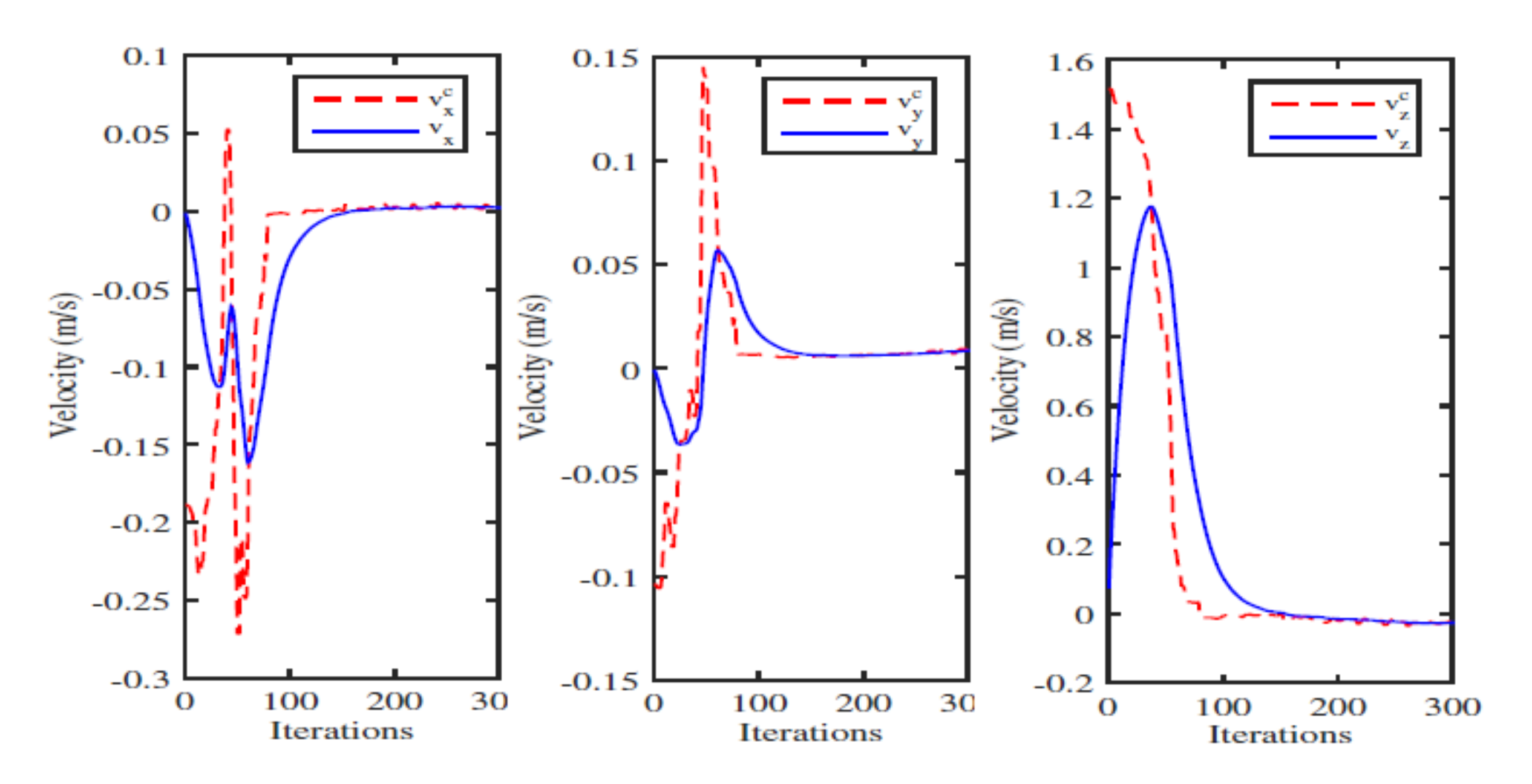

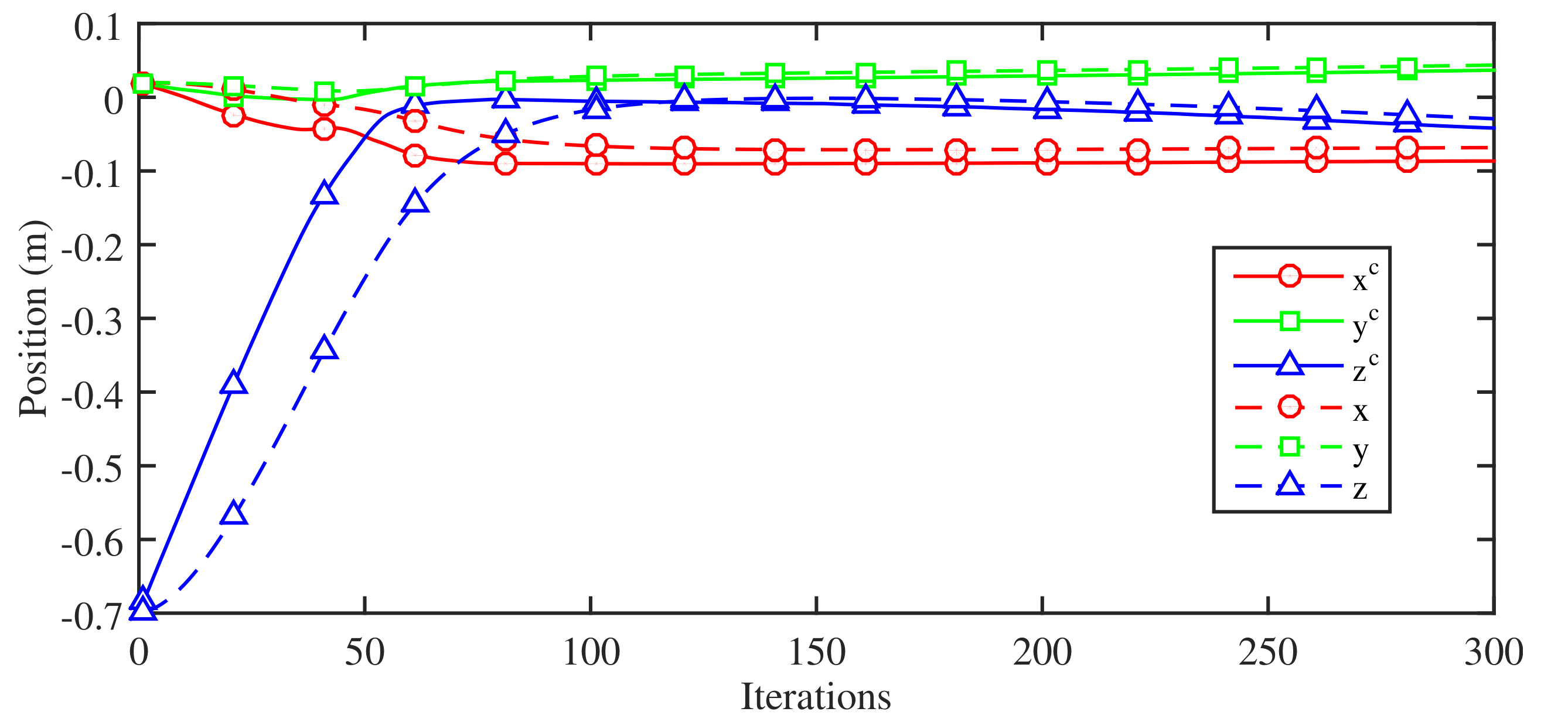

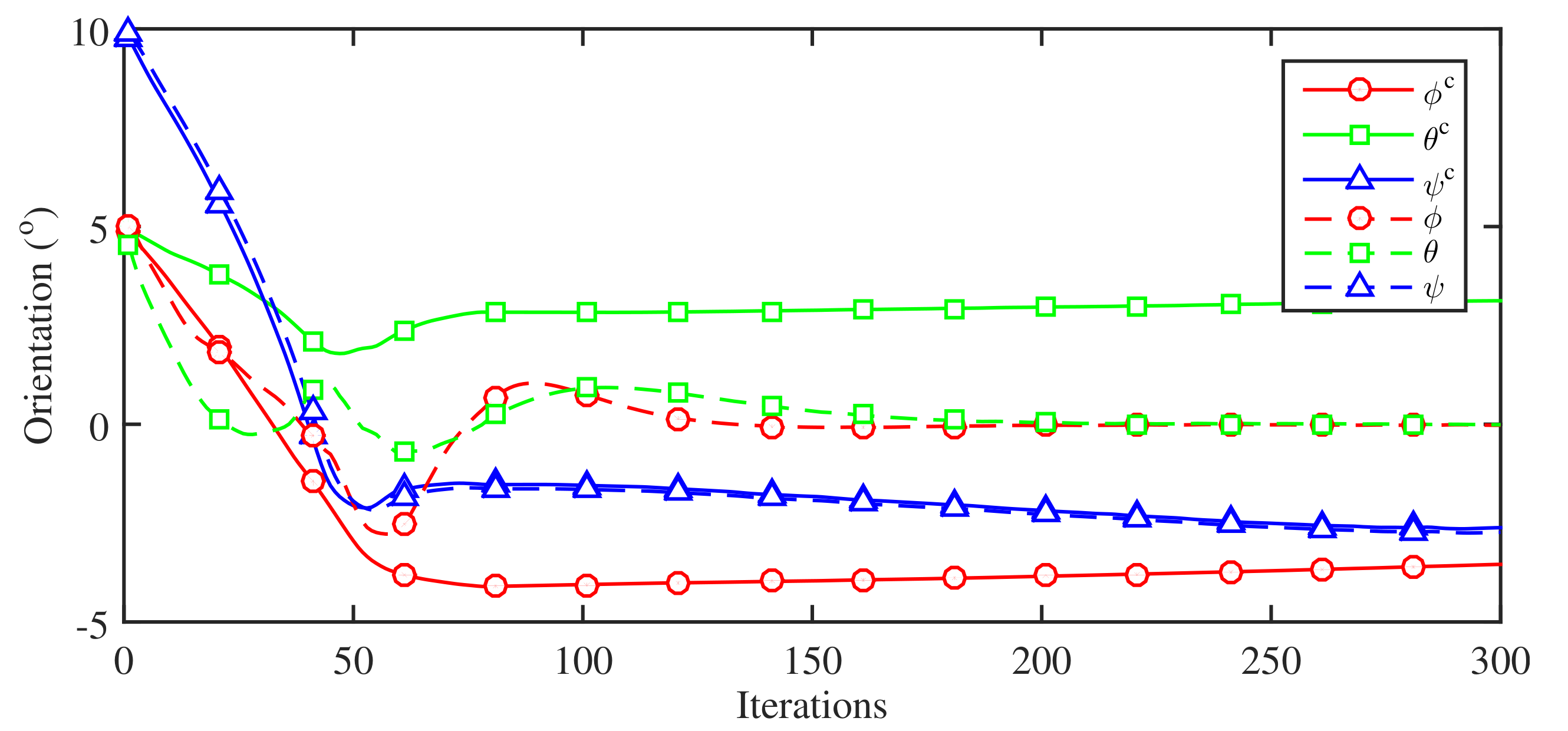

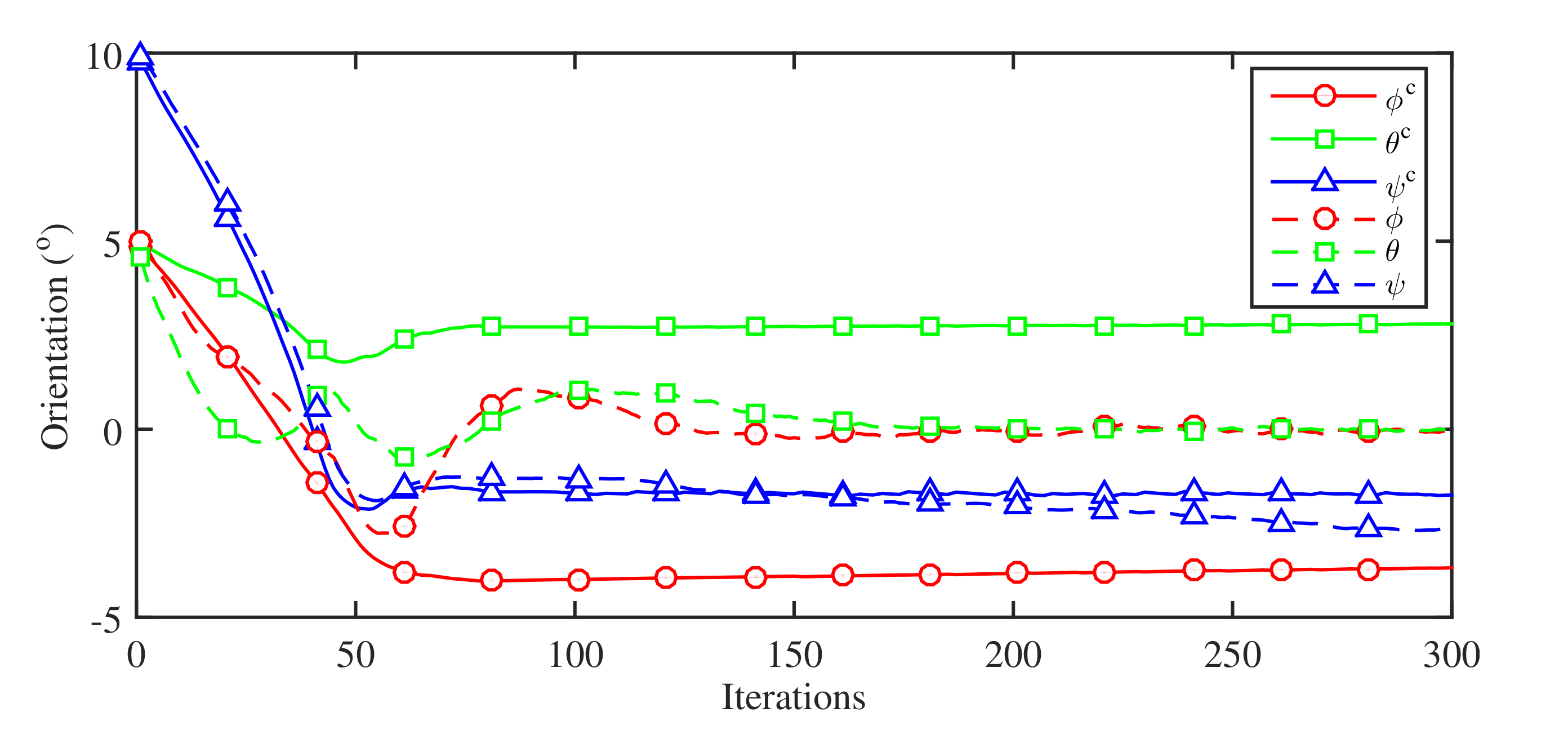

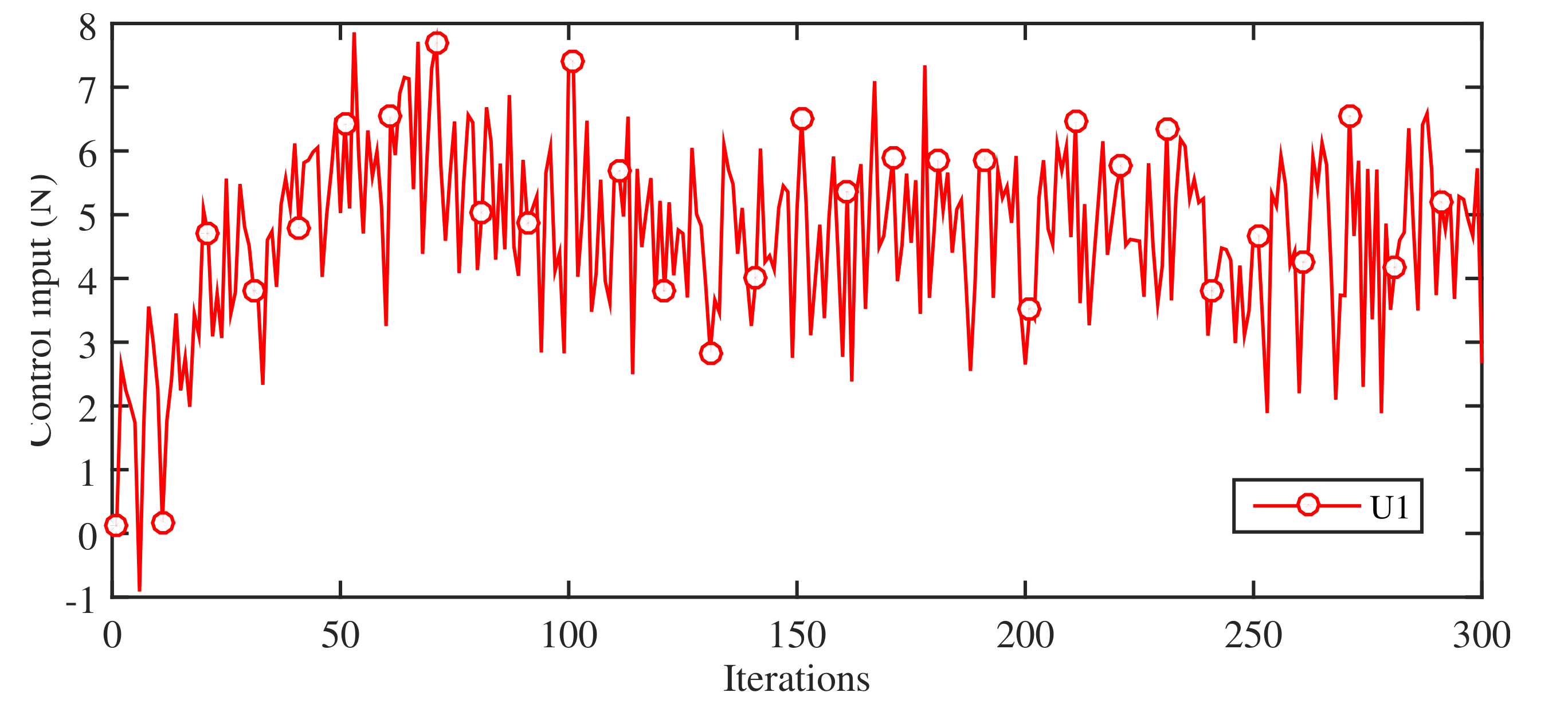

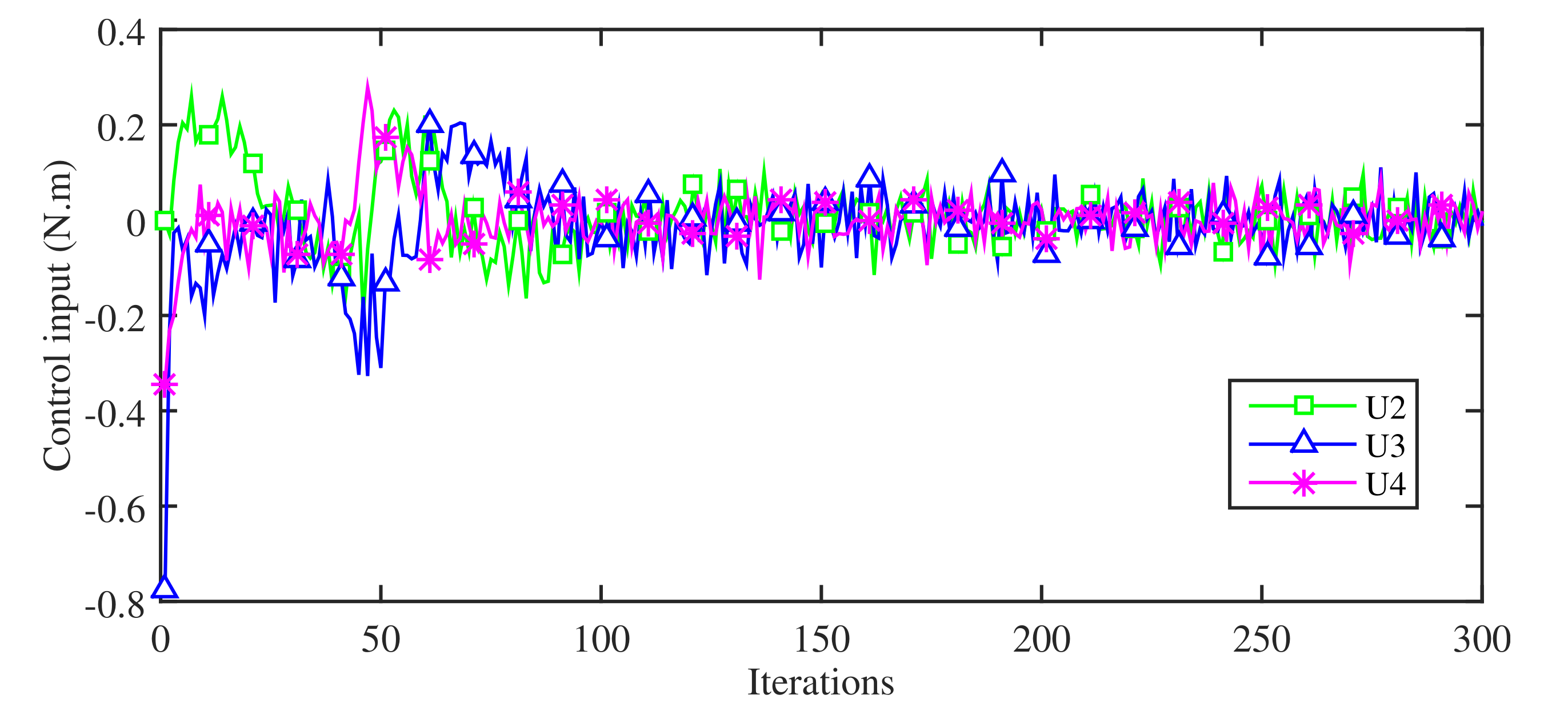

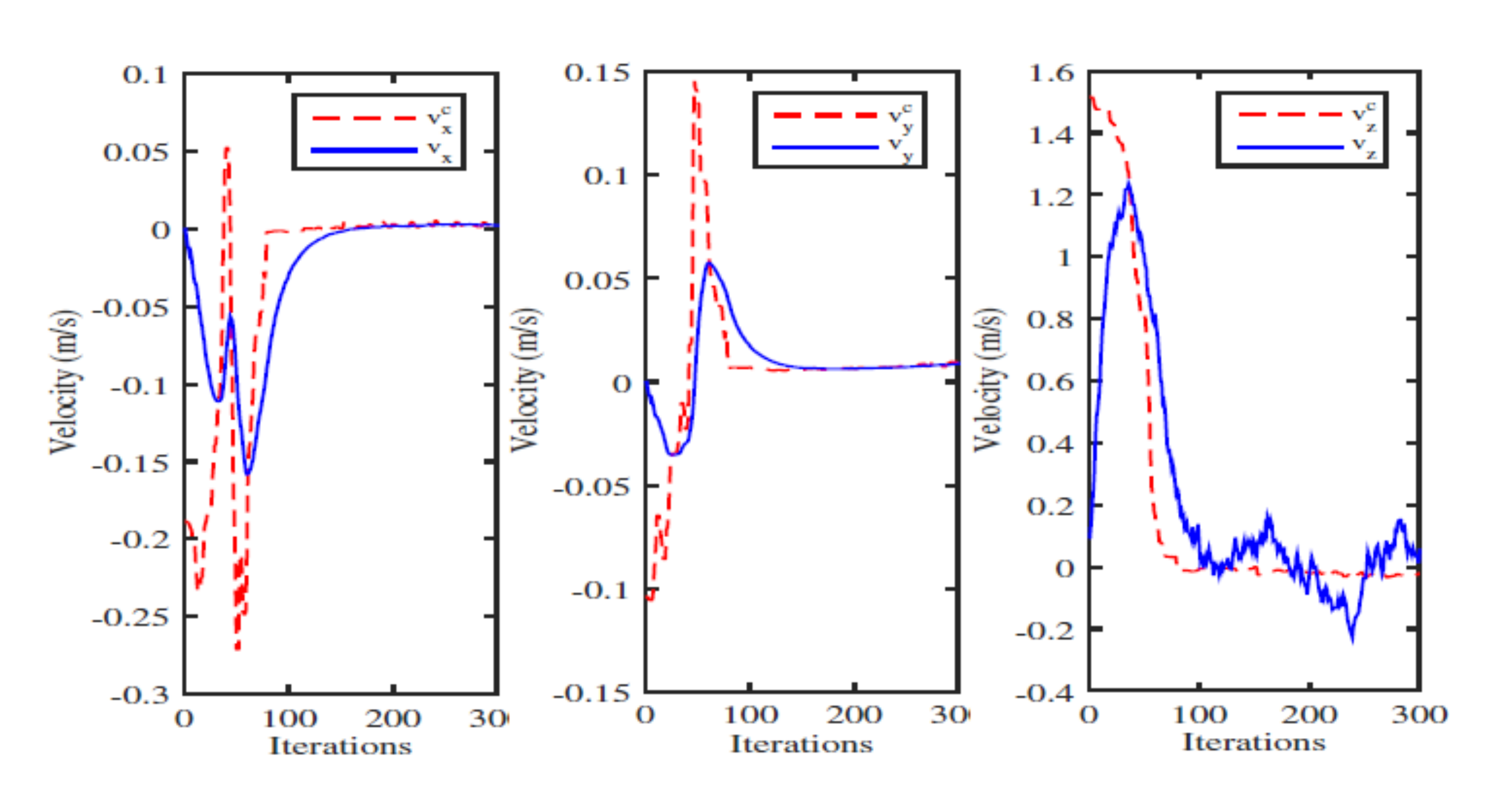

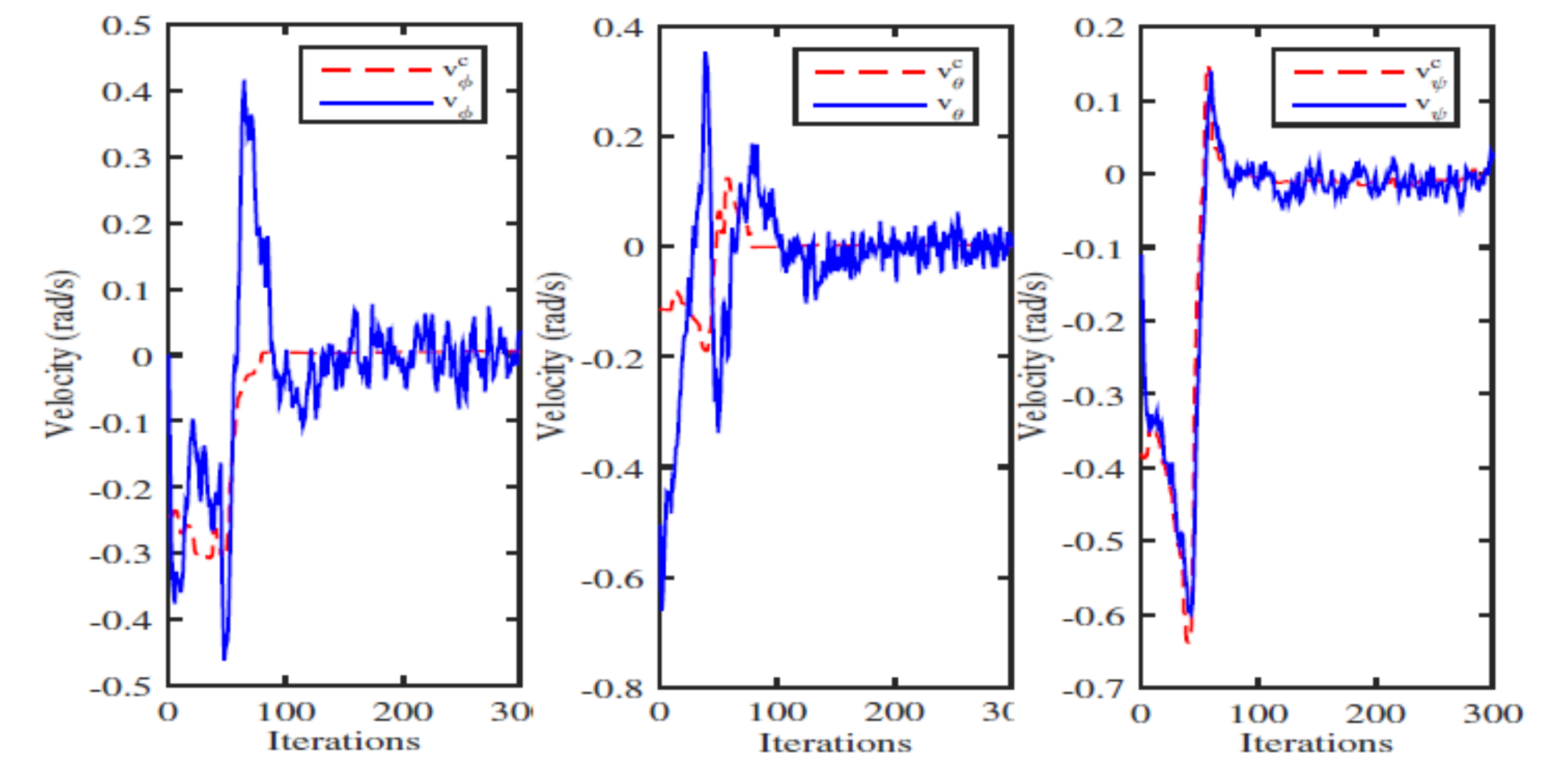

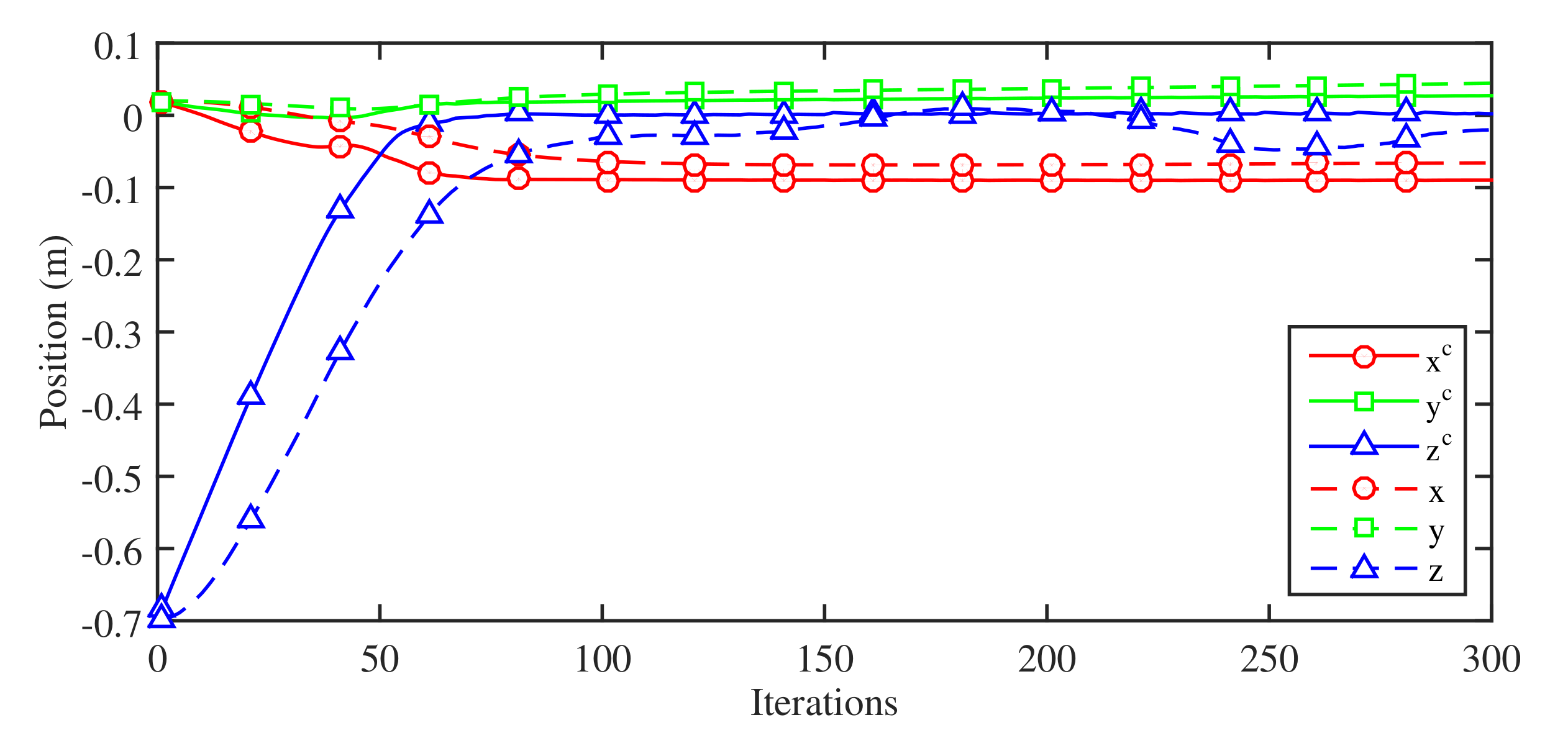

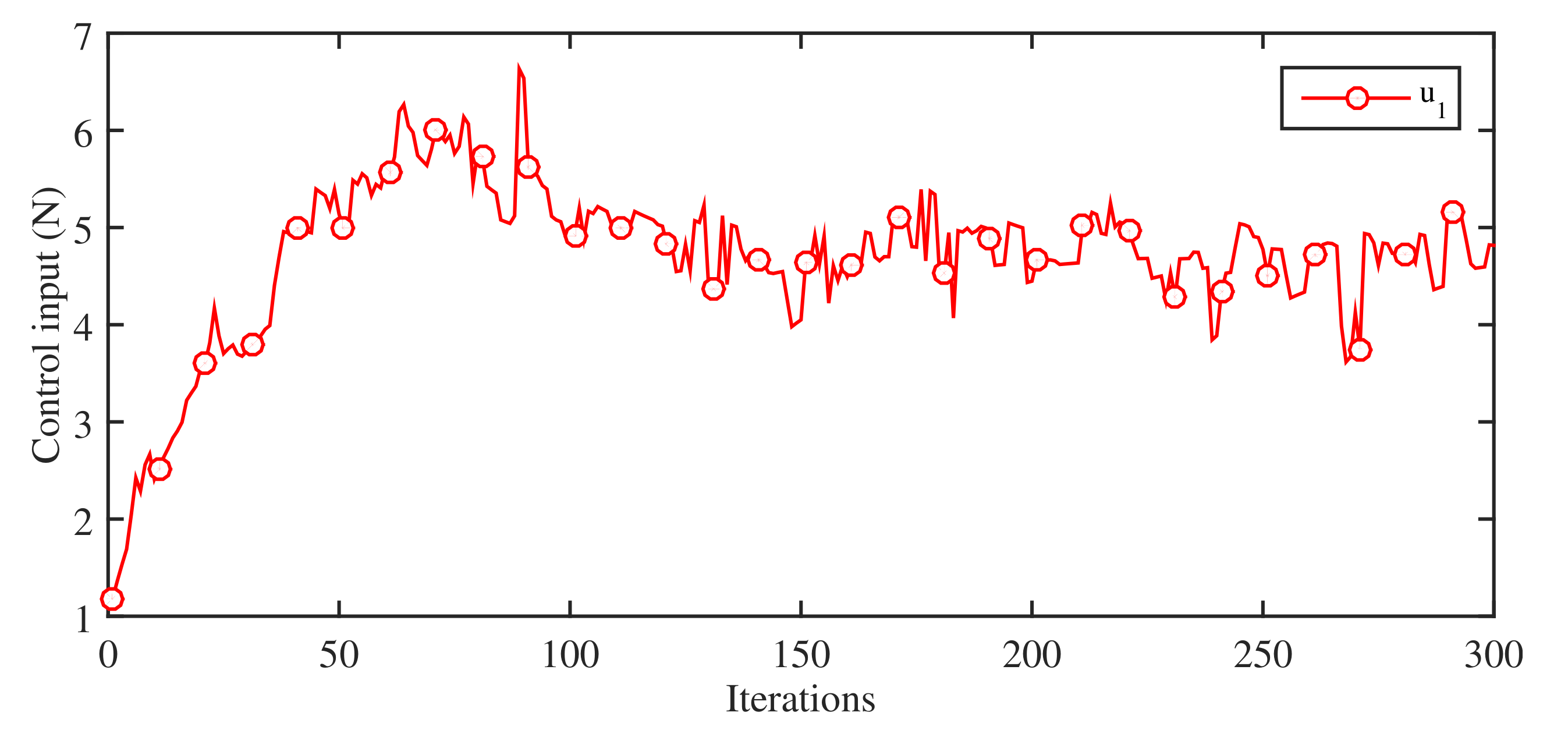

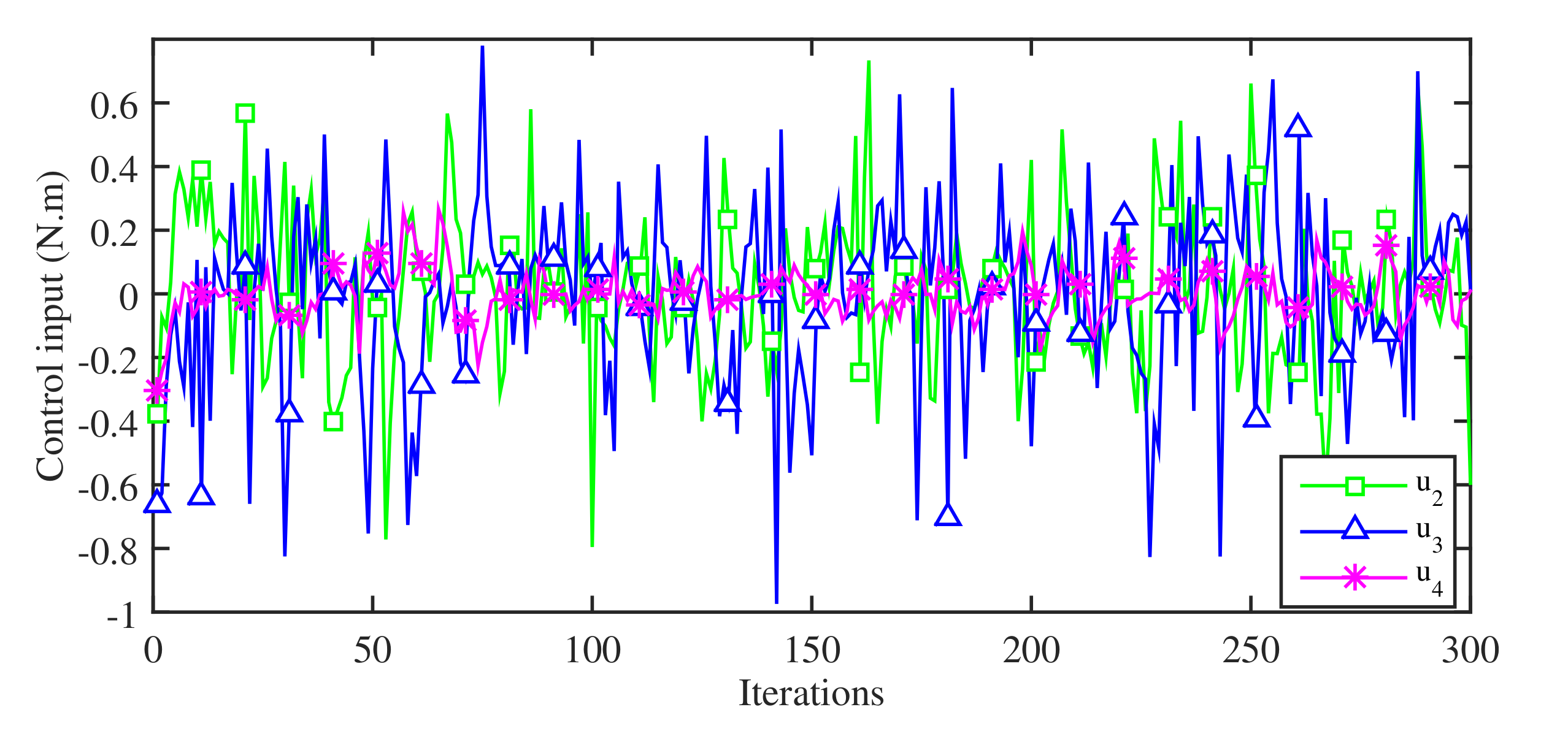

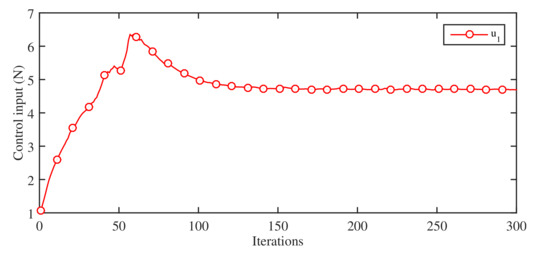

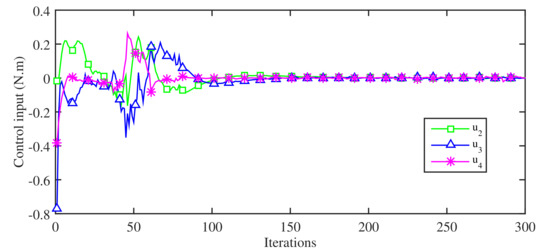

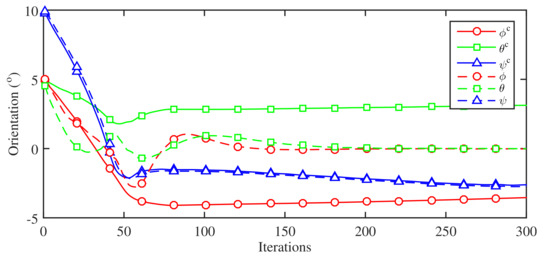

In the third case, the six acceleration measurements of the vehicle dynamics are subjected to unknown random noise uncertainty. The final case considers that the camera velocities are also subjected to unknown random noise uncertainty. Let us first test the proposed MWT-based visual tracking system on the quadrotor aerial vehicle with Case 1. Figure 15, Figure 16, Figure 17, Figure 18, Figure 19 and Figure 20 show the evaluation results of the proposed wavelet-based design for the vehicle without uncertainty, and Figure 15 and Figure 16 present the generated inputs.

Figure 15.

The input using wavelet based visual tracking system with Case 1.

Figure 16.

The control inputs , , with Case 1.

Figure 17.

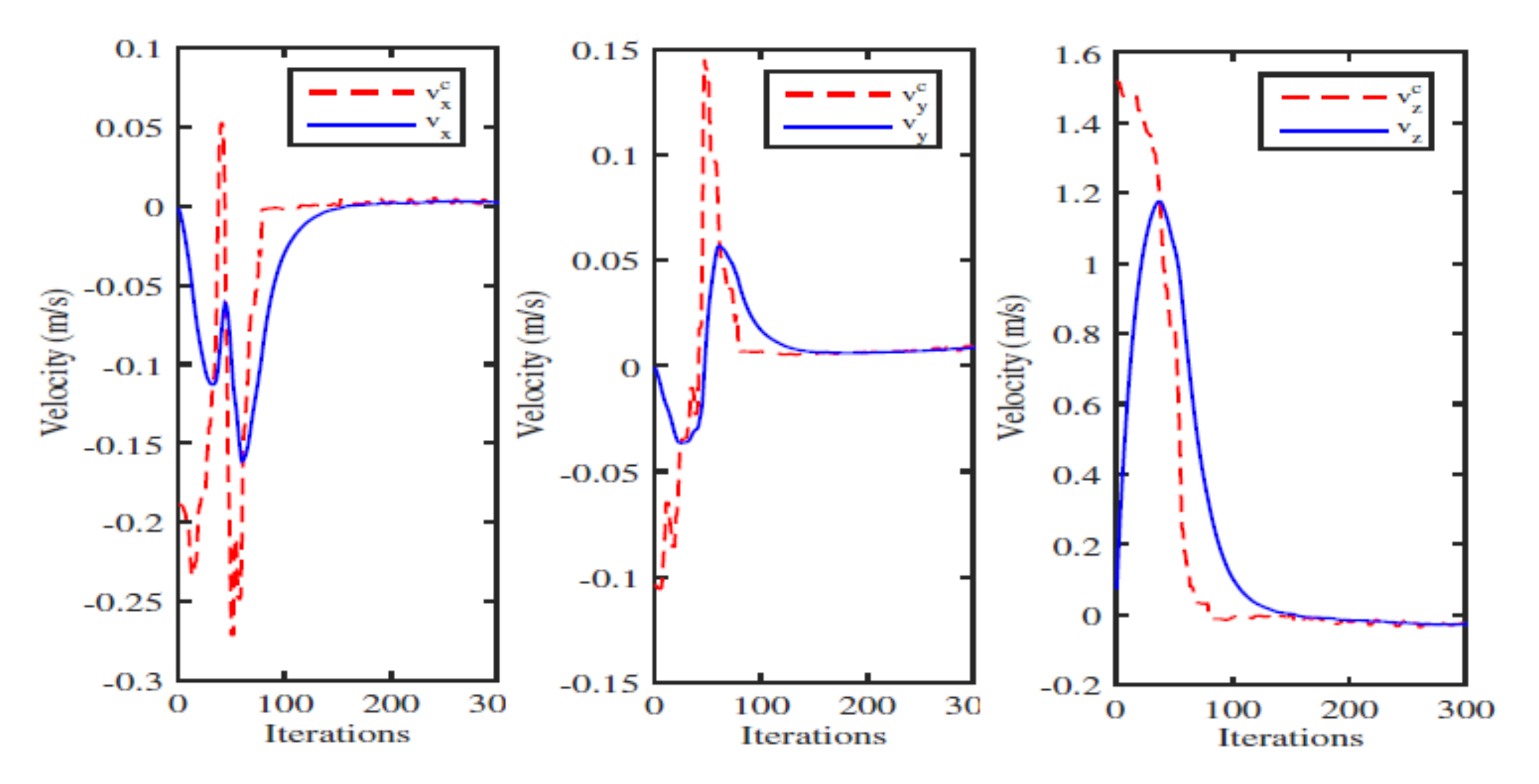

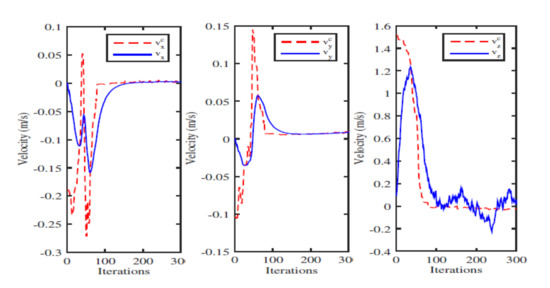

The desired and measured linear velocities in x, y, z direction with Case 1.

Figure 18.

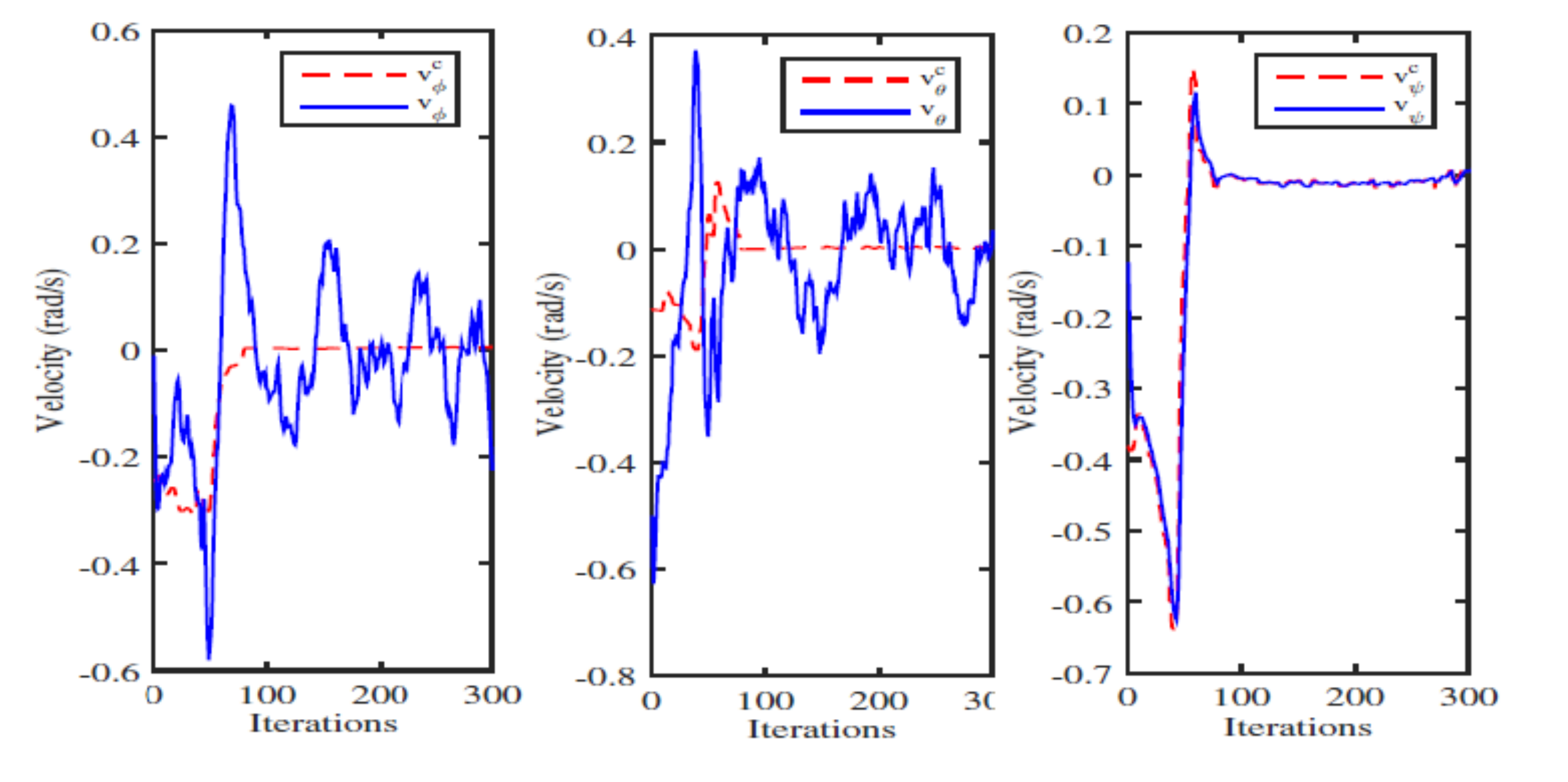

The desired and measured angular velocities in x, y, z direction with Case 1.

Figure 19.

The desired and measured linear position with Case 1.

Figure 20.

The desired and measured angular position with Case 1.

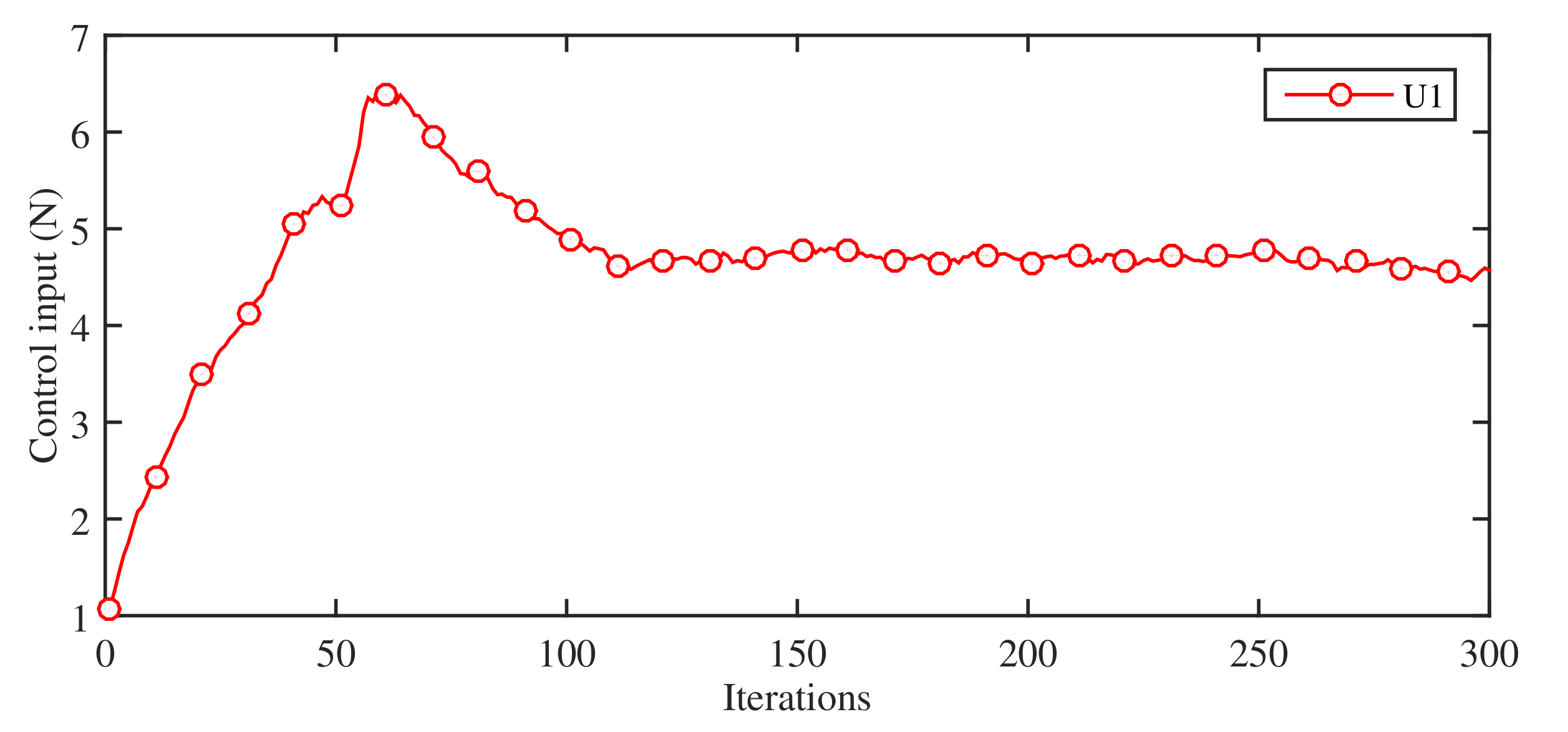

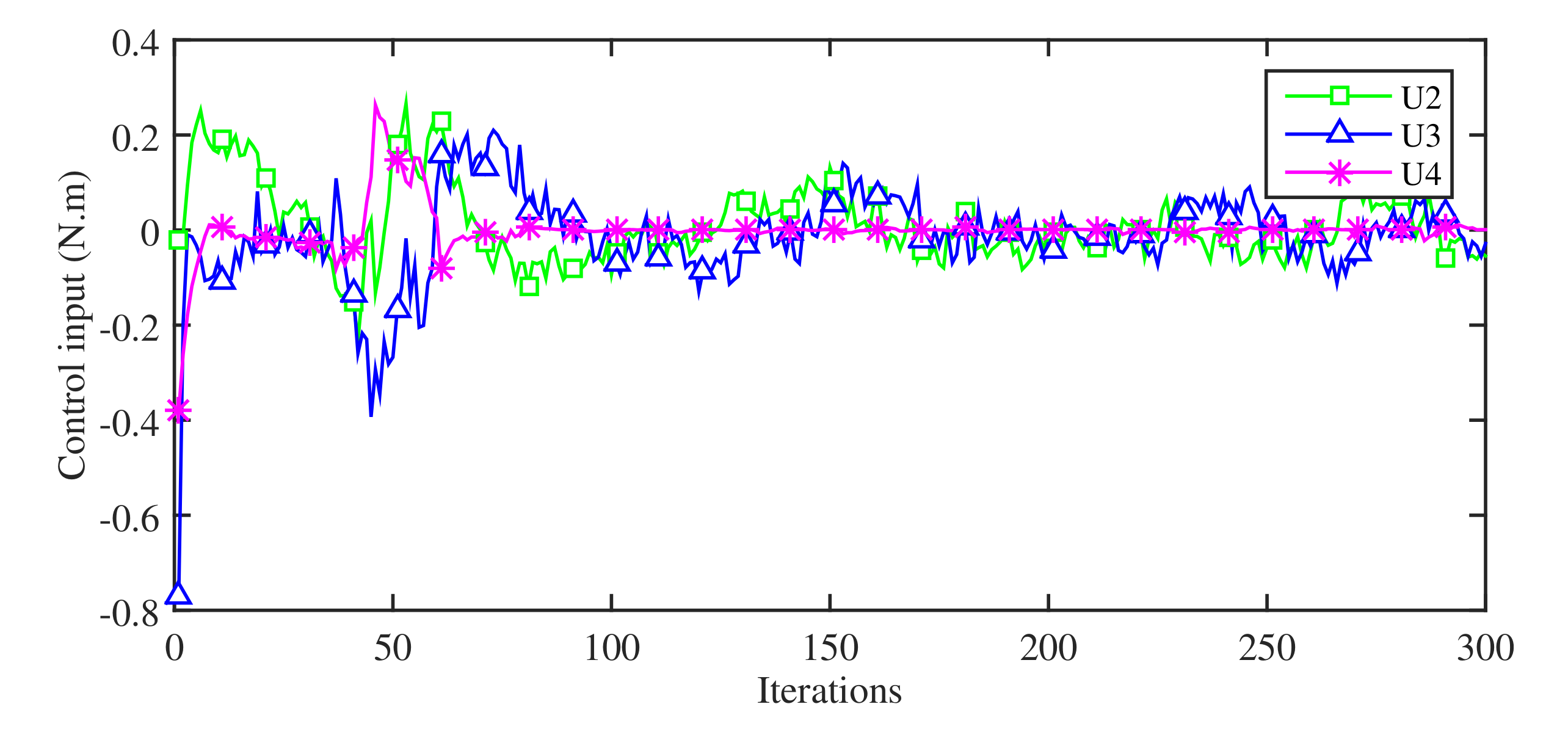

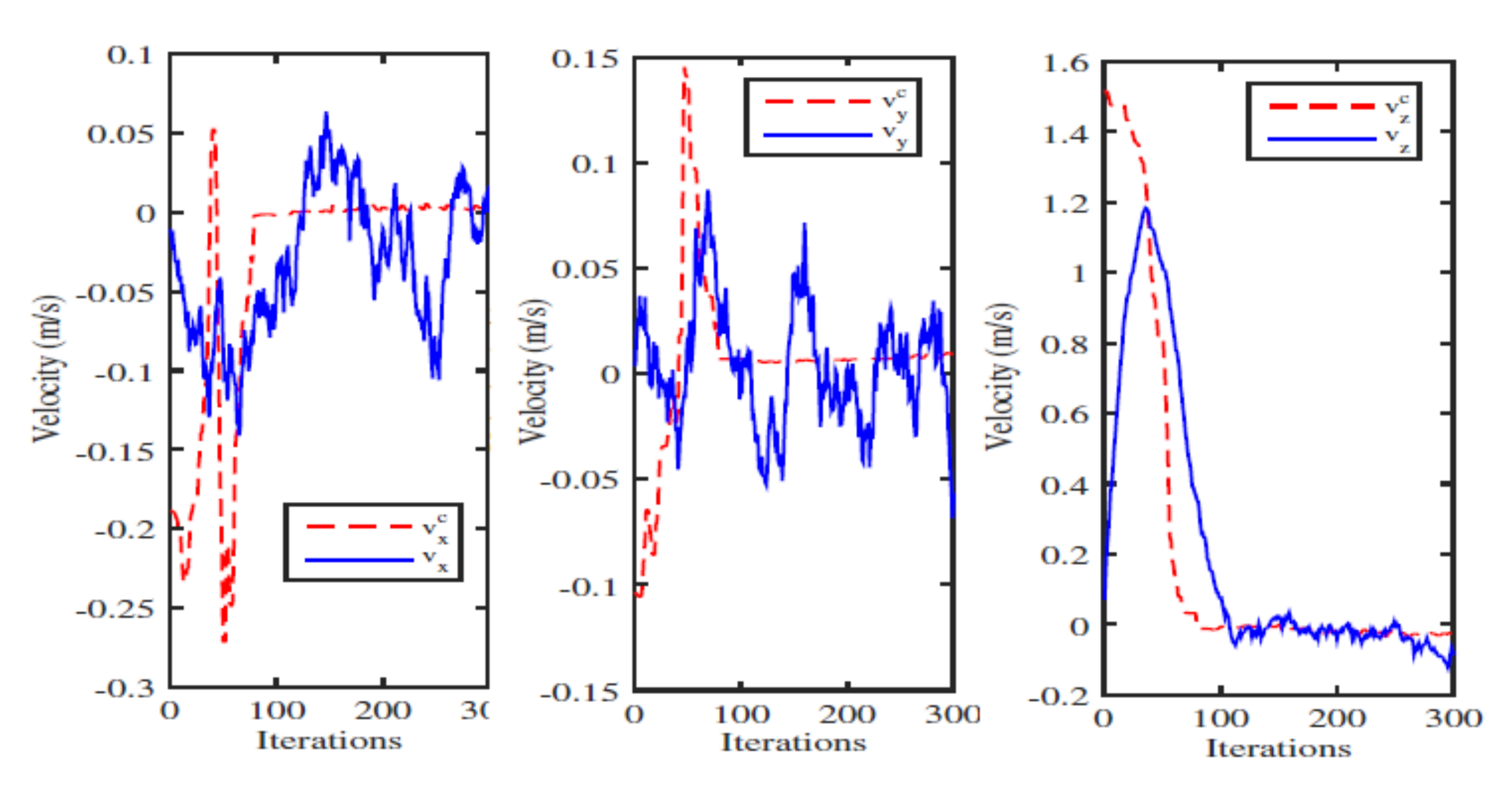

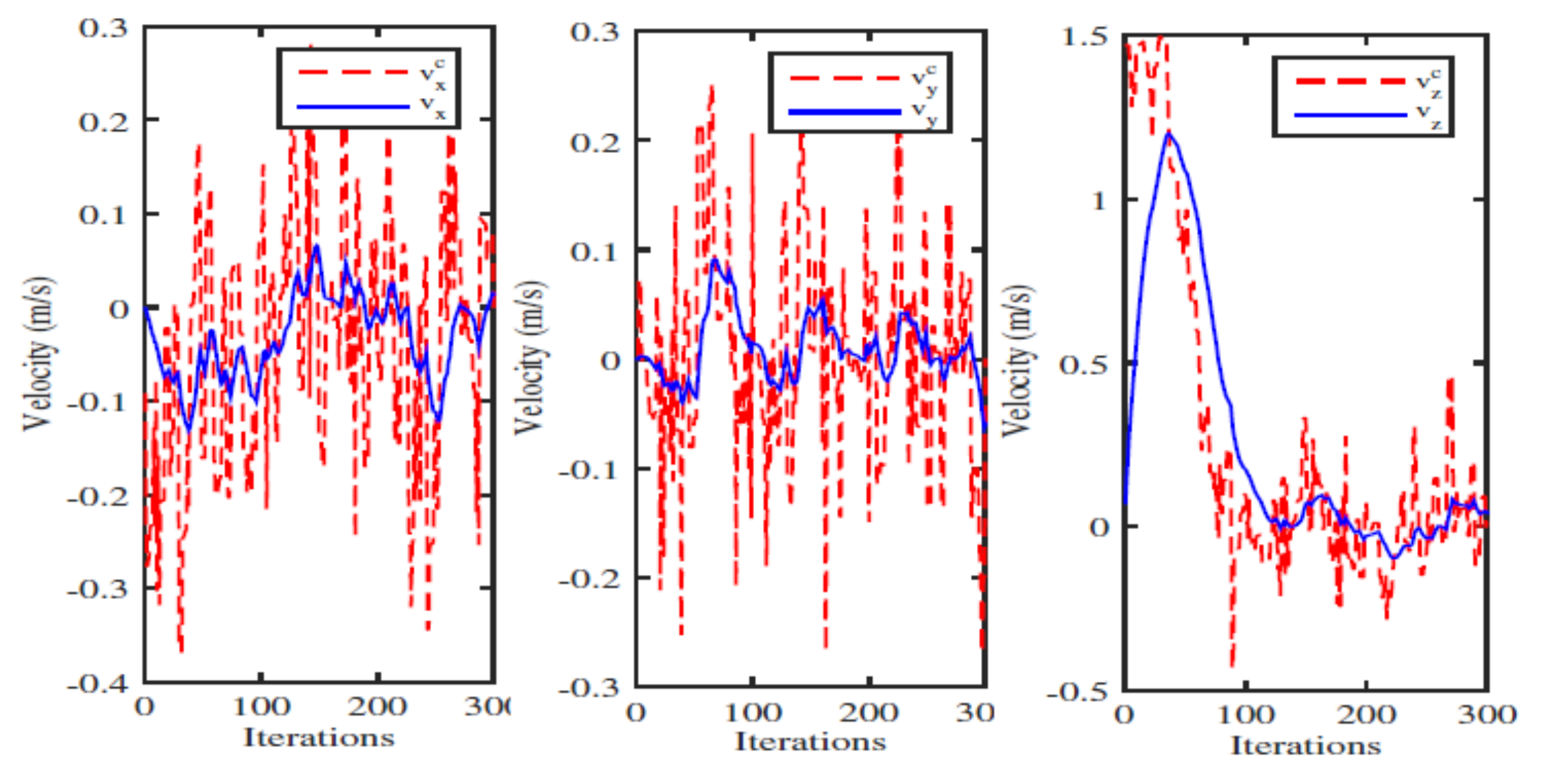

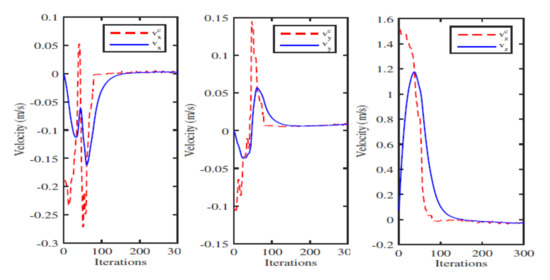

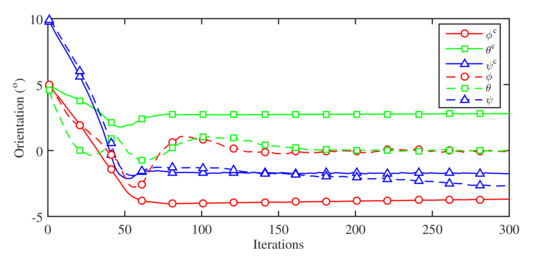

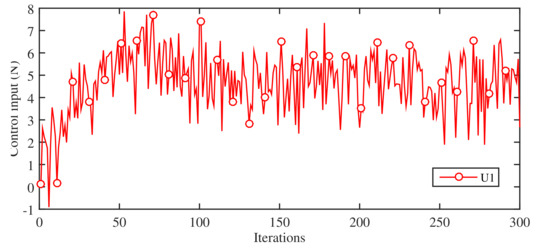

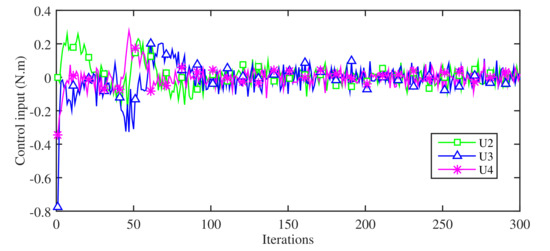

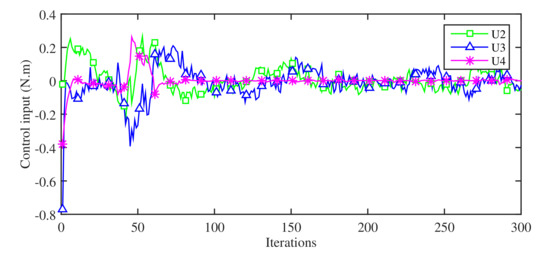

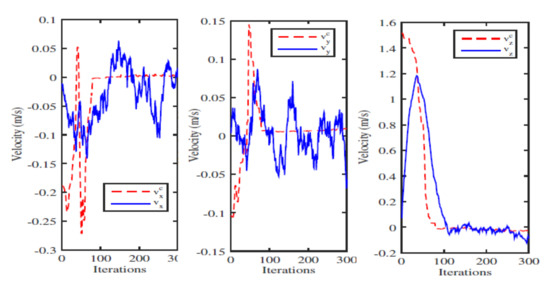

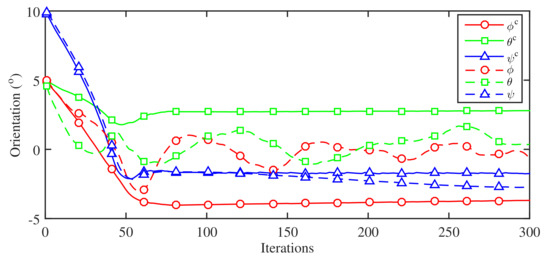

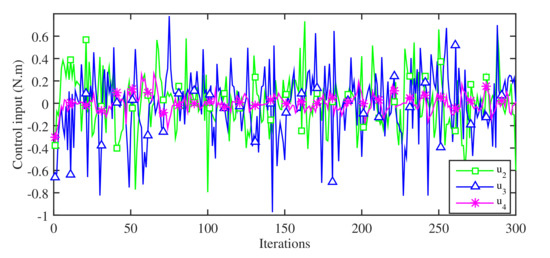

The desired and measured linear and angular velocities of the vehicle are given in Figure 17 and Figure 18, respectively, and the desired and measured linear and angular positions are given in Figure 19 and Figure 20, respectively. These results show that the measured position and velocity signals track the desired position and velocity signals of the camera asymptotically. We now evaluate the performance of the wavelet-based tracking system in the presence of uncertainty. The design is tested with random Gaussian noise uncertainty associated with the thruster input and the control input signals. Specifically, the vehicle input is contaminated by Gaussian noise uncertainty with mean and variance . The vehicle moments , and are also contaminated by Gaussian noise uncertainty with and . For a fair comparison, all design parameters are kept the same as in the previous test. The evaluation results of the proposed design with unknown noisy inputs are presented in Figure 21 through Figure 26, and Figure 22 and Figure 23 show the profiles of the thruster input and the control inputs, respectively.

Figure 21.

The desired and measured angular position in x, y, and z-direction with Case 2.

Figure 22.

The thruster input contaminated with uncertainty with Case 2.

Figure 23.

The control input when contaminated with uncertainty with Case 2.
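The noise injection used in Cases 2 to 4 can be sketched as adding independent Gaussian samples to each signal at every sampling step. The helper below is hypothetical; the only detail that matters is that the variance quoted for each case must be converted to a standard deviation, because NumPy's `Generator.normal` takes the standard deviation as its `scale` argument. The thrust and moment values in the usage lines are placeholders.

```python
import numpy as np


def add_gaussian_noise(signal, mean, variance, rng):
    """Return signal + N(mean, variance) noise, drawn independently per sample.

    NumPy's `normal` takes the standard deviation, hence the square root of the variance.
    """
    signal = np.asarray(signal, dtype=float)
    return signal + rng.normal(loc=mean, scale=np.sqrt(variance), size=signal.shape)


# Usage at one sampling step (placeholder thrust, moments, and noise parameters).
rng = np.random.default_rng(0)
u_clean = np.array([9.81, 0.0, 0.0, 0.0])  # [thrust, roll, pitch, yaw moments]
u_noisy = np.concatenate([
    add_gaussian_noise(u_clean[:1], 0.0, 0.1, rng),   # thruster input
    add_gaussian_noise(u_clean[1:], 0.0, 0.01, rng),  # moments
])
```

The same helper applies to the acceleration measurements in Case 3 and to the computed camera velocities in Case 4, with the corresponding mean and variance for each signal.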

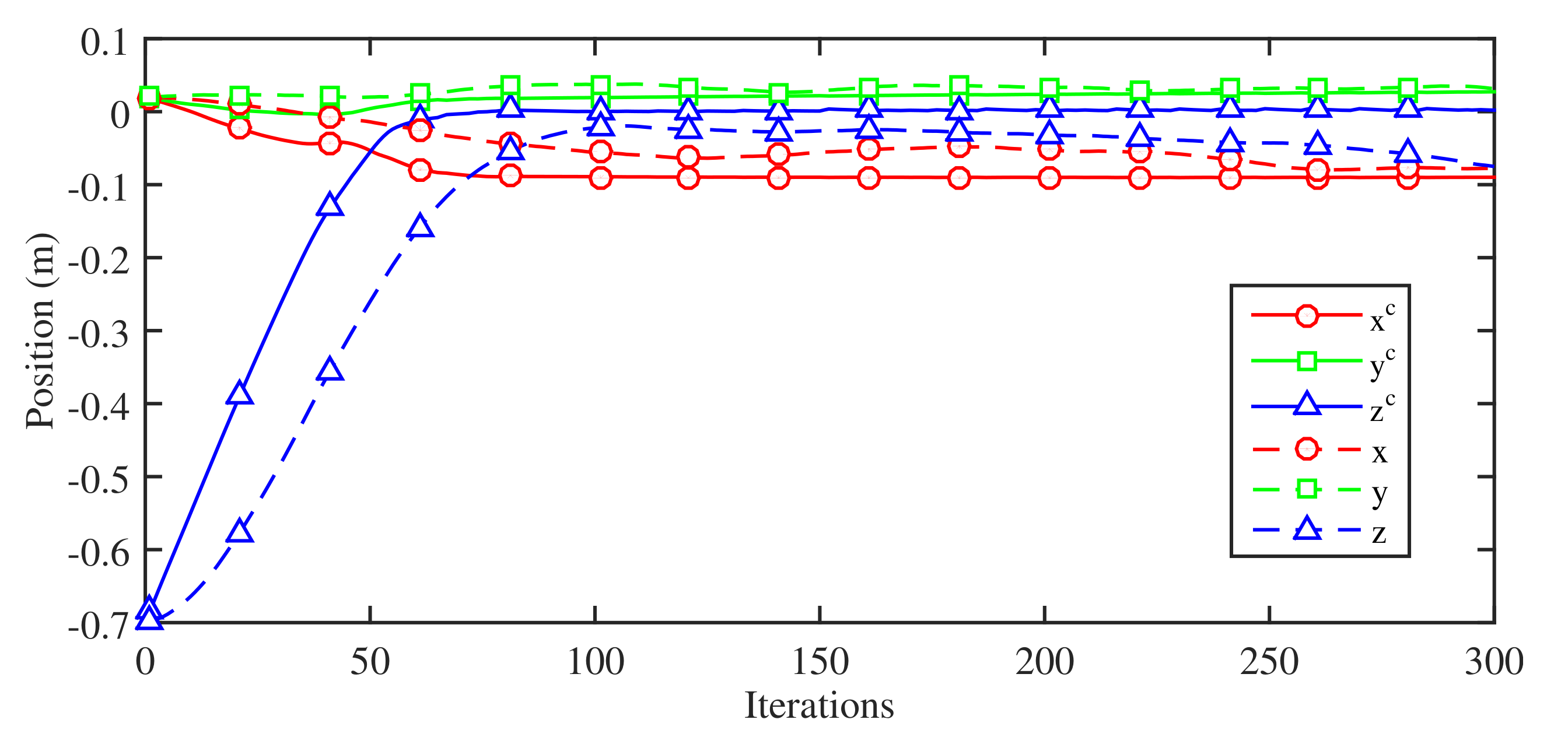

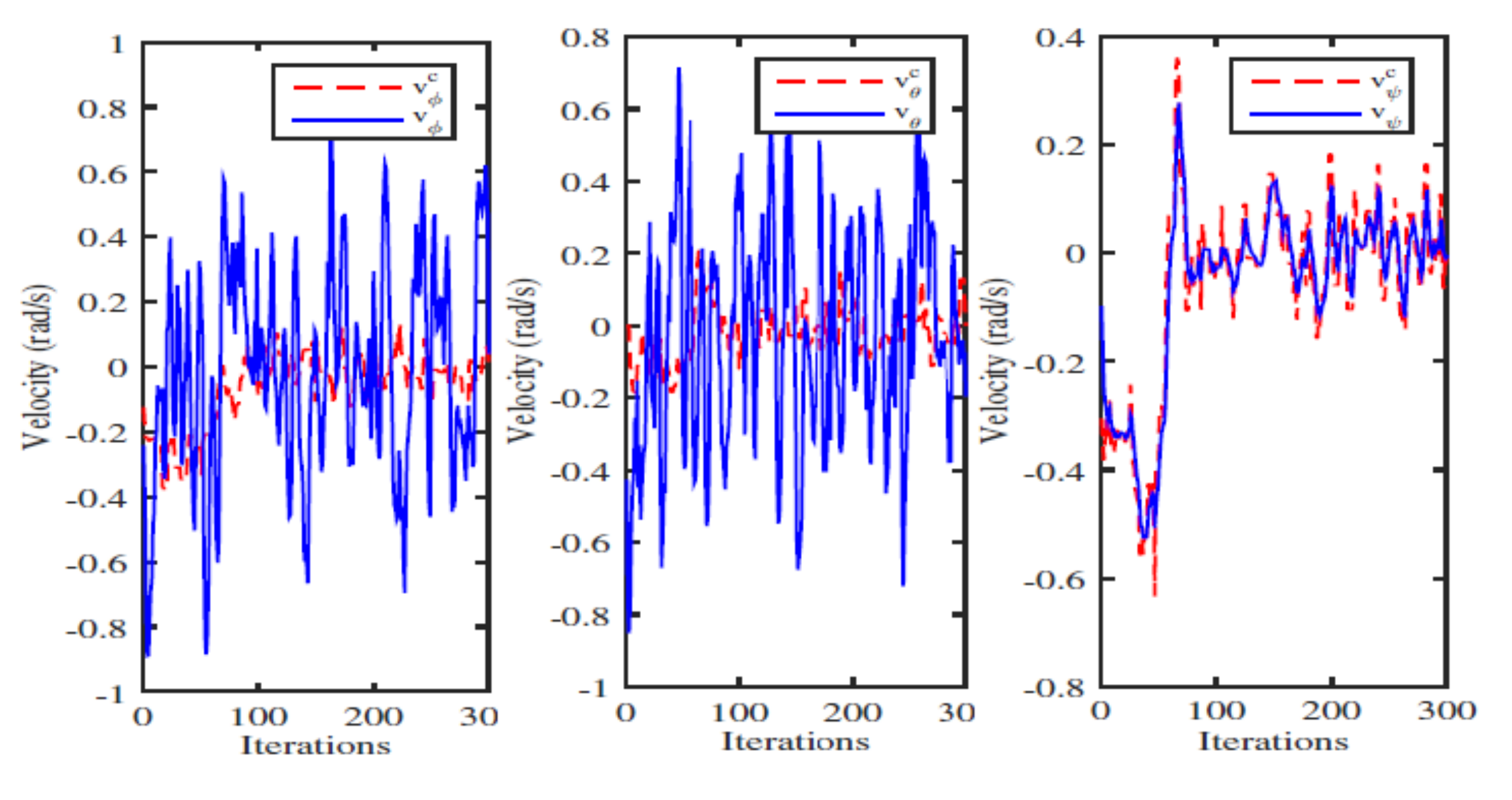

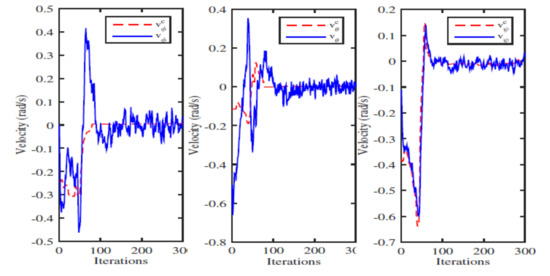

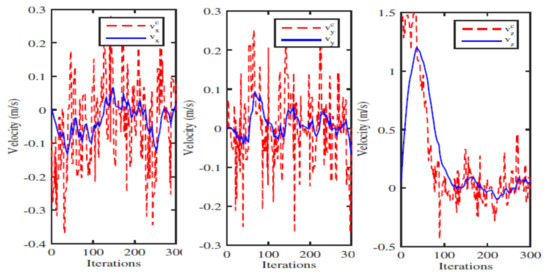

The desired and measured linear velocities of the vehicle with noisy control inputs are shown in Figure 24, and the desired and measured angular velocities are depicted in Figure 25. The desired and measured linear and angular position profiles are presented in Figure 26 and Figure 21, respectively. These results show that the system remains stable and asymptotically tracks the desired signals, with small oscillations in the linear and angular velocity states, in the presence of uncertainty in the inputs. We now evaluate the proposed design in the presence of random Gaussian noise uncertainty in the acceleration dynamics of the quadrotor. The vehicle's acceleration measurements are contaminated with Gaussian noise with parameters {, } for the linear acceleration dynamics and {, } for the angular acceleration dynamics.

Figure 24.

The desired and measured linear velocities in x, y, z-direction with Case 2.

Figure 25.

The desired and measured angular velocities in x, y, z-direction with Case 2.

Figure 26.

The desired and measured linear position in x, y and z-direction with Case 2.

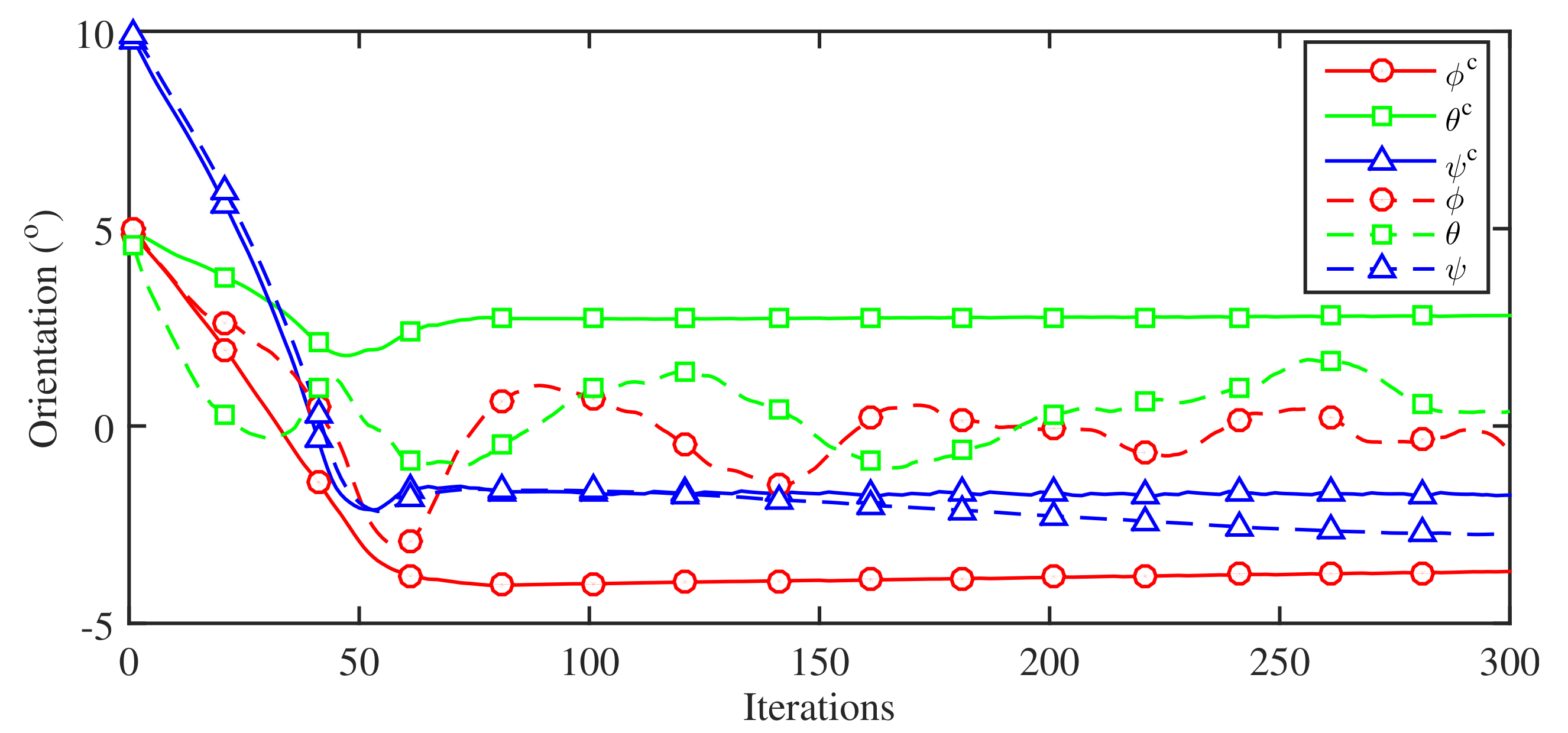

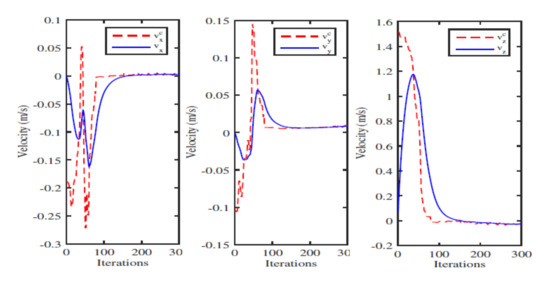

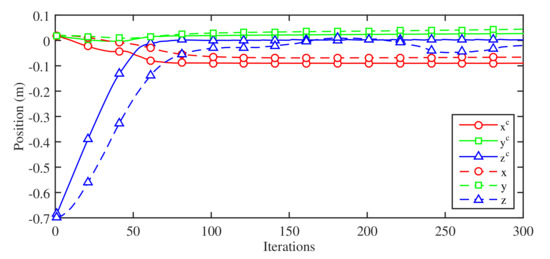

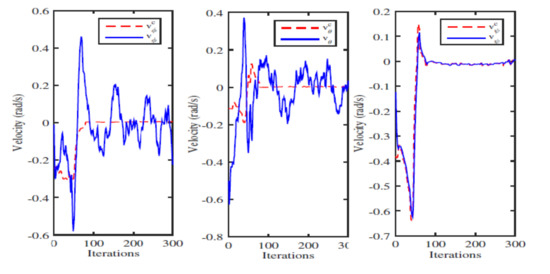

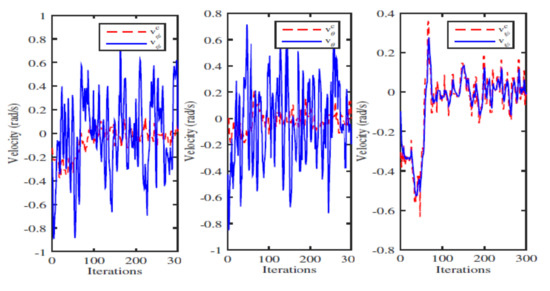

All other design parameters remain the same as in the previous tests. Figure 27, Figure 28, Figure 29, Figure 30, Figure 31 and Figure 32 depict the evaluation results in the presence of noisy acceleration measurements. Figure 27 and Figure 28 show the input profiles of the vehicle with contaminated linear and angular acceleration measurements with respect to the iteration number. The desired and measured linear velocities of the vehicle are shown in Figure 29, and Figure 30 depicts the desired and measured angular velocities with respect to the iteration number. Figure 31 and Figure 32 present the desired and measured linear and angular positions of the vehicle with noisy acceleration measurements, respectively.

Figure 27.

The thruster input with acceleration measurement uncertainty with Case 3.

Figure 28.

The control inputs with acceleration measurement uncertainty with Case 3.

Figure 29.

The desired and measured linear velocity in x, y, z-direction with noisy acceleration measurement uncertainty with Case 3.

Figure 30.

The desired and measured angular velocity in x, y, z-direction with Case 3.

Figure 31.

The desired and measured linear position in x, y, and z-direction with Case 3.

Figure 32.

The desired and measured angular position in x, y, and z-direction with Case 3.

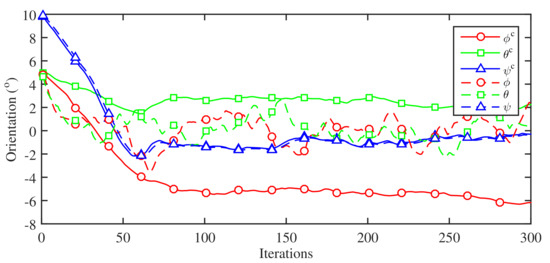

These results show that the wavelet-based visual tracking system ensures the asymptotic tracking property of the error states of the vehicle even with highly noisy acceleration measurements. Finally, we examine the robustness of the proposed wavelet-based visual tracking system on the quadrotor in the presence of uncertainty associated with the linear and angular velocities of the camera.

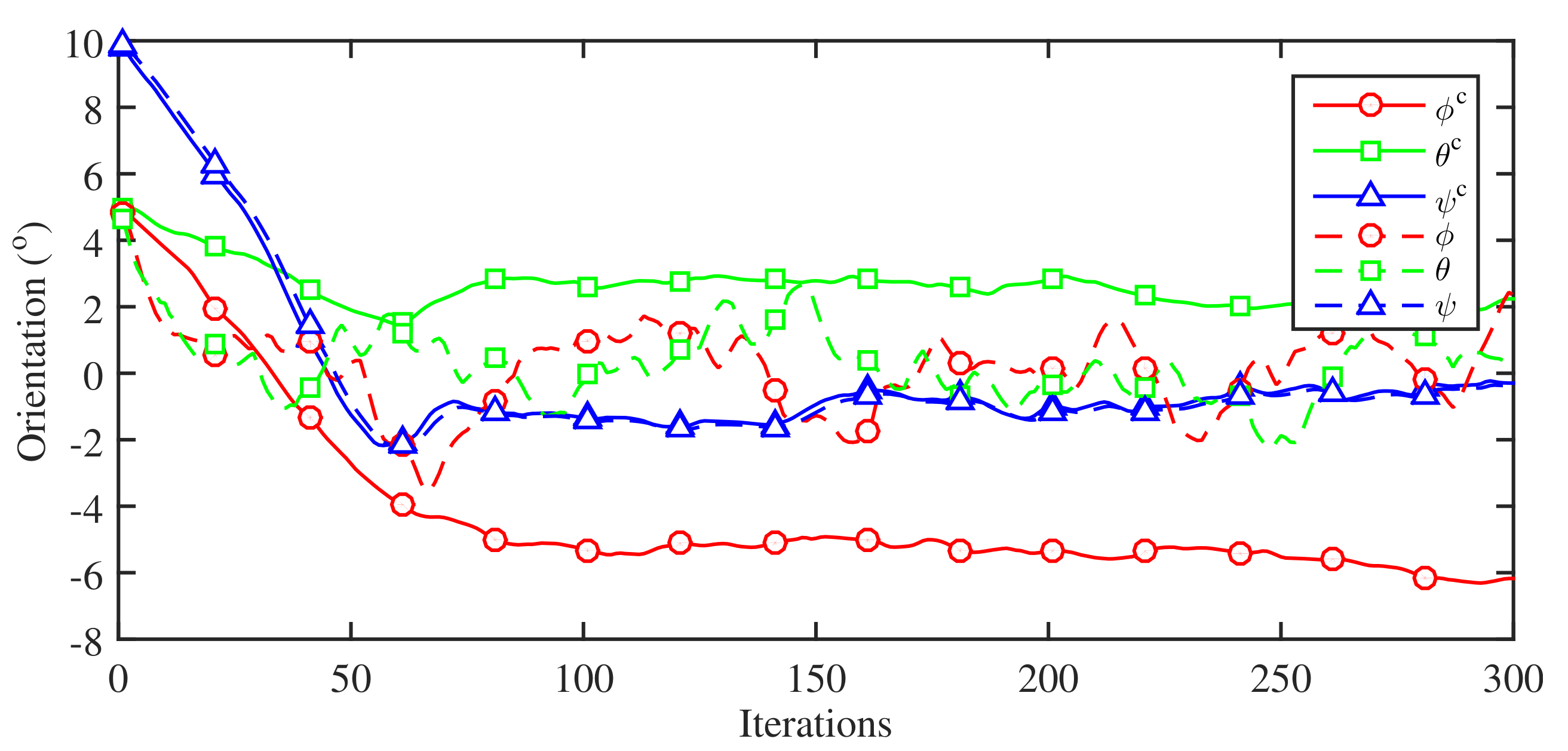

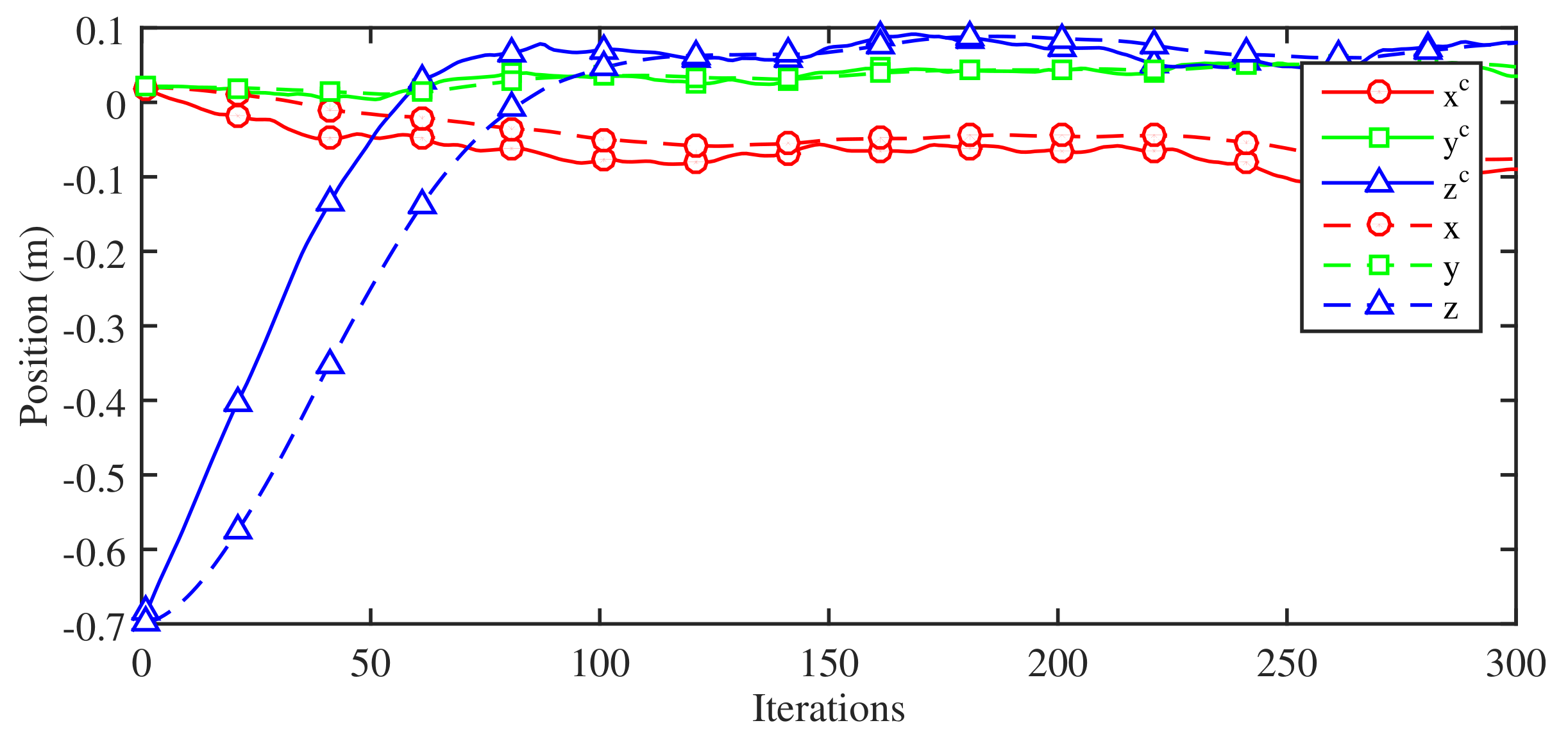

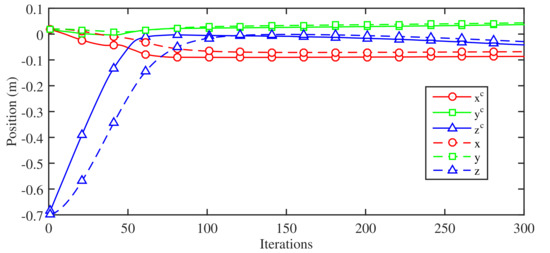

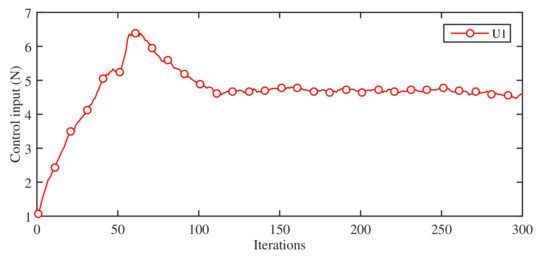

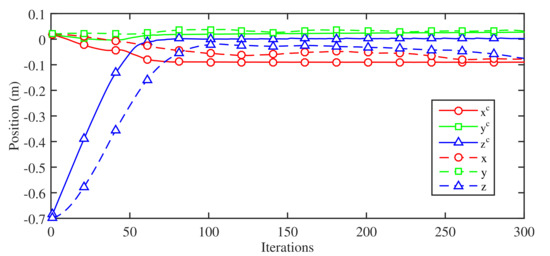

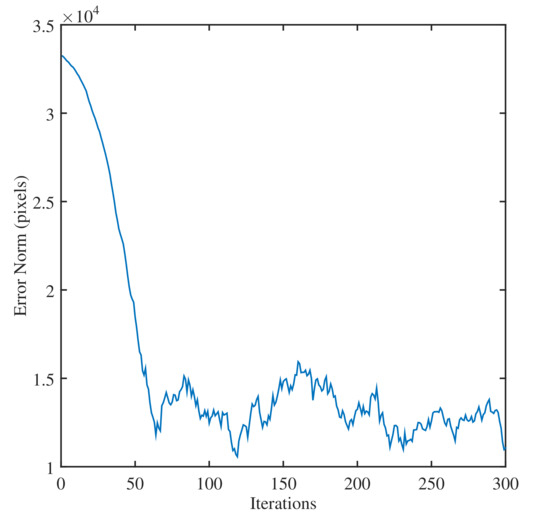

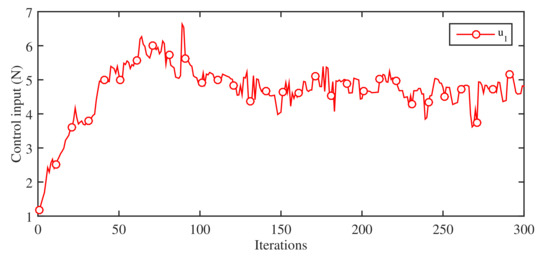

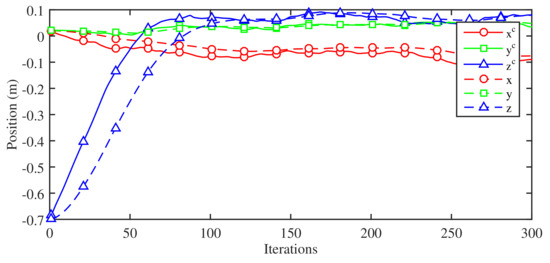

Unlike the previous cases, this test affects both the camera view and the visual tracking process, and therefore significantly affects the control system of the vehicle. The goal is to examine the robustness of the design in the presence of uncertainty in the linear and angular camera velocities. To do so, Gaussian noise uncertainty is added to the computed camera velocities. The linear velocities of the camera are contaminated with Gaussian noise with parameters {, }, and the angular velocities with parameters {, }. For a fair comparison, all other design parameters and test scenarios remain the same as in the previous cases. Despite the uncertainty in the camera velocities, the MWT-based visual tracking process brings the camera view close to the desired view, as shown by the error norm in Figure 34. Figure 35 and Figure 36 show the control inputs of the vehicle when the linear and angular camera velocities are contaminated with uncertainty. Figure 37 shows the desired noisy linear camera velocities and the measured linear velocities of the vehicle, and Figure 38 depicts the desired noisy angular camera velocities and the measured angular velocities of the vehicle with Case 4. The linear position states of the vehicle and camera are given in Figure 39, and the angular position states in Figure 33, with Case 4. These results show that the vehicle remains stable and maintains good convergence accuracy even with large uncertainty in the desired linear and angular camera velocity signals.

Figure 33.

The desired camera and measured angular positions in the x, y, and z directions in the presence of uncertainty in the camera velocities.

Figure 34.

The profile of the error norm in the presence of uncertainty in the linear and angular camera velocities (Case 4).

Figure 35.

The thrust input profile in the presence of uncertainty in the linear and angular camera velocities (Case 4).

Figure 36.

The control input profiles in the presence of uncertainty in the linear and angular camera velocities (Case 4).

Figure 37.

The desired noisy camera linear velocity and the measured linear velocity of the vehicle in the x-direction (Case 4).

Figure 38.

The desired noisy camera angular velocities and the measured angular velocities in the x, y, and z directions (Case 4).

Figure 39.

The desired camera and measured linear positions in the x, y, and z directions (Case 4) with uncertainty in the camera velocities.

Remark 1: The classical visual tracking process aims to detect and match geometrical features in the image, such as points, lines, and circles. This feature extraction and matching step is computationally expensive and slows down the tracking process. Moreover, adding an extra algorithm for 3D perception further degrades the convergence speed. In many cases, this is the main obstacle to the tracking task. To overcome these problems, MWT-based techniques are developed to eliminate the need for image processing in the visual tracking loop. The main idea is to use features that can be derived directly from the images without processing, or even to use the whole image as the visual signal.
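The Python sketch below makes concrete the idea of using wavelet coefficients directly as the visual signal. It computes one level of an orthonormal 2D Haar decomposition of a grayscale image and stacks the approximation (half-resolution) and detail subbands into a feature vector. It then evaluates the Euclidean norm of the difference from a desired image. The Haar wavelet, the single decomposition level, and the Euclidean error norm are assumptions made for illustration. The wavelet family, number of levels, and error definition used in this paper are not reproduced here, and all function names are hypothetical.

```python
import math


def haar2d_level1(img):
    """One level of the orthonormal 2D Haar transform (illustrative choice).

    img: list of rows of grayscale intensities with even height and width.
    Returns (approx, horiz, vert, diag), each half the size of img.
    """
    h, w = len(img), len(img[0])
    if h % 2 or w % 2:
        raise ValueError("image height and width must be even")
    approx, horiz, vert, diag = [], [], [], []
    for i in range(0, h, 2):
        ra, rh, rv, rd = [], [], [], []
        for j in range(0, w, 2):
            a, b = img[i][j], img[i][j + 1]          # top row of the 2x2 block
            c, d = img[i + 1][j], img[i + 1][j + 1]  # bottom row of the 2x2 block
            ra.append((a + b + c + d) / 2)  # low-pass in both directions
            rh.append((a + b - c - d) / 2)  # top minus bottom: horizontal edges
            rv.append((a - b + c - d) / 2)  # left minus right: vertical edges
            rd.append((a - b - c + d) / 2)  # diagonal detail
        approx.append(ra)
        horiz.append(rh)
        vert.append(rv)
        diag.append(rd)
    return approx, horiz, vert, diag


def wavelet_features(img):
    """Flatten all level-1 subbands into one visual feature vector."""
    return [x for band in haar2d_level1(img) for row in band for x in row]


def error_norm(img, img_desired):
    """Euclidean norm of the difference between current and desired coefficients."""
    c, c_star = wavelet_features(img), wavelet_features(img_desired)
    if len(c) != len(c_star):
        raise ValueError("images must have the same size")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(c, c_star)))
```

Because the orthonormal Haar transform preserves energy, the coefficient vector carries the same information as the raw intensities. No feature detection or matching step is involved.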

Remark 2: In future work, an adaptive learning-based visual tracking system for aerial vehicles will be designed to deal with uncertainty, along the lines of the methods proposed in [46,47,48]. Future work will also involve testing the design on a real quadrotor aerial vehicle.

4. Conclusions

In this paper, we have developed a wavelet-based image-guided visual tracking system for an unmanned aerial vehicle. The visual tracking system is designed using the wavelet coefficients of the half and full image. The design uses a multiresolution interaction matrix to relate the time variation of the wavelet coefficients to the velocity of the vehicle and the controller. The proposed MWT-based design was implemented and evaluated on a virtual quadrotor aerial vehicle simulator. The evaluation results showed that, unlike a classical visual tracking mechanism, the MWT-based visual tracking system achieves accurate and efficient tracking without an image processing unit. The tests also show that the proposed system keeps the vehicle stable and maintains good convergence accuracy even in the presence of uncertainty in the camera velocities, vehicle dynamics, and vehicle inputs.
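The multiresolution interaction matrix fits into a generic image-based servoing structure, shown below in the standard form described in [31]. Here $\mathbf{c}$ stacks the current wavelet coefficients and $\mathbf{c}^{*}$ the desired ones, assumed constant for a static target. $\mathbf{v}_c = (\mathbf{v}, \boldsymbol{\omega}) \in \mathbb{R}^6$ is the camera twist and $\mathbf{L}_c$ is the interaction matrix. $\widehat{\mathbf{L}}_c^{+}$ is the Moore-Penrose pseudo-inverse of an estimate of $\mathbf{L}_c$, and $\lambda > 0$ is a gain. This notation is assumed for illustration and may differ from the symbols and gains used in the body of the paper.

$$
\mathbf{e} = \mathbf{c} - \mathbf{c}^{*}, \qquad
\dot{\mathbf{e}} = \mathbf{L}_c\,\mathbf{v}_c, \qquad
\mathbf{v}_c = -\lambda\,\widehat{\mathbf{L}}_c^{+}\,\mathbf{e}
\quad\Longrightarrow\quad
\dot{\mathbf{e}} = -\lambda\,\mathbf{L}_c\,\widehat{\mathbf{L}}_c^{+}\,\mathbf{e}.
$$

In the ideal case $\widehat{\mathbf{L}}_c = \mathbf{L}_c$ with $\mathbf{L}_c\mathbf{L}_c^{+}\mathbf{e} = \mathbf{e}$, the closed loop reduces to $\dot{\mathbf{e}} = -\lambda\,\mathbf{e}$. This gives an exponential decrease of the error norm of the kind profiled in Figure 34.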

Author Contributions

Conceptualization, S.I. and J.D.; methodology, S.I.; software, S.I. and H.M.; validation, S.I. and H.M.; formal analysis, S.I.; investigation, S.I. and J.D.; resources, S.I. and J.D.; data curation, S.I. and H.M.; writing—original draft preparation, S.I.; writing—review and editing, S.I.; visualization, S.I. and J.D.; supervision, S.I. and J.D.; project administration, S.I. and J.D.; funding acquisition, S.I. and J.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Ollero, A.; Merino, L. Control and perception techniques for aerial robotics. Annu. Rev. Control 2004, 28, 167–178.

2. Hua, C.C.; Guan, X.; Shi, P. Robust backstepping control for a class of time delayed systems. IEEE Trans. Autom. Control 2005, 50, 894–899.

3. Cai, G.; Dias, J.; Seneviratne, L. A survey of small-scale unmanned aerial vehicles: Recent advances and future development trends. Unmanned Syst. 2014, 2, 175–199.

4. Gupte, S.; Mohandas, P.T.; Conrad, J. A survey on quadrotor unmanned aerial vehicles. In Proceedings of the 2012 IEEE Southeastcon, Orlando, FL, USA, 15–18 March 2012.

5. Nonami, K.; Kendoul, F.; Suzuki, S.; Wang, W. Autonomous Flying Robots: Unmanned Aerial Vehicles and Micro Aerial Vehicles; Springer: Berlin/Heidelberg, Germany, 2010.

6. Austin, R. Unmanned Air Systems: UAV Design, Development and Deployment; Wiley: Hoboken, NJ, USA, 2010.

7. Valavanis, K. Advances in Unmanned Aerial Vehicles: State of the Art and the Road to Autonomy; Springer: Dordrecht, The Netherlands, 2007; Volume 33.

8. Valavanis, K.; Vachtsevanos, G. Handbook of Unmanned Aerial Vehicles; Springer: Dordrecht, The Netherlands, 2015.

9. Lourakis, M. A brief description of the Levenberg-Marquardt algorithm implemented by levmar. Found. Res. Technol. 2005, 4, 1–6.

10. Islam, S.; Liu, P.X.; Saddik, A.E. Nonlinear adaptive control of quadrotor flying vehicle. Nonlinear Dyn. 2014, 78, 117–133.

11. Hutchinson, S.; Hager, G.D.; Corke, P.I. A tutorial on visual servo control. IEEE Trans. Robot. Autom. 1996, 12, 651–670.

12. Bloesch, M.; Weiss, S.; Scaramuzza, D.; Siegwart, R. Vision based MAV navigation in unknown and unstructured environments. In Proceedings of the IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–7 May 2010.

13. Eberli, D.; Scaramuzza, D.; Weiss, S.; Siegwart, R. Vision based position control for MAVs using one single artificial landmark. J. Intell. Robot. Syst. 2011, 61, 495–512.

14. Achtelik, M.; Bachrach, A.; He, R.; Prentice, S.; Roy, N. Stereo vision and laser odometry for autonomous helicopters in GPS-denied indoor environments. In Proceedings of the SPIE Unmanned Systems Technology XI, Orlando, FL, USA, 14–17 April 2009.

15. Altug, E.; Ostrowski, J.; Mahony, R. Control of a quadrotor helicopter using visual feedback. In Proceedings of the Conference on Robotics and Automation, Washington, DC, USA, 11–15 May 2002; pp. 72–77.

16. Park, S.; Won, D.; Kang, M.; Kim, T.; Lee, H.; Kwon, S. RIC (robust internal-loop compensator) based flight control of a quad-rotor type UAV. In Proceedings of the Conference on Intelligent Robots and Systems, Edmonton, AB, Canada, 2–6 August 2005; pp. 3542–3547.

17. Klose, S.; Wang, J.; Achtelik, M.; Panin, G.; Holzapfel, F.; Knoll, A. Markerless, vision-assisted flight control of a quadrocopter. In Proceedings of the Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010.

18. Ludington, B.; Johnson, E.; Vachtsevanos, G. Augmenting UAV autonomy. IEEE Robot. Autom. Mag. 2006, 24, 63–71.

19. Hamel, T.; Mahony, R.; Chriette, A. Visual servo trajectory tracking for a four rotor VTOL aerial vehicle. In Proceedings of the Conference on Robotics and Automation, Washington, DC, USA, 11–15 May 2002; pp. 2781–2786.

20. Cheviron, T.; Hamel, T.; Mahony, R.; Baldwin, G. Robust nonlinear fusion of inertial and visual data for position, velocity and attitude estimation of UAV. In Proceedings of the Conference on Robotics and Automation, Washington, DC, USA, 11–15 May 2002; pp. 2010–2016.

21. Herisse, B.; Russotto, F.; Hamel, T.; Mahony, R. Hovering flight and vertical landing control of a VTOL unmanned aerial vehicle using optical flow. In Proceedings of the Conference on Intelligent Robots and Systems, Nice, France, 22–26 September 2008; pp. 801–806.

22. Hrabar, S.; Sukhatme, G.; Corke, P.; Usher, K.; Roberts, J. Combined optic-flow and stereo-based navigation of urban canyons for a UAV. In Proceedings of the Conference on Intelligent Robots and Systems, Edmonton, AB, Canada, 2–6 August 2005; pp. 3309–3316.

23. Ahrens, S.; Levine, D.; Andrews, G.; How, J. Vision-based guidance and control of a hovering vehicle in unknown, GPS-denied environments. In Proceedings of the Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009.

24. Achtelik, M.W.; Weiss, S.; Siegwart, R. Onboard IMU and monocular vision based control for MAVs in unknown in- and outdoor environments. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011.

25. Li, K.; Huang, R.; Phang, S.K.; Lai, S.; Wang, F.; Tan, P.; Chen, B.M.; Lee, T.H. Off-board visual odometry and control of an ultralight quadrotor MAV. In Proceedings of the IMAV 2014: International Micro Air Vehicle Conference and Competition 2014, Delft, The Netherlands, 12–15 August 2014.

26. Bristeau, P.-J.; Callou, F.; Vissiere, D.; Petit, N. The navigation and control technology inside the AR.Drone micro UAV. In Proceedings of the 18th IFAC World Congress, Milano, Italy, 28 August–2 September 2011; Volume 18, pp. 1477–1484.

27. Engel, J.; Sturm, J.; Cremers, D. Camera-based navigation of a low-cost quadrocopter. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vilamoura, Portugal, 7–12 October 2012; pp. 2815–2821.

28. Marchand, E.; Spindler, F.; Chaumette, F. ViSP for visual servoing: A generic software platform with a wide class of robot control skills. IEEE Robot. Autom. Mag. 2005, 12, 40–52.

29. Zingg, S.; Scaramuzza, D.; Weiss, S.; Siegwart, R. MAV obstacle avoidance using optical flow. In Proceedings of the Conference on Robotics and Automation, Anchorage, AK, USA, 4–8 May 2010.

30. Han, J.L.; Xu, Y.J.; Di, L.; Chen, Y.Q. Low-cost multi-UAV technologies for contour mapping of nuclear radiation field. J. Intell. Robot. Syst. 2013, 70, 401–410.

31. Chaumette, F.; Hutchinson, S. Visual servo control. Part I. Basic approaches. IEEE Robot. Autom. Mag. 2006, 13, 82–90.

32. Deguchi, K. A direct interpretation of dynamic images with camera and object motions for vision guided robot control. Int. J. Comput. Vis. 2000, 37, 7–20.

33. Collewet, C.; Marchand, E. Photometric visual servoing. IEEE Trans. Robot. 2011, 27, 828–834.

34. Tamadazte, B.; Piat, N.; Marchand, E. A direct visual servoing scheme for automatic nanopositioning. IEEE/ASME Trans. Mechatron. 2012, 17, 728–736.

35. Marchand, E.; Collewet, C. Using image gradient as a visual feature for visual servoing. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; pp. 5687–5692.

36. Dame, A.; Marchand, E. Entropy-based visual servoing. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 707–713.

37. Marturi, N.; Tamadazte, B.; Dembele, S.; Piat, N. Visual servoing schemes for automatic nanopositioning under scanning electron microscope. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 981–986.

38. Islam, S.; Mukhtar, H.; Al Khawli, T.; Sunda-Meya, A. Wavelet-based visual tracking system for miniature aerial vehicle. In Proceedings of the 44th Annual Conference of the IEEE Industrial Electronics Society (IECON), Omni Shoreham Hotel, Washington, DC, USA, 21–23 October 2018; pp. 2651–2656.

39. Mallat, S. A Wavelet Tour of Signal Processing: The Sparse Way; Academic Press: Cambridge, MA, USA, 2008.

40. Meyer, Y. Ondelettes, Filtres Miroirs en Quadrature et Traitement Numérique de l'Image; Springer: Berlin/Heidelberg, Germany, 1990.

41. Ourak, M.; Tamadazte, B.; Lehmann, O.; Andreff, N. Wavelets-based 6 DOF visual servoing. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 3414–3419.

42. George, W.P. Basic Orthogonal Wavelet Theory; Wiley-IEEE Press: Piscataway, NJ, USA, 2003; pp. 30–99.

43. Daubechies, I. Ten Lectures on Wavelets; SIAM: Philadelphia, PA, USA, 1992; Volume 61.

44. Gehrig, S.K.; Scharwächter, T. A real-time multi-cue framework for determining optical flow confidence. In Proceedings of the IEEE International Conference on Computer Vision Workshops (ICCV Workshops), Barcelona, Spain, 6–13 November 2011; pp. 1978–1985.

45. Bernard, C. Wavelets and Ill-Posed Problems: Optic Flow Estimation and Scattered Data Interpolation. Ph.D. Thesis, Ecole Polytechnique, Palaiseau, France, 1999.

46. Islam, S.; Liu, P.X.; Saddik, A.E. Robust control of four rotor unmanned aerial vehicle with disturbance uncertainty. IEEE Trans. Ind. Electron. 2015, 62, 1563–1571.

47. Hua, C.C.; Feng, G.; Guan, X. Robust controller design of a class of nonlinear time delay systems via backstepping method. Automatica 2008, 44, 567–573.

48. Hua, C.C.; Yang, Y.; Liu, P.X. Output-feedback adaptive control of networked teleoperation system with time-varying delay and bounded inputs. IEEE/ASME Trans. Mechatron. 2014, 20, 2009–2020.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).