Abstract

The automotive industry has experienced remarkable growth in recent decades, with a significant focus on advancements in autonomous driving technology. While still in its early stages, the field of autonomous driving has generated substantial research interest, fueled by the promise of achieving fully automated vehicles in the foreseeable future. High-definition (HD) maps are central to this endeavor, offering centimeter-level accuracy in mapping the environment and enabling precise localization. Unlike conventional maps, these highly detailed HD maps are critical for autonomous vehicle decision-making, ensuring safe and accurate navigation. Compiled before testing and regularly updated, HD maps meticulously capture environmental data through various methods. This study explores the vital role of HD maps in autonomous driving, delving into their creation, updating processes, and the challenges and future directions in this rapidly evolving field.

1. Introduction

The origins of autonomous driving can be traced back to earlier pioneering efforts, notably the research conducted by Ernst Dickmanns in the 1980s. Widely regarded as the father of autonomous vehicles, Dickmanns transformed a Mercedes-Benz van into an autonomous vehicle guided by an integrated computer system [1]. This groundbreaking work laid the foundation for subsequent advancements in the field.

At the onset of the 21st century, numerous competitions were instrumental in promoting research and development in autonomous driving (AD). The challenges held by the US Defense Advanced Research Projects Agency (DARPA) to evaluate the capabilities of intelligent vehicles in harsh and complex environments were particularly influential in driving advancements in this field [2].

High-definition (HD) maps have emerged as a crucial part of advanced driver assistance systems (ADASs) and autonomous vehicles (AVs). These highly detailed and precise digital representations of the physical world provide the essential contextual awareness and environmental understanding required for safe and reliable navigation. The concept of HD maps gained significant interest with the Bertha Benz Memorial Route project in 2013, where a Mercedes-Benz S500 successfully completed the historic route in a fully autonomous manner, leveraging an HD map that contained detailed information about road geometry, lane positions, and topological connections [3]. The precise map-relative localization facilitated by HD maps demonstrated their significance in enabling reliable autonomous navigation.

The importance of HD maps in the autonomous driving industry has grown exponentially in recent years, primarily due to their ability to define the environment with exceptional precision and capture even occluded areas [4]. HD maps provide crucial redundancy and complementary information to the sensor suite (LiDAR, cameras, and radars) employed by AVs for constructing the perception module of the autonomous system.

In recent years, significant advancements have been made in the generation and maintenance of HD maps, driven by the rapid development of sensor technologies, computational power, and machine learning techniques [5,6,7]. Researchers and industry leaders have proposed novel approaches to streamline data collection, improve mapping accuracy, and enable efficient map updates, paving the way for more reliable and scalable HD mapping solutions [2,8].

Moreover, HD maps play a vital role in addressing long-tail scenarios: rare, complex, and unpredictable events that pose significant challenges for AVs. These scenarios require sophisticated handling to ensure safety and reliability. HD maps offer centimeter-level accuracy in localization, which is essential in areas with poor GPS signals, such as urban canyons or tunnels. They enhance the vehicle’s perception system by providing prior knowledge of road geometry, lane markings, and static objects, which is particularly useful in adverse weather conditions like heavy snow or fog. For instance, in dense fog, where camera visibility is reduced, an AV can rely on an HD map, supported by multiple sensors, to navigate using reliable environmental data [9].

HD maps enable predictive awareness by allowing AVs to anticipate road features and potential hazards, such as sharp curves, steep gradients, or pedestrian crossings. This proactive approach improves safety; for example, an AV can slow down before reaching a known pedestrian crossing. HD maps also integrate real-time data on traffic conditions, construction zones, and temporary road closures, allowing AVs to adapt to changing environments. For instance, an AV can reroute to avoid a newly established construction zone. Furthermore, HD maps facilitate long-tail scenario training by providing a detailed and realistic virtual environment for extensive simulations. This allows AVs to train and test various scenarios without the risks associated with real-world testing, ultimately improving safety and reliability in autonomous driving.

This paper provides a comprehensive survey of state-of-the-art techniques for creating and updating HD maps. It also discusses the challenges inherent in HD maps, examines how current systems address them, and explores future directions for the autonomous driving industry. The paper is structured as follows: Section 2 covers the basics of HD maps, Section 3 describes how HD maps are created, Section 4 discusses how they are updated, Section 5 addresses challenges and solutions, and Section 6 explores future directions that could further transform the AV industry.

2. Basics of HD Maps

Autonomous vehicles (AVs) require highly precise maps to navigate roads and lanes. Conventional digital maps are unsuitable for AVs because they lack information about traffic lights and signs, traffic lanes, the height of pole-like objects, and the exact geometry of curves. Designed for human use, such maps cannot support AV localization, where the vehicle itself needs to interpret the map and determine its position relative to its surroundings.

Though HD maps are crucial, there exists no unique standard HD map structure in the autonomous driving industry [5]. Different companies adopt various architectures and methods to create HD maps, highlighting the lack of a unified approach. Google’s Waymo, a leader in autonomous taxis, uses high-resolution sensors, but specific HD map layers are not publicly known. Baidu’s open-source Apollo platform supports accurate localization, precise path planning, and real-time updates for navigating diverse environments by continually enhancing its mapping technologies and integrating advanced sensors like LiDAR and 4D millimeter-wave radar [10].

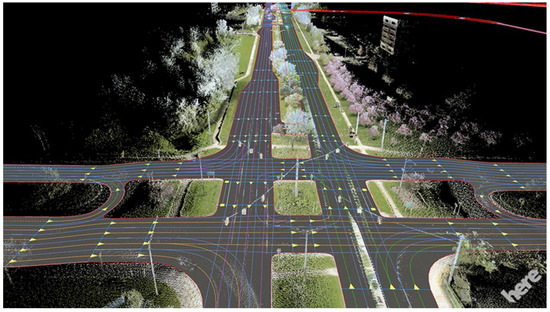

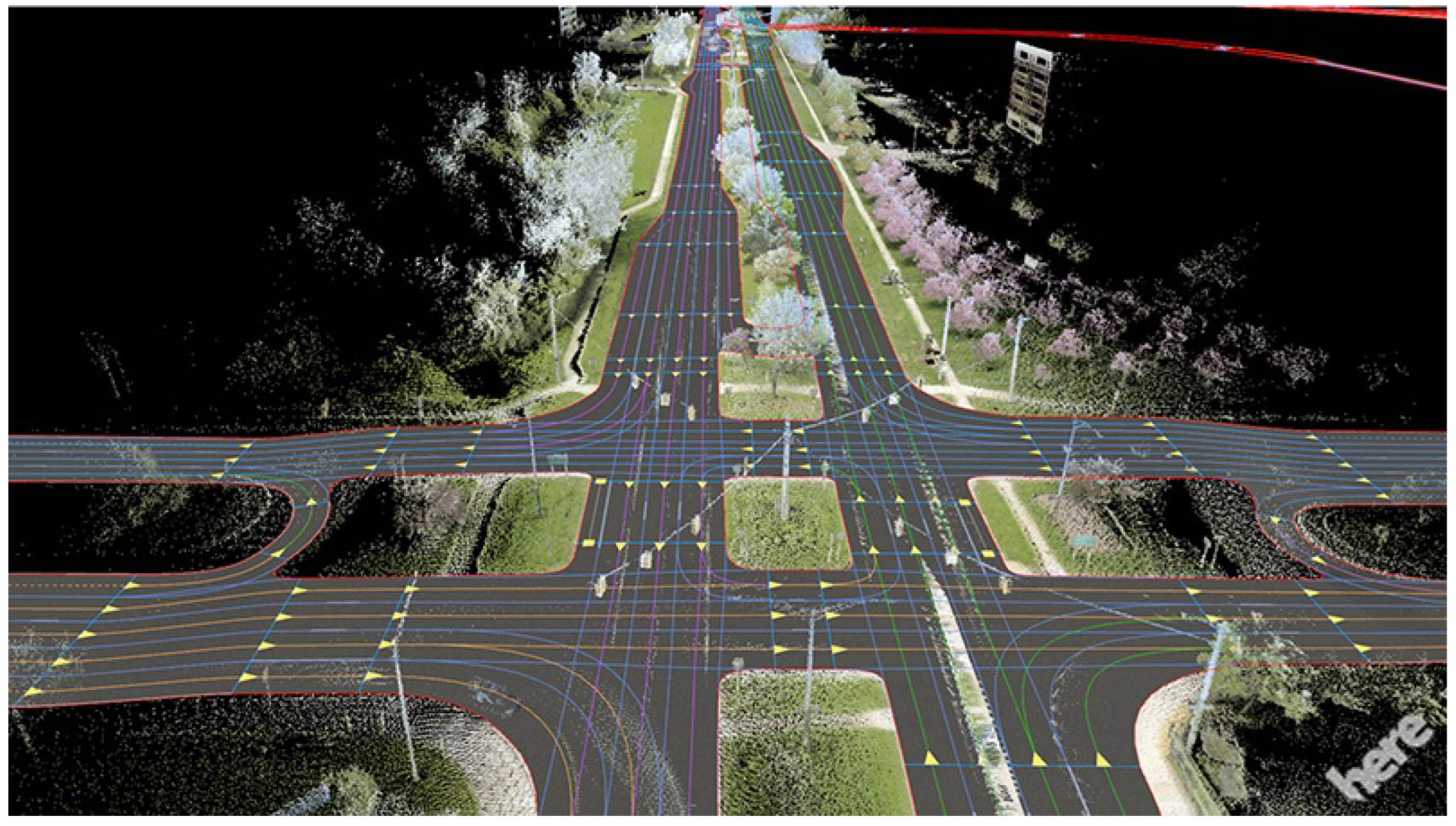

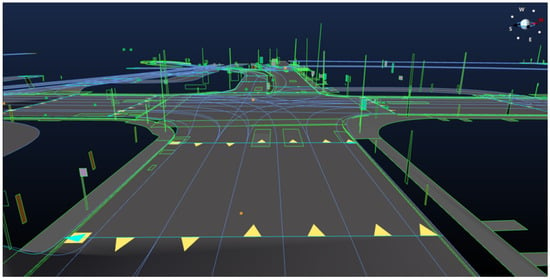

However, we can look at some available architectures to understand common practices in the industry. HD maps from HERE, TomTom, and Bertha Drive (Lanelet) share a similar three-layer architecture but have slightly different functionalities in each layer. Figure 1 shows an example of an HD map from HERE, and Figure 2 illustrates lane data from the localization layer of HERE’s HD map. Lyft Level 5 has a different HD map structure with five layers [8,11,12]. The comparison between these architectures [5,13] is shown in Table 1. Although different companies have their own HD map structures, in this paper we focus on the main categories of mapping information that an autonomous driving system uses: topological, geometric, and semantic representations, dynamic elements (real-time updates), and feature-based layers [4].

Table 1.

HD map layer structures from different companies involved in AD.

Figure 1.

Example of the HD map from HERE [14].

2.1. Topological Representation

The fundamental component of a high-definition road map is the topology representing a road network [4,15]. In HD maps, topology captures how roads, lanes, intersections, and other features are connected. This topological information not only benefits the HD map to drive autonomously but also facilitates route optimization based on factors such as traffic speed, road quality, traffic delays, and traffic rules. The topological representation typically comprises nodes and edges, where nodes represent intersections, interchanges, or specific points of interest, while edges represent the road segments connecting these nodes. Edges are often associated with attributes such as road classifications, speed limits, and turn restrictions. They provide valuable context for path planning and decision-making. By accurately capturing the intricate relationships and interconnections within the road network, the topological representation of HD maps plays a crucial role in enabling safe and efficient autonomous driving, as well as facilitating advanced applications like intelligent transportation systems and fleet management [15].
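
The node-and-edge structure described above can be sketched as a small directed graph. The following is a minimal illustration, not a format used by any particular map provider: the network `ROAD_GRAPH`, its node names, lengths, and speed limits are all hypothetical, and the routing function is a plain Dijkstra search that uses travel time (edge length divided by speed limit) as the cost.

```python
import heapq

# Hypothetical toy road network (illustrative values only):
# node -> list of (neighbor, edge length in meters, speed limit in m/s)
ROAD_GRAPH = {
    "A": [("B", 500.0, 13.9), ("C", 300.0, 5.0)],
    "B": [("D", 400.0, 13.9)],
    "C": [("D", 200.0, 5.0)],
    "D": [],
}


def fastest_route(graph, start, goal):
    """Dijkstra search over directed edges, minimizing travel time in seconds."""
    queue = [(0.0, start, [start])]
    settled = {}
    while queue:
        time_s, node, path = heapq.heappop(queue)
        if node == goal:
            return time_s, path
        if node in settled and settled[node] <= time_s:
            continue  # already reached this node at least as quickly
        settled[node] = time_s
        for neighbor, length_m, speed_mps in graph[node]:
            heapq.heappush(
                queue, (time_s + length_m / speed_mps, neighbor, path + [neighbor])
            )
    return float("inf"), []


time_s, path = fastest_route(ROAD_GRAPH, "A", "D")
print(path, round(time_s, 1))
```

In this toy network the route through B is longer (900 m versus 500 m) but faster, because the edge attributes, not just the connectivity, determine the cost. Turn restrictions could be modeled in the same way by omitting or penalizing the corresponding edges.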

2.2. Geometric Representation

The accurate representation of features and objects in HD maps is another crucial task. The geometric representation of HD maps refers to how the spatial features and layout of the environment are captured and represented in the digital map data. Geometric features include the shapes and positions of roads, lanes, sidewalks, buildings, and terrain. These features are typically represented using a vector data structure, which describes simplified geometric shapes such as points, lines, curves, circles, and polygons [8]. One notable example of a geometric representation layer is the Lyft Level 5 geometric map, which contains highly detailed 3D information about the environment, organized to support precise calculations and simulations [11,12]. This level of detail is essential for enabling accurate localization, path planning, and decision-making for AVs.
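
As a rough illustration of the vector representation, a lane centerline can be stored as a polyline of 2D points. The sketch below assumes planar coordinates in meters and uses hypothetical function names. It computes the polyline length and the distance from a query position to the polyline, a quantity that map-relative localization commonly needs.

```python
import math


def polyline_length(points):
    """Total length of a polyline given as a list of (x, y) vertices."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def distance_to_polyline(p, points):
    """Shortest distance from point p to any segment of the polyline."""
    best = float("inf")
    for (ax, ay), (bx, by) in zip(points, points[1:]):
        dx, dy = bx - ax, by - ay
        seg_len_sq = dx * dx + dy * dy
        if seg_len_sq == 0.0:
            t = 0.0  # degenerate segment: both vertices coincide
        else:
            # Projection of p onto the segment, clamped to its end points.
            t = ((p[0] - ax) * dx + (p[1] - ay) * dy) / seg_len_sq
            t = max(0.0, min(1.0, t))
        best = min(best, math.dist(p, (ax + t * dx, ay + t * dy)))
    return best


# Hypothetical lane centerline with one right-angle bend.
centerline = [(0.0, 0.0), (10.0, 0.0), (10.0, 5.0)]
print(polyline_length(centerline))
print(distance_to_polyline((4.0, 1.5), centerline))
```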

2.3. Semantic Representation

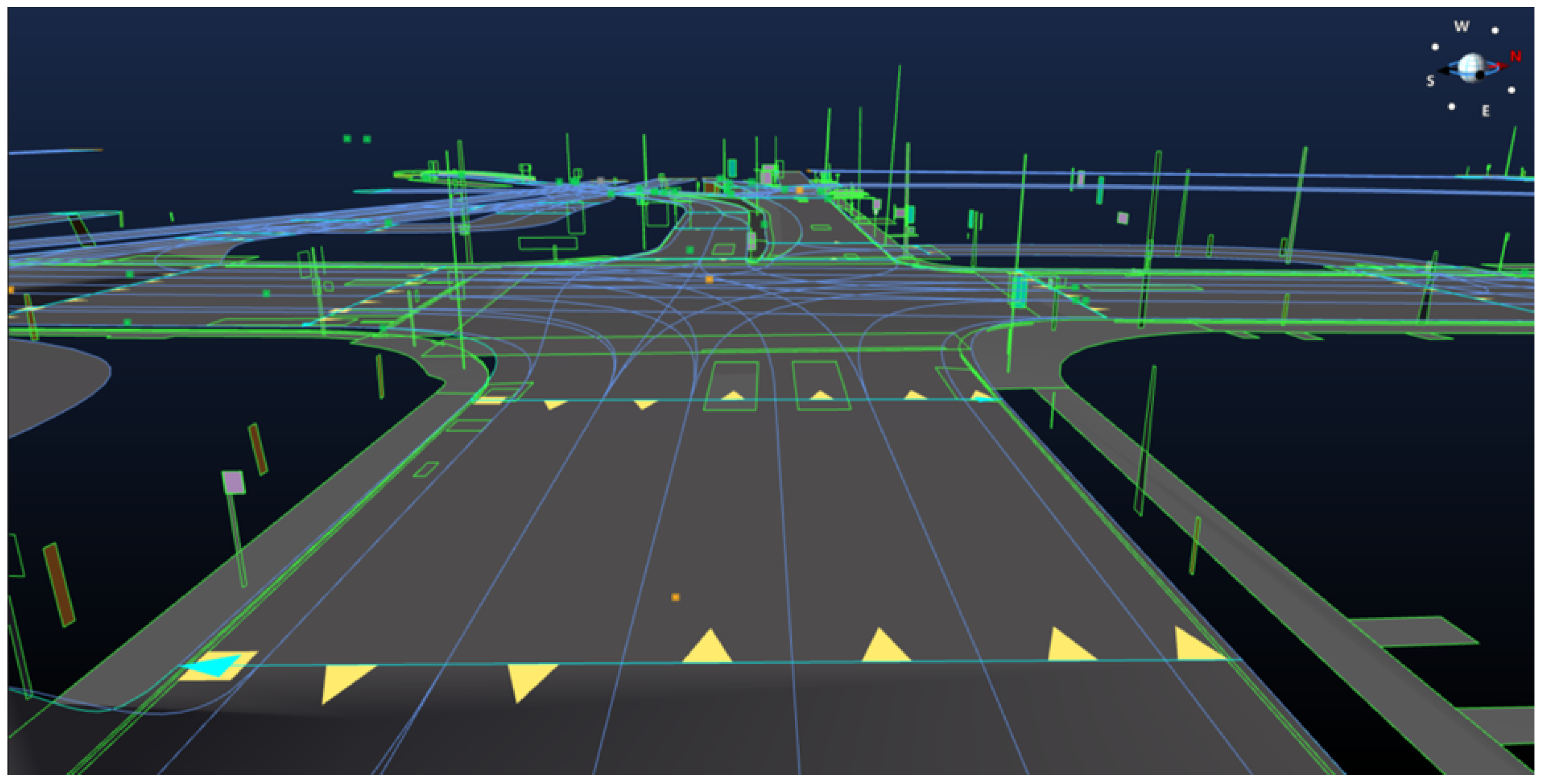

The semantic representation of HD maps builds on the geometric and topological representations by providing “semantic meaning” for features [4,11,12]. Semantic representation includes various traffic 2D and 3D objects such as lane boundaries, intersections, crosswalks, parking spots, stop signs, traffic lights, road speed limits, lane information, and road classification [4,11]. This semantic understanding is crucial for autonomous driving, where vehicles need to comprehend the surroundings to make smart, safe, and informed decisions. The semantic representation acts as a bridge between the raw geometric data and the higher-level understanding required for intelligent decision-making and path planning. By assigning semantic labels and attributes to the geometric features, it enables autonomous vehicles to interpret the environment in a more meaningful and contextual way. Notable examples of semantic representation layers include the HD map localization model layer from HERE [16] and the RoadDNA layer from TomTom [17]. These layers hold object-level semantic features, which help accurately estimate the vehicle’s position using object locations and contextual information [6,16,17]. In general terms, the semantic representation assigns semantic labels and attributes to road features and objects defined in the geometric representation, providing a richer and more comprehensive understanding of the environment for autonomous driving systems [6].

Figure 2.

Lane data from HERE’s HD map Localization layer [16].

2.4. Dynamic Elements

Dynamic elements are features, objects, or conditions within the environment that are susceptible to change over time. These features require continuous monitoring and updating to provide up-to-date information for autonomous vehicles. Dynamic elements like pedestrians, obstacles, and vehicles need to be updated for the HD map to be always precise and accurate [5]. The dynamic element layer of HD maps captures and represents these time-varying aspects of the environment, which are essential for safe and efficient path planning and decision-making. This layer includes features such as traffic conditions, construction zones, temporary road closures, and the positions and movements of other road users, such as vehicles, pedestrians, and cyclists. Accurate representation and frequent updating of dynamic elements are critical for autonomous vehicles to anticipate and respond to changing situations promptly. This information can be combined with the static elements of the HD map, such as road geometry and traffic rules, to enable more comprehensive and reliable path planning and decision-making. Proper management of dynamic elements, which will be explained in-depth in Section 4, is essential for planning a safe and efficient path for autonomous vehicles in dynamic and complex environments.
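
One simple way to picture the dynamic layer is as a set of time-stamped reports that expire unless they are refreshed. The sketch below is purely illustrative: the `DynamicEvent` record, its field names, and the time-to-live rule are assumptions made for this example and are not taken from any of the HD map products discussed here.

```python
from dataclasses import dataclass


@dataclass
class DynamicEvent:
    kind: str           # e.g. "construction_zone", "road_closure", "traffic_jam"
    lane_id: str        # identifier of the affected lane in the static map
    reported_at: float  # time of the report, in seconds
    ttl: float          # seconds the report stays valid without confirmation


BLOCKING_KINDS = {"construction_zone", "road_closure"}


def active_events(events, now):
    """Keep only reports that have not yet expired."""
    return [e for e in events if now - e.reported_at <= e.ttl]


def blocked_lanes(events, now):
    """Lanes the planner should avoid, given the currently active reports."""
    return {e.lane_id for e in active_events(events, now) if e.kind in BLOCKING_KINDS}


events = [
    DynamicEvent("construction_zone", "lane_12", reported_at=1000.0, ttl=3600.0),
    DynamicEvent("road_closure", "lane_7", reported_at=1000.0, ttl=600.0),
    DynamicEvent("traffic_jam", "lane_3", reported_at=4000.0, ttl=300.0),
]
print(blocked_lanes(events, now=4100.0))
```

Combining the result with the static layers then amounts to excluding or penalizing the returned lane identifiers during path planning.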

2.5. Feature-Based Map Layers

HD maps rely heavily on advanced feature-based map layers for accurate localization and navigation. These layers use various techniques to identify and match features in the environment to ensure precise vehicle positioning. One state-of-the-art approach in feature-based mapping is visual place recognition (VPR). Recent advancements in VPR, such as SeqNet, have improved performance through learning powerful visual features and employing sequential matching processes [18].

Another significant advancement is the use of 3D LiDAR maps for vehicle localization. The SeqPolar method introduces a polarized LiDAR map (PLM) and a second-order hidden Markov model (HMM2) for map matching, which offers a concise and structured representation of 3D LiDAR clouds. This method enhances localization accuracy significantly [19].

The integration of these techniques into HD map systems significantly enhances the accuracy and reliability of feature-based map layers, which is crucial for improving the localization and navigation capabilities of autonomous vehicles, especially in dynamic and complex environments.

In the autonomous driving industry, apart from layer structures, formats should be considered as well. Table 2 summarizes the key HD map formats and their respective representations.

Table 2.

Common HD map formats and representations.

One common format is Lanelet2 [20], derived from liblanelet [21]. It uses an XML-based OSM format and organizes maps into three layers: physical (points and line strings for physical elements), relational (lanelets, areas, and regulatory elements), and topological. Lanelet2 focuses on precise lane-level navigation and traffic regulation.
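
To give a feel for the OSM-style XML encoding, the sketch below parses a hand-written snippet in which a lanelet is a relation that references a left and a right boundary way. The snippet is not taken from a real map, and the tag values shown reflect our understanding of Lanelet2 conventions and should be treated as assumptions. The parser itself uses only Python's standard `xml.etree.ElementTree` module rather than the Lanelet2 library.

```python
import xml.etree.ElementTree as ET

# Hand-written, illustrative snippet (not from a real map).
OSM_SNIPPET = """<osm version="0.6">
  <node id="1" lat="49.0000" lon="8.4000"/>
  <node id="2" lat="49.0001" lon="8.4000"/>
  <node id="3" lat="49.0000" lon="8.4001"/>
  <node id="4" lat="49.0001" lon="8.4001"/>
  <way id="10"><nd ref="1"/><nd ref="2"/><tag k="type" v="line_thin"/></way>
  <way id="11"><nd ref="3"/><nd ref="4"/><tag k="type" v="line_thin"/></way>
  <relation id="100">
    <member type="way" ref="10" role="left"/>
    <member type="way" ref="11" role="right"/>
    <tag k="type" v="lanelet"/>
    <tag k="subtype" v="road"/>
  </relation>
</osm>"""


def parse_lanelets(osm_text):
    """Return {relation id: {"left": [...], "right": [...], "subtype": ...}}."""
    root = ET.fromstring(osm_text)
    # Physical layer: points, then line strings built from them.
    nodes = {
        n.get("id"): (float(n.get("lat")), float(n.get("lon")))
        for n in root.findall("node")
    }
    ways = {
        w.get("id"): [nodes[nd.get("ref")] for nd in w.findall("nd")]
        for w in root.findall("way")
    }
    # Relational layer: lanelets referencing their boundary line strings.
    lanelets = {}
    for rel in root.findall("relation"):
        tags = {t.get("k"): t.get("v") for t in rel.findall("tag")}
        if tags.get("type") != "lanelet":
            continue
        bounds = {
            m.get("role"): ways[m.get("ref")]
            for m in rel.findall("member")
            if m.get("type") == "way"
        }
        lanelets[rel.get("id")] = {
            "left": bounds.get("left"),
            "right": bounds.get("right"),
            "subtype": tags.get("subtype"),
        }
    return lanelets


lanelets = parse_lanelets(OSM_SNIPPET)
print(lanelets["100"]["subtype"], len(lanelets["100"]["left"]))
```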

Another common format is OpenDRIVE [22] by ASAM. It uses an XML format to describe road networks with three layers: the reference line (geometric primitives for road shapes), lane (drivable paths), and features (traffic signals and signs). OpenDRIVE emphasizes static map features and road structure.

Apollo OpenDRIVE is a modified OpenDRIVE format used by Apollo, the open-source autonomous driving framework developed by Baidu [5,10,23]. Whereas the original OpenDRIVE format describes road shapes with geometric primitives, Apollo describes them with points.
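
The difference between a primitive-based and a point-based description can be illustrated by sampling a single arc primitive into points. The sketch below assumes an arc defined by a start position, start heading, constant curvature, and length, which is how we understand OpenDRIVE arc geometry. The function name and the sampling step are hypothetical, and this is not Apollo's actual conversion code.

```python
import math


def sample_arc(x0, y0, heading, curvature, length, step=1.0):
    """Sample points along a constant-curvature arc (a line if curvature is 0)."""
    n = max(1, math.ceil(length / step))
    points = []
    for i in range(n + 1):
        s = length * i / n  # distance travelled along the primitive
        if abs(curvature) < 1e-12:
            x = x0 + s * math.cos(heading)
            y = y0 + s * math.sin(heading)
        else:
            x = x0 + (math.sin(heading + curvature * s) - math.sin(heading)) / curvature
            y = y0 - (math.cos(heading + curvature * s) - math.cos(heading)) / curvature
        points.append((x, y))
    return points


# Quarter circle of radius 10 m, starting at the origin and heading along +x.
arc_points = sample_arc(0.0, 0.0, 0.0, curvature=0.1, length=5.0 * math.pi)
print(len(arc_points), arc_points[-1])
```

The primitive needs five numbers regardless of its length, while the point list grows with the sampling density. The point list, however, can be consumed directly without evaluating any curve equations.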

3. HD Map Generation Techniques

As mentioned in the previous sections, high precision is required for HD maps to be effective for autonomous driving. Initially, HD maps are generated offline; however, to ensure their relevance and accuracy, they must undergo real-time updates once created. The general steps taken to generate HD maps will be discussed in this section. The process contains data collection from multiple sources, sensor fusion, point cloud registration, and feature extraction.

3.1. Data Collection

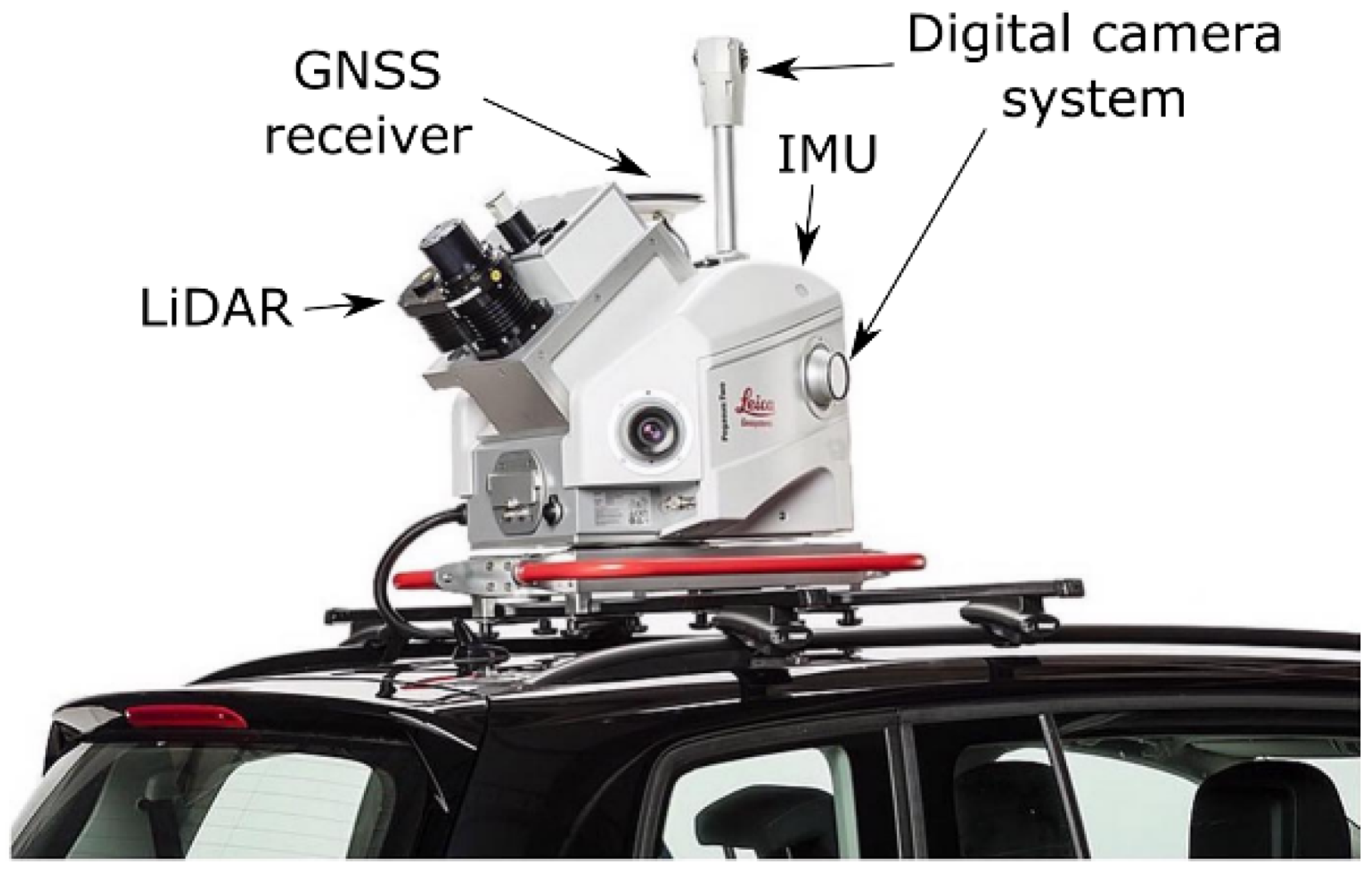

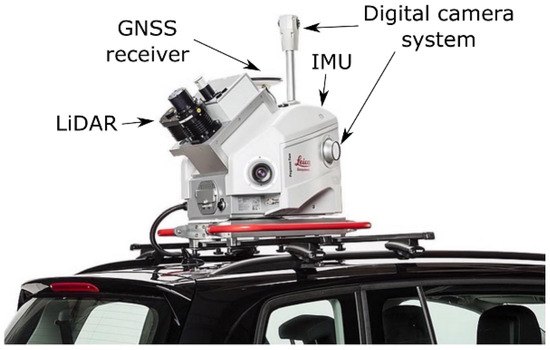

The first step in the HD map generation process is data collection. A vehicle fitted with high-precision, well-calibrated sensors is sent to survey the environment and collect comprehensive data about it. Mapping vehicles are equipped with mobile mapping systems (MMSs), which typically comprise various sensors. The sensors used in an MMS setup may vary depending on the requirements and the level of detail needed for mapping. LiDAR is one of the most common sensors in an MMS and provides highly accurate 3D point cloud data of the environment. Cameras capture high-resolution images of the surroundings, which provide additional information about road markings, traffic signs, and other features. The global navigation satellite system (GNSS) receiver is another common MMS sensor. GNSS receivers can receive signals from multiple satellite constellations simultaneously, which increases accuracy and precision. They are usually coupled with an inertial measurement unit (IMU) to help estimate the vehicle’s motion, including position, velocity, and orientation. In addition, radar sensors can be used to detect and track moving objects, and odometers can be used to measure the distance traveled by the vehicle’s wheels. Other sensors can be added based on the specific needs of the mapping task. The above sensors can also be bought separately and configured for data collection; however, this approach is neither simple nor quick [6,24]. An MMS equipped with LiDAR, GNSS, IMU, and camera is shown in Figure 3.

Figure 3.

Mobile mapping system with various positioning and data collection sensors, designed to produce a precise georeferenced 3D map of the environment [24].

Typically, there are three primary approaches used to gather data for HD maps. Table 3 compares these approaches. The first involves sourcing data from publicly available datasets; however, these are not as plentiful for autonomous driving. Nonetheless, researchers can tap into a handful of datasets like the Level5 Lyft dataset [25], the KITTI dataset [26], the nuScenes dataset [27], and the Argoverse dataset [28]. These open-source datasets contain pre-labeled traffic data, 3D point clouds, images, and other sensor data, facilitating experimentation and map generation. The second method entails collecting data firsthand. Researchers may opt for this method when focusing on specific regions or features within HD maps, though resulting datasets tend to be geographically limited. Lastly, crowdsourcing emerges as a powerful third option. Leveraging contributions from numerous vehicles, this method yields vast and diverse datasets covering expansive areas [5]. Moreover, the collective nature of crowdsourced data captures a wide array of scenarios, offering invaluable insights into the challenges AVs might encounter. For instance, recent research proposes a crowd-sourcing framework to continuously update point cloud maps (PCM) for HD maps, integrating LiDAR and vehicle communication technologies to detect and incorporate environment changes in real time [29].

Table 3.

Data collection methods for HD map.

3.2. Sensor Fusion

Sensor fusion plays an important role in HD map generation: it combines data from multiple sensors, such as LiDAR, camera, IMU, GPS, and radar, to capture complementary aspects of the environment and produce reliable and accurate maps. By drawing on several sensing modalities, it reduces detection uncertainty and compensates for the limitations of individual sensors operating independently [9,30,31,32]. Table 4 presents a comparison between LiDAR, camera, and radar sensors based on various factors to be considered in autonomous driving.

Table 4.

Comparison of LiDAR, camera, and radar sensors for the HD map.
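
A minimal numerical illustration of how fusion reduces uncertainty is inverse-variance weighting of two measurements of the same quantity. This is a textbook estimator, shown here under the assumption of independent, unbiased measurement noise; it is not the method of any of the fusion networks cited in this section, and the sensor values are hypothetical.

```python
def fuse_measurements(measurements):
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Returns the fused value and its variance. The fused variance is never
    larger than the smallest input variance.
    """
    weights = [1.0 / variance for _, variance in measurements]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, measurements)) / total
    return value, 1.0 / total


# Hypothetical range readings to the same object, in meters:
lidar = (20.0, 0.01)  # precise
radar = (20.4, 0.04)  # noisier
fused_value, fused_variance = fuse_measurements([lidar, radar])
print(round(fused_value, 3), round(fused_variance, 4))
```

The fused estimate lies closer to the more precise sensor, and its variance is lower than that of either input, which is the basic motivation for combining modalities.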

Integration of multiple sensors to provide redundancy and achieve a more accurate HD map requires careful calibration of sensors. Combinations of sensors widely used for autonomous driving are based on LiDAR, camera, and radar. Although other sensor integrations exist, the more widely researched combinations are among those three sensors [33]. These widely used sensor combinations are LiDAR–camera–radar, LiDAR–camera, and camera–radar.

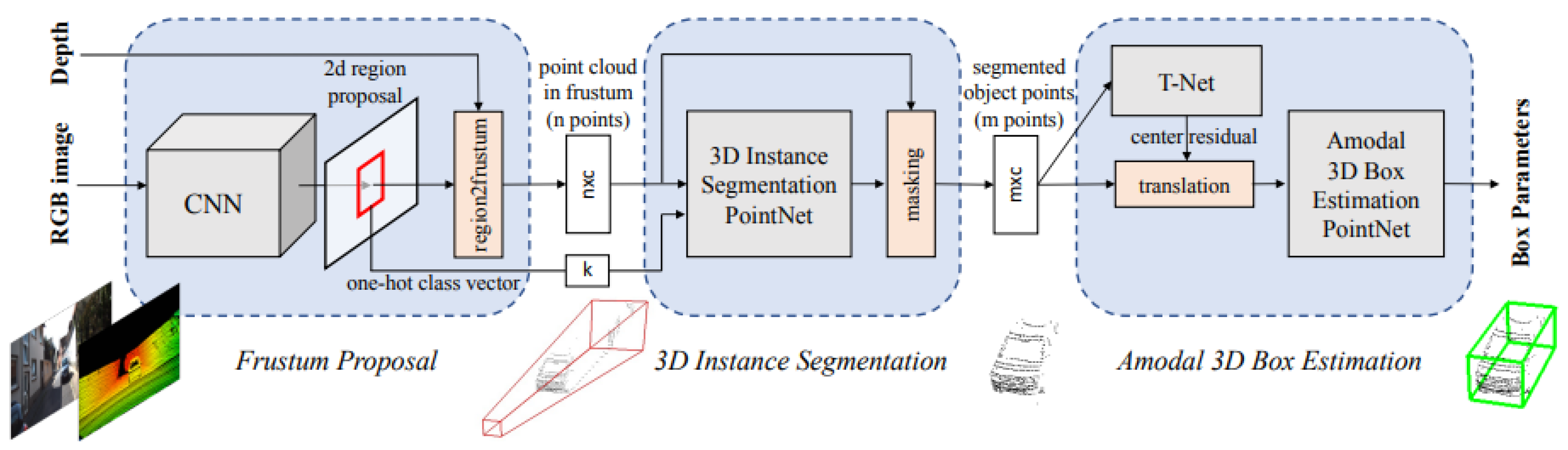

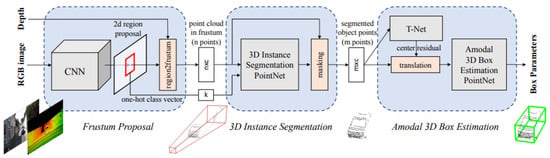

The fusion of LiDAR and camera sensors combines high-resolution images with accurate distance measurements. LiDAR–camera fusion has been shown to outperform either sensor used alone [34,35,36,37,38]. One approach to coupling the two sensors is to project the LiDAR point cloud onto the image obtained from the camera [38]. Another is to lift the camera’s 2D detections into the LiDAR point cloud, as presented in Frustum PointNets [39]. The Frustum PointNets architecture, illustrated in Figure 4, projects 2D object detections from the camera into 3D frustum candidates in the LiDAR point cloud. This fusion of complementary sensor data leverages the strengths of both modalities, enhancing the robustness and accuracy of perception in autonomous driving applications.

Figure 4.

Frustum PointNets architecture: a two-stage approach in which a 2D CNN first detects objects, and the point cloud within the corresponding 3D frustum is then processed by PointNets to segment the object and estimate its amodal 3D bounding box [39].
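
The first coupling approach, projecting LiDAR points onto the camera image, can be sketched with a pinhole camera model. The rotation, translation, and intrinsic parameters below are hypothetical placeholders; in practice they come from extrinsic and intrinsic calibration. The sketch assumes a LiDAR frame with x forward, y left, and z up, and a camera frame with x right, y down, and z forward.

```python
def project_lidar_to_image(points, rotation, translation, fx, fy, cx, cy, width, height):
    """Project 3D LiDAR points into pixel coordinates.

    Returns a list of (u, v, depth) for points in front of the camera
    that fall inside the image.
    """
    pixels = []
    for x, y, z in points:
        # Rigid transform from the LiDAR frame into the camera frame.
        xc = rotation[0][0] * x + rotation[0][1] * y + rotation[0][2] * z + translation[0]
        yc = rotation[1][0] * x + rotation[1][1] * y + rotation[1][2] * z + translation[1]
        zc = rotation[2][0] * x + rotation[2][1] * y + rotation[2][2] * z + translation[2]
        if zc <= 0.0:
            continue  # behind the camera
        # Pinhole projection with focal lengths (fx, fy) and principal point (cx, cy).
        u = fx * xc / zc + cx
        v = fy * yc / zc + cy
        if 0.0 <= u < width and 0.0 <= v < height:
            pixels.append((u, v, zc))
    return pixels


# Hypothetical calibration: axes remapped as described above, sensors co-located.
R_CAM_FROM_LIDAR = [[0.0, -1.0, 0.0], [0.0, 0.0, -1.0], [1.0, 0.0, 0.0]]
T_CAM_FROM_LIDAR = [0.0, 0.0, 0.0]

cloud = [(10.0, 0.0, 0.0), (10.0, 1.0, 0.0), (-5.0, 0.0, 0.0)]
pixels = project_lidar_to_image(
    cloud, R_CAM_FROM_LIDAR, T_CAM_FROM_LIDAR,
    fx=700.0, fy=700.0, cx=640.0, cy=360.0, width=1280, height=720,
)
print(pixels)
```

Each projected pixel carries a depth value, so image regions such as 2D detection boxes can be associated with the LiDAR points that fall inside them.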

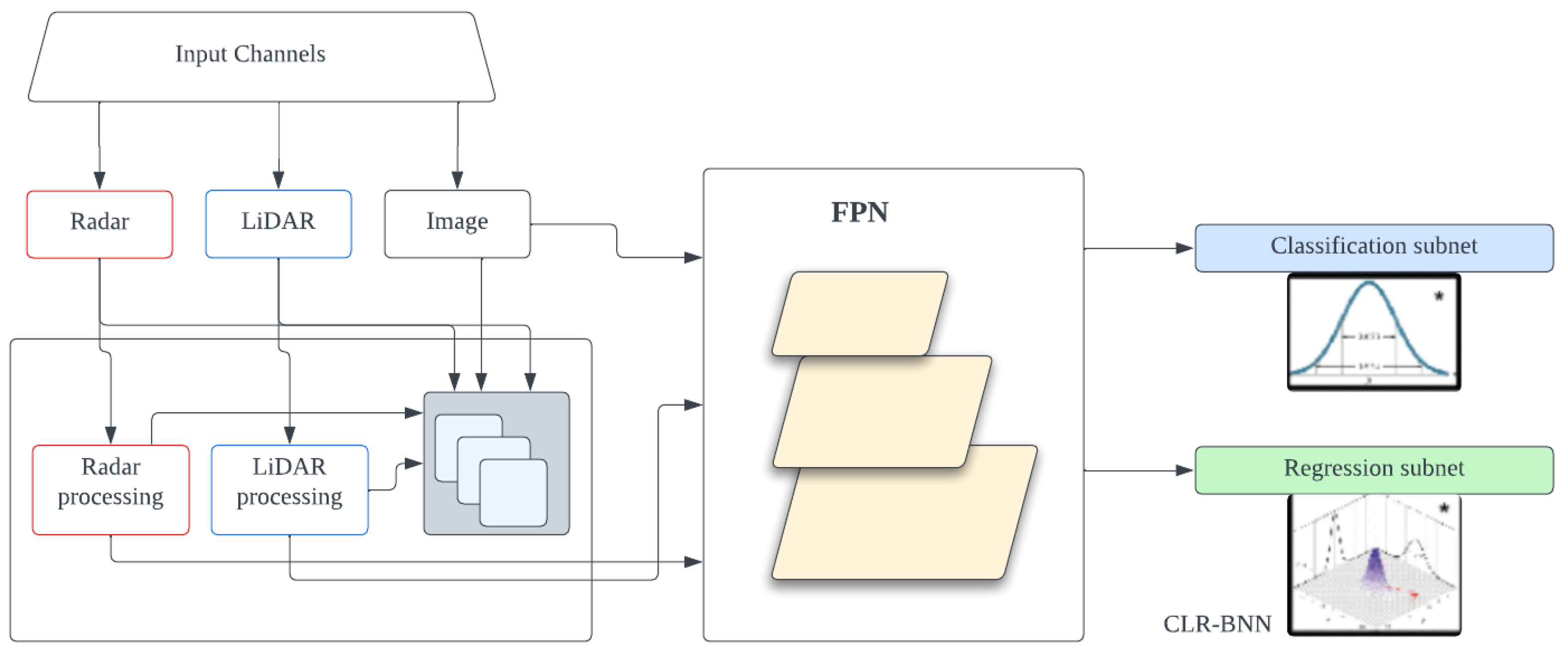

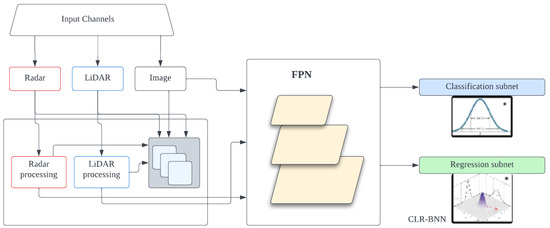

LiDAR–camera–radar fusion, as discussed in LRVFNet [40], generates distinct features from the different sensor modalities (LiDAR, mmWave radar, and visual sensors) using a deep multi-scale attention-based architecture. This fusion method effectively integrates complementary information from these modalities to enhance accuracy and robustness in object detection. Similarly, the fusion mechanism in CLR-BNN [41] employs a Bayesian neural network to fuse data from the three sensors. This approach improves detection accuracy and reduces uncertainty in diverse driving situations. Figure 5 illustrates the high-level architecture of CLR-BNN.

Figure 5.

CLR-BNN high-level architecture for sensor fusion [41].

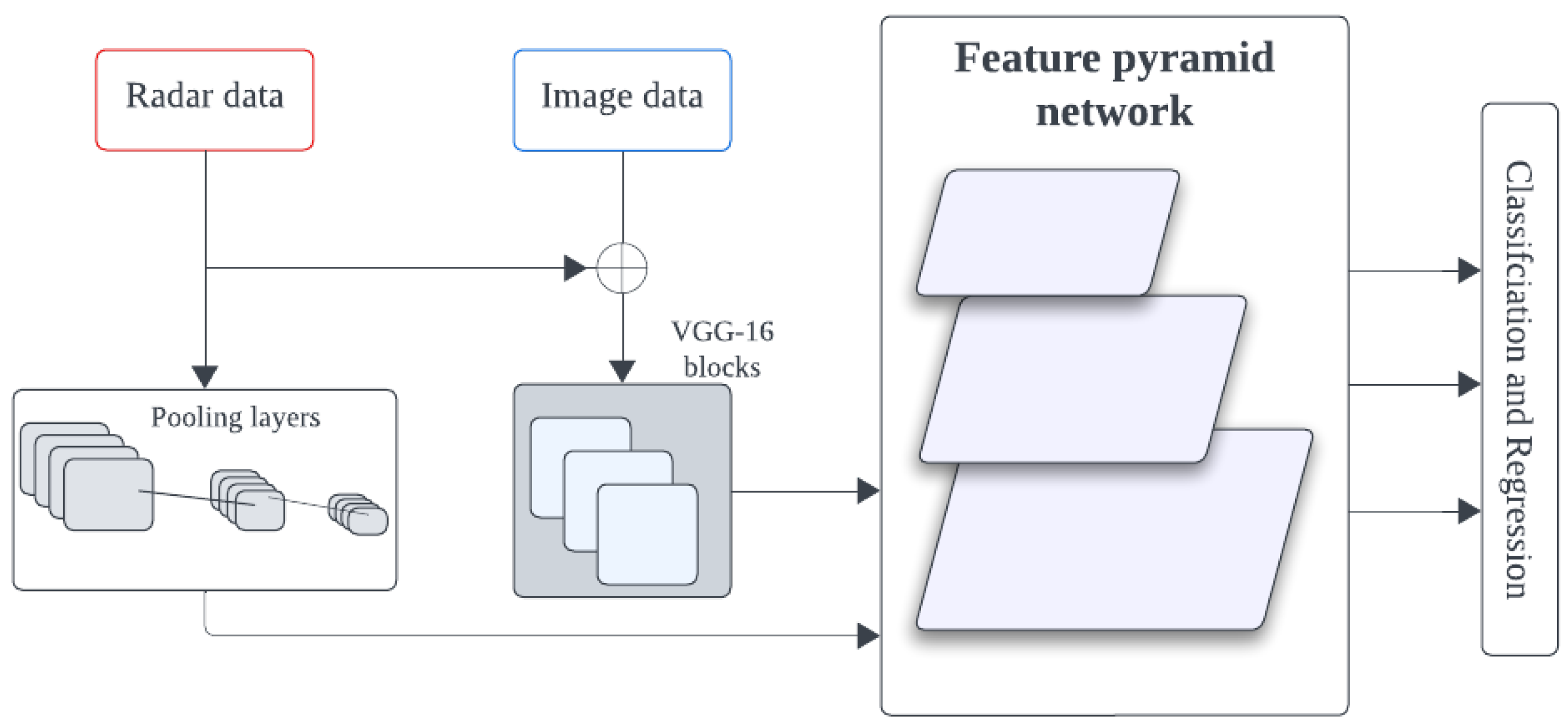

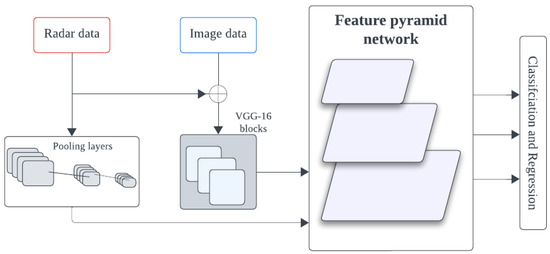

The integration of camera and radar sensors offers significant advantages for AVs. It combines the high-resolution visual data from cameras, which capture intricate environmental details such as color, texture, and shape, with the long-range detection capabilities of radar sensors, which operate reliably even in challenging weather and low-visibility conditions. CameraRadarFusionNet (CRF-Net) [42], shown in Figure 6, enhances 2D object detection networks by integrating camera data and sparse radar data within the network layers. The network itself determines the optimal fusion level and outperforms image-only networks across diverse datasets.

Figure 6.

High-level architecture of CameraRadarFusionNet (CRF-Net) [42].

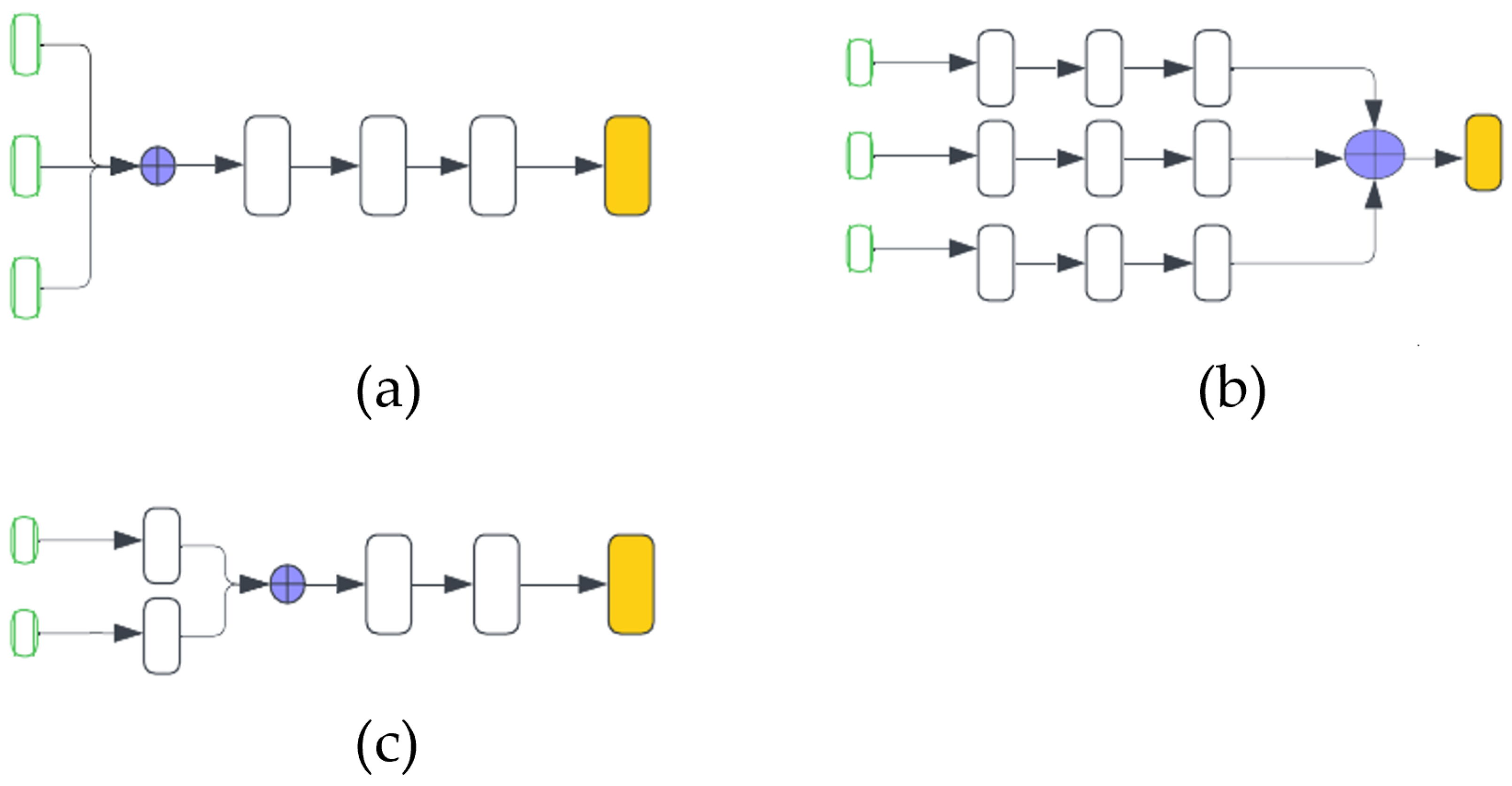

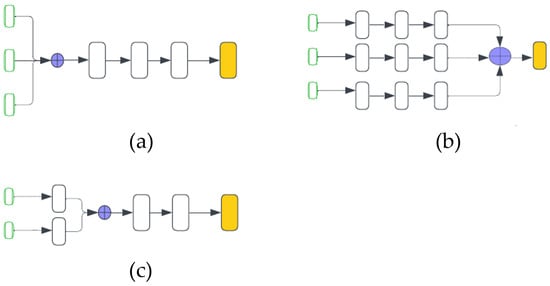

Sensor fusion integrates data from various sensors via early, middle, or late fusion strategies. These approaches differ in when integration occurs: before processing (early), at the feature level (middle), or at the decision level (late). Figure 7 shows the basic working principle of these three fusion strategies.

Figure 7.

Three kinds of fusion strategies: (a) early fusion, (b) middle fusion, and (c) late fusion. Green blocks represent input sensors (camera, LiDAR, and radar), whereas yellow blocks represent output.
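
The structural difference between the three strategies can be shown with a deliberately simplified sketch. The functions `extract_features` and `decide` are hypothetical stand-ins for a learned encoder and a detection head, and the inputs are toy lists of numbers rather than real sensor data. Only the point at which the two data streams are merged is meant to be illustrative.

```python
def extract_features(raw):
    """Hypothetical stand-in for a learned encoder: mean and maximum of the input."""
    return [sum(raw) / len(raw), max(raw)]


def decide(features):
    """Hypothetical stand-in for a detection head: a score clamped to [0, 1]."""
    score = sum(features) / len(features)
    return min(1.0, max(0.0, score))


def early_fusion(camera_raw, lidar_raw):
    # Merge raw data first, then run a single processing pipeline.
    return decide(extract_features(camera_raw + lidar_raw))


def middle_fusion(camera_raw, lidar_raw):
    # Encode each sensor separately, merge the features, then decide once.
    return decide(extract_features(camera_raw) + extract_features(lidar_raw))


def late_fusion(camera_raw, lidar_raw):
    # Run a full pipeline per sensor and merge only the final decisions.
    scores = [decide(extract_features(camera_raw)), decide(extract_features(lidar_raw))]
    return sum(scores) / len(scores)


camera_raw = [0.2, 0.4, 0.6]
lidar_raw = [0.8, 1.0]
for strategy in (early_fusion, middle_fusion, late_fusion):
    print(strategy.__name__, round(strategy(camera_raw, lidar_raw), 3))
```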

Among the most widely adopted algorithms used to implement sensor fusion strategies are object detection techniques such as YOLO [43] and SSD [44] for camera data, as well as 3D object detection methods like VoxelNet [45] and PointNet [46] for processing point cloud data from LiDAR or radar sensors [33].

3.3. Map Generation Algorithms

This section addresses the algorithms used to process sensor data and construct HD maps of the environment.

Point Cloud Registration Approaches

Algorithms play a critical role in transforming raw sensor data into meaningful map representations. After data collection, a process known as point cloud registration aligns the overlapping and non-overlapping point clouds obtained during the data collection phase into a comprehensive map of the environment. The point clouds to be aligned do not necessarily come from different sensors: they may come from the same sensor at different times or from different viewpoints. These cases also require point cloud registration.

Generally, four techniques are used to perform point cloud registration: optimization-based approaches, probabilistic-based approaches, feature-based approaches, and deep learning techniques [47,48]. Table 5 compares and contrasts these approaches.

Table 5.

Comparison of point cloud registration approaches: advantages and limitations.

- Optimization-based approaches: Optimization-based approaches involve two steps, correspondence and transformation. The correspondence step finds corresponding points between the point clouds being registered; accurate correspondences are crucial for determining how the point clouds should be aligned. A common technique is nearest neighbor search, which finds the closest point in the target cloud for each point in the source cloud under a distance metric such as Euclidean distance. The transformation step then estimates the transformation that best aligns the source point cloud with the target point cloud. This transformation typically consists of a rotation and a translation, and in some formulations also a scaling. Optimization-based approaches minimize a cost function that quantifies the discrepancy between corresponding points in the two point clouds. The iterative closest point (ICP) algorithm is one of the most widely used optimization-based methods [49]. ICP alternates between the two steps, iteratively refining the transformation parameters until they converge, with the goal of aligning the point clouds optimally [50]. Over the years, numerous variants and improvements to the ICP algorithm have been proposed [51,52], contributing significantly to the advancement of point cloud registration techniques. These algorithms have had a profound impact on the creation of highly accurate HD maps, which are essential for various applications, including autonomous driving and robotics [48].

- Probabilistic-based approaches: While optimization-based approaches like ICP have proven beneficial for point cloud registration, they can encounter limitations when the point clouds overlap only partially or contain significant occlusions. In such scenarios, ICP and its derivatives may converge to local optima, leading to registration failure. To address these limitations, probabilistic registration algorithms based on statistical models have gained traction in recent years, particularly those leveraging Gaussian mixture models (GMMs) [49] and the coherent point drift (CPD) algorithm [53]. These methods incorporate uncertainty into the registration process by estimating the posterior distribution of the transformation parameters. As a result, GMM and CPD, along with their respective variants [54,55], are particularly useful for noisy or uncertain data, since they provide probabilistic estimates of the registration result rather than a single deterministic solution.

- Feature-based approaches: Feature-based approaches for point cloud registration depart from traditional optimization-based methods by leveraging deep learning techniques to extract robust feature correspondences. These approaches focus on identifying distinctive key points or features in the point clouds, which serve as landmarks for establishing correspondences and facilitating registration. As highlighted in [47], these methodologies use deep neural networks as a powerful feature extraction mechanism, aiming to derive highly distinctive features that enable precise registration; one example is the SeqPolar method [19]. While feature-based approaches support accurate correspondence search and precise registration, they also come with certain challenges. They typically require extensive training data to learn the feature representations effectively, and their performance may degrade in unfamiliar scenes or environments that deviate significantly from the training data distribution.

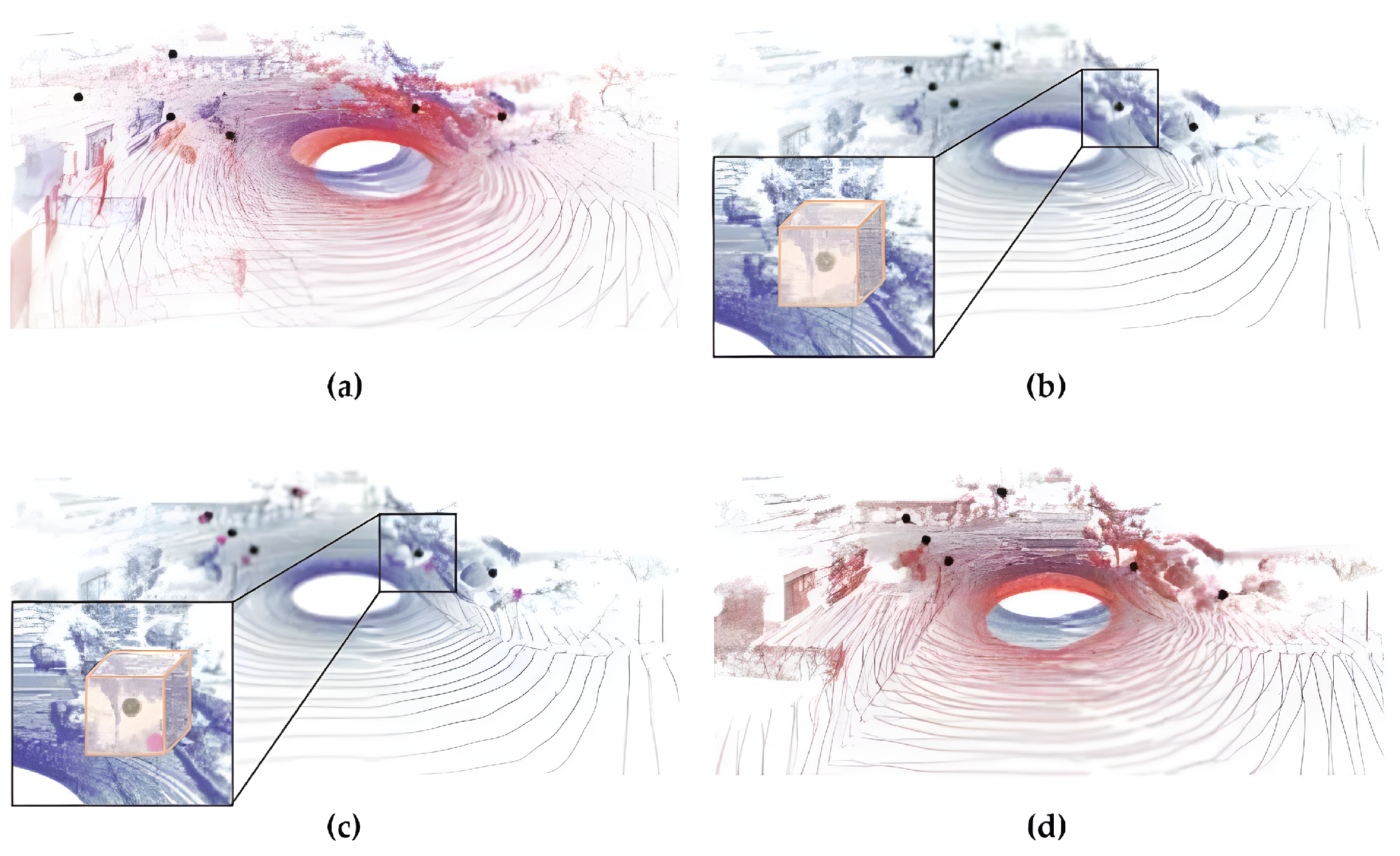

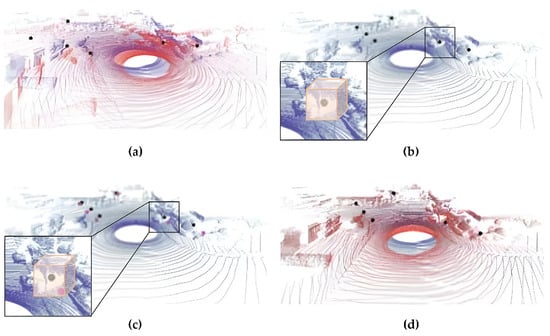

- Deep learning techniques: Deep learning techniques, particularly convolutional neural networks (CNNs), attention-based models, and other advanced architectures, have emerged as powerful tools for point cloud registration tasks. These methods leverage the ability of deep networks to learn feature representations directly from the point cloud data and capture complex patterns and relationships, enabling robust registration. Deep learning-based registration approaches can be classified into two main categories: end-to-end learning methods, where the entire registration pipeline is learned from data, and hybrid approaches that combine deep learning with traditional techniques. Examples of deep learning-based registration methods include the deep closest point (DCP) [56] and DeepVCP [57]. DeepVCP, shown in Figure 8, is an end-to-end deep learning framework for 3D point cloud registration that has demonstrated superior performance compared to both optimization-based approaches [49,58] and probabilistic-based approaches [53]. By leveraging the representational power of deep networks, these methods have shown promising results in addressing the challenges of point cloud registration, outperforming traditional techniques in various scenarios.

Figure 8. An illustration of the DeepVCP approach; the source point clouds and target point clouds are matched by the registration process. (a) The source (red) and target (blue) point clouds and the keypoints (black) detected by the point weighting layer. (b) A search region is generated for each keypoint and represented by grid voxels. (c) The matched points (magenta) generated by the corresponding point generation layer. (d) The final registration result computed by performing SVD given the matched keypoint pairs [57].
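
The correspondence and transformation steps of the optimization-based family can be made concrete with a minimal point-to-point ICP sketch. To stay short, it is restricted to 2D rigid motion, uses brute-force nearest neighbor search, and solves the transformation step with the closed-form 2D solution instead of the SVD used in 3D. The function names and the toy point sets are hypothetical, and the sketch omits the outlier handling and acceleration structures a practical implementation would need.

```python
import math


def nearest(p, cloud):
    """Correspondence step: closest target point under Euclidean distance."""
    return min(cloud, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)


def best_rigid_2d(src, dst):
    """Transformation step: least-squares rotation and translation for paired points."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    s_dot = s_cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay, bx, by = px - sx, py - sy, qx - dx, qy - dy
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def transform_points(points, theta, tx, ty):
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def icp_2d(source, target, max_iter=50, tol=1e-10):
    """Alternate both steps until the mean residual stops changing."""
    current, prev_err, err = list(source), float("inf"), float("inf")
    for _ in range(max_iter):
        matches = [nearest(p, target) for p in current]
        current = transform_points(current, *best_rigid_2d(current, matches))
        err = sum(math.dist(p, nearest(p, target)) for p in current) / len(current)
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return current, err


# Toy example: the source is the target under a small, known rigid motion.
target = [(0, 0), (1, 0), (2, 0), (3, 0), (0, 1), (0, 2), (2, 1.5)]
source = transform_points(target, -0.1, 0.1, -0.05)
aligned, residual = icp_2d(source, target)
print(residual < 1e-9)
```

The example converges because the initial misalignment is small relative to the point spacing, so the nearest neighbors are the true correspondences. With a larger initial offset the same loop can settle in a local optimum, which is the limitation the probabilistic methods aim to mitigate.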

While significant advancements have been made in the algorithms employed for point cloud registration, several challenges and limitations persist, hindering the widespread adoption of these techniques. The most prominent challenges are as follows:

- Point cloud data collected from sensors usually contain outliers, noise, and missing data, which can affect the registration process.

- Point cloud registration algorithms often involve computationally intensive operations and require substantial computational resources, such as memory and processing power.

- In situations where point clouds do not overlap or overlap only slightly, it is difficult for the registration process to find correspondences and visualize the environment.

- Imprecise sensor calibration can also introduce errors into the registration process. The sensors involved in data collection need to be properly calibrated for increased accuracy.

- Density differences in point clouds can arise from differences between sensors or from variations across regions of the scene. For example, in regions where the point cloud is sparse, it can be difficult to find correspondences between points, leading to errors in the point cloud registration.
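
One common preprocessing step that bears on the density and computational-cost challenges above is voxel-grid downsampling, which replaces all points inside each cubic cell with their centroid. The sketch below is an illustration under that assumption and is not a method taken from the works surveyed here; the function name and the voxel size are hypothetical choices.

```python
import numpy as np


def voxel_downsample(points, voxel_size):
    """Replace all points that fall in the same cubic voxel by their centroid.

    points: (N, 3) array; voxel_size: edge length of a voxel in the same units.
    """
    # Integer voxel index of each point along x, y, z.
    keys = np.floor(points / voxel_size).astype(np.int64)
    # Group points by voxel; `inverse` maps each point to its voxel row.
    _, inverse, counts = np.unique(
        keys, axis=0, return_inverse=True, return_counts=True
    )
    inverse = inverse.reshape(-1)
    sums = np.zeros((counts.size, points.shape[1]))
    np.add.at(sums, inverse, points)  # accumulate coordinates per voxel
    return sums / counts[:, None]


if __name__ == "__main__":
    cloud = np.array([
        [0.1, 0.1, 0.1],
        [0.2, 0.2, 0.2],  # same 1 m voxel as the first point
        [1.6, 0.1, 0.1],  # a different voxel
    ])
    print(voxel_downsample(cloud, voxel_size=1.0))
```

After downsampling, dense and sparse regions contribute at most one point per voxel. This evens out density and reduces the number of points passed to registration, at the cost of fine detail.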

3.4. Feature Extraction for HD Map

After point cloud registration, feature extraction becomes a crucial step in generating informative, high-quality HD maps that can be used reliably across various environments. Feature extraction identifies distinct elements of the environment in sensor data and uses them to build a detailed representation of the surroundings. These features provide valuable information for navigation, vehicle localization, and other applications in AVs and related systems. Common features found in HD maps include road and lane markings, road networks, traffic signs, landmarks, and pole-like objects [5,59]. Traditionally, feature extraction was done manually, with human operators identifying and annotating features of interest in sensor data. This process is costly and time-consuming [5]. Recently, machine learning approaches, particularly deep learning techniques, have been employed to automate feature extraction from point cloud data [60].

Several deep learning methods have been proposed for extracting specific features, such as lane markings. LMPNet [59], which is based on the feature pyramid network (FPN), extracts lane markings from images; the markings are then projected from perspective space into three-dimensional space in accordance with the position data. The authors of [61] used StixelNet, which employs deep convolutional networks, to obtain outstanding results in lane detection and object detection on the KITTI dataset [26]. In addition, deep deconvolutional networks with convolutional networks were used in [62], whereas EL-GAN [63] used generative adversarial networks (GANs) for feature extraction. DAGMapper [60] utilizes inference within a directed acyclic graphical model (DAG), integrating deep neural networks for conditional probabilities and employing a greedy algorithm for graph estimation. Deep learning algorithms for extracting traffic signs are also presented in [64]. Additionally, the YOLOv3 architecture [65] has been employed for traffic sign detection [63]. For extracting road networks, aerial images are usually utilized since they offer a bird’s-eye view of the terrain. Deep learning techniques like CNNs have been used in DeepRoadMapper [66], RoadTracer [67], and [68] to extract the road topology from aerial images. In addition, road networks can be extracted from 3D point clouds, sensor fusion, and camera images [5]. Figure 9 shows road network extraction methods.

Figure 9. Comparison of inferred road networks in Chicago (top), Boston, Salt Lake City, and Toronto (bottom). The inferred graph (yellow) is overlaid on ground truth from OSM (blue). RoadTracer (left), RoadTracer-segmentation approach (middle), and DeepRoadMapper (right) [67].
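
The projection of image-space lane markings into three-dimensional space, mentioned above for lane extraction, can be illustrated with a simple back-projection onto a flat ground plane. This is a sketch under strong assumptions and not the procedure of [59]: a pinhole camera, a flat road, an optical axis parallel to the ground, and a camera frame with x right, y down, and z forward. The intrinsic matrix and camera height are made-up values.

```python
import numpy as np


def pixel_to_ground(u, v, K, camera_height):
    """Back-project pixel (u, v) onto the ground plane y = camera_height.

    Returns the 3D point in the camera frame (x right, y down, z forward).
    """
    # Direction of the viewing ray through the pixel, scaled so that z = 1.
    ray = np.linalg.solve(K, np.array([u, v, 1.0]))
    if ray[1] <= 0:
        raise ValueError("pixel is at or above the horizon; ray misses the ground")
    # Stretch the ray until its y component reaches the ground plane.
    return ray * (camera_height / ray[1])


if __name__ == "__main__":
    # Hypothetical intrinsics: 1000 px focal length, principal point (640, 360).
    K = np.array([
        [1000.0, 0.0, 640.0],
        [0.0, 1000.0, 360.0],
        [0.0, 0.0, 1.0],
    ])
    point = pixel_to_ground(840.0, 510.0, K, camera_height=1.5)
    print(point)  # a lane-marking pixel mapped to camera-frame coordinates
```

The resulting camera-frame point would still need to be transformed with the vehicle's pose to place it in map coordinates, which is where the position data comes in.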

4. Updating HD Maps

4.1. Importance of Regular Updates

Ensuring accurate and reliable navigation for AVs necessitates regular updates to HD maps. As AVs navigate through various environments, changes such as road infrastructure modifications, variations in traffic patterns, and dynamic events like road closures, construction sites, and accidents are inevitable [5,69]. Regularly updating HD maps is crucial to reflect these changes and support safe and efficient navigation strategies [7].

Moreover, frequent map updates play a vital role in enhancing the quality of route planning capabilities. By incorporating the latest information on road conditions, traffic patterns, and potential disruptions, AVs can dynamically adjust their planned routes, enabling timely responses to dynamic events [3,6]. This adaptability not only optimizes navigation efficiency but also contributes to improved safety by accounting for real-time changes in the environment. Therefore, maintaining up-to-date HD maps through regular updates is a critical aspect of autonomous vehicle operation, ensuring that the navigation system has access to the most accurate and current information, allowing for informed decision-making and seamless adaptation to the ever-changing conditions of the road network.

4.2. Real-Time Data Sources

Real-time data sources provide crucial information for updating HD maps. These sources include vehicle sensors, such as cameras, LiDAR, and radar, which capture data about the surrounding environment in real time. Additionally, connected vehicles, via vehicle-to-vehicle (V2V) communication, and infrastructure, via vehicle-to-infrastructure (V2I) communication with systems such as traffic monitoring systems and road sensors, contribute to real-time data collection. Other sources, such as crowd-sourced data from navigation apps and social media, can provide valuable insights into traffic conditions and road incidents, provided the sources are trustworthy.

4.3. Automated Update Process

To ensure efficient and accurate updates to HD maps, automated processes have been employed to streamline the integration of real-time data streams with map databases. These processes leverage algorithms to detect and incorporate changes into the maps, reducing the need for manual intervention and minimizing the risk of errors. In recent years, deep learning techniques have emerged as powerful tools for automating the detection of changes to be integrated into HD map updates [70,71]. In a comprehensive survey paper [6], various approaches employed in automated map update processes are outlined, including probabilistic and geometric-based approaches. Techniques such as Kalman smoothing and Dempster–Shafer’s theory of beliefs, as demonstrated in [72], leverage probabilistic models to incorporate uncertainties and belief systems in the update process. Geometric-based approaches rely on geometric calculations and analyses to detect and incorporate changes in the environment, complementing deep learning techniques. By leveraging these automated techniques, HD maps can remain up-to-date with the latest information, reflecting changes in the environment with minimal manual effort. This automation not only improves the efficiency of the update process but also enhances the overall accuracy and reliability of the HD maps, ensuring seamless and safe navigation for AVs.
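
To make the Dempster–Shafer idea mentioned above concrete, the sketch below combines two independent observations about whether a map element (for example, a traffic sign) still exists. It implements the generic Dempster rule of combination on a two-hypothesis frame and is not the specific formulation of [72]; the dictionary keys and the mass values are illustrative assumptions.

```python
def combine_dempster(m1, m2):
    """Combine two mass functions over the keys 'exists', 'absent', 'unknown'.

    'unknown' holds the mass assigned to the whole frame {exists, absent},
    i.e., the part of the evidence that supports neither hypothesis.
    """
    # Mass that the two sources assign to contradictory hypotheses.
    conflict = m1["exists"] * m2["absent"] + m1["absent"] * m2["exists"]
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict; the rule is undefined")
    norm = 1.0 - conflict
    exists = (
        m1["exists"] * m2["exists"]
        + m1["exists"] * m2["unknown"]
        + m1["unknown"] * m2["exists"]
    ) / norm
    absent = (
        m1["absent"] * m2["absent"]
        + m1["absent"] * m2["unknown"]
        + m1["unknown"] * m2["absent"]
    ) / norm
    unknown = m1["unknown"] * m2["unknown"] / norm
    return {"exists": exists, "absent": absent, "unknown": unknown}


if __name__ == "__main__":
    # Two hypothetical vehicle passes reporting on the same traffic sign.
    pass_1 = {"exists": 0.6, "absent": 0.1, "unknown": 0.3}
    pass_2 = {"exists": 0.7, "absent": 0.1, "unknown": 0.2}
    print(combine_dempster(pass_1, pass_2))
```

When two passes agree, the combined belief in "exists" is higher than either pass alone and the "unknown" mass shrinks. This is the behavior an update process needs before committing a change to the map.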

Simultaneous localization and mapping (SLAM) algorithms are another crucial component in the automated update process for HD maps. SLAM techniques enable AVs to generate and maintain maps of their environment while simultaneously determining their location within the mapped environment. This capability is particularly valuable for updating HD maps in real-time as the vehicle navigates through dynamic environments. LiDAR-based SLAM algorithms, such as LOAM (lidar odometry and mapping) [73], LeGO-LOAM [74], and SuMa++ [75], leverage point cloud data from LiDAR sensors to simultaneously estimate the vehicle’s pose and create 3D maps of the environment. These algorithms can detect changes in the surroundings, such as new construction, obstacles, or road alterations, and automatically incorporate these updates into the HD maps. Visual SLAM techniques, like ORB-SLAM [76] and DSO (direct sparse odometry) [77], utilize visual odometry to perform localization and mapping. By analyzing the visual data and detecting changes in the environment, these algorithms can update the HD maps with new or modified features, such as road markings, traffic signs, or landmarks. Additionally, multi-sensor fusion approaches that combine data from LiDAR, cameras, IMU, and other sensors have been developed for SLAM-based HD map updates. Examples include LIO-SAM [78], R3-Live [79], FAST-LIVO [80], FAST-LIO [81], and FAST-LIO2 [82], which leverage the complementary strengths of different sensor modalities for robust and accurate map updates. By integrating SLAM algorithms into the automated update process, HD maps can continuously adapt to the dynamic changes in the environment, ensuring up-to-date and reliable information for autonomous vehicle navigation.
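
The two quantities that SLAM estimates jointly, the vehicle pose and the map, can be illustrated with a deliberately simplified 2D sketch. It chains odometry increments into a pose and uses that pose to place scan points in the map frame. It is only the "mapping with a given pose" half of the problem: the error correction, scan matching, and loop closure that the cited SLAM systems perform are omitted, and all names and values are illustrative assumptions.

```python
import math


def compose(pose, delta):
    """Apply a motion increment, given in the vehicle frame, to a map-frame pose.

    pose and delta are (x, y, heading) tuples; heading is in radians.
    """
    x, y, theta = pose
    dx, dy, dtheta = delta
    return (
        x + math.cos(theta) * dx - math.sin(theta) * dy,
        y + math.sin(theta) * dx + math.cos(theta) * dy,
        theta + dtheta,
    )


def scan_to_map(pose, scan_points):
    """Transform 2D scan points from the vehicle frame into the map frame."""
    x, y, theta = pose
    c, s = math.cos(theta), math.sin(theta)
    return [(x + c * px - s * py, y + s * px + c * py) for px, py in scan_points]


if __name__ == "__main__":
    pose = (0.0, 0.0, 0.0)
    # Drive 1 m forward while turning 90 degrees left, then 1 m forward again.
    for delta in [(1.0, 0.0, math.pi / 2), (1.0, 0.0, 0.0)]:
        pose = compose(pose, delta)
    # A point observed 2 m straight ahead of the vehicle.
    print(pose, scan_to_map(pose, [(2.0, 0.0)]))
```

Because each pose is built from the previous one, odometry errors accumulate. Correcting this drift against the map is precisely what the SLAM algorithms above add.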

5. Challenges and Solutions

Over the years, HD maps have gained considerable popularity and have seen major breakthroughs. However, there are still areas where they need improvement. This section discusses these challenges.

5.1. Environmental Challenges

While keeping HD maps accurate helps mitigate the impact of environmental challenges [6], maintaining accurate HD maps and ensuring they function reliably under all environmental conditions is a significant challenge [4]. Handling construction zones, performing reliably in harsh weather conditions, and effectively mapping complex urban landscapes require constant updates and significant budgetary investments.

5.2. Technical Challenges

Universally scalable HD maps are essential for efficient autonomous driving systems in any city, region, or road. However, scalability is difficult to achieve due to factors such as varying traffic rules, signage, and road geometry across regions [6]. More efficient mapping algorithms are required to handle these discrepancies. Additionally, the lack of standardization in HD maps is a concern. Different companies use proprietary formats, making data exchange and interoperability challenging. The complexity of data collection presents another technical hurdle. Preprocessing the terabytes of data collected during just an hour of driving requires significant computing power, particularly for tasks like identifying and classifying relevant information [13]. Finally, delivering updated map data to AVs with minimal latency is crucial for safe operation. This is especially important in areas with poor internet infrastructure, where slow network speeds can compromise safety.

5.3. Data Privacy and Security Concerns

In a technology- and data-driven era, privacy is paramount. For AD systems, the requirement for centimeter-level precision necessitates recording the user’s location and movement. This information can be leveraged to provide services like live updates on traffic congestion and road blockages [4]. Nevertheless, if not handled properly, these sensitive data could be exploited for malicious purposes. Security should not be taken lightly; HD map providers must address it comprehensively at every level.

6. Future Direction

6.1. Advances in Sensor Technologies

Although the future remains uncertain, and the technology we currently utilize may eventually be superseded, continued advancements in existing sensor technologies, such as LiDAR, cameras, radar, and IMUs, are anticipated to play a pivotal role in enhancing the capabilities of HD map creation and updating processes. Emerging sensor technologies, including solid-state LiDAR, high-resolution cameras, and multi-modal sensor fusion systems, offer improved accuracy, reliability, and cost-effectiveness for capturing and processing environmental data, further boosting the development of robust and up-to-date HD maps.

6.2. Integration of 5G and Edge Computing for Real-Time Updates

The integration of 5G cellular networks and edge computing infrastructure will facilitate real-time updates and seamless distribution of HD map data to vehicles and other connected devices. The high bandwidth and low latency offered by 5G networks, coupled with edge computing capabilities at roadside infrastructure and onboard vehicle systems, will enable dynamic map updates, efficient traffic management, and enhanced situational awareness for AVs. Furthermore, as 5G networks become more widely available, HD mapping systems will benefit from improved robustness, reliability, and potentially new functionalities, driven by advancements in network performance and computing power.

6.3. AI in HD Map Creation and Updating

Artificial intelligence (AI) and machine learning (ML) algorithms will continue to play a critical role in HD map generation and updating processes. Deep learning, reinforcement learning, and generative adversarial networks (GANs) enable automated feature extraction, anomaly detection, change detection, and predictive modeling from sensor data. AI-driven approaches streamline map creation workflows, improve accuracy, and adapt to changing environments more effectively.

6.4. Standardized HD Mapping Ecosystem

Currently, most companies involved in AD produce their own proprietary HD maps. However, collaboration among industry stakeholders, including automotive manufacturers, technology companies, government agencies, and standards organizations such as ASAM, which maintains the OpenDRIVE format [22], is essential for standardizing HD map formats, data exchange protocols, and quality assurance processes. Lanelet2, Apollo (utilizing a modified OpenDRIVE format), and NDS (Navigation Data Standard, a global standard for automotive map data) represent significant steps toward this standardization. Critical steps include fostering collaboration among stakeholders, adopting established formats such as OpenDRIVE, Lanelet2, and NDS for compatibility and interoperability, and developing unified specifications. Concerted standardization efforts promote seamless data sharing and innovation across the entire ecosystem, driving continuous improvement in HD map creation, updating, and utilization for various applications. This collaborative approach fosters a unified and consistent HD mapping infrastructure and accelerates the development and deployment of advanced autonomous driving solutions, benefiting the industry as a whole. These future directions represent ongoing trends and areas of focus in the development and advancement of HD maps, with the potential to reshape the landscape of future transportation, navigation, and urban mobility in the years to come.
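
To illustrate what an open, text-based format makes possible, the sketch below reads road identifiers and lengths from a heavily simplified OpenDRIVE-style XML fragment using only the Python standard library. The fragment is a hypothetical example that omits most of what a real file contains (lanes, elevation, junctions, and so on). It is meant only to show that a standardized structure can be consumed with generic tooling.

```python
import xml.etree.ElementTree as ET

# Hypothetical, heavily reduced OpenDRIVE-style fragment: one straight road.
SAMPLE_XODR = """<OpenDRIVE>
  <header revMajor="1" revMinor="6" name="demo"/>
  <road name="Demo Road" length="100.0" id="1" junction="-1">
    <planView>
      <geometry s="0.0" x="0.0" y="0.0" hdg="0.0" length="100.0">
        <line/>
      </geometry>
    </planView>
  </road>
</OpenDRIVE>
"""


def list_roads(xodr_text):
    """Return id, name, length, and geometry-segment count for each road."""
    root = ET.fromstring(xodr_text)
    roads = []
    for road in root.findall("road"):
        # Each <geometry> element describes one piece of the reference line.
        geometries = road.findall("./planView/geometry")
        roads.append({
            "id": road.get("id"),
            "name": road.get("name"),
            "length_m": float(road.get("length")),
            "segments": len(geometries),
        })
    return roads


if __name__ == "__main__":
    for entry in list_roads(SAMPLE_XODR):
        print(entry)
```

A proprietary binary format would require vendor-specific tooling for the same task, which is the interoperability cost that standardization aims to remove.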

6.5. Autonomous Vehicles for Sustainability

The advancement of autonomous vehicles (AVs) represents a pivotal opportunity to foster sustainability in transportation. AVs have the potential to significantly reduce greenhouse gas emissions by optimizing driving patterns and minimizing energy consumption through the use of high-definition (HD) maps. These maps provide precise geographic data that enable AVs to navigate efficiently, thereby reducing fuel usage and emissions. Studies suggest that AVs can cut fuel consumption by 10–20% compared to conventional vehicles, particularly in urban areas where traffic congestion is prevalent [83]. Furthermore, AVs equipped with HD maps can enhance traffic flow by communicating with infrastructure and other vehicles, mitigating congestion, and improving overall air quality.

Autonomous driving technology also supports sustainability by encouraging shared mobility services and potentially reducing vehicle ownership. By integrating with ride-sharing platforms and optimizing routes based on real-time data from HD maps, AVs can support a shift toward shared mobility models, thereby reducing the number of vehicles on the road and decreasing urban sprawl. Additionally, AVs contribute to safety improvements by leveraging HD maps for precise navigation and obstacle detection, thereby reducing traffic accidents and their associated environmental and economic costs. As cities and transportation systems evolve, the integration of AV technology with sustainable urban planning initiatives facilitated by HD maps holds promise for creating more efficient, livable, and environmentally friendly urban environments.

7. Conclusions

This comprehensive survey underscores the critical role of HD maps in propelling the advancement of autonomous driving technologies. It delves into various facets of HD map generation and maintenance, exploring the intricacies of map layers, data collection methodologies, sensor fusion strategies, point cloud registration techniques, and feature extraction processes essential for creating detailed and accurate representations of the environment. Moreover, the significance of regular updates in ensuring the currency and reliability of HD maps is emphasized. Furthermore, this review addresses the challenges inherent in HD map development and maintenance, while also exploring potential solutions and future perspectives that promise to enhance the effectiveness and utility of HD maps in driving the evolution of autonomous driving systems.

Author Contributions

Conceptualization, Kaleab Taye Asrat and Hyung-Ju Cho; methodology, Kaleab Taye Asrat; software, Kaleab Taye Asrat; validation, Kaleab Taye Asrat; formal analysis, Kaleab Taye Asrat; investigation, Kaleab Taye Asrat; resources, Kaleab Taye Asrat; data curation, Kaleab Taye Asrat; writing—original draft preparation, Kaleab Taye Asrat; writing—review and editing, Hyung-Ju Cho; visualization, Kaleab Taye Asrat; supervision, Hyung-Ju Cho; project administration, Hyung-Ju Cho; funding acquisition, Hyung-Ju Cho. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF), funded by the Ministry of Education (NRF-2020R1I1A3052713).

Data Availability Statement

No new data were created or analyzed in this study.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Delcker, J. The Man Who Invented the Self-Driving Car (in 1986). Available online: https://www.politico.eu/article/delf-driving-car-born-1986-ernst-dickmanns-mercedes/ (accessed on 14 February 2024).

- Chen, L.; Li, Y.; Huang, C.; Li, B.; Xing, Y.; Tian, D.; Li, L.; Hu, Z.; Na, X.; Li, Z.; et al. Milestones in Autonomous Driving and Intelligent Vehicles: Survey of Surveys. IEEE Trans. Intell. Veh. 2023, 8, 1046–1056.

- Ziegler, J.; Bender, P.; Schreiber, M.; Lategahn, H.; Strauss, T.; Stiller, C.; Dang, T.; Franke, U.; Appenrodt, N.; Keller, C.G.; et al. Making Bertha Drive—An Autonomous Journey on a Historic Route. IEEE Intell. Transp. Syst. Mag. 2014, 6, 8–20.

- Wong, K.; Gu, Y.; Kamijo, S. Mapping for Autonomous Driving: Opportunities and Challenges. IEEE Intell. Transp. Syst. Mag. 2021, 13, 91–106.

- Bao, Z.; Hossain, S.; Lang, H.; Lin, X. A review of high-definition map creation methods for autonomous driving. Eng. Appl. Artif. Intell. 2023, 122, 106125.

- Elghazaly, G.; Frank, R.; Harvey, S.; Safko, S. High-Definition Maps: Comprehensive Survey, Challenges, and Future Perspectives. IEEE Open J. Intell. Transp. Syst. 2023, 4, 527–550.

- Dahlström, T. The Road to Everywhere: Are HD Maps for Autonomous Driving Sustainable? Available online: https://www.autonomousvehicleinternational.com/features/the-road-to-everywhere-are-hd-maps-for-autonomous-driving-sustainable.html (accessed on 14 February 2024).

- Ebrahimi Soorchaei, B.; Razzaghpour, M.; Valiente, R.; Raftari, A.; Fallah, Y.P. High-Definition Map Representation Techniques for Automated Vehicles. Electronics 2022, 11, 3374.

- Bijelic, M.; Gruber, T.; Mannan, F.; Kraus, F.; Ritter, W.; Dietmayer, K.; Heide, F. Seeing through fog without seeing fog: Deep multimodal sensor fusion in unseen adverse weather. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11682–11692.

- Apollo. Available online: https://github.com/ApolloAuto/apollo (accessed on 6 June 2024).

- Level5, W.P. Rethinking Maps for Self-Driving. Available online: https://medium.com/wovenplanetlevel5/https-medium-com-lyftlevel5-rethinking-maps-for-self-driving-a147c24758d6 (accessed on 14 February 2024).

- Bučko, B.; Zábovská, K.; Ristvej, J.; Jánošíková, M. HD Maps and Usage of Laser Scanned Data as a Potential Map Layer. In Proceedings of the 2021 44th International Convention on Information, Communication and Electronic Technology (MIPRO), Opatija, Croatia, 27 September–1 October 2021; pp. 1670–1675.

- Seif, H.G.; Hu, X. Autonomous Driving in the iCity—HD Maps as a Key Challenge of the Automotive Industry. Engineering 2016, 2, 159–162.

- Bonetti, P. HERE Introduces HD LIVE Map to Show the Path to Highly Automated Driving. Available online: https://www.here.com/learn/blog/here-introduces-hd-live-map-to-show-the-path-to-highly-automated-driving (accessed on 1 March 2024).

- Lógó, J.M.; Barsi, A. The Role of Topology in High-Definition Maps for Autonomous Driving. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, XLIII-B4-2022, 383–388.

- HERE. Introduction to Mapping Concepts—Localization Model. Available online: https://www.here.com/docs/bundle/introduction-to-mapping-concepts-user-guide/page/topics/model-local.html (accessed on 14 February 2024).

- TomTom. TomTom HD Maps. Available online: https://www.tomtom.com/products/hd-map/ (accessed on 14 February 2024).

- Garg, S.; Milford, M. Seqnet: Learning descriptors for sequence-based hierarchical place recognition. IEEE Robot. Autom. Lett. 2021, 6, 4305–4312.

- Tao, Q.; Hu, Z.; Zhou, Z.; Xiao, H.; Zhang, J. SeqPolar: Sequence matching of polarized LiDAR map with HMM for intelligent vehicle localization. IEEE Trans. Veh. Technol. 2022, 71, 7071–7083.

- Poggenhans, F.; Pauls, J.H.; Janosovits, J.; Orf, S.; Naumann, M.; Kuhnt, F.; Mayr, M. Lanelet2: A high-definition map framework for the future of automated driving. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1672–1679.

- Bender, P.; Ziegler, J.; Stiller, C. Lanelets: Efficient map representation for autonomous driving. In Proceedings of the 2014 IEEE Intelligent Vehicles Symposium Proceedings, Ypsilanti, MI, USA, 8–11 June 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 420–425.

- ASAM OpenDRIVE. Available online: https://www.asam.net/standards/detail/opendrive/ (accessed on 14 February 2024).

- Gran, C.W. HD-Maps in Autonomous Driving. Master’s Thesis, Norwegian University of Science and Technology, Trondheim, Norway, 2019.

- Elhashash, M.; Albanwan, H.; Qin, R. A Review of Mobile Mapping Systems: From Sensors to Applications. Sensors 2022, 22, 4262.

- Houston, J.L.; Zuidhof, G.C.A.; Bergamini, L.; Ye, Y.; Jain, A.; Omari, S.; Iglovikov, V.I.; Ondruska, P. One Thousand and One Hours: Self-driving Motion Prediction Dataset. In Proceedings of the 4th Conference on Robot Learning, Cambridge, MA, USA, 16–18 November 2020; pp. 409–418.

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The kitti dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

- Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. nuScenes: A multimodal dataset for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11621–11631.

- Chang, M.F.; Lambert, J.; Sangkloy, P.; Singh, J.; Bak, S.; Hartnett, A.; Wang, D.; Carr, P.; Lucey, S.; Ramanan, D.; et al. Argoverse: 3D Tracking and Forecasting With Rich Maps. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 8740–8749.

- Kim, C.; Cho, S.; Sunwoo, M.; Resende, P.; Bradaï, B.; Jo, K. Updating point cloud layer of high definition (hd) map based on crowd-sourcing of multiple vehicles installed lidar. IEEE Access 2021, 9, 8028–8046.

- Fayyad, J.; Jaradat, M.A.; Gruyer, D.; Najjaran, H. Deep Learning Sensor Fusion for Autonomous Vehicle Perception and Localization: A Review. Sensors 2020, 20, 4220.

- Wang, Z.; Wu, Y.; Niu, Q. Multi-Sensor Fusion in Automated Driving: A Survey. IEEE Access 2020, 8, 2847–2868.

- Wang, X.; Li, K.; Chehri, A. Multi-Sensor Fusion Technology for 3D Object Detection in Autonomous Driving: A Review. IEEE Trans. Intell. Transp. Syst. 2024, 25, 1148–1165.

- Yeong, D.J.; Velasco-Hernandez, G.; Barry, J.; Walsh, J. Sensor and Sensor Fusion Technology in Autonomous Vehicles: A Review. Sensors 2021, 21, 2140.

- Zhong, H.; Wang, H.; Wu, Z.; Zhang, C.; Zheng, Y.; Tang, T. A survey of LiDAR and camera fusion enhancement. Procedia Comput. Sci. 2021, 183, 579–588.

- Chen, X.; Ma, H.; Wan, J.; Li, B.; Xia, T. Multi-view 3d object detection network for autonomous driving. In Proceedings of the IEEE conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1907–1915.

- Shahian Jahromi, B.; Tulabandhula, T.; Cetin, S. Real-Time Hybrid Multi-Sensor Fusion Framework for Perception in Autonomous Vehicles. Sensors 2019, 19, 4357.

- Liang, M.; Yang, B.; Chen, Y.; Hu, R.; Urtasun, R. Multi-task multi-sensor fusion for 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 7337–7345.

- Thakur, A.; Rajalakshmi, P. LiDAR and Camera Raw Data Sensor Fusion in Real-Time for Obstacle Detection. In Proceedings of the 2023 IEEE Sensors Applications Symposium (SAS), Ottawa, ON, Canada, 18–20 July 2023; pp. 1–6.

- Qi, C.R.; Liu, W.; Wu, C.; Su, H.; Guibas, L.J. Frustum pointnets for 3d object detection from rgb-d data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 918–927.

- Xiao, Y.; Liu, Y.; Luan, K.; Cheng, Y.; Chen, X.; Lu, H. Deep LiDAR-Radar-Visual Fusion for Object Detection in Urban Environments. Remote Sens. 2023, 15, 4433.

- Ravindran, R.; Santora, M.J.; Jamali, M.M. Camera, LiDAR, and Radar Sensor Fusion Based on Bayesian Neural Network (CLR-BNN). IEEE Sens. J. 2022, 22, 6964–6974.

- Nobis, F.; Geisslinger, M.; Weber, M.; Betz, J.; Lienkamp, M. A Deep Learning-based Radar and Camera Sensor Fusion Architecture for Object Detection. In Proceedings of the 2019 Sensor Data Fusion: Trends, Solutions, Applications (SDF), Bonn, Germany, 15–17 October 2019; pp. 1–7.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Lecture Notes in Computer Science; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

- Zhou, Y.; Tuzel, O. VoxelNet: End-to-End Learning for Point Cloud Based 3D Object Detection. arXiv 2017, arXiv:1711.06396. Available online: http://arxiv.org/abs/1711.06396 (accessed on 13 April 2024).

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. arXiv 2017, arXiv:1612.00593. Available online: http://arxiv.org/abs/1612.00593 (accessed on 13 April 2024).

- Huang, X.; Mei, G.; Zhang, J.; Abbas, R. A comprehensive survey on point cloud registration. arXiv 2021, arXiv:2103.02690. Available online: http://arxiv.org/abs/2103.02690 (accessed on 13 April 2024).

- Chang, M.F.; Dong, W.; Mangelson, J.; Kaess, M.; Lucey, S. Map Compressibility Assessment for LiDAR Registration. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 5560–5567.

- Besl, P.; McKay, N.D. A method for registration of 3-D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256.

- Zhang, Z. Iterative point matching for registration of free-form curves and surfaces. Int. J. Comput. Vis. 1994, 13, 119–152. [Google Scholar] [CrossRef]

- Zhang, J.; Yao, Y.; Deng, B. Fast and Robust Iterative Closest Point. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 3450–3466. [Google Scholar] [CrossRef]

- Wang, F.; Zhao, Z. A survey of iterative closest point algorithm. In Proceedings of the 2017 Chinese Automation Congress (CAC), Jinan, China, 20–22 October 2017; pp. 4395–4399. [Google Scholar] [CrossRef]

- Myronenko, A.; Song, X. Point Set Registration: Coherent Point Drift. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 2262–2275. [Google Scholar] [CrossRef]

- Liu, Z.; Yu, C.; Qiu, F.; Liu, Y. A Fast Coherent Point Drift Method for Rigid 3D Point Cloud Registration. In Proceedings of the 2023 42nd Chinese Control Conference (CCC), Tianjin, China, 24–26 July 2023; pp. 7776–7781. [Google Scholar] [CrossRef]

- Gao, W.; Tedrake, R. Filterreg: Robust and efficient probabilistic point-set registration using gaussian filter and twist parameterization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 11095–11104. [Google Scholar]

- Wang, Y.; Solomon, J. Deep Closest Point: Learning Representations for Point Cloud Registration. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3522–3531. [Google Scholar] [CrossRef]

- Lu, W.; Wan, G.; Zhou, Y.; Fu, X.; Yuan, P.; Song, S. DeepVCP: An End-to-End Deep Neural Network for Point Cloud Registration. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 12–21. [Google Scholar] [CrossRef]

- Segal, A.; Haehnel, D.; Thrun, S. Generalized-icp. In Proceedings of the Robotics: Science and Systems V, Seattle, WA, USA, 28 June–1 July 2009; pp. 21–31. [Google Scholar] [CrossRef]

- Zhou, J.; Guo, Y.; Bian, Y.; Huang, Y.; Li, B. Lane Information Extraction for High Definition Maps Using Crowdsourced Data. IEEE Trans. Intell. Transp. Syst. 2023, 24, 7780–7790. [Google Scholar] [CrossRef]

- Homayounfar, N.; Liang, J.; Ma, W.C.; Fan, J.; Wu, X.; Urtasun, R. DAGMapper: Learning to Map by Discovering Lane Topology. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 2911–2920. [Google Scholar] [CrossRef]

- Levi, D.; Garnett, N.; Fetaya, E.; Herzlyia, I. Stixelnet: A deep convolutional network for obstacle detection and road segmentation. In Proceedings of the BMVC, Swansea, UK, 7–10 September 2015; Volume 1, p. 4. [Google Scholar]

- Mohan, R. Deep Deconvolutional Networks for Scene Parsing. arXiv 2014, arXiv:1411.4101. Available online: http://arxiv.org/abs/1411.4101 (accessed on 13 April 2024).

- Ghafoorian, M.; Nugteren, C.; Baka, N.; Booij, O.; Hofmann, M. EL-GAN: Embedding Loss Driven Generative Adversarial Networks for Lane Detection. arXiv 2018, arXiv:1806.05525. Available online: http://arxiv.org/abs/1806.05525 (accessed on 13 April 2024).

- Plachetka, C.; Sertolli, B.; Fricke, J.; Klingner, M.; Fingscheidt, T. DNN-Based Map Deviation Detection in LiDAR Point Clouds. IEEE Open J. Intell. Transp. Syst. 2023, 4, 580–601.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767. Available online: http://arxiv.org/abs/1804.02767 (accessed on 13 April 2024).

- Máttyus, G.; Luo, W.; Urtasun, R. DeepRoadMapper: Extracting Road Topology from Aerial Images. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 3458–3466.

- Bastani, F.; He, S.; Abbar, S.; Alizadeh, M.; Balakrishnan, H.; Chawla, S.; Madden, S.; DeWitt, D. RoadTracer: Automatic Extraction of Road Networks from Aerial Images. arXiv 2018, arXiv:1802.03680. Available online: http://arxiv.org/abs/1802.03680 (accessed on 13 April 2024).

- Ventura, C.; Pont-Tuset, J.; Caelles, S.; Maninis, K.K.; Van Gool, L. Iterative Deep Learning for Road Topology Extraction. arXiv 2018, arXiv:1808.09814. Available online: http://arxiv.org/abs/1808.09814 (accessed on 13 April 2024).

- Liu, R.; Wang, J.; Zhang, B. High Definition Map for Automated Driving: Overview and Analysis. J. Navig. 2020, 73, 324–341.

- Taş, M.O.; Yavuz, S.; Yazici, A. Updating HD-Maps for Autonomous Transfer Vehicles in Smart Factories. In Proceedings of the 2018 6th International Conference on Control Engineering & Information Technology (CEIT), Istanbul, Turkey, 25–27 October 2018; pp. 1–5.

- Heo, M.; Kim, J.; Kim, S. HD Map Change Detection with Cross-Domain Deep Metric Learning. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; pp. 10218–10224.

- Jo, K.; Kim, C.; Sunwoo, M. Simultaneous Localization and Map Change Update for the High Definition Map-Based Autonomous Driving Car. Sensors 2018, 18, 3145.

- Zhang, J.; Singh, S. LOAM: Lidar odometry and mapping in real-time. In Proceedings of the Robotics: Science and Systems, Berkeley, CA, USA, 12–16 July 2014; Volume 2, pp. 1–9.

- Shan, T.; Englot, B. LeGO-LOAM: Lightweight and Ground-Optimized Lidar Odometry and Mapping on Variable Terrain. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 4758–4765.

- Chen, X.; Milioto, A.; Palazzolo, E.; Giguere, P.; Behley, J.; Stachniss, C. SuMa++: Efficient LiDAR-based semantic SLAM. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 4530–4537.

- Mur-Artal, R.; Montiel, J.M.; Tardos, J.D. ORB-SLAM: A Versatile and Accurate Monocular SLAM System. IEEE Trans. Robot. 2015, 31, 1147–1163.

- Engel, J.; Koltun, V.; Cremers, D. Direct sparse odometry. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 611–625.

- Shan, T.; Englot, B.; Meyers, D.; Wang, W.; Ratti, C.; Rus, D. LIO-SAM: Tightly-coupled Lidar Inertial Odometry via Smoothing and Mapping. arXiv 2020, arXiv:2007.00258. Available online: http://arxiv.org/abs/2007.00258 (accessed on 13 April 2024).

- Lin, J.; Zhang, F. R3LIVE: A Robust, Real-time, RGB-colored, LiDAR-Inertial-Visual tightly-coupled state Estimation and mapping package. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 10672–10678.

- Zheng, C.; Zhu, Q.; Xu, W.; Liu, X.; Guo, Q.; Zhang, F. FAST-LIVO: Fast and tightly-coupled sparse-direct LiDAR-inertial-visual odometry. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 4003–4009.

- Xu, W.; Zhang, F. FAST-LIO: A Fast, Robust LiDAR-Inertial Odometry Package by Tightly-Coupled Iterated Kalman Filter. IEEE Robot. Autom. Lett. 2021, 6, 3317–3324.

- Xu, W.; Cai, Y.; He, D.; Lin, J.; Zhang, F. FAST-LIO2: Fast direct LiDAR-inertial odometry. IEEE Trans. Robot. 2022, 38, 2053–2073.

- Le Hong, Z.; Zimmerman, N. Air quality and greenhouse gas implications of autonomous vehicles in Vancouver, Canada. Transp. Res. Part D Transp. Environ. 2021, 90, 102676.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).