A Comprehensive Survey on High-Definition Map Generation and Maintenance

Abstract

1. Introduction

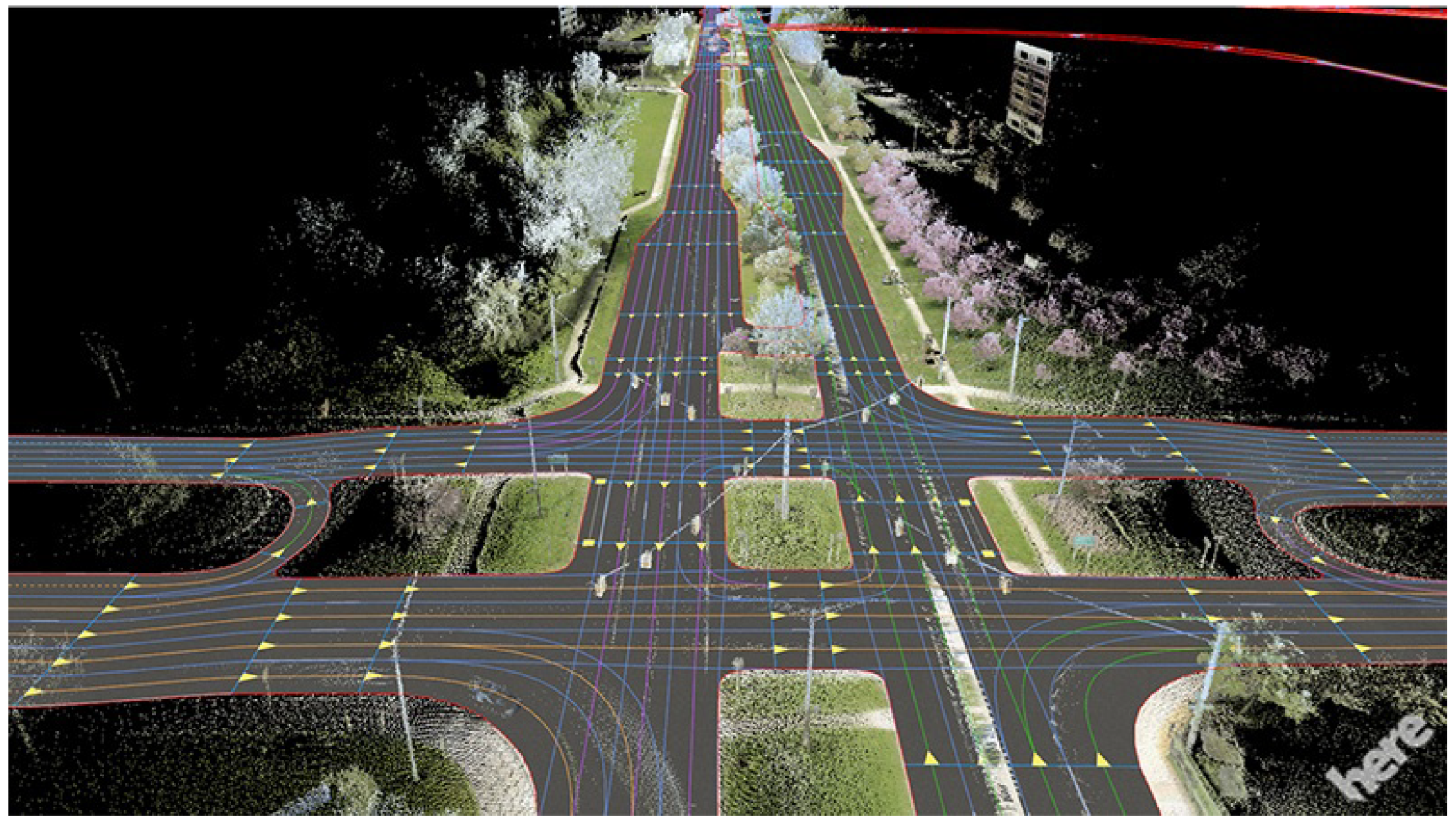

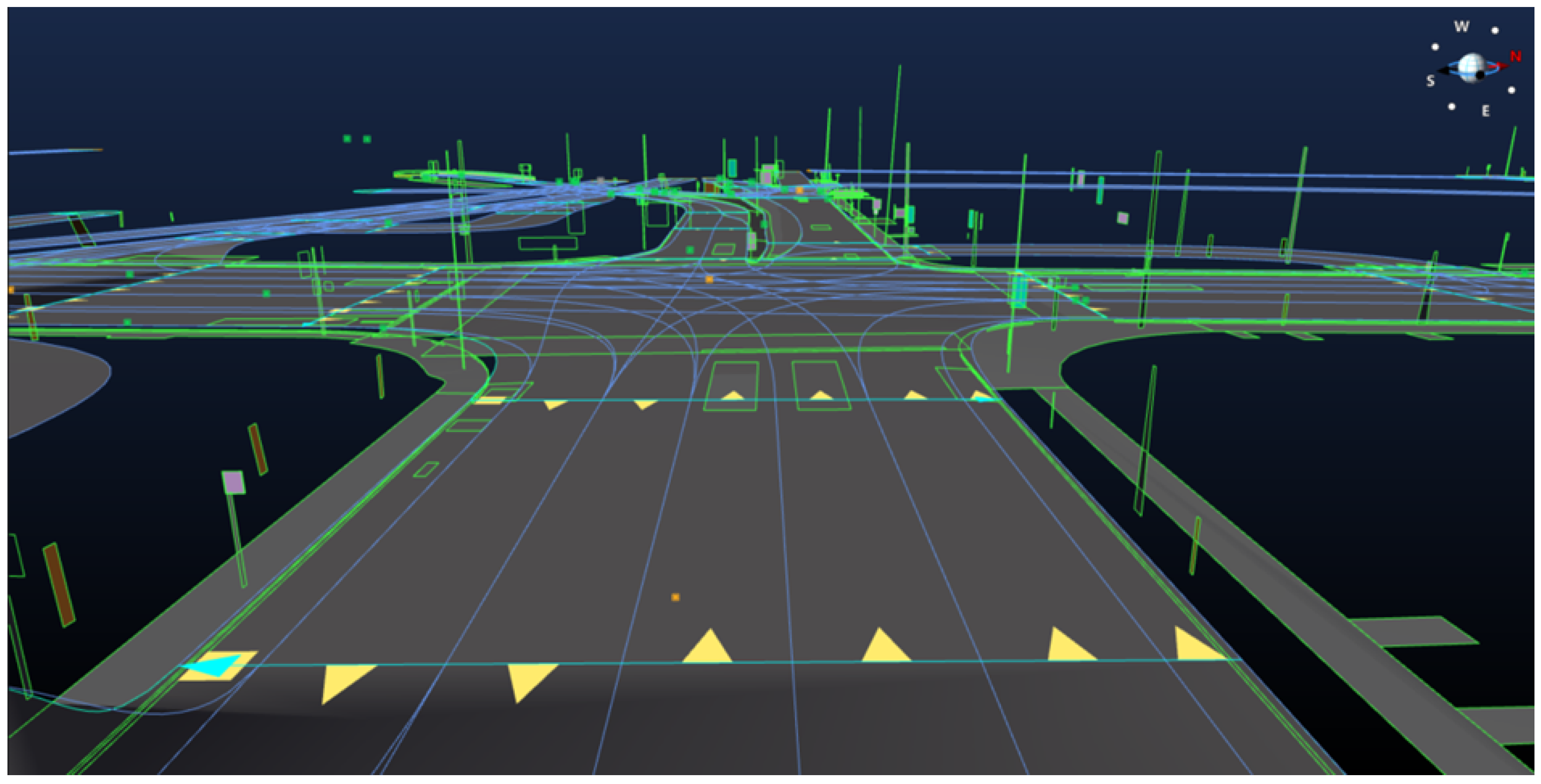

2. Basics of HD Maps

2.1. Topological Representation

2.2. Geometric Representation

2.3. Semantic Representation

2.4. Dynamic Elements

2.5. Feature-Based Map Layers

3. HD Map Generation Techniques

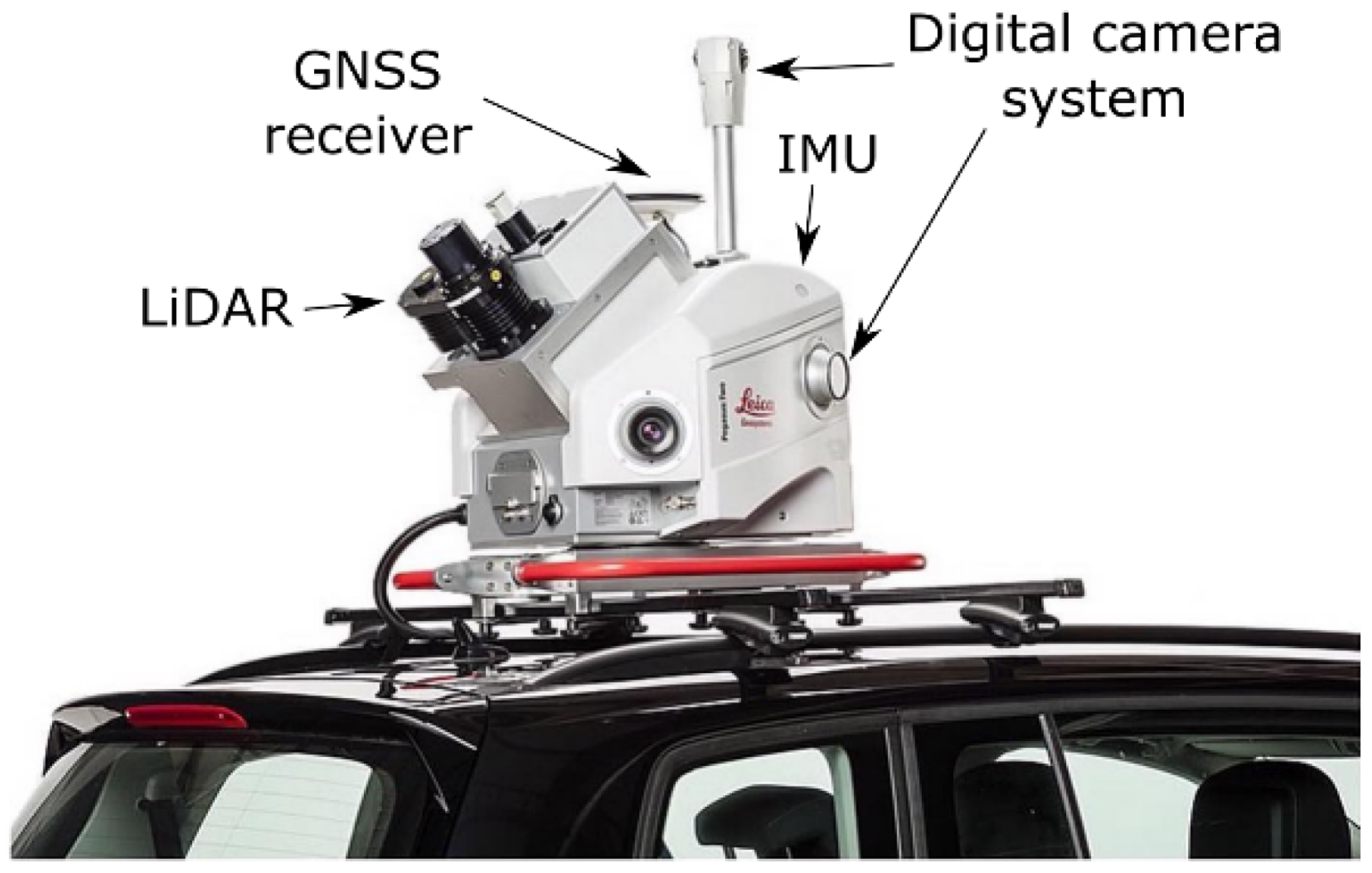

3.1. Data Collection

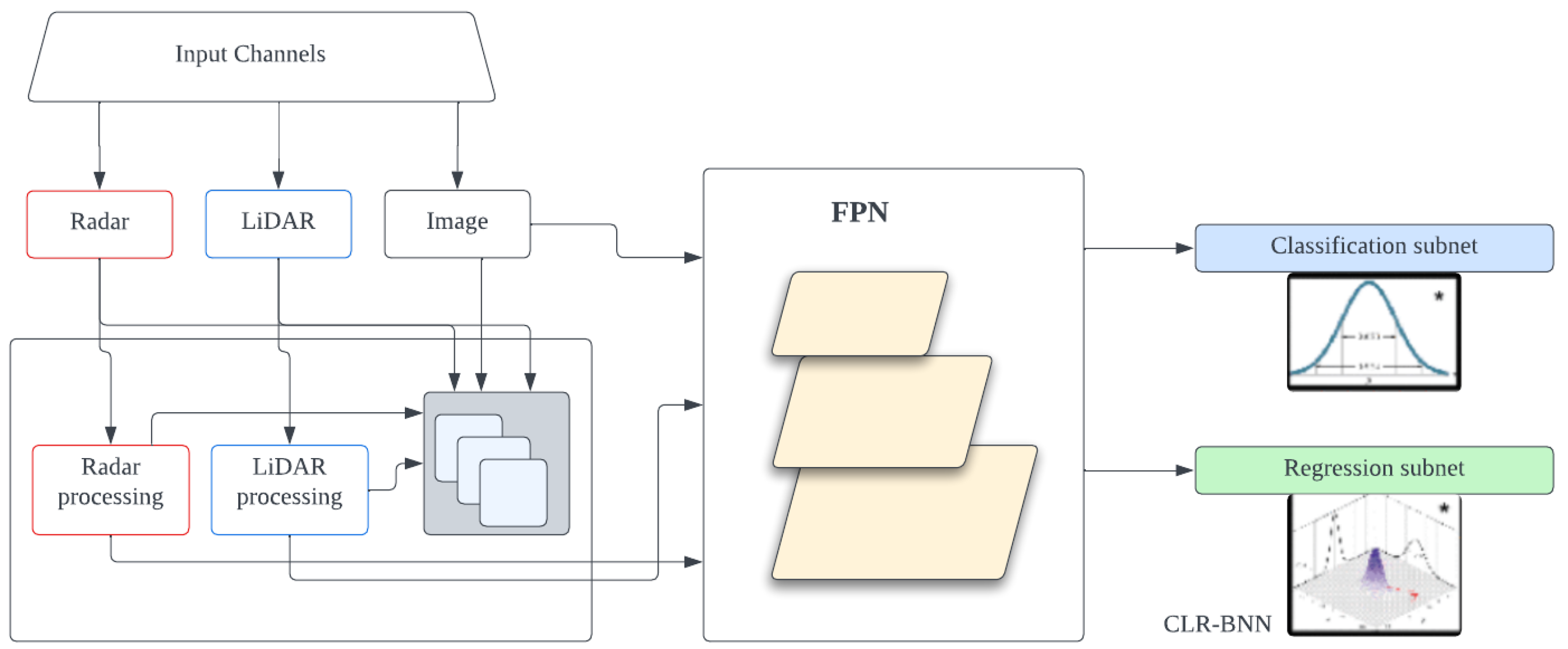

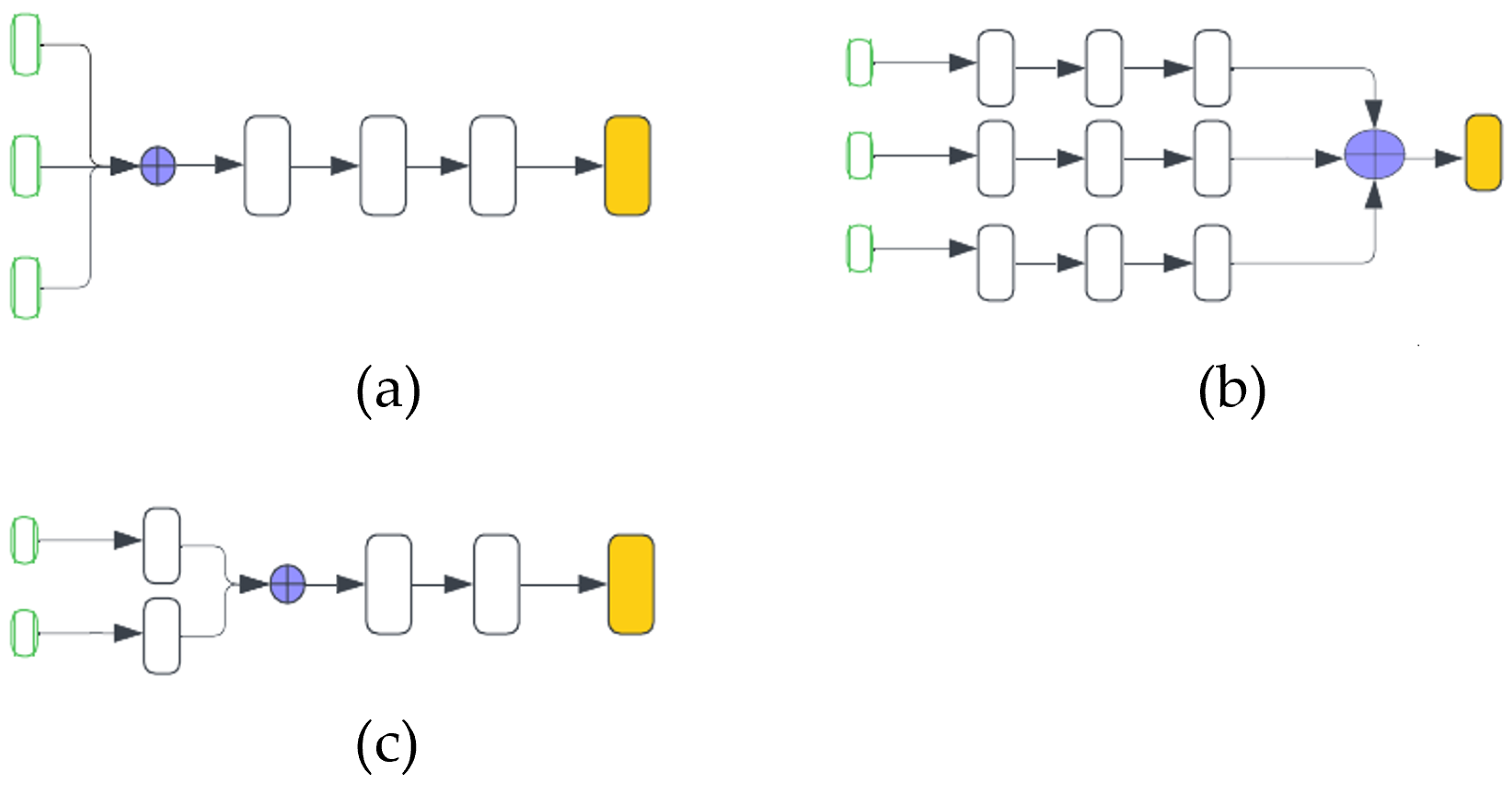

3.2. Sensor Fusion

3.3. Map Generation Algorithms

Point Cloud Registration Approaches

- Optimization-based approaches: These approaches involve two steps, correspondence and transformation. The correspondence step finds matching points between the point clouds being registered; accurate correspondences are crucial for determining how the clouds should be aligned. A common technique is nearest neighbor search, which finds, for each point in the source cloud, the closest point in the target cloud under a distance metric such as Euclidean distance. The transformation step then estimates the transformation that best aligns the source point cloud with the target point cloud. The transformation typically consists of a rotation and a translation, and in some formulations also a scaling. Optimization-based approaches minimize a cost function that quantifies the discrepancy between corresponding points in the two point clouds. The iterative closest point (ICP) algorithm is one of the most widely used optimization-based methods [49]. ICP alternates between the two steps, iteratively refining the transformation parameters until they converge, with the goal of optimally aligning the point clouds [50]. Numerous variants and improvements of ICP have been proposed over the years [51,52], advancing point cloud registration techniques. These algorithms have had a strong impact on the creation of highly accurate HD maps, which are essential for applications such as autonomous driving and robotics [48].

- Probabilistic-based approaches: While optimization-based approaches such as ICP have proven beneficial for point cloud registration, they can encounter limitations when the point clouds have limited overlap or occlusions. In such scenarios, ICP-derived algorithms may converge to local optima, leading to registration failure. To address these limitations, probabilistic registration algorithms based on statistical models have gained traction in recent years, particularly those leveraging Gaussian mixture models (GMMs) [49] and the coherent point drift (CPD) algorithm [53]. These methods incorporate uncertainty into the registration process by estimating the posterior distribution of the transformation parameters. GMM and CPD, along with their respective variants [54,55], are therefore particularly useful for noisy or uncertain data, as they provide probabilistic estimates of the registration results rather than a single deterministic solution.

- Feature-based approaches: Feature-based approaches depart from traditional optimization-based methods by leveraging deep learning techniques to extract robust feature correspondences. They identify distinctive key points or features in the point clouds, which serve as landmarks for establishing correspondences and facilitating registration. As highlighted in [47], these methodologies use deep neural networks as a feature extraction mechanism, aiming to derive highly distinctive features that enable precise registration; the SeqPolar method [19] is one example. While feature-based approaches provide accurate correspondence searching and precise registration, they also come with challenges: they typically require extensive training data to learn the feature representations, and their performance may degrade in unfamiliar scenes or environments that deviate significantly from the training data distribution.

- Deep learning techniques: Deep learning techniques, particularly convolutional neural networks (CNNs), attention-based models, and other advanced architectures, have emerged as powerful tools for point cloud registration. These methods learn feature representations directly from the point cloud data and capture complex patterns and relationships, enabling robust registration. Deep learning-based registration approaches fall into two main categories: end-to-end learning methods, in which the entire registration pipeline is learned from data, and hybrid approaches that combine deep learning with traditional techniques. Examples include deep closest point (DCP) [56] and DeepVCP [57]. DeepVCP, shown in Figure 8, is an end-to-end deep learning framework for 3D point cloud registration that has demonstrated superior performance compared with both optimization-based approaches [49,58] and probabilistic-based approaches [53]. By leveraging the representational power of deep networks, these methods have shown promising results, outperforming traditional techniques in various scenarios.

Point cloud registration also faces several practical challenges:

- Point cloud data collected from sensors usually contain outliers, noise, and missing data, which can affect the registration process.

- Point cloud registration algorithms often involve computationally intensive operations and require substantial computational resources, such as memory and processing power.

- When point clouds do not overlap or overlap only slightly, it is difficult for the registration process to find correspondences and to reconstruct the environment.

- Imprecise sensor calibration can also introduce errors into the registration process. The sensors involved in data collection need to be properly calibrated to ensure accuracy.

- Density differences in point clouds can result from differences between sensors or from variations across regions of the scene. For example, in regions where the point cloud is sparse, it can be difficult to find correspondences between points, leading to registration errors.
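The correspondence and transformation steps described above for optimization-based registration can be illustrated with a minimal point-to-point ICP sketch. It is a simplification under stated assumptions, not the implementation from [49]: points are 2D tuples rather than 3D LiDAR returns, the motion is rigid (rotation and translation, no scaling), nearest neighbors are found by brute force, and all function names (`nearest_neighbors`, `best_rigid_transform`, `apply_transform`, `icp_2d`) are hypothetical.

```python
import math

def nearest_neighbors(source, target):
    """Correspondence step: pair each source point with its closest target point."""
    return [min(target, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
            for p in source]

def best_rigid_transform(source, matched):
    """Transformation step: closed-form least-squares 2D rotation and translation."""
    n = len(source)
    sx = sum(p[0] for p in source) / n
    sy = sum(p[1] for p in source) / n
    mx = sum(q[0] for q in matched) / n
    my = sum(q[1] for q in matched) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(source, matched):
        ax, ay = px - sx, py - sy          # centered source point
        bx, by = qx - mx, qy - my          # centered matched point
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)         # angle minimizing the squared error
    c, s = math.cos(theta), math.sin(theta)
    tx = mx - (c * sx - s * sy)            # translation aligning the centroids
    ty = my - (s * sx + c * sy)
    return theta, tx, ty

def apply_transform(points, theta, tx, ty):
    """Rotate points by theta about the origin, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]

def icp_2d(source, target, max_iters=50, tol=1e-12):
    """Alternate correspondence and transformation until the residual stops changing."""
    current = list(source)
    prev_error = float("inf")
    for _ in range(max_iters):
        matched = nearest_neighbors(current, target)
        theta, tx, ty = best_rigid_transform(current, matched)
        current = apply_transform(current, theta, tx, ty)
        # Mean squared distance to the correspondences used in this iteration.
        error = sum((x - qx) ** 2 + (y - qy) ** 2
                    for (x, y), (qx, qy) in zip(current, matched)) / len(current)
        if abs(prev_error - error) < tol:
            break
        prev_error = error
    return current, error

# Illustrative data (assumed): a small target cloud and a slightly
# rotated and shifted copy of it used as the source.
target = [(0.0, 0.0), (2.0, 0.0), (4.0, 0.0), (4.0, 3.0), (0.0, 3.0), (2.0, 5.0)]
source = apply_transform(target, 0.1, 0.2, -0.1)
aligned, error = icp_2d(source, target)
print(f"mean squared residual after ICP: {error:.3e}")
```

The sketch works here because the initial misalignment is small, so every nearest neighbor is the true correspondence. With a poor initial alignment, limited overlap, or outliers, the same loop can lock onto wrong correspondences and settle in a local optimum, which is the failure mode discussed above. Practical 3D pipelines typically replace the brute-force search with a spatial index such as a k-d tree and estimate the rotation with an SVD-based solution.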

3.4. Feature Extraction for HD Map

4. Updating HD Maps

4.1. Importance of Regular Updates

4.2. Real-Time Data Sources

4.3. Automated Update Process

5. Challenges and Solutions

5.1. Environmental Challenges

5.2. Technical Challenges

5.3. Data Privacy and Security Concerns

6. Future Direction

6.1. Advances in Sensor Technologies

6.2. Integration of 5G and Edge Computing for Real-Time Updates

6.3. AI in HD Map Creation and Updating

6.4. Standardized HD Mapping Ecosystem

6.5. Autonomous Vehicles for Sustainability

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Delcker, J. The Man Who Invented the Self-Driving Car (in 1986). Available online: https://www.politico.eu/article/delf-driving-car-born-1986-ernst-dickmanns-mercedes/ (accessed on 14 February 2024).

- Chen, L.; Li, Y.; Huang, C.; Li, B.; Xing, Y.; Tian, D.; Li, L.; Hu, Z.; Na, X.; Li, Z.; et al. Milestones in Autonomous Driving and Intelligent Vehicles: Survey of Surveys. IEEE Trans. Intell. Veh. 2023, 8, 1046–1056. [Google Scholar] [CrossRef]

- Ziegler, J.; Bender, P.; Schreiber, M.; Lategahn, H.; Strauss, T.; Stiller, C.; Dang, T.; Franke, U.; Appenrodt, N.; Keller, C.G.; et al. Making Bertha Drive—An Autonomous Journey on a Historic Route. IEEE Intell. Transp. Syst. Mag. 2014, 6, 8–20. [Google Scholar] [CrossRef]

- Wong, K.; Gu, Y.; Kamijo, S. Mapping for Autonomous Driving: Opportunities and Challenges. IEEE Intell. Transp. Syst. Mag. 2021, 13, 91–106. [Google Scholar] [CrossRef]

- Bao, Z.; Hossain, S.; Lang, H.; Lin, X. A review of high-definition map creation methods for autonomous driving. Eng. Appl. Artif. Intell. 2023, 122, 106125. [Google Scholar] [CrossRef]

- Elghazaly, G.; Frank, R.; Harvey, S.; Safko, S. High-Definition Maps: Comprehensive Survey, Challenges, and Future Perspectives. IEEE Open J. Intell. Transp. Syst. 2023, 4, 527–550. [Google Scholar] [CrossRef]

- Dahlström, T. The Road to Everywhere: Are HD Maps for Autonomous Driving Sustainable? Available online: https://www.autonomousvehicleinternational.com/features/the-road-to-everywhere-are-hd-maps-for-autonomous-driving-sustainable.html (accessed on 14 February 2024).

- Ebrahimi Soorchaei, B.; Razzaghpour, M.; Valiente, R.; Raftari, A.; Fallah, Y.P. High-Definition Map Representation Techniques for Automated Vehicles. Electronics 2022, 11, 3374. [Google Scholar] [CrossRef]

- Bijelic, M.; Gruber, T.; Mannan, F.; Kraus, F.; Ritter, W.; Dietmayer, K.; Heide, F. Seeing through fog without seeing fog: Deep multimodal sensor fusion in unseen adverse weather. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11682–11692. [Google Scholar]

- Apollo. Available online: https://github.com/ApolloAuto/apollo (accessed on 6 June 2024).

- Level5, W.P. Rethinking Maps for Self-Driving. Available online: https://medium.com/wovenplanetlevel5/https-medium-com-lyftlevel5-rethinking-maps-for-self-driving-a147c24758d6 (accessed on 14 February 2024).

- Bučko, B.; Zábovská, K.; Ristvej, J.; Jánošíková, M. HD Maps and Usage of Laser Scanned Data as a Potential Map Layer. In Proceedings of the 2021 44th International Convention on Information, Communication and Electronic Technology (MIPRO), Opatija, Croatia, 27 September–1 October 2021; pp. 1670–1675. [Google Scholar] [CrossRef]

- Seif, H.G.; Hu, X. Autonomous Driving in the iCity—HD Maps as a Key Challenge of the Automotive Industry. Engineering 2016, 2, 159–162. [Google Scholar] [CrossRef]

- Bonetti, P. HERE Introduces HD LIVE Map to Show the Path to Highly Automated Driving. Available online: https://www.here.com/learn/blog/here-introduces-hd-live-map-to-show-the-path-to-highly-automated-driving (accessed on 1 March 2024).

- Lógó, J.M.; Barsi, A. The Role of Topology in High-Definition Maps for Autonomous Driving. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, XLIII-B4-2022, 383–388. [Google Scholar] [CrossRef]

- HERE. Introduction to Mapping Concepts—Localization Model. Available online: https://www.here.com/docs/bundle/introduction-to-mapping-concepts-user-guide/page/topics/model-local.html (accessed on 14 February 2024).

- TomTom. TomTom HD Maps. Available online: https://www.tomtom.com/products/hd-map/ (accessed on 14 February 2024).

- Garg, S.; Milford, M. Seqnet: Learning descriptors for sequence-based hierarchical place recognition. IEEE Robot. Autom. Lett. 2021, 6, 4305–4312. [Google Scholar] [CrossRef]

- Tao, Q.; Hu, Z.; Zhou, Z.; Xiao, H.; Zhang, J. SeqPolar: Sequence matching of polarized LiDAR map with HMM for intelligent vehicle localization. IEEE Trans. Veh. Technol. 2022, 71, 7071–7083. [Google Scholar] [CrossRef]

- Poggenhans, F.; Pauls, J.H.; Janosovits, J.; Orf, S.; Naumann, M.; Kuhnt, F.; Mayr, M. Lanelet2: A high-definition map framework for the future of automated driving. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1672–1679. [Google Scholar]

- Bender, P.; Ziegler, J.; Stiller, C. Lanelets: Efficient map representation for autonomous driving. In Proceedings of the 2014 IEEE Intelligent Vehicles Symposium Proceedings, Ypsilanti, MI, USA, 8–11 June 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 420–425. [Google Scholar]

- ASAM OpenDRIVE. Available online: https://www.asam.net/standards/detail/opendrive/ (accessed on 14 February 2024).

- Gran, C.W. HD-Maps in Autonomous Driving. Master’s Thesis, Norwegian University of Science and Technology, Trondheim, Norway, 2019. [Google Scholar]

- Elhashash, M.; Albanwan, H.; Qin, R. A Review of Mobile Mapping Systems: From Sensors to Applications. Sensors 2022, 22, 4262. [Google Scholar] [CrossRef] [PubMed]

- Houston, J.L.; Zuidhof, G.C.A.; Bergamini, L.; Ye, Y.; Jain, A.; Omari, S.; Iglovikov, V.I.; Ondruska, P. One Thousand and One Hours: Self-driving Motion Prediction Dataset. In Proceedings of the 4th Conference on Robot Learning, Cambridge, MA, USA, 16–18 November 2020; pp. 409–418. [Google Scholar]

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The kitti dataset. Int. J. Robot. Res. 2013, 32, 1231–1237. [Google Scholar] [CrossRef]

- Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. nuScenes: A multimodal dataset for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11621–11631. [Google Scholar]

- Chang, M.F.; Lambert, J.; Sangkloy, P.; Singh, J.; Bak, S.; Hartnett, A.; Wang, D.; Carr, P.; Lucey, S.; Ramanan, D.; et al. Argoverse: 3D Tracking and Forecasting With Rich Maps. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 8740–8749. [Google Scholar] [CrossRef]

- Kim, C.; Cho, S.; Sunwoo, M.; Resende, P.; Bradaï, B.; Jo, K. Updating point cloud layer of high definition (hd) map based on crowd-sourcing of multiple vehicles installed lidar. IEEE Access 2021, 9, 8028–8046. [Google Scholar] [CrossRef]

- Fayyad, J.; Jaradat, M.A.; Gruyer, D.; Najjaran, H. Deep Learning Sensor Fusion for Autonomous Vehicle Perception and Localization: A Review. Sensors 2020, 20, 4220. [Google Scholar] [CrossRef] [PubMed]

- Wang, Z.; Wu, Y.; Niu, Q. Multi-Sensor Fusion in Automated Driving: A Survey. IEEE Access 2020, 8, 2847–2868. [Google Scholar] [CrossRef]

- Wang, X.; Li, K.; Chehri, A. Multi-Sensor Fusion Technology for 3D Object Detection in Autonomous Driving: A Review. IEEE Trans. Intell. Transp. Syst. 2024, 25, 1148–1165. [Google Scholar] [CrossRef]

- Yeong, D.J.; Velasco-Hernandez, G.; Barry, J.; Walsh, J. Sensor and Sensor Fusion Technology in Autonomous Vehicles: A Review. Sensors 2021, 21, 2140. [Google Scholar] [CrossRef] [PubMed]

- Zhong, H.; Wang, H.; Wu, Z.; Zhang, C.; Zheng, Y.; Tang, T. A survey of LiDAR and camera fusion enhancement. Procedia Comput. Sci. 2021, 183, 579–588. [Google Scholar] [CrossRef]

- Chen, X.; Ma, H.; Wan, J.; Li, B.; Xia, T. Multi-view 3d object detection network for autonomous driving. In Proceedings of the IEEE conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1907–1915. [Google Scholar]

- Shahian Jahromi, B.; Tulabandhula, T.; Cetin, S. Real-Time Hybrid Multi-Sensor Fusion Framework for Perception in Autonomous Vehicles. Sensors 2019, 19, 4357. [Google Scholar] [CrossRef]

- Liang, M.; Yang, B.; Chen, Y.; Hu, R.; Urtasun, R. Multi-task multi-sensor fusion for 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 7337–7345. [Google Scholar]

- Thakur, A.; Rajalakshmi, P. LiDAR and Camera Raw Data Sensor Fusion in Real-Time for Obstacle Detection. In Proceedings of the 2023 IEEE Sensors Applications Symposium (SAS), Ottawa, ON, Canada, 18–20 July 2023; pp. 1–6. [Google Scholar] [CrossRef]

- Qi, C.R.; Liu, W.; Wu, C.; Su, H.; Guibas, L.J. Frustum pointnets for 3d object detection from rgb-d data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 918–927. [Google Scholar]

- Xiao, Y.; Liu, Y.; Luan, K.; Cheng, Y.; Chen, X.; Lu, H. Deep LiDAR-Radar-Visual Fusion for Object Detection in Urban Environments. Remote Sens. 2023, 15, 4433. [Google Scholar] [CrossRef]

- Ravindran, R.; Santora, M.J.; Jamali, M.M. Camera, LiDAR, and Radar Sensor Fusion Based on Bayesian Neural Network (CLR-BNN). IEEE Sens. J. 2022, 22, 6964–6974. [Google Scholar] [CrossRef]

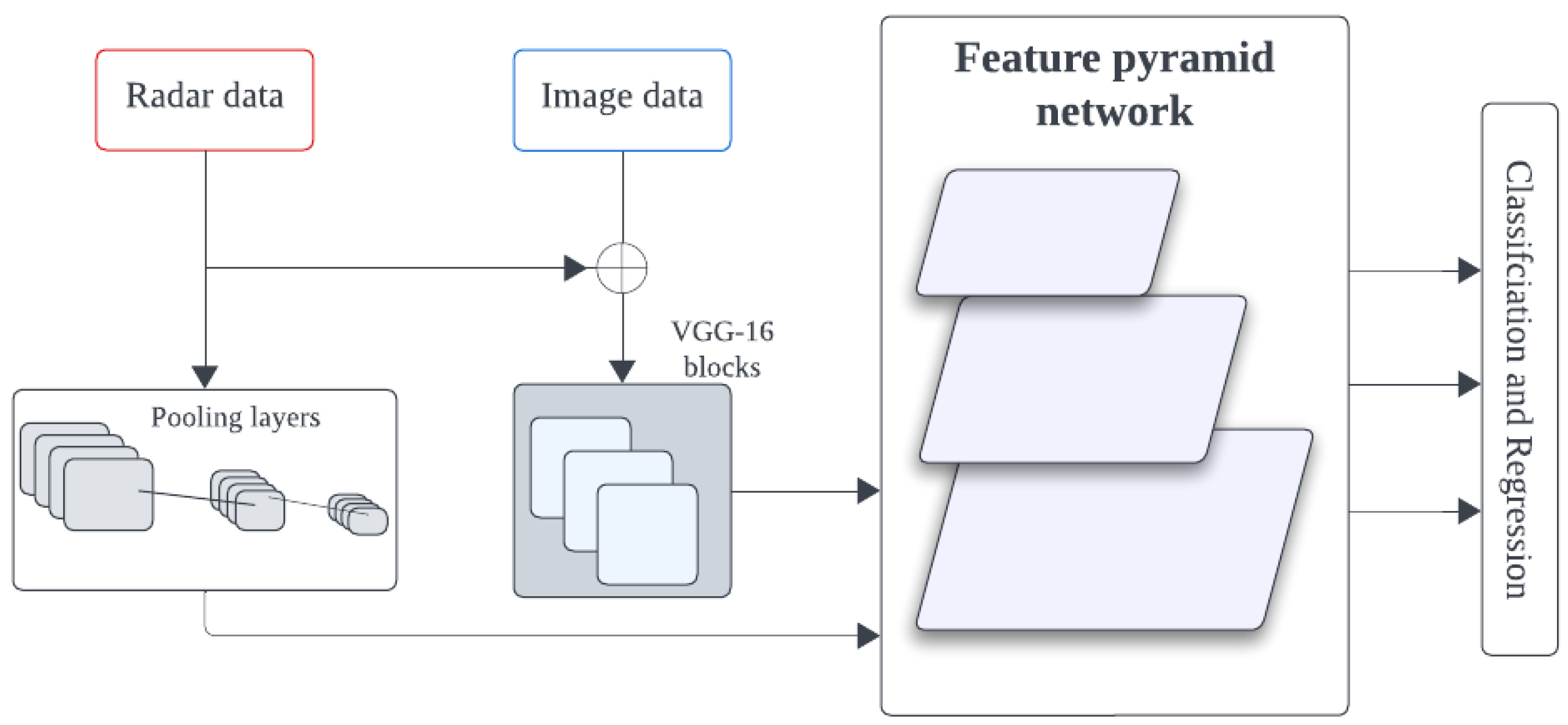

- Nobis, F.; Geisslinger, M.; Weber, M.; Betz, J.; Lienkamp, M. A Deep Learning-based Radar and Camera Sensor Fusion Architecture for Object Detection. In Proceedings of the 2019 Sensor Data Fusion: Trends, Solutions, Applications (SDF), Bonn, Germany, 15–17 October 2019; pp. 1–7. [Google Scholar] [CrossRef]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788. [Google Scholar] [CrossRef]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Lecture Notes in Computer Science; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 21–37. [Google Scholar] [CrossRef]

- Zhou, Y.; Tuzel, O. VoxelNet: End-to-End Learning for Point Cloud Based 3D Object Detection. arXiv 2017, arXiv:1711.06396. Available online: http://arxiv.org/abs/1711.06396 (accessed on 13 April 2024).

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. arXiv 2017, arXiv:1612.00593. Available online: http://arxiv.org/abs/1612.00593 (accessed on 13 April 2024).

- Huang, X.; Mei, G.; Zhang, J.; Abbas, R. A comprehensive survey on point cloud registration. arXiv 2021, arXiv:2103.02690. Available online: http://arxiv.org/abs/2103.02690 (accessed on 13 April 2024).

- Chang, M.F.; Dong, W.; Mangelson, J.; Kaess, M.; Lucey, S. Map Compressibility Assessment for LiDAR Registration. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 5560–5567. [Google Scholar] [CrossRef]

- Besl, P.; McKay, N.D. A method for registration of 3-D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256. [Google Scholar] [CrossRef]

- Zhang, Z. Iterative point matching for registration of free-form curves and surfaces. Int. J. Comput. Vis. 1994, 13, 119–152. [Google Scholar] [CrossRef]

- Zhang, J.; Yao, Y.; Deng, B. Fast and Robust Iterative Closest Point. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 3450–3466. [Google Scholar] [CrossRef]

- Wang, F.; Zhao, Z. A survey of iterative closest point algorithm. In Proceedings of the 2017 Chinese Automation Congress (CAC), Jinan, China, 20–22 October 2017; pp. 4395–4399. [Google Scholar] [CrossRef]

- Myronenko, A.; Song, X. Point Set Registration: Coherent Point Drift. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 2262–2275. [Google Scholar] [CrossRef]

- Liu, Z.; Yu, C.; Qiu, F.; Liu, Y. A Fast Coherent Point Drift Method for Rigid 3D Point Cloud Registration. In Proceedings of the 2023 42nd Chinese Control Conference (CCC), Tianjin, China, 24–26 July 2023; pp. 7776–7781. [Google Scholar] [CrossRef]

- Gao, W.; Tedrake, R. Filterreg: Robust and efficient probabilistic point-set registration using gaussian filter and twist parameterization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 11095–11104. [Google Scholar]

- Wang, Y.; Solomon, J. Deep Closest Point: Learning Representations for Point Cloud Registration. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3522–3531. [Google Scholar] [CrossRef]

- Lu, W.; Wan, G.; Zhou, Y.; Fu, X.; Yuan, P.; Song, S. DeepVCP: An End-to-End Deep Neural Network for Point Cloud Registration. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 12–21. [Google Scholar] [CrossRef]

- Segal, A.; Haehnel, D.; Thrun, S. Generalized-icp. In Proceedings of the Robotics: Science and Systems V, Seattle, WA, USA, 28 June–1 July 2009; pp. 21–31. [Google Scholar] [CrossRef]

- Zhou, J.; Guo, Y.; Bian, Y.; Huang, Y.; Li, B. Lane Information Extraction for High Definition Maps Using Crowdsourced Data. IEEE Trans. Intell. Transp. Syst. 2023, 24, 7780–7790. [Google Scholar] [CrossRef]

- Homayounfar, N.; Liang, J.; Ma, W.C.; Fan, J.; Wu, X.; Urtasun, R. DAGMapper: Learning to Map by Discovering Lane Topology. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 2911–2920. [Google Scholar] [CrossRef]

- Levi, D.; Garnett, N.; Fetaya, E.; Herzlyia, I. Stixelnet: A deep convolutional network for obstacle detection and road segmentation. In Proceedings of the BMVC, Swansea, UK, 7–10 September 2015; Volume 1, p. 4. [Google Scholar]

- Mohan, R. Deep Deconvolutional Networks for Scene Parsing. arXiv 2014, arXiv:1411.4101. Available online: http://arxiv.org/abs/1411.4101 (accessed on 13 April 2024).

- Ghafoorian, M.; Nugteren, C.; Baka, N.; Booij, O.; Hofmann, M. EL-GAN: Embedding Loss Driven Generative Adversarial Networks for Lane Detection. arXiv 2018, arXiv:1806.05525. Available online: http://arxiv.org/abs/1806.05525 (accessed on 13 April 2024).

- Plachetka, C.; Sertolli, B.; Fricke, J.; Klingner, M.; Fingscheidt, T. DNN-Based Map Deviation Detection in LiDAR Point Clouds. IEEE Open J. Intell. Transp. Syst. 2023, 4, 580–601. [Google Scholar] [CrossRef]

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767. Available online: http://arxiv.org/abs/1804.02767 (accessed on 13 April 2024).

- Máttyus, G.; Luo, W.; Urtasun, R. DeepRoadMapper: Extracting Road Topology from Aerial Images. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 3458–3466. [Google Scholar] [CrossRef]

- Bastani, F.; He, S.; Abbar, S.; Alizadeh, M.; Balakrishnan, H.; Chawla, S.; Madden, S.; DeWitt, D. RoadTracer: Automatic Extraction of Road Networks from Aerial Images. arXiv 2018, arXiv:1802.03680. Available online: http://arxiv.org/abs/1802.03680 (accessed on 13 April 2024).

- Ventura, C.; Pont-Tuset, J.; Caelles, S.; Maninis, K.K.; Gool, L.V. Iterative Deep Learning for Road Topology Extraction. arXiv 2018, arXiv:1808.09814. Available online: http://arxiv.org/abs/1808.09814 (accessed on 13 April 2024).

- Liu, R.; Wang, J.; Zhang, B. High Definition Map for Automated Driving: Overview and Analysis. J. Navig. 2020, 73, 324–341. [Google Scholar] [CrossRef]

- TaŞ, M.O.; Yavuz, S.; Yazici, A. Updating HD-Maps for Autonomous Transfer Vehicles in Smart Factories. In Proceedings of the 2018 6th International Conference on Control Engineering & Information Technology (CEIT), Istanbul, Turkey, 25–27 October 2018; pp. 1–5. [Google Scholar] [CrossRef]

- Heo, M.; Kim, J.; Kim, S. HD Map Change Detection with Cross-Domain Deep Metric Learning. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; pp. 10218–10224. [Google Scholar] [CrossRef]

- Jo, K.; Kim, C.; Sunwoo, M. Simultaneous Localization and Map Change Update for the High Definition Map-Based Autonomous Driving Car. Sensors 2018, 18, 3145. [Google Scholar] [CrossRef]

- Zhang, J.; Singh, S. LOAM: Lidar odometry and mapping in real-time. In Proceedings of the Robotics: Science and Systems, Berkeley, CA, USA, 12–16 July 2014; Volume 2, pp. 1–9. [Google Scholar]

- Shan, T.; Englot, B. LeGO-LOAM: Lightweight and Ground-Optimized Lidar Odometry and Mapping on Variable Terrain. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 4758–4765. [Google Scholar] [CrossRef]

- Chen, X.; Milioto, A.; Palazzolo, E.; Giguere, P.; Behley, J.; Stachniss, C. Suma++: Efficient lidar-based semantic slam. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 4530–4537. [Google Scholar]

- Mur-Artal, R.; Montiel, J.M.; Tardos, J.D. ORB-SLAM: A Versatile and Accurate Monocular SLAM System Mur-Artal, Raul and Montiel, Jose Maria Martinez and Tardos. IEEE Trans. Robot. 2015, 31, 1147–1163. [Google Scholar]

- Engel, J.; Koltun, V.; Cremers, D. Direct sparse odometry. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 611–625. [Google Scholar] [CrossRef]

- Shan, T.; Englot, B.; Meyers, D.; Wang, W.; Ratti, C.; Rus, D. LIO-SAM: Tightly-coupled Lidar Inertial Odometry via Smoothing and Mapping. arXiv 2020, arXiv:2007.00258. Available online: http://arxiv.org/abs/2007.00258 (accessed on 13 April 2024).

- Lin, J.; Zhang, F. R 3 LIVE: A Robust, Real-time, RGB-colored, LiDAR-Inertial-Visual tightly-coupled state Estimation and mapping package. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 10672–10678. [Google Scholar]

- Zheng, C.; Zhu, Q.; Xu, W.; Liu, X.; Guo, Q.; Zhang, F. FAST-LIVO: Fast and tightly-coupled sparse-direct LiDAR-inertial-visual odometry. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 4003–4009. [Google Scholar]

- Xu, W.; Zhang, F. FAST-LIO: A Fast, Robust LiDAR-Inertial Odometry Package by Tightly-Coupled Iterated Kalman Filter. IEEE Robot. Autom. Lett. 2021, 6, 3317–3324. [Google Scholar] [CrossRef]

- Xu, W.; Cai, Y.; He, D.; Lin, J.; Zhang, F. Fast-lio2: Fast direct lidar-inertial odometry. IEEE Trans. Robot. 2022, 38, 2053–2073. [Google Scholar] [CrossRef]

- Le Hong, Z.; Zimmerman, N. Air quality and greenhouse gas implications of autonomous vehicles in Vancouver, Canada. Transp. Res. Part D Transp. Environ. 2021, 90, 102676. [Google Scholar] [CrossRef]

| Layer | Bertha Drive | TomTom | HERE | Lyft Level 5 |
|---|---|---|---|---|
| 1 | Road network | Navigation data | HD road | Base map |
| 2 | Lane level map | Planning data | HD lanes | Geometric map |
| 3 | Landmarks/Road marking map | HD localization | HD localization | Semantic map |
| 4 | - | - | - | Map Priors layer |
| 5 | - | - | - | Real-time layer |

| Format | Description | File Format | Representations |
|---|---|---|---|
| OpenDRIVE | Standard for logical description of road networks | XML | Geometric primitives |
| Lanelet2 | HD map format for autonomous driving | XML, OSM | Points |
| NDS | Global standard for automotive map data | Binary | Vector data |

| Approach | Advantages | Disadvantages |
|---|---|---|
| Open-source dataset | Easily accessible | Dataset might be specific to some area |
| | Saves time | Not actively updated |
| Self-collected dataset | Well-fitted for specific research | Costly compared with using open-source datasets |
| | | Time-consuming |
| Crowdsourcing | Covers a wide area | High cost |
| | Data continuously updated | Data preprocessing and labeling could be a difficult task |

| Factors | LiDAR | Camera | Radar |
|---|---|---|---|
| Accuracy | Higher accuracy in measuring distances and capturing 3D point cloud data | Lower accuracy in depth perception as compared to LiDAR | Good accuracy in detecting objects even in adverse weather conditions |
| Range | Shorter range | Limited range | Longer range |
| Resolution | High spatial resolution | High resolution images | Lower resolution |
| Environmental Suitability | Affected by adverse weather conditions such as rain, snow, or fog | Affected by changes in lighting conditions, such as glare and low visibility, reflection | Less affected by environmental factors |
| Cost | Expensive | Affordable compared to LiDAR and Radar | Relatively cost-effective compared to LiDAR |
| Power Consumption | Higher power consumption | Lower power consumption | Moderate power consumption |
| Lane Detection | Less contribution | Very effective | Less contribution |

| Approaches | Advantages | Limitations |
|---|---|---|
| Optimization-based | Well-established methods | Sensitive to initial alignment errors |
| | Generally computationally efficient | May struggle with noise and outliers |
| | Effective for rigid and affine transformations | May require manual parameter tuning |
| Probabilistic-based | Can handle uncertain or noisy data | Computational complexity may be high |
| | Provides probabilistic estimates of registration results | May require significant training data |
| | | Performance may degrade in unfamiliar scenes |
| Feature-based | Robust feature correspondence searching | Dependence on feature extraction quality |
| | Facilitates precise registration outcomes | Requires extensive training data |
| | | Performance may degrade in unfamiliar scenes with significant distribution differences |
| Deep learning-based | Capable of learning complex patterns and relationships | May require large amounts of training data |
| | Can improve robustness and accuracy | Training and inference may be computationally expensive |
| | Can handle non-linear transformations | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Asrat, K.T.; Cho, H.-J. A Comprehensive Survey on High-Definition Map Generation and Maintenance. ISPRS Int. J. Geo-Inf. 2024, 13, 232. https://doi.org/10.3390/ijgi13070232
