This section is divided into two parts. The first part introduces the r.sun solar radiation model and then delves into the conceptualization and key technologies of the 3D extension. The second part is focused on the software architecture and business logic of Solar3D.

2.1. The Solar Radiation Model

The r.sun model breaks the global solar radiation into three components: the beam (direct) radiation, the diffuse radiation and the reflected radiation [2]. The beam irradiation is usually the largest component and the only one that accounts for the direct shadowing effect, which is a major factor determining the accessibility of solar energy in urban environments. The clear-sky beam irradiance on a horizontal surface, $B_{hc}$ [W·m⁻²], which is the solar energy that traverses the atmosphere to reach a horizontal surface, is derived using Equation (1) [2]:

$$B_{hc} = G_0 \exp\{-0.8662\, T_{LK}\, m\, \delta_R(m)\}\, \sin h_0 \qquad (1)$$

where $T_{LK}$, $G_0$, $m$, $\delta_R(m)$ and $h_0$ are, respectively, the air mass 2 Linke turbidity factor, the extraterrestrial irradiance normal to the solar beam, the relative optical air mass, the Rayleigh optical thickness at air mass $m$ and the solar altitude. $B_{hc}$ is converted into the clear-sky beam irradiance on an inclined surface, $B_{ic}$ [W·m⁻²], using Equation (2) [2]:

$$B_{ic} = B_{hc}\, M_{shadow}\, \frac{\sin \delta_{exp}}{\sin h_0} \qquad (2)$$

where $M_{shadow}$ is the shadowing effect determined by the solar vector $V_{sun}$ and the shadow-casting objects in the scene. The solar vector $V_{sun}$ is determined by the solar azimuth angle (θ) and the solar altitude angle (φ). $\delta_{exp}$ is the solar incidence angle measured between the sun and an inclined surface described by its slope and aspect angles. $M_{shadow}$ is a binary shadow mask that returns 0 when the direct beam of the sun is blocked and 1 otherwise. When applying the r.sun model to 3D-city models instead of 2D raster maps, a major technical challenge is to calculate the shadow mask accurately and rapidly for each time step.
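The beam component described above can be illustrated with a short Python sketch. It assumes the standard r.sun formulation of Equations (1) and (2), with the shadow mask applied as a multiplicative factor. The expressions for the relative optical air mass and the Rayleigh optical thickness are taken from the r.sun documentation and simplified here (no refraction or elevation correction); all function names are hypothetical and are not part of Solar3D.

```python
import math


def relative_air_mass(h0_deg):
    """Relative optical air mass at sea level (simplified: no refraction
    or elevation correction); h0_deg is the solar altitude in degrees."""
    return 1.0 / (math.sin(math.radians(h0_deg))
                  + 0.50572 * (h0_deg + 6.07995) ** -1.6364)


def rayleigh_optical_thickness(m):
    """Rayleigh optical thickness delta_R(m) as a function of air mass m."""
    if m <= 20.0:
        return 1.0 / (6.6296 + 1.7513 * m - 0.1202 * m ** 2
                      + 0.0065 * m ** 3 - 0.00013 * m ** 4)
    return 1.0 / (10.4 + 0.718 * m)


def beam_horizontal(g0, t_lk, h0_deg):
    """Clear-sky beam irradiance on a horizontal surface, Equation (1)."""
    if h0_deg <= 0.0:
        return 0.0  # sun at or below the horizon
    m = relative_air_mass(h0_deg)
    attenuation = math.exp(-0.8662 * t_lk * m * rayleigh_optical_thickness(m))
    return g0 * attenuation * math.sin(math.radians(h0_deg))


def beam_inclined(b_hc, h0_deg, delta_exp_deg, m_shadow):
    """Clear-sky beam irradiance on an inclined surface, Equation (2).
    m_shadow is the binary shadow mask (0 = blocked, 1 = sunlit)."""
    if h0_deg <= 0.0 or delta_exp_deg <= 0.0:
        return 0.0  # sun below the horizon or behind the surface
    return (b_hc * m_shadow * math.sin(math.radians(delta_exp_deg))
            / math.sin(math.radians(h0_deg)))
```

For example, `beam_horizontal(1367.0, 3.0, 45.0)` evaluates Equation (1) for an illustrative extraterrestrial irradiance of 1367 W·m⁻² and a Linke turbidity factor of 3, and passing `m_shadow=0` to `beam_inclined` removes the beam component for a shaded time step.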

Ray casting is a conventional and accurate shading evaluation method [4]. When performing ray casting, a ray oriented in the target direction is tested for intersection against all triangles in the 3D scene; therefore, this method is computationally intensive. Computation performance is especially critical for calculating long-duration irradiation with high temporal resolution. For example, when calculating annual solar irradiation with a temporal resolution of 10 min, a total of 8760 × 6 = 52,560 rays need to be cast per location, one for each time step, to evaluate the shading; moreover, the time cost of casting a ray is directly correlated with the geometric complexity of the scene.
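To make the cost argument concrete, the following Python sketch evaluates a shadow mask by brute-force ray casting. It uses the Möller-Trumbore ray-triangle intersection test, a common choice that is assumed here for illustration; the text does not specify which intersection algorithm a given ray caster uses, and all names are hypothetical.

```python
EPS = 1e-9


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_hits_triangle(origin, direction, triangle):
    """Moller-Trumbore test: True if the ray hits the triangle in front of origin."""
    v0, v1, v2 = triangle
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < EPS:
        return False  # ray is parallel to the triangle plane
    inv = 1.0 / det
    t_vec = sub(origin, v0)
    u = dot(t_vec, p) * inv
    if u < 0.0 or u > 1.0:
        return False
    q = cross(t_vec, e1)
    v = dot(direction, q) * inv
    if v < 0.0 or u + v > 1.0:
        return False
    return dot(e2, q) * inv > EPS  # distance along the ray must be positive


def shadow_mask(origin, sun_direction, triangles):
    """Return 0 if any triangle blocks the ray towards the sun, else 1."""
    blocked = any(ray_hits_triangle(origin, sun_direction, t) for t in triangles)
    return 0 if blocked else 1


# One ray per time step: a year at 10 min resolution.
RAYS_PER_YEAR = 8760 * 6
```

Each call to `shadow_mask` visits every triangle, so without an acceleration structure the annual cost per location grows as `RAYS_PER_YEAR` times the triangle count.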

An alternative approach to evaluating shading is to produce a shadow map from the solar position for each time step [8]. Shadow mapping can be easily implemented on the GPU to provide real-time rendering. However, shadow mapping is susceptible to various quality issues associated with perspective aliasing, projective aliasing and insufficient depth precision [9]. Moreover, when performing time-resolved shading evaluation with shadow maps for a specific location, the shadow mask of each time step needs to be evaluated at a different image-space location on a separate shadow map. Therefore, the results could be subject to notable spatiotemporal uncertainty.

Hemispherical photography is another approach to evaluating shading and estimating solar irradiation [11]. In hemispherical photography, a fisheye camera with a 360-degree horizontal view and a 180-degree vertical view is placed on the ground looking upward, producing a hemispherical photograph in which all sky directions are simultaneously visible. As the visibility in all sky directions is preserved in the resulting hemispherical photograph, it can be used to determine whether the direct beam of the sun is obstructed at any given time of the year.

One of the goals of Solar3D is to provide accurate pointwise estimates of hourly to annual irradiation with high temporal resolution. Having reviewed the three main shading evaluation techniques with this goal in mind, we chose the hemispherical photography approach based on the following considerations: (1) in terms of geometric accuracy and uncertainty, given a sufficient image resolution, it is theoretically nearly as accurate as ray casting, and it is not subject to the notable spatiotemporal uncertainty associated with shadow mapping; (2) in terms of computational efficiency, it theoretically scales better with geometric complexity than ray casting and can therefore compute faster with 3D-city models, which typically have very high geometric complexity. Furthermore, although shadow mapping may be faster in areal computation, we focus on accurate pointwise computation; therefore, sacrificing accuracy for performance is not an ideal option.

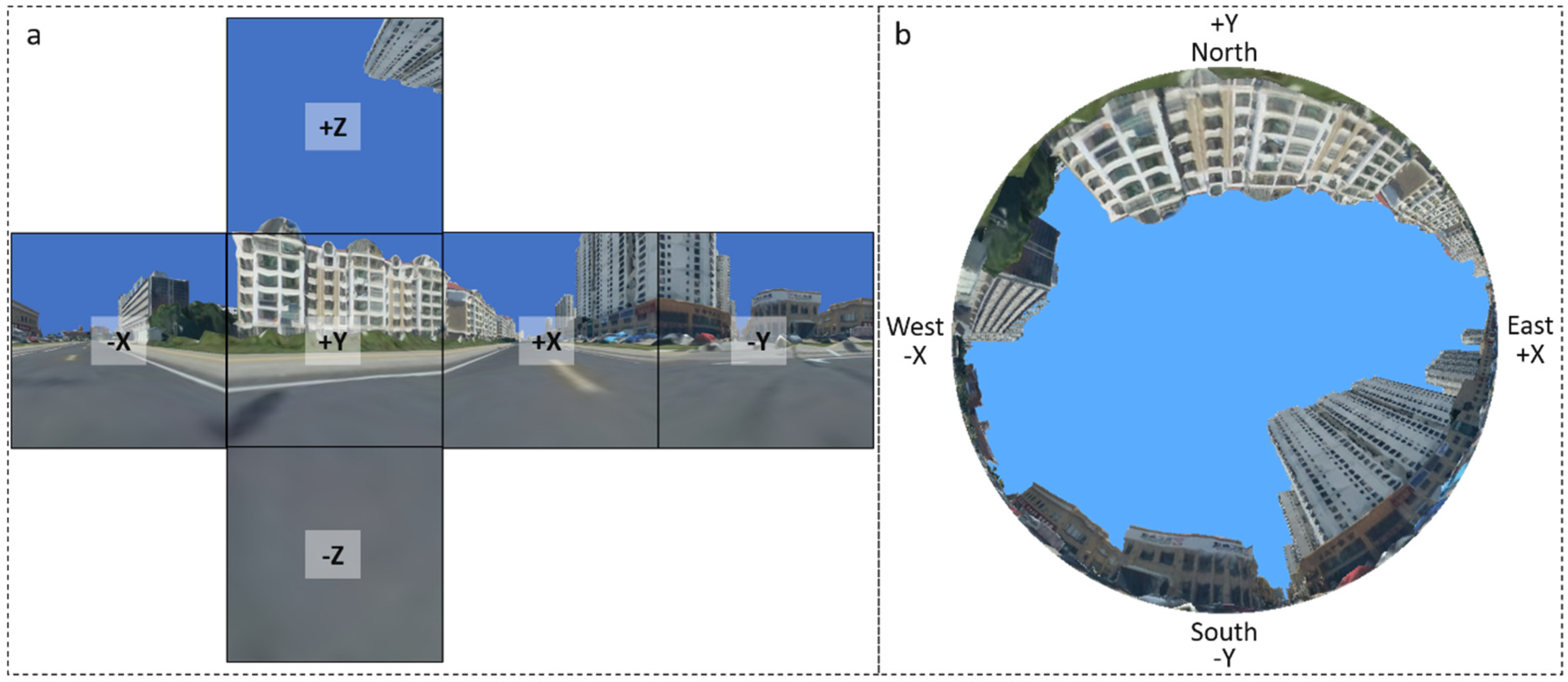

A hemispherical photograph is essentially a 3D panorama projected onto a circle in a 2D image (Figure 1b). A hemispherical projection can result in oversampling, undersampling and image distortion. To avoid these issues, instead of relying directly on a projected hemispherical photograph for shading evaluation, we use a 360-degree panorama in its native form.

In computer graphics, cube mapping is a common technique used to preserve a 360-degree panoramic snapshot of the surrounding environment at a given location [12]. A cube map (Figure 1a) is composed of six images facing north (positive Y), south (negative Y), east (positive X), west (negative X), the sky (positive Z) and the ground (negative Z). To generate a cube map of a scene, the scene needs to be rendered once for each of the cube's faces. We use OpenGL and the GLSL shading language to generate a cube map as follows [13]:

- (1) Allocate a render target texture (RTT) with an alpha channel for each cube map face;

- (2) Construct a camera with a 90-degree horizontal and vertical view angle at the given location in the scene;

- (3) For each of the six cube map faces, align the camera with the direction of the cube map face, initialize the RTT with a transparent background (alpha = 0) and then render the scene offscreen to the associated RTT. The scene must be set up so that it is not enclosed or obstructed by any objects that are not part of the scene, for example a sky box, so that only the potential shadow-casting objects in the scene pass the z-buffering test and are shaded with nonzero alpha values. Hence, in the resulting cube map face images, the sky and non-sky pixels can be distinguished by their respective alpha mask values.
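The camera setup in steps (2) and (3) can be sketched in plain Python as follows. This is a minimal illustration and not the Solar3D implementation, which renders with OpenGL through OpenSceneGraph. The `FACE_CAMERAS` table, the choice of up vectors, the near and far planes and all function names are assumptions made for the example, and the matrices follow the OpenGL column-vector convention.

```python
import math

# Assumed (forward, up) vectors per face, with +X east, +Y north and +Z sky.
FACE_CAMERAS = {
    "north":  ((0, 1, 0),  (0, 0, 1)),
    "south":  ((0, -1, 0), (0, 0, 1)),
    "east":   ((1, 0, 0),  (0, 0, 1)),
    "west":   ((-1, 0, 0), (0, 0, 1)),
    "sky":    ((0, 0, 1),  (0, 1, 0)),
    "ground": ((0, 0, -1), (0, 1, 0)),
}


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def view_matrix(eye, forward, up):
    """Look-at matrix for orthonormal forward/up; the camera looks down -Z."""
    right = cross(forward, up)
    return [
        [right[0], right[1], right[2], -dot(right, eye)],
        [up[0], up[1], up[2], -dot(up, eye)],
        [-forward[0], -forward[1], -forward[2], dot(forward, eye)],
        [0.0, 0.0, 0.0, 1.0],
    ]


def projection_matrix(fov_deg=90.0, near=0.1, far=10000.0):
    """Symmetric perspective projection with aspect ratio 1 (square face)."""
    f = 1.0 / math.tan(math.radians(fov_deg) / 2.0)
    return [
        [f, 0.0, 0.0, 0.0],
        [0.0, f, 0.0, 0.0],
        [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
        [0.0, 0.0, -1.0, 0.0],
    ]


def mat_vec(m, v):
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def project(point, eye, face):
    """World-space point to normalized device coordinates for one face camera."""
    forward, up = FACE_CAMERAS[face]
    view = mat_vec(view_matrix(eye, forward, up), [*point, 1.0])
    clip = mat_vec(projection_matrix(), view)
    return tuple(c / clip[3] for c in clip[:3])
```

With a 90-degree view angle, a point straight ahead of a face camera projects to the image center (0, 0), and a point 45 degrees off-axis lands on the face edge (±1), so the six faces tile the full sphere without gaps or overlap.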

At this stage, the shadow mask needed for each time step in Equation (2) can be determined by looking up the classified cube map image pixels. To look up the cube map pixel for a given solar position in spherical coordinates (θ, φ), the following steps are performed:

- (1) Determine the cube map face to look up. As all six cube map faces have a 90-degree horizontal and vertical view angle, the cube map face index can be determined using the solar position (θ, φ) by following the logic expressed in Equation (3):

- (2) Project the solar position into the image space and fetch the pixel at the resulting image coordinates. The image-space coordinates are obtained using Equation (4):

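$$(u, v) = (x, y, z) \cdot M_{view} \cdot M_{proj} \qquad (4)$$

where $(u, v)$ are the resulting image-space coordinates, $(x, y, z)$ are the Cartesian coordinates of the solar position $(\theta, \varphi)$, and $M_{view}$ and $M_{proj}$ are the view and projection matrices of the associated cube map face camera, respectively.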
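The two lookup steps can be sketched in Python as follows. The face is selected here by the dominant axis of the solar vector, a standard cube-mapping rule that may differ in form from the logic of Equation (3). The projection is written in closed form for a 90-degree face camera instead of with explicit view and projection matrices. The azimuth convention (clockwise from north), the face orientations in `FACE_BASIS`, the image layout (row 0 at the top) and all names are assumptions made for the example.

```python
import math

# Assumed (forward, up) vectors per face, with +X east, +Y north and +Z sky.
FACE_BASIS = {
    "north":  ((0, 1, 0),  (0, 0, 1)),
    "south":  ((0, -1, 0), (0, 0, 1)),
    "east":   ((1, 0, 0),  (0, 0, 1)),
    "west":   ((-1, 0, 0), (0, 0, 1)),
    "sky":    ((0, 0, 1),  (0, 1, 0)),
    "ground": ((0, 0, -1), (0, 1, 0)),
}


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def solar_vector(azimuth_deg, altitude_deg):
    """Unit vector towards the sun; azimuth assumed clockwise from north (+Y)."""
    az, alt = math.radians(azimuth_deg), math.radians(altitude_deg)
    return (math.cos(alt) * math.sin(az), math.cos(alt) * math.cos(az), math.sin(alt))


def select_face(v):
    """Step (1): pick the face whose axis has the largest absolute component."""
    x, y, z = v
    ax, ay, az = abs(x), abs(y), abs(z)
    if az >= ax and az >= ay:
        return "sky" if z > 0 else "ground"
    if ay >= ax:
        return "north" if y > 0 else "south"
    return "east" if x > 0 else "west"


def face_pixel(v, face, size):
    """Step (2): project v onto a square face; returns (row, col), row 0 at top."""
    forward, up = FACE_BASIS[face]
    right = cross(forward, up)
    depth = dot(v, forward)        # positive for the selected face
    s = dot(v, right) / depth      # in [-1, 1] for a 90-degree view angle
    t = dot(v, up) / depth
    col = max(0, min(int((s + 1.0) / 2.0 * size), size - 1))
    row = max(0, min(int((1.0 - t) / 2.0 * size), size - 1))
    return row, col


def shadow_mask(cube_alpha, azimuth_deg, altitude_deg):
    """Return 1 if the solar direction falls on a sky pixel (alpha == 0), else 0."""
    v = solar_vector(azimuth_deg, altitude_deg)
    face = select_face(v)
    alpha = cube_alpha[face]       # square 2D list of alpha values for this face
    row, col = face_pixel(v, face, len(alpha))
    return 1 if alpha[row][col] == 0 else 0
```

Here `cube_alpha` stands in for the six rendered alpha masks. Once the cube map exists, each time step costs a single pixel fetch, independent of the geometric complexity of the scene.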

Finally, in addition to the shadow masks, r.sun needs the slope and aspect of the surface to calculate the irradiation on an inclined surface in a 3D-city model; both can be easily derived from the surface normal vector [4].
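As an illustration of this derivation, the following Python sketch computes the slope and aspect from a surface normal, together with the sine of the incidence angle $\delta_{exp}$ used in Equation (2). It assumes the axis convention of the cube map (+X east, +Y north, +Z sky) and an aspect measured clockwise from north; GRASS GIS tools may expect a different aspect convention, so a conversion may be needed before passing the value to r.sun. The function names are hypothetical.

```python
import math


def slope_aspect(normal):
    """Slope (degrees from horizontal) and aspect (degrees clockwise from
    north) of a surface with the given outward normal (+X east, +Y north, +Z up)."""
    nx, ny, nz = normal
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    slope = math.degrees(math.acos(max(-1.0, min(1.0, nz / length))))
    # Aspect is the azimuth of the horizontal projection of the normal;
    # it is undefined for a horizontal surface and reported as 0 here.
    aspect = math.degrees(math.atan2(nx, ny)) % 360.0
    return slope, aspect


def sin_incidence(normal, sun_vector):
    """sin(delta_exp): cosine of the angle between the normal and the sun
    direction, clamped to 0 when the sun is behind the surface."""
    n_len = math.sqrt(sum(c * c for c in normal))
    s_len = math.sqrt(sum(c * c for c in sun_vector))
    cos_angle = sum(a * b for a, b in zip(normal, sun_vector)) / (n_len * s_len)
    return max(0.0, cos_angle)
```

For example, `slope_aspect((0.0, -1.0, 1.0))` describes a 45-degree roof plane facing south, and `sin_incidence` returns 0 whenever the sun is behind the surface, which removes the beam component for that time step.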

2.2. The Computation and Software Framework

The core framework is constructed by integrating the r.sun solar radiation model into a 3D-graphics engine, OpenSceneGraph [14], an OpenGL-based 3D-graphics toolkit widely used in visualization and simulation. OpenSceneGraph is essentially an OpenGL state manager with extended support for scene graph and data management. The source code of the r.sun model was detached from the GRASS GIS repository, integrated into the Solar3D codebase and modified so that the r.sun model can be called programmatically to calculate irradiation with custom parameters. The reasons for choosing OpenSceneGraph are multifold: first, OpenSceneGraph provides user-friendly, object-oriented access to OpenGL interfaces; second, OpenSceneGraph provides built-in support for interactive rendering and loading of a wide variety of common 3D-model formats including osg, ive, 3ds, dae, obj, x, fbx and flt; third, OpenSceneGraph supports smooth loading and rendering of massive OAP3Ds, which are already widely used in urban and energy planning. Once exported from image-based 3D-reconstruction tools such as Esri Drone2Map and Skyline PhotoMesh into OpenSceneGraph’s Paged LOD format, OAP3Ds can be rapidly loaded into OpenSceneGraph for view-dependent data streaming and rendering. The r.sun model in Solar3D also relies on OpenSceneGraph to supply the key parameters needed for irradiation calculation: (1) the location identified on a 3D surface; (2) the slope and aspect angles of the surface; (3) the time-resolved shadow masks evaluated from a cube map rendered at the identified position.

OpenSceneGraph, as the rendering engine in Solar3D, serves several purposes: (1) it is used to render 3D-city models that come in different formats, including OAP3Ds, CAD models and procedurally generated 3D models such as those extruded from building footprints; (2) it is used to render the scene into cube maps for shading evaluation; (3) it renders the UI that gathers user input and provides feedback; (4) it handles user device input, primarily mouse actions, so that users can interact with the UI and the 3D scene.

The business logic of the core framework works in a loop triggered by user requests (Figure 2): (1) a user request is started by mouse-clicking on an exposed surface in a 3D scene rendered in an OpenSceneGraph view overlaid with the Solar3D user interface (UI) elements; (2) the 3D position, slope and aspect angle are derived from the clicked surface; (3) a cube map is rendered at the 3D position as described above; (4) all required model input [15], including the geographic location (latitude, longitude, elevation), Linke turbidity factor, duration (start day and end day), temporal resolution (in decimal hours), slope, aspect and shadow masks for each time step, is gathered, compiled and fed to r.sun for calculating irradiation. The shadow masks are obtained by sampling the cube map with the solar altitude and azimuth angle for each time step; (5) the r.sun model is run with the supplied input to generate the global, beam, diffuse and reflected irradiation values for the given location; (6) the r.sun-generated irradiation results are returned to the Solar3D UI for immediate display.

To better facilitate urban and energy planning, the core framework is further extended by integrating it into a 3D-GIS framework, osgEarth [16] (Figure 2), an OpenSceneGraph-based 3D geospatial library used to author and render planetary- to local-scale 3D GIS scenes with support for most common GIS content formats, including DSMs, local imagery, web map services, web feature services and Esri Shapefiles. With the 3D GIS extension, Solar3D can serve more specialized and advanced user needs, including: (1) hosting multiple 3D-city models distributed over a large geographic region; (2) overlaying 3D-city models on top of custom basemaps to provide an enriched geographic context in support of energy analysis and decision making; (3) incorporating the topography surrounding a 3D-city model into shading evaluation; (4) interactively calculating solar irradiation with only DSMs.

Users can start Solar3D with an osgEarth scene by providing an XML configuration file (.earth) authored following osgEarth’s scene authoring specifications. An osgEarth scene (.earth) starts with a map node. A map can be created either as a global or a local scene by specifying the type attribute (type = “geocentric” for a global scene or type = “projected” for a local scene) in the map node. Under the map node, users can add different layer nodes, including image layers, feature layers, elevation layers, model layers and annotations. A layer node is created by providing the layer type, data source and other data symbology and rendering attributes. For example, an image layer can be added by providing the driver attribute (TMS, GDAL, WMS, ArcGIS) and URL attribute (e.g., http://readymap.org/readymap/tiles/1.0.0/22/).

The code was written in C++ and compiled in Visual Studio 2019 on Windows 10. The three main dependent libraries used, OpenSceneGraph, osgEarth and Qt5, were all pulled from vcpkg [17], a C++ package manager for Windows, Linux and macOS; therefore, Solar3D can potentially be compiled on Linux and macOS with additional work to set up the build environment. Solar3D is publicly available at https://github.com/jian9695/Solar3D.