Thermal Infrared Orthophoto Geometry Correction Using RGB Orthophoto for Unmanned Aerial Vehicle

Abstract

1. Introduction

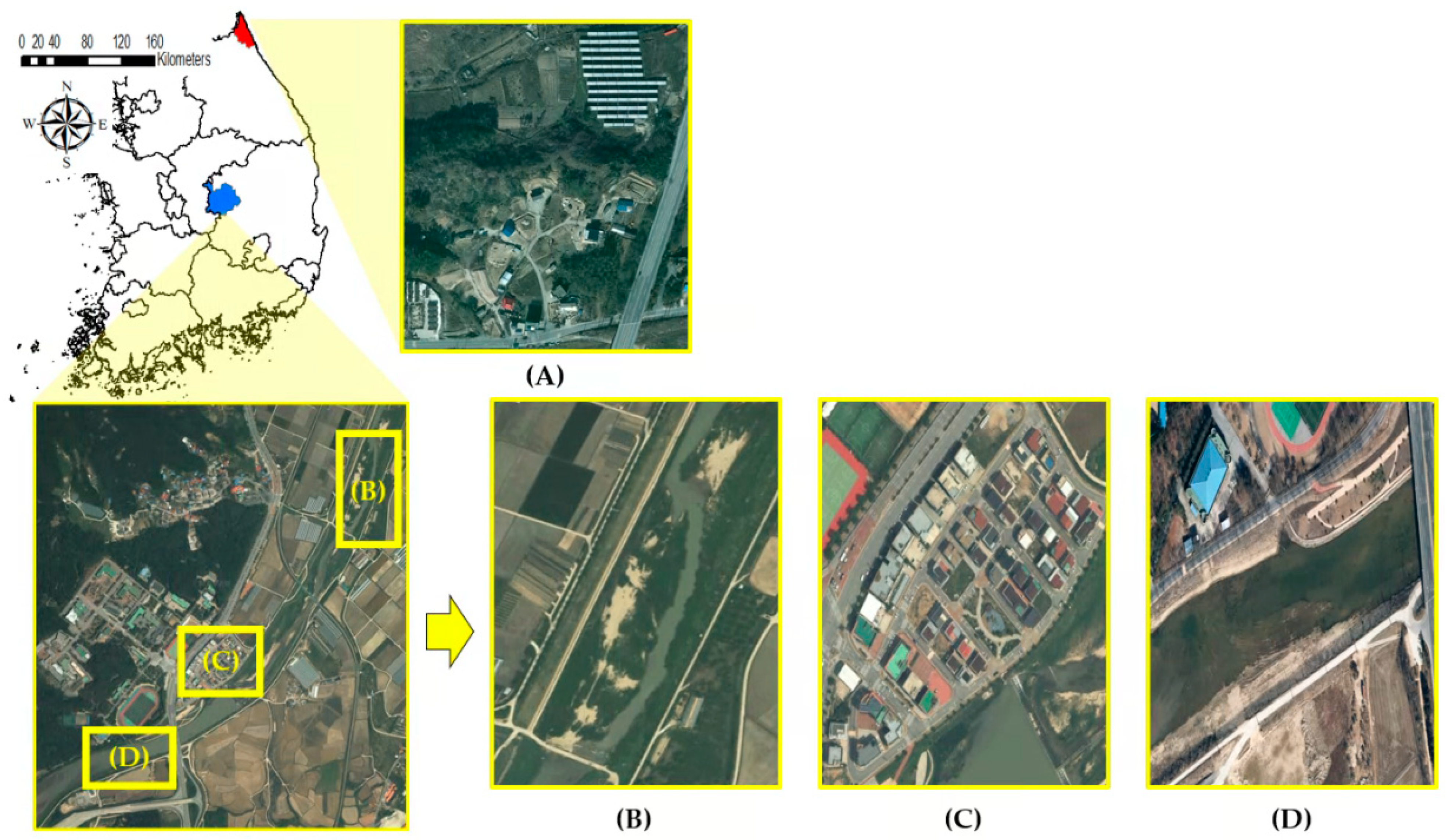

2. Materials

2.1. Study Area and Equipment

2.2. Data Acquisition

2.2.1. UAV Data Acquisition

2.2.2. GNSS Data Acquisition

3. Method

3.1. RGB Orthophoto Generation

3.2. TIR Orthophoto Generation

3.2.1. TIR Orthophoto Generation (Zenmuse XT)

3.2.2. TIR Orthophoto Generation (Zenmuse H20T)

3.3. Geometric Correction between Orthophotos

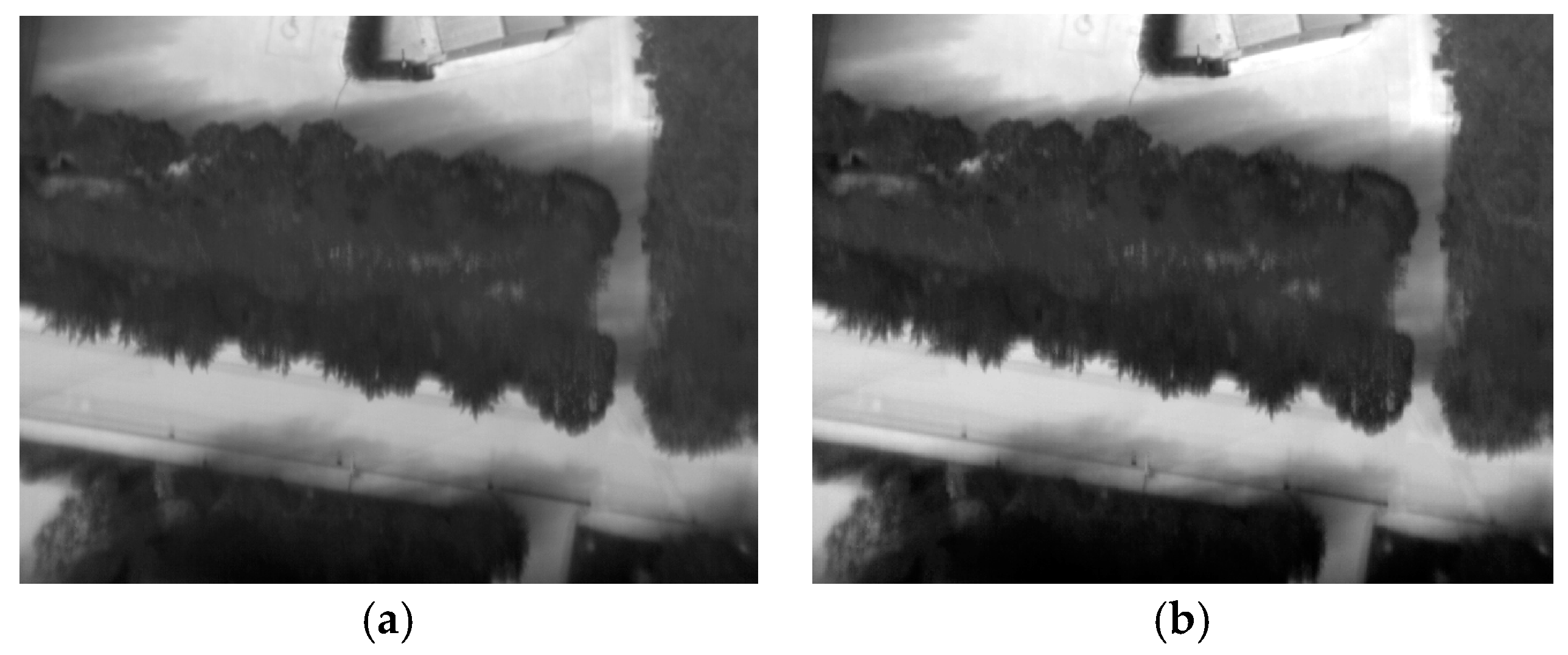

3.3.1. Preprocessing

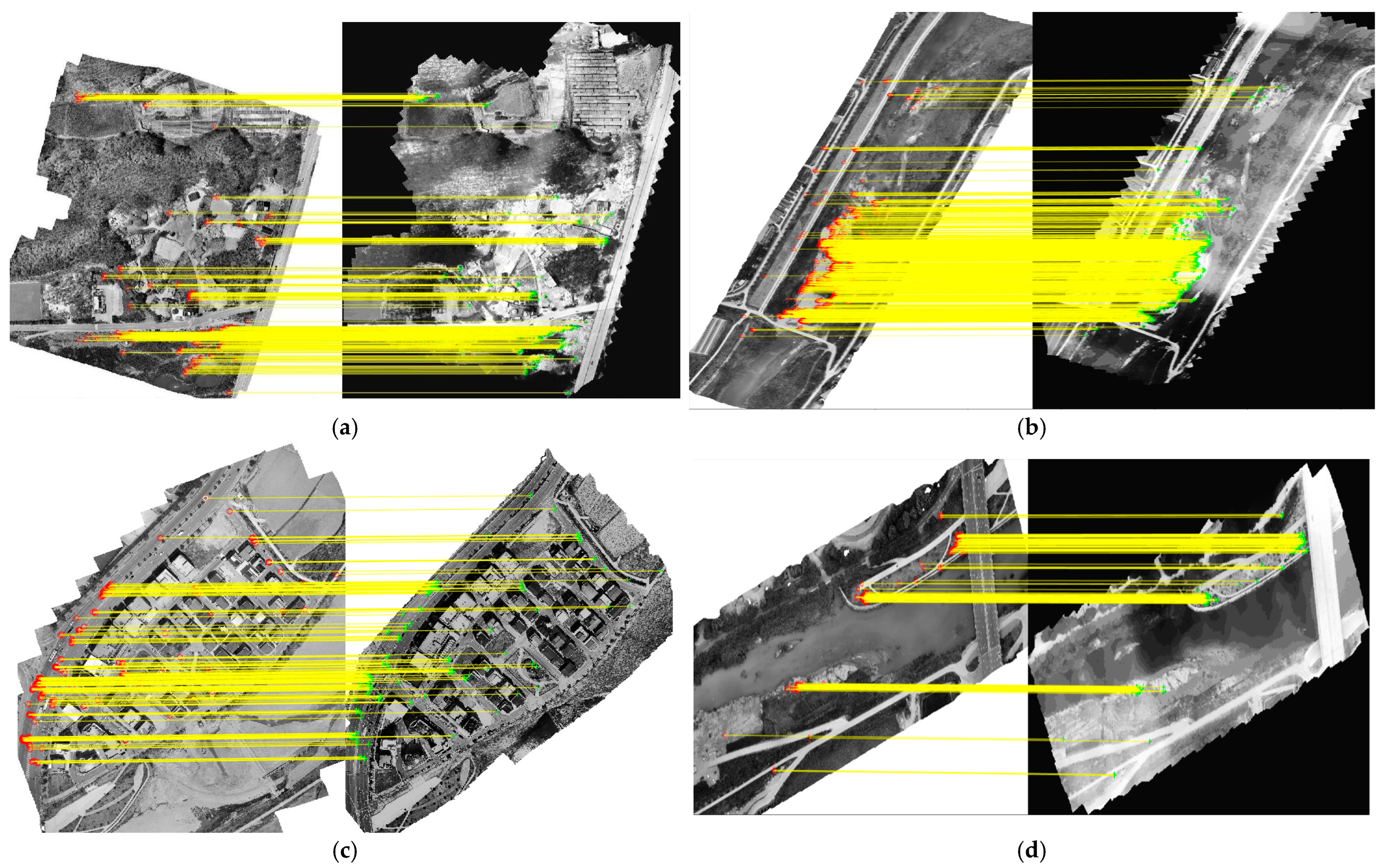

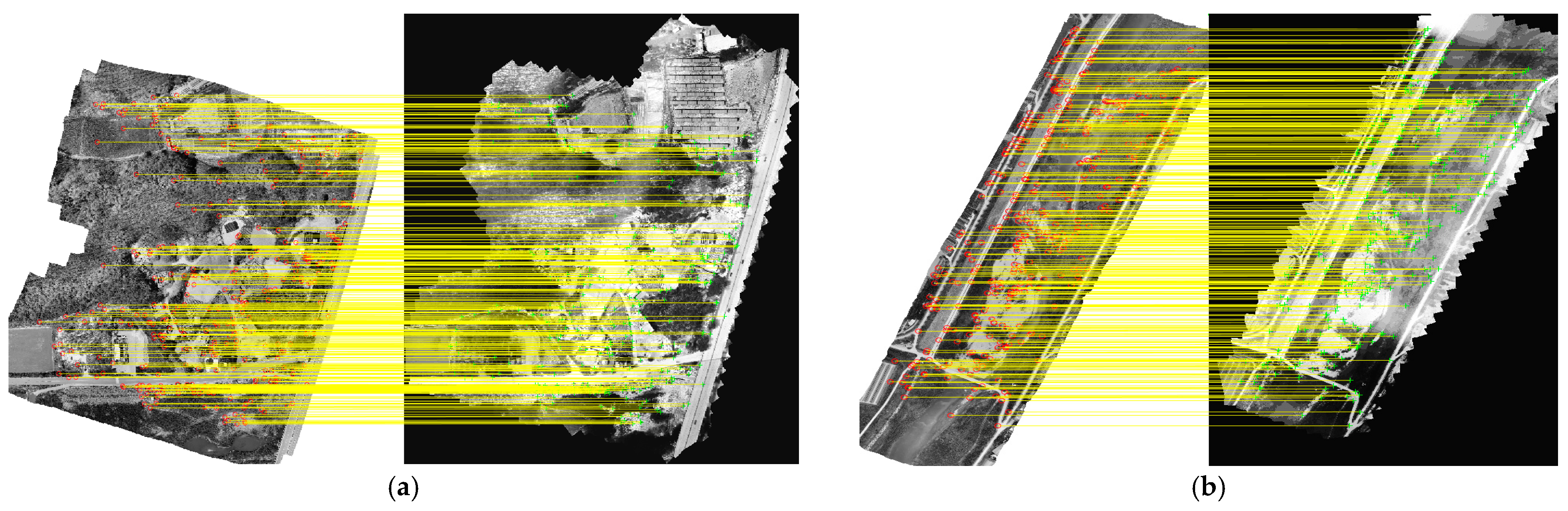

3.3.2. Feature Point Extraction (AKAZE)

3.3.3. Feature Matching

3.3.4. Outlier Removal and Affine Transformation

4. Results and Discussion

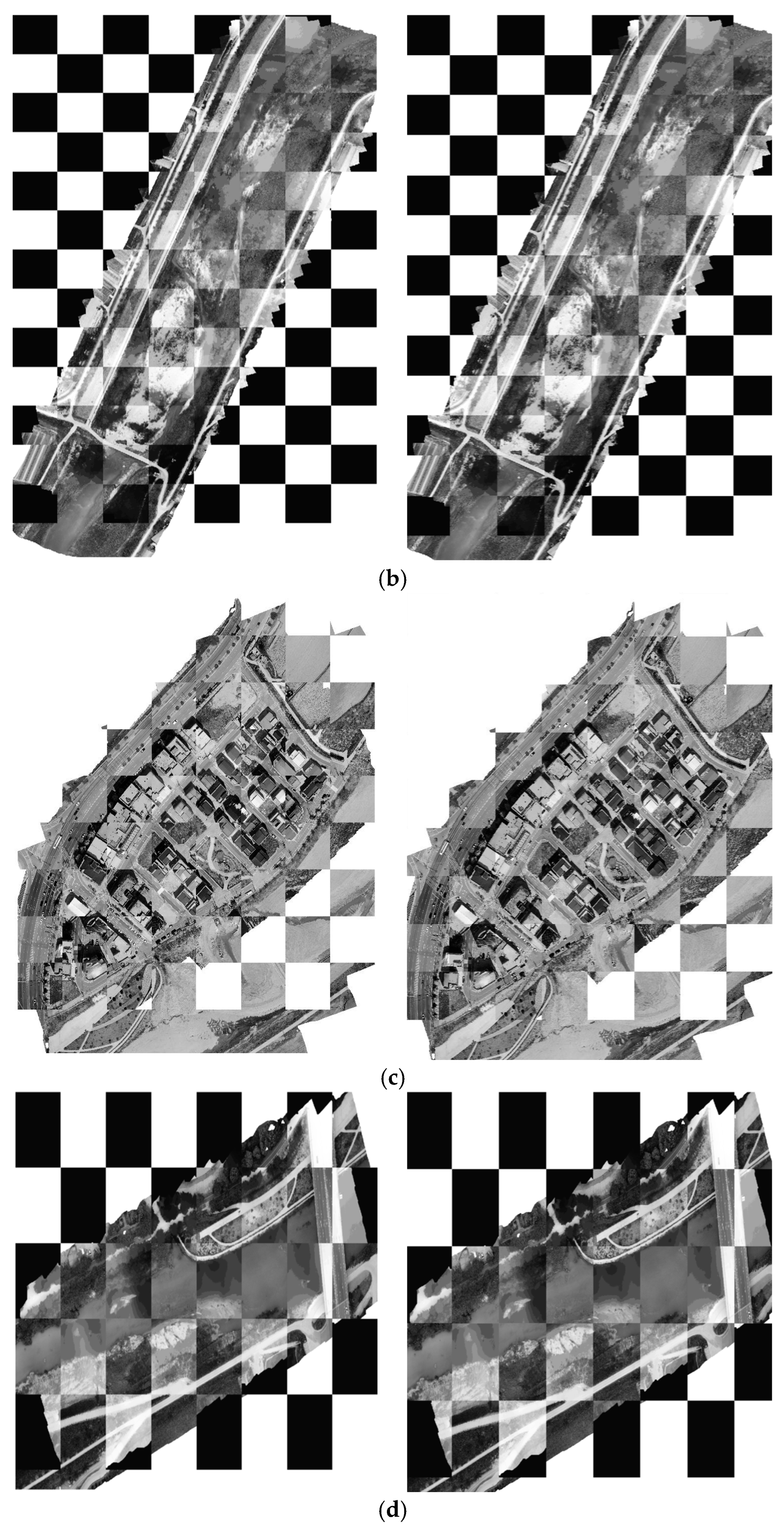

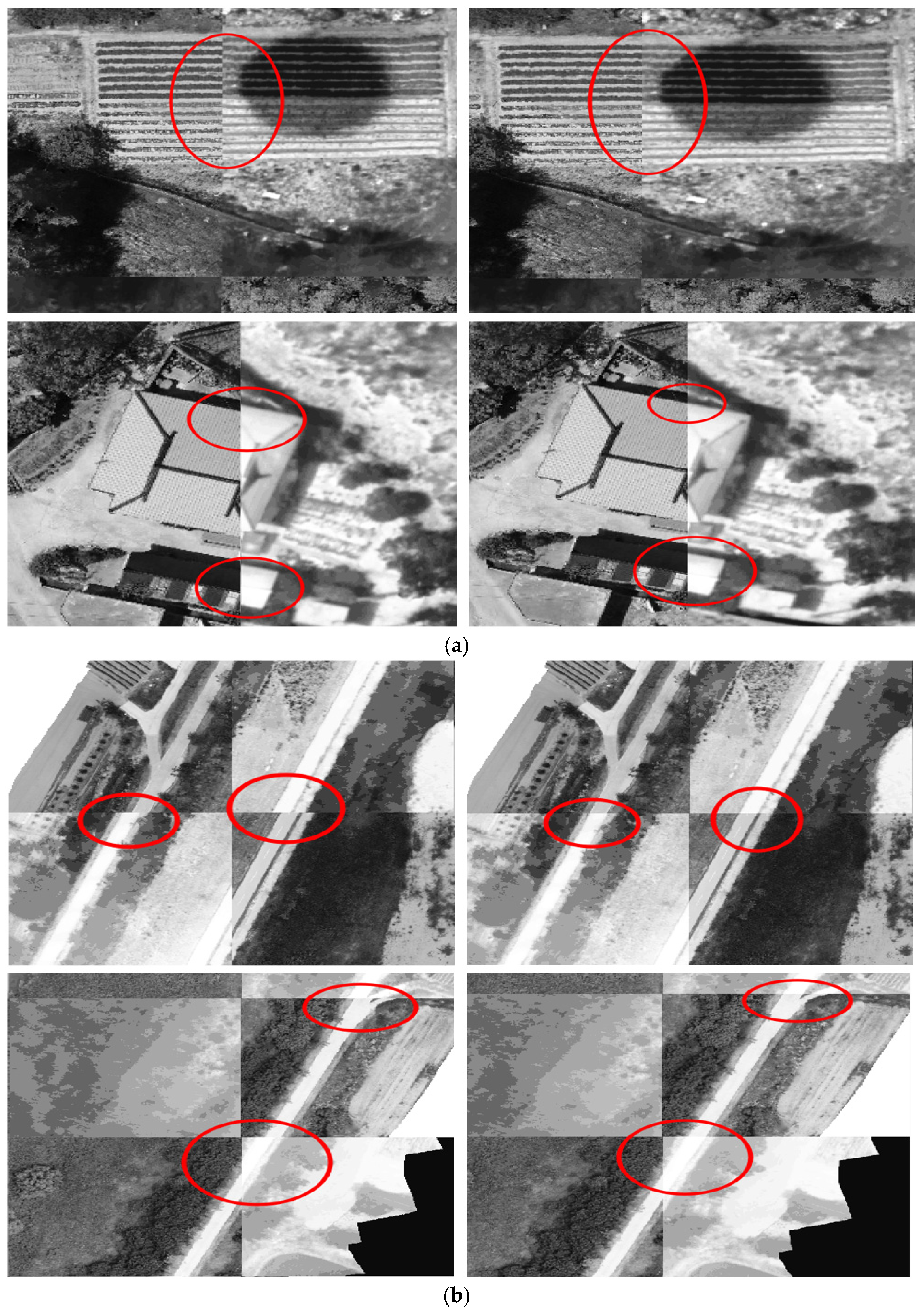

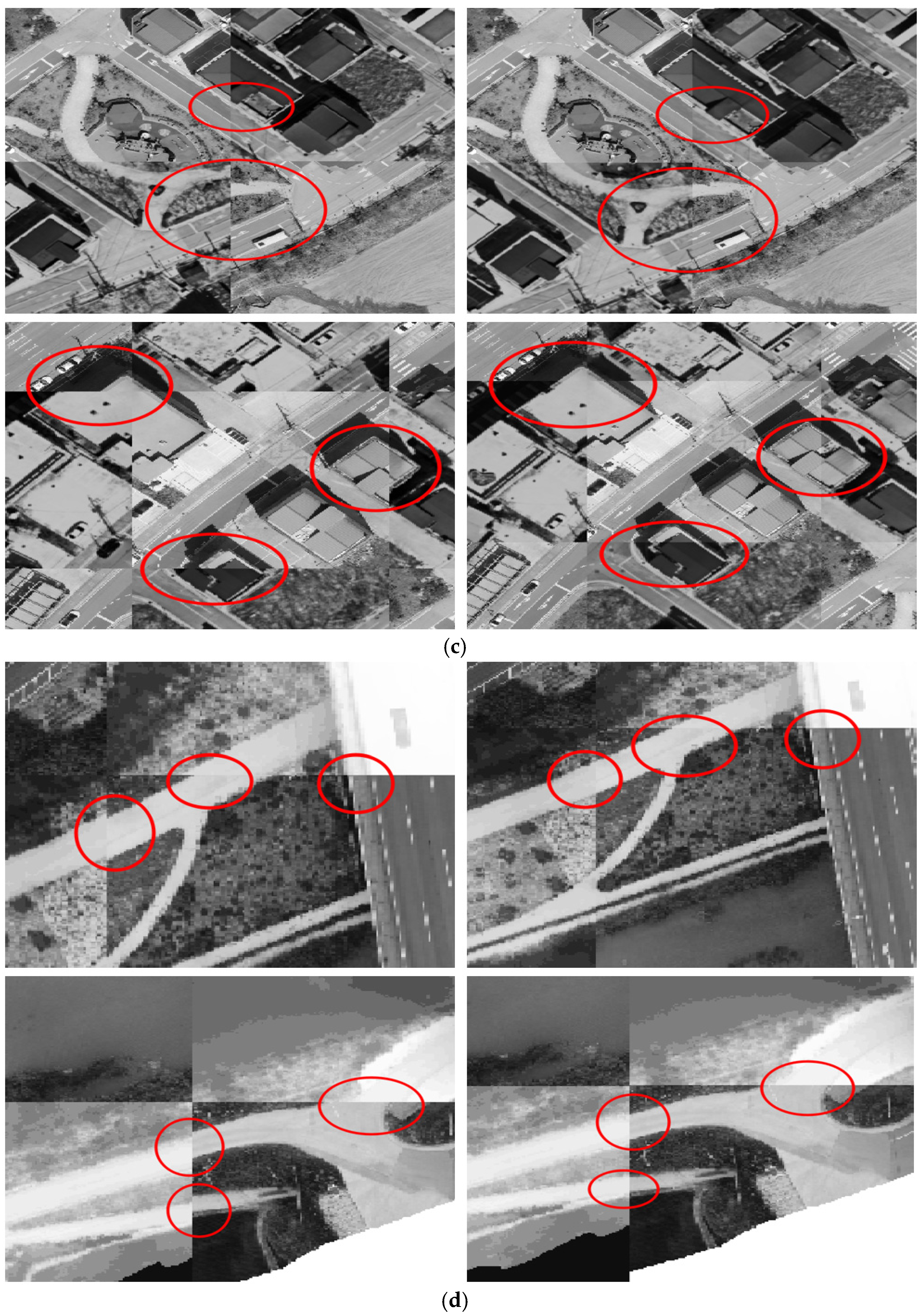

4.1. Application of Geometric Correction

4.2. LST Orthophoto Correction Results

4.3. Quantitative Evaluation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Study area | RGB (Reference Orthophoto) | TIR (Target Orthophoto) |
|---|---|---|
| A | 3 September 2019 | 3 September 2019 |
| | | 11 February 2020 |
| | | 19 April 2023 (Zenmuse H20T) |
| B | 23 June 2019 | 13 July 2019 |
| | | 15 December 2019 |
| | | 16 May 2020 |
| | | 16 January 2021 |
| | | 18 March 2023 (Zenmuse H20T) |
| C | 28 April 2020 | 17 May 2020 |
| | | 19 December 2020 |
| | | 27 June 2021 |
| | | 21 August 2022 (Zenmuse H20T) |
| | | 30 March 2023 (Zenmuse H20T) |
| D | 1 July 2019 | 3 June 2019 |
| | | 9 July 2019 |
| | | 23 May 2020 |
| | | 6 March 2021 |
| | | 19 March 2023 (Zenmuse H20T) |

| Study Area | X Error (RMSE/Maximum Error) | Y Error (RMSE/Maximum Error) |
|---|---|---|
| A | 0.02/0.02 | 0.02/0.03 |
| B | 0.02/0.03 | 0.04/0.05 |
| C | 0.02/0.03 | 0.05/0.09 |
| D | 0.03/0.05 | 0.05/0.06 |
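The RMSE and maximum error values in the table above summarize per-checkpoint coordinate residuals along one axis. The following is a minimal sketch of that calculation, assuming the residuals are simple differences between orthophoto and surveyed coordinates. The function name and the sample residuals are hypothetical and are not taken from the study.

```python
import math

def rmse_and_max(residuals):
    """Return (RMSE, maximum absolute error) for a list of residuals.

    `residuals` holds the coordinate differences (measured minus
    surveyed) along one axis, all in the same unit.
    """
    if not residuals:
        raise ValueError("at least one residual is required")
    rmse = math.sqrt(sum(r * r for r in residuals) / len(residuals))
    max_err = max(abs(r) for r in residuals)
    return rmse, max_err

# Hypothetical X residuals for a handful of checkpoints (illustrative only).
x_residuals = [0.03, -0.04]
rmse_x, max_x = rmse_and_max(x_residuals)
print(f"{rmse_x:.3f}/{max_x:.3f}")
```

Running the same function on the X and Y residuals separately would yield value pairs in the "RMSE/Maximum Error" layout used in the table.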

| GSD (cm) | RMSE (m) | Maximum Error (m) |
|---|---|---|
| Within 8 | 0.08 | 0.16 |
| Within 12 | 0.12 | 0.24 |
| Within 25 | 0.25 | 0.50 |
| Within 42 | 0.42 | 0.84 |
| Within 65 | 0.65 | 1.30 |
| Within 80 | 0.80 | 1.60 |
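In every row of the tolerance table above, the RMSE limit equals the GSD and the maximum-error limit equals twice the GSD, both expressed in meters. The sketch below encodes that arithmetic, assuming the relationship holds generally (it is inferred from the listed rows, not stated as a rule in the source). The function names are hypothetical, and applying the 8 cm class to the example is an assumption made only for illustration.

```python
def tolerance_for_gsd(gsd_cm):
    """Return (RMSE limit, maximum-error limit) in meters for a GSD in cm.

    Assumes RMSE limit = 1 x GSD and maximum limit = 2 x GSD, as the
    rows of the tolerance table suggest.
    """
    rmse_limit_m = gsd_cm / 100.0
    return rmse_limit_m, 2 * rmse_limit_m

def within_tolerance(rmse_m, max_err_m, gsd_cm):
    """True if both the RMSE and the maximum error meet the limits."""
    rmse_limit_m, max_limit_m = tolerance_for_gsd(gsd_cm)
    return rmse_m <= rmse_limit_m and max_err_m <= max_limit_m

# Largest Y error pair reported for the study areas (area C: 0.05/0.09),
# checked against the 8 cm class (an assumed class, for illustration).
print(within_tolerance(0.05, 0.09, gsd_cm=8))
```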

| Category | Parameter | Value |
|---|---|---|
| TIR sensor | PlanckR1 | 17,096.453 |
| | PlanckR2 | 0.046642166 |
| | PlanckB | 1428 |
| | PlanckF | 1 |
| | PlanckO | −342 |
| | Alpha 1 | 0.006569 |
| | Alpha 2 | 0.012620 |
| | Beta 1 | −0.002276 |
| | Beta 2 | −0.006670 |
| | X | 1.9 |
| Environment | Dist | 50 m |
| | RAT | 22 °C |
| | Hum | 50% |
| | AirT | 22 °C |
| | E | 0.95 |
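The Planck constants in the parameter table above are the kind used in the commonly cited raw-to-temperature conversion for FLIR-type radiometric sensors, T = B / ln(R1 / (R2 (S + O)) + F) − 273.15, where S is the raw sensor value. The sketch below applies that simplified form with the tabulated constants. It assumes the object signal has already been isolated, so emissivity, reflected temperature, and atmospheric terms (E, RAT, Dist, Hum, AirT, Alpha, Beta, X) are deliberately left out. The source does not state that this exact form was used, and the function names and raw values are hypothetical.

```python
import math

# Planck constants copied from the parameter table.
PLANCK_R1 = 17096.453
PLANCK_R2 = 0.046642166
PLANCK_B = 1428.0
PLANCK_F = 1.0
PLANCK_O = -342.0

def raw_to_celsius(raw):
    """Convert a raw sensor value to degrees Celsius (simplified form).

    Ignores emissivity and atmospheric correction (an assumption).
    """
    signal = PLANCK_R2 * (raw + PLANCK_O)
    if signal <= 0:
        raise ValueError("raw value is below the sensor offset")
    kelvin = PLANCK_B / math.log(PLANCK_R1 / signal + PLANCK_F)
    return kelvin - 273.15

def celsius_to_raw(temp_c):
    """Inverse of raw_to_celsius, useful for sanity checks."""
    kelvin = temp_c + 273.15
    return PLANCK_R1 / (PLANCK_R2 * (math.exp(PLANCK_B / kelvin) - PLANCK_F)) - PLANCK_O

# Hypothetical raw values; the warmer surface yields the larger raw count.
for raw in (3000, 3500):
    print(raw, round(raw_to_celsius(raw), 2))
```

A full LST workflow would first remove the reflected and atmospheric contributions from the raw signal using the environment parameters before applying this conversion.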

| Preprocessing | Study Area B | Study Area D |
|---|---|---|
| Original | 593 | 521 |
| Original + HE | 30,004 | 7132 |
| Original + HE + Sharpening | 34,295 | 8364 |
| Original + BBHE | 54,764 | 42,866 |
| Original + BBHE + Sharpening | 135,089 | 131,089 |
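The table above compares plain histogram equalization (HE) with BBHE as a preprocessing step. The following is a minimal pure-Python sketch, assuming BBHE denotes brightness-preserving bi-histogram equalization: the histogram is split at the mean gray level and each half is equalized independently onto its own output range. The function name and the tiny test image are hypothetical; this is not the authors' implementation, and the sharpening step is not shown.

```python
def bbhe(image, levels=256):
    """Bi-histogram equalization of a grayscale image.

    `image` is a list of rows of integers in [0, levels - 1].
    Gray levels up to the (floored) mean are equalized onto [0, mean],
    the remaining levels onto [mean + 1, levels - 1].
    """
    pixels = [p for row in image for p in row]
    mean = int(sum(pixels) / len(pixels))  # split threshold

    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    def build_map(lo, hi):
        # Equalize gray levels lo..hi onto the same output range lo..hi.
        total = sum(hist[lo:hi + 1])
        mapping, cumulative = {}, 0
        for g in range(lo, hi + 1):
            cumulative += hist[g]
            cdf = cumulative / total if total else 0.0
            mapping[g] = lo + round((hi - lo) * cdf)
        return mapping

    lut = {}
    lut.update(build_map(0, mean))
    lut.update(build_map(mean + 1, levels - 1))
    return [[lut[p] for p in row] for row in image]

# Tiny hypothetical image: a dark row and a bright row (mean level 120).
sample = [[10, 10, 20, 20], [200, 200, 250, 250]]
print(bbhe(sample))
```

Because each half is stretched only within its own side of the mean, dark pixels stay at or below the mean and bright pixels stay above it, which is what limits the brightness shift seen with global HE.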

| Algorithm | Study Area B | Study Area D |
|---|---|---|
| SIFT | 54,820 (2.77 s) | 24,865 (2.75 s) |
| SURF | 17,854 (2.01 s) | 6580 (1.03 s) |
| ORB | 492,748 (1.35 s) | 192,990 (0.98 s) |
| BRISK | 56,988 (1.63 s) | 9920 (1.09 s) |
| AKAZE | 135,089 (7.65 s) | 131,089 (4.89 s) |

| Method | Study Area A | Study Area B | Study Area C | Study Area D |
|---|---|---|---|---|
| Binary descriptor | 380 | 440 | 386 | 171 |
| Proposed | 454 | 486 | 492 | 221 |

| Study area | TIR (Reference Orthophoto) | Inlier (Binary Descriptor) | Inlier (Proposed Method) |
|---|---|---|---|
| A | 3 September 2019 | 380 | 454 |
| | 11 February 2020 | 323 | 371 |
| | 19 April 2023 | 6 | 108 |
| B | 13 July 2019 | 526 | 545 |
| | 15 December 2019 | 440 | 496 |
| | 16 May 2020 | 384 | 402 |
| | 16 January 2021 | 298 | 371 |
| | 18 March 2023 | 8 | 89 |
| C | 17 May 2020 | 386 | 492 |
| | 19 December 2020 | 367 | 435 |
| | 27 June 2021 | 402 | 449 |
| | 21 August 2022 | 333 | 351 |
| | 30 March 2023 | 42 | 97 |
| D | 3 June 2019 | 184 | 219 |
| | 9 July 2019 | 171 | 221 |
| | 23 May 2020 | 169 | 208 |
| | 6 March 2021 | 6 | 102 |
| | 19 March 2023 | 9 | 85 |
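The inlier counts above are the matches that survive outlier removal before the affine transformation is fitted (Section 3.3.4). The sketch below illustrates that step with RANSAC, which is swapped in here as a common choice because the source does not name the outlier-removal algorithm. All function names, the pixel threshold, the iteration count, and the synthetic points are hypothetical.

```python
import random

def solve3(a, b):
    """Solve a 3x3 linear system by Cramer's rule; None if singular."""
    def det(m):
        return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
                - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
                + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))
    d = det(a)
    if abs(d) < 1e-12:
        return None
    solution = []
    for k in range(3):
        m = [row[:] for row in a]
        for i in range(3):
            m[i][k] = b[i]
        solution.append(det(m) / d)
    return solution

def affine_from_3(src, dst):
    """Affine parameters (a, b, tx, c, d, ty) from three point pairs."""
    rows = [[x, y, 1.0] for x, y in src]
    px = solve3(rows, [u for u, _ in dst])
    py = solve3(rows, [v for _, v in dst])
    if px is None or py is None:
        return None  # the three source points are collinear
    return (*px, *py)

def apply_affine(params, point):
    a, b, tx, c, d, ty = params
    x, y = point
    return a * x + b * y + tx, c * x + d * y + ty

def ransac_affine(src, dst, threshold=1.0, iterations=200, seed=0):
    """Return (best affine parameters, indices of inlier matches)."""
    rng = random.Random(seed)
    best_params, best_inliers = None, []
    for _ in range(iterations):
        idx = rng.sample(range(len(src)), 3)
        params = affine_from_3([src[i] for i in idx], [dst[i] for i in idx])
        if params is None:
            continue
        inliers = []
        for i, point in enumerate(src):
            u, v = apply_affine(params, point)
            if (u - dst[i][0]) ** 2 + (v - dst[i][1]) ** 2 <= threshold ** 2:
                inliers.append(i)
        if len(inliers) > len(best_inliers):
            best_params, best_inliers = params, inliers
    return best_params, best_inliers

# Synthetic matches: a known affine transform plus two gross mismatches.
true_params = (1.01, 0.02, 5.0, -0.02, 0.99, -3.0)
src_pts = [(float(x), float(y)) for x in range(5) for y in range(4)]
dst_pts = [apply_affine(true_params, p) for p in src_pts]
for bad in (3, 11):
    dst_pts[bad] = (dst_pts[bad][0] + 40.0, dst_pts[bad][1] - 25.0)

params, inliers = ransac_affine(src_pts, dst_pts)
print(len(inliers), "inliers of", len(src_pts))
```

In practice the final transform would usually be refit by least squares over all inliers rather than taken from a single three-point sample.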

| Study area | TIR (Reference Orthophoto) | Before Geometric Correction | Geometric Correction (Binary Descriptor) | Geometric Correction (Proposed Method) |
|---|---|---|---|---|
| A | 3 September 2019 | 5.22/0.10 | 0.81/0.02 | 0.79/0.02 |
| | 11 February 2020 | 13.81/0.27 | 1.13/0.02 | 1.19/0.02 |
| | 19 April 2023 | 16.11/0.32 | Geometric correction failed | 4.98/0.10 |
| B | 13 July 2019 | 18.45/0.37 | 1.02/0.02 | 1.09/0.02 |
| | 15 December 2019 | 17.62/0.35 | 1.21/0.02 | 1.01/0.02 |
| | 16 May 2020 | 25.99/0.52 | 1.64/0.03 | 1.32/0.03 |
| | 16 January 2021 | 18.21/0.36 | 1.72/0.03 | 1.29/0.03 |
| | 18 March 2023 | 24.02/0.48 | Geometric correction failed | 6.47/0.13 |
| C | 17 May 2020 | 8.47/0.17 | 1.24/0.02 | 1.16/0.02 |
| | 19 December 2020 | 14.91/0.30 | 0.98/0.02 | 1.24/0.02 |
| | 27 June 2021 | 21.09/0.42 | 1.62/0.03 | 1.42/0.03 |
| | 21 August 2022 | 25.08/0.50 | 1.91/0.04 | 1.50/0.03 |
| | 30 March 2023 | 30.11/0.60 | 5.21/0.10 | 2.21/0.04 |
| D | 3 June 2019 | 14.31/0.29 | 0.74/0.01 | 0.74/0.01 |
| | 9 July 2019 | 16.8/0.34 | 1.31/0.03 | 1.31/0.03 |
| | 23 May 2020 | 15.93/0.32 | 1.76/0.04 | 1.76/0.04 |
| | 6 March 2021 | 9.87/0.20 | Geometric correction failed | 5.98/0.12 |
| | 19 March 2023 | 25.87/0.52 | Geometric correction failed | 6.77/0.14 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lee, K.; Lee, W. Thermal Infrared Orthophoto Geometry Correction Using RGB Orthophoto for Unmanned Aerial Vehicle. Aerospace 2024, 11, 817. https://doi.org/10.3390/aerospace11100817
