Evaluation of Intelligent Auxiliary Information Presentation Mode for Collaborative Flight Formation

Abstract

1. Introduction

2. Collaborative Flight Formation Simulation System

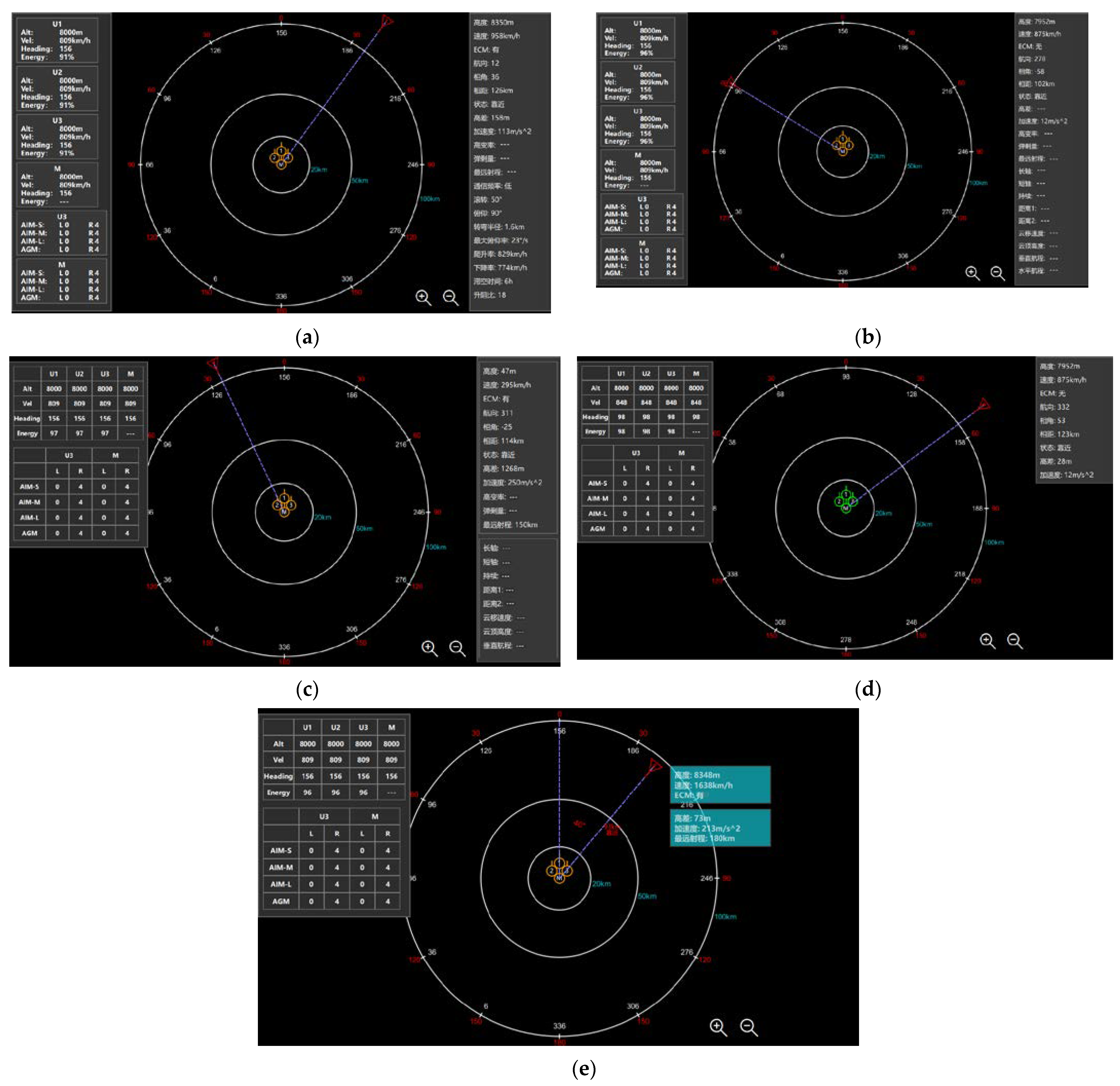

2.1. Simulation Mission and Interface Display

2.2. Intelligent Auxiliary Information Presentation Mode

- Level 1

- Level 2

- Level 3

- Level 4

3. Evaluation Method

3.1. Subjective Evaluation

3.2. Objective Evaluation

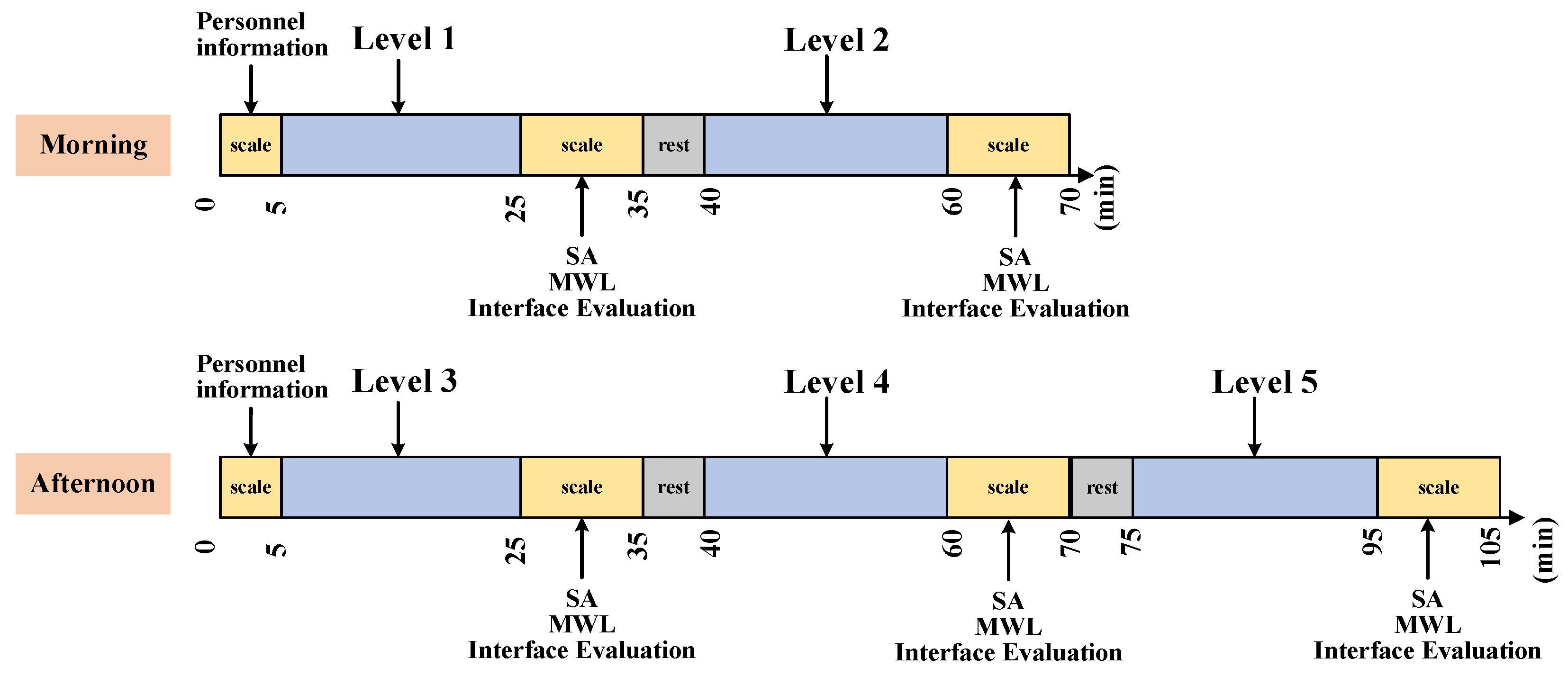

4. Experimental Design

4.1. Participants

4.2. Experimental Materials

4.3. Experimental Process

4.4. Data Analysis

5. Results

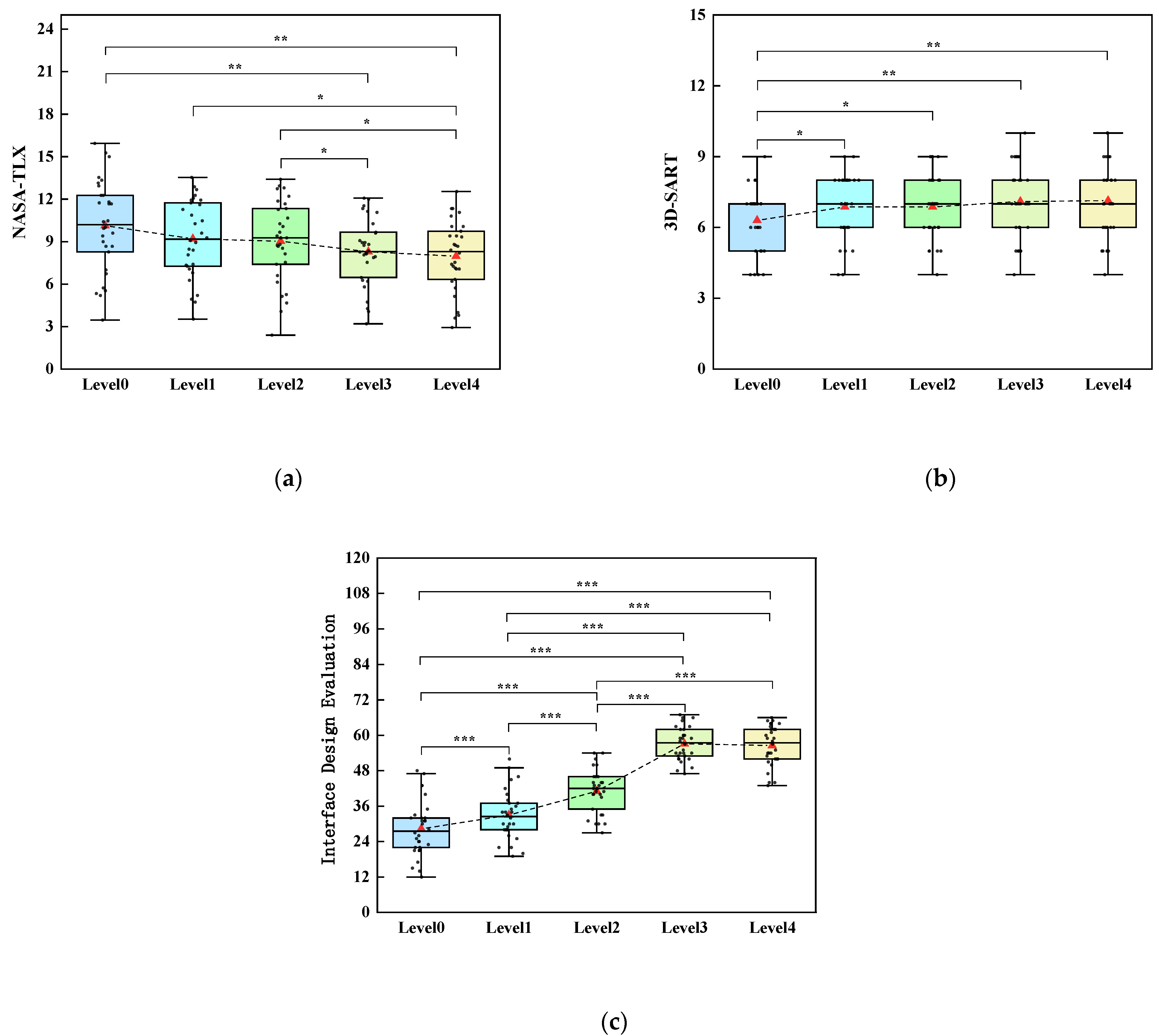

5.1. Subjective Evaluation Results

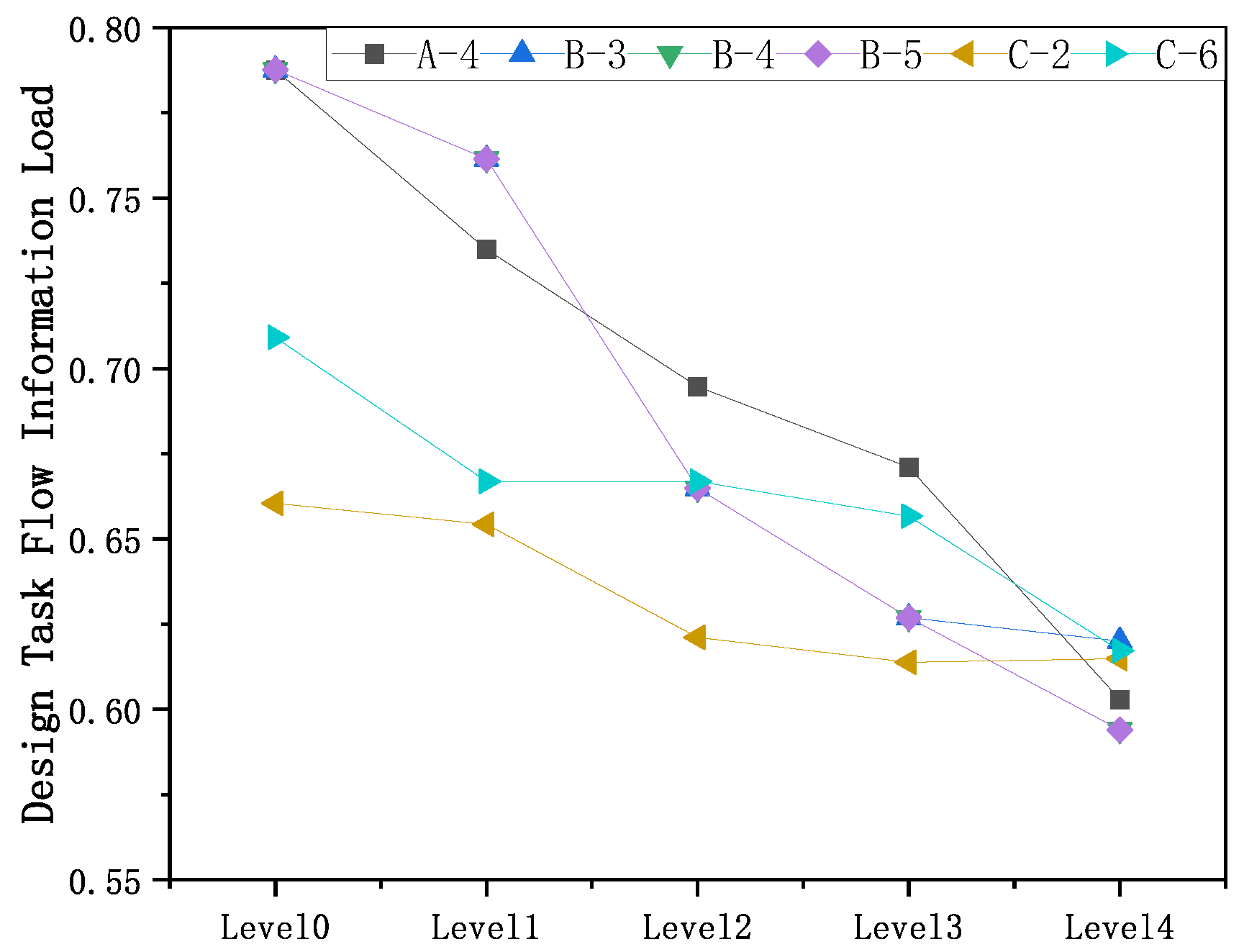

5.2. Objective Evaluation Results

6. Discussion

6.1. Optimal Intelligent Auxiliary Information Presentation Mode

6.2. Limitations and Future Research

- Simplistic Task Scenarios: The simulation involved single-target tasks, which may not reflect the complexity of real-world multi-target scenarios. Future research should evaluate intelligent modes under multi-target conditions to better simulate operational environments.

- Limited Objective Metrics: This study focused on information load as the primary objective metric. Incorporating additional measures, such as task performance or physiological indicators (e.g., eye tracking and bioelectric signals), could provide a more comprehensive evaluation.

- Homogeneous Participant Pool: This study recruited only healthy male college students, limiting the generalizability of the findings. Future studies should include a more diverse participant pool, particularly professional pilots, to ensure broader applicability.

7. Conclusions

- All four intelligent auxiliary information presentation modes reduced mental workload and improved situation awareness compared with the original mode. Level 4 had the largest effect and also yielded the lowest information load in the designed task flow.

- Owing to habit, participants preferred interfaces whose information was displayed consistently. Consequently, most participants favored the Level 3 design, which also yielded the best practical operability across the overall task.

- The intelligent modes effectively reduced visual search time and information judgment time, shortening task response times. Response time decreased further as the level of intelligence increased, and Levels 3 and 4 produced the greatest improvements in operational ability.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Li, Y.; Tu, T. Interface design of manned/unmanned collaborative accusation terminal. Electron. Technol. Softw. Eng. 2019, 8, 90–92.

2. Sun, S.; Meng, C.; Hou, Y.; Cai, X. Research on the Collaborative Operational Mode and Key Technologies of Manned/Unmanned Aerial Vehicles. Aero Weapon. 2021, 28, 33–37.

3. Alqudsi, Y.; Murat, M. Exploring advancements and emerging trends in robotic swarm coordination and control of swarm flying robots: A review. Proc. Inst. Mech. Eng. Part C J. Mech. Eng. Sci. 2025, 239, 180–204.

4. Abro, G.E.M.; Ali, Z.A.; Masood, R.J. Synergistic UAV Motion: A Comprehensive Review on Advancing Multi-Agent Coordination. IECE Trans. Sens. Commun. Control 2024, 1, 72–88.

5. Shangguan, R.; Lin, L.; Zhou, Y. Overview of cooperative UAV swarm localization. In Proceedings of the International Conference on Remote Sensing and Digital Earth (RSDE 2024), Chengdu, China, 8–10 November 2024.

6. Li, W.; Zhou, S.; Shi, M.; Yue, J.; Lin, B.; Qin, K. Collision avoidance time-varying group formation tracking control for multi-agent systems. Appl. Intell. 2025, 55, 175.

7. Li, Y.; Zhang, W. Command and Control Technology for Unmanned Combat Vehicles. Command Inform. Syst. Technol. 2011, 2, 6–9.

8. Schouwenaars, T.; Valenti, M.; Feron, E.; How, J.; Roche, E. Linear Programming and Language Processing for Human-Unmanned Aerial-Vehicle Team Missions. J. Guid. Control Dyn. 2006, 29, 303–313.

9. Liu, B.; Chen, S.; Wang, X.; Wang, Z. Air combat decision making for coordinated multiple target attack based on science of collectives in the uncertain communication environment. In Proceedings of the 2014 IEEE Chinese Guidance, Navigation and Control Conference, Yantai, China, 8–10 August 2014.

10. Valenti, M.; Schouwenaars, T.; Kuwata, Y.; Feron, E.; How, J.; Paunicka, J. Implementation of a Manned Vehicle-UAV Mission System. In Proceedings of the AIAA Guidance, Navigation, and Control Conference and Exhibit, Providence, RI, USA, 16–19 August 2004.

11. Zhang, Y.; Mehrjerdi, H. A survey on multiple unmanned vehicles formation control and coordination: Normal and fault situations. In Proceedings of the 2013 International Conference on Unmanned Aircraft Systems (ICUAS), Atlanta, GA, USA, 28–31 May 2013.

12. Boyd, J.A. A Discourse on Winning and Losing; Unpublished set of briefing slides available at Air University Library, Maxwell AFB, Alabama, 1987. Available online: http://www.ausairpower.net/JRB/intro.pdf (accessed on 1 November 2024).

13. Defense and the National Interest. Available online: https://d-n-i.net/ (accessed on 1 November 2024).

14. Endsley, M.R. Toward a theory of situation awareness in dynamic systems. Hum. Factors 1995, 37, 32–64.

15. Feng, C.; Wanyan, X.; Chen, H.; Zhuang, D. Situation awareness model and application based on multi resource load theory. J. Beijing Univ. Aeronaut. Astronaut. 2018, 44, 1438–1446.

16. Wang, X.; Cao, Y.; Ding, M.; Wang, X.; Yu, W.; Guo, B. Research Progress in Modeling and Evaluation of Cooperative Operation System-of-systems for Manned-unmanned Aerial Vehicles. Aerosp. Electron. Syst. Mag. 2024, 39, 6–31.

17. Robb, D.A.; Garcia, F.J.C.; Laskov, A.; Liu, X.; Patron, P.; Hastie, H. Keep Me in the Loop: Increasing Operator Situation Awareness through a Conversational Multimodal Interface. In Proceedings of the 20th ACM International Conference on Multimodal Interaction, Boulder, CO, USA, 16–20 October 2018.

18. Burns, C.M.; Skraaning, G.; Jamieson, G.A.; Lau, N.; Kwok, J.; Welch, R.; Andresen, G. Evaluation of Ecological Interface Design for Nuclear Process Control: Situation Awareness Effects. Hum. Factors 2008, 50, 663–679.

19. Rood, M.M.V. Increasing Situation Awareness in USAR Human-Robot Teams by Enhancing the User Interface. Master’s Thesis, Utrecht University, Utrecht, The Netherlands, 2016.

20. Xiao, Y.; Wang, Z.; Wang, M. The appraisal of reliability and validity of subjective workload assessment technique and NASA-task load index. Chin. J. Ind. Hyg. Occup. Dis. 2005, 23, 178–181.

21. Kang, W.; Yuan, X. Optimization Design of Aircraft Cockpit Visual Display Interface Based on Mental Load. J. Beijing Univ. Aeronaut. Astronaut. 2008, 34, 782–785.

22. Salmon, P.M.; Stanton, N.A.; Walker, G.H.; Jenkins, D.P.; Young, M.S. Measuring Situation Awareness in complex systems: Comparison of measures study. Int. J. Ind. Ergon. 2009, 39, 490–500.

23. Yang, J.; Zeng, Y.; Zhang, K.; Rantanen, E.M. Measurement of situational awareness of air traffic controllers based on events. Space Med. Med. Eng. 2008, 21, 321–327.

24. Nielsen, J. Usability Engineering; China Machine Press: Beijing, China, 2004.

25. Shackel, B. Usability – Context, framework, definition, design and evaluation. Interact. Comput. 2009, 21, 339–346.

26. Shackel, B. The concept of usability. In Proceedings of the IBM Software and Information Usability Symposium, Poughkeepsie, NY, USA, 15–18 September 1981; IBM Corporation: New York, NY, USA, 1981.

27. Shneiderman, B. Designing the User Interface; Addison Wesley: Boston, MA, USA, 1998.

28. Deng, Z.; Lu, Y. Study on the Influence Factors of Electronic Commerce Website Users’ Satisfaction and Behavior. Libr. Inform. Serv. 2008, 29, 5.

29. Lin, Y. Evaluation of user interface satisfaction of mobile maps for touch screen interfaces. In Proceedings of the 2012 International Conference on Advances in Computer-Human Interactions, Valencia, Spain, 30 January–4 February 2012.

30. Yan, S.; Yu, X.; Zhang, Z.; Peng, M.; Yang, M. Evaluation method of human-machine interface of virtual meter based on RBF network. J. Syst. Simul. 2007, 19, 5731–5735.

31. Yan, S.; Li, Q.; Zhang, Z.; Peng, M. Research on Subjective evaluation method in human-machine-interface based on grey theory. J. Harbin Eng. Univ. 2005, 16, 98–104.

32. Xia, C. Study on Human Machine Interface Evaluation of Hoisting Machine based on Fuzzy Factors. Coal Mine Mach. 2007, 28, 3.

33. Tambe, M.; Johnson, W.L.; Jones, R.M.; Koss, F.; Laird, J.E.; Rosenbloom, P.S.; Schwamb, K. Intelligent Agents for Interactive Simulation Environments. AI Mag. 1995, 16, 15–39.

34. Liu, S.; Wang, H. Review on cooperative formation control for manned/unmanned aerial vehicles. Flight Dyn. 2022, 40, 1–8.

35. Yan, W. Research on Radar Detection of Three Kinds Aviation Hazardous Weather Features. Master’s Thesis, Nanjing University of Information Science & Technology, Nanjing, China, 2019.

36. Li, C. Aircraft rerouting strategy research under special weather. J. Civ. Aviat. Univ. China 2020, 38, 5–9.

37. Song, N.; Xing, Q. Multi-class classification of air targets based on support vector machine. Syst. Eng. Electron. 2006, 28, 1279–1281.

38. Niu, J.; Wu, X.; Zhang, L.; Li, Z.; Liu, X. Research on information structure layout of combat display and control interface based on visual cognition characteristics. Packag. Eng. 2023, 44, 328–337.

39. Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of Empirical and Theoretical Research. Adv. Psychol. 1988, 52, 139–183.

40. Taylor, R.M. Situational Awareness Rating Technique (SART): The Development of a Tool for Aircrew Systems Design. In Proceedings of the AGARD AMP Symposium on Situation Awareness in Aerospace Operations, Neuilly Sur Seine, France, 1 April 1990.

41. Lewis, J.R. The System Usability Scale: Past, Present, and Future. Int. J. Hum. Comput. Interact. 2018, 34, 577–590.

42. Harper, P.; Norman, K. Improving user satisfaction: The questionnaire for user interaction satisfaction version 5.5. In Proceedings of the 1st Annual Mid-Atlantic Human Factors Conference, Virginia Beach, VA, USA, February 1993.

43. Davis, J.S.; LeBlanc, R.J. A Study of the Applicability of Complexity Measures. IEEE Trans. Softw. Eng. 1988, 14, 1366–1372.

44. Mowshowitz, A. Entropy and the complexity of graphs: I. An index of the relative complexity of a graph. Bull. Math. Biophys. 1968, 30, 175–204.

45. Zheng, Y.; Lu, Y.; Wang, Z.; Huang, D.; Fu, S. Developing a Measurement for Task Complexity in Flight. Aerosp. Med. Hum. Perform. 2015, 86, 698–704.

46. Yang, C.; Pang, L.; Zhang, J.; Cao, X. Workload Measurement Method for Manned Vehicles in Multitasking Environments. Aerospace 2024, 11, 406.

47. Donovan, S.-L.; Triggs, T. Investigating the Effects of Display Design on Unmanned Underwater Vehicle Pilot Performance; DSTO: Canberra, Australia, 2006.

| Evaluation | References | Main Content |
|---|---|---|
| Mental workload | [20] | Validated the reliability and validity of subjective mental workload assessment tools, including SWAT and NASA-TLX. |
| | [21] | Developed a mental workload evaluation model for aircraft cockpit visual displays and used it to optimize their design for better performance. |
| Situation awareness | [22] | Compared memory-based and subjective measurement methods for assessing situation awareness using military command digital maps. |
| | [23] | Explored event-based situation awareness measurement techniques for air traffic controllers. |
| Interface usability | [24,25,26,27] | Proposed widely used evaluation systems for interface usability. |
| | [28,29] | Focused on user surveys and satisfaction assessments. |
| Development of evaluation metrics | [30,31,32] | Derived metrics from theoretical analysis and mathematical models. |

| Subtask | Microtask |
|---|---|
| Aerial targets (Task A) / Ground targets (Task B) / Extreme weather (Task C) | 1 Task category determination |
| | 2 Target type determination |
| | 3 The issuance of instructions / Target facility determination / Energy consumption level judgment |
| | 4 Interference and radar status judgment / Target facility scale judgment / Intensity level judgment |
| | 5 The issuance of instructions / Defense capability of target facility judgment / Response method determination |
| | 6 Target intention judgment / Comprehensive risk judgment / The issuance of instructions |
| | 7 The issuance of instructions |

| Key Factors | Indicator | Measurement Method |
|---|---|---|
| Task factors | Task logic complexity HTC | First-order entropy of behavior control diagram |
| Operation factors | Operation step complexity HOC | Second-order entropy of behavior control diagram |
| Personal factors | Knowledge level complexity HKC | Second-order entropy of knowledge hierarchy diagram |
| Human–machine interface factors | Interface complexity HIC | Second-order entropy of interface information structure diagram |
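Every indicator in the table above is a graph entropy. The minimal Python sketch below assumes the classic node-partition definition from Mowshowitz [44], as applied by Davis and LeBlanc [43]: a graph with N nodes is split into classes of size n_i, and H = -Σ (n_i/N) log2(n_i/N).

- **First-order classes** group nodes by their (in-degree, out-degree) pair.
- **Second-order classes** follow a rule assumed here, not necessarily the exact one the study applied: nodes must share a first-order class and also have the same multisets of first-order classes among their predecessors and successors.

All function names are hypothetical.

```python
import math
from collections import Counter


def _partition_entropy(labels):
    """Shannon entropy (bits) of the partition of nodes induced by their labels."""
    counts = Counter(labels.values())
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def first_order_labels(nodes, edges):
    """Label each node of a directed graph by its (in-degree, out-degree) pair."""
    indeg = Counter(dst for _, dst in edges)
    outdeg = Counter(src for src, _ in edges)
    return {n: (indeg[n], outdeg[n]) for n in nodes}


def second_order_labels(nodes, edges):
    """Assumed rule: first-order label plus the sorted first-order labels
    of the node's predecessors and successors."""
    first = first_order_labels(nodes, edges)
    preds = {n: [] for n in nodes}
    succs = {n: [] for n in nodes}
    for src, dst in edges:
        succs[src].append(first[dst])
        preds[dst].append(first[src])
    return {n: (first[n], tuple(sorted(preds[n])), tuple(sorted(succs[n])))
            for n in nodes}


def first_order_entropy(nodes, edges):
    return _partition_entropy(first_order_labels(nodes, edges))


def second_order_entropy(nodes, edges):
    return _partition_entropy(second_order_labels(nodes, edges))


if __name__ == "__main__":
    # Toy behavior control diagram: a four-step linear sequence a -> b -> c -> d.
    nodes = ["a", "b", "c", "d"]
    edges = [("a", "b"), ("b", "c"), ("c", "d")]
    print(first_order_entropy(nodes, edges))   # 1.5
    print(second_order_entropy(nodes, edges))  # 2.0
```

In the toy chain, the two interior nodes share a first-order class, which gives 1.5 bits. Their neighbours differ, so the second-order rule separates them and every node falls in its own class, which gives 2.0 bits. This illustrates why second-order entropy is the more discriminating measure for operation steps and interface structure.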

| Result (Mean ± SD) | Level 0 | Level 1 | Level 2 | Level 3 | Level 4 |
|---|---|---|---|---|---|
| NASA-TLX | 10.131 ± 3.245 | 9.193 ± 2.736 | 9.029 ± 2.883 | 8.282 ± 2.382 | 7.969 ± 2.496 |
| 3D-SART | 6.300 ± 1.317 | 6.867 ± 1.383 | 6.867 ± 1.358 | 7.100 ± 1.561 | 7.133 ± 1.634 |
| Interface design evaluation | 28.333 ± 9.353 | 32.967 ± 8.298 | 41.067 ± 7.343 | 57.133 ± 5.619 | 56.533 ± 6.786 |
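The NASA-TLX [39] and 3D-SART [40] scores summarized above are conventionally computed as shown in the sketch below. This excerpt does not state which NASA-TLX variant (weighted or raw) or which rating range the study used, so the sketch covers only the standard definitions. The subscale keys and example ratings are hypothetical.

- **Weighted NASA-TLX** multiplies each of the six subscale ratings by the number of times that subscale was chosen in 15 pairwise comparisons, then divides by 15.
- **Raw NASA-TLX** is the plain mean of the six ratings.
- **3D-SART** combines demand D, supply S and understanding U as SA = U − (D − S).

```python
from statistics import mean

# Hypothetical keys for the six NASA-TLX subscales.
SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def nasa_tlx_weighted(ratings, weights):
    """Weighted NASA-TLX: sum of rating * pairwise-comparison weight, divided by 15."""
    if set(ratings) != set(SUBSCALES) or set(weights) != set(SUBSCALES):
        raise ValueError("ratings and weights must cover the six NASA-TLX subscales")
    if sum(weights.values()) != 15:
        raise ValueError("weights from 15 pairwise comparisons must sum to 15")
    return sum(ratings[s] * weights[s] for s in SUBSCALES) / 15


def nasa_tlx_raw(ratings):
    """Raw (unweighted) NASA-TLX: mean of the six subscale ratings."""
    return mean(ratings[s] for s in SUBSCALES)


def sart_3d(demand, supply, understanding):
    """3D-SART situation awareness score: SA = U - (D - S)."""
    return understanding - (demand - supply)


if __name__ == "__main__":
    # Hypothetical ratings and weights for a single participant.
    ratings = {"mental": 12, "physical": 4, "temporal": 10,
               "performance": 8, "effort": 11, "frustration": 6}
    weights = {"mental": 5, "physical": 0, "temporal": 3,
               "performance": 2, "effort": 4, "frustration": 1}
    print(nasa_tlx_weighted(ratings, weights))
    print(nasa_tlx_raw(ratings))
    print(sart_3d(demand=3, supply=5, understanding=6))
```

Under this formula, a lower NASA-TLX score means lower workload, while a higher 3D-SART score means better situation awareness. Read that way, the table shows workload falling and situation awareness rising from Level 0 to Level 4.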

| Result (POA, Mean ± SD) | Level 0 | Level 1 | Level 2 | Level 3 | Level 4 |
|---|---|---|---|---|---|
| B-3 | 0.522 ± 0.118 | 0.448 ± 0.111 | 0.434 ± 0.123 | 0.325 ± 0.088 | 0.306 ± 0.092 |
| Overall task | 0.421 ± 0.087 | 0.396 ± 0.084 | 0.386 ± 0.081 | 0.359 ± 0.077 | 0.360 ± 0.071 |
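To make the level-to-level comparison in the POA table explicit, the sketch below computes each level's percentage change relative to Level 0 and reports the level with the lowest mean. It uses only the transcribed means. It ignores the standard deviations and performs no significance testing, so it is a descriptive check, not a substitute for the study's statistical analysis. The function names are hypothetical.

```python
LEVELS = ("Level 0", "Level 1", "Level 2", "Level 3", "Level 4")

# Mean POA values transcribed from the table above.
POA_MEANS = {
    "B-3": (0.522, 0.448, 0.434, 0.325, 0.306),
    "Overall task": (0.421, 0.396, 0.386, 0.359, 0.360),
}


def reduction_vs_baseline(values):
    """Percentage reduction of each level relative to Level 0 (positive = lower than Level 0)."""
    baseline = values[0]
    return [100.0 * (baseline - v) / baseline for v in values]


def lowest_level(values):
    """Name of the level with the smallest mean."""
    return LEVELS[min(range(len(values)), key=values.__getitem__)]


if __name__ == "__main__":
    for row, values in POA_MEANS.items():
        changes = ", ".join(
            f"{lvl}: {pct:.1f}%"
            for lvl, pct in zip(LEVELS[1:], reduction_vs_baseline(values)[1:])
        )
        print(f"{row}: lowest at {lowest_level(values)}; {changes}")
```

On these means, B-3 is lowest at Level 4, about 41% below Level 0. The overall task is lowest at Level 3, ahead of Level 4 by only 0.001.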

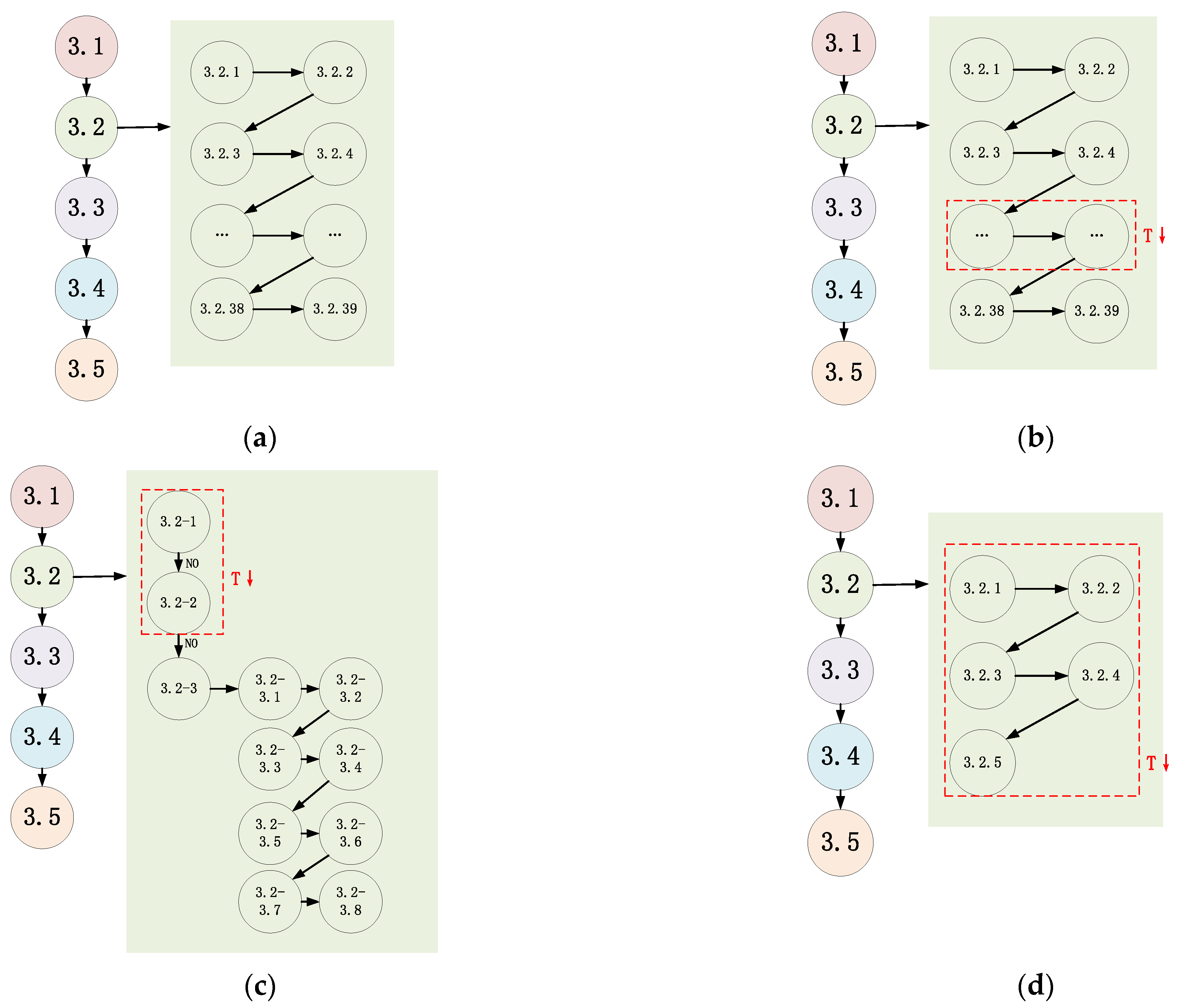

| Operation Step | Sub-step | Operation Contents |
|---|---|---|
| 3.1 | | View task sequence: “Target facility determination” |
| 3.2 | 3.2.1 | View information item 1 on the right side of the comprehensive situation |
| | 3.2.2 | View information item 2 on the right side of the comprehensive situation |
| | … | … |
| | 3.2.39 | View information item 39 on the right side of the comprehensive situation |
| 3.3 | | Click on the dropdown menu |
| 3.4 | | Choose an answer |
| 3.5 | | Confirm the answer |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, X.; Pang, L.; Miao, D.; Yan, H.; Cao, X.; Wu, X. Evaluation of Intelligent Auxiliary Information Presentation Mode for Collaborative Flight Formation. Aerospace 2025, 12, 57. https://doi.org/10.3390/aerospace12010057