A Study on Chatbot Development Using No-Code Platforms by People with Disabilities for Their Peers at a Sheltered Workshop

Abstract

1. Introduction

- (a) From the perspective of product development as citizen developers: Are LCNC platforms accessible and usable for people with disabilities working in sheltered workshops? Additionally, what types of training could further support them in using these platforms effectively?

- (b) From the end users’ perspective: Are the products developed using LCNC platforms (chatbots in this study) accessible and user-friendly for individuals with disabilities working in sheltered workshops?

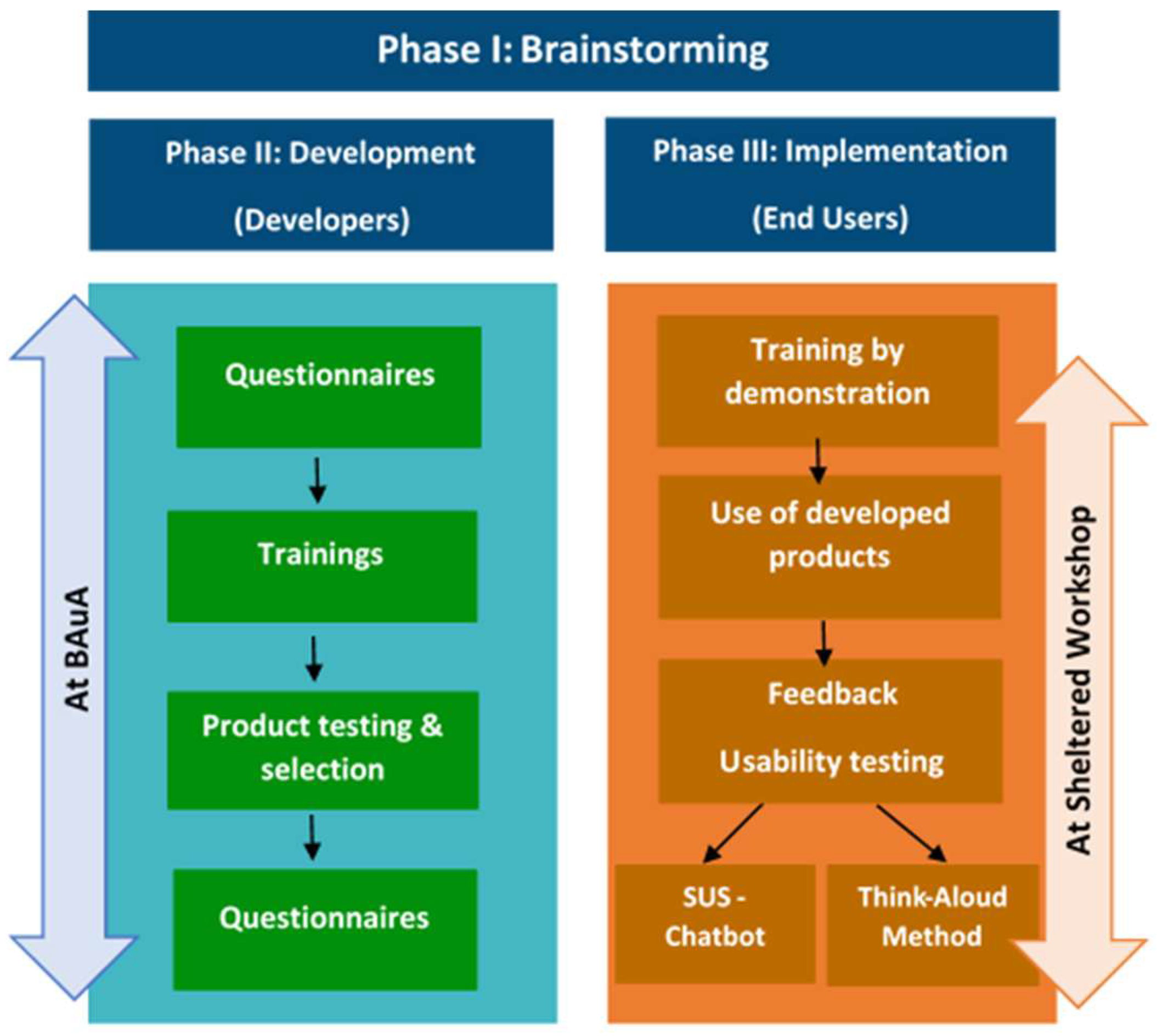

2. Materials and Methods

2.1. Participants

- (1) Participants who were trained in the use of the NC platform and developed chatbots (hereafter referred to as Developers). These individuals attended a week of training sessions at the BAuA. A female supervisor (hereafter referred to as Supervisor 1) accompanied the participants for the first three days, and a male supervisor (hereafter referred to as Supervisor 2) accompanied them for the rest of the week. The supervisors changed because Supervisor 1 worked only part-time at the sheltered workshop and was not available for the whole week.

- (2) Other sheltered workshop employees with disabilities who use the developed chatbots in their daily work at the sheltered workshop (hereafter referred to as End Users).

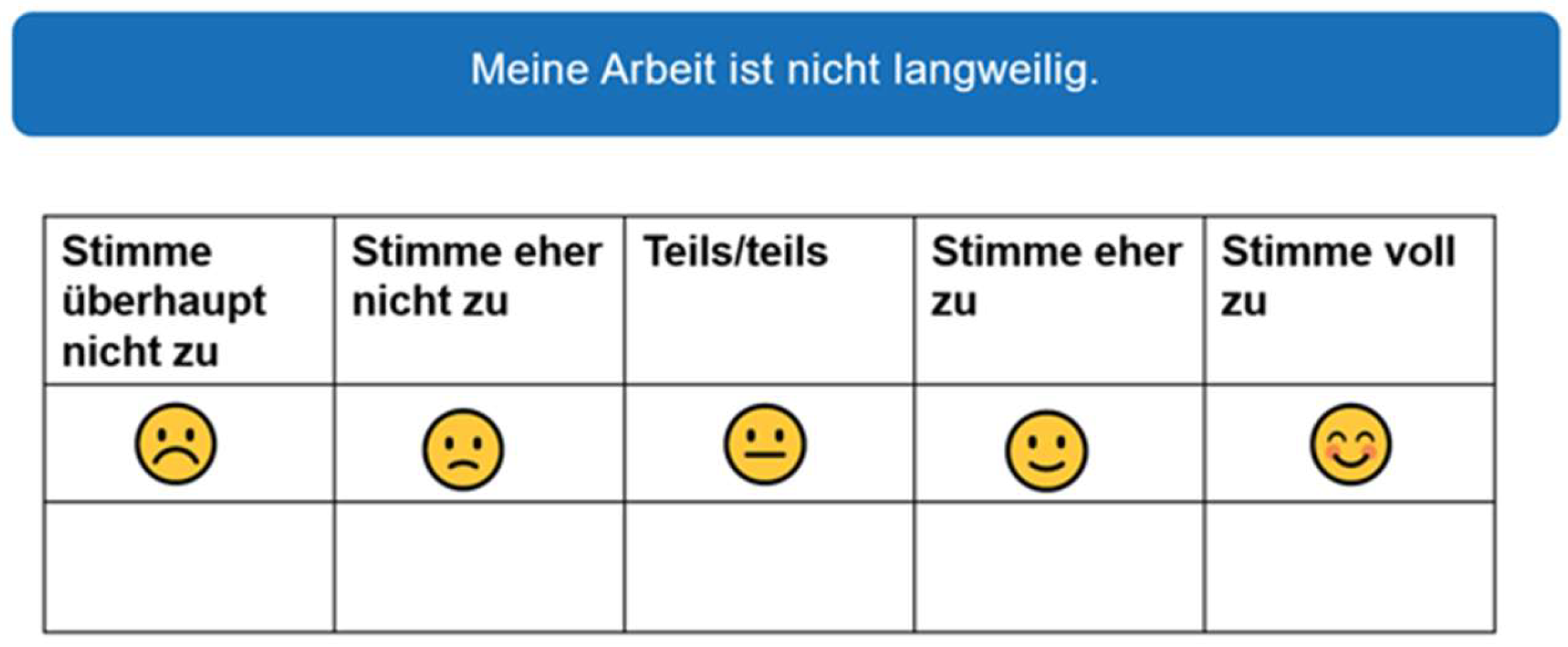

2.2. Questionnaires (Only for the Developers)

- For the Component “Task”

- For the Component “Technology”

- For the Component “Person”

2.3. Study Phases

2.3.1. Phase I: Pre-Study

Step 1: Introduction of NC Platforms to the Sheltered Workshop

Step 2: Collaborative Brainstorming with Employees and Supervisors in the Sheltered Workshops—Identifying Requirements and Selecting the Product and Platform

Step 3: Selection of Chatbots for the Study

Step 4: Recruitment

- Task-oriented qualification (German: tätigkeitsorientierte Qualifizierung): Focuses on skills required for various tasks across one or more work areas.

- Workplace-oriented qualification (German: arbeitsplatzorientierte Qualifizierung): Focuses on skills needed for specific workplaces within a work area.

- Field-oriented qualification (German: berufsfeldorientierte Qualifizierung): Covers all skills required in a particular work area of the workshop.

- Profession-oriented qualification (German: berufsbildorientierte Qualifizierung): Follows the content of a recognized professional occupation.

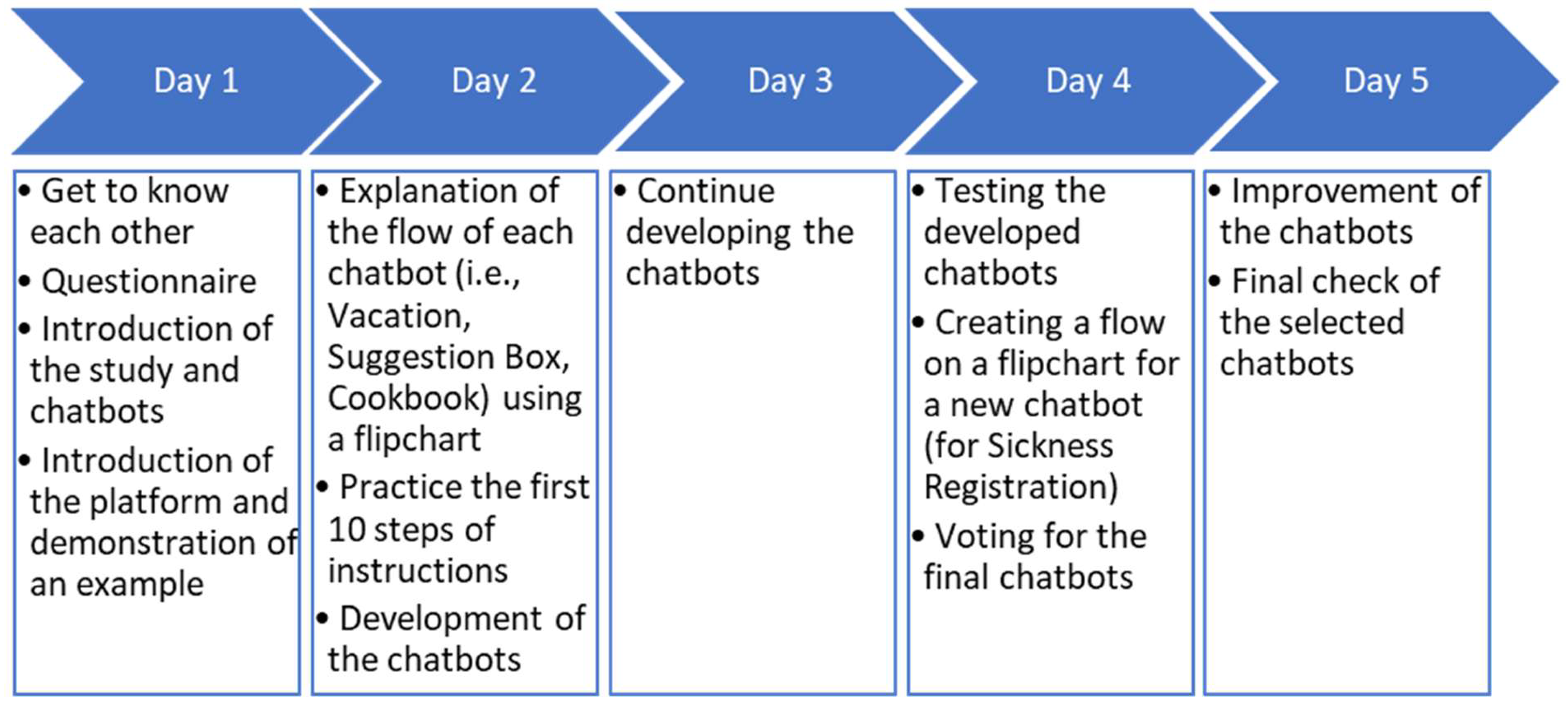

2.3.2. Phase II: Product Development—Developers

Setting

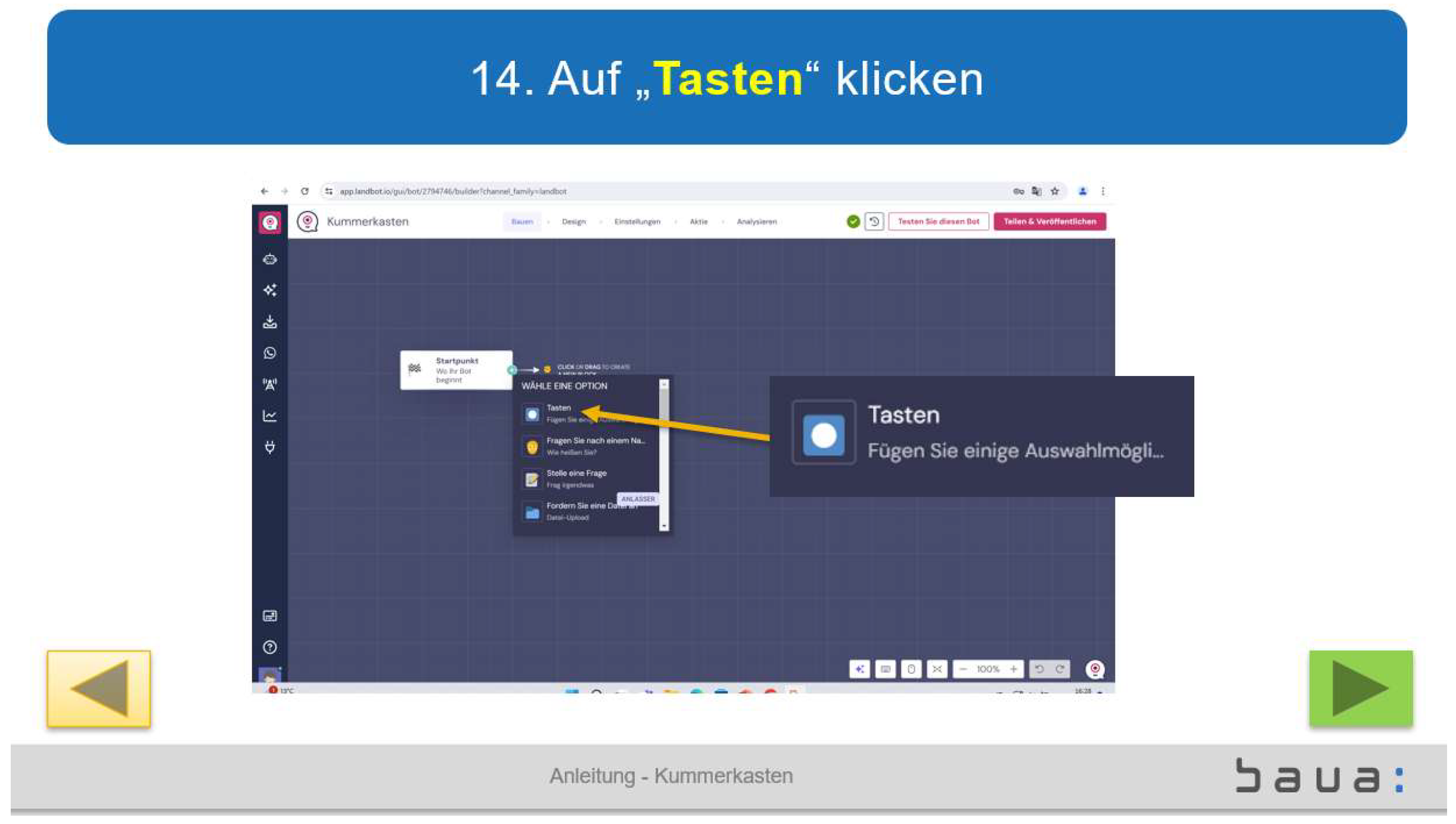

Training Material (For Three Pre-Defined Chatbots)

2.3.3. Phase III: Implementation in the Sheltered Workshop

3. Results

3.1. Results—Developers

3.1.1. Qualitative Results

Developing a New Chatbot Without Instruction (The 4th Chatbot)

- What was good about this week? Everyone answered “everything” in unison. When asked to elaborate, P3 said “developing chatbots”, P1 said “learning something new”, P2 said “the Vacation chatbot was good”, and P4 and P6 again said “everything”.

- What did I learn? P3 said: “Put yourself in the developers’ shoes and do other tasks for a change”.

- What could be improved? Everyone said “nothing” except P2, who said “more Emojis”.

- What do you take with you? P1 said “new skills” and P3 said “Experience to show the others”.

- What was neglected? P6 said “the breaks” and P3 immediately said “no, the breaks were good, I like it better here than in the workshops, much quieter, many of us go home [from the sheltered workshop] with a headache, I can concentrate well here”. P1 added “[at the sheltered workshop] there is a lot of running around, no concentration”, P2 added “yes, here is nice”, and P1 continued “yes, here you can work well”.

3.1.2. Quantitative Results

System Usability Scale (SUS)
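
As a reminder of how a SUS value on the 0–100 scale is obtained, the following is a minimal sketch of the standard scoring rule for the ten-item questionnaire: odd-numbered items contribute the answer minus 1, even-numbered items contribute 5 minus the answer, and the sum is multiplied by 2.5. The function name `sus_score` and the example answers are hypothetical and are not data from this study; whether the study adapted the procedure in any way is not stated here.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Return the 0-100 SUS score for one respondent's ten answers (each 1-5)."""
    if len(responses) != 10:
        raise ValueError("SUS has exactly 10 items")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each answer must be on the 1-5 scale")

    total = 0
    for item_number, answer in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += answer - 1  # odd items are positively worded
        else:
            total += 5 - answer  # even items are negatively worded
    return total * 2.5  # rescale the 0-40 sum to 0-100


# Hypothetical answers for one respondent (not taken from the study).
example = [5, 1, 5, 2, 4, 1, 5, 1, 4, 2]
print(sus_score(example))  # 90.0
```

A sample-level result such as a mean and standard deviation is then computed over the per-respondent scores.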

3.2. Results—End Users

3.2.1. Qualitative Results

3.2.2. Quantitative Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| LCNC | low-code and no-code platforms |
| LC | low-code |
| NC | no-code |
| SR | Sickness Registration chatbot |
| SUS | System Usability Scale |


| Participant | Age (Years) | Gender | Education * | Disability | Tasks at the Sheltered Workshop |
|---|---|---|---|---|---|
| P1 | 18 | Female | Field-oriented qualification | Physical and developmental disability | Packaging and assembly of products |
| P2 | 19 | Male | Workplace-oriented qualification | Intellectual and developmental disability | Packaging and assembly of products |
| P3 | 20 | Male | Workplace-oriented qualification | Intellectual disability | Packaging and assembly of products |
| P4 | 23 | Female | Workplace-oriented qualification | Cognitive disability | Packaging and assembly of products |
| P5 | 27 | Female | Field-oriented qualification | Intellectual disability | Packaging and assembly of products |
| P6 | 20 | Male | Workplace-oriented qualification | Intellectual and developmental disability | Housekeeping department |

| Group | Members | Day 2 | Day 3 | Day 4 |
|---|---|---|---|---|
| Group 1 | P1 and P6 | Chatbot 1: Vacation; Chatbot 2: Suggestion Box | Chatbot 1: Vacation; Chatbot 2: Cookbook | Chatbot 1: Sickness Registration (SR); Chatbot 2: SR |
| Group 2 | P2 and P3 | Chatbot 1: Cookbook; Chatbot 2: Vacation | Chatbot 1: Vacation; Chatbot 2: Suggestion Box | Chatbot 1: SR; Chatbot 2: SR |
| Group 3 * | P4 and P5 | Chatbot 1: Suggestion Box; Chatbot 2: Vacation | Chatbot 1: Vacation; Chatbot 2: Vacation and Suggestion Box | Chatbot 1: SR; Chatbot 2: SR |

| Participant | Suggestion Box | Cookbook | Sickness Registration | Vacation | Voted for Implementation |
|---|---|---|---|---|---|
| P1 | 4 | 5 | 5 | 4.5 | Cookbook and SR |
| P2 | 5 | 4 | 3 | 3.5 | Suggestion Box |
| P3 | 5 | 5 | 3 | 4 | Vacation |
| P4 | 5 | 5 | 3 | 4.5 | - |
| P5 | 4.5 | 5 | 5 | 5 | - |
| P6 | 5 | 5 | 3 | 3 | - |
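
To compare the four chatbots at a glance, the per-participant ratings in the table above can be averaged per chatbot. The snippet below is only an illustrative sketch: it assumes the ratings share one scale on which higher is better (the scale is not stated in this table), and the averages it prints are simple arithmetic on the listed values, not results reported by the authors. The variable names are hypothetical.

```python
from statistics import mean

# Ratings copied from the table above, in participant order P1..P6.
ratings = {
    "Suggestion Box": [4, 5, 5, 5, 4.5, 5],
    "Cookbook": [5, 4, 5, 5, 5, 5],
    "Sickness Registration": [5, 3, 3, 3, 5, 3],
    "Vacation": [4.5, 3.5, 4, 4.5, 5, 3],
}

# Mean rating per chatbot, rounded to two decimals for display.
mean_ratings = {name: round(mean(scores), 2) for name, scores in ratings.items()}

# Chatbot names ordered from highest to lowest mean rating.
ranked = sorted(mean_ratings, key=mean_ratings.get, reverse=True)

for name in ranked:
    print(f"{name}: {mean_ratings[name]}")
```

With six raters, a single participant's score shifts a mean noticeably, so the ordering should be read as descriptive only.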

| Questionnaire | n | Mean | Median | SD |
|---|---|---|---|---|
| BSW | | | | |
| Before training | 6 | 3.06 | 3.00 | 0.48 |
| After training | 6 | 3.53 | 3.70 | 0.67 |
| TB | | | | |
| Before training | 6 | 41.33 | 40.5 | 9.99 |
| After training | 6 | 42.67 | 42.5 | 7.78 |
| WDQ | | | | |
| Before training | 6 | | | |
| 1. Task variety | | 3.91 | 3.87 | 0.40 |
| 2. Feedback from job | | 4.02 | 3.83 | 0.56 |
| 3. Problem solving | | 3.75 | 3.62 | 0.31 |
| 4. Skill variety | | 4.25 | 4.12 | 0.67 |
| 5. Equipment use | | 3.33 | 3.33 | 0.47 |
| After training | 6 | | | |
| 1. Task variety | | 4.12 | 4.25 | 0.41 |
| 2. Feedback from job | | 4.11 | 4.83 | 1.25 |
| 3. Problem solving | | 3.37 | 3.50 | 0.41 |
| 4. Skill variety | | 4.04 | 4.12 | 1.04 |
| 5. Equipment use | | 3.44 | 3.33 | 1.08 |
| DTAS | | | | |
| Before training | 6 | | | |
| 1. Perceived usefulness | | 17.67 | 18.50 | 2.87 |
| 2. Perceived ease of use | | 14.00 | 15.50 | 5.02 |
| 3. Attitudes towards usage | | 12.17 | 13.50 | 3.65 |
| 4. Behavioral intention to use | | 9.33 | 10.00 | 1.03 |
| After training | 6 | | | |
| 1. Perceived usefulness | | 14.83 | 16.00 | 6.01 |
| 2. Perceived ease of use | | 15.50 | 16.00 | 4.55 |
| 3. Attitudes towards usage | | 11.83 | 13.00 | 4.30 |
| 4. Behavioral intention to use | | 7.17 | 7.50 | 3.18 |

| Demographics | N (%) |
|---|---|
| Gender | |
| Female | 4 (28.6) |
| Male | 9 (64.3) |
| Missing | 1 (7.1) |
| Age (years) | |
| 18–24 | 12 (85.7) |
| 25–34 | 1 (7.1) |
| 45–54 | 1 (7.1) |
| Mean (SD) | 22.93 (6.9) |
| Disability | |
| Missing | 8 (28.6) |
| Physical disability | 1 (7.1) |
| Developmental disability | 1 (7.1) |
| Mental illness | 1 (7.1) |
| Multiple disability | 3 (21.4) |
| SUS scores | |
| 85–100 | 12 (85.7) |
| Under 85 | 2 (14.3) |
| Mean (SD) | 88.9 (11.2) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hamideh Kerdar, S.; Kirchhoff, B.M.; Adolph, L.; Bächler, L. A Study on Chatbot Development Using No-Code Platforms by People with Disabilities for Their Peers at a Sheltered Workshop. Technologies 2025, 13, 146. https://doi.org/10.3390/technologies13040146
