Microlearning for the Development of Teachers’ Digital Competence Related to Feedback and Decision Making

Abstract

1. Introduction

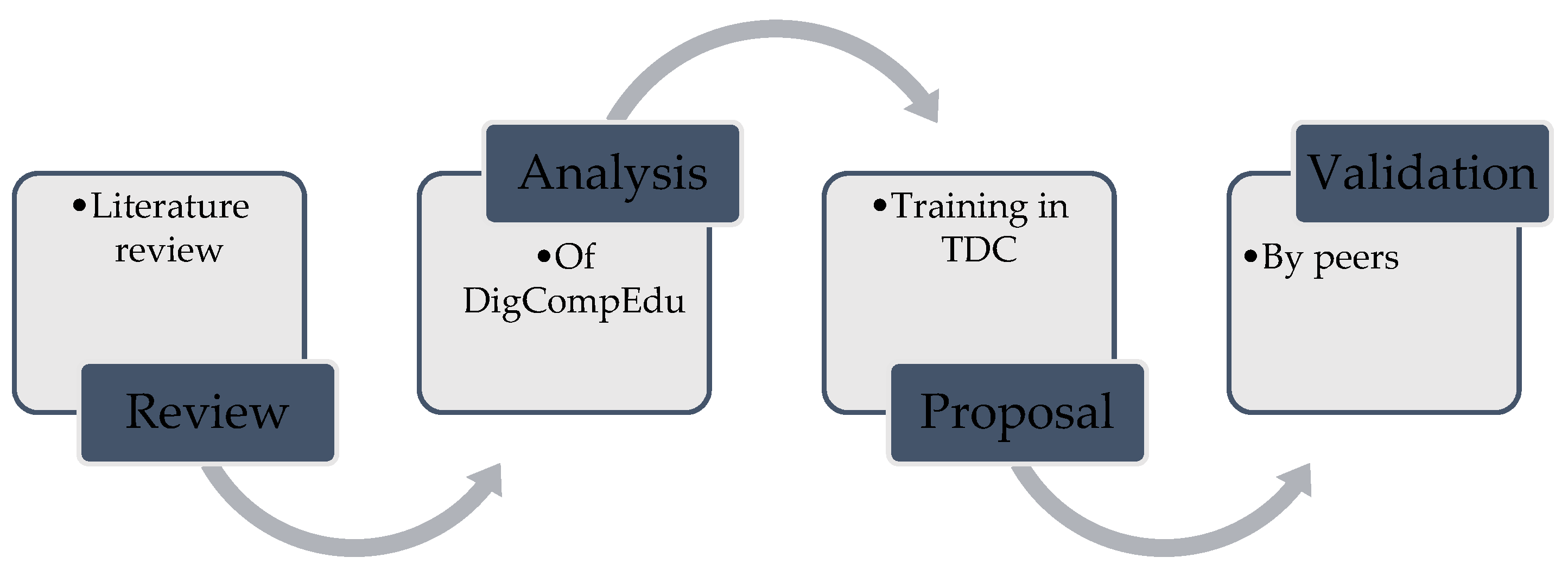

2. Materials and Methods

3. Results

3.1. Literature Review: RQ1

3.2. Literature Review: RQ2

3.3. Content Analysis and Creation of a Training Proposal

3.4. Validation of the Training Proposal

- “Function of the audio format when providing feedback”: The proposal is adjusted by merging the function of the audio format and the cases in which it is relevant into a single item.

- “Feedback from content curation”: The observations indicated that creating resources takes more time than teachers actually have for assessment, so the topic is refocused on building comment banks for feedback.

- “Providing scaffolding in feedback”: The types of scaffolding to be addressed in the microcourse are specified to define their scope. The topics are also reinforced to emphasise the importance of analysing feedback from a dialogical rather than a purely instrumental perspective.

- “Configuring conditions within a Learning Management System (LMS)”: A more detailed description of the scope of automatic feedback through a learning platform is included.

- “Virtual tutors” and “Tools for configuring virtual tutors”: A conceptual clarification is added to specify that these topics address chatbots as feedback tools.

- “Tools for reviewing exercises with artificial intelligence (AI)”: This topic is replaced by “AI tools that contribute to feedback” and “ChatGPT and its use in feedback practices”.

- “Design and delivery of feedback”: Observations on level C2 led to narrowing and refining the scope of the microcourse, which now covers “How to identify the effect of our feedback? Media and instruments” and “Analysis of feedback effects and decision-making”.
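The comment banks adopted for the content-curation topic can be pictured as a small lookup structure. Reusable comments are stored by assessment criterion and performance level, and a teacher assembles a feedback message from them rather than creating a new resource each time. The following is a minimal sketch under stated assumptions: the `CommentBank` class, the criterion names, the levels, and the comments are all hypothetical illustrations and are not taken from the training proposal.

```python
from dataclasses import dataclass, field


@dataclass
class CommentBank:
    """Hypothetical store of reusable feedback comments keyed by (criterion, level)."""
    comments: dict[tuple[str, str], list[str]] = field(default_factory=dict)

    def add(self, criterion: str, level: str, comment: str) -> None:
        # File the comment under its criterion/level key, creating the list if needed.
        self.comments.setdefault((criterion, level), []).append(comment)

    def compose(self, ratings: dict[str, str]) -> str:
        # Build one message from the first stored comment for each rated criterion;
        # criteria with no matching comment are skipped.
        parts = []
        for criterion, level in ratings.items():
            options = self.comments.get((criterion, level))
            if options:
                parts.append(f"{criterion}: {options[0]}")
        return "\n".join(parts)


# Hypothetical example content.
bank = CommentBank()
bank.add("Argumentation", "developing", "Support each claim with at least one source.")
bank.add("Argumentation", "achieved", "Your claims are well supported; consider counterarguments.")
bank.add("Structure", "developing", "Add a short conclusion that links back to your thesis.")

print(bank.compose({"Argumentation": "developing", "Structure": "developing"}))
```

Keying comments by criterion and level mirrors the analytic rubrics covered in M3, so the same rubric rating can select a ready-made comment; a teacher would still personalise the assembled text before sending it.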

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Competence Level | Indicators | Proposed Microcourses | Themes of the Microcourses |
|---|---|---|---|
| A2 (Explorer): Use of digital technologies to configure feedback. | I use digital technologies to obtain an overview of students’ progress, which I use as a basis for offering suggestions and advice. | Block 1: How to select technological tools for providing learning feedback? M1. What is feedback? M2. What tools can help me provide feedback? M3. Providing feedback using rubrics and checklists. | M1. Foundations of feedback. Feedback cycle. Levels of feedback customisation. Elements of feedback. Feedback formats. M2. Tools associated with LMSs. Tools associated with Microsoft. Tools associated with Google. M3. Design and configuration of holistic rubrics for providing feedback. Design and configuration of analytical rubrics for providing feedback. |
| B1 (Integrator): Use of digital technologies to provide feedback. | I use digital technology to grade and provide feedback on electronically submitted assignments. I assist students and/or parents in accessing information about student performance using digital technologies. | Block 2: How to provide feedback at an introductory dialogic level? M4. Providing feedback on objective assessments. M5. Providing feedback through audio. M6. Providing feedback through video and screencasts. | M4. Designing feedback for multiple-choice questions. Designing feedback for matching questions. Designing feedback for true/false questions. Designing feedback for fill-in-the-blank questions. M5. Role of the audio format in feedback. When to use audio for feedback. Considerations in designing audio feedback. Agile tools for providing audio feedback. M6. Role of the video format in feedback. When to use video or screencasts for feedback. Considerations in designing video or screencast feedback. Agile tools for providing video or screencast feedback. |
| B2 (Expert): Use of digital data to enhance the effectiveness of feedback and support. | I adapt my teaching and assessment practices based on the data generated by the digital technologies I use. I use these data to provide personalised feedback and offer differentiated support to students. | Block 3: How to provide feedback at an advanced dialogic level? M7. Providing feedback through enriched digital content. M8. Learning data analysis. M9. Student feedback literacy and scaffolding. | M7. Feedback from content curation. Tools for content curation. Creating feedback banks. M8. Available metrics in Moodle that contribute to feedback. Learning analytics reports. Digital tools that provide learning metrics. M9. Validating the clarity of feedback. Scaffolding in feedback. How to engage students to make the most of feedback. |
| C1 (Leader): Use of digital technologies to personalise feedback and support. | I help students identify areas for improvement and collaboratively develop learning plans to address these areas based on the available evidence. | Block 4: How to provide feedback using conditional systems? M10. Configuring conditions and learning paths in an LMS. M11. Gamifying an LMS as a feedback strategy. | M10. Planning learning paths based on results (automated feedback). Configuring conditions within an LMS. M11. Planning the gamified path. Level Up, Stash, and Game extensions. Use of badges. |
| C2 (Pioneer): Use of digital data to evaluate and improve teaching. Designing new systems for providing feedback. | I reflect, debate, redesign, and innovate teaching strategies based on the digital evidence I find regarding students’ preferences and needs. | Block 5: Providing feedback from an artificial intelligence (AI) perspective. M12. AI tools for feedback. M13. Investigating our feedback. | M12. Chatbots as feedback tools. AI tools that contribute to feedback. ChatGPT and its use in feedback practices. M13. Why study the effect of our feedback? How to identify the effect of our feedback? Media and instruments. Analysing the effects of feedback and decision making. |
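Block 4 builds on conditional release in an LMS: the result of one activity determines which resource, and which automated feedback message, a student receives next. The sketch below models that rule in plain Python to make the logic explicit. It is not Moodle's API or any LMS configuration format; the `Condition` class, the activity and resource names, and the 60% cut-off are hypothetical, chosen only to be similar in spirit to grade-based access restrictions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Condition:
    """Hypothetical rule: release `target` with an automated message when the
    score on `activity` is >= min_pct and, if max_pct is set, < max_pct."""
    activity: str
    min_pct: float
    max_pct: Optional[float]  # None means no upper bound
    target: str
    feedback: str

    def is_met(self, scores: dict[str, float]) -> bool:
        score = scores.get(self.activity)
        if score is None:  # activity not attempted yet: nothing is released
            return False
        below_max = self.max_pct is None or score < self.max_pct
        return score >= self.min_pct and below_max


# Hypothetical learning path: one quiz routes students to remedial or extension work.
LEARNING_PATH = [
    Condition("Quiz 1", 0.0, 60.0, "Review video: key concepts",
              "Revisit the key concepts before retrying the quiz."),
    Condition("Quiz 1", 60.0, None, "Extension task: case study",
              "Well done. Apply the concepts to the case study."),
]


def next_steps(scores: dict[str, float]) -> list[tuple[str, str]]:
    """Return the (resource, automated feedback) pairs unlocked by the scores."""
    return [(c.target, c.feedback) for c in LEARNING_PATH if c.is_met(scores)]


print(next_steps({"Quiz 1": 45.0}))
```

Half-open score ranges (lower bound inclusive, upper bound exclusive) ensure that a score of exactly 60 falls on one branch only, so each student receives a single, unambiguous message.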

| Category | Characteristics | Frequency (n) |
|---|---|---|
| Understanding of feedback | Studies support the idea that feedback is useful when students understand it and see it as an aid to their learning. | 37 |
| Problematic final feedback | Studies question the focus of feedback on the final task or assignment and the tendency to provide comments without ensuring learning. | 31 |
| Problematic use of feedback | Studies examine why feedback is not effective and the need to educate students in its use. They identify a tendency among students to see feedback as having little value for their learning process. | 21 |
| Online feedback | Studies analyse the role of technology in the development of feedback. The findings relate to how technology helps streamline and effectively manage feedback. | 20 |
| Definition of a model | Studies propose a feedback model with specific characteristics and aim to validate it through a methodological design. | 18 |
| Cooperation | Studies explore the importance of peer feedback, the results it produces, and how to design such activities. | 17 |
| Presentation of a tool | Studies indicate the digital tools used to explore feedback. Two specific tools mentioned are Socrative and ExamVis. | 13 |
| Self-regulation | Studies indicate the contributions of feedback to student self-regulation. | 11 |
| Integration of artificial intelligence (AI) | Studies address AI and adaptive systems, as well as other trends such as 3D modelling for interaction with real objects that provide feedback. | 8 |
| Format of feedback | Studies indicate the feedback format used (audio, video, or a combination of these with text). | 7 |
| Systematic literature review | Systematic reviews on this topic were identified. | 5 |
| Instrument | Studies provide an instrument for evaluating feedback. | 3 |
| Teacher training | Studies propose teacher training strategies on feedback. | 3 |

| Level | Topic | Average |
|---|---|---|
| B1 | Function of the audio format when providing feedback. | 2.3 |
| B2 | Providing scaffolding in feedback. | 2.3 |
| B2 | Provide feedback scaffolding. | 2.1 |
| C1 | Configuration of conditions within an LMS (Learning Management System). | 2.4 |
| C2 | Virtual tutors. | 2.1 |
| | Tools for configuring virtual tutors. | 2.1 |
| | Tools for review of exercises with AI (Gradescope, Cognni, InVideo). | 2.4 |
| | Global relevance. | 2.4 |
| | Feedback design. | 2.1 |
| | Feedback delivery. | 2.1 |
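Each average above summarises the experts' ratings of a topic, and the lower-rated topics are the ones adjusted during validation. A minimal sketch of that aggregation step follows, assuming hypothetical individual ratings and a hypothetical revision cut-off of 2.5; neither the raw ratings, the rating scale, nor a formal threshold is reported in this section, so the numbers below are illustrative only and do not reproduce the study's data.

```python
from statistics import mean

# Hypothetical individual expert ratings per topic (not the study's raw data).
RATINGS = {
    "Function of the audio format when providing feedback": [3, 2, 2],
    "Virtual tutors": [2, 2, 3, 1],
    "Feedback design": [3, 3, 2],
}

THRESHOLD = 2.5  # hypothetical cut-off: topics averaging below it are revised


def summarise(ratings: dict[str, list[int]],
              threshold: float = THRESHOLD) -> dict[str, tuple[float, bool]]:
    """Map each topic to (mean rating rounded to one decimal, needs revision?)."""
    summary = {}
    for topic, scores in ratings.items():
        avg = float(mean(scores))
        # Compare the unrounded mean so rounding cannot move a topic across the cut-off.
        summary[topic] = (round(avg, 1), avg < threshold)
    return summary


for topic, (avg, revise) in summarise(RATINGS).items():
    print(f"{topic}: {avg}{' (revise)' if revise else ''}")
```

Reporting the rounded mean while deciding on the unrounded value keeps the printed table consistent with one decimal place, as in the table above, without letting rounding change which topics are flagged.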

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Betancur-Chicué, V.; García-Valcárcel Muñoz-Repiso, A. Microlearning for the Development of Teachers’ Digital Competence Related to Feedback and Decision Making. Educ. Sci. 2023, 13, 722. https://doi.org/10.3390/educsci13070722
