Acceptance of AI in Semi-Structured Decision-Making Situations Applying the Four-Sides Model of Communication—An Empirical Analysis Focused on Higher Education

Abstract

1. Introduction

2. Related Work

2.1. Prerequisites for Machine–Human Communication

- Capabilities: AI systems are typically designed to perform specific tasks and are not capable of the same level of understanding and general intelligence as a human being. This means that an AI may be able to perform certain tasks accurately but may not be able to understand or respond to complex or abstract concepts in the same way that a human can [4].

- Responses: AI systems are typically programmed to respond to specific inputs in a predetermined way. This means that the responses of an AI may be more limited and predictable than those of a human, who is capable of a wide range of responses based on their own experiences and understanding of the world [5].

- Empathy: AI systems do not have the ability to feel empathy or understand the emotions of others in the same way that a human can. This means that an AI may not be able to respond to emotional cues or provide emotional support in the same way that a human can [6].

- Learning: While AI systems can be trained to perform certain tasks more accurately over time, they do not have the ability to learn and adapt in the same way that a human can. This means that an AI may not be able to adapt to new situations or learn from its own experiences in the same way that a human can [7].

- Trust: Humans are highly critical of any failure committed by an artificial system. After a trust violation, the level of trust in information delivered by an AI is clearly lower than it would be if the same information had been delivered by a human [8].

2.2. The Four-Sides Model in Communication

2.3. Technology Acceptance Model

2.4. AI in Higher Education

3. Research Strategy

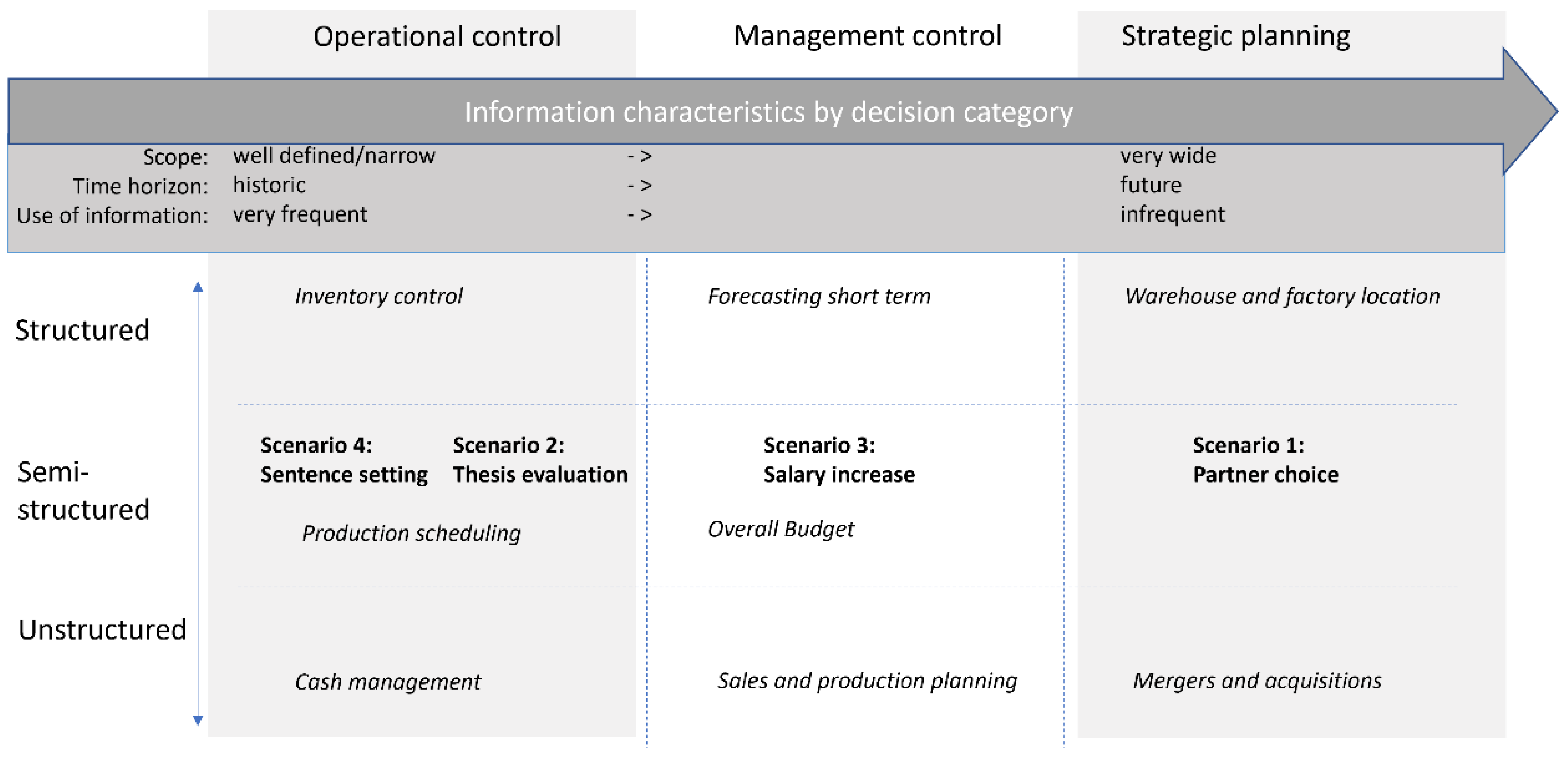

- Scenario 1: Partner choice: An AI in the form of a dating app independently selects the life partner for the person concerned.

- Scenario 2: Thesis evaluation: The thesis (bachelor/master) is marked by an AI.

- Scenario 3: Salary increase: Intelligent software decides whether the person concerned receives a salary increase.

- Scenario 4: Sentence setting: An AI decides in court on the sentence for the person concerned.

4. Findings and Discussion

4.1. Applicability

“Yes, even up to the preparation of the decision, but at the end, someone has to say, I take this. Even if the AI says there’s someone not getting money, then there should be someone there to say, ‘Okay, I can understand why the AI is doing this, and I stand behind it and represent that as a boss. And not hide behind the AI and say, I would have given you more money, but I’m sorry, the AI decided otherwise.’ Very bad!” (Interviewee F-5, M, pos. 89)

4.2. Extensions

4.3. Evaluations

“There, I would actually be happy if that were the case, because that’s actually rule-based and comprehensible and consistent, let’s call it that. [...] from the purely scientific perspective and also from the perspective of equality, I think such a procedure makes sense.” (Interviewee I-5, M, pos. 52)

“I am almost certain that a computer judges more objectively than a human being because it makes rule-based judges. I would perhaps wish that someone who has nothing to do with the work, but in especially good or especially bad cases or also in general simply about the result what the AI delivers with the reasoning again very briefly over it looks, whether that makes sense, so as yes as a last check.” (Interviewee J-3, pos. 39)

5. Further Research

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Samoili, S.; López Cobo, M.; Delipetrev, B.; Martínez-Plumed, F.; Gómez, E.; Prato, G.d. AI Watch: Defining Artificial Intelligence 2.0: Towards an Operational Definition and Taxonomy for the AI Landscape; Publications Office of the European Union: Luxembourg, 2021; ISBN 978-92-76-42648-6.

- Southworth, J.; Migliaccio, K.; Glover, J.; Glover, J.; Reed, D.; McCarty, C.; Brendemuhl, J.; Thomas, A. Developing a model for AI Across the curriculum: Transforming the higher education landscape via innovation in AI literacy. Comput. Educ. Artif. Intell. 2023, 4, 100127.

- Terwiesch, C. Would Chat GPT Get a Wharton MBA?: A Prediction Based on Its Performance in the Operations Management Course. Available online: https://mackinstitute.wharton.upenn.edu/wp-content/uploads/2023/01/Christian-Terwiesch-Chat-GTP-1.24.pdf (accessed on 10 July 2023).

- Korteling, J.E.H.; van de Boer-Visschedijk, G.C.; Blankendaal, R.A.M.; Boonekamp, R.C.; Eikelboom, A.R. Human- versus Artificial Intelligence. Front. Artif. Intell. 2021, 4, 622364.

- Hill, J.; Randolph Ford, W.; Farreras, I.G. Real conversations with artificial intelligence: A comparison between human–human online conversations and human–chatbot conversations. Comput. Hum. Behav. 2015, 49, 245–250.

- Alam, L.; Mueller, S. Cognitive Empathy as a Means for Characterizing Human-Human and Human-Machine Cooperative Work. In Proceedings of the International Conference on Naturalistic Decision Making, Orlando, FL, USA, 25–27 October 2022; Naturalistic Decision Making Association: Orlando, FL, USA, 2022; pp. 1–7.

- Shalev-Shwartz, S.; Ben-David, S. Understanding Machine Learning: From Theory to Algorithms; 14th Printing 2022; Cambridge University Press: Cambridge, UK, 2022; ISBN 978-1-107-05713-5.

- Alarcon, G.M.; Capiola, A.; Hamdan, I.A.; Lee, M.A.; Jessup, S.A. Differential biases in human-human versus human-robot interactions. Appl. Ergon. 2023, 106, 103858.

- Schulz von Thun, F. Miteinander Reden: Allgemeine Psychologie der Kommunikation; Rowohlt: Hamburg, Germany, 1990; ISBN 3499174898.

- Ebert, H. Kommunikationsmodelle: Grundlagen. In Praxishandbuch Berufliche Schlüsselkompetenzen: 50 Handlungskompetenzen für Ausbildung, Studium und Beruf; Becker, J.H., Ebert, H., Pastoors, S., Eds.; Springer: Berlin, Germany, 2018; pp. 19–24. ISBN 3662549247.

- Bause, H.; Henn, P. Kommunikationstheorien auf dem Prüfstand. Publizistik 2018, 63, 383–405.

- Richards, I.A. Practical Criticism: A Study of Literary Judgment; Kegan Paul, Trench, Trubner & Co., Ltd.: London, UK, 1930.

- Czernilofsky-Basalka, B. Kommunikationsmodelle: Was leisten sie? Fragmentarische Überlegungen zu einem weiten Feld. Quo Vadis Rom.—Z. Für Aktuelle Rom. 2014, 43, 24–32.

- Davis, F.D. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Q. 1989, 13, 319.

- García de Blanes Sebastián, M.; Sarmiento Guede, J.R.; Antonovica, A. TAM versus UTAUT models: A contrasting study of scholarly production and its bibliometric analysis. TECHNO REVIEW Int. Technol. Sci. Soc. Rev./Rev. Int. De Tecnol. Cienc. Y Soc. 2022, 12, 1–27.

- Ajibade, P. Technology Acceptance Model Limitations and Criticisms: Exploring the Practical Applications and Use in Technology-Related Studies, Mixed-Method, and Qualitative Researches; University of Nebraska-Lincoln: Lincoln, NE, USA, 2018.

- Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User Acceptance of Information Technology: Toward a Unified View. MIS Q. 2003, 27, 425.

- Granić, A. Technology Acceptance and Adoption in Education. In Handbook of Open, Distance and Digital Education; Zawacki-Richter, O., Jung, I., Eds.; Springer Nature: Singapore, 2023; pp. 183–197. ISBN 978-981-19-2079-0.

- Zhang, Y.; Liang, R.; Qi, Y.; Fu, X.; Zheng, Y. Assessing Graduate Academic Scholarship Applications with a Rule-Based Cloud System. In Artificial Intelligence in Education Technologies: New Development and Innovative Practices; Cheng, E.C.K., Wang, T., Schlippe, T., Beligiannis, G.N., Eds.; Springer Nature: Singapore, 2023; pp. 102–110. ISBN 978-981-19-8039-8.

- Schlippe, T.; Stierstorfer, Q.; Koppel, M.T.; Libbrecht, P. Artificial Intelligence in Education Technologies: New Development and Innovative Practices; Cheng, E.C.K., Wang, T., Schlippe, T., Beligiannis, G.N., Eds.; Springer Nature: Singapore, 2023; ISBN 978-981-19-8039-8.

- Kaur, S.; Tandon, N.; Matharou, G. Contemporary Trends in Education Transformation Using Artificial Intelligence. In Transforming Management Using Artificial Intelligence Techniques, 1st ed.; Garg, V., Agrawal, R., Eds.; CRC Press: Boca Raton, FL, USA, 2020; pp. 89–104. ISBN 9781003032410.

- Zhai, X.; Chu, X.; Chai, C.S.; Jong, M.S.Y.; Istenic, A.; Spector, M.; Liu, J.-B.; Yuan, J.; Li, Y.; Cai, N. A Review of Artificial Intelligence (AI) in Education from 2010 to 2020. Complexity 2021, 2021, 8812542.

- Razia, B.; Awwad, B.; Taqi, N. The relationship between artificial intelligence (AI) and its aspects in higher education. Dev. Learn. Organ. Int. J. 2023, 37, 21–23.

- Saunders, M.; Lewis, P.; Thornhill, A. Research Methods for Business Students, 7th ed.; Pearson Education Limited: Essex, UK, 2016; ISBN 978-1292016627.

- Adeoye-Olatunde, O.A.; Olenik, N.L. Research and scholarly methods: Semi-structured interviews. J. Am. Coll. Clin. Pharm. 2021, 4, 1358–1367.

- Dreyfus, S.E. The Five-Stage Model of Adult Skill Acquisition. Bull. Sci. Technol. Soc. 2004, 24, 177–181.

- Glaser, B.G.; Strauss, A.L. The Discovery of Grounded Theory: Strategies for Qualitative Research; Routledge: London, UK, 2017; ISBN 0-202-30260-1.

- Corbin, J.M.; Strauss, A. Grounded theory research: Procedures, canons, and evaluative criteria. Qual. Sociol. 1990, 13, 3–21.

- Gorry, A.G.; Morton, M.S. A Framework for Information Systems; Working Paper Sloan School of Management; MIT: Cambridge, MA, USA, 1971.

- Vollaard, B.; van Ours, J.C. Bias in expert product reviews. J. Econ. Behav. Organ. 2022, 202, 105–118.

- Hu, K. ChatGPT Sets Record for Fastest-Growing User Base—Analyst Note. Available online: https://www.reuters.com/technology/chatgpt-sets-record-fastest-growing-user-base-analyst-note-2023-02-01/ (accessed on 28 April 2023).

| Interview | Age | Sex | Position Held | Focus and AI Knowledge | Management Experience | Skill Level |
|---|---|---|---|---|---|---|
| A | 30 | Male | Founder, tech-startup | AI in finance | Yes | Proficient |
| B | 48 | Male | Technical officer and team leader | Logging and monitoring based on AI | Yes | Competent |
| C | 25 | Male | Software developer | Coding, AI on project base | No | Advanced beginner |
| D | 32 | Male | Technical officer and team leader | AI-user | Yes | Advanced beginner |
| E | 30 | Female | Art director and XR, 3D artist | AI on project base | No | Competent |
| F | 61 | Male | Founder and managing shareholder | Strategy consultant and AI expert | Yes | Expert |
| G | 38 | Male | Managing director, author, lecturer | Math, statistics, and AI | Yes | Proficient |
| H | 31 | Male | Machine-learning expert | AI research and development | No | Expert |
| I | 47 | Male | Leader/partner, big data and advanced analytics advisor | Big data and advanced analytics | Yes | Expert |
| J | 27 | Male | Research associate and engineer | Software and AI | No | Competent |
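The composition of the interview sample can be summarized with a few lines of Python. The following is only an illustrative sketch: the values are transcribed from the interviewee table, while the names `PARTICIPANTS`, `mean_age`, `skill_distribution`, and `managers` are hypothetical helpers introduced here and are not part of the study.

```python
from collections import Counter
from statistics import mean

# (interview, age, sex, management experience, skill level),
# transcribed from the interviewee table; names are illustrative only.
PARTICIPANTS = [
    ("A", 30, "Male", True, "Proficient"),
    ("B", 48, "Male", True, "Competent"),
    ("C", 25, "Male", False, "Advanced beginner"),
    ("D", 32, "Male", True, "Advanced beginner"),
    ("E", 30, "Female", False, "Competent"),
    ("F", 61, "Male", True, "Expert"),
    ("G", 38, "Male", True, "Proficient"),
    ("H", 31, "Male", False, "Expert"),
    ("I", 47, "Male", True, "Expert"),
    ("J", 27, "Male", False, "Competent"),
]


def mean_age(participants):
    """Arithmetic mean of the interviewees' ages."""
    return mean(age for _, age, _, _, _ in participants)


def skill_distribution(participants):
    """Number of interviewees per self-reported skill level."""
    return Counter(skill for *_, skill in participants)


def managers(participants):
    """Interview labels of participants with management experience."""
    return [label for label, _, _, has_mgmt, _ in participants if has_mgmt]


if __name__ == "__main__":
    print(f"Mean age: {mean_age(PARTICIPANTS):.1f}")
    print(dict(skill_distribution(PARTICIPANTS)))
    print("Management experience:", managers(PARTICIPANTS))
```

Such a summary makes the skew of the sample visible at a glance, for example the share of participants with management experience or the spread across skill levels.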

| TAM Logic | Description | Frequency (All 4 Scenarios) |
|---|---|---|
| Perceived usefulness | | |
| Established | Is the application already established? | 15 |
| Use | What is the benefit to the person when the AI makes the decision? | 8 |
| Discrimination experience/fears | Experiences/fears of discrimination (gender, origin, religion) have an influence on acceptance. | 19 |
| Transparency | Traceability/transparency regarding the decision-making process. | 136 |
| Expertise | Background knowledge. | 14 |
| Complexity | Is it a very complex use case with minor consequences or complex with very serious consequences? | 17 |
| Credibility | Avoiding responsibility through the utilization of AI in decision-making is discouraged. | 8 |
| Perceived ease of use | | |
| Objection options | What options does the person have to appeal the decision? | 10 |
| Understanding | The affected person wants to feel understood in his/her own position. | 6 |
| Attitude towards using | | |
| Moral/Ethics | Is AI use morally defensible? | 14 |
| Data quality | How good are the data provided to the AI? Are they sufficient? | 44 |
| AI-capability | Does the person have confidence in the AI's technical capabilities? | 44 |
| Data security | Is your own data protected in the application? | 10 |
| Behavioral intention of use | | |
| Experience and habit | Experience of other people, statistics; habits lead to acceptance. | 7 |
| Expectation | What are the person's expectations of the AI? Are they realistic? | 2 |
| Additional factors | | |
| Assessment | How close is the decision result to your own assessment? | 7 |
| Awareness of AI involvement | The person concerned should be aware that he or she is communicating with an AI. | 2 |
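To make the relative weight of the coded factors easier to see, the frequencies in the table can be aggregated per TAM category. The sketch below is an illustration only, not the authors' analysis procedure: the counts are transcribed from the table, and the names `FREQUENCIES`, `category_totals`, and `factor_shares` are hypothetical.

```python
# Coding frequencies per factor, grouped by TAM category
# (transcribed from the table; all four scenarios combined).
FREQUENCIES = {
    "Perceived usefulness": {
        "Established": 15,
        "Use": 8,
        "Discrimination experience/fears": 19,
        "Transparency": 136,
        "Expertise": 14,
        "Complexity": 17,
        "Credibility": 8,
    },
    "Perceived ease of use": {
        "Objection options": 10,
        "Understanding": 6,
    },
    "Attitude towards using": {
        "Moral/Ethics": 14,
        "Data quality": 44,
        "AI-capability": 44,
        "Data security": 10,
    },
    "Behavioral intention of use": {
        "Experience and habit": 7,
        "Expectation": 2,
    },
    "Additional factors": {
        "Assessment": 7,
        "Awareness of AI involvement": 2,
    },
}


def category_totals(freqs):
    """Sum the factor frequencies within each TAM category."""
    return {cat: sum(factors.values()) for cat, factors in freqs.items()}


def factor_shares(freqs):
    """Share of each factor in the total number of coded mentions."""
    total = sum(category_totals(freqs).values())
    return {
        name: count / total
        for factors in freqs.values()
        for name, count in factors.items()
    }


if __name__ == "__main__":
    for cat, total in category_totals(FREQUENCIES).items():
        print(f"{cat}: {total}")
    shares = factor_shares(FREQUENCIES)
    top = max(shares, key=shares.get)
    print(f"Most frequent factor: {top} ({shares[top]:.1%})")
```

Under this simple aggregation, transparency alone accounts for 136 of the 363 coded mentions, which is more than the entire "Attitude towards using" category (112).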

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Greiner, C.; Peisl, T.C.; Höpfl, F.; Beese, O. Acceptance of AI in Semi-Structured Decision-Making Situations Applying the Four-Sides Model of Communication—An Empirical Analysis Focused on Higher Education. Educ. Sci. 2023, 13, 865. https://doi.org/10.3390/educsci13090865
