Abstract

Chatbots can have a significant positive impact on learning, and there is growing interest in their application in teaching and learning. Self-regulation of learning is fundamental for the development of lifelong learning skills, and for this reason, education should contribute to its development. In this sense, the potential of chatbot technologies for supporting students in self-regulating their learning activity has already been pointed out. The objective of this work is to explore university students’ interactions with the EDUguia chatbot to understand whether there are patterns of use linked to phases of self-regulated learning and academic task completion. This study presents an analysis of conversation pathways with a chatbot tutor designed to enhance self-regulation skills in higher education. Findings on the length, duration, and endpoints of the conversations are reported. In addition, patterns in these pathways and in users’ interactions with the tool are analysed. Some findings are relevant to the link between design and user experience, but they can also be related to implementation decisions. These findings could contribute to the work of other educators, designers, and developers interested in developing a tool with this goal.

1. Introduction

Nowadays, universities seek to improve their educational models by adapting to the needs of their students, for example through the use of Information and Communication Technologies (ICTs). One of these needs is to improve student learning in a sustainable way [1]. One emerging ICT that is especially promising and has received growing interest in recent years is chatbot-based systems [2].

Chatbots, or conversational assistants, are computer programs capable of interacting with the user through language-based interfaces (text or audio) [3]. Specifically, a chatbot is a piece of software (in this case, a virtual assistant) capable of answering a certain number of user questions with the correct answers [4]. Moreover, chatbots not only mimic human conversation but can also offer personalised services [5].

In some cases, the interface used by chatbots is based on the structure of human conversation, which is achieved through natural language processing (NLP). NLP allows algorithms to understand, interpret, and manipulate human language [6]. In this sense, the main objective of a chatbot is to simulate an intelligent human conversation so that the interlocutor has an experience as similar as possible to a conversation with another person [1]. For this reason, chatbots facilitate interactions between users and information in the form of friendly conversations [7] on a certain topic or in a specific domain, in a natural and conversational way, through text and/or voice [8].

In addition to voice-operated chatbots, there are text-based chatbots on websites that help users with tasks such as answering a question, finding a product, or making a booking, to name a few. These programs run continuously, and the conversations between software and users are automated and personalised [7].

The study presented in this paper is part of the research and innovation project Analysis of the effects of feedback supported by digital monitoring technologies on generic competencies (e-FeedSkill). One of the objectives of this project is to evaluate the effectiveness of a chatbot monitoring system for the development of self-regulated learning skills. To achieve this objective, a conversational agent had to be designed and developed. The main idea of this chatbot, named EDUguia, was to support self-regulated learning while students performed a complex task subject to a peer-assessment process. A complex task can be defined as one that involves the activation of knowledge, the management of different types of disciplinary content, and the execution of different competencies [9]. Considering that chatbots are still an emerging technology in educational contexts [10], particularly those aimed at enhancing self-regulated learning skills, the work reported in this paper aims to contribute to the understanding of students’ interactions with chatbots during their learning process.

1.1. Chatbots in Education

Education is a field in which chatbots have the potential to make a significant positive impact on learning [11]. Although their incorporation must be preceded by reflection to define the chatbot’s true purpose, the need to share information and resolve doubts makes these tools very useful in educational environments [1]. For this reason, chatbot technology can be seen as an important innovation for e-learning; in fact, chatbots are proving to be one of the most innovative solutions for bridging the gap between technology and education [4].

Chatbots have been developed to support the educational process in e-learning environments [12]. These systems are known as pedagogical conversational agents and include, for example, chatbots used for educational purposes [2]. Such agents have a long history of use in educational settings: since the early 1970s, pedagogical agents known as intelligent tutors have been developed within digital learning environments [13]. In fact, one of the applications of chatbots is in the field of educational and professional guidance [4]. In this context, the number of chatbots on e-learning platforms to support student learning has recently increased [14]. Even so, the inclusion of chatbots in no way replaces teachers or administrative and service staff; rather, chatbots can take over, complement, or support some of their tasks [1].

Although chatbots in education are at a very early stage [15], recent studies claim that learning through chatbots significantly affects students’ learning outcomes and memory retention [16]. As tutoring systems, chatbots have had a positive and significant impact on student learning across the different educational levels and subjects evaluated [5].

Research by Lindsey et al. [17] revealed that personalised reviews significantly increased course retention compared with current educational practice. Along the same lines, Benotti et al. [18] found that, in a classroom environment, a chatbot system was very effective in improving student retention and engagement.

Villegas et al. [1] asserted that such a system could become the ideal teaching assistant by notifying students of all the activities they must carry out. Other studies have found that these conversational agents also influence motivation, which in turn can facilitate learning; specifically, they can make students’ perceptions of their learning experiences significantly more positive [5,11].

Hadwin et al. [19] found that conversational chat agents could increase student interest, memory retention, and knowledge transmission. Other authors argued that incorporating this tool into distance education environments offers a promising opportunity to improve communication and the overall course experience for students and for the online teachers who teach these courses [20]. A representative example is TecCoBot, a chatbot that not only supports students in reading texts by offering writing assignments and providing automated feedback on them, but also implements a design for self-study activities [21]. In another study, Mokmin and Ibrahim [22] showed how a conversational agent could increase subject literacy (in this case, health literacy), especially among students and young adults. Their study showed a notably low exit rate (less than 37% of users exited the application), highlighting the importance of studying the factors that affect users’ trust in and acceptance of chatbot technology [23]. In this sense, Abd-Alrazaq et al. [24] indicate that a chatbot should be evaluated based on usability, performance, responses, and aesthetics.

These studies show that a chatbot’s capacity to interact with students could increase their interest in the subject by recommending activities aligned with each student’s needs and ways of learning [1]. A chatbot may even make it possible to provide a personalised learning experience: since each student learns at a different pace, the chatbot could adapt to the speed at which that student can learn [4].

Thus, the incorporation of chatbots in education over the last decade has increased interest in the ways in which they can be implemented in teaching and learning processes [8]. For students, they seem to be a useful way to resolve doubts quickly. For teachers and learning managers, this tool could make it easier to follow students’ progress, or it could be used as a resource to support their learning [25].

However, the use of chatbots in academia has received only limited attention, and it is only just emerging as a topic in the scientific community [4]. In a literature review, Wilfred and Ade-Ibijola [26] found that the number of studies on the use of chatbots in education was increasing, demonstrating the integration of this tool in the educational field. Specifically, the review revealed that chatbots in education were being used in a variety of ways, including teaching and learning, administration, assessment, advisory, and research and development. Other systematic literature reviews [27] point to three main challenges of research on chatbots in education: (1) aligning chatbot evaluations with implementation objectives, (2) exploring the potential of chatbots for mentoring students, and (3) exploring and leveraging the adaptation capabilities of chatbots.

In this vein, Wollny et al. [27] warned of some drawbacks of using chatbots in educational contexts, such as the tendency towards anthropomorphism, language use, attention, and the frustration effect. The anthropomorphism tendency is an ethical issue that should be considered, given the psychological dimensions involved in a conversation between a human and a chatbot [28]. Regarding language use, Winkler et al. [15] mentioned that the quality of the vocabulary students use differs when they interact with a chatbot. These authors also mention that students tend to be more easily distracted when talking with a conversational agent [15]. Brandtzaeg and Følstad [29] also note that a chatbot that does not meet the user’s request could trigger a frustration effect. Other research highlights, among the limitations, that the functions of any chatbot should be explicitly described and that users should decide how to interact with the bot. Understanding users’ expectations of a conversational agent is critical to avoid abusing their trust [26]. The same authors identified supervision and maintenance as another drawback: the information stored in the chatbot should be updated regularly so that it provides current and accurate information on any subject.

1.2. Chatbots for Self-Regulated Learning

Self-regulation can be defined as self-generated thoughts, feelings, and goal-oriented behaviours, and it is understood as a cyclical process composed of three phases: forethought, performance, and self-reflection [30]. Self-regulation of learning is fundamental for the development of lifelong learning skills, and this is one of the main reasons why education should contribute to its development [31]. While Zimmerman’s cyclical model is widely used for its structured description of the process, there are multiple theoretical models of self-regulated learning (SRL). In a review of these models, Panadero [32] analysed the contributions of six different models, showing that they all agree that self-regulation is a cyclical process. Among these models, Pintrich’s [33] contributions on the role of motivation in the self-regulation process are fundamental. Pintrich [34] understands self-regulation as an active and constructive process, and his proposal includes the importance of the social context in self-regulated learning.

The project framework within which the chatbot intervention proposed in this paper was developed is based on Zimmerman’s self-regulation theory [30,31]. In this sense, the work of Panadero and Alonso-Tapia [35] was used as a guide for creating the dialogue and infographics that the chatbot offers users. In addition, during the creation and design of the materials offered by EDUguia, Pintrich’s contributions [33,34] regarding the motivational and affective dimensions of the process were considered.

Researchers have pointed out the potential of chatbot technologies for supporting students in self-regulating their learning activity [36]. In this vein, recent studies have shown that conversational agents could be useful to support students’ self-regulated learning in online environments [36,37].

In a recent study, Sáiz-Manzanares et al. [38] implemented an ad hoc chatbot as a tool for self-regulated learning and, after analysing students’ interactions with it, found low levels of use of metacognitive strategies. On the other hand, the results obtained by Ortega-Ochoa et al. [39] show that a chatbot providing affective feedback had a positive impact on motivation, self-regulation, and metacognition.

In this context, it could be claimed that this technology plays an essential role in improving competencies related to the capacity to self-regulate learning [4]. However, as Winkler et al. [15] highlighted, it is crucial to develop chatbots grounded in pedagogical and educational principles in order to enhance students’ self-regulation of learning.

Scheu and Benke [36] present the preliminary results of a systematic literature review providing a state-of-the-art overview of digital assistants supporting SRL. Their results show that research on SRL support by digital assistants mainly focuses on the regulation of cognition, whereas the regulation of motivation and affect, behaviour, and context remains underexplored. Moreover, most of the studies were conducted over short timeframes, which makes it impossible to assess the long-term effects of interacting with the tool. Another literature review mentioned above [27] concluded that chatbots should provide support information, tools, or other materials for specific learning tasks, but should also encourage students according to their goals and achievements and help them develop metacognitive skills such as self-regulation. In fact, Calle et al. [40] have already proposed the design and implementation of a chatbot-type recommendation system to help students self-regulate their learning, providing recommendations on time and sessions, resources, and actions within the platform to obtain better results. In this case, the chatbot’s limitations were tied to the programming language it used, which was constrained by Moodle.

Similarly, Du et al. [41] have proposed future designs of chatbots supporting SRL activities. Their chatbot, Learning Buddy, integrated into the course page, tried to inspire students to think about their goals and expectations of the course before starting the learning journey. After its implementation, students were interviewed and asked to explain how the chatbot had helped them during the goal-setting process and to share their suggestions or expectations for the future development of chatbots. Participants agreed on adopting a chatbot as a way of promoting students’ SRL abilities; however, the research also indicated the need for more interactions with the chatbot in later course sessions and for using the tool in various learning activities.

To the best of our knowledge, no studies have analysed the conversation pathways that students follow when using a chatbot designed to contribute to the development of their self-regulated learning strategies. Analysing these pathways could improve understanding of students’ user experience with chatbots designed to enhance their self-regulation strategies, and the findings could be used to adjust and improve the tool’s design and development. Furthermore, the findings from this study could be a good starting point for developing chatbots for educational purposes.

Our objective is to explore university students’ interactions with the EDUguia chatbot to understand whether there are patterns of use linked to phases of self-regulated learning and academic task completion. Based on the results, we analyse students’ behaviour when using the tool to consider whether it could help support self-regulation.

In order to achieve our objective, we aim to answer the following research questions:

- R.Q.1. How many messages make up the conversations and what is their frequency?

- R.Q.2. How are users’ interactions with the tool distributed over time?

- R.Q.3. At what point in the conversational flow do users leave the interaction with the tool?

- R.Q.4. What is the duration (in time) of the conversations?

- R.Q.5. How do user-selected options define the flow of the conversation?

- R.Q.6. What are the most common replica sequences?

- R.Q.7. How do users interact with the infographic resources for SRL embedded in the tool?

- R.Q.8. How do users respond to the chatbot’s open questions?

2. Materials and Methods

2.1. Research Background

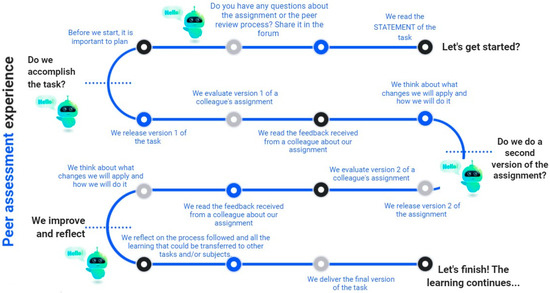

The work presented in this paper is part of the e-FeedSkill research project. This project was implemented in various courses of different degrees at a traditional higher education institution (Universitat de Barcelona) and a distance higher education institution (Universitat Oberta de Catalunya), both Spanish universities located in the autonomous community of Catalunya. For this purpose, a didactic sequence was developed and set up in Moodle [42]. Through it, students participated in activities to enhance self-regulation of learning, including a peer feedback activity. Specifically, the sequence followed the three phases proposed by Zimmerman’s [30] self-regulated learning theory. This didactic sequence included the EDUguia chatbot as a monitoring technology, embedded as a link at different steps of the sequence, as shown in Figure 1.

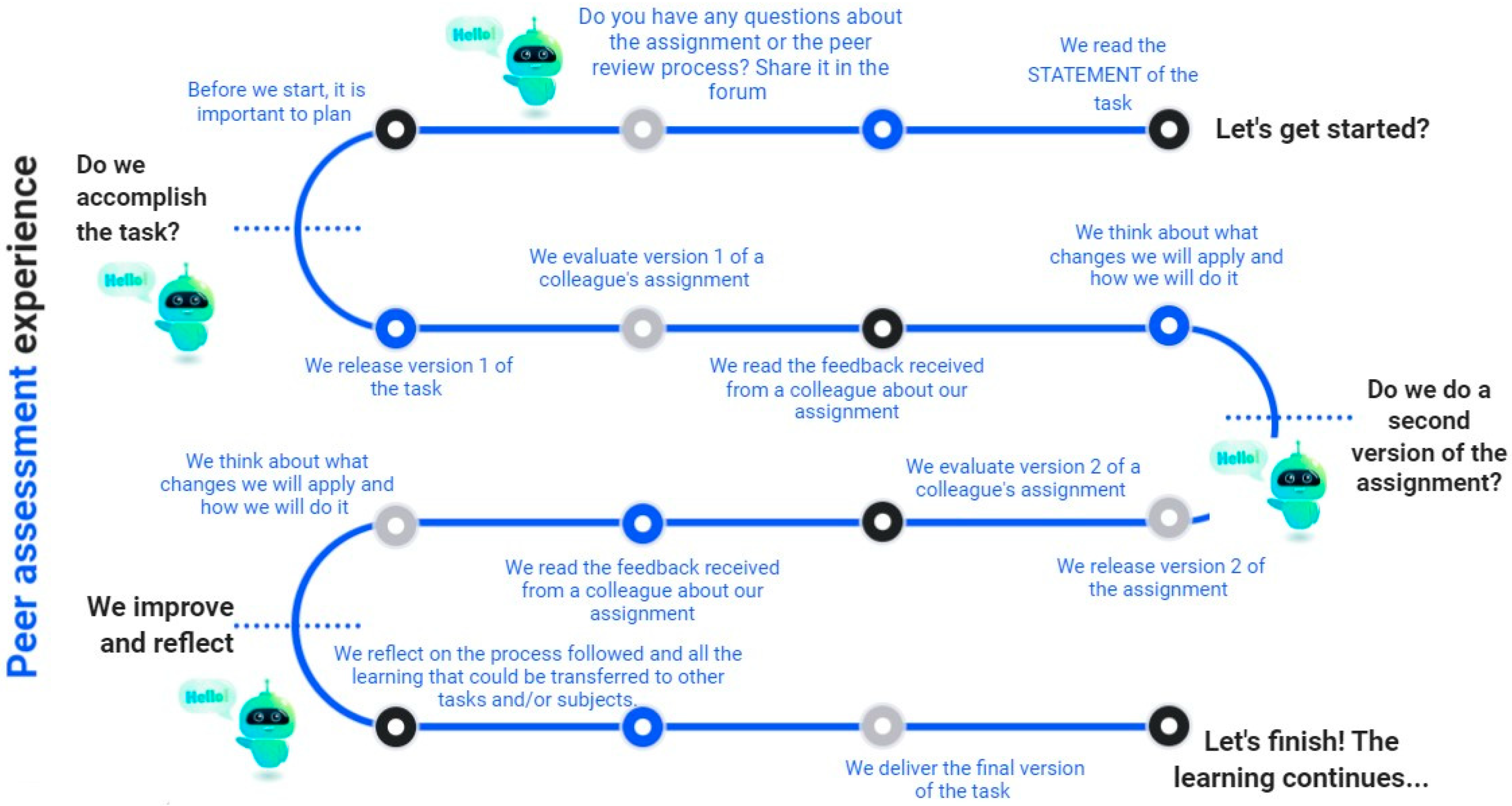

Figure 1.

Steps of the didactic sequence. Source: Lluch, L. (2023), CC-BY-NC-ND. Note: The infographic was designed by project member Dr. Laia Lluch and was adapted for this paper with her authorisation. The colours of the different pots are merely decorative.

As shown in Figure 1, the sequence was composed of several activities, and the chatbot was embedded as a link in the learning management system (LMS) at four points: the first after students read the task statement and before completing a planning activity; the second just before delivering the first version of the task; the third after they received the first peer assessment and before delivering the second version of the task; and the last after the second peer review and before completing a reflective activity together with submitting the final version of the assignment.

It is worth clarifying that the tool was accessible at all times from the beginning of the educational intervention, but the four instances were set up specifically because students were expected to need more support in these phases of performing the task and of the self-regulatory learning process. The four instances were therefore intended as incentives to interact with the tool.

2.2. EDUguia Chatbot

The EDUguia chatbot was designed following a collaborative design methodology [10], through co-design workshops with higher education students and teachers. During these workshops, the students clearly required that the tool be completely anonymous, that is, that it should not require identifiers to log in and that the information it collected should not allow users to be identified. This requirement was respected; therefore, the information registered by the tool was completely anonymous.

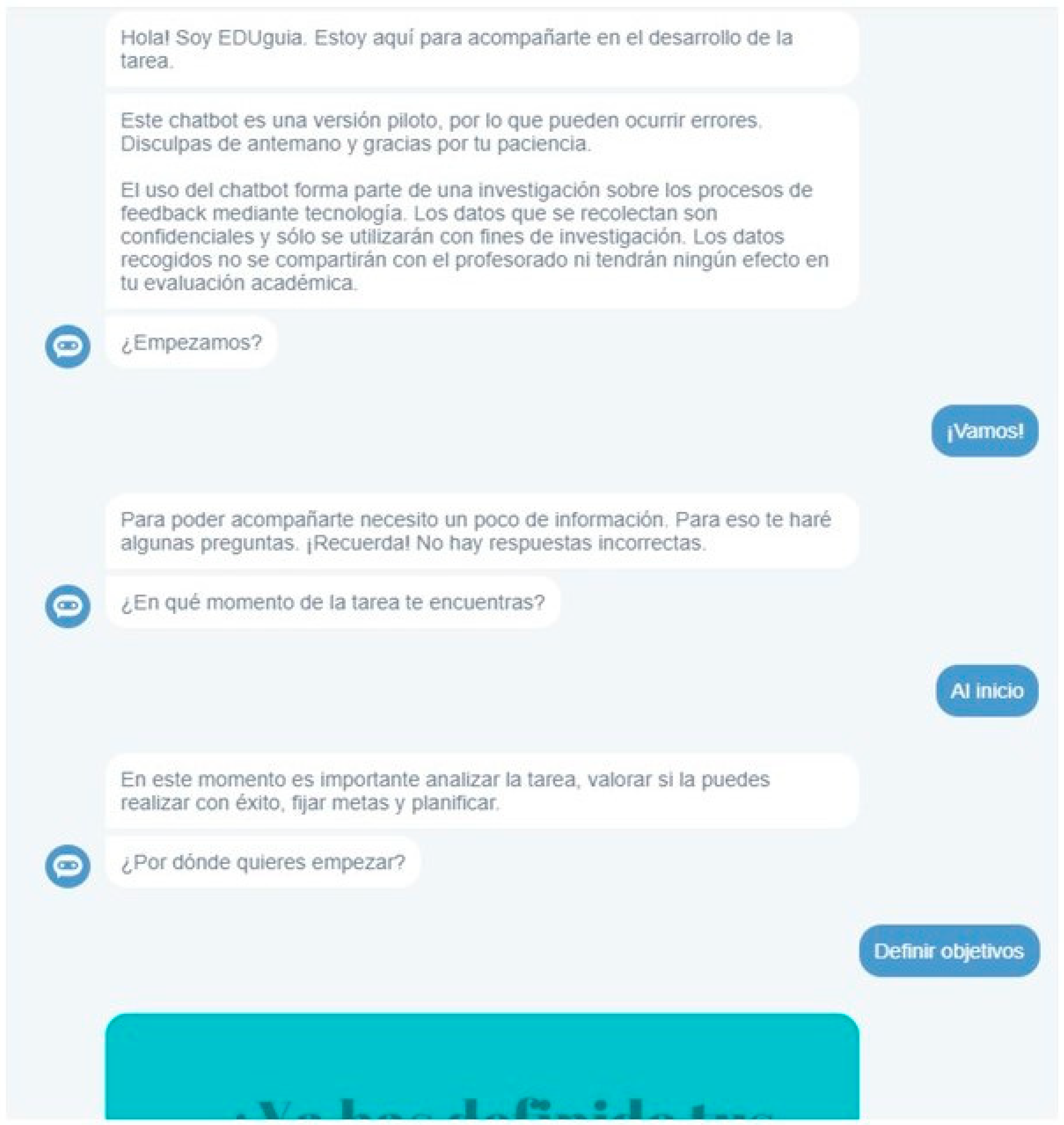

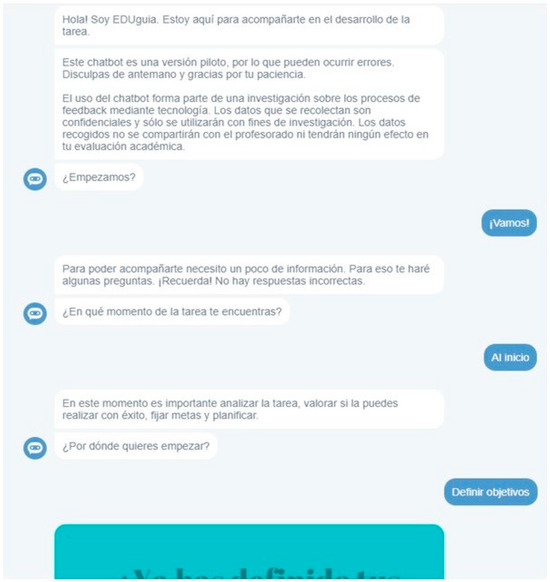

It is worth mentioning that our chatbot works as a menu of conversational options. That is, after each message, students were given options to continue the conversation and could choose only one answer from among the given options (Figure 2). In short, the conversation flow of the EDUguia chatbot was created as a conversation tree. Because of this, even though no NLP was used and no attempt was made to understand the students’ messages, it was possible to study the pathways. In contrast, analysing the trajectories of chatbots based on artificial intelligence (AI) could be more complicated because their responses are not predefined.
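Because every reply is chosen from a fixed menu, a flow of this kind can be represented as a tree of nodes, each holding a chatbot message and the options that lead to the next node. The Python sketch below is purely illustrative: the node identifiers (`start`, `stage`, ...), the placeholder text for the self-reflection node, and the helper `walk` are hypothetical, and only the message texts come from the translations given for Figures 2 and 4. It shows why a pathway is fully determined by the sequence of options a user selects.

```python
# Hypothetical, simplified fragment of an EDUguia-style conversation tree.
# Node ids and structure are illustrative; message texts follow the
# translations given for Figures 2 and 4.
TREE = {
    "start": {
        "text": "Shall we start?",
        "options": {"Let's go!": "stage"},
    },
    "stage": {
        "text": "At what stage of the task are you?",
        "options": {
            "At the beginning": "forethought",
            "In the middle": "performance",
            "Almost at the end": "self_reflection",
        },
    },
    "forethought": {"text": "Where do you want to start?", "options": {}},
    "performance": {
        "text": "Now that you are doing your task, how can I help you?",
        "options": {},
    },
    "self_reflection": {"text": "[self-reflection prompt]", "options": {}},
}


def walk(tree, choices, node="start"):
    """Follow a sequence of menu choices and return the visited node ids.

    Users can only pick one of the offered options, so the path through
    the tree is fully determined by their choices.
    """
    path = [node]
    for choice in choices:
        options = tree[node]["options"]
        if choice not in options:
            raise ValueError(f"{choice!r} is not offered at node {node!r}")
        node = options[choice]
        path.append(node)
    return path


print(walk(TREE, ["Let's go!", "In the middle"]))
# ['start', 'stage', 'performance']
```

Rejecting any choice that is not on the menu mirrors the constraint that users could not type their way out of the predefined options (except at the open questions described below).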

Figure 2.

Visualisation of chatbot interface. Note: Original messages are in Spanish. Translation of messages from Spanish to English: Hi, I’m EDUguia. I’m here to accompany you in the development of the task.; This chatbot is a pilot version, so errors may occur. Apologies in advance and thank you for your patience.; The use of the chatbot is part of an investigation into technology-enabled feedback processes. The data collected are confidential and will only be used for research purposes. The data collected will not be shared with teaching staff or have any effect on your academic assessment. Shall we start?; Let’s go!; In order to be able to accompany you, I need some information. For that I will ask you some questions. Remember! There are no wrong answers; At what stage of the task are you?; At the beginning; At this point it is important to analyse the task, assess whether you can do it successfully, set goals and plan; Where do you want to start?; Define objectives.

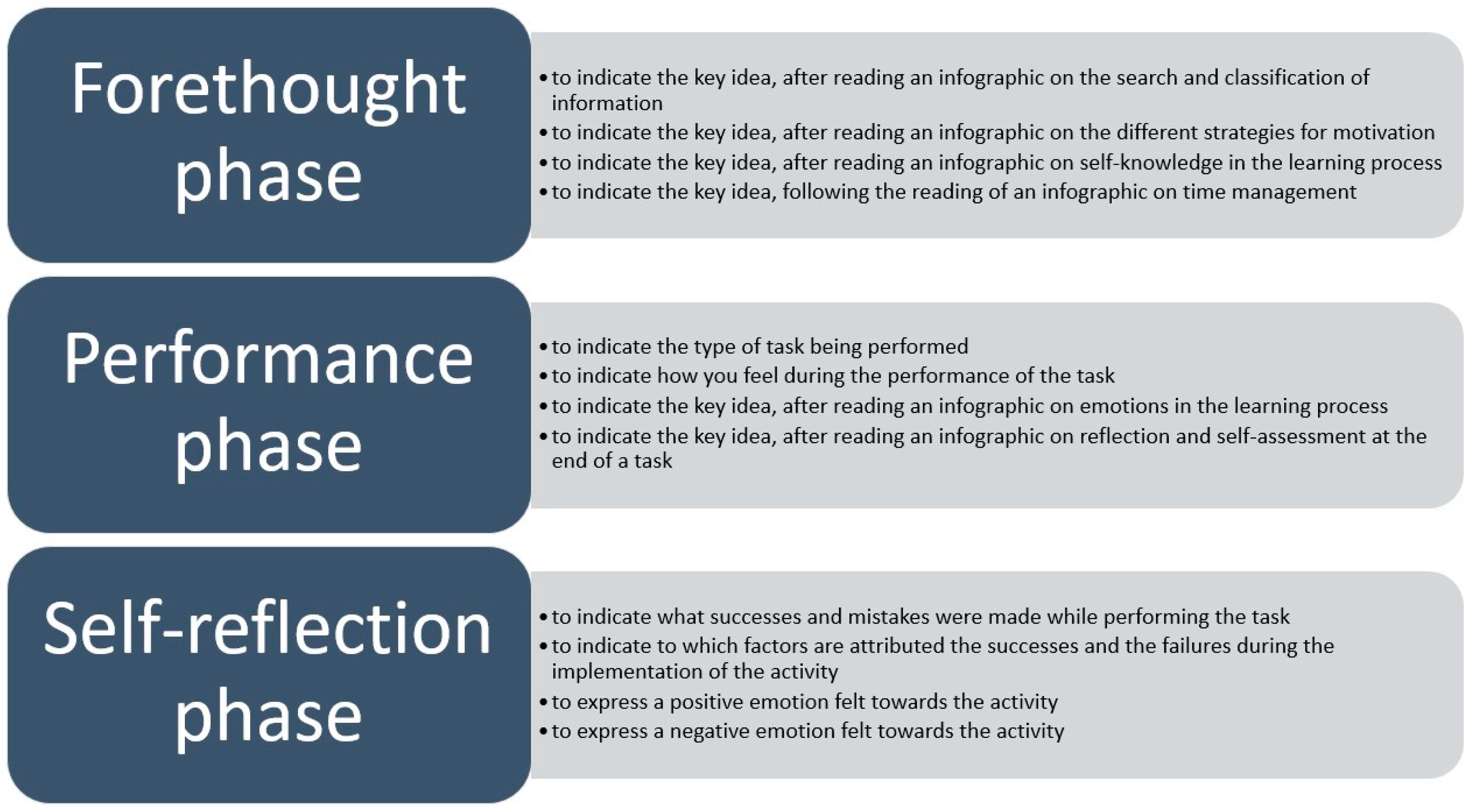

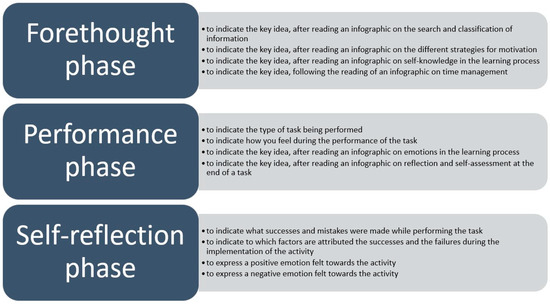

To develop the tool’s conversational flow, the three phases (forethought, performance, and self-reflection) of the cyclical SRL model [41,42] were considered. To apply the model to the tool, a relationship was established between the moments of performing the task (at the beginning, in the middle, and almost at the end) and the phases of self-regulation. At the same time, as shown in Table 1, different options for selecting the skills or strategies to be developed were defined for each phase/moment. For each strategy or skill, several infographic resources were created: a total of 39 infographics (19 for the forethought phase, 13 for the performance phase, and 7 for the self-reflection phase). As mentioned before, these resources were created taking into account the cognitive, metacognitive, motivational, and affective dimensions of the learning process.
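The correspondence between task moments and SRL phases, together with the infographic counts reported above, can be written down as a small lookup structure. This is a hypothetical sketch: the dictionary names are ours, and the option label "Almost at the end" is taken from the wording of the text rather than from the interface. It simply makes the mapping and the total of 39 infographics explicit.

```python
# Moment of the task -> SRL phase (Zimmerman's cyclical model), as described in the text.
# Option labels are assumed; only "At the beginning" and "In the middle" appear in Figures 2 and 4.
MOMENT_TO_PHASE = {
    "At the beginning": "forethought",
    "In the middle": "performance",
    "Almost at the end": "self-reflection",
}

# Number of infographics created for each phase, as reported in the text.
INFOGRAPHICS_PER_PHASE = {"forethought": 19, "performance": 13, "self-reflection": 7}

total_infographics = sum(INFOGRAPHICS_PER_PHASE.values())
print(total_infographics)  # 39
```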

Table 1.

SRL phases, moment of task performance, and skills or strategies to be developed.

When the interaction starts, users indicate which moment of the task they are in by selecting an option, as shown in Figure 2. After this first interaction, the user continues the conversation by selecting their preferred options. Depending on these selections, the chatbot provides the corresponding responses and resources.

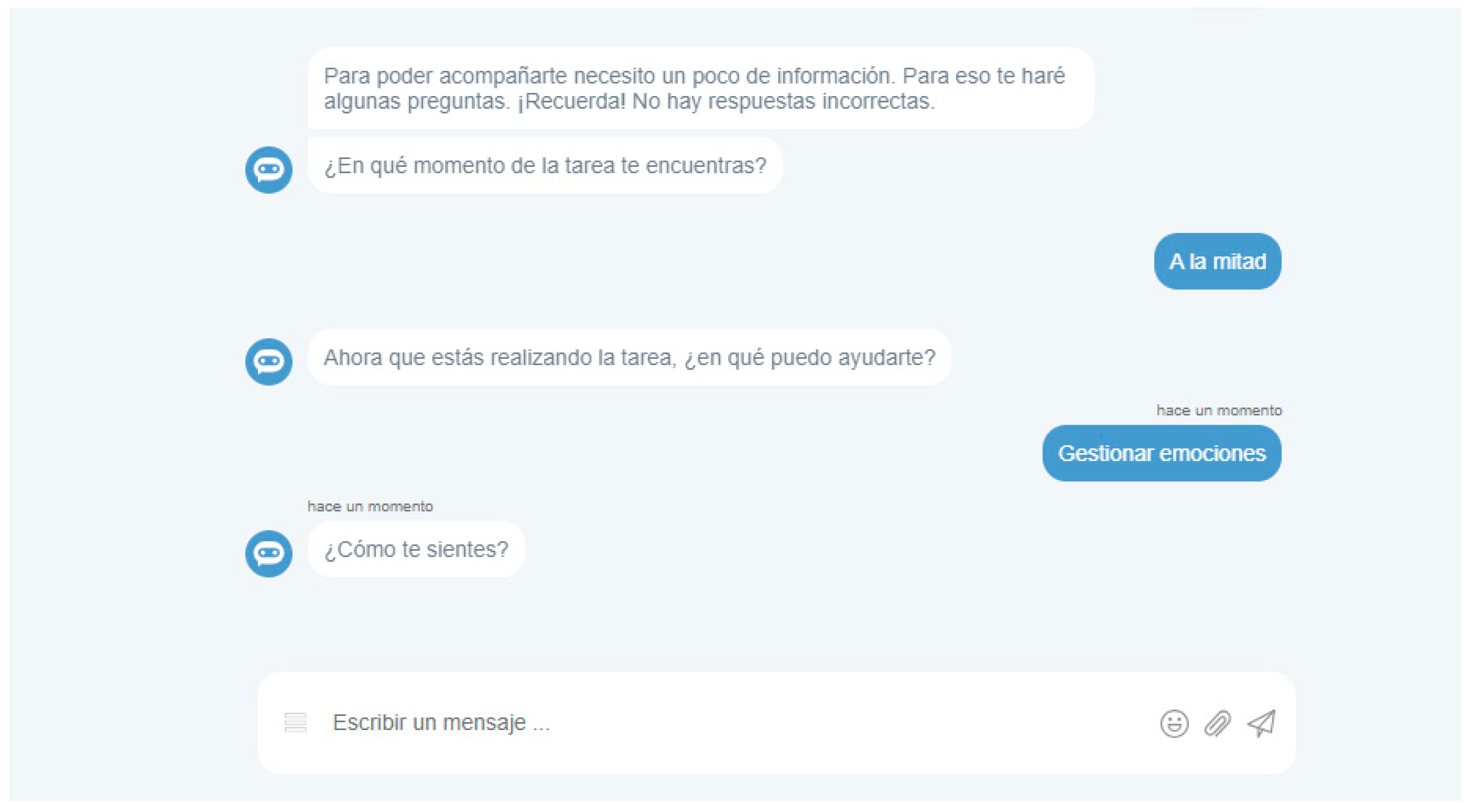

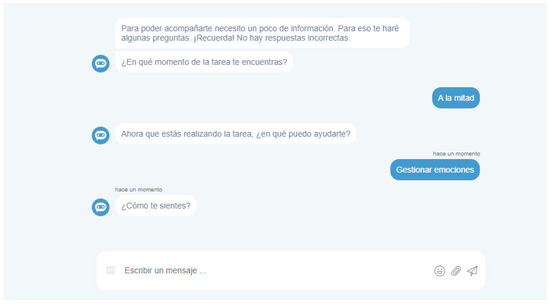

Although the response options were predefined, there were stages in the conversation where users could write a free-text answer to express themselves better. The purposes for which free-text answers were requested in each self-regulation phase are detailed in Figure 3. As an example, Figure 4 shows the tool’s interface with an open question in the performance phase.

Figure 3.

Purposes for which the chatbot requires the users to input text.

Figure 4.

Visualisation of chatbot interface with an open question (how are you feeling?) in the performance phase. Note: Original messages are in Spanish. Translation of messages from Spanish to English: In order to be able to accompany you, I need some information. For that I will ask you some questions. Remember! There are no wrong answers; At what stage of the task are you?; In the middle; Now that you are doing your task, how can I help you?, Managing emotions, How are you feeling?

2.3. Data Collection and Analysis

After its implementation during the second semester of the 2021–2022 academic year, all the information was downloaded from the analytics offered by the software tool with which the chatbot was created (https://www.botstar.com/, accessed on 29 May 2024). This tool anonymously collects the activity carried out by users. If the user does not delete cookies and always uses the same browser, the session remains logged in and the conversation resumes from the last point; otherwise, the conversation starts again.

Specifically, chat sessions from 14 February to 12 July 2022 were downloaded, i.e., from the beginning to the end of the semester. This period was chosen to ensure that most of the conversations corresponded to students from the courses participating in the educational innovation intervention carried out within the e-FeedSkill project. As mentioned, it was established at the co-design stage that the tool would not require user registration, which is why the chat sessions are anonymous. The tool’s analytics store the sessions under pseudonyms (e.g., Guest Hunter). Although each pseudonym could be assumed to correspond to one user, this is not fully guaranteed. For this reason, all messages identified with the same pseudonym were considered part of one conversation session.

Next, the downloaded file containing 340 conversation sessions was analysed using both quantitative and qualitative methods. Since the EDUguia chatbot was developed as a rule-based conversational agent, the analysis followed the structure of its flowchart. First, Python scripts were used to analyse the downloaded conversations. We developed scripts for the following purposes: (i) filtering messages by date, (ii) measuring conversations by number of messages, (iii) measuring conversations by duration, (iv) finding top images (infographics and GIFs), (v) finding the last message in each conversation session, (vi) defining paths of replicas, (vii) setting up question-and-answer sequences grouped by question, and (viii) counting replica paths by number of interactions, level by level.
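Steps (i), (ii), (iii), and (v) could look roughly like the sketch below. It is not the project’s script: the record layout (`guest`, `timestamp`, `sender`, `text`) is an assumption about what a BotStar export might be converted into, and all function names are hypothetical. As in the study, all messages sharing a pseudonym are treated as one session.

```python
from collections import defaultdict
from datetime import datetime

# Assumed record layout (hypothetical; the real export format may differ):
# {"guest": "Guest Hunter", "timestamp": datetime, "sender": "bot" | "user", "text": str}

START = datetime(2022, 2, 14)
END = datetime(2022, 7, 12, 23, 59, 59)


def filter_by_date(records, start=START, end=END):
    """(i) Keep only messages sent within the study period."""
    return [r for r in records if start <= r["timestamp"] <= end]


def group_by_guest(records):
    """Treat all messages under one pseudonym as one conversation session."""
    sessions = defaultdict(list)
    for r in records:
        sessions[r["guest"]].append(r)
    for msgs in sessions.values():
        msgs.sort(key=lambda r: r["timestamp"])  # chronological order
    return dict(sessions)


def session_length(msgs):
    """(ii) Total number of messages and how many were user responses."""
    user_msgs = sum(1 for m in msgs if m["sender"] == "user")
    return len(msgs), user_msgs


def session_duration(msgs):
    """(iii) Time elapsed between the first and the last message."""
    return msgs[-1]["timestamp"] - msgs[0]["timestamp"]


def last_message(msgs):
    """(v) Text of the message on which the session ended."""
    return msgs[-1]["text"]
```

For example, applied to the longest session reported in Section 3.1, `session_length` would return `(171, 60)`.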

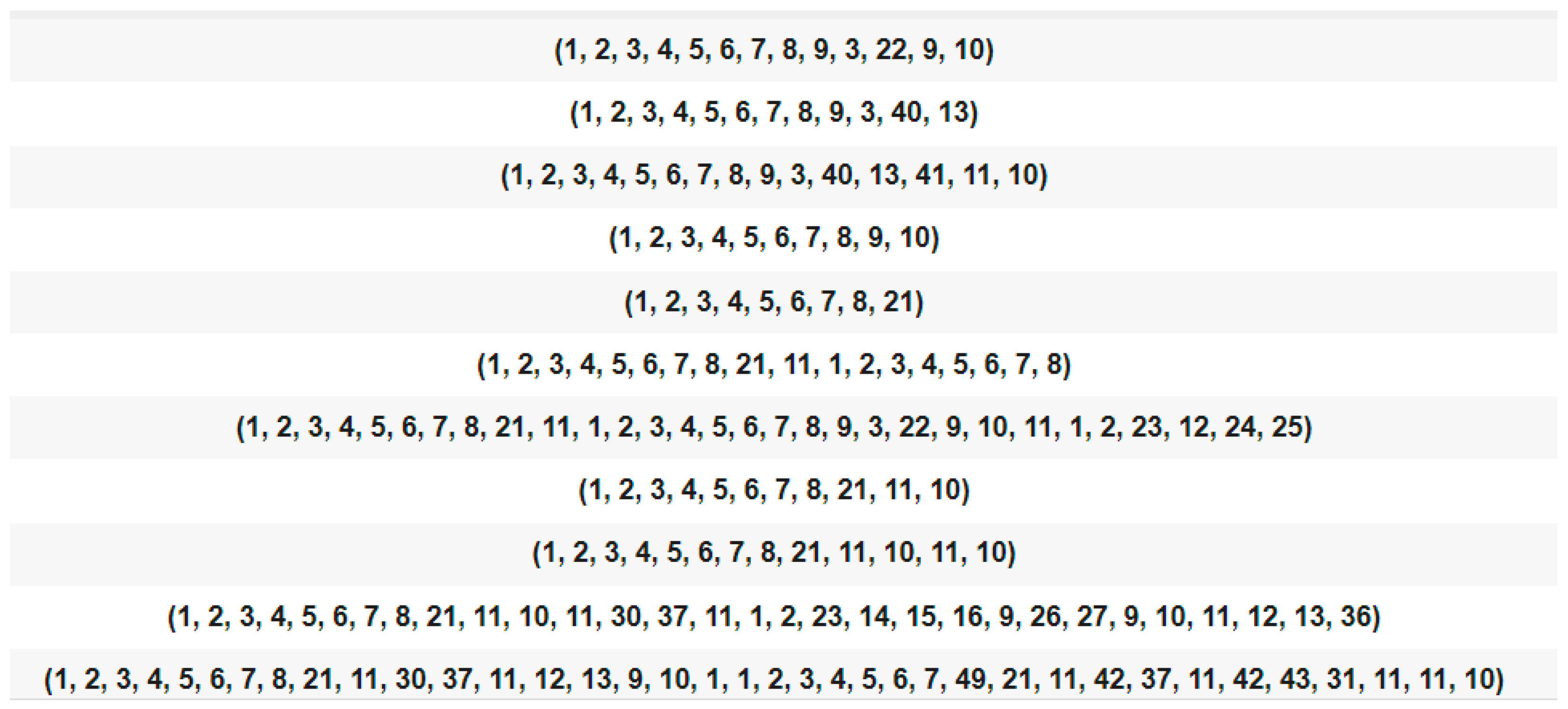

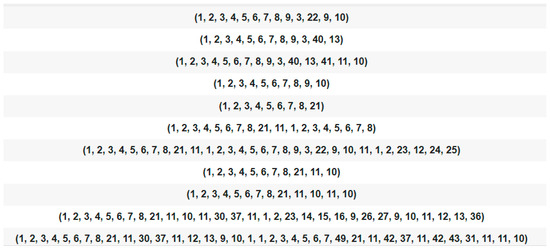

To define paths of replicas with Python, we first coded all the different steps in the conversations (the bot automaton) and then applied this coding to all the conversations. That is, every step in the conversation was coded by assigning a number to each EDUguia chatbot message. Table 2 provides an example of coding part of the dialogue flow. A Python script was then run to obtain the different pathways (combinations of codes) in each conversation session. Figure 5 shows a screenshot of the paths obtained.
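A minimal sketch of this coding step, assuming each session has already been reduced to its ordered list of chatbot messages. The codebook entries and numbers are hypothetical stand-ins for Table 2; `encode_path` joins the codes into a pathway string, and `count_pathways` tallies how many sessions share each pathway, which is the kind of count reported under R.Q.5.

```python
from collections import Counter

# Hypothetical codebook: each distinct chatbot message gets a numeric code
# (cf. Table 2). Texts and numbers here are illustrative only.
CODEBOOK = {
    "Shall we start?": 1,
    "At what stage of the task are you?": 2,
    "Where do you want to start?": 3,
    "Now that you are doing your task, how can I help you?": 4,
}


def encode_path(bot_messages, codebook=CODEBOOK):
    """Turn a session's sequence of chatbot messages into a pathway string.

    Messages missing from the codebook are marked with '?' so that gaps
    in the coding remain visible instead of being silently dropped.
    """
    return "-".join(str(codebook.get(text, "?")) for text in bot_messages)


def count_pathways(sessions):
    """Count how many sessions followed each distinct pathway."""
    return Counter(encode_path(msgs) for msgs in sessions)


example = [
    ["Shall we start?"],
    ["Shall we start?", "At what stage of the task are you?", "Where do you want to start?"],
    ["Shall we start?"],
]
print(count_pathways(example))  # Counter({'1': 2, '1-2-3': 1})
```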

Table 2.

Visualisation of a coded section of the dialogue flow.

Figure 5.

Screenshot of the pathways obtained using Python script.

For the quantitative analysis, we conducted basic exploratory statistics, analysing the frequencies of users’ selected options using SPSS v.27 and Microsoft Excel. For the qualitative analysis, we defined categories for classifying the data following the storyline with which the dialogue flow was created. Also, since the conversation content and flow were based on SRL phases, the options selected by users were classified according to the phase of the self-regulated learning cycle for which they had been created. Among the conversation sessions analysed, some took place on a single day, whereas in other cases users abandoned the dialogue flow on a given day and returned to the conversation later, at different times. For this reason, we established a classification reflecting the different patterns found in the sessions. Given the number of conversation sessions, this coding was done manually using the filter tool in Microsoft Excel. The proposed classification is detailed below, in Table 3.
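One way to operationalise the distinction between single-day sessions and sessions resumed on later days is to group a session’s timestamps by calendar day and treat each day as one interaction. The sketch below is a simplification under that assumption, with hypothetical function names: it only separates "One-day" from multi-day sessions and counts interactions, whereas Table 3 defines finer multi-day patterns (e.g., "Type A") that are not reproduced here. The study itself performed this classification manually.

```python
from datetime import datetime
from itertools import groupby


def split_into_interactions(timestamps):
    """Group a session's timestamps by calendar day.

    Each day on which the pseudonym was active is treated as one
    interaction (first, second, third, ...).
    """
    ordered = sorted(timestamps)
    return [list(day) for _, day in groupby(ordered, key=lambda t: t.date())]


def classify_session(timestamps):
    """Return a coarse session label and its number of interactions.

    Simplified on purpose: it does not reproduce the finer multi-day
    patterns of Table 3.
    """
    interactions = split_into_interactions(timestamps)
    if len(interactions) == 1:
        return "One-day", 1
    return "Multi-day", len(interactions)


# Illustrative timestamps, not study data.
ts = [datetime(2022, 2, 15, 10, 0), datetime(2022, 2, 15, 10, 3), datetime(2022, 5, 10, 9, 0)]
print(classify_session(ts))  # ('Multi-day', 2)
```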

Table 3.

Classification of session patterns.

In addition, the conversation file was searched for infographic resources in order to count their views. Finally, to analyse users’ answers to the open questions, the file with the conversations per session (Guest) was filtered and the questions were identified following the storyline with which the dialogue flow was created. In this way, the answers that users had written immediately after each question were collected. Similar answers were grouped by content, and their frequencies were analysed.
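The two searches described above can be sketched as follows. Both helpers are hypothetical: `count_infographic_views` assumes that an infographic can be recognised by an identifier (such as a file name) appearing in a chatbot message, and `answers_to_question` assumes each session is an ordered list of `(sender, text)` pairs. Grouping similar answers by content was done manually in the study and is not automated here.

```python
from collections import Counter


def count_infographic_views(bot_messages, infographic_ids):
    """Count how often each known infographic appears in the chatbot messages.

    `infographic_ids` is a hypothetical set of identifiers (e.g. file names);
    how infographics are actually represented in the export is an assumption.
    """
    views = Counter()
    for text in bot_messages:
        for ident in infographic_ids:
            if ident in text:
                views[ident] += 1
    return views


def answers_to_question(session, question):
    """Collect the user replies written immediately after a given bot question.

    `session` is a chronologically ordered list of (sender, text) pairs.
    """
    answers = []
    for (sender, text), (next_sender, next_text) in zip(session, session[1:]):
        if sender == "bot" and text == question and next_sender == "user":
            answers.append(next_text)
    return answers


# Illustrative session, not study data.
session = [("bot", "How are you feeling?"), ("user", "Nervous"), ("bot", "How are you feeling?"), ("bot", "Goodbye")]
print(answers_to_question(session, "How are you feeling?"))  # ['Nervous']
```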

3. Results

3.1. R.Q.1. How Many Messages Make Up the Conversations and What Is Their Frequency?

Bearing in mind that several interactions are needed just to set up a conversation, the statistical analysis of conversation length (Table 4) across the 340 conversation sessions showed that the longest conversation was composed of 171 messages, of which 60 were user responses and the remaining 111 were chatbot messages. The shortest conversations were composed of four messages, with only one user response.

Table 4.

Statistics of conversation length.

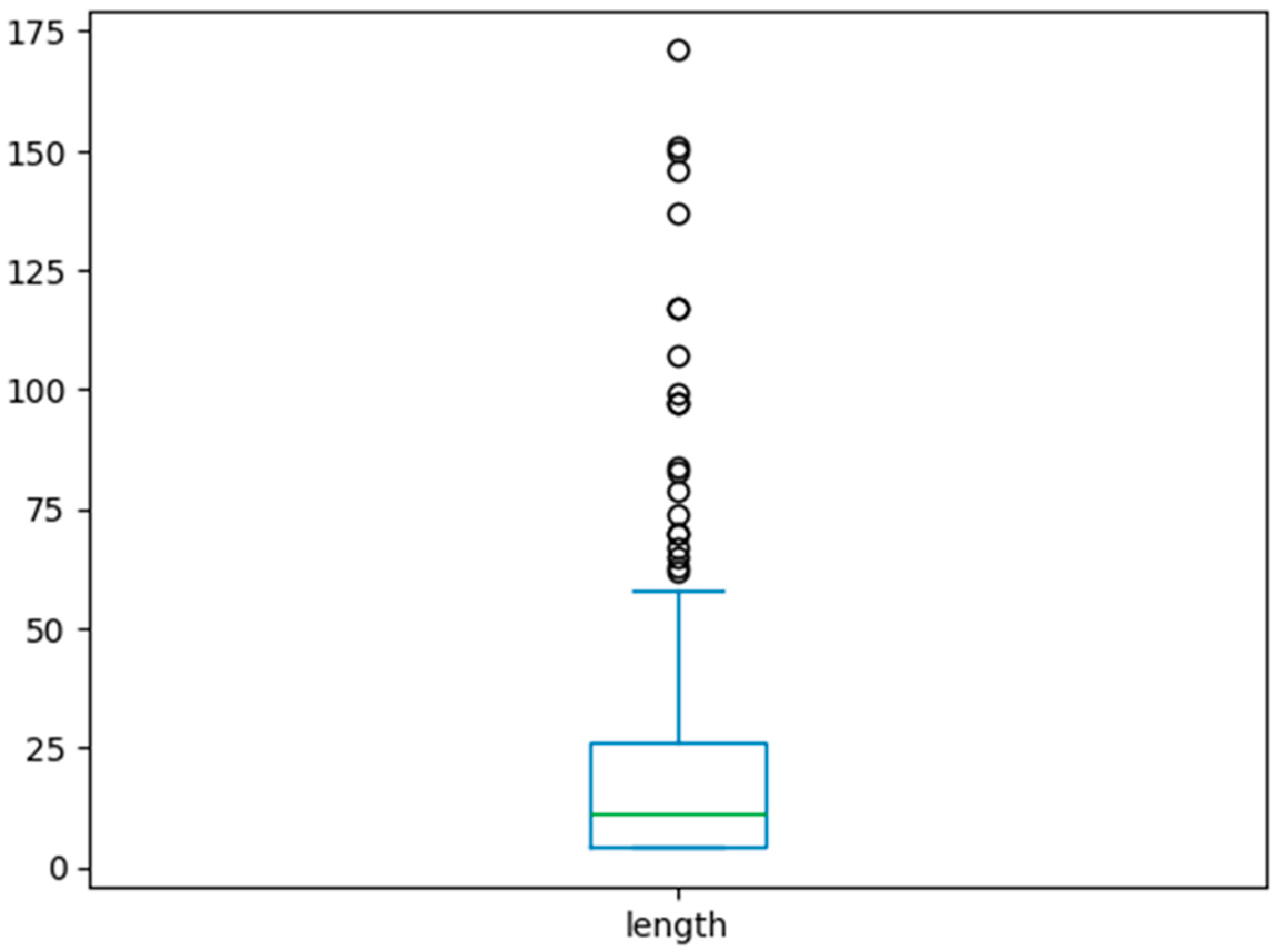

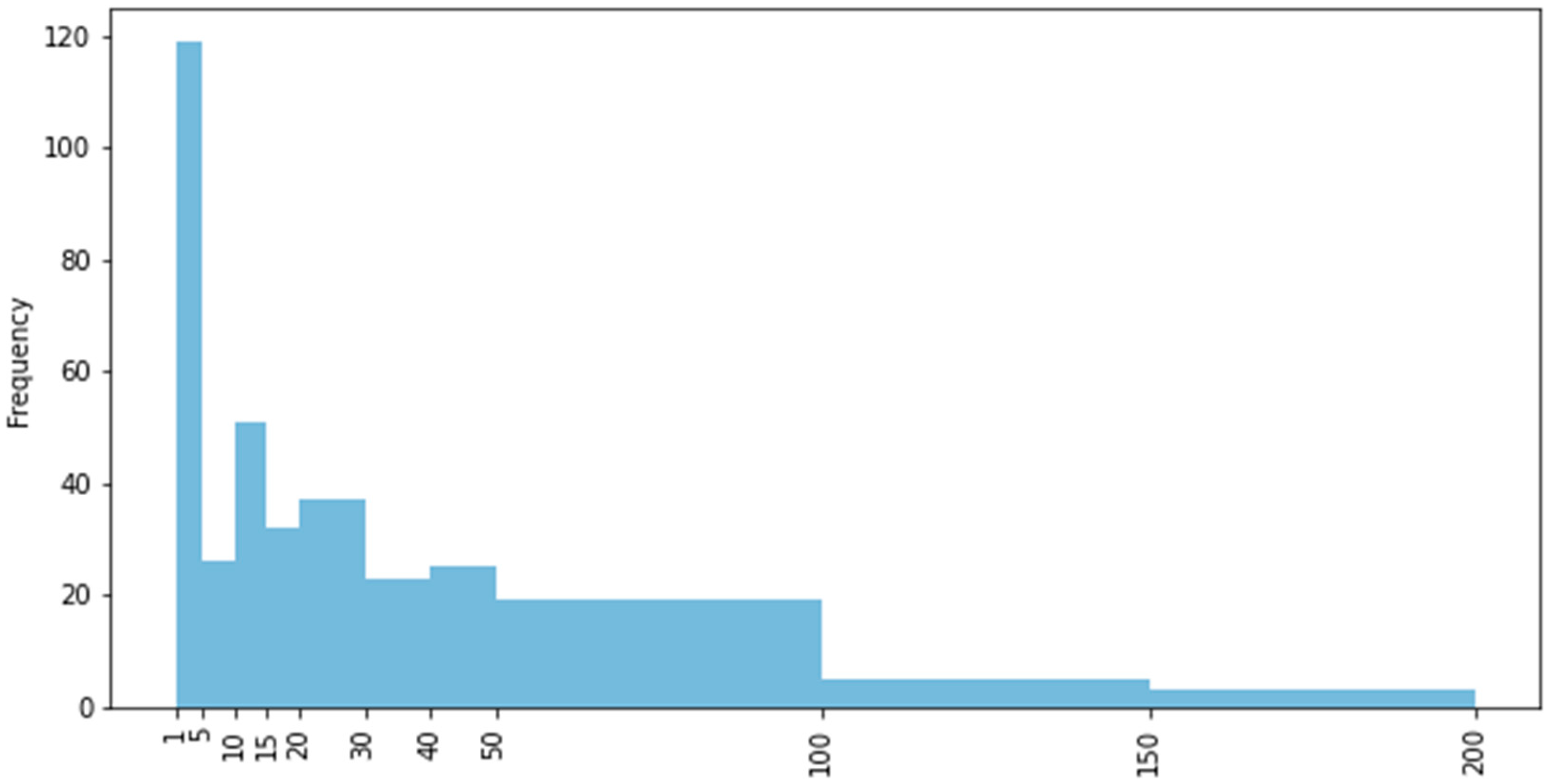

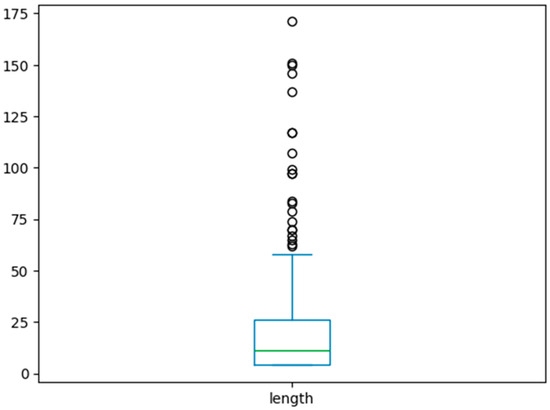

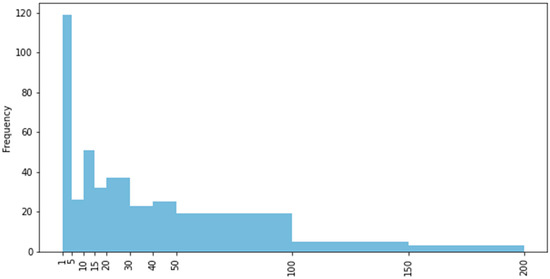

The frequency analysis showed that the most frequent conversations were composed of four messages (N = 119, 35%), followed by those composed of ten messages (N = 22, 6.5%) and seven messages (N = 17, 5%). Each of the other conversation lengths represents a smaller percentage of the sessions. Thus, the shortest conversations were the most frequent. The distribution of conversation lengths is shown in Figure 6, and their frequency distribution in Figure 7. Most conversations were shorter than 50 messages. As Figure 7 shows, from 65 messages onwards, the frequency of conversations plateaus and then declines.
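The percentages above follow directly from the counts and the 340 sessions analysed. The short check below recomputes them; the helper `length_frequencies` is hypothetical and only illustrates how such a frequency table could be built from a list of per-session message counts.

```python
from collections import Counter


def length_frequencies(lengths):
    """Return (length, count, percentage) tuples, most frequent first."""
    total = len(lengths)
    return [(n, c, round(100 * c / total, 1)) for n, c in Counter(lengths).most_common()]


# Reported counts for the three most frequent lengths, out of 340 sessions.
TOTAL_SESSIONS = 340
for n_messages, count in [(4, 119), (10, 22), (7, 17)]:
    print(n_messages, count, round(100 * count / TOTAL_SESSIONS, 1))
# 4 119 35.0
# 10 22 6.5
# 7 17 5.0
```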

Figure 6.

Boxplot of the conversation length distribution. Note: circles in the boxplot represent outliers and extreme values.

Figure 7.

Histogram of conversation length measurement.

3.2. R.Q.2. How Are Users’ Interactions with the Tool Distributed over Time?

Table 5 shows the number of cases found for each session type in our proposed classification of session patterns. As can be seen, the most frequent session type is "One-day" (N = 284), followed by "Type A" (N = 46).

Table 5.

Number of cases by session type.

3.3. R.Q.3. At What Point in the Conversational Flow Do Users Leave the Interaction with the Tool?

The endpoints of each of the 340 conversation sessions were analysed. A total of 119 sessions finished after the chatbot’s message "Shall we start?" with no response from the user. This message is the beginning of the dialogue flow and is only shown when a user initiates a session. This means that 35% of the sessions ended before the user interacted with the chatbot. In addition, 18 conversation sessions ended with the chatbot’s message "At what point in the task are you?". In the dialogue flow, this message can appear at two moments: (i) at the beginning of the session; or (ii) after following a certain path and being asked to return to the start for further interaction. Of those 18 sessions, 17 ended the interaction after seeing this question for the first time. Furthermore, 71 sessions (20.88%) ended with a goodbye GIF. This GIF was chosen during the design and development of the chatbot’s script to mark the end point of different paths, so these sessions can be considered successful.

A similar pattern emerged when analysing the endpoints of one-day sessions (N = 284): 120 sessions (42.25%) ended with the message “Shall we start?”, while 51 (17.96%) ended with the goodbye GIF. Among the sessions that spanned more than one day, “Shall we start?” was the endpoint of the first interaction in twenty-two cases and of the second interaction in two cases. In one case, it was the endpoint of both the third and the fourth interactions.
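The endpoint analysis above can be reproduced in three steps: take the last chatbot message of each session, tally those messages, and check whether a repeated question was abandoned on its first appearance. The following is a minimal Python sketch, assuming each session is stored as the ordered list of chatbot messages it displayed. The `GOODBYE` marker, which stands in for the goodbye GIF, and the function names are hypothetical:

```python
from collections import Counter

START = "Shall we start?"
POINT_IN_TASK = "At what point in the task are you?"
GOODBYE = "[goodbye GIF]"  # hypothetical placeholder for the closing GIF


def endpoint_summary(sessions):
    """Map each final chatbot message to (count, percentage of sessions)."""
    total = len(sessions)
    last = Counter(session[-1] for session in sessions)
    return {msg: (n, round(100 * n / total, 2)) for msg, n in last.items()}


def ended_on_first_sight(sessions, message):
    """Count sessions that ended on `message` the first time it was shown."""
    return sum(
        1 for s in sessions
        if s[-1] == message and s.count(message) == 1
    )
```

If the 340 sessions were logged this way, `endpoint_summary` would report `(119, 35.0)` for “Shall we start?” and `(71, 20.88)` for the goodbye GIF. `ended_on_first_sight(sessions, POINT_IN_TASK)` would count the sessions that ended the first time that question appeared.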

3.4. R.Q.4. What Is the Duration (in Time) of the Conversations?

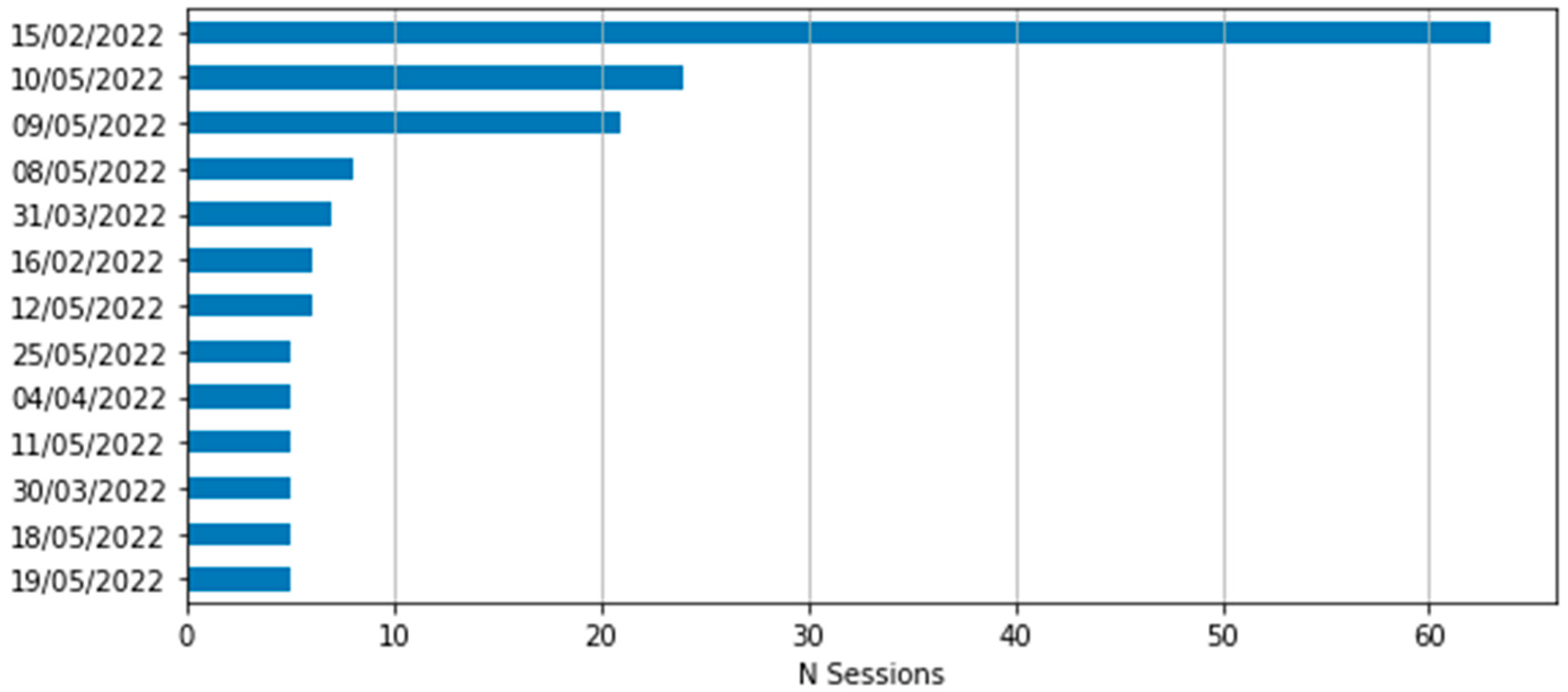

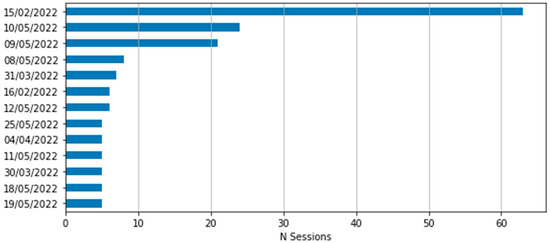

The average duration of one-day sessions was 3 min 32 s. The shortest lasted 6 s and the longest 3 h 2 min 18 s. As shown in Figure 8, the date with the most one-day sessions (N = 63) was 15 February 2022, followed by 10 May 2022 (N = 24). These dates coincide, respectively, with the start of the didactic sequence in the courses of the two universities involved.

Figure 8.

Number of one-day sessions per date.
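Session durations like those reported above can be derived from message timestamps. The duration of a session is the time between its first and last message, and sessions per date are counted from each session's first timestamp. The following is a minimal Python sketch, assuming each session is a list of `datetime` timestamps (the study does not describe its log format). The helper `format_duration` is hypothetical and mimics the "3 min 32 s" notation used in the text:

```python
from collections import Counter
from datetime import datetime, timedelta
from statistics import mean


def session_duration(timestamps):
    """Time between the first and the last message of a session."""
    return max(timestamps) - min(timestamps)


def format_duration(td):
    """Render a timedelta as e.g. '3 h 2 min 18 s' (whole seconds)."""
    total = round(td.total_seconds())
    hours, rest = divmod(total, 3600)
    minutes, seconds = divmod(rest, 60)
    parts = []
    if hours:
        parts.append(f"{hours} h")
    if minutes:
        parts.append(f"{minutes} min")
    if seconds or not parts:
        parts.append(f"{seconds} s")
    return " ".join(parts)


def duration_summary(sessions):
    """Return formatted (mean, shortest, longest) session durations."""
    durations = [session_duration(ts) for ts in sessions]
    average = timedelta(seconds=mean(d.total_seconds() for d in durations))
    return (format_duration(average),
            format_duration(min(durations)),
            format_duration(max(durations)))


def sessions_per_date(sessions):
    """Count sessions by the calendar date of their first message."""
    return Counter(min(ts).date() for ts in sessions)
```

For example, `format_duration(timedelta(hours=3, minutes=2, seconds=18))` returns `"3 h 2 min 18 s"`, which is the longest one-day session reported above. The same functions could be applied separately to the first, second, third and fourth interactions of multi-day sessions.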

For sessions that spanned multiple days, the average duration of the first interaction was 3 min 4 s. The shortest lasted 6 s and the longest 29 min 59 s. Again, the date with the most first interactions was 15 February 2022. The average duration was 4 min 31 s for the second interaction, 4 min 18 s for the third, and 39 s for the fourth.

3.5. R.Q.5. How Do User-Selected Options Define the Flow of Conversation?

Regarding the pathways, that is, the sequences of options selected by users that define the flow of the conversation, 140 distinct pathways (types of trajectories) were found. Since this is fewer than the number of sessions, some trajectories were repeated across several conversation sessions. As expected, however, most pathways occurred only once, each following a unique combination of messages within the established dialogue flow.
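Counting pathways amounts to treating each session's sequence of selected options as a key and counting the distinct keys. The following is a minimal Python sketch, assuming each session is recorded as the ordered list of option labels the user chose. The function name and the option labels in the example are hypothetical:

```python
from collections import Counter


def pathway_stats(sessions_options):
    """Summarise pathways, i.e. ordered sequences of selected options.

    Returns (number of distinct pathways, number of pathways seen in exactly
    one session, dict mapping each repeated pathway to its session count).
    """
    paths = Counter(tuple(options) for options in sessions_options)
    singletons = sum(1 for n in paths.values() if n == 1)
    repeated = {path: n for path, n in paths.items() if n > 1}
    return len(paths), singletons, repeated
```

In this study, the first value would be 140. When the singleton count exceeds the number of repeated pathways, most trajectories were unique, as described above.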

As mentioned before, these 140 pathways included some long conversations, ranging from 65 to 171 messages. The frequency analysis of selected options showed that how often each option was chosen varied with the stage of the task and with the skills or strategies users chose to develop (Table 6).

Table 6.

Options selected by users according to the self-regulation phase.

3.6. R.Q.6. What Are the Most Common Replica Sequences?

Table 7 shows the most common sequences of replicas (chatbot replies) for each total number of interactions. Shorter sequences centre on the first phase (planning), whereas longer ones focus more on monitoring tasks. This suggests that longer conversations with the chatbot started later, when users were already performing their tasks.

Table 7.

Most common sequences of EDUguia chatbot replicas for each total number of interactions.
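Table 7 can be thought of as grouping the chatbot-reply sequences by length and keeping the most frequent sequence in each group. The following is a minimal Python sketch of that grouping, assuming each session is the ordered list of reply identifiers the chatbot displayed. The function name and identifiers are hypothetical:

```python
from collections import Counter, defaultdict


def most_common_by_length(sequences):
    """Return {length: (sequence, count)}: the most frequent sequence of
    chatbot replies for each sequence length, in ascending length order."""
    by_length = defaultdict(Counter)
    for seq in sequences:
        by_length[len(seq)][tuple(seq)] += 1
    # most_common(1) returns a one-element list [(sequence, count)].
    return {n: c.most_common(1)[0] for n, c in sorted(by_length.items())}
```

If each reply were then labelled with the self-regulation phase it supports, this output would show whether long sequences are dominated by monitoring replies, as reported in Table 7.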

3.7. R.Q.7. How Do Users Interact with the Infographic Resources for SRL Embedded in the Tool?

Regarding the resources offered in infographic format, the most frequently shown infographic (77 times) was “Have you already defined your objectives?”. In the script and flowchart used to design the chatbot, this infographic opens the conversation and provides strategies for defining goals, a fundamental action of the planning phase in the cyclical process of self-regulated learning. This suggests that a substantial number of users began the conversation by indicating that they were at the beginning of the task and choosing the option “define objectives”.

The second most frequently shown infographic (54 times) was “Have you thought about what to do first to achieve your learning objectives?”. This suggests that some users, after viewing the infographic “Have you already defined your objectives?”, answered “yes” to the chatbot’s next message, “Defining objectives is important, but it is not so easy! Do you know how to define SMART objectives?”. This answer led directly to the message “Meeting a goal can involve several actions. Do you want to know how you can achieve your goals?”. Answering “yes” again displayed the message “Let’s start from the beginning”, followed by the infographic “Have you thought about what to do first to achieve your learning objectives?”.

The infographic “What do you want to achieve at the end of the whole process?” was shown 49 times. This suggests that some users, after viewing the infographic “Have you already defined your objectives?”, answered “no” to the chatbot’s message “Defining objectives is important, but it is not so easy! Do you know how to define SMART objectives?”.

Table 8 lists the infographics from the most to the least shown. They are organised by the self-regulation phase that each was designed to support.

Table 8.

Most viewed infographic resources for self-regulation enhancement.

3.8. R.Q.8. How Do Users Respond to the Chatbot’s Open Questions?

Regarding the points in the conversation where users could type an answer, 34 sessions reached the question “What kind of task are you doing?”. Of these, 26 users answered: essay (sixteen cases), peer assessment (four cases), programming (one case), draft upload (one case), writing (one case), PowerPoint presentation (one case), “think about how to make the client receive the new commands and the logic of the game” (one case), and “comparing two instructional models” (one case). The remaining eight users closed the conversation before answering.

In addition, 13 users reached the question “How do you feel?”. Asked about their feelings and emotions while performing the task, students answered: bad (two cases), overwhelmed (two cases), sad (one case), saturated with tasks (one case), tired (one case), a little overwhelmed (one case), fine (one case), and one nonsensical answer. The remaining three users closed the conversation before answering.
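The counts for the open questions follow simple arithmetic: users who reached a question minus users who answered it gives the number who left before answering. The following is a minimal Python sketch, assuming the typed answers have already been coded into short labels. The function name is hypothetical:

```python
from collections import Counter


def open_question_summary(reached, answers):
    """Tally coded answers to an open question and count non-responders.

    reached: number of sessions that displayed the question.
    answers: one coded answer per user who replied.
    """
    tally = Counter(answers)
    left_without_answering = reached - len(answers)
    return tally, left_without_answering
```

For the “How do you feel?” question, 13 sessions reached it and 10 coded answers were given, so the function reports three users who left before answering. For the task-type question, the listed categories sum to 26 answers. With 34 sessions reaching that question, this yields the eight non-responders mentioned above.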

To allow students to answer these questions, the text field had to remain enabled by default. As a result, users could type messages to the conversational agent at several points. This gave us the opportunity to analyse some of these messages, which reveal students’ expectations, needs, behaviours, and emotions when using the tool.

For example, one user (Guest Curry) wrote “are you an AI?”, “you shut up?”, and “I don’t want to!”. These messages show an informal, low-register use of language and vocabulary that students would not use with their university professors. Other messages illustrate the tendency to attribute human characteristics to the conversational agent: “Hello handsome” (Guest Mari), “What is your name?” (Guest Roberts), and “Hello, what else can you tell me?” (Guest Yokoyama).

When the tool was presented, students were told that EDUguia was a chatbot for self-regulation and would therefore not answer questions about the course or the task. Even so, some users expected it to answer questions such as “How should I deliver the work?” (Guest Catalano), “How do you upload a task in the workshop?” (Guest Brooks), “once I have submitted the first task what should I do?” (Guest Green), “When are the exam days?” (Guest Hunter), and “I would like to know how I can visualize the feedback given to my work” (Guest Blackburn).

We also found that, even when the chatbot offered response options after a question, some users typed their answer in the open text field instead.

3.9. Limitations and Implications

The development of the EDUguia chatbot faced several drawbacks and challenges that should be considered. First, the tool was only one part of a larger R + D project, so it had to be conceived, designed, and launched within a limited time period. In addition, the project had a limited budget for developing the tool. As a result, some features that could have improved its functionality had to be discarded.

The kind of data that could be collected should also be considered a limitation, particularly for the analysis carried out in this study. The absence of log-in information limited our ability to identify clearer usage patterns or user profiles. It also prevented us from analysing differences between students attending an online university and those attending a traditional university, or between students from different courses performing different tasks. Moreover, given the characteristics of the data, some conversations may have been held not by students but by teachers or members of the project team.

One further aspect of this case study may be less a limitation than a factor that shaped the results. The EDUguia chatbot was embedded in a specific didactic sequence that required students to undertake a considerable number of actions, including two rounds of peer assessment. The complexity of these activities and tasks, and the time commitment they involved, could have affected students’ willingness to use the tool. Although the tool was developed to foster the self-regulated learning process, students may have struggled to use it properly. A plausible reason is that students focused on delivering the tasks and completing the activities rather than on the self-regulation process. Notwithstanding these limitations, to our knowledge, few studies have analysed conversation pathways with digital tools for enhancing self-regulation skills in higher education. This work could therefore be useful to other educators, designers, and developers interested in developing a tool with this goal. Section 5.1, “Findings and Their Impact on Design”, shares relevant lessons from this experience that could guide future studies.

4. Discussion

This study presents an analysis of conversation pathways with a chatbot tutor designed to enhance self-regulation skills in higher education. As mentioned before, the conversation flow of the EDUguia chatbot was built as a conversation tree, and no NLP was used. This made it possible to analyse how users, namely higher education students, interacted with a tool designed to enhance the self-regulation of learning while they performed a complex task in different courses.
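The following minimal Python sketch illustrates what a conversation tree without NLP implies. It encodes a small fragment of the planning path described in Section 3.7 as a dictionary of nodes. Each node holds a chatbot message and a fixed set of option labels that lead to the next node. The message texts come from this article, but the node identifiers, option labels such as "Next", and the exact links are hypothetical; this is not EDUguia's actual script. Because input is matched only against option labels, typed free text that matches no option is not understood:

```python
# Hypothetical fragment of a rule-based dialogue tree (no NLP).
# node id -> (message shown by the chatbot, {option label: next node id})
TREE = {
    "start": ("Shall we start?", {"Yes": "phase"}),
    "phase": ("At what point in the task are you?",
              {"Define objectives": "objectives_info"}),
    "objectives_info": ("[infographic] Have you already defined your objectives?",
                        {"Next": "smart"}),
    "smart": ("Defining objectives is important, but it is not so easy! "
              "Do you know how to define SMART objectives?",
              {"Yes": "actions", "No": "achieve_info"}),
    "actions": ("Meeting a goal can involve several actions. "
                "Do you want to know how you can achieve your goals?",
                {"Yes": "restart"}),
    "restart": ("Let's start from the beginning", {"Next": "first_info"}),
    "first_info": ("[infographic] Have you thought about what to do first "
                   "to achieve your learning objectives?", {"Next": "end"}),
    "achieve_info": ("[infographic] What do you want to achieve at the end "
                     "of the whole process?", {"Next": "end"}),
    "end": ("[goodbye GIF]", {}),
}


def walk(tree, inputs, start="start"):
    """Replay user inputs through the tree; return the messages shown."""
    node = start
    shown = [tree[node][0]]
    for text in inputs:
        options = tree[node][1]
        if text in options:
            node = options[text]
        # Otherwise the input is not an option label: in this sketch the bot
        # cannot interpret it and simply repeats the current message.
        shown.append(tree[node][0])
    return shown
```

Replaying `["Yes", "Define objectives", "Next", "Yes", "Yes", "Next", "Next"]` reaches the goodbye GIF through the “yes” branch. Typing free text such as a question at any node leaves the conversation where it was. This mirrors the mismatch between users' open-text messages and the option-based flow reported in the Results.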

The EDUguia chatbot was developed considering not only cognitive regulation but also the motivational and emotional aspects of the self-regulated learning process. In this regard, Scheu and Benke [36] have highlighted the importance of research on affective and motivational factors when SRL is supported by digital assistants. Our findings show that students expressed negative emotions when facing a complex task, so it is crucial to develop tools that can offer them suitable support and guidance.

In our case, 119 sessions ended after the chatbot’s message “Shall we start?” with no response from the user. In other words, 35% of the sessions ended before the user had interacted with the chatbot. Since 340 conversation sessions were analysed, the remaining 221 (65%) continued beyond that point. In their evaluation of a chatbot for health literacy, Mokmin and Ibrahim [22] reported similar percentages of users exiting the application. Judging by the last message before users left, in some sessions the conversation never actually took place: it was abandoned at the first interaction. This could be due to curiosity about a novel tool, with users clicking on the link just to see what the chatbot looked like. However, this lack of engagement could also stem from a lack of time to spend on an untested activity, disinterest, or unfamiliarity with the tool.

Judging by the views of infographic resources and messages, most interactions occurred in the sections of the conversation flow designed to support strategies for the forethought phase of self-regulated learning. One possible explanation is that students needed more support at the beginning of the task. However, the subsequent decrease in use could also reflect the fading of the initial novelty, or users not finding the type of tool or answers they expected. In this regard, as mentioned before, some users typed responses in the open text field when they were expected to select an option. Brandtzaeg and Følstad [29] have warned that not finding the expected responses can frustrate users.

As other authors have indicated, the particularities of communication between humans and chatbots must be considered from an ethical point of view [37]. As reported in the Results section, we found examples of users attributing human characteristics to the conversational agent. Another interesting finding concerned the vocabulary and register used by the students [15]: although the messages were generally respectful, some expressions were not entirely appropriate for an academic context.

Finally, in line with Du et al. [41] and guided by the findings of this study, we recommend implementing this kind of tool in different learning activities and courses, allowing for more interactions with the chatbot throughout the semester.

5. Conclusions

Based on our results, we offer some concluding remarks, starting with the impact of our findings on the design of tools for self-regulated learning.

5.1. Findings and Their Impact on Design

Our findings are relevant to the link between design and user experience, but they can also be related to implementation decisions. First, the most frequent conversation consisted of four messages (N = 119), and this should be taken into account when designing the initial messages. These should be engaging enough to carry the conversation forward smoothly and to encourage extensive use of the tool.

Most user sessions started on the day the intervention and the activities of the didactic sequence were presented in class or on the virtual campus. This suggests that the curiosity aroused by novelty is a powerful driver for initiating interaction; however, it is not enough to sustain users’ interactions with the tool over a long period. It is also possible that users initiated sessions on those specific dates because a teacher or project researcher had introduced the tool and encouraged its use.

From an intervention design perspective, the decrease in sessions over the semester may indicate that the tool was not embedded in the learning management system in a sufficiently compelling way (it appeared as a link resource in the Moodle interface).

The average duration of one-day sessions (3 min 32 s) may suggest that infographics were not an adequate resource for conveying information. Reading an infographic in full and making sense of its content likely takes longer than most users spent in a session.

Finally, the analysis of the open text field showed that users requested guidance or help with different aspects of the process involved in the didactic sequence. This suggests that the flow of the tool should be reworked to meet users’ expectations (guidance on following the didactic sequence) while still providing scaffolding for self-regulated learning. One option would be a less general tool, centred more closely on the didactic sequence and its activities and connecting them with the self-regulatory process. In that way, students might realise that the tool is closely connected with what they are doing in the course, while also benefiting from its orientation towards self-regulation.

5.2. EDUguia Chatbot and Self-Regulated Learning

The EDUguia chatbot is a digital tool designed collaboratively with different stakeholders, especially the university students who would be its end users.

The dialogue flow of this tool was developed following theoretical contributions on the process of self-regulated learning. Since this process requires strategies and skills that can be trained, the chatbot offers students the possibility of training them through dialogue. In this sense, the tool appeals to the learner’s agency by allowing them to select options according to the stage they have reached in completing a task. For each of these stages, the tool offers resources that seek to support the cognitive, metacognitive, motivational, and affective dimensions of the learning process.

The aim of this work was to explore how users interacted with this tool in order to understand whether any relationship could be established with the self-regulation process it seeks to support.

As for our results, the fact that the most frequent conversation consisted of four messages suggests that the interaction is not extensive enough to develop complex skills, such as those expected of a self-regulated learner. Likewise, most sessions took place within a single day, with an average duration of 3 min 32 s. This is inconsistent with the notion of self-regulation as a cyclical process that requires iterations over time.

As noted in Section 5.1, most sessions took place on the dates when the tool was presented in class, which may point to the importance of lecturers encouraging students to use it. Moreover, lecturers would play a crucial role in integrating the tool into the pedagogical sequence of their course in order to make the most of the chatbot.

Our results also show that shorter sequences centre on the first phase (planning), whereas longer ones focus more on monitoring tasks. This may suggest that students seek support while performing their tasks. Furthermore, resources related to the forethought phase were consulted more often, which could also reflect the novelty effect of the tool. The most consulted infographics, and the paths that led to them, suggest that some students require support in defining their learning goals when faced with a complex task.

Regarding the motivational and affective dimensions of self-regulated learning, the responses to the open question “How do you feel?” suggest that this is an important factor that is sometimes not fully considered. Most responses expressed negative feelings, which indicates that tools should be carefully designed to accommodate this dimension and provide adequate support for it.

In conclusion, this study suggests that the tool has the potential to support all dimensions of the self-regulation process, but several aspects of its implementation must be taken into account. Future work should also explore user-related factors, such as previous experience with chatbots, level of metacognition, and overall digital literacy.

Although the implementation of this chatbot has concluded, the findings of this study will guide future developments aimed at addressing the shortcomings identified. To improve the tool’s functionalities for the effective development of self-regulation skills and strategies, we suggest that developers work collaboratively with users. In this way, options can be assessed to improve engagement with the interaction, sustain interactions over time, and optimise the information provided by the chatbot.

Author Contributions

Conceptualization, L.M., M.F.-F. and E.P.; methodology, L.M.; software, E.P.; validation, L.M.; formal analysis, L.M. and E.P.; investigation, L.M. and M.F.-F.; resources, L.M., M.F.-F. and E.P.; data curation, L.M. and E.P.; writing – original draft preparation, L.M. and M.F.-F.; writing – review and editing, L.M.; visualization, L.M. and E.P.; supervision, L.M. All authors have read and agreed to the published version of the manuscript. This article is part of the first author’s PhD thesis by publication. The writing was carried out in the context of AYUDAS PARA CONTRATOS PREDOCTORALES PARA LA FORMACIÓN DE DOCTORES (grants for predoctoral contracts for doctoral training; PRE2020-095434), funded by MCIN/AEI.

Funding

This research was funded by the Spanish State Research Agency (Spain), grant number AEI/PID2019-104285GB-I00. The article processing charges (APCs) of this publication were covered by (1) Ajuts de la Universitat de Barcelona per publicar en accés obert – Convocatòria 2024 (University of Barcelona open-access publication grants, 2024 call), and (2) the Departament de Recerca i Universitats de la Generalitat de Catalunya (Department of Research and Universities, Government of Catalonia) through the consolidated research group Learning, Media & Social Interactions (2021 SGR00694).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and the R + D project was approved by the Institutional Review Board (IRB00003099) of the Universitat de Barcelona (date of approval: 17 November 2021) and the Ethics Committee of the Universitat de Catalunya (date of approval: 15 December 2021).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Villegas-Ch, W.; Arias-Navarrete, A.; Palacios-Pacheco, X. Proposal of an Architecture for the Integration of a Chatbot with Artificial Intelligence in a Smart Campus for the Improvement of Learning. Sustainability 2020, 12, 1500.

- Hobert, S. How Are You, Chatbot? Evaluating Chatbots in Educational Settings–Results of a Literature Review; DELFI; Gesellschaft für Informatik e.V.: Bonn, Germany, 2019.

- Fichter, D.; Wisniewski, J. Chatbots Introduce Conversational User Interfaces. Online Search 2017, 41, 56–58.

- Zahour, O.; El Habib Benlahmar, A.E.; Ouchra, H.; Hourrane, O. Towards a Chatbot for educational and vocational guidance in Morocco: Chatbot E-Orientation. Int. J. Adv. Trends Comput. Sci. Eng. 2020, 9, 2479–2487.

- Dutta, D. Developing an Intelligent Chat-Bot Tool to Assist High School Students for Learning General Knowledge Subjects. Georgia Institute of Technology. 2017. Available online: http://hdl.handle.net/1853/59088 (accessed on 21 May 2024).

- Cahn, B.J. CHATBOT: Architecture, Design, & Development. Master’s Thesis, University of Pennsylvania School of Engineering and Applied Science Department of Computer and Information Science, Philadelphia, PA, USA, 2017. Available online: https://www.academia.edu/download/57035006/CHATBOTthesisfinal.pdf (accessed on 21 May 2024).

- Han, V. Are Chatbots the Future of Training? TD Talent Dev. 2017, 71, 42–46.

- Smutny, P.; Schreiberova, P. Chatbots for learning: A review of educational chatbots for the Facebook Messenger. Comput. Educ. 2020, 151, 103862.

- Monereo, C. La evaluación del conocimiento estratégico a través de tareas auténticas. Rev. Pensam. Educ. 2003, 32, 71–89.

- Durall Gazulla, E.; Martins, L.; Fernández-Ferrer, M. Designing learning technology collaboratively: Analysis of a chatbot co-design. Educ. Inf. Technol. 2023, 28, 109–134.

- Kerly, A.; Hall, P.; Bull, S. Bringing chatbots into education: Towards natural language negotiation of open learner models. Knowl.-Based Syst. 2007, 20, 177–185.

- Pradana, A.; Goh, O.S.; Kumar, Y.J. Intelligent conversational bot for interactive marketing. J. Telecommun. Electron. Comput. Eng. 2018, 10, 1–7.

- Laurillard, D. Rethinking University Teaching: A Conversational Framework for the Effective Use of Learning Technologies; Routledge: London, UK, 2013.

- D’aniello, G.; Gaeta, A.; Gaeta, M.; Tomasiello, S. Self-regulated learning with approximate reasoning and situation awareness. J. Ambient. Intell. Humaniz. Comput. 2016, 9, 151–164.

- Winkler, R.; Söllner, M. Unleashing the Potential of Chatbots in Education: A State-Of-The-Art Analysis. In Academy of Management Annual Meeting (AOM), Chicago, IL, USA, 2018. Available online: https://www.alexandria.unisg.ch/254848/1/JML_699.pdf (accessed on 21 May 2024).

- Suhni, A.; Hameedullah, K. Measuring effectiveness of learning chatbot systems on Student’s learning outcome and memory retention. Asian J. Appl. Sci. Eng. 2014, 3, 57–66.

- Lindsey, R.V.; Shroyer, J.D.; Pashler, H.; Mozer, M.C. Improving students’ long-term knowledge retention through personalized review. Psychol. Sci. 2014, 25, 639–647.

- Benotti, L.; Martínez, M.C.; Schapachnik, F. Engaging high school students using chatbots. In ITiCSE’14, Proceedings of the 2014 Conference on Innovation & Technology in Computer Science Education, Uppsala, Sweden, 21–25 June 2014; Association for Computing Machinery: New York, NY, USA, 2014; pp. 63–68.

- Hadwin, A.F.; Winne, P.H.; Nesbit, J.C. Roles for software technologies in advancing research and theory in educational psychology. Br. J. Educ. Psychol. 2005, 75, 1–24.

- Sandoval, Z.V. Design and implementation of a chatbot in online higher education settings. Issues Inf. Syst. 2018, 19, 44–52.

- Pengel, N.; Martin, A.; Meissner, R.; Arndt, T.; Meumann, A.T.; de Lange, P.; Wollersheim, H.W. TecCoBot: Technology-aided support for self-regulated learning? Automatic feedback on writing tasks with chatbots. arXiv 2021, arXiv:2111.11881.

- Mokmin, N.A.M.; Ibrahim, N.A. The evaluation of chatbot as a tool for health literacy education among undergraduate students. Educ. Inf. Technol. 2021, 26, 6033–6049.

- Følstad, A.; Brandtzaeg, P.B.; Feltwell, T.; Law, E.L.-C.; Tscheligi, M.; Luger, E.A. SIG: Chatbots for social good. In Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems; SIG06:1-SIG06:4; ACM: New York, NY, USA, 2018.

- Abd-Alrazaq, A.; Safi, Z.; Alajlani, M.; Warren, J.; Househ, M.; Denecke, K. Technical metrics used to evaluate health care Chatbots: Scoping review. J. Med. Internet Res. 2020, 22, e18301.

- Pilato, G.; Augello, A.; Gaglio, S. A modular architecture for adaptive ChatBots. In Proceedings of the 5th IEEE International Conference on Semantic Computing, Palo Alto, CA, USA, 18–21 September 2011.

- Okonkwo, C.W.; Ade-Ibijola, A. Chatbots applications in education: A systematic review. Comput. Educ. Artif. Intell. 2021, 2, 100033.

- Wollny, S.; Schneider, J.; Di Mitri, D.; Weidlich, J.; Rittberger, M.; Drachsler, H. Are We There Yet?—A Systematic Literature Review on Chatbots in Education. Front. Artif. Intell. 2021, 4, 654924.

- Dimitriadis, G. Evolution in education: Chatbots. Homo Virtualis 2020, 3, 47–54.

- Brandtzaeg, P.B.; Følstad, A. Why people use chatbots. In Internet Science, Proceedings of the 4th International Conference, INSCI 2017, Thessaloniki, Greece, 22–24 November 2017; Springer International Publishing: Cham, Switzerland, 2017; pp. 377–392.

- Zimmerman, B.J. Theories of self-regulated learning and academic achievement: An overview and analysis. In Self-regulated Learning and Academic Achievement: Theoretical Perspectives; Zimmerman, B.J., Schunk, D.H., Eds.; Lawrence Erlbaum: London, UK, 2001; pp. 1–37.

- Zimmerman, B.J. Becoming a Self-Regulated Learner: An Overview. Theory Into Pract. 2002, 41, 64–70.

- Panadero, E. A Review of Self-regulated Learning: Six Models and Four Directions for Research. Front. Psychol. 2017, 8, 422.

- Pintrich, P.R. The role of goal orientation in self-regulated learning. In Handbook of Self-Regulation; Academic Press: Cambridge, MA, USA, 2000; pp. 451–502.

- Pintrich, P.R. A Conceptual Framework for Assessing Motivation and Self-Regulated Learning in College Students. Educ. Psychol. Rev. 2004, 16, 385–407.

- Panadero, E.; Alonso-Tapia, J. ¿Cómo autorregulan nuestros alumnos? Modelo de Zimmerman sobre estrategias de aprendizaje. An. Psicol./Ann. Psychol. 2014, 30, 450–462.

- Scheu, S.; Benke, I. Digital Assistants for Self-Regulated Learning: Towards a State-Of-The-Art Overview. ECIS 2022 Research-in-Progress Papers. 2022. 46. Available online: https://aisel.aisnet.org/ecis2022_rip/46 (accessed on 21 May 2024).

- Song, D.; Kim, D. Effects of self-regulation scaffolding on online participation and learning outcomes. J. Res. Technol. Educ. 2021, 53, 249–263.

- Sáiz-Manzanares, M.C.; Marticorena-Sánchez, R.; Martín-Antón, L.J.; Díez, I.G.; Almeida, L. Perceived satisfaction of university students with the use of chatbots as a tool for self-regulated learning. Heliyon 2023, 9, e12843.

- Ortega-Ochoa, E.; Pérez, J.Q.; Arguedas, M.; Daradoumis, T.; Puig, J.M.M. The Effectiveness of Empathic Chatbot Feedback for Developing Computer Competencies, Motivation, Self-Regulation, and Metacognitive Reasoning in Online Higher Education. Internet Things 2024, 25, 101101.

- Calle, M.; Edwin, N.; Maldonado-Mahauad, J. Proposal for the Design and Implementation of Miranda: A Chatbot-Type Recommender for Supporting Self-Regulated Learning in Online Environments. In Proceedings of the LALA’21: IV Latin American Conference on Learning Analytics, 2021, Arequipa, Peru, 19–21 October 2021.

- Du, J.; Huang, W.; Hew, K.F. Supporting students goal setting process using chatbot: Implementation in a fully online course. In Proceedings of the 2021 IEEE International Conference on Engineering, Technology & Education (TALE), Wuhan, China, 5–8 December 2021; pp. 35–41. Available online: https://ieeexplore.ieee.org/document/9678564 (accessed on 21 May 2024).

- Lluch Molins, L.; Cano García, E. How to Embed SRL in Online Learning Settings? Design through Learning Analytics and Personalized Learning Design in Moodle. J. New Approaches Educ. Res. 2023, 12, 120–138.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).