Boosting Active Learning Through a Gamified Flipped Classroom: A Retrospective Case Study in Higher Engineering Education

Abstract

1. Introduction

2. Materials and Methods

2.1. Active Learning

2.2. Blended Learning

2.3. Flipped Classroom Learning

2.4. Gamified Learning

2.5. The Course of Interest

2.5.1. Course Historical Background

2.5.2. Course Setup

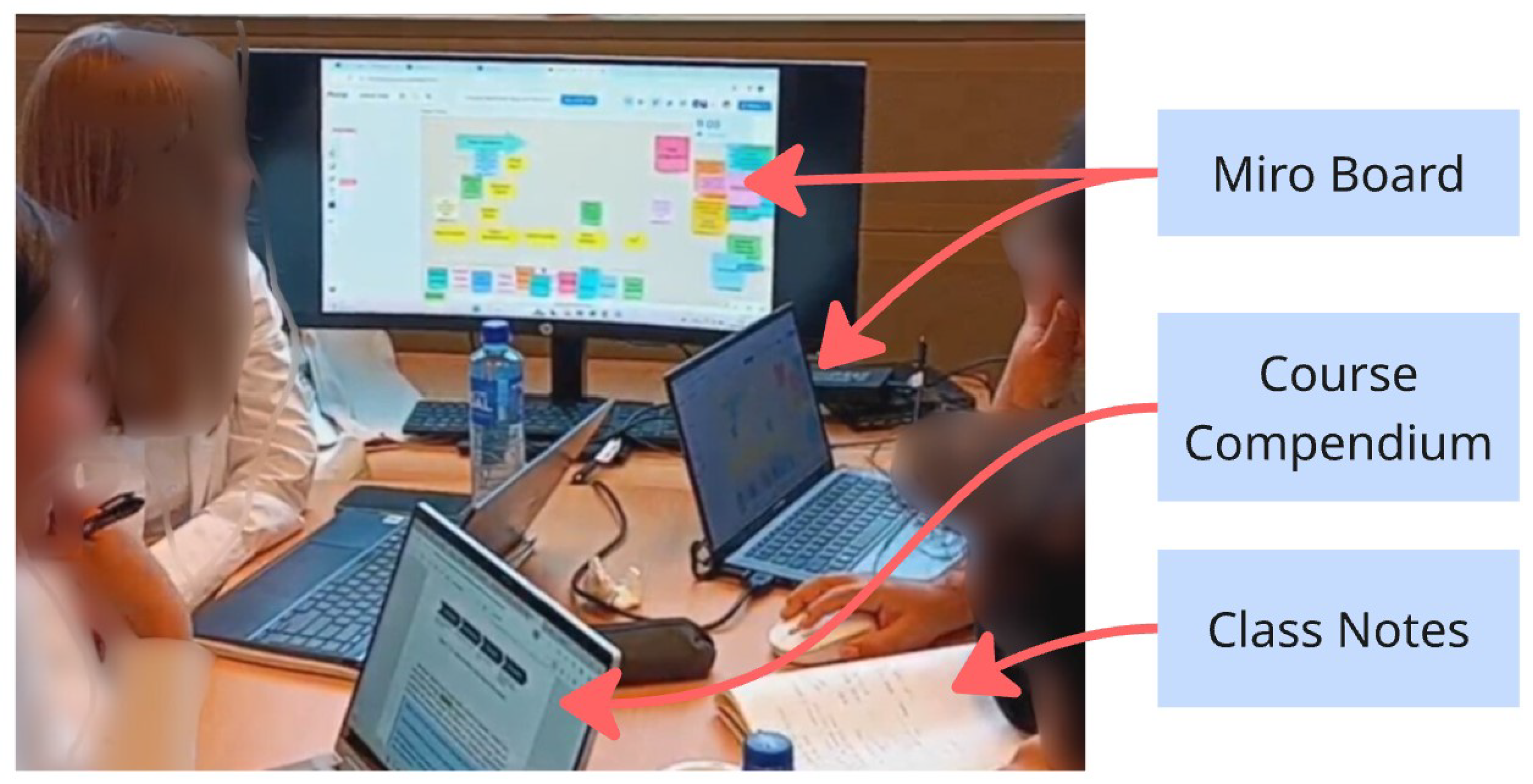

2.5.3. Gamified Flipped Classroom

2.5.4. Game Playing Approach

2.5.5. Concept Assignment

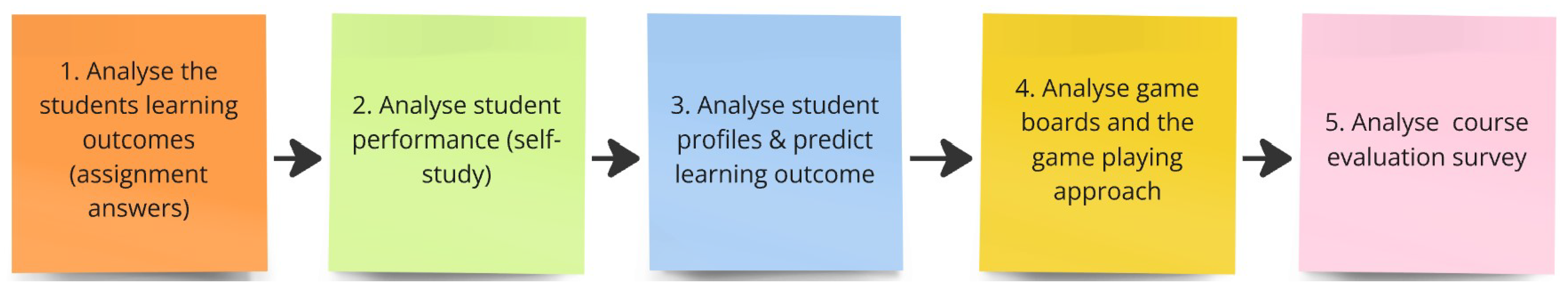

2.6. Methodology

2.6.1. Analysis of Learning Outcomes

Quote 1: “Time domain technique is ineffective for Complex Systems, Not ideal for systems with multiple overlapping signals or separating out components of a multi-frequency system”.—Student 1, Concept assignment, Answer to Question 10

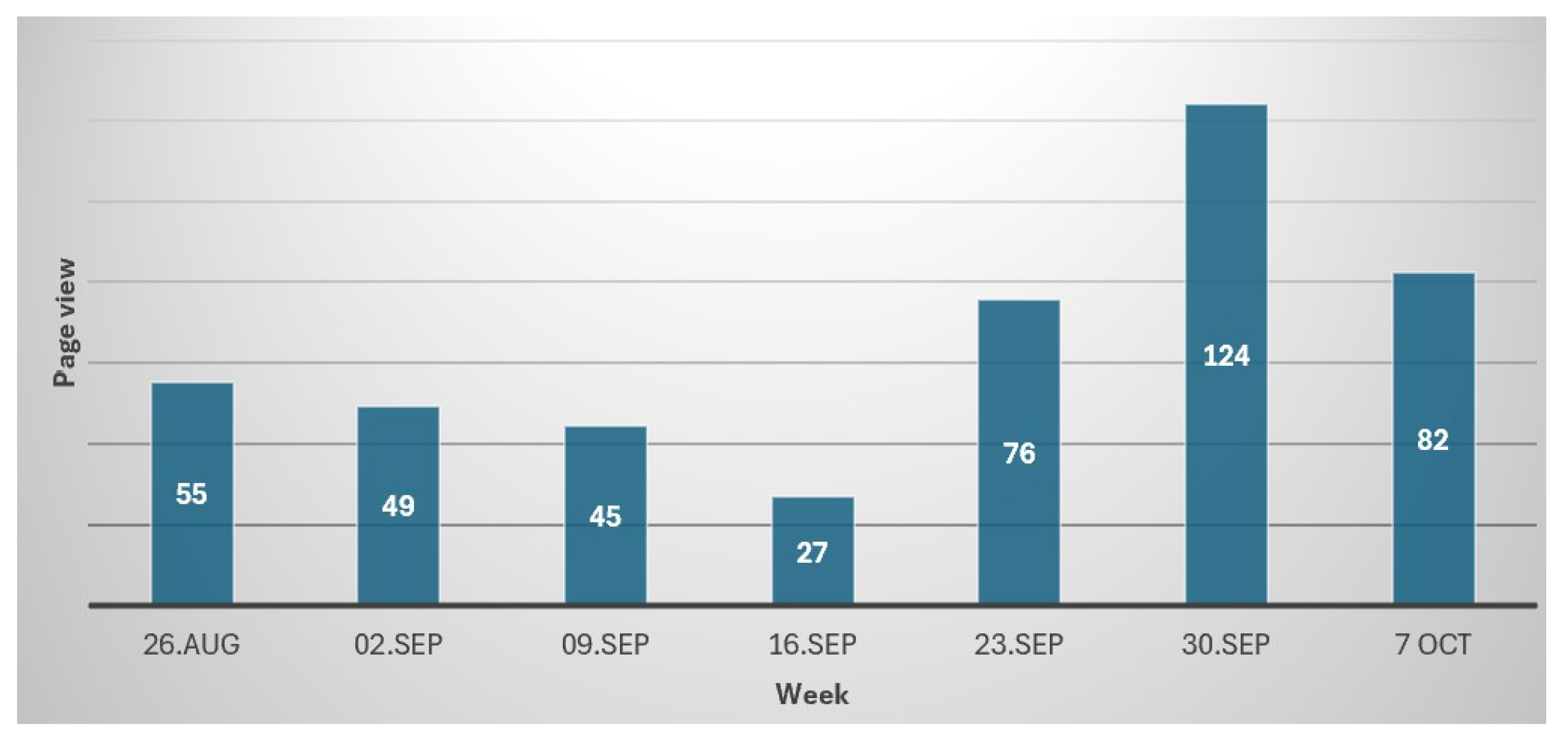

2.6.2. Student Learning Performance Analysis

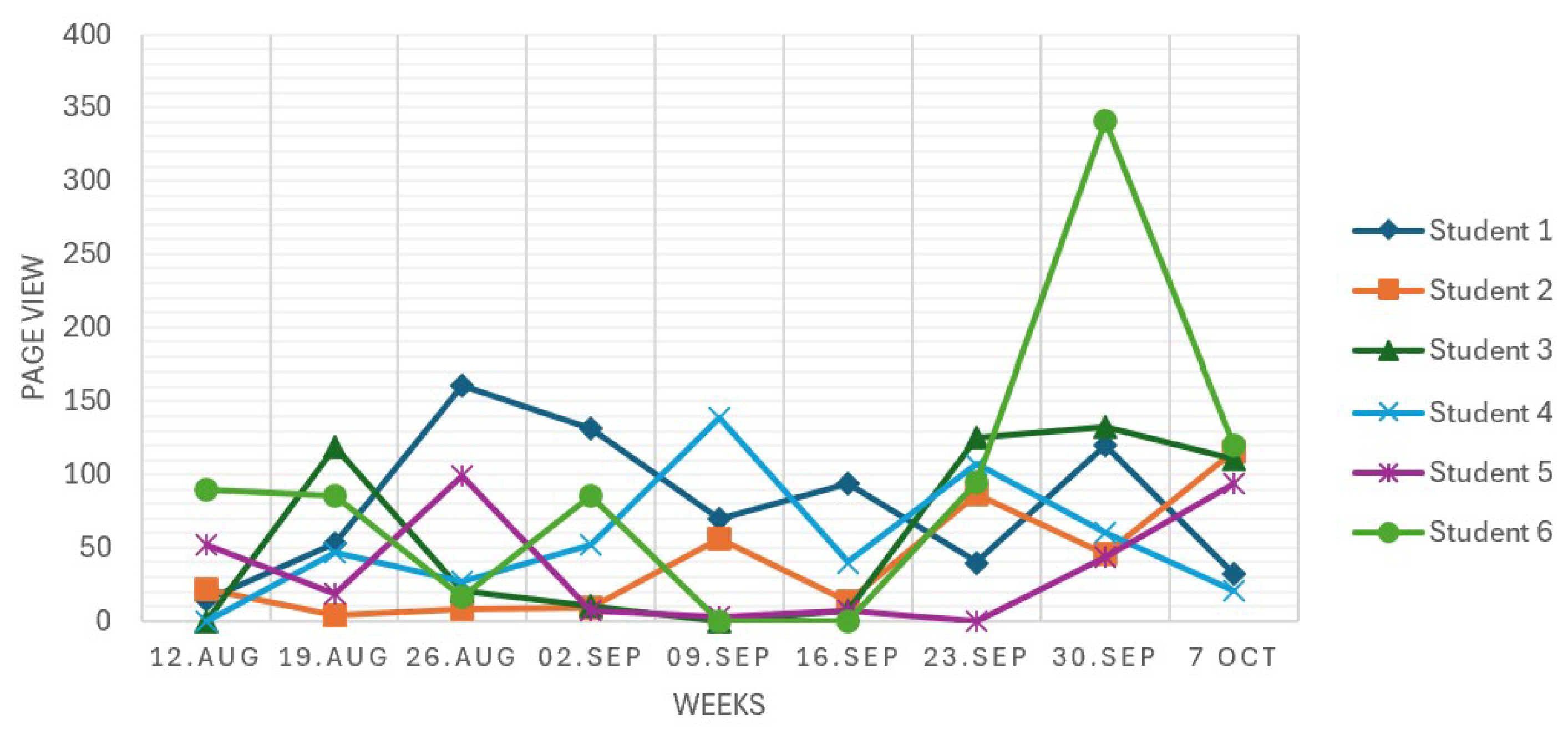

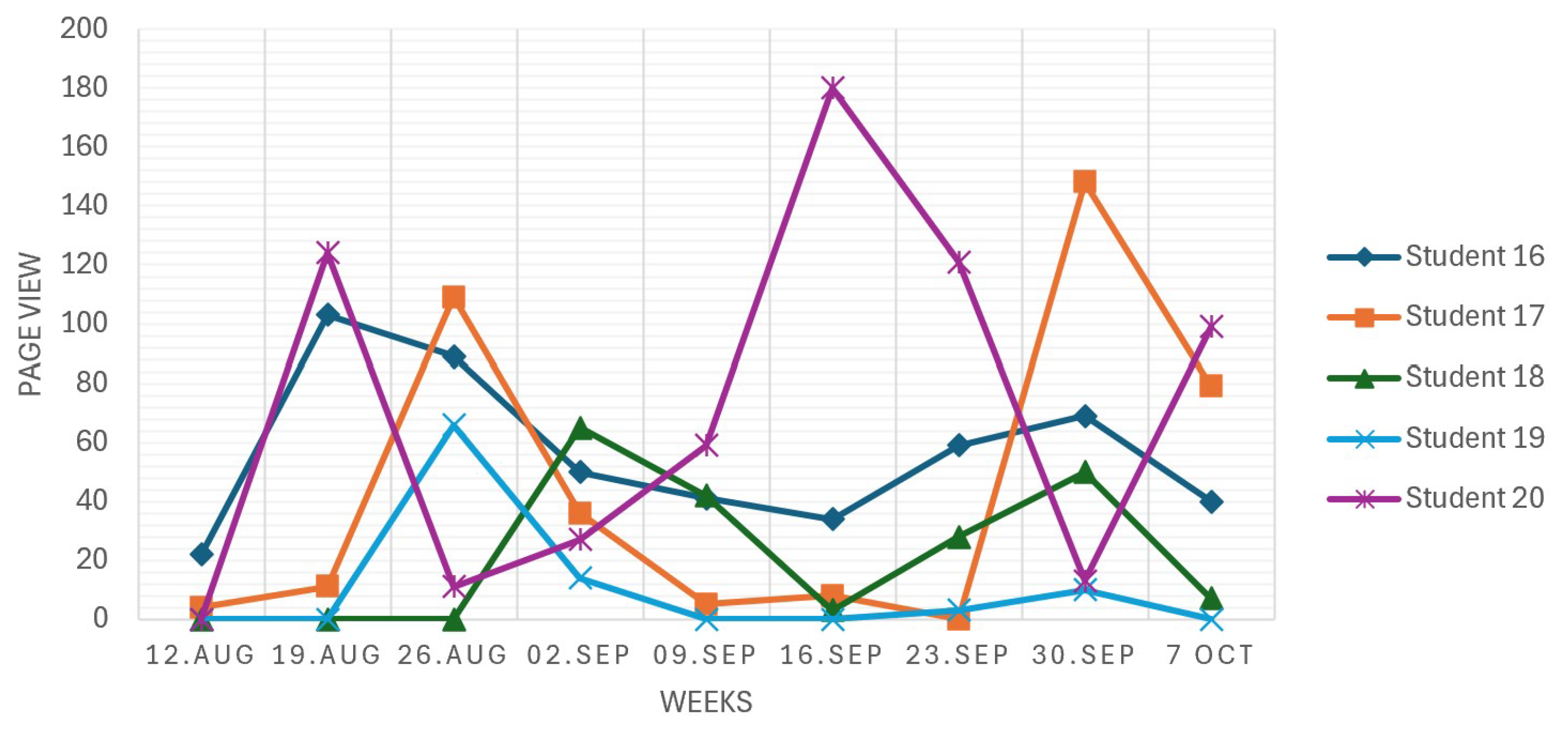

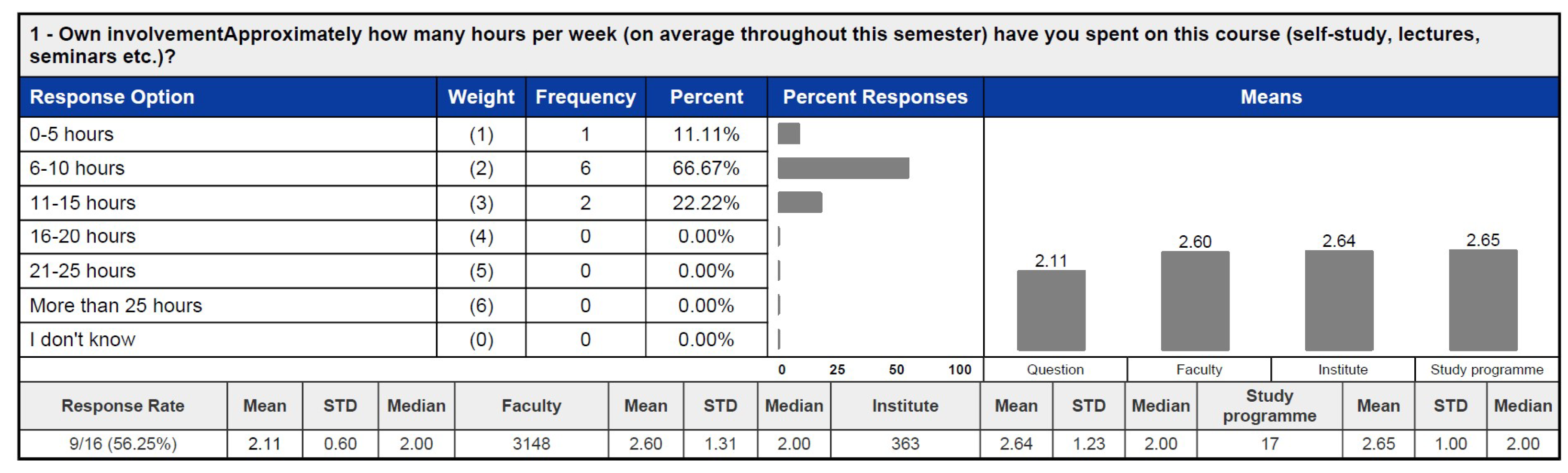

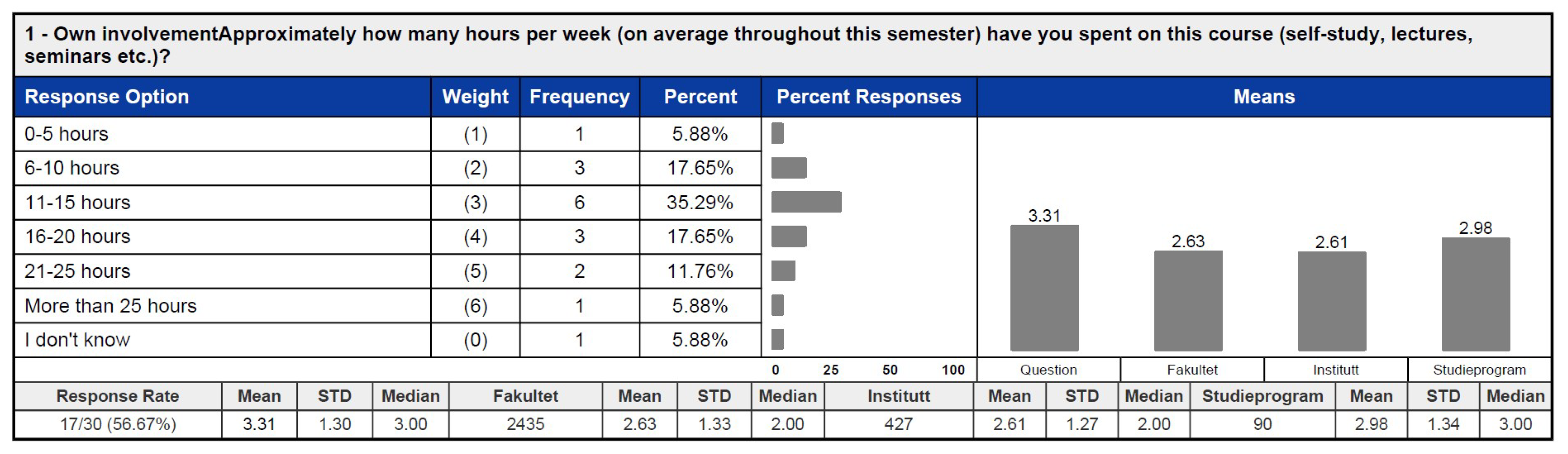

2.6.3. Student Profile Analysis

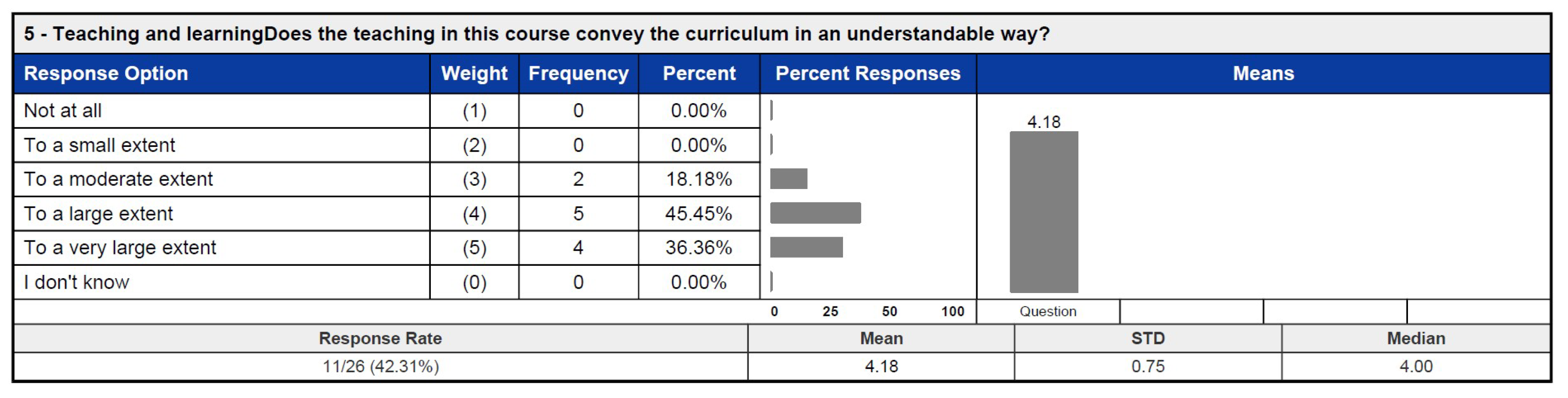

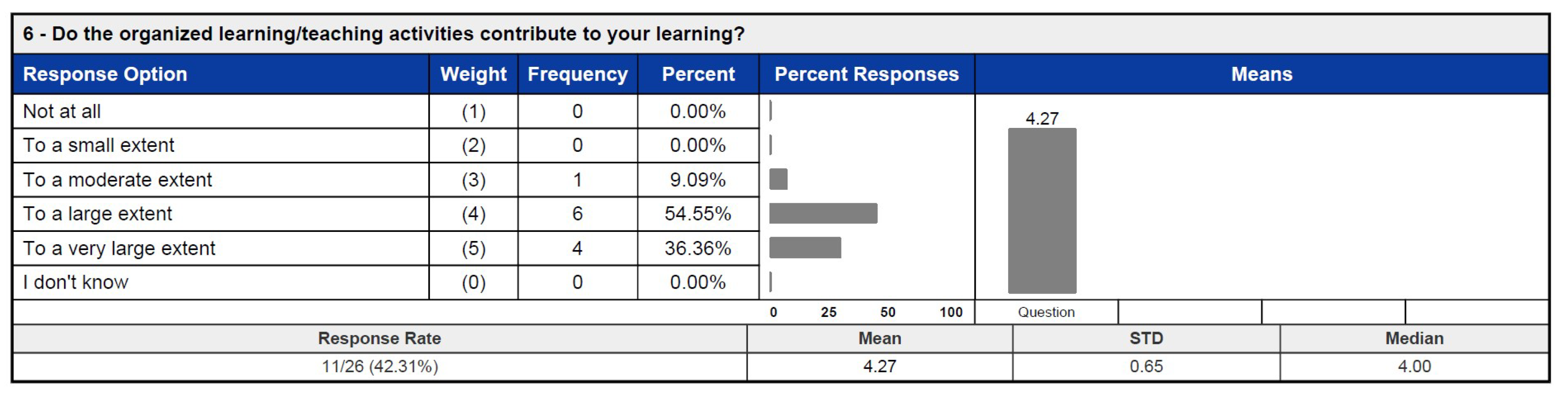

2.6.4. Classroom Performance and Course Evaluation Analyses

2.6.5. Ethical Considerations

3. Results

3.1. Observations on Concept Assignment Grades

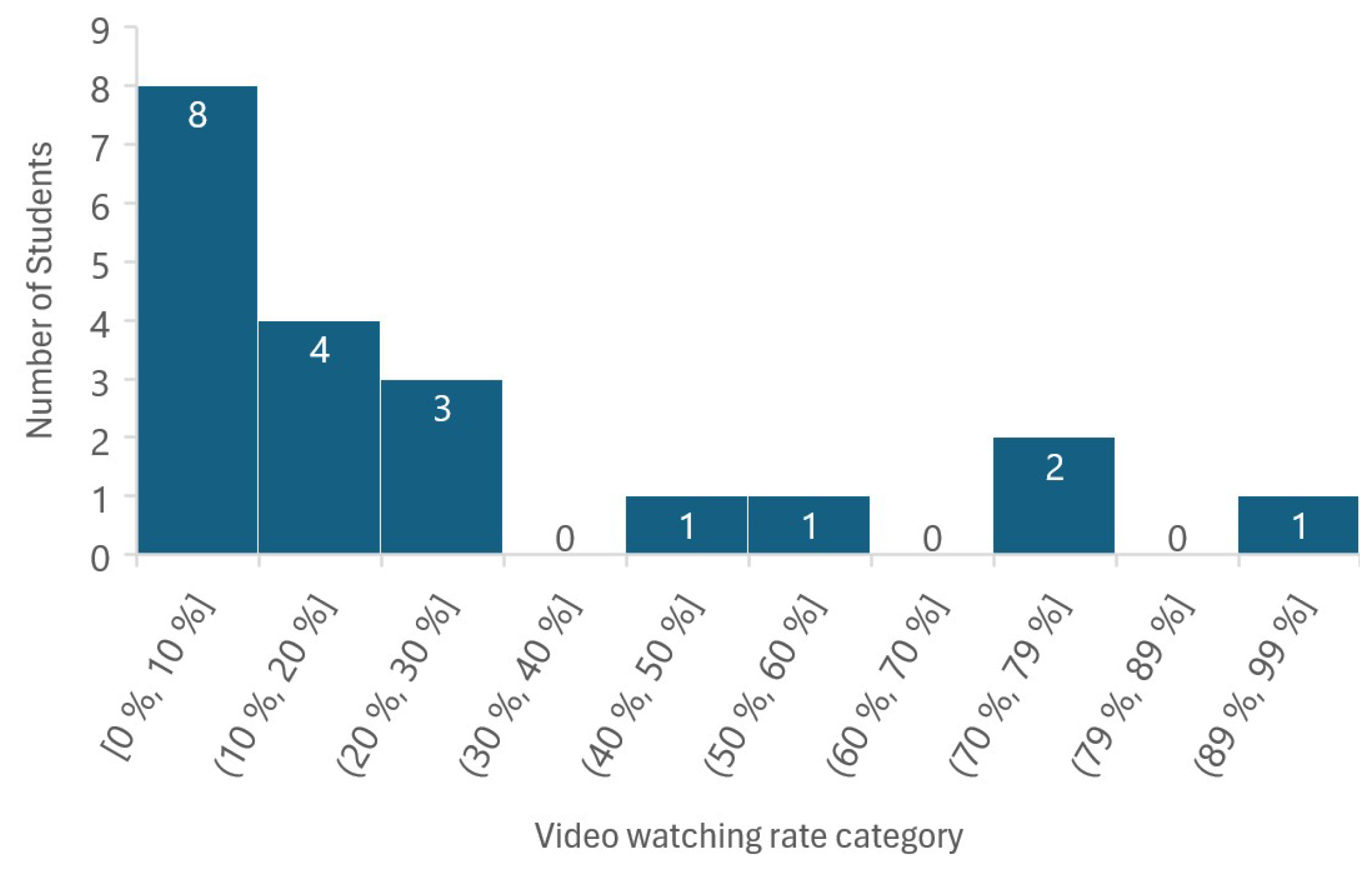

3.2. Observations Based on Course Analytics

3.3. Observations Based on Student Profiles: Predicted and Actual

3.4. Observations at the Workshops

Quote 2: “They do contribute to my learning and the teacher is very supportive and helpful, but at the beginning of the course it would be useful if some more lectures were held before we did the Miro exercises because now it is more turned into a guessing game”.—One student out of 21 students, Course Evaluation Report, Question 7

Quote 3: “Keep the exercises but add a few lectures in the beginning to give the students a good foundation”.—One student out of 21 students, Course Evaluation Report, Question 14

Quote 4: “I didn’t get this issue when I read the lecture notes, the game gives life to the text”.—One student out of 21 students, Comment after game session 2

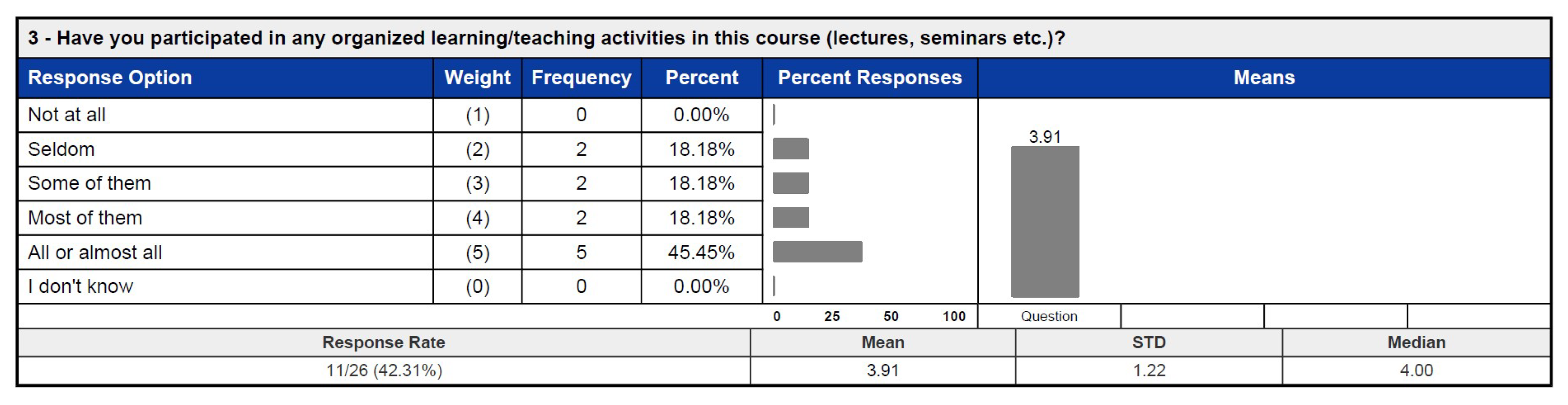

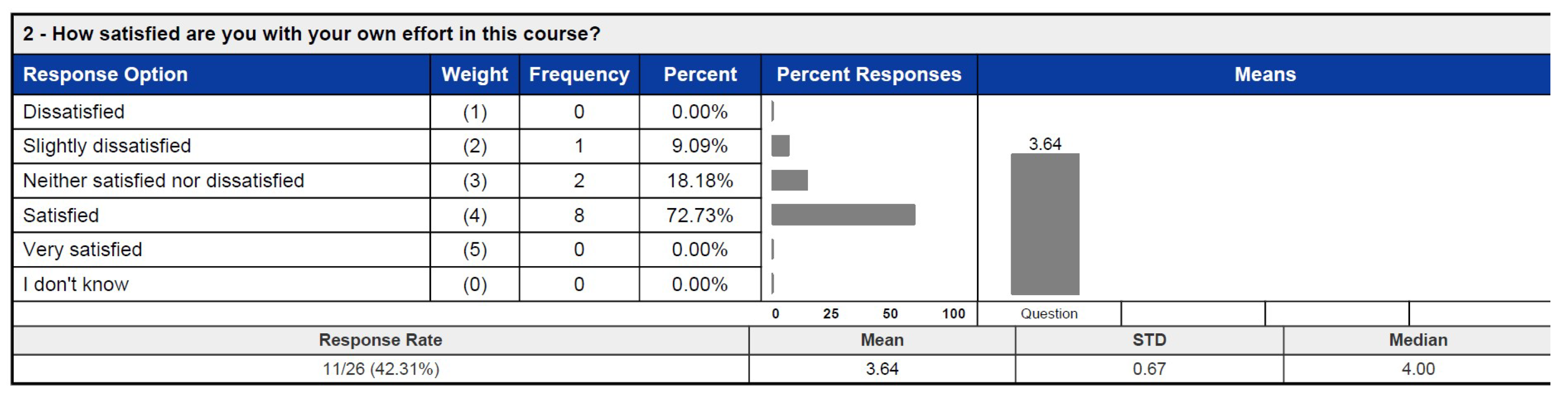

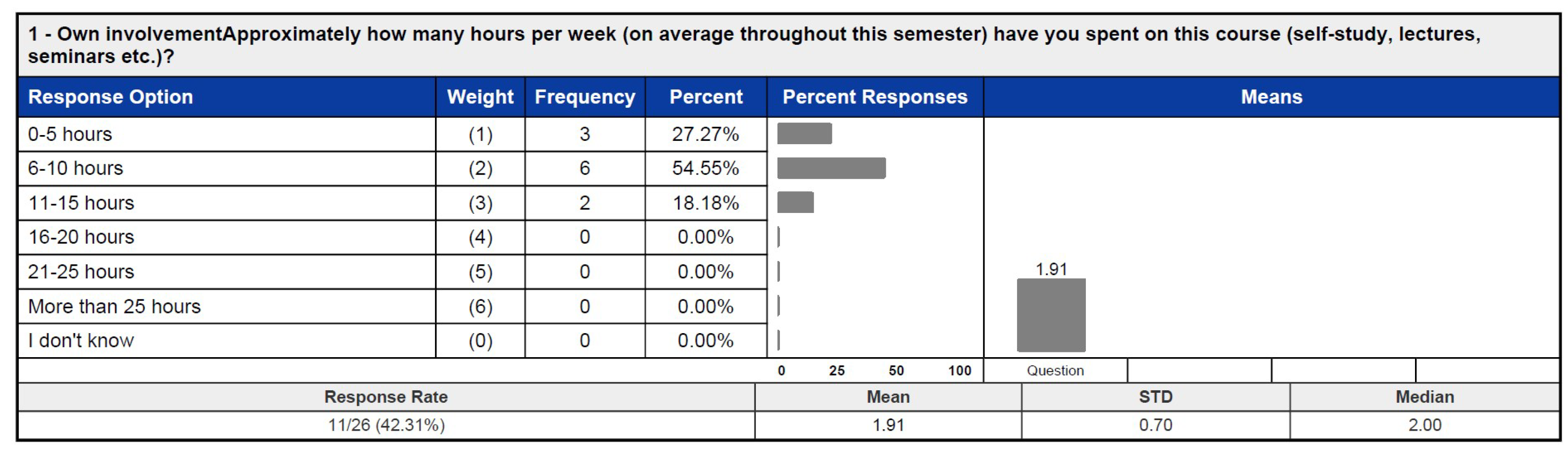

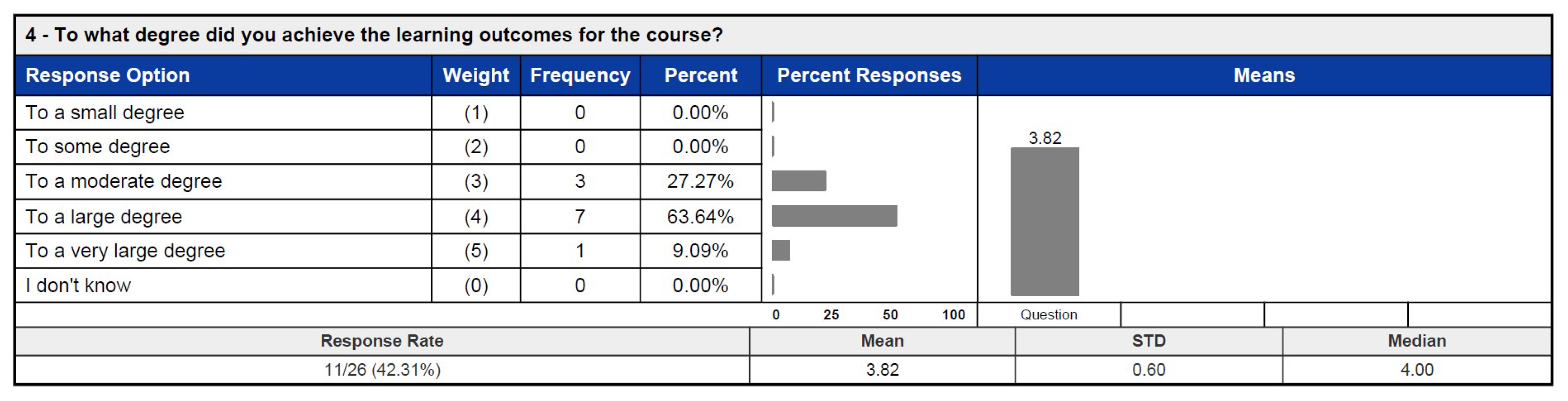

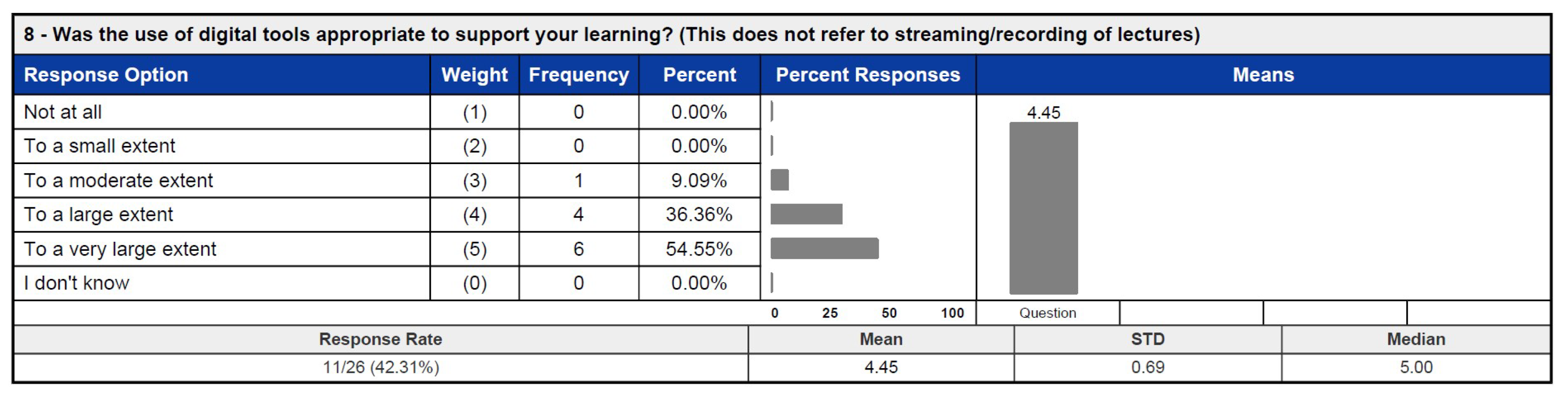

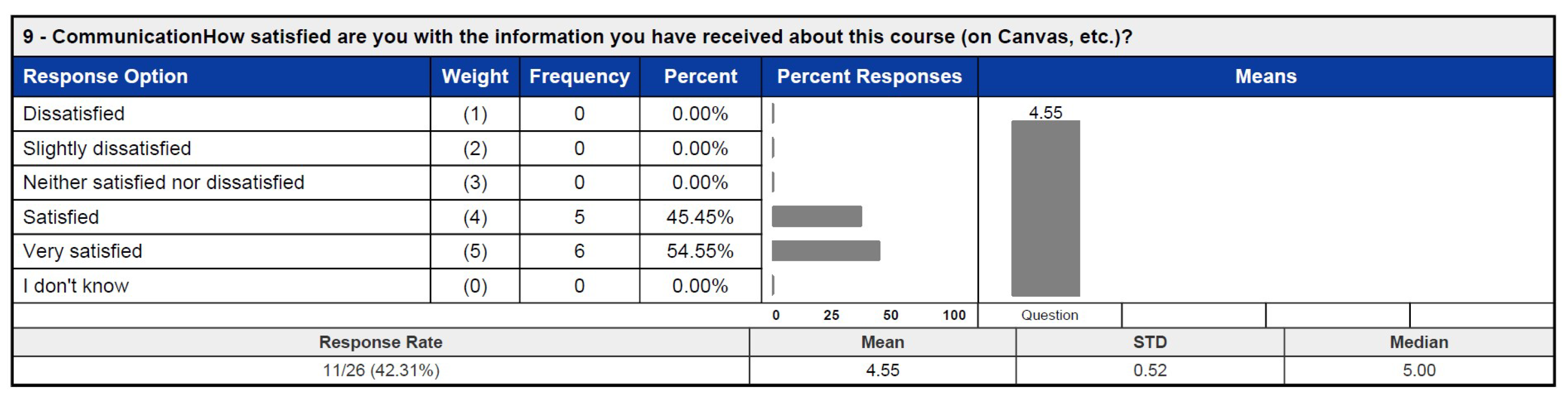

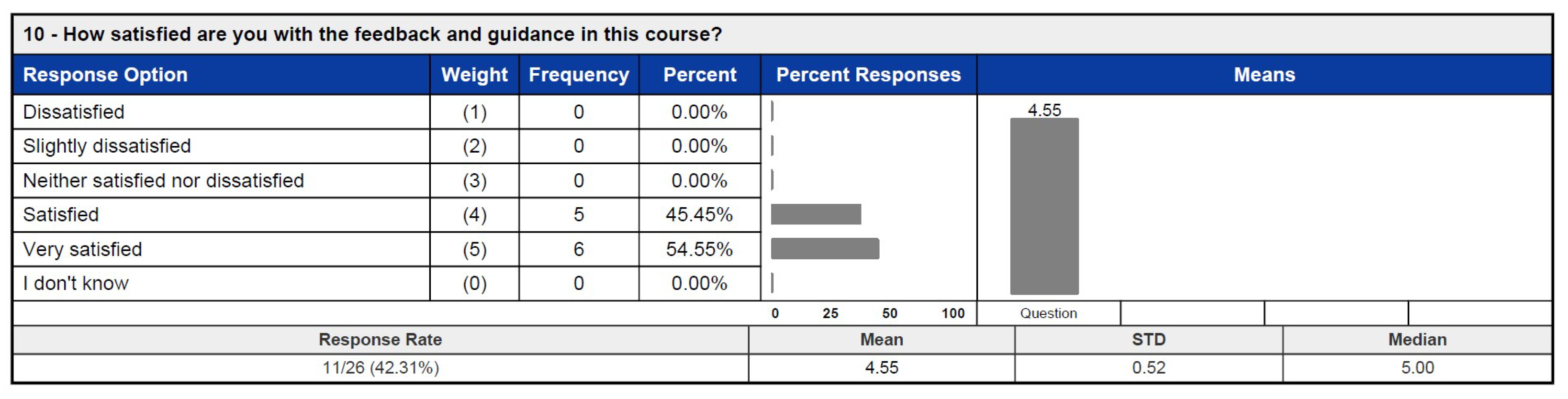

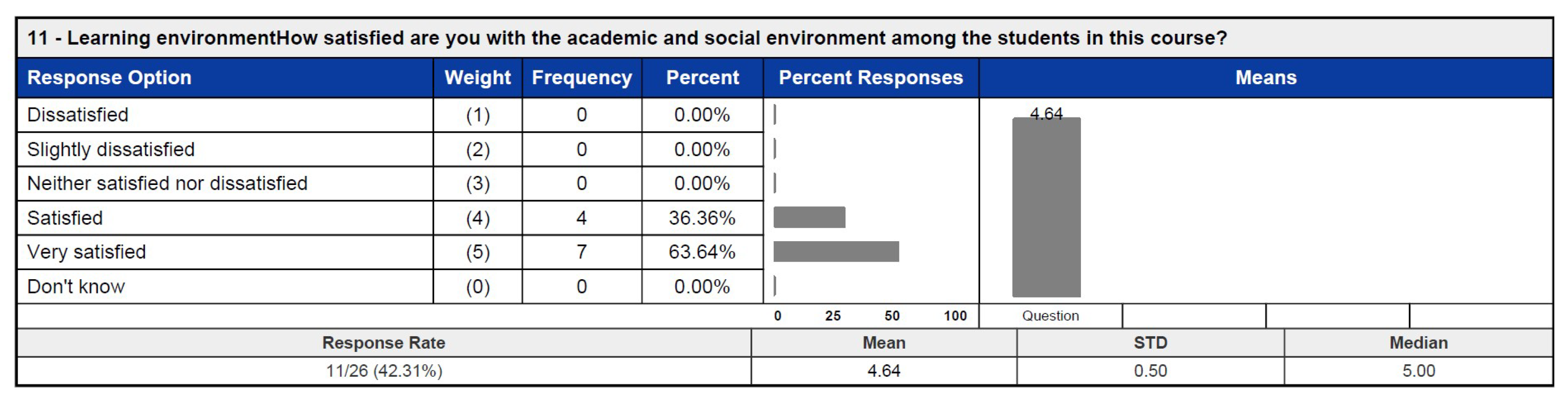

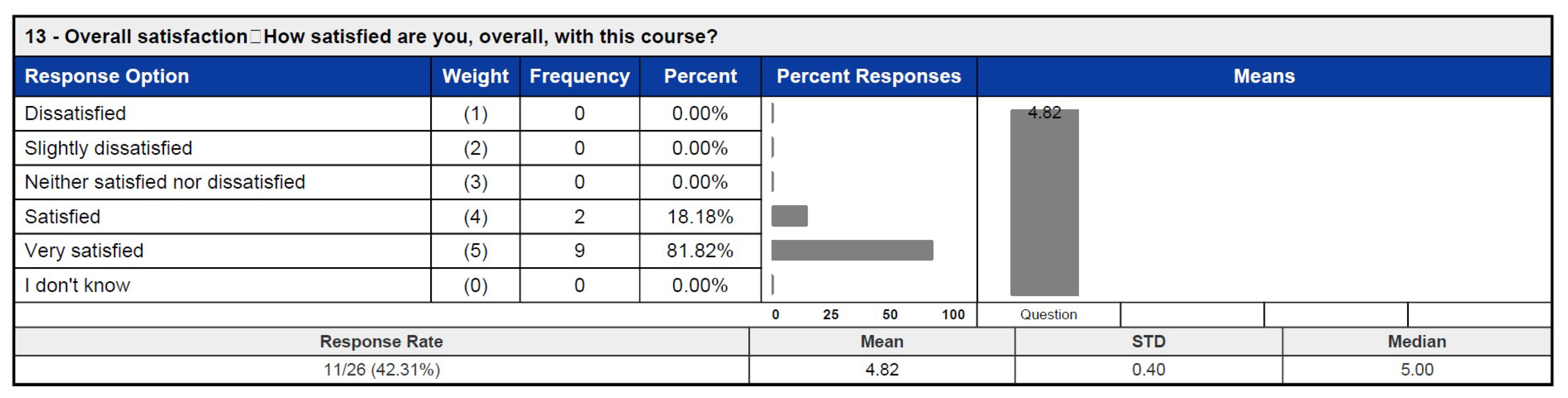

3.5. Observations Based on Course Evaluation

Quote 5: “It is already great. he spends a lot of time making the course enjoyable for everyone. This creates a great academic and social environment and contributes to the environment among the students too”.—One student out of 11 students, Course Evaluation Report, Question 12

4. Discussion and Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Note 1: Each ECTS credit represents 25–30 study hours.


| Objective Set | Learning Objective |
|---|---|
| Knowledge | Gain a comprehensive understanding of condition monitoring (CM), condition-based maintenance (CBM) and predictive maintenance (PdM). |
| | Gain a comprehensive understanding of common machine faults: causes, mechanisms, symptoms, and modes. |
| | Gain a basic understanding of the theories behind the monitoring techniques, e.g., vibration, acoustic emission, ultrasonic, oil-debris, thermal and process parameters. |
| | Gain a basic understanding of the theories behind signal analysis, diagnosis and prognosis analysis. |
| | Gain a basic understanding of the theories behind the non-destructive testing (NDT) methods such as penetrant, flux leakage, eddy current, and radiography. |
| Skills | Be able to apply the project execution model to design monitored and PdM-ready equipment and deliver concept and front-end engineering design (FEED) studies. |
| | Be able to perform engineering analysis methods, e.g., failure mode analysis, symptom analysis, sensor diagnostic coverage analysis, and PdM concept study. |
| | Be able to perform time and frequency domain signal analysis. |
| | Be able to perform diagnosis analysis and determine the fault type, location and severity level. |
| | Be able to perform prognosis analysis (physics-based and/or data-driven) to predict the remaining useful lifetime. |
| General competence | Can analyze relevant academic, professional, and research ethical problems. |
| | Can work in teams and plan and manage projects. |
| | Can apply his/her knowledge and skills in new areas in order to carry out assignments and projects. |
| | Can communicate about academic issues, analyses and conclusions in the field, both with specialists and with the general public. |

| Updated Aspect | 2017 | 2018 | 2019 | 2020 | 2021 | 2022 | 2023 | 2024 |
|---|---|---|---|---|---|---|---|---|
| No. of students | 47 | 37 | 34 | 34 | 42 | 35 | 16 | 26 |
| Lectures | X | X | X | X | X | X | | |
| Project-based learning | X | X | X | X | X | X | X | X |
| Systems thinking skills | X | X | X | X | X | X | X | |
| Lab exercises | X | X | X | X | X | X | X | |
| Project partitioning and feedback | X | X | X | X | X | X | | |
| Job embedded content | X | X | X | X | X | X | | |
| Digital context, streamed lectures | X | X | X | X | X | | | |
| Introduce individual concept assignment | X | X | X | X | | | | |
| Introduce individual reflection assignment | X | X | X | X | | | | |
| Animated videos | X | X | X | | | | | |
| Lecture notes based compendium | X | X | X | | | | | |
| Expand the course from 5 to 10 ECTS | X | X | | | | | | |
| Active learning with a flipped classroom | X | X | | | | | | |
| Cloud-based and non-coding machine learning | X | X | | | | | | |

| Date (day.month) | Description | Lecture Type |
|---|---|---|
| 28.8 | Introduction to IAM540 | Traditional |
| 4.9 | Condition monitoring techniques | Gamified flipped classroom |
| 11.9 | Early dialogue session | 15 min discussion |
| 11.9 | Time waveform analysis | Gamified flipped classroom |
| 18.9 | Frequency domain analysis | Gamified flipped classroom |
| 25.9 | Machine faults, diagnostics and prognostics | Gamified flipped classroom |
| 30.9 | Concept assignment, extended to 4 October | |

| No. | Board Title | Board Description |

|---|---|---|

| B1 | PdM in RAMI4.0 | Define the industry 4.0 architecture, layers and predictive maintenance (PdM) functions |

| B2 | Asset Hierarchy | Define the asset layers and apply it on equipment from a new context (wind energy) |

| B3 | P-F curve | Define the potential (P) and functional (F) failure points on the deterioration curve |

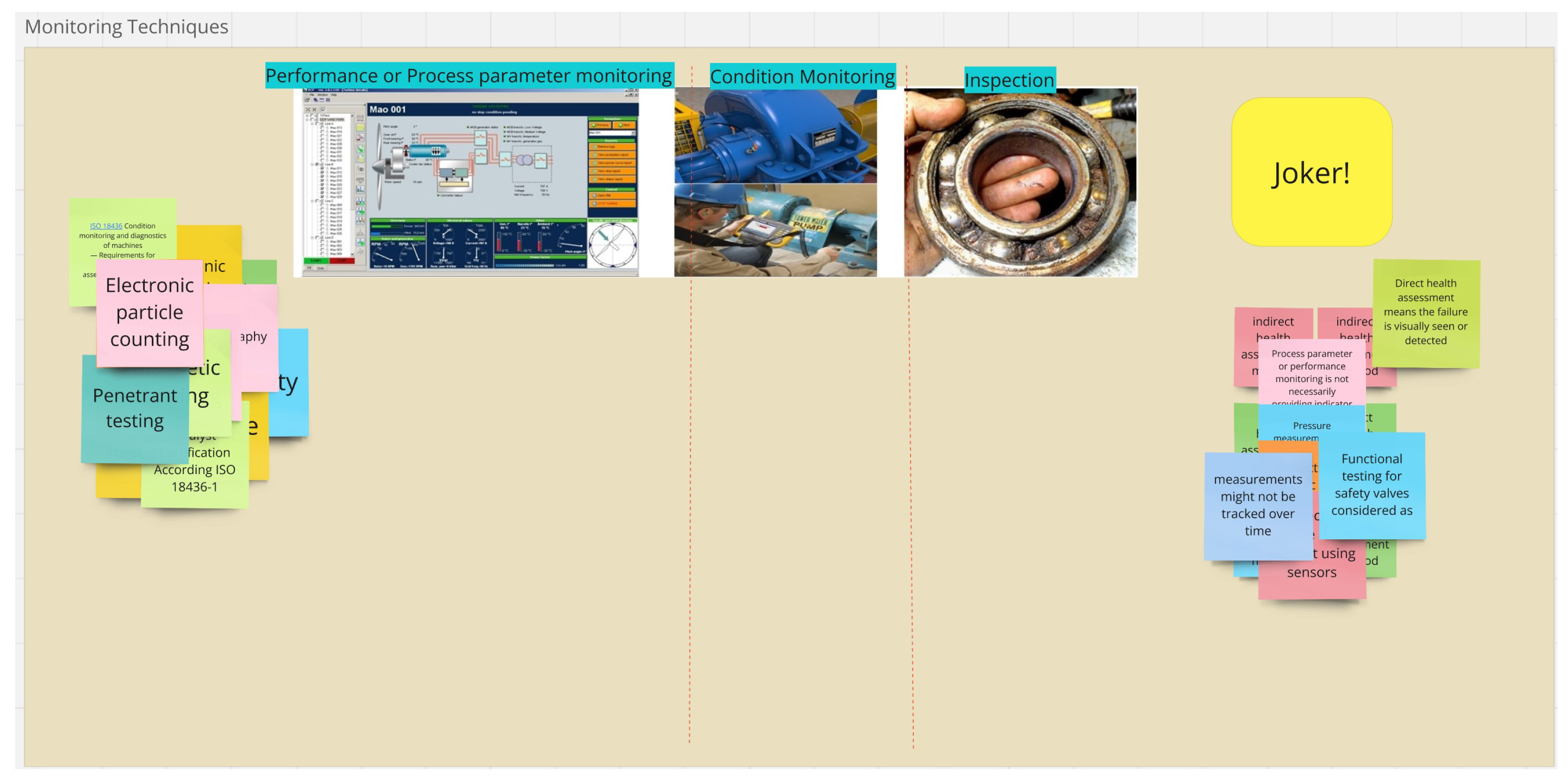

| B4 | Monitoring techniques | Discuss different monitoring and inspection techniques and compare them |

| B5 | Vibration versus AE | Compare results from vibration and acoustic emission (AE) for detected fault |

| B6 | Vibration versus Ultrasonic | Compare results from vibration and ultrasonic for detected fault under different rotation speed conditions |

| B7 | CM, CBM, PdM | Compare between condition monitoring (CM), condition-based maintenance (CBM) and predictive maintenance (PdM) in terms of functions, techniques and benefits |

| B8 | Uptime and downtime plot | Identify the difference between reliability, maintainability, supportability, dependability, time to failure, time to main, time to support |

| B9 | Failure Event | Reflect the terms (failure cause, mechanism, mode, symptom, effect) on the failure curve |

| B10 | Failure Engineering Methods | Define the s between failure mode and effect analysis, failure mode and symptom analysis, diagnostic coverage analysis, and predictive maintenance analysis |

| B11 | PEM for PdM | Fit the engineering analysis into the project execution model (PEM) and industrial work process to build a predictive maintenance (PdM) program and identify the decision gates and required tasks |

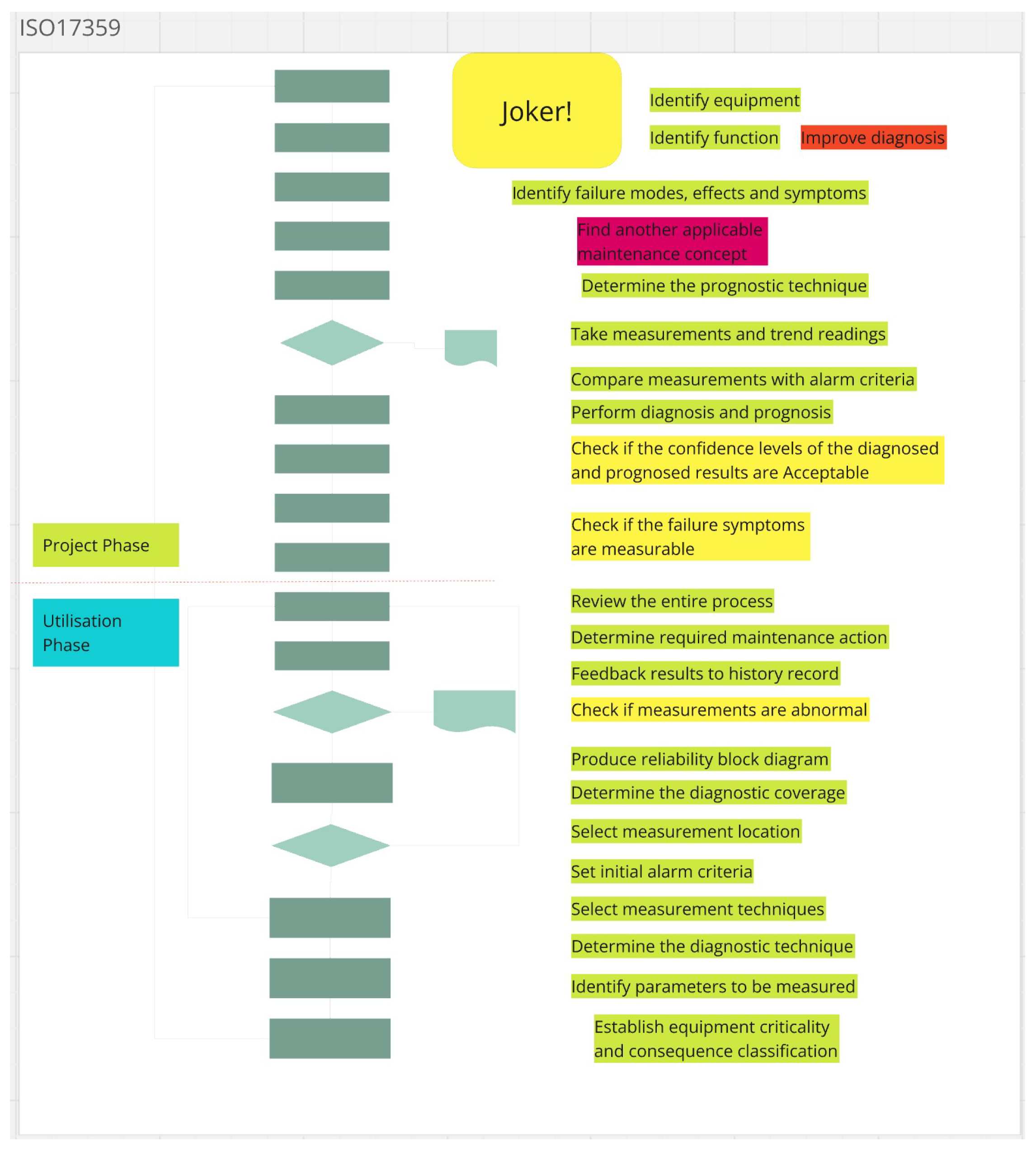

| B12 | ISO17359 | Construct the work-process to engineer a condition monitoring system |

| No. | Question | Related Miro Board |
|---|---|---|
| Q1 | What are the similarities and differences between condition monitoring (condition-based maintenance) and predictive maintenance? | B7 |
| Q2 | Explain the main stages/steps in the ISO 17359 standard. What is the logic behind their sequence? | B12 |
| Q3 | What are the main potential benefits of the predictive maintenance program over the condition monitoring program? | B8 |
| Q4 | What are the differences between FMECA, FMSA, and PdMA? | B10 |
| Q5 | What is the difference between performance monitoring and health monitoring techniques? Which one is more accurate in providing an early fault indicator? | B4 |
| Q6 | Explain how the vibration technique can be effective in detecting machine faults, e.g., imbalance and misalignment. | B3, B9 |
| Q7 | What is the principal difference between the AE technique and the ultrasonic technique? | B4, B5, B6 |
| Q8 | What are the capabilities and limitations of the infrared spectroscopy and debris counter techniques? | B4 |
| Q9 | What is the difference between non-destructive testing (NDT) techniques and monitoring techniques? Please use an example to clarify. | B4 |
| Q10 | What are the capabilities and limitations of time-domain detection analysis? | No board |
| Q11 | What are the capabilities and limitations of frequency-domain detection analysis? | No board |
| Q12 | What is the difference between trend projection prognosis and extrapolation prognosis? | No board |

| Student Type | Class Attendance | Self Study | Experience Level | Predicted Student Outcome Level |
|---|---|---|---|---|
| Attending class, self-studying and has experience | High | High | High | High |
| Attending class, self-studying and no experience | High | High | Low | Medium |
| Attending class, not self-studying and has experience | High | Low | High | Medium |
| Attending class, not self-studying and no experience | High | Low | Low | Low |
| Not attending class, self-studying and has experience | Low | High | High | Medium |
| Not attending class, self-studying and no experience | Low | High | Low | Low |
| Not attending class, not self-studying, has experience | Low | Low | High | Low |
| Not attending class, not self-studying, no experience | Low | Low | Low | Low (Very) |
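The eight rows of this table appear to follow a simple counting pattern: the predicted outcome depends on how many of the three factors are rated High. The sketch below encodes that reading. The function name `predict_outcome` and the counting rule itself are assumptions inferred from the table rows, not a procedure stated by the authors.

```python
from itertools import product


def predict_outcome(attendance: str, self_study: str, experience: str) -> str:
    """Map three High/Low factor ratings to a predicted outcome level.

    Assumed rule (inferred from the table, not stated in the paper):
    count how many of the three factors are rated "High".
    """
    highs = sum(level == "High" for level in (attendance, self_study, experience))
    if highs == 3:
        return "High"
    if highs == 2:
        return "Medium"
    if highs == 1:
        return "Low"
    return "Low (Very)"


# Enumerate all eight student types in the same order as the table rows.
for combo in product(("High", "Low"), repeat=3):
    print(combo, "->", predict_outcome(*combo))
```

Under this assumed rule, each of the three factors carries equal weight, which matches every row of the table but may not reflect how the authors weighted them in practice.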

| | | Predicted Class | | |
|---|---|---|---|---|
| | | High (H) | Medium (M) | Low (L) |
| Actual Class | High (H) | H | | |
| | Medium (M) | | M | |
| | Low (L) | | | L |
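A predicted-versus-actual table of this kind can be tallied mechanically from two lists of class labels. The following is a minimal sketch, assuming the three classes H, M and L; the helper names `confusion_matrix` and `agreement` and the five sample labels are hypothetical and are not study data.

```python
from collections import Counter

LEVELS = ("H", "M", "L")


def confusion_matrix(actual, predicted):
    """Count students per (actual, predicted) pair.

    Returns nested dicts: outer key = actual class, inner key = predicted class.
    """
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    counts = Counter(zip(actual, predicted))
    return {a: {p: counts[(a, p)] for p in LEVELS} for a in LEVELS}


def agreement(matrix):
    """Share of students on the diagonal (predicted class equals actual class)."""
    total = sum(sum(row.values()) for row in matrix.values())
    diagonal = sum(matrix[c][c] for c in LEVELS)
    return diagonal / total if total else 0.0


# Hypothetical labels for five students (illustration only, not study data).
actual = ["H", "H", "M", "L", "M"]
predicted = ["H", "M", "M", "L", "L"]

matrix = confusion_matrix(actual, predicted)
for level in LEVELS:
    print(level, matrix[level])
print("agreement:", agreement(matrix))
```

Cells on the diagonal correspond to students whose actual level matched the predicted one; off-diagonal cells show over- or under-prediction.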

| Aspect | Full Mark | S1 | S2 | S3 | S4 | S5 | S6 | Question Index |
|---|---|---|---|---|---|---|---|---|
| Q1 (2) | 2 | 1 | 1 | 2 | 1 | 2 | 1 | 67% |
| Q2 | 2 | 1 | 1 | 1 | 1 | 2 | 2 | 67% |
| Q3 | 2 | 0 | 2 | 1 | 1 | 2 | 1 | 58% |
| Q4 | 2 | 1 | 1 | 2 | 1 | 1 | 2 | 67% |
| Q5 | 2 | 1 | 1 | 2 | 2 | 2 | 2 | 83% |
| Q6 | 1 | 0.5 | 1 | 1 | 1 | 1 | 1 | 92% |
| Q7 | 1 | 0.5 | 0.5 | 1 | 1 | 1 | 1 | 83% |
| Q8 | 1 | 0.5 | 1 | 1 | 1 | 1 | 1 | 92% |
| Q9 | 1 | 0.5 | 1 | 1 | 1 | 1 | 1 | 92% |
| Q10 | 2 | 1 | 1 | 1 | 1 | 2 | 2 | 67% |
| Q11 | 2 | 0.5 | 1 | 1 | 1 | 2 | 2 | 63% |
| Q12 | 2 | 1 | 2 | 2 | 2 | 2 | 2 | 92% |
| Total score | 20 | 8.5 | 13.5 | 16 | 14 | 19 | 18 | Index |
| Incompleteness | | 1 | 2 | 0 | 4 | 1 | 1 | 1.5 (3) |
| Misconception | | 8 | 3 | 3 | 2 | 0 | 2 | 3 (4) |
| Hallucination | | 12 | 2 | 9 | 0 | 0 | 0 | 3.83 (5) |
| Comment | | AI style | AI style and Lecture notes | Lecture notes | Lecture notes | | | |
| Level of understanding | | L | M | H | M | H | H | |
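The Question Index column in the table above is consistent with dividing the sum of the students' scores on a question by the maximum attainable sum (full mark times number of students). The sketch below assumes that formula, which is inferred from the printed values rather than stated by the authors; `question_index` is a hypothetical helper name. The score lists are copied from the first and sixth question rows for S1 to S6.

```python
def question_index(scores, full_mark):
    """Percentage of attainable marks earned on one question.

    Assumed formula (inferred from the table): 100 * sum(scores) / (full_mark * n).
    """
    if not scores:
        raise ValueError("scores must not be empty")
    return 100 * sum(scores) / (full_mark * len(scores))


# Scores of S1..S6 copied from the table rows for the first and sixth questions.
q1_scores = [1, 1, 2, 1, 2, 1]      # full mark 2
q6_scores = [0.5, 1, 1, 1, 1, 1]    # full mark 1

print(f"Q1 index: {question_index(q1_scores, 2):.0f}%")  # 67%
print(f"Q6 index: {question_index(q6_scores, 1):.0f}%")  # 92%
```

Both results agree with the percentages printed in the table for these two rows, which supports the assumed formula for this group of students.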

| Aspect | Full Mark | S7 | S8 | S9 | S10 | S11 | S12 | S13 | S14 | S15 | S16 | S17 | S18 | S19 | S20 | Question Index |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Q(2)1 | 2 | 2 | 1 | 2 | 1 | 1 | 1 | 2 | 1 | 1 | 1 | 1 | 2 | 2 | 2 | 71% |

| Q2 | 2 | 2 | 1 | 2 | 1 | 1 | 2 | 2 | 2 | 1 | 2 | 1 | 2 | 2 | 2 | 82% |

| Q3 | 2 | 2 | 2 | 2 | 0 | 2 | 1 | 1 | 2 | 1 | 1 | 2 | 2 | 2 | 2 | 79% |

| Q4 | 2 | 2 | 2 | 1 | 1 | 1 | 2 | 2 | 1 | 1 | 2 | 1 | 2 | 2 | 2 | 79% |

| Q5 | 2 | 2 | 2 | 2 | 1 | 1 | 2 | 2 | 2 | 1 | 2 | 2 | 2 | 2 | 2 | 89% |

| Q6 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 100% |

| Q7 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 100% |

| Q8 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 100% |

| Q9 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 100% |

| Q10 | 2 | 2 | 1 | 1 | 1 | 1 | 1 | 1 | 2 | 1 | 1 | 1 | 1.5 | 1.5 | 2 | 68% |

| Q11 | 2 | 1 | 1 | 2 | 1 | 2 | 1 | 1 | 1 | 1 | 1.5 | 1 | 1.5 | 2 | 2 | 64% |

| Q12 | 2 | 2 | 2 | 2 | 2 | 1 | 1 | 1 | 2 | 0 | 2 | 1 | 2 | 0 | 2 | 71% |

| Total score | 20 | 19 | 16 | 18 | 12 | 14 | 15 | 16 | 17 | 11 | 16.5 | 14 | 19 | 17.5 | 20 | Index |

| Incompleteness | | 1 | 3 | 2 | 3 | 4 | 4 | 3 | 3 | 4 | 2 | 3 | 0 | 2 | 0 | 2.29 (note 3) |

| Misconception | | 0 | 1 | 2 | 5 | 1 | 2 | 1 | 0 | 4 | 1 | 3 | 0 | 0 | 0 | 1.29 (note 4) |

| Hallucination | | 0 | 0 | 2 | 3 | 1 | 1 | 5 | 0 | 5 | 1 | 1 | 0 | 4 | 0 | 1.43 (note 5) |

| Comment | AI style | AI style | AI style | AI style | ||||||||||||

| Level of understanding | | H | H | H | L | M | M | H | H | L | H | M | H | H | H | |

| Student No. | Type | Experience | Attending Workshops | Video Viewing % | Page Viewing | Level of Self Study | Predicted Level of Understanding | Actual Level of Understanding |

|---|---|---|---|---|---|---|---|---|

| 1 | Subject | Yes | No | 30% | 649 | High | Medium | Low |

| 2 | Program | Yes | No | 7% | 335 | Medium | Medium | Medium |

| 3 | Subject | Yes | No | 76% | 407 | High | Medium | High |

| 4 | Program | Yes | No | 7% | 446 | High | Medium | Medium |

| 5 | Program | Yes | No | 9% | 254 | Medium | Medium | High |

| 6 | Program | Yes | No | 25% | 658 | High | Medium | High |

| 7 | Program | No | Yes | 74% | 495 | High | Medium | High |

| 8 | Exchange | No | Yes | 7% | 277 | Medium | Medium | High |

| 9 | Program | No | Yes | 14% | 228 | Medium | Medium | High |

| 10 | Program | No | Yes | 18% | 227 | Medium | Medium | Low |

| 11 | Exchange | No | Yes | 10% | 224 | Medium | Medium | Medium |

| 12 | Program | No | Yes | 7% | 179 | Low | Low | Medium |

| 13 | Program | No | Yes | 0% | 96 | Low | Low | High |

| 14 | Exchange | No | Yes | 7% | 106 | Low | Low | High |

| 15 | Program | No | Partially | 11% | 48 | Low | Low | Low |

| 16 | Program | Yes | Yes | 26% | 382 | Medium | Medium | High |

| 17 | Program | Yes | Yes | 57% | 385 | Medium | Medium | Medium |

| 18 | Subject | Yes | Yes | 7% | 195 | Low | Low | High |

| 19 | Program | Yes | Yes | 0% | 93 | Low | Low | High |

| 20 | Program | Yes | Yes | 99% | 510 | High | High | High |

| Actual Class \ Predicted Class | High (H) | Medium (M) | Low (L) |
|---|---|---|---|
| High (H) | 0 | 3 | 0 |
| Medium (M) | 0 | 2 | 0 |
| Low (L) | 0 | 1 | 0 |

| Actual Class \ Predicted Class | High (H) | Medium (M) | Low (L) |
|---|---|---|---|
| High (H) | 1 | 4 | 4 |
| Medium (M) | 0 | 2 | 1 |
| Low (L) | 0 | 1 | 1 |
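The second matrix above can be reproduced by counting the predicted and actual levels of understanding listed for students 7 to 20 in the student profile table. The sketch below is only an illustration of that tally, assuming rows are actual classes and columns are predicted classes; the names `pairs` and `confusion_matrix` are hypothetical.

```python
from collections import Counter

LEVELS = ["High", "Medium", "Low"]

# (predicted, actual) levels for students 7 to 20, copied from the profile table.
pairs = [
    ("Medium", "High"), ("Medium", "High"), ("Medium", "High"), ("Medium", "Low"),
    ("Medium", "Medium"), ("Low", "Medium"), ("Low", "High"), ("Low", "High"),
    ("Low", "Low"), ("Medium", "High"), ("Medium", "Medium"), ("Low", "High"),
    ("Low", "High"), ("High", "High"),
]


def confusion_matrix(pairs, levels=LEVELS):
    """Return a matrix with rows = actual class and columns = predicted class."""
    counts = Counter((actual, predicted) for predicted, actual in pairs)
    return [[counts[(a, p)] for p in levels] for a in levels]


matrix = confusion_matrix(pairs)
for level, row in zip(LEVELS, matrix):
    print(f"{level:<6} {row}")
```

Each printed row corresponds to one actual class, with counts ordered by predicted class (High, Medium, Low).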

| No. | Description | Total Game Cards | Group No. | Correct Cards in 1st Trial | Correct Cards in 2nd Trial | Correct Cards in 3rd Trial | Comment |
|---|---|---|---|---|---|---|---|
| 1 | RAMI4.0 | 18 | 1 | 8 | | | Winner, 10 cards left |
| | | | 2 | 7 | | | 11 cards left |
| | | | 3 | 5 | | | 13 cards left |
| 2 | Asset hierarchy | 12 | 1 | 5 | | | Winner, 7 cards left |
| | | | 2 | 6 | | | 6 cards left |
| | | | 3 | 3 | | | 9 cards left |
| 3 | P-F curve | 10 | 1 | 9 | | | 1 card left |
| | | | 2 | 8 | | | Winner, 2 cards left |
| | | | 3 | 8 | | | 2 cards left |
| 4 | Monitoring techniques | 27 | 1 | 13 | 10 | 4 | |
| | | | 2 | 15 | 11 | 1 | Winner |
| | | | 3 | 8 | 9 | 5 | 5 cards left |
| 5&6 | Vibration, AE, Ultrasonic | 9 | 1 | 9 | | | Winner, one round |
| | | | 2 | 8 | | | 1 card left |
| | | | 3 | 8 | | | 1 card left |
| 7 | CM, CBM, PdM | 35 | 1 | 18 | 8 | 5 | Winner, 4 cards left |
| | | | 2 | 16 | 8 | 6 | 5 cards left |
| | | | 3 | 14 | 9 | 5 | 7 cards left |
| 8 | Lifetime benefits | 10 | 1 | 8 | 0 | | Winner, two rounds, 2 cards left |
| | | | 2 | 8 | 0 | | Winner, two rounds, 2 cards left |
| | | | 3 | 7 | 1 | | Two rounds, 2 cards left |
| 9 | Failure event | 38 | 1 | 15 | 12 | 5 | Winner, 6 cards left |
| | | | 2 | 16 | 13 | 4 | Winner, 6 cards left |
| | | | 3 | 14 | 10 | 6 | 8 cards left |
| 10 | Failure engineering | 12 | 1 | 8 | 4 | | |
| | | | 2 | 9 | 3 | | Winner, two rounds |
| | | | 3 | 7 | 5 | | |
| 11 | PEM for PdM | 19 | 1 | 15 | 4 | | Winner, two rounds |
| | | | 2 | 15 | 3 | | 1 card left |
| | | | 3 | 10 | 5 | | 4 cards left |
| 12 | ISO17359 | 25 | 1 | 20 | 4 | | Winner, two rounds, 1 card left |
| | | | 2 | 17 | 8 | | Winner |
| | | | 3 | 10 | 13 | | 2 cards left |

| Group No. | Student No. | Level of Self-Study |

|---|---|---|

| Group 1 | 7, 8, 9, 16, 17 | H, M, M, M, M |

| Group 2 | 11, 14, 18, 20 | M, L, L, H |

| Group 3 | 10, 12, 13, 15, 19 | M, L, L, L, L |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

El-Thalji, I. Boosting Active Learning Through a Gamified Flipped Classroom: A Retrospective Case Study in Higher Engineering Education. Educ. Sci. 2025, 15, 430. https://doi.org/10.3390/educsci15040430
