Multi-Step-Ahead Electricity Price Forecasting Based on Temporal Graph Convolutional Network

Abstract

1. Introduction

2. Methods

2.1. Electricity Price Series Modeling

2.2. Graph Convolution Module

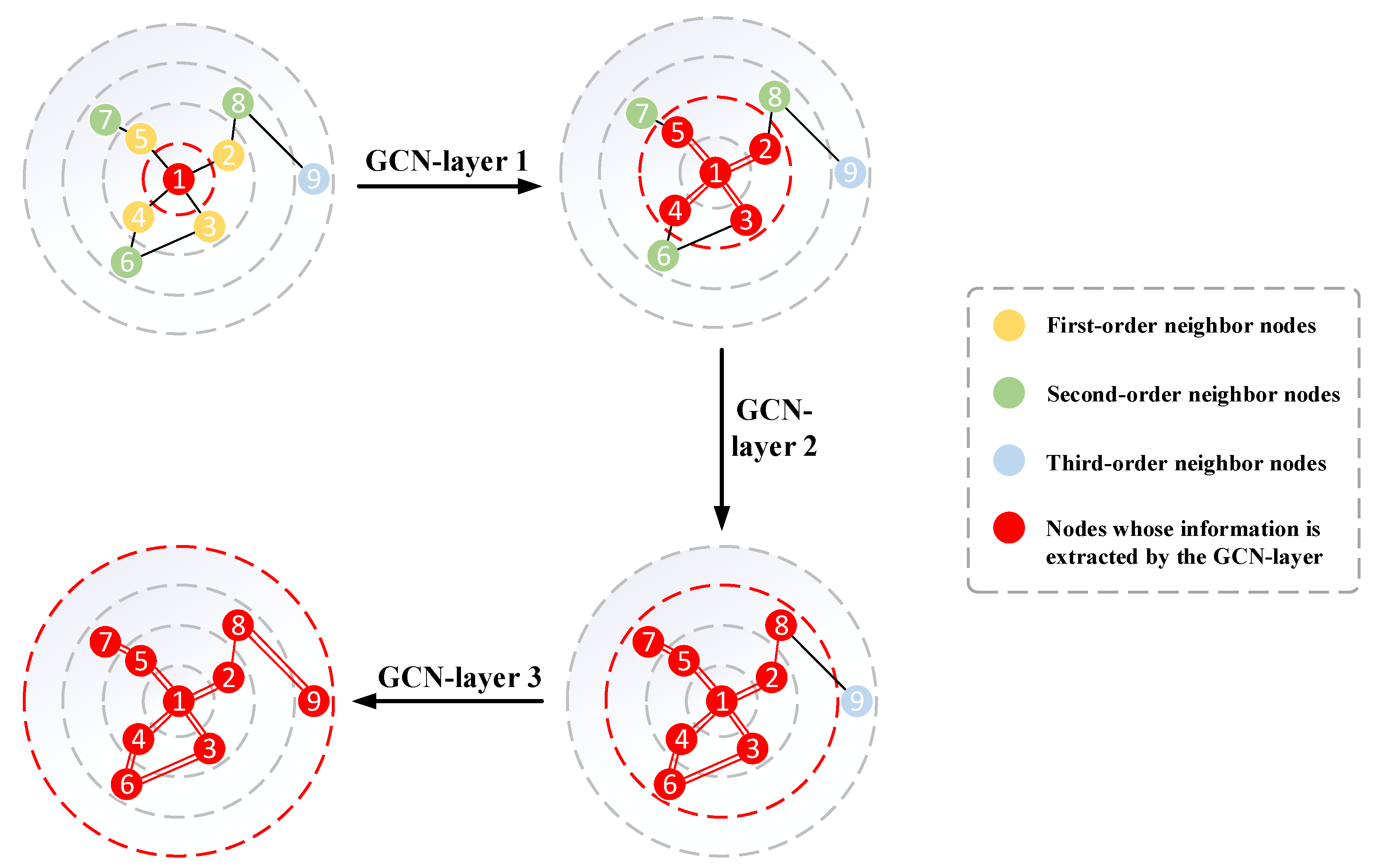

2.2.1. Traditional Propagation Layer

2.2.2. Mix-Jump Propagation Layer

2.3. Graph Construction Module

2.4. Temporal Convolution Module

2.4.1. Splicing Architecture

2.4.2. Dilated Convolution

2.4.3. Dilated Splicing Layer

2.5. Residual Connections

2.6. Model

| Algorithm 1. The training algorithm of T-GCN. |
|---|
| 1: Input: the initialized T-GCN model $f$ with parameters $\Omega$, batch size $b$, step size $s$, learning rate $\zeta$, the price dataset $O$ |
| 2: set iter = 1, $h = 1$ |
| 3: repeat |
| 4: extract a batch ($x \in \mathbb{R}^{b \times T \times n \times D}$, $y \in \mathbb{R}^{b \times T' \times n}$) from $O$ |
| 5: if iter % $s$ = 0 and $h \le T'$ then |
| 6: $h = h + 1$ |
| 7: end if |
| 8: compute $\hat{y} = f(x[:, :, :, :]; \Omega)$ |
| 9: compute $L = \mathrm{loss}(\hat{y}[:, :h, :], y[:, :h, :])$ |
| 10: compute the stochastic gradient of $\Omega$ according to $L$ |
| 11: update the model parameters $\Omega$ according to their gradients and the learning rate $\zeta$ |
| 12: iter = iter + 1 |
| 13: until convergence |
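The horizon schedule in Algorithm 1 (lines 2, 5 to 7, and 12) and the truncated loss in line 9 can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names `horizon_schedule` and `truncated_mae` are hypothetical, the toy tensor shapes are invented for illustration, and MAE is only an assumed choice for the unspecified `loss` in the listing.

```python
import numpy as np


def horizon_schedule(num_iters, step_size, max_horizon):
    """Return the supervised horizon h used at each iteration of Algorithm 1."""
    h = 1
    horizons = []
    for it in range(1, num_iters + 1):
        # Line 5: every `step_size` iterations, widen the supervised window.
        # The listing tests h <= T', so h can end at T' + 1; slicing [:h]
        # then simply covers all T' output steps.
        if it % step_size == 0 and h <= max_horizon:
            h += 1
        horizons.append(h)
    return horizons


def truncated_mae(y_hat, y, h):
    """Line 9 with MAE as the loss (an assumption): score only the first h steps."""
    return float(np.abs(y_hat[:, :h, :] - y[:, :h, :]).mean())


# Toy shapes (assumed): batch b = 2, output length T' = 3, n = 4 price areas.
rng = np.random.default_rng(0)
y = rng.normal(size=(2, 3, 4))
y_hat = y.copy()
y_hat[:, 2, :] += 1.0  # introduce an error only at the third forecast step

print(horizon_schedule(num_iters=8, step_size=2, max_horizon=3))
print(truncated_mae(y_hat, y, h=2), truncated_mae(y_hat, y, h=3))
```

In this toy setup the loss is zero while only the first two steps are supervised and becomes roughly 1/3 once the third step enters the window, which is the effect of the schedule: early iterations are driven by near-horizon errors, and later steps are added gradually.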

3. Experiments

3.1. Data Collection

3.2. Evaluation Metrics

3.3. Experimental Results

- Autoregressive integrated moving average model (ARIMA) [38].

- Support vector regression model (SVR) [39].

- Graph convolutional network model (GCN), see Section 2.2 for details.

- Temporal convolutional network model (TCN), see Section 2.4 for details.

3.4. Discussion

- (1) Higher prediction accuracy. Neural network-based approaches that model temporal features, such as the T-GCN and TCN models, generally predict more accurately than the ARIMA and SVR models. For the 1-h electricity price prediction task, for example, the T-GCN and TCN models reduce MAE by 68.99% and 60.76%, respectively, relative to the ARIMA model, and reduce RMSE by 69.73% and 61.25%, respectively. Relative to the SVR model, they reduce MAE by 52.15% and 39.45% and RMSE by 53.59% and 40.59%, respectively. This is mainly because methods such as ARIMA and SVR have difficulty handling complex non-stationary time series data.

- (2) Spatio-temporal prediction capability. We compared the T-GCN model with the GCN and TCN models to verify that it captures both temporal and spatial characteristics from the electricity price data of multiple price areas. As shown in Figure 9, the T-GCN model, which uses spatio-temporal features, predicts more accurately than the GCN and TCN models, which each use a single type of feature. Compared with the GCN model, which considers only spatial features, the T-GCN model reduces MAE by about 27.74% and 29.94% for the 1-h and 2-h prediction tasks, respectively, indicating that adding temporal modeling to the graph convolution improves accuracy. Compared with the TCN model, which considers only temporal features, the T-GCN model reduces MAE by about 20.97% and 11.56% for the 1-h and 2-h forecasts, respectively, indicating that modeling the spatial dependence between price areas also improves accuracy.

- (3) Long-term forecasting capability. The T-GCN model achieves the best prediction performance at every prediction horizon tested, indicating that the proposed model is relatively insensitive to the prediction horizon and is stable. The T-GCN model can therefore be used for both short-term and long-term forecasting. Figure 9 compares the RMSE of the different models; the T-GCN model achieves the best results at all prediction horizons. Figure 10 shows how the performance of the T-GCN model changes across forecasting horizons: the error grows only slowly as the horizon lengthens, which indicates a degree of stability.
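The error reductions quoted above are relative reductions, 100 × (baseline − model) / baseline. As a small worked check, the sketch below recomputes them from the 1-h and 2-h MAE values in the results table; the helper name `reduction_pct` is hypothetical, and because the table values are already rounded, the recomputed figures agree with the quoted percentages only to within about 0.01 percentage points.

```python
# 1-h and 2-h MAE values copied from the results table.
MAE = {
    "1-h": {"ARIMA": 5.1537, "SVR": 3.3396, "GCN": 2.2115, "TCN": 2.0220, "T-GCN": 1.5979},
    "2-h": {"ARIMA": 6.2299, "SVR": 5.1288, "GCN": 3.2331, "TCN": 2.5614, "T-GCN": 2.2652},
}


def reduction_pct(baseline, model):
    """Relative error reduction of `model` with respect to `baseline`, in percent."""
    return 100.0 * (baseline - model) / baseline


for horizon, row in MAE.items():
    for name in ("ARIMA", "SVR", "GCN", "TCN"):
        pct = reduction_pct(row[name], row["T-GCN"])
        print(f"{horizon}: T-GCN vs {name}: MAE reduced by {pct:.2f}%")
```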

3.5. Further Illustration of the Model

4. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Liu, J.; Wang, J.; Cardinal, J. Evolution and reform of UK electricity market. Renew. Sustain. Energy Rev. 2022, 161, 112317.

2. Shamsi, M.; Cuffe, P. A Prediction Market Trading Strategy to Hedge Financial Risks of Wind Power Producers in Electricity Markets. IEEE Trans. Power Syst. 2021, 36, 4513–4523.

3. Mashlakov, A.; Kuronen, T.; Lensu, L.; Kaarna, A.; Honkapuro, S. Assessing the performance of deep learning models for multivariate probabilistic energy forecasting. Appl. Energ. 2021, 285, 116405.

4. Sun, W.; Huang, C. A carbon price prediction model based on secondary decomposition algorithm and optimized back propagation neural network. J. Clean. Prod. 2020, 243, 118671.

5. Fraunholz, C.; Kraft, E.; Keles, D.; Fichtner, W. Advanced price forecasting in agent-based electricity market simulation. Appl. Energ. 2021, 290, 116688.

6. Lu, H.; Ma, X.; Ma, M.; Zhu, S. Energy price prediction using data-driven models: A decade review. Comput. Sci. Rev. 2021, 39, 100356.

7. Rabiya, K.; Nadeem, J. A survey on hyperparameters optimization algorithms of forecasting models in smart grid. Sustain. Cities Soc. 2020, 61, 102275.

8. Lago, J.; Marcjasz, G.; De Schutter, B.; Weron, R. Forecasting day-ahead electricity prices: A review of state-of-the-art algorithms, best practices and an open-access benchmark. Appl. Energ. 2021, 293, 116983.

9. Sujit, K.D.; Pradipta, K.D. Short-term mixed electricity demand and price forecasting using adaptive autoregressive moving average and functional link neural network. J. Mod. Power Syst. Clean 2019, 7, 1241–1255.

10. Radhakrishnan, A.C.; Anupam, M.; Mitch, C.; Hossein, S.; Timothy, M.H.; Jeremy, L.; Prakash, R. A Multi-Stage Price Forecasting Model for Day-Ahead Electricity Markets. Forecasting 2018, 1, 26–46.

11. Shibalal, M. Estimating and forecasting residential electricity demand in Odisha. J. Public Aff. 2020, 20, e2065.

12. Zheng, L.; Yushan, W.; Jiayu, W.; Lin, Z.; Jian, S.; Xu, W. Short-term electricity price forecasting G-LSTM model and economic dispatch for distribution system. IOP Conf. Ser. Earth Environ. Sci. 2020, 467, 012186.

13. Wendong, Y.; Jianzhou, W.; Rui, W. Research and Application of a Novel Hybrid Model Based on Data Selection and Artificial Intelligence Algorithm for Short Term Load Forecasting. Entropy 2017, 19, 52.

14. Lehna, M.; Scheller, F.; Herwartz, H. Forecasting day-ahead electricity prices: A comparison of time series and neural network models taking external regressors into account. Energ Econ. 2022, 106, 105742.

15. Li, W.; Becker, D.M. Day-ahead electricity price prediction applying hybrid models of LSTM-based deep learning methods and feature selection algorithms under consideration of market coupling. Energy 2021, 237, 121543.

16. Aslam, S.; Ayub, N.; Farooq, U.; Alvi, M.J.; Albogamy, F.R.; Rukh, G.; Haider, S.I.; Azar, A.T.; Bukhsh, R. Towards Electric Price and Load Forecasting Using CNN-Based Ensembler in Smart Grid. Sustainability 2021, 13, 12653.

17. Yang, H.; Schell, K.R. Real-time electricity price forecasting of wind farms with deep neural network transfer learning and hybrid datasets. Appl. Energ. 2021, 299, 117242.

18. Yiyuan, C.; Yufeng, W.; Jianhua, M.; Qun, J. BRIM: An Accurate Electricity Spot Price Prediction Scheme-Based Bidirectional Recurrent Neural Network and Integrated Market. Energies 2019, 12, 2241.

19. Xiao, C.; Sutanto, D.; Muttaqi, K.M.; Zhang, M.; Meng, K.; Dong, Z.Y. Online Sequential Extreme Learning Machine Algorithm for Better Predispatch Electricity Price Forecasting Grids. IEEE Trans. Ind. Appl. 2021, 57, 1860–1871.

20. Yi-Kuang, C.; Hardi, K.; Philipp, A.G.; Jon, G.K.; Klaus, S.; Hans, R.; Torjus, F.B. The role of cross-border power transmission in a renewable-rich power system—A model analysis for Northwestern Europe. J. Environ. Manag. 2020, 261, 110194.

21. Jorge, M.U.; Stephanía, M.; Montserrat, G. Characterizing electricity market integration in Nord Pool. Energy 2020, 208, 118368.

22. Egerer, J.; Grimm, V.; Kleinert, T.; Schmidt, M.; Zöttl, G. The impact of neighboring markets on renewable locations, transmission expansion, and generation investment. Eur. J. Oper Res. 2020, 292, 696–713.

23. Tessoni, V.; Amoretti, M. Advanced statistical and machine learning methods for multi-step multivariate time series forecasting in predictive maintenance. Procedia Comput. Sci. 2022, 200, 748–757.

24. Guokun, L.; Wei-Cheng, C.; Yiming, Y.; Hanxiao, L. Modeling Long- and Short-Term Temporal Patterns with Deep Neural Networks. In Proceedings of the 41st International ACM SIGIR Conference on Research & Development in Information Retrieval, Ann Arbor, MI, USA, 8–12 July 2018; pp. 95–104.

25. Shih, S.Y.; Sun, F.K.; Lee, H.Y. Temporal pattern attention for multivariate time series forecasting. Mach. Learn. 2019, 108, 1421–1441.

26. Zhang, Z.; Cui, P.; Zhu, W. Deep Learning on Graphs: A Survey. IEEE Trans. Knowl. Data Eng. 2020, 34, 249–270.

27. Asif, N.A.; Sarker, Y.; Chakrabortty, R.K.; Ryan, M.J.; Ahamed, M.H.; Saha, D.K.; Badal, F.R.; Das, S.K.; Ali, M.F.; Moyeen, S.I.; et al. Graph Neural Network: A Comprehensive Review on Non-Euclidean Space. IEEE Access 2021, 9, 60588–60606.

28. Cui, Z.; Henrickson, K.; Ke, R.; Wang, Y. Traffic Graph Convolutional Recurrent Neural Network: A Deep Learning Framework for Network-Scale Traffic Learning and Forecasting. IEEE Trans. Intell. Transp. Syst. 2019, 21, 4883–4894.

29. Zhou, X.; Wang, H. The Generalization Error of Graph Convolutional Networks May Enlarge with More Layers. Neurocomputing 2020, 424, 97–106.

30. Wu, Z.; Pan, S.; Chen, F.; Long, G.; Zhang, C.; Yu, P.S. A Comprehensive Survey on Graph Neural Networks. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 4–24.

31. Lin, G.; Kang, X.; Liao, K.; Zhao, F.; Chen, Y. Deep graph learning for semi-supervised classification. Pattern Recogn. 2021, 118, 108039.

32. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

33. KyungHyun, C.; Bart, V.M.; Dzmitry, B.; Yoshua, B. On the Properties of Neural Machine Translation: Encoder-Decoder Approaches. arXiv 2014, arXiv:1409.1259.

34. Bai, S.; Kolter, J.Z.; Koltun, V. An Empirical Evaluation of Generic Convolutional and Recurrent Networks for Sequence Modeling. arXiv 2018, arXiv:1803.01271.

35. Oord, A.V.D.; Dieleman, S.; Zen, H.; Simonyan, K.; Vinyals, O.; Graves, A.; Kalchbrenner, N.; Senior, A.; Kavukcuoglu, K. WaveNet: A Generative Model for Raw Audio. arXiv 2016, arXiv:1609.03499.

36. Kaiming, H.; Xiangyu, Z.; Shaoqing, R.; Jian, S. Deep Residual Learning for Image Recognition. arXiv 2015, arXiv:1512.03385.

37. Souhaib, B.T.; Gianluca, B.; Amir, F.A.; Antti, S. A review and comparison of strategies for multi-step ahead time series forecasting based on the NN5 forecasting competition. Expert Syst. Appl. 2012, 39, 7067–7083.

38. Patrícia, R.; Nicolau, S.; Rui, R. Performance of state space and ARIMA models for consumer retail sales forecasting. Robot. Comput. Integr. Manuf. 2015, 34, 151–163.

39. Alex, J.S.; Bernhard, S.L. A tutorial on support vector regression. Stat. Comput. 2004, 14, 199–222.

| Horizon | Metric | ARIMA | SVR | GCN | TCN | T-GCN |
|---|---|---|---|---|---|---|
| 1-h | MAE | 5.1537 | 3.3396 | 2.2115 | 2.0220 | 1.5979 |
| | RMSE | 8.1943 | 5.3433 | 3.4941 | 3.1745 | 2.4799 |
| | MAPE (%) | 9.33 | 6.04 | 3.82 | 3.63 | 2.82 |
| 2-h | MAE | 6.2299 | 5.1288 | 3.2331 | 2.5614 | 2.2652 |
| | RMSE | 9.6862 | 8.2573 | 5.0759 | 4.0162 | 3.4912 |
| | MAPE (%) | 11.15 | 9.23 | 5.68 | 4.51 | 3.98 |
| 3-h | MAE | 6.2376 | 5.9534 | 3.8765 | 2.9550 | 2.6800 |
| | RMSE | 9.7306 | 9.4063 | 6.0473 | 4.6157 | 4.1127 |
| | MAPE (%) | 11.17 | 10.71 | 7.01 | 5.38 | 4.71 |
| 4-h | MAE | 6.2539 | 6.2182 | 4.0456 | 3.3649 | 2.9038 |
| | RMSE | 9.6935 | 9.9491 | 6.2707 | 5.2156 | 4.4272 |
| | MAPE (%) | 11.19 | 11.31 | 7.20 | 6.07 | 5.12 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Su, H.; Peng, X.; Liu, H.; Quan, H.; Wu, K.; Chen, Z. Multi-Step-Ahead Electricity Price Forecasting Based on Temporal Graph Convolutional Network. Mathematics 2022, 10, 2366. https://doi.org/10.3390/math10142366
