An Improved Gray Wolf Optimization Algorithm with a Novel Initialization Method for Community Detection

Abstract

:1. Introduction

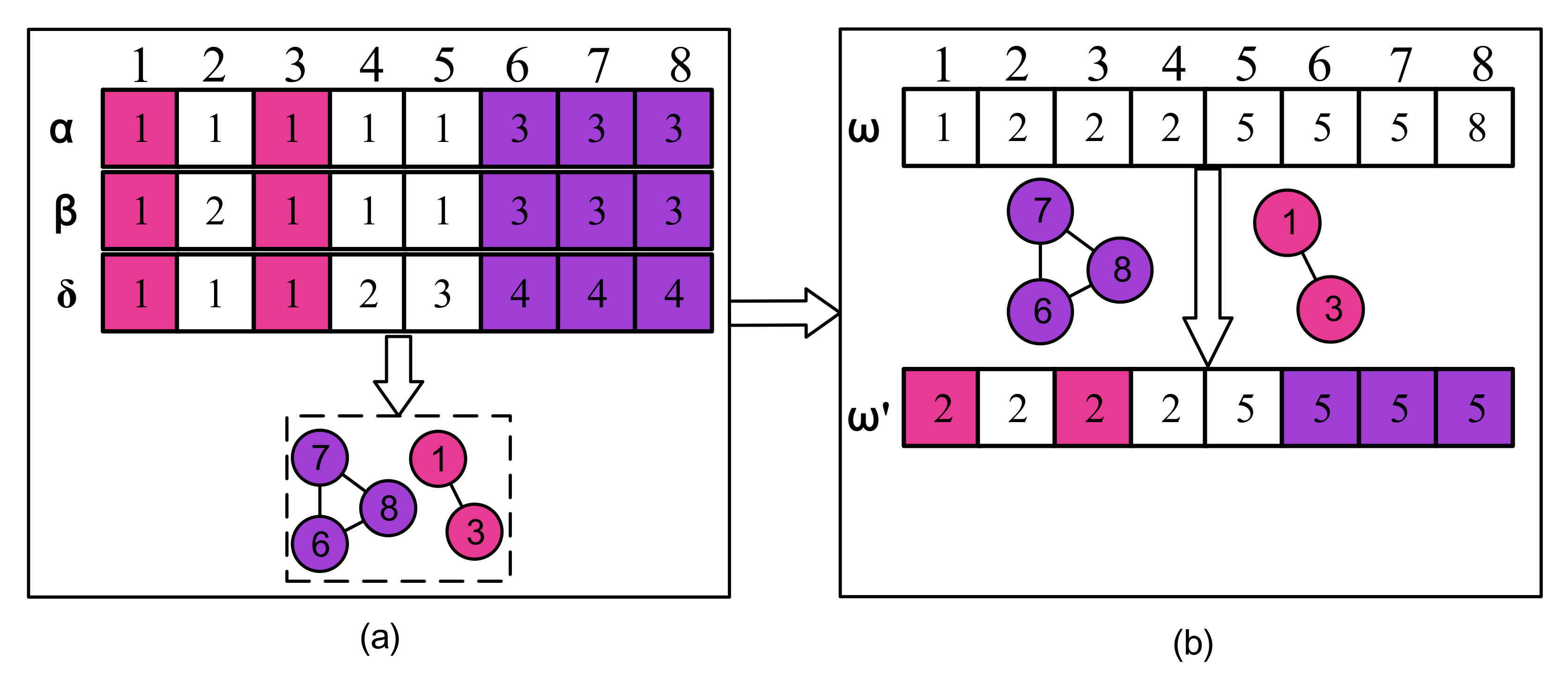

- We propose a new initialization method that combines the importance of node and elite community structures to generate an initial elite population quickly, which effectively improves the quality of solutions. The elite substructures are obtain by utilizing motifs and their intersections. The importance of node is generated according to matrices depending on dependency and the other features of nodes.

- The GWO is adopted in discrete spaces of the CD. We design an improved hunting prey behavior of GWO to maintain the good community substructure and evolve the population to improve the performance of the CD.

- A multi-scales mutation operator is designed to improve the encircling prey behavior of GWO from node level to community level. The multi-scales mutation includes boundary node-level mutation and community-level mutation. Specifically, the boundary nodes and small communities are mutated on the basis of the proposed formula to effectively improve the search efficiency and reduce the computational cost.

- The effectiveness of the algorithm on small and large datasets is demonstrated by extensive experiments on artificial networks and real-world networks.

2. Related Work and Methodology

2.1. Swarm Intelligence Algorithms for the CD

2.2. Initialization Methods for the CD

2.3. GWO

3. Proposed NIGWO

3.1. Problem Definition

3.2. Solutions Representation

3.3. Gray Wolf Optimization with a Novel Initialization and Two Improved Stages (NIGWO)

3.3.1. The Framework of NIGWO

| Algorithm 1: NIGWO |

|

3.3.2. Fast Elite Population Initialization

| Algorithm 2: Fast elite population Initialization |

|

3.3.3. Improved Hunting Stage

3.3.4. Improved Encircling Stage

3.4. Time Complexity Analysis

4. Experiment and Evaluation

4.1. Datasets and Comparison Algorithms

4.2. Parameters Setting and Evaluation Metrics

4.3. Experiments on Real-World Networks

4.4. Experiments on LFR Networks

4.5. Visualization of NIGWO

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Zhang, X.; Zhou, K.; Pan, H.; Zhang, L.; Zeng, X.; Jin, Y. A network reduction-based multiobjective evolutionary algorithm for community detection in large-scale complex networks. IEEE Trans. Cybern. 2018, 50, 703–716. [Google Scholar] [CrossRef] [PubMed]

- Karataş, A.; Şahin, S. Application areas of community detection: A review. In Proceedings of the 2018 International Congress on Big Data, Deep Learning and Fighting Cyber Terrorism (IBIGDELFT), Ankara, Turkey, 3–4 December 2018; pp. 65–70. [Google Scholar]

- Qing, Q.; Jian, C.; Yancen, L. The Evolution of Software Ecosystem in GitHub. J. Comput. Res. Dev. 2020, 57, 513. [Google Scholar]

- Yang, T.; Xie, J.; Zhao, J.; Dong, H.; Hu, K. Design and Application of Chinese Medicine Association Discovery Algorithm Based on Association Network and Hierarchical Clustering. Mod. Tradit. Chin. Med. Mater. Med.—Sci. Technol. 2020, 22, 1962–1968. [Google Scholar]

- Lu, D.D. Leader-Based Community Detection Algorithm in Attributed Networks. IEEE Access 2021, 9, 119666–119674. [Google Scholar] [CrossRef]

- Liu, Q.; Su, Y.; Peng, Q.; Chen, K.; Lu, Y. An Overlapping Community Detection Algorithm for Label Propagation Based on Node Influence. In Proceedings of the 2021 3rd International Conference on Advances in Computer Technology, Information Science and Communication (CTISC), Shanghai, China, 23–25 April 2021; pp. 183–187. [Google Scholar] [CrossRef]

- Roghani, H.; Bouyer, A. A Fast Local Balanced Label Diffusion Algorithm for Community Detection in Social Networks. IEEE Trans. Knowl. Data Eng. 2022. [Google Scholar] [CrossRef]

- Huang, J.; Hou, Y.; Li, Y. Efficient community detection algorithm based on higher-order structures in complex networks. Chaos Interdiscip. J. Nonlinear Sci. 2020, 30, 023114. [Google Scholar] [CrossRef]

- Li, C.; Tang, Y.; Tang, Z.; Cao, J.; Zhang, Y. Motif-based embedding label propagation algorithm for community detection. Int. J. Intell. Syst. 2022, 37, 1880–1902. [Google Scholar] [CrossRef]

- Li, P.Z.; Huang, L.; Wang, C.D.; Lai, J.H.; Huang, D. Community detection by motif-aware label propagation. ACM Trans. Knowl. Discov. Data (TKDD) 2020, 14, 1–19. [Google Scholar] [CrossRef] [Green Version]

- He, C.; Zheng, Y.; Fei, X.; Li, H.; Hu, Z.; Tang, Y. Boosting nonnegative matrix factorization based community detection with graph attention auto-encoder. IEEE Trans. Big Data 2021, 8, 968–981. [Google Scholar] [CrossRef]

- Ye, F.; Chen, C.; Zheng, Z. Deep autoencoder-like nonnegative matrix factorization for community detection. In Proceedings of the 27th ACM International Conference on Information and Knowledge Management, Torino, Italy, 22–26 October 2018; pp. 1393–1402. [Google Scholar]

- Wang, X.; Cui, P.; Wang, J.; Pei, J.; Zhu, W.; Yang, S. Community preserving network embedding. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017. [Google Scholar]

- Guendouz, M.; Amine, A.; Hamou, R.M. A discrete modified fireworks algorithm for community detection in complex networks. Appl. Intell. 2017, 46, 373–385. [Google Scholar] [CrossRef]

- Liu, F.; Xue, S.; Wu, J.; Zhou, C.; Hu, W.; Paris, C.; Nepal, S.; Yang, J.; Yu, P.S. Deep learning for community detection: Progress, challenges and opportunities. arXiv 2020, arXiv:2005.08225. [Google Scholar]

- Wang, P.; Kong, B.; Bao, C.; Zhou, L.; Wang, C. Community Detection Based On Graph Neural Network. In Proceedings of the 2021 6th International Conference on Intelligent Computing and Signal Processing (ICSP), Xi’an, China, 9–11 April 2021; pp. 89–93. [Google Scholar] [CrossRef]

- Su, X.; Xue, S.; Liu, F.; Wu, J.; Yang, J.; Zhou, C.; Hu, W.; Paris, C.; Nepal, S.; Jin, D.; et al. A Comprehensive Survey on Community Detection With Deep Learning. IEEE Trans. Neural Netw. Learn. Syst. 2022, 1–21. [Google Scholar] [CrossRef] [PubMed]

- Kang, Y.; Pu, B.; Kou, Y.; Yang, Y.; Chen, J.; Muhammad, K.; Yang, P.; Xu, L.; Hijji, M. A deep graph network with multiple similarity for user clustering in human–computer interaction. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM), 2022; just accepted. [Google Scholar] [CrossRef]

- Le, B.D.; Shen, H.; Nguyen, H.; Falkner, N. Improved network community detection using meta-heuristic based label propagation. Appl. Intell. 2019, 49, 1451–1466. [Google Scholar] [CrossRef]

- Feng, Y.; Chen, H.; Li, T.; Luo, C. A novel community detection method based on whale optimization algorithm with evolutionary population. Appl. Intell. 2020, 50, 2503–2522. [Google Scholar] [CrossRef]

- Teymourian, N.; Izadkhah, H.; Isazadeh, A. A fast clustering algorithm for modularization of large-scale software systems. IEEE Trans. Softw. Eng. 2020, 48, 1451–1462. [Google Scholar] [CrossRef]

- Liu, F.; Wu, J.; Xue, S.; Zhou, C.; Yang, J.; Sheng, Q. Detecting the evolving community structure in dynamic social networks. World Wide Web 2020, 23, 715–733. [Google Scholar] [CrossRef]

- Milo, R.; Shen-Orr, S.; Itzkovitz, S.; Kashtan, N.; Chklovskii, D.; Alon, U. Network motifs: Simple building blocks of complex networks. Science 2002, 298, 824–827. [Google Scholar] [CrossRef] [Green Version]

- Tang, J.; Liu, G.; Pan, Q. A review on representative swarm intelligence algorithms for solving optimization problems: Applications and trends. IEEE/CAA J. Autom. Sin. 2021, 8, 1627–1643. [Google Scholar] [CrossRef]

- Lou, S.; Wu, P.; Guo, L.; Duan, Y.; Zhang, X.; Gao, J. Sparse principal component analysis using particle swarm optimization. J. Chem. Eng. Jpn. 2020, 53, 327–336. [Google Scholar] [CrossRef]

- Ramirez-Figueroa, J.A.; Martin-Barreiro, C.; Nieto-Librero, A.B.; Leiva, V.; Galindo-Villardón, M.P. A new principal component analysis by particle swarm optimization with an environmental application for data science. Stoch. Environ. Res. Risk Assess. 2021, 35, 1969–1984. [Google Scholar] [CrossRef]

- Li, T.; Shi, J.; Deng, W.; Hu, Z. Pyramid particle swarm optimization with novel strategies of competition and cooperation. Appl. Soft Comput. 2022, 121, 108731. [Google Scholar] [CrossRef]

- Bas, E.; Egrioglu, E.; Kolemen, E. Training simple recurrent deep artificial neural network for forecasting using particle swarm optimization. Granul. Comput. 2022, 7, 411–420. [Google Scholar] [CrossRef]

- Cai, Q.; Gong, M.; Shen, B.; Ma, L.; Jiao, L. Discrete particle swarm optimization for identifying community structures in signed social networks. Neural Netw. 2014, 58, 4–13. [Google Scholar] [CrossRef] [PubMed]

- Ahmed, K.; Hafez, A.I.; Hassanien, A.E. A discrete krill herd optimization algorithm for community detection. In Proceedings of the 2015 11th International Computer Engineering Conference (ICENCO), Cairo, Egypt, 29–30 December 2015; pp. 297–302. [Google Scholar]

- Ma, T.; Xia, Z. A community detection algorithm based on local double rings and fireworks algorithm. In Proceedings of the International Conference on Intelligent Data Engineering and Automated Learning, Guilin, China, 30 October–1 November 2017; Springer: Cham, Switzerland, 2017; pp. 129–135. [Google Scholar]

- Zhang, Y.; Liu, Y.; Li, J.; Zhu, J.; Yang, C.; Yang, W.; Wen, C. WOCDA: A whale optimization based community detection algorithm. Phys. A Stat. Mech. Its Appl. 2020, 539, 122937. [Google Scholar] [CrossRef]

- Ma, T.; Xia, Z.; Yang, F. An ant colony random walk algorithm for overlapping community detection. In Proceedings of the International Conference on Intelligent Data Engineering and Automated Learning, Guilin, China, 30 October–1 November 2017; Springer: Cham, Switzerland, 2017; pp. 20–26. [Google Scholar]

- Raghavan, U.N.; Albert, R.; Kumara, S. Near linear time algorithm to detect community structures in large-scale networks. Phys. Rev. E 2007, 76, 036106. [Google Scholar] [CrossRef] [Green Version]

- Zeng, X.; Wang, W.; Chen, C.; Yen, G.G. A consensus community-based particle swarm optimization for dynamic community detection. IEEE Trans. Cybern. 2019, 50, 2502–2513. [Google Scholar] [CrossRef]

- Handl, J.; Knowles, J. An evolutionary approach to multiobjective clustering. IEEE Trans. Evol. Comput. 2007, 11, 56–76. [Google Scholar] [CrossRef]

- Wang, Y.J.; Song, J.X.; Sun, P. Research on Dynamic Community Detection Method Based on an Improved Pity Beetle Algorithm. IEEE Access 2022, 10, 43914–43933. [Google Scholar] [CrossRef]

- Li, H.; Zhang, R.; Zhao, Z.; Liu, X. LPA-MNI: An Improved Label Propagation Algorithm Based on Modularity and Node Importance for Community Detection. Entropy 2021, 23, 497. [Google Scholar] [CrossRef]

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61. [Google Scholar] [CrossRef]

- Gupta, S.; Deep, K. Hybrid Grey Wolf Optimizer with Mutation Operator. In Soft Computing for Problem Solving; Advances in Intelligent Systems and Computing; Bansal, J., Das, K., Nagar, A., Deep, K., Ojha, A., Eds.; Springer: Singapore, 2019; Volume 817, pp. 961–968. [Google Scholar] [CrossRef]

- Gupta, S.; Deep, K. Enhanced leadership-inspired grey wolf optimizer for global optimization problems. Eng. Comput. 2020, 36, 1777–1800. [Google Scholar] [CrossRef]

- Liu, Y.; Sun, J.; Yu, H.; Wang, Y.; Zhou, X. An Improved Grey Wolf Optimizer Based on Differential Evolution and OTSU Algorithm. Appl. Sci. 2020, 10, 6343. [Google Scholar] [CrossRef]

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man, Cybern. 1979, 9, 62–66. [Google Scholar] [CrossRef] [Green Version]

- Lancichinetti, A.; Fortunato, S. Benchmarks for testing community detection algorithms on directed and weighted graphs with overlapping communities. Phys. Rev. E 2009, 80, 016118. [Google Scholar] [CrossRef]

- Leskovec, J.; Krevl, A. SNAP Datasets: Stanford Large Network Dataset Collection. 2014. Available online: http://snap.stanford.edu/data (accessed on 7 May 2022).

- Sun, B.J.; Shen, H.; Gao, J.; Ouyang, W.; Cheng, X. A non-negative symmetric encoder-decoder approach for community detection. In Proceedings of the 2017 ACM on Conference on Information and Knowledge Management, Singapore, 6–10 November 2017; pp. 597–606. [Google Scholar]

- Yang, J.; Leskovec, J. Overlapping community detection at scale: A nonnegative matrix factorization approach. In Proceedings of the Sixth ACM International Conference on Web Search and Data Mining, Rome, Italy, 4–8 February 2013; pp. 587–596. [Google Scholar]

- Perozzi, B.; Al-Rfou, R.; Skiena, S. Deepwalk: Online learning of social representations. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 24–27 August 2014; pp. 701–710. [Google Scholar]

| Variable | Formulation | Comments |

|---|---|---|

| G | () | The graph structure of a network |

| n | — | The number of nodes in G |

| — | The i-th node | |

| m | — | The number of edges in G |

| k | — | The number of communities |

| — | Number of iterations | |

| — | Size of the gray wolfs population | |

| C | The set of communities | |

| The t-th generation grey wolf population | ||

| X | The current generation gray wolf population | |

| The j-th individual in the current gray wolf population | ||

| — | The i-th dimension value in the individual | |

| — | Position of prey | |

| The leaders of the gray wolf population | ||

| — | A neighbor node of is randomly selected | |

| — | Indicates that neighbor nodes set of | |

| — | Indicates that a set is empty | |

| — | Intersection of for network partition | |

| — | The number of intra-community links for community c | |

| — | The sum of degrees of the nodes in community c | |

| — | Mutation probability |

| Dataset | n | m | ||

|---|---|---|---|---|

| Karate | 34 | 78 | 17 | 4 |

| Football | 115 | 613 | 12 | 10 |

| Dolphon | 62 | 159 | 12 | 5 |

| Polbooks | 105 | 441 | 25 | 8 |

| Lesmis | 77 | 254 | 36 | 6 |

| Netscience | 1589 | 2742 | 34 | 3 |

| CA-GrQc | 5242 | 14,496 | 81 | 5 |

| CA-HepTh | 9877 | 25,998 | 65 | 5 |

| Cora | 2708 | 5429 | 168 | 3 |

| Citeseer | 3312 | 4732 | 99 | 3 |

| Pebmud | 19,717 | 44,327 | 171 | 4 |

| Algorithm | Types | Reference |

|---|---|---|

| Meta-LPAM+ | Swarm intelligence | [19] |

| DMFWA | Swarm intelligence | [14] |

| EP-WOCD | Swarm intelligence | [20] |

| RMOEA | Swarm intelligence | [1] |

| NSED | NMF | [46] |

| BigClam | NMF | [47] |

| MNMF | NMF | [13] |

| DANMF | NMF | [12] |

| NMFGAAE | NMF | [11] |

| DeepWalk | Other | [48] |

| LPA | Other | [34] |

| Algorithm | Reference | |||

|---|---|---|---|---|

| Meta-LPAm+ | 1 | 100 | — | [19] |

| DMFWA | 100 | 40 | — | [14] |

| EP-WOCD | 100 | 40 | 0.3 | [20] |

| RMOEA | 100 | 40 | 0.1 | [1] |

| NIGWO | 100 | 40 | 0.4 | [ours] |

| Networks | Meta-LPAm+ | DMFWA | NSED | BigClam | DeepWalk | LPA | MNMF | DANMF | EP-WOCD | RMOEA | NMFGAAE | NIGWO |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Karate | 0.4198 | 0.4038 | 0.2181 | 0.3005 | 0.2448 | 0.3049 | 0.2321 | 0.3644 | 0.4198 | 0.3875 | — | 0.4198 |

| Football | 0.6044 | 0.6041 | 0.4877 | 0.2177 | 0.5543 | 0.5297 | 0.5706 | 0.6091 | 0.6023 | 0.6041 | — | 0.6044 |

| Dolphins | 0.5174 | 0.5013 | 0.3134 | 0.3481 | 0.0192 | 0.4934 | 0.3627 | 0.5175 | 0.5242 | 0.5264 | — | 0.5275 |

| Polbooks | 0.5027 | 0.5081 | 0.3763 | 0.2238 | 0.3714 | 0.4519 | 0.3845 | 0.3986 | 0.5269 | 0.5264 | — | 0.5271 |

| Lesmis | 0.5562 | 0.5349 | 0.4538 | 0.3862 | — | 0.5171 | 0.4719 | 0.4246 | 0.5596 | 0.4398 | — | 0.5491 |

| Netscience | 0.9478 | 0.9124 | 0.7196 | 0.8285 | — | 0.8916 | 0.6864 | 0.7944 | 0.9183 | 0.9521 | — | 0.9548 |

| Cora | — | — | 0.6921 | 0.4713 | 0.6514 | 0.6275 | 0.7120 | 0.7129 | 0.6489 | 0.6636 | 0.7413 | 0.7535 |

| Citeseer | — | — | 0.5910 | 0.5802 | 0.4904 | 0.7002 | 0.6319 | 0.6524 | 0.6805 | 0.7454 | 0.7211 | 0.7693 |

| CA-GrQc | — | — | 0.3287 | 0.5052 | — | 0.7198 | 0.6329 | 0.6492 | — | 0.7962 | — | 0.7598 |

| CA-HepTh | — | — | 0.3775 | 0.3277 | — | 0.5472 | 0.5392 | 0.1417 | — | 0.4532 | — | 0.5257 |

| Pebmud | — | — | 0.3921 | 0.5317 | 0.3214 | 0.5073 | 0.5119 | 0.5213 | — | 0.5068 | 0.5501 | 0.5389 |

| Nodes | NSED | BigClam | DeepWalk | LPA | MNMF | DANMF | EP-WOCD | RMOEA | NMFGAAE | NIGWO |

|---|---|---|---|---|---|---|---|---|---|---|

| 1000 | 0.20 | 0.03 | 0.19 | 0.47 | 0.18 | 0.41 | 0.32 | 0.36 | 0.46 | 0.50 |

| 2000 | 0.30 | 0.14 | 0.19 | 0.55 | 0.28 | 0.47 | 0.33 | 0.44 | 0.52 | 0.57 |

| 3000 | 0.34 | 0.20 | 0.19 | 0.56 | 0.24 | 0.53 | 0.33 | 0.45 | 0.58 | 0.59 |

| 4000 | 0.38 | 0.22 | 0.18 | 0.60 | 0.26 | 0.56 | 0.32 | 0.48 | 0.60 | 0.62 |

| 5000 | 0.39 | 0.22 | 0.18 | 0.62 | 0.40 | 0.58 | 0.32 | 0.50 | 0.61 | 0.63 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kang, Y.; Xu, Z.; Wang, H.; Yuan, Y.; Yang, X.; Pu, K. An Improved Gray Wolf Optimization Algorithm with a Novel Initialization Method for Community Detection. Mathematics 2022, 10, 3805. https://doi.org/10.3390/math10203805

Kang Y, Xu Z, Wang H, Yuan Y, Yang X, Pu K. An Improved Gray Wolf Optimization Algorithm with a Novel Initialization Method for Community Detection. Mathematics. 2022; 10(20):3805. https://doi.org/10.3390/math10203805

Chicago/Turabian StyleKang, Yan, Zhongming Xu, Haining Wang, Yanchong Yuan, Xuekun Yang, and Kang Pu. 2022. "An Improved Gray Wolf Optimization Algorithm with a Novel Initialization Method for Community Detection" Mathematics 10, no. 20: 3805. https://doi.org/10.3390/math10203805