Abstract

In this paper, a quasi-quadratic online adaptive dynamic programming (QOADP) algorithm is proposed to realize optimal economic dispatch for smart buildings. Highly volatile load demand is considered and is modeled as an uncontrollable state. To reduce the residual errors of the approximation structure, a quasi-quadratic-form parametric structure with a bias term is carefully designed to counteract the effects of uncertainties. Based on action-dependent heuristic dynamic programming (ADHDP), an implementation of the QOADP algorithm is presented to obtain optimal economic dispatch for smart buildings. Finally, hardware-in-loop (HIL) experiments were conducted, and the results show that the proposed QOADP algorithm outperforms two other typical algorithms.

Keywords: adaptive dynamic programming; smart buildings; economic dispatch; energy management systems

MSC: 37N35

1. Introduction

The world is seeing tremendous growth in independent smart buildings due to the development of generator and battery technologies. When distributed generators and storage equipment are connected to smart buildings, traditional energy management mechanisms face new challenges arising from uncertain user behaviors. These uncertainties are likely to result in a highly volatile load demand sequence. Therefore, smart buildings need an intelligent energy management system that can cope with these uncertainties to realize optimal economic dispatch.

1.1. Literature Review

Optimal economic dispatch is generally formulated as an optimization problem [,]. Therefore, traditional optimization techniques are widely adopted to obtain optimal economic dispatch solutions. Convex optimization is a fundamental and well-developed method for dispatch tasks. In [], the authors developed a Lyapunov-based optimization method for a problem transformed from the original non-convex problem. In [], a robust convex optimization algorithm was designed for single-phase or balanced three-phase microgrids under adverse conditions of random demand and renewable energy resources. In [], a chance-constrained stochastic optimization technique was applied to design an optimal energy scheduling policy for multi-service battery energy storage. However, some nonlinear and strongly coupled problems are still very difficult to transform into a convex formulation. Inspired by natural mechanisms, heuristic algorithms were developed to search for optimal solutions in the feasible domain. In [], the authors developed a particle swarm optimization-based algorithm to form a reliable hybrid microgrid with optimal economic dispatch for non-sensitive loads and energy storage systems. In [], a genetic-algorithm-based online real-time optimized energy management strategy was proposed for plug-in hybrid electric vehicles. Most of the above literature focused on static optimization problems whose solutions lie in a finite-dimensional space. For optimization problems of dynamical systems, dynamic programming is a promising technique, since the principle of optimality simplifies the numerical computation. In [], the authors developed a two-scale dynamic-programming-based optimal energy management method for wind–battery power systems. A multidimensional dynamic-programming-based energy control policy was proposed for global composite operating cost minimization in [].
However, dynamic programming suffers from the so-called “curse of dimensionality”: as the problem dimension increases, the solution space grows exponentially, which hinders the application of dynamic programming.

To avoid the “curse of dimensionality”, adaptive dynamic programming (ADP) was developed to solve the optimal economic dispatch problem with unknown system dynamics []. One can refer to [,] for the pioneering work and an overview of ADP. Owing to its self-learning ability and adaptivity, ADP has become popular for optimal economic dispatch problems of smart buildings. An optimal battery-control policy was obtained by designing a time-based ADP algorithm for residential buildings in []. In [], the authors designed action-dependent heuristic dynamic programming (ADHDP)-based algorithms for optimal economic dispatch of multiple buildings. In [], the authors proposed a temporal-difference-learning-based ADP algorithm for fast computation. In a recent work [], a novel model-free real-time ADP (RT-ADP) algorithm was verified on a hardware-in-the-loop experimental platform. However, the models studied in the above literature are deterministic, whereas uncertainties are inevitable in most engineering practice. Therefore, ADP has been developed for optimal economic dispatch of smart buildings with uncertainties in the past few years [,,,]. To make ADP algorithms more convincing, stability analysis was provided for ADP algorithms of energy systems in [,,,,]. In [], the authors proposed a dual iterative Q-learning algorithm to solve for the optimal battery-control law of a smart microgrid. Extensions of [] were created in [] for cases with renewable generation and in [] for cases with actuator constraints. For appliance-level economic dispatch, a data-based ADP algorithm was studied for ice-storage air conditioning systems in []. The above works commonly assumed that the load demand of users is periodic. In practice, however, the load demand of users is random with high volatility; thus, the periodic assumption is not satisfied in most real cases.

In the implementation of an ADP algorithm, the design of the function approximator significantly affects the performance of the overall algorithm. The feedforward neural network is the most commonly used function approximator. Beyond feedforward neural networks, the authors of [] employed an echo state network as a parametric structure, and the authors of [] approximated the iterative value function by a fuzzy structure. However, these general function approximators were employed without considering the features of the systems under study, which can result in large residual errors.

1.2. Motivation and Contributions

According to the above discussion, the existing algorithms are mainly concerned with optimal economic dispatch for smart buildings with periodic load demands. In practice, however, load demands are not periodic due to uncertain user behaviors and may even be highly volatile, which motivates this work. In this paper, a QOADP algorithm is proposed to obtain the optimal economic dispatch for smart buildings with highly volatile load demand. A quasi-quadratic-form parametric structure is carefully designed to approximate the value function precisely. Compared with the existing literature, the main contributions of this paper are as follows:

- An online ADP algorithm is proposed to iteratively obtain optimal economic dispatch for smart buildings with highly volatile load demand. The online algorithm allows the controller parameters to adapt toward optimal control as the load demand changes.

- A quasi-quadratic-form parametric structure with a bias term is designed for the implementation of QOADP to counteract the effects of uncertainties. To simplify the function approximation structure in the proposed algorithm, the feedforward information of the uncontrollable state is incorporated into the iterative value function and the iterative controller.

The rest of this paper is organized as follows. In Section 2, the mathematical model of the EMS is established. In Section 3, QOADP is proposed for the EMS, its optimality is analyzed, and parametric structures are designed for implementation. In Section 4, a data-driven implementation is presented. Section 5 provides hardware-in-loop (HIL) experimental results that verify the effectiveness of QOADP. Conclusions are drawn in Section 6.

2. EMS of Smart Buildings

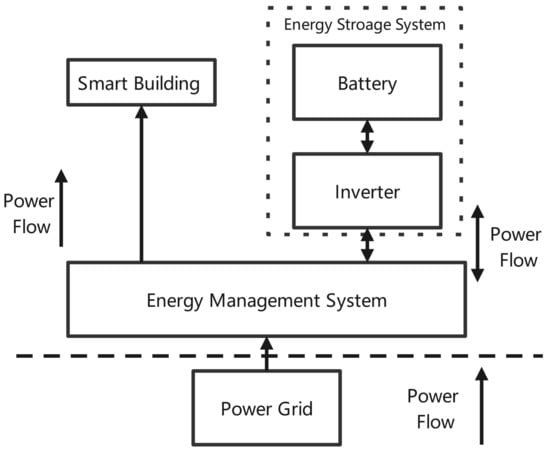

A smart building is composed of a utility grid; an energy storage system, which is connected to the point of common coupling (PCC) through a power electronic converter; and the building load. The diagram of the EMS of a smart building is shown in Figure 1.

Figure 1.

EMS of a smart building.

Power balance at the PCC is

The battery model is

where denotes battery charging and denotes battery discharging. In reality, dispatch of the storage system must satisfy the following constraints:

Constraint (3) makes sure the battery energy is bounded within an allowable range. Constraint (4) limits the charging/discharging power of the battery.
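To make the role of the battery model and of constraints (3) and (4) concrete, the sketch below assumes a common textbook form of an SOC model with a converter efficiency, which may differ from the exact expressions in this paper. It shows how a commanded battery power can be projected onto the feasible set so that both the power limit and the SOC limits hold after one step. The sign convention (positive power charges), the class name `Battery` and the parameter names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Battery:
    """Hypothetical battery parameters; names are illustrative, not the paper's symbols."""
    e_rated: float   # rated energy (kWh)
    p_rated: float   # rated charging/discharging power (kW)
    soc_min: float   # lower SOC bound
    soc_max: float   # upper SOC bound
    eta: float       # converter efficiency, 0 < eta <= 1
    dt: float = 1.0  # sampling time (h)

    def next_soc(self, soc: float, p_b: float) -> float:
        """Euler step of the SOC; assumed convention: p_b > 0 charges, p_b < 0 discharges."""
        if p_b >= 0:
            delta_e = self.eta * p_b * self.dt  # only eta * p_b reaches the cells
        else:
            delta_e = p_b * self.dt / self.eta  # cells supply more than the delivered power
        return soc + delta_e / self.e_rated

    def feasible_power(self, soc: float, p_cmd: float) -> float:
        """Project a commanded power onto the power limit and the SOC limits (soc assumed in range)."""
        p = max(-self.p_rated, min(self.p_rated, p_cmd))
        # Largest charge keeping soc <= soc_max, largest discharge keeping soc >= soc_min
        p_charge_max = (self.soc_max - soc) * self.e_rated / (self.eta * self.dt)
        p_discharge_max = (soc - self.soc_min) * self.e_rated * self.eta / self.dt
        return max(-p_discharge_max, min(p_charge_max, p))
```

For example, with `Battery(10.0, 3.0, 0.2, 0.9, 0.95)` a full-power charge request at SOC 0.85 is reduced so that the next SOC lands exactly on the 0.9 upper bound.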

The transition function of the load demand in continuous form can be defined as

The optimal energy management problem is formulated as a discrete-time control problem with a sampling time of 1 h, which is consistent with the hourly real-time price (RTP). Applying the Euler method with this sampling time leads to

According to (6)–(8), the state of the system is defined as , i.e., , , . Let be the control vector.

For convenience of analysis, delays are introduced in and . Therefore, the discrete-time building management system is

where is controllable and is uncontrollable. The drift, input and disturbance dynamics of controllable subsystem are , and , respectively.

It should be noted that the building energy system (9) is only partly controllable, since users in the building are assumed to be insensitive to the electricity price. Unlike completely controllable systems, the system state will deviate from the equilibrium point, and no continuous control law can asymptotically stabilize the system. Hence, no fixed control law is able to maintain optimality from step k to .

It is essential for smart building users to find the appropriate charging/discharging power of the battery to reduce the electricity cost under the RTP scheme. Hence, the EMS is designed to maximize the economic benefits of users and extend the battery life. To this end, a utility function is defined as

where is a time-varying matrix due to the dynamic price. The first term denotes the electricity cost, the second term represents the depth of discharge and the fourth term aims to shorten the charging/discharging cycle. The utility function is continuous and positive definite, which ensures that the performance index function is convex. Then, the performance index function can be defined as the discounted sum of the utility functions from time step k to ∞, i.e.,

Now, our goal is to design an optimal control sequence which minimizes the performance index function (11). For , the optimal control is

where

The discount factor should be positive and strictly less than one to ensure that the series converges.
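Assuming the usual discounted form, in which the utility at step j is weighted by the discount factor (written `gamma` here) raised to the power j − k, the snippet below evaluates a truncated performance index and the bound u_max/(1 − gamma). The bound illustrates why a discount factor below one keeps the infinite sum finite whenever the utility is bounded. Both function names are hypothetical.

```python
def discounted_cost(utilities, gamma):
    """Truncated J_k = sum_{j >= k} gamma**(j - k) * U(j), with utilities[0] = U(k)."""
    if not 0.0 < gamma < 1.0:
        raise ValueError("gamma must lie in (0, 1)")
    total, weight = 0.0, 1.0
    for u in utilities:
        total += weight * u
        weight *= gamma  # next term is discounted once more
    return total


def tail_bound(u_max, gamma):
    """Geometric-series bound on the infinite sum when 0 <= U(j) <= u_max."""
    return u_max / (1.0 - gamma)
```

With a constant utility of 2 and gamma = 0.9, the truncated sum approaches the bound of 20 from below as the horizon grows.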

3. Building Energy Management Strategy

In this section, a QOADP-based building energy management strategy is proposed to solve the optimal control problem formulated in Section 2. Moreover, the iterative QOADP algorithm and the corresponding parametric structure are designed.

3.1. Iterative QOADP Algorithm

ADP is an effective tool for solving nonlinear optimal control problems based on the principle of optimality [,]. Generally, iterative ADP algorithms can be classified into value iteration (VI) and policy iteration (PI). The main idea of ADP is that the solution of the Hamilton–Jacobi–Bellman (HJB) equation can be approximated iteratively. This study focuses on value iteration.

The building energy system in Section 2 is time-varying, since the utility function varies with time. Hence, the performance index function is time-varying; i.e., .

An initial value function is used to approximate . Then, the initial iterative control policy can be solved by

Next, the iterative value function at time index can be improved by

For , , the iterative control policy and iterative value function can be updated by

and

For , the real-time iterative control policy and the updating iterative value function will be obtained by
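The offline value-iteration recursion described above can be illustrated on a toy problem where every minimization has a closed form. The sketch assumes a hypothetical scalar linear plant x_{k+1} = a x_k + b u_k, utility q x^2 + r u^2, discount factor gamma and the initial value function V_0 = 0. With V_i(x) = p_i x^2, each iteration reduces to a discounted Riccati recursion for p_i, and the greedy control is u = −gain · x. This stands in for the time-varying EMS model and is not the paper's system.

```python
def value_iteration_lq(a, b, q, r, gamma, p0=0.0, iters=200, tol=1e-12):
    """VI: V_{i+1}(x) = min_u [q x^2 + r u^2 + gamma * V_i(a x + b u)], V_i(x) = p_i x^2."""
    p = p0
    history = [p]
    for _ in range(iters):
        denom = r + gamma * b * b * p  # r > 0 keeps this positive
        p_next = q + gamma * a * a * p - (gamma * a * b * p) ** 2 / denom
        history.append(p_next)
        converged = abs(p_next - p) < tol
        p = p_next
        if converged:
            break
    gain = gamma * a * b * p / (r + gamma * b * b * p)  # iterative control law u = -gain * x
    return p, gain, history
```

Starting from V_0 = 0, the sequence p_i is nondecreasing and converges to the fixed point of the discounted Riccati equation, mirroring the monotone convergence usually proved for VI.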

The above description explains the offline iterative algorithm. However, it can hardly be applied to real engineering tasks, for the following reasons:

(1) Infinitely many iterations would be required between time step k and time step , which cannot be carried out in practice.

(2) The iterative control policy obtained after finitely many iterations may not be stabilizing, which is likely to lead to abnormal system operation.

To overcome these difficulties, an online algorithm is developed in which the iteration index i equals the time index k. Hence, the online value iteration can be summarized as

and

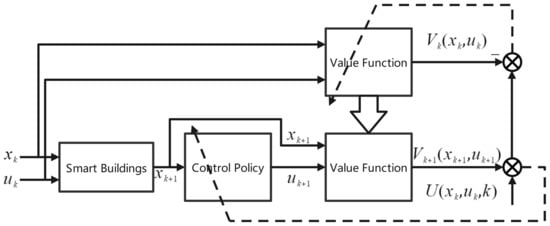

with a positive semidefinite initial function . The whole structure diagram is shown in Figure 2.

Figure 2.

The structure diagram of online value iteration.
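In the online value iteration, the iteration index coincides with the time index: at each step, the current iterative policy is applied to the plant and the value function is updated exactly once. The minimal sketch below reuses a hypothetical scalar plant x_{k+1} = a x_k + b u_k with utility q x^2 + r u^2 and V_0 = 0; it only illustrates this interleaving and is not the EMS controller itself.

```python
def online_value_iteration(a, b, q, r, gamma, x0, steps):
    """Online VI on a hypothetical scalar plant x_{k+1} = a x_k + b u_k.

    The iteration index equals the time index: one value update per time step,
    with V_k(x) = p_k x^2 and V_0 = 0 (positive semidefinite).
    """
    p, x = 0.0, x0
    xs, ps = [x], [p]
    for _ in range(steps):
        denom = r + gamma * b * b * p
        gain = gamma * a * b * p / denom  # greedy policy w.r.t. the current V_k
        u = -gain * x                     # apply it to the plant at time k
        p = q + gamma * a * a * p - (gamma * a * b * p) ** 2 / denom  # single VI update
        x = a * x + b * u                 # plant advances one step
        xs.append(x)
        ps.append(p)
    return xs, ps
```

With an open-loop unstable plant such as a = 1.2, the first step applies no control (V_0 = 0), after which the improving policy drives the state toward zero while p_k keeps increasing toward its limit.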

Note that the online value iteration cannot be applied directly to the EMS, since the EMS is not completely controllable, which differs from most nonlinear systems in the literature [,,]. For completely controllable systems, there exists an admissible control law [] that brings the system state to the equilibrium point, so the undiscounted performance index can remain finite. Because the EMS admits no such law, the discount factor cannot be omitted in the EMS control. Moreover, the dynamics of the load demand are complex and stochastic, whereas a complex controller is undesirable in real applications.

To solve this problem, a load forecasting module is separated from the controller, and the controller is modified to , where is the predicted load demand at time step k. The prediction module can be implemented by an exponential smoothing model [], a neural network model, etc. This reduces the complexity of the controller, which is beneficial for real applications.
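As one hedged example of the prediction module, simple exponential smoothing produces a one-step-ahead load forecast that could be passed to the controller. The smoothing constant `alpha` and the function name are assumptions; the model and settings actually used in the paper are not specified here.

```python
def exponential_smoothing_forecast(loads, alpha):
    """Simple exponential smoothing: level_k = alpha * y_k + (1 - alpha) * level_{k-1}.

    Returns forecasts f where f[j] is the prediction of loads[j + 1]
    made after observing loads[0..j].
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must lie in (0, 1]")
    if not loads:
        raise ValueError("need at least one observation")
    level = loads[0]       # initialize the level with the first observation
    forecasts = [level]
    for y in loads[1:]:
        level = alpha * y + (1.0 - alpha) * level
        forecasts.append(level)
    return forecasts
```

A larger `alpha` tracks volatile load faster but also passes more noise to the controller; a smaller one smooths more at the cost of lag.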

3.2. Parametric Structure Design for Value Function

Function approximation structures should be specified to approximate and . The most commonly used parametric structures are the feedforward neural network [] and the quadratic-form neural network []. However, neither is the most suitable function approximator for the EMS. Here, the iterative value function and iterative control policy are defined as

and

where the coefficients of (22) and (23) can be expressed as

The iterative control law is composed of a proportional control term and a bias vector. If the load demand for , the problem reduces to a standard LQR problem. The challenge of this paper comes from the dynamic load demand . To address it, the bias term is designed to counteract the effects of the uncontrollable state . It should be pointed out that the bias term at time k is a function of . Moreover, (25) shows that the bias term is a function of , which means that the optimal controller contains both feedback and feedforward signals.
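The role of the bias term can be checked on a toy problem. The sketch assumes a hypothetical scalar plant x_{k+1} = a x_k + b u_k + d with a constant, known load-like disturbance d, utility q x^2 + r u^2, and a quasi-quadratic value function V(x) = p x^2 + s x + c; it is an illustration, not the paper's (22)–(25). The greedy control has the form u = −K x − beta: the gain K does not depend on d, the bias beta does, and with d = 0 the recursion reduces to the ordinary discounted LQR case.

```python
def greedy_policy(a, b, r, gamma, d, p, s):
    """Minimizer of r u^2 + gamma * V(a x + b u + d) for V(x) = p x^2 + s x + c: u = -K x - beta."""
    denom = r + gamma * p * b * b
    K = gamma * p * a * b / denom                     # feedback gain (independent of d)
    beta = gamma * b * (2.0 * p * d + s) / (2.0 * denom)  # bias term (feedforward of d)
    return K, beta


def quasi_quadratic_vi(a, b, q, r, gamma, d, iters=500):
    """Value iteration keeping V_i(x) = p x^2 + s x + c exactly, starting from V_0 = 0."""
    p, s, c = 0.0, 0.0, 0.0
    for _ in range(iters):
        K, beta = greedy_policy(a, b, r, gamma, d, p, s)
        acl, e = a - b * K, d - b * beta              # closed loop: x_next = acl * x + e
        # Substitute u = -K x - beta into q x^2 + r u^2 + gamma * V_i(x_next), collect powers of x
        p, s, c = (q + r * K * K + gamma * p * acl * acl,
                   2.0 * r * K * beta + gamma * (2.0 * p * acl * e + s * acl),
                   r * beta * beta + gamma * (p * e * e + s * e + c))
    K, beta = greedy_policy(a, b, r, gamma, d, p, s)  # policy for the final value function
    return p, s, c, K, beta
```

Running it with d = 0 gives s = 0 and beta = 0, whereas a nonzero d leaves K unchanged and produces a nonzero bias, which matches the feedback-plus-feedforward structure described above.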

4. Implementation of the Proposed Algorithm

The recursive formula for the parameters of the iterative value function is given in Section 3. However, the parameters of the EMS can hardly be identified in practice. Therefore, an online data-driven implementation is necessary.

To carry out the above online iterative ADP algorithm, it is required to design a parametric structure to approximate and . The output of the parametric structure is formulated as

where , and l is the dimension of vector .

The ADHDP technique, which combines the system dynamics and the iterative value function, is adopted to implement the QOADP algorithm []. The Q function for ADHDP is defined as

The inputs of the parametric structure of the iterative Q function are and , and the activation function is defined as

The input of the iterative control policy is , and the activation function is defined as
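One hedged way to realize a quasi-quadratic activation for the Q-function input z = [x; u] is to stack the upper-triangular quadratic monomials, the linear terms and a constant, so that the linear-in-parameters output W^T phi(z) contains a quadratic part plus terms that can carry a bias. The feature ordering and the function names below are assumptions for illustration; the activation functions actually defined in the paper may differ.

```python
import numpy as np


def quasi_quadratic_features(z):
    """Hypothetical activation: monomials z_i z_j (i <= j), then linear terms, then a constant 1."""
    z = np.asarray(z, dtype=float).ravel()
    i, j = np.triu_indices(z.size)  # index pairs of the upper triangle, diagonal included
    return np.concatenate([z[i] * z[j], z, [1.0]])


def q_value(weights, x, u):
    """Linear-in-parameters output W^T phi([x; u])."""
    z = np.concatenate([np.atleast_1d(x), np.atleast_1d(u)])
    return float(np.asarray(weights, dtype=float) @ quasi_quadratic_features(z))
```

For an input of length n the feature vector has n(n + 1)/2 + n + 1 entries, so the number of weights grows only quadratically with the input size.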

The target of function Q is

The error of Q function can be defined as

and the parameter-update rule for is a gradient-based iteration given by

where is the learning rate of the iterative Q function and m is the iterative step for updating . Define the maximum number of iteration steps as ; then,
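The critic step can be sketched as plain gradient descent on the squared Q-function error, with the ADHDP target formed as the current utility plus the discounted Q estimate at the next state–action pair. The helper names, holding the target fixed during the inner loop, and using a fixed number of steps in place of the maximum iteration count are assumptions of this sketch.

```python
import numpy as np


def adhdp_target(w, utility, phi_next, gamma):
    """Target U(x_k, u_k) + gamma * W^T phi(x_{k+1}, u_{k+1}), evaluated with the current weights."""
    return utility + gamma * float(np.asarray(w, dtype=float) @ phi_next)


def critic_update(w, phi_k, target, lr, max_steps):
    """Gradient descent on E = 0.5 * (W^T phi_k - target)^2 for max_steps steps."""
    w = np.array(w, dtype=float)
    for _ in range(max_steps):
        e = w @ phi_k - target  # error of the Q function
        w -= lr * e * phi_k     # dE/dW = e * phi_k
    return w
```

For a single sample, each step multiplies the error by (1 − lr · ||phi_k||^2), so the inner loop converges when 0 < lr < 2 / ||phi_k||^2.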

The target of the iterative control policy is given by

Additionally, the error of the iterative control policy is

Similarly, the weight update is

where is the learning rate of the iterative control policy.
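For a Q function that is quadratic in the control, one common choice of actor target is the control that minimizes Q(x, ·); the actor weights can then be fitted by gradient descent on the squared actor error. The sketch assumes Q(x, u) = [x; u]^T H [x; u] with symmetric H and positive definite H_uu, and an actor that is linear in a feature vector psi; both are illustrative assumptions rather than the paper's exact expressions.

```python
import numpy as np


def greedy_action_from_quadratic_q(H, x):
    """Setting dQ/du = 2 H_ux x + 2 H_uu u to zero gives u* = -H_uu^{-1} H_ux x."""
    x = np.asarray(x, dtype=float)
    n = x.size
    H_ux, H_uu = H[n:, :n], H[n:, n:]
    return -np.linalg.solve(H_uu, H_ux @ x)


def actor_update(w_a, psi, u_target, lr, max_steps):
    """Gradient descent on 0.5 * ||W_a psi - u_target||^2; W_a has shape (m, len(psi))."""
    w_a = np.array(w_a, dtype=float)
    for _ in range(max_steps):
        e_a = w_a @ psi - u_target       # error of the iterative control policy
        w_a -= lr * np.outer(e_a, psi)   # gradient with respect to W_a
    return w_a
```

Including a constant entry in `psi` (for example psi = [x, 1]) gives the fitted actor the same proportional-plus-bias shape as the iterative control law.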

In summary, the data-driven QOADP algorithm implemented by parametric structures is shown in Algorithm 1.

| Algorithm 1: Data-driven QOADP algorithm |

Initialization:
1: Collect data of an EMS.
2: Choose an initial array of , which ensures that the initial is positive semi-definite.
3: Choose the maximum time step .
Iteration (Online):
4: Let , and let .
6: Let .
8: If , go to next step. Otherwise, go to Step 6.
9: return.

5. Hardware-in-Loop Experimental Verification

In this section, two experimental cases were performed to examine the proposed QOADP algorithm, and comparisons are given to demonstrate its advantages. In case 1, the building load demand and electricity price were assumed to be periodic. In case 2, the load demand was of high volatility. In both cases, the dual iterative Q-learning (DIQL) algorithm [] and the fuzzy periodic value iteration (FPVI) algorithm [] were applied to the same load demand as QOADP for comparison, and the advantages of QOADP were verified by comparing the system states and electricity costs of the three EMSs. Some important parameters for the experiments can be found in Table 1.

Table 1.

Parameters for experiment.

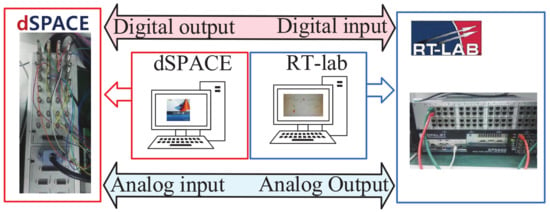

5.1. HIL Platform

The proposed QOADP algorithm was verified on an HIL experimental platform, as shown in Figure 3. The HIL platform was composed of an OPAL-RT OP5600 digital simulator as the controlled plant emulator and a dSPACE DS1103 as the controller. The DS1103 is a controller board for digital control that connects the hardware with the MATLAB/Simulink simulation environment. In Simulink, the algorithms were implemented, compiled and converted to C code, which was then loaded onto the real-time dSPACE processor. The RT-LAB rapid control prototyping (RCP) drive system loaded the smart building model, including the utility grid, battery and loads, onto a real-time simulation platform configured with the I/O interface required for the study. The software platform was MATLAB R2021a, and the CPU was an 11th Gen Intel(R) Core(TM) i7-1165G7 @ 2.80GHz.

Figure 3.

HIL experimental platform.

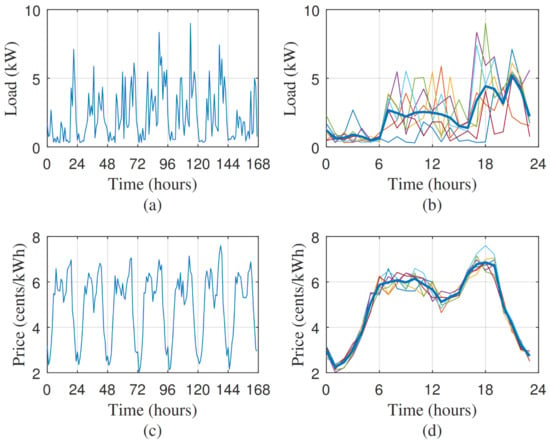

5.2. Dataset

The load demand data were taken from the IEEE Power-Energy Society (PES) Open Dataset. They contain the active power of a single household in a family housing from 25 December 2011 to 15 March 2013, with a sampling time of 1 h. Figure 4a shows the load demand of a smart building over one week, which exhibits high volatility. In Figure 4b, the load demand of every single day is plotted, and the bold line shows the average daily load demand over a 24 h horizon. The electricity price is consistent with the one in []. Figure 4c shows the electricity price, which is quasi-periodic, since it is determined by the aggregated load demand, which fluctuates less than that of a single building. Similarly, Figure 4d shows seven daily electricity prices over hours 1 to 24, with the average electricity price plotted as a bold line. It is assumed that users are not aware of the electricity price, which means that the load is not scheduled, and the target is to design a control strategy for the battery system.

Figure 4.

The weekly load demand and its average load curve. (a) Load demand of a smart building for one week. (b) Seven daily load demands and average load demand (bold line). (c) Electricity prices for one week. (d) Seven daily electricity prices and average electricity price (bold line).

5.3. Case 1: Periodic Load Demand

In this case, the load demand and electricity price are periodic. Without rescheduling of the energy storage system, the electricity cost over one week was 1999.2 cents.
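A weekly electricity cost such as the one above can be computed from hourly data by summing price × grid power × sampling time. The sketch assumes prices in cents/kWh, grid power in kW and a 1 h sampling time, and it does not distinguish import and export prices, which is an assumption. Without battery rescheduling, the grid power simply equals the building load. The function name is hypothetical.

```python
def electricity_cost(prices_cents_per_kwh, grid_power_kw, dt_h=1.0):
    """Total cost in cents: sum_k price_k * P_grid(k) * dt."""
    if len(prices_cents_per_kwh) != len(grid_power_kw):
        raise ValueError("price and power sequences must have equal length")
    return sum(c * p * dt_h for c, p in zip(prices_cents_per_kwh, grid_power_kw))
```

For a one-week horizon at 1 h sampling, both sequences would contain 168 entries.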

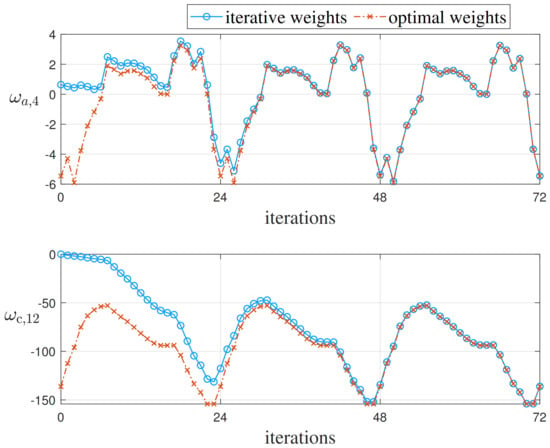

The iterative weights of the value function and controller are depicted in Figure 5. The learning process shows that the weights converge to a periodic sequence. Since the iterative algorithm has an exponential convergence rate, the initial weights have little influence on the performance.

Figure 5.

The iterative weights of the QOADP under periodic assumption.

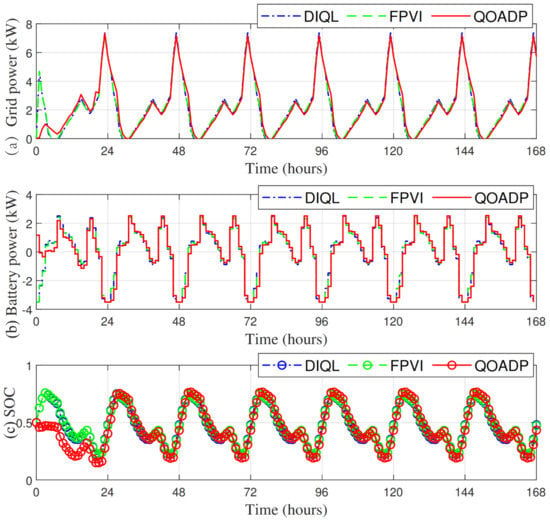

The grid power of the three EMSs is plotted in Figure 6a; all of them operate within a safe zone. The battery power is plotted in Figure 6b, and Figure 6c shows the SOC of the three EMSs. The battery charges at midnight, when the electricity price is low, and discharges in the daytime. Compared with QOADP, the DIQL- and FPVI-based EMSs have larger maximum battery power, which is not beneficial to battery life. Moreover, the total electricity cost of QOADP is lower than those of DIQL and FPVI. Hence, QOADP has advantages in both economic cost and battery life degradation. The QOADP-based EMS draws more grid power than DIQL and FPVI only from 1 am to 6 am, when the electricity price is low.

Figure 6.

System state and control under periodic assumption: (a) Grid power; (b) Battery power; (c) SOC.

5.4. Case 2: Load Demand of High Volatility

Consider the load demand with high volatility shown in Figure 4a, together with the electricity price shown in Figure 4c.

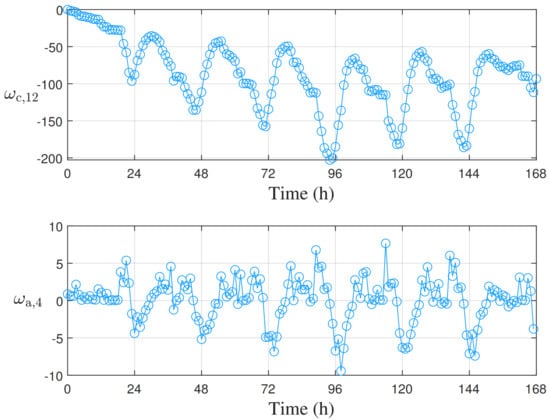

Figure 7 shows and of the iterative value function and iterative controller. Unlike the periodic case, the weights do not converge to a fixed array of parameters but keep iterating toward the optimal values. The optimal parameters under load demand of high volatility are also plotted in Figure 7.

Figure 7.

The iterative weights of parametric structures (load demand of high volatility).

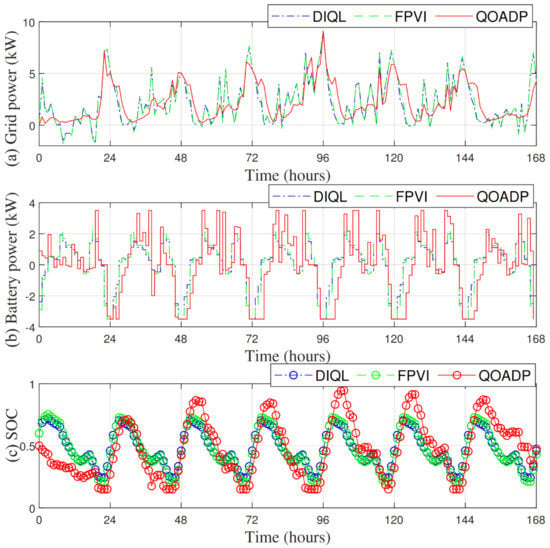

The state and control variables of the three EMSs are plotted in Figure 8. As in the first case, the batteries charge when the price is low and discharge when the price is high. In the QOADP-based EMS, the control variable, i.e., , can adapt to real-time changes in load demand. Hence, the battery power effectively compensates for the load demand. Furthermore, the grid power of QOADP is more stable than those of DIQL and FPVI, which is a desirable feature for the utility grid.

Figure 8.

System state and control under load demand of high volatility: (a) Grid power; (b) Battery power; (c) SOC.

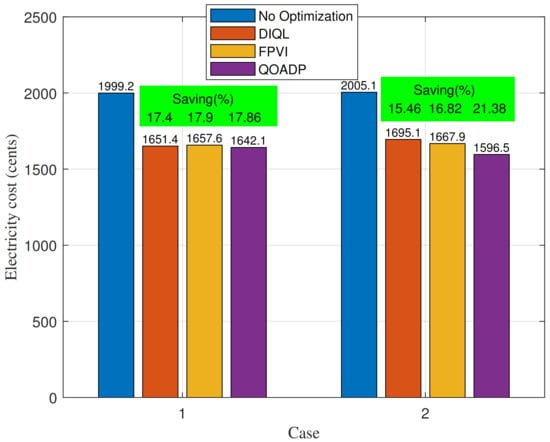

The electricity costs of the three methods are compared in Figure 9. The proposed algorithm not only adapts to changes in load demand but also achieves a lower electricity cost for residential users.

Figure 9.

Electricity costs of three methods.

6. Conclusions

This paper proposes a QOADP algorithm to realize optimal economic dispatch in smart buildings. The load demand is allowed to be uncertain with high volatility and is modeled by an uncontrollable state in the EMS. Firstly, the framework of the iterative QOADP algorithm is designed to obtain the optimal economic dispatch control law. Then, a quasi-quadratic function approximation structure with a bias term is utilized to approximate the value function precisely. Moreover, the implementation of the proposed algorithm is given in detailed steps. The design of QOADP paves the way for applying ADP techniques to the EMS of smart buildings and other systems with uncertainties. Finally, the experimental results reveal that QOADP can be applied not only to periodic load demand but also to load demand of high volatility, with superior performance. Our future work will focus on extending the proposed algorithm to more practical engineering systems and formulating a general way to apply ADP.

Author Contributions

Methodology, J.W.; Validation, Z.Z.; Investigation, K.C.; Data curation, Z.Z.; Writing-original draft, K.C.; Writing-review & editing, Z.Z. and J.W.; Project administration, J.W.; Funding acquisition, K.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded in part by the National Natural Science Foundation of China under grant 62103115, in part by the Natural Science Foundation of Guangdong Province under grant 2021A1515011636, in part by the Science and Technology Research Program of Guangzhou under grant 202102020975 and in part by the basic and applied basic research projects jointly funded by Guangzhou and schools (colleges) under grant 202201020233.

Data Availability Statement

Load demand data for the experiment can be found in IEEE Power-Energy Society (PES) Open Dataset at https://open-power-system-data.org/ (accessed on 20 October 2022).

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

| Symbol | Description |
|---|---|
| i | Iteration steps. |
| | Time steps (hours). |
| | Power from the utility grid at time t (kW). |
| | Battery discharging/charging power at time t (kW). |
| | Building load at time t (kW). |
| | Charging/discharging efficiency of the power electronic converter in the storage system. |
| | State of charge of the battery at time t. |
| | Rated energy of the battery (kWh). |
| | Minimum value of . |
| | Maximum value of . |
| | Battery rated discharging/charging power at time t (kW). |
| | Transition function of building load (continuous time). |
| | Transition function of building load (discrete time). |
| | Sampling time. |
| | Controllable system state vector. |
| | Uncontrollable system state vector. |
| | System state vector. |
| | System control vector. |
| | Control sequence from time k to ∞. |
| | System transition function. |
| | Drift dynamics, input dynamics, disturbance dynamics. |
| | Utility function. |
| | Real-time electricity price at time k (cents/kWh). |
| | Performance index function. |
| | Discount factor. |

References

- Zhou, B.; Zou, J.; Chung, C.Y.; Wang, H.; Liu, N.; Voropai, N.; Xu, D. Multi-microgrid Energy Management Systems: Architecture, Communication, and Scheduling Strategies. J. Mod. Power Syst. Clean Energy 2021, 9, 463–476.

- Yu, L.; Qin, S.; Zhang, M.; Shen, C.; Jiang, T.; Guan, X. A Review of Deep Reinforcement Learning for Smart Building Energy Management. IEEE Internet Things J. 2021, 8, 12046–12063.

- Zhong, W.; Xie, K.; Liu, Y.; Yang, C.; Xie, S.; Zhang, Y. Online Control and Near-Optimal Algorithm for Distributed Energy Storage Sharing in Smart Grid. IEEE Trans. Smart Grid 2020, 11, 2552–2562.

- Giraldo, J.S.; Castrillon, J.A.; López, J.C.; Rider, M.J.; Castro, C.A. Microgrids Energy Management Using Robust Convex Programming. IEEE Trans. Smart Grid 2019, 10, 4520–4530.

- Zhong, W.; Xie, K.; Liu, Y.; Xie, S.; Xie, L. Chance Constrained Scheduling and Pricing for Multi-Service Battery Energy Storage. IEEE Trans. Smart Grid 2021, 12, 5030–5042.

- Zhang, G.; Yuan, J.; Li, Z.; Yu, S.S.; Chen, S.Z.; Trinh, H.; Zhang, Y. Forming a Reliable Hybrid Microgrid Using Electric Spring Coupled With Non-Sensitive Loads and ESS. IEEE Trans. Smart Grid 2020, 11, 2867–2879.

- Fan, L.; Wang, Y.; Wei, H.; Zhang, Y.; Zheng, P.; Huang, T.; Li, W. A GA-based online real-time optimized energy management strategy for plug-in hybrid electric vehicles. Energy 2022, 241, 122811.

- Zhang, L.; Li, Y. Optimal Energy Management of Wind-Battery Hybrid Power System With Two-Scale Dynamic Programming. IEEE Trans. Sustain. Energy 2013, 4, 765–773.

- Meng, X.; Li, Q.; Zhang, G.; Chen, W. Efficient Multidimensional Dynamic Programming-Based Energy Management Strategy for Global Composite Operating Cost Minimization for Fuel Cell Trams. IEEE Trans. Transp. Electrif. 2022, 8, 1807–1818.

- Liu, D.; Xue, S.; Zhao, B.; Luo, B.; Wei, Q. Adaptive Dynamic Programming for Control: A Survey and Recent Advances. IEEE Trans. Syst. Man Cybern. Syst. 2021, 51, 142–160.

- Lewis, F.L.; Vrabie, D. Reinforcement learning and adaptive dynamic programming for feedback control. IEEE Circuits Syst. Mag. 2009, 9, 32–50.

- Lewis, F.L.; Vrabie, D.; Vamvoudakis, K.G. Reinforcement Learning and Feedback Control: Using Natural Decision Methods to Design Optimal Adaptive Controllers. IEEE Control. Syst. Mag. 2012, 32, 76–105.

- Fuselli, D.; De Angelis, F.; Boaro, M.; Squartini, S.; Wei, Q.; Liu, D.; Piazza, F. Action dependent heuristic dynamic programming for home energy resource scheduling. Int. J. Electr. Power Energy Syst. 2013, 48, 148–160.

- Liu, D.; Xu, Y.; Wei, Q.; Liu, X. Residential energy scheduling for variable weather solar energy based on adaptive dynamic programming. IEEE/CAA J. Autom. Sin. 2018, 5, 36–46.

- Zhao, Z.; Keerthisinghe, C. A Fast and Optimal Smart Home Energy Management System: State-Space Approximate Dynamic Programming. IEEE Access 2020, 8, 184151–184159.

- Yuan, J.; Yu, S.S.; Zhang, G.; Lim, C.P.; Trinh, H.; Zhang, Y. Design and HIL Realization of an Online Adaptive Dynamic Programming Approach for Real-Time Economic Operations of Household Energy Systems. IEEE Trans. Smart Grid 2022, 13, 330–341.

- Shuai, H.; Fang, J.; Ai, X.; Tang, Y.; Wen, J.; He, H. Stochastic Optimization of Economic Dispatch for Microgrid Based on Approximate Dynamic Programming. IEEE Trans. Smart Grid 2019, 10, 2440–2452.

- Li, H.; Wan, Z.; He, H. Real-Time Residential Demand Response. IEEE Trans. Smart Grid 2020, 11, 4144–4154.

- Shang, Y.; Wu, W.; Guo, J.; Ma, Z.; Sheng, W.; Lv, Z.; Fu, C. Stochastic dispatch of energy storage in microgrids: An augmented reinforcement learning approach. Appl. Energy 2020, 261, 114423.

- Shuai, H.; He, H. Online Scheduling of a Residential Microgrid via Monte-Carlo Tree Search and a Learned Model. IEEE Trans. Smart Grid 2021, 12, 1073–1087.

- Wei, Q.; Liu, D.; Shi, G. A novel dual iterative Q-learning method for optimal battery management in smart residential environments. IEEE Trans. Ind. Electron. 2015, 62, 2509–2518.

- Wei, Q.; Liu, D.; Liu, Y.; Song, R. Optimal constrained self-learning battery sequential management in microgrid via adaptive dynamic programming. IEEE/CAA J. Autom. Sin. 2017, 4, 168–176.

- Wei, Q.; Shi, G.; Song, R.; Liu, Y. Adaptive Dynamic Programming-Based Optimal Control Scheme for Energy Storage Systems With Solar Renewable Energy. IEEE Trans. Ind. Electron. 2017, 64, 5468–5478.

- Wei, Q.; Liao, Z.; Song, R.; Zhang, P.; Wang, Z.; Xiao, J. Self-Learning Optimal Control for Ice-Storage Air Conditioning Systems via Data-Based Adaptive Dynamic Programming. IEEE Trans. Ind. Electron. 2021, 68, 3599–3608.

- Wei, Q.; Liao, Z.; Shi, G. Generalized Actor-Critic Learning Optimal Control in Smart Home Energy Management. IEEE Trans. Ind. Inform. 2021, 17, 6614–6623.

- Shi, G.; Liu, D.; Wei, Q. Echo state network-based Q-learning method for optimal battery control of offices combined with renewable energy. IET Control. Theory Appl. 2017, 11, 915–922.

- Zhu, Y.; Zhao, D.; Li, X.; Wang, D. Control-Limited Adaptive Dynamic Programming for Multi-Battery Energy Storage Systems. IEEE Trans. Smart Grid 2019, 10, 4235–4244.

- Al-Tamimi, A.; Lewis, F.L.; Abu-Khalaf, M. Discrete-Time Nonlinear HJB Solution Using Approximate Dynamic Programming: Convergence Proof. IEEE Trans. Syst. Man Cybern. Part B (Cybernetics) 2008, 38, 943–949.

- Wang, D.; Liu, D.; Wei, Q.; Zhao, D.; Jin, N. Optimal control of unknown nonaffine nonlinear discrete-time systems based on adaptive dynamic programming. Automatica 2012, 48, 1825–1832.

- Liu, D.; Wei, Q. Policy Iteration Adaptive Dynamic Programming Algorithm for Discrete-Time Nonlinear Systems. IEEE Trans. Neural Netw. Learn. Syst. 2014, 25, 621–634.

- Yang, X.; Zhang, Y.; He, H.; Ren, S.; Weng, G. Real-Time Demand Side Management for a Microgrid Considering Uncertainties. IEEE Trans. Smart Grid 2019, 10, 3401–3414.

- Wei, Q.; Lewis, F.L.; Liu, D.; Song, R.; Lin, H. Discrete-Time Local Value Iteration Adaptive Dynamic Programming: Convergence Analysis. IEEE Trans. Syst. Man Cybern. Syst. 2018, 48, 875–891.

- Xue, S.; Luo, B.; Liu, D. Event-Triggered Adaptive Dynamic Programming for Zero-Sum Game of Partially Unknown Continuous-Time Nonlinear Systems. IEEE Trans. Syst. Man Cybern. Syst. 2020, 50, 3189–3199.

- Liu, D.; Wei, Q.; Wang, D.; Yang, X.; Li, H. Adaptive Dynamic Programming with Applications in Optimal Control; Springer: Berlin/Heidelberg, Germany, 2017.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).