A Method for Perception and Assessment of Semantic Textual Similarities in English

Abstract

1. Introduction

2. State of the Art

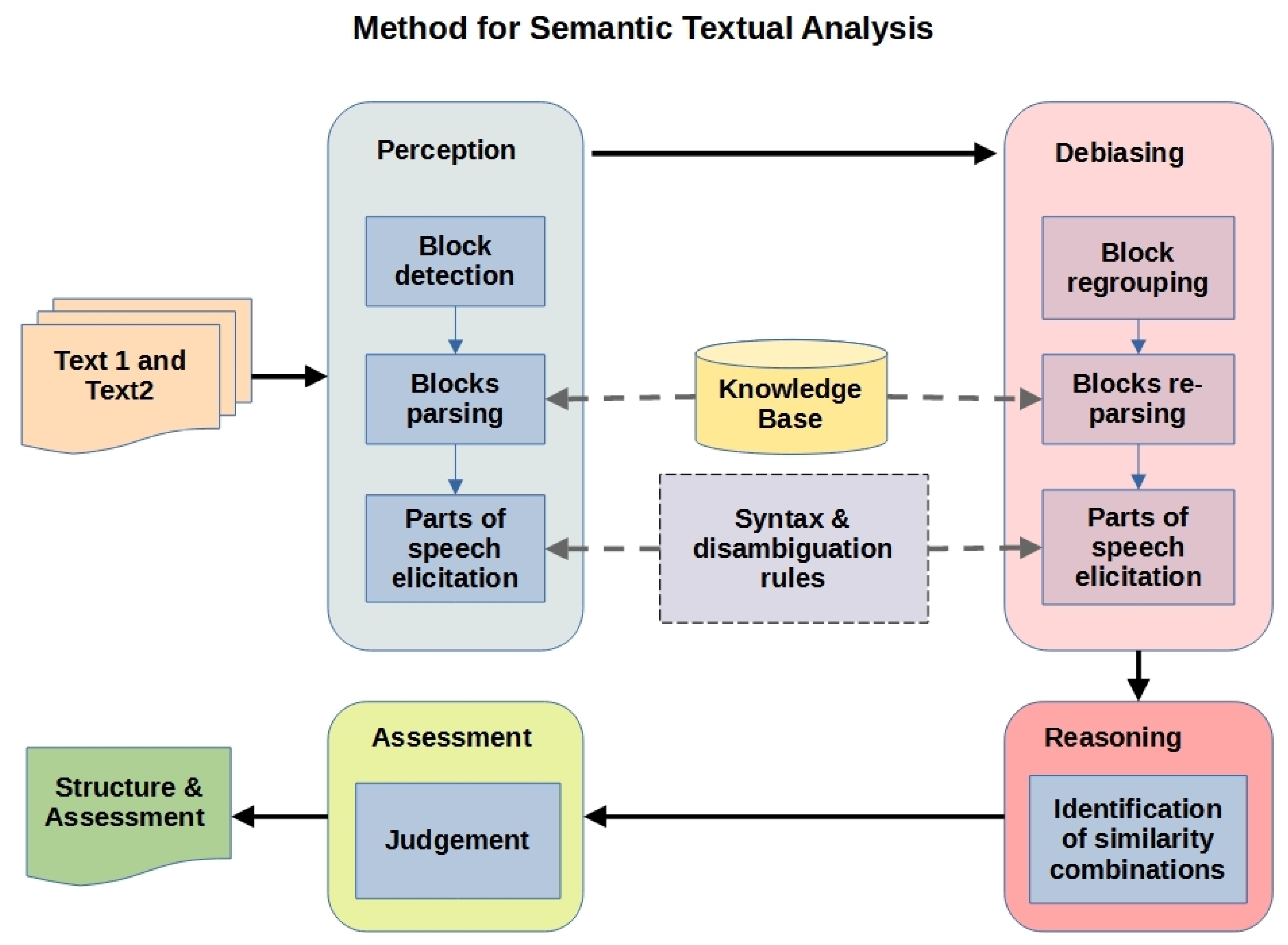

3. Method for Semantic Textual Analysis

3.1. Notation Used in This Work

- T: a text snippet that may contain a noun phrase, a sentence, or a question.

- NP: a noun phrase consisting of a pronoun, an (adjective)* noun sequence, or an entity.

- Q: a question, either a structure that denotes a question and ends with a question mark (?), or a sentence that implies a question, such as “I wonder if”.

- SVO: a structure that contains a well-defined sentence consisting of a subject phrase, a verb phrase, and an object phrase.

- Block: a fragment of sequential words extracted from a text (a sentence, question, or noun phrase) and produced by the preprocessing tasks that establish the parts of speech.

- Synset: a set of synonyms or words related by their meanings.
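The notation above can be sketched as plain data types. This is a minimal illustration only: the class names, field names, and the `is_question` helper are hypothetical, and the cue list contains just the one phrase the notation mentions (“I wonder if”).

```python
from dataclasses import dataclass, field
from enum import Enum


class TextType(Enum):
    """Kinds of content a text snippet T may hold."""
    NP = "noun phrase"
    SVO = "sentence"
    Q = "question"


# Hypothetical cue list: the notation only names "I wonder if" as an example.
IMPLIED_QUESTION_CUES = ("i wonder if",)


@dataclass
class Block:
    """A run of sequential words from a text, with one tag set per word."""
    words: list[str]
    tags: list[set[str]] = field(default_factory=list)


@dataclass(frozen=True)
class Synset:
    """A set of synonyms or words related by their meanings."""
    words: frozenset[str]


def is_question(text: str) -> bool:
    """Q: ends with a question mark, or opens with a cue that implies a question."""
    stripped = text.strip()
    lowered = stripped.lower()
    return stripped.endswith("?") or any(
        lowered.startswith(cue) for cue in IMPLIED_QUESTION_CUES
    )
```

A real perception step would also need to distinguish NP from SVO, which requires part-of-speech information and is not attempted here.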

3.2. Description of the Method

3.3. Perception

Algorithm 1 Perception

Perception Example

3.4. Debiasing

Example of the Debiasing Process

Algorithm 2 Debiasing

3.5. Reasoning

3.5.1. Semantic Similarity Judgement

3.5.2. Cases of Combinations on POS Alignment

3.5.3. Examples of Reasoning on Combinations

Algorithm 3 Similarity reasoning

3.6. Assessment

Examples of the Assessment Process

4. Experiments

5. Results

5.1. Comparison of the Experiment with Related Algorithms

5.2. Performance of the Proposed Method with Regard to the State of the Art

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Doolittle, P.E. Understanding Cooperative Learning through Vygotsky’s Zone of Proximal Development. In Proceedings of the Lilly National Conference on Excellence in College Teaching, Columbia, SC, USA, 2–4 June 1995; Available online: https://files.eric.ed.gov/fulltext/ED384575.pdf (accessed on 17 April 2023).

- Delprato, D.J.; Midgley, B.D. Some fundamentals of BF Skinner’s behaviorism. Am. Psychol. 1992, 47, 1507.

- Wang, Y.; Zatarain, O.A. A Novel Machine Learning Algorithm for Cognitive Concept Elicitation by Cognitive Robots. Int. J. Cogn. Inform. Nat. Intell. 2017, 11, 31–46.

- Wang, Y. Concept Algebra: A Denotational Mathematics for Formal Knowledge Representation and Cognitive Robot Learning. J. Adv. Math. Appl. 2015, 4, 61–86.

- Navigli, R.; Ponzetto, S.P. BabelNet: The automatic construction, evaluation and application of a wide-coverage multilingual semantic network. Artif. Intell. 2012, 193, 217–250.

- Wang, Y.; Zatarain, O.A. Design and Implementation of a Knowledge Base for Machine Knowledge Learning. In Proceedings of the IEEE 17th International Conference on Cognitive Informatics and Cognitive Computing, ICCI*CC, Berkeley, CA, USA, 16–18 July 2018; pp. 70–77.

- Miller, G.A. WordNet: A lexical database for English. Commun. ACM 1995, 38, 39–41.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Maharjan, N.; Banjade, R.; Gautam, D.; Tamang, L.J.; Rus, V. DT_Team at SemEval-2017 Task 1: Semantic Similarity Using Alignments, Sentence-Level Embeddings and Gaussian Mixture Model Output. In Proceedings of the 11th International Workshop on Semantic Evaluation (SemEval-2017), Vancouver, BC, Canada, 3–4 August 2017; pp. 120–124.

- Tian, J.; Zhou, Z.; Lan, M.; Wu, Y. ECNU at SemEval-2017 Task 1: Leverage Kernel-based Traditional NLP features and Neural Networks to Build a Universal Model for Multilingual and Cross-lingual Semantic Textual Similarity. In Proceedings of the 11th International Workshop on Semantic Evaluation (SemEval-2017), Vancouver, BC, Canada, 3–4 August 2017; pp. 191–197.

- Wu, H.; Huang, H.; Jian, P.; Guo, Y.; Su, C. BIT at SemEval-2017 Task 1: Using Semantic Information Space to Evaluate Semantic Textual Similarity. In Proceedings of the 11th International Workshop on Semantic Evaluation (SemEval-2017), Vancouver, BC, Canada, 3–4 August 2017; pp. 77–84.

- Kuhn, H.W. The Hungarian method for the assignment problem. Nav. Res. Logist. Q. 1955, 2, 83–97.

- Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.; Dean, J. Distributed Representations of Words and Phrases and their Compositionality. In Proceedings of the 26th International Conference on Neural Information Processing Systems (NIPS’13), Lake Tahoe, NV, USA, 5–10 December 2013; pp. 3111–3119.

- Maharjan, N.; Banjade, R.; Rus, V. Automated Assessment of Open-ended Student Answers in Tutorial Dialogues Using Gaussian Mixture Models. In Proceedings of the Thirtieth International Florida Artificial Intelligence Research Society Conference, Marco Island, FL, USA, 22–24 May 2017; pp. 98–103.

- Šarić, F.; Glavaš, G.; Karan, M.; Šnajder, J.; Dalbelo Bašić, B. TakeLab: Systems for Measuring Semantic Text Similarity. In Proceedings of the First Joint Conference on Lexical and Computational Semantics, Montreal, QC, Canada, 7–8 June 2012; pp. 441–448.

- Moschitti, A. Efficient Convolution Kernels for Dependency and Constituent Syntactic Trees. In Proceedings of the 17th European Conference on Machine Learning (ECML 2006), Berlin, Germany, 18–22 September 2006; Fürnkranz, J., Scheffer, T., Spiliopoulou, M., Eds.; Springer: Berlin/Heidelberg, Germany; pp. 318–329.

- Sultan, M.A.; Bethard, S.; Sumner, T. DLS@CU: Sentence Similarity from Word Alignment and Semantic Vector Composition. In Proceedings of the 9th International Workshop on Semantic Evaluation (SemEval 2015), Denver, CO, USA, 4–5 June 2015; pp. 148–153.

- Iyyer, M.; Manjunatha, V.; Boyd-Graber, J.; Daumé III, H. Deep Unordered Composition Rivals Syntactic Methods for Text Classification. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing, Beijing, China, 26–31 July 2015; pp. 1681–1691.

- Manning, C.D.; Bauer, J.; Finkel, J.; Bethard, S.J. The Stanford CoreNLP Natural Language Processing Toolkit. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics: System Demonstrations, Baltimore, MD, USA, 23–24 June 2014; pp. 55–60.

- Resnik, P. Using Information Content to Evaluate Semantic Similarity in a Taxonomy. In Proceedings of the IJCAI’95: 14th International Joint Conference on Artificial Intelligence, Montreal, QC, Canada, 20–25 August 1995; Volume 7, pp. 448–453.

- Chang, C.C.; Lin, C.J. LIBSVM: A Library for Support Vector Machines. ACM Trans. Intell. Syst. Technol. 2011, 2, 1–27.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 6000–6010.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Annual Conference of the North American Chapter of the Association for Computational Linguistics NAACL-HLT 2019, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

- Lan, Z.; Chen, M.; Goodman, S.; Gimpel, K.; Sharma, P.; Soricut, R. ALBERT: A Lite BERT for self-supervised learning of language representations. In Proceedings of the Eighth International Conference on Learning Representations ICLR 2020, Online, 26 April–1 May 2020.

- Reimers, N.; Gurevych, I. Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing, Hong Kong, China, 3–7 November 2019; pp. 3982–3992.

- Xu, C.; Zhou, W.; Ge, T.; Wei, F.; Zhou, M. BERT-of-Theseus: Compressing BERT by Progressive Module Replacing. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 7859–7869.

- Sheng, T.; Wang, L.; He, Z.; Sun, M.; Jiang, G. An Unsupervised Sentence Embedding Method by Maximizing the Mutual Information of Augmented Text Representations. In Proceedings of the Artificial Neural Networks and Machine Learning—ICANN 2022, Bristol, UK, 6–7 September 2022; Springer: Cham, Switzerland, 2022; pp. 174–185.

- Jiao, X.; Yin, Y.; Shang, L.; Jiang, X.; Chen, X.; Li, L.; Wang, F.; Liu, Q. TinyBERT: Distilling BERT for Natural Language Understanding. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2020, Online, 16–20 November 2020; pp. 4163–4174.

- Izsak, P.; Berchansky, M.; Levy, O. How to Train BERT with an Academic Budget. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Punta Cana, Dominican Republic, 7–11 November 2021; pp. 10644–10652.

- Jiang, H.; He, P.; Chen, W.; Liu, X.; Gao, J.; Zhao, T. SMART: Robust and Efficient Fine-Tuning for Pre-trained Natural Language Models through Principled Regularized Optimization. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 6–8 July 2020; pp. 2177–2190.

- Hassan, B.; Abdelrahman, S.E.; Bahgat, R.; Farag, I. UESTS: An Unsupervised Ensemble Semantic Textual Similarity Method. IEEE Access 2019, 7, 85462–85482.

- Duma, M.S.; Menzel, W. SEF@UHH at SemEval-2017 Task 1: Unsupervised Knowledge-Free Semantic Textual Similarity via Paragraph Vector. In Proceedings of the 11th International Workshop on Semantic Evaluation (SemEval-2017), Vancouver, BC, Canada, 3–4 August 2017; pp. 170–174.

- Cer, D.; Diab, M.; Agirre, E.; Lopez-Gazpio, I.; Specia, L. SemEval-2017 Task 1: Semantic Textual Similarity Multilingual and Cross-lingual Focused Evaluation. In Proceedings of the 11th International Workshop on Semantic Evaluation (SemEval-2017), Vancouver, BC, Canada, 3–4 August 2017; pp. 1–14.

- Liu, W.; Sun, C.; Lin, L.; Liu, B. ITNLP-AiKF at SemEval-2017 Task 1: Rich Features Based SVR for Semantic Textual Similarity Computing. In Proceedings of the 11th International Workshop on Semantic Evaluation (SemEval-2017), Vancouver, BC, Canada, 3–4 August 2017; pp. 159–163.

- Ganitkevitch, J.; Van Durme, B.; Callison-Burch, C. PPDB: The Paraphrase Database. In Proceedings of the 2013 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Atlanta, GA, USA, 9–14 June 2013; pp. 758–764.

- Pennington, J.; Socher, R.; Manning, C.D. GloVe: Global Vectors for Word Representation. In Proceedings of the Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543.

- Loper, E.; Bird, S. NLTK: The Natural Language Toolkit. In Proceedings of the ACL Interactive Poster and Demonstration Sessions, Barcelona, Spain, 21–26 July 2004; pp. 214–217.

- Henderson, J.; Merkhofer, E.; Strickhart, L.; Zarrella, G. MITRE at SemEval-2017 Task 1: Simple Semantic Similarity. In Proceedings of the 11th International Workshop on Semantic Evaluation (SemEval-2017), Vancouver, BC, Canada, 3–4 August 2017; pp. 185–190.

- Shao, Y. HCTI at SemEval-2017 Task 1: Use convolutional neural network to evaluate Semantic Textual Similarity. In Proceedings of the 11th International Workshop on Semantic Evaluation (SemEval-2017), Vancouver, BC, Canada, 3–4 August 2017; pp. 130–133.

- Al-Natsheh, H.T.; Martinet, L.; Muhlenbach, F.; Zighed, D.A. UdL at SemEval-2017 Task 1: Semantic Textual Similarity Estimation of English Sentence Pairs Using Regression Model over Pairwise Features. In Proceedings of the 11th International Workshop on Semantic Evaluation (SemEval-2017), Vancouver, BC, Canada, 3–4 August 2017; pp. 115–119.

- Kohail, S.; Salama, A.R.; Biemann, C. STS-UHH at SemEval-2017 Task 1: Scoring Semantic Textual Similarity Using Supervised and Unsupervised Ensemble. In Proceedings of the 11th International Workshop on Semantic Evaluation (SemEval-2017), Vancouver, BC, Canada, 3–4 August 2017; pp. 175–179.

- Lee, I.T.; Goindani, M.; Li, C.; Jin, D.; Johnson, K.M.; Zhang, X.; Pacheco, M.L.; Goldwasser, D. PurdueNLP at SemEval-2017 Task 1: Predicting Semantic Textual Similarity with Paraphrase and Event Embeddings. In Proceedings of the 11th International Workshop on Semantic Evaluation (SemEval-2017), Vancouver, BC, Canada, 3–4 August 2017; pp. 198–202.

- Zhuang, W.; Chang, E. Neobility at SemEval-2017 Task 1: An Attention-based Sentence Similarity Model. In Proceedings of the 11th International Workshop on Semantic Evaluation (SemEval-2017), Vancouver, BC, Canada, 3–4 August 2017; pp. 164–169.

- Śpiewak, M.; Sobecki, P.; Karaś, D. OPI-JSA at SemEval-2017 Task 1: Application of Ensemble learning for computing semantic textual similarity. In Proceedings of the 11th International Workshop on Semantic Evaluation (SemEval-2017), Vancouver, BC, Canada, 3–4 August 2017; pp. 139–143.

- Fialho, P.; Patinho Rodrigues, H.; Coheur, L.; Quaresma, P. L2F/INESC-ID at SemEval-2017 Tasks 1 and 2: Lexical and semantic features in word and textual similarity. In Proceedings of the 11th International Workshop on Semantic Evaluation (SemEval-2017), Vancouver, BC, Canada, 3–4 August 2017; pp. 213–219.

- España-Bonet, C.; Barrón-Cedeño, A. Lump at SemEval-2017 Task 1: Towards an Interlingua Semantic Similarity. In Proceedings of the 11th International Workshop on Semantic Evaluation (SemEval-2017), Vancouver, BC, Canada, 3–4 August 2017; pp. 144–149.

- Bjerva, J.; Östling, R. ResSim at SemEval-2017 Task 1: Multilingual Word Representations for Semantic Textual Similarity. In Proceedings of the 11th International Workshop on Semantic Evaluation (SemEval-2017), Vancouver, BC, Canada, 3–4 August 2017; pp. 154–158.

- Le, Q.; Mikolov, T. Distributed Representations of Sentences and Documents. In Proceedings of the 31st International Conference on Machine Learning (ICML’14), Volume 32, Beijing, China, 21–26 June 2014; JMLR.org; pp. II-1188–II-1196.

- Meng, F.; Lu, W.; Zhang, Y.; Cheng, J.; Du, Y.; Han, S. QLUT at SemEval-2017 Task 1: Semantic Textual Similarity Based on Word Embeddings. In Proceedings of the 11th International Workshop on Semantic Evaluation (SemEval-2017), Vancouver, BC, Canada, 3–4 August 2017; pp. 150–153.

| Type | Structures | Blocks |
|---|---|---|
| SVO/NP | | 1 Block |
| Q | | 1 Block |
| Conjunction | | >1 Blocks |
| Present | present; present continuous; present perfect; conditionals 0, 1, 2, 3 | >1 Blocks |
| Past | past; past continuous; past perfect | >1 Blocks |
| Future | future; future continuous | >1 Blocks |

| Sentence | Blocks | Parsing | Verb Phrases |
|---|---|---|---|
| A woman is working as a nurse. | 1: A woman is working as | 1. A: DETERMINER; 2. woman: ADJECTIVE, NOUN; 3. is: TO BE; 4. working: COUNTABLE, NOUN, PLURAL, ‘SINGULAR’ UNCOUNT, VERB; 5. as: CONJUNCTION | indices: 3, 4 |
| | 2: a nurse | 1. a: DETERMINER; 2. nurse: NOUN | [] |
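The first block of the table above can be held as a list of (word, tags) pairs, from which the verb phrase is recovered by position. This is only a sketch: the helper name `words_at` is hypothetical, and it assumes the “indices: 3, 4” entry counts words from 1 within the block, as the numbering in the Parsing column suggests.

```python
# Words of block 1 with the candidate tags listed in the Parsing column.
block_1 = [
    ("A", ["DETERMINER"]),
    ("woman", ["ADJECTIVE", "NOUN"]),
    ("is", ["TO BE"]),
    ("working", ["COUNTABLE", "NOUN", "PLURAL", "'SINGULAR' UNCOUNT", "VERB"]),
    ("as", ["CONJUNCTION"]),
]


def words_at(block, indices):
    """Join the words found at the given 1-based positions of a block."""
    return " ".join(block[i - 1][0] for i in indices)


# Assumption: verb-phrase indices are 1-based positions inside the block.
verb_phrase = words_at(block_1, [3, 4])
print(verb_phrase)
```

Under that assumption the indices select “is working”, and the second block (“a nurse”) has an empty index list because it contains no verb.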

| Sentence | Blocks | Parsing | Verb Phrases |
|---|---|---|---|
| A woman is working as a nurse. | 1: A woman is working as a nurse. | 1. A: DETERMINER; 2. woman: ADJECTIVE, NOUN; 3. is: TO BE; 4. working: COUNTABLE, NOUN, PLURAL, ‘SINGULAR’ UNCOUNT, VERB; 5. as: CONJUNCTION; 6. a: DETERMINER; 7. nurse: NOUN | indices: 3, 4 |

| Case | Type | Assessment Criteria | POS | Integration |
|---|---|---|---|---|
| I | | The noun phrases are compared on morphology, use of synonyms, and direct matching. | (1), (2) | S() (3) |
| II | | The sentences are compared on their parts of speech (subject, object, and verbs); an order analysis is applied to identify whether the main actors are related. | (3) | Sim(,) (4); SimNV(,) (5) |
| III | | The questions are compared on their parts of speech (subject, object, and verbs); an order analysis is applied to identify whether the main actors are related. | (3) | Sim(, ) (4) |
| IV | | The sentence is compared, using its subject and object, against the noun phrase. A discount factor is applied due to the absence of a verb. | (1), (2) | (3) |
| V | | The sentence and the question are compared on their subjects, objects, and verbs; a discount factor is applied due to the interrogative nature of the latter. | (3) | 0.75 × Sim(SVO, Q) (4) |
| VI | | The sentence is compared, using its subject and object, against the noun phrase. A discount factor is applied due to the absence of a verb. | (1), (2) | (3) |
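The case table above can be read as a lookup from a pair of text types to a case, followed by an optional discount. The sketch below is an illustration under stated assumptions rather than the method itself: the mapping is inferred from the Assessment Criteria column, only the 0.75 factor for the sentence-question case appears in the table, every other factor defaults to 1.0 unless the caller supplies one, and case VI is left out because its row does not state its pairing unambiguously. All names are hypothetical.

```python
# Case lookup keyed by the unordered pair of text types (NP, SVO, Q).
CASES = {
    frozenset(["NP"]): "I",          # noun phrase vs. noun phrase
    frozenset(["SVO"]): "II",        # sentence vs. sentence
    frozenset(["Q"]): "III",         # question vs. question
    frozenset(["SVO", "NP"]): "IV",  # sentence vs. noun phrase
    frozenset(["SVO", "Q"]): "V",    # sentence vs. question
}

# Only this factor is given in the table: 0.75 * Sim(SVO, Q).
KNOWN_DISCOUNTS = {"V": 0.75}


def select_case(type_1, type_2):
    """Return the case label for two text types, or None if not covered."""
    return CASES.get(frozenset([type_1, type_2]))


def apply_discount(case, similarity, extra_discounts=None):
    """Scale a raw similarity by the case's discount factor (1.0 if unknown)."""
    discounts = dict(KNOWN_DISCOUNTS)
    if extra_discounts:
        discounts.update(extra_discounts)  # caller-supplied, hypothetical values
    return discounts.get(case, 1.0) * similarity
```

For example, `apply_discount(select_case("Q", "SVO"), 2.0)` scales a raw similarity of 2.0 down to 1.5, while a noun-phrase pair passes through unchanged.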

| Case | Text 1 | Text 2 |

|---|---|---|

| I | A young person deep in thought. | A young man deep in thought. |

| II | A person is on a baseball team. | A person is playing basketball on a team. |

| III | How exactly is Germany being ‘punished’ for the stupidity of WW? | How exactly are they being punished? |

| IV | A dog under the stairs | A dog is resting on the stairs. |

| V | We never got out of it in the first place! | Where does the money come from in the first place? |

| VI | Why are Russians in Damascus? | Russians in Damascus! |

| Case | Computation (1) | Sim > 0 |
|---|---|---|
| I | | yes |
| II | | yes |
| III | | yes |
| IV | | yes |
| V | | yes |
| VI | | yes |

| Case | Integration | Sim |
|---|---|---|
| I | (3) | 5.0 |
| II | (3), (3), (3), (5) | 4.0 |
| III | (3), (3), (3), (4) | 1.65 |
| IV | (3) | 3.75 |
| V | (3), (3), (3), (4) | 1.24 |
| VI | (3) | 3.75 |

| KB | Dictionary | Thesaurus | Found Concepts (Dictionary/Thesaurus) | Missing Concepts (Dictionary/Thesaurus) |
|---|---|---|---|---|
| 1 | Collins | Thesaurus.com | 826/802 | 46/70 |
| 2 | WordNet | Synonym.com | 800/827 | 72/45 |
| 3 | Dictionary.com | Synonym.com | 828/827 | 44/45 |
| 4 | MacMillan | Synonym.com | 621/827 | 251/45 |
| 5 | Oxford | Synonym.com | 733/827 | 139/45 |
| 6 | Merriam-Webster | Synonym.com | 798/827 | 74/45 |
| 7 | Collins | Synonym.com | 826/827 | 46/45 |
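The found and missing counts in the knowledge-base table can be cross-checked with a few lines of arithmetic. The sketch below assumes each “a/b” entry reads as dictionary/thesaurus, which matches the repeated 827/45 for Synonym.com and 826/46 for Collins; the variable and function names are hypothetical.

```python
# Per KB: (dictionary found, thesaurus found, dictionary missing, thesaurus missing)
KB_COUNTS = {
    1: (826, 802, 46, 70),
    2: (800, 827, 72, 45),
    3: (828, 827, 44, 45),
    4: (621, 827, 251, 45),
    5: (733, 827, 139, 45),
    6: (798, 827, 74, 45),
    7: (826, 827, 46, 45),
}


def totals(kb):
    """Concepts looked up in the dictionary and in the thesaurus."""
    d_found, t_found, d_missing, t_missing = KB_COUNTS[kb]
    return d_found + d_missing, t_found + t_missing


def coverage(kb):
    """Fraction of concepts found in the dictionary and in the thesaurus."""
    d_found, t_found, _, _ = KB_COUNTS[kb]
    d_total, t_total = totals(kb)
    return d_found / d_total, t_found / t_total


for kb in KB_COUNTS:
    d_cov, t_cov = coverage(kb)
    print(f"KB {kb}: dictionary {d_cov:.1%}, thesaurus {t_cov:.1%}")
```

Under this reading every row sums to the same number of concepts for both sources, which supports the dictionary/thesaurus interpretation of the two figures.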

| KB 1 | KB 2 | KB 3 | KB 4 | KB 5 | KB 6 | KB 7 |

|---|---|---|---|---|---|---|

| 77.46% | 70.43% | 68.12% | 71.63% | 70.40% | 57.16% | 72.44% |

| SemEval 2017 Pair [33] | Gold Standard (GS) [33] | Top Model Score | Top Model Difference from GS | Top Model | Proposed Method Score | Proposed Method Difference from GS |
|---|---|---|---|---|---|---|
| Pair 14 | 1.8 | 3.2 | (+1.4) | DT_team [9] | 1.67 | (−0.13) |
| Pair 78 | 1.0 | 1.9 | (+0.9) | FCICU [31] | 2.70 | (+1.70) |
| Pair 84 | 4.0 | 3.6 | (−0.4) | BIT [11] | 2.50 | (−1.50) |
| Pair 115 | 5.0 | 4.5 | (−0.5) | ITNLP [34] | 5.00 | (+0.00) |
| Pair 184 | 3.0 | 4.0 | (+1.4) | BIT [11] | 2.50 | (−0.50) |
| Pair 195 | 0.2 | 0.8 | (+0.6) | FCICU [31] | 0.14 | (−0.06) |
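The difference columns in the table above are score minus gold standard. The sketch below recomputes them from the listed scores and adds a mean absolute difference per column as a rough summary. The summary statistic and all names are assumptions made for illustration, not something the table reports, and because the differences are recomputed they follow the score columns wherever a tabulated difference disagrees.

```python
# Per pair: (gold standard, top-model score, proposed-method score), from the table.
PAIRS = {
    "Pair 14": (1.8, 3.2, 1.67),
    "Pair 78": (1.0, 1.9, 2.70),
    "Pair 84": (4.0, 3.6, 2.50),
    "Pair 115": (5.0, 4.5, 5.00),
    "Pair 184": (3.0, 4.0, 2.50),
    "Pair 195": (0.2, 0.8, 0.14),
}


def difference(score, gold):
    """Signed difference from the gold standard, rounded to two decimals."""
    return round(score - gold, 2)


def mean_abs_difference(column):
    """Mean absolute difference for column 1 (top model) or 2 (proposed)."""
    diffs = [abs(row[column] - row[0]) for row in PAIRS.values()]
    return round(sum(diffs) / len(diffs), 2)


for name, (gold, top, ours) in PAIRS.items():
    print(name, difference(top, gold), difference(ours, gold))
```

Six hand-picked pairs are far too few to rank systems, so the summary is only a reading aid for this table.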

| Model | Pearson ×100 | Synsets | Align. | Train | KB | WEmb | StopWords | POS | Sim |
|---|---|---|---|---|---|---|---|---|---|
| Supervised | | | | | | | | | |
| ECNU [10] | 85.18 | - | BOW, Dependency | DAN [18], LSTM [8] | PPDB [35] | GloVe [36], Paragram | - | Stanford [19] | Regression (RF, GB) |
| BIT [11] | 84.00 | - | - | LR, SVM | British National Corpus | Word2vec [13] + IDF | - | NLTK [37] | cosine + IDF |
| DT_team [9] | 83.60 | - | Word and chunk | DSSM, CDSSM | PPDB [35] | Word2vec [13], Sent2vec | - | own POS | LR, GB |
| ITNLP-AiKF [34] | 82.31 | - | Semantics, context | SVR | Wikipedia, Twitter | Word2vec [13], GloVe [36] | - | NLTK [37] | stat. freq. (IC) [20] |
| MITRE [38] | 80.53 | - | based on cosine | CRNN, LSTM | Wikipedia | Word2vec [13] | - | - | string sim. |
| HCTI [39] | 81.56 | - | - | CNN | GloVe [36] | GloVe [36] | - | NLTK [37] | cosine |
| UdL [40] | 80.04 | | Alig. POS | Reg. RF | GloVe [36] | GloVe [36] | | | cosine |
| STS-UHH [41] | 80 | - | GloVe [36], Dependency Graph | LDA | Distributional Thesaurus | GloVe [36] | - | Stanford [19], TLCS | weighted cosine |
| PurdueNLP [42] | 79.28 | - | - | Skip-Gram | PPDB [35] | Paraphrase and Event | - | - | Regression |
| Neobility [43] | 79.25 | - | N-gram overlap | RNN, GRU | Wikipedia, WordNet [7] | Word2vec [13] | - | - | cosine |
| OPI-JSA [44] | 78.50 | - | - | RNN, MLP | BNC, BookCorpus | GloVe [36] | - | PoS weighted on cosine | cosine |
| L2F/INESC-ID [45] | 78.11 | - | - | NN | SICK | Vectors | - | - | SMATCH |
| Lump [46] | 73.76 | from BabelNet [5] | Explicit Analysis | GB, SVM | BabelNet [5] | Word2vec [13] | - | - | cosine |
| ResSim [47] | 69.06 | - | Word Alig. | NN | Europarl | Europarl | - | - | Adam alg. |
| Unsupervised | | | | | | | | | |
| FCICU [31] | 82.80 | from BabelNet [5] | Similarity metric | - | BabelNet [5] | - | yes | Stanford [19] | Synset, Alignment |
| BIT [11] | 81.61 | - | Sentence Alignment | - | British National Corpus | - | - | NLTK [37] | stat. freq. (IC) [20] |
| SEF@UHH [32] | 78.80 | - | - | PV-DBOW | Common-crawl, others | Doc2Vec [48] | - | - | cosine |
| Ours | 77.40 | deduced from KB | Driven by POS | - | Dictionary, Thesaurus | - | yes | Rules (SVO) | Weighted by POS |
| STS-UHH [41] | 73 | - | GloVe [36] | - | - | GloVe [36] | - | Stanford [19] | cosine |
| QLUT [49] | 68.87 | - | - | - | Wikipedia | Word2vec [13] | yes | Stanford [19] | cosine |
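The “Pearson ×100” column reports the Pearson correlation between predicted and gold similarity scores, scaled by 100. A minimal pure-Python sketch of that metric follows; the function name is hypothetical and this is the textbook formula, not the evaluation script used for the table.

```python
import math


def pearson_x100(predicted, gold):
    """Pearson correlation between two equal-length score lists, times 100."""
    n = len(predicted)
    if n != len(gold) or n < 2:
        raise ValueError("need two lists of equal length with at least 2 scores")
    mean_p = sum(predicted) / n
    mean_g = sum(gold) / n
    # Sums of centred products; the 1/n factors cancel in the ratio.
    cov = sum((p - mean_p) * (g - mean_g) for p, g in zip(predicted, gold))
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    var_g = sum((g - mean_g) ** 2 for g in gold)
    if var_p == 0 or var_g == 0:
        raise ValueError("correlation is undefined for constant scores")
    return 100 * cov / math.sqrt(var_p * var_g)
```

Because the correlation is invariant to linear rescaling, a system whose scores are consistently offset from the gold standard can still score highly, which is worth keeping in mind when comparing rows.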


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zatarain, O.; Rumbo-Morales, J.Y.; Ramos-Cabral, S.; Ortíz-Torres, G.; Sorcia-Vázquez, F.d.J.; Guillén-Escamilla, I.; Mixteco-Sánchez, J.C. A Method for Perception and Assessment of Semantic Textual Similarities in English. Mathematics 2023, 11, 2700. https://doi.org/10.3390/math11122700
