1. Introduction

Rapid developments in fundamental robotics techniques and Artificial Intelligence (AI) models have enabled versatile outdoor robots to reduce human effort in dull, dirty, and repetitive tasks, thereby easing labour shortages and reducing workplace safety and infection risks. There is vast market demand for diverse outdoor applications such as pavement sweeping, fumigation and fogging, landscaping, inspection, logistics, and security. According to ABI Research, shipments of outdoor mobile robots are expected to reach 350,000 units by 2030, compared with 40,000 units in 2021 [1]. Much research has been published on improving autonomous performance in outdoor environments, for example, environmental perception [2], visual navigation [3], path planning [4], localisation [5], motion control [6], and pedestrian safety [7]. Although an outdoor robot degrades faster because of its exposure to extreme terrain and weather changes, Condition Monitoring (CM) is still not commonly used to assess its health state and the terrain-related flaws that cause accelerated deterioration and potential hazards. The abovementioned research works mainly focus on performing operations autonomously. However, given the open, hostile, and unsafe outdoor environment, there is still a long way to go before these robots can operate without human guidance. Also, local regulations and certifications must be checked and complied with before deploying robots autonomously on public pavements [8].

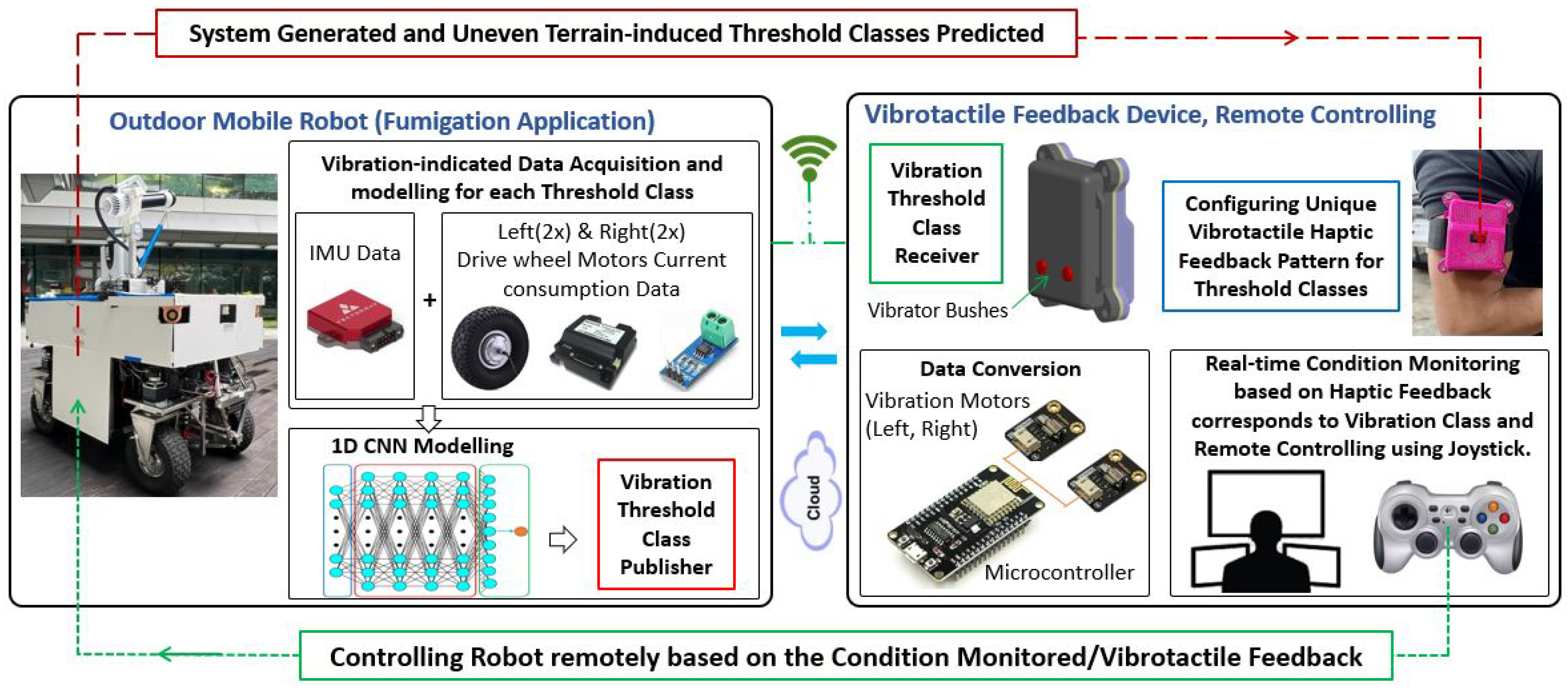

In this context, shared autonomy, where the robot’s autonomous system and operator input work collaboratively to make decisions and achieve common goals [9,10], is a safe and feasible option for outdoor robot deployment. Also, studies on haptic-feedback-based motion control of robots [11,12,13,14] have enabled effective remote operation. Environment-independent sensor selection for data acquisition and a fast and accurate classifier model are crucial for real-time CM frameworks in the outdoor environment. There are numerous AI-enabled and vibration-based terrainability studies for outdoor robots using different sensors to classify tile, stone, sand, asphalt, grass, and gravel [15,16,17,18,19], which help to decide the traversability of the robot, but there is no proper CM approach for assessing the health condition of the robot and the level of terrain-induced concerns that accelerate system degradation. Hence, an automated CM and feasible remote control framework involving shared autonomy and a haptic feedback system is imperative for outdoor robots, helping to either stop or minimise exposure to extreme terrain conditions based on the internal health and external terrain states of the robot. To this end, this paper proposes and discusses vibration-based anomaly prediction using Inertial Measurement Unit (IMU) and current sensor data with a 1D Convolutional Neural Network (1D CNN) as the classifier, along with real-time robot control through a wearable vibrotactile haptic feedback system, assuring productivity and operational safety in outdoor mobile robots.

1.1. Problem Statement

The deployment of mobile robots in a dynamically changing and unsafe outdoor environment is still a challenging problem, and condition monitoring to assess the robot’s health and the uneven terrain features that accelerate deterioration is an unexplored research area. Its absence results in catastrophic failure, high maintenance cost, unexpected downtime, operational hazards, and customer dissatisfaction. The aforesaid terrainability studies may help last-mile delivery or outdoor security robots in path planning by avoiding terrain-related flaws. However, in fumigation, lawn mowing, and pavement sweeping applications, the robot is supposed to cover a given area for the intended function. In particular, traversing grass fields is more challenging because exteroceptive techniques are impractical when most ground-level imperfections are grass-covered. The system also degrades naturally due to continuous usage, even when deployed in a plain area, and deterioration may be accelerated by terrain flaws depending on the workspace. Hence, maintenance at fixed periodic intervals is not advised for outdoor robots. In general practice, the robot’s condition and the suitability of a given area for deployment are assessed manually, which is labour-intensive and results in high maintenance costs. Hence, a study on an automated CM strategy for outdoor mobile robots is vital for addressing this problem.

Selecting proper sensors to capture the characteristics of both system degradation and terrain-induced concerns is critical, and the selection should be environment-independent for outdoor robots. Vibration is widely accepted for fault detection, and most studies have used an IMU sensor to model vibration-indicated data based on changes in linear and angular motion. However, robots are sometimes stopped by ground-level obstacles without any abnormal vibration being observed, so additional suitable sensors must be integrated and explored. A fast, computationally low-cost neural network model with high prediction accuracy is required for real-time applications to avoid false-positive actions. The shared autonomy and haptic-feedback-based approach is feasible for CM in outdoor robots; however, current studies mainly focus on controlling robot arms and on motion control and safe navigation, i.e., avoiding obstacles in the heading direction of mobile robots and drones, and do not explore CM applications in outdoor mobile robots. Since CM covers internal and external factors and involves different threshold levels, a system of multi-level haptic feedback patterns is essential for effectively monitoring and controlling the robot. The proposed study analyses the abovementioned problems to find a feasible solution for CM applications in outdoor mobile robots.

1.2. Related Works

Abnormal vibration is an indicator of system failure; hence, many fault detection and prognosis studies are based on vibration-indicated data collected through suitable sensors, mainly accelerometers [20,21,22]. Deep learning models, especially the 1D CNN, have been found to be accurate in extracting the unique features needed to classify and assess the severity of anomalies, and they are used for real-time applications due to their simple structure, low computational complexity, and easy deployability [23]. Vibration and 1D-CNN-based works are common for the CM and fault assessment of machine components [24,25], structural systems [26], and industrial robots [27]. Other CM and fault diagnosis works for industrial robots include minimising downtime in a wafer transfer robot [28], failure prediction in a packaging robot [29], and a safe stop for a collaborative robot [30]. However, such CM works have not been extended to outdoor mobile robots, even though there is great research scope using the typical onboard sensors and a clear necessity because of the vast market demand and safety concerns. Towards this CM effort, we introduced two vibration-based approaches in previous works for indoor mobile robots, considering both internal and external sources of vibration. In the IMU sensor-based work [31], the change in angular velocity and linear acceleration due to vibration sources is modelled as vibration data; in the second work, a monocular camera sensor [32] is used, and the change in the 2D optical flow vector displacement is modelled as vibration-indicated data for training. In both works, a 1D CNN was adopted as the classifier and compared with other models, such as a Support Vector Machine (SVM), Multilayer Perceptron (MLP), Long Short-Term Memory (LSTM), and CNN-LSTM, showing better accuracy and inference time.

CM studies for outdoor robots are more challenging and remain unexplored; existing studies are limited to terrain classification using different sensors and AI models to assess traversability only. For instance, a terrain type study in [15] uses an IMU and a Probabilistic Neural Network (PNN) for autonomous ground vehicles, classifying asphalt, gravel, mud, and grass. A terrain feature study based on texture-based descriptors is conducted in [17] using a camera and a Random Forest (RF) classifier. A sensor fusion approach using an IMU and a camera is used in [18] for terrain classification, adopting an SVM as the classifier and noting that a faster model is needed for real-time application. A 3D LiDAR sensor is used for traversability analysis in [19] with a positive naive Bayes classifier, defining feature vectors with different dimensions. When wheel–terrain interaction is tough because of ground-level imperfections and obstacles, a higher torque is applied to overcome the flaws and, naturally, the current consumption rises. However, current sensors are used more for energy-efficiency strategy studies in mobile robots [33,34] than for CM applications, except for an earlier study in [35] on fault diagnosis in motor-driven equipment by monitoring current readings.

The vibrotactile haptic feedback approach is used in many systems to convey information to the user or operator through vibration patterns, mainly for human health monitoring or assistance through wearable devices, such as the health monitoring of an offshore operator in [36] and wheelchair navigation for people with disabilities in [37]. Recently, these techniques have been applied to remotely controlled industrial and mobile robots. For instance, haptic-feedback-based teleoperation using a Phantom Omni haptic feedback master device and a 6-DOF robot arm with a force sensor as the slave enabled the operator to adjust the position and direction of the arm safely from a distance based on the distance from the haptic feedback to the manipulator [38], and a similar study was conducted in [39] to stop the manipulator and avoid collisions. An inexpensive electro-tactile-based haptic feedback method is presented in [40] for obstacle avoidance in the cluttered workspace of a teleoperated robot arm. A wearable hand gesture recognition and vibrotactile system is developed in [41] to control drone navigation, where the obstacle information in the heading direction is fed back to the operator. Haptic feedback and shared autonomy-based teleoperation for the safe navigation of mobile robots is explained in [11], where obstacle range information is fed back to the operator as a force through a haptic probe. Similar force feedback studies for mobile robots include [12,13,14]. These haptic-feedback-based works are mainly undertaken for indoor robots, for smooth navigation and avoiding obstacles in the heading direction. However, to our knowledge, there are no such works for CM applications in outdoor robots that feed back the system deterioration stage and terrain-related features to ensure the robot’s health and operational safety.

1.3. Contributions

1. An unexplored vibration-based Condition Monitoring (CM) framework is introduced for outdoor mobile robots considering both the system deterioration state and uneven terrain features.
2. Eight abnormal states are identified for an outdoor wheeled robot set at different threshold levels for CM in view of real-world cases.
3. The vibration-indicated data are modelled based on IMU and current sensors data, which complement each other for system-generated/degraded and wheel–terrain interaction-related robot behaviour.
4. A simple 1D CNN-based model is structured to be fast, accurate, and computationally low-cost, enabling the real-time prediction of vibration threshold classes.
5. A novel wearable vibrotactile haptic feedback device architecture is presented for CM applications in outdoor robots and configured with unique tactile feedback patterns for each threshold class.
6. The real-time field case studies conducted using an in-house-developed outdoor fumigation robot show the impacts of the proposed research framework, enhancing the robot’s health and operational safety.
7. The proposed AI-enabled vibrotactile feedback-based CM framework for real-time remote controlling is the first of its kind for outdoor mobile robots.

The remainder of this paper is structured as follows. Section 2 presents an overview and the methodology of the proposed work. Section 3 elaborates on the framework evaluation and results. Section 4 explains the field case studies conducted to validate the proposed method for real-time application, and Section 5 concludes with a summary of the work.

3. Training and Evaluation of 1D-CNN-Based Threshold Class Prediction Model

This section describes the data acquisition methods for the nine classes based on the two heterogeneous sensors and their visual representation, followed by the 1D CNN model training for optimum performance and the evaluation results.

3.1. Training Dataset Preparation for the Vibration Threshold Classes

Acquiring data that represent the nine classes for training the 1D CNN model, using the two heterogeneous sensors integrated with the fumigation robot, is critical. Hence, it is first confirmed that the VectorNav VN-100 IMU sensor and the four ACS712 30 A current sensors are mounted firmly on the chassis and wheel motors, and that the data subscription rate (80 Hz) and the dataset collection algorithm work properly, compiling the data in an array of [n × 128 × 11] as explained in Section 2.1.3. The robot’s linear speed is set at 0.3 m/s, which is the same as the operating speed, and the robot is driven in a zig-zag pattern while collecting the training data. For the safe class dataset, the robot is first tested to assure good health and is then operated on plain and well-maintained pavements and grass fields, where no apparent vibrations are observed. The dataset collected during this trial is saved and labelled as the safe class. Data collection for the abnormal threshold classes involves modifying the robot to represent system-generated classes and running it through uneven environments with real and simulated ground-level obstacles, including positive and negative obstacles/features and unstructured grass fields. Some of the setups used to capture the internal system-generated classes (health issues) and the external uneven/undetected ground-level obstacles/features for terrain-induced class data collection are shown in Figure 8.

The robot was modified at a minor level for the Moderately safe System-generated class (MS). This included loosening the mounting brackets of component assemblies, lowering the air pressure of one or more wheels, reducing the suspension system performance, and offsetting heavier items such as the battery. Then, the robot was driven on the same plain terrains as for the safe class, and a minor level of vibration was noticed compared to the safe class, which is purely due to the degraded system. The data were collected and labelled as the MS class. Further, for the Unsafe System-generated class (US), the above modifications were intensified under close observation and with safety measures such as an emergency stop, resulting in higher vibration of the robot compared to the MS class. The data collected during these trials were labelled as the US class.

The left and right wheels of the robot are 0.7 m apart; hence, a spot ground-level obstacle affects only the wheel that meets it, and the accelerated deterioration is confined to that wheel and its related assembly. Accordingly, if the robot encounters spot obstacles at the left-side wheels (front and rear), the data are labelled as MTL or UTL depending on the size of the ground-level obstacles and, similarly, as MTR or UTR for the right-side wheels. The advantage of this approach is that the operator, knowing whether a spot obstacle is on the left or right, can easily avoid the terrain-induced vibration by moving left or right, utilising the holonomic mobility of the robot. Alternatively, if the obstacle is spread out, like a long root or a large uneven pebbled area, both left and right wheels undergo the same experience; hence, such data are labelled as MT or UT.

Next, to collect the moderately safe terrain-induced class data, the robot is exposed to typical pavements and grass fields with uneven/unstructured surfaces, damaged or missing pavement tiles, stones, roots, pits, raised utility hole lids, and gutters, including simulated obstacles. The selected imperfections had a moderate size of around 2–4 cm, both positive and negative. As explained above, these Moderate Terrain-induced data are recorded as MTL, MTR, and MT. The same exercise is repeated for the Unsafe Terrain-induced classes (UTL, UTR, and UT) by driving through undesired terrain features of around 4–6 cm in height, and the data were labelled accordingly.
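The labelling convention described above can be sketched as a small helper. This is only an illustration: the function name, the `side` argument, and the exact cut-offs are assumptions, since the text gives the sizes as approximate ranges ("around 2–4 cm" and "around 4–6 cm") and does not state how the boundary at 4 cm or sizes outside these ranges were handled.

```python
# Hypothetical helper: names and exact cut-offs are assumptions for illustration.
MODERATE_RANGE_CM = (2.0, 4.0)  # "around 2-4 cm" in the text
UNSAFE_RANGE_CM = (4.0, 6.0)    # "around 4-6 cm" in the text

# Spot obstacles get a side suffix; spread obstacles ("both") get none.
SIDE_SUFFIX = {"left": "L", "right": "R", "both": ""}


def terrain_label(side: str, size_cm: float) -> str:
    """Return MTL/MTR/MT or UTL/UTR/UT for an obstacle of the given size."""
    if side not in SIDE_SUFFIX:
        raise ValueError(f"unknown side: {side!r}")
    size = abs(size_cm)  # positive and negative features are treated alike
    if MODERATE_RANGE_CM[0] <= size < MODERATE_RANGE_CM[1]:
        prefix = "MT"
    elif UNSAFE_RANGE_CM[0] <= size <= UNSAFE_RANGE_CM[1]:
        prefix = "UT"
    else:
        # The text does not say how other sizes were labelled.
        raise ValueError("size outside the ranges described in the text")
    return prefix + SIDE_SUFFIX[side]
```

For example, a 3 cm stone met by the left wheels would map to MTL, while a 5 cm root spanning both sides would map to UT.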

For all Unsafe classes (US, UTL, UTR, and UT), as the robot vibrates more severely, the deterioration rate becomes faster, and the chances of total failure and hazards are high. Hence, during such trials, the robot was stopped intermittently and adjusted so that the trials could continue within the unsafe class. Also, safety measures such as close observation and an emergency stop button were in place for use if needed. For each threshold class, a total of 2500 samples, forming an array of [2500 × 128 × 11], were saved and split into 80% and 20% for training and validation, respectively, for training the 1D CNN model. Additionally, a total of 500 samples were recorded for each class to evaluate the accuracy of the trained model. The vibration-indicated IMU and current sensor data showed different values/patterns across the nine classes, enabling faster convergence in extracting the unique characteristics of each class and a higher prediction accuracy. For a visual representation of this unique dataset for each class, we randomly selected a single dataset of 128 elements and plotted only five features, i.e., the IMU features (angular velocity about the X, Y, and Z axes) and the current sensor features (left and right wheel current consumption), as shown in Figure 9 and Figure 10.
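As a rough illustration of the dataset shape and split described above, the following sketch cuts a stream of 11-feature readings into windows of 128 samples and divides them 80/20. The function names are hypothetical, the windows are assumed to be non-overlapping, and the shuffle with a fixed seed is an assumption; the synthetic zero-valued stream only stands in for recorded sensor data.

```python
import random

WINDOW = 128      # temporal samples per dataset
N_FEATURES = 11   # IMU + current sensor features per sample


def make_windows(stream):
    """Cut a list of 11-feature readings into non-overlapping 128-step windows."""
    n = len(stream) // WINDOW
    return [stream[i * WINDOW:(i + 1) * WINDOW] for i in range(n)]


def split_train_val(windows, train_fraction=0.8, seed=0):
    """Shuffle windows and split them into training and validation subsets."""
    shuffled = list(windows)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(len(shuffled) * train_fraction))
    return shuffled[:cut], shuffled[cut:]


# Synthetic stand-in for one class: enough readings for 2500 windows.
row = [0.0] * N_FEATURES
stream = [row] * (2500 * WINDOW)
windows = make_windows(stream)
train, val = split_train_val(windows)
print(len(windows), len(train), len(val))
```

With 2500 windows per class, this split yields 2000 training and 500 validation windows, each of shape [128 × 11].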

As the data features are on different scales, especially the current sensor values, the data are normalised to a standard scale during pre-processing, supporting faster convergence during the 1D CNN training. Here, the IMU data were normalised to the range −1 to +1 and the current consumption data to the range 0 to 1, following Equations (5) and (6), respectively.
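A minimal sketch of such scaling is given below. It assumes plain min-max normalisation with known lower and upper bounds per feature; Equations (5) and (6) may differ in detail, and the function names and the example bounds are illustrative only.

```python
def scale_to_unit(values, lo, hi):
    """Min-max scale values into [0, 1] (assumed form for current data)."""
    span = hi - lo
    return [(v - lo) / span for v in values]


def scale_to_symmetric(values, lo, hi):
    """Min-max scale values into [-1, +1] (assumed form for IMU data)."""
    return [2.0 * u - 1.0 for u in scale_to_unit(values, lo, hi)]


# Illustrative bounds only: 0-30 A for a current channel, +/-2 for an IMU axis.
current_scaled = scale_to_unit([0.0, 15.0, 30.0], 0.0, 30.0)
imu_scaled = scale_to_symmetric([-2.0, 0.0, 2.0], -2.0, 2.0)
```

Keeping the IMU channels symmetric about zero preserves the sign of the motion, while the current channels, which are non-negative here, map naturally onto 0 to 1.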

3.2. 1D CNN Model Training and Evaluation Results

This section provides the 1D CNN model training methodology and the results. The compiled dataset from the heterogeneous sensors is used to train the model following a supervised learning strategy on an Nvidia GeForce GTX 1080 Ti-powered workstation with the TensorFlow DL library [43]. To avoid overfitting and to improve generalisation, K-fold cross-validation is applied with k = 5, assuring the dataset quality. A gradient descent optimisation strategy with momentum is adopted for faster learning and to avoid getting stuck in local minima. After tuning with different functions and values, the following hyperparameters are used for the optimum performance of the model. An adaptive learning rate optimisation algorithm, the adaptive moment estimation (Adam) optimiser [44], is applied with a learning rate of 0.001. Here, exponential decay rates of 0.9 and 0.999 are used for the first and second moments, respectively. As this model performs multinomial classification with probability prediction, a categorical cross-entropy loss function is used as the loss to be minimised when compiling the model. The model performed better with a batch size of 32 and 100 epochs, as plotted in Figure 11, which depicts the loss and accuracy curves during training and validation.
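To make the role of the stated Adam hyperparameters concrete, a single scalar update step can be sketched as follows. This is the standard Adam rule written out in plain Python, not the TensorFlow implementation used in this work; the function name and the value of `eps` are assumptions.

```python
import math


def adam_step(theta, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-7):
    """Apply one Adam update to a scalar parameter at time step t (t >= 1)."""
    m = beta1 * m + (1.0 - beta1) * grad          # first-moment estimate
    v = beta2 * v + (1.0 - beta2) * grad * grad   # second-moment estimate
    m_hat = m / (1.0 - beta1 ** t)                # bias-corrected first moment
    v_hat = v / (1.0 - beta2 ** t)                # bias-corrected second moment
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v


# One step from theta = 1.0 with gradient 2.0 and zero-initialised moments.
theta, m, v = adam_step(1.0, 2.0, 0.0, 0.0, t=1)
```

On the first step the bias-corrected ratio is close to the sign of the gradient, so the parameter moves by roughly the learning rate regardless of the gradient's magnitude.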

The prediction accuracy of the trained model is assessed using the statistical metrics precision, recall, F1-score, and accuracy following Equations (7)–(10) [45], where TP, TN, FP, and FN are True Positive, True Negative, False Positive, and False Negative, respectively. The additional 500 samples recorded for each class during data acquisition, which were not part of training, were used for the 1D CNN model evaluation, resulting in an average prediction accuracy of 92.6% with an inference time of 0.238 ms. Table 1 lists the detailed results of this offline evaluation test for each class. Hence, the proposed model is suitable for accurately predicting the vibration threshold classes in condition monitoring applications for outdoor mobile robots.
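For reference, the four metrics can be computed from per-class counts as in the sketch below. It uses the standard definitions of precision, recall, F1-score, and accuracy, which Equations (7)–(10) are assumed to follow; the function name and the example counts are illustrative and are not results from this study.

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute standard metrics from one-vs-rest counts for a single class.

    Assumes the denominators are non-zero.
    """
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "accuracy": accuracy,
    }


# Illustrative counts only, not taken from Table 1.
example = classification_metrics(tp=90, tn=80, fp=10, fn=20)
```

In a nine-class setting, the counts for each class are obtained by treating that class as positive and all others as negative, and the per-class values can then be averaged.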

The challenges encountered during the training and evaluation of the 1D-CNN-based classifier, and their fixes, are summarised as follows. Acquiring and compiling training data representing all nine classes was challenging: the data subscription rate must be the same for both types of sensors selected (IMU and current sensors), a higher rate is preferred to maximise the number of predictions per unit of time, and every data feature (a total of 11 features from the two types of sensors) must fill the same process window size (128 temporal data) to form a single dataset for training. This was realised by developing a data subscription and compiling algorithm, lowering the delay in the Arduino program during the current consumption data subscription to maximise the data subscription rate, and ensuring that the dataset was prepared for all the features with a uniform processing window size. As the IMU and current sensor data fall on different scales, the convergence speed during training suffers; hence, the data are brought to a common scale by normalisation. Fast, low-cost computation and high prediction accuracy are crucial for CM in a mobile robot; hence, the model is structured with a minimum number (four) of convolution layers and tested with different filter sizes and convolution windows to choose the ideal parameters for the given dataset. We opted for a suitable activation function (ReLU) for this classification model, tested different configurations of pooling and dropout layers to reduce the computation time and overfitting, and finalised the structure of the 1D CNN model for fast and accurate prediction. Similarly, the challenges faced during training in achieving fast convergence and better accuracy were addressed by exploring the various hyperparameters of the model. We used the accuracy and loss curves to monitor how well the proposed 1D CNN model fits the training data (training loss) and new data (validation loss) and updated the hyperparameters accordingly to finalise a fast and accurate model.

4. Real-Time Experiments and Framework Validation

The proposed haptic-feedback-based real-time condition monitoring and remote control framework, which assesses system-generated issues (mainly deterioration) and minimises traversal through terrain-related flaws to reduce the chances of accelerated deterioration and hazards, is validated by conducting three field case studies. Prior to the case studies, a threshold class inference and haptic feedback trigger engine is developed, as illustrated in the flowchart in Figure 12. Here, the engine applies the 1D CNN model’s knowledge to every new data sample for real-time inference. A total of 128 temporal data (Data) and 11 features (Feature) form a new dataset [128 × 11] for prediction. A placeholder, TemporalBuffer, is used during dataset collection, and InferenceBuffer holds one complete dataset as input to the 1D CNN model, which runs on the TensorFlow platform and returns the predicted threshold class. The algorithm also maps the predicted class (PC) to the corresponding vibration pattern, as illustrated. Next, the robot’s shared autonomy and control operations over cloud computing, including the class inference and haptic feedback engine performance, are verified by running the robot on a plain pavement and introducing rods of different sizes as obstacles, testing the safe, MTL, MTR, MT, UTL, UTR, and UT classes, as shown in Figure 13.
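The buffering logic of the inference and haptic trigger engine can be sketched as below. This is a simplified illustration rather than the deployed engine: the class and function names are hypothetical, the windows are assumed to be non-overlapping, the pattern identifiers are placeholder integers, and `stub_predict` is a made-up stand-in for the trained 1D CNN.

```python
WINDOW = 128
N_FEATURES = 11

# Nine threshold classes; the integer pattern ids are placeholders.
CLASSES = ["safe", "MS", "US", "MTL", "MTR", "MT", "UTL", "UTR", "UT"]
PATTERN = {name: idx for idx, name in enumerate(CLASSES)}


class InferenceEngine:
    def __init__(self, predict):
        self.predict = predict        # callable: [128 x 11] window -> class name
        self.temporal_buffer = []     # fills up while samples are collected

    def push(self, sample):
        """Add one 11-feature sample; return (PC, pattern) when a window is full."""
        if len(sample) != N_FEATURES:
            raise ValueError("expected 11 features per sample")
        self.temporal_buffer.append(list(sample))
        if len(self.temporal_buffer) < WINDOW:
            return None
        inference_buffer = self.temporal_buffer  # one complete dataset
        self.temporal_buffer = []                # start collecting the next one
        pc = self.predict(inference_buffer)
        return pc, PATTERN[pc]


def stub_predict(window):
    """Hypothetical classifier used only to exercise the buffering logic."""
    return "MT" if max(row[0] for row in window) > 0.5 else "safe"


engine = InferenceEngine(stub_predict)
quiet = [engine.push([0.0] * N_FEATURES) for _ in range(WINDOW)]
rough = [engine.push([1.0] * N_FEATURES) for _ in range(WINDOW)]
```

Under the non-overlapping assumption and the 80 Hz subscription rate, one prediction would be issued every 128/80 = 1.6 s; a sliding window would raise that rate at the cost of more inference calls.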

Field case studies are planned to assess the real-time prediction accuracy for all threshold classes and how the vibrotactile haptic feedback system enables operators to stop the robot, avoid or minimise exposure to ground-level obstacles, and maintain the safe class. Two engineers are trained to recognise the vibration pattern for each threshold class and are assigned to these field trials to monitor the robot, switch over to manual control, and operate it based on the haptic feedback patterns. The robot is driven in a zig-zag pattern to cover the given test area at a linear speed of 0.3 m/s. The IMU and current sensor data are both subscribed at the same rate of 80 Hz. Before starting each trial, it is also ensured that the robot is in good health.

A plain ground with uniformly paved tiles is identified for the first case study, and a total area of 10 × 12 m² is selected for testing. There are a few drainage utility holes with raised covers as ground-level obstacles, and some aluminium profiles of different sizes are also placed randomly to induce terrain threshold classes. Secondly, an uneven grass field with a test area of 15 × 20 m² is selected, where many natural surface-level flaws of different sizes are observed, such as small pits, stones, and tree roots spread over the ground, that are either passable or blocking. Most of these imperfections are covered by grass, so they can be detected and handled only through the proposed retrospective technique. Finally, a pavement area is identified with ground-level obstacles, such as missing or broken tiles, small pits, cliffs, kerbs, gutters, and drainage covers at varied heights, and more obstacles of the same kind as in the first case study are added, simulating a poorly maintained pavement. Here, a total area of 8 × 16 m² was selected and isolated from other users, considering further testing as a long-term trial for system-generated classes. The test areas for these three field case studies are shown in Figure 14.

We planned three operation modes for each case study to validate the effectiveness of the proposed haptic-feedback-based condition monitoring and robot control framework. In the first mode, the haptic feedback system is not used, and the total number of abnormal threshold classes predicted by the system is observed. This means that the robot is fully exposed to the uneven terrain of each test area, which would cause accelerated system deterioration or become hazardous if the robot were driven continuously. The second and third modes run with the proposed haptic feedback system, and the robot is controlled remotely by operators 1 and 2, respectively. Here, we mainly tested how many ground-level obstacles were avoided based on the haptic feedback received when the robot hit a terrain obstacle: the operator could steer away from severe features, limit exposure to the moderate class, or change the heading direction so that the rear wheels did not hit the same obstacle. We therefore compared the total number of abnormal threshold classes against the count without the haptic feedback and control system. Also, with two operators, the repeatability of the system was tested and validated. For each mode, the robot was driven on the three selected field areas and covered each whole area once in a zig-zag pattern per test. The test results of these three case studies (CS 1–3) for the three modes of operation are summarised in Figure 15.

For instance, in case study 1, in mode 1 (without the haptic feedback system for real-time control), the robot encountered 62 abnormal terrain features. In the same test environment, the operator, guided by the proposed haptic feedback, could steer the robot away from such terrain anomalies and encountered only 17 terrain features (mode 2), reducing the chances of accelerated system degradation and operational hazards.

Accordingly, the total number of abnormal classes encountered without haptic feedback, and hence the chance of system deterioration and hazards, is four times higher than with the proposed system. Also, there is little difference between modes 2 and 3, which validates the ease of detecting the feedback patterns and controlling the robot. Additionally, in mode 1, without haptic-feedback-based operations, an on-site safety operator had to intervene whenever the robot got stuck, especially when encountering the MT, UTL, UTR, and UT classes. The number of manual interventions needed to resume operations in mode 1 for case studies 1–3 was 4, 13, and 5, respectively. This shows the additional time and effort required for a robot without the proposed CM framework, on top of the system degradation and chances of hazardous events.

Here, no system-deteriorated classes were observed, as the robot was tested in a healthy condition and each test was completed in a short time, covering the given area once. So, to test the system-generated classes, the third case study was continued as a long-term trial (on average 4 h a day) after removing the comparatively bigger ground-level obstacles, so that the obstacles the robot passed over were limited to the moderately safe terrain-induced classes. The robot was driven in a closed loop and zig-zag pattern in the predefined area. The predicted classes and haptic feedback were tested daily. On the 20th day, an intermittent prediction of the MS class and the corresponding haptic feedback pattern were observed. We continued in this state for a few hours, closely monitoring the ground truth with safety precautions such as the e-stop; subsequently, one of the wheels was punctured and started wobbling. The system occasionally predicted the US class, with the corresponding haptic feedback, whenever the wobbling was severe. To avoid total failure or hazards, we stopped the operations and triggered maintenance actions to fix the loose assembly and replace the wheel.

We randomly collected 300 samples of each class from the above case studies and long-term tests, closely observing the ground truth and the actual haptic feedback to assess the real-time prediction accuracy. The average accuracy was 91.1%, as listed in Table 2 for each class, which is close to the offline evaluation results. This shows the feasibility and repeatable accuracy of the proposed vibrotactile haptic-feedback-based condition monitoring framework for real-time applications in outdoor mobile robots.

The proposed study is distinct from existing techniques such as shared autonomy, haptic feedback, and terrain classification. Typical shared autonomy works mainly switch to operator control because of limitations in safe navigation, i.e., mainly to avoid obstacles in the heading direction. Haptic feedback devices are generally used for human health monitoring and to convey information about obstacles around mobile robots. Terrain classification intends to detect the type of terrain in order to assess traversability. In contrast, our proposed work targets CM applications in outdoor mobile robots, detecting both the robot’s degradation level and the terrain extremities that cause accelerated deterioration and operational hazards. Hence, it provides protection in addition to shared autonomy capabilities.