ReqGen: Keywords-Driven Software Requirements Generation

Abstract

1. Introduction

- Sufficient domain knowledge is needed both to state the right content and to choose appropriate words and expressions, yet domain analysis typically requires non-trivial human effort [4].

- Writing specifications word by word is time-consuming, all the more so because many expressions recur, especially across similar or related requirements. Retyping repeated content, or locating, copying, and pasting it, wastes human effort.

- Generally accepted requirements syntaxes, such as EARS [5], are recommended for writing well-formed specifications, but learning and carefully applying this non-business knowledge places an additional burden on requirements analysts.

- An approach, ReqGen, for automatically generating software requirements statements based on two or more keywords and their syntax roles.

- An evaluation of ReqGen on two public datasets from different domains, showing promising results, together with suggestions for injecting knowledge into pre-trained models.

- The source code and experimental data, made publicly available on GitHub at https://github.com/ZacharyZhao55/ReqGen (accessed on 1 September 2022).

2. Background

2.1. UniLM
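
UniLM [32] shares one Transformer network across its pre-training tasks and switches between them with self-attention masks. The sketch below shows the sequence-to-sequence case, assuming the input is a source segment followed by a target segment: every token may attend to the whole source, target tokens may additionally attend to earlier target tokens and themselves, and source tokens never see the target. The helper `seq2seq_mask` is hypothetical and is not taken from the UniLM code base.

```python
from typing import List


def seq2seq_mask(src_len: int, tgt_len: int) -> List[List[int]]:
    """Return an n x n mask (1 = may attend, 0 = blocked) for [source | target]."""
    n = src_len + tgt_len
    mask = [[0] * n for _ in range(n)]
    for i in range(n):          # i: position doing the attending
        for j in range(n):      # j: position being attended to
            if j < src_len:
                # Every token, source or target, may attend to the full source.
                mask[i][j] = 1
            elif i >= src_len and j <= i:
                # Target tokens see earlier target tokens and themselves only.
                mask[i][j] = 1
    return mask


for row in seq2seq_mask(2, 2):
    print(row)
```

The bidirectional and left-to-right masks used for UniLM's other two objectives are the special cases where every entry is 1, or where `mask[i][j] = 1` exactly when `j <= i`.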

2.2. Attention Mechanism
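
ReqGen injects encoded knowledge through an attention mechanism. For reference, here is a dependency-free sketch of single-query scaled dot-product attention as defined for the Transformer (Vaswani et al.). The query is scored against each key, the scores are divided by the square root of the dimension and normalized with softmax, and the values are averaged with those weights. How ReqGen derives its queries, keys, and values from UniLM states and knowledge encodings is not modeled here.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(query: Vector, keys: List[Vector],
                                 values: List[Vector]) -> Vector:
    """Return sum_i softmax(q . k_i / sqrt(d))_i * v_i for a single query q."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


out = scaled_dot_product_attention([1.0, 0.0],
                                   [[1.0, 0.0], [0.0, 1.0]],
                                   [[10.0, 0.0], [0.0, 10.0]])
print([round(v, 2) for v in out])  # [6.7, 3.3]
```

The query matches the first key more closely, so the output leans toward the first value vector while still mixing in the second.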

2.3. Bi-LSTM
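
A Bi-LSTM runs one LSTM over a sequence left to right and a second one right to left, then concatenates the two hidden states at each position (Graves et al.; Hochreiter and Schmidhuber). The sketch below is dependency-free and uses small random weights with no bias terms, both simplifications. In ReqGen, the inputs would be BERT-based sentence embeddings of the pseudo-sentences; here, tiny hand-made vectors stand in for them.

```python
import math
import random
from typing import List, Tuple

Vec = List[float]
Mat = List[List[float]]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def matvec(m: Mat, v: Vec) -> Vec:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LSTMCell:
    """LSTM cell with input, forget, and output gates (biases omitted)."""

    def __init__(self, input_dim: int, hidden_dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)

        def init() -> Mat:
            # Each gate reads the concatenation [x; h_prev].
            return [[rng.uniform(-0.5, 0.5) for _ in range(input_dim + hidden_dim)]
                    for _ in range(hidden_dim)]

        self.hidden_dim = hidden_dim
        self.w_i, self.w_f, self.w_o, self.w_g = init(), init(), init(), init()

    def step(self, x: Vec, h: Vec, c: Vec) -> Tuple[Vec, Vec]:
        z = x + h  # list concatenation, i.e. [x; h_prev]
        i = [sigmoid(v) for v in matvec(self.w_i, z)]    # input gate
        f = [sigmoid(v) for v in matvec(self.w_f, z)]    # forget gate
        o = [sigmoid(v) for v in matvec(self.w_o, z)]    # output gate
        g = [math.tanh(v) for v in matvec(self.w_g, z)]  # candidate cell state
        c_new = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, g)]
        h_new = [ov * math.tanh(cv) for ov, cv in zip(o, c_new)]
        return h_new, c_new


def run(cell: LSTMCell, xs: List[Vec]) -> List[Vec]:
    h, c = [0.0] * cell.hidden_dim, [0.0] * cell.hidden_dim
    outputs = []
    for x in xs:
        h, c = cell.step(x, h, c)
        outputs.append(h)
    return outputs


def bilstm(forward: LSTMCell, backward: LSTMCell, xs: List[Vec]) -> List[Vec]:
    """Concatenate left-to-right and right-to-left hidden states per position."""
    fwd = run(forward, xs)
    bwd = run(backward, xs[::-1])[::-1]  # re-reverse so index t aligns with xs[t]
    return [f + b for f, b in zip(fwd, bwd)]


sentence_vectors = [[0.1, 0.3, -0.2], [0.0, 0.5, 0.4], [-0.3, 0.2, 0.1]]  # stand-ins
states = bilstm(LSTMCell(3, 4, seed=1), LSTMCell(3, 4, seed=2), sentence_vectors)
print(len(states), len(states[0]))  # 3 8
```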

3. Related Work

3.1. Automated Requirements Generation

3.2. Automated NLG with Lexical Constraints

4. Our Approach: ReqGen

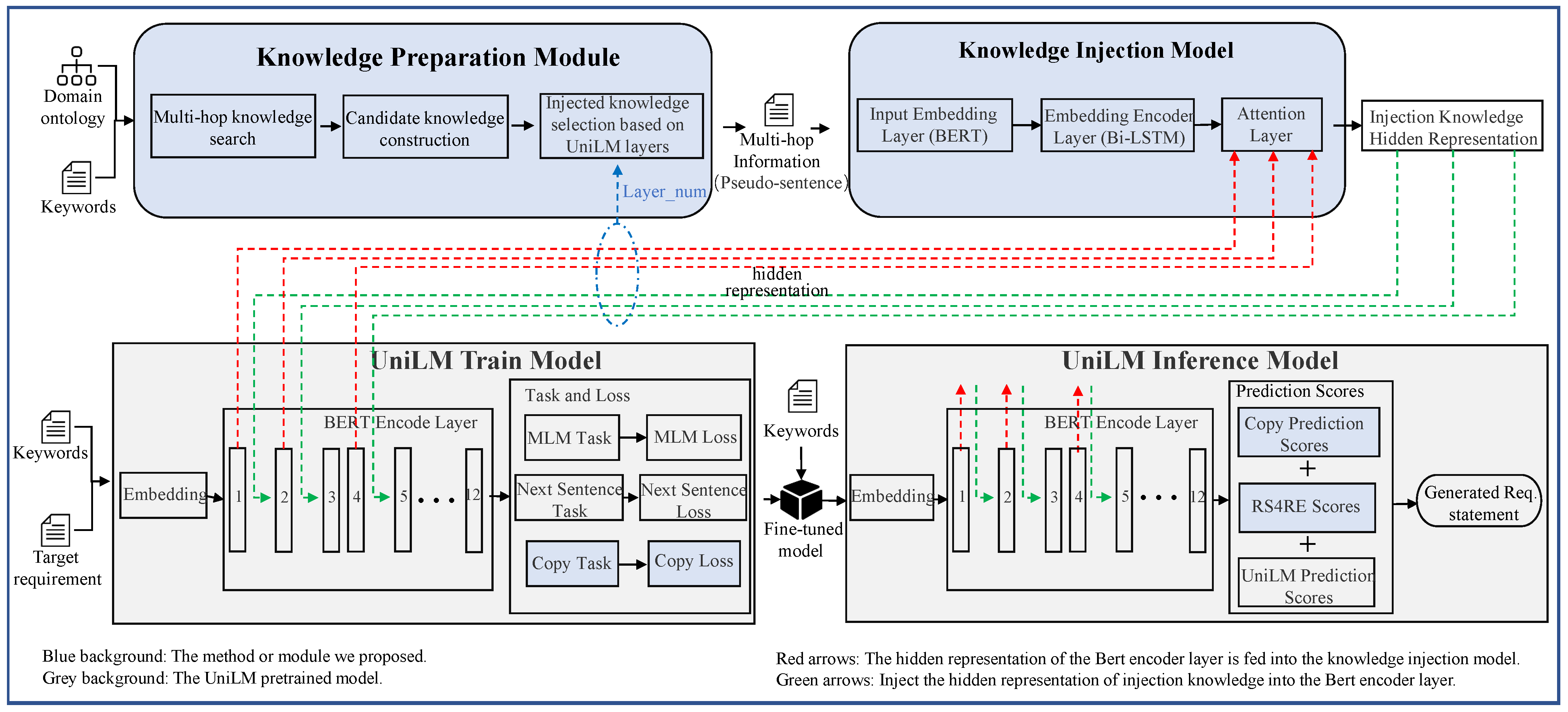

- A knowledge preparation module that retrieves keyword-related information from the domain ontology (as triples consisting of entity pairs and their relationships), transforms this information into pseudo-sentences, and selects the knowledge to be injected into different layers of UniLM.

- A knowledge-injection model that injects the knowledge produced by the knowledge preparation module into UniLM. The knowledge sequence is represented with BERT-based sentence embeddings, encoded by a Bi-LSTM, and injected through an attention mechanism.

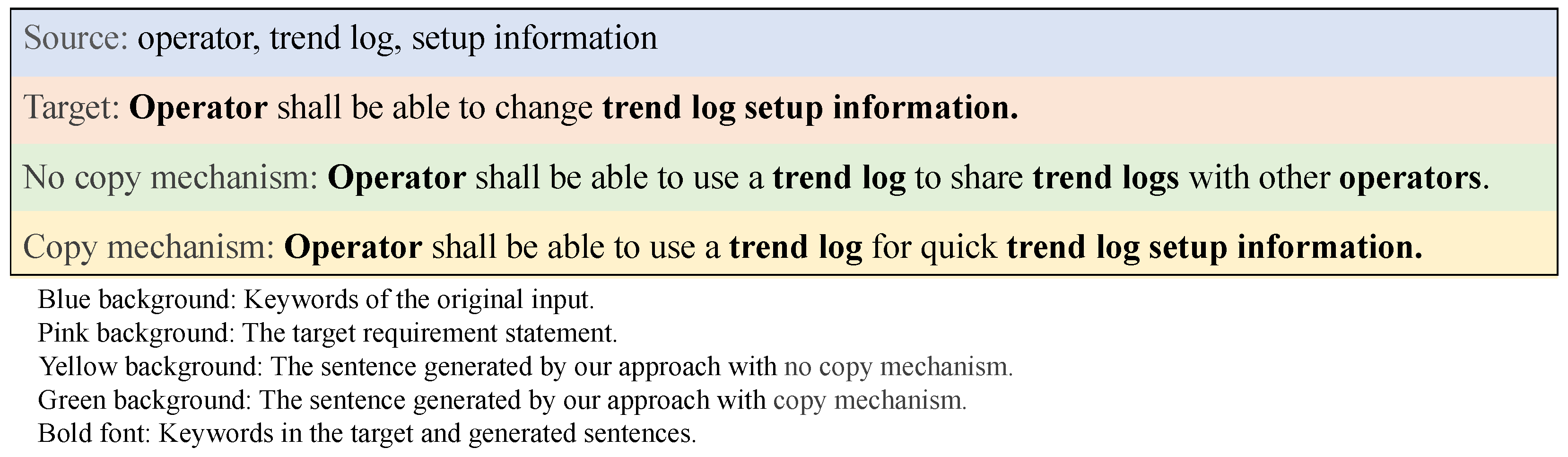

- A keyword copy mechanism added to the original UniLM, which lets the model learn a copied-word classification task during training, together with a prediction method in the UniLM inference model that decides whether the next token is a copied word.

- A requirements-syntax-constrained decoding module that combines a semantics-related metric with the original beam search in the inference model to steer the final statements towards a specific syntax.

4.1. Knowledge Preparation Module

4.1.1. Multi-Hop Knowledge Search
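
The knowledge preparation module retrieves keyword-related triples from the domain ontology. The sketch below shows one possible multi-hop search. It assumes the ontology is a list of `(subject, relation, object)` string triples and that relations are followed in both directions. It runs a breadth-first search from the keyword entities and records the hop at which each triple is first reached. The function name, data layout, and example entities (including the `hasPart` relation) are hypothetical; ReqGen's own search, including its frequency filtering, may differ.

```python
from typing import Dict, Iterable, List, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, relation, object)


def multi_hop_search(triples: Iterable[Triple], keywords: Iterable[str],
                     max_hops: int) -> Dict[int, List[Triple]]:
    """Breadth-first search that groups reachable triples by hop distance."""
    adjacency: Dict[str, List[Triple]] = {}
    for triple in triples:
        subject, _, obj = triple
        adjacency.setdefault(subject, []).append(triple)
        adjacency.setdefault(obj, []).append(triple)

    frontier = list(dict.fromkeys(keywords))  # de-duplicate, keep order
    visited: Set[str] = set(frontier)
    used: Set[Triple] = set()
    by_hop: Dict[int, List[Triple]] = {}
    for hop in range(1, max_hops + 1):
        next_frontier: List[str] = []
        for entity in frontier:
            for triple in adjacency.get(entity, []):
                if triple in used:
                    continue
                used.add(triple)
                by_hop.setdefault(hop, []).append(triple)
                subject, _, obj = triple
                neighbour = obj if subject == entity else subject
                if neighbour not in visited:
                    visited.add(neighbour)
                    next_frontier.append(neighbour)
        if not next_frontier:
            break
        frontier = next_frontier
    return by_hop


ontology = [
    ("UAV", "subClassOf", "Aircraft"),
    ("Aircraft", "subClassOf", "Vehicle"),
    ("Vehicle", "hasPart", "Engine"),
]
print(multi_hop_search(ontology, ["UAV"], max_hops=2))
# {1: [('UAV', 'subClassOf', 'Aircraft')], 2: [('Aircraft', 'subClassOf', 'Vehicle')]}
```

Keeping the hop index makes it straightforward to route nearer and farther knowledge to different places, which is the kind of choice the layer/hop experiments vary.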

4.1.2. Pseudo-Sentence Construction

- subClassOf or hasSuperClasses: For a subClassOf triple relating a to b, we created the sentence “a is subclass of b”; for the corresponding hasSuperClasses triple, we created “a has super class b”.

- subPropertyOf: For a subPropertyOf triple relating a to b, we created a sentence like “a is subproperty of b”.

- has domain: specifies the domain of a property P, indicating that any resource with a given property is an instance of the domain class (e.g., ).

- has range: specifies the range of a property P, indicating that the value of a property is an instance of the range class (e.g., ).
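
The conversion rules above map naturally onto a template table. The sketch below assumes triples are `(subject, relation, object)` tuples. The subClassOf, hasSuperClasses, and subPropertyOf templates follow the sentences given in the text. The `hasDomain`/`hasRange` keys and their wordings are hypothetical, because the text does not give the exact sentences for those relations.

```python
from typing import Optional, Tuple

# subClassOf / hasSuperClasses / subPropertyOf wordings follow the text;
# the hasDomain / hasRange keys and wordings are assumptions for illustration.
TEMPLATES = {
    "subClassOf": "{s} is subclass of {o}",
    "hasSuperClasses": "{s} has super class {o}",
    "subPropertyOf": "{s} is subproperty of {o}",
    "hasDomain": "{s} has domain {o}",  # hypothetical wording
    "hasRange": "{s} has range {o}",    # hypothetical wording
}


def to_pseudo_sentence(triple: Tuple[str, str, str]) -> Optional[str]:
    """Render a (subject, relation, object) triple as a pseudo-sentence.

    Returns None for relations that have no template.
    """
    subject, relation, obj = triple
    template = TEMPLATES.get(relation)
    if template is None:
        return None
    return template.format(s=subject, o=obj)


print(to_pseudo_sentence(("UAV", "subClassOf", "Aircraft")))  # UAV is subclass of Aircraft
```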

4.1.3. Knowledge Selection towards the UniLM Layers

4.2. Knowledge Injection Model

4.2.1. Pseudo-Sentence Embedding and Encoding

4.2.2. Attention Mechanism

4.3. UniLM Module

4.3.1. Copy Mechanism
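
The keyword copy mechanism in the component overview adds a copied-word classification task during training and, at inference, a prediction of whether the next token is a copied word. The sketch below shows only the inference-side decision. It assumes a gate logit produced by some classifier head, a greedy choice, and a 0.5 threshold. `next_token`, its parameters, and the rule of copying only keywords not yet used are illustrative assumptions, not ReqGen's implementation.

```python
import math
from typing import Dict, Sequence


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def next_token(vocab_probs: Dict[str, float], copy_logit: float,
               keywords: Sequence[str], used: Sequence[str],
               threshold: float = 0.5) -> str:
    """Choose the next token with a binary 'is this a copied word?' gate.

    vocab_probs: the decoder's distribution over candidate tokens.
    copy_logit: score from a hypothetical copied-word classifier head.
    """
    remaining = [k for k in keywords if k not in used]
    if remaining and sigmoid(copy_logit) > threshold:
        # Copy branch: emit the unused keyword the decoder itself rates highest.
        return max(remaining, key=lambda k: vocab_probs.get(k, 0.0))
    # Generate branch: ordinary greedy choice over the vocabulary.
    return max(vocab_probs, key=lambda t: vocab_probs[t])


probs = {"the": 0.5, "simulator": 0.2, "landing": 0.1}
print(next_token(probs, 2.0, ["landing", "simulator"], used=[]))             # simulator
print(next_token(probs, -2.0, ["landing", "simulator"], used=[]))            # the
print(next_token(probs, 2.0, ["landing", "simulator"], used=["simulator"]))  # landing
```

Separating the copy decision from the vocabulary distribution lets a keyword be emitted even when the language model alone would prefer a frequent function word.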

4.3.2. Requirement-Syntax-Constrained Decoding
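
The decoding module combines a semantics-related metric with beam search to push outputs toward a specific requirements syntax. The sketch below substitutes a hypothetical stand-in for that metric: a regular-expression check for the EARS event-driven template ("When <trigger>, the <system> shall <response>") that rescores finished beam candidates. Re-ranking complete candidates simplifies scoring inside the search; `syntax_score`, `rerank`, and the weight are illustrative names and values.

```python
import re
from typing import List, Tuple

# Hypothetical stand-in metric: match the EARS event-driven pattern.
EARS_EVENT = re.compile(r"^when\b.+\bthe\b.+\bshall\b.+", re.IGNORECASE)


def syntax_score(sentence: str) -> float:
    """1.0 for a full event-driven match, 0.5 for a bare 'shall', else 0.0."""
    s = sentence.strip()
    if EARS_EVENT.match(s):
        return 1.0
    return 0.5 if re.search(r"\bshall\b", s, re.IGNORECASE) else 0.0


def rerank(beam: List[Tuple[str, float]], weight: float = 1.0) -> List[Tuple[str, float]]:
    """Order (sentence, log_prob) pairs by log_prob + weight * syntax_score."""
    scored = [(sent, lp + weight * syntax_score(sent)) for sent, lp in beam]
    return sorted(scored, key=lambda item: item[1], reverse=True)


beam = [
    ("the simulator computes the ground position", -1.0),
    ("when a landing command is given the simulator shall land the UAV", -1.6),
]
for sentence, score in rerank(beam):
    print(f"{score:.2f}  {sentence}")
```

The weight trades fluency (log-probability) against conformance to the target syntax; here the EARS-shaped candidate overtakes the slightly more probable one.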

5. Experimental Evaluation

- RQ1: How well does ReqGen perform in comparison with existing NLG approaches on requirements specification generation based on keywords?

- RQ2: To what extent does the multi-layer knowledge injection contribute to the requirements specification generation?

- RQ3: To what extent does the knowledge frequency filtering contribute to the requirements specification generation?

- RQ4: To what extent does each proposed design component contribute to the requirements specification generation?

5.1. Experimental Design

5.1.1. Data Preparation

5.1.2. Baselines

- BERT [40] pre-trains deep bidirectional representations from unlabeled text by jointly conditioning on both left and right context. We fine-tuned the BERT base model (110 M parameters) on our task.

- Generative Pre-trained Transformer 3 (GPT3) [58] keeps the unidirectional language-model training of GPT2 but scales the model to 175 billion parameters, trained on 45 terabytes of data. GPT3 can perform downstream tasks without fine-tuning in a zero-shot setting; accordingly, we did not fine-tune it either.

- Bidirectional and Auto-Regressive Transformers (BART) [63] is a denoising autoencoder for pre-training sequence-to-sequence models and is applicable to a wide range of tasks. It uses a standard Transformer-based neural machine translation architecture and is trained by corrupting text with an arbitrary noising function and learning to reconstruct the original text. We used the BART-large model (400 M parameters) on our task.

- UniLM [32] is pre-trained on three types of language modeling tasks with a shared Transformer network, using specific self-attention masks to control which context each prediction is conditioned on. We used the UniLM base model, whose parameter count is 340 M.

- Zhang et al. [72] proposed POINTER, which is based on insertion-based, non-autoregressive pre-training. They also proposed a beam search method that achieves non-autoregressive generation with logarithmic time complexity.

- The constrained generation by Metropolis–Hastings sampling (CGMH) method [53] can handle both hard and soft constraints during sentence generation. Unlike approaches that operate in a latent space, it samples directly from the sentence space using Metropolis–Hastings sampling.
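
CGMH [53] edits a sentence through word-level proposals (replacement, insertion, or deletion) and accepts each proposal with the Metropolis–Hastings probability. The sketch below shows that generic acceptance rule. The argument names are illustrative, and CGMH's actual stationary distribution and proposal probabilities are not modeled.

```python
import random


def mh_acceptance(p_current: float, p_proposed: float,
                  q_forward: float, q_backward: float) -> float:
    """Metropolis-Hastings acceptance probability for a move x -> x'.

    p_current, p_proposed: (unnormalized) target probabilities pi(x), pi(x').
    q_forward: proposal probability g(x' | x); q_backward: g(x | x').
    """
    denominator = p_current * q_forward
    if denominator == 0.0:
        return 1.0  # convention: always move away from a zero-probability state
    return min(1.0, (p_proposed * q_backward) / denominator)


rng = random.Random(0)
alpha = mh_acceptance(0.2, 0.1, 0.5, 0.5)  # a less likely sentence, symmetric proposal
accepted = rng.random() < alpha            # accepted with probability alpha
print(alpha, accepted)
```

Because worse sentences are still accepted occasionally, the sampler can escape local optima, but it also needs many steps, which is consistent with CGMH's long running times in the results table.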

5.1.3. Metrics
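
The result tables report BLEU1 and BLEU2 together with recall (R.), precision (P.), and F-score (F.) for ROUGE-1, ROUGE-2, and ROUGE-L. The sketch below computes the standard ROUGE-N and ROUGE-L triples for one candidate against one reference. Whitespace tokenization and the balanced F1 are assumptions; the paper's tokenization, stemming, and averaging may differ. For a single reference, the ROUGE-N precision computed here equals BLEU's clipped (modified) n-gram precision, before BLEU's brevity penalty and geometric averaging are applied.

```python
from collections import Counter
from typing import List, Tuple


def ngrams(tokens: List[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def prf(overlap: float, ref_total: int, cand_total: int) -> Tuple[float, float, float]:
    recall = overlap / ref_total if ref_total else 0.0
    precision = overlap / cand_total if cand_total else 0.0
    f1 = 2 * recall * precision / (recall + precision) if recall + precision else 0.0
    return recall, precision, f1


def rouge_n(candidate: List[str], reference: List[str], n: int) -> Tuple[float, float, float]:
    cand, ref = ngrams(candidate, n), ngrams(reference, n)
    overlap = sum((cand & ref).values())  # clipped matches: min of the two counts
    return prf(overlap, sum(ref.values()), sum(cand.values()))


def lcs_length(a: List[str], b: List[str]) -> int:
    # Dynamic-programming longest common subsequence.
    dp = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i, x in enumerate(a, 1):
        for j, y in enumerate(b, 1):
            dp[i][j] = dp[i - 1][j - 1] + 1 if x == y else max(dp[i - 1][j], dp[i][j - 1])
    return dp[-1][-1]


def rouge_l(candidate: List[str], reference: List[str]) -> Tuple[float, float, float]:
    return prf(lcs_length(candidate, reference), len(reference), len(candidate))


cand = "the simulator shall land the UAV".split()
ref = "the internal simulator shall land the UAV".split()
print(tuple(round(x, 3) for x in rouge_n(cand, ref, 1)))  # (0.857, 1.0, 0.923)
print(tuple(round(x, 3) for x in rouge_n(cand, ref, 2)))  # (0.667, 0.8, 0.727)
print(tuple(round(x, 3) for x in rouge_l(cand, ref)))     # (0.857, 1.0, 0.923)
```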

5.2. Experimental Results and Analysis

5.2.1. RQ1 Effectiveness of Our Approach

5.2.2. RQ2 Effectiveness of Multi-Layer Knowledge Injection

5.2.3. RQ3 Effectiveness of Frequency Filtering in the Knowledge Search

5.2.4. RQ4 Ablation Experiment

6. Discussion

6.1. Threats to Validity

6.2. Implications

6.3. Limitations

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Terzakis, J. The impact of requirements on software quality across three product generations. In Proceedings of the 2013 21st IEEE International Requirements Engineering Conference (RE), Rio de Janeiro, Brazil, 15–19 July 2013; pp. 284–289.

- Aurum, A.; Wohlin, C. Engineering and Managing Software Requirements; Springer: Berlin/Heidelberg, Germany, 2005.

- Wiegers, K. Software Requirements; Microsoft Press: Redmond, WA, USA, 2013.

- Lian, X.; Rahimi, M.; Cleland-Huang, J.; Zhang, L.; Ferrai, R.; Smith, M. Mining Requirements Knowledge from Collections of Domain Documents. In Proceedings of the 24th IEEE International Requirements Engineering Conference (RE), Beijing, China, 12–16 September 2016; IEEE Computer Society: New York, NY, USA, 2016; pp. 156–165.

- Mavin, A.; Wilkinson, P.; Harwood, A.; Novak, M. Easy Approach to Requirements Syntax (EARS). In Proceedings of the 17th IEEE International Requirements Engineering Conference, Atlanta, GA, USA, 31 August 2009–4 September 2009; pp. 317–322.

- Maiden, N.A.M.; Manning, S.; Jones, S.; Greenwood, J. Generating requirements from systems models using patterns: A case study. Requir. Eng. 2005, 10, 276–288.

- Yu, E.S.K.; Bois, P.D.; Dubois, E.; Mylopoulos, J. From Organization Models to System Requirements: A ’Cooperating Agents’ Approach. In Proceedings of the Third International Conference on Cooperative Information Systems (CoopIS-95), Vienna, Austria, 9–12 May 1995; pp. 194–204.

- Letier, E.; van Lamsweerde, A. Deriving operational software specifications from system goals. In Proceedings of the Tenth ACM SIGSOFT Symposium on Foundations of Software Engineering 2002, Charleston, SC, USA, 18–22 November 2002; pp. 119–128.

- Landtsheer, R.D.; Letier, E.; van Lamsweerde, A. Deriving tabular event-based specifications from goal-oriented requirements models. Requir. Eng. 2004, 9, 104–120.

- Van Lamsweerde, A. Goal-oriented requirements enginering: A roundtrip from research to practice [enginering read engineering]. In Proceedings of the 12th IEEE International Requirements Engineering Conference, Kyoto, Japan, 6–10 September 2004; pp. 4–7.

- van Lamsweerde, A.; Willemet, L. Inferring Declarative Requirements Specifications from Operational Scenarios. IEEE Trans. Softw. Eng. 1998, 24, 1089–1114.

- Meziane, F.; Athanasakis, N.; Ananiadou, S. Generating Natural Language specifications from UML class diagrams. Requir. Eng. 2008, 13, 1–18.

- Berenbach, B. The Automated Extraction of Requirements from UML Models. In Proceedings of the 11th IEEE International Conference on Requirements Engineering (RE 2003), Monterey Bay, CA, USA, 8–12 September 2003; IEEE Computer Society: New York, NY, USA, 2003; p. 287.

- Souag, A.; Mazo, R.; Salinesi, C.; Comyn-Wattiau, I. Using the AMAN-DA Method to Generate Security Requirements: A Case Study in the Maritime Domain. Requir. Eng. 2018, 23, 557–580.

- Lian, X.; Cleland-Huang, J.; Zhang, L. Mining Associations Between Quality Concerns and Functional Requirements. In Proceedings of the 25th IEEE International Requirements Engineering Conference, RE 2017, Lisbon, Portugal, 4–8 September 2017; Moreira, A., Araújo, J., Hayes, J., Paech, B., Eds.; IEEE Computer Society: New York, NY, USA, 2017; pp. 292–301.

- Lian, X.; Liu, W.; Zhang, L. Assisting engineers extracting requirements on components from domain documents. Inf. Softw. Technol. 2020, 118, 106196.

- Li, Y.; Guzman, E.; Tsiamoura, K.; Schneider, F.; Bruegge, B. Automated Requirements Extraction for Scientific Software. Procedia Comput. Sci. 2015, 51, 582–591.

- Shi, L.; Xing, M.; Li, M.; Wang, Y.; Li, S.; Wang, Q. Detection of Hidden Feature Requests from Massive Chat Messages via Deep Siamese Network. In Proceedings of the 2020 IEEE/ACM 42nd International Conference on Software Engineering (ICSE), Seoul, Republic of Korea, 5–11 October 2020; pp. 641–653.

- Dumitru, H.; Gibiec, M.; Hariri, N.; Cleland-Huang, J.; Mobasher, B.; Castro-Herrera, C.; Mirakhorli, M. On-demand feature recommendations derived from mining public product descriptions. In Proceedings of the 33rd International Conference on Software Engineering, ICSE, Honolulu, HI, USA, 21–28 May 2011; pp. 181–190.

- Sree-Kumar, A.; Planas, E.; Clarisó, R. Extracting software product line feature models from natural language specifications. In Proceedings of the 22nd International Systems and Software Product Line Conference—Volume 1, SPLC 2018, Gothenburg, Sweden, 10–14 September 2018; ACM: New York, NY, USA, 2018; pp. 43–53.

- Zhou, J.; Hänninen, K.; Lundqvist, K.; Lu, Y.; Provenzano, L.; Forsberg, K. An environment-driven ontological approach to requirements elicitation for safety-critical systems. In Proceedings of the 23rd IEEE International Requirements Engineering Conference, RE 2015, Ottawa, ON, Canada, 24–28 August 2015; IEEE Computer Society: New York, NY, USA, 2015; pp. 247–251.

- Khan, J.A.; Xie, Y.; Liu, L.; Wen, L. Analysis of Requirements-Related Arguments in User Forums. In Proceedings of the 27th IEEE International Requirements Engineering Conference, RE 2019, Jeju, Republic of Korea, 23–27 September 2019; pp. 63–74.

- Astegher, M.; Busetta, P.; Perini, A.; Susi, A. Requirements for Online User Feedback Management in RE Tasks. In Proceedings of the 29th IEEE International Requirements Engineering Conference Workshops, RE 2021 Workshops, Notre Dame, IN, USA, 20–24 September 2021; p. 336.

- Johann, T.; Stanik, C.; Alizadeh, B.A.M.; Maalej, W. SAFE: A Simple Approach for Feature Extraction from App Descriptions and App Reviews. In Proceedings of the 25th IEEE International Requirements Engineering Conference, RE 2017, Lisbon, Portugal, 4–8 September 2017; IEEE Computer Society: New York, NY, USA, 2017; pp. 21–30.

- Dąbrowski, J.; Kifetew, F.M.; Muñante, D.; Letier, E.; Siena, A.; Susi, A. Discovering Requirements through Goal-Driven Process Mining. In Proceedings of the 2017 IEEE 25th International Requirements Engineering Conference Workshops (REW), Lisbon, Portugal, 4–8 September 2017; pp. 199–203.

- Sleimi, A.; Ceci, M.; Sannier, N.; Sabetzadeh, M.; Bri, L.; Dann, J. A Query System for Extracting Requirements-Related Information from Legal Texts. In Proceedings of the 27th IEEE International Requirements Engineering Conference, RE 2019, Jeju, Republic of Korea, 23–27 September 2019; pp. 319–329.

- Falkner, A.; Palomares, C.; Franch, X.; Schenner, G.; Aznar, P.; Schoerghuber, A. Identifying Requirements in Requests for Proposal: A Research Preview. In Proceedings of the Requirements Engineering: Foundation for Software Quality—25th International Working Conference, REFSQ 2019, Essen, Germany, 18–21 March 2019; Knauss, E., Goedicke, M., Eds.; Lecture Notes in Computer Science. Springer: Berlin/Heidelberg, Germany, 2019; Volume 11412, pp. 176–182.

- Devine, P.; Koh, Y.S.; Blincoe, K. Evaluating Unsupervised Text Embeddings on Software User Feedback. In Proceedings of the 29th IEEE International Requirements Engineering Conference Workshops, RE 2021 Workshops, Notre Dame, IN, USA, 20–24 September 2021; pp. 87–95.

- Henao, P.R.; Fischbach, J.; Spies, D.; Frattini, J.; Vogelsang, A. Transfer Learning for Mining Feature Requests and Bug Reports from Tweets and App Store Reviews. In Proceedings of the 29th IEEE International Requirements Engineering Conference Workshops, RE 2021 Workshops, Notre Dame, IN, USA, 20–24 September 2021; pp. 80–86.

- Sainani, A.; Anish, P.R.; Joshi, V.; Ghaisas, S. Extracting and Classifying Requirements from Software Engineering Contracts. In Proceedings of the 28th IEEE International Requirements Engineering Conference, RE 2020, Zurich, Switzerland, 31 August–4 September 2020; pp. 147–157.

- Tizard, J. Requirement Mining in Software Product Forums. In Proceedings of the 27th IEEE International Requirements Engineering Conference, RE 2019, Jeju, Republic of Korea, 23–27 September 2019; pp. 428–433.

- Dong, L.; Yang, N.; Wang, W.; Wei, F.; Liu, X.; Wang, Y.; Gao, J.; Zhou, M.; Hon, H.W. Unified Language Model Pre-training for Natural Language Understanding and Generation. In Proceedings of the Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, Vancouver, BC, Canada, 8–14 December 2019; pp. 13042–13054.

- Lin, B.Y.; Zhou, W.; Shen, M.; Zhou, P.; Bhagavatula, C.; Choi, Y.; Ren, X. CommonGen: A Constrained Text Generation Challenge for Generative Commonsense Reasoning. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2020, Online Event, 16–20 November 2020; Findings of ACL. Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; Volume EMNLP 2020, pp. 1823–1840.

- Rothe, S.; Narayan, S.; Severyn, A. Leveraging pre-trained checkpoints for sequence generation tasks. Trans. Assoc. Comput. Linguist. 2020, 8, 264–280.

- Wu, C.; Wu, F.; Qi, T.; Huang, Y. Empowering news recommendation with pre-trained language models. In Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual, 11–15 July 2021; pp. 1652–1656.

- Guo, W.; Zhang, L.; Lian, X. Putting software requirements under the microscope: Automated extraction of their semantic elements. In Proceedings of the 29th IEEE International Requirements Engineering Conference, RE, Notre Dame, IN, USA, 20–24 September 2021; pp. 416–417.

- ISO/IEC/IEEE 29148:2018(E); ISO/IEC/IEEE International Standard—Systems and Software Engineering—Life Cycle Processes—Requirements Engineering. IEEE: New York, NY, USA, 2018; pp. 1–104.

- Zhao, Z.; Zhang, L.; Lian, X. What can Open Domain Model Tell Us about the Missing Software Requirements: A Preliminary Study. In Proceedings of the 29th IEEE International Requirements Engineering Conference, RE, Notre Dame, IN, USA, 20–24 September 2021; pp. 24–34.

- Li, Y.; Goel, P.; Rajendra, V.K.; Singh, H.S.; Francis, J.; Ma, K.; Nyberg, E.; Oltramari, A. Lexically-constrained Text Generation through Commonsense Knowledge Extraction and Injection. arXiv 2020, arXiv:2012.10813.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All you Need. In Proceedings of the Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems 2017, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

- Graves, A.; Mohamed, A.; Hinton, G.E. Speech recognition with deep recurrent neural networks. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP 2013, Vancouver, BC, Canada, 26–31 May 2013; pp. 6645–6649.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Türetken, O.; Su, O.; Demirörs, O. Automating software requirements generation from business process models. In Proceedings of the 1st Conference on the Principles of Software Eng.(PRISE’04), Buenos Aires, Argentina, 22–26 November 2004.

- Cox, K.; Phalp, K.; Bleistein, S.J.; Verner, J.M. Deriving requirements from process models via the problem frames approach. Inf. Softw. Technol. 2005, 47, 319–337.

- Osama, M.; Zaki-Ismail, A.; Abdelrazek, M.; Grundy, J.; Ibrahim, A. DBRG: Description-Based Non-Quality Requirements Generator. In Proceedings of the IEEE 29th International Requirements Engineering Conference (RE), Notre Dame, IN, USA, 20–24 September 2021; pp. 424–425.

- Novgorodov, S.; Elad, G.; Guy, I.; Radinsky, K. Generating Product Descriptions from User Reviews. In Proceedings of the World Wide Web Conference, WWW 2019, San Francisco, CA, USA, 13–17 May 2019; pp. 1354–1364.

- Novgorodov, S.; Guy, I.; Elad, G.; Radinsky, K. Descriptions from the Customers: Comparative Analysis of Review-based Product Description Generation Methods. ACM Trans. Internet Technol. 2020, 20, 44:1–44:31.

- Elad, G.; Guy, I.; Novgorodov, S.; Kimelfeld, B.; Radinsky, K. Learning to Generate Personalized Product Descriptions. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management, CIKM 2019, Beijing, China, 3–7 November 2019; pp. 389–398.

- Mou, L.; Yan, R.; Li, G.; Zhang, L.; Jin, Z. Backward and forward language modeling for constrained sentence generation. arXiv 2015, arXiv:1512.06612.

- Liu, D.; Fu, J.; Qu, Q.; Lv, J. BFGAN: Backward and Forward Generative Adversarial Networks for Lexically Constrained Sentence Generation. IEEE ACM Trans. Audio Speech Lang. Process. 2019, 27, 2350–2361.

- Hokamp, C.; Liu, Q. Lexically Constrained Decoding for Sequence Generation Using Grid Beam Search. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, ACL 2017, Volume 1: Long Papers, Vancouver, BC, Canada, 30 July–4 August 2017; Association for Computational Linguistics: Stroudsburg, PA, USA, 2017; pp. 1535–1546.

- Miao, N.; Zhou, H.; Mou, L.; Yan, R.; Li, L. CGMH: Constrained Sentence Generation by Metropolis-Hastings Sampling. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 6834–6842.

- Sha, L. Gradient-guided Unsupervised Lexically Constrained Text Generation. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing, EMNLP 2020, Online, 16–20 November 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 8692–8703.

- Ding, T.; Pan, S. Personalized Emphasis Framing for Persuasive Message Generation. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, EMNLP 2016, Austin, TX, USA, 1–4 November 2016; The Association for Computational Linguistics: Stroudsburg, PA, USA, 2016; pp. 1432–1441.

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A robustly optimized BERT pretraining approach. arXiv 2019, arXiv:1907.11692.

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

- Keskar, N.S.; McCann, B.; Varshney, L.R.; Xiong, C.; Socher, R. CTRL: A Conditional Transformer Language Model for Controllable Generation. arXiv 2019, arXiv:1909.05858.

- Du, Z.; Qian, Y.; Liu, X.; Ding, M.; Qiu, J.; Yang, Z.; Tang, J. GLM: General Language Model Pretraining with Autoregressive Blank Infilling. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), ACL 2022, Dublin, Ireland, 22–27 May 2022; Association for Computational Linguistics: Stroudsburg, PA, USA, 2022; pp. 320–335.

- Song, K.; Tan, X.; Qin, T.; Lu, J.; Liu, T. MASS: Masked Sequence to Sequence Pre-training for Language Generation. In Proceedings of the 36th International Conference on Machine Learning, ICML 2019, Long Beach, CA, USA, 9–15 June 2019; Volume 97, pp. 5926–5936.

- Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer. J. Mach. Learn. Res. 2020, 21, 140:1–140:67.

- Lewis, M.; Liu, Y.; Goyal, N.; Ghazvininejad, M.; Mohamed, A.; Levy, O.; Stoyanov, V.; Zettlemoyer, L. BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension. arXiv 2019, arXiv:1910.13461.

- Bechhofer, S.; Van Harmelen, F.; Hendler, J.; Horrocks, I.; McGuinness, D.L.; Patel-Schneider, P.F.; Stein, L.A. OWL web ontology language reference. W3C Recomm. 2004, 10, 1–53.

- Gu, J.; Lu, Z.; Li, H.; Li, V.O.K. Incorporating Copying Mechanism in Sequence-to-Sequence Learning. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, ACL 2016, Volume 1: Long Papers, Berlin, Germany, 7–12 August 2016; The Association for Computer Linguistics: Stroudsburg, PA, USA, 2016.

- Su, J. SPACES: Extract Generate Long Text Summaries. Available online: https://spaces.ac.cn/archives/8046/comment-page-1 (accessed on 1 January 2021).

- Cleland-Huang, J.; Vierhauser, M.; Bayley, S. Dronology: An incubator for cyber-physical systems research. In Proceedings of the 40th International Conference on Software Engineering: New Ideas and Emerging Results, ICSE (NIER), Gothenburg, Sweden, 27 May–3 June 2018; pp. 109–112.

- Standard Building Automation System (BAS) Specification; Technical Report; City of Toronto, Standard Specifications. Available online: https://www.toronto.ca/wp-content/uploads/2017/10/918d-Standard-Building-Automation-System-BAS-Specification-for-City-Buildings-2015.pdf (accessed on 1 October 2017).

- Manning, C.D.; Surdeanu, M.; Bauer, J.; Finkel, J.R.; Bethard, S.; McClosky, D. The Stanford CoreNLP Natural Language Processing Toolkit. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics, ACL 2014, Baltimore, MD, USA, 22–27 June 2014; The Association for Computer Linguistics: Stroudsburg, PA, USA, 2014; pp. 55–60.

- Zhao, Z.; Zhang, L.; Lian, X. A Preliminary Study on the Potential Usefulness of Open Domain Model for Missing Software Requirements Recommendation. arXiv 2022, arXiv:2208.06757.

- Zhong, H.; Zhang, J.; Wang, Z.; Wan, H.; Chen, Z. Aligning knowledge and text embeddings by entity descriptions. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 267–272.

- Zhang, Y.; Wang, G.; Li, C.; Gan, Z.; Brockett, C.; Dolan, B. POINTER: Constrained progressive text generation via insertion-based generative pre-training. arXiv 2020, arXiv:2005.00558.

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. BLEU: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 6–12 July 2002; pp. 311–318.

- Lin, C.Y. ROUGE: A package for automatic evaluation of summaries. In Proceedings of the Text Summarization Branches Out, Barcelona, Spain, 25–26 July 2004; pp. 74–81.

- Jawahar, G.; Sagot, B.; Seddah, D. What Does BERT Learn about the Structure of Language? In Proceedings of the 57th Conference of the Association for Computational Linguistics, ACL 2019, Volume 1: Long Papers, Florence, Italy, 28 July–2 August 2019; Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; pp. 3651–3657.

- Martín-Lammerding, D.; Astrain, J.J.; Córdoba, A.; Villadangos, J. An ontology-based system to avoid UAS flight conflicts and collisions in dense traffic scenarios. Expert Syst. Appl. 2022, 215, 119027.

- Martín-Lammerding, D.; Astrain, J.J.; Córdoba, A. A Reference Ontology for Collision Avoidance Systems and Accountability. In Proceedings of the Fifteenth International Conference on Advances in Semantic Processing, Barcelona, Spain, 3–7 October 2021.

- Martín-Lammerding, D.; Astrain, J.J.; Córdoba, A.; Villadangos, J. A Multi-UAS Simulator for High Density Air Traffic Scenarios. In Proceedings of the VEHICULAR 2022, The Eleventh International Conference on Advances in Vehicular Systems, Technologies and Applications, Venice, Italy, 22–26 May 2022; pp. 32–37.

- Kučera, A.; Pitner, T. Semantic BMS: Allowing usage of building automation data in facility benchmarking. Adv. Eng. Inform. 2018, 35, 69–84.

| Dataset | Method | BLEU1 | BLEU2 | ROUGE-1 R. | ROUGE-1 P. | ROUGE-1 F. | ROUGE-2 R. | ROUGE-2 P. | ROUGE-2 F. | ROUGE-L R. | ROUGE-L P. | ROUGE-L F. | RS4RE | Time (h) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| UAV | Bert_base | 37.92 ±3.3 | 23.53 ±2.54 | 54.33 ±2.29 | 42.04 ±3.61 | 47.40 ±2.33 | 31.32 ±2.37 | 24.88 ±3.25 | 27.73 ±2.57 | 51.29 ±2.77 | 39.90 ±3.38 | 44.88 ±2.68 | 8.00 ±1.27 | 1.5 |
| UAV | GPT3 | 34.20 ±6.64 | 18.28 ±5.41 | 34.51 ±8.66 | 59.42 ±11.39 | 43.66 ±9.39 | 16.49 ±6.77 | 14.82 ±8.05 | 15.61 ±7.12 | 31.02 ±8.39 | 56.22 ±10.64 | 39.98 ±8.89 | 1.31 ±0.26 | - |
| UAV | UniLM | 34.27 ±8.95 | 17.83 ±5.69 | 55.15 ±10.99 | 41.53 ±13.26 | 47.38 ±11.68 | 18.21 ±6.71 | 14.44 ±8.42 | 16.11 ±7.19 | 49.73 ±10.27 | 37.71 ±12.42 | 42.89 ±10.92 | 2.54 ±0.14 | 1 |
| UAV | BART | 42.66 ±7.90 | 23.99 ±9.17 | 71.75 ±6.23 | 52.12 ±6.16 | 60.38 ±4.85 | 37.86 ±8.95 | 26.90 ±7.70 | 31.45 ±8.16 | 66.07 ±7.20 | 47.79 ±6.56 | 55.47 ±5.89 | 5.76 ±1.45 | 1 |
| UAV | CGMH | 12.07 ±2.63 | 1.84 ±0.56 | 35.06 ±4.45 | 17.86 ±9.99 | 23.66 ±6.06 | 5.48 ±0.92 | 2.47 ±2.95 | 3.41 ±1.39 | 32.42 ±4.24 | 16.52 ±9.34 | 21.89 ±5.75 | 0.00 ±0.00 | 22 |
| UAV | POINTER | 17.26 ±3.63 | 2.46 ±0.98 | 24.15 ±3.86 | 38.66 ±3.58 | 29.73 ±3.72 | 2.83 ±1.61 | 5.20 ±1.09 | 3.67 ±1.26 | 19.92 ±2.60 | 32.07 ±2.76 | 24.58 ±2.80 | 4.60 ±1.87 | 1 |
| UAV | ReqGen | 42.15 ±6.55 | 25.04 ±6.19 | 69.93 ±5.44 | 49.91 ±6.13 | 58.25 ±5.66 | 39.03 ±6.04 | 28.21 ±8.27 | 32.75 ±6.87 | 65.12 ±5.96 | 46.47 ±6.92 | 54.23 ±6.27 | 8.89 ±1.18 | 1.2 |
| BAS | Bert_base | 29.08 ±2.23 | 9.28 ±1.63 | 46.69 ±2.58 | 35.90 ±3.31 | 40.59 ±2.54 | 12.84 ±1.73 | 10.57 ±1.86 | 11.60 ±1.66 | 40.79 ±2.51 | 31.64 ±2.98 | 35.64 ±2.45 | 12.48 ±2.05 | 1 |
| BAS | GPT3 | 28.34 ±2.47 | 7.87 ±1.21 | 37.17 ±2.56 | 41.07 ±4.25 | 33.56 ±3.18 | 10.02 ±1.15 | 9.64 ±1.56 | 9.30 ±1.28 | 31.98 ±1.85 | 35.74 ±3.12 | 30.53 ±2.33 | 7.08 ±1.33 | - |
| BAS | UniLM | 30.08 ±2.15 | 13.31 ±1.06 | 42.44 ±1.36 | 33.58 ±1.98 | 37.49 ±1.29 | 24.42 ±1.14 | 18.86 ±0.99 | 21.28 ±1.01 | 39.58 ±1.41 | 31.47 ±1.22 | 35.06 ±1.16 | 13.56 ±2.43 | 0.8 |
| BAS | BART | 27.05 ±1.63 | 10.60 ±0.55 | 63.67 ±0.36 | 37.37 ±3.93 | 47.10 ±1.38 | 20.59 ±0.56 | 12.43 ±1.64 | 15.50 ±0.77 | 58.78 ±0.34 | 34.14 ±3.73 | 43.19 ±1.39 | 14.58 ±1.79 | 0.7 |
| BAS | CGMH | 10.58 ±2.25 | 1.85 ±0.32 | 57.69 ±4.33 | 20.94 ±11.21 | 30.73 ±5.99 | 4.23 ±0.55 | 1.64 ±1.41 | 2.36 ±0.77 | 44.70 ±3.06 | 16.41 ±8.05 | 24.01 ±4.26 | 3.26 ±0.84 | 72 |
| BAS | POINTER | 21.34 ±3.74 | 2.34 ±0.75 | 25.34 ±5.05 | 43.82 ±3.36 | 32.11 ±4.02 | 1.93 ±1.43 | 3.69 ±0.74 | 2.53 ±0.96 | 21.01 ±3.74 | 36.53 ±2.59 | 26.68 ±3.07 | 5.72 ±0.57 | 1 |
| BAS | ReqGen | 38.07 ±1.62 | 15.62 ±0.87 | 58.96 ±1.33 | 44.63 ±3.21 | 50.80 ±1.95 | 20.35 ±1.35 | 16.45 ±2.11 | 18.19 ±1.62 | 52.82 ±1.16 | 40.17 ±2.76 | 45.63 ±1.65 | 17.42 ±2.83 | 0.9 |

Keywords: landing, internal simulator, ground

Target: When given a landing command the internal simulator shall move the UAV from to the ground altitude corresponding to its current longitude and latitude.

| Alg. | Generated Sentence | Required Modifications |
|---|---|---|
| Bert_base | when a flight simulator is activated the flight simulator shall compute the location of the UAV. | Useless: no semantic overlap with the target. |
| GPT3 | When a UAV lands the Internal Simulator shall record the time of landing. | Useless: no semantic overlap with the target. |
| UniLM | When a UAV is loaded the internal simulator shall display the location of the UAV. | Useless: no semantic overlap with the target. |
| BART | landinginternal flight simulator shall compute the ground position of a UAV. | The trigger is missing, and the main clause is partially right: when given a landing command, the internal flight simulator shall compute the ground position of a UAV and move the UAV to ground latitude. |
| CGMH | simulator owners for takeoff at a corresponding latitude. | Useless: syntax errors and no semantic overlap with the target. |
| POINTER | in which a single point landing system, when a single point that is assigned a separate GPS system or for an active navigation system has to orient at its current position and to display the current ground coordinates. | Useless: syntax errors and no semantic overlap with the target. |
| ReqGen | When a landing UAV is assigned to a UAV, the internal simulator shall compute the ground longitude latitude and latitude of the UAV. | The trigger is right, and the main clause is partially right: when a landing UAV command is assigned to a UAV, the internal simulator shall compute the ground longitude latitude and latitude of the UAV and move the UAV to ground latitude. |

| Dataset | Layer (Hop) | BLEU1 | BLEU2 | ROUGE-1 R. | ROUGE-1 P. | ROUGE-1 F. | ROUGE-2 R. | ROUGE-2 P. | ROUGE-2 F. | ROUGE-L R. | ROUGE-L P. | ROUGE-L F. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| UAV | 1(5), 2(1) | 43.23 ±5.76 | 24.63 ±6.18 | 63.21 ±5.44 | 50.51 ±4.69 | 56.15 ±4.18 | 34.90 ±7.02 | 27.61 ±6.00 | 29.86 ±6.15 | 58.83 ±5.25 | 47.04 ±5.80 | 51.12 ±4.96 |
| | 1(5), 2(2), 4(1) | 43.87 ±6.07 | 25.05 ±6.89 | 65.69 ±6.82 | 50.34 ±6.54 | 57.00 ±6.15 | 35.59 ±9.57 | 27.18 ±7.04 | 30.82 ±7.98 | 61.13 ±7.60 | 46.86 ±6.95 | 53.05 ±6.91 |
| | 1(5), 2(3), 4(2), 8(1) | 42.57 ±3.29 | 23.63 ±4.65 | 66.22 ±7.59 | 49.96 ±3.98 | 56.95 ±4.35 | 35.14 ±9.76 | 26.10 ±5.86 | 29.95 ±6.52 | 61.39 ±7.77 | 46.57 ±4.72 | 52.95 ±5.02 |
| | 1(5), 2(4), 4(3), 8(2), 11(1) | 40.2 ±5.82 | 21.64 ±5.97 | 63.04 ±6.08 | 47.37 ±5.91 | 54.09 ±5.05 | 32.40 ±8.58 | 24.17 ±6.44 | 26.89 ±7.04 | 58.65 ±6.15 | 44.17 ±7.07 | 49.42 ±6.17 |
| BAS | 1(5), 2(1) | 34.51 ±2.69 | 13.25 ±1.77 | 55.54 ±3.00 | 41.74 ±2.20 | 47.66 ±2.36 | 17.86 ±1.94 | 14.13 ±1.82 | 15.78 ±1.84 | 49.87 ±2.38 | 37.66 ±1.92 | 42.91 ±1.96 |
| | 1(5), 2(2), 4(1) | 34.55 ±2.28 | 13.73 ±0.69 | 55.02 ±2.97 | 41.76 ±2.07 | 47.48 ±2.38 | 18.30 ±1.22 | 14.68 ±0.99 | 16.29 ±1.01 | 49.83 ±2.54 | 38.03 ±1.66 | 43.14 ±1.95 |
| | 1(5), 2(3), 4(2), 8(1) | 33.26 ±2.37 | 12.92 ±1.20 | 54.51 ±1.72 | 40.64 ±2.53 | 46.56 ±2.14 | 18.13 ±0.99 | 14.19 ±1.24 | 15.92 ±1.14 | 49.21 ±1.31 | 36.92 ±2.08 | 42.19 ±1.80 |
| | 1(5), 2(4), 4(3), 8(2), 11(1) | 32.33 ±2.05 | 12.31 ±1.12 | 54.27 ±2.99 | 40.16 ±2.43 | 46.16 ±2.55 | 17.38 ±1.49 | 13.77 ±1.23 | 15.37 ±1.30 | 49.02 ±2.57 | 36.55 ±2.14 | 41.88 ±2.23 |
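The R., P. and F. sub-columns for ROUGE-1 and ROUGE-2 are recall, precision and F-score of n-gram overlap between a generated requirement and its target. The sketch below shows how the three relate for a single sentence pair. It assumes lower-cased whitespace tokenisation, no stemming and a single reference; the function names are hypothetical and this is not the authors' evaluation script, which this section does not specify.

```python
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    """Count the n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(candidate: str, reference: str, n: int = 1) -> tuple[float, float, float]:
    """Return (recall, precision, F1) of n-gram overlap for one sentence pair.

    Overlap is clipped: an n-gram counts at most as many times as it
    occurs in both the candidate and the reference.
    """
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    overlap = sum((cand & ref).values())  # Counter & keeps the minimum count
    if overlap == 0:
        return 0.0, 0.0, 0.0
    recall = overlap / sum(ref.values())      # R.: share of reference n-grams recovered
    precision = overlap / sum(cand.values())  # P.: share of generated n-grams also in the reference
    f1 = 2 * precision * recall / (precision + recall)  # F.: harmonic mean of P. and R.
    return recall, precision, f1


reference = "the internal simulator shall move the uav to the ground"
candidate = "the internal simulator shall compute the ground position"
print(rouge_n(candidate, reference, 1))  # ROUGE-1
print(rouge_n(candidate, reference, 2))  # ROUGE-2
```

For this pair, 6 of the 10 reference unigrams are recovered (R = 0.6) and 6 of the 8 generated unigrams match (P = 0.75), giving F ≈ 0.667. The same harmonic mean links the reported columns; for example, the first UAV row gives 2 × 63.21 × 50.51 / (63.21 + 50.51) ≈ 56.15, the reported ROUGE-1 F.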

| Dataset | Frequency Filtering | BLEU1 | BLEU2 | ROUGE-1 R. | ROUGE-1 P. | ROUGE-1 F. | ROUGE-2 R. | ROUGE-2 P. | ROUGE-2 F. | ROUGE-L R. | ROUGE-L P. | ROUGE-L F. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| UAV | no | 42.78 ±3.84 | 24.14 ±5.23 | 66.54 ±6.54 | 49.85 ±4.57 | 57.00 ±4.25 | 35.51 ±7.55 | 26.59 ±5.37 | 30.41 ±5.87 | 61.76 ±6.55 | 46.45 ±5.08 | 53.02 ±4.79 |
| | 10 | 43.50 ±5.07 | 23.97 ±5.04 | 65.80 ±7.59 | 49.92 ±5.27 | 56.77 ±5.20 | 34.99 ±8.68 | 26.22 ±5.58 | 29.24 ±6.14 | 61.75 ±7.30 | 47.04 ±6.11 | 52.46 ±5.79 |
| | 50 | 40.47 ±5.80 | 21.39 ±6.09 | 61.87 ±4.00 | 46.58 ±4.71 | 53.15 ±4.27 | 32.05 ±7.58 | 23.63 ±6.10 | 27.21 ±6.60 | 57.90 ±4.34 | 43.70 ±4.94 | 49.80 ±4.58 |
| BAS | no | 34.15 ±1.90 | 13.37 ±1.03 | 54.75 ±1.99 | 41.30 ±1.10 | 47.08 ±1.28 | 18.01 ±1.22 | 14.49 ±1.05 | 16.06 ±1.03 | 49.41 ±2.02 | 37.52 ±1.34 | 42.65 ±1.48 |
| | 10 | 34.43 ±1.80 | 13.65 ±1.38 | 55.93 ±2.41 | 41.76 ±0.98 | 47.82 ±1.10 | 18.84 ±1.29 | 14.80 ±0.92 | 16.58 ±0.97 | 50.29 ±2.22 | 37.76 ±0.77 | 43.13 ±0.96 |
| | 50 | 34.33 ±1.83 | 13.45 ±1.31 | 55.68 ±1.64 | 41.69 ±0.96 | 47.68 ±1.22 | 18.65 ±1.59 | 14.66 ±1.07 | 16.41 ±1.28 | 50.28 ±1.87 | 37.83 ±1.09 | 43.18 ±1.39 |
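The BLEU1 and BLEU2 columns measure n-gram precision of the generated requirement against its target, scaled by a brevity penalty. This section does not state which BLEU variant was used (cumulative or individual n-gram, smoothing, tokenisation). The sketch below therefore assumes standard sentence-level cumulative BLEU-N with uniform weights, clipped counts, a brevity penalty and no smoothing; the function names are hypothetical.

```python
import math
from collections import Counter


def ngram_counts(tokens: list[str], n: int) -> Counter:
    """Count the n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate: str, references: list[str], max_n: int) -> float:
    """Cumulative BLEU-max_n with uniform weights and no smoothing.

    Assumption: lower-cased whitespace tokenisation, sentence-level score.
    """
    cand = candidate.lower().split()
    refs = [r.lower().split() for r in references]
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        cand_counts = ngram_counts(cand, n)
        # Clip: each candidate n-gram counts at most as often as in the
        # reference where it is most frequent (Counter | keeps the maximum).
        max_ref_counts = Counter()
        for ref in refs:
            max_ref_counts |= ngram_counts(ref, n)
        clipped = sum((cand_counts & max_ref_counts).values())
        total = sum(cand_counts.values())
        if clipped == 0:
            return 0.0  # without smoothing, one zero precision makes BLEU 0
        log_precision_sum += math.log(clipped / total) / max_n
    # Brevity penalty against the reference length closest to the candidate's.
    c = len(cand)
    r = min((len(ref) for ref in refs), key=lambda length: (abs(length - c), length))
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(log_precision_sum)


reference = "the internal simulator shall move the uav to the ground"
candidate = "the internal simulator shall compute the ground position"
print(bleu(candidate, [reference], 1))  # BLEU-1
print(bleu(candidate, [reference], 2))  # BLEU-2
```

Here the 8-token candidate is shorter than the 10-token reference, so the brevity penalty exp(1 - 10/8) ≈ 0.78 scales both scores: BLEU-1 ≈ 0.75 × 0.78 ≈ 0.58, and BLEU-2 ≈ 0.51 because it also folds in the lower bigram precision 4/7 through a geometric mean.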

| Dataset | Settings | BLEU1 | BLEU2 | ROUGE-1 R. | ROUGE-1 P. | ROUGE-1 F. | ROUGE-2 R. | ROUGE-2 P. | ROUGE-2 F. | ROUGE-L R. | ROUGE-L P. | ROUGE-L F. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| UAV | Layer 1 | 42.78 ±3.84 | 24.14 ±5.23 | 66.54 ±6.54 | 49.85 ±4.57 | 57.00 ±4.25 | 35.51 ±7.55 | 26.59 ±5.37 | 30.41 ±5.87 | 61.76 ±6.55 | 46.45 ±5.08 | 53.02 ±4.79 |
| | Layer 1, 2, 4 | 43.87 ±6.07 ↑ | 25.05 ±6.89 ↑ | 65.69 ±6.82 | 50.34 ±6.54 ↑ | 57.00 ±6.15 | 35.59 ±9.57 ↑ | 27.18 ±7.04 ↑ | 30.82 ±7.98 ↑ | 61.13 ±7.60 | 46.86 ±6.95 ↑ | 53.05 ±6.91 ↑ |
| | Layer 1, 2, 4 + 10 Fre. | 44.80 ±4.00 ↑ | 25.12 ±5.23 ↑ | 65.35 ±6.64 | 52.18 ±4.26 ↑ | 58.03 ±4.22 ↑ | 35.48 ±8.98 | 28.11 ±5.62 ↑ | 31.37 ±6.66 ↑ | 60.95 ±7.35 | 48.93 ±5.94 ↑ | 54.28 ±5.56 ↑ |
| | Layer 1, 2, 4 + 10 Fre. + Copy | 42.15 ±6.55 | 25.04 ±6.19 | 69.93 ±5.44 ↑ | 49.91 ±6.13 | 58.25 ±5.66 ↑ | 39.03 ±6.04 ↑ | 28.21 ±8.27 ↑ | 32.75 ±6.87 ↑ | 65.12 ±5.96 ↑ | 46.47 ±6.92 | 54.23 ±6.27 |
| | Layer 1, 2, 4 + 10 Fre. + Copy + Syntax cons. (ReqGen) | 42.15 ±6.55 | 25.04 ±6.19 | 69.93 ±5.44 | 49.91 ±6.13 | 58.25 ±5.66 | 39.03 ±6.04 | 28.21 ±8.27 | 32.75 ±6.87 | 65.12 ±5.96 | 46.47 ±6.92 | 54.23 ±6.27 |
| BAS | Layer 1 | 34.15 ±1.90 | 13.37 ±1.03 | 54.75 ±1.99 | 41.30 ±1.10 | 47.08 ±1.28 | 18.01 ±1.22 | 14.49 ±1.05 | 16.06 ±1.03 | 49.41 ±2.02 | 37.52 ±1.34 | 42.65 ±1.48 |
| | Layer 1, 2, 4 | 34.55 ±2.28 ↑ | 13.73 ±0.69 ↑ | 55.02 ±2.97 ↑ | 41.76 ±2.07 ↑ | 47.48 ±2.38 ↑ | 18.30 ±1.22 ↑ | 14.68 ±0.99 ↑ | 16.29 ±1.01 ↑ | 49.83 ±2.54 ↑ | 38.03 ±1.66 ↑ | 43.14 ±1.95 ↑ |
| | Layer 1, 2, 4 + 10 Fre. | 37.99 ±1.61 ↑ | 15.57 ±0.07 ↑ | 56.30 ±2.27 ↑ | 45.05 ±1.60 ↑ | 50.05 ±1.67 ↑ | 19.58 ±1.29 ↑ | 16.57 ±0.85 ↑ | 17.95 ±0.99 ↑ | 50.60 ±2.14 ↑ | 40.68 ±1.27 ↑ | 45.10 ±1.38 ↑ |
| | Layer 1, 2, 4 + 10 Fre. + Copy | 38.41 ±2.51 ↑ | 15.06 ±1.13 | 59.47 ±3.23 ↑ | 44.93 ±2.34 | 51.18 ±2.82 ↑ | 19.46 ±1.83 | 15.75 ±1.00 | 17.41 ±1.38 | 53.30 ±2.70 ↑ | 40.48 ±2.00 | 46.01 ±2.40 ↑ |
| | Layer 1, 2, 4 + 10 Fre. + Copy + Syntax cons. (ReqGen) | 38.07 ±1.62 | 15.62 ±0.87 ↑ | 58.96 ±1.33 | 44.63 ±3.21 | 50.80 ±1.95 | 20.35 ±1.35 ↑ | 16.45 ±2.11 ↑ | 18.19 ±1.62 ↑ | 52.82 ±1.16 | 40.17 ±2.76 | 45.63 ±1.65 |
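The ROUGE-L columns score the longest common subsequence (LCS) between a generated requirement and its target, which rewards words that appear in the same order without requiring them to be contiguous. The sketch below assumes lower-cased whitespace tokenisation, a single reference and the balanced F-score (β = 1) used by common toolkits; whether the paper used exactly this variant is an assumption, and the function names are hypothetical.

```python
def lcs_length(a: list[str], b: list[str]) -> int:
    """Length of the longest common subsequence (row-by-row dynamic programming)."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            # Extend a match diagonally; otherwise keep the best of up/left.
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(candidate: str, reference: str) -> tuple[float, float, float]:
    """ROUGE-L (recall, precision, F1) from the LCS, with beta = 1 (assumed)."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    lcs = lcs_length(cand, ref)
    if lcs == 0:
        return 0.0, 0.0, 0.0
    recall = lcs / len(ref)
    precision = lcs / len(cand)
    return recall, precision, 2 * precision * recall / (precision + recall)


# Same four words in a different order: ROUGE-1 would be 1.0, ROUGE-L is lower.
print(rouge_l("shall the uav land", "the uav shall land"))  # (0.75, 0.75, 0.75)
```

In the usage line, every word of the candidate occurs in the reference, but only "the uav land" survives as an in-order subsequence, so ROUGE-L penalises the reordering that unigram ROUGE-1 ignores.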

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhao, Z.; Zhang, L.; Lian, X.; Gao, X.; Lv, H.; Shi, L. ReqGen: Keywords-Driven Software Requirements Generation. Mathematics 2023, 11, 332. https://doi.org/10.3390/math11020332

Zhao Z, Zhang L, Lian X, Gao X, Lv H, Shi L. ReqGen: Keywords-Driven Software Requirements Generation. Mathematics. 2023; 11(2):332. https://doi.org/10.3390/math11020332

Chicago/Turabian Style: Zhao, Ziyan, Li Zhang, Xiaoli Lian, Xiaoyun Gao, Heyang Lv, and Lin Shi. 2023. "ReqGen: Keywords-Driven Software Requirements Generation" Mathematics 11, no. 2: 332. https://doi.org/10.3390/math11020332

APA Style: Zhao, Z., Zhang, L., Lian, X., Gao, X., Lv, H., & Shi, L. (2023). ReqGen: Keywords-Driven Software Requirements Generation. Mathematics, 11(2), 332. https://doi.org/10.3390/math11020332