Deep Successive Convex Approximation for Image Super-Resolution

Abstract

1. Introduction

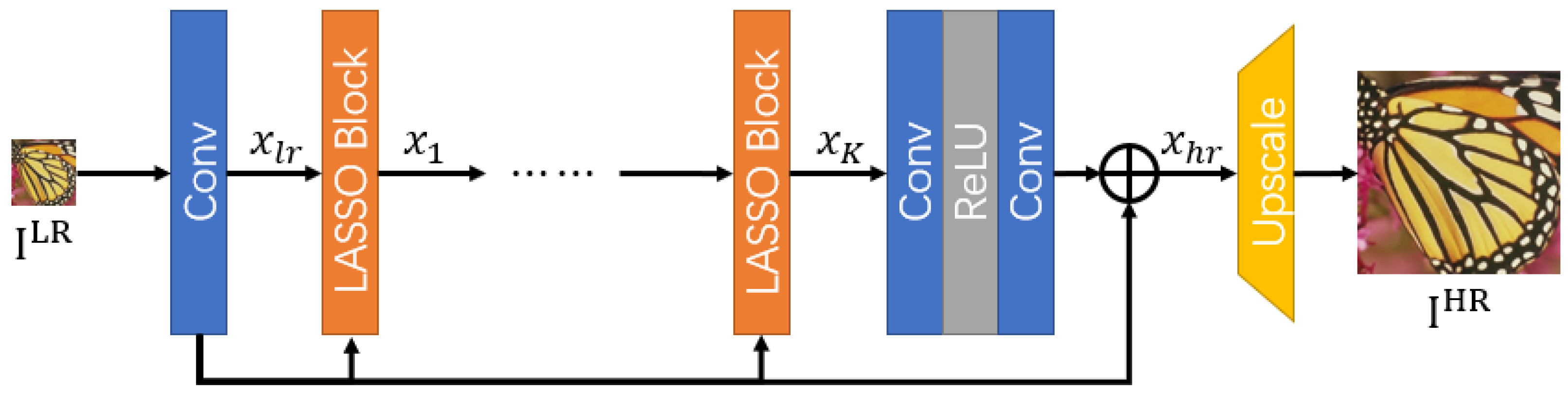

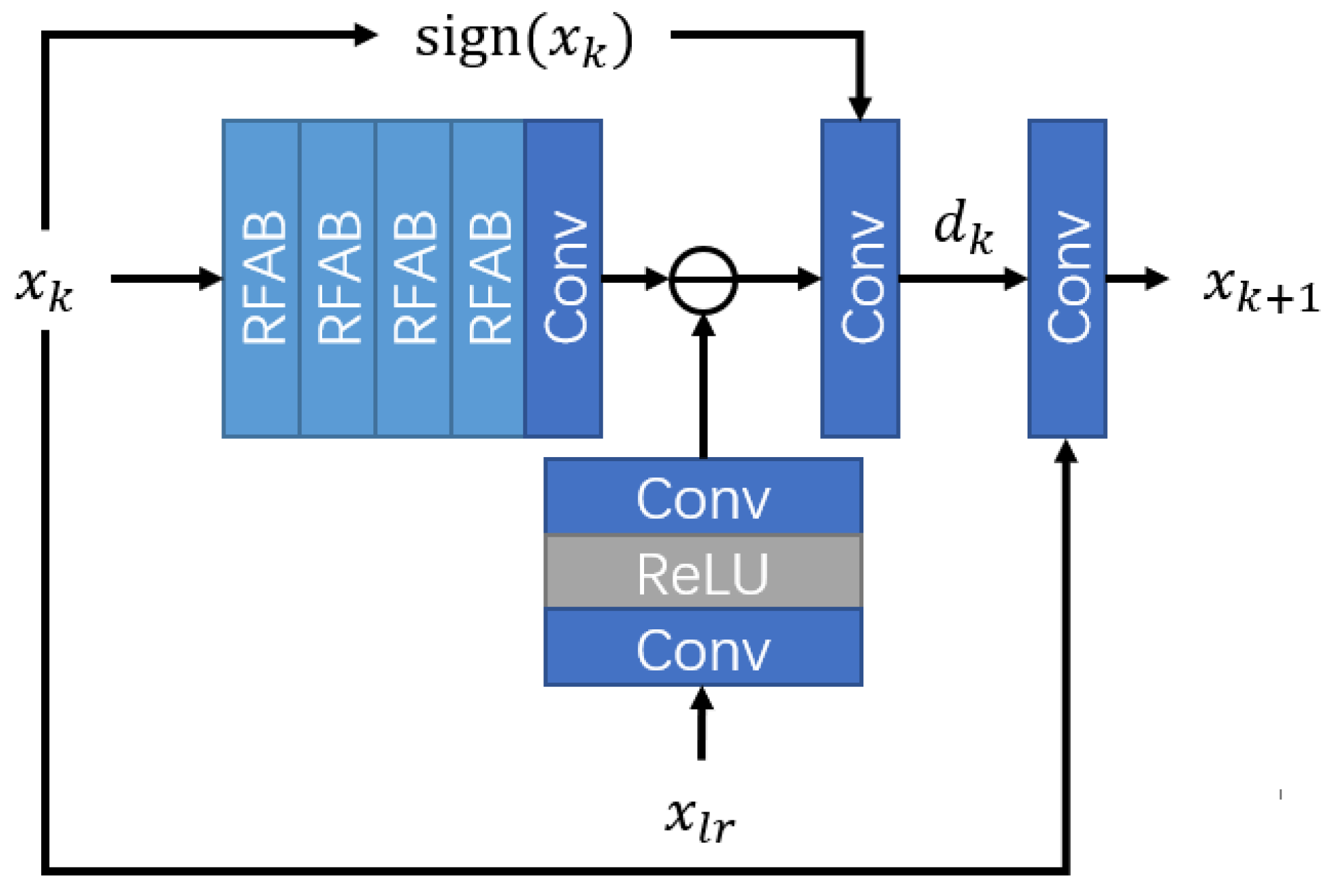

- We analyze image SR from a non-convex optimization perspective and propose an iterative solution that divides the task into several convex LASSO sub-problems, an approach that is more effective than other iterative solutions. Based on this analysis, we devise a deep successive convex approximation network (SCANet) for image super-resolution.

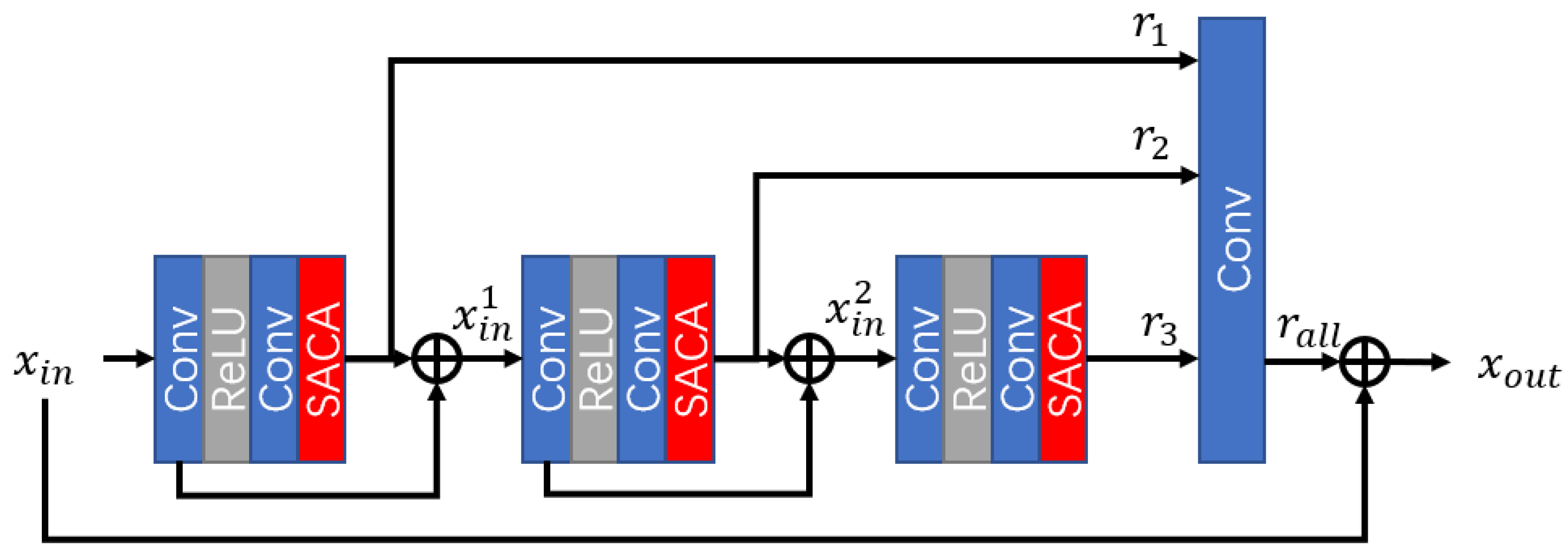

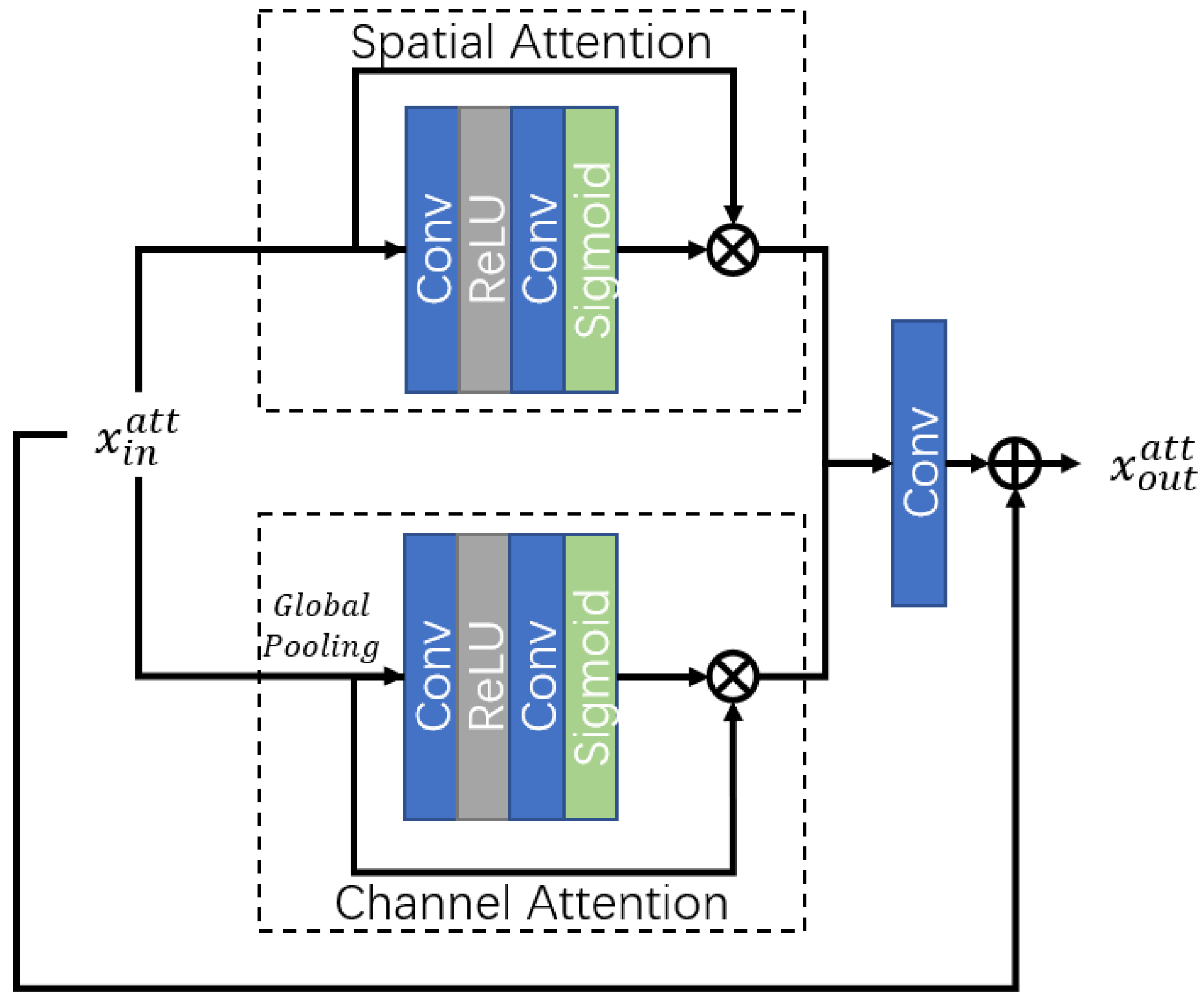

- We use residual feature aggregation (RFA) blocks and develop a spatial and channel attention (SACA) mechanism in SCANet, which improve the network's representational and restoration capacity.

- Experimental results show that SCANet achieves better subjective and objective performance than the compared methods, with the highest or near-highest PSNR/SSIM on most benchmarks.
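
The first contribution rests on successive convex approximation (SCA). In SCA, a non-convex objective is replaced, one iterate at a time, by a convex surrogate that is easier to solve. The Python sketch below illustrates this general idea on a toy problem. It is not the authors' SR formulation.

The sketch makes these assumptions:

- a linear degradation matrix `A` stands in for blur plus downsampling;
- a non-convex log-sum sparsity penalty replaces the authors' regularizer;
- linearizing the concave penalty at the current iterate turns each step into a convex weighted LASSO, solved here with ISTA (proximal gradient).

The functions `sca_log_penalty` and `weighted_lasso_ista` and the parameters `lam`, `eps` and `outer` are hypothetical names chosen for illustration.

```python
import math


def soft_threshold(v, t):
    """Proximal operator of t*|v|: shrink v toward zero by t."""
    return math.copysign(max(abs(v) - t, 0.0), v)


def matvec(A, x):
    """Dense matrix-vector product A @ x for lists of lists."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def rmatvec(A, r):
    """Transposed product A^T @ r."""
    return [sum(A[i][j] * r[i] for i in range(len(A))) for j in range(len(A[0]))]


def weighted_lasso_ista(A, y, w, lam, x0, step, iters=500):
    """ISTA for the convex sub-problem min_x 0.5*||A x - y||^2 + lam * sum_i w_i*|x_i|."""
    x = list(x0)
    for _ in range(iters):
        residual = [ax - yi for ax, yi in zip(matvec(A, x), y)]
        grad = rmatvec(A, residual)  # gradient of the quadratic data term
        x = [soft_threshold(xi - step * gi, step * lam * wi)
             for xi, gi, wi in zip(x, grad, w)]
    return x


def sca_log_penalty(A, y, lam, eps=0.1, outer=10):
    """Hypothetical SCA loop for the non-convex problem
        min_x 0.5*||A x - y||^2 + lam * sum_i log(1 + |x_i| / eps).

    Each outer step linearizes the concave log penalty at the current iterate,
    which yields a convex weighted LASSO with weights 1 / (eps + |x_i|).
    """
    # Step 1/L with L = squared Frobenius norm, an upper bound on ||A||_2^2
    step = 1.0 / sum(a * a for row in A for a in row)
    x = [0.0] * len(A[0])
    for _ in range(outer):
        w = [1.0 / (eps + abs(xi)) for xi in x]  # slope of log(1 + t/eps) at t = |x_i|
        x = weighted_lasso_ista(A, y, w, lam, x, step)  # warm start from previous iterate
    return x
```

As an example, set `A` to the 3×3 identity, `y = [3.0, 0.05, -2.0]` and `lam = 0.1`. The small middle entry is driven exactly to zero. The two large entries end up much closer to `y` than after the first, uniformly weighted LASSO step.

Each surrogate upper-bounds the original objective and touches it at the current iterate. ISTA with step 1/L also does not increase the surrogate. Together, these mean the objective should be non-increasing across outer iterations.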

2. Related Works

3. Methodology

3.1. Successive Convex Approximation for Image Super-Resolution

3.2. Deep Successive Convex Approximation Network

3.3. Implementation Details

4. Experiment

4.1. Settings

4.2. Ablation Study

4.3. Results

4.4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Lee, Y.W.; Kim, J.S.; Park, K.R. Ocular Biometrics with Low-Resolution Images Based on Ocular Super-Resolution CycleGAN. Mathematics 2022, 10, 3818.

2. Batchuluun, G.; Nam, S.H.; Park, C.; Park, K.R. Super-Resolution Reconstruction-Based Plant Image Classification Using Thermal and Visible-Light Images. Mathematics 2023, 11, 76.

3. Lee, Y.W.; Park, K.R. Recent Iris and Ocular Recognition Methods in High- and Low-Resolution Images: A Survey. Mathematics 2022, 10, 2063.

4. Lee, S.J.; Yoo, S.B. Super-resolved recognition of license plate characters. Mathematics 2021, 9, 2494.

5. Dong, C.; Loy, C.C.; He, K.; Tang, X. Learning a Deep Convolutional Network for Image Super-Resolution. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 184–199.

6. Dong, C.; Loy, C.C.; Tang, X. Accelerating the Super-Resolution Convolutional Neural Network. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 391–407.

7. Kim, J.; Lee, J.K.; Lee, K.M. Accurate Image Super-Resolution Using Very Deep Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1646–1654.

8. Lim, B.; Son, S.; Kim, H.; Nah, S.; Lee, K.M. Enhanced Deep Residual Networks for Single Image Super-Resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 1132–1140.

9. Zhang, K.; Zuo, W.; Gu, S.; Zhang, L. Learning Deep CNN Denoiser Prior for Image Restoration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2808–2817.

10. Zhang, K.; Zuo, W.; Zhang, L. Deep Plug-And-Play Super-Resolution for Arbitrary Blur Kernels. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1671–1681.

11. Wei, Y.; Li, Y.; Ding, Z.; Wang, Y.; Zeng, T.; Long, T. SAR Parametric Super-Resolution Image Reconstruction Methods Based on ADMM and Deep Neural Network. IEEE Trans. Geosci. Remote Sens. 2021, 59, 10197–10212.

12. Ma, Q.; Jiang, J.; Liu, X.; Ma, J. Deep Unfolding Network for Spatiospectral Image Super-Resolution. IEEE Trans. Comput. Imaging 2022, 8, 28–40.

13. Ran, Y.; Dai, W. Fast and Robust ADMM for Blind Super-Resolution. In Proceedings of the ICASSP 2021—2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Virtual, 6–12 June 2021; pp. 5150–5154.

14. Liu, J.; Zhang, W.; Tang, Y.; Tang, J.; Wu, G. Residual Feature Aggregation Network for Image Super-Resolution. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2020, Seattle, WA, USA, 13–19 June 2020; pp. 2356–2365.

15. Anwar, S.; Khan, S.H.; Barnes, N. A Deep Journey into Super-resolution: A Survey. ACM Comput. Surv. 2021, 53, 60:1–60:34.

16. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

17. Tai, Y.; Yang, J.; Liu, X. Image Super-Resolution via Deep Recursive Residual Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2790–2798.

18. Kim, J.; Lee, J.K.; Lee, K.M. Deeply-Recursive Convolutional Network for Image Super-Resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1637–1645.

19. Ahn, N.; Kang, B.; Sohn, K. Fast, Accurate, and Lightweight Super-Resolution with Cascading Residual Network. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; Volume 11214, pp. 256–272.

20. He, X.; Mo, Z.; Wang, P.; Liu, Y.; Yang, M.; Cheng, J. ODE-Inspired Network Design for Single Image Super-Resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1732–1741.

21. Zhang, Y.; Tian, Y.; Kong, Y.; Zhong, B.; Fu, Y. Residual Dense Network for Image Restoration. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 2480–2495.

22. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

23. Tong, T.; Li, G.; Liu, X.; Gao, Q. Image Super-Resolution Using Dense Skip Connections. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4809–4817.

24. Zhang, Y.; Li, K.; Li, K.; Wang, L.; Zhong, B.; Fu, Y. Image Super-Resolution Using Very Deep Residual Channel Attention Networks. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; Volume 11211, pp. 294–310.

25. Dai, T.; Cai, J.; Zhang, Y.; Xia, S.; Zhang, L. Second-Order Attention Network for Single Image Super-Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 11057–11066.

26. Fang, B.; Qian, Z.; Zhong, W.; Shao, W. Iterative precoding for MIMO wiretap channels using successive convex approximation. In Proceedings of the 2015 IEEE 4th Asia-Pacific Conference on Antennas and Propagation (APCAP), Bali Island, Indonesia, 30 June–3 July 2015; pp. 65–66.

27. Pang, C.; Au, O.C.; Zou, F.; Zhang, X.; Hu, W.; Wan, P. Optimal dependent bit allocation for AVS intra-frame coding via successive convex approximation. In Proceedings of the 2013 IEEE International Conference on Image Processing, Melbourne, Australia, 15–18 September 2013; pp. 1520–1523.

28. Yang, Y.; Pesavento, M.; Chatzinotas, S.; Ottersten, B. Successive Convex Approximation Algorithms for Sparse Signal Estimation With Nonconvex Regularizations. IEEE J. Sel. Top. Signal Process. 2018, 12, 1286–1302.

29. Shi, W.; Caballero, J.; Huszár, F.; Totz, J.; Aitken, A.P.; Bishop, R.; Rueckert, D.; Wang, Z. Real-Time Single Image and Video Super-Resolution Using an Efficient Sub-Pixel Convolutional Neural Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1874–1883.

30. Liu, J.; Tang, J.; Wu, G. Residual Feature Distillation Network for Lightweight Image Super-Resolution. In Proceedings of the Computer Vision—ECCV 2020 Workshops, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 41–55.

31. Agustsson, E.; Timofte, R. NTIRE 2017 Challenge on Single Image Super-Resolution: Dataset and Study. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 1122–1131.

32. Bevilacqua, M.; Roumy, A.; Guillemot, C.; Alberi Morel, M.L. Low-Complexity Single-Image Super-Resolution based on Nonnegative Neighbor Embedding. In Proceedings of the British Machine Vision Conference (BMVC), Surrey, UK, 3–7 September 2012; pp. 135.1–135.10.

33. Zeyde, R.; Elad, M.; Protter, M. On single image scale-up using sparse-representations. In Proceedings of the International Conference on Curves and Surfaces, Avignon, France, 24–30 June 2010; pp. 711–730.

34. Martin, D.; Fowlkes, C.; Tal, D.; Malik, J. A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Vancouver, BC, Canada, 7–14 July 2001; Volume 2, pp. 416–423.

35. Huang, J.; Singh, A.; Ahuja, N. Single image super-resolution from transformed self-exemplars. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 5197–5206.

36. Matsui, Y.; Ito, K.; Aramaki, Y.; Fujimoto, A.; Ogawa, T.; Yamasaki, T.; Aizawa, K. Sketch-based manga retrieval using manga109 dataset. Multimed. Tools Appl. (MTA) 2017, 76, 21811–21838.

37. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

38. Ren, H.; El-Khamy, M.; Lee, J. Image Super Resolution Based on Fusing Multiple Convolution Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 1050–1057.

39. Lai, W.; Huang, J.; Ahuja, N.; Yang, M. Deep Laplacian Pyramid Networks for Fast and Accurate Super-Resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5835–5843.

40. Fan, Y.; Shi, H.; Yu, J.; Liu, D.; Han, W.; Yu, H.; Wang, Z.; Wang, X.; Huang, T.S. Balanced Two-Stage Residual Networks for Image Super-Resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 1157–1164.

41. Tai, Y.; Yang, J.; Liu, X.; Xu, C. MemNet: A Persistent Memory Network for Image Restoration. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4549–4557.

42. Choi, J.; Kim, M. A Deep Convolutional Neural Network with Selection Units for Super-Resolution. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 1150–1156.

43. Wang, Y.; Wang, L.; Wang, H.; Li, P. Resolution-Aware Network for Image Super-Resolution. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 1259–1269.

44. Xie, C.; Zeng, W.; Lu, X. Fast Single-Image Super-Resolution via Deep Network With Component Learning. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 3473–3486.

45. Li, F.; Bai, H.; Zhao, Y. FilterNet: Adaptive Information Filtering Network for Accurate and Fast Image Super-Resolution. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 1511–1523.

46. He, Z.; Cao, Y.; Du, L.; Xu, B.; Yang, J.; Cao, Y.; Tang, S.; Zhuang, Y. MRFN: Multi-Receptive-Field Network for Fast and Accurate Single Image Super-Resolution. IEEE Trans. Multimed. 2020, 22, 1042–1054.

47. Yang, W.; Feng, J.; Yang, J.; Zhao, F.; Liu, J.; Guo, Z.; Yan, S. Deep Edge Guided Recurrent Residual Learning for Image Super-Resolution. IEEE Trans. Image Process. 2017, 26, 5895–5907.

48. Fang, F.; Li, J.; Zeng, T. Soft-Edge Assisted Network for Single Image Super-Resolution. IEEE Trans. Image Process. 2020, 29, 4656–4668.

49. Hui, Z.; Gao, X.; Yang, Y.; Wang, X. Lightweight Image Super-Resolution with Information Multi-Distillation Network. In Proceedings of the ACM International Conference on Multimedia (MM), Nice, France, 21–25 October 2019; pp. 2024–2032.

50. He, J.; Shi, W.; Chen, K.; Fu, L.; Dong, C. GCFSR: A Generative and Controllable Face Super Resolution Method Without Facial and GAN Priors. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–20 June 2022; pp. 1879–1888.

51. Menon, S.; Damian, A.; Hu, S.; Ravi, N.; Rudin, C. PULSE: Self-Supervised Photo Upsampling via Latent Space Exploration of Generative Models. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 2434–2442.

52. Kim, Y.; Hao, J.; Mallavarapu, T.; Park, J.; Kang, M. Hi-LASSO: High-Dimensional LASSO. IEEE Access 2019, 7, 44562–44573.

| RFAB | SACA | B100 [34] PSNR/SSIM | Urban100 [35] PSNR/SSIM | Manga109 [36] PSNR/SSIM |
|---|---|---|---|---|
| ✗ | ✗ | 27.50/0.7338 | 25.76/0.7763 | 30.05/0.9027 |
| ✓ | ✗ | 27.58/0.7364 | 26.12/0.7876 | 30.45/0.9079 |
| ✓ | ✓ | 27.58/0.7365 | 26.17/0.7893 | 30.50/0.9083 |
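
The ablation compares models with and without the RFAB and SACA components. This section does not give the internal design of SACA. The sketch below shows one common way to combine channel and spatial attention, and it should be read as an assumption rather than the authors' module. It has two stages:

1. An SE-style channel branch, as used in RCAN [24]: average pooling, a bottleneck of two fully connected layers, then a sigmoid.
2. A CBAM-style spatial gate computed from the channel-wise mean and max maps.

To keep the sketch short and dependency-free, the spatial branch mixes the two maps with a 1×1 weighting instead of a larger convolution. Features are plain C×H×W nested lists. All function names and weights are hypothetical.

```python
import math
import random


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def channel_attention(feat, w1, w2):
    """SE-style channel weights: global average pool -> FC -> ReLU -> FC -> sigmoid.

    feat is a C x H x W nested list; w1 has shape (C/r) x C and w2 has shape C x (C/r).
    """
    pooled = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feat]
    hidden = [max(0.0, sum(w * p for w, p in zip(wr, pooled))) for wr in w1]
    return [sigmoid(sum(w * h for w, h in zip(wr, hidden))) for wr in w2]


def spatial_attention(feat, a_mean, a_max, bias):
    """Per-pixel gate from the channel-wise mean and max maps (a 1x1 mix of 2 maps)."""
    C, H, W = len(feat), len(feat[0]), len(feat[0][0])
    gate = []
    for i in range(H):
        row = []
        for j in range(W):
            vals = [feat[c][i][j] for c in range(C)]
            row.append(sigmoid(a_mean * sum(vals) / C + a_max * max(vals) + bias))
        gate.append(row)
    return gate


def saca_block(feat, w1, w2, a_mean, a_max, bias):
    """Hypothetical channel-then-spatial attention: rescale channels, then pixels."""
    cw = channel_attention(feat, w1, w2)
    refined = [[[v * cw[c] for v in row] for row in ch] for c, ch in enumerate(feat)]
    gate = spatial_attention(refined, a_mean, a_max, bias)
    return [[[refined[c][i][j] * gate[i][j] for j in range(len(gate[0]))]
             for i in range(len(gate))] for c in range(len(refined))]


# Usage on a random 4-channel, 3x3 feature map with reduction ratio r = 2
random.seed(0)
C, H, W, r = 4, 3, 3, 2
feat = [[[random.uniform(-1.0, 1.0) for _ in range(W)] for _ in range(H)] for _ in range(C)]
w1 = [[random.gauss(0.0, 0.5) for _ in range(C)] for _ in range(C // r)]
w2 = [[random.gauss(0.0, 0.5) for _ in range(C // r)] for _ in range(C)]
out = saca_block(feat, w1, w2, a_mean=1.0, a_max=1.0, bias=0.0)
```

Both gates lie in (0, 1), so this block on its own can only attenuate features. Residual designs such as RCAN add the attended output back to the block input.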

| Scale | Model | Set5 [32] PSNR/SSIM | Set14 [33] PSNR/SSIM | B100 [34] PSNR/SSIM | Urban100 [35] PSNR/SSIM | Manga109 [36] PSNR/SSIM |
|---|---|---|---|---|---|---|
| ×2 | SRCNN [5] | 36.66(-)/0.9542(-) | 32.42(-)/0.9063(-) | 31.36(-)/0.8879(-) | 29.50(-)/0.8946(-) | 35.74(-)/0.9661(-) |
| ×2 | FSRCNN [6] | 37.00(-)/0.9558(-) | 32.63(-)/0.9088(-) | 31.53(-)/0.8920(-) | 29.88(-)/0.9020(-) | 36.67(-)/0.9694(-) |
| ×2 | VDSR [7] | 37.53(-)/0.9587(-) | 33.03(-)/0.9124(-) | 31.90(-)/0.8960(-) | 30.76(-)/0.9140(-) | 37.22(-)/0.9729(-) |
| ×2 | DRCN [18] | 37.63(-)/0.9588(-) | 33.04(-)/0.9118(-) | 31.85(-)/0.8942(-) | 30.75(-)/0.9133(-) | 37.63(-)/0.9723(-) |
| ×2 | CNF [38] | 37.66(-)/0.9590(-) | 33.38(-)/0.9136(-) | 31.91(-)/0.8962(-) | - | - |
| ×2 | LapSRN [39] | 37.52(-)/0.9590(-) | 33.08(-)/0.9130(-) | 31.80(-)/0.8950(-) | 30.41(-)/0.9100(-) | 37.27(-)/0.9740(-) |
| ×2 | DRRN [17] | 37.74(-)/0.9591(-) | 33.23(-)/0.9136(-) | 32.05(-)/0.8973(-) | 31.23(-)/0.9188(-) | 37.92(-)/0.9760(-) |
| ×2 | BTSRN [40] | 37.75(-)/- | 33.20(-)/- | 32.05(-)/- | 31.63(-)/- | - |
| ×2 | MemNet [41] | 37.78(-)/0.9597(-) | 33.28(-)/0.9142(-) | 32.08(-)/0.8978(-) | 31.31(-)/0.9195(-) | 37.72(-)/0.9740(-) |
| ×2 | SelNet [42] | 37.89(-)/0.9598(-) | 33.61(-)/0.9160(-) | 32.08(-)/0.8984(-) | - | - |
| ×2 | CARN [19] | 37.76(-)/0.9590(-) | 33.52(-)/0.9166(-) | 32.09(-)/0.8978(-) | 31.92(-)/0.9256(-) | 38.36(-)/0.9765(-) |
| ×2 | RAN [43] | 37.58(-)/0.9592(-) | 33.10(-)/0.9133(-) | 31.92(-)/0.8963(-) | - | - |
| ×2 | DNCL [44] | 37.65(-)/0.9599(-) | 33.18(-)/0.9141(-) | 31.97(-)/0.8971(-) | 30.89(-)/0.9158(-) | - |
| ×2 | FilterNet [45] | 37.86(-)/0.9610(-) | 33.34(-)/0.9150(-) | 32.09(-)/0.8990(-) | 31.24(-)/0.9200(-) | - |
| ×2 | MRFN [46] | 37.98(-)/0.9611(-) | 33.41(-)/0.9159(-) | 32.14(-)/0.8997(-) | 31.45(-)/0.9221(-) | 38.29(-)/0.9759(-) |
| ×2 | SeaNet-baseline [48] | 37.99(-)/0.9607(-) | 33.60(-)/0.9174(-) | 32.18(-)/0.8995(-) | 32.08(-)/0.9276(-) | 38.48(-)/0.9768(-) |
| ×2 | DEGREE [47] | 37.58(-)/0.9587(-) | 33.06(-)/0.9123(-) | 31.80(-)/0.8974(-) | - | - |
| ×2 | SCANet (Ours) | 38.05/0.9607 | 33.54/0.9174 | 32.16/0.8995 | 32.10/0.9281 | 38.62/0.9770 |
| ×3 | SRCNN [5] | 32.75(-)/0.9090(-) | 29.28(-)/0.8209(-) | 28.41(-)/0.7863(-) | 26.24(-)/0.7989(-) | 30.59(-)/0.9107(-) |
| ×3 | FSRCNN [6] | 33.16(-)/0.9140(-) | 29.43(-)/0.8242(-) | 28.53(-)/0.7910(-) | 26.43(-)/0.8080(-) | 30.98(-)/0.9212(-) |
| ×3 | VDSR [7] | 33.66(-)/0.9213(-) | 29.77(-)/0.8314(-) | 28.82(-)/0.7976(-) | 27.14(-)/0.8279(-) | 32.01(-)/0.9310(-) |
| ×3 | DRCN [18] | 33.82(-)/0.9226(-) | 29.76(-)/0.8311(-) | 28.80(-)/0.7963(-) | 27.15(-)/0.8276(-) | 32.31(-)/0.9328(-) |
| ×3 | CNF [38] | 33.74(-)/0.9226(-) | 29.90(-)/0.8322(-) | 28.82(-)/0.7980(-) | - | - |
| ×3 | DRRN [17] | 34.03(-)/0.9244(-) | 29.96(-)/0.8349(-) | 28.95(-)/0.8004(-) | 27.53(-)/0.8378(-) | 32.74(-)/0.9390(-) |
| ×3 | BTSRN [40] | 34.03(-)/- | 29.90(-)/- | 28.97(-)/- | 27.75(-)/- | - |
| ×3 | MemNet [41] | 34.09(-)/0.9248(-) | 30.00(-)/0.8350(-) | 28.96(-)/0.8001(-) | 27.56(-)/0.8376(-) | 32.51(-)/0.9369(-) |
| ×3 | SelNet [42] | 34.27(-)/0.9257(-) | 30.30(-)/0.8399(-) | 28.97(-)/0.8025(-) | - | - |
| ×3 | CARN [19] | 34.29(-)/0.9255(-) | 30.29(-)/0.8407(-) | 29.06(-)/0.8034(-) | 28.06(-)/0.8493(-) | 33.50(-)/0.9440(-) |
| ×3 | IMDN [49] | 34.36(-)/0.9270(-) | 30.32(-)/0.8417(-) | 29.09(-)/0.8046(-) | 28.17(+)/0.8519(-) | 33.61(+)/0.9445(+) |
| ×3 | RAN [43] | 33.71(-)/0.9223(-) | 29.84(-)/0.8326(-) | 28.84(-)/0.7981(-) | - | - |
| ×3 | DNCL [44] | 33.95(-)/0.9232(-) | 29.93(-)/0.8340(-) | 28.91(-)/0.7995(-) | 27.27(-)/0.8326(-) | - |
| ×3 | FilterNet [45] | 34.08(-)/0.9250(-) | 30.03(-)/0.8370(-) | 28.95(-)/0.8030(-) | 27.55(-)/0.8380(-) | - |
| ×3 | MRFN [46] | 34.21(-)/0.9267(-) | 30.03(-)/0.8363(-) | 28.99(-)/0.8029(-) | 27.53(-)/0.8389(-) | 32.82(-)/0.9396(-) |
| ×3 | SeaNet-baseline [48] | 34.36(-)/0.9280(+) | 30.34(+)/0.8428(+) | 29.09(-)/0.8053(+) | 28.17(+)/0.8527(+) | 33.40(-)/0.9444(+) |
| ×3 | DEGREE [47] | 33.76(-)/0.9211(-) | 29.82(-)/0.8326(-) | 28.74(-)/0.7950(-) | - | - |
| ×3 | SCANet (Ours) | 34.42/0.9272 | 30.32/0.8421 | 29.09/0.8051 | 28.14/0.8524 | 33.55/0.9443 |
| ×4 | SRCNN [5] | 30.48(-)/0.8628(-) | 27.49(-)/0.7503(-) | 26.90(-)/0.7101(-) | 24.52(-)/0.7221(-) | 27.66(-)/0.8505(-) |
| ×4 | FSRCNN [6] | 30.71(-)/0.8657(-) | 27.59(-)/0.7535(-) | 26.98(-)/0.7150(-) | 24.62(-)/0.7280(-) | 27.90(-)/0.8517(-) |
| ×4 | VDSR [7] | 31.35(-)/0.8838(-) | 28.01(-)/0.7674(-) | 27.29(-)/0.7251(-) | 25.18(-)/0.7524(-) | 28.83(-)/0.8809(-) |
| ×4 | DRCN [18] | 31.53(-)/0.8854(-) | 28.02(-)/0.7670(-) | 27.23(-)/0.7233(-) | 25.14(-)/0.7510(-) | 28.98(-)/0.8816(-) |
| ×4 | CNF [38] | 31.55(-)/0.8856(-) | 28.15(-)/0.7680(-) | 27.32(-)/0.7253(-) | - | - |
| ×4 | LapSRN [39] | 31.54(-)/0.8850(-) | 28.19(-)/0.7720(-) | 27.32(-)/0.7280(-) | 25.21(-)/0.7560(-) | 29.09(-)/0.8845(-) |
| ×4 | DRRN [17] | 31.68(-)/0.8888(-) | 28.21(-)/0.7720(-) | 27.38(-)/0.7284(-) | 25.44(-)/0.7638(-) | 29.46(-)/0.8960(-) |
| ×4 | BTSRN [40] | 31.85(-)/- | 28.20(-)/- | 27.47(-)/- | 25.74(-)/- | - |
| ×4 | MemNet [41] | 31.74(-)/0.8893(-) | 28.26(-)/0.7723(-) | 27.40(-)/0.7281(-) | 25.50(-)/0.7630(-) | 29.42(-)/0.8942(-) |
| ×4 | SelNet [42] | 32.00(-)/0.8931(-) | 28.49(-)/0.7783(-) | 27.44(-)/0.7325(-) | - | - |
| ×4 | SRDenseNet [23] | 32.02(-)/0.8934(-) | 28.50(-)/0.7782(-) | 27.53(-)/0.7337(-) | 26.05(-)/0.7819(-) | - |
| ×4 | CARN [19] | 32.13(-)/0.8937(-) | 28.60(-)/0.7806(-) | 27.58(-)/0.7349(-) | 26.07(-)/0.7837(-) | 30.47(-)/0.9084(+) |
| ×4 | IMDN [49] | 32.21(+)/0.8948(+) | 28.58(-)/0.7811(-) | 27.56(-)/0.7353(-) | 26.04(-)/0.7838(-) | 30.45(-)/0.9075(-) |
| ×4 | RAN [43] | 31.43(-)/0.8847(-) | 28.09(-)/0.7691(-) | 27.31(-)/0.7260(-) | - | - |
| ×4 | DNCL [44] | 31.66(-)/0.8871(-) | 28.23(-)/0.7717(-) | 27.39(-)/0.7282(-) | 25.36(-)/0.7606(-) | - |
| ×4 | FilterNet [45] | 31.74(-)/0.8900(-) | 28.27(-)/0.7730(-) | 27.39(-)/0.7290(-) | 25.53(-)/0.7680(-) | - |
| ×4 | MRFN [46] | 31.90(-)/0.8916(-) | 28.31(-)/0.7746(-) | 27.43(-)/0.7309(-) | 25.46(-)/0.7654(-) | 29.57(-)/0.8962(-) |
| ×4 | SeaNet-baseline [48] | 32.18(-)/0.8948(-) | 28.61(-)/0.7822(+) | 27.57(-)/0.7359(-) | 26.05(-)/0.7896(+) | 30.44(-)/0.9088(+) |
| ×4 | DEGREE [47] | 31.47(-)/0.8837(-) | 28.10(-)/0.7669(-) | 27.20(-)/0.7216(-) | - | - |
| ×4 | SCANet (Ours) | 32.20/0.8947 | 28.61/0.7821 | 27.58/0.7365 | 26.17/0.7893 | 30.50/0.9083 |
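
The PSNR values above depend on the evaluation protocol, which this section does not spell out. A widespread convention in SR benchmarks has two steps:

- convert outputs to the Y channel of YCbCr (BT.601, as in MATLAB's `rgb2ycbcr`);
- crop a border of `scale` pixels before computing PSNR.

The sketch below assumes that convention; it is not confirmed as the authors' exact protocol. SSIM is not covered. The helper names `rgb_to_y` and `psnr` are illustrative.

```python
import math


def rgb_to_y(r, g, b):
    """BT.601 luma for 8-bit RGB, matching MATLAB's rgb2ycbcr (Y in [16, 235])."""
    return 16.0 + (65.481 * r + 128.553 * g + 24.966 * b) / 255.0


def psnr(ref, test, peak=255.0, shave=0):
    """PSNR in dB between two equally sized 2-D images given as lists of rows.

    `shave` pixels are cropped from every border first (often set to the scale factor).
    """
    h, w = len(ref), len(ref[0])
    sq_err = [(ref[i][j] - test[i][j]) ** 2
              for i in range(shave, h - shave)
              for j in range(shave, w - shave)]
    mse = sum(sq_err) / len(sq_err)
    if mse == 0:
        return math.inf  # identical images after cropping
    return 10.0 * math.log10(peak ** 2 / mse)
```

For instance, an error of one gray level at every pixel gives an MSE of 1 and a PSNR of 20·log10(255) ≈ 48.13 dB. In general, a PSNR gap of 0.1 dB corresponds to roughly a 2.3% difference in MSE.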

| Metric | SRCNN | VDSR | SCANet |
|---|---|---|---|
| NIQE ↓ | 7.17 | 5.85 | 5.48 |
| BRISQUE ↓ | 58.87 | 41.64 | 39.51 |

| Methods | SRCNN | VDSR | SCANet |
|---|---|---|---|
| SRCNN | 0 | 1 | 1 |
| VDSR | −1 | 0 | 1 |
| SCANet | −1 | −1 | 0 |
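
The comparison matrix is given without a stated derivation. One reading is consistent with both the NIQE and the BRISQUE rows of the preceding table. Under that reading, the entry in row i, column j is:

- 1 when the column method scores better (lower) than the row method;
- −1 when it scores worse;
- 0 on the diagonal.

The sketch below builds such a matrix under that assumption. The authors may have derived theirs differently, for example from a statistical test. `pairwise_sign_matrix` is a hypothetical helper.

```python
def pairwise_sign_matrix(scores, lower_is_better=True):
    """Entry [i][j] is 1 if method j scores better than method i, -1 if worse, 0 if tied."""
    n = len(scores)
    matrix = []
    for i in range(n):
        row = []
        for j in range(n):
            if lower_is_better:
                better, worse = scores[j] < scores[i], scores[j] > scores[i]
            else:
                better, worse = scores[j] > scores[i], scores[j] < scores[i]
            row.append(1 if better else (-1 if worse else 0))
        matrix.append(row)
    return matrix


# NIQE scores for SRCNN, VDSR and SCANet from the no-reference table (lower is better)
niqe = [7.17, 5.85, 5.48]
print(pairwise_sign_matrix(niqe))  # [[0, 1, 1], [-1, 0, 1], [-1, -1, 0]]
```

Passing the BRISQUE row `[58.87, 41.64, 39.51]` produces the same matrix.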

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, X.; Wang, J.; Liu, X. Deep Successive Convex Approximation for Image Super-Resolution. Mathematics 2023, 11, 651. https://doi.org/10.3390/math11030651
