Broad Embedded Logistic Regression Classifier for Prediction of Air Pressure Systems Failure

Abstract

1. Introduction

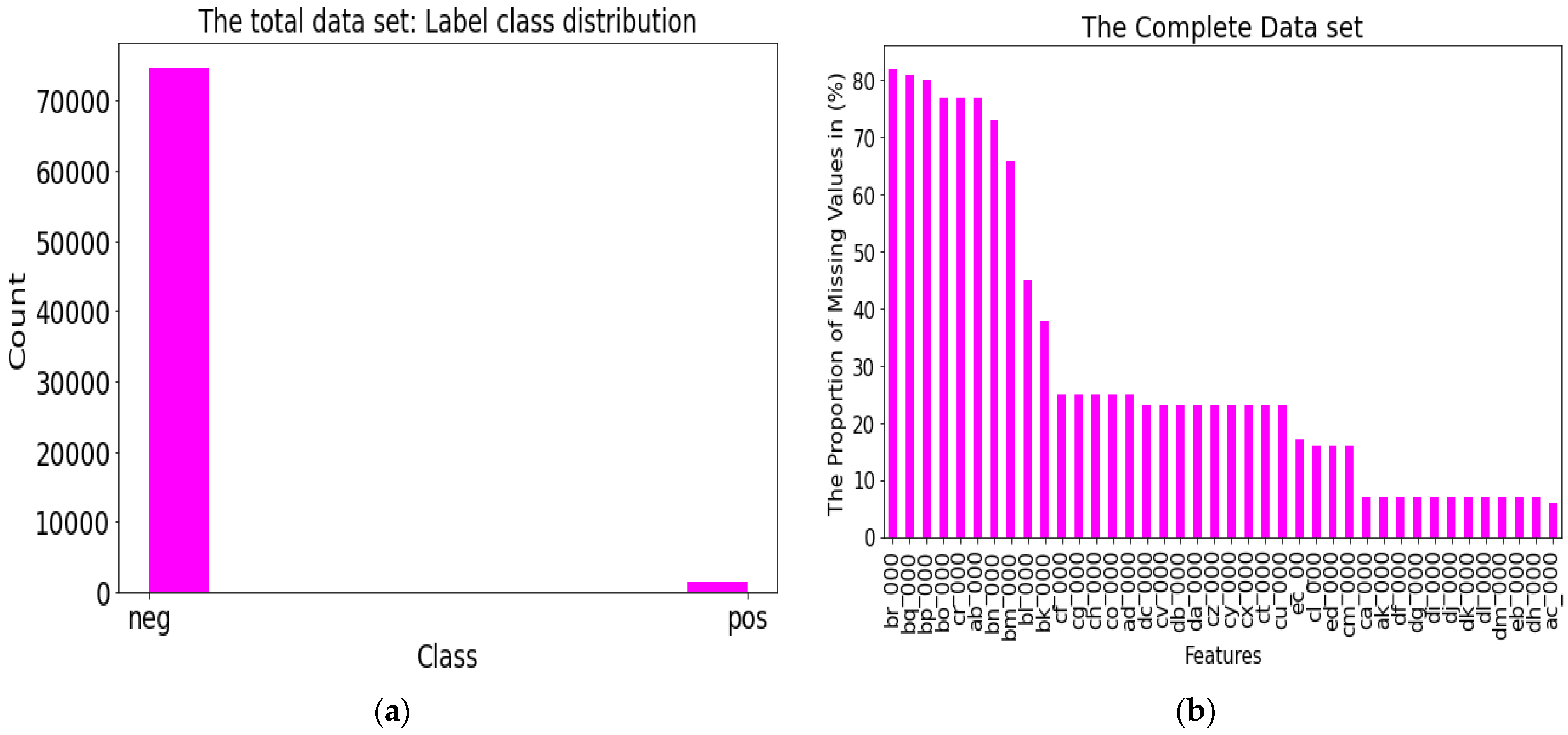

- High volume of missing values in the data.

- Strongly imbalanced distribution of classes.

- We propose broad embedded logistic regression (BELR), a fusion of the broad learning system (BLS) and logistic regression, and apply it to predict APS failure.

- We propose a hybrid objective function based on the classical logistic regression objective function.

- We impute the missing values using the KNN algorithm.

- We use the feature-mapped nodes of the BLS to extract discriminative features from the input data, and the enhancement nodes to further separate the two classes, so that the skewed class distribution of the data set does not degrade the performance of the proposed BELR.

- We explore principal component analysis (PCA) for dimensionality reduction and combine the BLS with a logistic regression classifier to predict air pressure system failure.
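The preprocessing and classification steps listed above can be sketched with scikit-learn. This is a minimal illustration under stated assumptions, not the authors' implementation: the BLS feature expansion and the hybrid objective function are omitted, plain `LogisticRegression` with `class_weight="balanced"` stands in for the classifier, and the neighbour count, the number of principal components, and the toy data generator `make_toy_data` are hypothetical choices.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.impute import KNNImputer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def make_baseline_pipeline(n_neighbors=5, n_components=10, seed=0):
    """KNN imputation -> scaling -> PCA -> logistic regression.

    All hyperparameters are illustrative assumptions.
    """
    return make_pipeline(
        KNNImputer(n_neighbors=n_neighbors),      # fill missing values
        StandardScaler(),                         # put features on one scale
        PCA(n_components=n_components, random_state=seed),
        # class_weight="balanced" is a stand-in for imbalance handling,
        # not the hybrid objective proposed in the paper.
        LogisticRegression(class_weight="balanced", max_iter=1000),
    )


def make_toy_data(n_samples=400, n_features=20, pos_rate=0.1,
                  missing_rate=0.2, seed=0):
    """Hypothetical imbalanced data set with missing entries (NaN)."""
    rng = np.random.default_rng(seed)
    y = (rng.random(n_samples) < pos_rate).astype(int)
    # Shift the positive class so the two classes are separable.
    X = rng.normal(size=(n_samples, n_features)) + 2.0 * y[:, None]
    X[rng.random(X.shape) < missing_rate] = np.nan
    return X, y


X, y = make_toy_data()
model = make_baseline_pipeline().fit(X, y)
pred = model.predict(X)
```

In this sketch the imputer runs first so that every later step receives complete data; a BLS-style feature expansion could be placed between the PCA step and the classifier.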

2. Related Work

2.1. APS Failure Prediction

2.2. Broad Learning System (BLS) and Logistic Regression (LogR)

2.2.1. Broad Learning System (BLS)

2.2.2. Operation of BLS Networks

- (a) Feature-mapped nodes

- (b) Enhancement nodes

- (c) Network Output
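The three components listed above can be illustrated with a small NumPy sketch of a generic BLS forward pass. It assumes the standard BLS layout (linear feature-mapped nodes, `tanh` enhancement nodes, and an output computed from their concatenation), purely random weights, and a ridge-regularised least-squares fit for the output weights. The function names, node counts, and ridge value are hypothetical, and the weight construction used in the paper may differ.

```python
import numpy as np


def bls_nodes(X, n_feature_nodes=8, n_enhancement_nodes=16, seed=0):
    """Return the concatenated node matrix A = [Z | H] of a generic BLS."""
    rng = np.random.default_rng(seed)
    n_inputs = X.shape[1]

    # (a) Feature-mapped nodes: random linear projection of the inputs.
    W_f = rng.normal(size=(n_inputs, n_feature_nodes))
    b_f = rng.normal(size=n_feature_nodes)
    Z = X @ W_f + b_f

    # (b) Enhancement nodes: nonlinear transform of the feature-mapped nodes.
    W_e = rng.normal(size=(n_feature_nodes, n_enhancement_nodes))
    b_e = rng.normal(size=n_enhancement_nodes)
    H = np.tanh(Z @ W_e + b_e)

    # (c) The network output is a linear function of [Z | H].
    return np.hstack([Z, H])


def fit_output_weights(A, y, ridge=1e-3):
    """Ridge-regularised least squares: solve (A^T A + ridge*I) w = A^T y."""
    gram = A.T @ A + ridge * np.eye(A.shape[1])
    return np.linalg.solve(gram, A.T @ y)


rng = np.random.default_rng(1)
X = rng.normal(size=(50, 5))
y = (X[:, 0] > 0).astype(float)   # toy binary target

A = bls_nodes(X)                  # shape: (samples, f + e)
w = fit_output_weights(A, y)
scores = A @ w                    # network output for each sample
```

Here `n_feature_nodes` and `n_enhancement_nodes` play the roles of f and e from the abbreviation list.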

2.2.3. Construction of Weight Matrices and Vectors

- (a) Construction of the Projection Matrix

- (b) Construction of the Weight Matrices of the Enhancement Nodes

- (c) Construction of the Output Weight Vector

2.2.4. Logistic Regression

3. The Proposed Technique

4. Experiment and Settings

Performance Comparison of the Algorithms

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ML | Machine Learning |
| APS | Air Pressure System |
| BELR | Broad Embedded Logistic Regression |
| BLS | Broad Learning System |
| LogR | Logistic Regression |
| PCA | Principal Component Analysis |
| RVFLNN | Random Vector Functional-Link Neural Network |
| IIoT | Industrial Internet of Things |
| SVM | Support Vector Machine |
| MLP | Multi-Layer Perceptron |
| SMOTE | Synthetic Minority Oversampling Technique |
| ELM | Extreme Learning Machine |
| KNN | K-Nearest Neighbour |
| ADMM | Alternating Direction Method of Multipliers |
| RF | Random Forest |
| GNB | Gaussian Naïve Bayes |
| ROC | Receiver Operating Characteristic |
| max AUC | Maximum Area Under the Curve |
| MAR | Missing at Random |
| MNR | Missing Not at Random |
| MCAR | Missing Completely at Random |
| GAN | Generative Adversarial Network |
| f | Total number of feature-mapped nodes in the BLS network |
| e | Total number of enhancement nodes in the BLS network |

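| Evaluation Metric | Equation |
|---|---|
| Precision | $\frac{TP}{TP + FP}$ |
| Recall | $\frac{TP}{TP + FN}$ |
| F1-Score | $\frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$ |
| Accuracy | $\frac{TP + TN}{TP + TN + FP + FN}$ |

Here TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively.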
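The four metrics in the table can be computed from confusion-matrix counts using their standard definitions. The sketch below is a plain-Python illustration; the function name `classification_metrics` and the example counts are hypothetical and are not taken from the experiments.

```python
def classification_metrics(tp, fp, tn, fn):
    """Precision, recall, F1-score and accuracy from confusion-matrix counts.

    Ratios with an empty denominator are reported as 0.0 by convention.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "accuracy": accuracy,
    }


# Hypothetical counts for an imbalanced problem (10% positives).
m = classification_metrics(tp=80, fp=20, tn=880, fn=20)
```

On strongly imbalanced data, accuracy can remain high even when recall on the minority class is poor, which is why precision, recall, and F1-score are reported alongside it.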

| Total Number of Data Points | Rows of Training Data | Rows of Test Data |
|---|---|---|
| 76,000 | 68,400 | 7,600 |

| Training Set: Negative Cases | Training Set: Positive Cases | Test Set: Negative Cases | Test Set: Positive Cases |
|---|---|---|---|
| 67,162 | 123 | 7,462 | 137 |

| Score (%) | GNB | LogR | RF | SVM | KNN | BELR |
|---|---|---|---|---|---|---|
| Precision | 32.45 | 77.81 | 82.60 | 92.05 | 80.23 | 80.91 |
| Sensitivity (Recall) | 79.35 | 57.89 | 57.67 | 27.78 | 55.78 | 62.25 |
| F1-Score | 46.06 | 66.39 | 67.92 | 42.68 | 65.81 | 70.39 |
| Accuracy | 96.64 | 98.94 | 99.01 | 98.65 | 98.95 | 99.05 |
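As a quick consistency check, the F1-scores in the table can be recomputed as the harmonic mean of the reported precision and recall. The sketch below assumes the standard F1 definition and copies the values from the table; the helper name `f1_from` and the dictionary layout are illustrative.

```python
# (precision, recall, reported F1), all in percent, copied from the table.
reported = {
    "GNB": (32.45, 79.35, 46.06),
    "LogR": (77.81, 57.89, 66.39),
    "RF": (82.60, 57.67, 67.92),
    "SVM": (92.05, 27.78, 42.68),
    "KNN": (80.23, 55.78, 65.81),
    "BELR": (80.91, 62.25, 70.39),
}


def f1_from(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2 * precision * recall / (precision + recall)


# Absolute gap between recomputed and reported F1 for each classifier.
gaps = {
    name: abs(f1_from(p, r) - f1)
    for name, (p, r, f1) in reported.items()
}

for name, gap in gaps.items():
    print(f"{name}: gap = {gap:.3f} percentage points")
```

Small gaps between recomputed and reported values may arise if the reported scores were rounded or averaged before tabulation.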

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Muideen, A.A.; Lee, C.K.M.; Chan, J.; Pang, B.; Alaka, H. Broad Embedded Logistic Regression Classifier for Prediction of Air Pressure Systems Failure. Mathematics 2023, 11, 1014. https://doi.org/10.3390/math11041014
