DDSG-GAN: Generative Adversarial Network with Dual Discriminators and Single Generator for Black-Box Attacks

Abstract

1. Introduction

- (1)

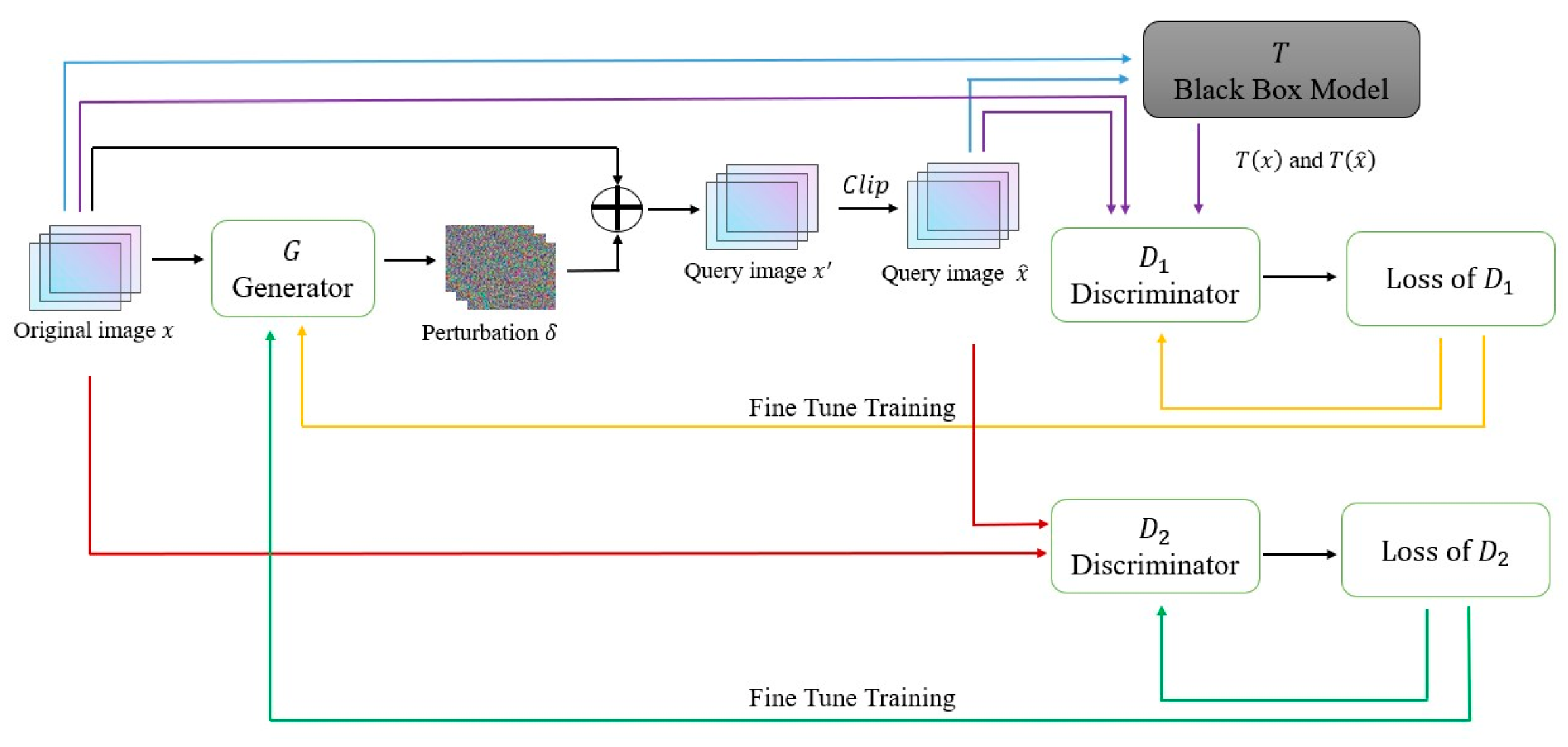

- This study presents a novel image AE generation method based on the GANs of dual discriminators. The generator generates adversarial perturbation, and two discriminators constrain the generator in different aspects. The constraint of discriminator guarantees the success of the attack, and the constraint of discriminator ensures the visual quality of the generated AE.

- (2)

- This study designs a new method to train the surrogate model; we use original images and AEs to train our substitute model together. The training process contains two stages: pre-training and fine-tuning. To make the most of the query results of the AE, we put the query results of the AEs into the circular queue for the subsequent training, which greatly reduces the query requirement of the target model and makes efficient use of the query results.

- (3)

- This study introduces a clipping mechanism so that the generated AEs are within the neighborhood of the original image.
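
The clipping mechanism in contribution (3) can be illustrated with a minimal sketch. It assumes an L-infinity neighborhood of radius `eps` and pixel intensities in `[lo, hi]`; the function name, its arguments, and the choice of norm are illustrative assumptions, not the authors' implementation.

```python
def clip_adversarial(original, perturbed, eps, lo=0.0, hi=1.0):
    """Project `perturbed` into the eps-neighborhood of `original`.

    Assumption: the neighborhood is an L-infinity ball, and valid
    pixel intensities lie in [lo, hi].
    """
    clipped = []
    for x, x_adv in zip(original, perturbed):
        # Keep each pixel within eps of its original value.
        bounded = min(max(x_adv, x - eps), x + eps)
        # Keep the result a valid pixel intensity.
        clipped.append(min(max(bounded, lo), hi))
    return clipped


if __name__ == "__main__":
    image = [0.5, 0.9, 0.1]
    candidate = [0.95, 1.3, 0.05]
    print(clip_adversarial(image, candidate, eps=0.3))
```

Applying the neighborhood bound first and the pixel-range bound second means the result is always a valid image, even when the two bounds conflict near the edge of the intensity range.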

2. Related Work

2.1. White-Box Attack

2.2. Black-Box Attack

3. Methodology

3.1. Preliminaries

3.1.1. Adversarial Examples and Adversarial Attack

3.1.2. Attack Scenarios

- (1) Score-based attacks. In this scenario, the attacker knows nothing about the structure or parameters of the target model, but for any input the attacker can obtain the classification confidence.

- (2) Decision-based attacks. As in the score-based scenario, the attacker knows nothing about the structure or parameters of the target model, but for any input the attacker can obtain only the classification label.
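
The two scenarios differ only in what a query returns. A minimal sketch of that difference follows; `toy_target` is a hypothetical stand-in for the black-box model, and both function names are illustrative assumptions.

```python
import math
from typing import List, Sequence


def toy_target(x: Sequence[float]) -> List[float]:
    """Hypothetical black-box model: two-class confidences from a fixed score."""
    p1 = 1.0 / (1.0 + math.exp(-sum(x)))
    return [1.0 - p1, p1]


def score_based_query(model, x: Sequence[float]) -> List[float]:
    """Score-based access: the attacker sees the full confidence vector."""
    return list(model(x))


def decision_based_query(model, x: Sequence[float]) -> int:
    """Decision-based access: the attacker sees only the top-1 label."""
    scores = model(x)
    return max(range(len(scores)), key=scores.__getitem__)


if __name__ == "__main__":
    x = [1.0, 2.0]
    print(score_based_query(toy_target, x))     # confidence per class
    print(decision_based_query(toy_target, x))  # label only
```

In both cases the attacker calls the model as an opaque function; neither wrapper exposes weights or gradients.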

3.2. Model Architecture

3.2.1. The Training of Discriminator

| Algorithm 1 | Training procedure of the Discriminator |

| Input: | Training dataset and , where is the original image and is the sample after adding perturbation, target model , the discriminator and its parameters , the generator and its parameters ; loss function is defined in Equation (7). |

| Parameters: | Batch number , learning rate , iterations , weight factor and , clipping upper bound and lower bound . |

| Output: | The trained Discriminator . |

| 1: | for to do |

| 2: | for to do |

| 3: | |

| 4: | if do |

| 5: | |

| 6: | |

| 7: | elif do |

| 8: | |

| 9: | |

| 10: | end if |

| 11: | |

| 12: | |

| 13: | |

| 14: | end for |

| 15: | end for |

| 16: | return |

3.2.2. The Training of Discriminator

| Algorithm 2 | Training procedure of the Discriminator |

| Input: | Training dataset and , where is the original image and are the query samples, the discriminator and its parameters , loss function is defined in Equation (9). |

| Parameters: | Batch number , learning rate , iterations . |

| Output: | The trained Discriminator . |

| 1: | for to do |

| 2: | for to do |

| 3: | |

| 4: | |

| 5: | end for |

| 6: | end for |

| 7: | return |

3.2.3. The Training of Generator

3.2.4. Improved Model

| Algorithm 3 | Training procedure of the DDSG-GAN. |

| Input: | Target model , generator and its parameters , discriminator and its parameters , discriminator and its parameters , original image–label pair , the learning rate , and . |

| Output: | The trained generator . |

| 1: | Initialize the model of , and . |

| 2: | for to do |

| 3: | for to do |

| 4: | |

| 5: | if do |

| 6: | |

| 7: | |

| 8: | elif do |

| 9: | |

| 10: | |

| 11: | end if |

| 12: | query example |

| 13: | if and do |

| 14: | Input into the target model to get the query result |

| 15: | Add to the circular queue |

| 16: | end if |

| 17: | if do pre-training of |

| 18: | |

| 19: | elif do fine tuning of |

| 20: | is taken from the circular queue |

| 21: | end if |

| 22: | |

| 23: | end for |

| 24: | for to do |

| 25: | |

| 26: | |

| 27: | end for |

| 28: | for to do |

| 29: | |

| 30: | |

| 31: | end for |

| 32: | end for |

| 33: | return |
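
Steps 13 to 20 of Algorithm 3 query the target model only under certain conditions and store the results in a circular queue for later fine-tuning. The sketch below shows one way such a queue might work. It assumes a fixed query interval (the hyperparameter table lists a query interval) and uses Python's `collections.deque` with `maxlen`; the class and function names are hypothetical, and the exact query conditions of the algorithm are not reproduced here.

```python
from collections import deque


class QueryQueue:
    """Fixed-capacity circular queue of (example, query result) pairs."""

    def __init__(self, max_len):
        # A deque with maxlen silently drops the oldest entry when full.
        self._items = deque(maxlen=max_len)

    def add(self, example, result):
        self._items.append((example, result))

    def batch(self, size):
        """Return up to `size` of the most recent pairs, oldest first."""
        if size <= 0:
            return []
        return list(self._items)[-size:]

    def __len__(self):
        return len(self._items)


def collect_queries(examples, target, queue, interval):
    """Query `target` on every `interval`-th example and store the result.

    Assumption: a plain step-count condition stands in for the
    conditions of the original algorithm. Returns the queries spent.
    """
    spent = 0
    for step, x in enumerate(examples):
        if step % interval == 0:
            queue.add(x, target(x))
            spent += 1
    return spent


if __name__ == "__main__":
    queue = QueryQueue(max_len=3)
    spent = collect_queries(range(10), lambda x: x % 3, queue, interval=2)
    print(spent, queue.batch(2))
```

Because stored pairs are reused across training steps, the number of target-model queries grows with the number of query steps rather than with the number of surrogate updates.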

3.2.5. Generate Adversarial Examples

4. Experiment

4.1. Experiment Setting

4.2. Experiments on MNIST

4.3. Experiments on CIFAR10 and Tiny-ImageNet

4.4. Model Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. McAllister, R.; Gal, Y.; Kendall, A.; Van Der Wilk, M.; Shah, A. Concrete problems for autonomous vehicle safety: Advantages of Bayesian deep learning. In Proceedings of the Twenty-Sixth International Joint Conferences on Artificial Intelligence, Melbourne, Australia, 19–25 August 2017; pp. 4745–4753.

2. Grosse, K.; Papernot, N.; Manoharan, P.; Backes, M.; McDaniel, P. Adversarial perturbations against deep neural networks for malware classification. arXiv 2016, arXiv:1606.04435.

3. Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and harnessing adversarial examples. arXiv 2014, arXiv:1412.6572.

4. LeCun, Y. The MNIST Database of Handwritten Digits. Available online: http://yann.lecun.com/exdb/mnist/ (accessed on 10 January 2023).

5. Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images; Technical Report; University of Toronto: Toronto, ON, Canada, 2009.

6. Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

7. Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.; Fergus, R. Intriguing properties of neural networks. arXiv 2013, arXiv:1312.6199.

8. Carlini, N.; Wagner, D. Towards evaluating the robustness of neural networks. In Proceedings of the 2017 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–24 May 2017; pp. 39–57.

9. Kurakin, A.; Goodfellow, I.J.; Bengio, S. Adversarial examples in the physical world. In Artificial Intelligence Safety and Security; Roman, V.Y., Ed.; Chapman and Hall/CRC: Boca Raton, FL, USA, 2018; pp. 99–112.

10. Madry, A.; Makelov, A.; Schmidt, L.; Tsipras, D.; Vladu, A. Towards Deep Learning Models Resistant to Adversarial Attacks. arXiv 2017, arXiv:1706.06083.

11. Baluja, S.; Fischer, I. Adversarial Transformation Networks: Learning to Generate Adversarial Examples. arXiv 2017, arXiv:1703.09387.

12. Xiao, C.; Li, B.; Zhu, J.Y.; He, W.; Liu, M.; Song, D. Generating Adversarial Examples with Adversarial Networks. arXiv 2018, arXiv:1801.02610.

13. Moosavi-Dezfooli, S.M.; Fawzi, A.; Frossard, P. DeepFool: A Simple and Accurate Method to Fool Deep Neural Networks. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2574–2582.

14. Su, J.; Vargas, D.V.; Sakurai, K. One pixel attack for fooling deep neural networks. IEEE Trans. Evol. Comput. 2019, 23, 828–841.

15. Chen, P.Y.; Zhang, H.; Sharma, Y.; Yi, J.; Hsieh, C.J. Zoo: Zeroth order optimization based black-box attacks to deep neural networks without training substitute models. In Proceedings of the 10th ACM Workshop on Artificial Intelligence and Security, Dallas, TX, USA, 3 November 2017; pp. 15–26.

16. Tu, C.C.; Ting, P.; Chen, P.Y.; Liu, S.; Zhang, H.; Yi, J.; Cheng, S.M. Autozoom: Autoencoder-based zeroth order optimization method for attacking black-box neural networks. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 February 2019; pp. 742–749.

17. Ilyas, A.; Engstrom, L.; Madry, A. Prior convictions: Black-box adversarial attacks with bandits and priors. arXiv 2018, arXiv:1807.07978.

18. Guo, C.; Gardner, J.; You, Y.; Wilson, A.G.; Weinberger, K. Simple black-box adversarial attacks. In Proceedings of the International Conference on Machine Learning, Boca Raton, FL, USA, 16–19 December 2019; pp. 2484–2493.

19. Yang, J.; Jiang, Y.; Huang, X.; Ni, B.; Zhao, C. Learning black-box attackers with transferable priors and query feedback. In Proceedings of the NeurIPS 2020, Advances in Neural Information Processing Systems 33, Beijing, China, 6 December 2020; pp. 12288–12299.

20. Du, J.; Zhang, H.; Zhou, J.T.; Yang, Y.; Feng, J. Query efficient meta attack to deep neural networks. arXiv 2019, arXiv:1906.02398.

21. Ma, C.; Chen, L.; Yong, J.H. Simulating unknown target models for query-efficient black-box attacks. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 11835–11844.

22. Biggio, B.; Corona, I.; Maiorca, D.; Nelson, B.; Šrndić, N.; Laskov, P. Evasion attacks against machine learning at test time. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Prague, Czech Republic, 22–26 September 2013; pp. 387–402.

23. Xie, C.; Zhang, Z.; Zhou, Y.; Bai, S.; Wang, J.; Ren, Z.; Yuille, A.L. Improving transferability of adversarial examples with input diversity. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2730–2739.

24. Demontis, A.; Melis, M.; Pintor, M.; Matthew, J.; Biggio, B.; Alina, O.; Roli, F. Why do adversarial attacks transfer? Explaining transferability of evasion and poisoning attacks. In Proceedings of the 28th USENIX Security Symposium, Santa Clara, CA, USA, 14–16 August 2019; pp. 321–338.

25. Kariyappa, S.; Prakash, A.; Qureshi, M.K. Maze: Data-free model stealing attack using zeroth-order gradient estimation. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 18–24 June 2022; pp. 13814–13823.

26. Wang, Y.; Li, J.; Liu, H.; Wang, Y.; Wu, Y.; Huang, F.; Ji, R. Black-box dissector: Towards erasing-based hard-label model stealing attack. In Proceedings of the 2021 European Conference on Computer Vision, Montreal, Canada, 11 October 2021; pp. 192–208.

27. Yuan, X.; Ding, L.; Zhang, L.; Li, X.; Wu, D.O. ES attack: Model stealing against deep neural networks without data hurdles. IEEE Trans. Emerg. Top. Comput. Intell. 2022, 6, 1258–1270.

28. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Bengio, Y. Generative adversarial nets. Commun. ACM 2020, 63, 139–144.

29. Zhao, Z.; Dua, D.; Singh, S. Generating Natural Adversarial Examples. arXiv 2017, arXiv:1710.11342.

30. Zhou, M.; Wu, J.; Liu, Y.; Liu, S.; Zhu, C. Dast: Data-Free Substitute Training for Adversarial Attacks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 234–243.

31. Tramèr, F.; Kurakin, A.; Papernot, N.; Goodfellow, I.; Boneh, D.; McDaniel, P. Ensemble adversarial training: Attacks and defenses. arXiv 2017, arXiv:1705.07204.

32. Papernot, N.; McDaniel, P.; Goodfellow, I.; Jha, S.; Celik, Z.B.; Swami, A. Practical black-box attacks against machine learning. In Proceedings of the 2017 ACM on Asia Conference on Computer and Communications Security, Abu Dhabi, United Arab Emirates, 2–6 April 2017; pp. 506–519.

33. Brendel, W.; Rauber, J.; Bethge, M. Decision-based adversarial attacks: Reliable attacks against black-box machine learning models. arXiv 2017, arXiv:1712.04248.

34. Cheng, M.; Le, T.; Chen, P.Y.; Yi, J.; Zhang, H.; Hsieh, C.J. Query efficient hard-label black-box attack: An optimization based approach. arXiv 2018, arXiv:1807.04457.

| A | B | C | D |
|---|---|---|---|
| Conv(64,5,5) + ReLU; Conv(64,5,5) + ReLU; Dropout(0.25); FC(128) + ReLU; Dropout(0.5); FC + Softmax | Dropout(0.2); Conv(64,8,8) + ReLU; Conv(128,6,6) + ReLU; Conv(128,5,5) + ReLU; Dropout(0.5); FC + Softmax | Conv(32,3,3) + ReLU; Conv(32,3,3) + ReLU; Conv(64,3,3) + ReLU; Conv(64,3,3) + ReLU; FC(200) + ReLU; Dropout(0.5); FC(200) + ReLU; FC + Softmax | Conv(128,3,3) + tanh; Conv(64,3,3) + tanh; FC(128) + ReLU; FC + Softmax |

| Name | | | Description |
|---|---|---|---|
| | 0.0001 | 0.0001 | the learning rate for updating |
| | 0.0001 | 0.0001 | the learning rate for updating |
| | 0.001 | 0.001 | the learning rate for updating |
| | 20 | 10 | interval between queries to the target model |
| length | 60,001 | 60,001 | the maximum length of the circular queue |
| | 20 | 5 | interval between updates of queue H |
| | 1 | 1 | weight factor in Equation (15) |
| | 1 | 1 | weight factor in Equation (15) |
| | 10 () / 20 () | 1 | weight factor in Equation (15) |

| Attack Type | Metric | Target Model A | Target Model B | Target Model C |
|---|---|---|---|---|
| Untargeted attack | Accuracy | 99.33% | 99.01% | 99.16% |
| | Similarity | 99.19% | 99.04% | 99.13% |
| Targeted attack | Accuracy | 99.29% | 99.12% | 99.27% |
| | Similarity | 99.19% | 99.16% | 99.22% |

| Target Model | Accuracy | Method | ASR | ϵ |
|---|---|---|---|---|
| A | 98.97% | Black-box (Surrogate Model [32] + FGSM) | 69.4% | 0.4 |
| | | Black-box (Surrogate Model [32] + PGD) | 68.0% | 0.4 |
| | | Black-box ( as Surrogate Model + FGSM) | 74.1% | 0.3 |
| | | Black-box ( as Surrogate Model + PGD) | 90.2% | 0.3 |
| | | DaST [30] | 76.4% | 0.3 |
| | | DDSG-GAN (Proposed) | 100% | 0.3 |
| B | 99.6% | Black-box (Surrogate Model [32] + FGSM) | 74.7% | 0.4 |
| | | Black-box (Surrogate Model [32] + PGD) | 70.6% | 0.4 |
| | | Black-box ( as Surrogate Model + FGSM) | 77.1% | 0.3 |
| | | Black-box ( as Surrogate Model + PGD) | 82.8% | 0.3 |
| | | DaST [30] | 82.3% | 0.3 |
| | | DDSG-GAN (Proposed) | 99.9% | 0.3 |
| C | 99.17% | Black-box (Surrogate Model [32] + FGSM) | 69.2% | 0.4 |
| | | Black-box (Surrogate Model [32] + PGD) | 67.4% | 0.4 |
| | | Black-box ( as Surrogate Model + FGSM) | 73.5% | 0.3 |
| | | Black-box ( as Surrogate Model + PGD) | 91.3% | 0.3 |
| | | DaST [30] | 68.4% | 0.3 |
| | | DDSG-GAN (Proposed) | 100% | 0.3 |
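
The ASR (attack success rate) column is not defined in this section. A minimal sketch of how it is commonly computed follows. It assumes that an untargeted attack succeeds when the target model's prediction on the AE differs from the true label, and that a targeted attack succeeds when the prediction equals the chosen target label. The function name and arguments are hypothetical, and the authors' exact evaluation protocol may differ.

```python
def attack_success_rate(adv_predictions, true_labels, target_labels=None):
    """Fraction of adversarial examples that count as successful attacks.

    Assumed convention (not taken from the source):
      - untargeted: success when prediction != true label
      - targeted:   success when prediction == target label
    """
    if not adv_predictions:
        raise ValueError("no adversarial examples to evaluate")
    if target_labels is None:
        hits = sum(p != y for p, y in zip(adv_predictions, true_labels))
    else:
        hits = sum(p == t for p, t in zip(adv_predictions, target_labels))
    return hits / len(adv_predictions)


if __name__ == "__main__":
    preds = [1, 2, 3, 3]
    labels = [0, 2, 3, 1]
    print(attack_success_rate(preds, labels))
```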

| Target Model | Accuracy | Method | ASR | ϵ |
|---|---|---|---|---|
| A | 98.97% | Black-box (Surrogate Model [32] + FGSM) | 11.3% | 0.4 |
| | | Black-box (Surrogate Model [32] + PGD) | 24.9% | 0.4 |
| | | AdvGAN [12] | 93.4% | 0.3 |
| | | Black-box ( as Surrogate Model + FGSM) | 18.3% | 0.3 |
| | | Black-box ( as Surrogate Model + PGD) | 50.3% | 0.3 |
| | | DaST [30] | 28.7% | 0.3 |
| | | DDSG-GAN (Proposed) | 98.0% | 0.3 |
| B | 99.6% | Black-box (Surrogate Model [32] + FGSM) | 17.6% | 0.4 |
| | | Black-box (Surrogate Model [32] + PGD) | 22.3% | 0.4 |
| | | AdvGAN [12] | 90.1% | 0.3 |
| | | Black-box ( as Surrogate Model + FGSM) | 25.1% | 0.3 |
| | | Black-box ( as Surrogate Model + PGD) | 53.9% | 0.3 |
| | | DaST [30] | 40.3% | 0.3 |
| | | DDSG-GAN (Proposed) | 97.6% | 0.3 |
| C | 99.17% | Black-box (Surrogate Model [32] + FGSM) | 11.0% | 0.4 |
| | | Black-box (Surrogate Model [32] + PGD) | 29.3% | 0.4 |
| | | AdvGAN [12] | 94.02% | 0.3 |
| | | Black-box ( as Surrogate Model + FGSM) | 18.0% | 0.3 |
| | | Black-box ( as Surrogate Model + PGD) | 65.8% | 0.3 |
| | | DaST [30] | 25.6% | 0.3 |
| | | DDSG-GAN (Proposed) | 94.6% | 0.3 |

| Attack Type | Method | ASR | ϵ | Avg. Queries |
|---|---|---|---|---|
| Untargeted attack | Bandits [17] | 73% | 1.99 | 2,771 |
| | Decision Boundary [33] | 100% | 1.85 | 13,630 |
| | Opt-attack [34] | 100% | 1.85 | 12,925 |
| | DDSG-GAN | 90.6% | 1.85 | 1,431 |

| Target Model/Accuracy | Attack Type | Method | ASR | ϵ |
|---|---|---|---|---|
| ResNet-32/92.4% | Untargeted attack | Black-box (Surrogate Model [32] + FGSM) | 79.5% | 0.4 |
| | | Black-box (Surrogate Model [32] + PGD) | 20.7% | 0.031 |
| | | Black-box ( as Surrogate Model + FGSM) | 84.4% | 0.031 |
| | | Black-box ( as Surrogate Model + PGD) | 86.9% | 0.031 |
| | | DaST [30] | 68.0% | 0.031 |
| | | DDSG-GAN (Proposed) | 89.5% | 0.031 |
| | Targeted attack | Black-box (Surrogate Model [32] + FGSM) | 7.6% | 0.4 |
| | | Black-box (Surrogate Model [32] + PGD) | 4.7% | 0.031 |
| | | Black-box ( as Surrogate Model + FGSM) | 19.5% | 0.031 |
| | | Black-box ( as Surrogate Model + PGD) | 16.9% | 0.031 |
| | | AdvGAN [12] | 78.47% | 0.032 |
| | | DaST [30] | 18.4% | 0.031 |
| | | DDSG-GAN (Proposed) | 79.4% | 0.031 |

| Attack Type | ϵ | Target Model | ASR |
|---|---|---|---|
| Untargeted attack | 4.6 | ResNet18 | 72.15% |
| | | ResNet50 | 83.76% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, F.; Ma, Z.; Zhang, X.; Li, Q.; Wang, C. DDSG-GAN: Generative Adversarial Network with Dual Discriminators and Single Generator for Black-Box Attacks. Mathematics 2023, 11, 1016. https://doi.org/10.3390/math11041016