Abstract

In this paper, we study a distributionally robust optimization (DRO) problem with affine decision rules. In particular, we construct an ambiguity set based on a new family of Wasserstein metrics, shortfall–Wasserstein metrics, which apply normalized utility-based shortfall risk measures to summarize the transportation cost random variables. In this paper, we demonstrate that the multi-dimensional shortfall–Wasserstein ball can be affinely projected onto a one-dimensional one. A noteworthy result of this reformulation is that our program benefits from finite sample guarantee without a dependence on the dimension of the nominal distribution. This distributionally robust optimization problem also has computational tractability, and we provide a dual formulation and verify the strong duality that enables a direct and concise reformulation of this problem. Our results offer a new DRO framework that can be applied in numerous contexts such as regression and portfolio optimization.

Keywords:

distributionally robust optimization; Wasserstein metrics; utility-based shortfall risk measures MSC:

90C17; 91B05; 91G70

1. Introduction

In the literature of operations research (OR) and machine learning (ML), stochastic optimization problems of the following form have been widely studied:

where is a random vector with distribution , is the decision variable restricted to the set , represents a cost/loss function and is a measure of risk under the constraint that the distribution of the random variable is . In ML applications, the function typically takes the form . In OR, always typifies the disutility function, and is used as a tool to quantify risks.

In practice, the true distribution of is often unknown. To overcome the lack of knowledge on this distribution, distributionally robust optimization (DRO) was proposed as an alternative modeling paradigm. It seeks to find a decision variable that minimizes the worst-case expected loss , where is referred to as the ambiguity set characterized through some known properties of the true distribution . The choice of is of great significance and there are typically two ways to construct it. The first is moment-based ambiguity which contains distributions whose moments satisfy certain conditions [1]. The other, more popular approach is the discrepancy-based ambiguity set which is generally taken as a ball that contains distributions close to a nominal distribution with respect to a statistical distance. Popular choices of the statistical distance include Kullback–Leibler (KL) divergence [2,3], the Wasserstein metric [4], etc. Since the Wasserstein metric can be defined between a discrete distribution and a continuous distribution, the ambiguity set based on the Wasserstein metric includes richer distributions than that based on divergence [5]. This makes the Wasserstein metric more popular in modeling the ambiguity set. However, the authors of [5] point out that the ball is desirable as it contains various forms of distributions; however, the flip side is that it may be considered overly-conservative as distributions differing greatly from the empirical distribution may also be included.

Motivated by this, we extend classic Wasserstein metrics to shortfall–Wasserstein metrics by applying the utility-based shortfall risk measures to summarize the distribution of a transportation cost random variable. The formal definition will be given in Section 2. It is worth noting that the properties of the utility-based shortfall risk measure are widely researched in the literature of risk measures [6,7] and that it naturally includes the expectation as special cases. Based on the shortfall–Wasserstein metrics, we define an ambiguity set and formulate a new DRO problem. The utility based shortfall risk measure has been applied well in DRO; see, e.g., [8,9]. However, in the literature, the shortfall risk measure serves as an objective risk measure. In this paper, we employ a utility-based shortfall risk measure to construct the ambiguity set instead of the objective function, which is novel. Moreover, in this paper, we study the tractability of the new problem. In particular, we study the reformulation of the problem and reduce the problem to a convex problem with tractability. For the new metric, the property of the finite sample guarantee is also studied. In particular, to obtain those results, a key result is the projection result of the ambiguity set based on the shortfall–Wasserstein metric. Such projection result for different ambiguity sets have been studied by [10,11]. In this paper, a necessary and sufficient condition for the projection result of the new ambiguity set is given.

The main contribution of the paper can be summarized as follows.

- A new family of Wasserstein metrics based on utility-based shortfall risk measures is introduced and is called the shortfall–Wasserstein metric. We propose a data-driven DRO problem based on the shortfall–Wasserstein metric. It is shown that the new DRO model has the benefits of desirable properties of finite sample guarantee and computational tractability.

- For the new shortfall–Wasserstein metric, we define the corresponding uncertainty set, which is called the shortfall–Wasserstein ball, and give an equivalent characterization of the projection result to a one-dimensional ball. Based on the projection result, we show that the multi-dimensional constraint of our distributionally robust optimization model can be reformulated as a one-dimensional one. Based on this reformulation, we established the finite sample guarantee of the DRO problem which is free from the curse of dimensionality.

- We obtain a dual formulation for the robust optimization problem and verify the strong duality. In addition, the dual form admits a reformulation that can be completely characterized when taking the discrete empirical distribution as the center of ambiguity sets.

Related Work

The central idea behind DD-DRO is to model the distribution of uncertain parameters by constructing a uncertainty set from the sample. Designing a good uncertainty set is crucial. There are two kinds of sets in literature: the moment-based ambiguity set [1] and a “ball” structure based on a statistical distance [4]. Popular choices of the statistical distance include Kullback–Leibler (KL) divergence [2,3], the Wasserstein metric [4,12,13] and the CVaR– and expectile–Wasserstein metrics [5]. The shortfall–Wasserstein metrics proposed in this paper employ a utility-based shortfall risk measure to construct the ambiguity set which includes the expectile–Wasserstein metric as a special case. We also point out that there are also uncertainty sets defined based on stochastic dominance; see [14].

Notational conventions Throughout this paper, we denote as the extended reals and . Let denote the set of all distributions supported on . For , denotes the norm on . By we denote the Dirac distribution concentrating unit mass at and and . For any , let us denote . Moreover, by S we denote a Polish space and by the corresponding -algebra and we let be the set of all finite signed measures on . We use to denote the set of all finite continuous functions from S to . is denoted as as the set of all probability measures. For any , we use to denote the completion of with respect to . The extension of to is unique, and we interpret in as this extension defined on when is not measurable but is measurable [15]. For any and , by we denote the set of all Borel-measurable functions satisfying . Let , then we shall use to denote the set of all measurable functions .

2. Shortfall–Wasserstein Metric

Motivated by data-drivenness, the choice of is usually chosen as the empirical distribution. Let us denote the training dataset by and the empirical distribution by , with denoting the Dirac distribution at . In the DRO models introduced above, the choice of the probability metric d plays a significant role in constructing the ambiguity set . One of the most widely used probability metrics is the (type-1) Wasserstein metric [16]

which is applicable to any distributions with finite first moments. In this paper, we take norm with . The ambiguity set based on the Wasserstein metric is naturally defined as

which is called the Wasserstein ball centered at with radius . In the literature, the distributional robust problem with the ambiguity set being the above Wasserstein ball has been widely studied [4,12,13] and is known as the Wasserstein data-driven distributionally robust optimization model (W-DD-DRO),

As argued by [5], the ball is desirable as it contains various forms of distributions; however, the flip side is that it may be considered overly-conservative as distributions differing greatly from the empirical distribution may also be included. Motivated by this, they proposed the CVaR–Wasserstein and expectile–Wasserstein balls and studied the reformulation and tractability of the corresponding DRO problems. In this paper, we extend the expectile–Wasserstein metrics to Shortfall–Wasserstein metrics by applying normalized utility-based shortfall risk measures to evaluate the transportation cost.

2.1. Risk Measures

We first introduce some notions of risk measures. Let be the probability space, and is the -algebra on . Following the convention in mathematical finance, we describe a risk by a random variable . A risk measure is a functional mapping from a set of risks to . We list some desired properties as follows:

- (P1)

- (Translation invariance) for ;

- (P2)

- (Positive homogeneity) for any ;

- (P3)

- (Monotonicity) for any ;

- (P4)

- (Subadditivity) ;

- (P5)

- (Convexity) ;

- (P6)

- (Law invariance) for any .

A risk measure satisfying the above properties (P1) and (P2) is called a monetary risk measure and a risk measure satisfying the above properties (P1)–(P4) is called a coherent risk measure, which has been viewed as one of the most important risk measures since the seminal work [17]. A risk measure is called a convex risk measure if it satisfies properties (P1), (P2) and (P5). We then introduce the definition of utility-based shortfall risk measures.

Definition 1

(Utility-based shortfall risk measures). Let be non-decreasing and continuous, satisfying . For a random variable X, the utility-based shortfall risk measure is defined as

It is well known that any utility-based shortfall risk measure satisfies the monotonicity and translation invariance, and thus is a monetary risk measure. Based on the utility-based shortfall risk measure, we now formally give the definition of shortfall–Wasserstein metric as follows.

Definition 2

(Shortfall–Wasserstein metric). A metric is called the Shortfall–Wasserstein metric if it has the form of

where and is the norm on .

We first study the basic properties of the shortfall–Wasserstein metric.

Proposition 1.

Let be a convex, non-decreasing and continuous function satisfying . We have the following statements:

- (i)

- satisfies the identity of indiscernibles, i.e., if and only if ;

- (ii)

- satisfies the symmetry, that is, for any ;

- (iii)

- satisfies the non-negativity, i.e., for any ;

- (iv)

- If u is positively homogeneous, then satisfies the triangle inequality: for any , , .

Proof.

(i) Denote by the set of all distributions on with margins and . To see the sufficiency, note that when and , the set contains the joint distribution of . Therefore, as . To see the necessity, note that under the condition, satisfies convexity. By Theorem 4.2 of [18], we have . By that, the Wasserstein metric satisfies the identity of indiscernibles, then we have .

(ii) The symmetry follows immediately.

(iii) Note that and u is non-decreasing, we have for every . As the norm is non-negative and u is non-decreasing, we have , which means that satisifes the non-negativity.

(iv) From the definition, is convex and homogeneous as u is convex and homogeneous. It follows that for any , exists such that

From Theorem 6.10 of [19], we know that exists such that has the joint distribution and has the joint distribution . As a result,

where the second equality is the result of the homogeneity of and the last inequality holds due to the convexity of and the subadditivity of the norm. □

Proposition 1 tells us that when u is increasing, convex and positively homogeneous with , then the metric satisfies all the desired properties of a distance metric.

2.2. Formula of DRO Problems Based on Shortfall-Wasserstein Metric

Based on the shortfall–Wasserstein metric, we define the following ambiguity set

which is called the shortfall–Wasserstein ball centered at the empirical distribution . We consider the following problem

where is the decision vector, is the feasible set of decision vector, and l is the loss function. The above problem (2) is called the shortfall–Wasserstein data-driven distributionally robust optimization model (SW-DD-DRO).

To end the section, we present two applications of stochastic optimization (1): regression and risk minimization.

2.2.1. Regression

Considering a linear regression problem, our purpose is to find a linear predictor function with the regression coefficient vector. Our attention here is to find an accurate estimator of that is robust to adversarial perturbations of the data. Thus, distributionally robust regression models are constructed in the following form:

In the literature, the loss function always takes the form with , and the regression model is known as least-squares regression when . For convenience, we denote and , then the problem can be reformulated as the form of (2).

2.2.2. Portfolio Optimization

If we denote by a random vector of returns from n different financial assets and by the allocation vector, then the random variable means the total return of the portfolio. Thus, the problem (1) is considered a portfolio optimization problem. As an example, the risk measure is a well-known class of downside risk measure as the loss function takies the form of .

3. Shortfall–Wasserstein Data-Driven DRO

3.1. Reformulation of the Shortfall–Wasserstein DRO

To solve problem (2), the key step is to solve the inner maximization problem of (2), that is, for fixed ,

By the definition of the metric, the above problem can be rewritten as

As satisifes the translation invariance, for fixed , we have the constraint of the above problem being equivalent to , that is, . Therefore, we can further rewrite the above problem (5) as

To further simplify this problem, we first define the following notation:

and

We study the projection result of the shortfall–Wasserstein ball. Throughout the following subsections, denotes the set of all strictly increasing continuous functions on with . In the following theorem, we show that the constraint of the above problem (6) can be conveniently converted to a univariate setting.

Theorem 1.

Assume random vector with distribution function , and , we obtain that holds for any , if and only if exists such that

Proof.

To see the sufficiency, assume that is given by (9) with . Then we can verify that for any and . For any , exists such that satisfies , and . Due to the increasing property of and the Hölder inequality, we have

Thus, , then .

The converse direction of the set inclusion is presented next. For any , exists satisfying . Let , , in which is defined of the form

and is the sign function. Thus, we compute , . Then,

the fourth equality comes from . As , we obtain . This implies . Hence, we conclude that .

Specifically, choose , where Y is a random variable on and with . Let , then we have

where the second equality comes from and . Noting that , from (10) we obtain if and only if for any . By the arbitrariness of random variable Y and , we obtain for any random variable X on

It follows that is positively homogeneous. By the proposition 2.9 of [20], we can obtain the representation of (9). This completes the proof. □

By Theorem 1, a multi-dimensional sphere can be affinely projected to a one-dimensional sphere; thus, the multi-dimensional constraint of (6) can be simplified to the following one-dimensional one which enables the problem to be much more tractable.

3.2. Finite Sample Guarantee

Next, we will demonstrate that SW-DD-DRO has the great property of finite sample guarantee. In practice, the optimal solution is constructed from the training dataset , but we always pay more attention to the out-of-sample performance of the optimizer. As the true distribution is always unknown, the out-of-sample performance is hard to evaluate. Thus, we hope to establish a tight upper bound to provide performance guarantees for the solution as our main concern is to control the costs from above. To clarify our analysis, we first introduce some notations and assumptions.

- : the optimal risk we target at, i.e.,where is the true distribution.

- : the in-sample risk achieved by SW-DD-DRO, i.e.,where is the empirical distribution.

- : the out-of-sample risk achieved by the SW-DD-DRO solution, i.e.,where is the optimal solution of the problem in (13).

To establish the result of finite sample guarantee, the following assumptions are needed.

Assumption 1

(Light-tailed distribution). With β identified in the function defined in Theorem 1, an exponent exists such that .

Assumption 2.

The feasible set is bounded from above such that , where .

Assumption 3.

The feasible set is away from the origin such that , where .

Assumption 1 is a common assumption that demands the rate of decay of the tail of the distribution . Assumptions 2 and 3 are requirements of the feasible set , which are also mentioned in [21,22].

Proposition 2

(Finite sample guarantee). Assumptions 1–3 are in force, has the form defined in Theorem 1. Let and denote the optimal value and an optimizer of the problem (13). The radius of the ambiguity set is defined as the following form, with and a constant C relies on ,

where , only rely on a, A, and β. Thus, it holds the finite sample guarantee

Proof.

Denote , for all nonnegative random variables X,

Define , we have , . Solving the equation , we obtain

Denote , then as . When , we obtain , thus is concave. In the interval , attains its infimum at the endpoints, which means . When , we have , is a convex function and attain its infimum at . We have . Then holds for all . Denoting .Therefore, , and

As a result, for any , if . The -type Wasserstein metric is defined as the following:

Therefore, we have

From the assumption, , exists, satisfying . We have

where , and the first inequality is due to the Hölder inequality. The above inequality implies that satisfies the condition of Theorem 2 of [23]. As the corresponding empirical distribution of is , we know that the finite sample guarantee holds when is applied to summarize the transportation cost random variable and is specified, that is

where , only rely on a, A, and . We define for the shortfall–Wasserstein metric ,

From (17), we obtain

where . The first inequation comes from the fact that implies . This completes the proof. □

We show in Proposition 2 that the out-of-sample performance of can be bounded, when the radius of the ambiguity set is properly calibrated, by the optimal value with some confidence level. It is noteworthy that the order of the radius in this paper is , independent of the dimension of the nominal distribution, while the order of the radius suffers seriously from the curse of dimensionality in [4].

4. Worst-Case Expectation under the Shortfall–Wasserstein Metric

This section studies the tractability of solving (4). For notational convenience, denote , and X has a known probability distribution . We define the primal problem in the following form:

where . Let , [15]. For every such , define

For any , , as is finite. As a result, for every measurable , . Thus we have

According to the tradition in optimization theory, we refer to the following problem as the dual to the primal problem:

Consequently, the weak duality holds:

To further identify the equivalence between and , for every , we define as follows:

To simplify the notation, we write whenever and ; thus, we have . In Theorem 2, we show that, for performance measures l satisfying Assumption 4, equals .

Assumption 4.

The function is upper semicontinuous with .

Theorem 2.

Under Assumption 4 and , we can conclude

- (a)

- ;

- (b)

- A dual optimizer of the form exists for some and defined as in (20). Moreover, any feasible solutions and are optimizers of the primal and dual problem, satisfying , if and only if

Additionally, if the primal optimizer exists and there is solely one y in that attains the supremum in for μ, almost every , then is unique.

Remark 1.

From (21), if the optimal measure exists, , which means the worst-case joint probability is identified by a transport plan that transports mass from x to the optimizer of the local optimization problem . Furthermore, when , we obtain .

Remark 2.

If a subset exists, for any , the rate the loss function grows to is faster than the rate of growth, then . The reason is that for every and , ; thus, . Therefore, sometimes, it may be necessary to require the loss function l not to grow faster than c.

The proof of Theorem 2 is provided in Appendix A.

Corollary 2.

Under the Assumption 4 and , we can conclude

Proof.

From the proof of Theorem 2, we can see that there always exist , then we conclude

The proof is complete. □

Remark 3.

This conclusion that the optimal value of the multi-dimensional primal problem can be obtained by paying attention to the univariate reformulation in the right-hand side of (23) is of great significance. Moreover, the right-hand side of (23) is completely characterized given and the training dataset .

Indeed, this result implies that this multi-dimensional optimization problem in (5) can also be solved by putting effort into the completely characterized reformulation of (24). Considering the problem with the ambiguity set constructed by the classic Wasserstein metric (the norm defined in the metric is also with ),

Denote the inner maximum problem , we can obtain a similar result by applying Theorem 7 of [10] and Theorem 1 of [15], that is

Observing the formulas above, we can find that when . The result (24) is much more flexible than the result (25) as the form of the is optional.

To elaborate on the way our results help to solve practical problems, several simulations are introduced in the following.

5. Simulation

In this section, we show the application of Corollary 2 in solving the regression problem and the portfolio optimization problem. Moreover, several simulations are operated to investigate the performance of our model.

5.1. Regression Model

As mentioned in Section 2, our result can help to find an accurate estimator of the regression coefficient vector in linear regression problems

with and . In the literature, loss functions with the form are widely studied, especially when and . To elaborate on how this result helps to solve the tractability of regression programs, an example is presented below.

Example 1.

The Least Absolute Deviation (LAD) regression model seeks the regression coefficient estimator that minimizes the sum of absolute residuals . Take , and more specifically, we take , . Then we have

Let , then can be further broken down into the following form:

Considering the case , then

To make the supremum bounded, it is necessary to have , such that . Similarly, when , we can also derive . We next consider the following three cases.

- Case A.

- Considering when , we can see that is non-increasing whether is non-negative or non-positive; thus, attains its supremum at , with

- Case B.

- Considering when , we are supposed to further consider the form of under the cases and .(Case B.1) If ,The supremum in this case is .(Case B.2) If and ,The supremum in this case is .(Case B.3) If and and ,The supremum in this case is .(Case B.4) If and and ,The supremum in this case is .Combining the above four subcases, we conclude that the supremum is in Case B. Moreover, in all different situations, keeps the property of first monotonically increasing and then monotonically decreasing.

- Case C.

- Considering when , similar to Case A, is non-decreasing and attains its supremum at , with

Combining the above three cases, we have that is monotonically increasing and then monotonically decreasing under all situations. The supremum is . As a result, we have the optimum value

We can also consider this problem under the Wasserstein metric. A similar discussion yields that

Although the two results share similar formulas, the result under the shortfall–Wasserstein metric is more flexible as it is possible to adjust the parameters to achieve better performance.

The model that we simulated here is as follows:

Applying the above result under the shortfall–Wasserstein metric to this practical problem, we are supposed to find an estimator that minimizes

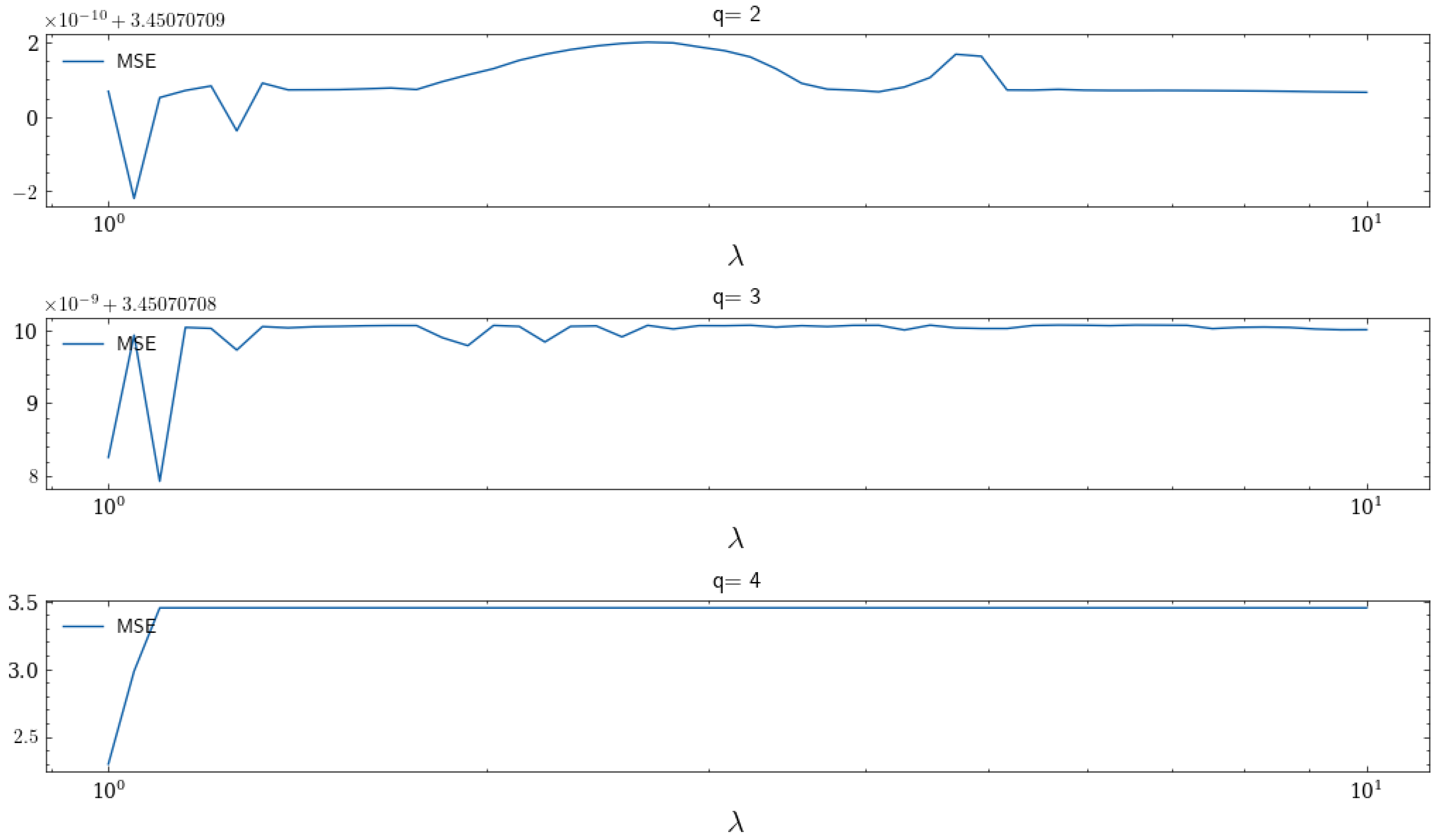

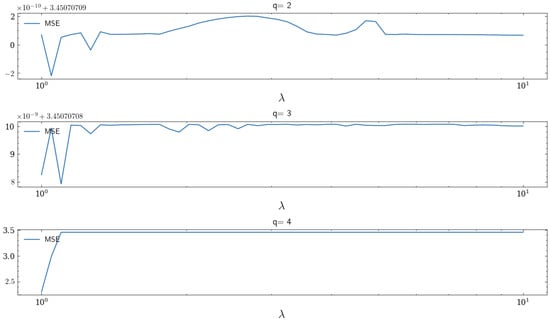

When , it reduces to the case of the classic Wasserstein metric. To illustrate their performance, we generate six hundred sets of samples with generated from the distribution and generated from the distribution independently and identically. The trend of MSE as ranges from one to ten is presented below.

In Figure 1, we find that it is possible to achieve smaller MSE by adjusting the value of . Moreover, when and , the value of that minimizes MSE is larger than 1, which means that the shortfall–Wasserstein robust regression can achieve a better prediction effect than the Wasserstein robust regression.

Figure 1.

MSE with respect to for different values of q.

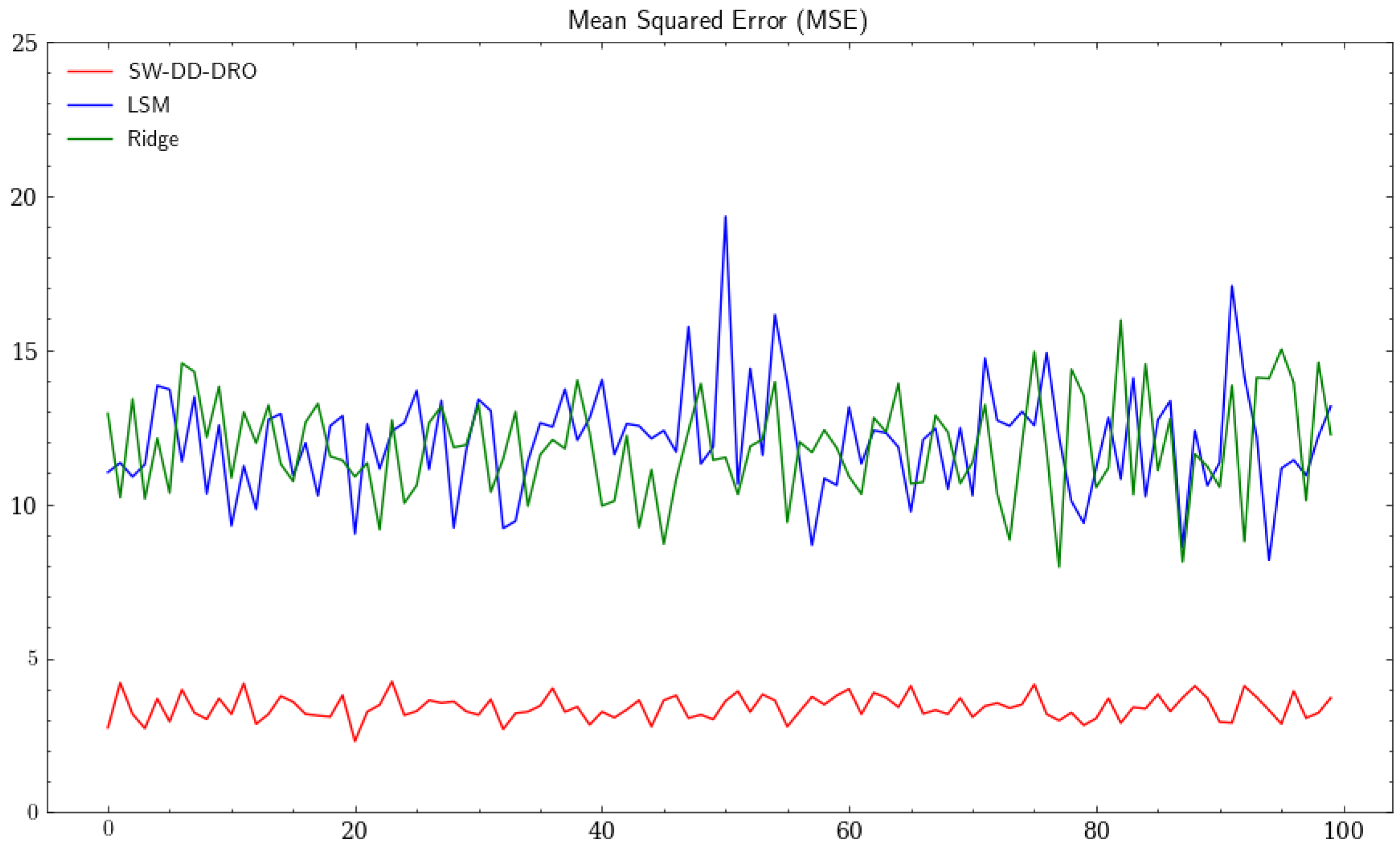

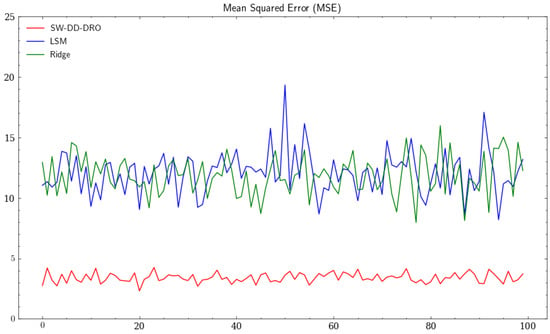

To test the robustness of our model, we further introduce some outliers and compare the performance with the least-squares regression (LSR) model and the ridge regression model. We set for the shortfall–Wasserstein robust regression model and the regularization coefficient 1 for the ridge regression. We conduct one hundred times iterations, producing one hundred sets of samples each time, half of which served as the training set while the rest served as the testing set. Moreover, ten sets of outlier samples are added to the training set at each iteration. The result is presented below.

In Figure 2, the MSE under the shortfall–Wasserstein robust regression model is much smaller and less volatile than the MSE under the other two models, which means the shortfall–Wasserstein robust regression model is better in resisting the large deviations in the predictors. As a result, the result based on the shortfall–Wasserstein metric is reliable, stable, and superior to the one based on the Wasserstein metric.

Figure 2.

The red, blue, and green curves represent the MSE under the SW-DD-DRO, the least-squares methods, and the ridge regression, respectively.

5.2. Portfolio Optimization

With being a random vector of returns from n different financial assets and being the allocation vector, the problem becomes a portfolio optimization problem. In this subsection, we take to characterize the downside risk, and the problem we are interested in is of the following form:

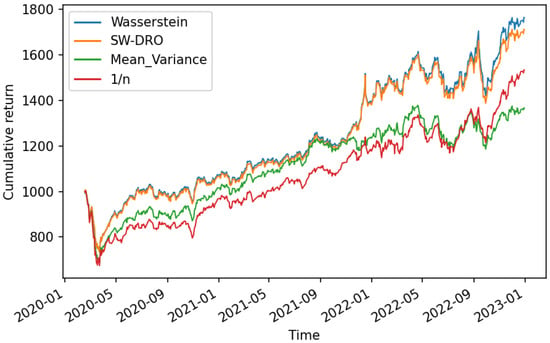

where c is a constant. By Corollary 2, with , the inner maximum problem can be reformulated to the following form:

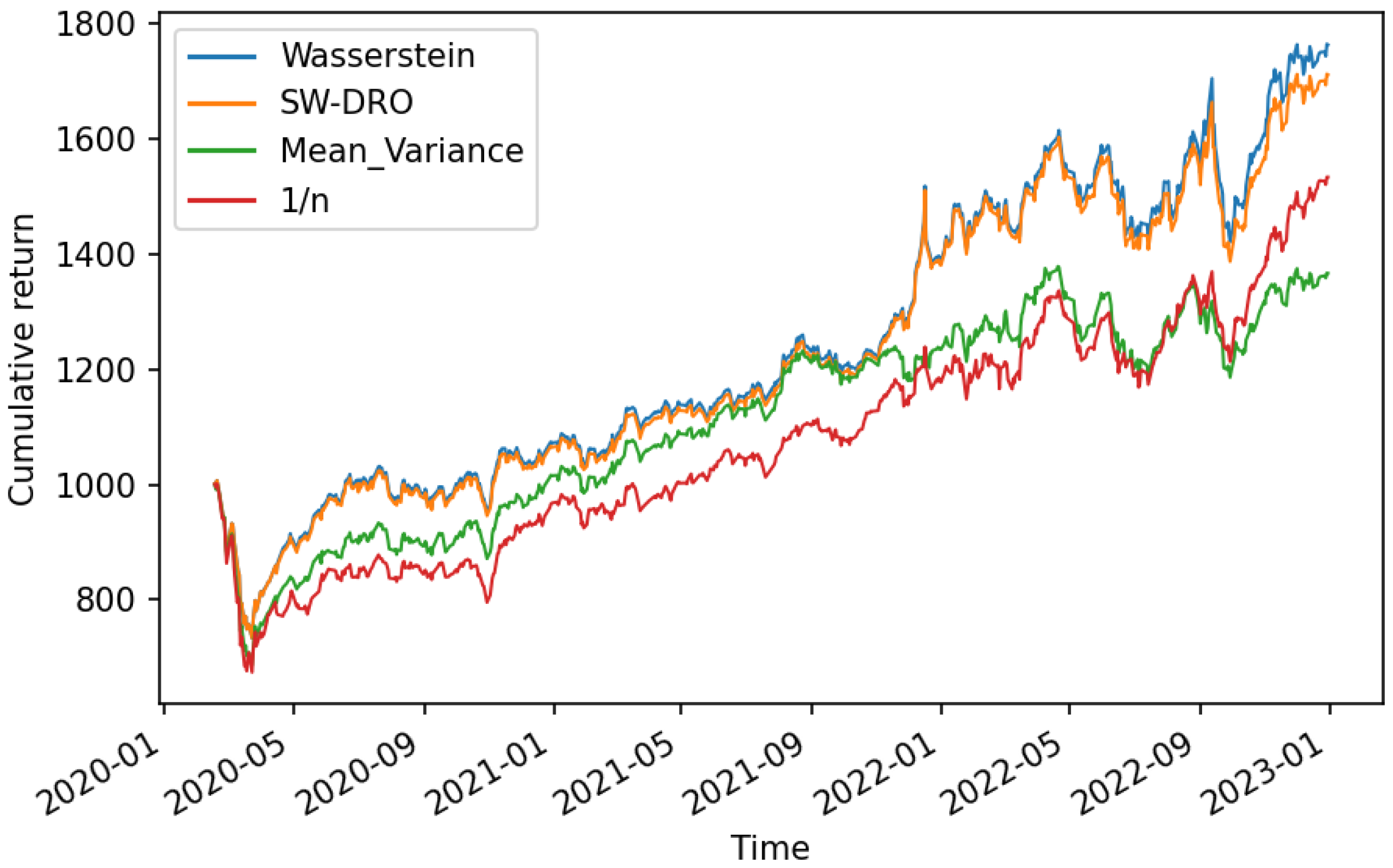

With the ambiguity set constructed by the classic Wasserstein metric, the result is the same as the form of the above formula with . For simulation, we choose four MSCI index assets, which are the MSCI Denmark index, the MSCI Turkey index, the MSCI Greece index, and the MSCI Norway index. We collect the daily closing prices of those indexes ranging from 1 January 2020 to 31 December 2022 from cn.investing.com. It is noteworthy that the COVID-19 outbreak began in 2020, and the distribution of assets is highly uncertain during this period. Based on those data, assume the initial asset is USD 1000 and there is no short sale. We set a 30-day time sliding window, the optimal weight is calculated based on the previous 30 days of historical data, and this result is taken as the investment decision of the next day. As the time window rolls, the cumulative return curves under different strategies are presented below.

The four curves represent the cumulative returns under the model constructed by the shortfall–Wasserstein metric with , the model constructed by the classic Wasserstein metric, the mean-variance model and the portfolio model, respectively. The mean-variance model, a model that aims to minimize investment variance under certain expected returns, was proposed by [24]. The portfolio model divides the money equally among each asset, and [25] found that the portfolio performs well when the overall distribution of assets is highly uncertain.

In Figure 3, we can see that the first two cumulative return curves under the robust optimization models outperform the curves under the mean-variance model and the portfolio model. Because of the similarity of the result of models under the shortfall–Wasserstein metric and the classic Wasserstein metric, the first two curves essentially behave the same. Since 2020, the global economy has been in recession due to the pandemic. In 2022, the epidemic was relatively stable and the economy slowly began to recover. During this period, the distribution of the four assets is highly uncertain. All four of these models perform well as the curves climb steadily, but the robust models take the variability into account and make better decisions, especially during the recovery period.

Figure 3.

Cumulative return curves under different strategies.

In general, in regression problems, our results are more reliable and robust when the sample is contaminated; in portfolio optimization problems, when the distribution of assets is highly uncertain, our results perform significantly better. Moreover, our result can be more widely used than the result under the Wasserstein model as it is applicable for many complex forms of the function l. Even when the loss function is relatively simple, our model can achieve the same or even better performance than the classic Wasserstein model by adjusting the form of the function .

6. Conclusions

In this paper, we propose a new DRO framework by extending the classic Wasserstein metrics to the shortfall–Wasserstein metrics. We study the tractability and reformulations of the shortfall–Wasserstein DRO problems for the loss function which is linear in the decision vector. This case of objective function includes many applications, such as regression and portfolio selection. One interesting result in the paper is that we give an equivalent characterization of the projection result to a one-dimensional ball. Based on the projection result, we show that the multi-dimensional constraint of our distributionally robust models can be reformulated as a one-dimensional one. Based on this reformulation, we established the finite sample guarantee of the DRO problem which is free from the curse of dimensionality. We present the application of our model in regression and provide simulation results to illustrate the performance of our results. In addition, we also present the real-data analysis on a portfolio selection to illustrate the performance of our new DRO model. Since this paper focuses on the linear cost function , a possible future study is to consider the general loss function and study the reformulations and tractability of the general DRO problems.

Author Contributions

Conceptualization, R.L.; methodology, R.L., W.L. and T.M.; software, R.L.; validation, R.L., W.L. and T.M.; formal analysis, R.L. and W.L.; investigation, W.L. and T.M.; resources, W.L. and T.M.; data curation, R.L.; writing—original draft preparation, R.L.; writing—review and editing, W.L. and T.M.; visualization, R.L. and W.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by National Natural Science Foundation of China (Nos. 71671176, 71871208) and Anhui Natural Science Foundation (No. 2208085MA07).

Data Availability Statement

The data that support the analysis of this study are openly available in https://cn.investing.com/.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proof of Theorem 2

The proof procedure of Theorem 2 follows a similar idea as Theorem 1 of [15]. For completely, we have included it in the Appendix. We verify the strong duality in compact Polish spaces S first and then generalize to .

Appendix A.1. Strong Duality in Compact Spaces

To prepare for the verification, let us denote , , and for , define

Proposition A1.

Suppose S is a compact Polish space, and satisfies Assumption 4, , then for any , , and a primal optimizer satisfying exists.

Proof.

To prepare to apply the Fenchel duality theorem (see Theorem 1 in Chapter 7 of [26]), denote and its topological dual , which is a consequence of the Riesz representation theorem. Next, let

In the set C, each g is identified by the pair

for some in S, . Define functions and as below:

From the definition, it is evident that the functional is convex and is concave,

The corresponding conjugate functions and are as below:

For every ,

Therefore,

Next, to determine , we can see that if a measure is not non-negative, (for more details, see Lemma B.6 of [15]). When is non-negative, as l is an upper semicontinuous function that is bounded from above, we can use a monotonically decreasing sequence of continuous functions to approximate l point-by-point. As a result of the monotone convergence theorem, we have the following equality:

Then,

Then,

Then,

Because there are some points of the relative interiors of C and D contained in the set and the epigraph of the function has a nonempty interior, further is finite, by the Fenchel’s duality theorem,

Moreover, some that attain the supremum exist in the right. As , then

Similar to the proof of weak duality, we can verify that . Then we obtain and some exist such that . The proof is complete. □

Appendix A.2. Strong Duality in Non-Compact Spaces

Proposition A1 has established the strong duality in compact Polish spaces. We intend to extend the strong duality to , the verification is broken down into the following steps, and Proposition A2 is the first step.

For convenience, we first introduce some notations. For any , denote

in which and are, respectively, referred to as the supports of marginals and . We have that the set is -compact as every probability measure defined on a Polish space has -compact support; thus, in Proposition A2, can be expressed as the union of an increasing sequence of compact subsets [15]. Then we can apply the results of Proposition A1 via a sequential argument. For any closed subset , let

where and denotes the set of measurable functions . With this notation, . Furthermore, the function is measurable. A more detailed explanation of this can be found in [15]. Finally, let us denote E as

Proposition A2.

If Assumption 4 holds and , then for any ,

Proof.

This proof is similar to [15]. We give a proof for completeness here. From the discussion above the proposition, we know that is -compact. By the definition of -compactness, an increasing sequence of compact subsets of exists such that . As and are finite, one is able to find and to satisfy

where . Define and its corresponding marginals as below:

For every , define

whose supports are . As is compact, from Proposition A1, we know that a exists, satisfying

Construct a measure ,

It can be verified that for some . From the definition of , we have

while the third equality is because , and . Furthermore,

Then, , and consequently,

Thus, we have , and as , we have . Proposition A2 already holds when , so we take ; for every and any , take an -optimal solution for ,

As belongs to , from definition, . Then, for every and every n, we have

Combining the fact that , we further have

Since , then is a lower bound of the integrand above on , and we also have , we obtain the following two results:

(a) From (A1) and the fact that , we have are finite, so has convergent subsequences, which means that a subsequence exists at least such that as for some ;

(b) By Fatou’s lemma and dominated convergence theorem, we obtain

Here, these facts are used: as and . We also used the fact that (see Lemma B.7 in Appendix B of [15]). If we let , then , and due to the arbitrariness of , it follows that

This completes the proof. □

Proposition A3.

Suppose that Assumption 4 is in force, , then for any ,

Proof.

Let , for and , define

Noting that g is upper semicontinuous and the sets are Borel-measurable, we have that their projections are also Borel-measurable subsets of . Additionally, from the definition, the collection is disjoint. Then, due to the Jankov–von Neumann selection theorem [27], a universally measurable function for each exits such that , satisfying

Next, as and is disjoint, we define :

Since each is measurable, we have that is also measurable. For , if k exists such that , then , and . If such k do not exists, then and is an empty set, then we obtain

Let , the we have

Define the family of probability measures as

From (A2), almost surely; thus, and . Moreover, since , then . Finally, since , by Fatou’s lemma,

Then, holds. Since for any , the proof is complete. □

Proof of Theorem 2.

If , then , the proof is complete. Consider the case that . As a result of Proposition A2, for every , we have

For any and , define

As ,

Since for every , we restrict attention to the compact subset , from (A3), we can see

As is a lower semicontinuous function with respect to the variable , we can verify that is lower semicontinuous in as well. For any , as (A4) holds, we can apply Fatou’s lemma,

is a convex function with respect to for fixed . Additionally, for any and ,

which means that is concave in for fixed . By applying the minimax theorem, we can conclude

In conjunction with (A5), the following is yielded:

Now, since for any , from Proposition A3, we have

In conjunction with (A6), we further obtain

Let , by Fatou’s lemma, as then is lower semicontinuous, and when as and . As a result, are compact for every m. Therefore, attains its infimum, i.e., we can find such that . As , we have ; thus, . In conjunction with (A7), we have , then the proof of assertion(i) is complete.

Through the above verification, it is known that an optimizer of the dual problem always exists. Next, if exists satisfying , which means it is an optimizer to the primal problem, then we have

In addition, as and :

Thus, we can conclude

References

- Wiesemann, W.; Kuhn, D.; Sim, M. Distributionally robust convex optimization. Oper. Res. 2014, 62, 1358–1376. [Google Scholar] [CrossRef]

- Jiang, R.; Guan, Y. Data-driven chance constrained stochastic program. Math. Program. 2016, 158, 291–327. [Google Scholar] [CrossRef]

- Kullback, S.; Leibler, R.A. On information and sufficiency. Ann. Math. Stat. 1951, 22, 79–86. [Google Scholar] [CrossRef]

- Mohajerin Esfahani, P.; Kuhn, D. Data-driven distributionally robust optimization using the wasserstein metric: Performance guarantees and tractable reformulations. Math. Program. 2018, 171, 115–166. [Google Scholar] [CrossRef]

- Li, J.Y.M.; Mao, T. A general wasserstein framework for data-driven distributionally robust optimization: Tractability and applications. arXiv 2022, arXiv:2207.09403. [Google Scholar] [CrossRef]

- Föllmer, H.; Schied, A. Convex measures of risk and trading constraints. Financ. Stoch. 2002, 6, 429–447. [Google Scholar] [CrossRef]

- Föllmer, H.; Schied, A. Stochastic Finance: An Introduction in Discrete Time, 4th ed.; Walter de Gruyter: Berlin, Germany, 2016. [Google Scholar]

- Delage, E.; Guo, S.; Xu, H. Shortfall risk models when information on loss function is incomplete. Oper. Res. 2022, forthcoming. [Google Scholar] [CrossRef]

- Guo, S.; Xu, H. Distributionally robust shortfall risk optimization model and its approximation. Math. Program. 2019, 174, 473–498. [Google Scholar] [CrossRef]

- Mao, T.; Wang, R.; Wu, Q. Model Aggregation for Risk Evaluation and Robust Optimization. arXiv 2022, arXiv:2201.06370. [Google Scholar] [CrossRef]

- Popescu, I. Robust mean-covariance solutions for stochastic optimization. Oper. Res. 2007, 55, 98–112. [Google Scholar] [CrossRef]

- Hanasusanto, G.A.; Kuhn, D. Conic programming reformulations of two-stage distributionally robust linear programs over wasserstein balls. Oper. Res. 2018, 66, 849–869. [Google Scholar] [CrossRef]

- Kuhn, D.; Esfahani, P.M.; Nguyen, V.A.; Shafieezadeh-Abadeh, S. Wasserstein distributionally robust optimization: Theory and applications in machine learning. In Operations Research & Management Science in the Age of Analytics; Informs: Catonsville, MD, USA, 2019; pp. 130–166. [Google Scholar]

- Peng, C.; Delage, E. Data-driven optimization with distributionally robust second order stochastic dominance constraints. Oper. Res. 2022. [Google Scholar] [CrossRef]

- Blanchet, J.; Murthy, K. Quantifying distributional model risk via optimal transport. Math. Oper. Res. 2019, 44, 565–600. [Google Scholar] [CrossRef]

- Kantorovich, L.V.; Rubinshtein, S.G. On a space of totally additive functions. Vestn. St. Petersburg Univ. Math. 1958, 13, 52–59. [Google Scholar]

- Artzner, P.; Delbaen, F.; Eber, J.-M.; Heath, D. Coherent measures of risk. Math. Financ. 1999, 9, 203–228. [Google Scholar] [CrossRef]

- Bäuerle, N.; Müller, A. Stochastic orders and risk measures: Consistency and bounds. Insur. Math. Econ. 2006, 38, 132–148. [Google Scholar] [CrossRef]

- Kallenberg, O.; Kallenberg, O. Foundations of Modern Probability; Springer: Berlin/Heidelberg, Germany, 1997; Volume 2. [Google Scholar]

- Mao, T.; Cai, J. Risk measures based on behavioural economics theory. Financ. Stoch. 2018, 22, 367–393. [Google Scholar] [CrossRef]

- Shafieezadeh-Abadeh, S.; Kuhn, D.; Esfahani, P.M. Regularization via mass transportation. J. Mach. Learn. Res. 2019, 20, 1–68. [Google Scholar]

- Wu, Q.; Li, J.Y.M.; Mao, T. On generalization and regularization via wasserstein distributionally robust optimization. arXiv 2022, arXiv:2212.05716. [Google Scholar] [CrossRef]

- Fournier, N.; Guillin, A. On the rate of convergence in wasserstein distance of the empirical measure. Probab. Theory Relat. Fields 2015, 162, 707–738. [Google Scholar] [CrossRef]

- Markovitz, H.M. Portfolio selection. J. Financ. 1952, 7, 77–91. [Google Scholar]

- Plyakha, Y.; Uppal, R.; Vilkov, G. Why Does an Equal-Weighted Portfolio Outperform Value-and Price-Weighted Portfolios? 2012. Available online: https://ssrn.com/abstract=2724535 (accessed on 16 September 2017).

- Luenberger, D.G. Optimization by Vector Space Methods; John Wiley & Sons: Hoboken, NJ, USA, 1997. [Google Scholar]

- Bertsekas, D.; Shreve, S.E. Stochastic Optimal Control: The Discrete-Time Case; Athena Scientific: Belmont, MA, USA, 1996; Volume 5. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).