1. Introduction

In the context of language teaching, vocabulary is considered one of the main components that learners must master, owing to its importance for communication. According to [1], vocabulary contributes substantially to the understanding of written and spoken texts, and it also helps learners grasp the functions and applicability of new words. Vocabulary mastery affects students' way of thinking and their creativity in the language-learning process, so the overall quality of their language learning can also improve. As [2] noted, second-language learners require an adequate bank of words so that effective and meaningful communication is not impeded. The vocabulary of children aged 5–12 can be improved through letter games, especially cube-based letter games.

Research studies [3,4,5,6] show the importance of word games in teaching school children. Such games help children improve vocabulary retention, learn words, and arrange the letters that make up a word. In the initial stage of a word game, players learn to recognize letters and words. In later stages, they learn to form a word by putting its letters in the correct order and, in the process, strengthen their memory, which expands their vocabulary.

The matching letter game (MLG) aims to construct words using letter cubes. The MLG develops children's skills in turning letters into words [7,8]. It is designed with different difficulty levels tailored to children aged 3–12, and it is well suited to early-childhood education and entertainment. Besides supporting children's learning, word-formation ability, and early vocabulary recognition, it also helps them practice thinking and hands-on skills such as sorting, grouping, taking turns, and sharing.

The game contains letter cubes, flashcards with predefined words (each including a picture illustrating the word), and a game tray with slots for the cubes used when matching letters into a word. The child looks at the picture and the word on a card, then finds each matching letter on the large letter cubes and places the cubes on the tray in the correct order to form the word.

Currently available cubic-oriented matching letter games [9] are mainly aimed at children aged 3–8 and at the English language. Most of them consist of 8 to 16 letter cubes with options for forming up to 64 words from the given word flashcards, and the words are 3–4 letters long. This means that these games include a large number of cubes while still allowing only a limited number of words to be formed. No letter placement algorithm for this type of game has been defined for the Uzbek language.

The Uzbek language (native: O’zbek tili) is a low-resource, highly agglutinative language [10] with null-subject and null-gender characteristics, belonging to the Karluk branch of the Turkic language family. One of the most noticeable distinctions between Uzbek and other Turkic languages is the rounding of vowels, a feature influenced by Persian. Uzbek has been written in various scripts, such as Arabic, Cyrillic, and Latin. The Latin script (https://en.wikipedia.org/wiki/Uzbek_alphabet, accessed on 1 November 2022) is an official alphabet [11] of the Uzbek language.

Taking the aforementioned issues into account, we created a model for designing a cubic-oriented matching letter game that minimizes the number of letter cubes while covering as many words as possible. The model is trained on a selected dataset of Uzbek in Latin script, and its output is a set of letters to be allocated to the sides of the cubes so that words can be formed. To evaluate the performance of the developed models, we created a new dataset for the Uzbek language. For English, we used an extracted dataset. For Russian and Slovenian, we generated datasets of 3–5 letter words, following the suggestions of experts in the field. A simple stochastic method was also applied to see whether it could further improve the overall coverage of the proposed models.

The rest of the paper is structured as follows. Section 2 presents an overview of existing methods related to our algorithm. The problem definition and our contributions are stated in Section 3. A detailed description of the proposed models is provided in Section 4. Section 5 outlines the main results obtained by the proposed models, Section 6 discusses these results, and Section 7 concludes the paper and highlights our future plans.

2. Scientific Background

The computer science literature contains several methods for designing word games for different languages, as well as research on the characteristics of particular target languages. Since no method for designing cubic word games exists for Uzbek, our research contributes to this field for the Uzbek language. In this section, we discuss research in the field of word-game modeling.

In [12], the authors introduced a solution algorithm for the Uzbek version of the Wordle game, including the Cyrillic script of the language. This work studied character statistics of Uzbek five-letter words in Cyrillic script to determine a solving strategy for the game. The proposed methodology computed the letter frequency and positional letter frequency of Uzbek words in Cyrillic script and calculated a word score from these statistics, suggesting the words most likely to lead to a solution in the fewest attempts.

The authors of [13] conducted a study to show the effectiveness of the Big Cube game in improving students’ mastery of vocabulary. They designed Big Cube as a “words and pictures guessing” game and involved 40 grade 8 students as research subjects. Through experimental evaluation, they demonstrated the value of the cubic game for successful teaching: pre-test and post-test results from the 40 students (both tests comprised 20 questions divided into two sections, multiple choice and sentence completion) were compared, and the improvement was statistically significant. This work also underlines the relevance of our research: while [13] demonstrated the usefulness of such games for teaching and improving the vocabulary of students and children, we designed a new model for producing cubic games for children.

In [14], Vietnamese researchers demonstrated the appropriateness and efficacy of incorporating word games into English vocabulary instruction at a middle school in Vietnam. They studied two classes of grade 7 students over eight weeks to measure the efficacy of using games in the selected school. The authors showed statistically that vocabulary retention in the post-test after eight weeks of treatment was better, highlighting the importance of word games in teaching school children, especially for vocabulary retention and word learning.

The study in [15] showed the effectiveness of using the Build-A-Sentence Cubes Game to teach the simple past tense. It involved 16 students from the Eighth Level of the Q-Learning Course Pontianak as research subjects. The post-test results were noticeably higher than the pre-test results, indicating that the Build-A-Sentence Cubes Game had a moderate effect on teaching the simple past tense to these students.

The main goal of our study is similar to those of the aforementioned works, but whereas existing studies applied word games in the pedagogical process, we developed novel methods for building a cubic game. Uzbek is a low-resource language with no publicly available corpora, which makes designing algorithms for it challenging. Since we found no letter-frequency or two-letter-sequence (Bi-gram) statistics computed on an Uzbek corpus, we computed these statistics on our own training dataset.

3. Problem Statement

Given an input dataset of 3–5 letter words, our task is to suggest a set of letters to be placed on the cubes of the matching letter game. A cube has six sides, and one letter is placed on each side of each cube. Before designing the game, we needed to fix the number of cubes. We selected the cases of five, six, seven, and eight cubes, because these numbers are appropriate for covering 3–5 letter words.

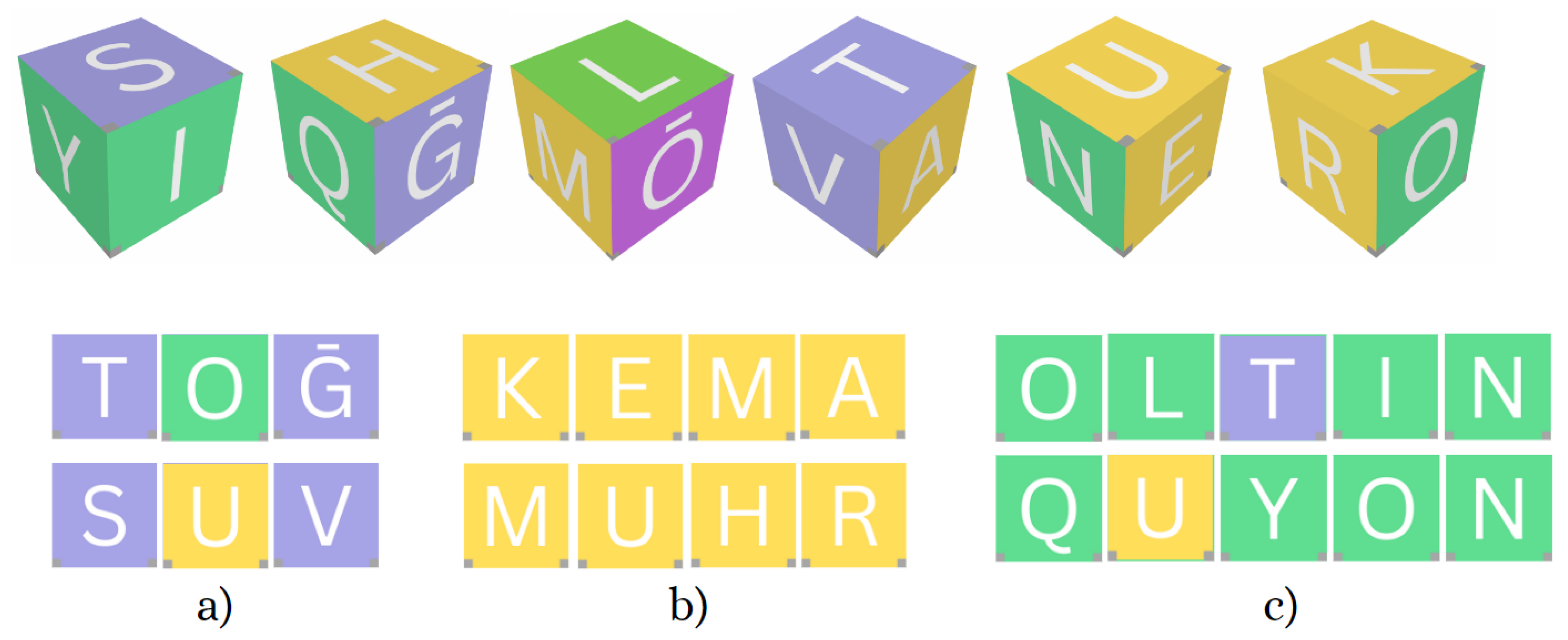

We wanted the word game produced by the proposed method to allow as many words as possible from the selected dataset to be formed with a minimal number of cubes. Examples of the letter-matching word game for Uzbek, English, and Russian are illustrated in the following figures.

Figure 1 shows an illustrative example of matching 3–5 letter words using 6 cubes for the Uzbek language: (a) shows 3-letter words constructed from the cubes above, and (b) and (c) show 4- and 5-letter words, respectively, made with the same cubes.

Examples of matching 3–5 letter English words using 5 cubes are presented in Figure 2, where panels (a)–(c) show 3-, 4-, and 5-letter words constructed from the 5 cubes. Figure 3 illustrates the same kind of examples of matching 3–5 letter words for the Russian language.

While designing the approach, we set one condition and two restrictions. Since the game is designed to help children become acquainted with letters, the condition is that every letter of the alphabet must appear on the cubes at least once. The two restrictions serve to optimize the model. First, a letter is not placed more than once on a single cube, because a player can use only one side of a cube when matching letters for a word; using different letters allows more words to be formed than repeating the same letter. Second, each cube should carry two (or three, depending on the method) vowel letters, to balance the distribution of vowels across the cubes.
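To make the condition and the two restrictions concrete, the following Python sketch checks a candidate cube layout against them. It is an illustration, not the authors' implementation; the function name `check_layout`, the representation of a cube as a sequence of six letters, and the parameter `allowed_vowel_counts` (two or three vowels per cube, following the text) are our assumptions.

```python
def check_layout(cubes, alphabet, vowels, allowed_vowel_counts=(2, 3)):
    """Return a list of violations of the game's condition and restrictions.

    Hypothetical helper: `cubes` is a list of cubes, each a sequence of six
    single-letter strings. An empty result means the layout is valid.
    """
    problems = []
    placed = {letter for cube in cubes for letter in cube}
    # Condition: every alphabet letter appears on at least one face.
    missing = sorted(set(alphabet) - placed)
    if missing:
        problems.append(f"missing letters: {missing}")
    for i, cube in enumerate(cubes):
        if len(cube) != 6:
            problems.append(f"cube {i} has {len(cube)} faces")
        # Restriction 1: no letter repeated on the same cube.
        if len(set(cube)) != len(cube):
            problems.append(f"cube {i} repeats a letter")
        # Restriction 2: a balanced number of vowels on each cube.
        n_vowels = sum(letter in vowels for letter in cube)
        if n_vowels not in allowed_vowel_counts:
            problems.append(f"cube {i} has {n_vowels} vowels")
    return problems
```

For instance, two cubes `aebcdf` and `ioghkl` over the toy alphabet `abcdefghiklo` pass all checks, while replacing the `e` on the first cube with a second `a` reports both a repeated letter and a missing letter.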

For the cubes produced by the proposed methods, we computed the set of dataset words that can be formed from them; the share of such words (the coverage) measures the accuracy of the model.
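The text does not specify how it decides whether a word can be formed from the cubes. One standard formulation, assumed here, treats this as bipartite matching: each letter of the word must be assigned to a different cube that shows that letter. The sketch below uses augmenting paths (Kuhn's algorithm) for the matching; `can_form` and `coverage` are hypothetical names.

```python
def can_form(word, cubes):
    """True if each letter of `word` can be shown on a distinct cube."""
    if len(word) > len(cubes):
        return False
    owner = [-1] * len(cubes)  # owner[c] = position in `word` using cube c

    def assign(pos, seen):
        # Try to place letter `pos` on a cube, re-routing earlier letters if needed.
        for c, cube in enumerate(cubes):
            if word[pos] in cube and not seen[c]:
                seen[c] = True
                if owner[c] == -1 or assign(owner[c], seen):
                    owner[c] = pos
                    return True
        return False

    return all(assign(pos, [False] * len(cubes)) for pos in range(len(word)))


def coverage(words, cubes):
    """Share of `words` (0.0 to 1.0) that can be formed from `cubes`."""
    if not words:
        return 0.0
    return sum(can_form(w, cubes) for w in words) / len(words)
```

A greedy letter-by-letter assignment is not sufficient in general: with the cubes `["ab", "a"]`, putting the a of "ab" on the first cube would block the b, whereas the augmenting path moves the a to the second cube.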

Our main contributions and goals are as follows:

- Since Uzbek is a low-resource language without sufficient datasets, we created a novel Uzbek dataset to conduct this research;
- We developed new models using character-level N-grams and statistics to design a cubic-oriented matching letter game for Uzbek and other languages;
- We performed experimental evaluations on 4 datasets to show the advantages of our methods.

4. Methodology

In this work, we propose two models for constructing the cubes, which include the following steps: (1) data preparation; (2) computation of letter frequencies using the Uni-gram and Bi-gram methods; (3) extraction of the sequence of letters that are candidates for placement on the cubes; and (4) a placement algorithm that applies the restrictions on placing letters on the final cubes. The proposed approaches are illustrated in Figure 4, and each step is described in the following subsections.

4.1. Data Preparation

To test our models, we created a new dataset for the Uzbek language, because no dataset for young children exists for Uzbek. The dataset was generated in the following steps:

- (1) Collection of words. Candidate words were extracted from the book by Madvaliyev and Begmatov [16], the largest dictionary of the Uzbek language.
- (2) Normalization. After generating the word list for our dataset, we normalized it to simplify processing.
  - (a) Some Uzbek letters are written as a letter followed by a diacritic apostrophe (g’ and o’), which causes problems when calculating letter frequency, because each such letter would be counted as two characters. We replaced g’ and o’ with ḡ and ō, so that each becomes a single character and letter frequencies are computed correctly. Of the remaining digraphs, sh was split into two independent letters, s and h. The digraph ch cannot be split in the same way, because c is not a letter of the Uzbek alphabet; we therefore kept ch as a digraph, counted as a separate character in the letter-frequency calculation.
  - (b) The Uzbek alphabet includes a character called the phonetic glottal stop (native: Tutuq belgisi). Although it is not a real letter, it is still considered part of the Uzbek alphabet. Only 18 words in the Uzbek dataset contain this character, so we omitted them during filtering.
- (3) Filtering. After normalization, 7948 words of 3–5 characters remained in our dataset. These words were then filtered by a native Uzbek speaker and an expert in Uzbek linguistics, who removed very rare words and words unfamiliar to school children. The final dataset contains 4558 words and is publicly available at https://github.com/UlugbekSalaev/Cubic-Word-Game-Modeling (accessed on 1 November 2022).
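The normalization and length filtering in steps (2) and (3) can be sketched as follows. This is a hypothetical implementation that assumes lowercase Latin-script input and treats the ASCII apostrophe and the Unicode variants listed in `APOSTROPHES` as the same mark; in real text the o’/g’ sign and the glottal stop may be written with different characters, which this sketch does not distinguish. The names `normalize` and `build_dataset` are ours. Words are returned as lists of letter tokens so that ch counts as one character.

```python
# Assumed apostrophe variants: ' (ASCII), U+2018, U+2019, U+02BB, U+02BC.
APOSTROPHES = "'\u2018\u2019\u02bb\u02bc"


def normalize(word):
    """Return the word as a list of letter tokens, or None if it has a glottal stop."""
    w = word.lower()
    for a in APOSTROPHES:
        # g' -> g with macron (U+1E21), o' -> o with macron (U+014D)
        w = w.replace("g" + a, "\u1e21").replace("o" + a, "\u014d")
    # Any apostrophe left over is treated as the glottal stop (tutuq belgisi).
    if any(a in w for a in APOSTROPHES):
        return None
    tokens, i = [], 0
    while i < len(w):
        if w.startswith("ch", i):
            tokens.append("ch")  # c is not an Uzbek letter, so ch stays one unit
            i += 2
        else:
            tokens.append(w[i])  # sh needs no special case: it yields s and h
            i += 1
    return tokens


def build_dataset(words, min_len=3, max_len=5):
    """Normalize words and keep those with 3-5 letter tokens."""
    result = []
    for word in words:
        tokens = normalize(word)
        if tokens is not None and min_len <= len(tokens) <= max_len:
            result.append(tokens)
    return result
```

For example, `normalize("choy")` gives `["ch", "o", "y"]`, `normalize("shox")` gives `["s", "h", "o", "x"]`, and a word with a glottal stop such as `"ma'no"` is dropped.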

To check the performance of our models on other languages, we obtained an English dataset from the ESL Forums website (https://eslforums.com, accessed on 10 October 2022), which is designed to help young children learn the language. This dataset contains a list of common English words of 3–5 letters. The Russian and Slovenian datasets [17,18] were generated with the support of experts in these languages, who helped select useful and interesting words for school children.

4.2. Statistics

We calculated statistics on the positional distribution of letters in the selected datasets to identify regularities in the occurrence of vowels and consonants in words.

The Uzbek language has 27 letters, six of which are vowel phonemes: a, e, i, o, u, o‘. The English and Russian alphabets consist of 26 and 33 letters, including 5 (a, e, i, o, u) and 10 {а, у, o, ы, и, э, я, ю, ё, е} vowels, respectively. The Slovenian alphabet has 25 letters, 5 of which are vowels: a, e, i, o, u.

Table 1 shows that most vowels have high frequencies in all languages, which means that vowels actively participate in forming words.

The vowel subsets {a, i, o} for Uzbek, {e, a, o, i} for English, {a, e, o, i} for Slovenian, and {а, o, е} for Russian are the most frequent vowels, with relative frequencies higher than 5%.

As Table 1 shows, vowels rank highly among the letters of Uzbek words. Given the active participation of vowels in word formation, we studied the positional occurrence of vowels and consonants in the selected datasets.

Table 2 shows the vowel and consonant patterns of the words.

Vowels are frequent in short words (3–5 letters), so increasing the number of vowels on the game cubes should increase the number of words that can be formed. Table 2 shows that most 4- and 5-letter words contain two vowels.
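The vowel/consonant patterns behind Table 2 can be computed with a few lines of Python. This sketch assumes that each word is a sequence of letters and that the vowel set is supplied by the caller (for Uzbek after normalization, for example, a, e, i, o, u and ō); the function names are hypothetical.

```python
from collections import Counter


def cv_pattern(word, vowels):
    """Map a word to its vowel/consonant pattern, e.g. 'kitob' -> 'CVCVC'."""
    return "".join("V" if letter in vowels else "C" for letter in word)


def vowel_count_by_length(words, vowels):
    """Count words by (word length, number of vowels)."""
    return Counter((len(w), sum(letter in vowels for letter in w)) for w in words)
```

Counting `cv_pattern` results over a dataset gives the pattern distribution, while `vowel_count_by_length` directly shows how many 4- and 5-letter words contain exactly two vowels.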

Taking into consideration the letter distribution of all the datasets used in our experiments, we decided to develop a second model that gives higher priority to vowel letters when selecting the letters for the cubes. Increasing the number of vowel letters on the cubes also improves the overall coverage of the model.

4.3. Methods

Based on the letter distribution and the statistics of vowel positional occurrence, we propose two approaches to designing a cubic game. Both approaches are mainly based on character-level Uni-gram and Bi-gram models [19]. Algorithm 1 describes how the character-level n-gram frequencies are computed.

| Algorithm 1: Calculating character-level n-gram frequencies |

| Input: dataset D, n-gram length n |

| Output: dictionary F of key-value pairs (n-gram, frequency) |

Initialization: F ← empty dictionary to store the frequency of each character n-gram

- 1: for each word w ∈ D do
- 2: for i ← 1 to len(w) − n + 1 do
- 3: if w[i..i+n−1] ∈ F then
- 4: increment F[w[i..i+n−1]] by 1
- 5: else
- 6: F[w[i..i+n−1]] ← 1
- 7: end if
- 8: end for
- 9: end for
- 10: return F

|

Algorithm 1 computes the frequencies of character n-grams in the words of the dataset. If n = 1, the method is called Uni-gram and returns a dictionary of single letters and their frequencies (e.g., a:45, b:30). If n = 2, it is called Bi-gram and returns a dictionary of two-letter chunks and their frequencies (e.g., ab:45, bc:30).
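Algorithm 1 maps directly onto Python's `collections.Counter`, which performs the increment-or-initialize step of lines 3–7. A minimal sketch, assuming each word is a string in which every character is one letter (a multi-character unit such as ch would first need to be mapped to a single symbol); the name `ngram_frequency` is ours.

```python
from collections import Counter


def ngram_frequency(dataset, n):
    """Count character n-grams over all words (Algorithm 1).

    n = 1 gives Uni-gram (letter) frequencies, n = 2 gives Bi-gram frequencies.
    """
    freq = Counter()
    for word in dataset:
        for i in range(len(word) - n + 1):
            freq[word[i:i + n]] += 1  # a missing key starts at 0
    return freq
```

Calling `ngram_frequency(D, 2).most_common()` then yields the Bi-grams sorted by descending frequency, which is the ordering that the two approaches below start from.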

4.3.1. Letter Frequency Approach

In the first method, we designed a model based on letter frequency, called the Letter Frequency model. Bi-gram frequency plays an important role in the perception of words and non-words [20]. More precisely, we used the Bi-gram method to order the alphabet letters by frequency, and the remaining letters (duplicates) were selected using the Uni-gram method. The procedure of the first model is outlined in Algorithm 2.

| Algorithm 2: Designing the letters for the cubic game with the Letter Frequency approach |

| Input: A set of an alphabet letters L, dataset D, number of cubes N |

| Output: Set of letters to form cubes corresponding to N |

| Initialization: Assign list of alphabet to A, number of cubes to N, empty list to store the duplicate letters, empty list to store letters sequence, empty 2D array with length Nx6 |

- 1:

= (D, 1); ▹ Returns dictionary which contains a letter (key) and its frequency (value), i.e., [:90, :75, ...] - 2:

= (D, 2); ▹ Returns dictionary which contains 2 letters (key) and its frequency (value), i.e., [:62, :23, ...] - 3:

= (, value); - 4:

= sort(, value); - 5:

for each do - 6:

if then - 7:

.add(); ▹ first letter of key - 8:

end if - 9:

if then - 10:

.add(); ▹ second letter of key - 11:

end if - 12:

if then - 13:

break - 14:

end if - 15:

end for - 16:

if then - 17:

.add() - 18:

end if - 19:

= - 20:

= sort(, ) - 21:

for do - 22:

for 6 do - 23:

= - 24:

end for - 25:

end for - 26:

return

|

Algorithm 2 takes a training dataset, the number of cubes, and the alphabet of the language as input parameters. Lines 1–2 compute the Uni-gram and Bi-gram frequencies using Algorithm 1, and lines 3–4 sort them by value in descending order. Lines 5–15 construct the sequence of letters from the Bi-gram ranking; the resulting sequence contains the alphabet letters in a different order. If some alphabet letters do not occur in the dataset, lines 16–18 add them to the sequence. Because the length of the sequence does not necessarily equal the number of faces available on the cubes, the remaining letters (duplicates) are selected according to their Uni-gram frequencies. Lines 21–25 place the sequence of letters on the cubes, and the last line returns the final set of cubes.
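The following Python sketch follows the steps of Algorithm 2 as described above. Several details are not recoverable from the text and are our assumptions, marked in the comments: the Bi-gram loop stops once every alphabet letter has been collected; sequences longer than the number of faces are truncated; duplicates are taken cyclically from the Uni-gram ranking; and the final placement deals vowels first and then consonants round-robin across the cubes, skipping any cube that is full or already shows the letter, as a simple way to spread vowels and avoid repeating a letter on one cube. All function names are hypothetical.

```python
from collections import Counter


def ngram_frequency(dataset, n):
    """Character n-gram counts over all words (as in Algorithm 1)."""
    freq = Counter()
    for word in dataset:
        for i in range(len(word) - n + 1):
            freq[word[i:i + n]] += 1
    return freq


def letter_sequence(dataset, alphabet):
    """Order letters by first appearance in the frequency-sorted Bi-gram list."""
    seq = []
    for bigram, _ in ngram_frequency(dataset, 2).most_common():
        for letter in bigram:
            if letter in alphabet and letter not in seq:
                seq.append(letter)
        if len(seq) == len(alphabet):  # assumed stopping rule
            break
    # Alphabet letters that never occur in a Bi-gram go to the end.
    seq += [letter for letter in alphabet if letter not in seq]
    return seq


def place_on_cubes(letters, n_cubes, vowels):
    """Assumed placement: vowels first, then consonants, dealt round-robin."""
    ordered = ([letter for letter in letters if letter in vowels]
               + [letter for letter in letters if letter not in vowels])
    cubes = [[] for _ in range(n_cubes)]
    for k, letter in enumerate(ordered):
        for step in range(n_cubes):
            cube = cubes[(k + step) % n_cubes]
            if len(cube) < 6 and letter not in cube:
                cube.append(letter)
                break  # a letter that fits on no cube is dropped
    return cubes


def letter_frequency_cubes(dataset, alphabet, vowels, n_cubes):
    slots = 6 * n_cubes
    seq = letter_sequence(dataset, alphabet)[:slots]  # assumed truncation
    # Fill the remaining faces with the most frequent letters (duplicates).
    ranked = [letter for letter, _ in ngram_frequency(dataset, 1).most_common()
              if letter in alphabet]
    duplicates = (ranked * slots)[: slots - len(seq)]
    return place_on_cubes(seq + duplicates, n_cubes, vowels)
```

For example, `letter_frequency_cubes(["abc", "bad", "fed", "cab"], "abcdef", "ae", 2)` returns two cubes that each carry all six letters of the toy alphabet.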

4.3.2. Vowel Priority Approach

In all four languages considered, vowel letters play the most important role in constructing 3–5 letter words, as shown in Table 2. Taking this into account, we designed another model, called the Vowel Priority model, which favors the inclusion of vowel letters on the cubes. The selection of the vowel letters to be included on the cubes is shown in Algorithm 3.

| Algorithm 3: Selecting the subset of vowels whose frequencies exceed 5% of the dataset |

| Input: letter frequency dictionary F (each item is a key-value pair) |

| Output: a list of vowels V′ |

Initialization: V′ ← empty list, total ← 0

- 1: total ← sum of all values in F
- 2: for each (letter, count) in F do
- 3: if letter is a vowel and count / total > 0.05 then
- 4: V′.add(letter)
- 5: end if
- 6: end for
- 7: return V′

|

The input of Algorithm 3 is a sorted letter frequency dictionary (Unigram_Frequency). Line 1 counts the total number of characters in the dataset from the given letter frequencies. Lines 2–6 extract the subset of vowels whose occurrence frequency exceeds 5%, where the occurrence frequency is the letter's frequency divided by the total number of characters. Line 7 returns the extracted list as the frequent vowels. The proposed model is defined in Algorithm 4.
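Algorithm 3 amounts to a single filtered pass over the letter-frequency dictionary. In this sketch (the function name is hypothetical), the dictionary is assumed to be ordered from most to least frequent, so the returned vowels keep that ranking.

```python
def frequent_vowels(letter_freq, vowels, threshold=0.05):
    """Vowels whose share of all letter occurrences exceeds `threshold`."""
    total = sum(letter_freq.values())  # line 1: total number of characters
    # Lines 2-6: keep vowels whose relative frequency is above the threshold.
    return [letter for letter, count in letter_freq.items()
            if letter in vowels and count / total > threshold]
```

With counts `{"a": 30, "b": 40, "e": 20, "u": 4, "k": 6}` (100 letters in total), a (30%) and e (20%) are kept, while u (4%) falls below the threshold.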

| Algorithm 4: Designing the letters for the cubic game with the Vowel Priority approach |

| Input: a set of alphabet letters L, a list of vowels V, a list of consonants C, dataset D, number of cubes N |

| Output: Set of letters to form cubes corresponding to N |

Initialization: Assign list of alphabet to A, number of cubes to N, empty list to store the duplicate letters, empty list to store the sequence of letters, empty 2D array with length of Nx6

- 1:

= (D, 1); ▹ Returns dictionary which contains a letter (key) and its frequency (value), i.e., [:90, :75, ...] - 2:

= (D, 2); ▹ Returns dictionary which contains 2 letters (key) and its frequency (value), i.e., [:62, :23, ...] - 3:

= (, value); - 4:

= sort(, value); - 5:

for each do - 6:

for ( do - 7:

if then - 8:

.add(); ▹ i-letter of key - 9:

end if - 10:

end for - 11:

if then - 12:

break - 13:

end if - 14:

end for - 15:

) - 16:

- 17:

= sort(, ) - 18:

for do - 19:

for 6 do - 20:

= - 21:

end for - 22:

end for - 23:

return

|

Algorithm 4 takes the alphabet letters, the lists of vowel and consonant letters, a dataset, and the number of cubes as input parameters. The first four lines are the same as in the Letter Frequency model: they compute the Uni-gram and Bi-gram frequencies and sort them in descending order. Lines 5–14 order the letters based on the Bi-gram ranking and put them into the list. If some alphabet letters are not included in the sequence generated by lines 5–14, they are added to the list in line 15. The resulting list must contain as many letters as there are faces on the cubes (6N), so the remaining positions are filled with the frequent vowels returned by Algorithm 3 (line 16). Line 17 sorts the list based on the letter sequence. Lines 18–22 place the letters on the cubes, and the last line returns the resulting set of cubes.
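A Python sketch of the Vowel Priority approach, under assumptions analogous to those of a Letter Frequency implementation: the stopping rule of the Bi-gram loop, the truncation to 6N letters, and the vowels-first round-robin placement are ours, not taken from the text. The step that distinguishes it is line 16: the spare faces are filled with the frequent vowels selected as in Algorithm 3 (threshold 5%) rather than with the most frequent letters overall. The function name is hypothetical.

```python
from collections import Counter


def ngram_frequency(dataset, n):
    """Character n-gram counts over all words (as in Algorithm 1)."""
    freq = Counter()
    for word in dataset:
        for i in range(len(word) - n + 1):
            freq[word[i:i + n]] += 1
    return freq


def vowel_priority_cubes(dataset, alphabet, vowels, n_cubes, threshold=0.05):
    slots = 6 * n_cubes
    # Lines 1-15: Bi-gram ordering of the alphabet, then letters never seen.
    seq = []
    for bigram, _ in ngram_frequency(dataset, 2).most_common():
        for letter in bigram:
            if letter in alphabet and letter not in seq:
                seq.append(letter)
        if len(seq) == len(alphabet):  # assumed stopping rule
            break
    seq += [letter for letter in alphabet if letter not in seq]
    seq = seq[:slots]  # assumed truncation when the alphabet exceeds 6N
    # Algorithm 3: vowels above the frequency threshold, most frequent first.
    unigrams = ngram_frequency(dataset, 1)
    total = sum(unigrams.values())
    frequent = [letter for letter, count in unigrams.most_common()
                if letter in vowels and count / total > threshold]
    # Line 16: remaining faces get frequent vowels, cycling if needed.
    extra = (frequent * slots)[: slots - len(seq)]
    # Assumed placement: vowels first, then consonants, round-robin,
    # never putting the same letter twice on one cube.
    letters = seq + extra
    ordered = ([x for x in letters if x in vowels]
               + [x for x in letters if x not in vowels])
    cubes = [[] for _ in range(n_cubes)]
    for k, letter in enumerate(ordered):
        for step in range(n_cubes):
            cube = cubes[(k + step) % n_cubes]
            if len(cube) < 6 and letter not in cube:
                cube.append(letter)
                break
    return cubes
```

On a toy ten-letter alphabet with two cubes, the two spare faces receive the vowels a and e, so each cube ends up with two or three vowels.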

5. Experimental Results

The developed models (Letter Frequency and Vowel Priority) were tested with 5, 6, 7, and 8 cubes. The experiments were performed using 5-fold cross-validation. We evaluated our models on four datasets, for the Uzbek, English, Russian, and Slovenian languages. Since our main goal was to apply the proposed models to Uzbek, we created a new Uzbek dataset with the help of language experts. Detailed information about the datasets is presented in Table 3.
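A minimal sketch of the 5-fold protocol, assuming that the cubes are built from the training folds and coverage is measured on the held-out fold (the text does not state this split explicitly). `k_fold_splits`, `cross_validate`, and the fixed random seed are hypothetical; `build_cubes` and `coverage` stand for any cube-construction model and coverage function.

```python
import random
import statistics


def k_fold_splits(words, k=5, seed=0):
    """Yield (train, test) pairs; each word lands in exactly one test fold."""
    shuffled = list(words)
    random.Random(seed).shuffle(shuffled)
    folds = [shuffled[i::k] for i in range(k)]
    for i in range(k):
        train = [w for j, fold in enumerate(folds) if j != i for w in fold]
        yield train, folds[i]


def cross_validate(words, build_cubes, coverage, k=5, seed=0):
    """Mean and standard deviation of held-out coverage over k folds."""
    scores = [coverage(test, build_cubes(train))
              for train, test in k_fold_splits(words, k, seed)]
    return statistics.mean(scores), statistics.stdev(scores)
```

The returned pair corresponds to the "average values over the 5-fold cross-validation with standard deviations" reported in the coverage tables.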

The developed datasets mostly contain words from the “Noun”, “Verb”, and “Adjective” word classes. The detailed distribution of words by word class is given in Table 4.

The overall coverage (average values over the 5-fold cross-validation, with standard deviations) of the Letter Frequency and Vowel Priority models with 5, 6, 7, and 8 cubes is shown in Tables 5–8, respectively. The best coverage for each dataset is shown in bold.

Table 5 shows the performance of the Letter Frequency and Vowel Priority models with 5 cubes. Both models achieved low coverage on all datasets for 4- and 5-letter words, because the probability of constructing 4–5 letter words (especially 5-letter words) with 5 cubes is very low. Both models obtained the same coverage on the Russian dataset: 48.6% for 3-letter words, 18.2% for 4-letter words, and 3.0% for 5-letter words. The reason is that the cube letters (30 letters for 5 cubes) cannot include all the letters of the Russian alphabet, which has 33 letters.

Table 6 shows the overall coverage with 6 cubes. Compared with 5 cubes, the overall coverage of both models improved by around 30% on all datasets.

approach achieved higher accuracy than the

method in all cases with 3–4–5 letters. The same experiment for the case of 7 cubes is shown in

Table 7. Interestingly, the standard deviation of

method was slightly high (4.3%) on the Uzbek dataset, which meant that the results over 5-fold cross-validation fluctuated.

In the case of 7 cubes, the

method achieved reasonably high coverage (over 90%) in the English and Slovenian languages in 3–4 letter cases. Although the

gained comparable results with the

model on the Uzbek and English datasets, it resulted in approximately 15% lower average coverage on the Russian and Slovenian datasets. The average coverage of both models increased around 17–20% for the case of 5 letter words in all datasets, but this result was still not what we desired, so we continued the experiment with 8 cubes, as illustrated in

Table 8.

The results in Table 8 show that the Vowel Priority model achieved better coverage than the Letter Frequency model on all datasets except the Russian one. More precisely, the Vowel Priority model improved on the Letter Frequency model by 7% for Uzbek, 4.7% for English, and 2.1% for Slovenian. The main reason is that, with 8 cubes, the Vowel Priority model includes more vowel letters than in the cases of 5, 6, or 7 cubes. In general, all models achieved their highest results on all datasets with 8 cubes, exceeding 98% for 3-letter words and 95% for 4-letter words on the Uzbek, Slovenian, and English datasets. This was expected, because the chance of constructing 3–5 letter words from 8 cubes (48 letters) is very high. In all experiments, the

method resulted in a lower result than the

approach on the Russian dataset, because when the number of cubes increased, the proposed methods tended to achieve reasonably high coverage on all datasets. We achieved the intended coverage with 8 cubes, so this was the last case in the experiment. The average coverage over 5–8 cubes obtained by the Letter Frequency and Vowel Priority models is presented in Figure 5.

Figure 5 illustrates that both models achieved comparable results in terms of average coverage under 5–8 cube conditions.

method gained slightly better results than

method on 3–4–5 letter average coverage for Slovenian and Russian datasets, while this result was comparable in Uzbek and English datasets.

To assess the time complexity of the proposed models, we recorded their execution times during the experiments, as shown in Table 9. The experiments were performed on a computer with an Intel Core i5 processor and 8 GB of RAM. As Table 9 shows, the average execution times of both models (over the 5 folds) ranged from 0.04 to 0.06 seconds on all datasets.

6. Discussion of Results

The experimental results showed that both the Letter Frequency and Vowel Priority models achieved reasonable dataset coverage across various languages. The coverage of both models improved significantly as the number of cubes increased, which was expected. The proposed models achieved the intended coverage with 8 cubes, which we selected as the optimal number of cubes. Having generated the cube letters with the two models, we investigated whether the overall coverage could be improved further by swapping letters between pairs of cubes.

We applied a simple stochastic method to the letters found by the Letter Frequency model, and the same method to the letters generated by the Vowel Priority model. Applied to the Letter Frequency model, it achieved 94.7% overall coverage on the Uzbek dataset, 5.8% higher than the Letter Frequency model alone; 94.4% (2.3% better) on the English dataset; 88.0% (3.9% better) on the Russian dataset; and 94.2% (2.1% better) on the Slovenian dataset.

Applied to the Vowel Priority model, the stochastic method attained overall coverage of 96.3% and 97.5% on the Uzbek and English datasets, similar to the Vowel Priority model alone. It achieved 75.0% coverage on the Russian dataset, 3.1% better than the Vowel Priority model alone, and 94.8% on the Slovenian dataset, a 0.6% improvement.
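The stochastic method is described only briefly. The sketch below shows one plausible variant, a simple hill climber: it repeatedly picks two cubes and one face on each at random, swaps the two letters, and keeps the swap if coverage does not decrease. The iteration budget, the seed, the acceptance rule, and the name `swap_search` are assumptions. Swaps that would repeat a letter on a cube are skipped, but the vowel-count restriction is not re-checked. Each iteration re-evaluates coverage on the whole word list, which illustrates why such a search is much slower than the two models themselves.

```python
import random


def swap_search(cubes, words, coverage, iterations=1000, seed=0):
    """Randomly swap letters between cubes, keeping non-worsening swaps."""
    rng = random.Random(seed)
    best = [list(cube) for cube in cubes]  # work on a copy
    best_score = coverage(words, best)
    for _ in range(iterations):
        i, j = rng.sample(range(len(best)), 2)
        fi, fj = rng.randrange(len(best[i])), rng.randrange(len(best[j]))
        a, b = best[i][fi], best[j][fj]
        # Skip swaps that would put the same letter twice on one cube.
        if a == b or b in best[i] or a in best[j]:
            continue
        best[i][fi], best[j][fj] = b, a
        score = coverage(words, best)
        if score >= best_score:
            best_score = score
        else:
            best[i][fi], best[j][fj] = a, b  # undo the swap
    return best, best_score
```

Any coverage function with the signature `coverage(words, cubes)` can be plugged in, for example one based on bipartite matching between word letters and cubes.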

Although the optimization technique improved the coverage slightly, its running time was substantially higher than that of the Letter Frequency and Vowel Priority models. The optimization method is therefore considerably more time-consuming and may not be practical for large datasets.

7. Conclusions and Future Work

A matching letter game is an essential tool for helping children improve letter recognition and vocabulary, as well as orthography. In this paper, we proposed two models, based on the Letter Frequency and Vowel Priority methods, for modeling a cubic-oriented word game. Experimental evaluations showed that each model has its own advantages, depending on the number of cubes. With 8 cubes, the Vowel Priority model achieved higher overall coverage (over 94%) than the Letter Frequency approach (over 89%) on the Uzbek, English, and Slovenian datasets, because the datasets of those languages have less frequent vowels. Both models covered around 99% of 3-letter words in the Uzbek, English, and Slovenian datasets, and over 85% of 5-letter words. Both models can be applied to other languages by providing their alphabets and datasets of 3–5 letter words.

The results obtained in this research suggest that, while our models can provide a good starting point for designing word games, there is still room for further optimization using stochastic methods. Future research can explore the use of more advanced stochastic optimization methods to improve the overall coverage, while minimizing the number of cubes used.