A Mandarin Tone Recognition Algorithm Based on Random Forest and Feature Fusion †

Abstract

1. Introduction

- Random forest (RF) is applied for the first time to speaker-independent Mandarin tone recognition.

- Through a large number of experiments with three fusion feature sets (FFSs) built only from sound source features, we find that RF is a highly stable, low-complexity approach to tone recognition.

- The experiments show that the proposed method recognizes tones well on small sample sets and has strong generalization ability.

2. Materials and Methods

2.1. Data Description

2.2. Preprocessing

2.3. Feature Fusion

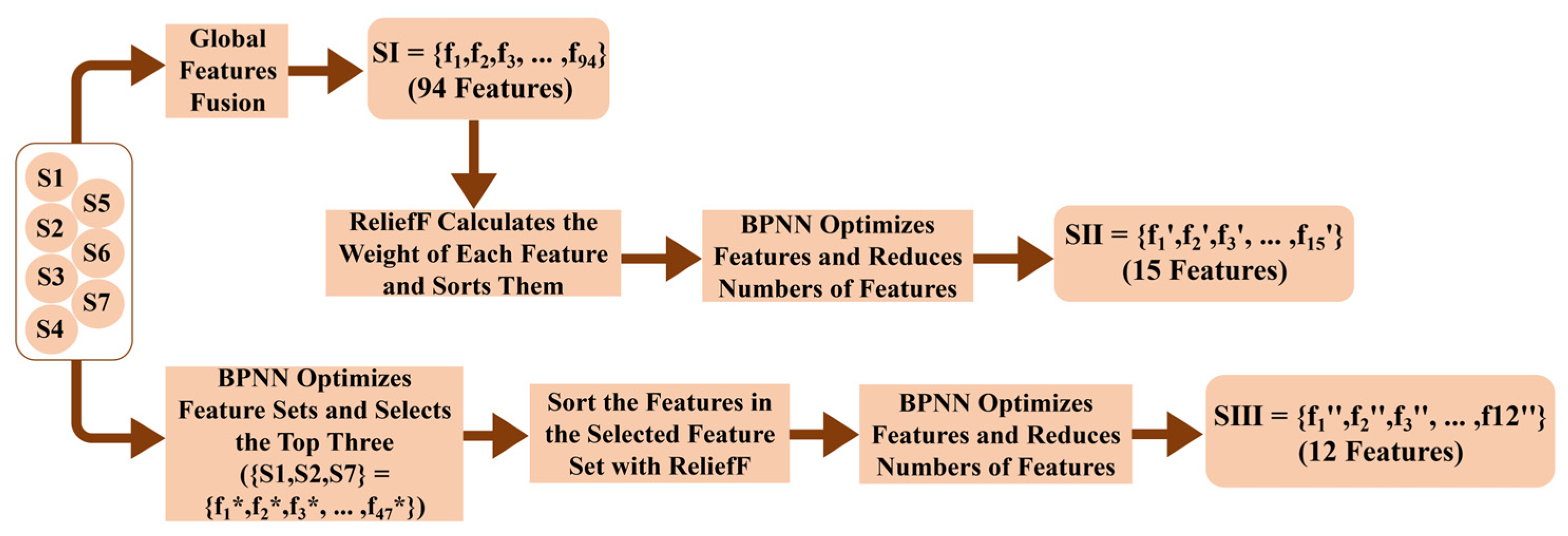

- FFS SI was directly composed of all 94 feature parameters from S1 to S7.

- The second method used a back-propagation neural network (BPNN), which was chosen as the fixed classifier for optimizing the features of the tone recognition task because of its good performance and wide use. The BPNN's hidden layer had 32 nodes. The procedure was as follows: the ReliefF algorithm [22] was used to compute a weight for each feature in SI, and the features were ranked by weight in descending order. The features were then added one at a time, in this order, to the input of the BPNN for tone recognition, and the process stopped once recognition accuracy no longer increased. The resulting FFS SII contained fifteen features.

- FFS SIII, which contained twelve features, was obtained with the third method. First, the BPNN was used to select the three best-performing feature sets among S1 to S7. The features in these three sets were then ranked by ReliefF. Finally, twelve features were selected with the same incremental procedure as in the second method.
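The ReliefF ranking and the incremental feature addition used for SII and SIII can be sketched as follows. This is an illustrative sketch under stated assumptions, not the authors' code: `relieff` is a simplified, hypothetical implementation of the ReliefF weighting analysed in [22] (Manhattan distance, k = 10 nearest hits and misses, every training sample used once as the reference instance); scikit-learn's `MLPClassifier` with one 32-node hidden layer stands in for the BPNN (it is trained with back-propagated gradients via its default Adam solver); and accuracy is measured on a held-out validation split because the text does not say which data were used for the stopping criterion. The data are synthetic.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.preprocessing import MinMaxScaler


def relieff(X, y, k=10):
    """Simplified ReliefF feature weights (hypothetical helper); X scaled to [0, 1]."""
    n, d = X.shape
    classes, counts = np.unique(y, return_counts=True)
    prior = dict(zip(classes.tolist(), (counts / n).tolist()))
    w = np.zeros(d)
    for i in range(n):  # every sample serves once as the reference instance
        dist = np.abs(X - X[i]).sum(axis=1)  # Manhattan distance to every sample
        same = np.flatnonzero((y == y[i]) & (np.arange(n) != i))
        hits = same[np.argsort(dist[same])[:k]]  # k nearest hits
        w -= np.abs(X[hits] - X[i]).mean(axis=0) / n
        for c in classes.tolist():
            if c == y[i]:
                continue
            other = np.flatnonzero(y == c)
            misses = other[np.argsort(dist[other])[:k]]  # k nearest misses from class c
            weight = prior[c] / (1.0 - prior[y[i].item()])
            w += weight * np.abs(X[misses] - X[i]).mean(axis=0) / n
    return w


def incremental_selection(X_tr, y_tr, X_val, y_val, order, hidden=32):
    """Add features in descending-weight order; stop once accuracy stops rising."""
    selected, best_acc = [], 0.0
    for f in order:
        trial = selected + [int(f)]
        clf = MLPClassifier(hidden_layer_sizes=(hidden,), max_iter=1000, random_state=0)
        acc = clf.fit(X_tr[:, trial], y_tr).score(X_val[:, trial], y_val)
        if acc <= best_acc:
            break  # recognition accuracy no longer rises
        selected, best_acc = trial, acc
    return selected, best_acc


# Demo on synthetic 4-class data with 94 features (a stand-in for SI, not the SCSC data).
X, y = make_classification(n_samples=600, n_features=94, n_informative=10,
                           n_redundant=10, n_classes=4, n_clusters_per_class=1,
                           random_state=0)
X_tr, X_val, y_tr, y_val = train_test_split(X, y, test_size=0.25, stratify=y,
                                            random_state=0)
scaler = MinMaxScaler().fit(X_tr)
X_tr, X_val = scaler.transform(X_tr), scaler.transform(X_val)

weights = relieff(X_tr, y_tr)
order = np.argsort(weights)[::-1]  # rank features from largest to smallest weight
selected, acc = incremental_selection(X_tr, y_tr, X_val, y_val, order)
print(f"{len(selected)} features selected, validation accuracy {acc:.3f}")
```

For the third method, the same `incremental_selection` call would simply receive a ReliefF ranking computed over the features of the three best sets instead of all of SI.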

2.4. Classifier

2.5. Tone Recognition Classifier Based on Random Forest

2.5.1. CART Decision Tree and Random Forest Modeling

1. First, for each decision tree, $M_1$ samples are drawn at random from the training set with replacement (bootstrap sampling), so that some training samples are selected several times and others not at all. This yields the $M_1 \times N$ feature matrix $F = \{f_{i,j} \mid i = 1, \ldots, M_1;\ j = 1, \ldots, N\}$, where $N$ is the number of features.

2. Second, at the root node of each decision tree, the feature with the smallest Gini index is selected from the $N$ features of the $M_1 \times N$ matrix, and its feature value serves as the decision at the root node.

3. Next, the $M_1 \times N$ matrix is divided into two parts, $M_{11} \times N$ and $M_{12} \times N$. The feature with the smallest Gini index is selected from the $M_{11} \times N$ submatrix, and its feature value serves as the decision at that branch node; the same step is performed on $M_{12} \times N$. $M_{11}$ is in turn divided into two parts, and the process is repeated until all features have been used or a tone type is given as output, which constitutes a leaf node.

4. Steps 1, 2, and 3 are repeated $T$ times to construct $T$ decision trees, which together form the random forest. Figure 2 shows one of these decision trees.
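Steps 1 to 4 can be sketched from scratch as below, assuming numeric feature vectors and integer tone labels. The Gini index of a node with class proportions $p_k$ is $1 - \sum_k p_k^2$, and a split is scored by the size-weighted Gini index of its two children. Several details are assumptions not stated in the text: candidate thresholds are midpoints between distinct feature values; features may be reused at deeper nodes, as in standard CART, instead of stopping "when all features are used"; and a hypothetical `max_depth` cap is added. As in the description above, every split searches all $N$ features, whereas Breiman's random forest [11] additionally restricts each split to a random subset of features. The helper names `gini`, `best_split`, `build_tree`, and `build_forest` are illustrative only.

```python
from collections import Counter

import numpy as np


def gini(labels):
    """Gini index 1 - sum_k p_k^2 of the tone labels at a node."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - float(np.sum(p ** 2))


def best_split(X, y):
    """Feature j and threshold t whose split has the smallest weighted Gini index."""
    best_j, best_t, best_g = None, None, np.inf
    for j in range(X.shape[1]):
        values = np.unique(X[:, j])
        for t in (values[:-1] + values[1:]) / 2:  # midpoints between distinct values
            left = X[:, j] <= t
            g = (left.sum() * gini(y[left]) + (~left).sum() * gini(y[~left])) / len(y)
            if g < best_g:
                best_j, best_t, best_g = j, float(t), g
    return best_j, best_t


def build_tree(X, y, depth=0, max_depth=10):
    """Split recursively until a node holds one tone type (a leaf) or depth runs out."""
    majority = Counter(y.tolist()).most_common(1)[0][0]
    if len(np.unique(y)) == 1 or depth == max_depth:
        return majority  # leaf node: output a tone label
    j, t = best_split(X, y)
    if j is None:  # identical feature vectors with mixed labels: make a leaf
        return majority
    left = X[:, j] <= t
    return {"feature": j, "threshold": t,
            "left": build_tree(X[left], y[left], depth + 1, max_depth),
            "right": build_tree(X[~left], y[~left], depth + 1, max_depth)}


def build_forest(X, y, T=100, seed=0):
    """Repeat bootstrap sampling (step 1) and tree growing (steps 2-3) T times (step 4)."""
    rng = np.random.default_rng(seed)
    M1 = len(y)
    forest = []
    for _ in range(T):
        idx = rng.integers(0, M1, size=M1)  # draw M1 samples with replacement
        forest.append(build_tree(X[idx], y[idx]))
    return forest


# Synthetic demo: 4 "tones", 30 samples each, 5 features (hypothetical data).
rng = np.random.default_rng(1)
y = np.repeat(np.arange(4), 30)
X = rng.normal(scale=3.0, size=(4, 5))[y] + rng.normal(size=(120, 5))
forest = build_forest(X, y, T=10)
print(len(forest), "trees built")
```

Each tree is a nested dictionary whose internal nodes store a feature index and threshold and whose leaves store a tone label, which mirrors the root, branch, and leaf nodes described in steps 2 and 3.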

2.5.2. Tone Recognition Based on the Random Forest Classifier

- The test set is fed into the pre-trained random forest classifier.

- In each decision tree, starting from the root node, the classifier compares the test sample's feature parameter with the decision value stored at the current node and follows the corresponding branch, repeating this until a leaf node is reached; the leaf node outputs the corresponding tone type.

- Because each decision tree recognizes each test sample independently, the final recognition result for a test sample is obtained by voting over the outputs of all the decision trees.
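The recognition stage can be sketched with scikit-learn's `DecisionTreeClassifier(criterion="gini")` standing in for the CART trees; with its default `max_features=None`, each split considers all features, as in the description of Section 2.5.1. The helper names `fit_forest` and `predict_vote` are hypothetical, and the data are synthetic. `predict_vote` performs a hard majority vote over the per-tree tone labels; note that scikit-learn's own `RandomForestClassifier` instead averages the trees' class-probability estimates, so its predictions can occasionally differ from a hard vote.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier


def fit_forest(X, y, T=100, seed=0):
    """Train T Gini-based CART trees, each on its own bootstrap sample."""
    rng = np.random.default_rng(seed)
    trees = []
    for t in range(T):
        idx = rng.integers(0, len(y), size=len(y))  # sample with replacement
        tree = DecisionTreeClassifier(criterion="gini", random_state=t)
        trees.append(tree.fit(X[idx], y[idx]))
    return trees


def predict_vote(trees, X):
    """Each tree labels every test sample independently; the majority label wins."""
    votes = np.stack([tree.predict(X) for tree in trees])  # shape (T, n_samples)
    labels = np.unique(votes)
    counts = np.stack([(votes == c).sum(axis=0) for c in labels])  # (n_labels, n_samples)
    return labels[np.argmax(counts, axis=0)]  # ties go to the smallest label


X, y = make_classification(n_samples=600, n_features=15, n_informative=8,
                           n_classes=4, n_clusters_per_class=1, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.2, stratify=y,
                                          random_state=0)
trees = fit_forest(X_tr, y_tr, T=100)
y_pred = predict_vote(trees, X_te)
print("accuracy:", np.mean(y_pred == y_te))
```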

3. Experiment and Result

3.1. Optimization Experiment of RF Classifier’s Hyperparameter T

3.2. Preliminary Experiment

3.2.1. Analysis of the Role of Vocal Tract Features in Tone Recognition

3.2.2. Analysis of Classifiers in Tone Recognition

3.3. Comparative Experiment

3.3.1. Comparative Experiments of Different Fusion Feature Sets

3.3.2. Comparative Experiments of Small Sample Sets

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. List of the 600 Syllables from Fifteen Speakers in the SCSC Database

| Tone | Syllable 1 | Syllable 2 | Syllable 3 | Syllable 4 | Syllable 5 | Syllable 6 | Syllable 7 | Syllable 8 | Syllable 9 | Syllable 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Tone 1 | a1 | ai1 | ao1 | cheng1 | e1 | fang1 | feng1 | hou1 | ji1 | lao1 |
| Tone 2 | a2 | ai2 | ao2 | cheng2 | e2 | fang2 | feng2 | hou2 | ji2 | lao2 |
| Tone 3 | a3 | ai3 | ao3 | cheng3 | e3 | fang3 | feng3 | hou3 | ji3 | lao3 |
| Tone 4 | a4 | ai4 | ao4 | cheng4 | e4 | fang4 | feng4 | hou4 | ji4 | lao4 |

| Tone | Syllable 1 | Syllable 2 | Syllable 3 | Syllable 4 | Syllable 5 | Syllable 6 | Syllable 7 | Syllable 8 | Syllable 9 | Syllable 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Tone 1 | ang1 | en1 | eng1 | wei1 | wo1 | wu1 | yan1 | yang1 | yao1 | yi1 |
| Tone 2 | a2 | ai2 | ang2 | ao2 | er2 | wang2 | wei2 | wen2 | tu2 | e2 |
| Tone 3 | fa3 | lou3 | yi3 | pai3 | yuan3 | wen3 | wo3 | xiang3 | yan3 | yang3 |
| Tone 4 | a4 | ang4 | er4 | gun4 | lian4 | lie4 | lun4 | ou4 | si4 | weng4 |

| Tone | Syllable 1 | Syllable 2 | Syllable 3 | Syllable 4 | Syllable 5 | Syllable 6 | Syllable 7 | Syllable 8 | Syllable 9 | Syllable 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Tone 1 | fan1 | bie1 | chai1 | cun1 | en1 | mo1 | tuo1 | wu1 | xiong1 | yi1 |
| Tone 2 | a2 | ai2 | fo2 | cun2 | fang2 | cu2 | ju2 | she2 | wang2 | wen2 |
| Tone 3 | wu3 | yan3 | wei3 | fa3 | yao3 | ha3 | lou3 | wang3 | weng3 | xiang3 |
| Tone 4 | lie4 | na4 | lun4 | gun4 | mie4 | mi4 | ou4 | si4 | tie4 | wen4 |

| Tone | Syllable 1 | Syllable 2 | Syllable 3 | Syllable 4 | Syllable 5 | Syllable 6 | Syllable 7 | Syllable 8 | Syllable 9 | Syllable 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Tone 1 | a1 | bie1 | tuo1 | en1 | yue1 | wa1 | tou1 | you1 | yuan1 | mo1 |
| Tone 2 | ai2 | wen2 | fo2 | fang2 | ju2 | lou2 | wang2 | wu2 | she2 | zhuo2 |
| Tone 3 | shu3 | a3 | yang3 | yan3 | ti3 | yong3 | you3 | wo3 | wei3 | yu3 |
| Tone 4 | ci4 | gun4 | lie4 | lian4 | lun4 | xian4 | hu4 | pao4 | ou4 | zuan4 |

| Tone | Syllable 1 | Syllable 2 | Syllable 3 | Syllable 4 | Syllable 5 | Syllable 6 | Syllable 7 | Syllable 8 | Syllable 9 | Syllable 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Tone 1 | weng1 | en1 | eng1 | wo1 | wu1 | yao1 | yin1 | you1 | yuan1 | yue1 |
| Tone 2 | a2 | ai2 | er2 | ban2 | wang2 | wen2 | wu2 | cu2 | yong2 | yuan2 |
| Tone 3 | fa3 | er3 | wen3 | weng3 | xiang3 | yao3 | yong3 | you3 | yuan3 | yun3 |
| Tone 4 | ou4 | wen4 | wu4 | yang4 | yao4 | ye4 | ying4 | you4 | yuan4 | yun4 |

| Tone | Syllable 1 | Syllable 2 | Syllable 3 | Syllable 4 | Syllable 5 | Syllable 6 | Syllable 7 | Syllable 8 | Syllable 9 | Syllable 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Tone 1 | en1 | eng1 | weng1 | wo1 | wu1 | yao1 | yin1 | you1 | yuan1 | yue1 |
| Tone 2 | a2 | ai2 | er2 | wan2 | wang2 | wen2 | wu2 | ang2 | yong2 | yuan2 |
| Tone 3 | ai3 | er3 | wen3 | weng3 | wu3 | yao3 | yong3 | you3 | yuan3 | yun3 |
| Tone 4 | ou4 | wen4 | wu4 | yang4 | yao4 | ye4 | ying4 | you4 | yuan4 | yun4 |

| Tone | Syllable 1 | Syllable 2 | Syllable 3 | Syllable 4 | Syllable 5 | Syllable 6 | Syllable 7 | Syllable 8 | Syllable 9 | Syllable 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Tone 1 | an1 | ang1 | e1 | ou1 | wai1 | wan1 | wei1 | yan1 | yang1 | gou1 |
| Tone 2 | ao2 | e2 | fang2 | zhuo2 | qu2 | ye2 | ying2 | yu2 | yun2 | chao2 |
| Tone 3 | a3 | an3 | yao3 | wei3 | zhai3 | yang3 | yan3 | ye3 | yin3 | fa3 |
| Tone 4 | a4 | an4 | ang4 | en4 | er4 | wa4 | wang4 | weng4 | ying4 | yong4 |

| Tone | Syllable 1 | Syllable 2 | Syllable 3 | Syllable 4 | Syllable 5 | Syllable 6 | Syllable 7 | Syllable 8 | Syllable 9 | Syllable 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Tone 1 | gou1 | hao1 | ji1 | nie1 | luo1 | mang1 | nang1 | ao1 | yong1 | wen1 |
| Tone 2 | chao2 | gen2 | tong2 | pu2 | nu2 | ping2 | yan2 | yi2 | cheng2 | zhi2 |
| Tone 3 | ao3 | gan3 | gou3 | gen3 | wa3 | wai3 | wan3 | gu3 | yin3 | yu3 |
| Tone 4 | bai4 | dong4 | he4 | hou4 | ze4 | nan4 | miu4 | wan4 | wei4 | zun4 |

| Tone | Syllable 1 | Syllable 2 | Syllable 3 | Syllable 4 | Syllable 5 | Syllable 6 | Syllable 7 | Syllable 8 | Syllable 9 | Syllable 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Tone 1 | dun1 | fei1 | hai1 | hui1 | jia1 | qiu1 | sui1 | tan1 | xian1 | zha1 |
| Tone 2 | chou2 | da2 | ji2 | jia2 | li2 | nang2 | niang2 | peng2 | ruan2 | gu2 |
| Tone 3 | da3 | dang3 | dian3 | jia3 | lin3 | qian3 | rao3 | shi3 | ta3 | zen3 |
| Tone 4 | cheng4 | cun4 | guan4 | jing4 | niu4 | qing4 | sui4 | xia4 | zhe4 | zuan4 |

| Tone | Syllable 1 | Syllable 2 | Syllable 3 | Syllable 4 | Syllable 5 | Syllable 6 | Syllable 7 | Syllable 8 | Syllable 9 | Syllable 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Tone 1 | cui1 | fu1 | gai1 | gu1 | hei1 | jiao1 | liao1 | man1 | dun1 | zi1 |
| Tone 2 | fen2 | hai2 | hao2 | tuan2 | kang2 | ruo2 | qin2 | nong2 | shao2 | gu2 |
| Tone 3 | chang3 | dai3 | ga3 | nian3 | shui3 | tu3 | xing3 | xue3 | zhuang3 | lao3 |
| Tone 4 | ba4 | bi4 | dao4 | duan4 | ju4 | nie4 | nong4 | shui4 | qu4 | kao4 |

| Tone | Syllable 1 | Syllable 2 | Syllable 3 | Syllable 4 | Syllable 5 | Syllable 6 | Syllable 7 | Syllable 8 | Syllable 9 | Syllable 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Tone 1 | li1 | lao1 | tei1 | meng1 | shang1 | tong1 | pian1 | za1 | zhe1 | peng1 |
| Tone 2 | kuang2 | chu2 | chuang2 | gang2 | hen2 | po2 | jie2 | lun2 | zha2 | zhuo2 |
| Tone 3 | hen3 | mu3 | jiang3 | jue3 | liang3 | meng3 | niao3 | kou3 | tui3 | zhang3 |
| Tone 4 | bao4 | chao4 | gang4 | gui4 | lue4 | nuo4 | qi4 | rui4 | shang4 | shuo4 |

| Tone | Syllable 1 | Syllable 2 | Syllable 3 | Syllable 4 | Syllable 5 | Syllable 6 | Syllable 7 | Syllable 8 | Syllable 9 | Syllable 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Tone 1 | sheng1 | pan1 | dao1 | de1 | kou1 | sen1 | shai1 | shou1 | che1 | deng1 |
| Tone 2 | cen2 | luo2 | mu2 | qie2 | lin2 | shou2 | hong2 | ta2 | tai2 | xu2 |
| Tone 3 | shun3 | guang3 | kua3 | li3 | qiang3 | zun3 | gei3 | nang3 | zhe3 | sun3 |
| Tone 4 | kuai4 | den4 | di4 | guang4 | kong4 | mu4 | sa4 | shen4 | shou4 | cuan4 |

| Tone | Syllable 1 | Syllable 2 | Syllable 3 | Syllable 4 | Syllable 5 | Syllable 6 | Syllable 7 | Syllable 8 | Syllable 9 | Syllable 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Tone 1 | gan1 | gao1 | hong1 | hou1 | lu1 | pa1 | po1 | qi1 | zhen1 | zhi1 |
| Tone 2 | biao2 | cong2 | hang2 | wa2 | luo2 | na2 | nan2 | nian2 | pi2 | qia2 |
| Tone 3 | bang3 | dia3 | dou3 | gai3 | jie3 | ka3 | mai3 | qia3 | zhen3 | bie3 |
| Tone 4 | cuan4 | duo4 | gai4 | jie4 | juan4 | mai4 | qia4 | she4 | shua4 | zhan4 |

| Tone | Syllable 1 | Syllable 2 | Syllable 3 | Syllable 4 | Syllable 5 | Syllable 6 | Syllable 7 | Syllable 8 | Syllable 9 | Syllable 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Tone 1 | bu1 | chang1 | pin1 | dui1 | huang1 | kai1 | kua1 | lin1 | ba1 | zhao1 |
| Tone 2 | bu2 | chui2 | eng2 | ge2 | hu2 | kui2 | min2 | pei2 | ting2 | tou2 |
| Tone 3 | bie3 | chai3 | huang3 | na3 | re3 | bei3 | sheng3 | za3 | zhao3 | zu3 |
| Tone 4 | biao4 | bie4 | cha4 | kun4 | pei4 | pie4 | pu4 | rao4 | tie4 | zong4 |

| Tone | Syllable 1 | Syllable 2 | Syllable 3 | Syllable 4 | Syllable 5 | Syllable 6 | Syllable 7 | Syllable 8 | Syllable 9 | Syllable 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Tone 1 | beng1 | dai1 | he1 | jie1 | jing1 | long1 | bin1 | han1 | qian1 | jiu1 |
| Tone 2 | die2 | huan2 | ke2 | liao2 | nao2 | neng2 | nuo2 | pian2 | qiu2 | qu2 |
| Tone 3 | beng3 | duan3 | pi3 | fou3 | mian3 | fu3 | niu3 | mou3 | tao3 | yao3 |
| Tone 4 | chou4 | guai4 | heng4 | huang4 | jiang4 | mao4 | ba4 | ren4 | xiong4 | xiu4 |

References

1. Pelzl, E. What makes second language perception of Mandarin tones hard? A non-technical review of evidence from psycholinguistic research. Chin. Second Lang. 2019, 54, 51–78.

2. Peng, S.C.; Tomblin, J.B.; Cheung, H.; Lin, Y.S.; Wang, L.S. Perception and production of Mandarin tones in prelingually deaf children with cochlear implants. Ear Hear. 2004, 25, 251–264.

3. Fu, D.; Li, S.; Wang, S. Tone recognition based on support vector machine in continuous Mandarin Chinese. Comput. Sci. 2010, 37, 228–230.

4. Gogoi, P.; Dey, A.; Lalhminghlui, W.; Sarmah, P.; Prasanna, S.R.M. Lexical Tone Recognition in Mizo using Acoustic-Prosodic Features. In Proceedings of the 12th Language Resources and Evaluation Conference, Marseille, France, 11–16 May 2020.

5. Zheng, Y. Phonetic Pitch Detection and Tone Recognition of the Continuous Chinese Three-Syllabic Words. Master’s Thesis, Jilin University, Jilin, China, 2004.

6. Shen, L.J.; Wang, W. Fusion Feature Based Automatic Mandarin Chinese Short Tone Classification. Technol. Acoust. 2018, 37, 167–174.

7. Liu, C.; Ge, F.; Pan, F.; Dong, B.; Yan, Y. A One-Step Tone Recognition Approach Using MSD-HMM for Continuous Speech. In Proceedings of the Interspeech 2009, Brighton, UK, 6–10 September 2009.

8. Chang, K.; Yang, C. A real-time pitch extraction and four-tone recognition system for Mandarin speech. J. Chin. Inst. Eng. 1986, 9, 37–49.

9. Chen, C.; Bunescu, R.; Xu, L.; Liu, C. Tone Classification in Mandarin Chinese using Convolutional Neural Networks. In Proceedings of the Interspeech 2016, San Francisco, CA, USA, 8–12 September 2016.

10. Gao, Q.; Sun, S.; Yang, Y. ToneNet: A CNN Model of Tone Classification of Mandarin Chinese. In Proceedings of the Interspeech 2019, Graz, Austria, 15–19 September 2019.

11. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

12. Quinlan, J.R. Induction of decision trees. Mach. Learn. 1986, 1, 81–106.

13. Biemans, M. Gender Variation in Voice Quality. Ph.D. Thesis, Catholic University of Nijmegen, Nijmegen, The Netherlands, 2000.

14. SCSC: Syllable Corpus of Standard Chinese. Laboratory of Phonetics and Speech Science, Institute of Linguistics, CASS. Available online: http://paslab.phonetics.org.cn/?p=1741 (accessed on 20 March 2023).

15. He, R. Endpoint Detection Algorithm for Speech Signal in Low SNR Environment. Master’s Thesis, Shandong University, Jinan, China, 2018.

16. Li, M. Study on Multi-Feature Fusion Chinese Tone Recognition Algorithm Based on Machine Learning. Master’s Thesis, Shandong University, Jinan, China, 2021.

17. Zhang, W. Study on Acoustic Features and Tone Recognition of Speech Recognition. Master’s Thesis, Shanghai Jiaotong University, Shanghai, China, 2003.

18. Nie, K. Study on Speech Processing Strategy for Chinese-Spoken Cochlear Implants on the Basis of Characteristics of Chinese Language. Ph.D. Thesis, Tsinghua University, Beijing, China, 1999.

19. Taylor, P. Analysis and synthesis of intonation using the Tilt model. J. Acoust. Soc. Am. 2000, 107, 1697–1714.

20. Quang, V.M.; Besacier, L.; Castelli, E. Automatic question detection: Prosodic-lexical features and crosslingual experiments. In Proceedings of the Interspeech 2007, Antwerp, Belgium, 27–31 August 2007.

21. Ma, M.; Evanini, K.; Loukina, A.; Wang, X.; Zechner, K. Using F0 Contours to Assess Nativeness in a Sentence Repeat Task. In Proceedings of the Interspeech 2015, Dresden, Germany, 6–10 September 2015.

22. Robnik-Sikonja, M.; Kononenko, I. Theoretical and Empirical Analysis of ReliefF and RReliefF. Mach. Learn. 2003, 53, 23–69.

23. Onan, A.; Korukoglu, S.; Bulut, H. Ensemble of keyword extraction methods and classifiers in text classification. Expert Syst. Appl. 2016, 57, 232–247.

24. Yan, J.; Tian, L.; Wang, X.; Liu, J.; Li, M. A Mandarin Tone Recognition Algorithm Based on Random Forest and Features Fusion. In Proceedings of the 7th International Conference on Control Engineering and Artificial Intelligence, CCEAI 2023, Sanya, China, 28–30 January 2023.

25. Bittencourt, H.R.; Clarke, R.T. Use of classification and regression trees (CART) to classify remotely-sensed digital images. In Proceedings of the IGARSS 2003, Toulouse, France, 21–25 July 2003.

26. Javed Mehedi Shamrat, F.M.; Ranjan, R.; Hasib, K.M.; Yadav, A.; Siddique, A.H. Performance Evaluation Among ID3, C4.5, and CART Decision Tree Algorithm. In Proceedings of the ICPCSN 2021, Salem, India, 19–20 March 2021.

27. Xie, X.; Liu, H.; Chen, D.; Shu, M.; Wang, Y. Multilabel 12-Lead ECG Classification Based on Leadwise Grouping Multibranch Network. IEEE Trans. Instrum. Meas. 2022, 71, 1–11.

28. Paul, B.; Bera, S.; Paul, R.; Phadikar, S. Bengali Spoken Numerals Recognition by MFCC and GMM Technique. In Proceedings of the Advances in Electronics, Communication and Computing, Odisha, India, 5–6 March 2020.

29. Koolagudi, S.G.; Rastogi, D.; Rao, K.S. Identification of Language using Mel-Frequency Cepstral Coefficients (MFCC). In Proceedings of the International Conference on Modelling Optimization and Computing, Kumarakoil, India, 10–11 April 2012.

30. Hao, Y. Second language acquisition of Mandarin Chinese tones by tonal and non-tonal language speakers. J. Phon. 2012, 40, 269–279.

| Feature Set Name | Source | Number of Features |
|---|---|---|
| S1 | Reference [6] | 22 |
| S2 | Reference [17] | 13 |
| S3 | Reference [18] | 6 |
| S4 | Reference [3] | 16 |
| S5 | Reference [5] | 18 |
| S6 | Reference [19] | 7 |
| S7 | References [20,21] | 12 |
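Summing the set sizes listed in this table confirms the 94 feature parameters that FFS SI combines:

$$
|S_1| + |S_2| + |S_3| + |S_4| + |S_5| + |S_6| + |S_7| = 22 + 13 + 6 + 16 + 18 + 7 + 12 = 94 = |S_{\mathrm{I}}|
$$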

| Number of Decision Trees (T) | 100 | 200 | 300 | 350 | 400 | 450 | 500 |
|---|---|---|---|---|---|---|---|
| ACC (%) | 98.17 | 98.00 | 98.00 | 98.00 | 98.33 | 98.00 | 98.00 |
| AUROC (%) | 98.79 | 98.69 | 98.68 | 98.68 | 98.88 | 98.68 | 98.68 |
| AUPRC (%) | 98.15 | 97.99 | 97.98 | 97.98 | 98.32 | 97.98 | 97.98 |

| Number of Decision Trees (T) | 100 | 200 | 250 | 300 | 350 | 400 | 500 |
|---|---|---|---|---|---|---|---|
| ACC (%) | 97.00 | 97.17 | 97.33 | 97.33 | 97.50 | 97.17 | 97.33 |
| AUROC (%) | 98.02 | 98.12 | 98.23 | 98.23 | 98.35 | 98.12 | 98.23 |
| AUPRC (%) | 97.02 | 97.17 | 97.32 | 97.32 | 97.50 | 97.17 | 97.32 |

| Number of Decision Trees (T) | 100 | 200 | 300 | 350 | 400 | 450 | 500 |
|---|---|---|---|---|---|---|---|
| ACC (%) | 97.50 | 97.50 | 97.67 | 98.00 | 97.83 | 97.83 | 97.67 |
| AUROC (%) | 98.33 | 98.31 | 98.42 | 98.65 | 98.52 | 98.55 | 98.42 |
| AUPRC (%) | 97.47 | 97.48 | 97.63 | 97.97 | 97.80 | 97.81 | 97.63 |
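A sweep over the number of decision trees T, reporting ACC, AUROC, and AUPRC as in the tables above, might be organized as in the sketch below. It uses scikit-learn's `RandomForestClassifier` and synthetic data, so its numbers will not reproduce the tables. Two choices are assumptions, since the text does not state them: AUROC is macro-averaged one-vs-rest over the four tones, and AUPRC is estimated by macro-averaged average precision. Note also that `RandomForestClassifier` samples `max_features="sqrt"` features per split by default and averages tree probabilities, which differs from the procedure described in Section 2.5.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score, average_precision_score, roc_auc_score
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import label_binarize

# Synthetic 4-class stand-in for a fusion feature set (not the SCSC data).
X, y = make_classification(n_samples=600, n_features=15, n_informative=8,
                           n_classes=4, n_clusters_per_class=1, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.2, stratify=y,
                                          random_state=0)
Y_te = label_binarize(y_te, classes=np.unique(y_tr))  # one-vs-rest targets

results = {}
for T in (100, 200, 300, 350, 400, 450, 500):
    rf = RandomForestClassifier(n_estimators=T, random_state=0).fit(X_tr, y_tr)
    proba = rf.predict_proba(X_te)  # columns ordered as rf.classes_
    results[T] = {
        "ACC": 100 * accuracy_score(y_te, rf.predict(X_te)),
        "AUROC": 100 * roc_auc_score(y_te, proba, multi_class="ovr", average="macro"),
        "AUPRC": 100 * average_precision_score(Y_te, proba, average="macro"),
    }

for T, m in results.items():
    print(T, {name: round(value, 2) for name, value in m.items()})
best_T = max(results, key=lambda T: results[T]["ACC"])  # first T with the highest ACC
print("best T by ACC:", best_T)
```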

| Set | SI | SII | SIII |
|---|---|---|---|
| ACC (%) | 98.33 | 97.50 | 98.00 |
| AUROC (%) | 98.88 | 98.35 | 98.65 |
| AUPRC (%) | 98.32 | 97.50 | 97.97 |
| APTPS (s) | 0.0022 | 0.0011 | 0.0007 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yan, J.; Meng, Q.; Tian, L.; Wang, X.; Liu, J.; Li, M.; Zeng, M.; Xu, H. A Mandarin Tone Recognition Algorithm Based on Random Forest and Feature Fusion †. Mathematics 2023, 11, 1879. https://doi.org/10.3390/math11081879
