Novel Parametric Families of with and without Memory Iterative Methods for Multiple Roots of Nonlinear Equations

Abstract

1. Introduction

2. Construction of New Iterative Schemes and Their Convergence Analysis

2.1. Parametric Family of Two-Point without Memory Methods and Its Convergence Analysis

Some Particular Cases of the Weight Function:

2.2. Parametric Families of Two-Point with Memory Methods and Their Convergence Analysis

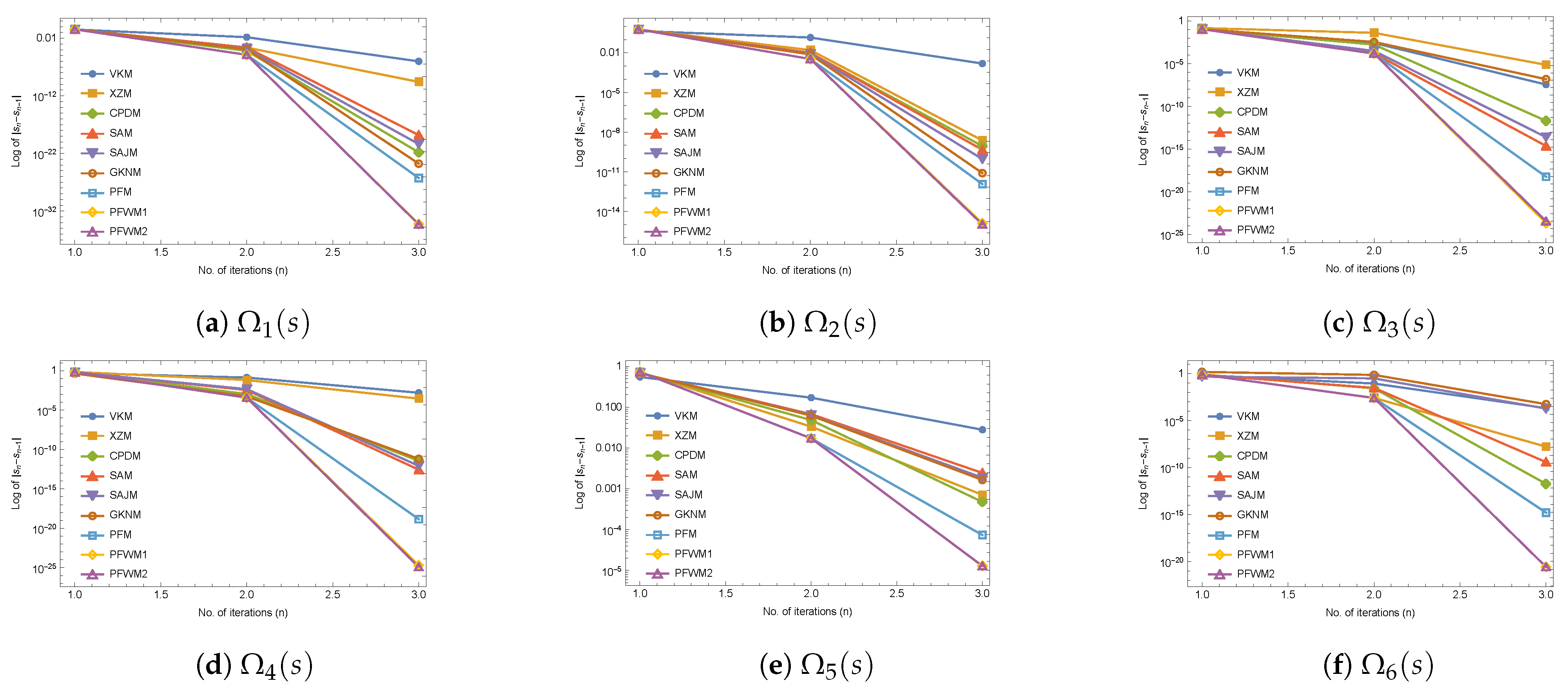

3. Numerical Results

4. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Behl, R.; Salimi, M.; Ferrara, M.; Sharifi, S.; Alharbi, S.K. Some Real-Life Applications of a Newly Constructed Derivative Free Iterative Scheme. Symmetry 2019, 11, 239.

- Chanu, W.H.; Panday, S.; Thangkhenpau, G. Development of Optimal Iterative Methods with Their Applications and Basins of Attraction. Symmetry 2022, 14, 2020.

- Naseem, A.; Rehman, M.A.; Abdeljawad, T.A. Novel Root-Finding Algorithm With Engineering Applications and its Dynamics via Computer Technology. IEEE Access 2022, 10, 19677–19684.

- Panday, S.; Sharma, A.; Thangkhenpau, G. Optimal fourth and eighth-order iterative methods for non-linear equations. J. Appl. Math. Comput. 2023, 69, 953–971.

- Abdul-Hassan, N.Y.; Ali, A.H.; Park, C. A new fifth-order iterative method free from second derivative for solving nonlinear equations. J. Appl. Math. Comput. 2021, 68, 2877–2886.

- Thangkhenpau, G.; Panday, S. Optimal Eight Order Derivative-Free Family of Iterative Methods for Solving Nonlinear Equations. IAENG Int. J. Comput. Sci. 2023, 50, 335–341.

- Kanwar, V.; Cordero, A.; Torregrosa, J.R.; Rajput, M.; Behl, R. A New Third-Order Family of Multiple Root-Findings Based on Exponential Fitted Curve. Algorithms 2023, 16, 156.

- Geum, Y.H.; Kim, Y.I.; Neta, B. A class of two-point sixth-order multiple-zero finders of modified double-Newton type and their dynamics. Appl. Math. Comput. 2015, 270, 387–400.

- Behl, R. A Derivative Free Fourth-Order Optimal Scheme for Applied Science Problems. Mathematics 2022, 10, 1372.

- Sharma, J.R.; Arora, H. A Family of Fifth-Order Iterative Methods for Finding Multiple Roots of Nonlinear Equations. Numer. Anal. Appl. 2021, 14, 168–199.

- Zhou, X.; Liu, B. Iterative methods for multiple roots with memory using self-accelerating technique. J. Comput. Appl. Math. 2023, 428, 115181.

- Kanwar, V.; Bhatia, S.; Kansal, M. New optimal class of higher-order methods for multiple roots, permitting f′(xn) = 0. Appl. Math. Comput. 2013, 222, 564–574.

- Zafar, F.; Cordero, A.; Torregrosa, J.R. Stability analysis of a family of optimal fourth-order methods for multiple roots. Numer. Algor. 2019, 81, 947–981.

- Chanu, W.H.; Panday, S.; Dwivedi, M. New Fifth Order Iterative Method for Finding Multiple Root of Nonlinear Function. Eng. Lett. 2021, 29, 942–947.

- Singh, T.; Arora, H.; Jäntschi, L. A Family of Higher Order Scheme for Multiple Roots. Symmetry 2023, 15, 228.

- Sharma, J.R.; Kumar, D.; Cattani, C. An Efficient Class of Weighted-Newton Multiple Root Solvers with Seventh Order Convergence. Symmetry 2019, 11, 1054.

- Zafar, F.; Cordero, A.; Rizvi, D.E.Z.; Torregrosa, J.R. An optimal eighth order derivative free multiple root finding scheme and its dynamics. AIMS Math. 2023, 8, 8478–8503.

- Petković, M.S. Remarks on “On a general class of multipoint root-finding methods of high computational efficiency”. SIAM J. Numer. Anal. 2011, 49, 1317–1319.

| Methods | n | ∣∣ | ∣∣ | ∣∣ | COC | |
|---|---|---|---|---|---|---|
| VKM | 6 | | | | 3.0000 | |
| XZM | 6 | | | | 1.9972 | |
| CPDM | 5 | | | | 5.0000 | |
| SAM | 5 | | | | 5.0000 | |
| SAJM | 5 | | | | 5.0000 | |
| GKNM | 5 | | | | 6.0000 | |
| PFM | 5 | | | | 5.0000 | |
| PFWM1 | 4 | | | | 7.0000 | |
| PFWM2 | 4 | | | | 7.0000 | |

| Methods | n | ∣∣ | ∣∣ | ∣∣ | COC | |
|---|---|---|---|---|---|---|
| VKM | 7 | | | | 3.0000 | |
| XZM | 6 | | | | 3.0017 | |
| CPDM | 5 | | | | 5.0000 | |
| SAM | 5 | | | | 5.0000 | |
| SAJM | 5 | | | | 5.0000 | |
| GKNM | 6 | | | | 6.0000 | |
| PFM | 5 | | | | 5.0000 | |
| PFWM1 | 5 | | | | 7.0000 | |
| PFWM2 | 5 | | | | 7.0000 | |

| Methods | n | ∣∣ | ∣∣ | ∣∣ | COC | |
|---|---|---|---|---|---|---|
| VKM | 6 | | | | 3.0000 | |
| XZM | 7 | | | | 2.9992 | |
| CPDM | 5 | | | | 5.0000 | |
| SAM | 5 | | | | 3.9991 | |
| SAJM | 5 | | | | 3.9991 | |
| GKNM | 6 | 0.11076 | | | 7.4893 | |
| PFM | 5 | 0.10931 | | | 5.0000 | |
| PFWM1 | 4 | | | | 6.9999 | |
| PFWM2 | 4 | | | | 7.0000 | |

| Methods | n | ∣∣ | ∣∣ | ∣∣ | COC | |
|---|---|---|---|---|---|---|
| VKM | 7 | | | | 3.0000 | |
| XZM | 6 | | | | 2.0024 | |
| CPDM | 5 | | | | 5.0000 | |
| SAM | 5 | | | | 3.9978 | |
| SAJM | 5 | | | | 3.9978 | |
| GKNM | 6 | | | | 3.0028 | |
| PFM | 5 | | | | 5.0000 | |
| PFWM1 | 4 | | | | 7.0000 | |
| PFWM2 | 4 | | | | 7.0000 | |

| Methods | n | ∣∣ | ∣∣ | ∣∣ | COC | |
|---|---|---|---|---|---|---|
| VKM | 9 | | | | 3.0000 | |
| XZM | 6 | | | | 5.0000 | |
| CPDM | 6 | | | | 6.0000 | |
| SAM | 6 | | | | 5.0000 | |
| SAJM | 6 | | | | 5.0000 | |
| GKNM | 6 | | | | 6.0000 | |
| PFM | 6 | | | | 5.0000 | |
| PFWM1 | 5 | | | | 7.0000 | |
| PFWM2 | 5 | | | | 7.0000 | |

| Methods | n | ∣∣ | ∣∣ | ∣∣ | COC | |
|---|---|---|---|---|---|---|
| VKM | 7 | | | | 3.0000 | |
| XZM | 6 | | | | 1.9986 | |
| CPDM | 5 | | | | 6.0000 | |
| SAM | 5 | | | | 5.0000 | |
| SAJM | 6 | | | | 5.0000 | |
| GKNM | 6 | | | | 6.0000 | |
| PFM | 5 | | | | 5.0000 | |
| PFWM1 | 5 | | | | 7.0000 | |
| PFWM2 | 5 | | | | 7.0000 | |
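The COC column in the tables above reports a computational order of convergence. The formula the authors used did not survive in this copy, so the following is only a minimal sketch that assumes the common estimate based on three consecutive differences of iterates, COC ≈ ln|d_{n+1}/d_n| / ln|d_n/d_{n−1}| with d_k = x_k − x_{k−1}. The test function `f`, its multiplicity `m = 2`, the starting point, and the helper names `coc` and `modified_newton` are illustrative assumptions. The iteration shown is the classical modified Newton method, not any of the methods compared in the tables.

```python
from decimal import Decimal, getcontext

# Work with many digits so the tiny differences between late iterates
# are not lost to rounding (an assumption for this sketch).
getcontext().prec = 200


def coc(xs):
    """Estimate the order of convergence from the last four iterates.

    Uses ln|d3/d2| / ln|d2/d1|, where d1, d2, d3 are the last three
    consecutive differences x_k - x_{k-1}.
    """
    d1, d2, d3 = (abs(xs[i] - xs[i - 1]) for i in (-3, -2, -1))
    return float((d3 / d2).ln() / (d2 / d1).ln())


def modified_newton(f, df, m, x0, steps):
    """Classical modified Newton iteration x - m*f(x)/f'(x) for a root
    of known multiplicity m; returns the list of all iterates."""
    xs = [Decimal(x0)]
    for _ in range(steps):
        x = xs[-1]
        xs.append(x - m * f(x) / df(x))
    return xs


# Hypothetical test problem: f(x) = (x^2 - 2)^2 has a double root at sqrt(2).
f = lambda x: (x * x - 2) ** 2
df = lambda x: 4 * x * (x * x - 2)

xs = modified_newton(f, df, 2, "1.5", 6)
order = coc(xs)
print(round(order, 4))
```

For this test problem the estimate should come out close to 2, the known order of modified Newton's method when the multiplicity is supplied. High-order methods such as those in the tables reduce the error very quickly, so estimating their order in practice requires multi-precision arithmetic like the 200-digit context used here.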

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Thangkhenpau, G.; Panday, S.; Mittal, S.K.; Jäntschi, L. Novel Parametric Families of with and without Memory Iterative Methods for Multiple Roots of Nonlinear Equations. Mathematics 2023, 11, 2036. https://doi.org/10.3390/math11092036
