Reconstructing the Colors of Underwater Images Based on the Color Mapping Strategy

Abstract

1. Introduction

- (1) We propose an adaptive color mapping (ACM) module that analyzes the causes of color degradation in underwater images and focuses on recovering severely degraded areas. It combines color constancy theory with deep neural networks to improve underwater image restoration.

- (2) We introduce a multi-head reinforcement (MHR) module that preserves the interaction between local features and channel information and extracts pixel-level cross-channel context. This improves the contrast of the enhanced image and addresses low contrast and blurred details.

- (3) Our CM-Net achieves state-of-the-art performance in both visual quality and quantitative metrics when compared with other underwater image enhancement (UIE) methods.
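To make the color constancy idea mentioned in contribution (1) concrete, the sketch below shows the classic gray-world white balance. This is a swapped-in textbook technique used only for illustration and is not the ACM module. The function name `gray_world_balance` and the list-of-tuples pixel layout are assumptions made for this example.

```python
def gray_world_balance(pixels):
    """Scale each channel so the three channel means match (up to clipping).

    `pixels` is a list of (r, g, b) tuples with values in [0, 255]
    (hypothetical layout chosen for this sketch).
    """
    if not pixels:
        return []
    n = len(pixels)
    # Mean of each color channel over the whole image.
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    # Gray-world assumption: the scene average should be achromatic.
    gray = sum(means) / 3.0
    # Guard against an all-zero channel to avoid dividing by zero.
    gains = [gray / m if m > 0 else 1.0 for m in means]
    return [
        tuple(min(255.0, p[c] * gains[c]) for c in range(3))
        for p in pixels
    ]


# A bluish-green cast: the red channel is much weaker than green and blue.
balanced = gray_world_balance([(20, 120, 160), (40, 100, 140)])
```

Underwater scenes often violate the gray-world assumption, which is one reason a fixed rule like this is only a baseline for learned color mapping.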

2. Related Works

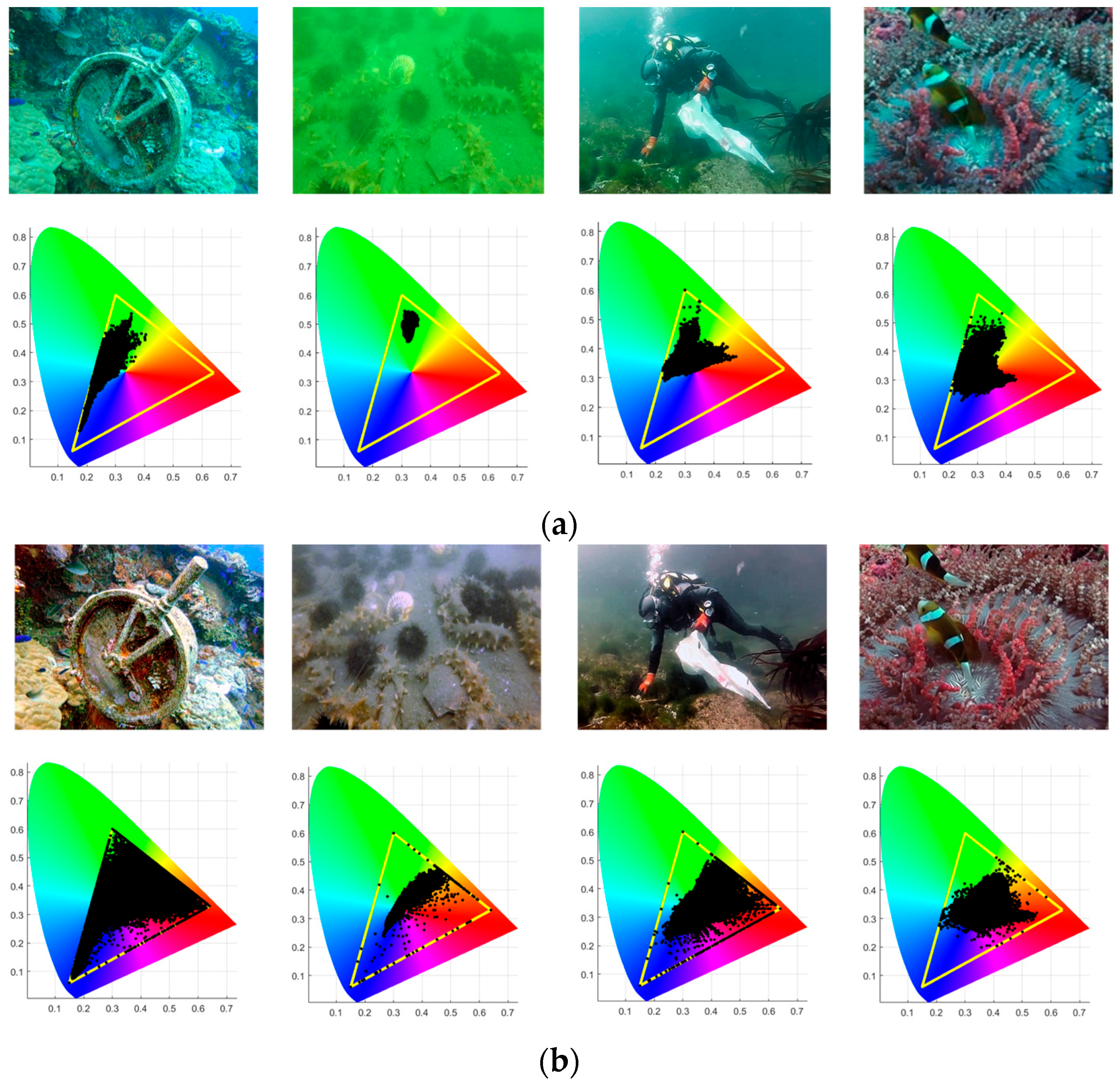

2.1. Color Features of Underwater Images

2.2. Gamut Mapping

2.3. UIE Methods

2.3.1. Traditional Methods

2.3.2. Deep Learning Models

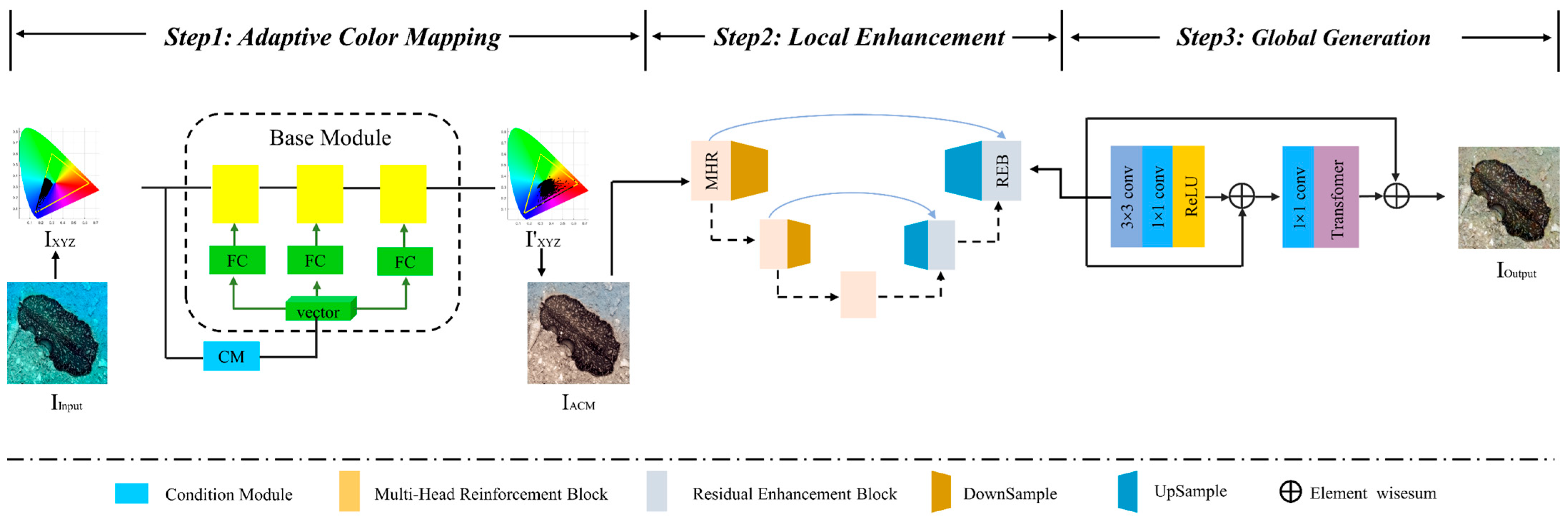

3. The Proposed Method

3.1. Adaptive Color Mapping

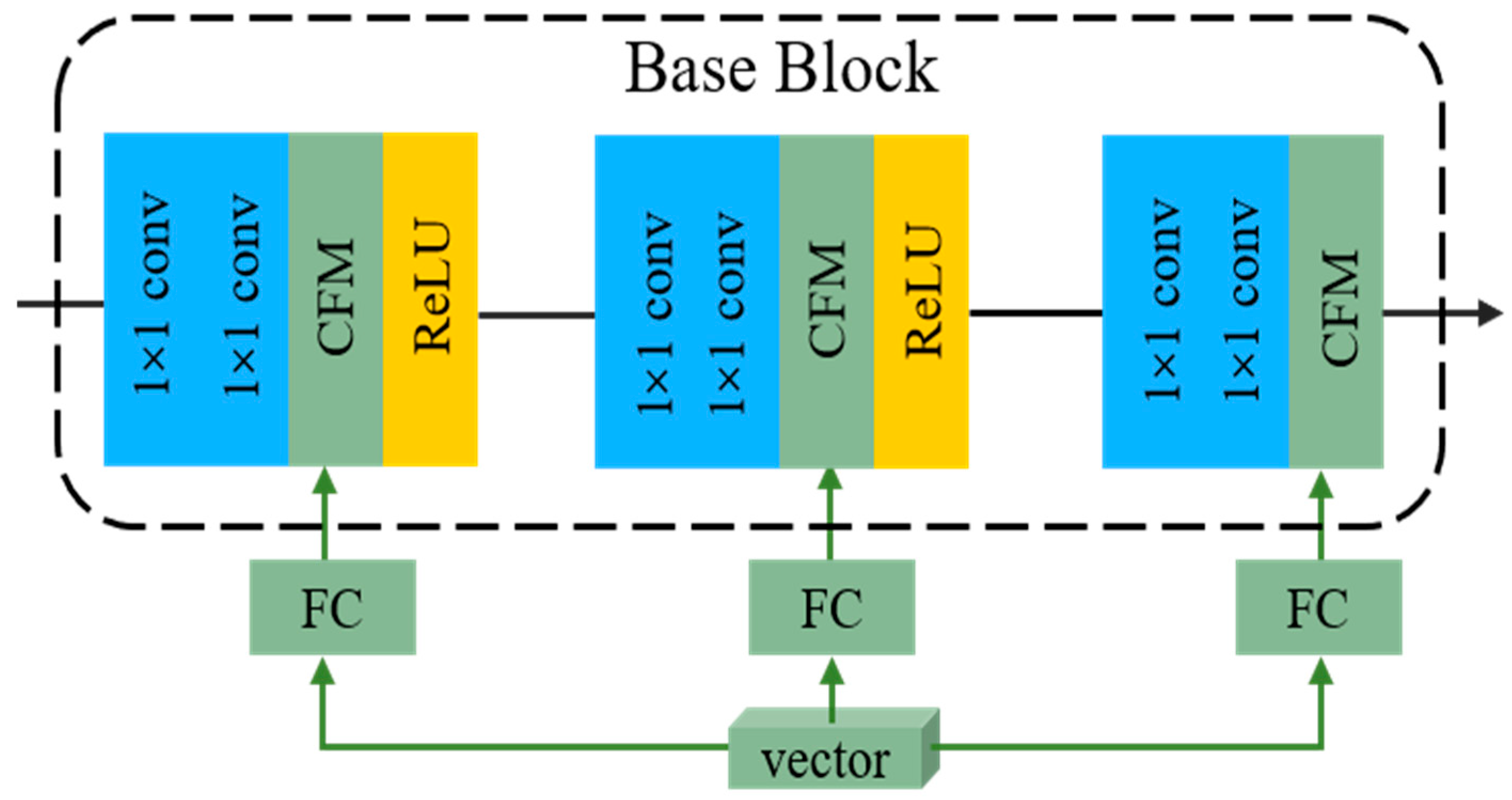

3.1.1. Base Module

3.1.2. Conditional Module

3.2. Local Enhancement

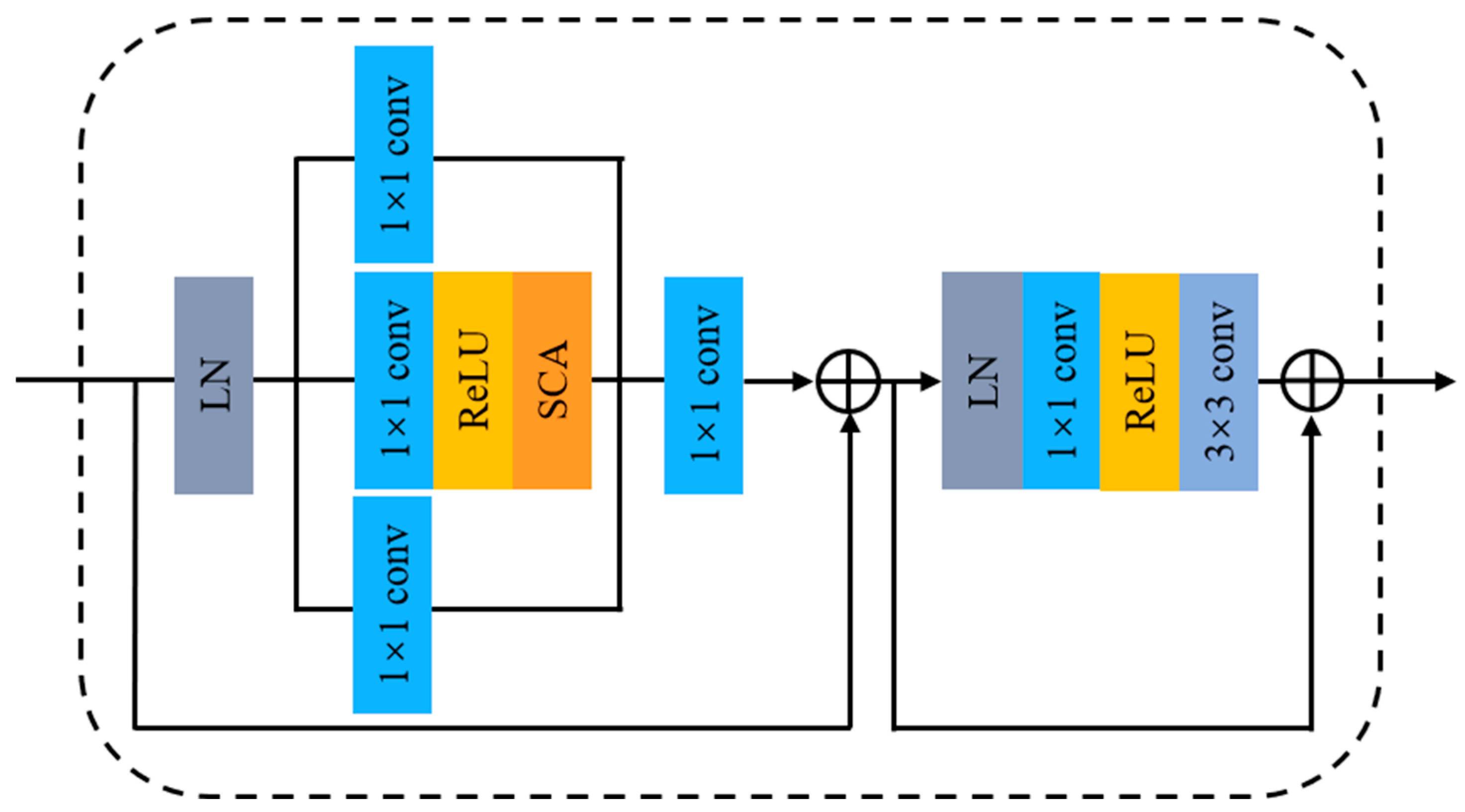

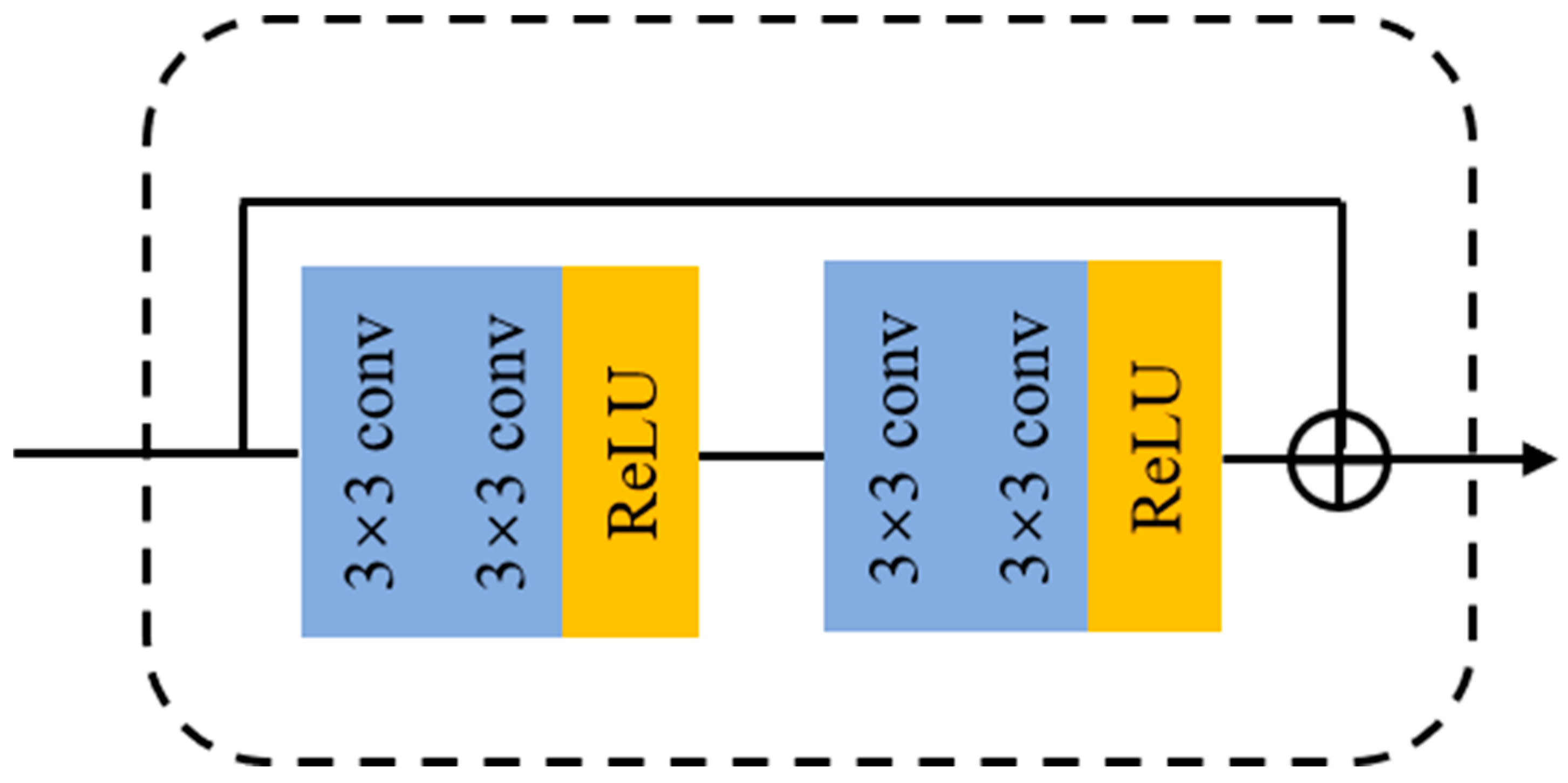

3.2.1. Multi-Head Reinforcement Block

3.2.2. Residual Enhancement Block

3.3. Global Generation

3.4. Loss Function

4. Experiments and Results

4.1. Implementation Details

4.2. Experimental Settings

4.2.1. Datasets

4.2.2. Evaluation Metrics
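PSNR is one of the full-reference metrics reported in the result tables. As a reminder of how it is commonly computed, here is a minimal sketch assuming 8-bit images given as flat sequences of pixel values. The function name `psnr` and the `max_value` parameter are illustrative choices, not taken from the paper's code.

```python
import math


def psnr(reference, enhanced, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images.

    Both images are flat sequences of pixel values (assumed layout).
    Higher values mean the enhanced image is closer to the reference.
    """
    if len(reference) != len(enhanced) or not reference:
        raise ValueError("images must be non-empty and of equal size")
    # Mean squared error over all pixel values.
    mse = sum((r - e) ** 2 for r, e in zip(reference, enhanced)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# A uniform error of 10 gray levels on an 8-bit image.
value = psnr([100, 100, 100, 100], [110, 110, 110, 110])
```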

4.2.3. Comparison Methods

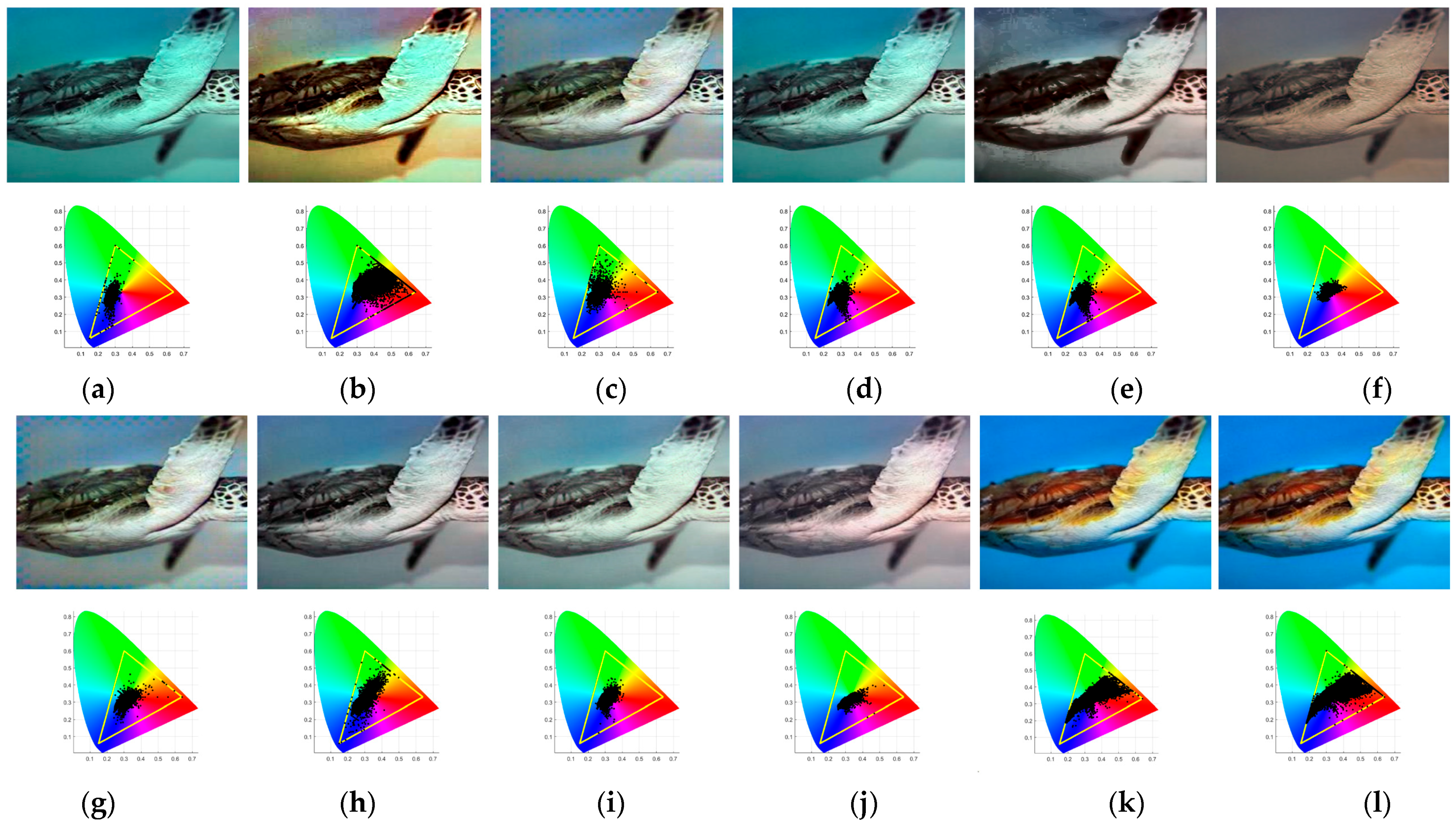

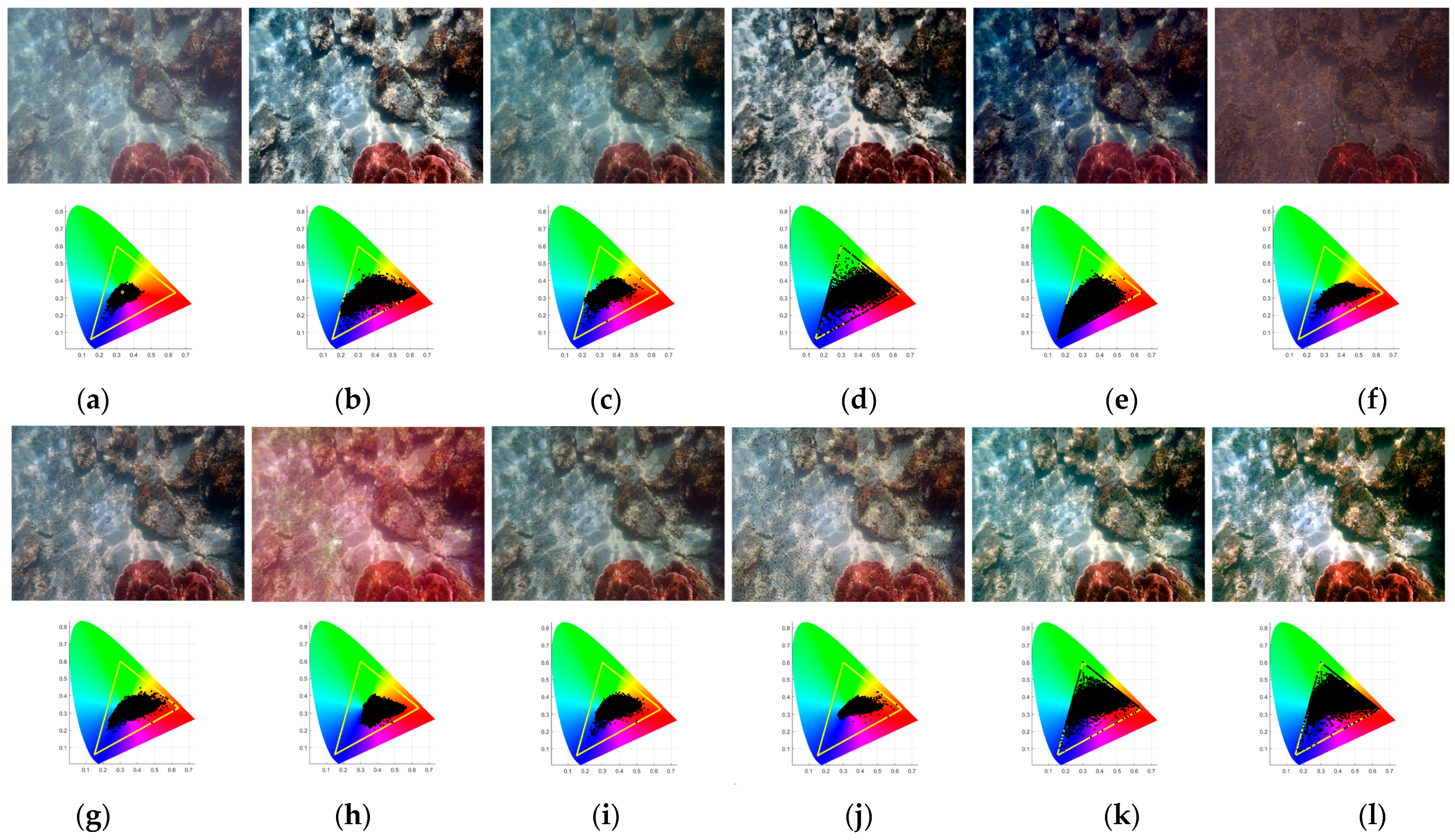

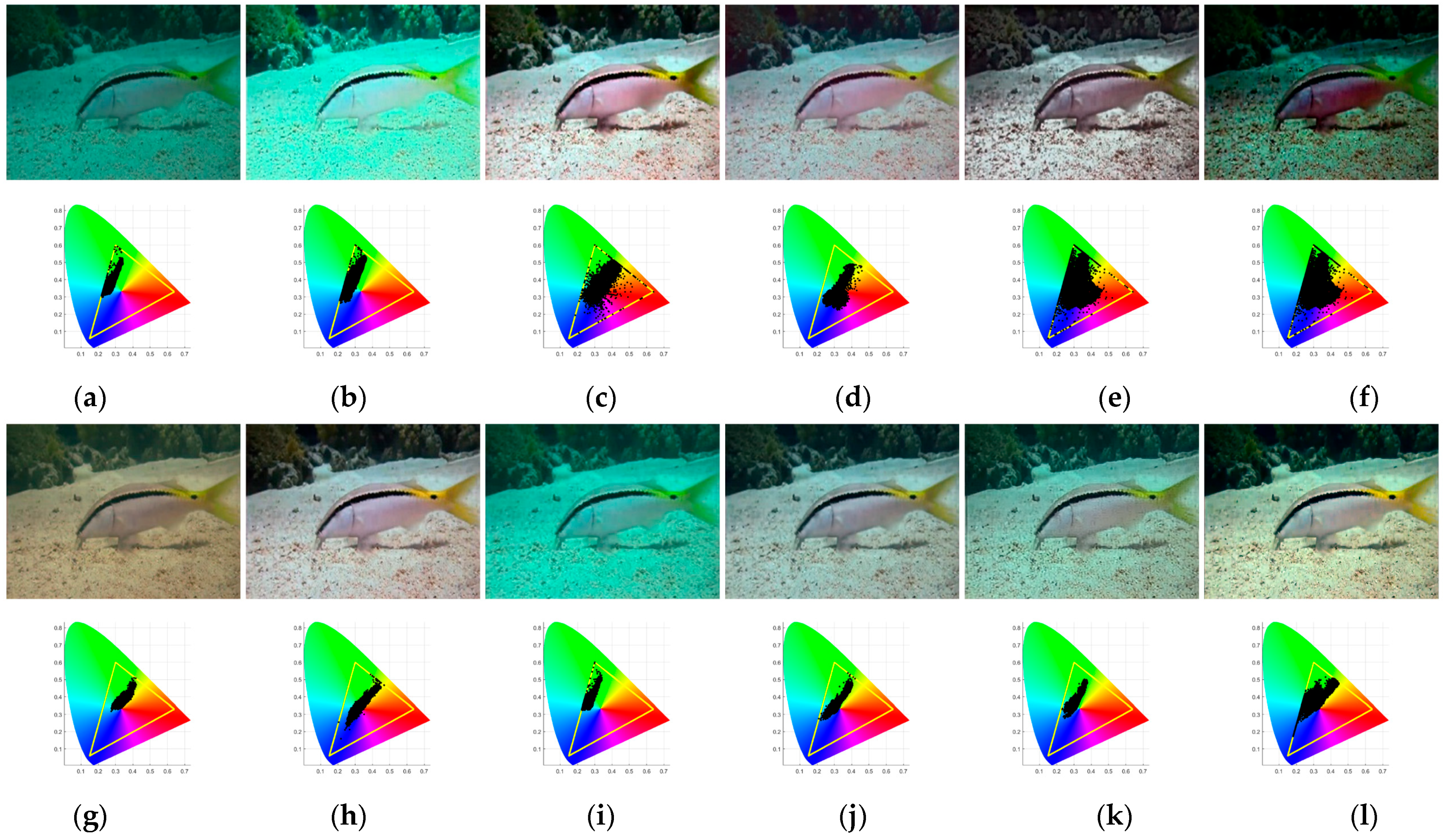

4.3. Visual Comparisons

4.4. Quantitative Comparisons

4.5. Ablation Study

- We assessed the composition and ordering of the modules. ACC, ACC + LE, and ACC + LE + GG denote combinations of the adaptive color mapping, local enhancement, and global generation networks, respectively. CM-Net is the complete model consisting of the three networks ACC, LE, and GG. In the LE + ACC + GG model, the local enhancement network is placed in front of the ACC network.

- We assessed the number of MHRBs in LE. The variants 3 MHRB and 5 MHRB use three and five MHRBs, respectively, in the down-sampling process of LE. CM-Net uses four MHRBs.

- As presented in Table 4, CM-Net achieves the best quantitative performance across two testing datasets when compared with the ablated models, which supports the chosen combination of the ACC, LE, and GG networks.

- We experimented with MHRB numbers of {3, 4, 5} in the encoder section. As shown in Table 5, four MHRBs are the most beneficial to CM-Net. The results indicate that simply adding more MHRBs does not bring additional improvement in underwater image enhancement.

- As shown in Figure 14 and Figure 15, images generated by the ACC module alone have insufficient brightness, and when the LE network is placed in front of the ACC network, the overall color of the result is inaccurate. Five MHRBs may cause the network to overfit, so the color quality deteriorates instead. The overall color of CM-Net's results is close to that of the reference image, because the LE network helps reconstruct local detail and GG boosts global brightness. Each of the three modules has its own function in the enhancement process, and their combination improves the overall performance of the network.
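The ablation claims can be checked directly against the reported numbers. The sketch below copies the values from the two ablation tables and tests whether a single variant wins on all three metrics, using the standard reading that higher PSNR and SSIM are better and lower ΔE00 is better. The helper name `best_variant` is hypothetical.

```python
# (PSNR in dB, SSIM, ΔE00) copied from the module-combination ablation table.
module_ablation = {
    "ACC": (20.48, 0.867, 10.10),
    "ACC + LE": (21.43, 0.893, 8.98),
    "LE + ACC + GG": (21.90, 0.893, 8.67),
    "CM-Net": (22.71, 0.906, 7.98),
}

# (PSNR in dB, SSIM, ΔE00) copied from the MHRB-count ablation table.
block_ablation = {
    "3 MHRB": (21.61, 0.882, 9.27),
    "5 MHRB": (22.21, 0.899, 8.69),
    "CM-Net": (22.71, 0.906, 7.98),
}


def best_variant(results):
    """Return the variant that is best on every metric, or None if none is."""
    best_psnr = max(results, key=lambda name: results[name][0])
    best_ssim = max(results, key=lambda name: results[name][1])
    best_delta_e = min(results, key=lambda name: results[name][2])
    if best_psnr == best_ssim == best_delta_e:
        return best_psnr
    return None


print(best_variant(module_ablation))
print(best_variant(block_ablation))
```

In both tables the full CM-Net configuration is the only variant that leads on all three metrics, which matches the conclusions drawn in the list above.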

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Method | PSNR | SSIM | UCIQE | UIQM | ΔE00 |
|---|---|---|---|---|---|
| GDCP | 11.71 | 0.636 | 0.467 | 2.30 | 23.53 |
| Fusion | 16.65 | 0.790 | 0.446 | 3.12 | 16.11 |
| Red | 17.87 | 0.851 | 0.424 | 5.15 | 16.23 |
| Retinex | 16.16 | 0.728 | 0.411 | 4.07 | 16.72 |
| UWCNN | 18.19 | 0.821 | 0.361 | 6.47 | 13.88 |
| W-Net | 15.87 | 0.819 | 0.410 | 3.01 | 19.38 |
| F-GAN | 19.93 | 0.785 | 0.395 | 4.42 | 12.65 |
| U-Color | 19.11 | 0.849 | 0.380 | 6.16 | 13.57 |
| U-Shape | 19.18 | 0.857 | 0.386 | 5.72 | 13.86 |
| CM-Net | 22.66 | 0.908 | 0.369 | 5.50 | 10.31 |

| Method | PSNR | SSIM | UCIQE | UIQM | ΔE00 |
|---|---|---|---|---|---|
| GDCP | 13.27 | 0.735 | 0.44 | 3.67 | 17.77 |
| Fusion | 20.50 | 0.842 | 0.48 | 4.46 | 8.84 |
| Red | 17.47 | 0.814 | 0.41 | 5.31 | 11.93 |
| Retinex | 17.75 | 0.759 | 0.44 | 5.09 | 14.08 |
| UWCNN | 13.83 | 0.680 | 0.31 | 7.01 | 16.30 |
| W-Net | 19.35 | 0.845 | 0.48 | 6.10 | 14.71 |
| F-GAN | 17.32 | 0.734 | 0.40 | 5.14 | 13.69 |
| U-Color | 20.99 | 0.870 | 0.37 | 5.58 | 7.98 |
| U-Shape | 21.32 | 0.800 | 0.38 | 6.33 | 7.88 |
| CM-Net | 22.71 | 0.906 | 0.45 | 5.30 | 7.78 |

| Method | UIQM | UCIQE |
|---|---|---|
| GDCP | 5.03 | 0.42 |
| Fusion | 5.20 | 0.48 |
| Red | 6.19 | 0.42 |
| Retinex | 6.00 | 0.42 |
| UDCP | 1.37 | 0.48 |
| UWCNN | 7.01 | 0.32 |
| W-Net | 6.34 | 0.41 |
| F-GAN | 4.56 | 0.39 |
| U-Color | 6.84 | 0.38 |
| U-Shape | 4.71 | 0.39 |
| CM-Net | 5.29 | 0.43 |

| Component | PSNR | SSIM | ΔE00 |
|---|---|---|---|
| ACC | 20.48 | 0.867 | 10.10 |
| ACC + LE | 21.43 | 0.893 | 8.98 |
| LE + ACC + GG | 21.90 | 0.893 | 8.67 |
| CM-Net | 22.71 | 0.906 | 7.98 |

| Component | PSNR | SSIM | ΔE00 |
|---|---|---|---|
| 3 MHRB | 21.61 | 0.882 | 9.27 |
| 5 MHRB | 22.21 | 0.899 | 8.69 |
| CM-Net | 22.71 | 0.906 | 7.98 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wu, S.; Sun, B.; Yang, X.; Han, W.; Tan, J.; Gao, X. Reconstructing the Colors of Underwater Images Based on the Color Mapping Strategy. Mathematics 2024, 12, 1933. https://doi.org/10.3390/math12131933
