Joint UAV Deployment and Task Offloading in Large-Scale UAV-Assisted MEC: A Multiobjective Evolutionary Algorithm

Abstract

1. Introduction

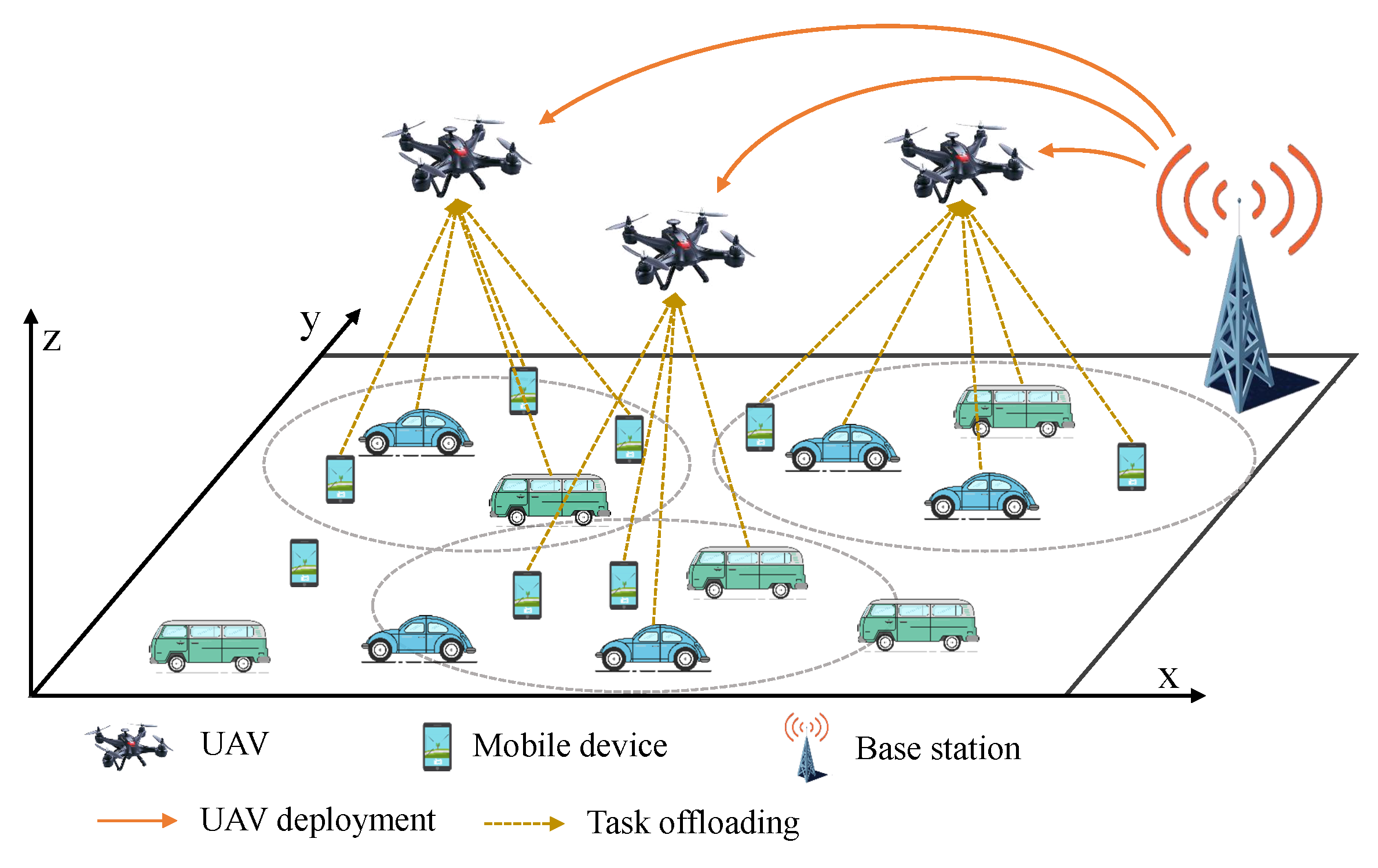

- This paper studies joint UAV deployment and task offloading in large-scale UAV-assisted MEC and formulates it as a large-scale multiobjective optimization problem with two objectives (energy consumption and user satisfaction) subject to several constraints.

- This paper proposes an efficient multiobjective optimization evolutionary algorithm, called LDOMO, to solve the formulated problem. Specifically, LDOMO uses two evolutionary strategies to explore two solution spaces with different characteristics, which accelerates convergence and maintains population diversity, and it designs two local search optimizers to improve solution quality.

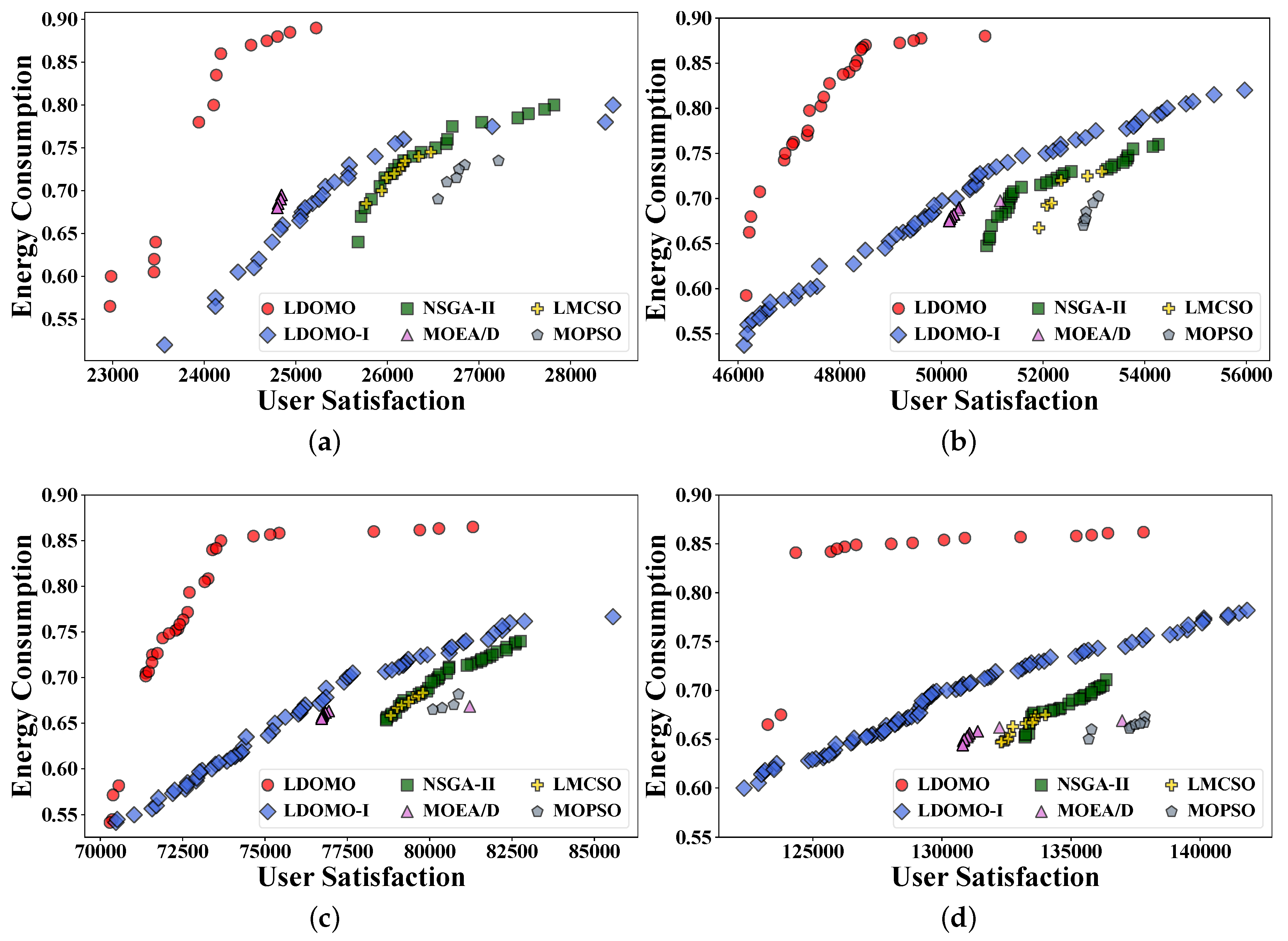

- Extensive simulation results show that LDOMO outperforms several representative multiobjective optimization algorithms in terms of energy consumption and user satisfaction.
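The two objectives named in the contributions above can be compared through Pareto dominance, the usual ordering in multiobjective evolutionary algorithms. The sketch below is a minimal illustration and not LDOMO itself. It assumes energy consumption is minimized and user satisfaction is maximized; the function names `dominates` and `non_dominated` and the sample values are hypothetical.

```python
from typing import List, Tuple

# Each candidate solution is summarized as (energy_consumption, user_satisfaction).
# Assumption: energy is minimized, satisfaction is maximized.
Objectives = Tuple[float, float]


def dominates(a: Objectives, b: Objectives) -> bool:
    """Return True if a is no worse than b in both objectives and better in at least one."""
    no_worse = a[0] <= b[0] and a[1] >= b[1]
    strictly_better = a[0] < b[0] or a[1] > b[1]
    return no_worse and strictly_better


def non_dominated(points: List[Objectives]) -> List[Objectives]:
    """Keep the points that no other point dominates (simple O(n^2) scan)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical objective values, for illustration only.
candidates = [(100.0, 0.80), (120.0, 0.90), (130.0, 0.85), (100.0, 0.70)]
front = non_dominated(candidates)
print(front)
```

Here the third candidate is dominated by the second and the fourth by the first, so only the first two remain as trade-off solutions.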

2. Background and Related Work

2.1. Large-Scale Multiobjective Optimization

2.2. Related Work and Our Motivations

3. System Model and Problem Formulation

3.1. System Model and Assumption

3.2. Task Offloading Model

3.3. UAV Hover Model

3.4. Problem Formulation

4. Optimization Method

4.1. The Overall Framework

| Algorithm 1 LDOMO |


4.2. Population Initialization

4.3. Competition Phase

| Algorithm 2 Competition Phase |


4.4. Evolution Phase

4.5. Optimization Phase

| Algorithm 3 Evolution Phase |


| Algorithm 4 Optimization Phase |

5. Simulation Results

5.1. Environmental Setup

5.2. Experimental Setup

5.3. Simulation Results

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Symbol | Definition |
|---|---|
| | the set of mobile devices |
| I | the number of mobile devices |
| | the set of UAVs |
| J | the number of UAVs |
| | the task data size of |
| | the CPU cycles of |
| | the maximum admissible delay of |
| | the computational resource of |
| | the storage resource of |
| | the spatial location of |
| | the spatial location of |
| | whether the task of is offloaded to |
| | the channel bandwidth between and |
| | the transmit power |
| | the hovering power of the UAV |
| | whether the task of can be completed |

| Parameter | Value |
|---|---|
| Distribution area of UAVs and mobile devices | 1000 × 1000 m² |
| Number of mobile devices (I) | 100, 200, …, 1000 |
| Number of UAVs (J) | 50, 100, …, 500 |
| Transmission data of one task | [0.5, 2] Mbits |
| CPU cycles to compute one bit | [100, 1000] CPU cycles |
| Maximum delay of one task | [100, 200] ms |
| Transmission bandwidth | 100 Mbps |
| Computation capabilities of UAVs | GHz |
| Transmit power | 1 W |
| Hovering power of UAVs | 1 kW |
| Noise power | −117 dB |
| Central frequency | 2 GHz |
| Minimum allowable distance of UAVs | 4 m |
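The parameter ranges above can be turned into a random test scenario. The following is a minimal sketch under stated assumptions: values are drawn uniformly from the listed ranges (the table does not state the distribution), positions are 2D points in the 1000 × 1000 m² area with altitude omitted, and the 4 m minimum UAV separation is not enforced. The names `Task` and `sample_scenario` are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple

AREA_SIDE_M = 1000.0  # side length of the 1000 x 1000 m^2 area


@dataclass
class Task:
    data_bits: float       # task data size in bits, from [0.5, 2] Mbits
    cycles_per_bit: float  # CPU cycles per bit, from [100, 1000]
    max_delay_s: float     # maximum delay in seconds, from [100, 200] ms


def sample_scenario(
    num_devices: int, num_uavs: int, seed: int = 0
) -> Tuple[List[Tuple[float, float]], List[Tuple[float, float]], List[Task]]:
    """Draw one scenario; uniform sampling is an assumption, not from the source."""
    rng = random.Random(seed)

    def random_point() -> Tuple[float, float]:
        return (rng.uniform(0.0, AREA_SIDE_M), rng.uniform(0.0, AREA_SIDE_M))

    devices = [random_point() for _ in range(num_devices)]
    uavs = [random_point() for _ in range(num_uavs)]
    # One task per mobile device.
    tasks = [
        Task(
            data_bits=rng.uniform(0.5e6, 2.0e6),
            cycles_per_bit=rng.uniform(100.0, 1000.0),
            max_delay_s=rng.uniform(0.1, 0.2),
        )
        for _ in range(num_devices)
    ]
    return devices, uavs, tasks


# Smallest setting in the table: I = 100 devices, J = 50 UAVs.
devices, uavs, tasks = sample_scenario(num_devices=100, num_uavs=50)
print(len(devices), len(uavs), len(tasks))
```

Fixing the seed keeps scenarios reproducible across the compared algorithms, which matters when results are averaged over repeated runs.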

| Algorithm | Parameters | Common Parameters |
|---|---|---|
| LDOMO, LDOMO-I | = 0.1, e = 20, K = 40 | Population Size = 100, Iterations = 200, Runs = 20 |
| NSGA-II | = 0.9, = 0.08 | |
| MOEA/D | T = 20, = 0.9, = 0.8, = 0.01 | |
| LMCSO | = 0.1 | |
| MOPSO | w = 0.4, = 2.0, = 2.0 | |

| I (J = 500) | Energy: LDOMO | Energy: NSGA-II | Energy: MOEA/D | Energy: LMCSO | Energy: MOPSO | Satisfaction: LDOMO | Satisfaction: NSGA-II | Satisfaction: MOEA/D | Satisfaction: LMCSO | Satisfaction: MOPSO |
|---|---|---|---|---|---|---|---|---|---|---|
| 100 | 56,970.1 | 57,431.5 | 57,002.0 | 57,853.4 | 58,077.6 | 0.888 | 0.830 | 0.720 | 0.770 | 0.760 |
| 200 | 63,414.9 | 65,684.9 | 64,640.7 | 65,974.2 | 66,650.8 | 0.884 | 0.790 | 0.700 | 0.740 | 0.730 |
| 300 | 71,332.8 | 74,111.9 | 72,910.3 | 74,336.2 | 75,317.4 | 0.860 | 0.743 | 0.697 | 0.690 | 0.687 |
| 400 | 77,574.7 | 81,653.4 | 80,162.3 | 81,585.6 | 82,668.7 | 0.880 | 0.772 | 0.700 | 0.702 | 0.702 |
| 500 | 84,481.2 | 90,214.1 | 89,105.9 | 90,339.2 | 91,916.8 | 0.876 | 0.730 | 0.688 | 0.690 | 0.686 |
| 600 | 92,729.7 | 98,438.2 | 97,719.3 | 98,768.7 | 100,130.7 | 0.867 | 0.722 | 0.673 | 0.683 | 0.685 |
| 700 | 102,604.7 | 107,783.9 | 105,357.2 | 106,677.9 | 109,443.0 | 0.867 | 0.713 | 0.667 | 0.690 | 0.680 |
| 800 | 103,946.7 | 115,604.6 | 113,217.9 | 115,313.4 | 117,403.9 | 0.867 | 0.712 | 0.670 | 0.680 | 0.677 |
| 900 | 112,344.3 | 124,771.6 | 122,638.6 | 124,138.6 | 126,358.2 | 0.863 | 0.710 | 0.660 | 0.677 | 0.677 |
| 1000 | 123,255.4 | 133,216.1 | 130,804.1 | 132,296.6 | 135,684.6 | 0.862 | 0.711 | 0.669 | 0.675 | 0.673 |
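To read the table above as relative differences, the short sketch below computes the energy reduction and the user-satisfaction gain of LDOMO against each baseline for the I = 1000, J = 500 row. The numbers are copied from that row; the helper names `energy_reduction` and `satisfaction_gain` are hypothetical, and the table does not state the energy unit, so only ratios are reported.

```python
# Row I = 1000, J = 500: (energy consumption, user satisfaction) per algorithm.
results = {
    "LDOMO": (123255.4, 0.862),
    "NSGA-II": (133216.1, 0.711),
    "MOEA/D": (130804.1, 0.669),
    "LMCSO": (132296.6, 0.675),
    "MOPSO": (135684.6, 0.673),
}


def energy_reduction(baseline: str, proposed: str = "LDOMO") -> float:
    """Fraction of the baseline's energy saved by the proposed algorithm."""
    base_energy = results[baseline][0]
    proposed_energy = results[proposed][0]
    return (base_energy - proposed_energy) / base_energy


def satisfaction_gain(baseline: str, proposed: str = "LDOMO") -> float:
    """Absolute difference in user satisfaction (proposed minus baseline)."""
    return results[proposed][1] - results[baseline][1]


for name in results:
    if name != "LDOMO":
        print(
            f"{name}: energy reduction {energy_reduction(name):.1%}, "
            f"satisfaction gain {satisfaction_gain(name):+.3f}"
        )
```

The same two helpers can be applied to any other row by swapping the values in `results`.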

| J (I = 1000) | Energy: LDOMO | Energy: NSGA-II | Energy: MOEA/D | Energy: LMCSO | Energy: MOPSO | Satisfaction: LDOMO | Satisfaction: NSGA-II | Satisfaction: MOEA/D | Satisfaction: LMCSO | Satisfaction: MOPSO |
|---|---|---|---|---|---|---|---|---|---|---|
| 50 | 74,540.7 | 87,534.9 | 83,418.2 | 86,323.6 | 89,026.3 | 0.844 | 0.787 | 0.657 | 0.682 | 0.666 |
| 100 | 77,093.4 | 92,497.9 | 89,635.2 | 90,925.3 | 95,479.4 | 0.857 | 0.716 | 0.675 | 0.674 | 0.680 |
| 150 | 85,530.7 | 97,687.4 | 94,877.5 | 99,300.1 | 100,112.3 | 0.857 | 0.705 | 0.664 | 0.698 | 0.667 |
| 200 | 86,474.1 | 102,975.9 | 99,698.2 | 104,327.6 | 104,663.8 | 0.859 | 0.722 | 0.677 | 0.703 | 0.668 |
| 250 | 92,963.8 | 108,354.3 | 105,686.8 | 106,151.1 | 110,291.5 | 0.861 | 0.715 | 0.665 | 0.664 | 0.679 |
| 300 | 99,043.7 | 112,999.2 | 110,784.6 | 112,751.4 | 115,239.3 | 0.862 | 0.721 | 0.657 | 0.676 | 0.670 |
| 350 | 106,903.4 | 117,161.8 | 114,714.7 | 118,218.3 | 119,621.3 | 0.861 | 0.701 | 0.665 | 0.678 | 0.668 |
| 400 | 112,741.3 | 122,807.7 | 120,043.5 | 122,736.8 | 124,896.7 | 0.860 | 0.719 | 0.669 | 0.680 | 0.664 |
| 450 | 113,291.5 | 128,049.4 | 125,420.1 | 127,362.4 | 129,851.3 | 0.862 | 0.711 | 0.666 | 0.671 | 0.661 |
| 500 | 123,255.4 | 133,216.1 | 130,804.1 | 132,296.6 | 135,684.6 | 0.862 | 0.711 | 0.669 | 0.675 | 0.673 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Qiu, Q.; Li, L.; Xiao, Z.; Feng, Y.; Lin, Q.; Ming, Z. Joint UAV Deployment and Task Offloading in Large-Scale UAV-Assisted MEC: A Multiobjective Evolutionary Algorithm. Mathematics 2024, 12, 1966. https://doi.org/10.3390/math12131966
