From Classical to Modern Nonlinear Central Limit Theorems

Abstract

1. Introduction

2. The Classical Central Limit Theorems

3. The Martingale Central Limit Theorems

4. Nonlinear Central Limit Theorems

4.1. Nonlinear CLT under Nonlinear Expectations

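A sublinear expectation $\hat{\mathbb{E}}$ on a linear space $\mathcal{H}$ of random variables is a functional $\hat{\mathbb{E}}: \mathcal{H} \to \mathbb{R}$ satisfying the following:

- (1) Monotonicity: If $X \geq Y$, then $\hat{\mathbb{E}}[X] \geq \hat{\mathbb{E}}[Y]$;

- (2) Preserving constants: $\hat{\mathbb{E}}[c] = c$, for all $c \in \mathbb{R}$;

- (3) Subadditivity: $\hat{\mathbb{E}}[X + Y] \leq \hat{\mathbb{E}}[X] + \hat{\mathbb{E}}[Y]$, for all $X, Y \in \mathcal{H}$;

- (4) Positive homogeneity: $\hat{\mathbb{E}}[\lambda X] = \lambda \hat{\mathbb{E}}[X]$, for all $\lambda \geq 0$.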
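To make the four properties concrete, the sketch below assumes (our choice, for illustration only) a three-point sample space and a small hypothetical family `P_FAMILY` of probability vectors, and defines $\hat{\mathbb{E}}[X]$ as the largest of the linear expectations $E_P[X]$. Any such maximum satisfies properties (1) to (4); conversely, Peng's representation theorem shows that a sublinear expectation can be written as a supremum of linear expectations.

```python
from typing import Sequence

# Finite sample space Omega = {0, ..., m-1}: a random variable is the list of its
# values on Omega, and a probability measure is a probability vector on Omega.
RandomVariable = Sequence[float]
Measure = Sequence[float]

# Hypothetical family of three measures on a three-point sample space.
P_FAMILY = [
    [0.2, 0.5, 0.3],
    [0.4, 0.4, 0.2],
    [0.1, 0.3, 0.6],
]


def linear_expectation(x: RandomVariable, p: Measure) -> float:
    """E_P[X] for a single probability vector p."""
    return sum(p_w * x_w for p_w, x_w in zip(p, x))


def upper_expectation(x: RandomVariable, family: Sequence[Measure] = P_FAMILY) -> float:
    """E^[X] = max over P of E_P[X]; a maximum of linear expectations is sublinear."""
    return max(linear_expectation(x, p) for p in family)


def lower_expectation(x: RandomVariable, family: Sequence[Measure] = P_FAMILY) -> float:
    """-E^[-X], which equals the minimum over P of E_P[X]."""
    return -upper_expectation([-v for v in x], family)


X = [1.0, -2.0, 3.0]
Y = [0.5, -3.0, 2.0]  # Y <= X pointwise, used to check monotonicity
```

Here `upper_expectation(X)` is 1.3 and `lower_expectation(X)` is 0.1 (up to rounding), so X carries mean uncertainty; with a single measure in the family the two values would coincide and $\hat{\mathbb{E}}$ would be linear.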

4.2. Nonlinear CLT under a Set of Probability Measures

- Case I: CLT with mean uncertainty

- (1)

- If φ is increasing on , then

- (2)

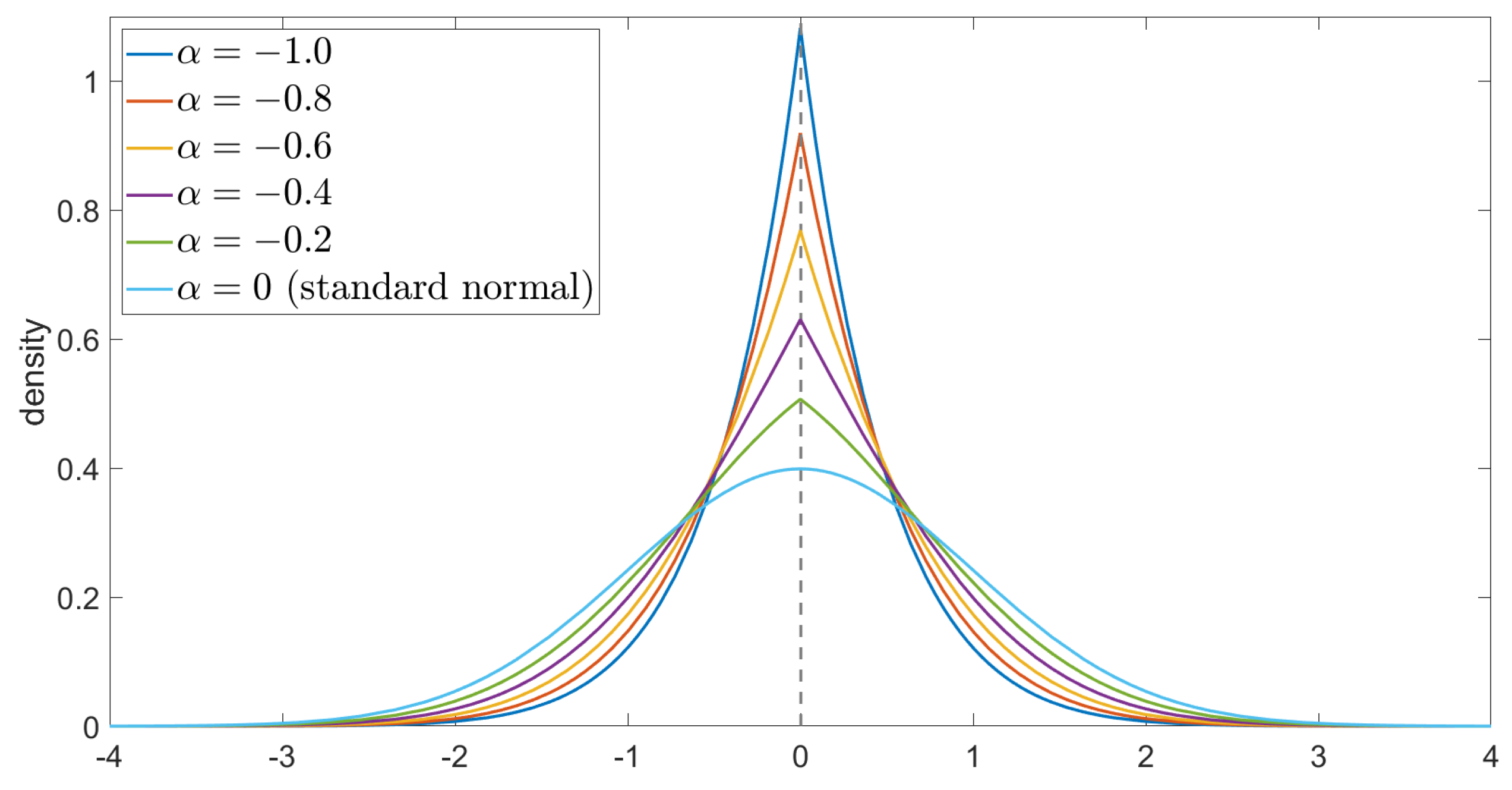

- If φ is decreasing on , thenwhere the density function is given as follows:The above density function of the Chen–Epstein distribution degenerates into the density function of the classical normal (Gaussian) distribution only when .

- Case II: CLT with variance uncertainty

- (1) If for , then

- (2) If for , then

5. Differences between Classical CLT and Nonlinear CLT

5.1. Frameworks

- C-CLT: The classical CLT is mainly considered on a probability space $(\Omega, \mathcal{F}, P)$ with a single probability measure $P$, and $\{X_n\}_{n \geq 1}$ is a sequence of random variables defined on $(\Omega, \mathcal{F}, P)$. The distribution of each $X_n$ is fixed under the probability measure $P$.

- NE-CLT: The NE-CLT is considered on a sublinear expectation space $(\Omega, \mathcal{H}, \hat{\mathbb{E}})$, and the sequence of random variables $\{X_n\}_{n \geq 1}$ is defined on $(\Omega, \mathcal{H}, \hat{\mathbb{E}})$. The sublinear expectation $\hat{\mathbb{E}}$ describes the distribution uncertainty of $X_n$. When $\hat{\mathbb{E}}$ is a linear expectation, the nonlinear CLT degenerates into a classical one.

- NP-CLT: The NP-CLT is considered under a set of probability measures $\mathcal{P}$ on $(\Omega, \mathcal{F})$, and the sequence of random variables $\{X_n\}_{n \geq 1}$ is defined on $(\Omega, \mathcal{F})$. The set $\mathcal{P}$ describes the distribution uncertainty of $X_n$. When $\mathcal{P}$ is the singleton $\{P\}$, the nonlinear CLT degenerates into a classical one.

5.2. Assumptions

5.2.1. Independence

- C-CLT: Usually, $X_1, X_2, \ldots$ are independent, or $\{X_n\}_{n \geq 1}$ is a sequence of martingale differences.

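- NE-CLT: Peng introduced the concept of independence on a sublinear expectation space. That is, $X_1, X_2, \ldots$ are independent on $(\Omega, \mathcal{H}, \hat{\mathbb{E}})$ if, for each $i \geq 1$, $X_{i+1}$ is independent of $(X_1, \ldots, X_i)$ in the sense that
$$\hat{\mathbb{E}}[\varphi(X_1, \ldots, X_i, X_{i+1})] = \hat{\mathbb{E}}\Big[\hat{\mathbb{E}}[\varphi(x_1, \ldots, x_i, X_{i+1})]\Big|_{(x_1, \ldots, x_i) = (X_1, \ldots, X_i)}\Big]$$
for every test function $\varphi$.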

- NP-CLT: When the CLT is considered under $\mathcal{P}$, there is no concept of independence. However, one has to assume that $\mathcal{P}$ and $\{X_n\}_{n \geq 1}$ satisfy a property similar to independence. In fact, this property holds naturally when $\mathcal{P}$ is rectangular; see Lemma 2.2 in [40].
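Rectangularity is what allows upper expectations over $\mathcal{P}$ to be computed step by step, by backward induction. The sketch below uses a toy family of our own (not the construction in [40]): $X_i = \sigma_i \varepsilon_i$ with i.i.d. fair signs $\varepsilon_i$, where the scale $\sigma_i$ is taken from a finite set and may depend on $X_1, \ldots, X_{i-1}$. Allowing every such adaptive choice makes the family rectangular by construction. The function name and the restriction to integer scales (which keeps the running sum on an integer grid) are illustrative assumptions.

```python
import math
from typing import Callable, Sequence


def rectangular_upper_expectation(
    phi: Callable[[float], float], sigmas: Sequence[int], n: int
) -> float:
    """sup over the toy family of E_P[phi(S_n / sqrt(n))], by backward induction.

    Toy model (an assumption for illustration): X_i = sigma_i * eps_i, where the
    eps_i are i.i.d. fair signs (+1 or -1) and the scale sigma_i in `sigmas` may be
    chosen after seeing X_1, ..., X_{i-1}. Integer scales keep S_k on an integer grid.
    """
    top = n * max(sigmas)
    # value[s + top] is the optimal continuation value when the running sum is s.
    value = [phi(s / math.sqrt(n)) for s in range(-top, top + 1)]
    for k in range(n - 1, -1, -1):
        reach = k * max(sigmas)  # |S_k| <= reach
        new_value = list(value)  # entries with |s| > reach are never read again
        for s in range(-reach, reach + 1):
            # Dynamic programming: at each state the scale is chosen to maximize
            # the one-step conditional expectation; rectangularity makes this valid.
            new_value[s + top] = max(
                0.5 * (value[s + top + sig] + value[s + top - sig]) for sig in sigmas
            )
        value = new_value
    return value[top]
```

With φ(x) = x² and scales {1, 2}, the result is the largest variance 4 for every n; with φ(x) = −x² it is −1, the negative of the smallest variance. With a single scale the family is a singleton and the computation reduces to an ordinary expectation.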

5.2.2. Mean and Variance

- C-CLT: Usually, $X_1, X_2, \ldots$ are identically distributed; in particular, they have the same mean and variance.

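- NE-CLT: Peng defined the upper and lower means as
$$\overline{\mu} = \hat{\mathbb{E}}[X_1], \qquad \underline{\mu} = -\hat{\mathbb{E}}[-X_1].$$
When $\overline{\mu} = \underline{\mu} =: \mu$, he said that $X_1$ has no mean uncertainty, and defined the upper and lower variances as
$$\overline{\sigma}^2 = \hat{\mathbb{E}}[(X_1 - \mu)^2], \qquad \underline{\sigma}^2 = -\hat{\mathbb{E}}[-(X_1 - \mu)^2].$$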

- NP-CLT: There are two main assumptions on the conditional means and variances of $X_i$. Since there is no independence here, and the conditional means and variances of $X_i$ given the past information vary across the measures in $\mathcal{P}$, Chen and co-authors formulated their assumptions in terms of these conditional means and variances of $X_i$.
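Under an assumption of our own, namely that $\hat{\mathbb{E}}$ is the maximum of linear expectations over a finite family of discrete laws of $X_1$, the upper and lower means and variances reduce to maxima and minima over that family. The helper names and the family `LAWS` below are hypothetical and serve only to illustrate the definitions.

```python
from typing import Callable, Sequence, Tuple

# A discrete law on the real line: a list of (value, probability) pairs.
Law = Sequence[Tuple[float, float]]


def expectation(law: Law, f: Callable[[float], float]) -> float:
    """Linear expectation of f(X) when X has the given discrete law."""
    return sum(p * f(x) for x, p in law)


def upper_lower_means(laws: Sequence[Law]) -> Tuple[float, float]:
    """(E^[X], -E^[-X]) when E^ is the maximum over the family `laws`."""
    means = [expectation(law, lambda x: x) for law in laws]
    return max(means), min(means)


def upper_lower_variances(laws: Sequence[Law], mu: float) -> Tuple[float, float]:
    """(E^[(X - mu)^2], -E^[-(X - mu)^2]); intended for the no-mean-uncertainty case."""
    second_moments = [expectation(law, lambda x: (x - mu) ** 2) for law in laws]
    return max(second_moments), min(second_moments)


# Hypothetical family: three centred laws with variances 1, 4 and 1.
LAWS = [
    [(-1.0, 0.5), (1.0, 0.5)],
    [(-2.0, 0.5), (2.0, 0.5)],
    [(-2.0, 0.125), (0.0, 0.75), (2.0, 0.125)],
]
```

For `LAWS` there is no mean uncertainty (both means are 0), while the upper and lower variances are 4 and 1.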

5.3. Results

5.3.1. Expression Form

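- C-CLT: One usually investigates the limit behavior of
$$\frac{S_n - n\mu}{\sigma\sqrt{n}}, \qquad S_n = \sum_{i=1}^{n} X_i,$$
which is the standardization of $S_n$, where $\mu$ and $\sigma^2 > 0$ are the common mean and variance of the $X_i$. One has
$$\lim_{n \to \infty} P\left(\frac{S_n - n\mu}{\sigma\sqrt{n}} \leq x\right) = \Phi(x) = \int_{-\infty}^{x} \frac{1}{\sqrt{2\pi}} e^{-y^2/2}\,dy \quad \text{for all } x \in \mathbb{R},$$
which has many equivalent expressions, for example,
$$\lim_{n \to \infty} E_P\left[\varphi\left(\frac{S_n - n\mu}{\sigma\sqrt{n}}\right)\right] = \int_{\mathbb{R}} \varphi(y)\, \frac{1}{\sqrt{2\pi}} e^{-y^2/2}\,dy$$
for every bounded continuous function $\varphi$.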

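- NE-CLT: Usually, $X_1$ has no mean uncertainty, with $\hat{\mathbb{E}}[X_1] = -\hat{\mathbb{E}}[-X_1] = 0$, and the limit behavior of $S_n/\sqrt{n}$, where $S_n = \sum_{i=1}^{n} X_i$, is investigated. Peng also introduced the corresponding notion of convergence in distribution on a sublinear expectation space: we say that $S_n/\sqrt{n}$ converges in distribution to the G-normal distribution $N(0, [\underline{\sigma}^2, \overline{\sigma}^2])$ if
$$\lim_{n \to \infty} \hat{\mathbb{E}}\left[\varphi\left(\frac{S_n}{\sqrt{n}}\right)\right] = \tilde{\mathbb{E}}[\varphi(\xi)]$$
for every continuous function $\varphi$ of linear growth, where $\xi \sim N(0, [\underline{\sigma}^2, \overline{\sigma}^2])$ under the sublinear expectation $\tilde{\mathbb{E}}$.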

- NP-CLT: For the CLT with variance uncertainty, Chen and co-authors similarly investigated the limiting behavior of $\frac{1}{\sqrt{n}}\sum_{i=1}^{n} X_i$, assuming that the $X_i$ have a common conditional mean of 0. For the CLT with mean uncertainty, they investigated the limiting behavior of a statistic consisting of two parts. The second part is the standardization, which is similar in form to the classical CLT. Because the standardization does not reflect the mean uncertainty, they added a sample mean to capture it. Moreover, they investigated the limit behavior of the upper (or lower) expectation of these statistics for a given test function φ. Since, for a set of measures $\mathcal{P}$, the upper expectation $\sup_{P \in \mathcal{P}} E_P$ and the upper probability $\sup_{P \in \mathcal{P}} P$ are not additive, this limit behavior is not equivalent to the corresponding limit problems for upper and lower probabilities (but contains them).
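The classical statement above can be checked by simulation. The sketch below assumes i.i.d. Uniform(0, 1) summands (mean 1/2, variance 1/12), a choice made only for illustration, and compares a Monte Carlo estimate of the distribution function of the standardized sum with Φ. The function names are hypothetical helpers.

```python
import math
import random


def normal_cdf(x: float) -> float:
    """Standard normal distribution function Phi(x)."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def standardized_sum(n: int, rng: random.Random) -> float:
    """(S_n - n*mu) / (sigma*sqrt(n)) for n i.i.d. Uniform(0, 1) summands."""
    mu, sigma = 0.5, math.sqrt(1.0 / 12.0)  # mean and standard deviation of Uniform(0, 1)
    s = sum(rng.random() for _ in range(n))
    return (s - n * mu) / (sigma * math.sqrt(n))


def empirical_cdf(x: float, n: int, reps: int, seed: int = 0) -> float:
    """Monte Carlo estimate of P((S_n - n*mu) / (sigma*sqrt(n)) <= x)."""
    rng = random.Random(seed)
    hits = sum(standardized_sum(n, rng) <= x for _ in range(reps))
    return hits / reps
```

For example, `empirical_cdf(1.0, n=30, reps=20000)` should be close to `normal_cdf(1.0)`, about 0.8413; the remaining gap is dominated by Monte Carlo error of order $1/\sqrt{\text{reps}}$.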

5.3.2. Limit Distribution

- C-CLT: The normal distribution is mostly used to describe the limit distribution.

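- NE-CLT: Peng introduced the notion of the G-normal distribution $N(0, [\underline{\sigma}^2, \overline{\sigma}^2])$ to characterize the limit distribution. When $\underline{\sigma}^2 = \overline{\sigma}^2 = \sigma^2$, it degenerates to the classical normal distribution $N(0, \sigma^2)$.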

- NP-CLT:

- (1)

- For the CLT with mean uncertainty, Chen and Epstein use the g-expectation or , which corresponds to the solution of BSDE (9) or (10), to describe the limit distribution. We know that the BSDE usually does not have an explicit solution, i.e., it does not have an explicit expression like the density of normal distribution. However, for some classes of symmetric test functions , Chen and coauthors found the explicit density to describe the limit distribution.

- (2)

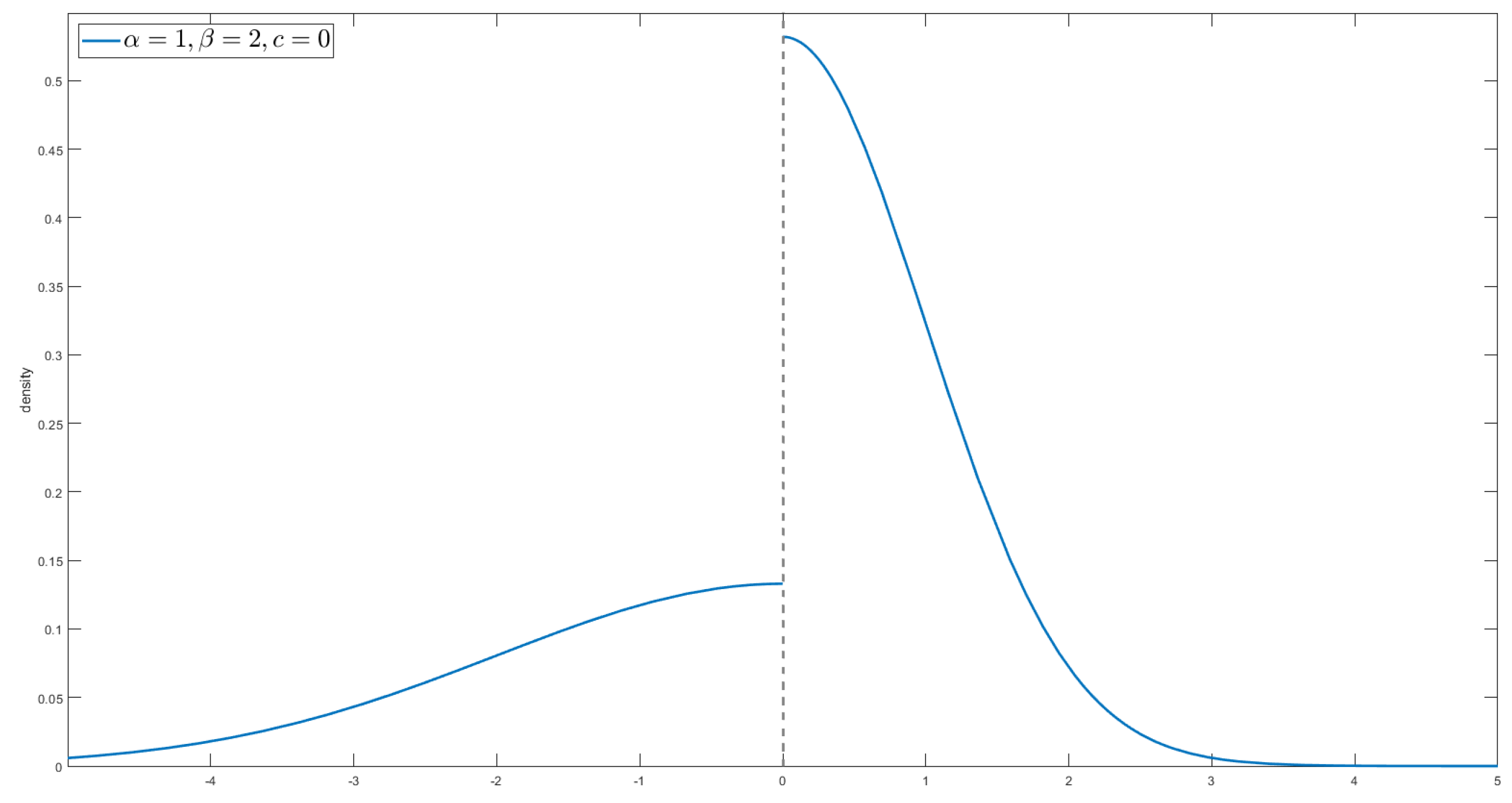

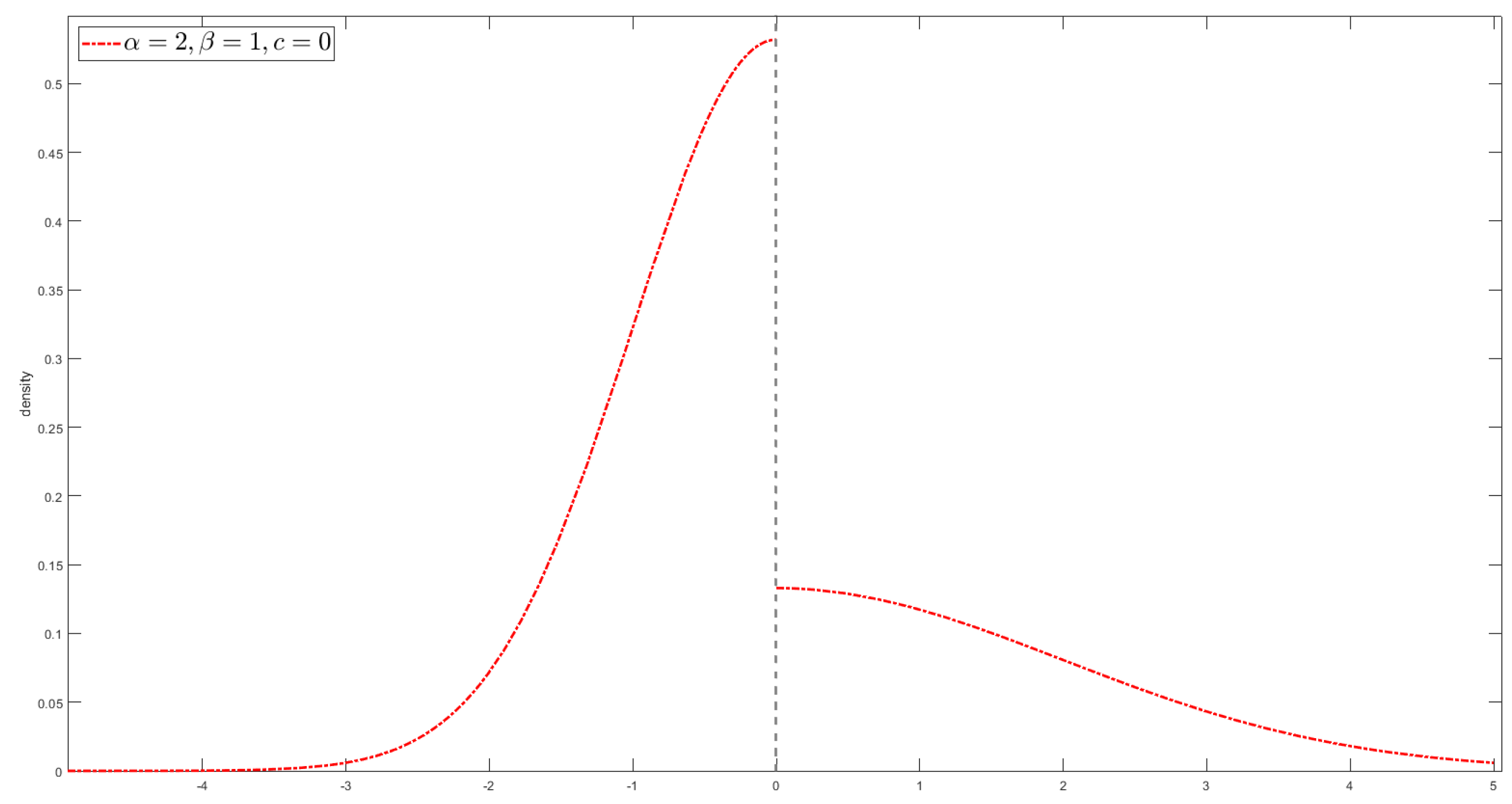

- For the CLT with variance uncertainty, similar to the NE-CLT, one can still use the G-normal distribution to describe the limit distribution. It is also known that the G-normal distribution usually does not have an explicit expression like the density of the normal distribution. Therefore, similar to CLT with mean uncertainty, Chen and coauthors tried to find some class of functions that provides an explicit expression for the limit distribution. Then, they considered two classes of functions, and , given by (18) and (19), which are two kinds of “S-Shaped” function. For these test functions, they found the explicit expression for the density function of the limit distribution.
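Because the G-normal distribution rarely has a closed form, its expectations are usually computed numerically. In Peng's theory, for $\xi \sim N(0, [\underline{\sigma}^2, \overline{\sigma}^2])$ the function $u(t,x) = \tilde{\mathbb{E}}[\varphi(x + \sqrt{t}\,\xi)]$ solves the G-heat equation $\partial_t u = G(\partial_{xx} u)$, $u(0,x) = \varphi(x)$, with $G(a) = \frac{1}{2}(\overline{\sigma}^2 a^+ - \underline{\sigma}^2 a^-)$, so that $\tilde{\mathbb{E}}[\varphi(\xi)] = u(1,0)$. The sketch below approximates $u(1,0)$ with an explicit finite-difference scheme; the function name, the default grid step `dx`, and the scheme itself are our own illustrative choices, not the method of Chen and co-authors.

```python
import math
from typing import Callable


def g_normal_expectation(
    phi: Callable[[float], float],
    sigma_lo: float,
    sigma_hi: float,
    dx: float = 0.05,
) -> float:
    """Approximate E~[phi(xi)] for xi ~ N(0, [sigma_lo^2, sigma_hi^2]).

    Solves u_t = G(u_xx), u(0, x) = phi(x), with
    G(a) = (sigma_hi^2 * a^+ - sigma_lo^2 * a^-) / 2, and returns u(1, 0).
    """

    def G(a: float) -> float:
        return 0.5 * (sigma_hi**2 * max(a, 0.0) - sigma_lo**2 * max(-a, 0.0))

    # Time step chosen so that sigma_hi^2 * dt / dx^2 <= 1; the explicit scheme
    # is then monotone, the usual requirement for convergence with this
    # fully nonlinear equation.
    n_steps = math.ceil(sigma_hi**2 / dx**2) + 1
    dt = 1.0 / n_steps
    # Start from 2 * n_steps + 1 grid points centred at x = 0. Each step updates
    # only interior points, so the grid loses one point at each end and no
    # artificial boundary condition is needed; after n_steps only x = 0 is left.
    u = [phi((k - n_steps) * dx) for k in range(2 * n_steps + 1)]
    for _ in range(n_steps):
        u = [
            u[k] + dt * G((u[k - 1] - 2.0 * u[k] + u[k + 1]) / dx**2)
            for k in range(1, len(u) - 1)
        ]
    return u[0]
```

For a convex φ such as φ(x) = x² the result is $\overline{\sigma}^2$, and for φ(x) = −x² it is $-\underline{\sigma}^2$: for convex test functions the G-normal expectation uses the largest variance, for concave ones the smallest. When $\underline{\sigma} = \overline{\sigma}$ the scheme reduces to one for the ordinary heat equation.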

5.4. Proofs

5.4.1. Methods to Prove C-CLT

- Method of characteristic functions;

- Method of moments;

- Stein’s method;

- The Lindeberg exchange method.
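As a small illustration of the first method, assume (for illustration only) that the $X_i$ are i.i.d. fair signs $\pm 1$. Then $E[e^{isX_1}] = \cos s$, and independence gives the characteristic function of $S_n/\sqrt{n}$ in closed form as $\cos(t/\sqrt{n})^n$, which converges to $e^{-t^2/2}$; by Lévy's continuity theorem this yields the CLT for this sequence. The function names are hypothetical.

```python
import math


def cf_standardized_rademacher(t: float, n: int) -> float:
    """Characteristic function of S_n / sqrt(n), S_n a sum of n i.i.d. fair +-1 signs.

    A fair sign X has E[exp(i*s*X)] = cos(s), a real number, and independence turns
    the characteristic function of the sum into a product: cos(t / sqrt(n)) ** n.
    """
    return math.cos(t / math.sqrt(n)) ** n


def cf_standard_normal(t: float) -> float:
    """Characteristic function of N(0, 1): exp(-t^2 / 2)."""
    return math.exp(-0.5 * t * t)
```

Comparing the two functions for increasing `n` at a fixed `t` shows the gap shrinking roughly like $1/n$.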

5.4.2. Methods to Prove NE-CLT

5.4.3. Methods to Prove NP-CLT

- Step 1: Guess the form of the limit distribution, for example, the solution of a BSDE or the G-normal distribution, and use it to construct a family of basic functions, where the g-expectation involved corresponds to the BSDE (9). The key in constructing this function is to ensure that, at the terminal time, it coincides with the test function and that its value at the initial point equals the limit in the CLT. Note: the definition above is not rigorous and is only meant to convey the idea. In the formal proof, the actual definition of the function differs slightly, to facilitate proving properties such as its smoothness and boundedness. For example, the terminal time should not be 1 but 1 + h for a sufficiently small h, and the generators of the g-expectation should be modified. See (6.3) in [40] and (A.3) in [39].

- Step 3: We use this function to connect the left- and right-hand sides of the equation in the limit theorem. Therefore, to prove the CLT, it suffices to prove that the difference between the two sides converges to 0. Similar to Lindeberg's exchange method, as well as Peng's method, we can divide this difference into n parts; for the CLT with variance uncertainty, for example, it becomes a sum of n one-step differences. For the CLT with mean uncertainty, the corresponding terms are defined analogously.

- Step 4: Using Taylor's expansion of the function at each step, prove that the sum of the residuals converges to 0. Further, using dynamic consistency under $\mathcal{P}$, one can prove one of the two inequalities in the limit relation. On the other hand, using dynamic consistency once more and combining it with Taylor's expansion, one can prove the reverse inequality.
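As an idealized sketch of the decomposition in Steps 3 and 4 (our own notation): let $V(t,x)$ be a hypothetical smooth function on $[0,1]\times\mathbb{R}$ with $V(1,x) = \varphi(x)$, ignore the modification of the terminal time discussed in the note to Step 1, and put $S_0 = 0$, $S_m = X_1 + \cdots + X_m$. The difference to be controlled then telescopes into $n$ one-step differences:

$$
\varphi\Big(\frac{S_n}{\sqrt{n}}\Big) - V(0,0) = \sum_{m=1}^{n}\Big[V\Big(\frac{m}{n},\frac{S_m}{\sqrt{n}}\Big) - V\Big(\frac{m-1}{n},\frac{S_{m-1}}{\sqrt{n}}\Big)\Big],
$$

and Taylor's expansion at $\big(\frac{m-1}{n},\frac{S_{m-1}}{\sqrt{n}}\big)$ gives, for each $m$,

$$
V\Big(\frac{m}{n},\frac{S_m}{\sqrt{n}}\Big) - V\Big(\frac{m-1}{n},\frac{S_{m-1}}{\sqrt{n}}\Big) = \frac{1}{n}\,\partial_t V + \frac{X_m}{\sqrt{n}}\,\partial_x V + \frac{X_m^2}{2n}\,\partial_{xx} V + R_{m,n},
$$

where $R_{m,n}$ collects the higher-order terms. In the variance-uncertainty case, if $V$ solves $\partial_t V + G(\partial_{xx} V) = 0$ with $G(a) = \frac{1}{2}(\overline{\sigma}^2 a^+ - \underline{\sigma}^2 a^-)$, and the conditional law of $X_m$ given the past has mean 0 and a variance that may take any value in $[\underline{\sigma}^2, \overline{\sigma}^2]$, then the supremum over $\mathcal{P}$ of the conditional expectation of the three leading terms is $\frac{1}{n}\big[\partial_t V + G(\partial_{xx} V)\big] = 0$. What remains is to show that the remainders $R_{m,n}$ are negligible in total, which is the role of Taylor's expansion in Step 4.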

6. Conclusions

- How should the nonlinear CLT be interpreted in multidimensional or high-dimensional settings?

- The rate of convergence in the classical CLT has been studied extensively and used successfully in many applications. However, the rate of convergence in the nonlinear CLT has been investigated much less. How should it be studied?

Funding

Acknowledgments

Conflicts of Interest

References

- Rootzén, H. On the Functional Central Limit Theorem for Martingales. Z. Wahrscheinlichkeitstheorie Verw. Geb. 1977, 38, 199–210.

- Hall, P.; Heyde, C.C. Martingale Limit Theory and Its Application; Academic Press: New York, NY, USA, 1980.

- Adams, W.J. The Life and Times of the Central Limit Theorem, 2nd ed. In History of Mathematics, Volume 35; American Mathematical Society: Providence, RI, USA, 2009.

- Fischer, H. A History of the Central Limit Theorem, From Classical to Modern Probability Theory; Springer: Berlin, Germany, 2011.

- Petrov, V.V. Limit Theorems of Probability Theory; Oxford Science Publications: Oxford, UK, 1995.

- Götze, F.; Naumov, A.A.; Ulyanov, V.V. Asymptotic analysis of symmetric functions. J. Theor. Probab. 2017, 30, 876–897.

- Shevtsova, I.G. On absolute constants in inequalities of Berry-Esseen type. Dokl. Math. 2014, 89, 378–381.

- Fujikoshi, Y.; Ulyanov, V.V. Non-Asymptotic Analysis of Approximations for Multivariate Statistics; SpringerBriefs in Statistics; Springer: Singapore, 2020.

- Dedecker, J.; Merlevède, F.; Rio, E. Rates of convergence in the central limit theorem for martingales in the non-stationary setting. Ann. Inst. H. Poincaré Probab. Statist. 2022, 58, 945–966.

- Bhattacharya, R.N.; Ranga Rao, R. Normal Approximation and Asymptotic Expansions; Wiley: New York, NY, USA, 1976.

- Sazonov, V.V. Normal Approximation—Some Recent Advances; Springer: Berlin, Germany, 1981.

- Bentkus, V.; Götze, F. Optimal rates of convergence in the CLT for quadratic forms. Ann. Probab. 1996, 24, 466–490.

- Götze, F.; Zaitsev, A.Y. Explicit rates of approximation in the CLT for quadratic forms. Ann. Probab. 2014, 42, 354–397.

- Prokhorov, Y.V.; Ulyanov, V.V. Some Approximation Problems in Statistics and Probability. In Limit Theorems in Probability, Statistics and Number Theory. Springer Proceedings in Mathematics and Statistics; Eichelsbacher, P., Elsner, G., Kösters, H., Löwe, M., Merkl, F., Rolles, S., Eds.; Springer: Berlin, Germany, 2013; Volume 42, pp. 235–249.

- Chen, Z.; Yan, X.; Zhang, G. Strategic two-sample test via the two-armed bandit process. J. R. Stat. Soc. Ser. B Stat. Methodol. 2023, 85, 1271–1298.

- Peng, S.; Yao, J.; Yang, S. Improving Value-at-Risk prediction under model uncertainty. J. Financ. Econom. 2023, 21, 228–259.

- Hölzermann, J. Pricing interest rate derivatives under volatility uncertainty. Ann. Oper. Res. 2024, 336, 153–182.

- Ji, X.; Peng, S.; Jang, S. Imbalanced binary classification under distribution uncertainty. Inf. Sci. 2023, 621, 156–171.

- de Moivre, A. The Doctrine of Chances, 2nd ed.; Woodfall: London, UK, 1738.

- Gauss, C.F. Theoria Motus Corporum Coelestium; Perthes & Besser: Hamburg, Germany, 1809.

- Laplace, P.S. Mémoire sur les probabilités. Mémoires de l’Académie Royale des Sciences de Paris Année 1781, 1778, 227–332.

- Lyapunov, A.M. Sur une proposition de la théorie des probabilités. Bulletin de l’Académie Impériale des Sciences de St.-Pétersbourg 1900, 13, 359–386.

- Lyapunov, A.M. Nouvelle Forme du Théorème sur la Limite de Probabilité; Mémoires de l’Académie Impériale des Sciences de St.-Pétersbourg VIIIe Série, Classe Physico-Mathématique; Imperial Academy Nauk: Russia, 1901; Volume 12, pp. 1–24. Available online: https://books.google.com/books/about/Nouvelle_forme_du_th%C3%A9or%C3%A8me_sur_la_limi.html?id=XDZinQEACAAJ (accessed on 17 July 2024).

- Lévy, P. Sur le rôle de la loi de Gauss dans la théorie des erreurs. Comptes Rendus Hebdomadaires de l’Académie des Sciences de Paris 1922, 174, 855–857.

- Lindeberg, J.W. Eine neue Herleitung des Exponentialgesetzes in der Wahrscheinlichkeitsrechnung. Math. Z. 1922, 15, 211–225.

- Feller, W. Über den zentralen Grenzwertsatz der Wahrscheinlichkeitsrechnung. Math. Z. 1935, 40, 521–559.

- Bernstein, S. Sur l’extension du théorème limite du calcul des probabilités aux sommes de quantités dépendantes. Math. Ann. 1926, 97, 1–59.

- Lévy, P. Propriétés asymptotiques des sommes de variables aléatoires indépendantes ou enchaînées. J. Math. Appl. 1935, 14, 347–402.

- Doob, J.L. Stochastic Processes; Wiley: New York, NY, USA, 1953.

- Billingsley, P. The Lindeberg-Lévy theorem for martingales. Proc. Am. Math. Soc. 1961, 12, 788–792.

- Ibragimov, I.A. A central limit theorem for a class of dependent random variables. Theory Probab. Its Appl. 1963, 8, 83–89.

- Csörgő, M. On the strong law of large numbers and the central limit theorem for martingales. Trans. Am. Math. Soc. 1968, 131, 259–275.

- Brown, B.M. Martingale central limit theorems. Ann. Math. Stat. 1971, 42, 59–66.

- McLeish, D.L. Dependent central limit theorems and invariance principles. Ann. Probab. 1974, 2, 620–628.

- Hall, P. Martingale invariance principles. Ann. Probab. 1977, 5, 875–887.

- Peng, S. Backward SDE and related g-expectation. In Backward Stochastic Differential Equations. Pitman Research Notes in Math. Series; El Karoui, N., Mazliak, L., Eds.; Longman: London, UK, 1997; pp. 141–159.

- Peng, S. Nonlinear Expectations and Stochastic Calculus under Uncertainty: With Robust CLT and G-Brownian Motion; Springer: Berlin, Germany, 2019; Volume 95.

- Peng, S. A new central limit theorem under sublinear expectations. arXiv 2008, arXiv:0803.2656.

- Chen, Z.; Epstein, L.G.; Zhang, G. A central limit theorem, loss aversion and multi-armed bandits. J. Econ. Theory 2023, 209, 105645.

- Chen, Z.; Epstein, L.G. A central limit theorem for sets of probability measures. Stoch. Process. Their Appl. 2022, 152, 424–451.

- Chen, Z.; Liu, S.; Qian, Z.; Xu, X. Explicit solutions for a class of nonlinear BSDEs and their nodal sets. Probab. Uncertain. Quant. Risk 2022, 7, 283–300.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ulyanov, V.V. From Classical to Modern Nonlinear Central Limit Theorems. Mathematics 2024, 12, 2276. https://doi.org/10.3390/math12142276
