Unveiling Malicious Network Flows Using Benford’s Law

Abstract

1. Introduction

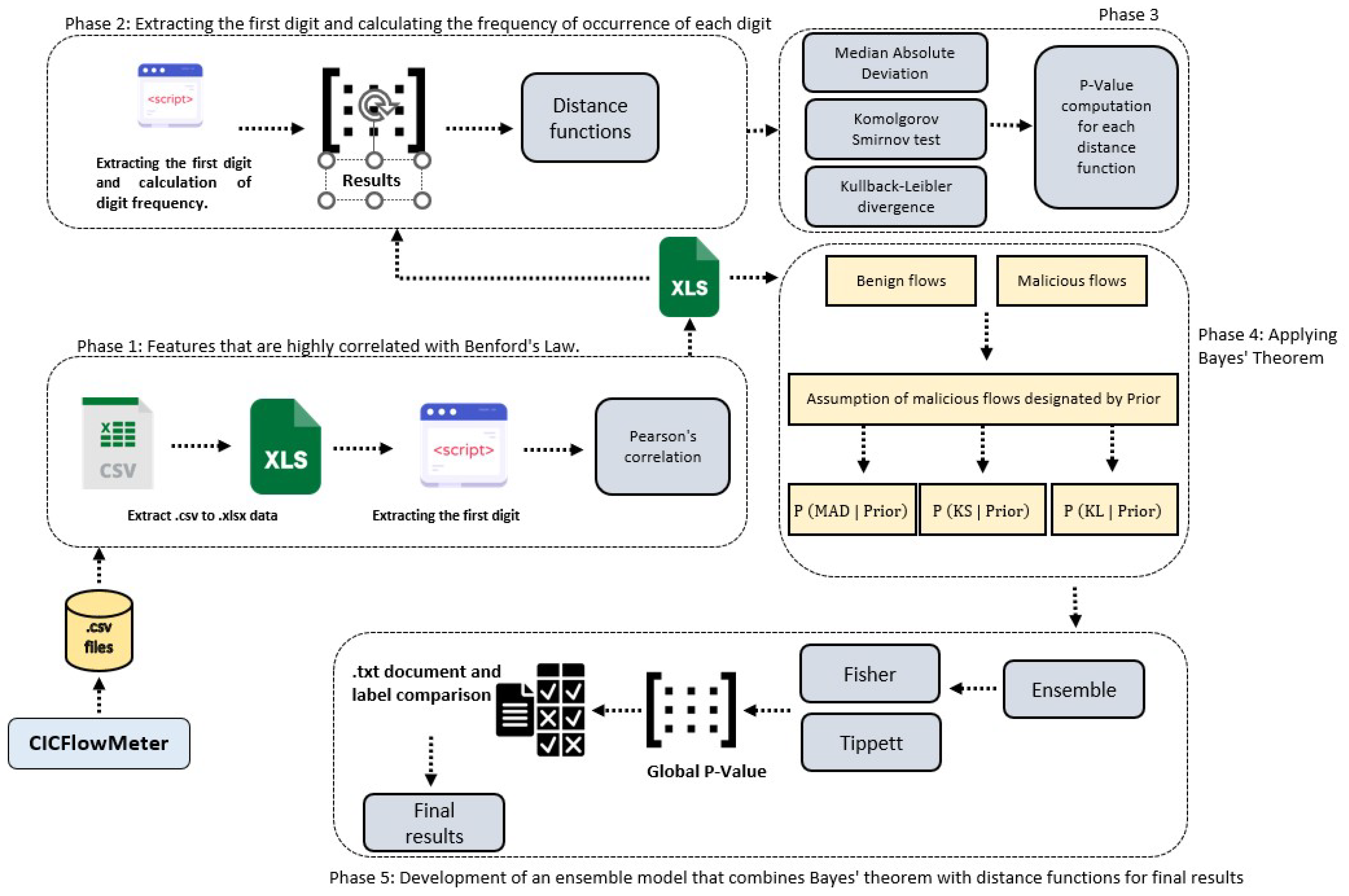

- Mean Absolute Deviation (MAD) averages the absolute differences between the observed and expected digit frequencies, providing a direct measure of the deviation from Benford’s Law.

- Kolmogorov–Smirnov (KS) test compares the cumulative distributions of the observed frequencies of the digits with those predicted by Benford’s Law, identifying significant discrepancies that may indicate anomalies.

- Kullback–Leibler (KL) divergence measures the information lost when the observed distribution is used to estimate the distribution expected by Benford’s Law. This metric quantifies the degree of divergence between the two distributions.
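As a minimal sketch, the three measures can be computed from a vector of nine first-digit frequencies. Several details are assumptions made for illustration and do not come from the text:

- the function names `mad`, `ks_statistic` and `kl_divergence`;
- the smoothing constant `eps`;
- the use of natural logarithms;
- the KL direction, with Benford's distribution as the reference and the smoothed observed frequencies in the denominator.

The authors' own implementation is in MATLAB and may differ.

```python
import math

# Expected first-digit probabilities under Benford's Law: P(d) = log10(1 + 1/d)
BENFORD = [math.log10(1 + 1 / d) for d in range(1, 10)]


def mad(observed, expected=BENFORD):
    """Mean Absolute Deviation between observed and expected digit frequencies."""
    return sum(abs(o - e) for o, e in zip(observed, expected)) / len(expected)


def ks_statistic(observed, expected=BENFORD):
    """Largest absolute gap between the two cumulative distributions (KS statistic D)."""
    cum_obs = cum_exp = d_max = 0.0
    for o, e in zip(observed, expected):
        cum_obs += o
        cum_exp += e
        d_max = max(d_max, abs(cum_obs - cum_exp))
    return d_max


def kl_divergence(observed, expected=BENFORD, eps=1e-10):
    """KL divergence D_KL(Benford || observed) in nats, after eps-smoothing."""
    smoothed = [o + eps for o in observed]    # avoid division by zero
    total = sum(smoothed)
    smoothed = [s / total for s in smoothed]  # renormalize so they sum to 1
    return sum(e * math.log(e / s) for e, s in zip(expected, smoothed))


# Usage: first-digit frequencies of flow 2 from the first-stage results table
flow_2 = [0.5789, 0.2105, 0, 0, 0, 0.2105, 0, 0, 0]
print(f"MAD={mad(flow_2):.4f}  KS={ks_statistic(flow_2):.4f}  KL={kl_divergence(flow_2):.2f}")
```

Flow 2 has no digits 3, 4, 5, 7, 8 or 9. The KL value is therefore dominated by the `eps` smoothing, which is why the choice of constant matters for this measure.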

- A model that jointly applies Benford’s Law and three distance functions, namely the Mean Absolute Deviation, the Kullback–Leibler divergence, and the Kolmogorov–Smirnov test, to analyze and identify anomalies in the flows captured from a computer network.

- The development of a set of MATLAB scripts that implement Benford’s Law in conjunction with the three distance functions. These scripts extract the first digit, calculate each digit’s frequency of occurrence, and generate an ensemble that integrates the distance functions with Benford’s Law by applying Bayes’ Theorem. They are available at https://github.com/pacfernandes/Unvelling-Network-Malicious-flows.git (accessed on 1 July 2024).

- A comparison between the results obtained with this model and those obtained with machine learning-based methods.
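The first two steps performed by the scripts (extracting the first digit and computing each digit's frequency of occurrence) can be sketched in Python as follows. This is not a port of the authors' MATLAB code. The helper names `first_digit` and `digit_frequencies` are hypothetical, and so is the choice to skip zeros, NaN and infinite values.

```python
import math
from collections import Counter


def first_digit(value):
    """Leading significant digit (1-9) of a number, or None for zero/NaN/infinity."""
    if isinstance(value, int):
        return int(str(abs(value))[0]) if value != 0 else None
    x = abs(float(value))
    if x == 0 or not math.isfinite(x):
        return None
    # repr() gives the shortest round-trip string, e.g. '0.0042' or '1e+23';
    # stripping leading zeros and the decimal point exposes the first significant digit
    return int(repr(x).lstrip("0.")[0])


def digit_frequencies(values):
    """Relative frequency of each first digit 1..9, ignoring values without one."""
    digits = [d for d in map(first_digit, values) if d is not None]
    counts = Counter(digits)
    n = len(digits)
    return [counts[d] / n if n else 0.0 for d in range(1, 10)]


# Usage with a hypothetical set of feature values
freqs = digit_frequencies([1, 12, 2, 300, 0, 0.0042, 45.5, 1e6])
print(freqs)
```

Integers are handled through their decimal string, so large counters and byte totals do not pass through floating point.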

2. Benford’s Law and Distance Functions in the Detection of Malicious Flows

2.1. Related Work

2.2. Challenges and Strengths

3. Benford’s Law and Distance Functions

3.1. Benford’s Law

- The distribution of significant digits is invariant under a change of scale.

- The distribution of significant digits is continuous and invariant under a change of base.

- The fractional parts of the base-10 logarithms of the data are uniformly distributed in the interval $[0, 1)$.
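The scale-invariance and logarithm properties above can be checked numerically. This is a minimal sketch that assumes the illustrative sequence 2^n. That sequence is a textbook example of Benford behaviour: log10 2 is irrational, so the fractional parts of n·log10 2 are uniformly distributed in [0, 1).

The sketch takes each leading digit from the fractional part of the logarithm and compares the resulting frequencies with P(d) = log10(1 + 1/d). It then repeats the check after multiplying every term by 3. The helper names are hypothetical.

```python
import math


def benford_pmf():
    """Benford's first-digit probabilities P(d) = log10(1 + 1/d) for d = 1..9."""
    return [math.log10(1 + 1 / d) for d in range(1, 10)]


def leading_digit_from_log10(log_x):
    """Leading digit of x given log10(x): 10 ** frac(log10 x) lies in [1, 10)."""
    mantissa = log_x - math.floor(log_x)   # fractional part, in [0, 1)
    return min(int(10 ** mantissa), 9)     # guard against 10**m rounding to 10.0


def power_sequence_frequencies(base, n_terms, scale=1.0):
    """First-digit frequencies of scale * base**n, n = 1..n_terms, computed in log space."""
    counts = [0] * 9
    step, offset = math.log10(base), math.log10(scale)
    for n in range(1, n_terms + 1):
        counts[leading_digit_from_log10(n * step + offset) - 1] += 1
    return [c / n_terms for c in counts]


expected = benford_pmf()
original = power_sequence_frequencies(2, 10_000)             # 2, 4, 8, 16, ...
rescaled = power_sequence_frequencies(2, 10_000, scale=3.0)  # every term multiplied by 3
for d, (e, o, r) in enumerate(zip(expected, original, rescaled), start=1):
    print(f"{d}: Benford={e:.4f}  2^n={o:.4f}  3*2^n={r:.4f}")
```

Multiplying by 3 only shifts every logarithm by the constant log10 3. A uniform distribution of fractional parts is unchanged by such a shift, which is the scale-invariance property in logarithmic form.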

3.2. Distance Functions

3.2.1. Kolmogorov–Smirnov Test

3.2.2. Mean Absolute Deviation

3.2.3. Kullback–Leibler Divergence

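- Non-symmetric: in general, $D_{KL}(P \parallel Q) \neq D_{KL}(Q \parallel P)$;

- Non-negative: $D_{KL}(P \parallel Q) \geq 0$, and $D_{KL}(P \parallel Q) = 0$ if and only if $P = Q$.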

- Robustness of comparisons: The KS test provides robust comparisons between the observed frequencies of the digits and the frequencies expected under Benford’s Law.

- Sensitivity to deviations: The KS statistic is the largest difference between the two cumulative distributions, so a marked deviation at any digit is reflected in the result.

- Non-Parametric Nature: Data from a computer network can be irregular, so the non-parametric nature of the KS test is advantageous: it does not require the data to follow a specific distribution.

- Chi-Square Test: Unlike the chi-square test, the KS test does not rely on predefined data categories, thus avoiding information loss. In addition, the KS test can be applied when the null hypothesis is well defined, which is not always possible with the chi-square test.

- Anderson–Darling test: Although similar to the KS test, the Anderson–Darling test is more complex and less intuitive, which makes the KS test preferable for many applications.

- Assessment of Proximity between Distributions: The KL divergence is widely used in the data mining literature to assess how close two distributions are. The lower the value obtained, the closer the distributions.

- Directed and Asymmetric Analysis: The asymmetric, directed nature of the KL divergence allows a detailed analysis of the discrepancies between the observed digit frequencies and the frequencies expected under Benford’s Law.

- Sensitivity to Small Differences: The KL divergence is particularly sensitive to slight differences between distributions, which makes it helpful in detecting subtle anomalies.

- Jensen–Shannon Divergence: The Jensen–Shannon divergence is a symmetric version of the KL divergence, but the simplicity and sensitivity of KL make it preferable for many analyses.

- Mahalanobis distance: Although the Mahalanobis distance effectively detects multivariate anomalies, the KL divergence is better suited to measuring differences between probability distributions.

- Simplicity and straightforward interpretation: The Mean Absolute Deviation is simple to calculate and interpret, since it directly measures the discrepancies between the observed frequencies and those expected under Benford’s Law.

- Less Sensitivity to Outliers: The deviations are not squared, so this measure is less sensitive to outliers such as a large deviation at digit 1 (the most frequent digit under Benford’s Law). This makes it preferable to the mean squared deviation.
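Two claims from this subsection can be illustrated with a small sketch: the KL divergence is asymmetric and non-negative, and absolute deviations weight a single large discrepancy less heavily than squared deviations do. The observed distribution below is hypothetical, chosen to over-represent digit 1. The helper `kl` uses natural logarithms and assumes no zero probabilities in its second argument.

```python
import math

BENFORD = [math.log10(1 + 1 / d) for d in range(1, 10)]


def kl(p, q):
    """D_KL(p || q) in nats; assumes q_i > 0 wherever p_i > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical observed distribution that over-represents digit 1
observed = [0.40, 0.15, 0.10, 0.09, 0.07, 0.06, 0.05, 0.04, 0.04]

forward = kl(observed, BENFORD)   # D_KL(observed || Benford)
backward = kl(BENFORD, observed)  # D_KL(Benford || observed)
print(f"forward={forward:.5f}  backward={backward:.5f}  self={kl(BENFORD, BENFORD):.1f}")

# Share of the total discrepancy attributable to digit 1: absolute vs squared deviations
dev = [o - e for o, e in zip(observed, BENFORD)]
share_abs = abs(dev[0]) / sum(abs(x) for x in dev)
share_sq = dev[0] ** 2 / sum(x ** 2 for x in dev)
print(f"digit-1 share: absolute={share_abs:.2f}  squared={share_sq:.2f}")
```

In this example the two KL directions differ, and both are zero only when the distributions coincide. Digit 1 accounts for about half of the absolute-deviation total but for most of the squared-deviation total.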

3.3. Fisher’s Method

3.4. Tippett’s Method
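Fisher's and Tippett's methods, named in the two headings above, combine several p-values into one. The sketch below uses the standard textbook formulas:

- Fisher: −2 Σ ln p_i follows a chi-square distribution with 2k degrees of freedom under H0.
- Tippett: 1 − (1 − min p_i)^k.

Both formulas assume the k tests are independent. That assumption is only approximate when the KS, KL and MAD tests are run on the same digits. The function names and the example p-values are hypothetical, and this is not the authors' MATLAB implementation.

```python
import math


def fisher_combined_p(pvalues):
    """Fisher's method: X = -2 * sum(ln p_i) follows chi-square with 2k dof under H0."""
    k = len(pvalues)
    half_x = -sum(math.log(max(p, 1e-300)) for p in pvalues)  # X / 2; clamp avoids log(0)
    # Survival function of chi-square with even dof 2k: exp(-x/2) * sum_{j<k} (x/2)^j / j!
    term = total = 1.0
    for j in range(1, k):
        term *= half_x / j
        total += term
    return math.exp(-half_x) * total


def tippett_combined_p(pvalues):
    """Tippett's method: under H0, P(min p_i <= m) = 1 - (1 - m)^k for k independent tests."""
    return 1.0 - (1.0 - min(pvalues)) ** len(pvalues)


def is_malicious(pvalues, alpha, method=tippett_combined_p):
    """Reject H0 ('the flow is benign') when the combined p-value falls below alpha."""
    return method(pvalues) < alpha


# Hypothetical p-values for one flow from the KS, KL and MAD tests
p_flow = [0.03, 0.20, 0.08]
print(fisher_combined_p(p_flow), tippett_combined_p(p_flow), is_malicious(p_flow, alpha=0.05))
```

With these p-values, Fisher's method rejects H0 at α = 0.05 but Tippett's does not. Fisher's method pools the evidence from every test, whereas Tippett's depends only on the smallest p-value. SciPy's `scipy.stats.combine_pvalues` also offers `method="fisher"` and `method="tippett"` if a library routine is preferred.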

4. Model Architecture

4.1. Natural Law-Based Method

- $H_0$: “The network flow is benign”;

- $H_1$: “The network flow is malicious”.

4.2. Dataset

- High data quality and controlled environment: CICIDS2017 offers high-quality data captured in a controlled environment, which supports the reliability and consistency of the results.

- Well-defined variety of attack types: The dataset covers a clear diversity of attack types, with precise labelling of flows as malicious or benign. The classifications obtained by applying Benford’s Law can therefore be compared directly with the original labels.

- Extensive use in previous studies: CICIDS2017 has been used in numerous studies, so the results obtained can be compared directly with those of other investigations. One example is Mbona’s work, which used CICIDS2017 with Benford’s Law for feature selection.

- Time features: flow duration, time between packets (minimum, average, maximum time, and standard deviation).

- Size features: smallest, average, largest, and total packet size.

- Count features: total number of packets in the flow and count of TCP, UDP, and ICMP packets.

- Header features: number of TCP flags.

- Statistical features: flow entropy, packet rate (packets per second), and byte rate (bytes per second).

4.3. Evaluation Metrics for Classification

5. Results of the Proposed Model

- First phase:

  - First Stage: Features with a correlation of or more were grouped into Cluster 1, indicating a substantial correlation between the observed frequencies.

  - Second Stage: Features with a correlation of or more were grouped into Cluster 2, reflecting a strong correlation between the frequencies.

  - Third Stage: Finally, features with a correlation of or more were grouped into Cluster 3, indicating a robust correlation. Each stage was designed to support a rigorous, detailed analysis of how the data conform to Benford’s Law.

  - Fourth Stage: The number of features selected by the method based on Pearson’s correlation was compared with the number selected by methods based on distance functions. The results show that the correlation technique is more effective at selecting the features best suited to identifying malicious flows.

- Second phase:

  - An ensemble was built from the p-values to maximize the detection of malicious flows while reducing the number of false positives and improving the model’s overall performance.
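The staged, correlation-based selection of the first phase could look roughly like the sketch below. The surviving text does not state exactly what is correlated. The sketch assumes the correlation is Pearson's r between a feature's first-digit frequencies and Benford's expected frequencies. The thresholds and feature frequencies are hypothetical, because the original cut-off values are missing from the text.

```python
import math

BENFORD = [math.log10(1 + 1 / d) for d in range(1, 10)]


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y) if sd_x and sd_y else 0.0


def select_features(feature_freqs, threshold):
    """Names of features whose digit frequencies correlate with Benford's Law at >= threshold."""
    return [name for name, freqs in feature_freqs.items()
            if pearson(freqs, BENFORD) >= threshold]


# Hypothetical first-digit frequencies for two features
features = {
    "feature_a": [0.31, 0.17, 0.12, 0.10, 0.08, 0.07, 0.06, 0.05, 0.04],  # close to Benford
    "feature_b": [0.05, 0.05, 0.10, 0.40, 0.05, 0.05, 0.10, 0.10, 0.10],  # peaked at digit 4
}
for threshold in (0.9, 0.7, 0.5):  # hypothetical, increasingly permissive cut-offs
    print(threshold, select_features(features, threshold))
```

Lowering the threshold stage by stage mirrors the three clusters described above. Each cluster then feeds the distance-function tests.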

5.1. First Stage: Features with a Correlation of or More

| Digits and Flows | 2 | 30 | 18,342 | 18,361 |

|---|---|---|---|---|

| 1 | 0.5789 | 0.3333 | 0.2857 | 0.3429 |

| 2 | 0.2105 | 0.1389 | 0.1714 | 0.2286 |

| 3 | 0 | 0.1014 | 0.1143 | 0.0571 |

| 4 | 0 | 0.1667 | 0.1429 | 0.0286 |

| 5 | 0 | 0.0278 | 0.0571 | 0.1143 |

| 6 | 0.2105 | 0.0556 | 0.0286 | 0 |

| 7 | 0 | 0.0833 | 0.0857 | 0.1143 |

| 8 | 0 | 0.0278 | 0.0286 | 0.1143 |

| 9 | 0 | 0.0278 | 0.0857 | 0 |

| MAD | 0 | 1 | 1 | 1 |

| KS | 1 | 0 | 1 | 0 |

| KL | 1 | 0 | 0 | 0 |

| Original Label | 0 | 0 | 1 | 1 |

5.2. Second Stage: Features with a Correlation of or More

5.3. Third Stage: Features with a Correlation of or More

5.4. Fourth Stage and Second Phase: Method Combining the Three Distance Functions, Benford’s Law, and Bayes’ Theorem

6. Conclusions and Future Work

- Detection of Fraudulent Financial Activities: The model could be used to identify possible fraudulent financial activities by detecting transactions whose digit frequencies do not follow Benford’s Law.

- False Positive Reduction: Adjusting detection thresholds based on digit analysis may reduce the false positive rate, allowing network administrators to focus on real threats.

- Integration with Accounting and ERP Systems: Integrating the model into accounting and ERP (Enterprise Resource Planning) systems could enable real-time, continuous monitoring of financial activities.

- Critical Infrastructure Protection: The method could detect malicious activity in critical infrastructures such as energy and telecommunications by analyzing SCADA (Supervisory Control and Data Acquisition) data flows.

- Analyzing Industrial Protocols: The proposed model could be used to detect flows resulting from injection attacks by analyzing traffic from the Modbus and DNP3 (Distributed Network Protocol 3) protocols.

- Anomaly Detection in Water and Sanitation Systems: The model could be used to identify possible anomalies in sensors or control systems, helping to ensure the safety and continuity of their operations.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| BL | Benford’s Law |

| DDoS | Distributed Denial of Service |

| DNP3 | Distributed Network Protocol 3 |

| ERP | Enterprise Resource Planning |

| ICMP | Internet Control Message Protocol |

| IDS | Intrusion Detection Systems |

| IoT | Internet of Things |

| IP | Internet Protocol |

| KL | Kullback–Leibler Divergence |

| KS | Kolmogorov–Smirnov test |

| MAD | Mean Absolute Deviation |

| MDPI | Multidisciplinary Digital Publishing Institute |

| ML | Machine Learning |

| NIDS | Network Intrusion Detection System |

| NTA | Network Traffic Analysis |

| ROC | Receiver Operating Characteristic |

| SCADA | Supervisory Control and Data Acquisition |

| SIEM | Security Information and Event Management Systems |

| SSD | Sum of Squared Deviation |

| TCP | Transmission Control Protocol |

| UDP | User Datagram Protocol |

References

- Yurtseven, I.; Bagriyanik, S. A Review of Penetration Testing and Vulnerability Assessment in Cloud Environment. In Proceedings of the 2020 Turkish National Software Engineering Symposium (UYMS), İstanbul, Turkey, 7–9 October 2020; IEEE: New York, NY, USA, 2020.

- Norton. 115 Cybersecurity Statistics + Trends to Know in 2024; Technical Report; Norton: Mountain View, CA, USA, 2022.

- RFC. RFC 2722: Traffic Flow Measurement: Architecture; Technical Report; 1999. Available online: https://datatracker.ietf.org/doc/rfc2722/ (accessed on 27 May 2024).

- RFC. RFC 3697: Specification of the Differentiated Services Field (DS Field) in the IPv4 and IPv6 Headers; Technical Report; Internet Engineering Task Force (IETF): Fremont, CA, USA, 2004.

- Milano, F.; Gomez-Exposito, A. Detection of Cyber-Attacks of Power Systems Through Benford’s Law. IEEE Trans. Smart Grid 2021, 12, 2741–2744.

- Mbona, I.; Eloff, J.H.P. Detecting Zero-Day Intrusion Attacks Using Semi-Supervised Machine Learning Approaches. IEEE Access 2022, 10, 69822–69838.

- Erickson, J. Hacking; No Starch Press: San Francisco, CA, USA, 2007; p. 296.

- Stallings, W. Network Security Essentials Applications and Standards; Pearson: London, UK, 2016; p. 464.

- Jaswal, N. Hands-On Network Forensics; Packt Publishing Limited: Birmingham, UK, 2019; p. 358.

- Khraisat, A.; Gondal, I.; Vamplew, P.; Kamruzzaman, J. Survey of intrusion detection systems: Techniques, datasets and challenges. Cybersecurity 2019, 2.

- Cascavilla, G.; Tamburri, D.A.; Van Den Heuvel, W.J. Cybercrime threat intelligence: A systematic multi-vocal literature review. Comput. Secur. 2021, 105, 102258.

- Carrier, B. File System Forensic Analysis; Addison-Wesley: San Francisco, CA, USA, 2005; p. 569.

- Casey, E. Handbook of Digital Forensics and Investigation; Elsevier Science & Technology Books: Amsterdam, The Netherlands, 2009.

- Wang, F.; Tang, Y. Diverse Intrusion and Malware Detection: AI-Based and Non-AI-Based Solutions. J. Cybersecur. Priv. 2024, 4, 382–387.

- Aljanabi, M.; Ismail, M.A.; Ali, A.H. Intrusion Detection Systems, Issues, Challenges, and Needs. Int. J. Comput. Intell. Syst. 2021, 14, 560.

- Dini, P.; Elhanashi, A.; Begni, A.; Saponara, S.; Zheng, Q.; Gasmi, K. Overview on Intrusion Detection Systems Design Exploiting Machine Learning for Networking Cybersecurity. Appl. Sci. 2023, 13, 7507.

- Arshadi, L.; Jahangir, A.H. Benford’s law behavior of Internet traffic. J. Netw. Comput. Appl. 2014, 40, 194–205.

- Sun, L.; Anthony, T.S.; Xia, H.Z.; Chen, J.; Huang, X.; Zhang, Y. Detection and classification of malicious patterns in network traffic using Benford’s law. In Proceedings of the 2017 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Kuala Lumpur, Malaysia, 12–15 December 2017; IEEE: New York, NY, USA, 2017.

- Sethi, K.; Kumar, R.; Prajapati, N.; Bera, P. A Lightweight Intrusion Detection System using Benford’s Law and Network Flow Size Difference. In Proceedings of the 2020 International Conference on COMmunication Systems & NETworkS (COMSNETS), Bengaluru, India, 7–11 January 2020; IEEE: New York, NY, USA, 2020.

- Nigrini, M.J. Benford’s Law: Applications for Forensic Accounting, Auditing, and Fraud Detection; John Wiley & Sons: Hoboken, NJ, USA, 2012; Volume 586.

- Cerqueti, R.; Maggi, M. Data validity and statistical conformity with Benford’s Law. Chaos Solitons Fractals 2021, 144, 110740.

- Thottan, M.; Ji, C. Anomaly detection in IP networks. IEEE Trans. Signal Process. 2003, 51, 2191–2204.

- Wang, Y. Statistical Techniques for Network Security; Information Science Reference: Hershey, PA, USA, 2008; p. 476.

- Ahmed, M.; Naser Mahmood, A.; Hu, J. A survey of network anomaly detection techniques. J. Netw. Comput. Appl. 2016, 60, 19–31.

- Hero, A.; Kar, S.; Moura, J.; Neil, J.; Poor, H.V.; Turcotte, M.; Xi, B. Statistics and Data Science for Cybersecurity. Harv. Data Sci. Rev. 2023, 5.

- Iorliam, A. Natural Laws (Benford’s Law and Zipf’s Law) for Network Traffic Analysis. In Cybersecurity in Nigeria; Springer International Publishing: Cham, Switzerland, 2019; pp. 3–22.

- Sun, L.; Ho, A.; Xia, Z.; Chen, J.; Zhang, M. Development of an Early Warning System for Network Intrusion Detection Using Benford’s Law Features. In Communications in Computer and Information Science; Springer: Singapore, 2019; pp. 57–73.

- Hajdarevic, K.; Pattinson, C.; Besic, I. Improving Learning Skills in Detection of Denial of Service Attacks with Newcombe–Benford’s Law using Interactive Data Extraction and Analysis. TEM J. 2022, 11, 527–534.

- Mbona, I.; Eloff, J.H. Feature selection using Benford’s law to support detection of malicious social media bots. Inf. Sci. 2022, 582, 369–381.

- Campanelli, L. On the Euclidean distance statistic of Benford’s law. Commun. Stat. Theory Methods 2022, 53, 451–474.

- Kossovsky, A.E. On the Mistaken Use of the Chi-Square Test in Benford’s Law. Stats 2021, 4, 419–453.

- Fernandes, P.; Antunes, M. Benford’s law applied to digital forensic analysis. Forensic Sci. Int. Digit. Investig. 2023, 45, 301515.

- Berger, A.; Hill, T.P. The mathematics of Benford’s law: A primer. Stat. Methods Appl. 2020, 30, 779–795.

- Wang, L.; Ma, B.Q. A concise proof of Benford’s law. Fundam. Res. 2023, in press.

- Bunn, D.W.; Gianfreda, A.; Kermer, S. A Trading-Based Evaluation of Density Forecasts in a Real-Time Electricity Market. Energies 2018, 11, 2658.

- Andriulli, M.; Starling, J.K.; Schwartz, B. Distributional Discrimination Using Kolmogorov-Smirnov Statistics and Kullback-Leibler Divergence for Gamma, Log-Normal, and Weibull Distributions. In Proceedings of the 2022 Winter Simulation Conference (WSC), Singapore, 11–14 December 2022; IEEE: New York, NY, USA, 2022.

- Pham-Gia, T.; Hung, T. The mean and median absolute deviations. Math. Comput. Model. 2001, 34, 921–936.

- Fernandes, P.; Ciardhuáin, S.Ó.; Antunes, M. Uncovering Manipulated Files Using Mathematical Natural Laws. In Lecture Notes in Computer Science; Springer Nature: Cham, Switzerland, 2023; pp. 46–62.

- Bulinski, A.; Dimitrov, D. Statistical Estimation of the Kullback–Leibler Divergence. Mathematics 2021, 9, 544.

- Li, J.; Fu, H.; Hu, K.; Chen, W. Data Preprocessing and Machine Learning Modeling for Rockburst Assessment. Sustainability 2023, 15, 13282.

- Zaidi, Z.R.; Hakami, S.; Landfeldt, B.; Moors, T. Real-time detection of traffic anomalies in wireless mesh networks. Wirel. Netw. 2009, 16, 1675–1689.

- Zhou, W.; Lv, Z.; Li, G.; Jiao, B.; Wu, W. Detection of Spoofing Attacks on Global Navigation Satellite Systems Using Kolmogorov–Smirnov Test-Based Signal Quality Monitoring Method. IEEE Sens. J. 2024, 24, 10474–10490.

- Bouyeddou, B.; Harrou, F.; Kadri, B.; Sun, Y. Detecting network cyber-attacks using an integrated statistical approach. Clust. Comput. 2020, 24, 1435–1453.

- Bouyeddou, B.; Harrou, F.; Sun, Y.; Kadri, B. Detection of smurf flooding attacks using Kullback-Leibler-based scheme. In Proceedings of the 2018 4th International Conference on Computer and Technology Applications (ICCTA), Istanbul, Turkey, 3–5 May 2018; IEEE: New York, NY, USA, 2018.

- Romo-Chavero, M.A.; Cantoral-Ceballos, J.A.; Pérez-Díaz, J.A.; Martinez-Cagnazzo, C. Median Absolute Deviation for BGP Anomaly Detection. Future Internet 2024, 16, 146.

- Ham, H.; Park, T. Combining p-values from various statistical methods for microbiome data. Front. Microbiol. 2022, 13, 990870.

- Borenstein, M.; Hedges, L.; Higgins, J.; Rothstein, H. Introduction to Meta-Analysis; Wiley: Hoboken, NJ, USA, 2011.

- Chen, Z. Optimal Tests for Combining p-Values. Appl. Sci. 2021, 12, 322.

- Sharafaldin, I.; Lashkari, A.H.; Ghorbani, A.A. Toward Generating a New Intrusion Detection Dataset and Intrusion Traffic Characterization. In Proceedings of the International Conference on Information Systems Security and Privacy, Madeira, Portugal, 22–24 January 2018.

- UNB. Intrusion Detection Evaluation Dataset. 2017. Available online: https://www.unb.ca/cic/datasets/ids-2017.html (accessed on 1 July 2024).

- Lashkari, A.H. CICFlowMeter; GitHub: San Francisco, CA, USA, 2021.

- Davis, J.; Goadrich, M. The relationship between Precision-Recall and ROC curves. In Proceedings of the 23rd International Conference on Machine Learning (ICML ’06), Pittsburgh, PA, USA, 25–29 June 2006; ACM Press: New York, NY, USA, 2006.

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic Minority Over-sampling Technique. J. Artif. Intell. Res. 2002, 16, 321–357.

- Ferreira, S.; Antunes, M.; Correia, M.E. A Dataset of Photos and Videos for Digital Forensics Analysis Using Machine Learning Processing. Data 2021, 6, 87.

| First Digit | Cumulative Frequency for Flow 14 | Cumulative Frequency for Benford’s Law | Absolute Difference |

|---|---|---|---|

| 1 | 0.2222 | 0.3010 | 0.0788 |

| 2 | 0.2778 | 0.4771 | 0.1993 |

| 3 | 0.3889 | 0.6021 | 0.2132 |

| 4 | 0.4444 | 0.6990 | 0.2545 |

| 5 | 0.5556 | 0.7782 | 0.2226 |

| 6 | 0.7222 | 0.8451 | 0.1229 |

| 7 | 0.8889 | 0.9031 | 0.0142 |

| 8 | 0.9444 | 0.9542 | 0.0098 |

| 9 | 1 | 1 | 0 |

- Calculate the empirical cumulative distribution function $F_{obs}(d)$ of the first digits of the flow;

- Calculate the cumulative distribution function $F_{BL}(d)$ of Benford’s Law;

- Calculate $|F_{obs}(d) - F_{BL}(d)|$ for each digit $d$, as required by the Kolmogorov–Smirnov statistic in Equation (7);

- The statistic $D$ is the largest of the values calculated in the previous step;

- Compare $D$ with the critical value $D_{\alpha}$;

- If $D < D_{\alpha}$, there is not enough statistical evidence to reject $H_0$.
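The steps above can be traced on flow 14 from the cumulative-frequency table. The sketch assumes digit counts of 4, 1, 2, 1, 2, 3, 3, 1 and 1 (n = 18). These counts are inferred because they reproduce every cumulative frequency in the table; the table itself does not state them.

It also assumes the asymptotic critical value D_α ≈ 1.36/√n for α = 0.05. The critical values used by the authors are not given in the text, and for a sample this small an exact table value would be somewhat lower. All names are illustrative.

```python
import math

# Digit counts for flow 14, inferred from its cumulative frequencies (consistent with n = 18)
FLOW_14_COUNTS = [4, 1, 2, 1, 2, 3, 3, 1, 1]


def empirical_cdf(counts):
    """Cumulative relative frequency F_obs(d) for d = 1..9."""
    n, running, cdf = sum(counts), 0, []
    for c in counts:
        running += c
        cdf.append(running / n)
    return cdf


def benford_cdf():
    """Benford cumulative distribution F_BL(d) = log10(d + 1)."""
    return [math.log10(d + 1) for d in range(1, 10)]


def ks_against_benford(counts, c_alpha=1.36):
    """Return (D, D_alpha, keep_h0) using the asymptotic critical value c_alpha / sqrt(n)."""
    gaps = [abs(o - b) for o, b in zip(empirical_cdf(counts), benford_cdf())]
    d = max(gaps)                               # KS statistic: largest gap
    d_alpha = c_alpha / math.sqrt(sum(counts))  # approximate critical value
    return d, d_alpha, d < d_alpha              # True -> not enough evidence to reject H0


d, d_alpha, keep_h0 = ks_against_benford(FLOW_14_COUNTS)
print(f"D = {d:.4f}, D_alpha = {d_alpha:.4f}, keep H0: {keep_h0}")
# D = 0.2545, D_alpha = 0.3206, keep H0: True
```

The largest gap occurs at digit 4, matching the 0.2545 in the table. Under these assumptions flow 14 would not be flagged by the KS test at the 5% level.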

| Destination Port | Bwd Packet Length Max | Fwd IAT Total | Fwd Flags |

| Flow Duration | Bwd Packet Length Min | Fwd IAT Mean | Bwd PSH Flags |

| Total Fwd Packets | Bwd Packet Length Mean | Fwd IAT Std | Fwd URG Flags |

| Total Backward Packets | Bwd Packet Length Std | Fwd IAT Max | Bwd URG Flags |

| Total Length of Fwd Packets | Flow Bytes/s | Fwd IAT Min | Fwd Header Length |

| Total Length of Bwd Packets | Flow Packets/s | Bwd IAT Total | Bwd Header Length |

| Fwd Packet Length Max | Flow IAT Mean | Bwd IAT Mean | Fwd Packets/s |

| Fwd Packet Length Min | Flow IAT Std | Bwd IAT Std | Bwd Packets/s |

| Fwd Packet Length Mean | Flow IAT Max | Bwd IAT Max | Min Packet Length |

| Fwd Packet Length Std | Flow IAT Min | Bwd IAT Min | Max Packet Length |

| Packet Length Mean | ECE Flag Count | Bwd Avg Packets/Bulk | Active Mean |

| Packet Length Std | Down/Up Ratio | Bwd Avg Bulk Rate | Active Std |

| Packet Length Variance | Average Packet Size | Subflow Fwd Packets | Active Max |

| FIN Flag Count | Avg Fwd Segment Size | Subflow Fwd Bytes | Active Min |

| SYN Flag Count | Avg Bwd Segment Size | Subflow Bwd Packets | Idle Mean |

| RST Flag Count | Fwd Header Length_1 | Subflow Bwd Bytes | Idle Std |

| PSH Flag Count | Fwd Avg Bytes/Bulk | Init_Win_bytes_forward | Idle Max |

| ACK Flag Count | Fwd Avg Packets/Bulk | Init_Win_bytes_backward | Idle Min |

| URG Flag Count | Fwd Avg Bulk Rate | act_data_pkt_fwd | |

| CWE Flag Count | Bwd Avg Bytes/Bulk | min_seg_size_forward | |

| Statistical Test | Parameters | Settings | Threshold Setting | Decision Rule |

|---|---|---|---|---|

| Kolmogorov–Smirnov test | Significance level α. Sample size: 29,000 flows. | The distribution of digit occurrence frequencies was calculated and compared with the distribution expected by Benford’s Law. The KS test was applied to find the largest difference between the cumulative distributions of the observed data and Benford’s Law. | Thresholds were established for the 1%, 5%, and 10% significance levels. | Flows whose p-values were lower than the significance level were considered malicious. |

| Kullback–Leibler divergence | A small constant ε was added to all observed probabilities to avoid division by zero, and the probabilities were renormalized to sum to 1. | The probability distributions of the first digit of each feature in the dataset and of Benford’s Law were calculated. The KL divergence was then calculated to measure the difference between the observed distribution of digits and the distribution expected by Benford’s Law. | | |

| Mean Absolute Deviation | The first digit of each value in the dataset was considered. | The MAD was calculated to measure the difference between the observed distribution of digits and the distribution expected by Benford’s Law. | | |

| Weekday | Type of Activity | Benign Flows | Malicious Flows |

|---|---|---|---|

| Monday | Only benign flows | 2000 | - |

| Tuesday | Benign flows | 2000 | - |

| | FTP-Patator | - | 2000 |

| | SSH-Patator | - | 2000 |

| Wednesday | Benign flows | 2000 | - |

| | DoS/DDoS | - | 2000 |

| | DoS slowloris | - | 2000 |

| | DoS Slowhttptest | - | 2000 |

| | DoS Hulk | - | 2000 |

| | DoS GoldenEye | - | 2000 |

| Thursday | Benign flows | 2000 | - |

| | Web Attack – Brute Force | - | 1000 |

| | Web Attack – XSS | - | 1000 |

| | Web Attack – Sql Injection | - | 1000 |

| | Infiltration | - | 1000 |

| Friday | Benign flows | 2000 | - |

| | DDoS LOIT | - | 1000 |

| Total | | 10,000 | 19,000 |

| | | Predicted Observation: Positive | Predicted Observation: Negative |

|---|---|---|---|

| Real Observation | Positive | Malicious network flow: True positive (TP) | Malicious network flow rated as benign: False negative (FN) |

| | Negative | Benign network flow rated as malicious: False positive (FP) | Benign network flow: True negative (TN) |
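The evaluation metrics follow directly from the four cells of the confusion matrix. The sketch below uses the standard definitions of precision, recall, F1-score and accuracy. As a check, it feeds in the first-stage counts reported for MAD at the 0.1 significance level, and it reproduces the published values for that row. The helper name is hypothetical, and returning 0.0 when a denominator is zero is a convention chosen here (for example, for the MAD row at 0.01, where no flow is flagged).

```python
def classification_metrics(tp, tn, fp, fn):
    """Precision, recall, F1-score and accuracy from confusion-matrix counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}


# Counts reported for MAD at the 0.1 significance level (first stage)
mad_01 = classification_metrics(tp=17_143, tn=1_726, fp=8_274, fn=1_857)
print({name: round(value, 4) for name, value in mad_01.items()})
# {'precision': 0.6745, 'recall': 0.9023, 'f1': 0.7719, 'accuracy': 0.6507}
```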

| Bwd Packet Length Mean | Fwd IAT Total | Flow IAT Mean | Packet Length Std |

| Flow Duration | Fwd IAT Mean | Flow IAT Std | Packet Length Variance |

| Total Fwd Packets | Fwd IAT Std | Flow IAT Max | Down/Up Ratio |

| Total Backward Packets | Fwd IAT Max | Subflow Fwd Packets | Avg Fwd Segment Size |

| Total Length of Fwd Packets | Fwd IAT Min | Subflow Fwd Bytes | Avg Bwd Segment Size |

| Total Length of Bwd Packets | Bwd IAT Total | Subflow Bwd Packets | Max Packet Length |

| Fwd Packet Length Mean | Bwd IAT Std | Subflow Bwd Bytes | Packet Length Mean |

| Fwd Packet Length Std | Bwd IAT Max | act_data_pkt_fwd | Flow Packets/s |

| Fwd Packets/s | Bwd Packet Length Std | Active Mean | Idle Std |

| Bwd Packets/s | Flow Bytes/s | Active Std | Active Max |

| Active Min |

| Distance Function | Degree of Significance | TP | TN | FP | FN |

|---|---|---|---|---|---|

| MAD | 0.05 | 3745 | 7495 | 2505 | 15,255 |

| | 0.01 | 0 | 10,000 | 0 | 19,000 |

| | 0.1 | 17,143 | 1726 | 8274 | 1857 |

| KS test | 0.05 | 8996 | 3826 | 6174 | 10,004 |

| | 0.01 | 4783 | 6026 | 3974 | 14,217 |

| | 0.1 | 13,504 | 2522 | 7478 | 5496 |

| Kullback–Leibler | 0.05 | 3053 | 8447 | 1553 | 15,947 |

| | 0.01 | 1126 | 9304 | 696 | 17,874 |

| | 0.1 | 3359 | 7841 | 2159 | 15,641 |

| Distance Function | Precision | Recall | F1-Score | Accuracy |

|---|---|---|---|---|

| MAD (α = 0.1) | 0.6745 | 0.9023 | 0.7719 | 0.6507 |

| KS test (α = 0.1) | 0.6436 | 0.7107 | 0.6755 | 0.5526 |

| Kullback–Leibler (α = 0.05) | 0.6628 | 0.1607 | 0.2587 | 0.3966 |

| Flow Packets/s | Bwd IAT Max | Flow IAT Mean | Packet Length Std |

| Flow Duration | Fwd IAT Mean | Flow IAT Std | Packet Length Variance |

| Total Fwd Packets | Fwd IAT Std | Active Std | Down/Up Ratio |

| Total Backward Packets | Bwd IAT Total | Subflow Fwd Packets | Idle Std |

| Total Length of Fwd Packets | Bwd IAT Std | Subflow Fwd Bytes | Bwd Packets/s |

| Total Length of Bwd Packets | Bwd Packet Length Std | Subflow Bwd Packets | act_data_pkt_fwd |

| Fwd Packets/s | Flow Bytes/s | Subflow Bwd Bytes | |

| Distance Function | Degree of Significance | TP | TN | FP | FN |

|---|---|---|---|---|---|

| MAD | 0.1 | 16,389 | 1788 | 8212 | 2611 |

| KS test | 0.1 | 13,504 | 2522 | 7478 | 5496 |

| Kullback–Leibler | 0.05 | 2715 | 8290 | 1710 | 16,285 |

| Distance Function | Precision | Recall | F1-Score | Accuracy |

|---|---|---|---|---|

| MAD (α = 0.1) | 0.6662 | 0.8626 | 0.7518 | 0.6268 |

| KS test (α = 0.1) | 0.6436 | 0.7107 | 0.6755 | 0.5526 |

| Kullback–Leibler (α = 0.05) | 0.6136 | 0.1429 | 0.2318 | 0.3795 |

| Flow Duration | Bwd Packets/s |

| Total Backward Packets | Packet Length Std |

| Total Length of Fwd Packets | Packet Length Variance |

| Bwd Packet Length Std | Subflow Fwd Packets |

| Flow Bytes/s | Subflow Bwd Packets |

| Flow Packets/s | act_data_pkt_fwd |

| Flow IAT Mean | Active Std |

| Flow IAT Std | Idle Std |

| Fwd IAT Mean | Bwd IAT Std |

| Fwd IAT Std | Fwd Packets/s |

| Distance Function | Degree of Significance | TP | TN | FP | FN |

|---|---|---|---|---|---|

| MAD | 0.1 | 15,757 | 1938 | 8062 | 3243 |

| KS test | 0.1 | 13,504 | 2522 | 7478 | 5496 |

| Kullback-Leibler | 0.05 | 2735 | 8197 | 1803 | 16,265 |

| Class | Flow | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | Decision by MAD (α = 0.1) | Original Label |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Benign | 2 | 0.6667 | 0.3333 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| | 81 | 0.5384 | 0 | 0.1538 | 0.0769 | 0 | 0.0769 | 0.1538 | 0 | 0 | 1 | 0 |

| Malicious | 23777 | 0.6250 | 0 | 0.125 | 0 | 0 | 0.25 | 0 | 0 | 0 | 0 | 1 |

| | 28690 | 0.5000 | 0.2500 | 0 | 0 | 0.0833 | 0 | 0.1666 | 0 | 0 | 1 | 1 |

| Flow Duration | Packet Length Mean |

| Fwd Packet Length Mean | Packet Length Std |

| Fwd Packet Length Std | Packet Length Variance |

| Bwd Packet Length Mean | Avg Fwd Segment Size |

| Flow Bytes/s | Avg Bwd Segment Size |

| Flow Packets/s | Subflow Fwd Packets |

| Flow IAT Mean | Subflow Fwd Bytes |

| Flow IAT Std | Subflow Bwd Packets |

| Fwd Packets/s | Avg Fwd Segment Size |

| Max Packet Length | Avg Bwd Segment Size |

| Features | Correlation | Features | Correlation |

|---|---|---|---|

| Bwd Packet Length Min | Bwd Avg Packets/Bulk | - | |

| Flow IAT Min | Bwd Avg Bulk Rate | - | |

| Average Packet Size | Init_Win_bytes_backward | ||

| Fwd Avg Bytes/Bulk | - |

| Distance Function | Degree of Significance | TP | TN | FP | FN |

|---|---|---|---|---|---|

| MAD | 0.1 | 15,686 | 1905 | 8095 | 3314 |

| KS test | 0.1 | 12,745 | 3998 | 6002 | 6255 |

| Kullback–Leibler | 0.05 | 3235 | 7978 | 2022 | 15,765 |

| Distance Function | Precision | Recall | F1-Score | Accuracy |

|---|---|---|---|---|

| MAD (α = 0.1) | 0.6596 | 0.8256 | 0.7333 | 0.6066 |

| KS test (α = 0.1) | 0.6798 | 0.6708 | 0.6753 | 0.5773 |

| Kullback–Leibler (α = 0.05) | 0.6154 | 0.1703 | 0.2667 | 0.3867 |

| Ensemble Method | Degree of Significance | TP | TN | FP | FN |

|---|---|---|---|---|---|

| Fisher method | 0.05 | 8733 | 5360 | 4640 | 10,267 |

| | 0.01 | 4136 | 7622 | 2378 | 14,864 |

| | 0.1 | 12,885 | 3134 | 6866 | 6115 |

| Tippett method | 0.05 | 18,890 | 204 | 9796 | 110 |

| | 0.01 | 2448 | 9211 | 789 | 16,552 |

| | 0.1 | 19,000 | 0 | 10,000 | 0 |

| Ensemble Method | Precision | Recall | F1-Score | Accuracy |

|---|---|---|---|---|

| Fisher method (α = 0.1) | 0.6524 | 0.6782 | 0.6650 | 0.5524 |

| Tippett method (α = 0.05) | 0.6585 | 0.9942 | 0.7923 | 0.6584 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fernandes, P.; Ciardhuáin, S.Ó.; Antunes, M. Unveiling Malicious Network Flows Using Benford’s Law. Mathematics 2024, 12, 2299. https://doi.org/10.3390/math12152299
