Optimizing Electric Vehicle (EV) Charging with Integrated Renewable Energy Sources: A Cloud-Based Forecasting Approach for Eco-Sustainability

Abstract

1. Introduction

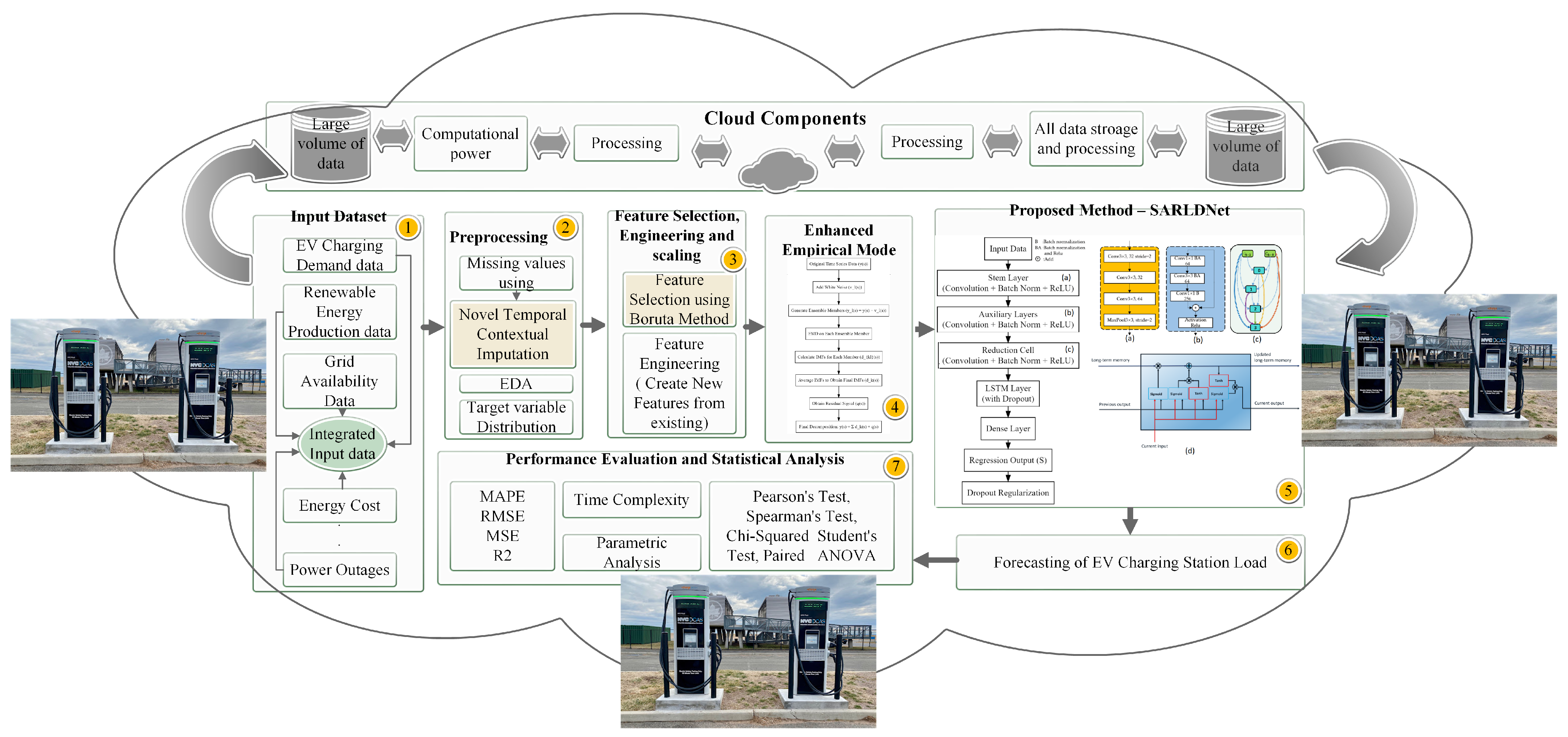

1.1. A Cloud-Based Forecasting Approach for Eco-Sustainability

1.2. Integrating Renewable Energy Sources into Electric Vehicle Charging Infrastructure

1.3. Contributions

- Temporal Contextual Imputation (TCI): introduced a new method for handling missing values in time series that preserves temporal dynamics and keeps EVC station load forecasts contextually consistent.

- Integration of renewable energy and weather data: incorporated solar and wind energy statistics into EVC station energy consumption forecasting across multiple California locations.

- Enhanced EMD signal decomposition: implemented empirical mode decomposition (EMD) to extract temporal patterns from EVC station load data, enhancing forecasting accuracy.

- Feature engineering: used the Boruta method for feature selection and created new derived features that capture inherent relationships in the data.

- SARLDNet architecture development: introduced SARLDNet (stem-auxiliary-reduction-LSTM-dense network), a novel deep learning architecture optimized for robust EVC station load forecasting.

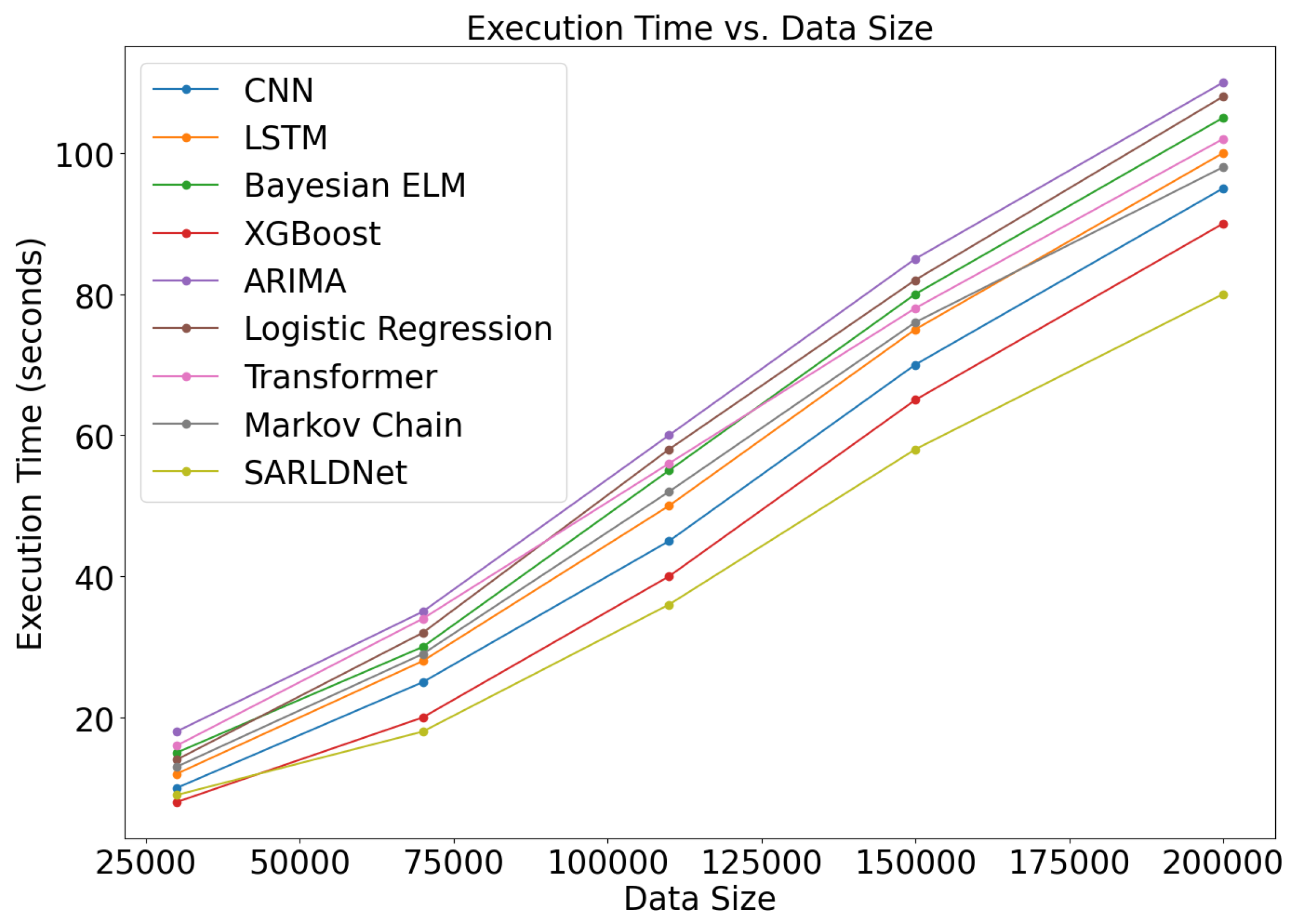

- Execution efficiency: achieved short execution times and low computational complexity with SARLDNet, supporting accurate and scalable forecasting.

1.4. Organization of Paper

2. Related Work

3. Proposed Methodology

3.1. Impact of Key Features

- Weather conditions: Temperature, humidity, and wind speed are crucial factors that directly influence renewable energy production. For instance, solar energy production mainly depends on sunlight, which is subject to atmospheric influences. By incorporating these features, our model can more precisely account for variations in renewable energy supply.

- Time of day and day of week: these attributes capture daily and weekly fluctuations in electric vehicle charging demand. Knowing peak utilization times supports better demand forecasting and resource allocation.

- Historical charging patterns: Analyzing historical charging patterns offers valuable insights into utilization trends and enhances the accuracy of future demand predictions.
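The time-of-day and day-of-week attributes can be derived directly from the dataset's Date and Time (hour) columns. The Python sketch below assumes hourly records given as `(date, hour, demand_kw)` tuples; the function names and tuple layout are hypothetical, not taken from the paper. It also averages demand per hour to locate the peak-utilization hour.

```python
from collections import defaultdict
from datetime import date


def calendar_features(day, hour):
    """Time-of-day and day-of-week attributes for one hourly record."""
    if not 0 <= hour <= 23:
        raise ValueError("hour must be in 0..23")
    weekday = day.weekday()  # Monday = 0 ... Sunday = 6
    return {"hour": hour, "day_of_week": weekday, "is_weekend": weekday >= 5}


def peak_hour(records):
    """Hour of day with the highest mean charging demand.

    records: iterable of (date, hour, demand_kw) tuples (hypothetical layout).
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for _day, hour, demand in records:
        totals[hour] += demand
        counts[hour] += 1
    return max(totals, key=lambda h: totals[h] / counts[h])


# 6 January 2024 was a Saturday.
print(calendar_features(date(2024, 1, 6), 18))
# {'hour': 18, 'day_of_week': 5, 'is_weekend': True}
```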

3.2. Grid Stability Index Calculation

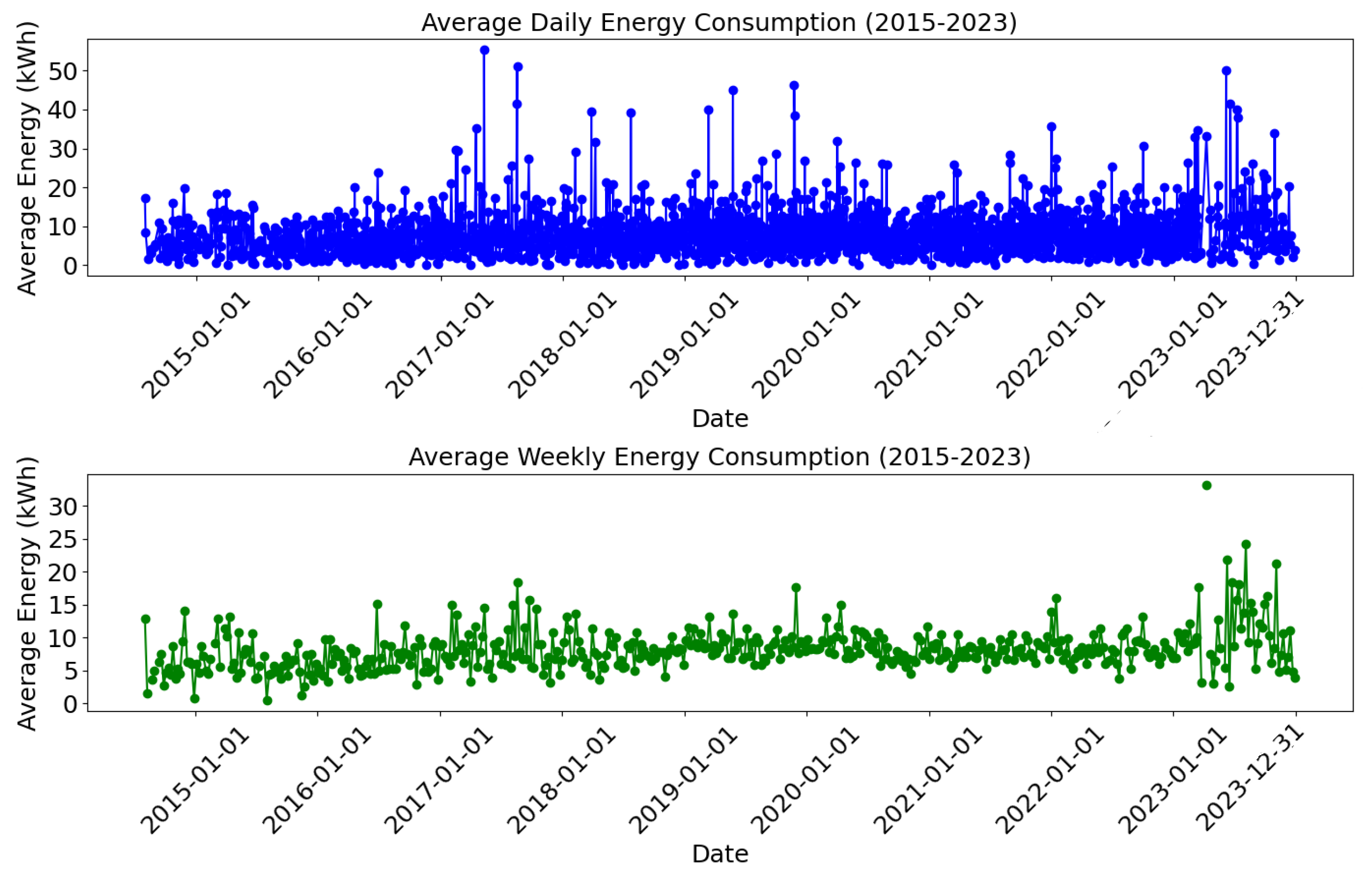

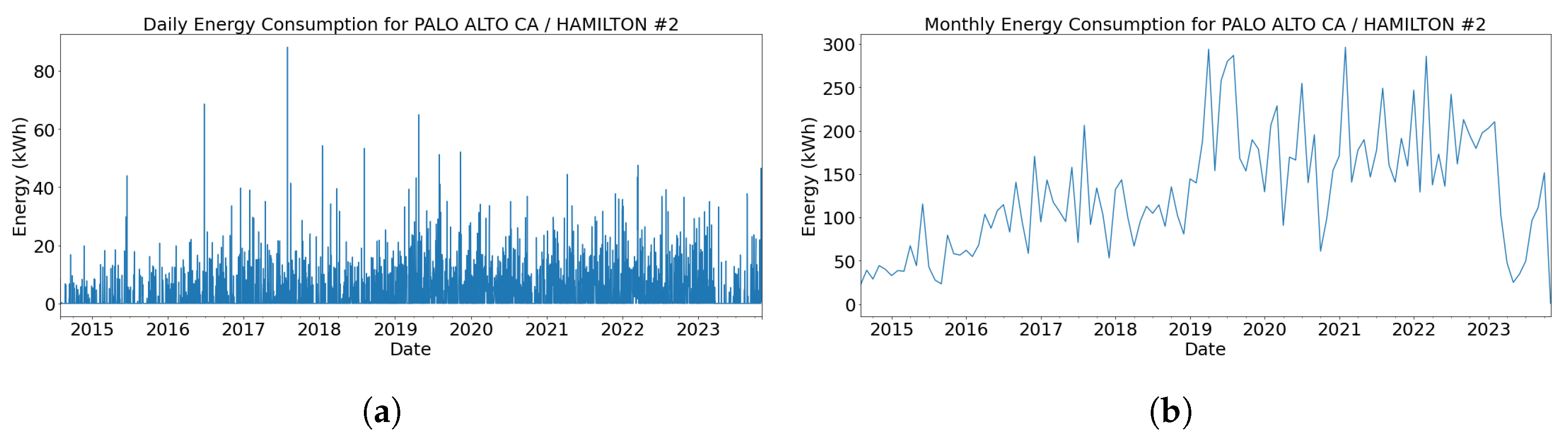

3.3. Dataset Description

3.3.1. Derived Features

3.4. Missing Values Imputation Using Novel Temporal Contextual Imputation (TCI) Method

- Autocorrelation analysis: we calculate each feature’s autocorrelation function (ACF) to identify temporal dependencies.

- Seasonal decomposition: using Seasonal and Trend decomposition using Loess (STL), we separate the time series into three parts: trend, seasonal, and residual. The additive decomposition of the time series is $X_t = T_t + S_t + R_t$, where $T_t$ is the trend component, $S_t$ is the seasonal component, and $R_t$ is the residual component.

- Feature correlation analysis: we calculate the correlation between different features to understand their interdependencies.

- Cluster analysis: we group similar data points using clustering algorithms such as K-means to identify patterns and relationships within the data.

- Local context extraction: for each missing value $x_i$, we extract the local context from neighboring data points within a defined temporal window and from similar contexts identified through clustering. The local context mean is $\mu^{\mathrm{local}}_i = \frac{1}{|\mathcal{W}_i|}\sum_{j \in \mathcal{W}_i} x_j$, where $\mathcal{W}_i$ is the set of indices of observed values within the temporal window around $i$.

- Contextual weighting: we compute each imputed value as a weighted combination of neighboring observed values and values from similar contexts, with weights determined by temporal proximity and contextual similarity. The cluster context mean is $\mu^{\mathrm{cluster}}_i = \frac{1}{|\mathcal{C}_i|}\sum_{j \in \mathcal{C}_i} x_j$, where $\mathcal{C}_i$ is the set of indices of observed data points in the same cluster as $i$. The imputed value is the weighted average of the local and cluster context means, $\hat{x}_i = \alpha\,\mu^{\mathrm{local}}_i + (1-\alpha)\,\mu^{\mathrm{cluster}}_i$, where $\alpha \in [0, 1]$ is a weighting factor that balances the contributions of the two contexts.

- Iterative refinement: we refine the imputed values through multiple iterations to ensure convergence and consistency with the observed data.

- Preservation of temporal dynamics: by analyzing temporal dependencies and applying seasonal decomposition, TCI ensures that the imputed values reflect the temporal patterns of the original data.

- Contextual consistency: using clustering and feature correlation analysis allows TCI to maintain the contextual relationships within the data, ensuring more accurate imputations.

- Flexibility: the iterative refinement process and the use of both local and contextual information make TCI robust to varying patterns and distributions in the data.
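To make the autocorrelation and decomposition steps concrete, the sketch below computes the sample ACF and an additive split $X_t = T_t + S_t + R_t$. Note the substitution: it uses a classical centered-moving-average decomposition rather than STL, which fits the trend and seasonal parts with Loess smoothing. The function names and the `period` parameter (for example, 24 for hourly data with a daily cycle) are illustrative assumptions.

```python
def acf(x, max_lag):
    """Sample autocorrelation r_k for lags k = 0..max_lag (x must not be constant)."""
    n = len(x)
    mean = sum(x) / n
    dev = [v - mean for v in x]
    denom = sum(d * d for d in dev)
    return [sum(dev[t] * dev[t + k] for t in range(n - k)) / denom
            for k in range(max_lag + 1)]


def centered_moving_average(x, period):
    """Trend estimate; None where the centered window does not fit."""
    half = period // 2
    if period % 2:  # odd period: plain moving average of length `period`
        weights = [1.0 / period] * period
    else:           # even period: 2 x period average (half weights at the ends)
        weights = [0.5 / period] + [1.0 / period] * (period - 1) + [0.5 / period]
    trend = [None] * len(x)
    for t in range(half, len(x) - half):
        window = x[t - half:t + half + 1]
        trend[t] = sum(w * v for w, v in zip(weights, window))
    return trend


def additive_decompose(x, period):
    """Classical additive decomposition x_t = T_t + S_t + R_t (stand-in for STL)."""
    if len(x) < 2 * period:
        raise ValueError("need at least two full seasonal cycles")
    trend = centered_moving_average(x, period)
    detrended = [v - m if m is not None else None for v, m in zip(x, trend)]
    phase_means = []
    for k in range(period):
        vals = [d for i, d in enumerate(detrended) if i % period == k and d is not None]
        phase_means.append(sum(vals) / len(vals))
    offset = sum(phase_means) / period  # center the seasonal component on zero
    seasonal = [phase_means[i % period] - offset for i in range(len(x))]
    resid = [v - m - s if m is not None else None
             for v, m, s in zip(x, trend, seasonal)]
    return trend, seasonal, resid
```

A strong peak in `acf` at lag `period` is the usual cue for choosing the seasonal period before decomposing.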

Algorithm 1 Temporal Contextual Imputation (TCI) method

Require: X (dataset with missing values), $f$ (the feature to impute), W (temporal window size), n (number of clusters), $\alpha$ (weighting factor)

Ensure: Imputed dataset X

1. Initialize.
2. Calculate the autocorrelation function (ACF) and perform STL decomposition to obtain $T_t$, $S_t$, and $R_t$ for $f$.
3. Perform feature correlation analysis to identify interdependencies between features in X.
4. Apply K-means clustering to identify n clusters based on relevant features.
5. Assign each data point in X to its corresponding cluster.
6. For each missing value $x_i$ in $f$:
   - Extract the temporal window $\mathcal{W}_i$.
   - Calculate the local context mean $\mu^{\mathrm{local}}_i$.
   - Identify the cluster to which data point $i$ belongs and extract the cluster context $\mathcal{C}_i$.
   - Calculate the cluster context mean $\mu^{\mathrm{cluster}}_i$.
   - Calculate the imputed value $\hat{x}_i = \alpha\,\mu^{\mathrm{local}}_i + (1-\alpha)\,\mu^{\mathrm{cluster}}_i$.
   - Update $x_i \leftarrow \hat{x}_i$.
7. Return the imputed dataset X.
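A minimal Python sketch of the imputation loop follows. It assumes the cluster labels have already been produced by a K-means step (for example, scikit-learn's `KMeans`) and treats W as the half-width of the window on each side of $i$. Reading "iterative refinement" as repeated passes that may reuse earlier imputations, stopping once estimates change by less than `tol`, is our interpretation; `tci_impute` and its parameters are hypothetical names.

```python
def _mean(xs):
    """Arithmetic mean, or None when there is nothing to average."""
    return sum(xs) / len(xs) if xs else None


def tci_impute(values, labels, window, alpha, n_iter=10, tol=1e-6):
    """Impute missing entries (None) of one feature with TCI-style weighting.

    values : list of floats; None marks a missing observation
    labels : cluster label for every time step (assumed precomputed by K-means)
    window : half-width W of the temporal window around index i
    alpha  : weight on the local context mean; 1 - alpha goes to the cluster mean
    """
    n = len(values)
    observed = [v is not None for v in values]
    filled = list(values)  # work on a copy; the input list is not modified
    missing = [i for i in range(n) if not observed[i]]

    def usable(i, j, first_pass):
        # First pass: observed neighbors only. Later passes may also reuse
        # imputations from earlier passes (iterative refinement).
        return j != i and filled[j] is not None and (observed[j] or not first_pass)

    for it in range(n_iter):
        first_pass = it == 0
        max_change = 0.0
        for i in missing:
            lo, hi = max(0, i - window), min(n, i + window + 1)
            mu_local = _mean([filled[j] for j in range(lo, hi) if usable(i, j, first_pass)])
            mu_cluster = _mean([filled[j] for j in range(n)
                                if labels[j] == labels[i] and usable(i, j, first_pass)])
            if mu_local is None and mu_cluster is None:
                continue  # no context yet; retry on the next pass
            if mu_local is None:
                new = mu_cluster
            elif mu_cluster is None:
                new = mu_local
            else:
                new = alpha * mu_local + (1 - alpha) * mu_cluster
            prev = filled[i]
            max_change = max(max_change, float("inf") if prev is None else abs(new - prev))
            filled[i] = new
        if max_change < tol:
            break
    return filled


print(tci_impute([1.0, 2.0, None, 4.0, 5.0], [0, 0, 0, 1, 1], window=1, alpha=0.5))
```

With one missing value, the local mean is (2 + 4) / 2 = 3 and the cluster mean is (1 + 2) / 2 = 1.5, so $\alpha = 0.5$ gives 2.25; raising $\alpha$ toward 1 shifts the estimate toward the temporal neighbors.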

3.5. Feature Selection Using the Boruta Method

Algorithm 2 Boruta feature selection algorithm

4. Enhanced Ensemble Empirical Mode Decomposition (EEMD) for Improved Load Forecasting

- Capturing complex dynamics: in charging demand data, EEMD can detect short-term variations, longer-term trends, and other complex nonlinear patterns.

- Improving model interpretability: the IMFs decomposed from the original time series help explain the daily, seasonal, and movement-related components that drive charging loads.

- Improving forecast accuracy: using the decomposed IMFs as additional features or inputs to the forecasting model lets us exploit information at different time scales, improving the accuracy of our load predictions.
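The NumPy sketch below illustrates the generic EEMD idea: run EMD's sifting procedure on many noise-perturbed copies of the load series and average the resulting IMFs. It is not the paper's enhanced variant, whose modifications are not specified here. To stay short, it uses linear envelopes through the local extrema (cubic splines are the usual choice), a fixed number of sifting iterations instead of a formal IMF stopping criterion, and averages IMFs by index even when trials yield different IMF counts. All function names and defaults are assumptions.

```python
import numpy as np


def _local_extrema(x):
    """Indices of interior local maxima and minima."""
    d = np.diff(x)
    maxima = np.where((d[:-1] > 0) & (d[1:] <= 0))[0] + 1
    minima = np.where((d[:-1] < 0) & (d[1:] >= 0))[0] + 1
    return maxima, minima


def _envelope(idx, x):
    """Linear envelope through x[idx], pinned to both end points."""
    knots = np.concatenate(([0], idx, [len(x) - 1]))
    return np.interp(np.arange(len(x)), knots, x[knots])


def emd(x, max_imfs=5, n_sift=10):
    """Simplified EMD: returns (list of IMFs, final residue)."""
    residue = np.asarray(x, dtype=float).copy()
    imfs = []
    for _ in range(max_imfs):
        maxima, minima = _local_extrema(residue)
        if len(maxima) < 2 or len(minima) < 2:
            break  # residue is close to monotonic: stop decomposing
        h = residue.copy()
        for _ in range(n_sift):
            mx, mn = _local_extrema(h)
            if len(mx) < 2 or len(mn) < 2:
                break
            h = h - 0.5 * (_envelope(mx, h) + _envelope(mn, h))  # remove local mean
        imfs.append(h)
        residue = residue - h
    return imfs, residue


def eemd(x, trials=50, noise_std=0.2, max_imfs=5, seed=0):
    """Ensemble EMD: average IMFs over noise-perturbed copies of x."""
    x = np.asarray(x, dtype=float)
    rng = np.random.default_rng(seed)
    sums = np.zeros((max_imfs, len(x)))
    counts = np.zeros(max_imfs)
    for _ in range(trials):
        noisy = x + rng.normal(0.0, noise_std * x.std(), size=len(x))
        imfs, _residue = emd(noisy, max_imfs=max_imfs)
        for k, imf in enumerate(imfs):
            sums[k] += imf
            counts[k] += 1
    keep = counts > 0
    return sums[keep] / counts[keep][:, None]


t = np.arange(240)  # ten days of synthetic hourly load
load = np.sin(2 * np.pi * t / 24) + 0.3 * np.sin(2 * np.pi * t / 6) + 0.01 * t
imfs, residue = emd(load)
components = eemd(load, trials=20)
```

Because each IMF is subtracted from the running residue, the IMFs plus the final residue reconstruct the input exactly. The averaged EEMD components serve as the multi-scale inputs described above.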

4.1. Proposed SARLDNet with Regularization

- Number of layers: indicates the depth of the model architecture. More layers let the model learn more intricate patterns but can raise computing requirements.

- Model complexity: indicates whether the model is basic or complex. Complex models often perform better but may need more resources and longer training.

- Filters: indicates whether the model uses convolutional filters, which convolutional neural networks use to extract features from input data.

- Large-scale data handling: indicates the model's capacity to process large datasets effectively, which real-world applications depend on.

- Regularization: indicates whether the model uses methods to reduce overfitting and improve generalization.

- Scalability: the capacity of the model to handle more data or more complex tasks without appreciable performance loss.

- Temporal dependency capture: indicates whether the model can capture and use temporal relationships in time series or sequential data, which is essential for forecasting.

- Time complexity: reflects the computation time the model needs to produce results. Efficient operation calls for models with lower time complexity.

4.2. Performance Evaluation Metrics

- Mean Absolute Error (MAE): measures the average magnitude of the differences between predicted and observed values: $\mathrm{MAE} = \frac{1}{M}\sum_{i=1}^{M} |y_i - \hat{y}_i|$, where $M$ is the total number of data points, $y_i$ are the observed values, and $\hat{y}_i$ are the predicted values.

- Mean Squared Error (MSE): quantifies the average squared difference between predicted and observed values [45]: $\mathrm{MSE} = \frac{1}{M}\sum_{i=1}^{M} (y_i - \hat{y}_i)^2$.

- Root Mean Squared Error (RMSE): the square root of the MSE, expressed in the same units as the original data [45]: $\mathrm{RMSE} = \sqrt{\mathrm{MSE}}$.

- R-squared ($R^2$): the proportion of the variance in the observed values that the model explains [45]: $R^2 = 1 - \frac{\sum_{i=1}^{M}(y_i - \hat{y}_i)^2}{\sum_{i=1}^{M}(y_i - \bar{y})^2}$, where $\bar{y}$ denotes the mean of the observed values $y_i$. Values closer to 1 indicate a better fit.

- Mean Absolute Percentage Error (MAPE): the average absolute percentage difference between predicted and observed values: $\mathrm{MAPE} = \frac{100\%}{M}\sum_{i=1}^{M}\left|\frac{y_i - \hat{y}_i}{y_i}\right|$.

- Explained Variance Score (EVS): the proportion of the variance in the observed values that the predictions explain: $\mathrm{EVS} = 1 - \frac{\mathrm{Var}(y - \hat{y})}{\mathrm{Var}(y)}$, where Var denotes variance.

- Cross-validation: techniques such as k-fold cross-validation partition the data into k subsets to test the model's robustness. MAE, MSE, RMSE, and $R^2$ are computed for each fold and averaged to evaluate performance across data splits.
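The metrics above, plus the median absolute error (MDAE) reported in the results table, can be sketched in plain Python as below, together with a simple k-fold splitter over contiguous folds. The function names are hypothetical. For time series, a forward-chaining split (train on the past, test on the future) avoids training on data that follows the test fold; the contiguous k-fold here mirrors the k-fold procedure described above.

```python
import math
import statistics


def regression_metrics(y_true, y_pred):
    """MAE, MSE, RMSE, R^2, MAPE, EVS and MDAE for paired observations."""
    m = len(y_true)
    errors = [y - p for y, p in zip(y_true, y_pred)]
    abs_errors = [abs(e) for e in errors]
    ss_res = sum(e * e for e in errors)
    mse = ss_res / m
    mean_y = sum(y_true) / m
    ss_tot = sum((y - mean_y) ** 2 for y in y_true)
    return {
        "MAE": sum(abs_errors) / m,
        "MSE": mse,
        "RMSE": math.sqrt(mse),
        "R2": 1 - ss_res / ss_tot,
        # MAPE is undefined when any observed value is zero.
        "MAPE": 100 / m * sum(abs(e / y) for e, y in zip(errors, y_true)),
        "EVS": 1 - statistics.pvariance(errors) / statistics.pvariance(y_true),
        "MDAE": statistics.median(abs_errors),
    }


def k_fold_indices(n, k):
    """Yield (train, test) index lists for k contiguous folds."""
    start = 0
    for fold in range(k):
        size = n // k + (1 if fold < n % k else 0)
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, test
        start += size


print(regression_metrics([3.0, 5.0, 2.0, 7.0], [2.5, 5.0, 3.0, 8.0])["RMSE"])  # 0.75
```

Averaging each metric over the `(train, test)` pairs from `k_fold_indices` gives the cross-validated scores.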

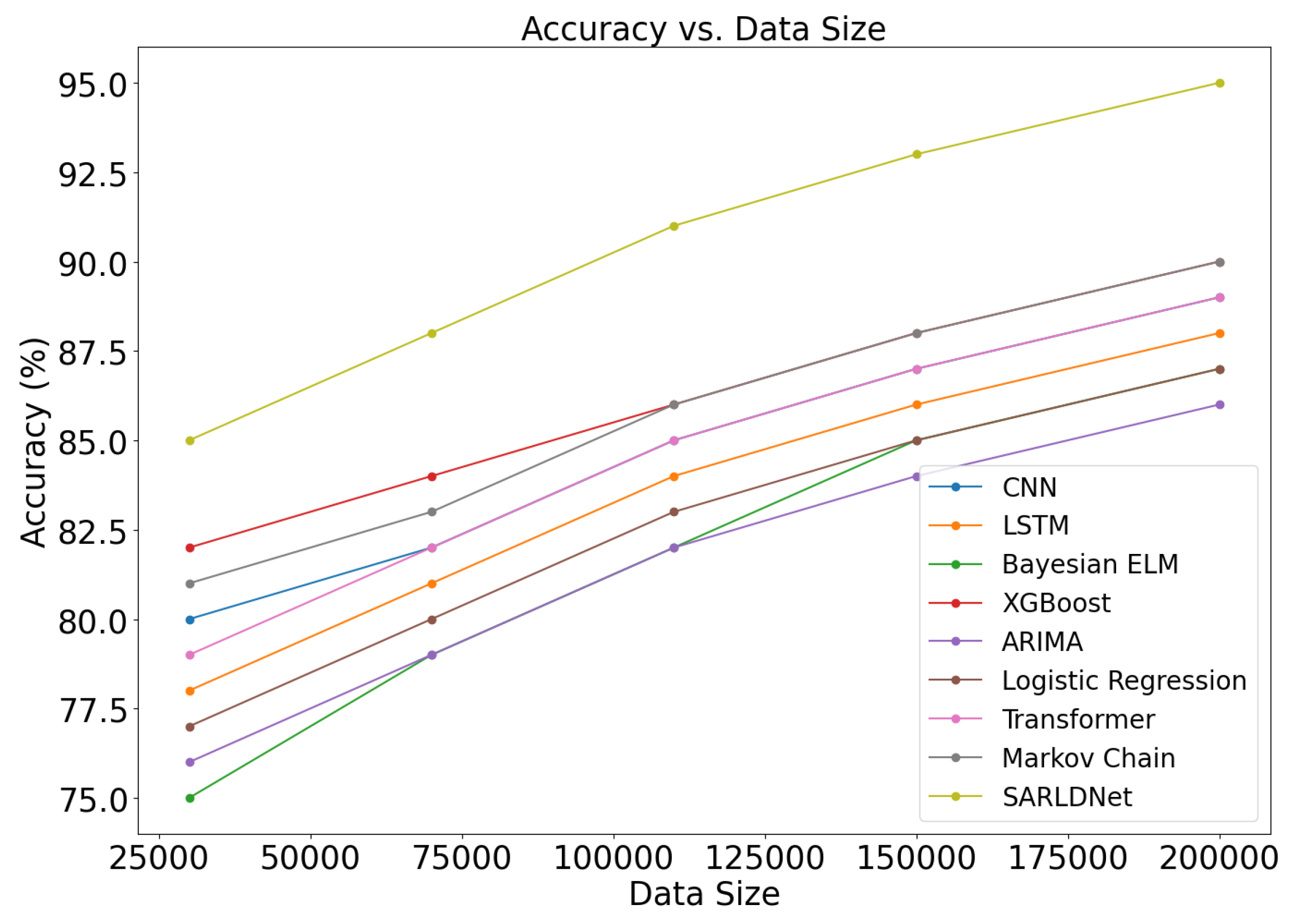

5. Simulation Results

- Optimized architecture: SARLDNet uses a streamlined network architecture that avoids unnecessary computation, which speeds up data processing.

- Parallel processing: the network executes several operations concurrently, which lets it process large volumes of data efficiently and substantially reduces both training and inference time.

- Self-attention: SARLDNet incorporates self-attention, allowing the network to focus on the most relevant parts of the input data, which improves feature extraction and model accuracy.

- Residual learning: residual connections in SARLDNet mitigate the vanishing gradient problem, so deeper layers train effectively. This improves precision because the model can capture more complex patterns in the data.

- Combined improvements: SARLDNet combines efficient data management with strong regularization techniques to improve both execution speed and forecast accuracy.

- Overall, these technical improvements let SARLDNet outperform the baseline methods compared here in both execution time and accuracy on large-scale forecasting tasks.
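The paper does not spell out SARLDNet's attention or residual layers, so the NumPy sketch below shows only the generic mechanisms the bullets name: single-head scaled dot-product self-attention across time steps, wrapped in a residual (identity) connection. The projection matrices, shapes, and function names are illustrative assumptions, not the model's actual configuration.

```python
import numpy as np


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over time steps.

    x: (T, d) sequence of feature vectors; w_q, w_k, w_v: (d, d) projections.
    Returns the attended sequence (T, d) and the (T, T) attention weights.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)  # softmax per row
    return weights @ v, weights


def residual_attention_block(x, w_q, w_k, w_v):
    """y = x + Attention(x): the identity path lets gradients bypass the
    attention sublayer, which eases training of deeper stacks."""
    attended, weights = self_attention(x, w_q, w_k, w_v)
    return x + attended, weights


rng = np.random.default_rng(0)
T, d = 24, 8  # e.g. 24 hourly steps with 8 features (illustrative)
x = rng.normal(size=(T, d))
w_q, w_k, w_v = (rng.normal(scale=d ** -0.5, size=(d, d)) for _ in range(3))
y, attn = residual_attention_block(x, w_q, w_k, w_v)
```

Each row of `attn` is a probability distribution over time steps, so it shows which hours the model attends to when representing a given hour.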

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Alanazi, F. Electric vehicles: Benefits, challenges, and potential solutions for widespread adaptation. Appl. Sci. 2023, 13, 6016.

- Barman, P.; Dutta, L.; Bordoloi, S.; Kalita, A.; Buragohain, P.; Bharali, S.; Azzopardi, B. Renewable energy integration with electric vehicle technology: A review of the existing smart charging approaches. Renew. Sustain. Energy Rev. 2023, 183, 113518.

- Shahzad, K.; Cheema, I.I. Low-carbon technologies in automotive industry and decarbonizing transport. J. Power Sources 2024, 591, 233888.

- Shetty, D.K.; Shetty, S.; Raj Rodrigues, L.; Naik, N.; Maddodi, C.B.; Malarout, N.; Sooriyaperakasam, N. Barriers to widespread adoption of plug-in electric vehicles in emerging Asian markets: An analysis of consumer behavioral attitudes and perceptions. Cogent Eng. 2020, 7, 1796198.

- Alkawsi, G.; Baashar, Y.; Abbas, U.D.; Alkahtani, A.A.; Tiong, S.K. Review of renewable energy-based charging infrastructure for electric vehicles. Appl. Sci. 2021, 11, 3847.

- Boström, T.; Babar, B.; Hansen, J.B.; Good, C. The pure PV-EV energy system—A conceptual study of a nationwide energy system based solely on photovoltaics and electric vehicles. Smart Energy 2021, 1, 100001.

- Aldossary, M. Optimizing Task Offloading for Collaborative Unmanned Aerial Vehicles (UAVs) in Fog-Cloud Computing Environments. IEEE Access 2024, 12, 74698–74710.

- Yap, K.Y.; Chin, H.H.; Klemeš, J.J. Solar Energy-Powered Battery Electric Vehicle charging stations: Current development and future prospect review. Renew. Sustain. Energy Rev. 2022, 169, 112862.

- Di Castelnuovo, M.; Biancardi, A. The future of energy infrastructure: Challenges and opportunities arising from the R-evolution of the energy sector. Disrupt. Infrastruct. Sect. Challenges Oppor. Dev. Investors Asset Manag. 2020, 5–52.

- Srinivasan, S.; Kumarasamy, S.; Andreadakis, Z.E.; Lind, P.G. Artificial intelligence and mathematical models of power grids driven by renewable energy sources: A survey. Energies 2023, 16, 5383.

- Sperling, J.; Henao, A. Electrification of High-Mileage Mobility Services in Cities and at Airports. Intell. Effic. Transp. Syst. 2020, 65.

- Wang, L.; Qin, Z.; Slangen, T.; Bauer, P.; Van Wijk, T. Grid impact of electric vehicle fast charging stations: Trends, standards, issues and mitigation measures-an overview. IEEE Open J. Power Electron. 2021, 2, 56–74.

- Ren, F.; Wei, Z.; Zhai, X. A review on the integration and optimization of distributed energy systems. Renew. Sustain. Energy Rev. 2022, 162, 112440.

- Metais, M.O.; Jouini, O.; Perez, Y.; Berrada, J.; Suomalainen, E. Too much or not enough? Planning electric vehicle charging infrastructure: A review of modeling options. Renew. Sustain. Energy Rev. 2022, 153, 111719.

- Li, J.; Herdem, M.S.; Nathwani, J.; Wen, J.Z. Methods and applications for Artificial Intelligence, Big Data, Internet of Things, and Blockchain in smart energy management. Energy AI 2023, 11, 100208.

- Mahdavian, A.; Shojaei, A.; Mccormick, S.; Papandreou, T.; Eluru, N.; Oloufa, A.A. Drivers and barriers to implementation of connected, automated, shared, and electric vehicles: An agenda for future research. IEEE Access 2021, 9, 22195–22213.

- Selvaraj, V.; Vairavasundaram, I. A Bayesian optimized machine learning approach for accurate state of charge estimation of lithium ion batteries used for electric vehicle application. J. Energy Storage 2024, 86, 111321.

- Ullah, I.; Liu, K.; Yamamoto, T.; Al Mamlook, R.E.; Jamal, A. A comparative performance of machine learning algorithm to predict electric vehicles energy consumption: A path towards sustainability. Energy Environ. 2022, 33, 1583–1612.

- Lee, Z.J.; Lee, G.; Lee, T.; Jin, C.; Lee, R.; Low, Z.; Low, S.H. Adaptive charging networks: A framework for smart electric vehicle charging. IEEE Trans. Smart Grid 2021, 12, 4339–4350.

- Dong, P.; Zhao, J.; Liu, X.; Wu, J.; Xu, X.; Liu, Y.; Guo, W. Practical application of energy management strategy for hybrid electric vehicles based on intelligent and connected technologies: Development stages, challenges, and future trends. Renew. Sustain. Energy Rev. 2022, 170, 112947.

- Venkitaraman, A.K.; Kosuru, V.S.R. Hybrid deep learning mechanism for charging control and management of Electric Vehicles. Eur. J. Electr. Eng. Comput. Sci. 2023, 7, 38–46.

- Shahriar, S.; Al-Ali, A.R.; Osman, A.H.; Dhou, S.; Nijim, M. Prediction of EV charging behavior using machine learning. IEEE Access 2021, 9, 111576–111586.

- Koohfar, S.; Woldemariam, W.; Kumar, A. Prediction of electric vehicles charging demand: A transformer-based deep learning approach. Sustainability 2023, 15, 2105.

- Manoharan, A.; Begam, K.M.; Aparow, V.R.; Sooriamoorthy, D. Artificial Neural Networks, Gradient Boosting and Support Vector Machines for electric vehicle battery state estimation: A review. J. Energy Storage 2022, 55, 105384.

- Zhang, T.; Huang, Y.; Liao, H.; Liang, Y. A hybrid electric vehicle load classification and forecasting approach based on GBDT algorithm and temporal convolutional network. Appl. Energy 2023, 351, 121768.

- Lan, T.; Jermsittiparsert, K.; Alrashood, S.T.; Rezaei, M.; Al-Ghussain, L.; Mohamed, M.A. An advanced machine learning based energy management of renewable microgrids considering hybrid electric vehicles’ charging demand. Energies 2021, 14, 569.

- Puška, A.; Božanić, D.; Mastilo, Z.; Pamučar, D.; Karić, E. Green procurement and electric vehicles: Machine learning approach to predict buyer satisfaction. Sustainability 2023, 15, 2954.

- Hardy, J.; Eggert, A.; Olstad, D.; Hohmann, M. Insights into the impact of battery electric vehicle charging on local grid stability: A multi-agent based simulation study. Energy Policy 2023, 173, 113269.

- Tan, X.; Tan, Y.; Guo, L.; Wei, Y. Grid impacts of integrating distributed energy resources and electric vehicle charging infrastructures: A review of challenges and recent developments. Renew. Sustain. Energy Rev. 2022, 158, 112093.

- Serpi, A.; Poggiani, D.; Bottino, L.; Grazia, L.; Piana, A. Smart Grid Architectures for EV Charging Infrastructures: Current Status, Challenges, and Future Trends. Energies 2021, 14, 6582.

- Salkuti, S.R. Advanced technologies for energy storage and electric vehicles. Energies 2023, 16, 2312.

- Ankar, S.J.; Pinkymol, K.P. Optimal Sizing and Energy Management of Electric Vehicle Hybrid Energy Storage Systems With Multi-Objective Optimization Criterion. IEEE Trans. Veh. Technol. 2024.

- Benhammou, A.; Tedjini, H.; Hartani, M.A.; Ghoniem, R.M.; Alahmer, A. Accurate and efficient energy management system of fuel cell/battery/supercapacitor/AC and DC generators hybrid electric vehicles. Sustainability 2023, 15, 10102.

- Hafeez, A.; Alammari, R.; Iqbal, A. Utilization of EV charging station in demand side management using deep learning method. IEEE Access 2023, 11, 8747–8760.

- Yin, W.; Ji, J.; Wen, T.; Zhang, C. Study on orderly charging strategy of EV with load forecasting. Energy 2023, 278, 127818.

- Deng, S.; Wang, J.; Tao, L.; Zhang, S.; Sun, H. EV charging load forecasting model mining algorithm based on hybrid intelligence. Comput. Electr. Eng. 2023, 112, 109010.

- Sasidharan, M.P.; Kinattingal, S.; Simon, S.P. Comparative analysis of deep learning models for electric vehicle charging load forecasting. J. Inst. Eng. (India) Ser. B 2023, 104, 105–113.

- Haghani, M.; Sprei, F.; Kazemzadeh, K.; Shahhoseini, Z.; Aghaei, J. Trends in electric vehicles research. Transp. Res. Part D Transp. Environ. 2023, 123, 103881.

- Khajeh, H.; Laaksonen, H.; Simões, M.G. A fuzzy logic control of a smart home with energy storage providing active and reactive power flexibility services. Electr. Power Syst. Res. 2023, 216, 109067.

- Okafor, C.E.; Folly, K.A. Provision of Additional Inertia Support for a Power System Network Using Battery Energy Storage System (BESS). IEEE Access 2023, 11, 74936–74952.

- Millo, F.; Rolando, L.; Tresca, L.; Pulvirenti, L. Development of a neural network-based energy management system for a plug-in hybrid electric vehicle. Transp. Eng. 2023, 11, 100156.

- Mohammad, F.; Kang, D.K.; Ahmed, M.A.; Kim, Y.C. Energy demand load forecasting for electric vehicle charging stations network based on convlstm and biconvlstm architectures. IEEE Access 2023, 11, 67350–67369.

- Zaboli, A.; Tuyet-Doan, V.N.; Kim, Y.H.; Hong, J.; Su, W. An lstm-sae-based behind-the-meter load forecasting method. IEEE Access 2023, 11, 49378–49392.

- Swan, B. EV Charging Station Data-California Region [Data set]. Kaggle 2024.

- Tatachar, A.V. Comparative assessment of regression models based on model evaluation metrics. Int. J. Innov. Technol. Explor. Eng. 2021, 8, 853–860.

| Ref. | Method Used | Problem Identified | Limitations |

|---|---|---|---|

| [17] | Bayesian extreme learning machine | Uncertainty in EV charging consumption | Limited to SOC prediction, may not consider all factors |

| [18] | Machine learning algorithms | Estimation of energy consumption in EVs | Dependency on quality and quantity of training data |

| [19] | Algorithm utilizing cell phone data | Predicting energy consumption at EV charging | Reliance on availability and reliability of cell phone data |

| [20] | Strategy for multi-mode toggle logic control | Enhancing energy forecasting and fuel economy | May not adapt well to dynamic driving conditions |

| [21] | Machine learning for capacity prediction | Planning and optimizing charging station infrastructure | Dependency on accurate data for capacity prediction |

| [22] | Statistical features extraction and supervised learning | Supervised learning for energy consumption prediction | Reliance on availability of comprehensive EV data |

| [23] | Transfer learning algorithms | Transfer learning for prediction model construction | Dependency on availability of sufficient transfer data |

| [24] | Integration of prediction models with energy management strategies | Integration of short-term predictions into energy management | Complexity in integrating prediction models with management systems |

| [25] | Decision tree algorithm with parallel gradient boosting | Energy demand and schedulable capacity forecasting | Complexity in implementing and training boosting algorithms |

| [26] | Advanced machine learning techniques | Using cutting-edge machine learning techniques, new methods | Potential overfitting and complexity in model interpretation |

| [27] | Combination of attributes for prediction | Long-term forecasting approach for energy consumption | Dependency on accurate historical data for forecasting |

| [28] | Extreme learning machine algorithm | Optimal energy management using extreme learning machine | Sensitivity to input parameters and training data quality |

| [29] | Fuzzy logic algorithms | Energy storage system (ESS) fuzzy logic algorithm | Complexity in tuning fuzzy controller parameters |

| [30] | Analytical model for V2G parking lot | Power capacity of the V2G parking lot computed | Assumption-based model, may not accurately reflect real-world conditions |

| [31] | Filtering techniques using ESS for power compensation | Attenuation of power fluctuations using ESS | Design limitations of filtering techniques |

| [32] | Ramp-rate technique for power smoothing | Limiting power rate changes for smoothing | Challenge in selecting appropriate ramp-rate parameters |

| [33] | Use of fuzzy logic for designation of reference powers | Installation of BESS’s relevant authorities | Complexity in fuzzy controller tuning and system integration |

| [34] | Development of learning action automaton | Implementation and validation of learning action automaton | Complexity in system validation and real-world implementation |

| [35] | Utilization of R-R filters for fluctuation attenuation | Fluctuation attenuation using ESS and R-R filters | Design limitations and challenges in selecting filter parameters |

| [36] | Adjustment of ramp-rate in real time for power stabilization | Real-time adjustment of ramp-rate for power stabilization | Complexity in real-time control and coordination |

| [37] | Fuzzy logic algorithm for designation of reference powers | Designation of reference powers for a BESS | Complexity in fuzzy controller tuning and system integration |

| [38] | High-frequency attenuation filters with fuzzy controller | Power calculation using BESS and high-frequency attenuation filters | Complexity in fuzzy controller tuning and system integration |

| [39] | Probabilistic prediction models for energy consumption prediction | Comparison of probabilistic and deterministic prediction models | Complexity in model training and interpretation |

| [40] | Usage of historical charging data for EV session prediction | Prediction of EV session duration and energy consumption | Dependency on historical data quality and availability |

| [41] | Long short-term memory and ARIMA models for charging loads | Forecasting EV charging station usage using time series data | Complexity in model selection and parameter tuning |

| [42] | BP neural network for driving condition prediction | Driving conditions prediction model for parallel hybrid electric vehicles | Dependency on accurate driving condition data and model tuning |

| [43] | A data-driven methodology for predicting the energy consumption of large-scale charging | Prediction of large-scale charging energy demands | Complexity in model training and scalability |

| Ours | Temporal Contextual Imputation (TCI), Integration of Renewable Energy and Weather Data, Enhanced EMD Signal Decomposition, Feature Engineering, SARLDNet Architecture Development, and Execution Efficiency. | Addressed limitations with innovative methods for handling missing data, integrating renewable energy data, enhancing signal decomposition, advanced feature engineering, novel deep learning architecture, and efficient execution, improving accuracy and scalability in EV charging station load forecasting. | Overcame traditional challenges with comprehensive methods integrating novel data handling, improving accuracy and scalability. |

| Feature Type | Feature Name | Description |

|---|---|---|

| Original | Date | The specific date of the recorded data. |

| Original | Time | The hour of the day the data was recorded. |

| Original | EV Charging Demand (kW) | The amount of electricity (in kilowatts) demanded by electric vehicles for charging each hour. |

| Original | Solar Energy Production (kW) | The amount of electricity (in kilowatts) produced from solar energy sources during each hour. |

| Original | Wind Energy Production (kW) | The amount of electricity (in kilowatts) produced from wind energy sources during each hour. |

| Original | Electricity Price ($/kWh) | The price of electricity per kilowatt-hour. |

| Original | Grid Availability | Indicates whether the grid was available (“Available”) or not (“Unavailable”) during each hour. |

| Original | Weather Conditions | Describes the weather during each hour, with values such as “Clear”, “Cloudy”, “Rainy”, etc. |

| Original | Battery Storage (kWh) | The amount of electricity stored in batteries during each hour. |

| Original | Charging Station Capacity (kW) | The maximum capacity of the charging stations in kilowatts. |

| Original | EV Charging Efficiency (%) | The efficiency of the EV charging process, expressed as a percentage. |

| Original | Number of EVs Charging | The number of electric vehicles charging each hour. |

| Original | Peak Demand (kW) | The peak electricity demand during each hour. |

| Original | Renewable Energy Usage (%) | The percentage of energy used from renewable sources. |

| Original | Grid Stability Index | An index of grid stability, with higher values indicating greater stability. |

| Original | Carbon Emissions (kgCO2/kWh) | The amount of carbon emissions produced per kilowatt-hour of electricity. |

| Original | Power Outages (hours) | The duration of power outages during each hour. |

| Original | Energy Savings ($) | The amount of money saved through energy efficiencies during each hour. |

| Derived | Total REn Production (kW) | The sum of solar and wind energy production. |

| Derived | Effective Charging Capacity (kW) | The product of charging station capacity and EV charging efficiency. |

| Derived | Adjusted Charging consumption (kW) | The EV charging demand adjusted by renewable energy usage. |

| Derived | Net Energy Cost ($) | The product of EV charging demand and electricity price. |

| Derived | Carbon Footprint Reduction (kgCO2) | The reduction in carbon emissions due to renewable energy usage. |

| Derived | Renewable Energy Efficiency | The efficiency of utilizing renewable energy for charging electric vehicles. |
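Three of the derived features have explicit definitions in the table above and can be computed row by row, as in the Python sketch below. The dictionary keys reuse the table's column names, but the row layout is an assumption, as are dividing the efficiency percentage by 100 to obtain kW and treating an hourly kW demand as the kWh consumed in that hour for the cost calculation. The remaining derived features are not given explicit formulas, so they are left out.

```python
def derived_features(row):
    """Compute the three explicitly defined derived features for one hourly record.

    `row` maps the dataset's column names to numbers (assumed layout).
    """
    solar = row["Solar Energy Production (kW)"]
    wind = row["Wind Energy Production (kW)"]
    capacity = row["Charging Station Capacity (kW)"]
    efficiency_pct = row["EV Charging Efficiency (%)"]
    demand = row["EV Charging Demand (kW)"]
    price = row["Electricity Price ($/kWh)"]
    return {
        "Total REn Production (kW)": solar + wind,
        # Efficiency is stored as a percentage; scale to a fraction (assumption).
        "Effective Charging Capacity (kW)": capacity * efficiency_pct / 100,
        # Hourly records: demand in kW over one hour taken as kWh (assumption).
        "Net Energy Cost ($)": demand * price,
    }


record = {
    "Solar Energy Production (kW)": 12.5,
    "Wind Energy Production (kW)": 7.5,
    "Charging Station Capacity (kW)": 50.0,
    "EV Charging Efficiency (%)": 90.0,
    "EV Charging Demand (kW)": 30.0,
    "Electricity Price ($/kWh)": 0.2,
}
print(derived_features(record))
```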

| Ref. | Method/Model | No. of Layers | Model Complexity | Filters | Large-Scale Data Handling | Regularization | Scalability | Temporal Dependency Capture | Time Complexity |

|---|---|---|---|---|---|---|---|---|---|

| [17] | Bayesian Extreme Learning Machine | 1 | × | × | ✓ | × | × | × | ✓ |

| [21] | EfficientNet | Multiple | ✓ | × | ✓ | × | ✓ | × | ✓ |

| [22] | XgBoost | Multiple | ✓ | × | ✓ | × | ✓ | × | ✓ |

| [23] | LSTM | Multiple | ✓ | × | ✓ | × | ✓ | ✓ | ✓ |

| [25] | Parallel Gradient Boosting Decision Tree | Multiple | ✓ | × | ✓ | × | ✓ | × | × |

| [27] | Markov Chain | Multiple | ✓ | × | ✓ | × | ✓ | × | ✓ |

| [31] | ESS for Power Compensation | Multiple | ✓ | × | ✓ | × | × | × | ✓ |

| [34] | CNN | Multiple | ✓ | × | × | × | × | × | ✓ |

| [36] | Real-Time Ramp-Rate Adjustment | Multiple | ✓ | × | ✓ | × | ✓ | × | ✓ |

| [39] | LSTM | Multiple | ✓ | × | ✓ | × | ✓ | ✓ | ✓ |

| [40] | GoogleNet | Multiple | ✓ | × | ✓ | × | ✓ | × | ✓ |

| Ours | SARLDNet | Multiple | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

| Parameter | Value |

|---|---|

| Learning Rate | 0.001 |

| Architecture | Deep neural network |

| Validation Split | 0.2 |

| Hidden Layer Units | 128, 64, 32 |

| Data Preprocessing | Standardization, EMD signal decomposition |

| Activation Function | ReLU |

| Batch Size | 64 |

| Input Features | Solar energy, wind data, historical charging station data |

| Stem Layer | 1 layer, 64 units, ReLU activation |

| Epochs | 100 |

| Loss Function | Mean Squared Error (MSE) |

| Optimizer | Adam |

| Callbacks | Early stopping, Model checkpointing |

| GPU Acceleration | CUDA |

| Hidden Layers | 3 layers |

| Model | Station | MAPE (%) | RMSE (kW) | MAE (kW) | MSE (kW²) | R² Score | EVS | MDAE (kW) | MAbPR (%) | Acc (%) |

|---|---|---|---|---|---|---|---|---|---|---|

| LSTM | HAMI #1 | 9.5 | 4.0 | 3.2 | 16.0 | 0.78 | 0.77 | 2.6 | 9.1 | 87.5 |

| | HAMI #2 | 9.3 | 3.9 | 3.1 | 15.5 | 0.79 | 0.78 | 2.5 | 8.9 | 87.7 |

| | MPL #6 | 9.4 | 4.1 | 3.3 | 16.6 | 0.77 | 0.76 | 2.7 | 9.2 | 87.4 |

| | WEBS #1 | 9.6 | 4.2 | 3.4 | 17.2 | 0.76 | 0.75 | 2.8 | 9.3 | 87.3 |

| XGBoost | HAMI #1 | 16.2 | 5.2 | 4.1 | 27.0 | 0.60 | 0.59 | 3.5 | 15.8 | 81.0 |

| | HAMI #2 | 16.0 | 5.1 | 4.0 | 26.2 | 0.61 | 0.60 | 3.4 | 15.6 | 81.2 |

| | MPL #6 | 16.1 | 5.3 | 4.2 | 27.5 | 0.59 | 0.58 | 3.6 | 15.9 | 80.9 |

| | WEBS #1 | 16.3 | 5.4 | 4.3 | 28.1 | 0.58 | 0.57 | 3.7 | 16.0 | 80.8 |

| LR | HAMI #1 | 17.0 | 5.5 | 4.4 | 30.2 | 0.55 | 0.54 | 3.7 | 16.8 | 80.0 |

| | HAMI #2 | 16.8 | 5.4 | 4.3 | 29.5 | 0.56 | 0.55 | 3.6 | 16.6 | 80.2 |

| | MPL #6 | 16.9 | 5.6 | 4.5 | 30.8 | 0.54 | 0.53 | 3.8 | 16.9 | 79.9 |

| | WEBS #1 | 17.1 | 5.7 | 4.6 | 31.4 | 0.53 | 0.52 | 3.9 | 17.0 | 79.8 |

| Transformer | HAMI #1 | 9.0 | 3.8 | 3.0 | 14.4 | 0.81 | 0.80 | 2.5 | 8.6 | 88.0 |

| | HAMI #2 | 8.8 | 3.7 | 2.9 | 13.8 | 0.82 | 0.81 | 2.4 | 8.4 | 88.2 |

| | MPL #6 | 8.9 | 3.9 | 3.1 | 14.7 | 0.80 | 0.79 | 2.6 | 8.7 | 87.9 |

| | WEBS #1 | 9.1 | 4.0 | 3.2 | 15.3 | 0.79 | 0.78 | 2.7 | 8.8 | 87.8 |

| Bay. ELM | HAMI #1 | 10.5 | 4.2 | 3.5 | 17.8 | 0.75 | 0.74 | 3.0 | 11.9 | 86.5 |

| | HAMI #2 | 10.3 | 4.1 | 3.4 | 17.0 | 0.76 | 0.75 | 2.9 | 11.7 | 86.7 |

| | MPL #6 | 10.4 | 4.3 | 3.6 | 18.4 | 0.74 | 0.73 | 3.1 | 12.0 | 86.4 |

| | WEBS #1 | 10.6 | 4.4 | 3.7 | 19.0 | 0.73 | 0.72 | 3.2 | 12.1 | 86.3 |

| ARIMA | HAMI #1 | 11.0 | 4.5 | 3.8 | 20.0 | 0.70 | 0.69 | 3.3 | 13.0 | 85.0 |

| | HAMI #2 | 10.8 | 4.4 | 3.7 | 19.2 | 0.71 | 0.70 | 3.2 | 12.8 | 85.2 |

| | MPL #6 | 10.9 | 4.6 | 3.9 | 20.5 | 0.69 | 0.68 | 3.4 | 13.1 | 84.9 |

| | WEBS #1 | 11.1 | 4.7 | 4.0 | 21.1 | 0.68 | 0.67 | 3.5 | 13.2 | 84.8 |

| Mchain | HAMI #1 | 11.0 | 4.5 | 3.8 | 20.0 | 0.70 | 0.69 | 3.3 | 13.0 | 85.0 |

| | HAMI #2 | 10.8 | 4.4 | 3.7 | 19.2 | 0.71 | 0.70 | 3.2 | 12.8 | 85.2 |

| | MPL #6 | 10.9 | 4.6 | 3.9 | 20.5 | 0.69 | 0.68 | 3.4 | 13.1 | 84.9 |

| | WEBS #1 | 11.1 | 4.7 | 4.0 | 21.1 | 0.68 | 0.67 | 3.5 | 13.2 | 84.8 |

| SARLDNet | HAMI #1 | 2.9 | 2.7 | 2.1 | 3.5 | 0.90 | 0.89 | 0.7 | 2.9 | 98.9 |

| | HAMI #2 | 2.7 | 2.6 | 2.0 | 3.9 | 0.91 | 0.90 | 0.6 | 2.8 | 98.9 |

| | MPL #6 | 2.8 | 2.8 | 2.2 | 3.8 | 0.89 | 0.88 | 0.8 | 2.0 | 98.9 |

| | WEBS #1 | 2.0 | 2.9 | 2.3 | 3.4 | 0.88 | 0.87 | 0.9 | 2.1 | 98.9 |

| Method | Wilcoxon p-Value | Student’s t-Test p-Value | ANOVA F-Value | Chi-Squared p-Value | Mann–Whitney U p-Value |

|---|---|---|---|---|---|

| CNN | 0.052 | 0.040 | 5.15 | 0.064 | 0.046 |

| LSTM | 0.035 | 0.027 | 6.50 | 0.050 | 0.033 |

| Bayesian ELM | 0.042 | 0.032 | 5.95 | 0.055 | 0.038 |

| XGBoost | 0.023 | 0.019 | 7.80 | 0.038 | 0.023 |

| ARIMA | 0.057 | 0.046 | 5.00 | 0.070 | 0.049 |

| Logistic Regression | 0.065 | 0.052 | 4.55 | 0.080 | 0.056 |

| Transformer | 0.018 | 0.015 | 8.40 | 0.030 | 0.019 |

| Markov Chain | 0.017 | 0.014 | 8.55 | 0.028 | 0.018 |

| SARLDNet | 0.012 | 0.009 | 9.40 | 0.013 | 0.007 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Aldossary, M.; Alharbi, H.A.; Ayub, N. Optimizing Electric Vehicle (EV) Charging with Integrated Renewable Energy Sources: A Cloud-Based Forecasting Approach for Eco-Sustainability. Mathematics 2024, 12, 2627. https://doi.org/10.3390/math12172627