Reference Architecture for the Integration of Prescriptive Analytics Use Cases in Smart Factories †

Abstract

1. Introduction

- We perform a literature review on reference architectures and their suitability for integrating prescriptive analytics in smart factories. We further show how prescriptive analytics reference architectures differ from platforms and frameworks;

- We propose a framework for prescriptive analytics and validate it on a smart factory use case. The framework is derived from human decision making combined with prescriptive components;

- Further, we propose a reference architecture for integrating multiple prescriptive analytics use cases in smart factories. The use cases are linked via an orchestration layer.

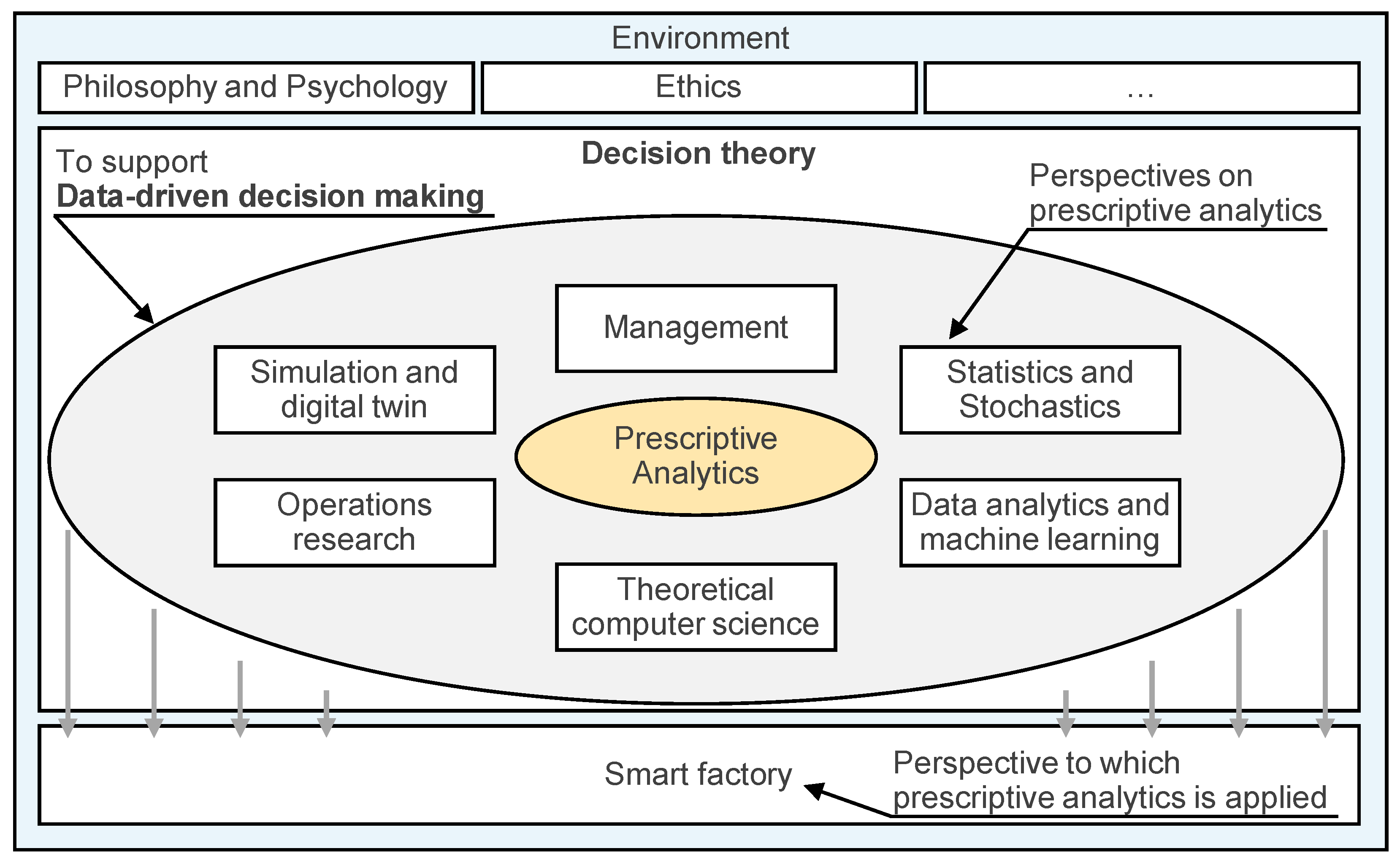

2. Relevant Concepts and Definitions

“A decision is an act in which one of several possible alternative courses of action is selected in order to achieve a certain goal” [15] (p. 162).
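Read formally, this definition can be condensed into a single selection rule. The notation below is our own illustration and is not taken from [15]. It assumes a finite set $A$ of at least two alternative courses of action, a goal expressed as an objective function $U$ that scores each alternative, and a rational decision maker who selects an alternative that best serves the goal:

```latex
a^{*} \in \arg\max_{a \in A} U(a), \qquad A = \{a_1, \dots, a_n\}, \quad n \ge 2
```

The set-membership notation ($\in$ rather than $=$) allows for ties, in which case any of the equally good alternatives satisfies the definition.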

- Perspective 1: Decision Theory

- Perspective 2: Data Analytics and ML

3. State of the Art

3.1. Prescriptive Analytics Use Cases

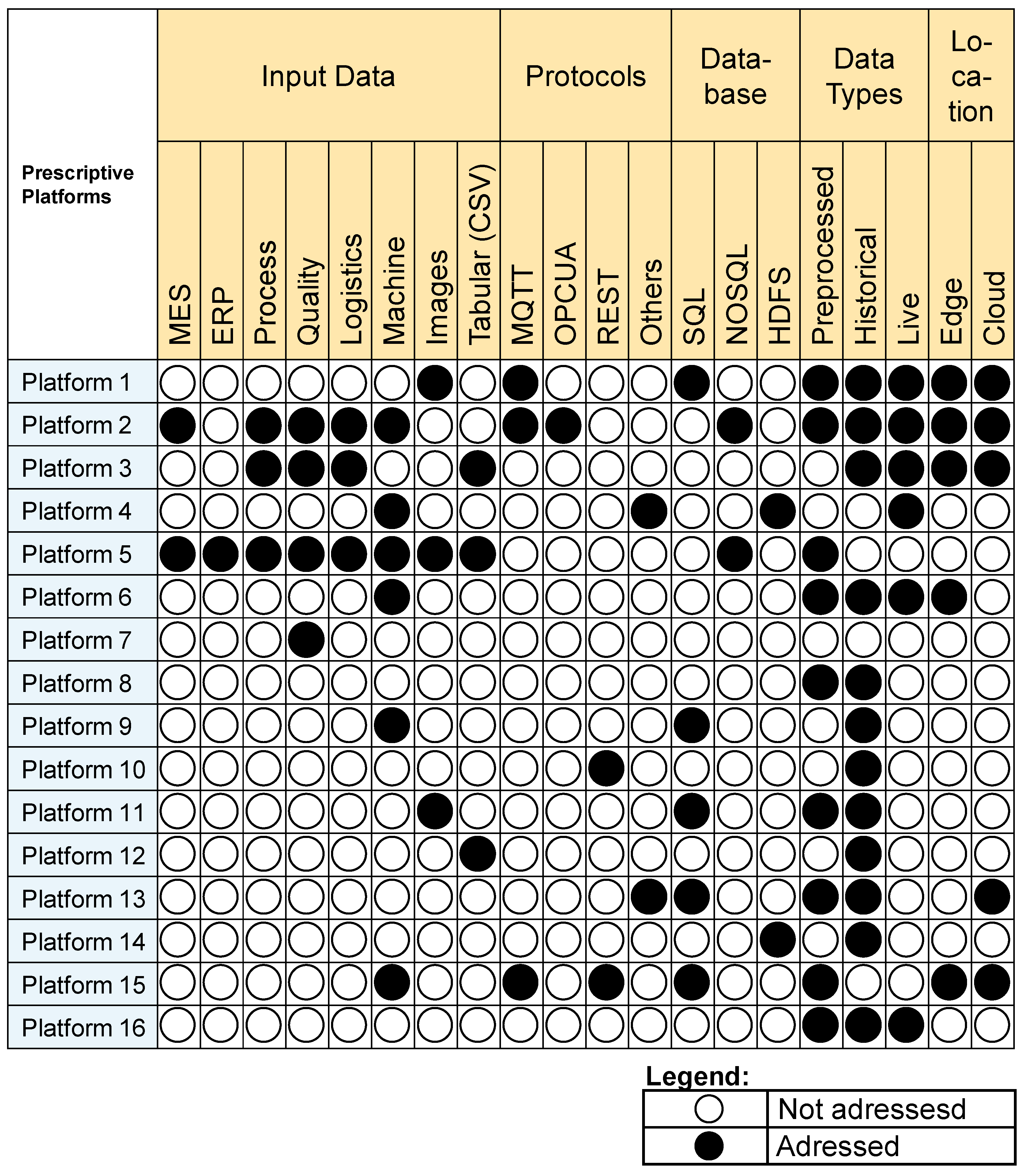

3.2. Prescriptive Analytics Platforms

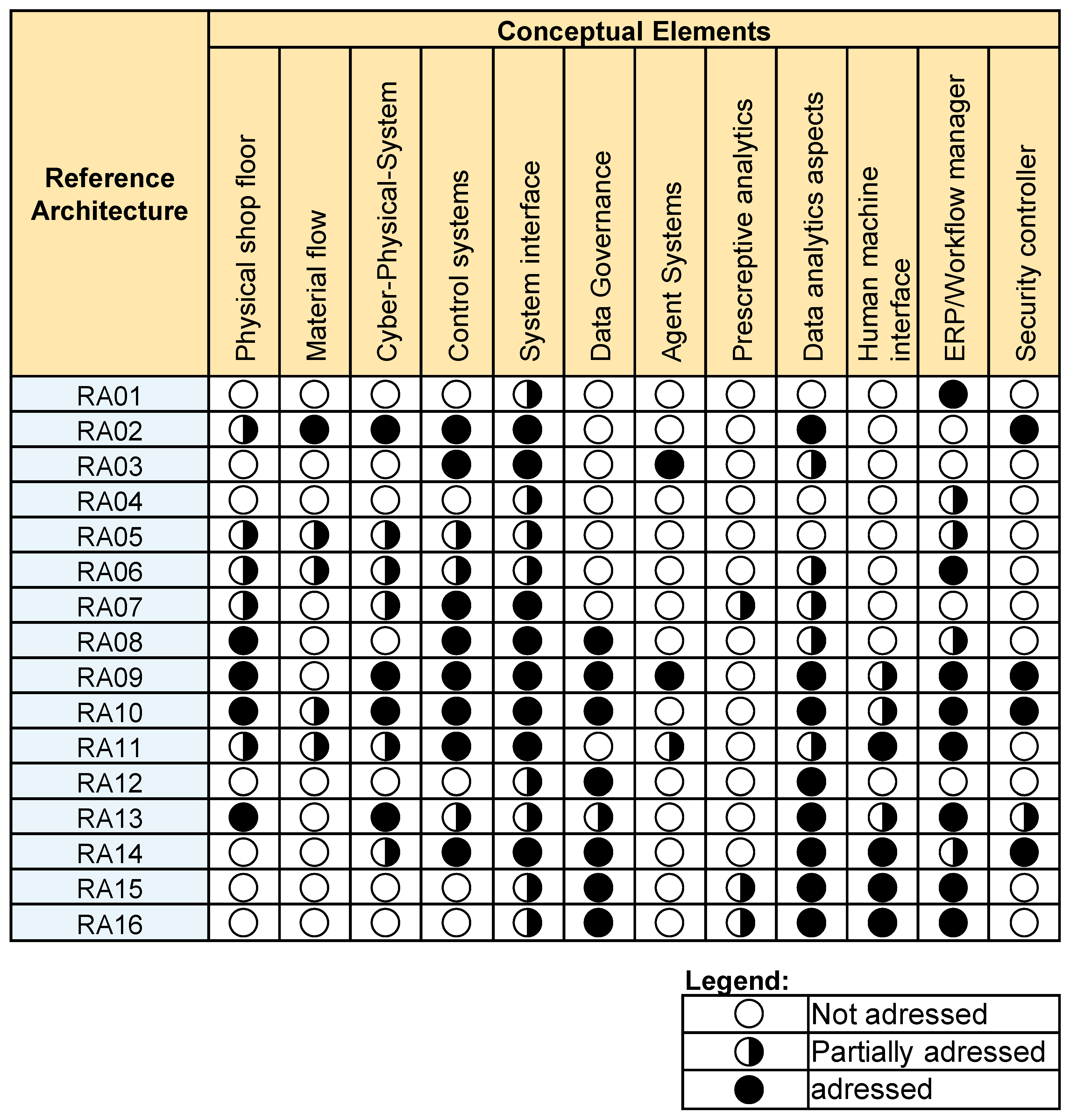

3.3. Smart Factory Reference Architectures

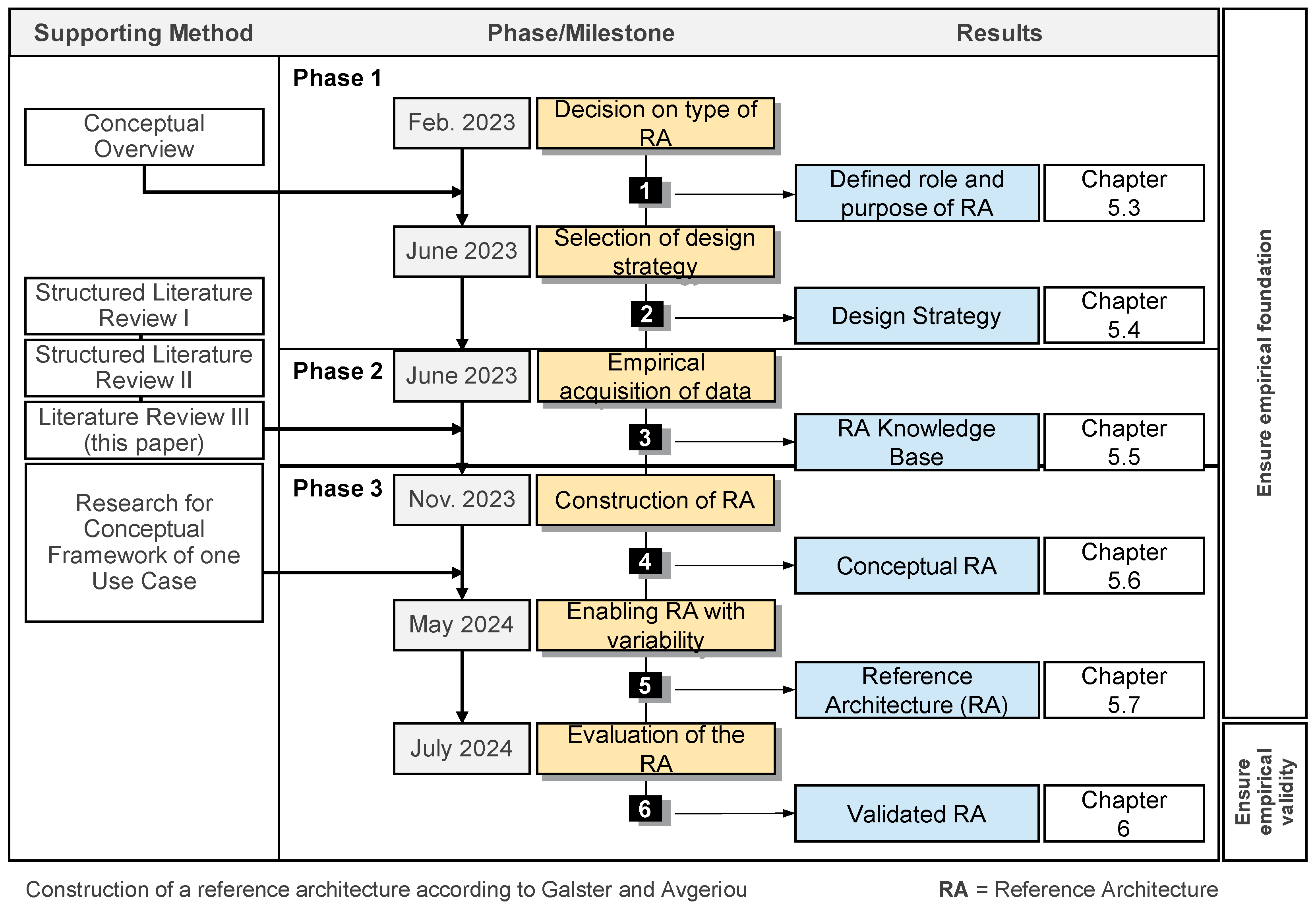

4. Research Methodology

- Structured literature research with a focus on prescriptive analytics use cases in smart factories [28];

- Structured literature research with a focus on prescriptive analytics platforms (broader scope and specific for manufacturing) [11];

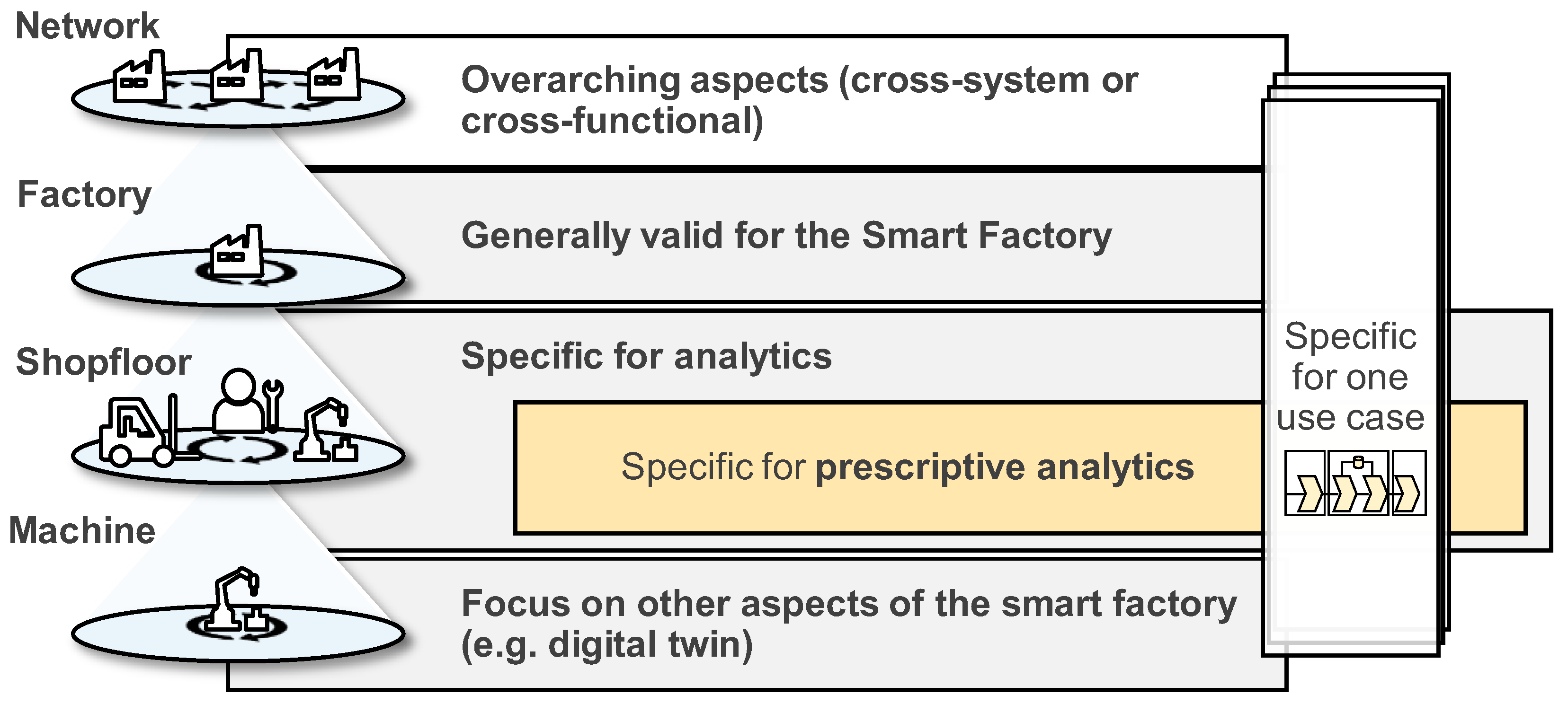

- Rigorous literature research on existing reference architectures (for results, see Section 3.3). For this search, we focused on reference architectures covering the overall scope of the smart factory, with a narrower focus on intersections with “industrial data science”, “industrial analytics” and “prescriptive analytics”. Sources using the terms “industry 4.0” or “industrie 4.0”, “smart manufacturing”, “manufacturing analytics” or “big data analytics for manufacturing” were included in our search.
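The search terms listed above can be combined into a Boolean query. The following Python sketch is illustrative only: the article names the terms but not the exact query string, the databases searched or how the narrower focus was applied. Treating the focus terms as a mandatory AND group and adding the phrases "reference architecture" and "smart factory" to the query are our assumptions.

```python
# Illustrative sketch: the term lists are taken from the text, while the
# query structure (AND between groups, OR within a group) is an assumption.

# Phrases describing the overall scope (smart factory and its synonyms)
SCOPE_TERMS = [
    "smart factory",
    "industry 4.0",
    "industrie 4.0",
    "smart manufacturing",
    "manufacturing analytics",
    "big data analytics for manufacturing",
]

# Phrases describing the narrower focus of the search
FOCUS_TERMS = ["industrial data science", "industrial analytics", "prescriptive analytics"]


def or_group(terms: list[str]) -> str:
    """Quote each phrase, join the phrases with OR and wrap the group in parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(scope: list[str], focus: list[str]) -> str:
    """Require the architecture phrase, at least one scope term and at least one focus term."""
    return " AND ".join(['"reference architecture"', or_group(scope), or_group(focus)])


if __name__ == "__main__":
    print(build_query(SCOPE_TERMS, FOCUS_TERMS))
```

In practice, each database would require the groups to be wrapped in its own field syntax; the grouping logic stays the same.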

5. Reference Architecture for Prescriptive Analytics in Smart Factories

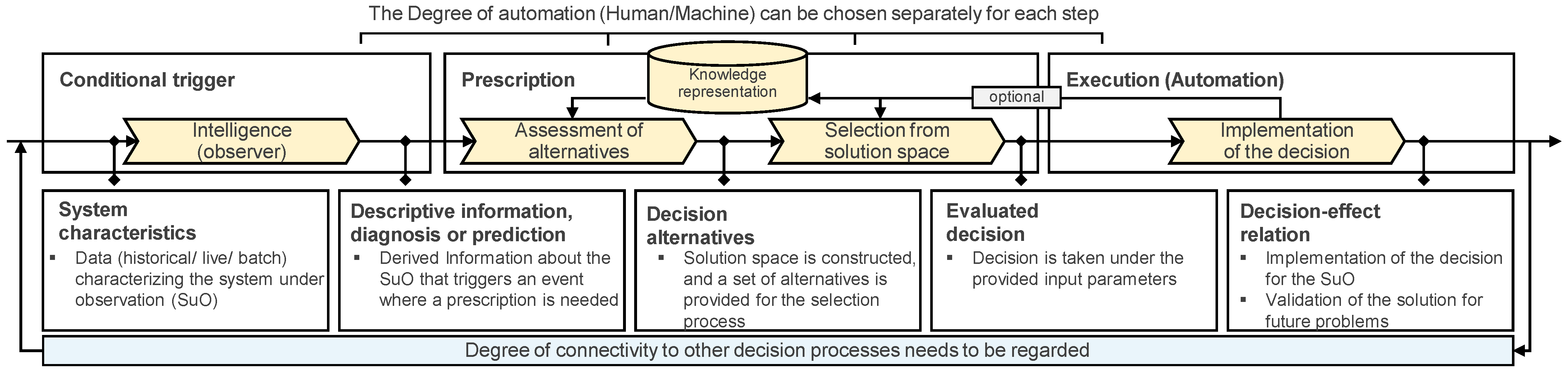

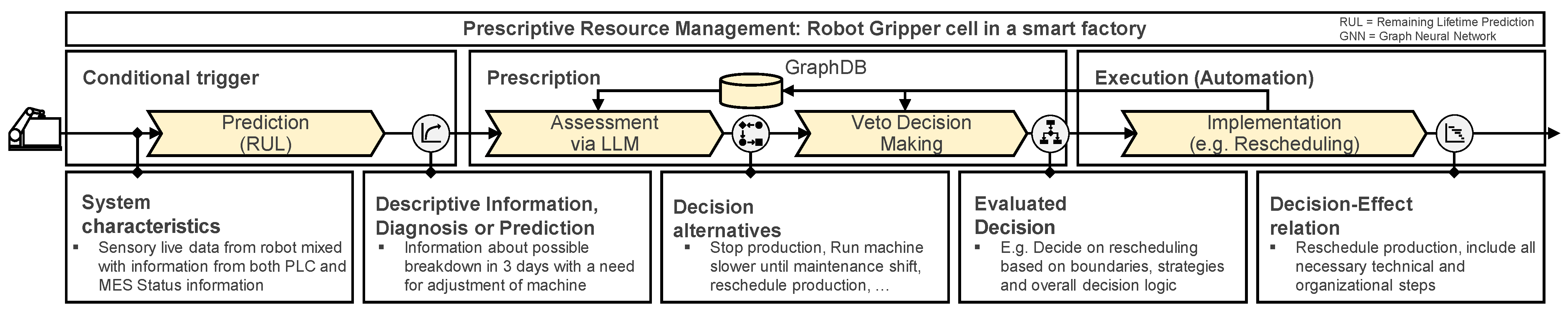

5.1. Framework for One Use Case

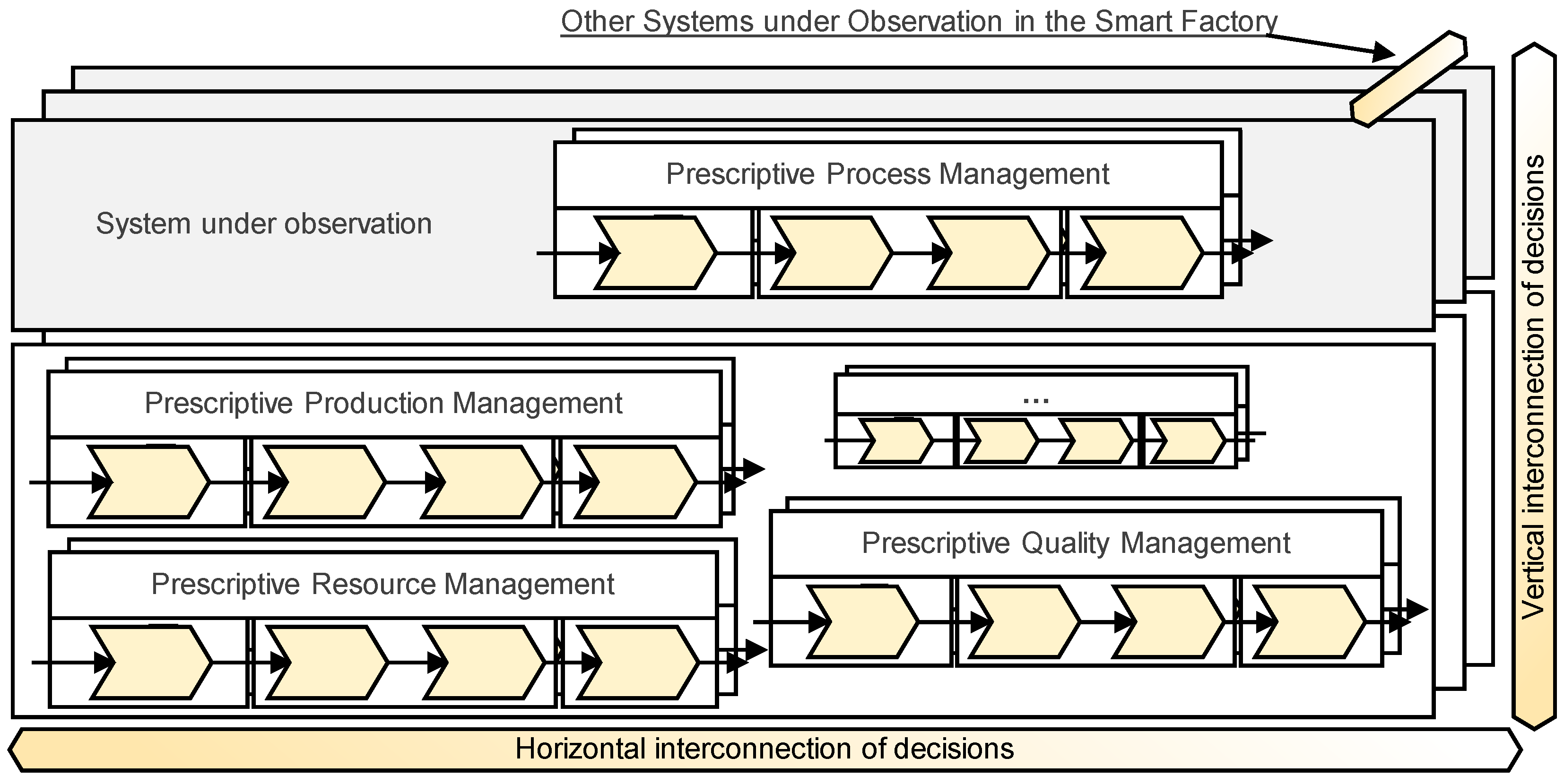

5.2. Integration of Multiple Use Cases into a System under Observation

- The degree of automation in each step (human/machine) as introduced into prescriptive analytics by Gartner [22];

- Possible feedback loops can turn the static system into a framework for learning from past decision processes (e.g., through preferences or metrics measuring the success of the prescribed and executed decision). The required real-time capability needs to be considered when choosing the right implementation pattern for prescriptive analytics [36];

- The degree of interconnectivity between a given decision engine and engines on the same level or on the levels beneath it (e.g., in the automation pyramid or for decisions on a different time horizon) needs to be considered for both the input and output of each implemented solution, in line with a system of systems approach [23,24];

- As designed, the framework applies to one use case with a defined scope and system under observation. Effects on systems outside the system under observation need to be addressed by additional vertically or horizontally connected frameworks (Figure 10). Thus, decisions may take place on the same level (e.g., in another robot cell) or on a higher level (e.g., in the manufacturing execution system of the plant).
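The aspects above (degree of automation per step, feedback loops, and horizontal or vertical links between frameworks) can be illustrated as a small data structure. This Python sketch is a hypothetical model and not an implementation from the article: the class and method names, the step names "diagnose" and "select", the numeric level encoding and the use of a single success score as feedback are all assumptions made for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Actor(Enum):
    """Who carries out a step of the decision process."""
    HUMAN = "human"
    MACHINE = "machine"


@dataclass
class DecisionFramework:
    """One prescriptive analytics use case with its own system under observation (hypothetical model)."""
    name: str
    level: int  # assumed encoding: 0 = cell level, higher numbers = higher levels (e.g. MES)
    automation: dict[str, Actor]  # degree of automation per (hypothetical) step
    horizontal: list[DecisionFramework] = field(default_factory=list)  # same level
    vertical: list[DecisionFramework] = field(default_factory=list)  # higher level
    history: list[float] = field(default_factory=list)  # feedback: success of executed decisions

    def connect(self, other: DecisionFramework) -> None:
        """Register a framework whose decisions are affected by this one."""
        if other.level == self.level:
            self.horizontal.append(other)
        elif other.level > self.level:
            self.vertical.append(other)
        else:
            raise ValueError("connect lower-level frameworks from their own side")

    def record_outcome(self, success: float) -> None:
        """Feedback loop: store a metric measuring the success of an executed decision."""
        self.history.append(success)

    def mean_success(self) -> Optional[float]:
        """Average success of past decisions, or None if no feedback exists yet."""
        return sum(self.history) / len(self.history) if self.history else None

    def machine_steps(self) -> list[str]:
        """Steps that are automated rather than carried out by a human."""
        return [step for step, actor in self.automation.items() if actor is Actor.MACHINE]

    def affected(self) -> list[str]:
        """Names of connected frameworks that need to be informed about decisions."""
        return [f.name for f in self.horizontal + self.vertical]


cell_a = DecisionFramework("robot cell A", 0, {"diagnose": Actor.MACHINE, "select": Actor.HUMAN})
cell_b = DecisionFramework("robot cell B", 0, {"diagnose": Actor.MACHINE, "select": Actor.MACHINE})
mes = DecisionFramework("MES scheduling", 1, {"select": Actor.HUMAN})
cell_a.connect(cell_b)  # horizontal link: same level
cell_a.connect(mes)     # vertical link: higher level
cell_a.record_outcome(1.0)
cell_a.record_outcome(0.5)
print(cell_a.affected())       # ['robot cell B', 'MES scheduling']
print(cell_a.mean_success())   # 0.75
print(cell_a.machine_steps())  # ['diagnose']
```

Keeping the links explicit on each framework mirrors the idea that one framework covers exactly one system under observation, while effects beyond it are handled by connected frameworks.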

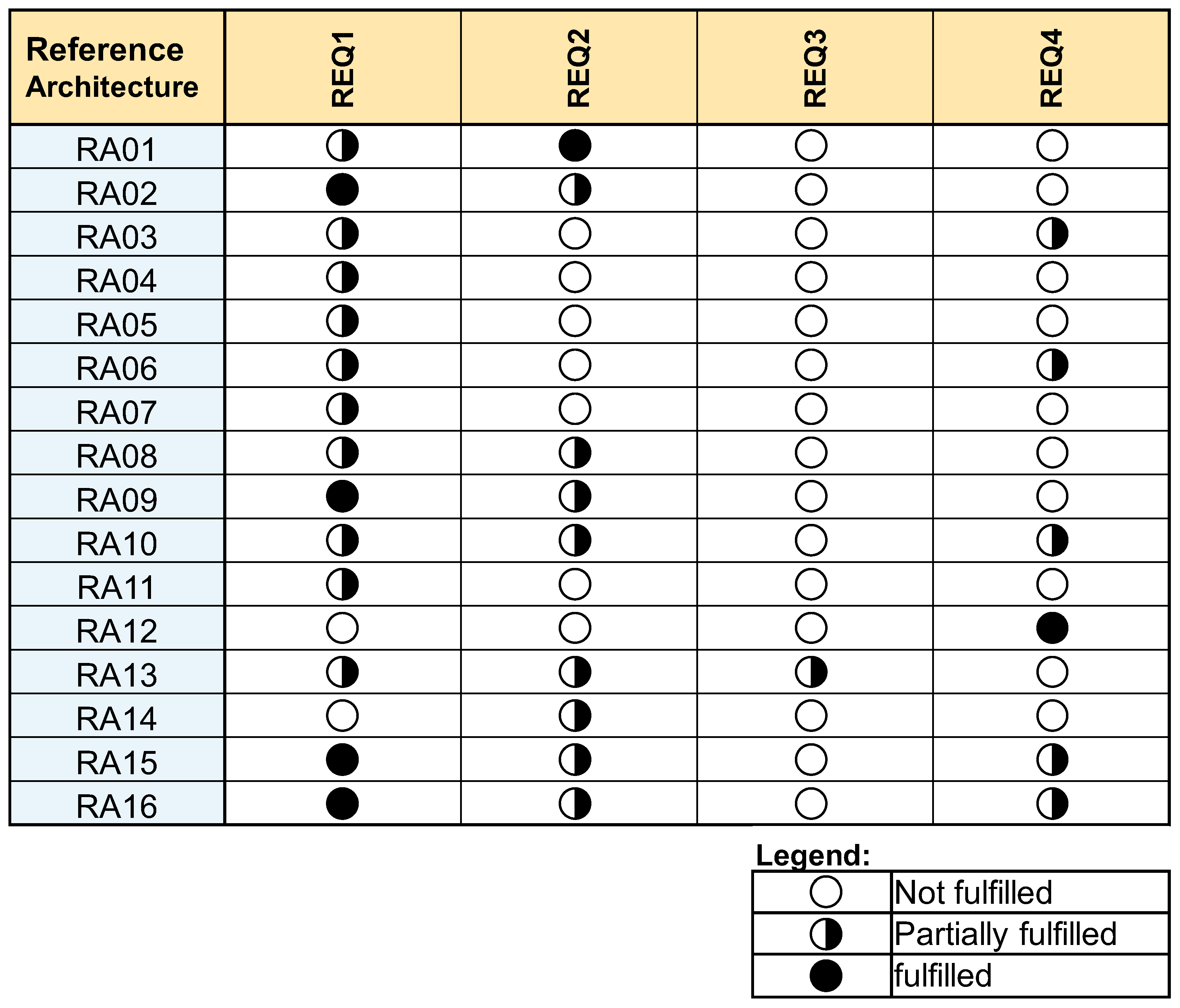

5.3. Definition of Type of Reference Architecture

- REQ1: Existing manufacturing IT systems taken into consideration (based on Section 3). To ensure practical relevance, it is essential to build on the current state of a factory rather than to create an architectural vision that cannot be realized in the near future. This includes integrating existing approaches from all kinds of analytics as well as from control theory and operations research (based on Section 2);

- REQ2: Compatible with existing and established reference architectures. The relevant state of the art needs to be considered. This requires integrating or designing suitable interfaces so that the reference architecture fits seamlessly into the existing state of the art.

5.4. Selection of Design Strategy

5.5. Empirical Acquisition of Data

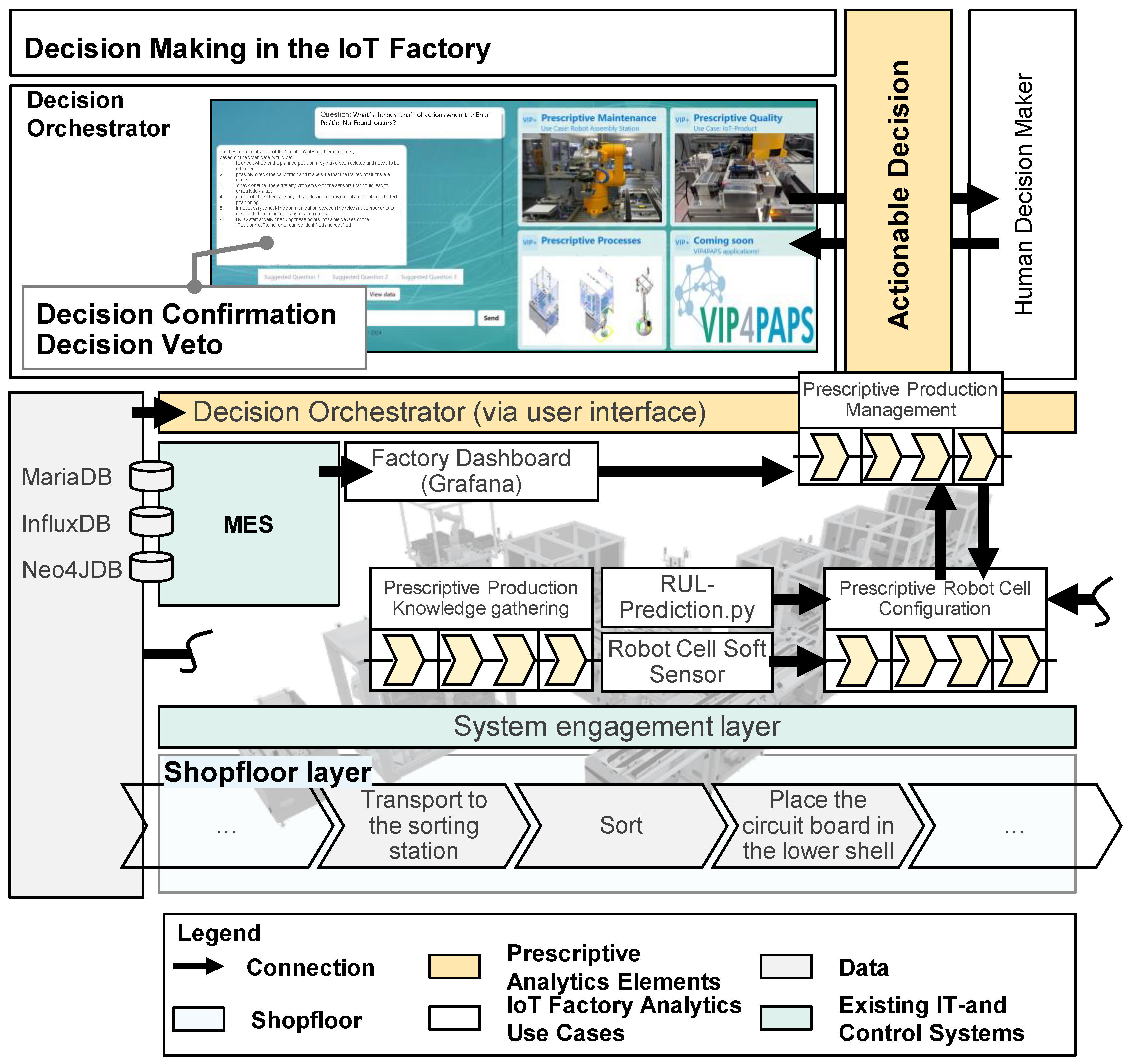

5.6. Construction of Reference Architecture

- Time scale of decisions taken: Use cases differ in terms of the urgency of the decision (ad hoc to long-term) [60] (p. 11). The degree of interconnectedness and the validity of the scope of the decision vary greatly [23]. The same applies to the impact of an individual decision [12] (p. 36). The goals, types and results of decisions vary as well [61] (p. 115). The type of interaction with the decision maker (human/algorithm) further differentiates use cases [62] (p. 10);

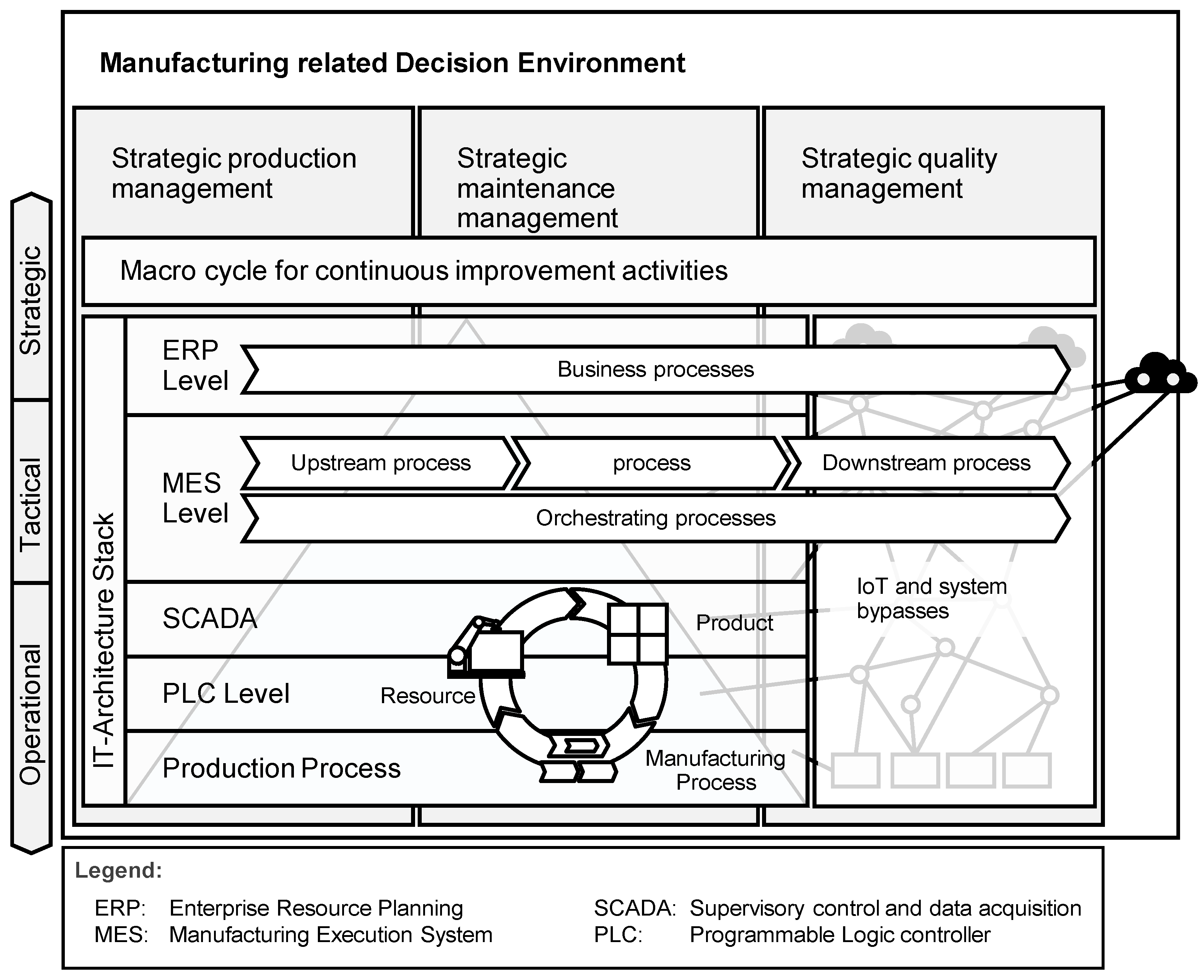

- IT-stack: IT systems vary in terms of their overall role, scope and degree of interconnectivity. There are three key activities in the context of smart factory data: the horizontal and vertical integration of systems and data streams, and a holistic systems engineering approach [63] (p. 15). Horizontal integration describes networking via the control and process management level in the context of procurement and distribution to other companies or entities. It enables fast reaction times in the event of changes and facilitates decentralized and flexible production [63] (p. 13). The core of vertical integration is the linking of IT systems across hierarchical levels. The main advantage of this integration effort is the possibility of synchronizing business processes and workflows across different companies [63,64]. Holistic systems engineering pursues a life cycle approach, adding data from the product development and utilization phases. These data can be made available implicitly at all levels on an event basis [63] (p. 14). These efforts towards networking within the automation pyramid are gradually breaking it up. Concepts from the Internet of Things create bypasses that enable singular solutions with direct networking and the relocation of sub-functions to the cloud. This is rarely about replacing existing systems; rather, the rigid framework for action is being replaced by a network-like structure. It remains to be seen to what extent both trends will level out [65] (p. 7);

- Relevant decision makers (departments): This aspect captures which decision makers (departments, individuals or groups) are involved [16] (p. 13). The decision maker introduces a vital component into the decision-making process based on their preferences and goals;

- The system under observation (smart factory; see Section 2) is itself a relevant aspect. Here, it is briefly described by its resources, products and manufacturing processes [23]. These are interlocked with existing analytics solutions in the smart factory [68]. Existing analytics solutions usually consist of four layers: use case (business), analysis (algorithms), data pools (where the data is located) and data sources (e.g., resources and sensors) [69].
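The black-box aspects described above can be captured as a simple profile per use case, for instance to check whether a use case description is complete before it is mapped onto the architecture. The Python sketch below is hypothetical: the field names, the three-step time scale and the completeness check are our assumptions, and the example values are illustrative only. The aspects themselves and the four analytics layers come from the text.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class TimeScale(Enum):
    """Urgency of a decision, from ad hoc to long-term (assumed three-step scale)."""
    AD_HOC = 1
    MEDIUM_TERM = 2
    LONG_TERM = 3


# Four layers of existing analytics solutions as named in the text
ANALYTICS_LAYERS = ("use case", "analysis", "data pools", "data sources")

# Descriptive fields that must be non-empty for a complete profile
REQUIRED_FIELDS = ("it_systems", "decision_makers", "resources", "products", "processes")


@dataclass
class UseCaseProfile:
    """Black-box description of one prescriptive analytics use case (hypothetical structure)."""
    name: str
    time_scale: TimeScale
    it_systems: list[str] = field(default_factory=list)       # IT-stack, e.g. MES
    decision_makers: list[str] = field(default_factory=list)  # departments, individuals or groups
    resources: list[str] = field(default_factory=list)        # system under observation ...
    products: list[str] = field(default_factory=list)
    processes: list[str] = field(default_factory=list)        # ... manufacturing processes
    analytics: dict[str, str] = field(default_factory=dict)   # layer -> existing solution

    def missing_dimensions(self) -> list[str]:
        """List the aspects of the profile that are still empty."""
        missing = [name for name in REQUIRED_FIELDS if not getattr(self, name)]
        missing += [f"analytics:{layer}" for layer in ANALYTICS_LAYERS if layer not in self.analytics]
        return missing


profile = UseCaseProfile(
    name="prescriptive quality management",  # illustrative values only
    time_scale=TimeScale.AD_HOC,
    it_systems=["MES"],
    decision_makers=["quality department"],
    resources=["robot cell"],
    products=["product variant A"],
    processes=["assembly"],
    analytics={"use case": "defect detection", "data sources": "camera"},
)
print(profile.missing_dimensions())  # ['analytics:analysis', 'analytics:data pools']
```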

- Overview of the respective architecture elements:

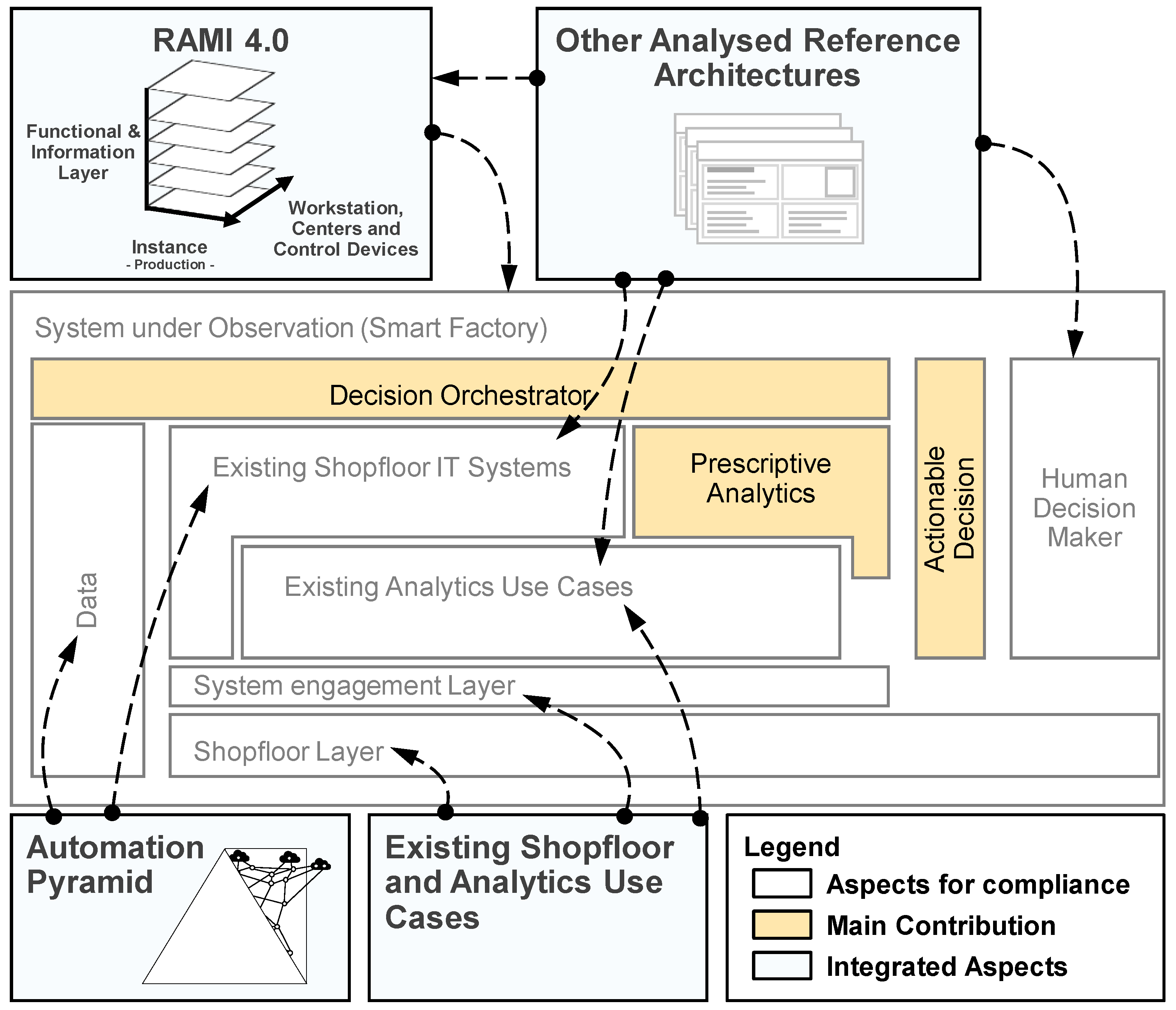

- Existing shopfloor IT systems, existing analytics use cases and shopfloor layer: These elements were already described in the black box view. The shopfloor layer represents the as-is shopfloor with its inherent complexity;

- System engagement layer: This layer represents all real-time oriented systems in a smart factory that are not based on analytics. These include relevant data sources for analytics such as control theory-based algorithms (e.g., compare [70]), digital twin-based technology ([71], descriptive) and other systems authorized to intervene in the shopfloor processes;

- Data: As in most other reference architectures, the data layer represents the data flow through the smart factory IT stacks (horizontal and vertical interconnection) [63];

- Actionable decisions: They represent the core element in the conceptual architecture. They interconnect decision makers and respective systems [72] (p. 18);

- Human decision maker: The human decision maker is an essential element. With up to a full level of autonomy, the human decision maker will always be involved in decisions or their governance. Based on Gartner’s seven levels of hybrid decision making [21], we differentiate between the following decision interaction schemes: decision confirmation, decision veto, decision audit and decision demand. If a decision is demanded, advice- or recommendation-based outputs can occur;

- Decision orchestrator: Lastly, decision oversight mechanisms need to be in place. Even though the concept of Industry 4.0 refers to implementing decentralized decision making, a global optimum still needs to be governed (and achieved). This global optimum might diverge from the local optima of individual areas of the smart factory;

- Levels: The reference architecture is organized in three time-related levels. The action level focuses on real-time (matter of urgency) elements. The planning level addresses smart factory related processes on a higher level of abstraction. The business level gathers input from the surroundings of the system under observation (smart factory). This is in line with structuring approaches that often refer to operational, tactical and strategic decisions (e.g., [15] (p. 163)). All levels are in line with the general understanding of IT systems based on the automation pyramid;

- Decision triggers: Different triggers (and connections) may create the need for an actionable decision [42]. Business triggers from outside the system under observation serve as an input. Direct shopfloor triggers may stem from sensors or partially autonomous systems that diagnose the need for action. If the human decision maker demands a decision, this constitutes another trigger type that needs to be considered;

- Data: The data layer is refined with additional information. Different kinds of data relevant for prescriptive analytics and for the non-prescriptive types of analytics are considered (expert knowledge [17] (p. 67), decision data and historical factory data [4]). These data can be made available in the form most suitable for the given environment (e.g., graph-based [76]).
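To make the interplay of decision triggers, human interaction schemes and the decision orchestrator more concrete, the following Python sketch walks one actionable decision through these elements. It is a simplified illustration under stated assumptions: the numeric local and global value scores are hypothetical, the orchestrator is reduced to picking the alternative with the highest global value, and the execution semantics assigned to confirmation, veto, audit and demand are our reading of the schemes, not definitions from Gartner [21].

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Level(Enum):
    """Time-related levels of the reference architecture."""
    ACTION = "action"      # real-time elements
    PLANNING = "planning"
    BUSINESS = "business"


class Trigger(Enum):
    """Sources that create the need for an actionable decision."""
    BUSINESS = "business"          # from outside the system under observation
    SHOPFLOOR = "shopfloor"        # sensors or partially autonomous systems
    HUMAN_DEMAND = "human demand"  # the human decision maker asks for a decision


class Interaction(Enum):
    """Decision interaction schemes between system and human decision maker."""
    CONFIRMATION = "confirmation"
    VETO = "veto"
    AUDIT = "audit"
    DEMAND = "demand"


@dataclass(frozen=True)
class Alternative:
    action: str
    local_value: float   # hypothetical benefit for the area that raised the decision
    global_value: float  # hypothetical benefit for the smart factory as a whole


@dataclass
class ActionableDecision:
    level: Level
    trigger: Trigger
    interaction: Interaction
    alternatives: list[Alternative]


def orchestrate(decision: ActionableDecision) -> tuple[Alternative, bool]:
    """Pick the globally best alternative and report whether it differs from the local optimum."""
    best_global = max(decision.alternatives, key=lambda a: a.global_value)
    best_local = max(decision.alternatives, key=lambda a: a.local_value)
    return best_global, best_global != best_local


def execute(decision: ActionableDecision, human_ok: Optional[bool] = None) -> str:
    """Apply assumed semantics of each interaction scheme to the orchestrated choice."""
    choice, diverges = orchestrate(decision)
    note = " (overrides local optimum)" if diverges else ""
    if decision.interaction is Interaction.DEMAND:
        return f"recommend {choice.action}{note}"  # advice only; the human acts
    if decision.interaction is Interaction.CONFIRMATION and not human_ok:
        return f"await confirmation for {choice.action}{note}"
    if decision.interaction is Interaction.VETO and human_ok is False:
        return f"vetoed {choice.action}"
    audit = " [logged for audit]" if decision.interaction is Interaction.AUDIT else ""
    return f"execute {choice.action}{note}{audit}"


decision = ActionableDecision(
    level=Level.ACTION,
    trigger=Trigger.SHOPFLOOR,
    interaction=Interaction.VETO,
    alternatives=[
        Alternative("rework part in cell A", local_value=0.9, global_value=0.4),
        Alternative("reroute part to cell B", local_value=0.6, global_value=0.8),
    ],
)
print(execute(decision))                  # execute reroute part to cell B (overrides local optimum)
print(execute(decision, human_ok=False))  # vetoed reroute part to cell B
```

In this example, the shopfloor-triggered decision would favor local rework, but the orchestrator selects rerouting because it scores higher for the factory as a whole, illustrating how a global optimum can diverge from a local one.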

5.7. Variability of Reference Architecture

6. Evaluation of the Constructed Reference Architecture

6.1. Demonstration and Application of the Proposed Reference Architecture

6.1.1. Enabler Use Case: Structured Gathering of Prescriptive Analytics Knowledge

6.1.2. Use Case: Prescriptive Resource Management

6.1.3. Use Case: Prescriptive Quality Management

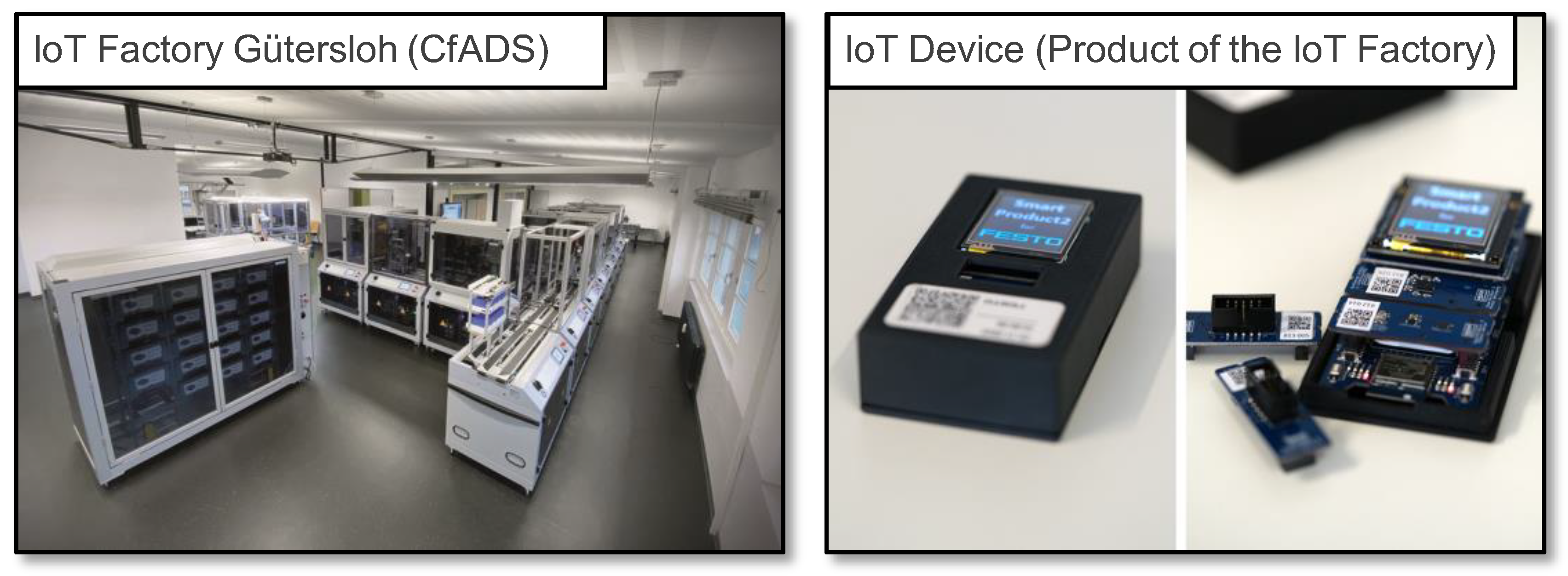

6.1.4. Application of the Reference Architecture to the IoT Factory

6.2. Integration of the Reference Architecture into the Existing State of the Art

- REQ1: Existing manufacturing IT systems are taken into consideration through integrating them into the core of the reference architecture. Variability is enabled through only defining interaction schemes and leaving room for the usage of different IT systems in the reference architecture;

- REQ2: The approach is compatible with existing and established reference architectures. This is ensured by building upon the existing state of the art. The detailed interconnection to other architectures is reasoned upon in the following section;

- REQ3: A focus on actionable decisions was ensured by integrating them into the architecture as a key element. Variability is enabled by defining different interaction modes;

- REQ4: The representation of different forms of decision interactions is enabled by the elements described for REQ3.

- The contribution of this article is the integration of prescriptive analytics into the existing logical connections regarding data flow and IT systems. Based on the state of the art (other analyzed reference architectures, compare Section 3.3), we conclude that the characteristics of prescriptive analytics were not fully addressed in previously proposed architectures. Reference architectures like [81] already provide good insights into the overall integration of machine learning applications into the shopfloor. Use cases like [82,83] add value by defining specialized workflows that can serve as use case-specific instantiations of the reference architecture;

- The overall system under observation (smart factory) is already well analyzed in established reference architectures like the automation pyramid and RAMI 4.0, as well as in numerous analytics-based use case implementations. Thus, we only integrate the existing findings and focus our contribution on the prescriptive analytics-specific elements of the reference architecture.

6.3. Evaluation through Expert Interviews

7. Discussion

7.1. Discussion of the Framework for Singular Use Cases

7.2. Discussion on the Reference Architecture for the Integration of Prescriptive Analytics Use Cases into Smart Factories

8. Conclusions

- Related to this specific journal article: The provided view can be expanded to a set of views (framework) to address the conceptualization and implementation of prescriptive analytics. For this, additional views need to be examined and structured. Validation is needed to explore the interconnections to adjacent domains as well as more complex use cases and their connections;

- Related to the overall field of research: Additional use cases with a higher technical readiness level need to be developed to further judge the implications for organizations and for overall decision optima when multiple use cases are in use. A clearer understanding of what prescriptive analytics provides needs to be established so that the term is understood more clearly (and used accordingly) in the future.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Use Case | Reference | Use Case | Reference |
| --- | --- | --- | --- |
| Use Case 1 | [92] | Use Case 18 | [93] |
| Use Case 2 | [94] | Use Case 19 | [95] |
| Use Case 3 | [96] | Use Case 20 | [97] |
| Use Case 4 | [98] | Use Case 21 | [99] |
| Use Case 5 | [100] | Use Case 22 | [10] |
| Use Case 6 | [101] | Use Case 23 | [102] |
| Use Case 7 | [29] | Use Case 24 | [83] |
| Use Case 8 | [66] | Use Case 25 | [24] |
| Use Case 9 | [103] | Use Case 26 | [104] |
| Use Case 10 | [105] | Use Case 27 | [106] |
| Use Case 11 | [107] | Use Case 28 | [108] |
| Use Case 12 | [109] | Use Case 29 | [82] |
| Use Case 13 | [110] | Use Case 30 | [111] |
| Use Case 14 | [112] | Use Case 31 | [113] |
| Use Case 15 | [114] | Use Case 32 | [115] |
| Use Case 16 | [116] | Use Case 33 | [117] |
| Use Case 17 | [118] | Use Case 34 | [119] |
| Use Case 35 | [120] | | |

References

- Gachet, A.; Haettenschwiler, P. Development Processes of Intelligent Decision-making Support Systems: Review and Perspective. In Intelligent Decision-Making Support Systems: Foundations, Applications and Challenges; Gupta, J.N.D., Forgionne, G.A., Mora, M., Eds.; Springer: London, UK, 2006; pp. 97–121. ISBN 978-1-84628-228-7. [Google Scholar]

- de Almeida, A.T.; Bohoris, G.A. Decision theory in maintenance decision making. J. Qual. Maint. Eng. 1995, 1, 39–45. [Google Scholar] [CrossRef]

- Kiesel, R.; Gützlaff, A.; Schmitt, R.H.; Schuh, G. Methods and Limits of Data-Based Decision Support in Production Management. In Internet of Production; Brecher, C., Schuh, G., van der Aalst, W., Jarke, M., Piller, F.T., Padberg, M., Eds.; Springer International Publishing: Cham, Switzerland, 2024; ISBN 978-3-031-44496-8. [Google Scholar]

- Bousdekis, A.; Lepenioti, K.; Apostolou, D.; Mentzas, G. A Review of Data-Driven Decision-Making Methods for Industry 4.0 Maintenance Applications. Electronics 2021, 10, 828. [Google Scholar] [CrossRef]

- Duan, L.; Da Xu, L. Data Analytics in Industry 4.0: A Survey. Inf. Syst. Front. 2021, 1–17. [Google Scholar] [CrossRef]

- Bell, D.E.; Raiffa, H.; Tversky, A. (Eds.) Descriptive, Normative, and Prescriptive Interactions in Decision Making. In Decision Making; Cambridge University Press: Cambridge, UK, 2011; pp. 9–30. ISBN 9780521351492. [Google Scholar]

- von Enzberg, S.; Naskos, A.; Metaxa, I.; Köchling, D.; Kühn, A. Implementation and Transfer of Predictive Analytics for Smart Maintenance: A Case Study. Front. Comput. Sci. 2020, 2, 578469. [Google Scholar] [CrossRef]

- Schuetz, C.G.; Selway, M.; Thalmann, S.; Schrefl, M. Discovering Actionable Knowledge for Industry 4.0 from Data Mining to Predictive and Prescriptive Analytics. In Digital Transformation: Core Technologies and Emerging Topics from a Computer Science Perspective; Vogel-Heuser, B., Wimmer, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2023; p. 337. ISBN 978-3-662-65003-5. [Google Scholar]

- Weller, J.; Migenda, N.; Naik, Y.; Heuwinkel, T.; Kühn, A.; Kohlhase, M.; Schenck, W.; Dumitrescu, R. Reference Architecture for the Integration of Prescriptive Analytics Use Cases in Smart Factories. In Mathematics, Special Issue for Selected Papers From the 2023 IEEE International Conference on Advances in Data-Driven Analytics and Intelligent Systems, Marrakech, Morocco, 23–25 November 2023; MDPI: Basel, Switzerland, 2024. [Google Scholar]

- Choubey, S.; Benton, R.G.; Johnsten, T. A Holistic End-to-End Prescriptive Maintenance Framework. Data-Enabled Discov. Appl. 2020, 4, 11. [Google Scholar] [CrossRef]

- Niederhaus, M.; Migenda, N.; Weller, J.; Schenck, W.; Kohlhase, M. Technical Readiness of Prescriptive Analytics Platforms—A Survey. In Proceedings of the 35th FRUCT Conference—Open Innovations Association FRUCT, Tampere, Finland, 24–26 April 2024. [Google Scholar]

- Budde, L.; Hänggi, R.; Friedli, T.; Rüedy, A. Smart Factory Navigator: Identifying and Implementing the Most Beneficial Use Cases for Your Company—44 Use Cases That Will Drive Your Operational Performance and Digital Service Business; Springer: Berlin/Heidelberg, Germany, 2023. [Google Scholar]

- Zenkert, J.; Weber, C.; Dornhöfer, M.; Abu-Rasheed, H.; Fathi, M. Knowledge Integration in Smart Factories. Encyclopedia 2021, 1, 792–811. [Google Scholar] [CrossRef]

- Roth, A. (Ed.) Einführung und Umsetzung von Industrie 4.0: Grundlagen, Vorgehensmodell und Use Cases aus der Praxis; Springer: Berlin/Heidelberg, Germany, 2016; ISBN 3662485044. [Google Scholar]

- Mockenhaupt, A. Digitalisierung und Künstliche Intelligenz in der Produktion; Springer: Wiesbaden, Germany, 2021; ISBN 978-3-658-32772-9. [Google Scholar]

- Becker, W.; Ulrich, P.; Botzkowski, T. Data Analytics im Mittelstand: Aus: Management und Controlling im Mittelstand; Springer: Berlin/Heidelberg, Germany, 2016. [Google Scholar]

- Lepenioti, K.; Bousdekis, A.; Apostolou, D.; Mentzas, G. Prescriptive analytics: Literature review and research challenges. Int. J. Inf. Manag. 2020, 50, 57–70. [Google Scholar] [CrossRef]

- von Rueden, L.; Mayer, S.; Beckh, K.; Georgiev, B.; Giesselbach, S.; Heese, R.; Kirsch, B.; Walczak, M.; Pfrommer, J.; Pick, A.; et al. Informed Machine Learning—A Taxonomy and Survey of Integrating Prior Knowledge into Learning Systems. IEEE Trans. Knowl. Data Eng. 2021, 35, 614–633. [Google Scholar] [CrossRef]

- Pfeiffer, S. The Vision of “Industrie 4.0” in the Making-a Case of Future Told, Tamed, and Traded. Nanoethics 2017, 11, 107–121. [Google Scholar] [CrossRef]

- Auer, J. Industry 4.0—Digitalisation to Mitigate Demographic Pressure: Germany Monitor—The Digital Economy and Structural Change; Deutsche Bank Research: Frankfurt am Main, Germany, 2018. [Google Scholar]

- Gartner. When to Augment Decisions with Artificial Intelligence: Guides for Effective Business Decision Making; Guide 3 of 5 2022; Gartner: Stamford, CT, USA, 2022. [Google Scholar]

- Steenstrup, K.; Sallam, R.L.; Eriksen, L.; Jacobson, S.F. Industrial Analytics Revolutionizes Big Data in the Digital Business; Gartner, Inc.: Stamford, CT, USA, 2014. [Google Scholar]

- Weller, J.; Migenda, N.; Liu, R.; Wegel, A.; von Enzberg, S.; Kohlhase, M.; Schenck, W.; Dumitrescu, R. Towards a Systematic Approach for Prescriptive Analytics Use Cases in Smart Factories. In ML4CPS—Machine Learning for Cyber Physical Systems; Springer: Cham, Switzerland, 2023. [Google Scholar]

- Stein, N.; Meller, J.; Flath, C.M. Big data on the shop-floor: Sensor-based decision-support for manual processes. J. Bus. Econ. 2018, 88, 593–616. [Google Scholar] [CrossRef]

- Lu, J.; Yan, Z.; Han, J.; Zhang, G. Data-Driven Decision-Making (D3M): Framework, Methodology, and Directions. IEEE Trans. Emerg. Top. Comput. Intell. 2019, 3, 286–296. [Google Scholar] [CrossRef]

- Luetkehoff, B.; Blum, M.; Schroeter, M. Development of a Collaborative Platform for Closed Loop Production Control. In Collaborative Networks of Cognitive Systems; Camarinha-Matos, L.M., Afsarmanesh, H., Rezgui, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 278–285. ISBN 978-3-319-99126-9. [Google Scholar]

- Shivakumar, R. How to Tell Which Decisions are Strategic. Calif. Manag. Rev. 2014, 56, 78–97. [Google Scholar] [CrossRef]

- Weller, J.; Migenda, N.; von Enzberg, S.; Kohlhase, M.; Schenck, W.; Dumitrescu, R. Design decisions for integrating Prescriptive Analytics Use Cases into Smart Factories. In Proceedings of the 34th CIRP Design Conference, Bedford, UK, 3–5 June 2024. [Google Scholar]

- Kumari, M.; Kulkarni, M.S. Developing a prescriptive decision support system for shop floor control. Ind. Manag. Data Syst. 2022, 122, 1853–1881. [Google Scholar] [CrossRef]

- Simon, H.A. The New Science of Management Decision; Harper & Brothers: New York, NY, USA, 1960. [Google Scholar]

- Panagiotou, G. Conjoining prescriptive and descriptive approaches. Manag. Decis. 2008, 46, 553–564. [Google Scholar] [CrossRef]

- Haas, S.; Hüllermeier, E. A Prescriptive Machine Learning Approach for Assessing Goodwill in the Automotive Domain. In Proceedings of the Machine Learning and Knowledge Discovery in Databases, European Conference, ECML PKDD 2022, Proceedings, Part VI, Grenoble, France, 19–23 September 2022. [Google Scholar]

- Partelow, S. What is a framework? Understanding their purpose, value, development and use. J. Environ. Stud. Sci. 2023, 13, 510–519. [Google Scholar] [CrossRef]

- Piezunka, H. Technological platforms. J. Betriebswirtsch. 2011, 61, 179–226. [Google Scholar] [CrossRef]

- ISO/IEC 20547-3:2020; Information Technology—Big Data Reference Architecture—Part 3: Reference Architecture. ISO: Geneva, Switzerland, 2020.

- Wissuchek, C.; Zschech, P. Survey and Systematization of Prescriptive Analytics Systems: Towards Archetypes from a Human-Machine-Collaboration Perspective; ECIS: London, UK, 2023. [Google Scholar]

- Arnold, L.; Jöhnk, J.; Vogt, F.; Urbach, N. IIoT platforms’ architectural features—A taxonomy and five prevalent archetypes. Electron. Mark. 2022, 32, 927–944. [Google Scholar] [CrossRef]

- Moghaddam, M.; Cadavid, M.N.; Kenley, C.R.; Deshmukh, A.V. Reference architectures for smart manufacturing: A critical review. J. Manuf. Syst. 2018, 49, 215–225. [Google Scholar] [CrossRef]

- Ismail, A.; Truong, H.-L.; Kastner, W. Manufacturing process data analysis pipelines: A requirements analysis and survey. J. Big Data 2019, 6, 1. [Google Scholar] [CrossRef]

- Soares, N.; Monteiro, P.; Duarte, F.J.; Machado, R.J. Extending the scope of reference models for smart factories. Procedia Comput. Sci. 2021, 180, 102–111. [Google Scholar] [CrossRef]

- Galster, M.; Avgeriou, P. Empirically-grounded reference architectures. In Proceedings of the Joint ACM SIGSOFT Conference—QoSA and ACM SIGSOFT Symposium—ISARCS on Quality of Software Architectures—QoSA and Architecting Critical Systems—ISARCS. CompArch ’11: Federated Events on Component-Based Software Engineering and Software Architecture, Boulder, CO, USA, 20–24 June 2011; Crnkovic, I., Stafford, J.A., Petriu, D., Happe, J., Inverardi, P., Eds.; ACM: New York, NY, USA, 2011; pp. 153–158, ISBN 9781450307246. [Google Scholar]

- Weller, J.; Migenda, N.; Wegel, A.; Kohlhase, M.; Schenck, W.; Dumitrescu, R. Conceptual Framework for Prescriptive Analytics Based on Decision Theory in Smart Factories. In Proceedings of the ADACIS 2023: International Conference on Advances in Data-driven Analytics and Intelligent Systems, Marrakesh, Morocco, 23–25 November 2023; pp. 1–7. [Google Scholar] [CrossRef]

- Angelov, S.; Grefen, P.; Greefhorst, D. A classification of software reference architectures: Analyzing their success and effectiveness. In Proceedings of the 2009 Joint Working IEEE/IFIP Conference on Software Architecture & European Conference on Software Architecture, 3rd European Conference on Software Architecture (ECSA), Cambridge, UK, 14–17 September 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 141–150, ISBN 978-1-4244-4984-2. [Google Scholar]

- Vogel, O.; Arnold, I.; Chughtai, A.; Ihler, E.; Kehrer, T.; Mehlig, U.; Zdun, U. Software-Architektur: Grundlagen-Konzepte-Praxis, 2nd ed.; Spektrum Akademischer Verlag: Heidelberg, Germany, 2009; ISBN 9783827422675. [Google Scholar]

- Myers, M.D.; Newman, M. The qualitative interview in IS research: Examining the craft. Inf. Organ. 2007, 17, 2–26. [Google Scholar] [CrossRef]

- Angelov, S.; Trienekens, J.J.M.; Grefen, P. Towards a Method for the Evaluation of Reference Architectures: Experiences from a Case. In Proceedings of the Second European Conference on Software Architecture, Paphos, Cyprus, 29 September–1 October 2008. [Google Scholar]

- Shearer, C. The CRISP-DM Model: The New Blueprint for Data Mining. J. Data Warehous. 2000, 5, 13–22. [Google Scholar]

- Martinez, I.; Viles, E.; Olaizola, I.G. Data Science Methodologies: Current Challenges and Future Approaches. Big Data Res. 2021, 24, 100183. [Google Scholar] [CrossRef]

- Mora, M.; Cervantes, F.; Forgionne, G.; Gelman, O. On Frameworks and Architectures for Intelligent Decision-Making Support Systems. In Encyclopedia of Decision Making and Decision Support Technologies; Adam, F., Humphreys, P., Eds.; IGI Global: London, UK, 2008; pp. 680–690. ISBN 9781599048437. [Google Scholar]

- Meister, F.; Khanal, P.; Daub, R. Digital-supported problem solving for shopfloor steering using case-based reasoning and Bayesian networks. Procedia CIRP 2023, 119, 140–145. [Google Scholar] [CrossRef]

- Trunk, A.; Birkel, H.; Hartmann, E. On the current state of combining human and artificial intelligence for strategic organizational decision making. Bus. Res. 2020, 13, 875–919. [Google Scholar] [CrossRef]

- Big Data Value Association. Big Data Challenges: A Discussion Paper on Big Data challenges for BDVA and EFFRA Research & Innovation Roadmaps Alignment; Version 1; Big Data Value Association: Brussels, Belgium, 2018. [Google Scholar]

- Gabriel, S.; Falkowski, T.; Graunke, J.; Dumitrescu, R.; Murrenhoff, A.; Kretschmer, V.; Hompel, M.T. Künstliche Intelligenz und industrielle Arbeit–Perspektiven und Gestaltungsoptionen: Expertise des Forschungsbeirats Industrie 4.0; Acatech Expertise–Deutsche Akademie der Technikwissenschaften: München, Germany, 2024. [Google Scholar]

- Weller, J.; Migenda, N.; Kühn, A.; Dumitrescu, R. Prescriptive Analytics Data Canvas: Strategic Planning For Prescriptive Analytics In Smart Factories. In Proceedings of the CPSL-Conference on Production Systems and Logistics, Honolulu, HI, USA, 9–12 July 2024; Publish-Ing: Hannover, Germany, 2024. [Google Scholar]

- Thiess, T.; Müller, O. Towards Design Principles for Data-Driven Decision Making—An Action Design Research Project in the Maritime Industry. In ECIS 2018 Proceedings; AIS Electronic Library (AISeL): Atlanta, GA, USA, 2018. [Google Scholar]

- Karim, R.; Galar, D.; Kumar, U. AI factory: Theories, Applications and Case Studies, 1st ed.; CRC Press Taylor & Francis Group: Boca Raton, FL, USA, 2023; ISBN 9781003208686. [Google Scholar]

- ISO/IEC/IEEE 42010:2022; Software, Systems and Enterprise—Architecture Description. ISO: Geneva, Switzerland, 2022.

- Lankhorst, M. Enterprise Architecture at Work: Modelling, Communication and Analysis, 4th ed.; Springer: Berlin/Heidelberg, Germany, 2017. [Google Scholar] [CrossRef]

- Ghasemaghaei, M.; Turel, O. The Duality of Big Data in Explaining Decision-Making Quality. J. Comput. Inf. Syst. 2023, 63, 1093–1111. [Google Scholar] [CrossRef]

- Edwards, J.S.; Rodriguez, E. Analytics and Knowledge Management—Chapter 1: Knowledge Management for Action-Oriented Analytics; CRC Press Taylor & Francis Group: Boca Raton, FL, USA; London, UK; New York, NY, USA, 2018; ISBN 9781315209555. [Google Scholar]

- Longard, L.; Bardy, S.; Metternich, J. Towards a Data-Driven Performance Management in Digital Shop Floor Management; Publish-Ing: Hannover, Germany, 2022. [Google Scholar]

- Ransbotham, S.; Khodabandeh, S.; Kiron, D.; Candelon, F.; Chu, M.; LaFountain, B. Expanding AI’s Impact with Organizational Learning; MIT Sloan Management Review Research report in collaboration with BCG; MIT: Boston, MA, USA, 2020. [Google Scholar]

- Dumitrescu, R.; Gausemeier, J.; Kühn, A.; Luckey, M.; Plass, C.; Schneider, M.; Westermann, T. Auf dem Weg zur Industrie 4.0–Erfolgsfaktor Referenzarchitektur; It’s OWL Clustermanagement: Paderborn, Germany, 2015. [Google Scholar]

- Kagermann, H.; Wahlster, W.; Helbig, J. Forschungsunion Wirtschaft-Wissenschaft. Im Fokus: Das Zukunftsprojekt Industrie 4.0; Handlungsempfehlungen zur Umsetzung; Bericht der Promotorengruppe Kommunikation; Forschungsunion. 2012. Available online: https://www.acatech.de/wp-content/uploads/2018/03/industrie_4_0_umsetzungsempfehlungen.pdf (accessed on 1 May 2024).

- Meudt, T. Die Automatisierungspyramide-Ein Literaturüberblick. 2017. Available online: https://www.researchgate.net/profile/Tobias-Meudt/publication/318788885_Die_Automatisierungspyramide_-_Ein_Literaturuberblick/links/619f8d18b3730b67d5679e63/Die-Automatisierungspyramide-Ein-Literaturueberblick.pdf (accessed on 1 May 2024).

- Schuh, G.; Prote, J.-P.; Busam, T.; Lorenz, R.; Netland, T.H. Using Prescriptive Analytics to Support the Continuous Improvement Process; Springer International Publishing: Cham, Switzerland, 2019. [Google Scholar] [CrossRef]

- Meister, M.; Böing, T.; Batz, S.; Metternich, J. Problem-solving process design in production: Current progress and action required. Procedia CIRP 2018, 78, 376–381. [Google Scholar] [CrossRef]

- Geissbauer, R.; Bruns, M.; Wunderlin, J. PwC Digital Factory Transformation Survey: Digital Backbone, Use Cases and Technologies, Organizational Setup, Strategy and Roadmap, Investment Focus 2022. Available online: https://theonliner.ch/uploads/heroes/pwc-digital-factory-transformation-survey-2022.pdf (accessed on 1 May 2024).

- Kühn, A.; Joppen, R.; Reinhart, F.; Röltgen, D.; von Enzberg, S.; Dumitrescu, R. Analytics Canvas—A Framework for the Design and Specification of Data Analytics Projects. Procedia CIRP 2018, 70, 162–167. [Google Scholar] [CrossRef]

- Dumitrescu, R.; Anacker, H.; Gausemeier, J. Design framework for the integration of cognitive functions into intelligent technical systems. Prod. Eng. Res. Devel. 2013, 7, 111–121. [Google Scholar] [CrossRef]

- Lick, J.; Disselkamp, J.-P.; Kattenstroth, F.; Trienens, M.; Rasor, R.; Kühn, A.; Dumitrescu, R. Digital Factory Twin: A Practitioner-Driven Approach for Integrated Planning of the Enterprise Architecture. In Proceedings of the 34th CIRP Design Conference, Cranfield, UK, 3–5 June 2024. [Google Scholar]

- Cao, L. Data Science. ACM Comput. Surv. 2018, 50, 1–42. [Google Scholar] [CrossRef]

- Siemens. Senseye Predictive Maintenance-Whitepaper True Cost of Downtime 2022. 2023. Available online: https://assets.new.siemens.com/siemens/assets/api/uuid:3d606495-dbe0-43e4-80b1-d04e27ada920/dics-b10153-00-7600truecostofdowntime2022-144.pdf (accessed on 1 May 2024).

- Wegel, A.; Sahrhage, P.; Rabe, M.; Dumitrescu, R. Referenzarchitektur für Smart Services. Stuttgarter Symposium für Produktentwicklung SSP 2021: Stuttgart, 20. Mai 2021, Wissenschaftliche Konferenz; Fraunhofer-Institut für Arbeitswirtschaft und Organisation IAO: Stuttgart, Germany, 2021. [Google Scholar] [CrossRef]

- Rabe, M. Systematik zur Konzipierung von Smart Services [Systematic Approach to the Conception of Smart Services]. Ph.D. Dissertation, Universität Paderborn, Paderborn, Germany, 2019. [Google Scholar]

- Hodler, A.E. Artificial Intelligence & Graph Technology: Enhancing AI with Context & Connections; Neo4j, Inc.: San Mateo, CA, USA, 2021. [Google Scholar]

- Branke, J.; Mnif, M.; Müller-Schloer, C.; Prothmann, H.; Richter, U.; Rochner, F.; Schmeck, H. Organic Computing – Addressing Complexity by Controlled Self-Organization. In Proceedings of the Second International Symposium on Leveraging Applications of Formal Methods, Verification and Validation (ISoLA 2006), Paphos, Cyprus, 15–19 November 2006; IEEE: Piscataway, NJ, USA, 2006; pp. 185–191, ISBN 978-0-7695-3071-0. [Google Scholar]

- The Open Group. The ArchiMate® Enterprise Architecture Modeling Language: About the ArchiMate Modeling Language. Available online: https://www.opengroup.org/archimate-forum/archimate-overview (accessed on 1 May 2024).

- HSBI, Center for Applied Data Science. IoT-Factory. Available online: https://www.hsbi.de/ium/cfads/projekte/iot-factory (accessed on 26 March 2024).

- North, C. Information Visualization. Chapter 43. In Handbook of Human Factors and Ergonomics, 4th ed.; Salvendy, G., Ed.; Wiley: Hoboken, NJ, USA, 2012; ISBN 978-0-470-52838-9. [Google Scholar]

- Wostmann, R.; Schlunder, P.; Temme, F.; Klinkenberg, R.; Kimberger, J.; Spichtinger, A.; Goldhacker, M.; Deuse, J. Conception of a Reference Architecture for Machine Learning in the Process Industry. In Proceedings of the 2020 IEEE International Conference on Big Data (Big Data), Atlanta, GA, USA, 10–13 December 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1726–1735, ISBN 978-1-7281-6251-5. [Google Scholar]

- Padovano, A.; Longo, F.; Nicoletti, L.; Gazzaneo, L.; Chiurco, A.; Talarico, S. A prescriptive maintenance system for intelligent production planning and control in a smart cyber-physical production line. Procedia CIRP 2021, 104, 1819–1824. [Google Scholar] [CrossRef]

- Ansari, F.; Glawar, R.; Nemeth, T. PriMa: A prescriptive maintenance model for cyber-physical production systems. Int. J. Comput. Integr. Manuf. 2019, 32, 482–503. [Google Scholar] [CrossRef]

- von Enzberg, S.; Weller, J.; Brock, J.; Merkelbach, S.; Panzner, M.; Lick, J.; Kühn, A.; Dumitrescu, R. On the Current State of Industrial Data Science: Challenges, Best Practices, and Future Directions. In Proceedings of the 57th CIRP Conference on Manufacturing Systems 2024 (CMS 2024), Póvoa de Varzim, Portugal, 6–9 July 2024. [Google Scholar]

- Koot, M.; Mes, M.; Iacob, M.E. A systematic literature review of supply chain decision making supported by the Internet of Things and Big Data Analytics. Comput. Ind. Eng. 2021, 154, 107076. [Google Scholar] [CrossRef]

- Hüllermeier, E.; Waegeman, W. Aleatoric and Epistemic Uncertainty in Machine Learning: An Introduction to Concepts and Methods. Mach. Learn. 2021, 110, 457–506. [Google Scholar] [CrossRef]

- Hüllermeier, E. Prescriptive Machine Learning for Automated Decision Making: Challenges and Opportunities. 2021. Available online: http://arxiv.org/pdf/2112.08268v1 (accessed on 1 May 2024).

- Hankel, M.; Rexroth, B. The Reference Architectural Model Industrie 4.0; ZVEI: Frankfurt, Germany, 2015. [Google Scholar]

- Korsten, G.; Aysolmaz, B.; Turetken, O.; Edel, D.; Ozkan, B. ADA-CMM: A Capability Maturity Model for Advanced Data Analytics. In Proceedings of the 55th Hawaii International Conference on System Sciences, Virtual, 3–7 January 2022. [Google Scholar]

- Beauvoir, P.; Sarrodie, J.-B. Archi – ArchiMate Modelling (Website); ArchiMate Is a Registered Trademark of The Open Group. Available online: https://www.archimatetool.com/ (accessed on 7 May 2024).

- Gabriel, S.; Kühn, A.; Dumitrescu, R. Strategic planning of the collaboration between humans and artificial intelligence in production. Procedia CIRP 2023, 120, 1309–1314. [Google Scholar] [CrossRef]

- Lepenioti, K.; Pertselakis, M.; Bousdekis, A.; Louca, A.; Lampathaki, F.; Apostolou, D.; Mentzas, G.; Anastasiou, S. Machine Learning for Predictive and Prescriptive Analytics of Operational Data in Smart Manufacturing 2020; Springer International Publishing: Cham, Switzerland, 2020. [Google Scholar] [CrossRef]

- Jasiulewicz-Kaczmarek, M.; Gola, A. Maintenance 4.0 Technologies for Sustainable Manufacturing—An Overview. IFAC-PapersOnLine 2019, 52, 91–96. [Google Scholar] [CrossRef]

- Listl, F.G.; Fischer, J.; Rosen, R.; Sohr, A.; Wehrstedt, J.C.; Weyrich, M. Decision Support on the Shop Floor Using Digital Twins. In Advances in Production Management Systems. Artificial Intelligence for Sustainable and Resilient Production Systems; Dolgui, A., Bernard, A., Lemoine, D., von Cieminski, G., Romero, D., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 284–292. ISBN 978-3-030-85873-5. [Google Scholar]

- Matenga, A.; Murena, E.; Mpofu, K. Prescriptive Modelling System Design for an Armature Multi-coil Rewinding Cobot Machine. Procedia CIRP 2020, 91, 284–289. [Google Scholar] [CrossRef]

- Gröger, C. Building an Industry 4.0 Analytics Platform. Datenbank Spektrum 2018, 18, 5–14. [Google Scholar] [CrossRef]

- Saadallah, A.; Büscher, J.; Abdulaaty, O.; Panusch, T.; Deuse, J.; Morik, K. Explainable Predictive Quality Inspection using Deep Learning in Electronics Manufacturing. Procedia CIRP 2022, 107, 594–599. [Google Scholar] [CrossRef]

- Adesanwo, M.; Bello, O.; Olorode, O.; Eremiokhale, O.; Sanusi, S.; Blankson, E. Advanced analytics for data-driven decision making in electrical submersible pump operations management. In Proceedings of the SPE Nigeria Annual International Conference and Exhibition 2017, Lagos, Nigeria, 31 July–2 August 2017. [Google Scholar] [CrossRef]

- Silva, S.; Vyas, V.; Afonso, P.; Boris, B. Prescriptive Cost Analysis in Manufacturing Systems. IFAC-PapersOnLine 2022, 55, 484–489. [Google Scholar] [CrossRef]

- Beham, A.; Raggl, S.; Hauder, V.A.; Karder, J.; Wagner, S.; Affenzeller, M. Performance, quality, and control in steel logistics 4.0. Procedia Manuf. 2020, 42, 429–433. [Google Scholar] [CrossRef]

- Jin, Y.; Qin, S.J.; Huang, Q. Prescriptive analytics for understanding of out-of-plane deformation in additive manufacturing. In Proceedings of the 2016 IEEE International Conference on Automation Science and Engineering (CASE), Fort Worth, TX, USA, 21–25 August 2016. [Google Scholar]

- Soltanpoor, R.; Sellis, T. Prescriptive Analytics for Big Data. Database Theory and Applications. In Proceedings of the 27th Australasian Database Conference, ADC 2016, Sydney, NSW, Australia, 28–29 September 2016; Volume 9877, pp. 245–256. [Google Scholar] [CrossRef]

- Vater, J.; Schamberger, P.; Knoll, A.; Winkle, D. Fault classification and correction based on convolutional neural networks exemplified by laser welding of hairpin windings. In Proceedings of the 9th International Electric Drives Production Conference (EDPC 2019), Esslingen, Germany, 3–4 December 2019. [Google Scholar]

- Ansari, F.; Glawar, R.; Sihn, W. Prescriptive Maintenance of CPPS by Integrating Multimodal Data with Dynamic Bayesian Networks. In Machine Learning for Cyber Physical Systems; Beyerer, J., Maier, A., Niggemann, O., Eds.; Springer: Berlin/Heidelberg, Germany, 2020; pp. 1–8. ISBN 978-3-662-59083-6. [Google Scholar]

- Vater, J.; Harscheidt, L.; Knoll, A. A Reference Architecture Based on Edge and Cloud Computing for Smart Manufacturing. In Proceedings of the International Conference on Computer Communications and Networks, ICCCN, Valencia, Spain, 29 July–1 August 2019; pp. 1–7. [Google Scholar] [CrossRef]

- González, A.G.; Nieto, E.; Leturiondo, U. A Prescriptive Analysis Tool for Improving Manufacturing Processes; Springer International Publishing: Cham, Switzerland, 2022; pp. 283–291. [Google Scholar] [CrossRef]

- Brodsky, A.; Shao, G.; Krishnamoorthy, M.; Narayanan, A.; Menasce, D.; Ak, R. Analysis and optimization in smart manufacturing based on a reusable knowledge base for process performance models. In Proceedings of the 2015 IEEE International Conference on Big Data (Big Data), Santa Clara, CA, USA, 29 October–1 November 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1418–1427, ISBN 978-1-4799-9926-2. [Google Scholar]

- Tham, C.-K.; Sharma, N.; Hu, J. Model-based and Model-free Prescriptive Maintenance on Edge Computing Nodes. In Proceedings of the 2023 IEEE 97th Vehicular Technology Conference (VTC2023-Spring), Florence, Italy, 20–23 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–6, ISBN 979-8-3503-1114-3. [Google Scholar]

- Faisal, A.M.; Karthigeyan, L. Data Analytics based Prescriptive Analytics for Selection of Lean Manufacturing System. In Proceedings of the 6th International Conference on Inventive Computation Technologies, ICICT 2021, Coimbatore, India, 20–22 January 2021. [Google Scholar] [CrossRef]

- Kuzyakov, O.N.; Andreeva, M.A.; Gluhih, I.N. Applying Case-Based Reasoning Method for Decision Making in IIoT System. In Proceedings of the 2019 International Multi-Conference on Industrial Engineering and Modern Technologies (FarEastCon), Vladivostok, Russia, 1–4 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–6, ISBN 978-1-7281-0061-6. [Google Scholar]

- Matyas, K.; Nemeth, T.; Kovacs, K.; Glawar, R. A procedural approach for realizing prescriptive maintenance planning in manufacturing industries. CIRP Ann. 2017, 66, 461–464. [Google Scholar] [CrossRef]

- Thammaboosadee, S.; Wongpitak, P. An Integration of Requirement Forecasting and Customer Segmentation Models towards Prescriptive Analytics For Electrical Devices Production. In Proceedings of the 2018 International Conference on Information Technology (InCIT), Khon Kaen, Thailand, 24–26 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–5, ISBN 978-974-19-6032-3. [Google Scholar]

- Elbasheer, M.; Longo, F.; Mirabelli, G.; Padovano, A.; Solina, V.; Talarico, S. Integrated Prescriptive Maintenance and Production Planning: A Machine Learning Approach for the Development of an Autonomous Decision Support Agent. IFAC-PapersOnLine 2022, 55, 2605–2610. [Google Scholar] [CrossRef]

- Vater, J.; Harscheidt, L.; Knoll, A. Smart Manufacturing with Prescriptive Analytics. In Proceedings of the ICITM 2019, Cambridge, UK, 2–4 March 2019; IEEE: Piscataway, NJ, USA, 2019. ISBN 9781728132686. [Google Scholar]

- Das, S. Maintenance Action Recommendation Using Collaborative Filtering. Int. J. Progn. Health Manag. 2013, 4. [Google Scholar] [CrossRef]

- Gyulai, D.; Bergmann, J.; Gallina, V.; Gaal, A. Towards a connected factory: Shop-floor data analytics in cyber-physical environments. Procedia CIRP 2019, 86, 37–42. [Google Scholar] [CrossRef]

- John, I.; Karumanchi, R.; Bhatnagar, S. Predictive and Prescriptive Analytics for Performance Optimization: Framework and a Case Study on a Large-Scale Enterprise System. In Proceedings of the 2019 18th IEEE International Conference on Machine Learning and Applications (ICMLA), Boca Raton, FL, USA, 16–19 December 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 876–881, ISBN 978-1-7281-4550-1. [Google Scholar]

- Hribernik, K.; von Stietencron, M.; Ntalaperas, D.; Thoben, K.-D. Unified Predictive Maintenance System—Findings Based on its Initial Deployment in Three Use Case. IFAC-PapersOnLine 2020, 53, 191–196. [Google Scholar] [CrossRef]

- Bousdekis, A.; Papageorgiou, N.; Magoutas, B.; Apostolou, D.; Mentzas, G. Sensor-driven Learning of Time-Dependent Parameters for Prescriptive Analytics. IEEE Access 2020, 8, 92383–92392. [Google Scholar] [CrossRef]

- Mohan, S.P.; S, J.N. A prescriptive analytics approach for tool wear monitoring using machine learning techniques. In Proceedings of the 2023 Third International Conference on Secure Cyber Computing and Communication (ICSCCC), Jalandhar, India, 26–28 May 2023; pp. 228–233. [Google Scholar] [CrossRef]

- Vater, J.; Schlaak, P.; Knoll, A. A Modular Edge-/Cloud-Solution for Automated Error Detection of Industrial Hairpin Weldings using Convolutional Neural Networks. In Proceedings of the 2020 IEEE 44th Annual Computers, Software, and Applications Conference (COMPSAC), Madrid, Spain, 13–17 July 2020; pp. 505–510. [Google Scholar] [CrossRef]

- Divyashree, N.; Nandini Prasad, K.S. Design and Development of We-CDSS Using Django Framework: Conducing Predictive and Prescriptive Analytics for Coronary Artery Disease. IEEE Access 2022, 10, 119575–119592. [Google Scholar] [CrossRef]

- Hentschel, R. Developing Design Principles for a Cloud Broker Platform for SMEs. In Proceedings of the 2020 IEEE 22nd Conference on Business Informatics (CBI), Antwerp, Belgium, 22–24 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 290–299, ISBN 978-1-7281-9926-9. [Google Scholar]

- Perea, R.V.; Festijo, E.D. Analytics Platform for Morphometric Grow out and Production Condition of Mud Crabs of the Genus Scylla with K-Means. In Proceedings of the 4th International Conference of Computer and Informatics Engineering (IC2IE), Depok, Indonesia, 14–15 September 2021; pp. 117–122. [Google Scholar] [CrossRef]

- Madrid, M.C.R.; Malaki, E.G.; Ong, P.L.S.; Solomo, M.V.S.; Suntay, R.A.L.; Vicente, H.N. Healthcare Management System with Sales Analytics using Autoregressive Integrated Moving Average and Google Vision. In Proceedings of the 2020 IEEE 12th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management (HNICEM), Manila, Philippines, 3–7 December 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–6, ISBN 978-1-6654-1971-0. [Google Scholar]

- Bashir, M.R.; Gill, A.Q.; Beydoun, G. A Reference Architecture for IoT-Enabled Smart Buildings. SN Comput. Sci. 2022, 3, 493. [Google Scholar] [CrossRef]

- Lepenioti, K.; Bousdekis, A.; Apostolou, D.; Mentzas, G. Human-Augmented Prescriptive Analytics With Interactive Multi-Objective Reinforcement Learning. IEEE Access 2021, 9, 100677–100693. [Google Scholar] [CrossRef]

- Sam Plamoottil, S.; Kunden, B.; Yadav, A.; Mohanty, T. Inventory Waste Management with Augmented Analytics for Finished Goods. In Proceedings of the Third International Conference on Artificial Intelligence and Smart Energy (ICAIS), Coimbatore, India, 2–4 February 2023; pp. 1293–1299. [Google Scholar] [CrossRef]

- Filz, M.-A.; Bosse, J.P.; Herrmann, C. Digitalization platform for data-driven quality management in multi-stage manufacturing systems. J. Intell. Manuf. 2023, 35, 2699–2718. [Google Scholar] [CrossRef]

- Rehman, A.; Naz, S.; Razzak, I. Leveraging big data analytics in healthcare enhancement: Trends, challenges and opportunities. Multimed. Syst. 2022, 28, 1339–1371. [Google Scholar] [CrossRef]

- Ribeiro, R.; Pilastri, A.; Moura, C.; Morgado, J.; Cortez, P. A data-driven intelligent decision support system that combines predictive and prescriptive analytics for the design of new textile fabrics. Neural Comput. Appl. 2023, 35, 17375–17395. [Google Scholar] [CrossRef]

- Adi, E.; Anwar, A.; Baig, Z.; Zeadally, S. Machine learning and data analytics for the IoT. Neural Comput. Appl. 2020, 32, 16205–16233. [Google Scholar] [CrossRef]

- von Bischhoffshausen, J.K.; Paatsch, M.; Reuter, M.; Satzger, G.; Fromm, H. An Information System for Sales Team Assignments Utilizing Predictive and Prescriptive Analytics. In Proceedings of the 2015 IEEE 17th Conference on Business Informatics (CBI), Lisbon, Portugal, 13–16 July 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 68–76, ISBN 978-1-4673-7340-1. [Google Scholar]

- Mustafee, N.; Powell, J.H.; Harper, A. Rh-rt: A data analytics framework for reducing wait time at emergency departments and centres for urgent care. In Proceedings of the 2018 Winter Simulation Conference (WSC), Gothenburg, Sweden, 9–12 December 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 100–110, ISBN 978-1-5386-6572-5. [Google Scholar]

- DIN SPEC 91345:2016-04. Reference Architecture Model Industrie 4.0 (RAMI4.0); 2016; ICS 03.100.01; 25.040.01; 35.240.50. Available online: https://www.dinmedia.de/en/technical-rule/din-spec-91345/250940128 (accessed on 1 May 2024).

- Ma, J.; Wang, Q.; Jiang, Z.; Zhao, Z. A hybrid modeling methodology for cyber physical production systems: Framework and key techniques. Prod. Eng. Res. Devel. 2021, 15, 773–790. [Google Scholar] [CrossRef]

- Fortoul-Diaz, J.A.; Carrillo-Martinez, L.A.; Centeno-Tellez, A.; Cortes-Santacruz, F.; Olmos-Pineda, I.; Flores-Quintero, R.R. A Smart Factory Architecture Based on Industry 4.0 Technologies: Open-Source Software Implementation. IEEE Access 2023, 11, 101727–101749. [Google Scholar] [CrossRef]

- Kahveci, S.; Alkan, B.; Ahmad, M.H.; Ahmad, B.; Harrison, R. An end-to-end big data analytics platform for IoT-enabled smart factories: A case study of battery module assembly system for electric vehicles. J. Manuf. Syst. 2022, 63, 214–223. [Google Scholar] [CrossRef]

- Parri, J.; Patara, F.; Sampietro, S.; Vicario, E. A framework for Model-Driven Engineering of resilient software-controlled systems. Computing 2021, 103, 589–612. [Google Scholar] [CrossRef]

- Bozhdaraj, D.; Lucke, D.; Jooste, J.L. Smart Maintenance Architecture for Automated Guided Vehicles. Procedia CIRP 2023, 118, 110–115. [Google Scholar] [CrossRef]

- Malburg, L.; Hoffmann, M.; Bergmann, R. Applying MAPE-K control loops for adaptive workflow management in smart factories. J. Intell. Inf. Syst. 2023, 61, 83–111. [Google Scholar] [CrossRef]

- Friederich, J.; Francis, D.P.; Lazarova-Molnar, S.; Mohamed, N. A framework for data-driven digital twins of smart manufacturing systems. Comput. Ind. 2022, 136, 103586. [Google Scholar] [CrossRef]

- Guha, B.; Moore, S.; Huyghe, J.M. Conceptualizing data-driven closed loop production systems for lean manufacturing of complex biomedical devices—A cyber-physical system approach. J. Eng. Appl. Sci. 2023, 70, 50. [Google Scholar] [CrossRef]

- Woo, J.; Shin, S.-J.; Seo, W.; Meilanitasari, P. Developing a big data analytics platform for manufacturing systems: Architecture, method, and implementation. Int. J. Adv. Manuf. Technol. 2018, 99, 2193–2217. [Google Scholar] [CrossRef]

- Zhang, X.; Ming, X. A Smart system in Manufacturing with Mass Personalization (S-MMP) for blueprint and scenario driven by industrial model transformation. J. Intell. Manuf. 2023, 34, 1875–1893. [Google Scholar] [CrossRef]

- Kaniappan Chinnathai, M.; Alkan, B. A digital life-cycle management framework for sustainable smart manufacturing in energy intensive industries. J. Clean. Prod. 2023, 419, 138259. [Google Scholar] [CrossRef]

- Farbiz, F.; Habibullah, M.S.; Hamadicharef, B.; Maszczyk, T.; Aggarwal, S. Knowledge-embedded machine learning and its applications in smart manufacturing. J. Intell. Manuf. 2023, 34, 2889–2906. [Google Scholar] [CrossRef]

- García, Á.; Bregon, A.; Martínez-Prieto, M.A. Digital Twin Learning Ecosystem: A cyber–physical framework to integrate human-machine knowledge in traditional manufacturing. Internet Things 2024, 25, 101094. [Google Scholar] [CrossRef]

- Simeone, A.; Grant, R.; Ye, W.; Caggiano, A. A human-cyber-physical system for Operator 5.0 smart risk assessment. Int. J. Adv. Manuf. Technol. 2023, 129, 2763–2782. [Google Scholar] [CrossRef]

| Interviewee | Experience | Industry | Job Title |
|---|---|---|---|
| I1 | 8 years | Manufacturing | Industry 4.0 manager |
| I2 | 11 years | Mechanical Engineering | Industry 4.0 manager |
| I3–I6 | 4 years, 4 years, 3 years, 1 year | Industrial Data Science | Researcher |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Weller, J.; Migenda, N.; Naik, Y.; Heuwinkel, T.; Kühn, A.; Kohlhase, M.; Schenck, W.; Dumitrescu, R. Reference Architecture for the Integration of Prescriptive Analytics Use Cases in Smart Factories. Mathematics 2024, 12, 2663. https://doi.org/10.3390/math12172663
