Abstract

Graph query languages such as Cypher are widely adopted to match and retrieve data in a graph representation, due to their ability to retrieve and transform information. Even though the most natural way to match and transform information is through rewriting rules, those are scarcely or partially adopted in graph query languages. Their inability to do so has a major impact on the subsequent way the information is structured, as it might then appear more natural to provide major constraints over the data representation to fix the way the information should be represented. On the other hand, recent works are starting to move towards the opposite direction, as the provision of a truly general semistructured model (GSM) allows to both represent all the available data formats (Network-Based, Relational, and Semistructured) as well as support a holistic query language expressing all major queries in such languages. In this paper, we show that the usage of GSM enables the definition of a general rewriting mechanism which can be expressed in current graph query languages only at the cost of adhering the query to the specificity of the underlying data representation. We formalise the proposed query language in terms declarative graph rewriting mechanisms described as a set of production rules while both providing restriction to the characterisation of L, and extending it to support structural graph nesting operations, useful to aggregate similar information around an entry-point of interest. We further achieve our declarative requirements by determining the order in which the data should be rewritten and multiple rules should be applied while ensuring the application of such updates on the GSM database is persisted in subsequent rewriting calls. We discuss how GSM, by fully supporting index-based data representation, allows for a better physical model implementation leveraging the benefits of columnar database storage. Preliminary benchmarks show the scalability of this proposed implementation in comparison with state-of-the-art implementations.

Keywords:

direct acyclic graphs; generalised semistructured model; graph grammars; graph query languages; algorithms; operator algebras MSC:

68W40; 68P15; 68Q42; 68Q55; 68R10; 68U35

1. Introduction

Query languages [1] fulfill the aim of retrieving and manipulating data after being adequately processed according to a physical model requiring preliminary data loading and indexing operations. Good declarative languages for manipulating information, such as SQL [2], are agnostic from the underlying physical model while expressing the information need of combining (JOIN), filtering (WHERE) and grouping (e.g., COUNT(*) over GROUP BY-s) data over its logical representation. This language can also be truly declarative as it does not require a user to explicitly convey this in terms of operations to be performed over the data rather than instructing the machine which information should be used and which types of transformations should be applied. Due to the intrinsic nature of graph data representation, graph query languages such as Gremlin [3], Cypher [4], or SPARQL [5] are procedural (i.e., navigational) due to the navigational structure of the data, thus heavily requiring the user to inform the language how to match and transform the data. These considerations extend to how edge and vertex data should be treated, thus adding further complexity [6,7].

On the other hand, graph grammars [8] provide a high-level declarative way to match specific sub-graphs of interest from vertex-labelled graphs for then rewriting them into another subgraph of interest, thus updating the original graph of interest. These consist in a set of rules , where each rule consists of two graphs and possibly sharing a common subgraph K: while specifies a potential removal of either vertices or edges, specifies their addition. Automating the application of such rules requires explicitly following a graph navigational order for applying each matched subgraph to the structure [9] to guarantee the generation of a unique acceptable graph representation resulting from the querying data. As a by-product of exploiting topological sorts for scheduling visiting and rewriting operations, we then require to precisely identify an entry match vertex for each from a rule, thus precisely determining from which position within the graph the rewriting rule should be applied, and in which order.

At the time of writing, the aforementioned graph query languages are not able to express graph grammars natively without resorting to explicitly instructing the query language how to restructure the underlying graph data representation (Lemma 7); furthermore, the replacement of previously matched vertices with new ones invalidates previous matches, thus forcing the user to pipeline different queries until convergence is reached. On the other hand, we would expect any graph query language to directly express the rewriting mechanism by directly expressing the set of rules, without necessarily instructing the querying language in which order such operations shall be performed. Furthermore, when a property-graph model is chosen, and morphisms are expressed through the properties associated with the vertices and edges rather than their associated IDs as per the current Cypher and Neo4j v5.20 specifications, this makes the deletion of vertices and their update through the resulting morphisms quite impractical, as such data model provides no clear way to refer to both vertices and edges via unique identifiers. As a result of these considerations, we then derive that, at the time of writing, both the graph query languages and their underlying representational model are insufficient to adequately express rewriting rules as general as the ones postulated by graph grammars over graphs containing property-value associations, independently from their representation of choice [10].

To overcome the aforementioned limitations, we propose a query language, for the first time directly expressing such rewriting desiderata: we restrict the set of all the possible matching graphs into ego-nets containing one entry-point while incorporating nesting operations (Section 6.1); as updating graph vertices’ properties breaks the declarative assumption as the user should specify the order in which the operations are performed, we relax the language declarativity for the sole rewritings. To better support this query language, we completely shift the data model of interest to object-oriented databases, where relationships across objects are expressed through object “containment” relationships, and where both objects and such containments are uniquely identified. By also requiring that any object shall never contain itself at any nesting level [6], we obtain cycle-free containment relationships.

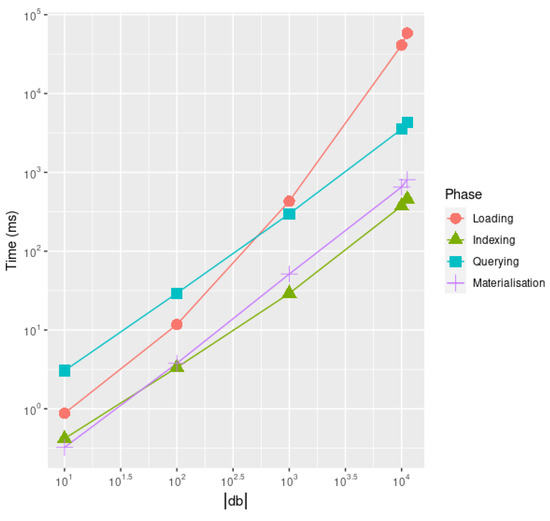

The paper is structured as follows: after providing some preliminary notation used throughout the paper (Section 2), we introduce the relational and the graph data models from current literature, while focusing on both their data representation and associated query languages (Section 3). Their juxtaposition motivates the proposal of our Generalised Semistructured Model (Section 4), for which we outline both the logical and physical data model, where the latter leverages state-of-the-art columnar relational database representations [11]; we also introduce the concept of a view for a generalised semistructured model, as well as introduce some morphism notation used throughout the paper. After introducing the designated operator for instantiating the morphisms by joining matched containments stored in distinct sets of tables (Section 5), we finally propose our query language for matching and rewriting object-oriented databases expressed in the aforementioned GSM model (Section 6). We characterise the formal semantics of such novel graph query language in pseudocode notation (Algorithm from Section 6) characterised in terms of both algorithmic and algebraic notation (Section 6.3 and Section 6.4) for the matching part, as well as in terms of Structured Operational Semantics (SOS) [12] for describing the rewriting steps (Section 6.5). The remaining sections discuss some of the expressiveness properties of such query language if compared to Cypher (Section 7), discuss its time complexity (Section 8), and benchmark it against Cypher running over Neo4j v5.20, showing the extreme inefficiency of property graph computational model (Section 9), which are fairly restricted due to the impossibility of conveying a novel single query for any possible graph schema (Lemmas in Section 7). Scalability tests show that our solution outperforms Cypher and Neo4j v5.20, providing the query language standard nearer to the recently proposed GQL, by two orders of magnitude (Section 9.1) while providing a computational throughput being 600 times faster than Neo4j by solving more queries in the same comparable amount of time (Section 9.1). Last, we draw our final conclusions where we propose some future works (Section 10). To improve the paper’s readability, we move some definitions (Appendix A) and the full set of proofs for the Lemmas and Corollaries (Appendix B) to the Appendix.

These main contributions are then obtained in terms of the following ancillary results:

- Definition of a novel nested relational algebra natural equi-join operator for composing nested morphisms, also supporting left outer joins by parameter tuning (Section 5).

- Definition of an object-oriented database view for updating the databases on the fly without the need for heavy restructuring of the loaded and indexed physical model (Section 4.3).

- As the latter view relies on the definition of a logical model extending GSM (Section 4.1), we show that the physical model is isomorphic to an indexed set of GSM databases expressed within the logical model (Lemma 1).

2. Preliminary Notation

We denote sets of cardinality as standard. The power set of any set S is the set of all subsets of S, including the empty set ∅ and S itself. Formally, .

We define a finite function via its graph explicating the association between a value from the domain of f () and a non-NULL codomain value. Using an abuse of notation, we denote the restriction as the evaluation of f over a domain , i.e., . We can also denote where C is the definition of the function over x as . With an abuse of notation, we denote as the cardinality of its domain, i.e., . We say that two functions f and are equivalent, , if and only if they both share the same domain and, for each element of their domain, both functions return the same codomain value, i.e., .

A tuple or indexed set of length is defined as a finite function in where has i as a natural-valued independent variable representing the index of the tuple, and the dependent variable corresponds to the element in the tuple.

A binary relation ℜ on a set A is said to be an equivalence relation if and only if it is reflexive (), symmetric (), and transitive (). Given a set A and an equivalence relationship ℜ, an equivalence class for is the set of all the elements in A that are equivalent to x, i.e., . Given a set A and an equivalence relationship ℜ, the quotient set is the set of all the equivalence classes of A, i.e., . We denote as the equivalence relationship denoting two functions as equivalent if they are equivalent after the same restriction over X, i.e., .

Given a set of all the possible string attributes and a set of all the possible values , a record is defined as a finite function with [13]. Given two records represented as finite functions f and , we denote as the overriding of f by returning for each and otherwise. Given this, we can also denote the graph of a function as . We denote as NULL a void value not representing a sensible value in : using the usual notation in the database area at the cost of committing an abuse of notation, we say that returns NULL if and only if f is not defined over x (i.e., ). We say that a record is empty if no attribute is associated with a value in (i.e., and ).

Higher-Order Functions

Higher-Order Functions (HOFs) are functions that either take one or more functions as arguments or return a function as a result. We provide the definition of some HOFs used in this paper:

- The zipping operator maps n tuples (or records) to a record of tuples (or records) r defined as if and only if all n tuples are defined over i:

- Given a function and a generic collection C, the mapping operator returns a new collection by applying f to each component of C:

- Given a binary predicate p and a collection C, the filter function trims C by restricting it to its values satisfying p:

- Given a binary function , an initial value (accumulator), and a tuple C, the (left) fold operator is a tail-recursive function returning either for an empty tuple, or for a tuple :

- Given a collection of strings C and a separator s, collapse (also referred to as join in programming languages such as Javascript or Python ) returns a single string where all the strings in C are separated by s. Given “^” the usual string concatenation operator, this can be expressed in terms of as follows:When s is a space “␣”, then we can use as a shorthand.

- Given a function and two values and , the update of f so that it will return b for a and will return any other previous value for otherwise is defined as follows:

- Given a (finite) function f and an input value y, the HOF optionally obtaining the value of if and returning z otherwise is defined as:Please observe that we can use this function in combination with Put to set multiple nested functions:

3. Related Works

This section outlines different logical models and query languages for both graph data (Section 3.1.1 and Section 3.1.2) and the nested relational model (Section 3.2.1 and Section 3.2.2), while starting from their mathematical foundations. We then discuss an efficient physical model used for the relational model (Section 3.2.3).

3.1. Graph Data

3.1.1. Logical Model

Direct Acyclic Graphs (DAGs) and Topological Sort

A digraph consists of vertices V and edges E being a subset of . We say that such a graph is weighted if its definition is extended as where is a weight function associating each edge in the graph to a real value. A closed trail is a sequence of distinct edges which edge is such that and if any, and where . We say that a digraph is acyclic if it contains no closed trails consisting of at least one edge, and we refer to it as a Direct Acyclic Graph (DAG).

If we assume that edges in a graph reflect dependency relationships across their vertices, a natural way to determine the visiting order of the vertices is first to sort its vertices topologically [14], thus inducing an operational scheduling order [11]. This requires the underlying graph to be a DAG. Notwithstanding this, any DAG might come with multiple possible valid topological sorts; among these, the scheduling of tasks operations usually prefers visiting the operations bottom-up in a layered way, thus ensuring the operations are always applied starting from the sub-graphs having less structural-refactoring requirements that the higher ones [11].

We say that a topological sort of a DAG is a linear ordering of its vertices in a tuple t with so that for any edge we have i and such that and . Given this linear ordering of the vertices, we can always define a layering algorithm [15] where vertices sharing no mutual interdependencies are placed in the same layer, and where all the vertices appearing in the shallowest layer will be connected by transitive closure to all the vertices in the deeper layers, while the vertices in the deepest layer will share no interconnection with the other vertices in the graph. To do so, we can use Algorithm 1: we use timestamps for determining the layer ID: we associate all vertices with no outgoing edge to the deepest layer 0 (Line 5) and, for all the remaining vertices, we set the vertex to the layer immediately below to the one of the deepest outgoing vertex (Line 9).

| Algorithm 1 Layering DAGs from a vertex topological order in t |

|

When the graph is cyclic, we can use heuristics to approximate the vertex order, such as using the progressive ID associated with the vertices to disambiguate and choose an ordering when required.

Property Graphs

Property graphs [16] represent multigraphs (i.e., digraphs allowing for multiple edges among two distinct vertices) expressing both vertices and edges as multi-labelled records. The usefulness of this data model is remarked by its implementation in almost all recent Graph DBMSs, such as Neo4j [4].

Definition 1

(Property Graph). A property graph [9] is a tuple , where V and E are sets of distinct integer identifiers (, , ). L is a set of labels, A is a set of attributes and U is a set of values. maps each vertex or edge to a set of labels; maps each vertex or edge within the graph and each attribute within A, to a value in U; last, maps each edge to a pair of vertices , where s is the source vertex and t is the target.

Property graphs do not support aggregated values, as values in U cannot contain either vertices or edges, nor U is made to contain a collection of values.

RDF

The Resource Description Framework (RDF) [17] distinguishes resources via Unique Resource Identifiers (URI), be them vertices or edges within a graph; those are linked to their properties or other resources via triples, acting as edges. RDF is commonly used in the semantic web and in the ontology field [18,19]. Thus, modern reasoners such as Jena [20] or Pellet [21] assume such data structure as the default graph data model.

Definition 2 (RDF (Graph Data) Model).

An RDF (Graph data) model [9] is defined as a set of triples , where s is called “subject”, p is the “predicate” and o is the “object”. Such triple describes an edge with label p linking the source vertex s to the destination vertex o. Such predicate can also be a source vertex [10]. Each vertex is either identified by a unique URI identifier or by a blank vertex . Each predicate is only described by a URI identifier.

Despite this model using unique resource identifiers for either vertices or edges differently from property graphs, RDF is forced to express attribute-value associations for vertices as additional edges through reification. Thus, property graphs can be entirely expressed as RDF triplestore systems as follows:

Definition 3

(Property Graph over Triplestore). Given a property graph , each vertex induces a set of triples for each such that having . Each edge induces a set of triples such that and another set of triples for each such that having .

The inverse transformation is not always possible as RDF properties as property graphs do not allow the representation of edges departing from other edges. RDF also supports the unique identification of distinct databases being loaded within the same physical storage through named graphs via a resource identifier. Even though it allows named graphs to appear as triplet subjects, such named graphs can appear as neither objects nor properties, thus not overcoming property graphs’ limitations on representing nested data.

Notwithstanding the model’s innate ability to represent both vertices and edges via URIs, the inability of the data model to directly associate each URI with a data record while requiring it to express the property-value associations via triplets, requires the expression of property updates via the deletion and subsequent creation of new triplets of the data, which might be quite impractical. Given all the above, we still focus our attention on the Property Graph model, as it better matches our data representation desiderata by associating to vertices labels, property-value associations, as well as binary relationships without any data duplications.

3.1.2. Query Languages

Despite the recent adoption of a novel graph query language standard, GQL, (https://www.iso.org/obp/ui/en/#!iso:std:76120:en accessed on 19 April 2024), its definition has still to be implemented yet in existing systems. This motivates us to briefly survey currently available languages. Different from the more common characterisation of graph query languages in terms of their potential of expressing traversal queries [22], a better analysis of such languages involves their ability to generate new data. Our previous work [9] proposed the following characterisation:

- Graph Traversal and Pattern Matching: these are mainly navigational languages performing the graph visit through “tractable” algorithms through polynomial time visits with respect to the graph size [18,23,24]. Consequently, such solutions do not necessarily involve running a subgraph isomorphism problem, except when expressly requested by specific semantics [22,25].

- (Simple) Graph Grammars: as discussed in the forthcoming paragraph, they can add and remove new vertices and edges that do not necessarily depend on previously matched data, but they are unable to express full data transformation operations.

- Graph Algebras: these are mainly designed either to change the structure of property graphs through unary operators or to combine them through n-ary (often binary) ones. These are not to be confused with the path-algebras for expressing graph traversal and pattern-matching constructs, as they allow us to completely transform graphs alongside the data associated with them as well as deal with graph data collections [26,27,28,29].

- “Proper” Graph Query Languages: We say that a graph query language is “proper” when its expressive power includes all the aforementioned query languages, and possibly expresses the graph algebraic operators while being able to express, to some extent, graph grammar rewriting rules independently from their ability to express them in a fully-declarative way. This is achieved to some extent in commonly available languages, such as SPARQL and Cypher [4].

Graph Grammars

Graph grammars [30] are the theoretical foundations of current graph query languages, as they express the capability of matching specific patterns L [31] within the data through reachability queries while applying modifications to the underlying graph database structure (graph rewriting) R, thus producing a single graph grammar production rule , where there is an implicit morphism between some of the vertices (and edges) matched in L and the ones appearing in R: the vertices (and edges) only appearing in R are considered as newly inserted vertices, while the vertices (and edges) only appearing in L are considered as removed edges; we preserve the remaining matched vertices. Each rule is then considered as a function f, taking a graph database as an input and returning a transformed graph.

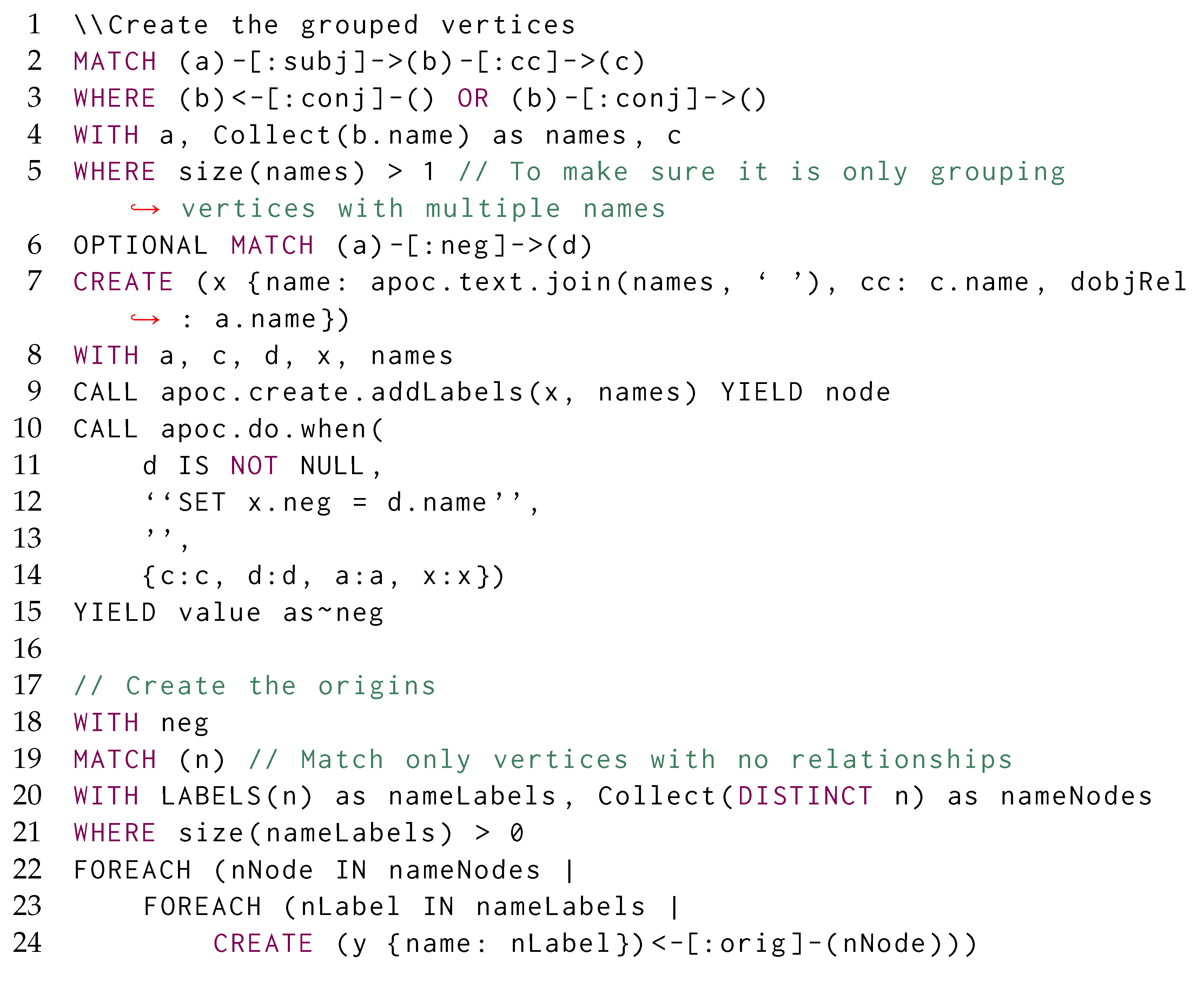

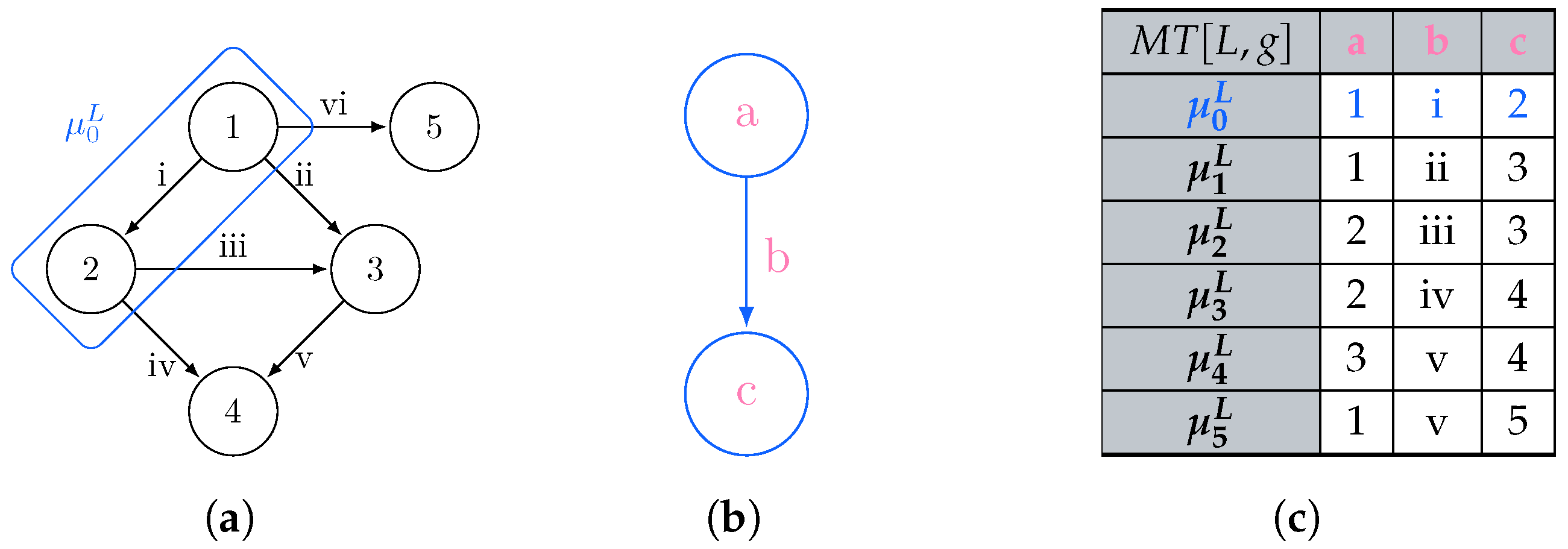

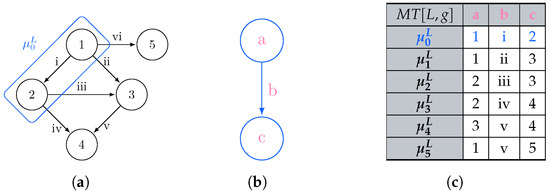

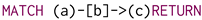

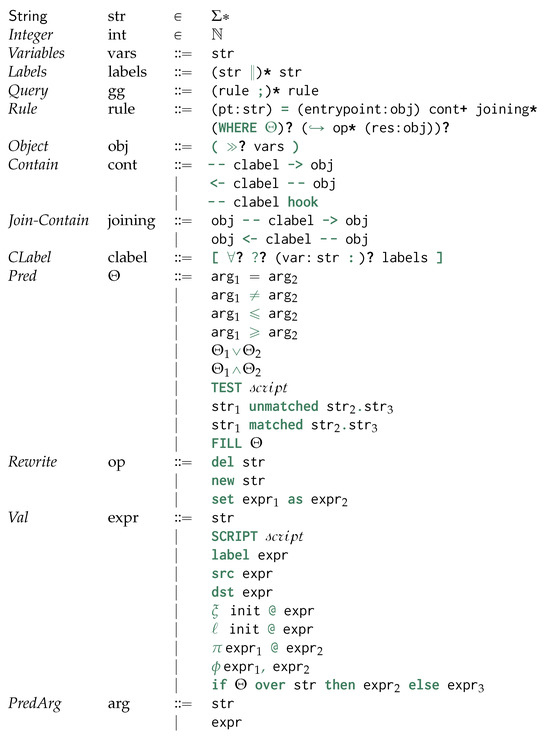

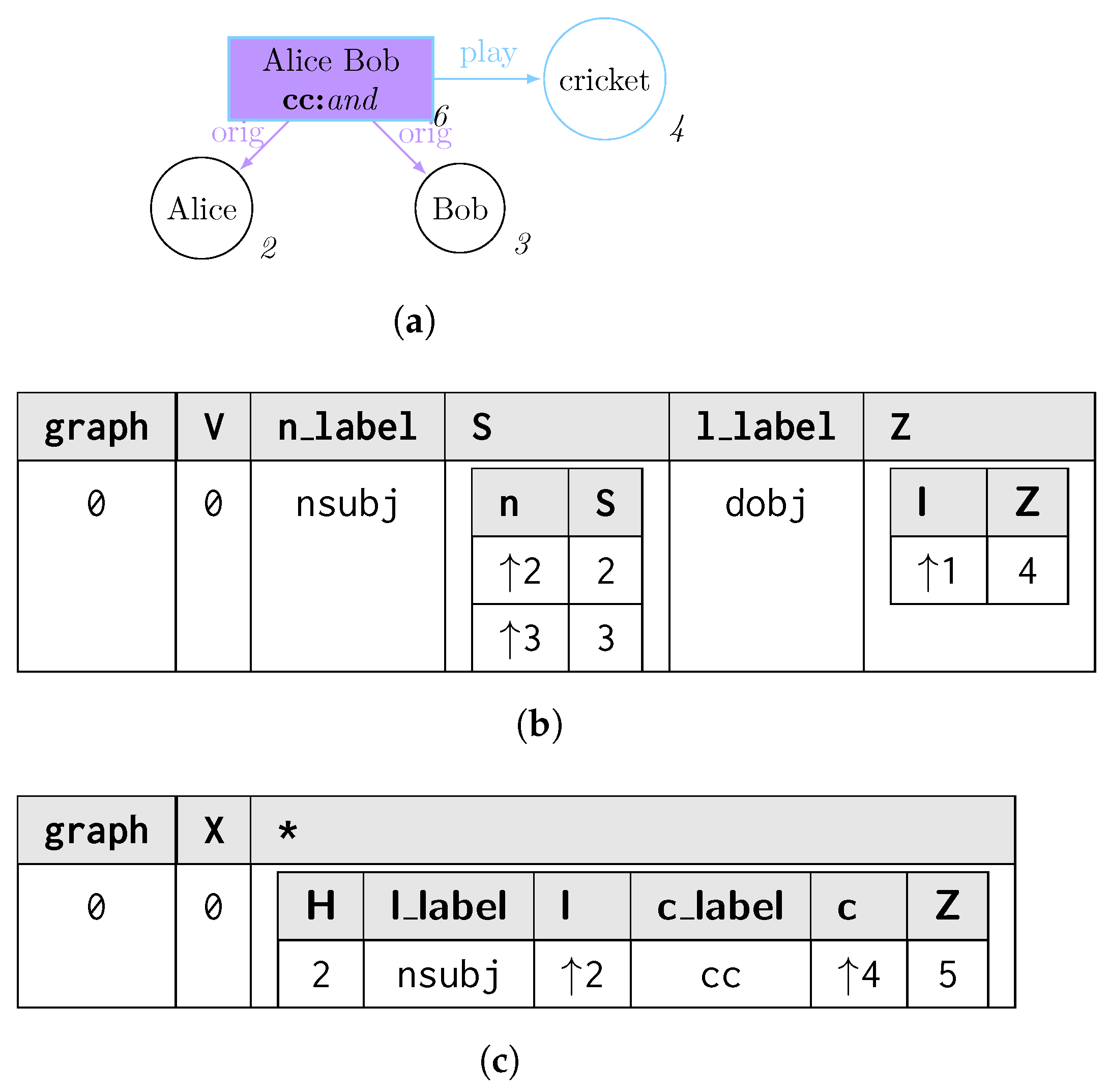

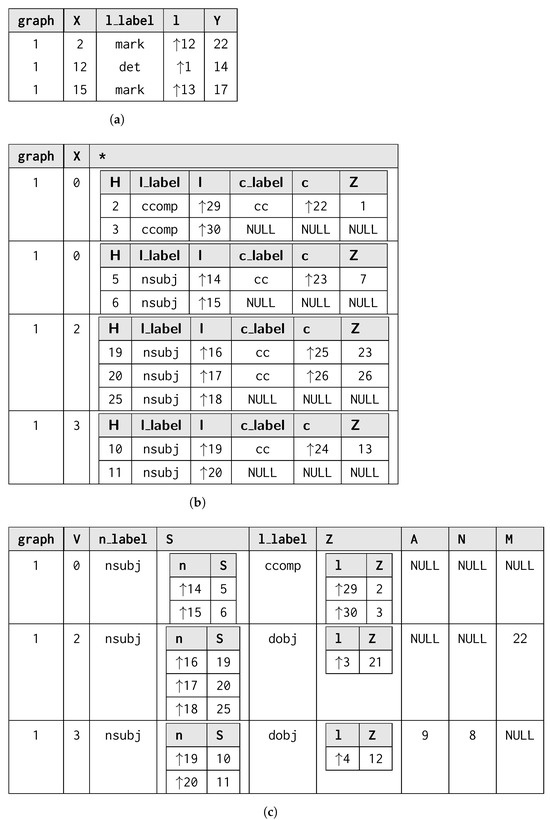

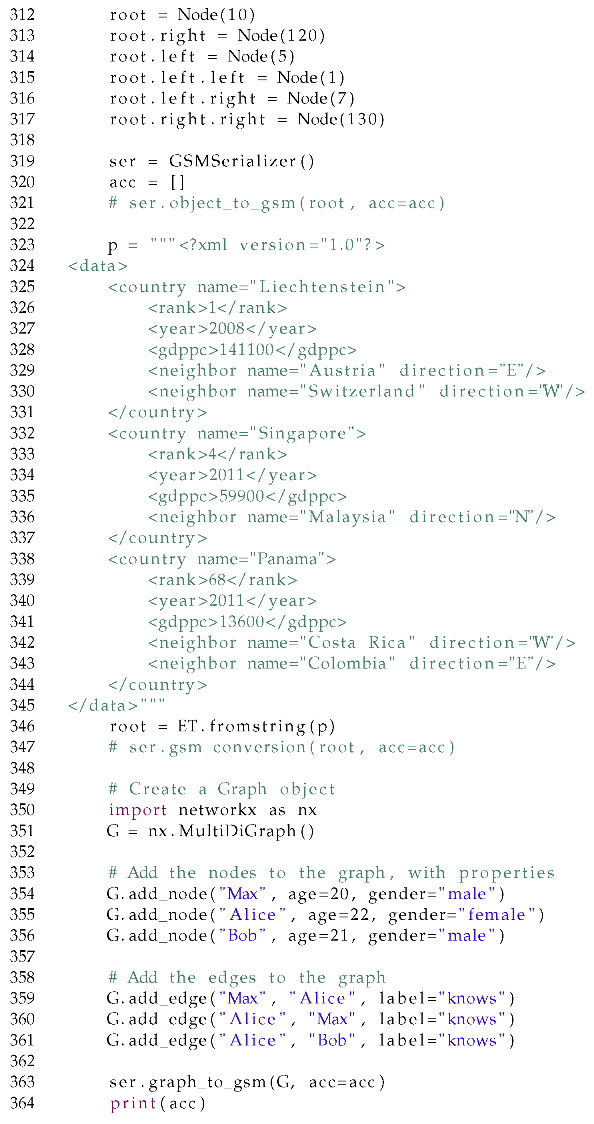

The process of matching L is usually expressed in terms of subgraph isomorphism: given two graphs G and L, we determine whether G contains a subgraph that is isomorphic to L, i.e., there is a bijective correspondence between the vertices and edges of L and . In graph query languages, we consider G as our graph database and return for each matched subgraph . When no rewriting is considered, each possible match for L is usually represented in a tabular form [31,32], where the column header provides the vertex and edge identifiers (e.g., variables) j from L, each row reflects each matched graph , and each cell corresponding to the column j represents . Figure 1 shows the process of querying a graph g (Figure 1a) through a pattern L (Figure 1b), for which all the subgraphs matching in the former could be reported as morphisms listed as rows within a table, which column headers reflect the vertex and edge variables occurring in the graph pattern (Figure 1c).

Figure 1.

Listing all the subgraphs of g being a solution of the subgraph isomorphism problem of g over L. (a) Graph g to be mathed; (b) Graph pattern L; (c) Morphism table where each row describes a morphism between the graph matching L and the graph g.

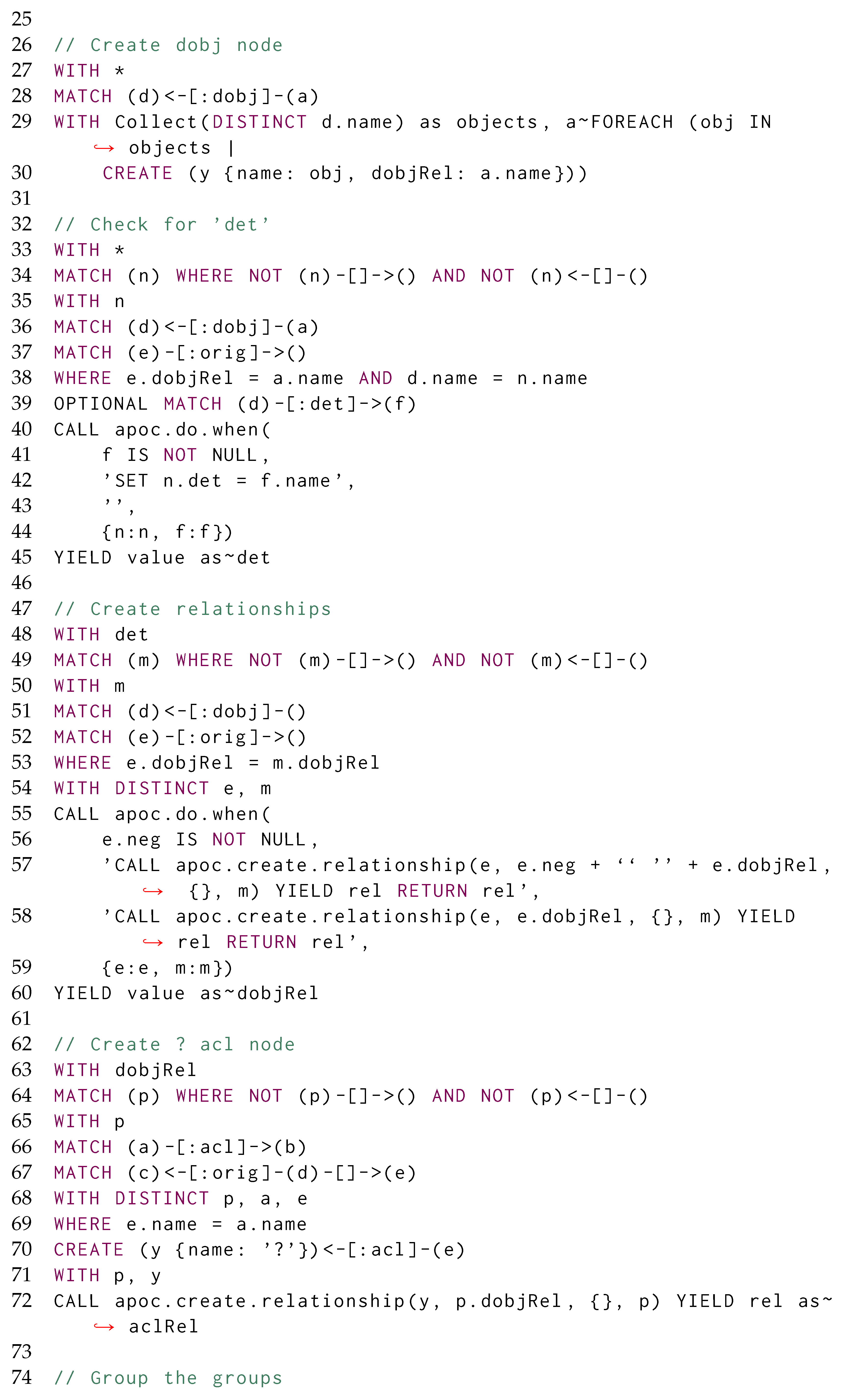

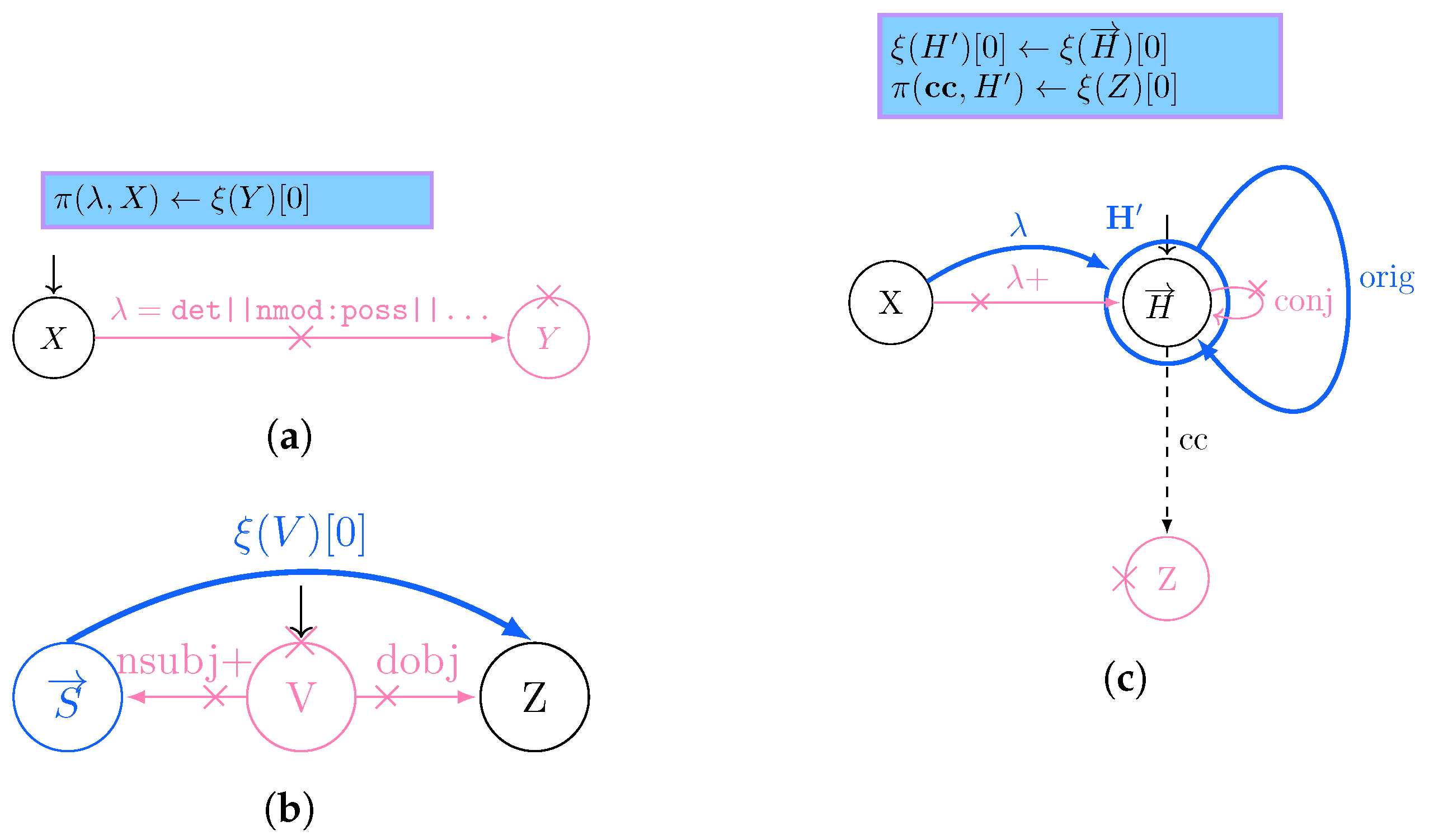

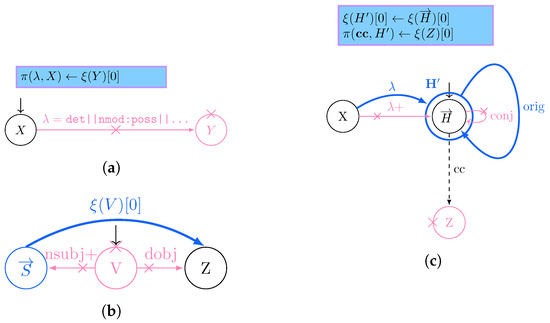

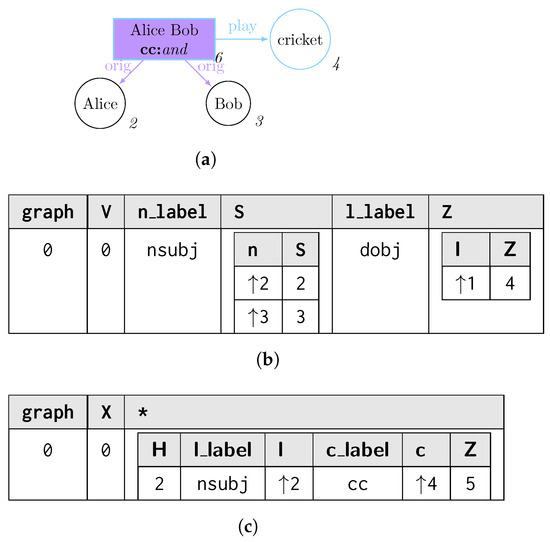

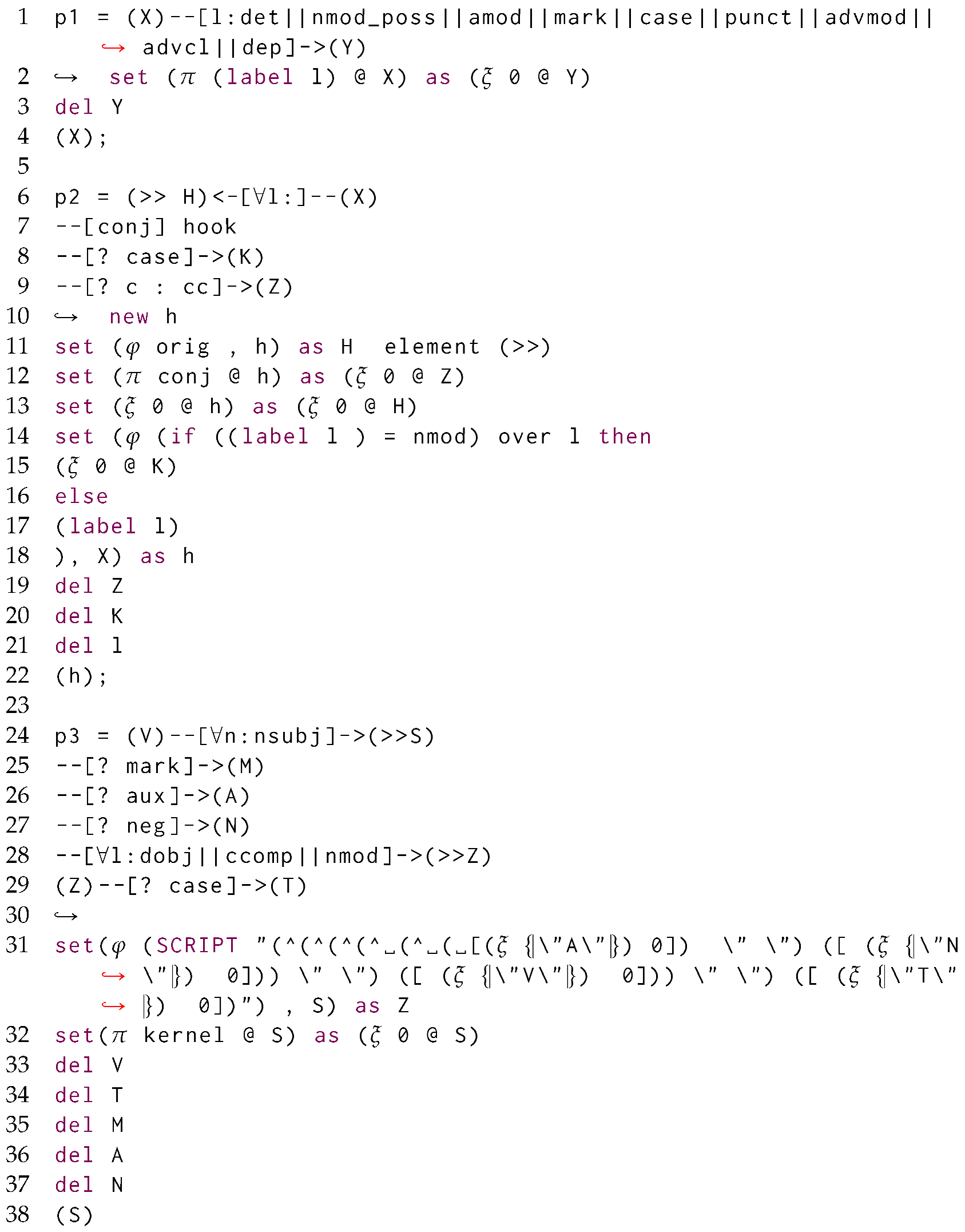

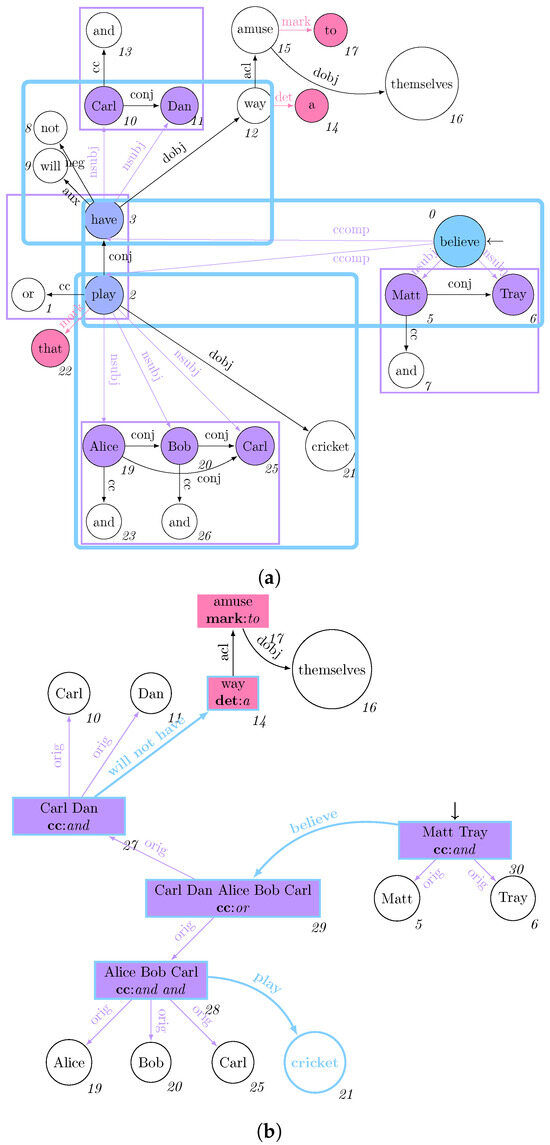

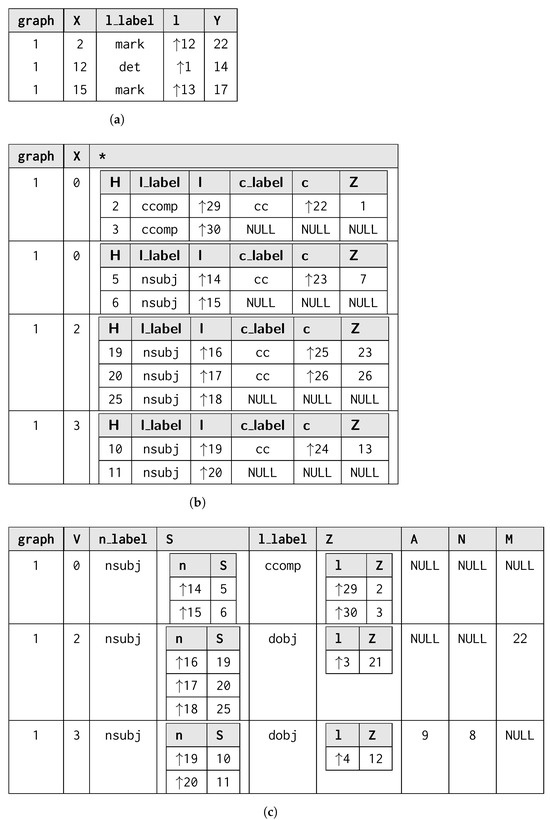

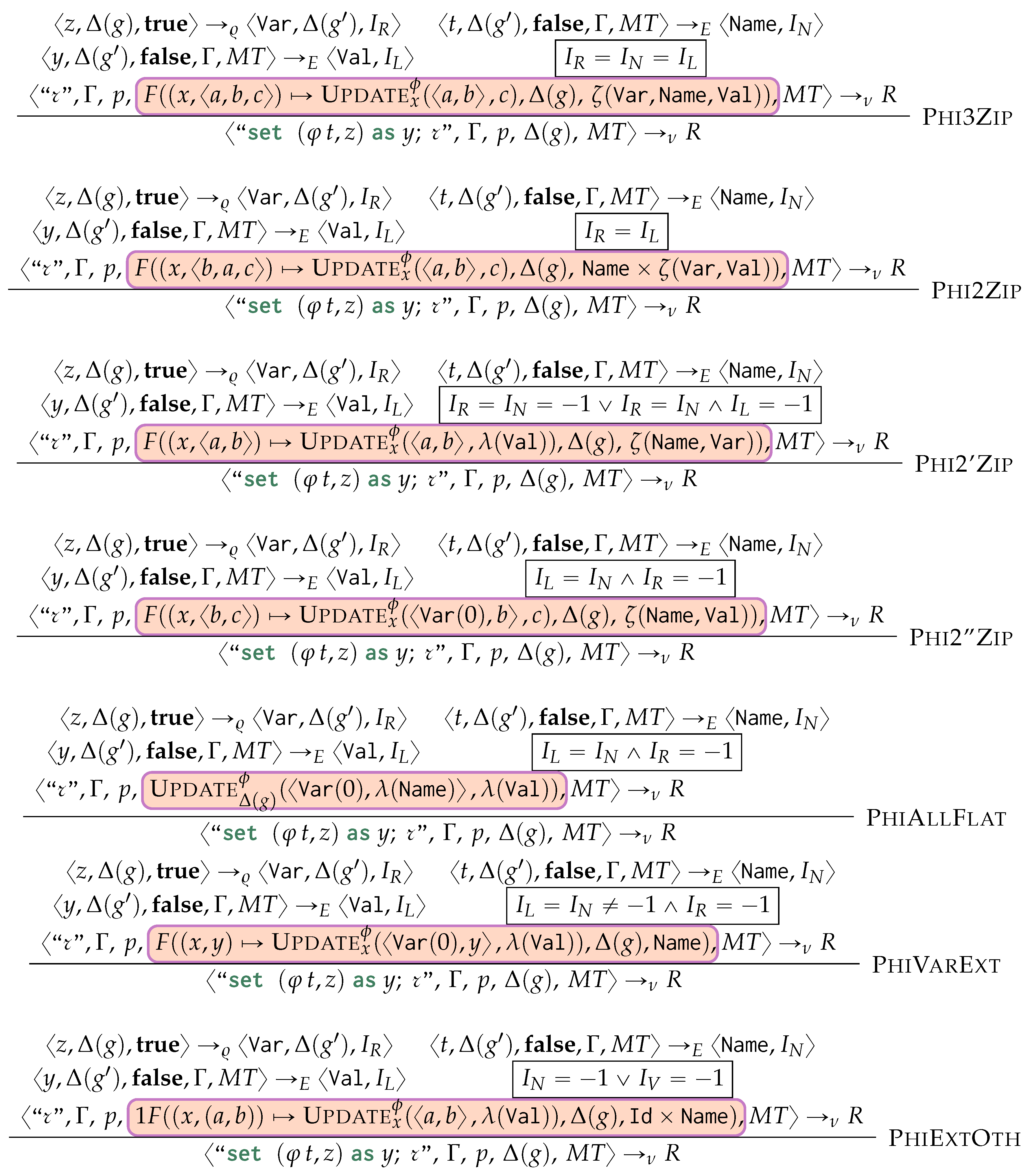

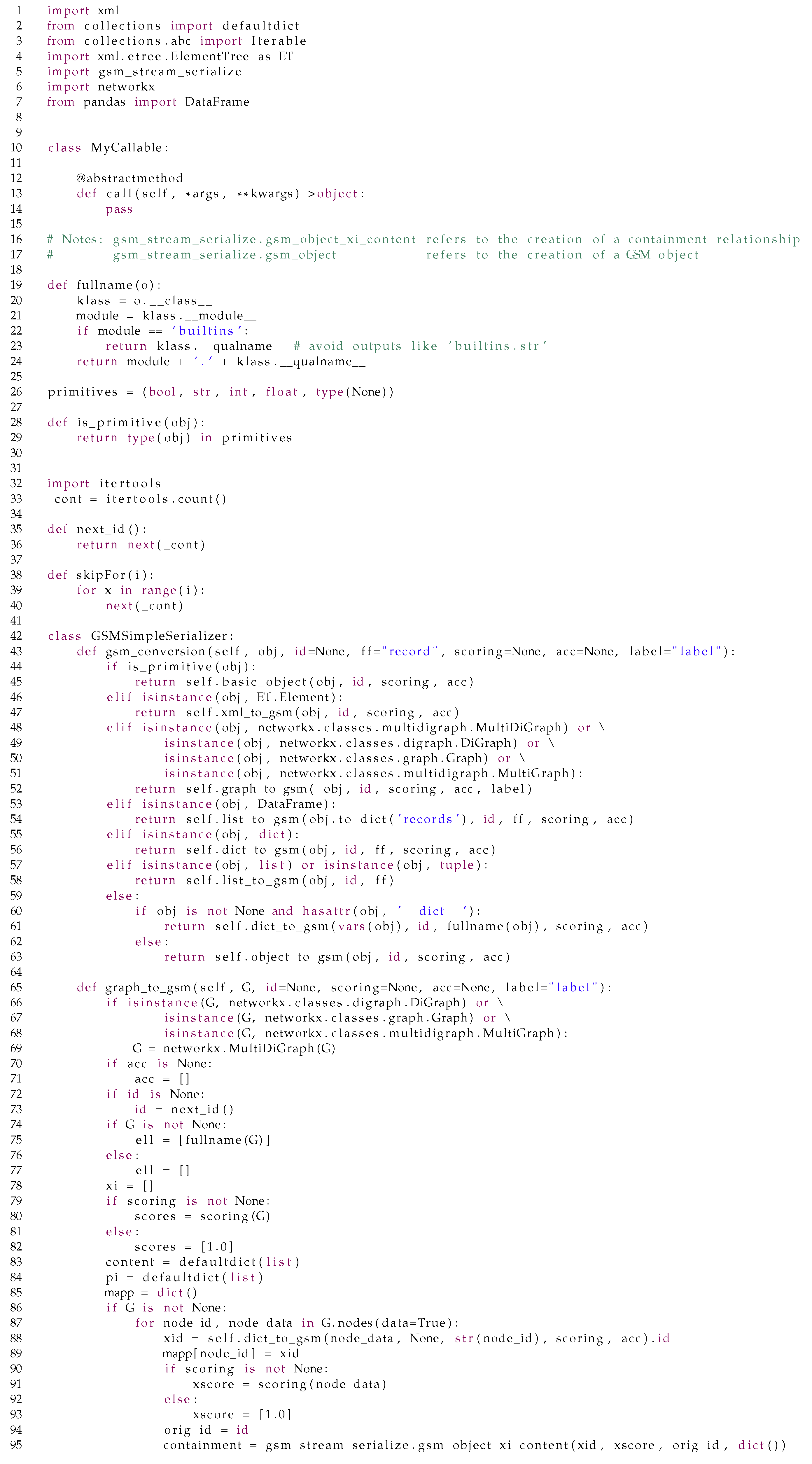

Figure 2 illustrates graph grammar rules as defined in GraphLog [33] for both matching and transforming any graph: we can first create the new vertices required in R, while updating or removing x as determined by the vertex or edge occurring in R. Deletions can be performed as the last operations from R. GraphLog still allows running of one single grammar rule at a time, while authors assume to have a graph where vertices are associated with data values and edges are labelled. Then, the rewriting operations derived from R will be applied to every subgraph being matched via a previously identified morphism in for each graph g of interest. Still, GraphLog considered neither the possibility of simultaneously applying multiple rewriting rules to the same graph nor a formal definition of which order the rules should be applied. The latter is required to ensure that any update on the graph can be performed incrementally while ensuring that any update to a vertex u via th information stored in its neighbours will always rely on the assumption that each neighbour will not be updated in any other subsequent step, thus guaranteeing that the information in u will never become stale.

Figure 2.

Graph grammar production rules à la GraphLog [33] in this paper’s use case scenario: thick denotes insertions, crosses deletions, and optional matches are dashed. We extended it with multiple optional edge label matches (‖), key-value association for property and vertex X, and multiple vertex values . (a) Injecting the articles/possessive pronouns () in Y for an entity X as its own properties, while deleting and Y; (b) Expressing the verb as a binary relationship between subject and direct object; (c) Generating a new entity coalescing the ones under the same conjunction Z, while referring to its original constituents via orig.

This paper solves the ordering issue by applying the changes on the vertices according to their inverse topological order, thus updating the vertices sharing the least dependencies with their direct descendants first.

Furthermore, as GraphLog authors are considering a no-property graph model, authors do not consider the possibility of updating multiple property value associations over a vertex, while not providing a formal definition of how aggregation functions over vertex data should be defined. While the first problem can be solved only by properly exploiting a suitable data model, the latter is solved by the combined provision of nested morphism, explicitly nesting the vertices associated with a grouped variable, while exploiting a scripting language for expressing how level data manipulations when strictly required. Given these considerations, we will also discuss graph data models (Section 3.1) and their respective query languages (Section 3.1.2).

Proper Graph Query Languages

Given the above, we will mainly focus our attention on the last type of language. Even though these languages can be closed under either property graphs or RDF, graphs must not be considered as their main output result, since specific keywords like RETURN for Cypher and CONSTRUCT for SPARQL must be used to force the query result to return graphs. Given also the fact that such languages have not been formalised from the graph returning point of view, such languages prove to be quite slow in producing new graph outputs [6,7].

GQL is largely inspired by Cypher, for which this standard might be considered its natural extension. Such query language enables the partial definition of graph grammar operations by supporting the following operators restructuring vertices and edges within the graph: SET, for setting new values within vertices and edges, MERGE, for merging a set of attributes within a single vertex or edges, REMOVE for removing labels and properties from vertices and edges and CREATE for the creating of new vertices, edges and paths.

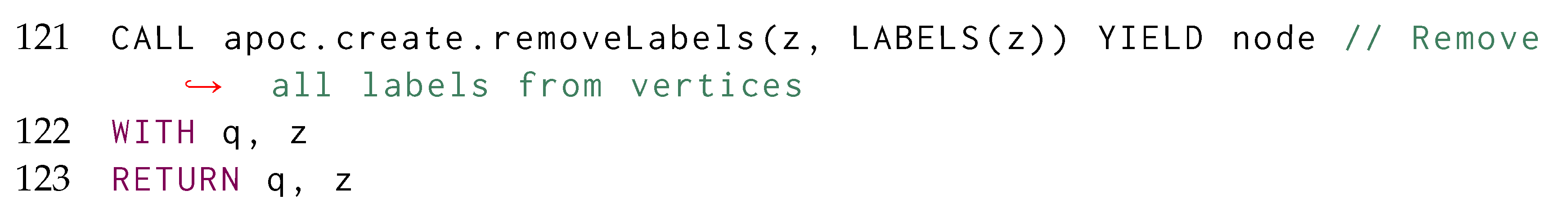

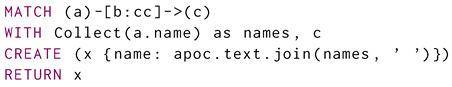

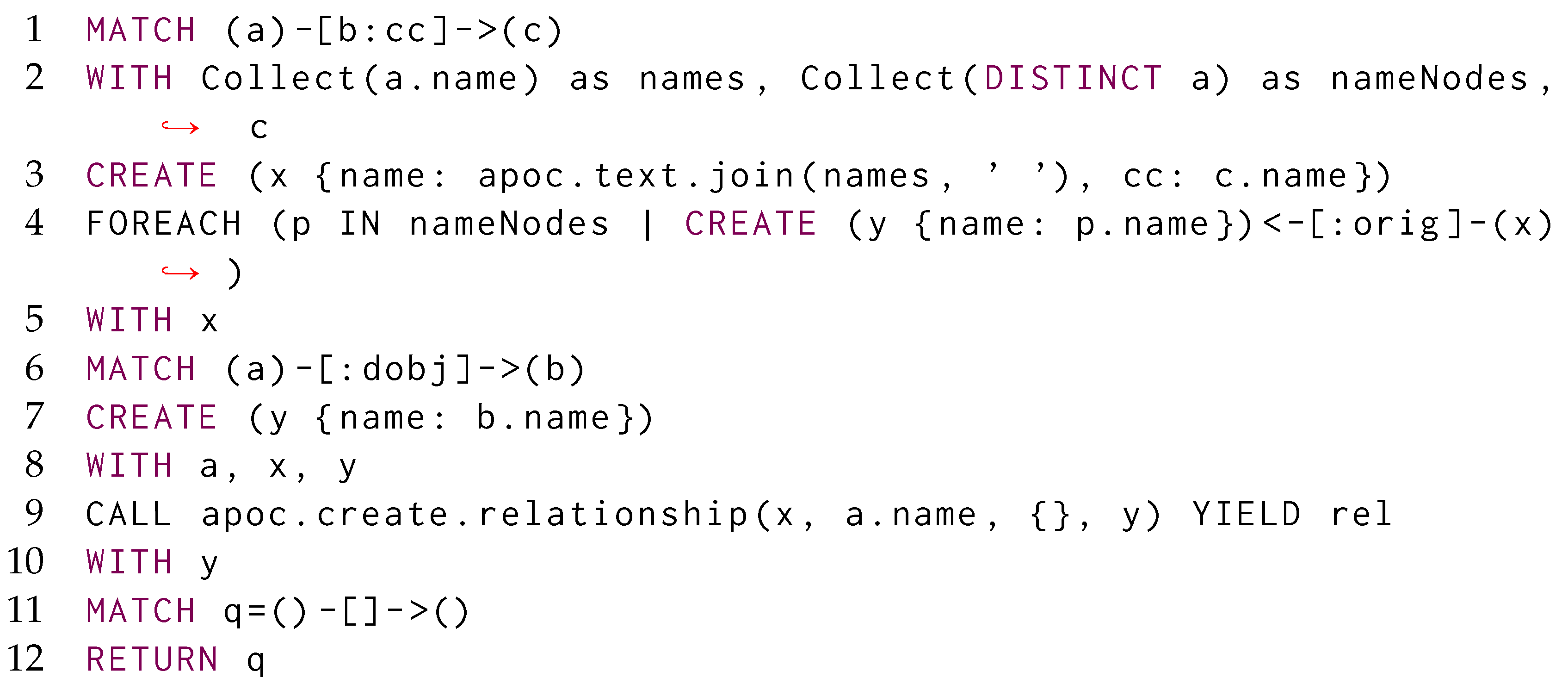

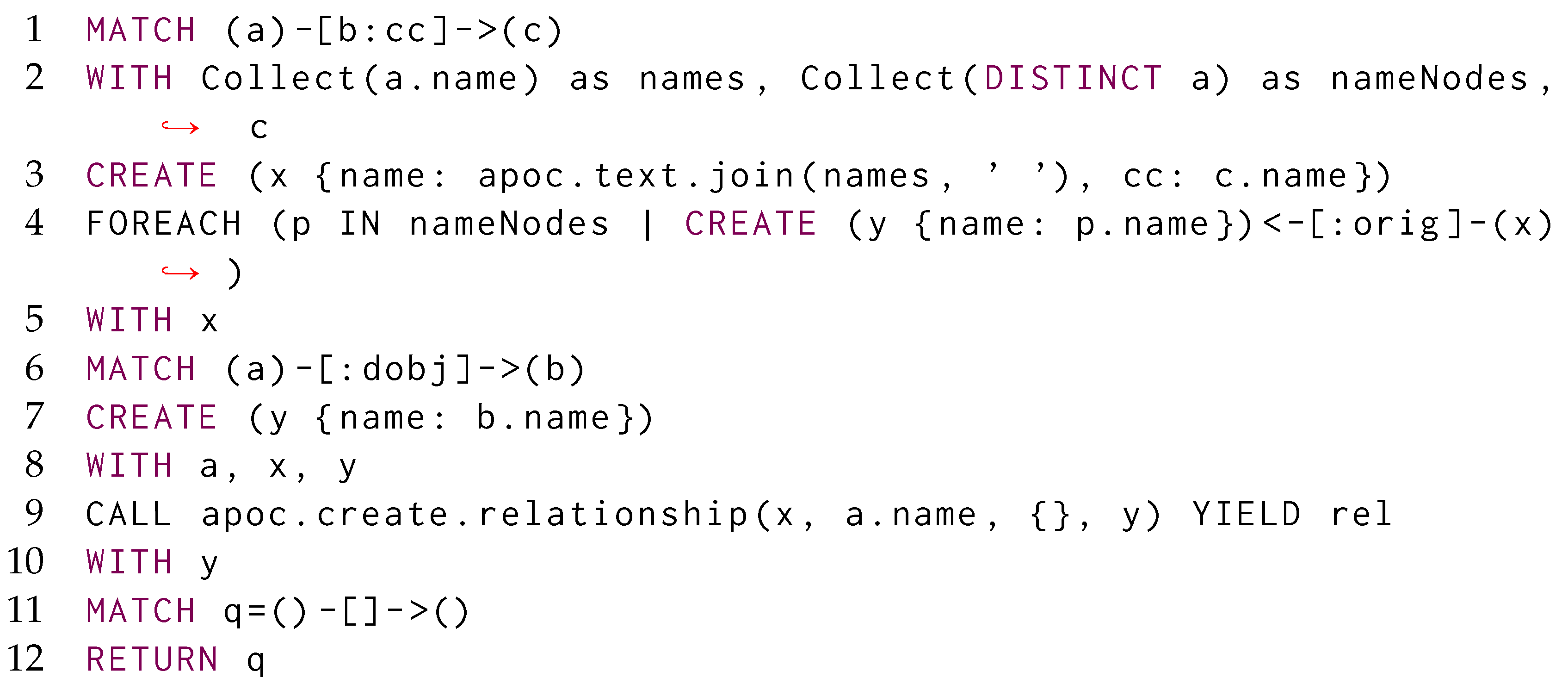

Notwithstanding the former, such language cannot express all the possible data transformation operations, thus requiring an extension via its APOC library (https://Neo4j.com/developer/Neo4j-apoc/, accessed on 13 November 2023) for defining User-Defined Functions (UDF) in a controlled way, like concatenating the properties associated with vertices’ multiple matches:

Thus, Cypher can collect values for then generating one single vertex (See also Listing A2) but, due to the structural limitations of the query language (not supporting nested morphisms) and data model (not supporting explicit identifiers for both vertices and edges), it does not support the nesting of entire sub-patterns of the original matching.

A further limitation of Cypher is the inability to create a relationship with a variable as the name, as standard Cypher syntax only allows a string parameter. Using the APOC library we can use apoc.create.relationship to pass a variable name from an existing vertex for example. Given our last query creating the vertex x, we can continue the aforementioned query:

As there are no associated formal semantics for the entirety of this language’s operators except its fragment related to graph traversal and matching [16], for our proofs regarding this language (e.g., Lemma 7) we are forced to reduce our arguments to common-sense reasoning an experience-driven observation from the usage of Cypher over Neo4j similarly to the considerations in the former paragraph. In fact, such algebra does not involve the creation of new graphs: this is also reflected by its query evaluation plan, which preferred evaluation is a morphism table rather than expressing the rewriting in terms of updated and resulting property graph. As a result, the process of creating or deleting vertices and edges is not optimised.

Overall, Cypher suffers from the limitations posed by the property graph data model which, by having no direct way to refer to the matched vertices or edges by reference, forces the querying user to always refer to the properties associated with them; as a consequence, the resulting morphism tables are carrying out redundant information that cannot reap the efficient data model posed by columnar databases, where entire records can be referenced by their ID. This is evident for  statements, voiding objects represented within the morphisms. This limitation of the property graph model, jointly with the need for representing acyclic graphs, motivates us to use the Generalised Semistructured Model (GSM) as an underlying data model for representing graphs, thus allowing us to refer to the vertices and edges by their ID [34]. Consequently, our implementation represents morphisms for acyclic property graphs as per Figure 1c.

statements, voiding objects represented within the morphisms. This limitation of the property graph model, jointly with the need for representing acyclic graphs, motivates us to use the Generalised Semistructured Model (GSM) as an underlying data model for representing graphs, thus allowing us to refer to the vertices and edges by their ID [34]. Consequently, our implementation represents morphisms for acyclic property graphs as per Figure 1c.

statements, voiding objects represented within the morphisms. This limitation of the property graph model, jointly with the need for representing acyclic graphs, motivates us to use the Generalised Semistructured Model (GSM) as an underlying data model for representing graphs, thus allowing us to refer to the vertices and edges by their ID [34]. Consequently, our implementation represents morphisms for acyclic property graphs as per Figure 1c.

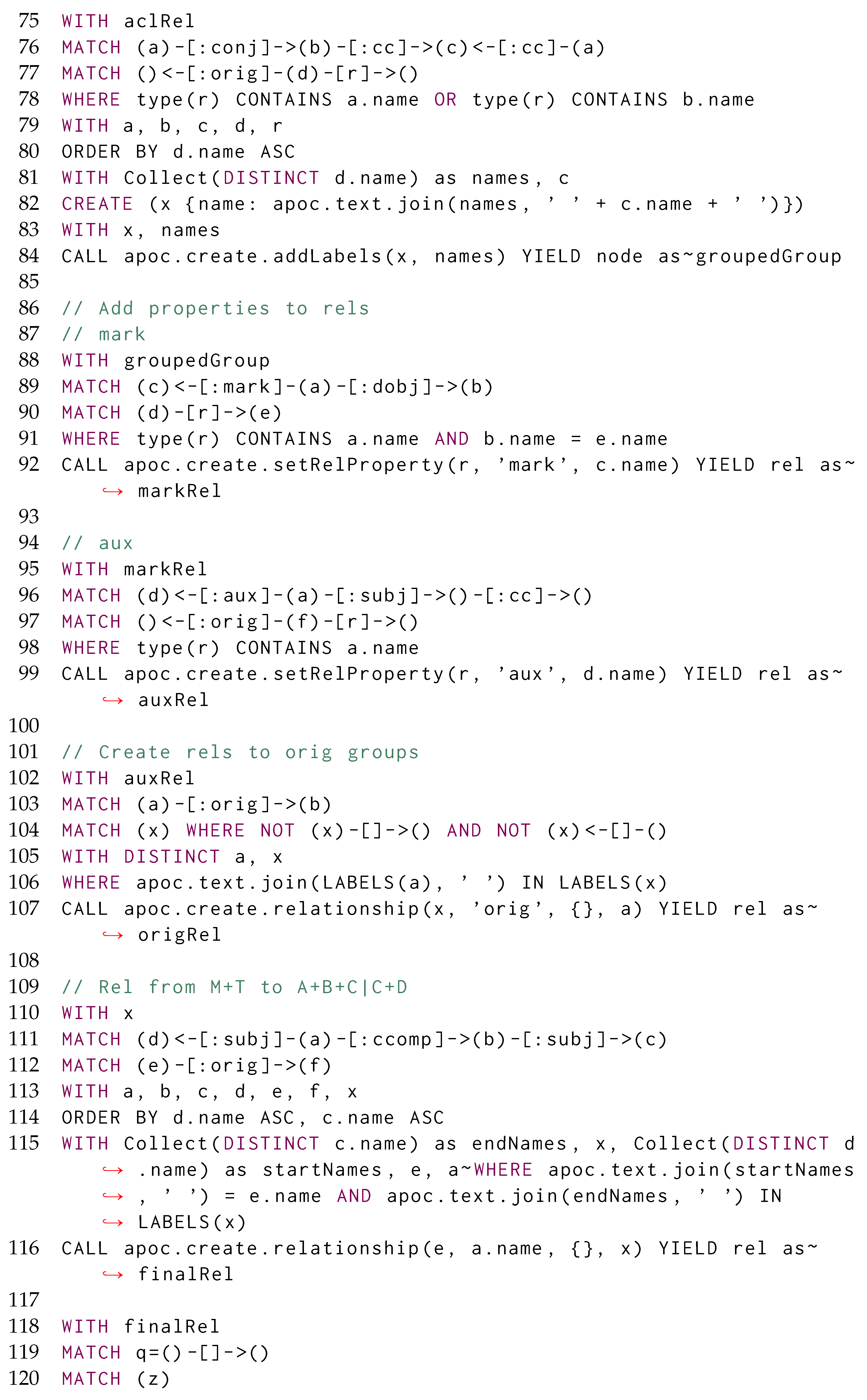

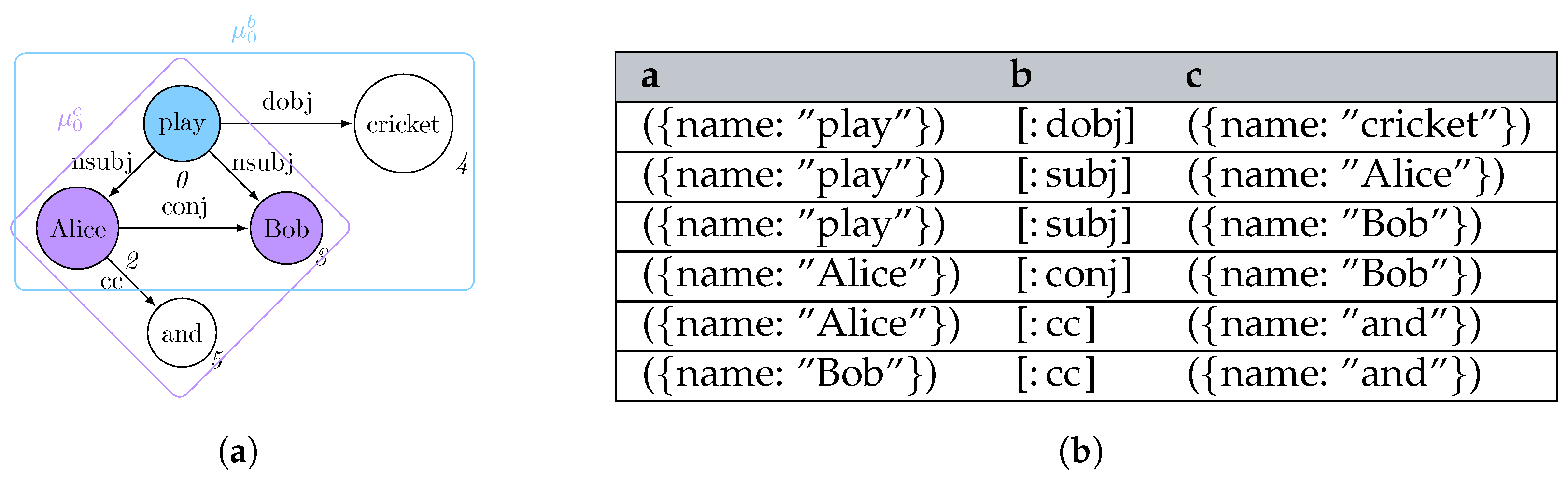

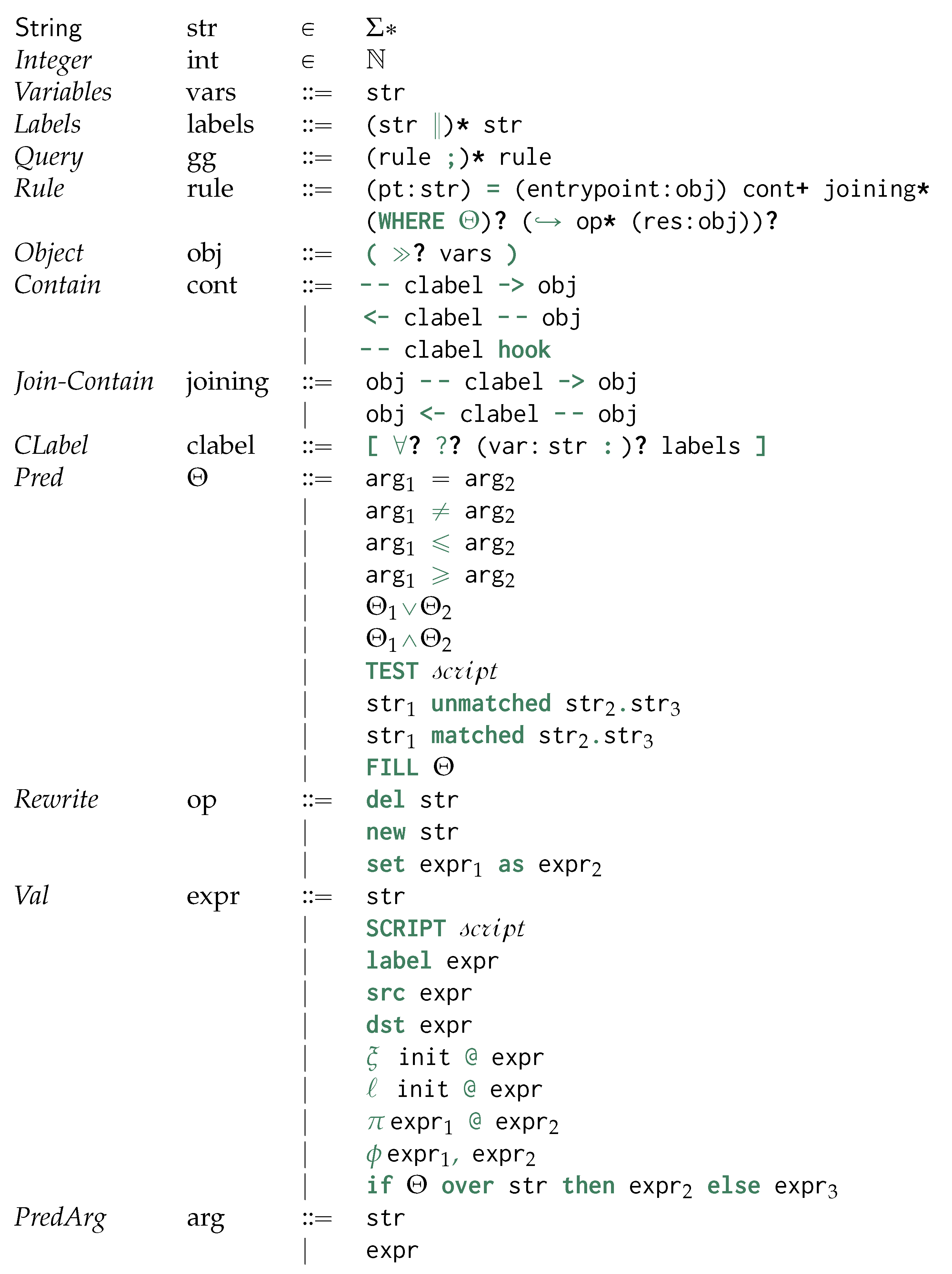

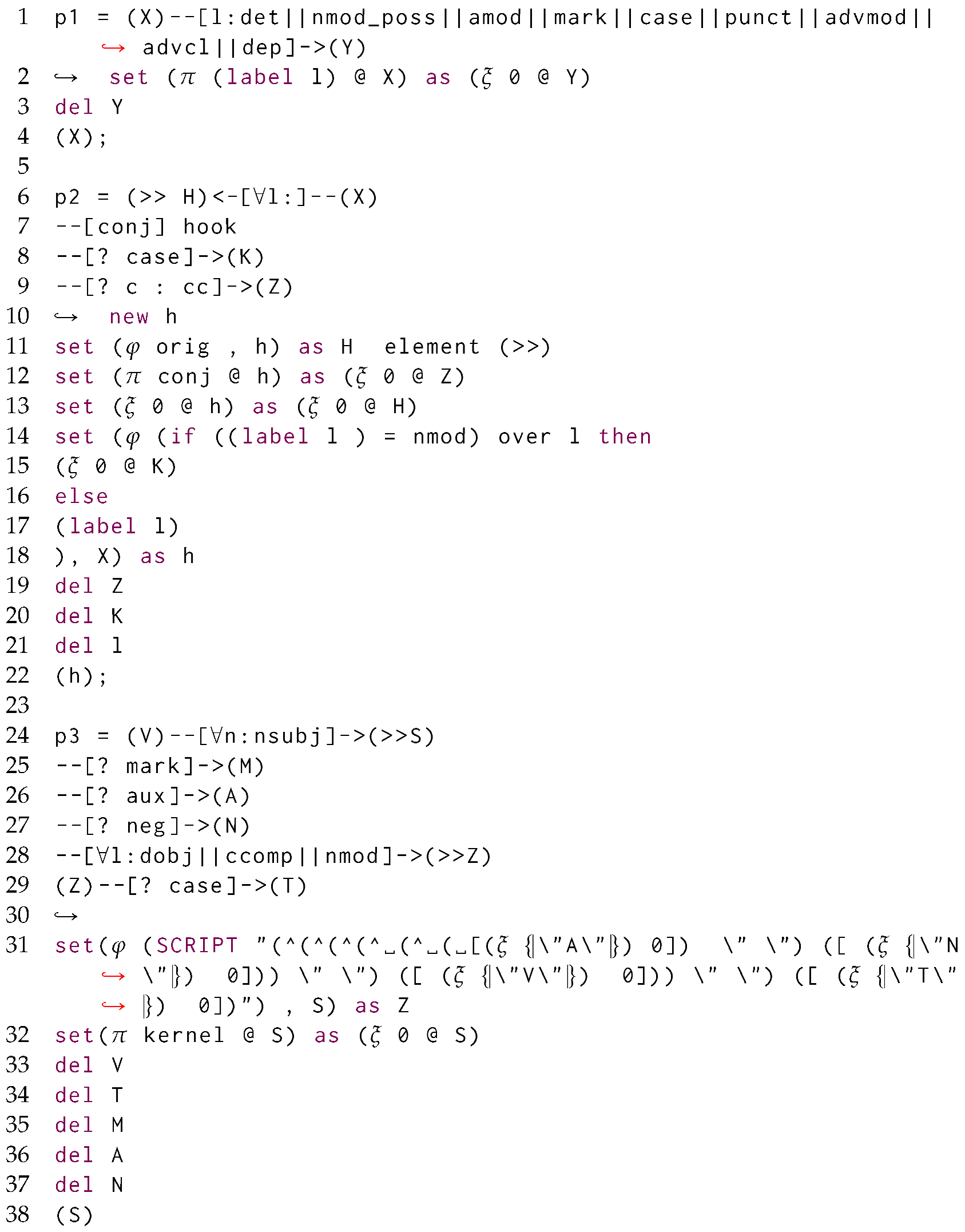

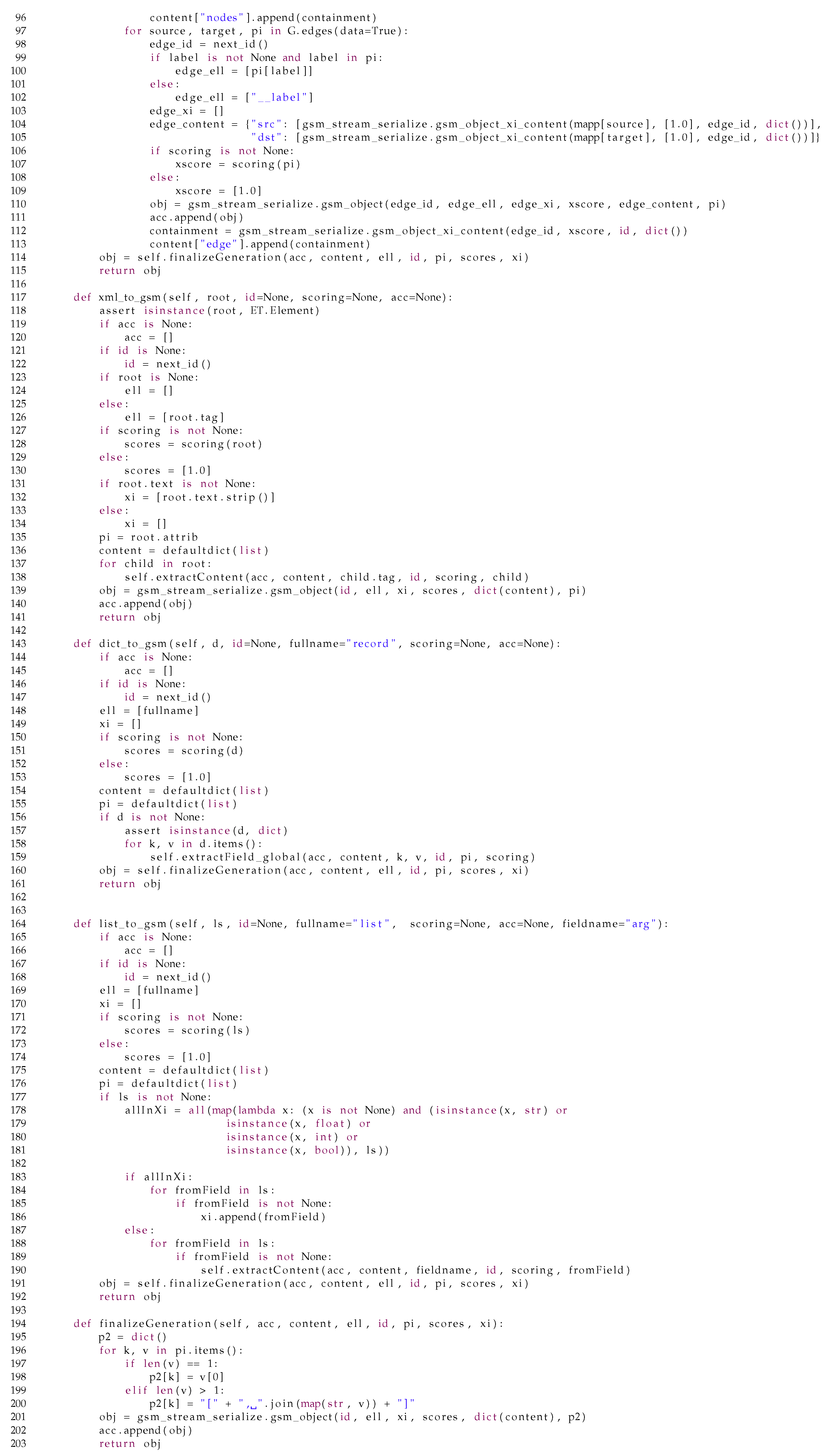

statements, voiding objects represented within the morphisms. This limitation of the property graph model, jointly with the need for representing acyclic graphs, motivates us to use the Generalised Semistructured Model (GSM) as an underlying data model for representing graphs, thus allowing us to refer to the vertices and edges by their ID [34]. Consequently, our implementation represents morphisms for acyclic property graphs as per Figure 1c. Figure 3a provides a possible property graph instantiation of the digraph originally presented in Figure 1a. Notwithstanding the former definition, Neo4j’s implementation of the Property Graph model substantially differs from the aforementioned mathematical definition, as it does not allow the definition of an explicit resource identifier for both vertices and edges. After expressing the matching query in Figure 1b in Cypher as  *, the resulting morphism table from Figure 3b does not explicitly reference the IDs referring to the specific vertices and edges, thus making it quite impractical to update the values associated with the vertices while continuing to restructure the graph, as this will require to re-match the previously updated data to retain it in the match. Although this issue might be partially solved by exploiting explicit incremental views over the property graph model [32], this solution had no application in Neo4j v5.20, thus making it impossible to fully test its feasibility within the available system. Furthermore, the elimination of an object previously matched within a morphism will update the table by providing an empty object rather than providing a NULL match. This will motivate us to investigate other ID-based graph data models.

*, the resulting morphism table from Figure 3b does not explicitly reference the IDs referring to the specific vertices and edges, thus making it quite impractical to update the values associated with the vertices while continuing to restructure the graph, as this will require to re-match the previously updated data to retain it in the match. Although this issue might be partially solved by exploiting explicit incremental views over the property graph model [32], this solution had no application in Neo4j v5.20, thus making it impossible to fully test its feasibility within the available system. Furthermore, the elimination of an object previously matched within a morphism will update the table by providing an empty object rather than providing a NULL match. This will motivate us to investigate other ID-based graph data models.

*, the resulting morphism table from Figure 3b does not explicitly reference the IDs referring to the specific vertices and edges, thus making it quite impractical to update the values associated with the vertices while continuing to restructure the graph, as this will require to re-match the previously updated data to retain it in the match. Although this issue might be partially solved by exploiting explicit incremental views over the property graph model [32], this solution had no application in Neo4j v5.20, thus making it impossible to fully test its feasibility within the available system. Furthermore, the elimination of an object previously matched within a morphism will update the table by providing an empty object rather than providing a NULL match. This will motivate us to investigate other ID-based graph data models.

*, the resulting morphism table from Figure 3b does not explicitly reference the IDs referring to the specific vertices and edges, thus making it quite impractical to update the values associated with the vertices while continuing to restructure the graph, as this will require to re-match the previously updated data to retain it in the match. Although this issue might be partially solved by exploiting explicit incremental views over the property graph model [32], this solution had no application in Neo4j v5.20, thus making it impossible to fully test its feasibility within the available system. Furthermore, the elimination of an object previously matched within a morphism will update the table by providing an empty object rather than providing a NULL match. This will motivate us to investigate other ID-based graph data models.

Figure 3.

Framing Figure 1 in the context of Neo4j’s implementation of the Property Graph model. (a) Dependency graph for “Alice and Bob play cricket”; (b) Neo4j’s property graph morphism table.

Neo4j lists some additional existing limitations beyond APOC and the expressibility of graph grammars in a declarative way with Cypher within their documentation (https://Neo4j.com/docs/operations-manual/current/authentication-authorization/limitations/, accessed on 13 November 2023), mainly related to the interplay between data access security requirements and query computations.

At the time of writing, the most studied graph query language both in terms of semantics and expressive power is SPARQL, which allows a specific class of queries that can be sensibly optimised [5,31]. The algebraic language used to formally represent SPARQL performs queries’ incremental evaluations [35], and hence allows for boosting the querying process while data undergoes updates (both incremental and decremental).

While the clauses represented within the WHERE statement are mapped to an optimisable intermediate algebra [31,36], thus including the execution of “optional joins” paths [37] for the optional matching of paths, such considerations do not apply for the constraints related to the graph update or return, such as CONSTRUCT, INSERT, and DELETE. While CONSTRUCT is required for returning a graph view as a final outcome, INSERT and DELETE create and remove RDF triplets by chaining them with matching operations. These operations also come with sensible limitations: while the first does not allow the return of updated graphs that can be subsequently queried by the same matching algorithm, the two latter statements merely update the underlying data structure and require the re-computation of the overall matching query to retain the updated results. We will partially address these limitations in our query language and data model by associating ID properties directly via an object-oriented representation while keeping track of the updated information on an intermediate view, which is always accessible within the query evaluation phase.

Last but not least, the usage of so-called named graphs allows for the selection of over two distinct RDF graphs, which substantially differs from the queries expressible on Cypher, where those can be only computed by one graph database at a time. Notwithstanding the former, the latest graph query language standard is very different from SPARQL, hence, for the rest of the paper, we are going to draw our attention to Cypher.

3.2. Nested Relational Model

3.2.1. Logical Model

A nested relational model describes data represented in tabular format, where each table, composed of multiple records, comes with a schema.

Given a set of attributes and a set of datatypes, a schema is a finite function mapping each string attribute in to its associated data type (). A schema is said to be not nested if it maps attributes to all basic data types, and nested otherwise. This syncretises the traditional function-based notation for schemas within the traditional relational model [13] with the tree-based characterisation of nested schemas

In this paper, we restrict the basic datatypes in to the following ones: vertex-ID ni, containment-ID ci, and a label or string str. Each of these types is associated with a set of possible values through a function: vertex- and containment-ID are associated with natural numbers (), while the string type is associated with the set of all the possible strings ().

A record , associated with a schema , is also a finite function mapping each attribute in to a possible value, either a natural number, a string, or a list of records (tables) as specified by the associated schema S (). We define a table T with schema as a list of records all having schema S, i.e., .

3.2.2. Query Languages

Relational algebra [13] is the de facto standard to decompose relational queries expressed in SQL to its most fundamental operational constituents while providing well-founded semantics for SQL. This algebra was later extended [38,39] to consider nested relationships. Relational algebra was also adapted to represent the combination of single edge/triple traversals in SPARQL, so as to express the traversal semantics of both required and optional patterns [5].

We now detail a set of operators of interest that will be used across the paper.

We relax the union operation from standard relational algebra by exploiting the notion of outer union [40]: given two tables t and s, respectively, with schema S and U, their outer union is a table with schema and containing each record from each table where shared attributes are associated with the same types ():

A restriction [13] or projection [41] operation over a relational table t with schema S returns a new table with schema in where both its schema and its records have a domain restricted to the attributes in L:

A renaming [41] operation over a relational table t with schema S and replaces all the occurrences of attributes in L with ones in R, thus returning a new table with schema :

A nesting [38] operation over a table t with schema S returns a new table with schema , where all the attributes referring to B are nested within the attribute A, which is associated with the type resulting from the nested attributes. Operatively, it coalesces all the tuples in t sharing the same values not in B as a single equivalence class c: we then restrict one representative of this class to the attributes not in B for then extending it by associating to a novel attribute A the projection of c over the attributes in B, i.e., :

A (natural) join [42] between two non-nested tables t and s with schemas S and U, respectively, if then it combines records from both tables that share the same attributes, and otherwise extends each record from the left table with a record coming from the right one (cross product):

Given a sequence of attributes , this operation can be extended to join the relationship coming from the right at any desired depth level by specifying a suitable path to traverse the nested schema from the left relationship [38]:

This paper will automate the determination of given the schemas of the two tables (Section 5). Although it can be shown that the nested relational model can be easily represented in terms of the traditional not-nested relational model [43], this paper uses the nested relational model for compactly representing graph nesting operations over morphisms. Last, the left (outer) join t  s extends the results from by also adding all the records from t having no matching tuples in s:

s extends the results from by also adding all the records from t having no matching tuples in s:

s extends the results from by also adding all the records from t having no matching tuples in s:

s extends the results from by also adding all the records from t having no matching tuples in s:

As per SPARQL semantics, the left outer join represents patterns that might optionally appear.

3.2.3. Columnar Physical Model

Columnar physical models offer a fast and efficient way to store and retrieve data where each table ℜ with a schema having a domain is decomposed into distinct binary relations with a schema with domain for each attribute in , thus requiring to only refer to one record by its ID while representing data boolean conditions through algebraic operations. As this decomposition guarantees that the full-outer natural join  of the decomposed tables is equivalent to the initial relation ℜ, we can avoid listing NULL values in each , thus limiting our space allocation to the values effectively present in our original table ℜ. Another reason for adopting a logical model compatible with this columnar physical model is to keep provenance information [44] while querying and manipulating the data while carrying out data transformations. By exploiting the features of the physical representation, we are no longer required to use the logical model for representing both data and provenance information as per previous attempts for RDF graph data [45], as the columnar physical model allows natively supporting id information for both objects (i.e., vertices) and containments (i.e., edges), thus further extending the RDF model by extensively providing unique ID similarly to what was originally postulated by the EPGM data model for allowing efficient distributed computation [46]. These considerations remark on the generality of our proposed model relying upon this representation.

of the decomposed tables is equivalent to the initial relation ℜ, we can avoid listing NULL values in each , thus limiting our space allocation to the values effectively present in our original table ℜ. Another reason for adopting a logical model compatible with this columnar physical model is to keep provenance information [44] while querying and manipulating the data while carrying out data transformations. By exploiting the features of the physical representation, we are no longer required to use the logical model for representing both data and provenance information as per previous attempts for RDF graph data [45], as the columnar physical model allows natively supporting id information for both objects (i.e., vertices) and containments (i.e., edges), thus further extending the RDF model by extensively providing unique ID similarly to what was originally postulated by the EPGM data model for allowing efficient distributed computation [46]. These considerations remark on the generality of our proposed model relying upon this representation.

of the decomposed tables is equivalent to the initial relation ℜ, we can avoid listing NULL values in each , thus limiting our space allocation to the values effectively present in our original table ℜ. Another reason for adopting a logical model compatible with this columnar physical model is to keep provenance information [44] while querying and manipulating the data while carrying out data transformations. By exploiting the features of the physical representation, we are no longer required to use the logical model for representing both data and provenance information as per previous attempts for RDF graph data [45], as the columnar physical model allows natively supporting id information for both objects (i.e., vertices) and containments (i.e., edges), thus further extending the RDF model by extensively providing unique ID similarly to what was originally postulated by the EPGM data model for allowing efficient distributed computation [46]. These considerations remark on the generality of our proposed model relying upon this representation.

of the decomposed tables is equivalent to the initial relation ℜ, we can avoid listing NULL values in each , thus limiting our space allocation to the values effectively present in our original table ℜ. Another reason for adopting a logical model compatible with this columnar physical model is to keep provenance information [44] while querying and manipulating the data while carrying out data transformations. By exploiting the features of the physical representation, we are no longer required to use the logical model for representing both data and provenance information as per previous attempts for RDF graph data [45], as the columnar physical model allows natively supporting id information for both objects (i.e., vertices) and containments (i.e., edges), thus further extending the RDF model by extensively providing unique ID similarly to what was originally postulated by the EPGM data model for allowing efficient distributed computation [46]. These considerations remark on the generality of our proposed model relying upon this representation.We now discuss a specific instantiation of this columnar relational model for representing temporal logs: KnoBAB [11]. Although this representation might sound distant from the aim of supporting an Object-Oriented database, Section 4.2 will outline how this might be achieved. KnoBAB stores each temporal log into three distinct types of tables: (i) a CountingTable storing the number of occurrences n of a specific activity label in a trace as a record . Such a table, created in almost linear time while scanning the log, comes at no significant cost at data loading. (ii) An ActivityTable preserving the traces’ temporal information through records asserting that the j-th event of the i-th log trace comes with an activity label a and it is stored as the id-th record of such a table. p (and x) points to the records containing the immediately preceding (and following) event of the trace, thus allowing linear scans of the traces. This is of the uttermost importance as the records are sorted by activity label, trace ID, and event ID for enhancing query run times. (iii) The model also instantiates as many AttributeTableκ as the keys in the data payload associated with temporal events, where each record remarks that the event occurring as the -th element within the table with activity label a associates a key to a non-NULL value v. This data model also come with primary and secondary indices further enhancing the access to the underlying data model; further details are provided in [11].

At the time of writing, no property graph database exploits such a model for efficiently querying data, in particular, Neo4j v5.20 stores property graphs using a simple data model representation where the whole vertices, relationships, or properties are stored in distinct tables (https://Neo4j.com/developer/kb/understanding-data-on-disk/, accessed on 24 August 2024). This substantially differs from the assumptions of the columnar physical model, prescribing the necessity for decomposing any data representation in multiple different tables, thus making the overall search more efficient by assuming that each data record can be associated with a unique ID. To implement a model under these assumptions, we then require both our logical (Section 4.1) and physical (Section 4.2) model to support explicit identifiers for both objects (acting as graph vertices) and containment relationships (acting as graph edges). Although Neo4j v5.20 guarantees to represent records in fixed-size to fasten up the data retrieval process, this is insufficient to ensure fast access to edges or vertices associated with a specific label. On the other hand, our solution tries to address this limitation by explicitly indexing objects and containments by label information.

Concerning triple stores for RDF data. The only database effectively using column-based storage is Virtuoso v1.1 (https://virtuoso.openlinksw.com/whitepapers/Virtuoso_a_Hybrid_RDBMS_Graph_Column_Store.html, accessed on 24 August 2024): this solution is mainly exploited for fast retrieving the data based on the subject, predicate, and object values. As the underlying physical model does not differentiate between vertices and edges due to the specificity of the logical model requiring that both relationships among vertices (URIs) and properties associated with those must be represented via triples, it is not possible to further optimise the data access by reducing the overall size of the data to be navigated and searched. On the other hand, our proposed physical model (Section 4.2) will store this information into separate tables: the ActivityTable, registering all the objects (and therefore, vertices) being loaded, an AttributeTableκ storing key-value properties associated with the objects, and a PhiTableκ for representing the containment relationships (and therefore, edges).

Last, both Virtuoso v1.1 and Neo4j v5.20 do not exploit query caching mechanisms as the ones proposed in [47] for also enhancing the execution of multiple relational queries. This approach, on the other hand, was recently implemented in KnoBAB, thus reinforcing our previous claims for extending such previous implementation to adapt it to a novel logical model. In particular, the Algorithm presented in Section 6.3 partially implements this mechanism for caching intermediate traversal queries that might be shared across patterns to be matched, thus avoiding visiting the same data multiple times for different pattern matching.

4. Generalised Semistructured Model v2.0

We continue the discussion of this paper’s methodology by discussing the logical model (Section 4.1) as a further extension of the Generalised Semistructured Model [34] to explicitly support property-value associations for vertices via . Although we propose no property-value extension for containments, we argue that this is minor and does not substantially change the theoretical and implementation results discussed in this paper for Generalised Graph Grammar. We also introduce a novel columnar-oriented physical model (Section 4.2 on the next page) being a direct application of our previous work on temporal databases [11] already supporting collections of databases (log of traces): this will be revised for loading and indexing collection of GSM databases defining our overall physical storage. As the external data to be loaded into the physical model directly represents the logical model, we show that these two representations are isomorphic (Lemma 1) by defining explicitly the loading and serialisation operations (Algorithm from Section 4.2).

As directly updating the physical model with the changes specified in the rewriting steps of the graph grammar rules might require massive restructuring costs, we instead keep track of such changes in a separate non-indexed representation acting as an incremental view to the entire database. We then refer to this additional structure as a GSM view for each GSM database g loaded in the physical model (Section 4.3). We then characterise the semantics of by defining the updating function for g as a materialisation function considering the incremental changes recorded in (Section 4.3.2 on page 19).

Last, as we consider the execution of the rewriting steps for each graph as a transformation of the vertices and edges as referenced within each morphism, modulo the updates tracked in , it becomes necessary to introduce some preliminary notation for resolving vertex and edge variables from such morphism (Section 4.4).

4.1. Logical Model

The logical model for GSM describes a single object-oriented database g as a tuple , where is a collection of objects (references). Each object is associated with a tuple of possible types and to a tuple of string-representations providing its human-readable descriptions. As an object might be the result of an automated entity-relationship extraction process, each object is associated with a list of confidence values describing the trustworthiness of the provided information. Differently from the previous definition [34], we extend the previous model to also associate each object to an explicit property-value association for each object through a finite function .

Differently from our previous definition of our GSM model, we express object relationships through uniquely identified vertex containments similar to edges as in Figure 1a, to explicitly reference such containments in morphism tables similarly to Figure 1c. We associate each object o with a containment attribute referring to multiple containment IDs via . An explicit index maps each of these IDs to a concrete containment denoting that is contained in o through the containment relationship with a confidence score of w. This separation between indices and values is necessary to allow the removal of containment values when computing queries efficiently. This also guarantees the correct correspondence between each value in the domain of to the label associated with the -th containment requiring each will be associated with one solve containment relationship (e.g., ).

We avoid objects containing themselves at any nesting level by imposing a recursion constraint [6] to be checked at indexing time: this allows both the definition of sound structural aggregations, which can be then conveniently used to represent multi-dimensional data-warehouses [34]. Thus, we freely assume that no object shall store the same containment reference across containment attributes [6]: . This property can also be adapted to conveniently represent Direct Acyclic Graphs, by representing each vertex of a weighted property-graph as a GSM object, and each edge with weight w and label reflects a containment . Given this isomorphism , we can also apply a layered and reverse topological ordering of the vertices of g and denote it as .

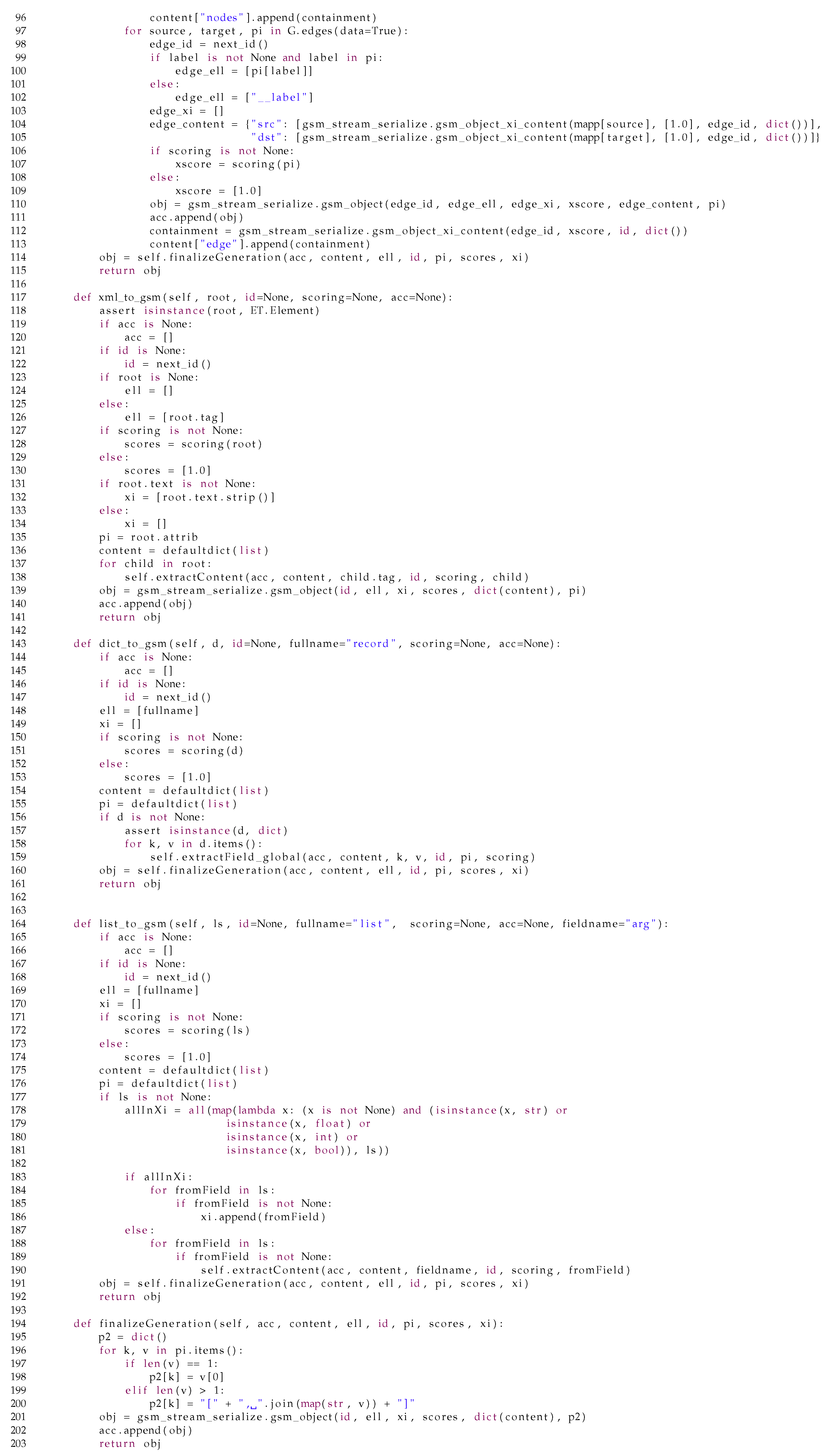

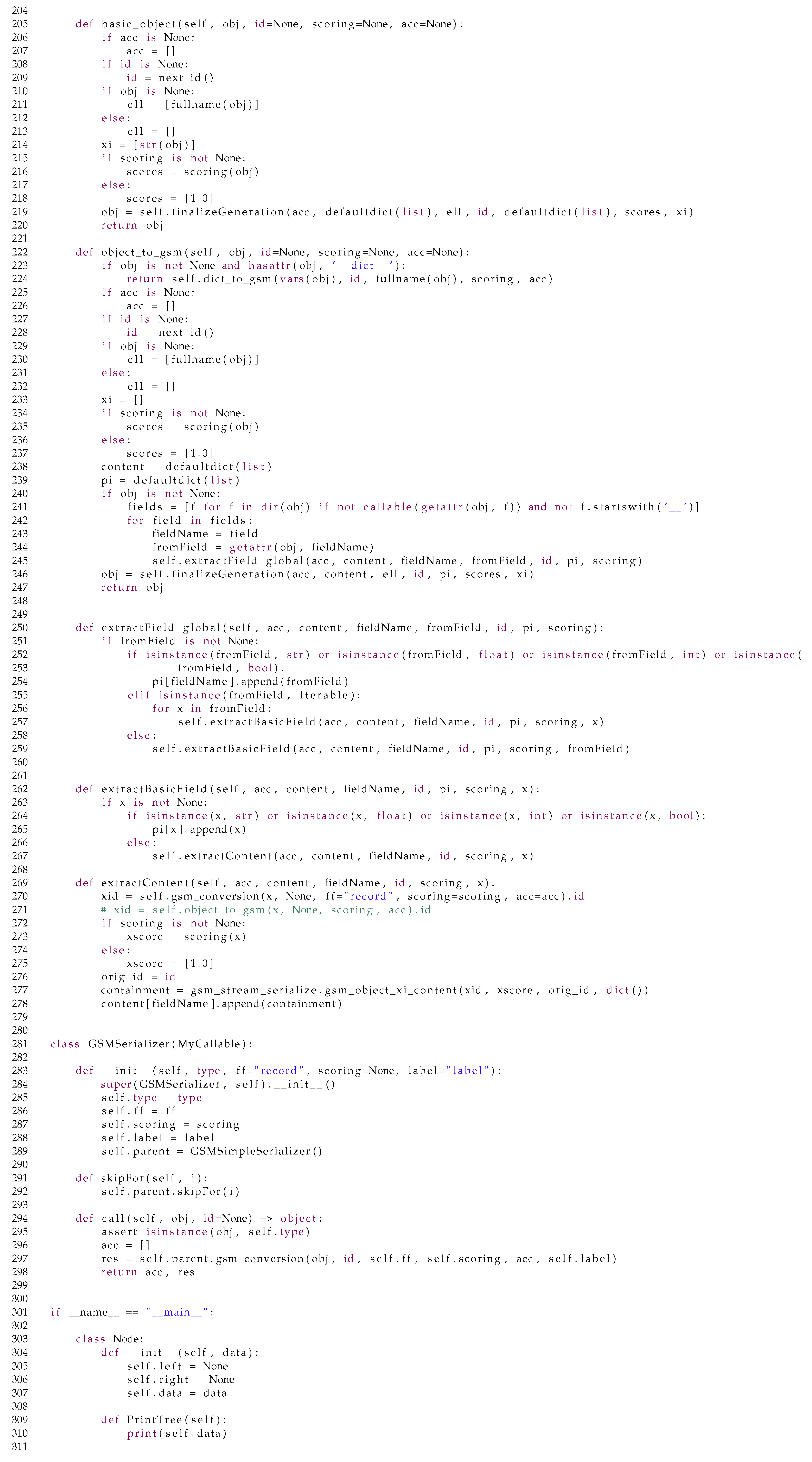

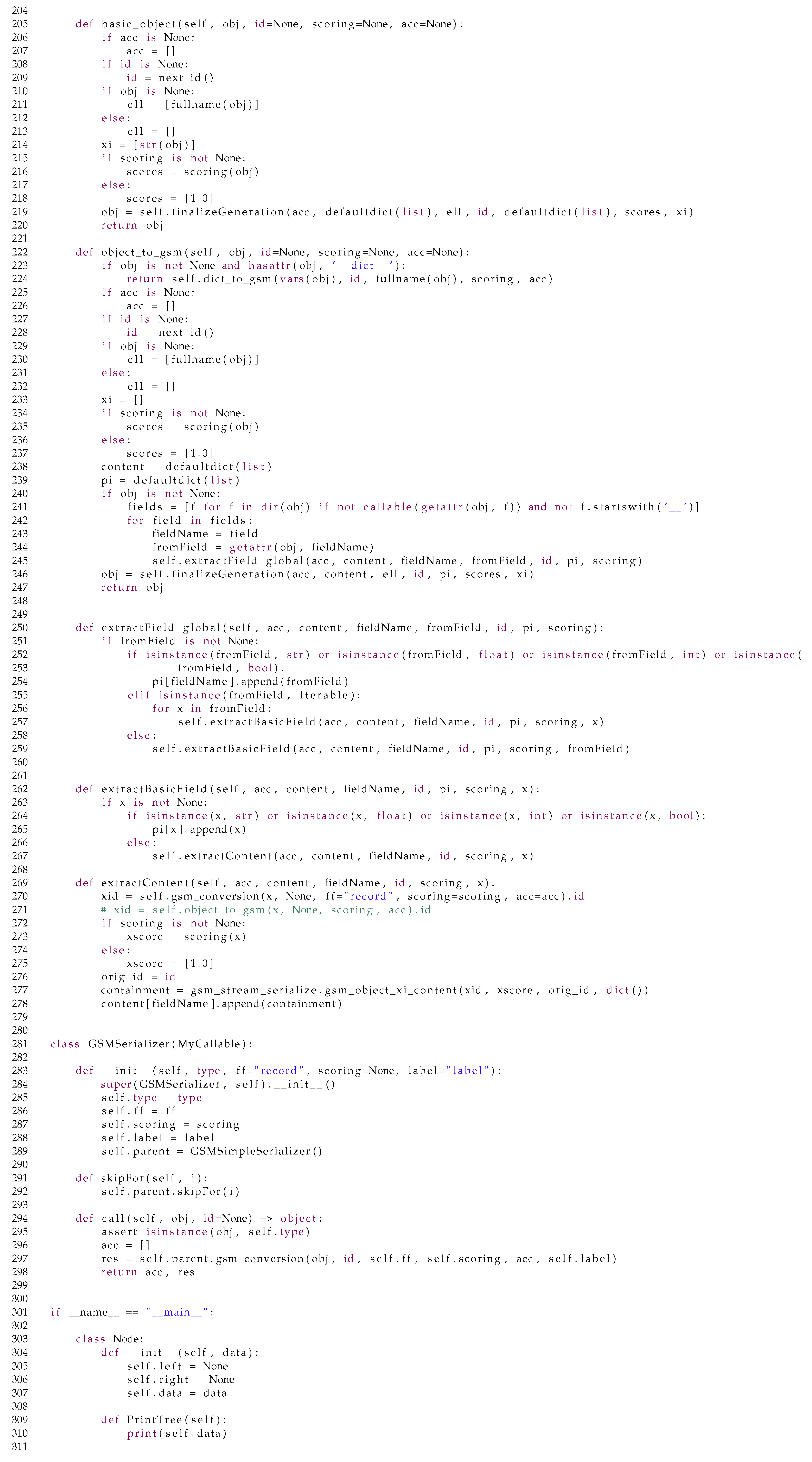

The Python code provided in Appendix A.5 showcases the possibility of instantiating Python objects within the GSM model via the implementation of an adequate transformation function, mapping all native types as key-value properties of a GSM object, while associating the others to and containment relationships (Line 222). Containments also enable the representation of other object-oriented data structures, such as dictionaries/maps (Line 144) and linear data structures (Line 164), thus enabling a direct representation of JSON and nested relational data (Line 53). As per our previous work [9], GSM also supports the representation of XML (Line 117) and property graph data (Line 65). This also achieves the representation aim of EPGM by natively supporting unique identifiers for both vertices and edges, to better operate on those by directly referring to their IDs without the need to necessarily carry out all of its associated payload information [46]. As such, this model leverages all the pros and cons of the previous graph data models by framing them within an object-oriented semistructured model.

4.2. Physical Model

We now describe how the former model can be represented in primary memory for fastening up the matching and rewriting mechanism.

First, we would like to support the loading of multiple GSM databases while being able to operate over them simultaneously similar to the named graphs in the RDF model. Differently from Neo4j v5.20 and similarly to RDF’s named graphs, we ensure a unique and progressive ID across different databases.

We directly exploit our ActivityTable for listing all the objects appearing within each loaded GSM: as such, the id-th record will refer to the i-th object in the g-th GSM database, while a will refer to the first label of , that is .

We extend KnoBAB’s ActivityTable to represent the containment relationship; the result is a PhiTableκ for each containment attribute : each record refers to a specific GSM database g through which object associated with the first label contains with an uncertainty score of w and is associated with an index : this expresses the idea that with . At the indexing phase, the table is sorted by lexicographical order over the record’s constituents. We extend this table with two indices: a primary index mapping each first occurring ℓ value to the first and the last object within the collection, and a secondary index mapping such each database ID g and object ID to a collection of containment records expressing for each and such that .

We retain the AttributeTableκ for expressing the properties associated with the GSM vertices, for which we keep the same interpretation from Section 3.2.3 on page 12: thus, each record refers to where appears as the id-th record within the , now used to list all the objects occurring across GSM models.

Last, ℓ and properties are stored in a one-column table and a secondary index mapping each database ID and object ID to a list of records referring to the strings associated with the object ID. The same approach is also used to store the confidence values associated with each GSM object.

Algorithm 2 shows the algorithms used for loading all of the GSM databases to be loaded of interest to a single columnar database representation , as well as providing the algorithm used to serialize back the stored data into a GSM model for data visualisation and materialisation purposes. Given this, we can easily prove the isomorphism between the two shared data structures, thus also providing proof of the correctness of the two transformations which, as a result, explains the formal characterisation of such an algorithm.

| Algorithm 2 Loading a Logical Model into the Physical Model and serialising it back |

|

Lemma 1.

A collection of logical model GSM databases is isomorphic to the ones loaded and indexed physical model.

The proof is given in Appendix B.1. We refer to Section 8 for proofs related to the time complexity for loading, indexing, and serialising operations.

4.3. GSM View

To avoid massive restructuring costs while updating the information indexed in the physical model, we use a direct extension of the logical model to keep track of which objects were newly generated, removed, or updated during the application of the rule rewriting mechanisms. At query time, we instantiate a view for each being loaded within the physical model (Section 6.5). We want our view to support the following operations: (i) creation of new objects, (ii) update of the type/labelling information ℓ, (iii) updating the human-readable value characterisation , (iv) update of the containment values, (v) removal of specific objects, and (vi) substitution of previously matched vertices with newly-created or other previously matched ones. While we are not explicitly supporting the removal of specific properties or values, these can be easily simulated by setting specific fields to empty strings or values. A view for is defined as follows:

where is a GSM database holding all the objects being newly inserted alongside with the properties as well as the updated properties for the objects within the graph g (i–iv), refers to the nested morphism being considered while evaluating the query, denotes the extension of such morphism with the newly inserted objects through variable declaration, which resulting objects are then collected in (i). (and ) tracks all the removed objects (and specific containment objects, resp.) through their ID (v). Last, is a replacement map to be used when evaluating the transformations over morphisms occurring at a higher topological sort layer, stating to refer to an object when occurs (vi). and are used to retain the updated information locally to each evaluated morphism, while the rest are shared across the evaluation of each distinct morphism.

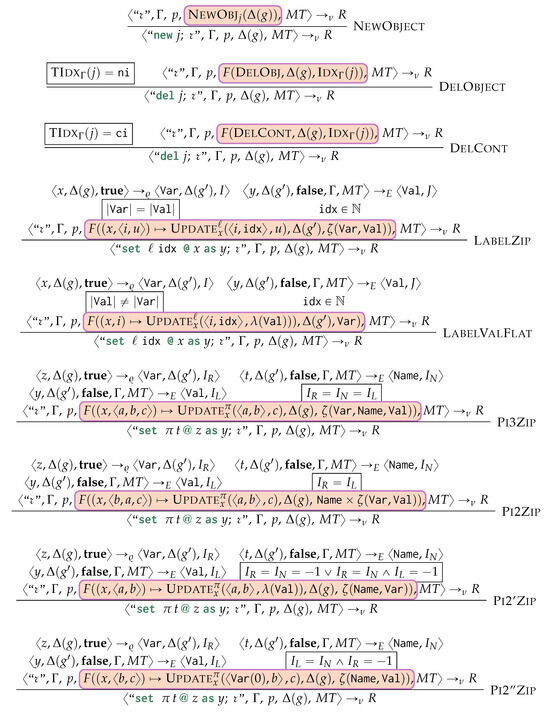

We equip with update operations reflecting the insertion, deletion, update, and replacement operations as per rewriting semantics associated with each graph grammar production rule. Such operations are the following:

- Start:

- re-initialises the view to evaluating a new morphism by discarding any information being local to each specific morphism:

- DelCont:

- We remove the i-th containment relationship from the database:

- NewObj:

- Generates a new empty object associated with a variable j and with a new unique object ID :

- ReplObj:

- replaces with if and only if was not removed ():

- DelObj:

- We remove an object only if this was already in g or if this was inserted in a previous evaluation of a morphism and, within the evaluation of the current morphism, we remove the original object being replaced by :

- Update:

- updates one of the object property functions by specifying which of those should be updated. In other words, this is the extension of Put2 (Equation (2)) as a higher function for updating the view alongside one of these components:

Concerning the time complexity, we can easily see that all operations take time to compute by assuming efficient hash-based sets and dictionaries. Concerning , this operation takes time, as we are merely inserting one pair at a time.

4.3.1. Object Replacement and Resolution

As our query language will have variables to be resolved via matched morphisms and view updates (Appendix A.1), we focus on specific variable resolution operations. Replacement operations should be interpreted as a reflexive and transitive closure over the step-wise replacement operations performed while running the rewriting query (Section 6.5 on page 33).

Definition 4

(Active Replacement). The active replacement function resolves any object ID x into its final replacement vertex following the chain of subsequent unique substitutions of each single vertex in ρ, or otherwise returns x:

During an evaluation of a morphism to be rewritten, such replacements and changes should be effective from the next morphism while we would like to preserve the original information while evaluating the current morphism.

Definition 5

(Local Replacament). The local replacement function blocks any notion of replacement while evaluating the original data matched by the current morphism while activating the changes from the evaluation of any subsequent morphism where such newly-created vertices from the current morphism will not be considered:

We consider objects as removed if they have no effective replacements to be enacted in any subsequent morphism evaluation: . Thus, we also need to resolve objects’ properties (such as ℓ, , , and ) by considering the changes registered in . We want to define a HOF property extraction that is independent of the specific function of choice. By exploiting the notion of local replacement (Definition 5), we obtain the following definition:

Definition 6

(Property Resolution). Given any property access function , a GSM database and a corresponding view we define the following property resolution high-order function:

where we ignore any value associated with a removed vertex in (first case), we consider any value stored in as overriding any other value originally in the loaded graph (second case), while returning the original value if the object underwent no updates (last case).

4.3.2. View Materialisation

Last, we define a materialisation function as a function updating a GSM database with the updates stored in the incremental view . We consider all the objects being inserted (implicitly associated with a score) and removed, as well as extending all the properties as per the view, thus removing containment relationships originating from or arriving at GSM objects.

As a rewriting mechanism might add edges violating the recursion constraint, we prune the containments loading to its violation by adopting the following heuristic: after approximating the topological sort by prioritising the object ID, we remove all the containments generating a cycle where the contained object has an ID with a lower value than its container ID. From this definition, we then derive the definition of the update of all the GSM databases loaded in the physical model G with their corresponding updates in via the following expression:

4.4. Morphism Notation

We consider nested relationships mapping attributes to either basic data types or to not nested schemas, as our query language will syntactically avoid the possibility of arbitrary nesting depths. Given this, any attribute can nest at a maximum level 1 of depth. This will then motivate a similar requirement for the envisioned operator for composing matched containments (collected in relational tables) into nested morphisms (Section 5).

As our query language requires resolving variables by associating each variable to the values stored in a specific morphism , we need a dedicated function enabling this. We can define a value extraction function for each morphism and attribute , returning directly the value associated with in if directly appears in the schema of (), and otherwise returns the list of all the possible values associated with it within a nested relationship having in its domain:

When resolving a variable, we need to determine whether this refers to a containment or to an object, thus selecting to remove the most appropriate type of constituent indicated within a morphism. So, we can define a function similar to the former for extracting the basic datatypes associated with a given attribute:

We also need a function determining the occurrence of an attribute x nested in one of the attributes of S. This will be used for both automating the discovery of the path for joining nested tables from our recently designed operator (Section 5) or for determining whether two variables belong to the same nested cell of the morphism while updating the GSM view. This boils down to defining a function returning if is an attribute of a table nested in , and NULL otherwise.

Last, we need a function for returning all the object and containment IDs under the circumstance that these contribute to the satisfaction of a boolean expression. We then define such a function returning such IDs at any level of depth of a nested morphism:

5. Nested Natural Equi-Join

Although previous literature defines nested natural join, no known algorithmic implementation is available. As our query language will return nested morphisms by gradually composing intermediate tables through natural or left joins is, therefore, important to provide an implementation for such an operator. This will be required to combine tables derived from the containment matching (Section 6.3) into nested morphisms, where it is required to join via attributes appearing within nested tables (Section 6.4). Our lemmas show the necessity of this operator by demonstrating the impossibility of expressing it via Equation (6) directly, while capturing the desired features for generating nested morphisms.

We propose for the first time Algorithm 3 for computing the nested (left outer) equi-join with a path of depth at most 1. The only parameter provided to the algorithm is whether we want a left outer equi-join or a natural one otherwise (isLeft) and, given that the determination of the nesting path will depend on the schema of both the left and right operand, we automate (Line 9) the determination of the path along which compute the nested join for which, we freely assume that we navigate on the nested schema of the left operand similarly to Equation (6): this assumption comes from our practical use case scenario that we are gradually composing the morphisms provided as a left operand argument with the containment relationships provided as the right operand. Furthermore, to apply the definition from Equation (6) while automating the discovery of the path to navigate to nest the relationship, we require that each attribute appearing from the right table schema might appear as nested in one single attribute from the left table or, otherwise, we cannot automatically determine which left attribute to choose to nest the graph visit (Line 8). Otherwise, we determine a unique attribute from the left table alongside which apply the path descent (Line 9).

| Algorithm 3 Nested Natural Equi-Join |

|

The algorithm also takes into account whether no nesting path is derivable, thus resorting to traditional relational algebra operations: if there are no shared attributes, we boil down to the execution of a cross product (Line 4) and, if none of the attributes from the right table appear within a nested attribute from the left table, then we boil down to a classical left-outer or natural equijoin depending on the isLeft parameter (Line 6).

Otherwise, we know that some attributes from the right table appear as nested within the same attribute N of the left table and that the two tables share the same non-nested attributes. Then, we initialize the join across the two tables by first identifying the nested attribute N from the left (Line 9). Given , the attributes appearing as nonnested attributes from the left table also appear in the right one, we partition the tables by , thus identifying the records having the same values for the same attributes in (Lines 10–11). Then, we start producing the results for the nested join by iterating over the values k appearing in either of the two tables (Line 12): if k appears only over the left table and we want to compute a left nested join (Line 13), we reconstruct the original rows appearing from such table and return them (Line 14). Last, we only consider values k for IR appearing on both tables and, for each row y from the left table having values in k, we compute the left (or natural equi-)join of with each row z from the right table and combine the results with k (Line 18).

Properties

We prove that cannot trivially boil down to ⋈ unless ; otherwise, if is in not appearing as an attribute for the to-be-joined table schemas, we will be left out with the left table and not a classic un-nested natural join. Proofs are postponed to Appendix B.2.

Lemma 2.

Given and , if , then

As this is not a desired feature for an operator whose application should be automated, this justifies the need for a novel nested algebra operator for composing nested morphisms, which should shift to left joins [37] for composing optional patterns provided within the right operand (isLeft), while also backtracking to natural joins or cross products if no nested attribute is shared across matched containments. The following lemma discards the possibility of the aforementioned limitation to occur from our operator, by instead capturing the notion of cross-products when tables are not sharing non-nested attributes.

Lemma 3.

Given tables L and R, respectively, with schema S and U with non-shared attributes (), either

NestedNaturalEquijoinFalse() or NestedNaturalEquijoinTrue() compute .

We also demonstrate that the proposed operator falls back to the natural join when no attribute nested in the left operand appears in the right one, while also capturing the notion of left join by changing the isLeft

Lemma 4.

Given tables L and R, respectively, with schema S and U where no nested attribute appearing in the left table appears in the schema of the second, thenNestedNaturalEquijoinfalse() andNestedNaturalEquijointrue() R.

R.

R.

R.The next lemma observes that our proposed operator not only nests the computation of the join operator within a table, but also implements an equijoin doing a value match across the table fields that are shared within the shallowest level. This is a desideratum to guarantee the composition of nested morphisms within the same GSM database ID, thus requiring sharing at least the same dbid field (Section 6.3). Still, these operations cannot be expressed through the nested join operator available from the current literature (Equation (6)).

Lemma 5.

Given tables L and R, respectively, with schema S and U, that is and , where the left table has a column N ( ) being nested () and also appearing in the right table (), NestedNaturalEquijoinfalse() cannot be expressed in terms of for , , and .

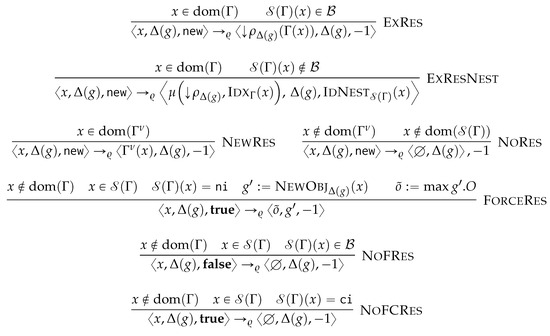

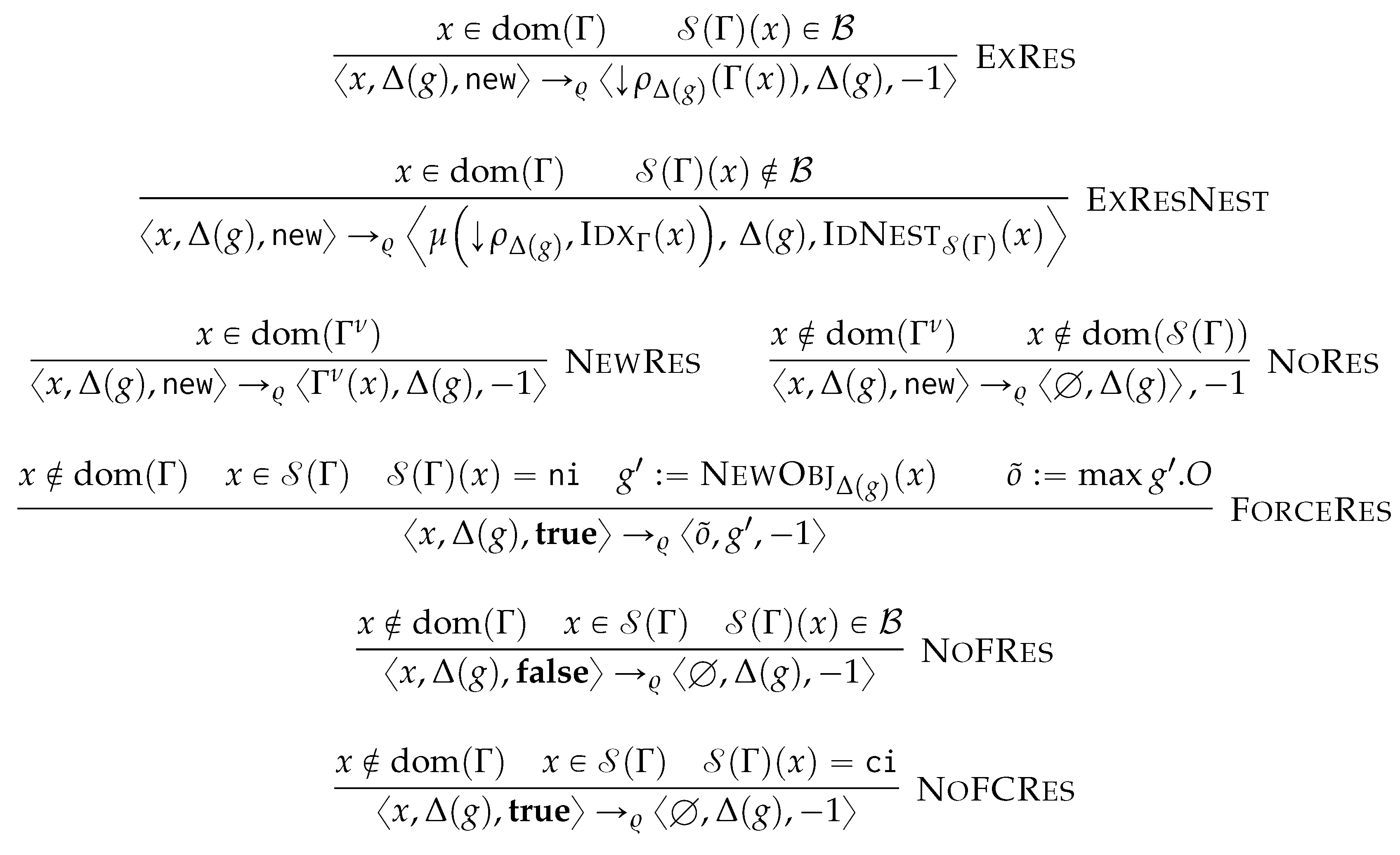

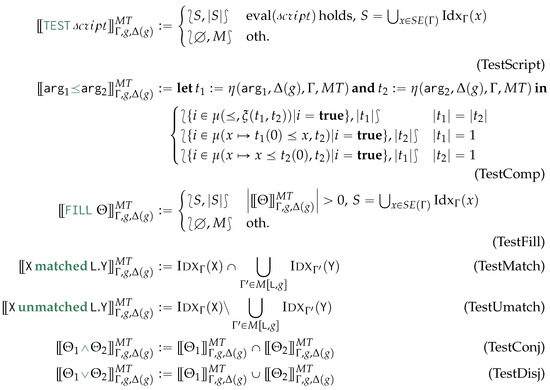

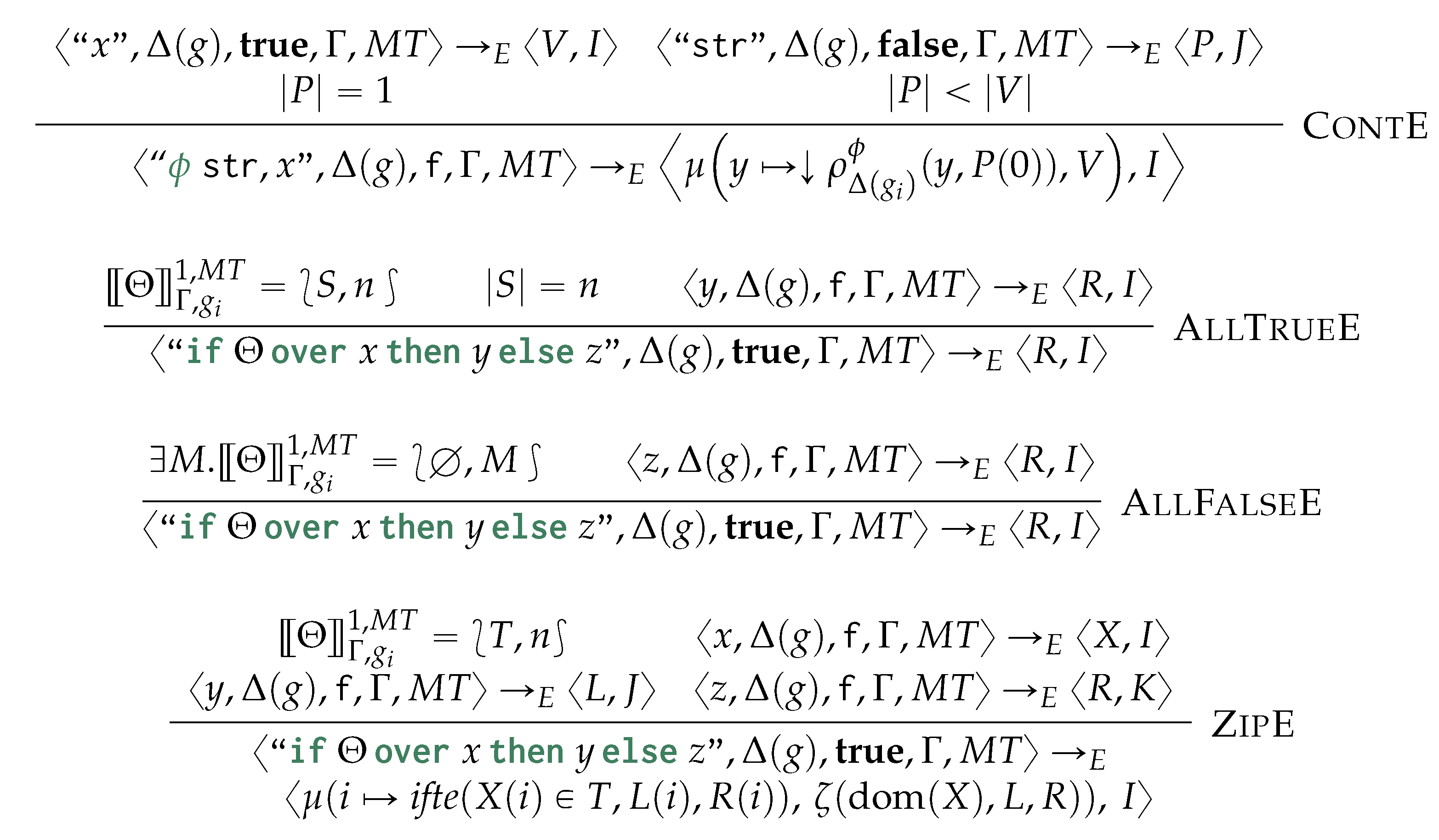

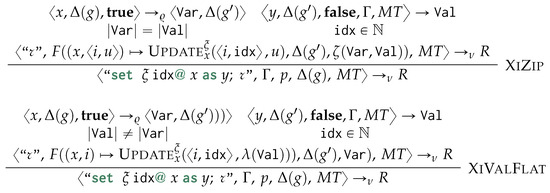

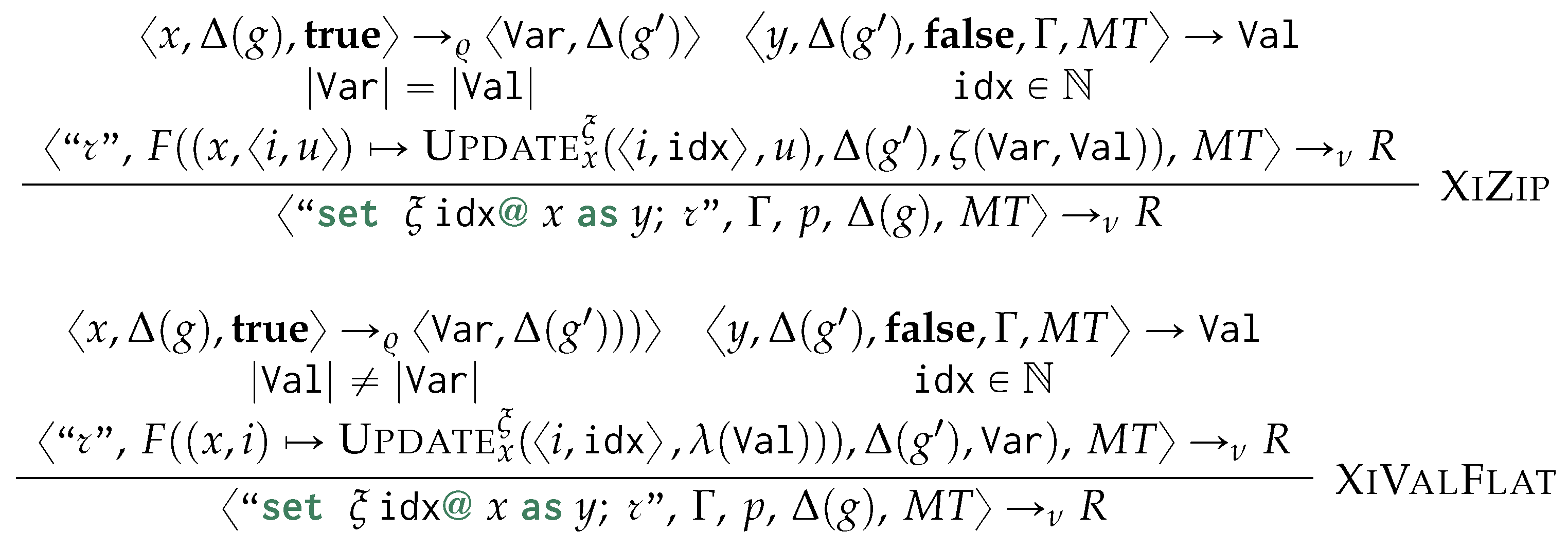

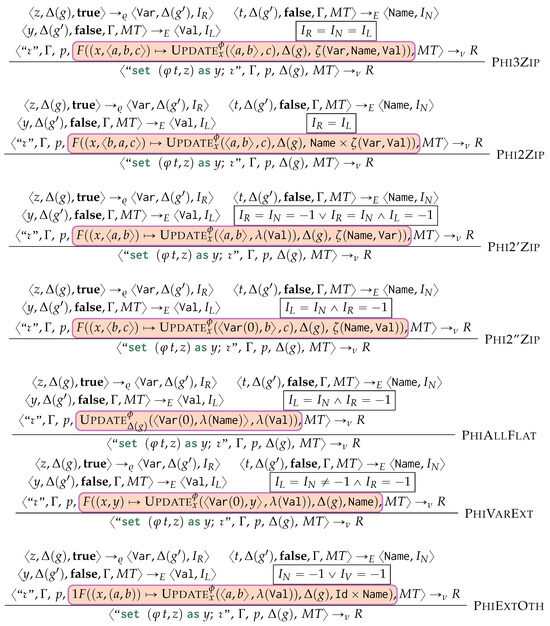

6. Generalised Graph Grammar

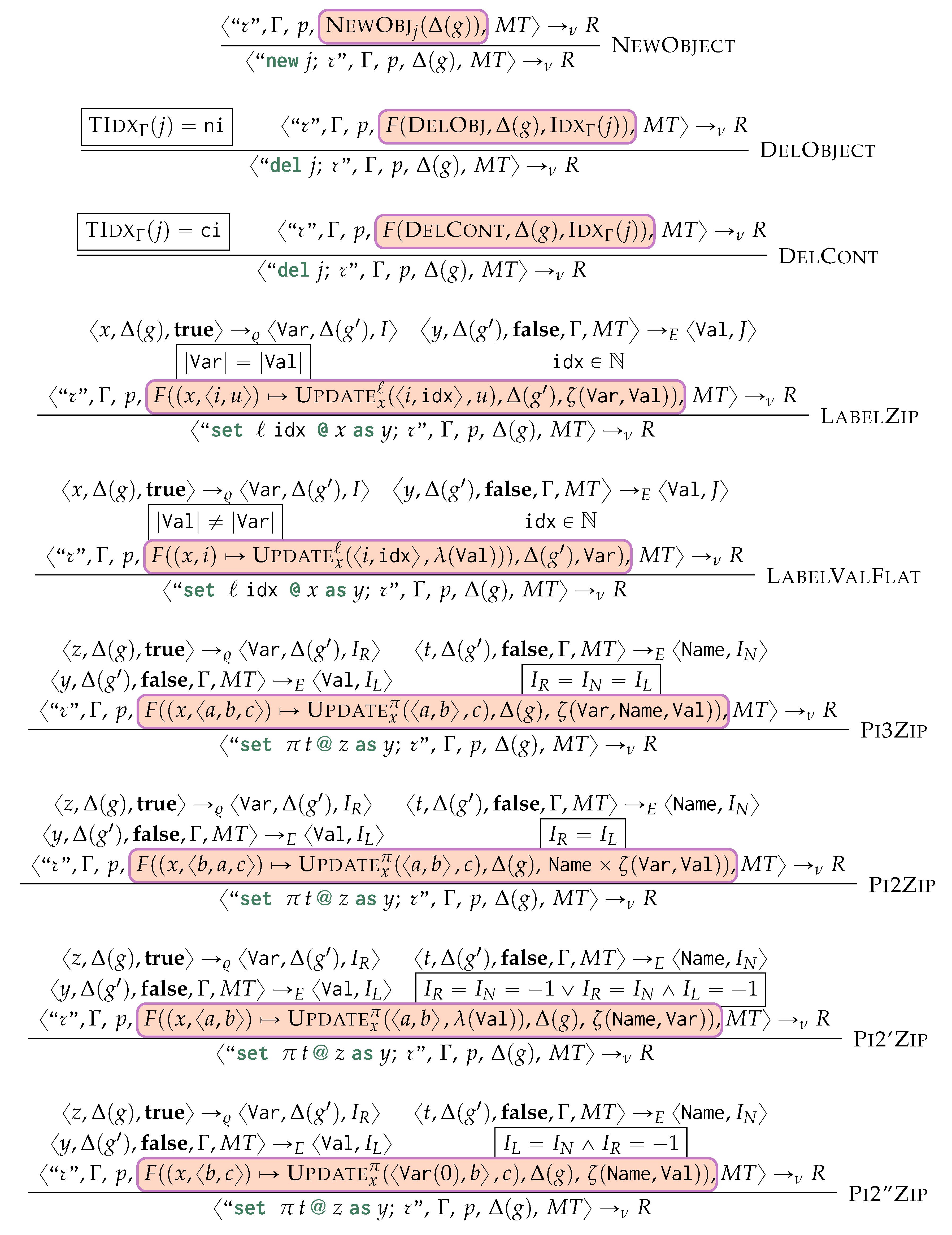

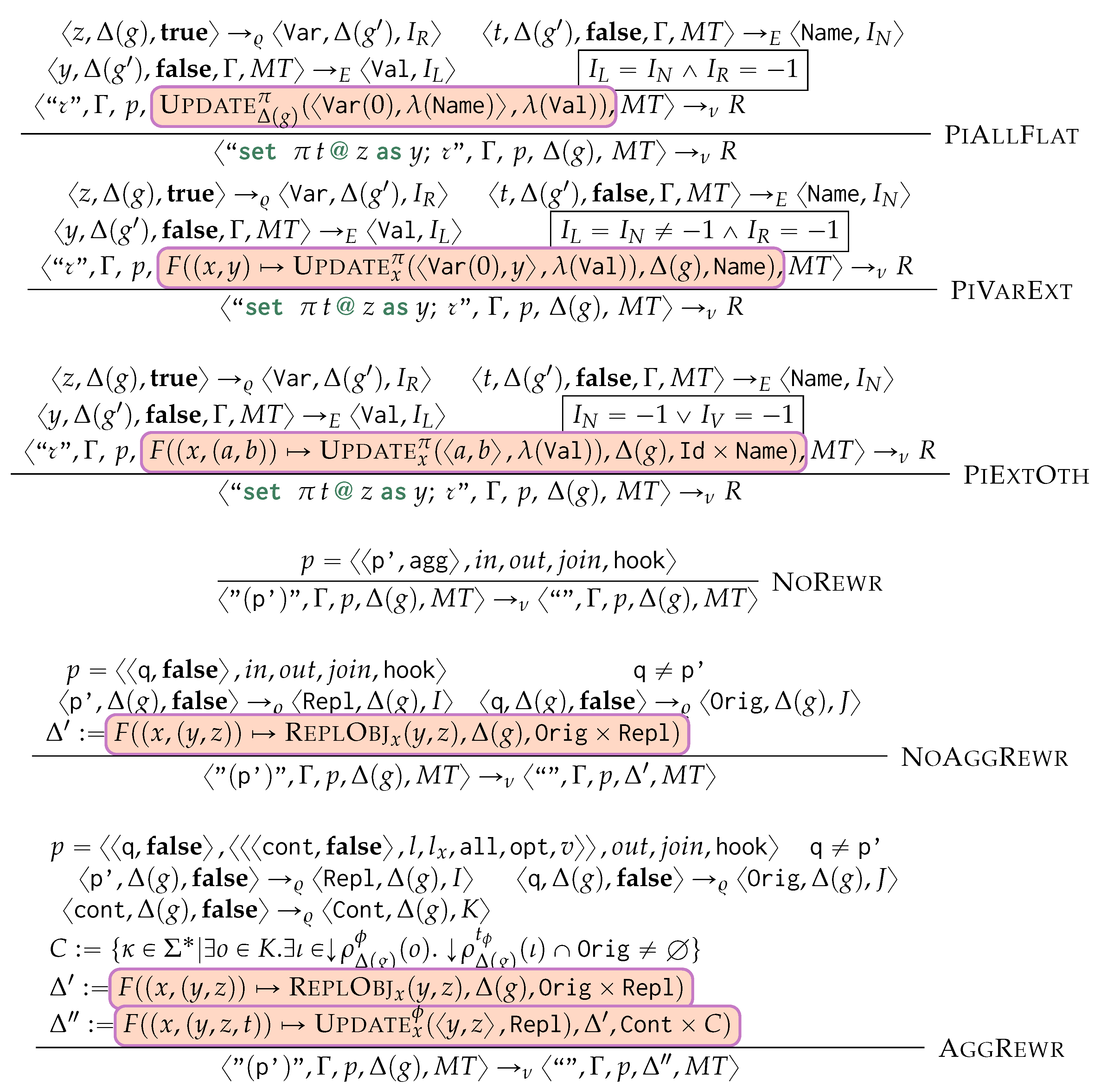

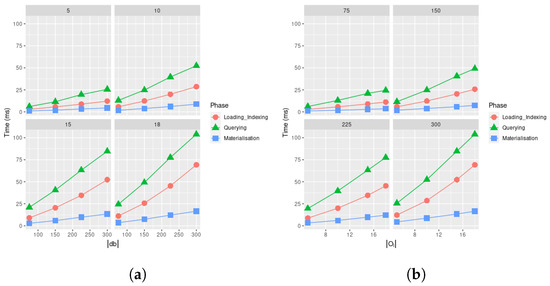

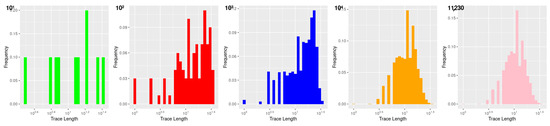

After a preliminary and example-driven representation of the proposed query language (Section 6.1), we characterise the semantics of the proposed query language in terms of procedural semantics being subsumed in Algorithm 4. This is defined by the following phases: after determining the order of application of the matching and rewriting rules (Line 2), we match and cache the traversal of each containment relationship to reduce the number of accesses to the physical model (Line 3), from which we then proceed to the instantiation of the morphisms, to produce the table (Line 4). This fulfills the matching phase. Finally, by visiting the objects from each GSM database in reverse topological order, we then access each morphism stored in for then applying (Line 5) the rewriting rules according to the sorting in Line 2. As this last phase produces the views for each GSM database we then materialise this view and store the resulting logical model on disk (Line 6). Each of the forthcoming sections discusses each of the aforementioned phases.

| Algorithm 4 Generalised Graph Grammar (gg) evaluation |

|

6.1. Syntax and Informal Semantics

We now discuss our novel’s proposed matching and rewriting language by taking inspiration from graph grammar. To achieve language declarativeness, we do not force the user to specify the order of application of the rules as in graph rewriting.

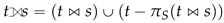

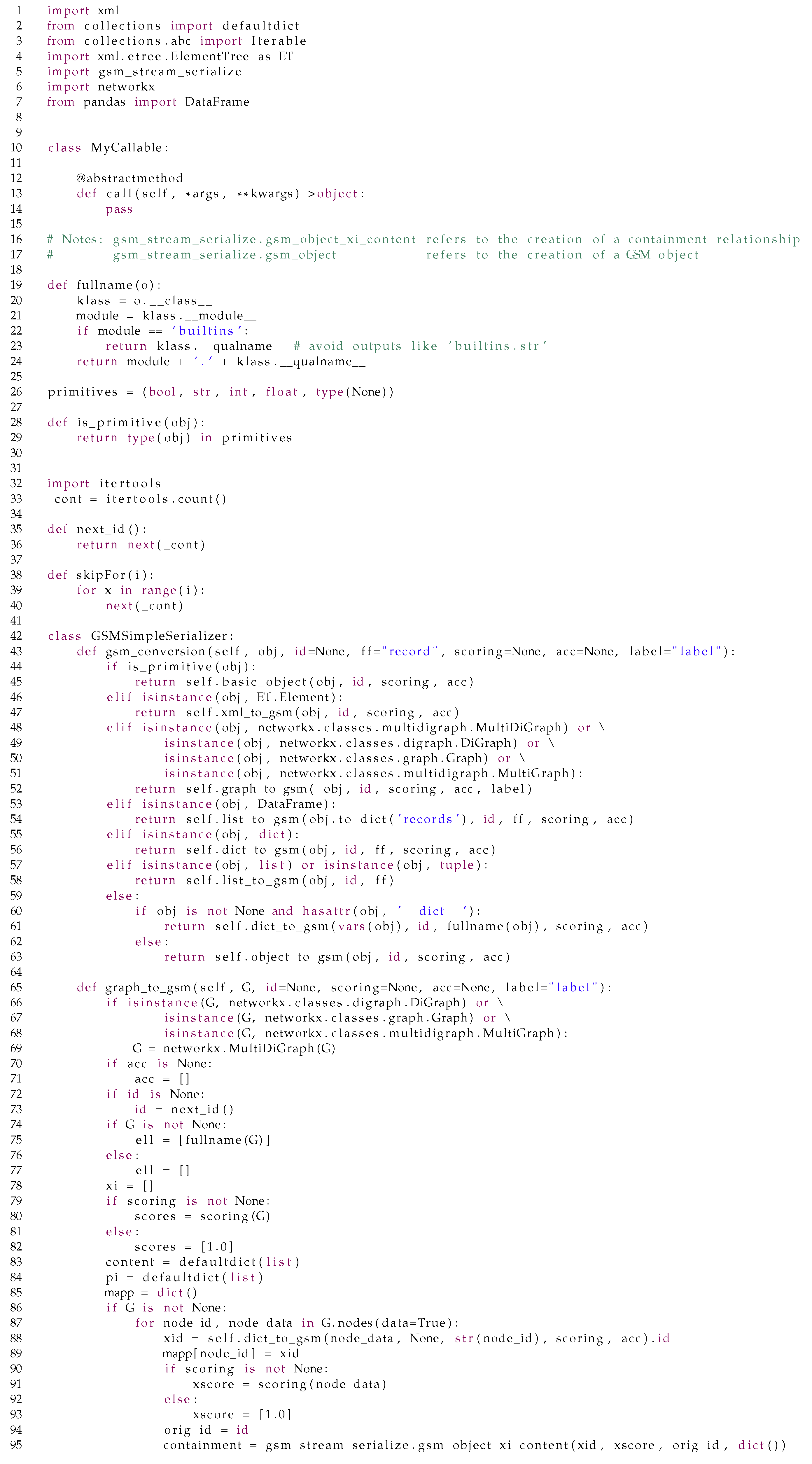

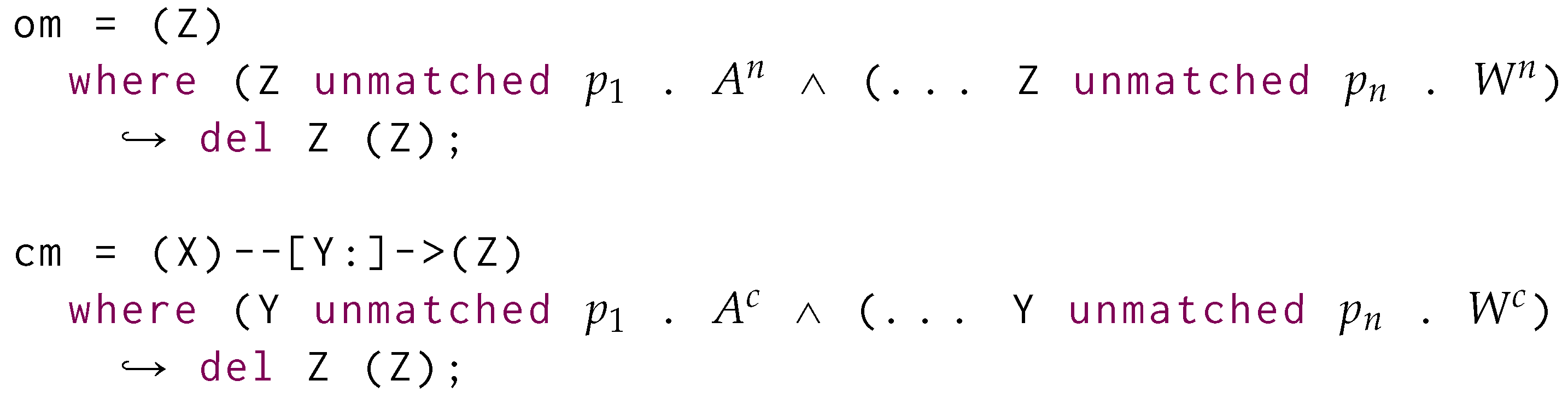

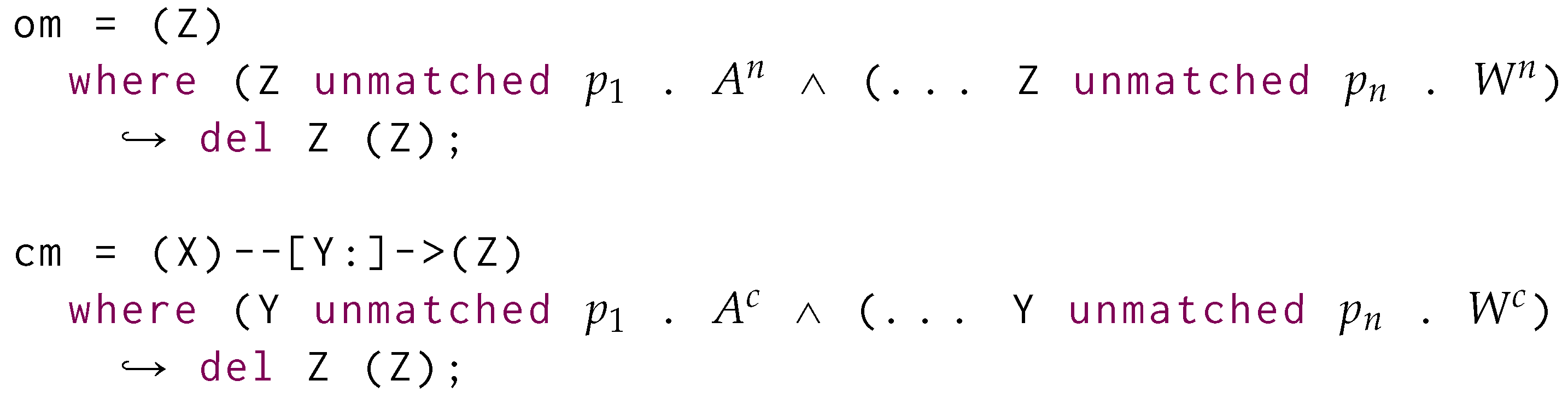

Figure 4 provides the Backus-Naur Form (BNF) [48] for the proposed query language for matching and rewriting object-oriented databases by extending the original definition of graph grammars. Each query (gg) is a list of semi-colon-separated rules (rule), where each of those describes a matching and rewriting rule, . For each uniquely identified rule (pt), we identify a match (obj cont+joining*) and an optional (?) rewrite (op*obj). Those are separated by an optional condition predicate (Appendix A.2 on page 43), providing the conditions upon which the rewriting needs to be applied to the database view, and ↪.

is characterised by one single entry-point similarly to GraphQL [49] as well as other navigational approaches to visiting graph-based data [50], thus defining the main GSM object through which we relativise the graphs’ structural changes or update around its neighbouring vertices, as defined by its ego-network cont of objects being either contained (- - clabel -> obj) or containing (<- clabel - - obj) the entry-point obj. While objects should be always referred to through variables, containment relationships might be optionally referred to through a variable. Edge traversal beyond the ego-net is expressed through additional edges (joining). We require that at least one of the edges should be a mandatory one. Differently from Cypher, we can match containments by providing more possible alternatives for the containment label rather than just considering one single alternative: this acts as the SPARQL’s union operator across differently matched edges, each for a distinct edge label. Please observe that this boils down to a potentially polynomial sub-problem of the usual subgraph isomorphism problem, being still in NP despite the results in Section 8 proposed for the present query language.

Up to this point, all these features are shared with graph query languages. We now start discussing features extending those: we generate nested embeddings by structurally grouping entry-point matches sharing the same containing vertex: this is achieved by specifying the need to group an object (≫) along one of its containment vertices via a containment relationship remarked with ∀. Last, we use return statements in the rewritings to specify which entry-point objects should be replaced by any other matched or newly created objects.

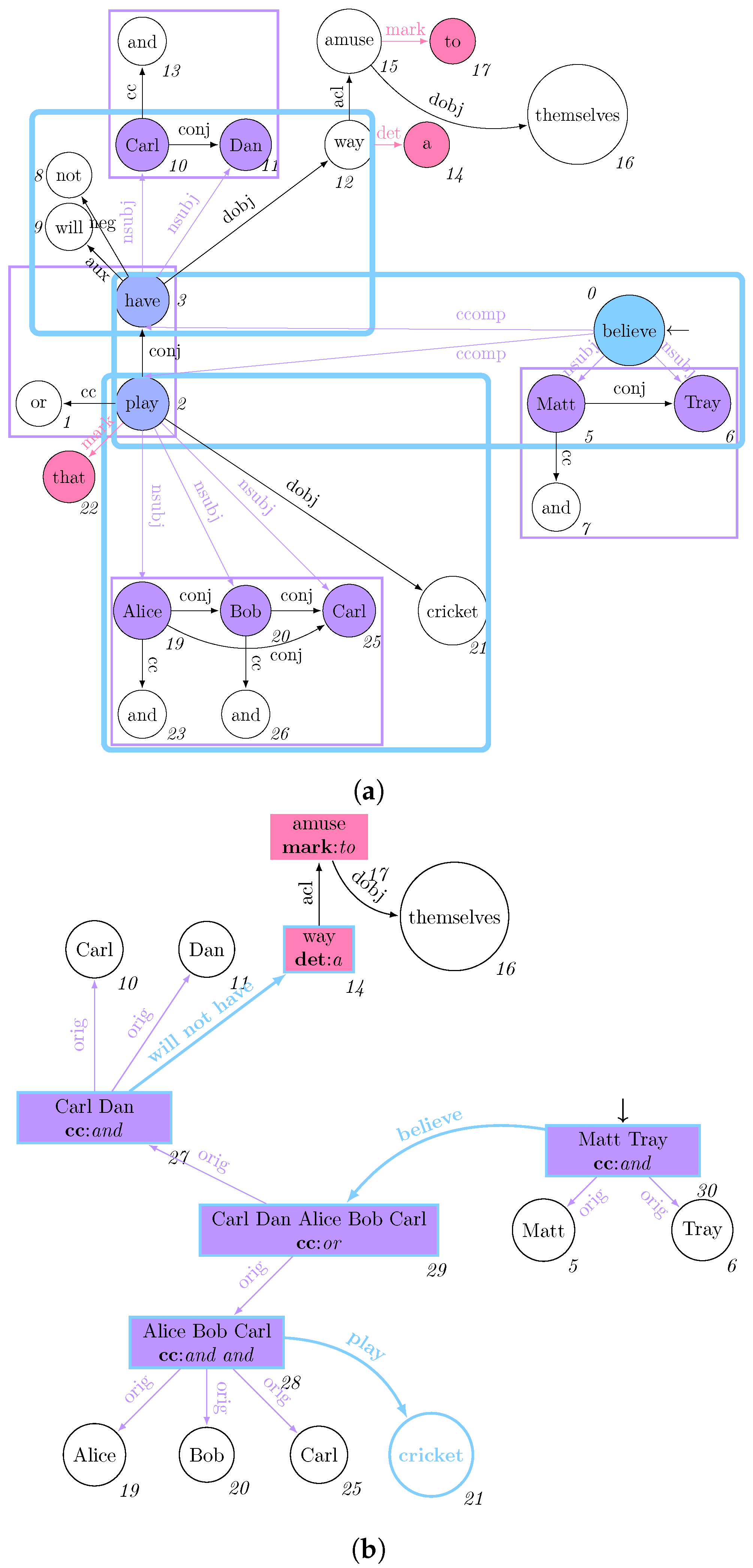

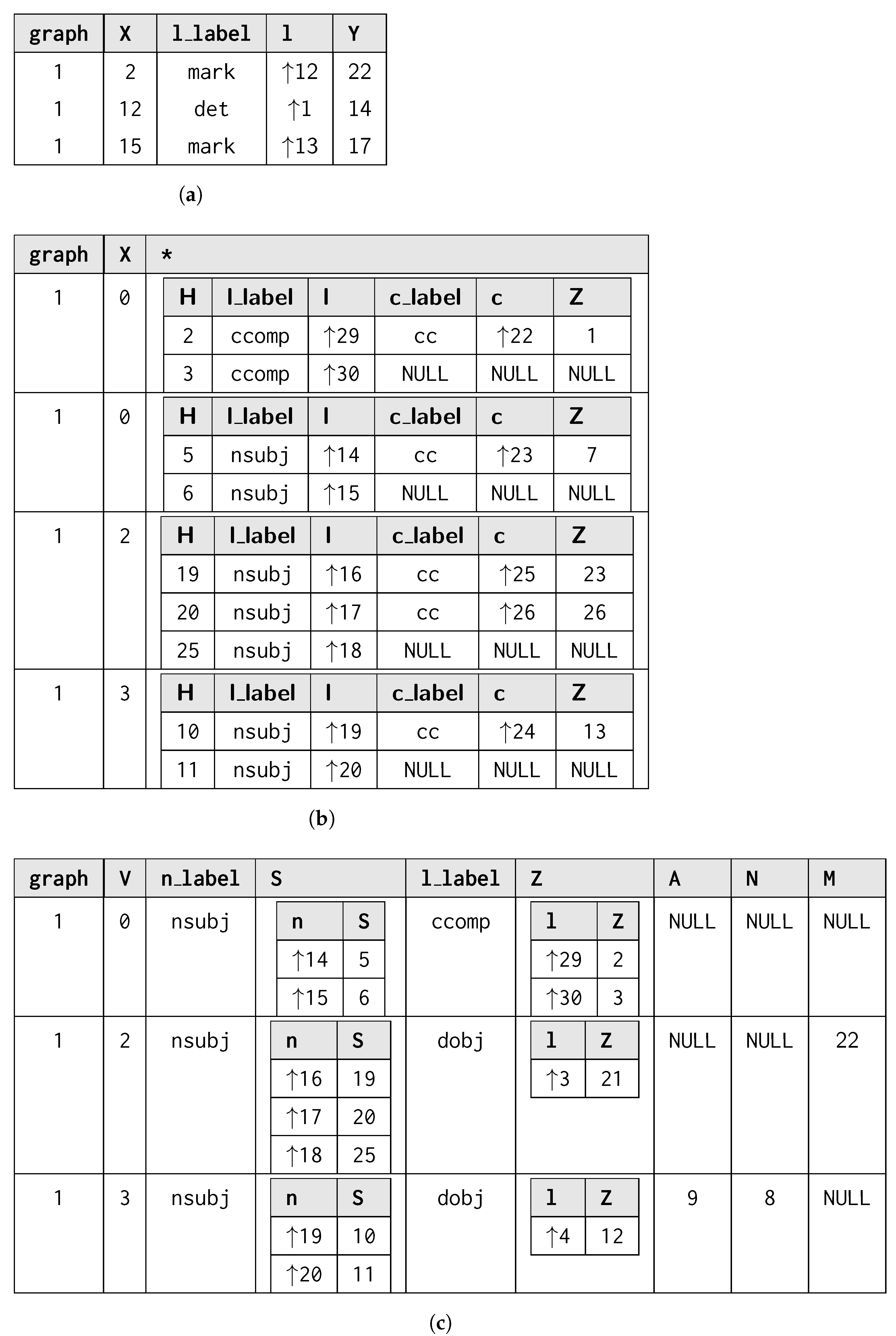

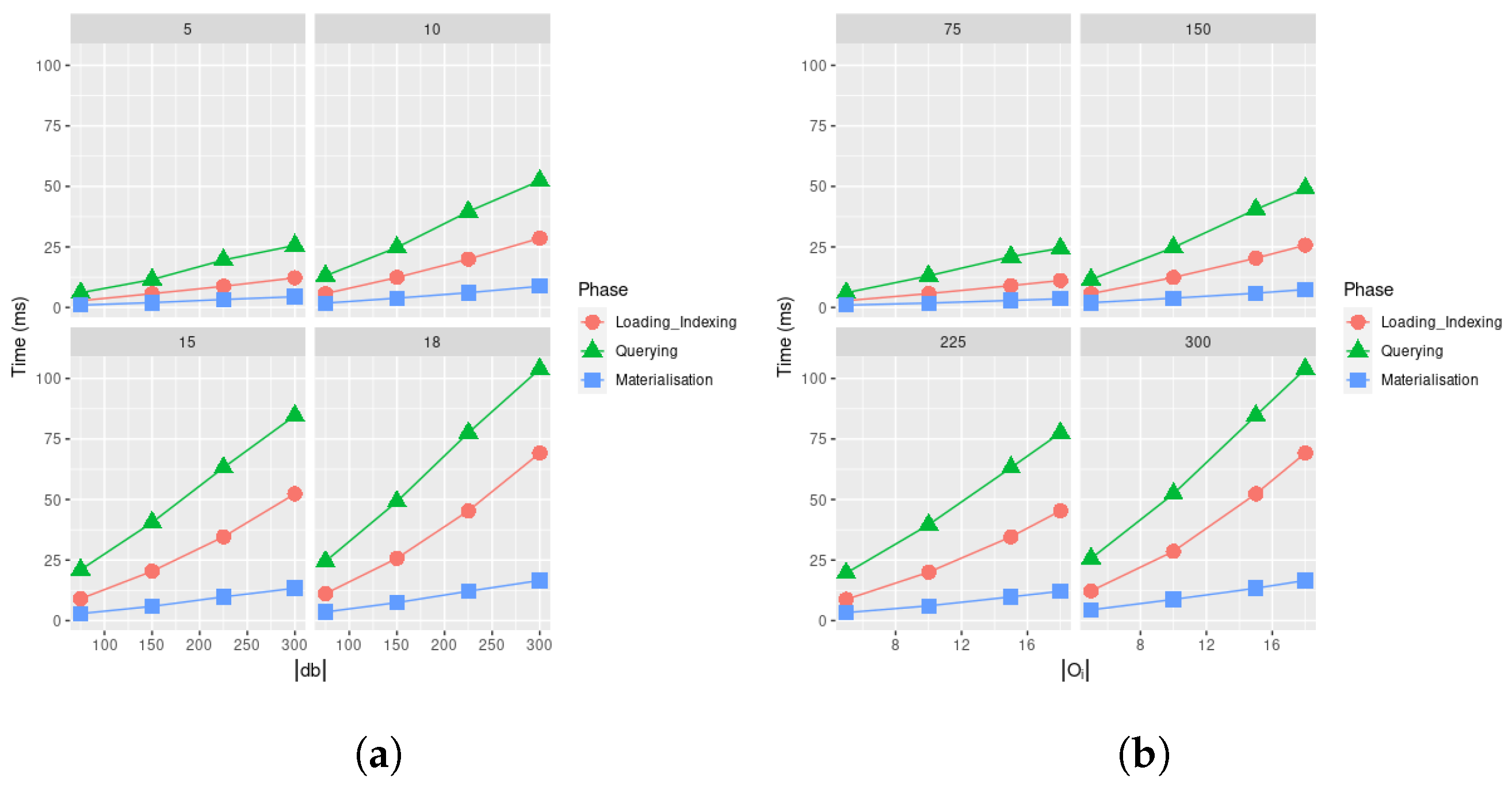

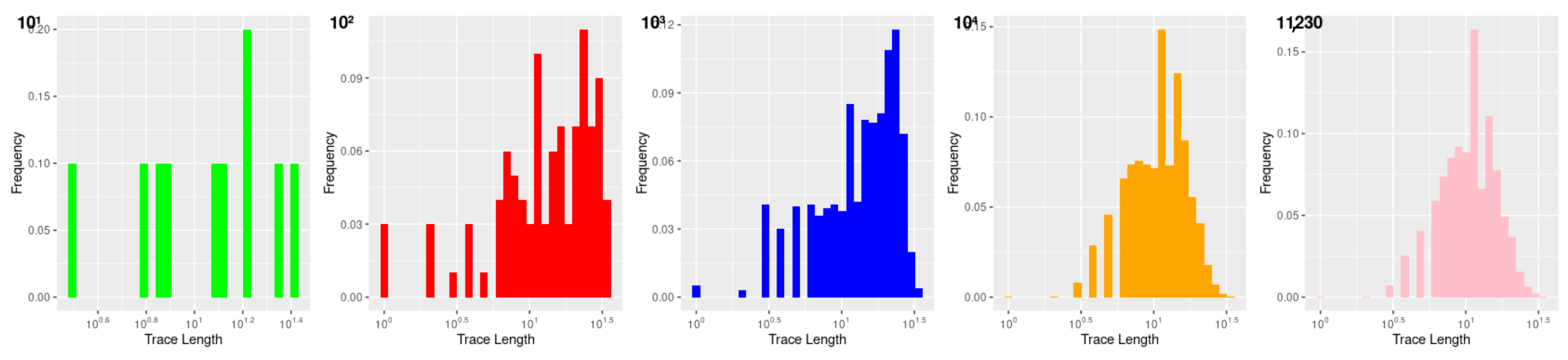

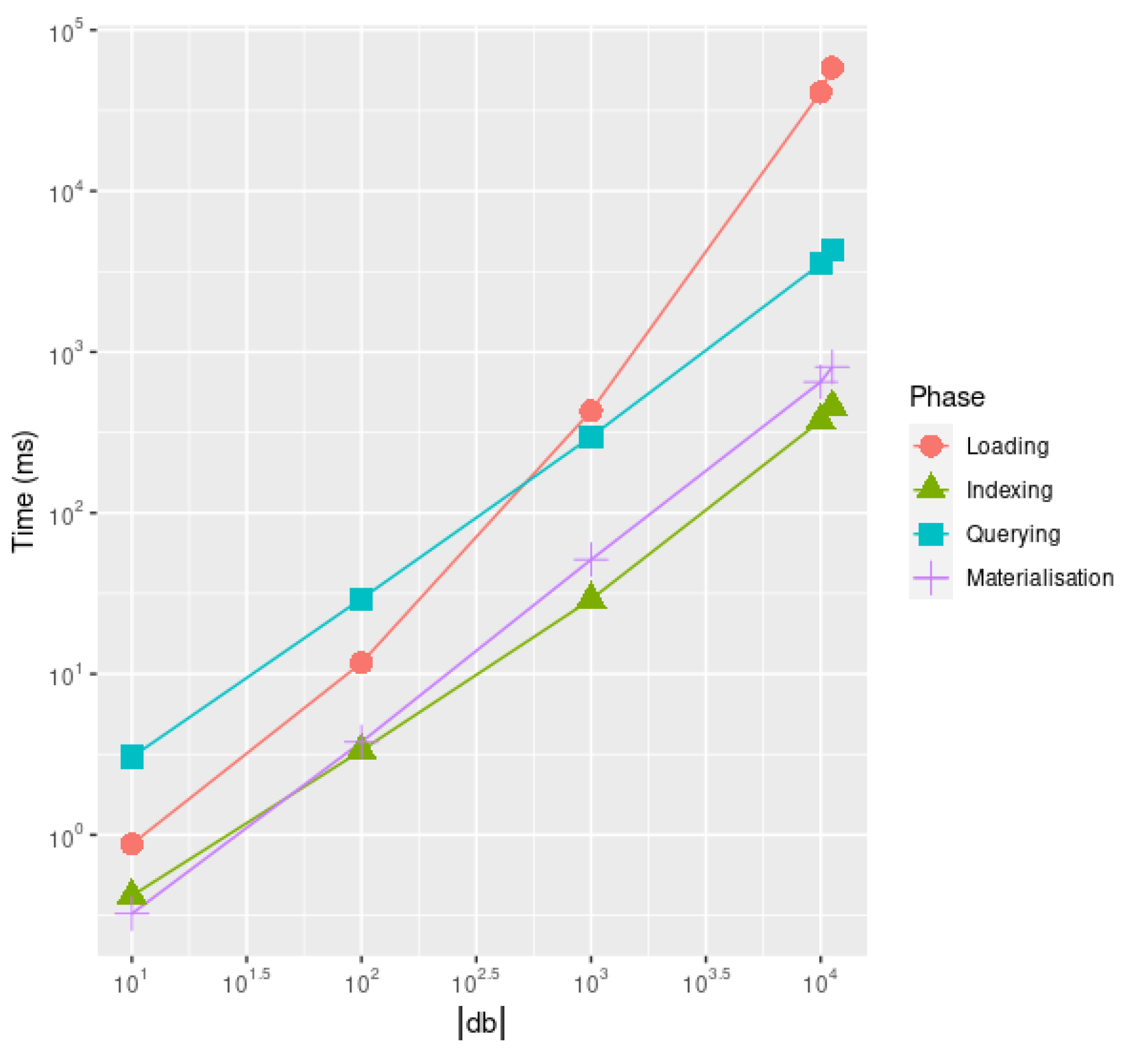

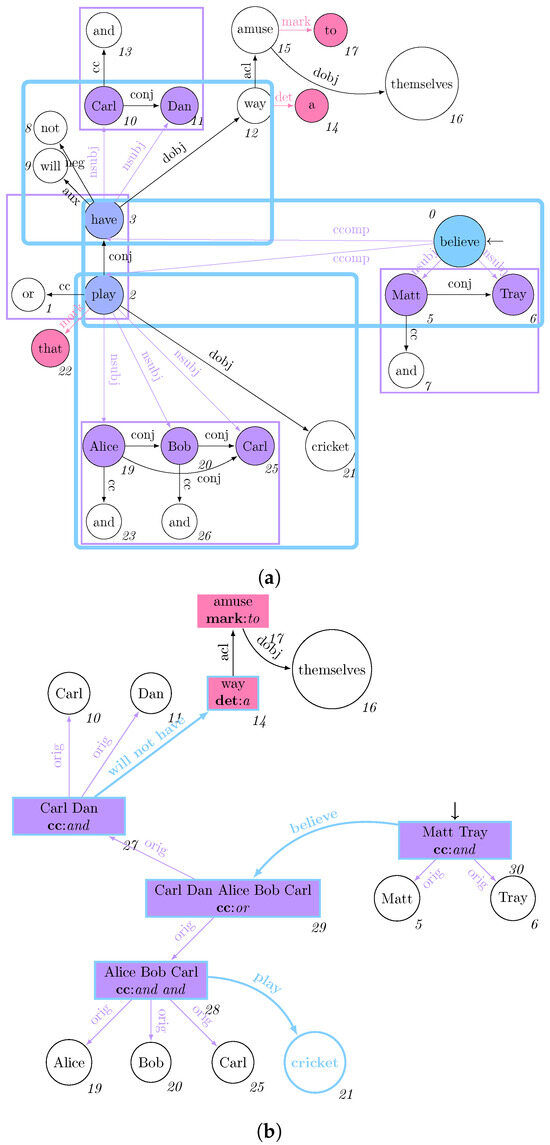

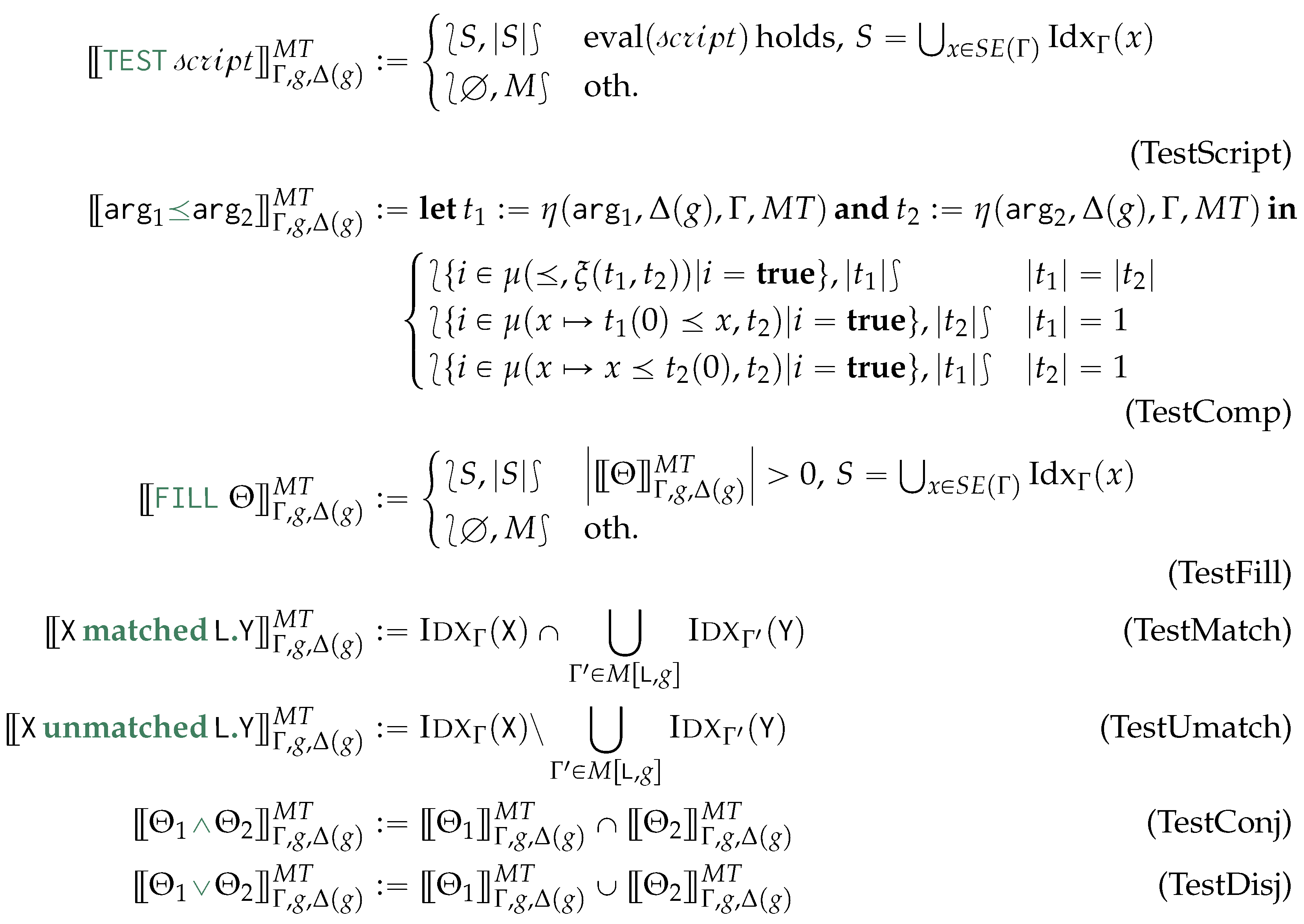

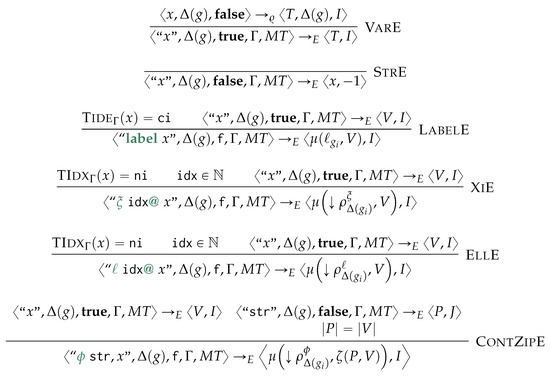

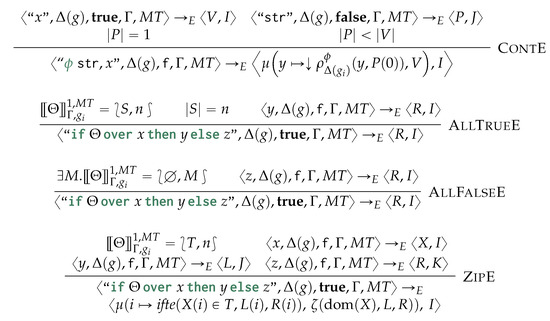

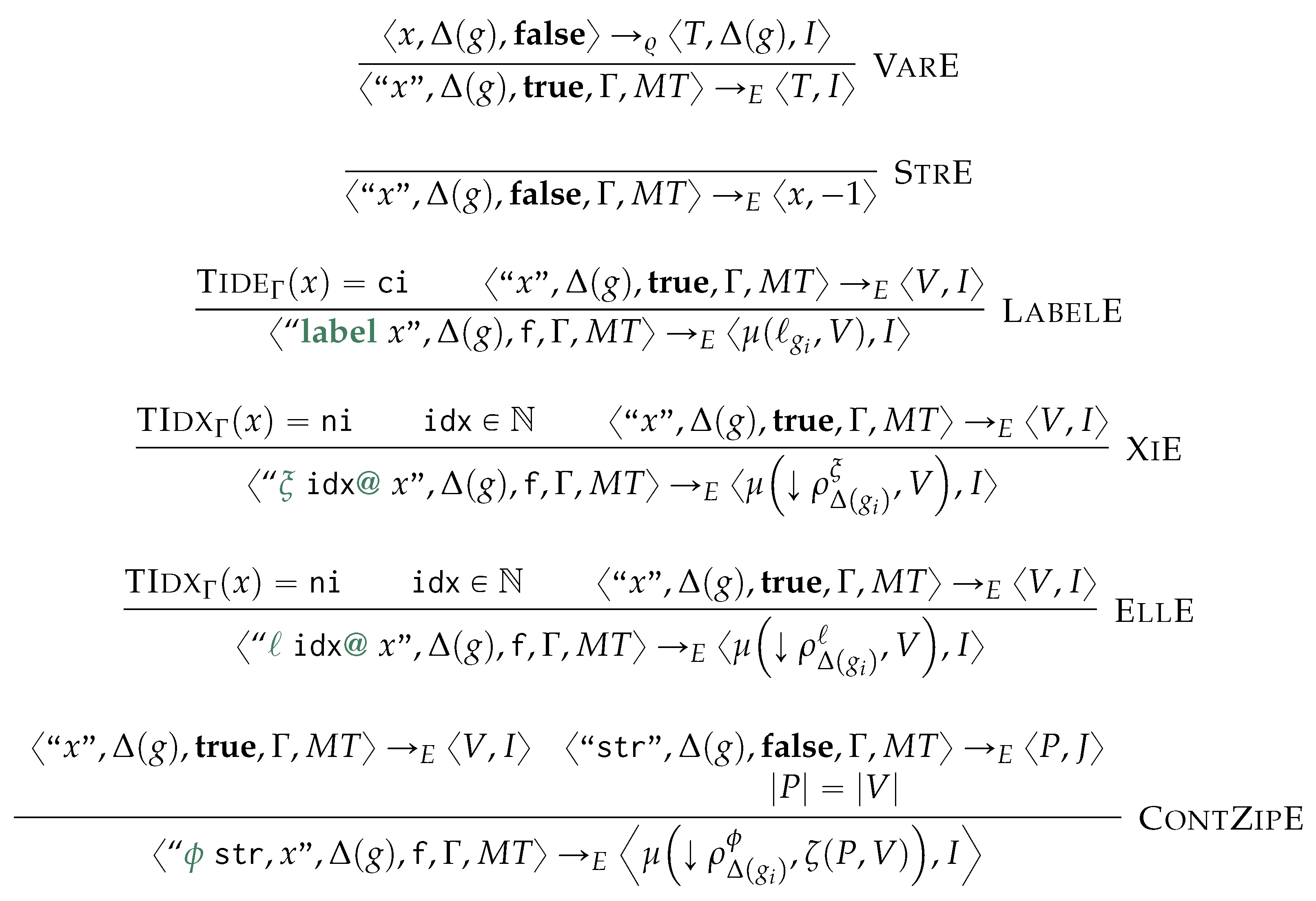

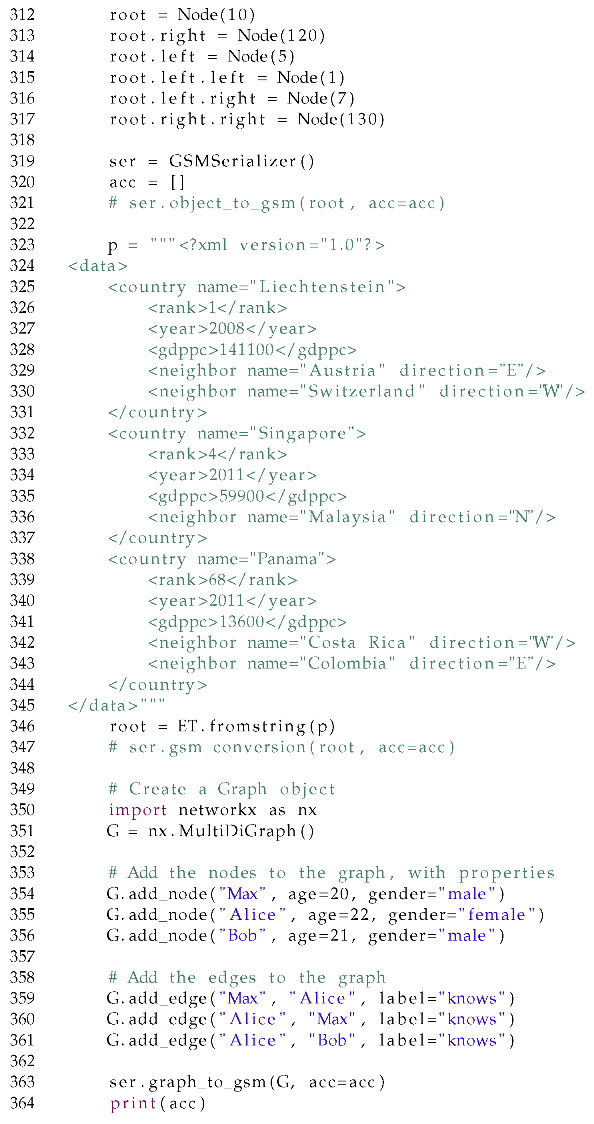

Example 1.