Abstract

The limited availability of thermal infrared (TIR) training samples leads to suboptimal target representation by convolutional feature extraction networks, which adversely impacts the accuracy of TIR target tracking methods. To address this issue, we propose an unsupervised cross-domain model (UCDT) for TIR tracking. Our approach leverages labeled training samples from the RGB domain (source domain) to train a general feature extraction network. We then employ a cross-domain model to adapt this network for effective target feature extraction in the TIR domain (target domain). This cross-domain strategy addresses the challenge of limited TIR training samples effectively. Additionally, we utilize an unsupervised learning technique to generate pseudo-labels for unlabeled training samples in the source domain, which helps overcome the limitations imposed by the scarcity of annotated training data. Extensive experiments demonstrate that our UCDT tracking method outperforms existing tracking approaches on the PTB-TIR and LSOTB-TIR benchmarks.

MSC:

68T01; 68T45

1. Introduction

Unlike RGB tracking sequences, thermal infrared (TIR) tracking sequences carry no color information, but they are not affected by illumination changes. Therefore, TIR target tracking can be applied to intelligent transportation, video surveillance, sea rescue, and other scenarios at night or in rainy or foggy weather. However, the scarcity of TIR training samples leaves the trained feature extraction network with an inferior target representation, which significantly restricts the performance of TIR target tracking methods. Although several approaches address insufficient training data, such as few-shot learning [1,2], domain adaptation [3,4], and domain transfer [5,6], there is still considerable room for improvement in TIR target tracking tasks.

Since training samples for RGB target tracking are plentiful and easy to obtain, RGB target tracking has been studied extensively for several decades. There are many excellent RGB target tracking frameworks, e.g., the discriminative correlation filter (DCF) based tracking frameworks [7,8,9] and the deep learning-based tracking frameworks [10,11,12,13]. DCF-based trackers usually train a correlation filter to model the relationship between the target feature and its corresponding label [14,15,16]. Deep learning-based trackers need abundant training samples to train a convolutional feature extraction network that can represent the target more comprehensively [17,18,19]. In [20], Algabri and Choi propose a robust recognizer combined with deep learning techniques to adapt to varying ambient lighting in the scene, and employ an online update strategy for an enhanced character recognition model to address the drift caused by changes in the target character's appearance during tracking.

Inspired by the success of deep learning-based RGB target tracking methods, some TIR target tracking methods try to improve their performance by leveraging an off-the-shelf network to extract target features [21,22,23]. The STAMT [21] tracking method leverages a pre-trained convolutional feature extraction network to extract deep features for a better target representation. The HSSNet [22] tracker follows the Siamese architecture [24,25,26,27], which views the tracking task as similarity template matching, and uses a Siamese convolutional neural network with multiple convolutional layers to obtain the semantic and spatial features of the TIR target. These TIR trackers achieve some performance improvements by using an off-the-shelf network for feature extraction; however, their tracking performance is still significantly limited because this network is not trained for the TIR target-tracking task [21,28,29]. To adapt the feature extraction network to the TIR tracking task, it is necessary to train it on TIR training samples [30,31,32]. However, the shortage of TIR training samples makes it difficult to train such a convolutional feature extraction network [33,34].

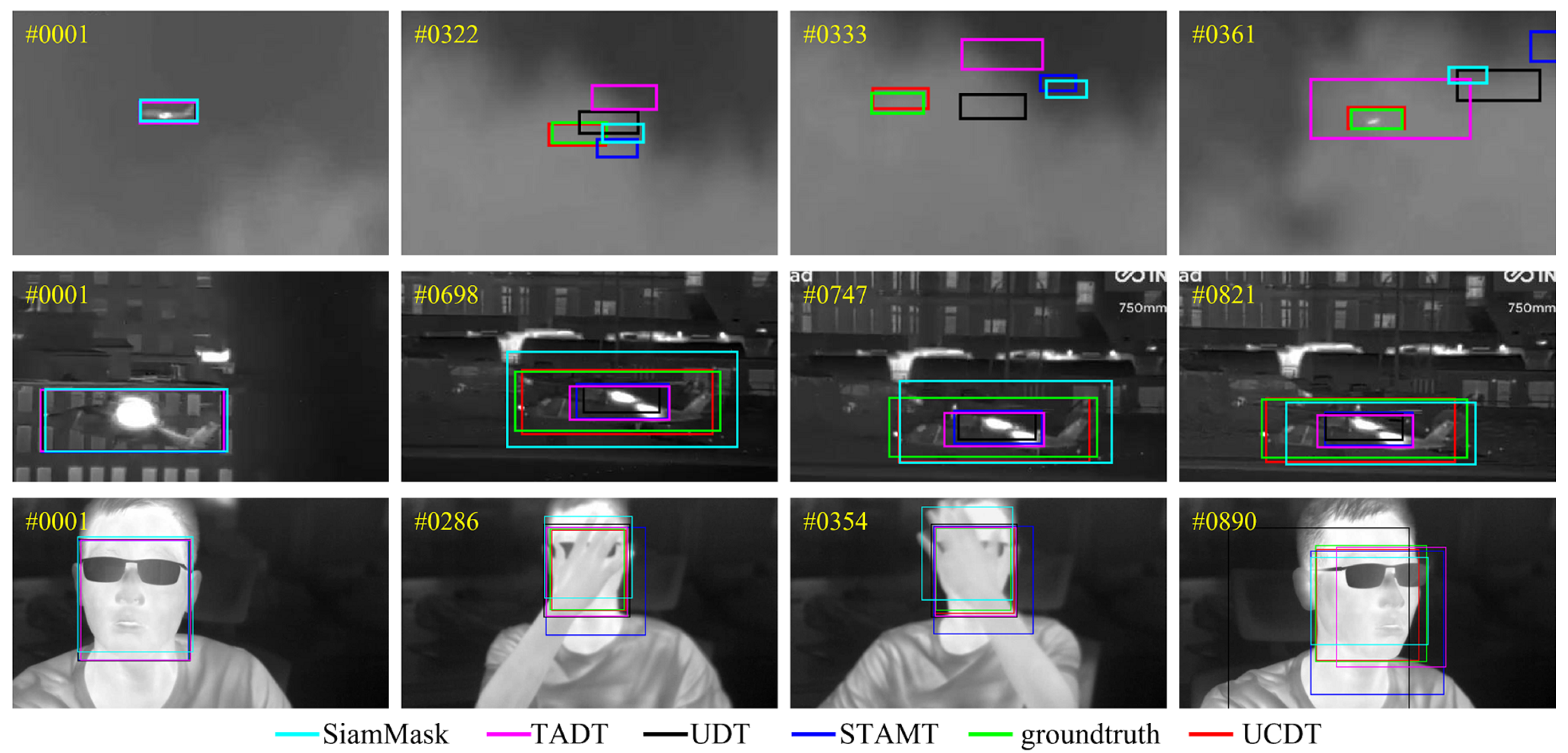

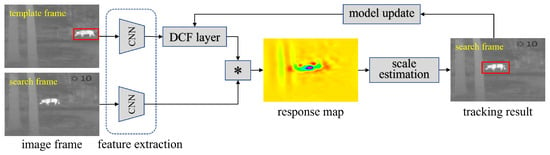

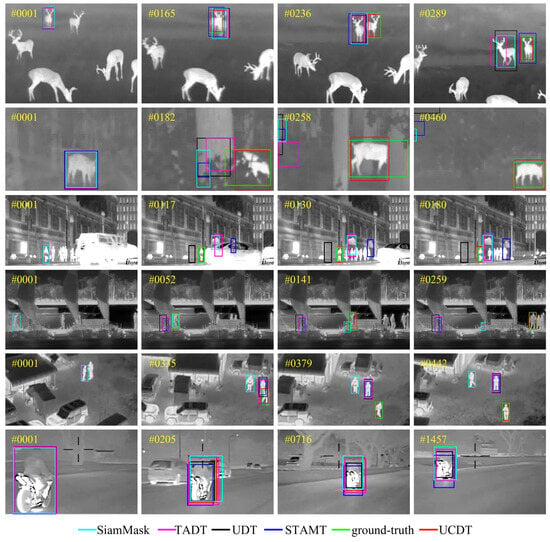

To resolve the aforementioned drawback, we propose an unsupervised cross-domain model (UCDT) for the TIR tracking task. Although TIR training samples are insufficient, RGB training samples are numerous and easy to obtain. Therefore, we propose a cross-domain model that transfers the convolutional feature extraction network trained on the RGB domain (source domain) to the TIR domain (target domain), so that a network trained on abundant RGB training samples can be used for target representation in the TIR domain. Because annotating such abundant RGB training samples is very time-consuming and expensive, we adopt an unsupervised learning-based method to generate pseudo-labels for the unlabeled training samples. This pseudo-label generation method effectively reduces the cost of sample annotation, so that abundant training samples can be used to train the feature extraction network. The trained network can extract comprehensive target representation features, which helps the tracker obtain accurate tracking results. Figure 1 compares our UCDT tracker with several other trackers in some complex TIR tracking scenarios. The tracking results of UCDT are close to the ground-truth labels of the target, which shows the effectiveness of our unsupervised cross-domain model in the TIR target-tracking task.

Figure 1.

Tracking examples of our UCDT tracker and some other state-of-the-art trackers.

The following are the main contributions of this work:

- We propose an unsupervised cross-domain model for the TIR tracking task.

- The cross-domain model transfers the convolutional feature extraction network trained in the source RGB domain to the target TIR domain for target feature extraction, which effectively alleviates the poor representation that results from insufficient training samples in the target TIR domain.

- To give the trained convolutional feature extraction network a strong target representation capability, an unsupervised learning method is adopted to generate pseudo-labels for the unlabeled training samples in the source RGB domain, which are then used to train the network.

- Extensive experimental results show the competitiveness of the proposed tracking method compared with other tracking methods on the PTB-TIR [35] and the LSOTB-TIR [36] benchmarks.

2. Related Works

Deep Thermal Infrared Tracking: Deep learning has been widely used in image processing tasks because of its powerful representation ability [29,37,38], and deep learning-based trackers have attracted increasing research attention for the same reason [39,40,41]. Ma et al. [39] present a coarse-to-fine tracking model that combines multi-level convolutional features within the DCF-based framework and obtains more accurate results than DCF-based trackers that use only hand-crafted features. The TADT [40] tracker uses two auxiliary tasks to select target-aware features online to achieve a more compact match between the template and the candidate. In [41], Gundogdu et al. propose classifier-based deep convolutional features combined with the DCF-based framework for the TIR tracking task. Liu et al. [42] present an integrated TIR tracker that uses a pre-trained CNN model to extract multiple convolutional features of the target for a relatively complete target representation. To mitigate the limitations of features from pre-trained networks, the MMNet [28] tracking method proposes a multi-task framework for the TIR tracking task. Although these trackers have obtained some promising TIR tracking results, there is still considerable room for improvement before their tracking performance meets the requirements of real scenarios.

Domain Gap of RGB Images and TIR Images: Deep learning-based trackers require a large amount of labeled data for their model training [43,44,45]. The RGB source domain has relatively sufficient training data, while the TIR target domain has very limited data [31,46,47,48]. Many TIR target trackers use pre-trained RGB-based feature extraction networks, but TIR images capture temperature information through thermal infrared radiation, which is different from RGB’s visible light [21,32,33]. This difference leads to a domain gap, making it challenging to transfer models from RGB to TIR. RGB images are sensitive to lighting changes, while TIR images work well in varying light conditions, including complete darkness. Consequently, applying RGB-trained models to TIR data can lead to performance degradation due to differences in feature distribution [28,29,49]. To bridge this gap, domain adaptation techniques are needed to adapt RGB models to TIR features or retrain models specifically for TIR [50,51]. The limited TIR training samples further restrict tracking accuracy. Utilizing an unsupervised cross-domain model to transfer RGB-trained convolutional networks to TIR can significantly improve tracking accuracy in the TIR domain.

Unsupervised Feature Representation: The target feature representation is an objective way of quantifying targets of interest. Typically, researchers use hand-crafted features (e.g., HOG, SIFT) to measure targets of interest in the image. By extracting a sequence of features from the target image, the target can be represented as a collection of values. These feature representations can be applied to a variety of tasks, such as image segmentation [52,53] or object classification [54,55]. Because of the limitations of hand-crafted features, visual tasks based on them cannot achieve ideal performance. In most computer vision tasks, convolutional neural networks (CNNs) have obtained the best results [56,57]. CNN-learned features are thought to be more sensitive to meaningful visual content than hand-crafted features, making them a viable choice for feature-based image analysis. However, learning features with a CNN is challenging because the learned features depend heavily on the training task [58,59]. An unsupervised approach that learns high-quality features without labeled training samples removes the bottleneck of time-consuming and expensive sample labeling [60,61,62,63,64]. In [60], an unsupervised learning-based UDT tracker is proposed to reduce the need for annotated training samples in model training. Sun et al. [61] use a cross-modal distillation method to learn the representation of the TIR modality from the RGB modality on abundant unannotated paired RGB-TIR samples, which effectively improves the tracking accuracy of the TIR tracker. Wang et al. [63] propose to use tracking as a proxy task for computer vision systems to learn visual representations for video-related tasks. The above studies use various pretext tasks constructed from video, including time-sequence verification [60], displacement prediction [61], and background discrimination [64].

In this work, we mainly use a multi-cycle consistency loss to perform forward-backward tracking in the deep DCF-based tracking framework. This generates pseudo-labels for the unlabeled training samples in the source RGB domain for network training, which reduces the need for sample annotation while preserving the representation ability of the trained convolutional feature extraction network.

3. The Proposed UCDT Tracker

We propose a tracker based on an unsupervised cross-domain model (UCDT) for more accurate TIR target tracking. First, we briefly introduce the deep correlation tracking framework, which includes a deep convolutional neural network for target feature extraction and discriminative correlation filters for generating the target response map. Next, we propose a cross-domain model that transfers the feature extraction network trained in the source RGB domain to the target TIR domain for target feature extraction, which improves the poor target representation in the TIR domain. To reduce the cost of labeling training samples, we adopt an unsupervised learning method to generate pseudo-labels for the unlabeled training samples in the source RGB domain and use them to train the convolutional feature extraction network.

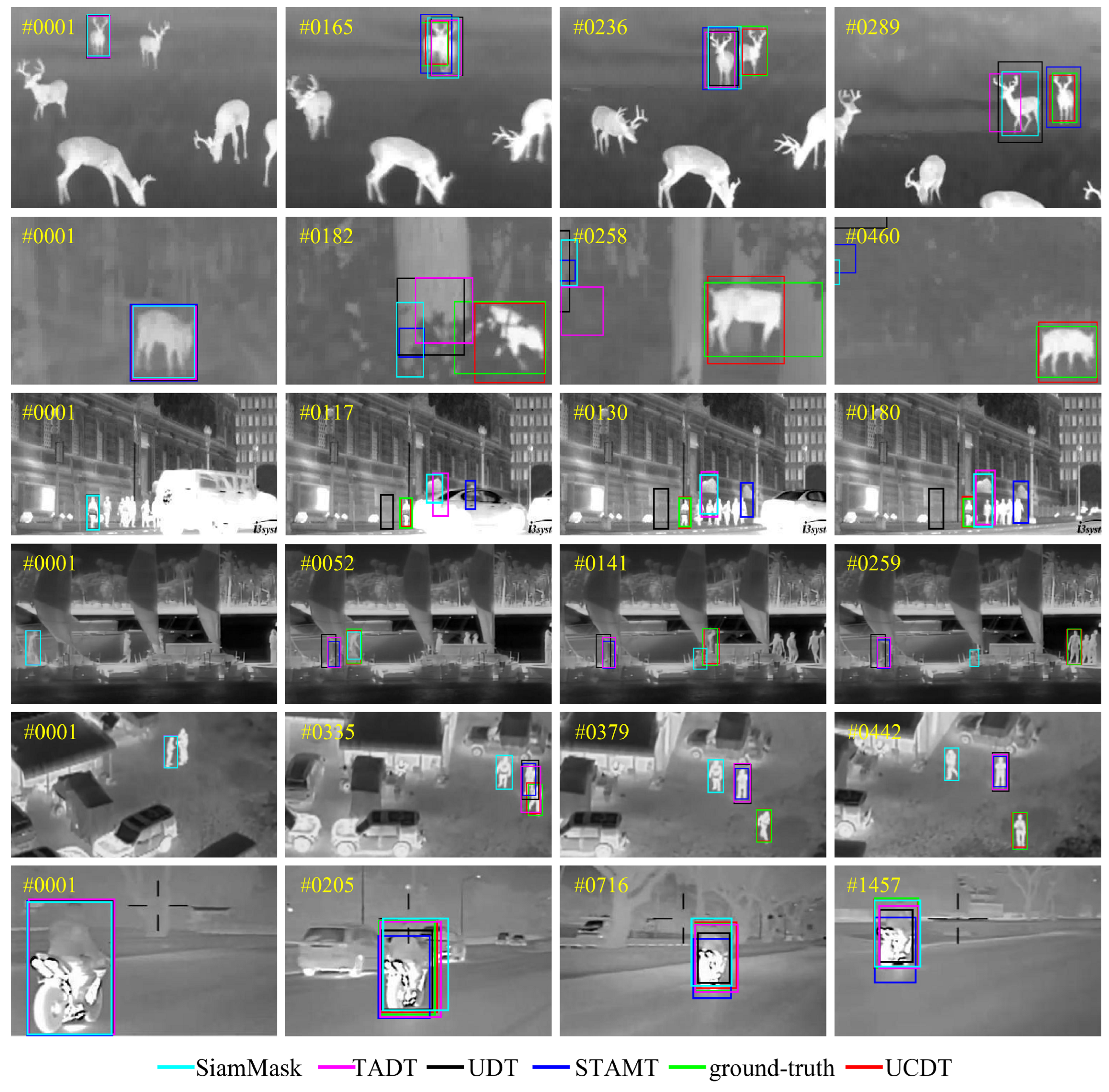

3.1. Deep Correlation Tracking Framework

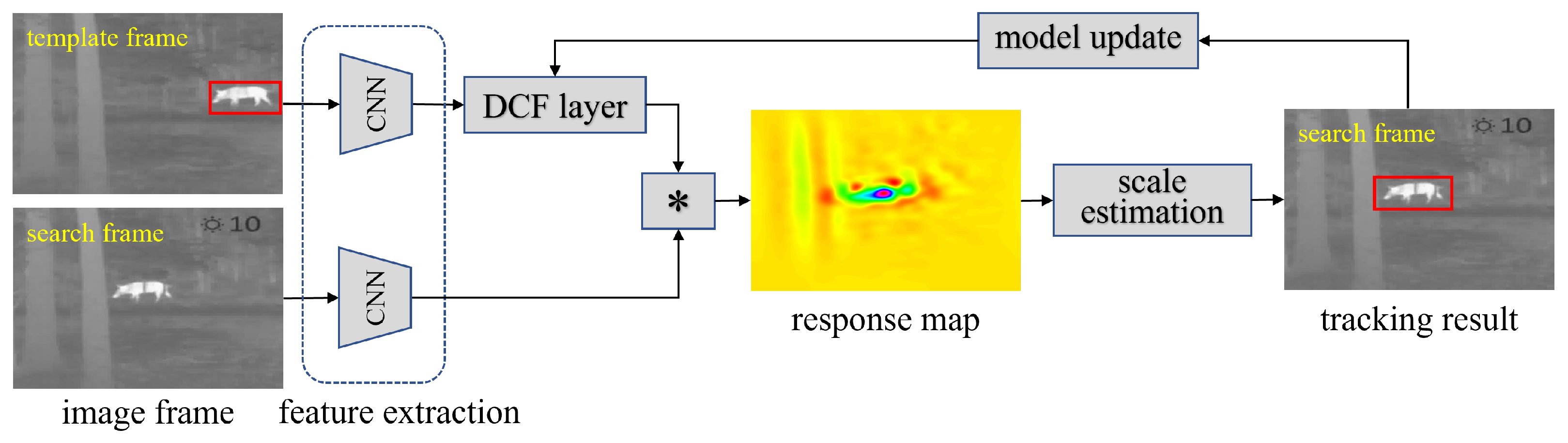

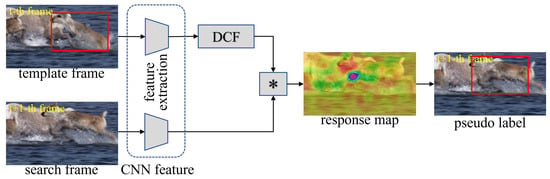

The deep correlation tracking framework includes a deep CNN model for target feature extraction and a discriminative correlation filter (DCF) layer for the target response map generation. Figure 2 shows the TIR target tracking process under the deep correlation tracking framework. In the first frame, the target of interest is given as the template, and the CNN is used to extract features and train the parameters of the DCF layer.

Figure 2.

The general TIR target tracking process under the deep correlation tracking framework. The same CNN is used to extract features from the template frame and search frame.

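The DCF-based tracker trains a classifier to separate the target of interest from the background [9,14]. The DCF layer can be expressed as:

$$W = \arg\min_{W} \left\| W \ast X - Y \right\|_2^2 + \lambda \left\| W \right\|_2^2, \tag{1}$$

where $W$ denotes the trained parameters of the DCF layer in the deep correlation tracking framework, $X$ denotes the training samples, $Y$ denotes the corresponding labels, $\ast$ denotes the convolution operation, and $\lambda$ is a regularization parameter. By using the Fourier transform, the convolution operation in Equation (1) is transformed into element-wise multiplication, which reduces the computational complexity and improves the tracking speed. The parameters $W$ of the DCF layer can be obtained as follows:

$$W = \mathcal{F}^{-1}\left( \frac{\overline{\hat{X}} \odot \hat{Y}}{\overline{\hat{X}} \odot \hat{X} + \lambda} \right), \tag{2}$$

where $\mathcal{F}$ is the Fourier transform and $\mathcal{F}^{-1}$ is its inverse, $\hat{X} = \mathcal{F}(X)$ is the training samples in the Fourier domain, $\hat{Y} = \mathcal{F}(Y)$ is the corresponding labels in the Fourier domain, $\overline{\hat{X}}$ is the complex conjugate of $\hat{X}$, $\odot$ denotes element-wise multiplication, and $\lambda$ is a regularization parameter. In the subsequent search frames, the CNN is used to extract features of the search area, denoted $S$, which are convolved with the trained parameters to obtain the response map $R$ (with $\hat{W}$ and $\hat{S}$ denoting $W$ and $S$ in the Fourier domain):

$$R = W \ast S = \mathcal{F}^{-1}\left( \hat{W} \odot \hat{S} \right). \tag{3}$$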

The center position of the target is determined by finding the position of the maximum point in the response map, and then the tracking result on the search frame can be obtained by using a scale estimation strategy [16,18].
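As an illustration of the DCF training and detection steps, the following NumPy sketch solves the regularized filter in closed form in the Fourier domain and locates the target at the peak of the response map. It is a minimal single-channel sketch under stated assumptions, not the implementation used in this work: the function names `train_dcf`, `respond`, and `gaussian_label`, the Gaussian label shape, and the value of `lam` are hypothetical, and random values stand in for CNN features.

```python
import numpy as np


def gaussian_label(shape, sigma=2.0):
    """Gaussian-shaped label centered in the patch (assumed label shape)."""
    h, w = shape
    ys, xs = np.mgrid[0:h, 0:w]
    return np.exp(-((ys - h // 2) ** 2 + (xs - w // 2) ** 2) / (2 * sigma ** 2))


def train_dcf(x, y, lam=1e-4):
    """Closed-form DCF parameters in the Fourier domain for one feature channel."""
    x_hat = np.fft.fft2(x)
    y_hat = np.fft.fft2(y)
    # Element-wise ridge-regression solution: conj(X) * Y / (conj(X) * X + lambda).
    return np.conj(x_hat) * y_hat / (np.conj(x_hat) * x_hat + lam)


def respond(w_hat, s):
    """Response map: element-wise product in the Fourier domain, then inverse FFT."""
    return np.real(np.fft.ifft2(w_hat * np.fft.fft2(s)))


rng = np.random.default_rng(0)
template = rng.standard_normal((32, 32))   # stand-in for template features
label = gaussian_label(template.shape)     # label peak at the patch center (16, 16)
w_hat = train_dcf(template, label)

# The search patch is the template shifted circularly by (3, -5) pixels.
search = np.roll(template, shift=(3, -5), axis=(0, 1))
response = respond(w_hat, search)
peak = tuple(int(i) for i in np.unravel_index(np.argmax(response), response.shape))
```

Because the search patch is a circular shift of the template, the response peak moves away from the label center by the same offset, which is how the DCF layer recovers the target displacement between frames.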

3.2. Cross-Domain Model

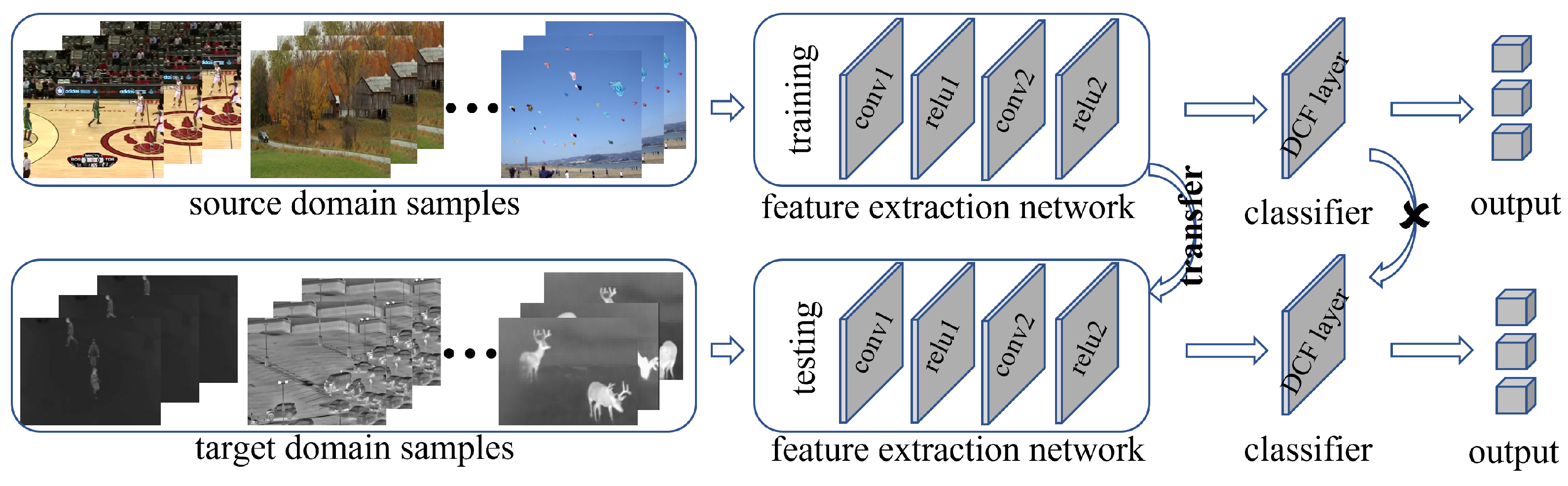

The cross-domain model focuses on improving model performance in the target domain by leveraging labeled data from the source domain. We give the following definitions:

(1) The source domain includes a feature space $\mathcal{X}_s$ and a label space $\mathcal{Y}_s$, along with the sample set $D_s = \{(x_i^s, y_i^s)\}_{i=1}^{n_s}$. In our unsupervised cross-domain model, the labels of the samples in the source domain are pseudo-labels, which are generated as described in Section 3.3.

(2) The target domain includes a feature space $\mathcal{X}_t$ and a label space $\mathcal{Y}_t$, along with the sample set $D_t = \{x_j^t\}_{j=1}^{n_t}$, where the samples in the target domain are unlabeled.

The sample distributions of the source domain and target domain are typically different, i.e., $P_s(x, y) \neq P_t(x, y)$, where $P_s$ represents the joint distribution in the source domain and $P_t$ represents the joint distribution in the target domain. Mathematically, we aim to minimize the distribution difference between the source and target domains to achieve domain adaptation.

Due to the shortage of training samples that can be directly used in the TIR target tracking task, we propose a cross-domain model that trains a feature extraction network on the training samples in the RGB domain and then transfers the trained network to the TIR domain for target feature extraction. In this way, the convolutional feature extraction network is trained in the RGB source domain and deployed in the TIR target domain. The network architecture consists of two shared convolutional layers, each followed by a normalization layer to prevent over-fitting, and multiple task-specific layers. The shared layers share their network parameters across tasks, while each task-specific layer has its own parameters.

The convolutional layers and normalization layers are selected as the feature extraction layers shared by the RGB domain and the TIR domain, and the DCF-based layer is the task-specific layer. The overall architecture of our proposed unsupervised cross-domain model is illustrated in Figure 3. The parameters of the feature extraction network are shared across domains, but the parameters of the classifiers are not. Although it is difficult to assign a semantic role to each layer in the CNN architecture, the two CNN layers can be regarded as feature encoders that map input samples into a learned common feature space, and the DCF layer uses these features to carry out the classification task. If multiple domains with significantly different characteristics need to be encoded in a common feature space, different encoders are in principle required for each domain. In the proposed cross-domain model, we instead share the encoder layers across domains and set up a separate DCF layer for each domain.
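The split between shared feature extraction layers and domain-specific DCF layers can be sketched as follows. This is a simplified, single-channel NumPy illustration under stated assumptions, not the network used in this work: the class names `SharedEncoder` and `CrossDomainModel`, the random kernels (standing in for weights learned on the RGB source domain), the standardization used as the normalization layer, and the value of `lam` are all hypothetical.

```python
import numpy as np


def gaussian_label(shape, sigma=2.0):
    """Gaussian-shaped label centered in the patch (assumed label shape)."""
    h, w = shape
    ys, xs = np.mgrid[0:h, 0:w]
    return np.exp(-((ys - h // 2) ** 2 + (xs - w // 2) ** 2) / (2 * sigma ** 2))


class SharedEncoder:
    """Two convolutional layers, each followed by normalization, shared by all domains."""

    def __init__(self, rng, kernel_size=3):
        # Random kernels stand in for weights learned on the source domain.
        self.kernels = [rng.standard_normal((kernel_size, kernel_size)) for _ in range(2)]

    @staticmethod
    def _conv(image, kernel):
        # Circular 2-D convolution via the FFT (kernel zero-padded to the image size).
        padded = np.zeros_like(image)
        padded[: kernel.shape[0], : kernel.shape[1]] = kernel
        return np.real(np.fft.ifft2(np.fft.fft2(image) * np.fft.fft2(padded)))

    def __call__(self, image):
        features = np.asarray(image, dtype=float)
        for kernel in self.kernels:
            features = self._conv(features, kernel)
            # Standardization as a simple stand-in for the normalization layer.
            features = (features - features.mean()) / (features.std() + 1e-8)
        return features


class CrossDomainModel:
    """One shared encoder plus one DCF layer (task-specific layer) per domain."""

    def __init__(self, encoder, lam=1e-4):
        self.encoder = encoder
        self.lam = lam
        self.dcf_layers = {}  # domain name -> DCF parameters in the Fourier domain

    def fit_dcf(self, domain, template, label):
        x_hat = np.fft.fft2(self.encoder(template))
        y_hat = np.fft.fft2(label)
        self.dcf_layers[domain] = np.conj(x_hat) * y_hat / (np.conj(x_hat) * x_hat + self.lam)

    def respond(self, domain, search):
        s_hat = np.fft.fft2(self.encoder(search))
        return np.real(np.fft.ifft2(self.dcf_layers[domain] * s_hat))


rng = np.random.default_rng(0)
model = CrossDomainModel(SharedEncoder(rng))
label = gaussian_label((32, 32))
rgb_patch = rng.standard_normal((32, 32))  # stand-in for an RGB-domain patch
tir_patch = rng.standard_normal((32, 32))  # stand-in for a TIR-domain patch
model.fit_dcf("rgb", rgb_patch, label)
model.fit_dcf("tir", tir_patch, label)
tir_response = model.respond("tir", tir_patch)
tir_peak = tuple(int(i) for i in np.unravel_index(np.argmax(tir_response), tir_response.shape))
```

Both domains pass through the same `SharedEncoder` instance, while `dcf_layers` holds separate parameters per domain, mirroring the idea that encoder parameters are shared and classifier parameters are not.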

Figure 3.

Overview of the proposed unsupervised cross-domain model for the TIR tracking task.

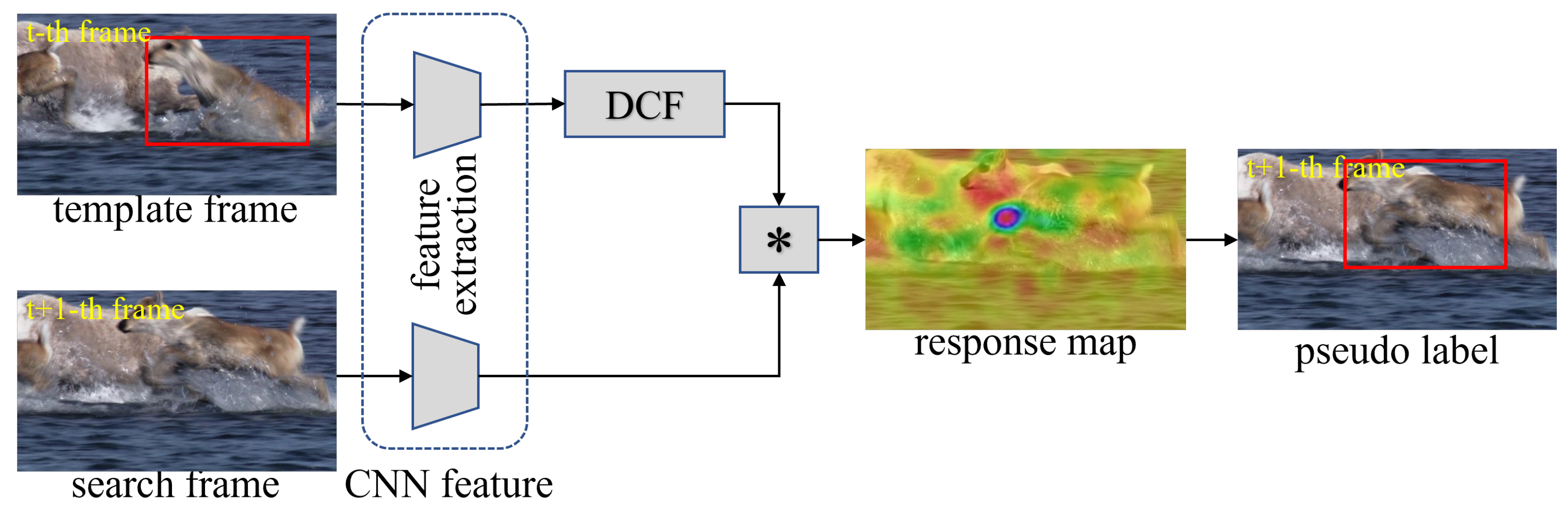

3.3. Unsupervised Pseudo-Label Generation

Training the convolutional feature extraction network requires abundant labeled training samples. Training samples in the RGB domain are plentiful, but labeled ones are very limited. A large number of unlabeled samples cannot be directly used for network training, and sample annotation is time-consuming and expensive. Therefore, we adopt an unsupervised learning method to generate pseudo-labels for large-scale unlabeled samples, which are then used to train the convolutional feature extraction network. This preserves the target representation ability of the trained network while reducing the demand for labeled samples. Figure 4 shows the unsupervised pseudo-label generation process for the unlabeled training samples.

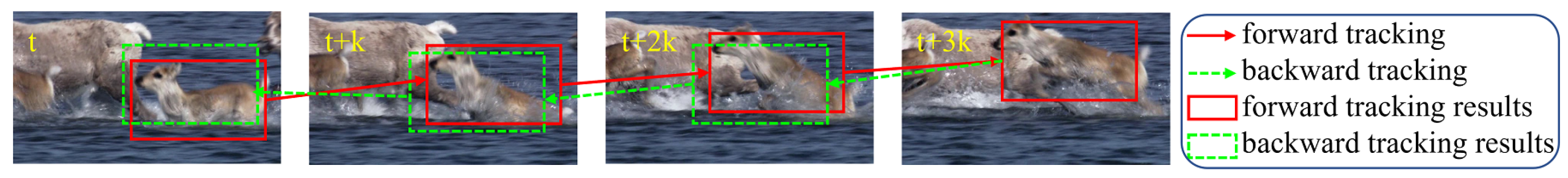

Figure 4.

An example of the unsupervised pseudo-label generation for the unlabeled training sample.

In general, robust tracking methods can guarantee the consistency of forward-backward tracking results. The accuracy of generated pseudo labels of the unlabeled training samples can be improved by using the forward-backward tracking consistency. As shown in Figure 5, we use a multi-cycle consistency method to optimize and improve the generated pseudo labels of the unlabeled training samples. The distances of forward and backward tracking results can be represented by the following loss function:

where $l$ is the training loss, computed between the forward tracking response maps and the backward tracking response maps on the corresponding frames. In this paper, k is set to 2. The accuracy of the generated pseudo-labels of the unlabeled training samples is optimized by continuously reducing the distance between the forward and backward tracking results on the same frame.
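The idea of penalizing disagreement between forward and backward tracking can be sketched as follows. This is only an illustration: the function name `consistency_loss` is hypothetical, and the use of a mean squared difference averaged over frames is an assumption about the distance measure, not the exact loss used in this work.

```python
import numpy as np


def consistency_loss(forward_maps, backward_maps):
    """Mean squared difference between forward and backward response maps.

    forward_maps, backward_maps: sequences of equally shaped 2-D arrays, where
    entry i holds the response map obtained on the same frame by forward and
    backward tracking, respectively (assumed pairing).
    """
    if len(forward_maps) == 0 or len(forward_maps) != len(backward_maps):
        raise ValueError("need the same, non-zero number of forward and backward maps")
    total = 0.0
    for r_forward, r_backward in zip(forward_maps, backward_maps):
        diff = np.asarray(r_forward, dtype=float) - np.asarray(r_backward, dtype=float)
        total += float(np.mean(diff ** 2))
    # Average over the frames that take part in the cycle.
    return total / len(forward_maps)


# Toy example with three frames of 4x4 response maps.
forward = [np.zeros((4, 4)) for _ in range(3)]
backward_same = [np.zeros((4, 4)) for _ in range(3)]
backward_off = [np.ones((4, 4)) for _ in range(3)]
loss_consistent = consistency_loss(forward, backward_same)
loss_inconsistent = consistency_loss(forward, backward_off)
```

A loss of zero means forward and backward tracking agree on every frame; larger values indicate that the generated pseudo-labels are less reliable and should be refined further.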

Figure 5.

An example of forward-backward tracking process.

4. Experiments

To verify the performance of the proposed unsupervised cross-domain tracking method (UCDT), we conducted comparative experiments between UCDT and several state-of-the-art tracking methods on the PTB-TIR [35] and LSOTB-TIR [36] benchmark datasets. Following the PTB-TIR [35] benchmark, we used the precision and success scores as the evaluation metrics. More details of the testing benchmarks and evaluation metrics can be found in [35,36].
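For readers unfamiliar with these metrics, the following sketch shows one common way to compute them. It assumes the widely used one-pass evaluation convention, in which precision is the fraction of frames whose center location error is within 20 pixels and success is the area under the curve of the overlap (IoU) success plot; the exact protocol is defined in [35,36] and may differ in detail. The function names and the `(x, y, w, h)` box layout are assumptions for illustration.

```python
import numpy as np


def center_error(pred, gt):
    """Euclidean distance between box centers; boxes are rows of (x, y, w, h)."""
    pred_center = pred[:, :2] + pred[:, 2:] / 2.0
    gt_center = gt[:, :2] + gt[:, 2:] / 2.0
    return np.linalg.norm(pred_center - gt_center, axis=1)


def overlap(pred, gt):
    """Intersection over union per frame; assumes boxes with positive width and height."""
    x1 = np.maximum(pred[:, 0], gt[:, 0])
    y1 = np.maximum(pred[:, 1], gt[:, 1])
    x2 = np.minimum(pred[:, 0] + pred[:, 2], gt[:, 0] + gt[:, 2])
    y2 = np.minimum(pred[:, 1] + pred[:, 3], gt[:, 1] + gt[:, 3])
    inter = np.clip(x2 - x1, 0.0, None) * np.clip(y2 - y1, 0.0, None)
    union = pred[:, 2] * pred[:, 3] + gt[:, 2] * gt[:, 3] - inter
    return inter / union


def precision_score(pred, gt, threshold=20.0):
    """Fraction of frames whose center error is within the pixel threshold."""
    return float(np.mean(center_error(pred, gt) <= threshold))


def success_score(pred, gt, thresholds=None):
    """Average success rate over overlap thresholds (area under the success plot)."""
    if thresholds is None:
        thresholds = np.linspace(0.0, 1.0, 21)
    overlaps = overlap(pred, gt)
    return float(np.mean([np.mean(overlaps > t) for t in thresholds]))


# Toy sequence: the first frame is tracked closely, the second is lost.
gt_boxes = np.array([[10.0, 10.0, 20.0, 20.0], [50.0, 50.0, 20.0, 20.0]])
pred_boxes = np.array([[12.0, 10.0, 20.0, 20.0], [100.0, 100.0, 20.0, 20.0]])
prec = precision_score(pred_boxes, gt_boxes)
succ = success_score(pred_boxes, gt_boxes)
```

Precision rewards trackers that keep the predicted center near the target, while success also accounts for how well the predicted box size matches the ground truth, which is why the two scores can rank trackers differently.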

4.1. Implementation Details

The experiments were implemented in Matlab2021b with MatConvNet toolbox on a PC with an i9-11900KF 3.50 GHz CPU, 64 GB RAM, and an NVIDIA GeForce RTX 3080Ti GPU. The tracking speed is approximately 29 fps. In the unsupervised cross-domain model trained by the SGD with a momentum of the weight decay is set to . The network is trained for 30 epochs with a mini-batch size of 32. The learning rate exponentially decays from to . The regularization parameter is set to , the scale factor is set to , the trend of the target scale , and the model update parameter is set to . The sizes of the feature extraction CNN layers are and , respectively. A local response normalization layer (relu) is employed at the end of each CNN layer to reduce the gradient calculation and avoid over-fitting.

4.2. Experiments on PTB-TIR Benchmark

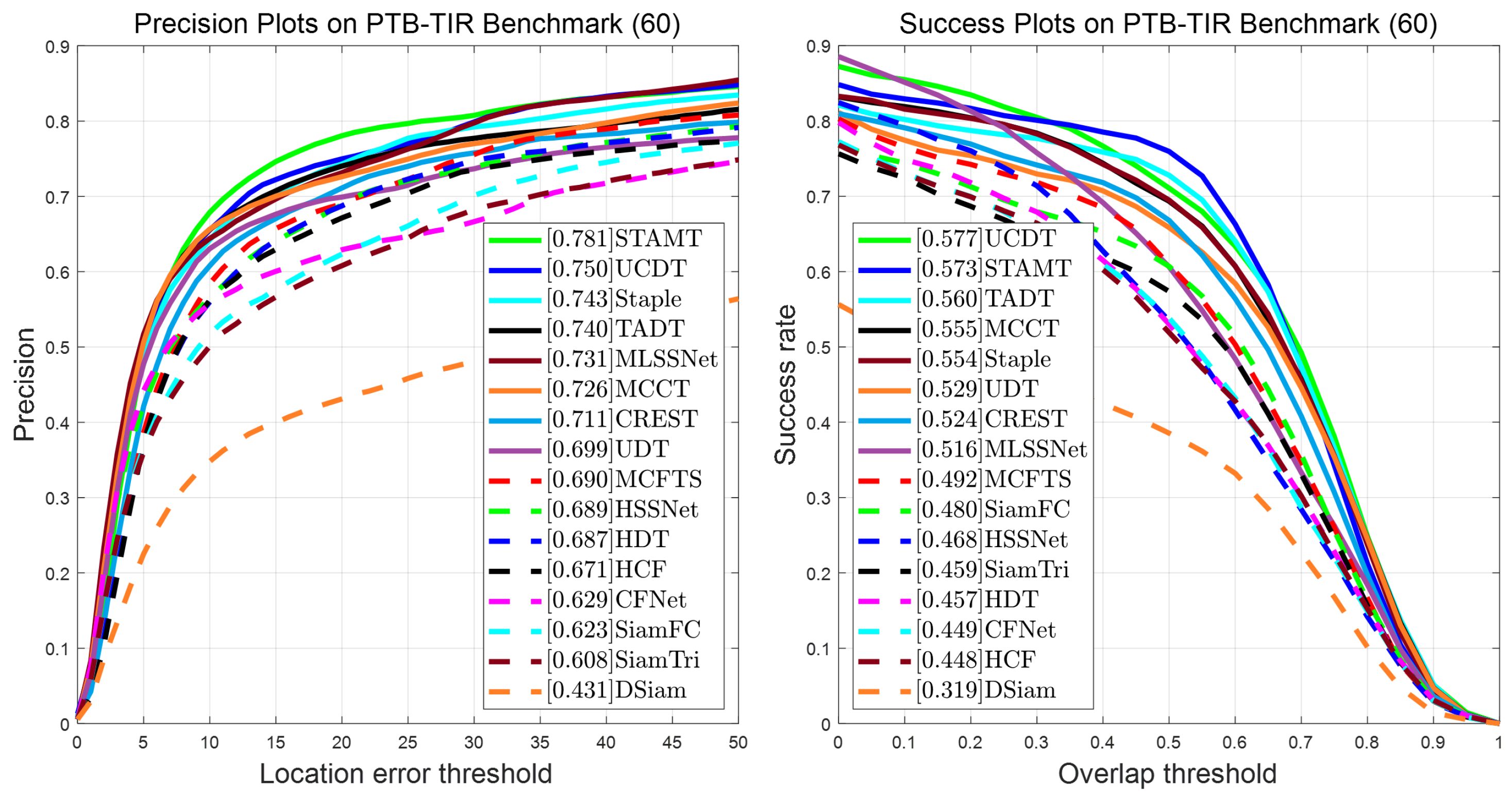

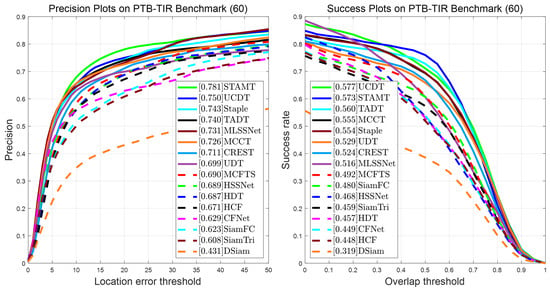

We compared our UCDT tracking method with other trackers, including STAMT [21], TADT [40], MMNet [28], MCCT [65], Staple [66], UDT [60], DSiam [27], CREST [18], MLSSNet [67], MCFTS [42], SiamFC [24], HSSNet [22], SiamTri [68], HDT [10], CFNet [69], and HCF [39], on the PTB-TIR [35] benchmark. Figure 6 shows the experimental results of these tracking methods. Our UCDT tracker achieves the best success score and the second-best precision score. Compared to the deep DCF-based tracking methods [10,18,39] that use off-the-shelf convolutional neural networks for target feature extraction, our UCDT tracking method uses an unsupervised cross-domain model for feature extraction, which clearly improves tracking accuracy. Although the precision score of our UCDT tracking method is lower than that of the STAMT [21] tracking method, its success score is higher, which shows that the proposed UCDT tracking method is competitive with STAMT [21]. These experimental results demonstrate that the proposed unsupervised cross-domain model is effective in TIR target tracking scenarios.

Figure 6.

Experimental comparison on PTB-TIR [35] dataset.

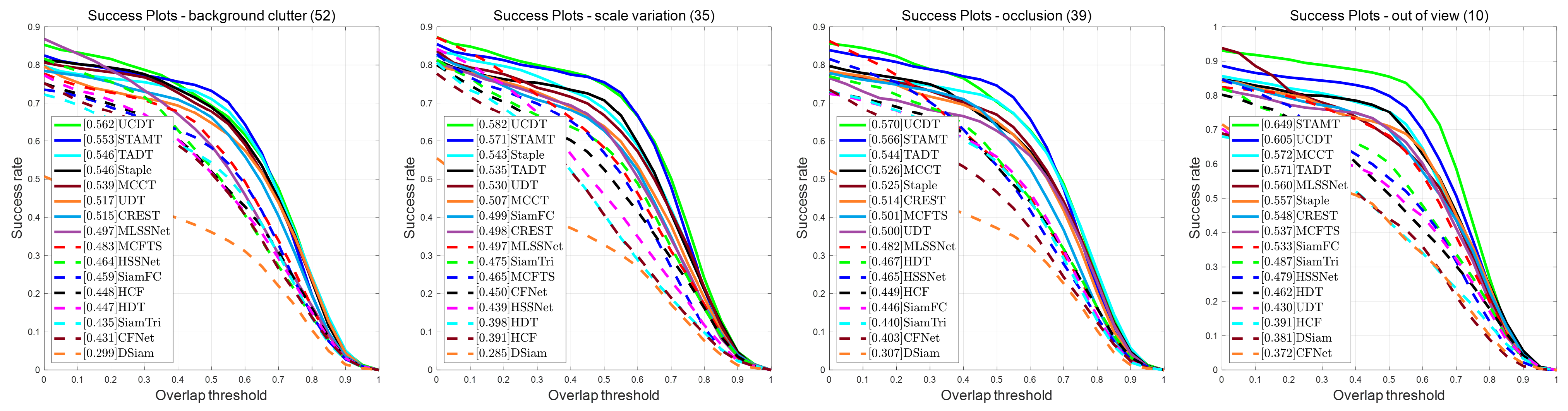

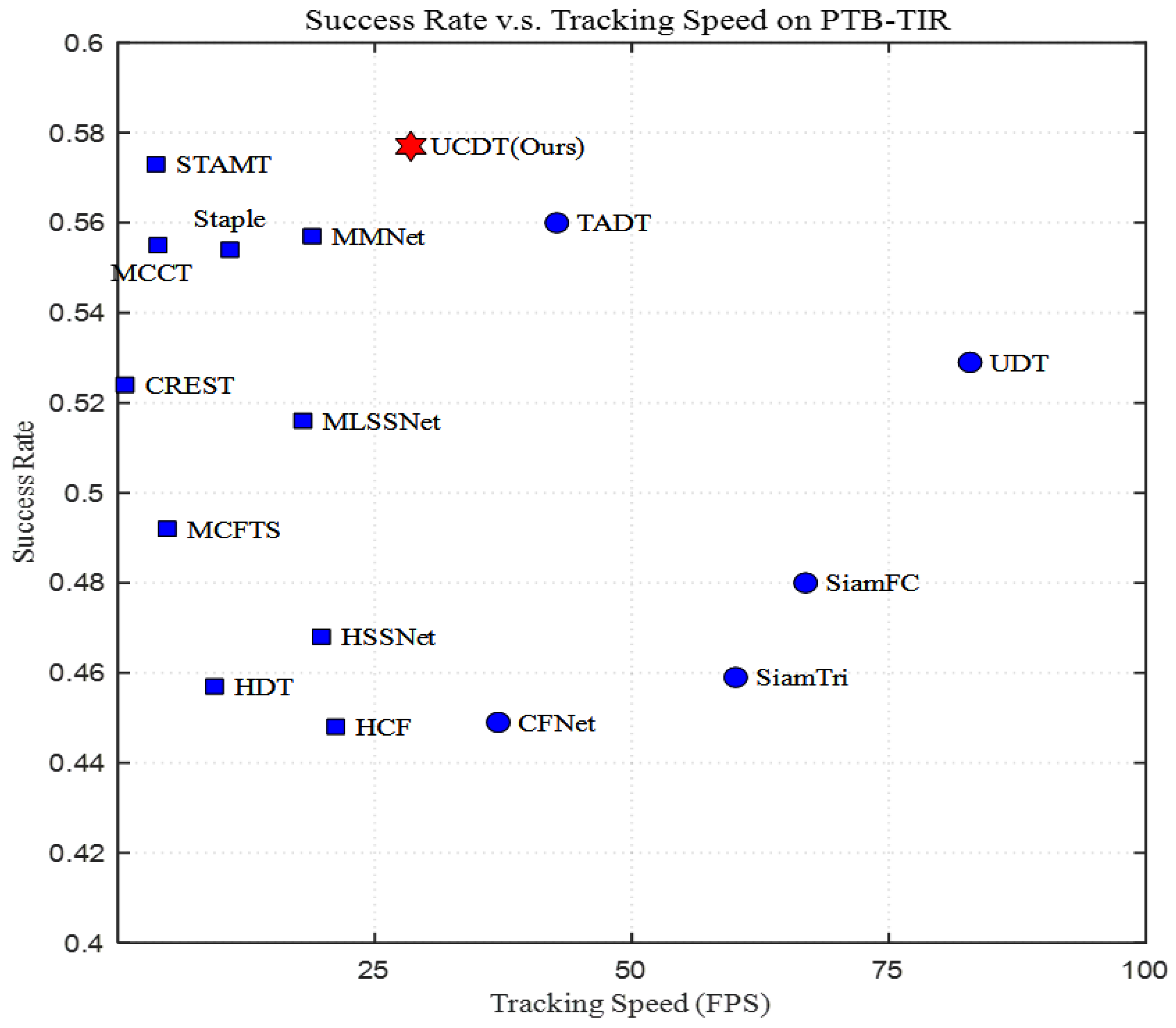

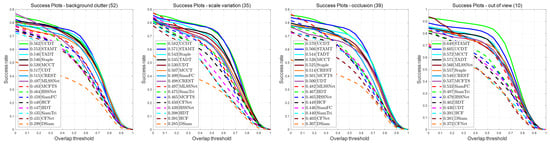

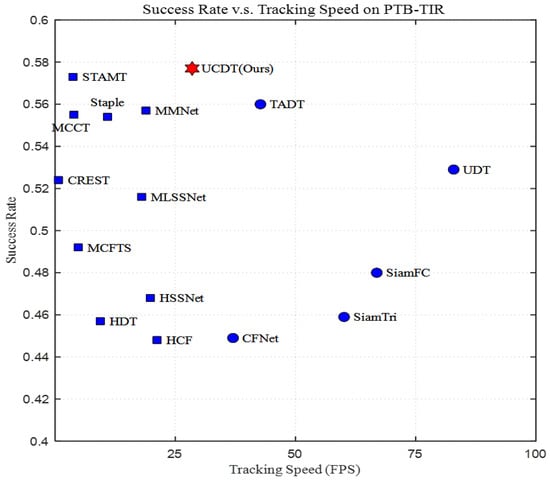

Figure 7 compares our UCDT tracker with other trackers on the background clutter, scale variation, occlusion, and out-of-view attributes of the PTB-TIR [35] benchmark in terms of the success metric. The proposed UCDT tracker outperforms the other trackers on background clutter, scale variation, and occlusion. To evaluate the different tracking methods more comprehensively, we also compare the success rate and tracking speed of different trackers on the PTB-TIR [35] benchmark. The comparison result is shown in Figure 8, which shows that our UCDT tracker achieves a good balance between tracking success rate and tracking speed.

Figure 7.

Success plots comparison on PTB-TIR [35] benchmark for the background clutter, scale variation, occlusion, and out-of-view attributes.

Figure 8.

The tracking success rate vs. tracking speed comparison on the PTB-TIR [35] benchmark.

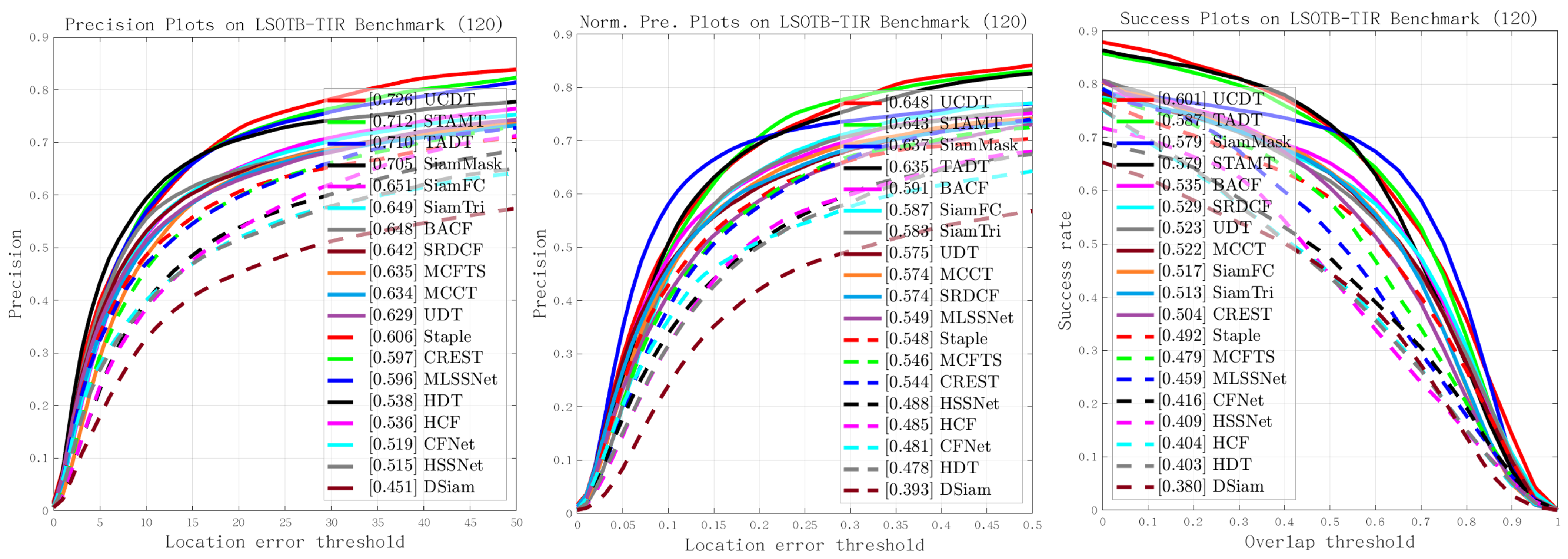

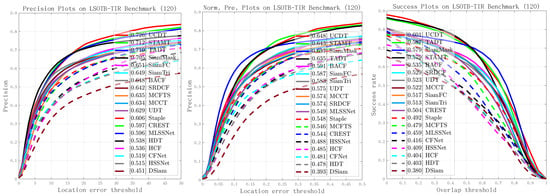

4.3. Experiments on LSOTB-TIR Benchmark

To further evaluate the tracking accuracy of our UCDT tracker, we compared it with other trackers (including TADT [40], SiamMask [26], STAMT [21], BACF [14], SRDCF [16], UDT [60], MCCT [65], SiamFC [24], SiamTri [68], CREST [18], Staple [66], MCFTS [42], MLSSNet [67], CFNet [69], HSSNet [22], HCF [39], HDT [10], and DSiam [27]) on the LSOTB-TIR [36] benchmark. Figure 9 shows the comparison results of these tracking methods. The proposed UCDT tracking method achieves the best scores in the precision, normalized precision, and success metrics (72.6%, 64.8%, and 60.1%, respectively). Compared to the Siamese-based tracking methods (e.g., SiamMask [26], SiamFC [24], SiamTri [68]), our UCDT tracking method performs better in each evaluation metric. Compared to the DCF-based tracking methods (e.g., BACF [14], SRDCF [16], HCF [39]), our UCDT tracking method shows strong advantages in tracking performance. Compared to the convolutional neural network learning-based tracking methods (e.g., CREST [18], TADT [40], HDT [10]), our UCDT tracking method benefits from the unsupervised cross-domain model and obtains more accurate tracking results. These comparative results demonstrate the effectiveness of our UCDT tracking method from several perspectives.

Figure 9.

Experimental comparison on LSOTB-TIR [36] dataset.

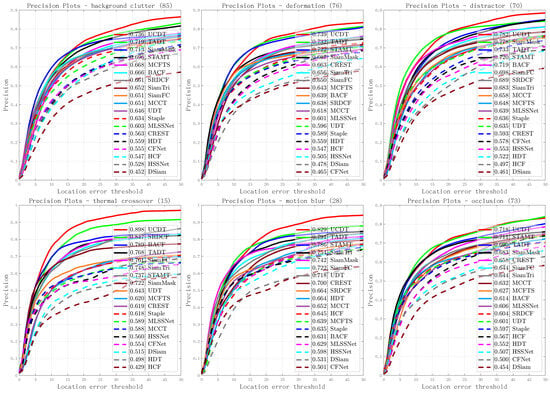

Figure 10 compares our UCDT tracking method with other tracking methods on several different attributes. Our UCDT tracking method obtains the best tracking accuracy on these attributes, which shows the validity of the unsupervised cross-domain model in the proposed UCDT tracking method. Moreover, Table 1 shows that our UCDT tracking method achieves top-three success scores on four different tracking scenarios. Compared to the DCF-based trackers (e.g., BACF [14], SRDCF [16]), the proposed UCDT tracker achieves significant advantages in all four scenarios. Compared to the deep learning-based trackers (e.g., SiamMask [26], TADT [40], STAMT [21]), the proposed UCDT tracker achieves competitive tracking performance. The results in Table 1 also show that most trackers designed for RGB tracking obtain only suboptimal results when applied directly to TIR tracking tasks. In conclusion, the proposed UCDT tracker produces competitive tracking results compared to other tracking methods.

Figure 10.

Precision plots comparison of our UCDT and other trackers on LSOTB-TIR dataset for some different attributes.

Table 1.

Success scores (%) comparison of our UCDT and other trackers. The top three are highlighted in red, blue and green respectively.

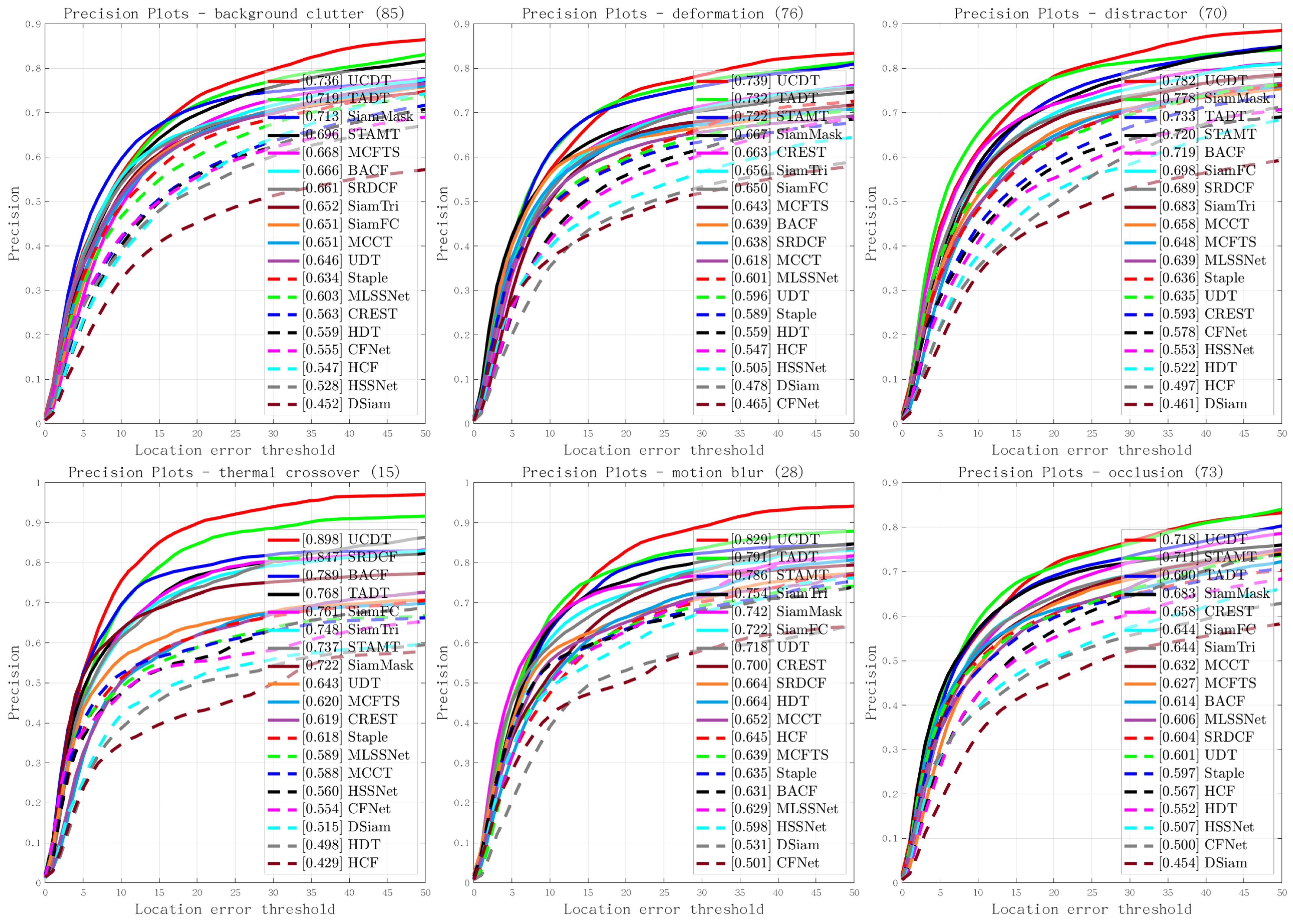

4.4. Qualitative Comparison

To visually illustrate the tracking accuracy of our UCDT tracker, we compared UCDT with other trackers, including UDT [60], SiamMask [26], TADT [40], and STAMT [21], on challenging thermal infrared test video sequences. To make the comparison more intuitive, we also show the target's ground-truth label. Figure 11 shows the results on some TIR test sequences from the LSOTB-TIR [36] benchmark. The target-aware TADT [40] tracking method is easily disturbed by occlusion and distractors (e.g., deer-H-001 and person-D-019). The STAMT [21] tracker performs well on the deer-H-001 sequence, which benefits from its structural target-aware model. However, the results of the STAMT tracker are still unsatisfactory on other sequences (such as hog-H-001, street-S-002, and person-S-008). As shown in Figure 11, almost all trackers achieve good results in the scenario captured by the vehicle-mounted camera (VM) (e.g., motobiker-V-001). In the scenarios captured by the surveillance camera (e.g., street-S-002, person-S-008), the drone-mounted camera (e.g., person-D-019), and the hand-held camera (e.g., deer-H-001, hog-H-001), the results of the other trackers all show clear failures, whereas our UCDT tracker obtains results close to the target's ground-truth label.

Figure 11.

Qualitative comparison of UCDT with some other trackers (including UDT [60], SiamMask [26], TADT [40], and STAMT [21]) on several TIR tracking sequences (from top to bottom are deer-H-001, hog-H-001, street-S-002, person-S-008, person-D-019, and motobiker-V-001).

5. Conclusions

In this paper, an unsupervised cross-domain model has been proposed for the thermal infrared target-tracking task. The cross-domain model effectively uses the training samples in the source domain to train a general convolutional feature extraction network for target feature extraction in the target domain. Meanwhile, generating pseudo-labels for the unlabeled training samples in the source domain with an unsupervised method effectively addresses the weak target representation of a convolutional feature extraction network trained on insufficient labeled samples. Extensive experimental results show that our UCDT tracker achieves competitive tracking results. A limitation is that the unsupervised cross-domain model relies on the training samples in the source domain, which makes it impossible to evaluate the trained model on the ground-truth labels of the target domain and limits the accuracy and optimization of TIR tracking. In the future, we will consider using methods such as few-shot learning to further improve the TIR tracking method.

Author Contributions

X.S.: Conceptualization, Methodology, Software, Writing—Original draft preparation. F.H.: Supervision, Writing—Reviewing and Editing. Z.Q.: Data curation, Writing—Original draft preparation. X.Z.: Methodology, Writing—Reviewing and Editing. D.Y.: Investigation, Resources, Supervision. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Natural Science Foundation of China under Grant Nos. 62202362, 62302073, and 62172126, by the Fundamental Research Funds for the Central Universities under Grant No. ZYTS24165, by the China Postdoctoral Science Foundation under Grant Nos. 2022TQ0247 and 2023M742742, by the Guangdong Basic and Applied Basic Research Foundation under Grant No. 2021A1515110079, by the Science and Technology Projects in Guangzhou under Grant No. 2023A04J0397, and by the National Key R&D Program of China under Grant No. 2022YFB2902900.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Acknowledgments

The authors would like to extend their sincere gratitude to the Editor for excellent collaboration, and to the reviewers for their meticulous evaluations, thoughtful feedback, and valuable recommendations on the initial manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Hou, S.; Wang, T.; Qiao, D.; Xu, D.J.; Wang, Y.; Feng, X.; Khan, W.A.; Ruan, J. Temporal-Spatial Fuzzy Deep Neural Network for the Grazing Behavior Recognition of Herded Sheep in Triaxial Accelerometer Cyber-Physical Systems. In IEEE Transactions on Fuzzy Systems; IEEE: New York, NY, USA, 2024; pp. 1–12.

2. Wang, Y.; Khan, W.A.; Chung, S.H. Few-Shot Defect Detection of Catheter Products via Enlarged Scale Feature Pyramid and Contrastive Proposal Memory Bank. In IEEE Transactions on Industrial Informatics; IEEE: New York, NY, USA, 2024; pp. 1–11.

3. Li, H.; Wang, X.; Shen, F.; Li, Y.; Porikli, F.; Wang, M. Real-time deep tracking via corrective domain adaptation. IEEE Trans. Circ. Syst. Video Technol. 2019, 29, 2600–2612.

4. Ye, J.; Fu, C.; Zheng, G.; Paudel, D.P.; Chen, G. Unsupervised domain adaptation for nighttime aerial tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 8896–8905.

5. Chen, Y.; Jiang, J.; Lei, R.; Bekiroglu, Y.; Chen, F.; Li, M. GraspAda: Deep grasp adaptation through domain transfer. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; IEEE: New York, NY, USA, 2023; pp. 10268–10274.

6. Zhao, L.; Ouyang, E.; Tang, J.; Li, B.; Wu, J.; Zhang, G.; Hu, W. Domain transfer and difference-aware band weighting for object tracking in hyperspectral videos. Int. J. Remote Sens. 2023, 44, 1115–1131.

7. Kuppusami Sakthivel, S.S.; Moorthy, S.; Arthanari, S.; Jeong, J.H.; Joo, Y.H. Learning a context-aware environmental residual correlation filter via deep convolution features for visual object tracking. Mathematics 2024, 12, 2279.

8. He, L.; Xu, Y.; Chen, Y.; Wen, J. Recent advance on mean shift tracking: A survey. Int. J. Image Graph. 2013, 13, 1350012.

9. Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-Speed Tracking with Kernelized Correlation Filters. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 583–596.

10. Qi, Y.; Zhang, S.; Qin, L.; Yao, H.; Huang, Q.; Lim, J.; Yang, M.H. Hedged Deep Tracking. In Proceedings of the CVPR, Las Vegas, NV, USA, 27–30 June 2016; pp. 4303–4311.

11. Husna Fauzi, N.I.; Musa, Z.; Hujainah, F. Feature-Based Object Detection and Tracking: A Systematic Literature Review. Int. J. Image Graph. 2024, 24, 2450037.

12. Li, D.; Chai, H.; Wei, Q.; Zhang, Y.; Xiao, Y. PACR: Pixel Attention in Classification and Regression for Visual Object Tracking. Mathematics 2023, 11, 1406.

13. Jiang, R.; Wang, Q.; Shi, S.; Mou, X.; Chen, S. Flow-assisted visual tracking using event cameras. CAAI Trans. Intell. Technol. 2021, 6, 192–202.

14. Kiani Galoogahi, H.; Fagg, A.; Lucey, S. Learning Background-Aware Correlation Filters for Visual Tracking. In Proceedings of the ICCV, Venice, Italy, 22–29 October 2017; pp. 1135–1143.

15. Liu, L.; Feng, T.; Fu, Y.; Shen, C.; Hu, Z.; Qin, M.; Bai, X.; Zhao, S. Learning Adaptive Spatial Regularization and Temporal-Aware Correlation Filters for Visual Object Tracking. Mathematics 2022, 10, 4320.

16. Danelljan, M.; Hager, G.; Shahbaz Khan, F.; Felsberg, M. Learning Spatially Regularized Correlation Filters for Visual Tracking. In Proceedings of the ICCV, Santiago, Chile, 7–13 December 2015; pp. 4310–4318.

17. Liu, B.; Chang, X.; Yuan, D.; Yang, Y. HCDC-SRCF tracker: Learning an adaptively multi-feature fuse tracker in spatial regularized correlation filters framework. Knowl.-Based Syst. 2022, 238, 107913.

18. Song, Y.; Ma, C.; Gong, L.; Zhang, J.; Lau, R.W.; Yang, M.H. CREST: Convolutional residual learning for visual tracking. In Proceedings of the ICCV, Venice, Italy, 22–29 October 2017; pp. 2574–2583.

19. Li, D.; Zhang, Y.; Chen, M.; Chai, H. Attention and Pixel Matching in RGB-T Object Tracking. Mathematics 2023, 11, 1646.

20. Algabri, R.; Choi, M.T. Robust person following under severe indoor illumination changes for mobile robots: Online color-based identification update. In Proceedings of the 2021 21st International Conference on Control, Automation and Systems, Jeju, Republic of Korea, 12–15 October 2021; IEEE: New York, NY, USA, 2021; pp. 1000–1005.

21. Yuan, D.; Shu, X.; Liu, Q.; He, Z. Structural target-aware model for thermal infrared tracking. Neurocomputing 2022, 491, 44–56.

22. Li, X.; Liu, Q.; Fan, N.; He, Z.; Wang, H. Hierarchical spatial-aware siamese network for thermal infrared object tracking. Knowl.-Based Syst. 2019, 166, 71–81.

23. Zhu, P.; Zheng, J.; Du, D.; Wen, L.; Sun, Y.; Hu, Q. Multi-drone-based single object tracking with agent sharing network. IEEE Trans. Circ. Syst. Video Technol. 2021, 31, 4058–4070.

24. Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H. Fully-Convolutional Siamese Networks for Object Tracking. In Proceedings of the ECCV, Amsterdam, The Netherlands, 11–14 October 2016; pp. 850–865.

25. Zhao, Y.; Zhang, J.; Duan, R.; Li, F.; Zhang, H. Lightweight target-aware attention learning network-based target tracking method. Mathematics 2022, 10, 2299.

26. Wang, Q.; Zhang, L.; Bertinetto, L.; Hu, W.; Torr, P.H. Fast online object tracking and segmentation: A unifying approach. In Proceedings of the CVPR, Long Beach, CA, USA, 15–20 June 2019; pp. 1328–1338.

27. Guo, Q.; Feng, W.; Zhou, C.; Huang, R.; Wan, L.; Wang, S. Learning dynamic siamese network for visual object tracking. In Proceedings of the ICCV, Venice, Italy, 27–29 October 2017; pp. 1763–1771.

28. Liu, Q.; Li, X.; He, Z.; Fan, N.; Yuan, D.; Liu, W.; Liang, Y. Multi-task driven feature models for thermal infrared tracking. In Proceedings of the AAAI, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11604–11611.

29. Yuan, D.; Shu, X.; Liu, Q.; He, Z. Aligned Spatial-Temporal Memory Network for Thermal Infrared Target Tracking. IEEE Trans. Circ. Syst. II Express Briefs 2023, 70, 1224–1228.

30. Liu, Q.; Li, X.; Yuan, D.; Yang, C.; Chang, X.; He, Z. LSOTB-TIR: A large-scale high-diversity thermal infrared single object tracking benchmark. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 9844–9857.

31. Yuan, D.; Zhang, H.; Shu, X.; Liu, Q.; Chang, X.; He, Z.; Shi, G. Thermal infrared target tracking: A comprehensive review. IEEE Trans. Instrum. Meas. 2024, 73, 5000419.

32. Lai, S.; Liu, C.; Wang, D.; Lu, H. Refocus the Attention for Parameter-Efficient Thermal Infrared Object Tracking. In IEEE Transactions on Neural Networks and Learning Systems; IEEE: New York, NY, USA, 2024.

33. Yang, C.; Liu, Q.; Li, G.; Pan, H.; He, Z. Learning diverse fine-grained features for thermal infrared tracking. Expert Syst. Appl. 2024, 238, 121577.

34. Gao, P.; Li, S.M.; Gao, F.; Wang, F.; Yuan, R.Y.; Fujita, H. In defense and revival of Bayesian filtering for thermal infrared object tracking. Knowl.-Based Syst. 2024, 293, 111665.

35. Liu, Q.; He, Z.; Li, X.; Zheng, Y. PTB-TIR: A thermal infrared pedestrian tracking benchmark. IEEE Trans. Multimed. 2019, 22, 666–675.

36. Liu, Q.; Li, X.; He, Z.; Li, C.; Li, J.; Zhou, Z.; Yuan, D.; Li, J.; Yang, K.; Fan, N.; et al. LSOTB-TIR: A Large-Scale High-Diversity Thermal Infrared Object Tracking Benchmark. In Proceedings of the ACM MM, Seattle, WA, USA, 12–16 October 2020; pp. 3847–3856.

37. Khan, W.A.; Chung, S.H.; Awan, M.U.; Wen, X. Machine learning facilitated business intelligence (Part I) Neural networks learning algorithms and applications. Ind. Manag. Data Syst. 2020, 120, 164–195.

38. Kim, W.; Kanezaki, A.; Tanaka, M. Unsupervised learning of image segmentation based on differentiable feature clustering. IEEE Trans. Image Process. 2020, 29, 8055–8068.

39. Ma, C.; Huang, J.B.; Yang, X.; Yang, M.H. Hierarchical convolutional features for visual tracking. In Proceedings of the ICCV, Santiago, Chile, 7–13 December 2015; pp. 3074–3082.

40. Li, X.; Ma, C.; Wu, B.; He, Z.; Yang, M.H. Target-aware deep tracking. In Proceedings of the CVPR, Long Beach, CA, USA, 15–19 June 2019; pp. 1369–1378.

41. Gundogdu, E.; Koc, A.; Solmaz, B.; Hammoud, R.I.; Aydin Alatan, A. Evaluation of feature channels for correlation-filter-based visual object tracking in infrared spectrum. In Proceedings of the CVPRW, Las Vegas, NV, USA, 27–30 June 2016; pp. 24–32.

42. Liu, Q.; Lu, X.; He, Z.; Zhang, C.; Chen, W.S. Deep convolutional neural networks for thermal infrared object tracking. Knowl.-Based Syst. 2017, 134, 189–198.

43. Yuan, D.; Chang, X.; Liu, Q.; Yang, Y.; Wang, D.; Shu, M.; He, Z.; Shi, G. Active Learning for Deep Visual Tracking. In IEEE Transactions on Neural Networks and Learning Systems; IEEE: New York, NY, USA, 2023; pp. 1–13.

44. Xie, F.; Wang, Z.; Ma, C. DiffusionTrack: Point Set Diffusion Model for Visual Object Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 19113–19124.

45. Cai, W.; Liu, Q.; Wang, Y. HIPTrack: Visual Tracking with Historical Prompts. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 19258–19267.

46. Li, X.; Ding, H.; Yuan, H.; Zhang, W.; Pang, J.; Cheng, G.; Chen, K.; Liu, Z.; Loy, C.C. Transformer-based visual segmentation: A survey. In IEEE Transactions on Pattern Analysis and Machine Intelligence; IEEE: New York, NY, USA, 2024.

47. Fu, C.; Lu, K.; Zheng, G.; Ye, J.; Cao, Z.; Li, B.; Lu, G. Siamese object tracking for unmanned aerial vehicle: A review and comprehensive analysis. Artif. Intell. Rev. 2023, 56, 1417–1477.

48. Zhang, H.; Yuan, D.; Shu, X.; Li, Z.; Liu, Q.; Chang, X.; He, Z.; Shi, G. A Comprehensive Review of RGBT Tracking. IEEE Trans. Instrum. Meas. 2024, 73, 5027223.

49. Huang, B.; Dou, Z.; Chen, J.; Li, J.; Shen, N.; Wang, Y.; Xu, T. Searching Region-Free and Template-Free Siamese Network for Tracking Drones in TIR Videos. In IEEE Transactions on Geoscience and Remote Sensing; IEEE: New York, NY, USA, 2023.

50. Huang, Y.; He, Y.; Lu, R.; Li, X.; Yang, X. Thermal infrared object tracking via unsupervised deep correlation filters. Digit. Signal Process. 2022, 123, 103432.

51. Zha, Y.; Sun, J.; Zhang, P.; Zhang, L.; Gonzalez-Garcia, A.; Huang, W. Self-supervised cross-modal distillation for thermal infrared tracking. IEEE MultiMed. 2022, 29, 80–96.

52. Shu, X.; Yang, Y.; Wu, B. A neighbor level set framework minimized with the split Bregman method for medical image segmentation. Signal Process. 2021, 189, 108293.

53. Shu, X.; Yang, Y.; Wu, B. Adaptive segmentation model for liver CT images based on neural network and level set method. Neurocomputing 2021, 453, 438–452.

54. Casolla, G.; Cuomo, S.; Di Cola, V.S.; Piccialli, F. Exploring unsupervised learning techniques for the Internet of Things. IEEE Trans. Ind. Inform. 2019, 16, 2621–2628.

55. Caron, M.; Bojanowski, P.; Joulin, A.; Douze, M. Deep clustering for unsupervised learning of visual features. In Proceedings of the ECCV, Munich, Germany, 8–14 September 2018; pp. 132–149.

56. Ren, P.; Xiao, Y.; Chang, X.; Huang, P.; Li, Z.; Gupta, B.B.; Chen, X.; Wang, X. A Survey of Deep Active Learning. ACM Comput. Surv. 2022, 54, 1–40.

57. Ren, P.; Xiao, Y.; Chang, X.; Huang, P.; Li, Z.; Chen, X.; Wang, X. A Comprehensive Survey of Neural Architecture Search: Challenges and Solutions. ACM Comput. Surv. 2021, 54, 1–34.

58. Crawford, E.; Pineau, J. Exploiting spatial invariance for scalable unsupervised object tracking. In Proceedings of the AAAI, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 3684–3692.

59. Luo, M.; Chang, X.; Nie, L.; Yang, Y.; Hauptmann, A.G.; Zheng, Q. An Adaptive Semisupervised Feature Analysis for Video Semantic Recognition. IEEE Trans. Cybern. 2018, 48, 648–660.

60. Wang, N.; Song, Y.; Ma, C.; Zhou, W.; Liu, W.; Li, H. Unsupervised Deep Tracking. In Proceedings of the CVPR, Long Beach, CA, USA, 15–20 June 2019; pp. 1308–1317.

61. Sun, J.; Zhang, L.; Zha, Y.; Gonzalez-Garcia, A.; Zhang, P.; Huang, W.; Zhang, Y. Unsupervised Cross-Modal Distillation for Thermal Infrared Tracking. In Proceedings of the ACM MM, Virtual, 20–24 October 2021; pp. 2262–2270.

62. Luiten, J.; Zulfikar, I.E.; Leibe, B. Unovost: Unsupervised offline video object segmentation and tracking. In Proceedings of the CVPR, Seattle, WA, USA, 16–18 June 2020; pp. 2000–2009.

63. Wang, G.; Zhou, Y.; Luo, C.; Xie, W.; Zeng, W.; Xiong, Z. Unsupervised visual representation learning by tracking patches in video. In Proceedings of the CVPR, Nashville, TN, USA, 20–25 June 2021; pp. 2563–2572.

64. Wu, Q.; Wan, J.; Chan, A.B. Progressive unsupervised learning for visual object tracking. In Proceedings of the CVPR, Nashville, TN, USA, 20–25 June 2021; pp. 2993–3002.

65. Wang, N.; Zhou, W.; Tian, Q.; Hong, R.; Wang, M.; Li, H. Multi-cue correlation filters for robust visual tracking. In Proceedings of the CVPR, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4844–4853.

66. Bertinetto, L.; Valmadre, J.; Golodetz, S.; Miksik, O.; Torr, P.H. Staple: Complementary Learners for Real-Time Tracking. In Proceedings of the CVPR, Las Vegas, NV, USA, 27–30 June 2016; pp. 1401–1409.

67. Liu, Q.; Li, X.; He, Z.; Fan, N.; Yuan, D.; Wang, H. Learning deep multi-level similarity for thermal infrared object tracking. IEEE Trans. Multimed. 2021, 23, 2114–2126.

68. Dong, X.; Shen, J. Triplet loss in siamese network for object tracking. In Proceedings of the ECCV, Munich, Germany, 8–14 September 2018; pp. 459–474.

69. Valmadre, J.; Bertinetto, L.; Henriques, J.; Vedaldi, A.; Torr, P.H. End-to-End Representation Learning for Correlation Filter Based Tracking. In Proceedings of the CVPR, Honolulu, HI, USA, 21–26 July 2017; pp. 2805–2813.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).