Abstract

Context: YOLO (You Only Look Once) is an algorithm based on deep neural networks with real-time object detection capabilities. This state-of-the-art technology is widely used, mainly due to its speed and precision. Since its conception, YOLO has been applied to detect and recognize traffic signs, pedestrians, traffic lights, vehicles, and so on. Objective: The goal of this research is to systematically analyze the YOLO object detection algorithm, applied to traffic sign detection and recognition systems, from five relevant aspects of this technology: applications, datasets, metrics, hardware, and challenges. Method: This study performs a systematic literature review (SLR) of studies on traffic sign detection and recognition using YOLO published in the years 2016–2022. Results: The search found 115 primary studies relevant to the goal of this research. After analyzing these investigations, the following relevant results were obtained. The most common applications of YOLO in this field are vehicular security and intelligent and autonomous vehicles. The majority of the sign datasets used to train, test, and validate YOLO-based systems are publicly available, with an emphasis on datasets from Germany and China. It has also been found that most works report sophisticated detection, classification, and processing speed metrics for traffic sign detection and recognition systems using the different versions of YOLO. In addition, the most popular desktop data processing hardware is the Nvidia RTX 2080 and Titan Tesla V100 and, in the case of embedded or mobile GPU platforms, the Jetson Xavier NX. Finally, seven relevant challenges that these systems face when operating in real road conditions have been identified. With this in mind, the reviewed studies have been reclassified according to the challenges they address.
Conclusions: This SLR is the most relevant and current work in the field of technology development applied to the detection and recognition of traffic signs using YOLO. In addition, insights are provided about future work that could be conducted to improve the field.

Keywords:

YOLO; traffic sign detection and recognition; road accidents; systematic literature review; object detection; computer vision

MSC:

68T07; 68T40; 68T45

1. Introduction

Road Traffic Accidents (RTA) are among the leading causes of damage, injuries, and deaths worldwide [1]. These accidents are events that occur on roads and highways involving vehicles. They can be attributed to various factors, including human error, environmental conditions, technical malfunctions, or a combination of these. Furthermore, the World Health Organization (WHO) [2] indicates that road traffic injuries ranked eighth among the causes of death worldwide in 2018, accounting for 2.5% of all deaths. Based on data from 2015, the WHO also estimates that around 1.25 million road traffic deaths may occur annually.

In the United States, approximately 12.15 million vehicles were involved in crashes in 2019. The number of road accidents per one million inhabitants in this country is forecast to decline in the next few years, reaching just over 7100 in 2025 [3]. In Europe, between 2010 and 2020, the number of road deaths decreased by 36%. Compared to 2019, when there were 22,800 fatalities, 4000 fewer people lost their lives on EU roads in 2020 [4].

According to Yu et al. [1], many studies have focused on traffic safety, including traffic accident analysis, vehicle collision detection, collision risk warning, and collision prevention. In addition, several intelligent systems have been proposed that specialize in traffic sign detection and recognition using computer vision (CV) and deep learning (DL). In this context, one of the most popular technologies is the YOLO object detection algorithm [5,6,7,8,9,10,11,12,13].

This article presents an SLR on the detection and recognition of traffic signs using the YOLO object detection algorithm. In this context, a traffic sign serves as a visual guide to convey information about road conditions, potential hazards, and other essential details for safe road navigation [14]. Meanwhile, YOLO, a model based on convolutional neural networks, is specifically designed for object detection [6]. This algorithm has been selected because of its competitiveness compared to other methods based on DL, with respect to processing speed on GPUs, high performance rates regarding the most critical metrics, and simplicity [15,16,17].

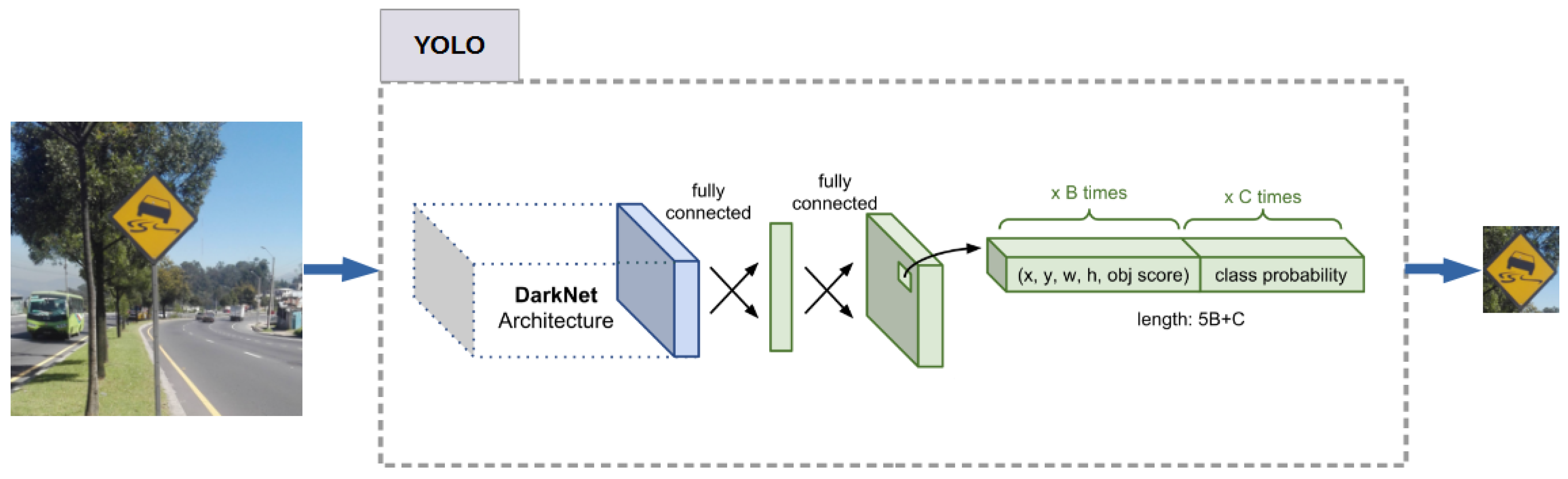

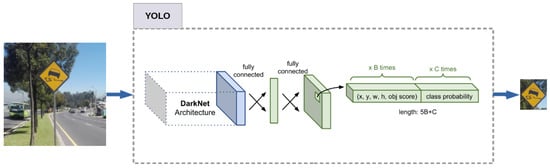

Figure 1 shows the global scheme of this type of system. An input image captured with a camera is fed to the YOLO object detection algorithm, and through a deep convolutional neural network it detects objects and outputs isolated traffic signs when appropriate. Subsequently, it provides pertinent information to the driver (or the autonomous driving system) to make driving safer, more efficient, and comfortable.

Figure 1. General scheme of a traffic sign detection and recognition system using the YOLO object detection algorithm. The central image has been taken from [18].

The main contributions of this SLR are to gather evidence to answer the following five research questions (RQs): (1) what are the main applications of traffic sign detection and recognition using YOLO? (2) What traffic sign datasets are used to train, validate, and test these systems? (3) What metrics are used to measure the quality of object detection in the context of traffic sign detection and recognition using YOLO? (4) What hardware is used to implement traffic sign recognition and detection systems based on YOLO? (5) What are the problems and challenges encountered in the detection and recognition of traffic signs using YOLO?

The remainder of the paper is organized as follows. This initial section outlines the primary objective of this study. Section 2 details the materials and methods used in this review. Section 3 focuses on the results of this study. Section 4 presents the research and practical implications. Finally, the last section is devoted to conclusions and future work.

2. Materials and Methods

This section provides details and guidelines essential for executing a targeted SLR focused on the detection and recognition of traffic signs, employing the YOLO object detection algorithm.

2.1. Traffic Signs

Traffic signs are visual cues and symbols that are placed on public roads to warn, inform, order, or regulate the behavior of road users, especially in densely populated and busy urban areas. They contain a simple visual symbolic language so that the driver can interpret and instantly obtain information from the road for safe driving [5].

Traffic signs are typically made of reflective materials that are visible at night and under low-light conditions. The reflective design not only enhances safety by facilitating night-time visibility but also ensures that drivers can easily discern and understand the intended messages. Each sign conveys a unique message and is distinguished by shape, color, and size, aligning with specific road directives and offering drivers accurate and effective communication, contributing to an overall safer and well-regulated traffic environment [14].

Among the multitude of characteristics, two stand out, namely shape and color, from which traffic signs can be grouped into three types: prohibitive, preventive, and informative [19,20]. Prohibitive (or regulatory) signs inform drivers of the restrictions they must comply with; they are often circular and red in color. Preventive (or warning) signs alert drivers to possible dangers on the road and are generally yellow diamonds. Informative (or indicative) signs are designed to assist drivers in navigation tasks. Typically, these signs are rectangular and colored green or blue, providing essential information for route guidance.

The visual appearance of traffic signs varies significantly from country to country, which makes it difficult for classification systems to generalize. This is a drawback for the development of global traffic sign detection and recognition systems and restricts many of them to certain types of signs or countries [19]. Other challenging conditions are illumination changes, occlusions, perspectives, weather conditions, aging, blur, and human artifacts. Under such extreme conditions, existing methods struggle to complete the detection task efficiently.

2.2. YOLO Object Detection Algorithm

YOLO is a state-of-the-art technology developed for object detection based on DL, with emphasis on real-time and high-accuracy applications [21]. In YOLO, object detection is treated as a regression problem in which candidate bounding boxes, their categories, and their confidence scores are generated directly by regression. The final detection result is determined by thresholding the confidence score and applying non-maximum suppression [6].
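The post-processing step described above can be illustrated with a minimal sketch in plain Python. It assumes boxes in `(x1, y1, x2, y2)` pixel coordinates and uses illustrative thresholds of 0.5; the function names are hypothetical, and the suppression shown is class-agnostic, whereas practical detectors often apply it per class.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed format


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(
    boxes: Sequence[Box],
    scores: Sequence[float],
    conf_threshold: float = 0.5,  # illustrative value, not from the source
    iou_threshold: float = 0.5,   # illustrative value, not from the source
) -> List[int]:
    """Return indices of boxes kept after thresholding and greedy NMS."""
    # Step 1: discard low-confidence candidates.
    candidates = [i for i, s in enumerate(scores) if s >= conf_threshold]
    # Step 2: visit the remaining candidates from highest to lowest score.
    candidates.sort(key=lambda i: scores[i], reverse=True)
    kept: List[int] = []
    for i in candidates:
        # Keep a box only if it does not overlap too much with a kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in kept):
            kept.append(i)
    return kept


boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140), (200, 200, 220, 220)]
scores = [0.90, 0.80, 0.75, 0.30]
kept = filter_detections(boxes, scores)
print(kept)  # [0, 2]
```

In this toy input, the second box overlaps the first almost entirely and is suppressed, while the fourth falls below the confidence threshold.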

The primary advantage of YOLO lies in its capability for real-time image processing, making it well-suited for applications like autonomous vehicles and Advanced Driver Assistance Systems (ADAS) [18]. Moreover, YOLO achieves state-of-the-art accuracy with limited training data, surpassing other methods. Additionally, its ease of implementation and user-friendliness contribute to its popularity in the field of computer vision (CV).

In contrast, several authors have identified some limitations and handicaps. One important limitation is that YOLO struggles to detect small objects. The algorithm divides an image into grid cells and detects objects within these cells. Objects smaller than these predefined areas may be missed by the algorithm. Another problem is that YOLO does not consider the semantics of the image, ignoring the meaning of the visual data. Certain versions of YOLO are pre-trained using academic datasets, not considering real data that may be blurry, contain obstructed objects, and generally have a low resolution [22].
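To make the grid-cell limitation concrete, the following sketch maps an object's center to the grid cell that contains it and compares the object's area with the area of one cell. The 448 × 448 input and the 7 × 7 grid are illustrative assumptions, and the function names are hypothetical.

```python
from typing import Tuple


def responsible_cell(cx: float, cy: float, img_w: int, img_h: int, grid: int = 7) -> Tuple[int, int]:
    """Return (row, col) of the grid cell that contains the point (cx, cy)."""
    col = min(int(cx / img_w * grid), grid - 1)  # clamp points on the right edge
    row = min(int(cy / img_h * grid), grid - 1)  # clamp points on the bottom edge
    return row, col


def fraction_of_cell(box_w: float, box_h: float, img_w: int, img_h: int, grid: int = 7) -> float:
    """Ratio between the area of an object and the area of one grid cell."""
    cell_w, cell_h = img_w / grid, img_h / grid
    return (box_w * box_h) / (cell_w * cell_h)


# Two small, nearby signs (16 x 16 pixels) in a 448 x 448 image.
sign_a = responsible_cell(100, 200, 448, 448)
sign_b = responsible_cell(110, 210, 448, 448)
print(sign_a, sign_b)                      # (3, 1) (3, 1)
print(fraction_of_cell(16, 16, 448, 448))  # 0.0625
```

Both signs fall into the same cell and each covers only a small fraction of it, which is the situation in which a grid-based detector with few predictions per cell is most likely to miss one of them.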

The first models were developed by Redmon et al. [21] and Redmon and Farhadi [23,24]. The first version appeared in 2016 and used the convolutional neural network Darknet [25]; the second, known as YOLO9000 [23], followed in 2017 and used Darknet-19; and the series by these authors ended in 2018 with YOLOv3 [24], which used Darknet-53. The fourth version, by Bochkovskiy et al. [26,27], was released in April 2020 and uses CSPDarknet-53. The fifth version was released in May 2020 by Jocher and the company Ultralytics [28]; this variant uses CSPNet as its backbone network. YOLOv5 comes in several model sizes: YOLOv5s, YOLOv5m, YOLOv5l, and YOLOv5x.

It is important to mention that this research has only considered the five fundamental versions of the YOLO object detection algorithm. Other variants, such as the tiny YOLO models, have been grouped under the original model from which they derive.

2.3. State of the Art

Presently, there is a limited corpus of literature specifically addressing the detection and recognition of traffic signs through systematic review or survey methodologies. Nonetheless, noteworthy contributions exist, particularly those investigating machine learning methods and their integration into ADAS [18] or autonomous vehicle devices.

Liu et al. [5] have presented a study in which traffic sign detection methods are grouped into five categories: color-based methods, shape-based methods, color- and shape-based methods, machine-learning-based methods, and LIDAR-based methods. They have also shown that mobile laser scanning technology has experienced significant growth in the last five years and has been a key solution in many ADAS. Interestingly, the prevalence of YOLO-based systems is scarcely acknowledged in their findings.

Wali et al. [29] have indicated that the investigation of automatic traffic sign detection and recognition systems is a crucial research area aimed at advancing CV-based ADAS. Their article also provides a comprehensive examination of methods for detecting, classifying, and tracking traffic signs using machine learning. Notably, the use of YOLO is not explicitly mentioned.

Borrego-Carazo et al. [30] have conducted a systematic review of machine learning techniques and embedded systems for implementing ADAS on mobile devices. Their review touches only marginally on traffic sign recognition within machine-learning methodologies and makes no reference to the YOLO algorithm, a notable omission in the contemporary discourse on such systems.

Muhammad et al. [31] have conducted a comprehensive analysis of the challenges and future directions of DL applications for autonomous vehicle development. Within this framework, particular emphasis is placed on traffic sign detection systems, acknowledged as pivotal components in the evolution of future vehicles. The YOLO algorithm receives substantial attention in that work, especially concerning its application to traffic light detection.

Finally, Diwan et al. [16] have presented the fundamental architectures, applications, and challenges associated with various object detectors based on DL neural networks. The authors posit that YOLO shows notable superiority in terms of both detection accuracy and inference time. However, the study lacks specific information on YOLO-based traffic sign detection systems.

2.4. Systematic Literature Review Methodology

This section presents an overview of planning, conducting, and reporting a review following the SLR process outlined in [32].

An SLR is used to identify, select, and systematically evaluate all relevant evidence on a specific topic. The SLR process searches various databases and sources of information, using rigorous selection criteria to identify relevant studies and assess their quality. An SLR is considered a rigorous and objective way of summarizing and analyzing existing evidence on a topic and is frequently used in areas such as health and social sciences to inform research and decision-making.

SLRs have applications in different fields of engineering. For example, they can help engineers identify the latest trends and developments in a specific field, analyze the effectiveness of different techniques and approaches used in previous projects, and identify potential problems or challenges in a particular engineering area. SLRs can also help compare different studies and assess the quality of existing evidence on a specific topic. Overall, SLRs are a valuable tool for engineering research and decision-making [33].

The review protocol followed in this investigation consists of six stages: defining the research questions, designing a search strategy, selecting the studies, measuring the quality of the articles, extracting the data, and synthesizing the data [33].

First, we developed a set of research questions based on the objective of this SLR. Next, we designed a search strategy to find studies relevant to these questions, which involved defining the scientific databases and the search terms to be used. In the third stage, we specified the criteria for including articles that address the research questions and for discarding the rest; as part of this phase, pilot studies were conducted to establish the inclusion and exclusion criteria more effectively. In the fourth stage, the quality of the articles was quantified to determine whether they met the minimum standards for inclusion in the review. The last two stages covered data extraction and synthesis. During data extraction, a pilot process was also performed to determine which data to extract and how to store them for subsequent synthesis. Finally, in the synthesis stage, we decided on strategies to generate syntheses depending on the type of data analyzed [34].

Following an SLR protocol is vital to ensure rigor and reproducibility and to minimize investigator bias. The remainder of this section presents the review protocol in detail [34].

2.5. Research Questions

The RQs are derived from the general objective of the investigation and structure the literature search, determining the selection criteria for including or excluding studies in the review. The RQs are also used to guide the interpretation and analysis of the review results. The following are the RQs formulated in this investigation.

RQ1 [Applications]: What are the main applications of traffic sign detection and recognition using YOLO?

Knowing the applications of traffic sign detection helps researchers and developers identify areas of opportunity and challenges in the field of CV. This can lead to new advances and improvements in traffic sign detection technology. Additionally, knowing the applications of traffic sign detection can also be useful for users of the technology as it provides a better understanding of how traffic sign detection can be employed.

RQ2 [Datasets]: What traffic sign datasets are used to train, validate, and test these systems?

For several reasons, it is essential to know the datasets used to validate the detection and recognition of traffic signs. First, the datasets provide a source of information to evaluate the accuracy and effectiveness of detection methods. Second, datasets also allow us to observe the behavior of recognition systems in different situations and with different types of data, which allows us to improve and optimize the application. Furthermore, knowledge of the datasets makes it possible to identify potential problems or errors in an application and correct them before it is launched on the market.

RQ3 [Metrics]: What metrics are used to measure the quality of object detection in the context of traffic sign detection and recognition using YOLO?

Performance metrics to measure the accuracy of object detection are crucial for the proper functioning of traffic sign detection and recognition systems. If object detection is not accurate, these systems may not function properly and may even be dangerous to humans. Additionally, these performance measures are useful to compare different object detection systems and algorithms and determine which one is the most suitable for a particular application. Finally, knowing appropriate metrics allows one to identify errors in an object detection system and to develop solutions to improve its accuracy.
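As a hedged illustration of how detection quality can be quantified, the sketch below matches predicted boxes to ground-truth boxes by intersection over union (IoU) and derives precision, recall, and F1 score. The choice of these three metrics, the 0.5 IoU threshold, and all function names are assumptions made for the example; class labels are ignored for brevity.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed format


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_matches(
    predictions: List[Tuple[Box, float]],  # (box, confidence score)
    ground_truth: List[Box],
    iou_threshold: float = 0.5,  # illustrative value
) -> Tuple[int, int, int]:
    """Greedy one-to-one matching; returns (TP, FP, FN)."""
    unmatched = list(range(len(ground_truth)))
    tp = fp = 0
    # Higher-confidence predictions get the first chance to claim a ground truth.
    for box, _score in sorted(predictions, key=lambda p: p[1], reverse=True):
        best = max(unmatched, key=lambda g: iou(box, ground_truth[g]), default=None)
        if best is not None and iou(box, ground_truth[best]) >= iou_threshold:
            tp += 1
            unmatched.remove(best)
        else:
            fp += 1
    return tp, fp, len(unmatched)


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall, and F1 score from match counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


ground_truth = [(10, 10, 50, 50), (100, 100, 140, 140)]
predictions = [((12, 12, 52, 52), 0.9), ((300, 300, 340, 340), 0.6)]
counts = count_matches(predictions, ground_truth)
print(counts)                        # (1, 1, 1)
print(precision_recall_f1(*counts))  # (0.5, 0.5, 0.5)
```

One prediction matches a sign, one is a false alarm, and one sign is missed, so each of the three metrics evaluates to 0.5.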

RQ4 [Hardware]: What hardware is used to implement traffic sign recognition and detection systems based on YOLO?

Hardware is a crucial element in traffic sign recognition systems as it provides the processing and storage capacity needed to analyze the video images captured by the cameras. Without the right hardware, these systems would not be able to detect and track objects in real time. Hardware also plays an important role in the speed and efficiency of traffic sign recognition and detection systems, as more powerful hardware can process and analyze images faster.

RQ5 [Challenges]: What are the problems and challenges encountered in the detection and recognition of traffic signs using YOLO?

Being aware of the problems and challenges of traffic sign detection and recognition using YOLO helps researchers and developers to identify areas where the technology needs to be improved. Also, knowing these problems and challenges enables better understanding of its limitations and potential drawbacks. This helps to make informed decisions about the appropriate use of the technology mentioned above.

2.6. Search Strategy

This section presents the steps used to identify, select, and evaluate relevant studies for inclusion in the review. It includes a description of databases and sources of information, keywords, and inclusion and exclusion criteria applied to identify relevant studies.

2.6.1. Databases of Digital Library

In the context of an SLR, a database is a collection of published research articles that can be searched using specific keywords. These databases are typically used to identify relevant studies to be included in the review. The selected databases for this study are IEEE Xplore, MDPI, Plos, Science Direct, Wiley, Sage, Hindawi Publishing Group, Taylor & Francis, and Springer Nature, all of which contain articles indexed in Web of Science (WoS) and/or Scopus.

WoS serves as a robust research database and citation index, offering a widely utilized platform that grants access to an extensive array of scholarly articles, conference proceedings, and various research materials. Conversely, Scopus, produced by Elsevier Co., is an abstract and indexing database with embedded full-text links.

Furthermore, IEEE Xplore, MDPI, Plos, Science Direct, Wiley, Sage, Hindawi Publishing Group, Taylor & Francis, and Springer Nature are renowned digital libraries. These platforms provide access to an expansive collection of scientific and technical content, encompassing disciplines such as electrical engineering, computer science, electronics, and other related fields.

2.6.2. Timeframe of Study

This study encompasses documents published from 2016 through the end of 2022, extending to early publications of 2023. The selection of 2016 as the starting point aligns with the initial release of the YOLO algorithm.

2.6.3. Keywords

The primary keywords used to search for relevant studies are YOLO, traffic sign, recognition, detection, identification, and object detection.

Later on, the search strings formulated with these keywords will be presented for each digital library.

2.6.4. Inclusion and Exclusion Criteria

To ensure that the SLR concentrates on high-quality, relevant studies, specific inclusion and exclusion criteria are defined.

Inclusion Criteria:

- Studies must evaluate traffic sign detection or recognition using the YOLO object detection algorithm.

- Only studies published between 2016 and 2022 are considered.

- The study should be published in a peer-reviewed journal or conference proceedings.

- Preference is given to documents categorized as “Journal” or “Conference” articles.

- The study must be in English.

Exclusion Criteria:

- Studies that do not utilize the YOLO object detection algorithm for traffic sign detection or recognition.

- Research not focused on traffic sign detection or recognition.

- Publications outside the 2016–2022 timeframe.

- Non-peer-reviewed articles and documents.

- Studies published in languages other than English.
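The criteria above can be read as a single predicate applied to each candidate record. The following sketch assumes a hypothetical `Study` record whose field names are invented for illustration; it follows the 2016–2022 window stated in the list and does not model the "preference" criterion, which is not a hard filter.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Study:
    """Hypothetical screening record; field names are assumptions."""
    title: str
    year: int
    language: str
    peer_reviewed: bool
    uses_yolo: bool
    about_traffic_signs: bool


def is_included(study: Study) -> bool:
    """True if the record satisfies every inclusion criterion listed above."""
    return (
        study.uses_yolo
        and study.about_traffic_signs
        and 2016 <= study.year <= 2022
        and study.peer_reviewed
        and study.language == "English"
    )


# Made-up records used only to exercise the predicate.
candidates: List[Study] = [
    Study("A", 2019, "English", True, True, True),
    Study("B", 2015, "English", True, True, True),   # outside the timeframe
    Study("C", 2021, "English", False, True, True),  # not peer reviewed
    Study("D", 2020, "English", True, False, True),  # does not use YOLO
    Study("E", 2022, "Spanish", True, True, True),   # not in English
]
selected = [s.title for s in candidates if is_included(s)]
print(selected)  # ['A']
```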

2.6.5. Study Selection

This section outlines the search and selection methodology used in this study, detailing the progression from the initial number of articles identified to the final selection of studies included in the review.

In the initial phase, a keyword-based search across nine bibliographic databases yielded a total of 594,890 documents. The search strings employed, along with the number of articles identified in each digital library, are detailed in Table 1.

Table 1. Search strings formulated with the keywords for each digital library.

The search was then narrowed to include only publications from 2016 to 2022, with early access articles from 2023 also considered. This refinement resulted in the exclusion of 369,215 documents. Further filtering focused on documents classified under ‘Journals’ or ‘Conferences’, leading to the elimination of an additional 15,020 documents. Priority was given to articles employing YOLO as the primary detection method, which further narrowed the field by excluding 209,378 documents. After these initial stages, 1277 documents remained across the nine databases. Together with 171 articles specifically sourced from WoS and Scopus, this gave a pool of 1448 documents.

A thorough curation process followed. This involved removing duplicates (755 documents), and applying stringent inclusion and exclusion criteria, which led to the further removal of 364 documents due to keyword-related issues, 177 for abstract-related discrepancies, and 37 for lack of sufficient information. The culmination of this meticulous process resulted in a final selection of 115 documents, which comprise the corpus for analysis in this SLR.
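The counts reported in this subsection can be checked with simple arithmetic. The sketch below assumes that the 171 articles sourced from WoS and Scopus are part of the 1448 documents that entered the curation process; this reading is inferred from the figures, which only add up under that assumption. The variable names are illustrative.

```python
# Identification and screening across the nine digital libraries.
identified = 594_890
excluded_by_year = 369_215    # outside 2016-2022 (plus early access 2023)
excluded_by_type = 15_020     # not classified as journal or conference
excluded_not_yolo = 209_378   # YOLO is not the primary detection method
from_wos_scopus = 171         # sourced specifically from WoS and Scopus

after_screening = identified - excluded_by_year - excluded_by_type - excluded_not_yolo
pooled = after_screening + from_wos_scopus  # assumed to be the reported 1448

# Curation of the pooled documents.
duplicates = 755
keyword_issues = 364
abstract_issues = 177
insufficient_info = 37

final = pooled - duplicates - keyword_issues - abstract_issues - insufficient_info
print(after_screening, pooled, final)  # 1277 1448 115
```

Under this reading, the funnel ends at the 115 primary studies analyzed in the review.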

2.7. Data Extraction

The data extraction step in an SLR is the process of collecting relevant information from the primary studies that meet the inclusion and exclusion criteria. The purpose of this step is to synthesize and analyze the data to answer the research questions and validate the results of the SLR.

To determine YOLO’s practical applications, we applied a rigorous evaluation framework, guided by experts. This approach revealed three main domains: road safety, ADAS, and autonomous driving. When an article did not explicitly mention its application domain, we conducted a thorough analysis to deduce the specific context.

To identify relevant datasets, a comprehensive keyword search for ‘data set’ was performed within each article. Upon locating a dataset, its relevance was carefully evaluated before meticulously recording it in the extraction table.

Furthermore, a rigorous review of each article was undertaken to pinpoint and document the specific metrics utilized. These metrics were then systematically integrated into the extraction table, ensuring comprehensive documentation and analysis.

Additionally, we conducted a thorough examination of the hardware configurations employed in each study, systematically recording these details in the extraction table. This approach facilitated the identification of prevalent and frequently used hardware components.

To delineate the challenges intrinsic to YOLO technology, we applied an expert-defined criterion, outlining seven distinct categories: variations in lighting conditions, adverse weather conditions, partial occlusion, signs with visible damage, complex scenarios, sub-optimal image quality, and region-specific concerns. In numerous instances, the challenge addressed remains implicit, necessitating a detailed analysis of each article to deduce it.

2.8. Data Synthesis

The data synthesis step in SLR is the process of gathering and interpreting the relevant data extracted from the selected studies to answer the research questions of the SLR.

First, we categorized real-world YOLO applications, providing a clear overview of deployment areas. Next, we aggregated and cataloged referenced datasets, noting their specific characteristics and providing a comprehensive view of data sources. The metrics employed in various studies were synthesized, revealing prevalent evaluation methodologies. Also, hardware configurations were analyzed, uncovering prevailing trends and supporting the technological landscape. Moreover, challenges in YOLO technology were categorized, shedding light on limitations and areas for improvement.
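One simple way to carry out this kind of aggregation is a frequency count over the rows of the extraction table. The sketch below is only an illustration: the row layout, the study identifiers, and the values are invented placeholders and do not reproduce any result of this review.

```python
from collections import Counter
from typing import Dict, List

# Invented extraction-table rows (placeholders, not data from the review).
rows: List[Dict[str, object]] = [
    {"study": "S1", "application": "ADAS", "datasets": ["GTSDB"]},
    {"study": "S2", "application": "autonomous driving", "datasets": ["TT100K", "CCTSDB"]},
    {"study": "S3", "application": "ADAS", "datasets": ["GTSDB", "TT100K"]},
    {"study": "S4", "application": "road safety", "datasets": ["GTSDB"]},
]


def tally(table: List[Dict[str, object]], field: str) -> Counter:
    """Count how many studies mention each value of the given field."""
    counts: Counter = Counter()
    for row in table:
        value = row[field]
        # A field may hold a single value or a list of values.
        counts.update(value if isinstance(value, list) else [value])
    return counts


applications = tally(rows, "application")
datasets = tally(rows, "datasets")
print(applications.most_common(1))  # [('ADAS', 2)]
print(datasets.most_common(2))      # [('GTSDB', 3), ('TT100K', 2)]
```

The same tally can be repeated for the metrics, hardware, and challenge columns to surface the most frequent entries in each category.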

This synthesis process was crucial in distilling key findings and trends from the extensive literature, offering a nuanced understanding of YOLO technology and its real-world applications.

3. Results Based on RQs

In this section, a critical analysis is performed on 115 primary studies based on the previously described RQs and five different aspects: applications, sign datasets, metrics, hardware, and challenges.

3.1. RQ1 [Applications]: What Are the Main Applications of Traffic Sign Detection and Recognition Using YOLO?

The search revealed three main applications of YOLO: road safety, ADAS, and autonomous driving.

Road Safety: Road safety refers to the efforts used to reduce the likelihood of collisions and protect road users. This includes different efforts and legislation aimed at encouraging safe driving practices, improving road infrastructure, monitoring road conditions, identifying road dangers, and improving traffic management and vehicle safety [35,36,37,38,39,40,41,42,43].

For example, YOLO can be implemented in traffic cameras to identify and evaluate congestion, traffic flow, and accidents, which can inform decision-making to improve traffic management and reduce the probability of accidents. Furthermore, YOLO can be integrated with intelligent transportation systems to monitor the movements of pedestrians and cyclists and improve road safety for non-motorized road users.

ADAS: Advanced Driver Assistance Systems (ADAS) are technologies designed to enhance road safety and the driving experience. They utilize a combination of sensors, cameras, and advanced algorithms to aid drivers in various driving tasks [30,44].

ADAS can utilize YOLO to detect and recognize objects in real-time video streams [6]. In this context, YOLO can be applied to detect and recognize traffic signs [7,8,9,10,18,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86].

Autonomous Driving: Autonomous driving is significantly dependent on CV technologies to perceive and evaluate the driving environment. Using cameras and algorithms, CV systems provide relevant information to autonomous vehicles about their surroundings, such as the position and behavior of other cars, pedestrians, and transport networks. These data are used for decision-making, vehicle control, and safe road navigation. To develop autonomous driving systems, a vehicle should generally be equipped with numerous sensors and communication systems [11,12,13,87,88,89,90,91,92,93,94,95,96,97,98,99,100,101,102,103,104,105,106,107,108,109,110,111,112,113,114,115,116,117,118,119,120,121,122,123,124,125,126,127,128,129,130,131,132,133,134,135,136].

3.2. RQ2 [Datasets]: What Traffic Sign Datasets Are Used to Train, Validate, and Test These Systems?

Traffic sign datasets are collections of images that contain traffic signs and their annotations. They are used for training, validating, and testing different proposed traffic sign detection and recognition systems.

There are many traffic sign datasets that have been created and used by researchers and practitioners. Some of them are publicly available, while others are private, i.e., restricted to the scientific community. The main datasets that have been used are as follows:

- The German Traffic Sign Detection Benchmark (GTSDB) and the German Traffic Sign Recognition Benchmark (GTSRB): The GTSDB and GTSRB datasets are two popular resources for traffic sign recognition research. They contain high-quality images of various traffic signs taken in real-world scenarios in Germany. The images cover a wide range of scenery, times of day, and weather conditions, making them suitable for testing the robustness of recognition algorithms. The GTSDB dataset consists of 900 images, split into 600 for training and 300 for validation. The GTSRB dataset is larger, with more than 50,000 images of 43 classes of traffic signs, such as speed limit signs, stop signs, yield signs, and others. Images are also annotated with bounding boxes and class labels. Both datasets are publicly available and have been used in several benchmarking studies [7,13,37,38,40,46,47,50,52,53,55,58,59,60,63,64,65,66,68,69,70,71,72,77,80,82,92,96,101,103,104,106,109,112,114,115,117,118,125,127,129,130,136,137].

- Tsinghua Tencent 100K (TT100K): The TT100K dataset is a large-scale traffic sign benchmark created by Tsinghua University and Tencent. It consists of 100,000 images from Tencent Street View panoramas, which contain 30,000 traffic sign instances. The images vary in lighting and weather conditions, and each traffic sign is annotated with its class label, bounding box, and pixel mask. The dataset is publicly available and is suitable for both traffic sign detection and classification tasks in realistic scenarios [35,38,41,45,51,65,67,80,84,94,95,102,105,108,109,111,113,121,124,128,131,133,134,135].

- Chinese Traffic Sign Dataset (CTSDB and CCTSDB): The CTSDB and CCTSDB datasets are two large-scale collections of traffic sign images for CV research. The CTSDB dataset consists of 10,000 images captured from different scenes and angles, covering a variety of types and shapes of traffic signs. The CCTSDB dataset is an extension of the CTSDB dataset, with more than 20,000 images that contain approximately 40,000 traffic signs. The CCTSDB dataset also includes more challenging scenarios, such as occlusion, illumination variation, and scale change [8,18,36,39,60,65,68,81,82,83,85,87,108,116,130,132,135,136,138].

- Belgium Traffic Sign Detection Benchmark and Belgium Traffic Sign Classification Benchmark (BTSDB and BTCDB): The BTSDB dataset, specifically designed for traffic sign detection in Belgium, comprises a total of 7095 images, divided into 4575 training images and 2520 testing images. Image sizes span from 11 × 10 pixels to 562 × 438 pixels. The Belgium Traffic Sign Classification Benchmark is a dataset of traffic sign images collected from eight cameras mounted on a van. It contains 62 types of traffic signs and is divided into training and testing sets. The dataset is useful for evaluating traffic sign recognition algorithms, which are essential for intelligent transport systems and autonomous driving. It also provides annotations, background images, and test sequences for further analysis [55,78,101,123].

- Malaysian Traffic Sign Dataset (MTSD): The MTSD includes a variety of traffic sign scenes for traffic sign detection, with 1000 images at different resolutions (FHD, 4K-UHD, and UHD+). It also has 2056 images of traffic signs, divided into five categories, for recognition [11,118].

- Korea Traffic Sign Dataset (KTSD): This dataset has been used to train and test various deep learning architectures, such as YOLOv3 [57], to detect three different classes of traffic signs: prohibitory, mandatory, and danger. The KTSD contains 3300 images of various traffic signs, captured from different roads throughout South Korea. These images feature traffic signs of varying sizes, providing a diverse and comprehensive dataset for traffic sign detection and recognition research [57,59,64].

- Berkeley DeepDrive (BDD100K): The Berkeley DeepDrive (BDD) project has released a large-scale and diverse driving video dataset called BDD100K. It contains 100,000 videos with rich annotations to evaluate the progress of image recognition algorithms on autonomous driving. The dataset is available for research purposes and can be downloaded from the BDD website (https://bdd-data.berkeley.edu/, accessed on 12 April 2023). The images in the dataset are divided into two sets: one for training and one for validation. The training set contains 70% of the images, while the validation set contains the remaining 30% [55,90].

- Thai (Thailand) Traffic Sign Dataset (TTSD): The data were collected in the rural areas of Maha Sarakham and Kalasin Provinces in the Kingdom of Thailand. The dataset encompasses 50 distinct classes of traffic signs, each comprising 200 unique instances, and comprises a total of 9357 images [101,126].

- Swedish Traffic Sign Dataset (STSD): This public sign dataset comprises 20,000 images, with 20% of them labeled. Additionally, it contains 3488 traffic signs from Sweden [104].

- DFG Traffic Sign Dataset (DFG): The DFG dataset comprises approximately 7000 traffic sign images captured from highways in Slovenia. These images have a resolution of pixels. To facilitate training and evaluation, the dataset is divided into two subsets, with 5254 images designated for training and the remaining 1703 images for validation. The dataset features a total of 13,239 meticulously annotated instances in the form of polygons, each spanning over 30 pixels. Additionally, there are 4359 instances with less precise annotations represented as bounding boxes, measuring less than 30 pixels in width [12].

- Taiwan Traffic Sign Dataset (TWTSD): The TWTSD dataset comprises 900 prohibitory signs from Taiwan with a resolution of pixels. The data are divided into training and validation subsets of 70% and 30%, respectively [75].

- Taiwan Traffic Sign (TWSintetic): The Taiwan Traffic Sign (TWSintetic) dataset is a collection of traffic signs from Taiwan, consisting of 900 images, and it has been expanded using generative adversarial network techniques [9].

- Belgium Traffic Signs (KUL): The KUL dataset encompasses over 10,000 images of traffic signs from the Flanders region in Belgium, categorized into more than 100 distinct classes [89].

- Chinese Traffic Sign Detection Benchmark (CSUST): The CSUST dataset comprises over 15,000 images and is continuously updated to incorporate new data [8].

- Foggy Road Image Database (FRIDA): The Foggy Road Image Database (FRIDA) contains 90 synthetic images from 18 scenes depicting various urban road settings. In contrast, FRIDA2 offers an extended collection, with 330 images derived from 66 road scenes. For each clear image, there are corresponding counterparts featuring four levels of fog and a depth map. The fog variations encompass uniform fog, heterogeneous fog, foggy haze, and heterogeneous foggy haze [71,114].

- Foggy ROad Sign Images (FROSI): The FROSI is a database of synthetic images easily usable to evaluate the performance of road sign detectors in a systematic way in foggy conditions. The database contains a set of 504 original images with 1620 road signs (speed and stop signs, pedestrian crossing) placed at various ranges, with ground truth [71,114].

- MarcTR: This dataset contains seven traffic sign classes, collected using a ZED stereo camera mounted on top of a Racecar mini autonomous car [79].

- Turkey Traffic Sign Dataset: The Turkey Traffic Sign Dataset is an essential resource for the development of traffic and road safety technologies, specifically tailored for the Turkish environment. It comprises approximately 2500 images, including a diverse range of traffic signs, pedestrians, cyclists, and vehicles, all captured under real-world conditions in Turkey [77].

- Vietnamese Traffic Sign Dataset: This comprehensive dataset encompasses 144 classes of traffic signs found in Vietnam, categorized into four distinct groups for ease of analysis and application. These include 40 prohibitory or restrictive signs, 47 warning signs, 10 mandatory signs, and 47 indication signs, providing a detailed overview of the country’s traffic sign system [76].

- Croatia Traffic Sign Dataset: This dataset consists of 28 video sequences at 30 FPS with a resolution of pixels. They were taken in the urban traffic of the city of Osijek, Croatia [10].

- Mexican Traffic Sign Dataset: The dataset consists of 1284 RGB images, featuring a total of 1426 traffic signs categorized into 11 distinct classes. These images capture traffic signs from a variety of perspectives, sizes, and lighting conditions, ensuring a diverse and comprehensive collection. The traffic sign images were sourced from a range of locations including avenues, roadways, parks, green areas, parking lots, and malls in Ciudad Juárez, Chihuahua, and Monterrey, Nuevo Leon, Mexico, providing a broad representation of the region’s signage [43].

- WHUTCTSD: It is a more recent dataset with five categories of Chinese traffic signs, including prohibitory signs, guide signs, mandatory signs, danger warning signs, and tourist signs. Data were collected by a camera at a pixel resolution during different time periods. It consists of 2700 images, which were extracted from videos collected in Wuhan, Hubei, China [62].

- Bangladesh Road Sign 2021 (BDRS2021): This dataset consists of 16 classes. Each class consists of 168 images of Bangladesh road signs [69], offering a rich source of data that capture the specific traffic sign environment of Bangladesh, including its urban, rural, and varied geographical landscapes.

- New Zealand Traffic Sign 3K (NZ-TS3K): This dataset is a specialized collection focused on traffic sign recognition in New Zealand [70]. It features over 3000 images, showcasing a wide array of traffic signs commonly found across the country. These images are captured in high resolution (1080 by 1440 pixels), providing clear and detailed visuals essential for accurate recognition and analysis. The dataset is categorized into multiple classes, each representing a different type of traffic sign. These include Stop (236 samples), Keep Left (536 samples), Road Diverges (505 samples), Road Bump (619 samples), Crosswalk Ahead (636 samples), Give Way at Roundabout (533 samples), and Roundabout Ahead (480 samples), offering a diverse range of signs commonly seen on Auckland’s roads.

- Mapillary Traffic Sign Dataset (MapiTSD): The Mapillary Traffic Sign Dataset is an expansive and diverse collection of traffic sign images, sourced globally from the Mapillary platform’s extensive street-level imagery. It features millions of images from various countries, each annotated with automatically detected traffic signs. This dataset is characterized by its wide-ranging geographic coverage and diversity in environmental conditions, including different lighting, weather, and sign types. Continuously updated, it provides a valuable up-to-date resource for training and validating traffic sign recognition algorithms [38].

- Specialized Research Datasets: These datasets consist of traffic sign data compiled by various authors. Generally, they lack detailed public information and are not openly accessible. This category includes datasets from a variety of countries: South Korea [91,103], India [49,99], Malaysia [97], Indonesia [98], Slovenia [54], Argentina [139], Taiwan [74,107,140], Bangladesh [69], and Canada [61]. Each dataset is tailored to its respective country, reflecting the specific traffic signs and road conditions found there.

- Unknown or General Databases (Unknown): This category consists of datasets for which no specific information on the traffic data is provided [22,42,64,73,86,88,93,99,100,110,119,122,141], as well as general-purpose databases such as MSCOCO [63,73,99] and KITTI [132], and data downloaded from repositories such as Kaggle [42].
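Several of the datasets listed above are described with a fixed training/validation partition, for example 70%/30% for BDD100K and TWTSD. The following minimal sketch shows one way such a partition could be produced reproducibly. The function name `split_dataset`, the seed, and the file names are hypothetical and are not taken from any of the reviewed studies.

```python
import random


def split_dataset(items, train_fraction=0.7, seed=0):
    """Shuffle a copy of `items` and split it into (train, validation) lists."""
    if not 0.0 < train_fraction < 1.0:
        raise ValueError("train_fraction must be strictly between 0 and 1")
    shuffled = list(items)
    # A seeded generator keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Hypothetical file names standing in for a 900-image dataset.
image_names = [f"img_{i:04d}.png" for i in range(900)]
train, val = split_dataset(image_names, train_fraction=0.7)
print(len(train), len(val))
```

Fixing the seed matters when results are compared across studies, since a different shuffle changes which images end up in the validation subset.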

Table 2 presents the distribution of traffic sign datasets based on their country of origin, database name, number of categories and classes, number of images, and the researchers utilizing them.

Table 2.

Summary of the traffic sign datasets extracted from scientific papers and employed in the development of traffic sign detection and recognition systems using YOLO in the period 2016–2022.

A substantial number of articles in this study utilize publicly available databases, known for their convenience in model evaluation and result comparison. MapiTSD, BDD100K, and TT100K are prominent due to their extensive image datasets, while GTSDB and GTSRB are the most cited, comprising 27.33% of the citations, followed by TT100K, with 16.15% of the citations.

3.3. RQ3 [Metrics]: What Metrics Are Used to Measure the Quality of Object Detection in the Context of Traffic Sign Detection and Recognition Using YOLO?

Various performance metrics are frequently employed to measure the efficacy of traffic sign recognition systems. This section provides a summary of the metrics utilized most frequently in the articles under consideration.

In assessing the computational efficacy and real-time performance of traffic sign detection systems and other object recognition applications, frames per second (FPS) is a commonly employed metric. FPS, detailed in Equation (1), measures the number of video frames per second that a system can process, providing valuable insight into the system’s responsiveness to changing traffic conditions. Achieving a high FPS is essential for deployment in the real world because it ensures timely and accurate responses in dynamic environments such as autonomous vehicles and traffic management systems. Researchers evaluate FPS by executing the system on representative datasets or actual video streams, taking into account factors such as algorithm complexity, hardware configuration, and frame size. FPS, when combined with other metrics such as mAP, precision, and recall, contributes to a comprehensive evaluation of the system’s overall performance, taking into account both accuracy and speed, which are crucial considerations for practical applications.
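As an illustration of how FPS might be measured in practice, the sketch below times a frame-processing function over a sequence of frames and divides the number of frames by the elapsed wall-clock time. The names `measure_fps` and `dummy_detector` are hypothetical, and the dummy function merely stands in for a YOLO forward pass; reported FPS values depend on the hardware, the frame size, and whether pre- and post-processing are included in the timing.

```python
import time


def measure_fps(process_frame, frames, warmup=0):
    """Return the number of frames processed per second of wall-clock time."""
    frames = list(frames)
    for frame in frames[:warmup]:
        process_frame(frame)  # warm-up runs are executed but not timed
    timed = frames[warmup:]
    if not timed:
        raise ValueError("no frames left to time after warm-up")
    start = time.perf_counter()
    for frame in timed:
        process_frame(frame)
    elapsed = time.perf_counter() - start
    if elapsed <= 0:
        raise RuntimeError("elapsed time too small to measure")
    return len(timed) / elapsed


def dummy_detector(frame):
    # Hypothetical stand-in for a detector; it only sums the pixel values.
    return sum(frame)


fps = measure_fps(dummy_detector, [[1, 2, 3]] * 1000, warmup=10)
print(f"{fps:.1f} FPS")
```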

Accuracy (ACC) is a fundamental and frequently used metric for evaluating traffic sign detection and object recognition systems. As specified in Equation (2), ACC is the proportion of correctly recognized instances, counting both true positives (TP) and true negatives (TN), relative to the total number of evaluated instances. It therefore indicates how well the system as a whole differentiates between the various kinds of traffic signs. ACC is widely used by researchers and practitioners to assess and compare detection algorithms and models, thereby facilitating progress in traffic sign recognition technology.
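A minimal sketch of the accuracy computation described above, assuming the four confusion counts (TP, TN, FP, FN) are already available. The function name and the example counts are hypothetical.

```python
def accuracy(tp, tn, fp, fn):
    """Share of correct decisions (TP + TN) among all evaluated instances."""
    total = tp + tn + fp + fn
    if total == 0:
        raise ValueError("at least one evaluated instance is required")
    return (tp + tn) / total


# Hypothetical counts for illustration only.
acc = accuracy(tp=90, tn=5, fp=3, fn=2)
print(f"ACC = {acc:.2%}")
```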

Precision and recall are crucial metrics for evaluating algorithms in the context of traffic sign detection and recognition. Precision quantifies the proportion of correctly identified positive instances relative to the total number of instances identified as positive. It indicates how reliable the positive predictions are and penalizes false positives. Recall, also referred to as sensitivity or true positive rate, measures the proportion of true positives relative to the total number of actual positive instances, thereby reflecting the model’s capacity to find all pertinent instances and penalizing false negatives. These complementary measures are most valuable when both correctness and completeness matter: they allow researchers to trade off the system’s capacity to produce precise predictions against its ability to avoid missed detections. Taken together, precision and recall offer a thorough assessment of a traffic sign recognition system. Their mathematical expressions are detailed in Equations (3) and (4), respectively.
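The two definitions above can be sketched as follows, using the standard forms TP / (TP + FP) for precision and TP / (TP + FN) for recall. Returning 0.0 when a denominator is zero is a convention assumed here for convenience, and the example counts are hypothetical.

```python
def precision(tp, fp):
    """Fraction of predicted positives that are correct."""
    predicted_positive = tp + fp
    # Assumed convention: report 0.0 when nothing was predicted positive.
    return tp / predicted_positive if predicted_positive else 0.0


def recall(tp, fn):
    """Fraction of actual positives that were found."""
    actual_positive = tp + fn
    # Assumed convention: report 0.0 when there are no actual positives.
    return tp / actual_positive if actual_positive else 0.0


# Hypothetical counts: 80 correct detections, 20 false alarms, 10 misses.
p = precision(tp=80, fp=20)
r = recall(tp=80, fn=10)
print(f"precision = {p:.3f}, recall = {r:.3f}")
```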

The F1 score is an important statistic in traffic sign detection and recognition because it summarizes the balance between precision and recall in a single number. It is calculated as the harmonic mean of precision and recall, so it accounts both for the correctness of positive predictions and for the system’s ability to identify all relevant cases. This statistic is particularly valuable for unbalanced datasets or disparate class distributions. The F1 score enables researchers and practitioners to assess the efficacy of a model and supports the development of robust traffic sign recognition systems that balance precision and recall. The formula representing the F1 score is as follows:

$$F1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{5}$$
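As a minimal sketch, the harmonic mean of precision and recall can be computed as shown below. The function name and the input values are hypothetical, and returning 0.0 when both inputs are zero is an assumed convention.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # assumed convention when both metrics are zero
    return 2 * precision * recall / (precision + recall)


# Hypothetical values: a precise detector that misses half of the signs.
f1 = f1_score(precision=0.8, recall=0.5)
print(f"F1 = {f1:.3f}")
```

Because the harmonic mean is dominated by the smaller of the two values, a detector cannot obtain a high F1 score by excelling at only one of precision or recall.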

Mean Average Precision (mAP) is the metric par excellence for evaluating the precision of an object detector. In object detection tasks, mAP is widely recognized as a comprehensive and reliable performance metric. It takes precision and recall at multiple confidence thresholds into account and thus shows how well the detector performs at various sensitivity levels. The mAP is determined by Equation (6).
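In its usual form, mAP is the arithmetic mean of the per-class AP values. The sketch below assumes the AP of each class has already been computed. The class names follow the three sign categories mentioned for the KTSD, while the AP values themselves are hypothetical.

```python
def mean_average_precision(ap_per_class):
    """Arithmetic mean of the per-class average precision values."""
    if not ap_per_class:
        raise ValueError("at least one class is required")
    return sum(ap_per_class.values()) / len(ap_per_class)


# Hypothetical per-class AP values for illustration only.
ap_per_class = {"prohibitory": 0.90, "mandatory": 0.80, "danger": 0.70}
map_value = mean_average_precision(ap_per_class)
print(f"mAP = {map_value:.2%}")
```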

Intersection over Union (IoU) is another crucial metric in traffic sign detection and recognition. It measures the degree of spatial overlap between a predicted bounding box and the ground truth bounding box of an object. The IoU, detailed in Equation (7), quantifies the precision of traffic sign localization as the ratio between the area of intersection and the area of union of the two boxes. It is therefore central to judging how accurately an algorithm determines object boundaries and locations. This measure is particularly significant where exact object localization is of utmost importance, and it contributes to the progress of reliable traffic sign detection and recognition systems.

$$\mathrm{IoU} = \frac{|B_{gt} \cap B_{p}|}{|B_{gt} \cup B_{p}|} \tag{7}$$

where $B_{gt}$ represents the ground truth bounding box, whereas $B_{p}$ represents the predicted bounding box.
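A minimal sketch of the IoU computation for axis-aligned boxes, assuming each box is given as `(x_min, y_min, x_max, y_max)`. This coordinate convention and the example boxes are assumptions made for illustration.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping rectangle, if any.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    # Width and height are clamped to zero when the boxes do not overlap.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Hypothetical boxes: two 10 x 10 squares offset by 5 pixels in each direction.
ground_truth = (0, 0, 10, 10)
predicted = (5, 5, 15, 15)
print(f"IoU = {iou(ground_truth, predicted):.3f}")
```

In detection benchmarks, a prediction is typically counted as a true positive only when its IoU with a ground truth box exceeds a chosen threshold, so this value feeds directly into precision, recall, and AP.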

Finally, the Average Precision (AP) measure is of great importance in traffic sign detection and recognition. It provides a thorough assessment of algorithm performance by considering different confidence thresholds. The AP is determined by computing the average of precision values at various recall levels, so it represents the balance between making precise positive predictions and including all positive cases. This statistic offers a nuanced view of the capabilities of a system, particularly in situations in which datasets are unbalanced or object class distributions fluctuate. The use of AP thus supports the development of traffic sign recognition systems that are both resilient and adaptable under various operational circumstances. AP is provided by the following expression:

where is the total number of positive instances (ground truth objects), n is the total number of thresholds at which precision and recall values are computed, represents the recall value at threshold i, denotes the precision value at threshold i, and can be set to 1 for convenience.
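For illustration, the sketch below computes AP as the area under the precision-recall curve using all-point interpolation, a convention common in PASCAL VOC-style evaluation. It is an assumption chosen for this example and is not necessarily the exact expression used in the reviewed studies. The input lists hold the recall and precision values obtained at each confidence threshold, and the example values are hypothetical.

```python
def average_precision(recalls, precisions):
    """Area under the precision-recall curve with all-point interpolation."""
    if len(recalls) != len(precisions):
        raise ValueError("recalls and precisions must have the same length")
    points = sorted(zip(recalls, precisions))
    # Sentinel points at recall 0 and recall 1 close the curve.
    r = [0.0] + [pt[0] for pt in points] + [1.0]
    p = [0.0] + [pt[1] for pt in points] + [0.0]
    # Replace each precision by the maximum precision at any higher recall.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas: recall increment times interpolated precision.
    return sum((r[i] - r[i - 1]) * p[i] for i in range(1, len(r)))


# Hypothetical operating points measured at two confidence thresholds.
ap = average_precision(recalls=[0.5, 1.0], precisions=[1.0, 0.5])
print(f"AP = {ap:.2f}")
```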

These metrics play a critical role in objectively evaluating the performance of traffic sign recognition systems and facilitating comparisons between different approaches. However, the choice among metrics may vary based on specific applications and requirements, and researchers should carefully select the most relevant metrics to assess the system’s effectiveness in real-world scenarios.

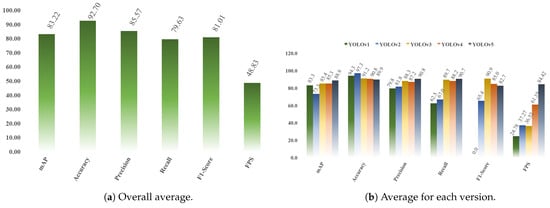

3.4. Comparing Metrics among Different Versions of YOLO

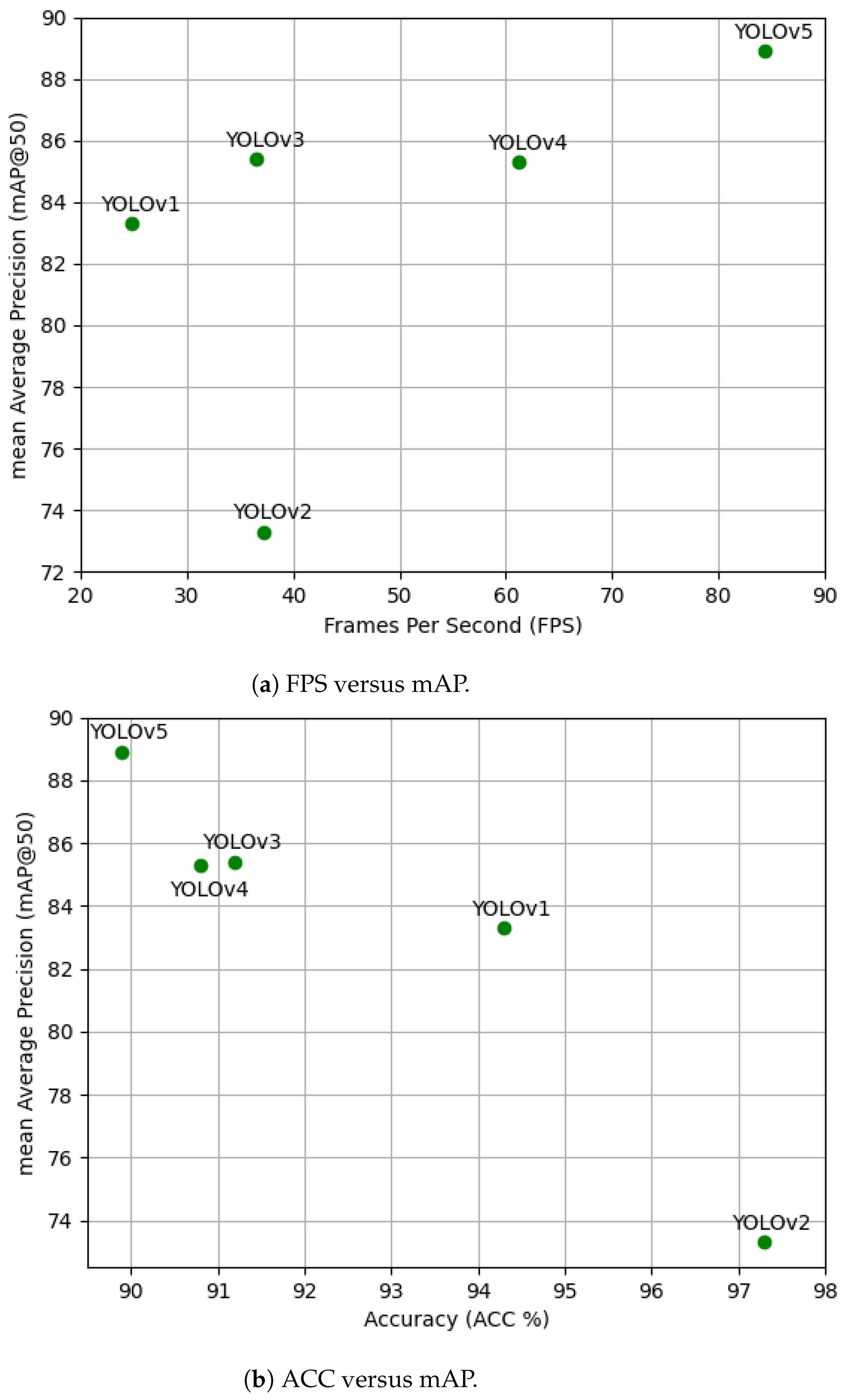

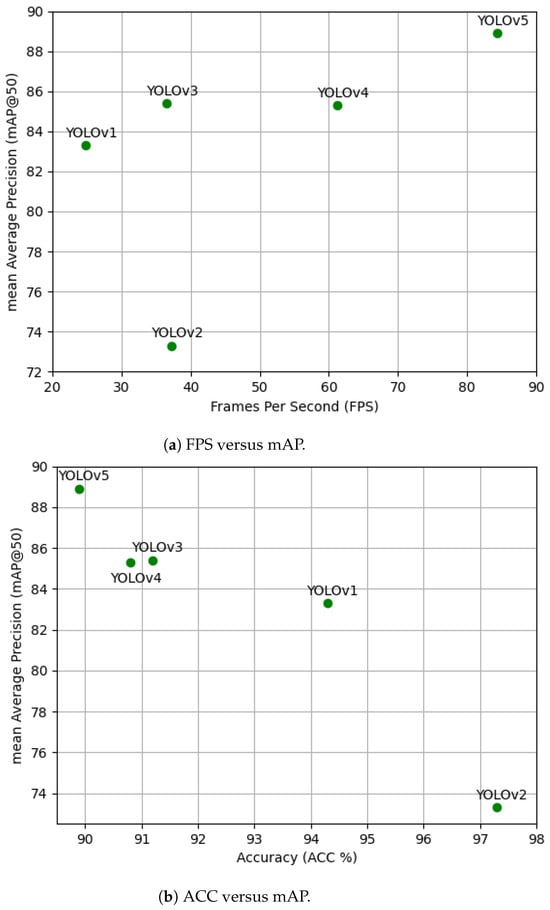

A comprehensive analysis was conducted to compare various metrics by reviewing selected research from 2016 to 2022 and computing the average values. This section introduces a series of scatter plots that assess the efficacy of traffic sign detection and recognition systems utilizing different versions of YOLO.
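The averaging step described above can be sketched as a simple group-by over the values reported in the individual studies. The records below are hypothetical placeholders rather than values extracted from the reviewed papers, and skipping studies that do not report a metric is an assumed convention.

```python
from collections import defaultdict
from statistics import mean


def average_by_version(records):
    """Average a reported metric per YOLO version, ignoring missing values."""
    grouped = defaultdict(list)
    for version, value in records:
        if value is not None:  # some studies do not report every metric
            grouped[version].append(value)
    return {version: mean(values) for version, values in grouped.items()}


# Hypothetical (version, mAP in %) pairs, one per study.
reported_map = [
    ("YOLOv3", 80.0),
    ("YOLOv3", 90.0),
    ("YOLOv5", 88.0),
    ("YOLOv5", None),
    ("YOLOv5", 92.0),
]
averages = average_by_version(reported_map)
print(averages)
```

An average computed from very few studies, as happens for some YOLO versions, should be read with caution, since a single paper can dominate the result.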

Figure 2a presents a scatter plot of FPS versus mAP, illustrating that YOLO’s FPS ranges from 25 to 85. This range indicates its suitability as a real-time object detector. Meanwhile, the mAP varies between 73% and 89%, with YOLOv5 emerging as the most efficient version. This suggests that, while YOLO is a competitive detector, there is still room for improvement.

Figure 2.

Scatter plots comparing FPS versus mAP and ACC versus mAP metrics, extracted from scientific papers (2016–2022) to evaluate the performance of traffic sign detection and recognition systems using YOLO. They consider average values.

A more detailed examination in Figure 2b reveals the relationship between ACC and mAP. Here, YOLOv5 demonstrates the lowest ACC but the highest mAP. Conversely, YOLOv2 shows the highest ACC with a comparatively lower mAP, partly due to the limited number of studies reporting ACC data for this version.

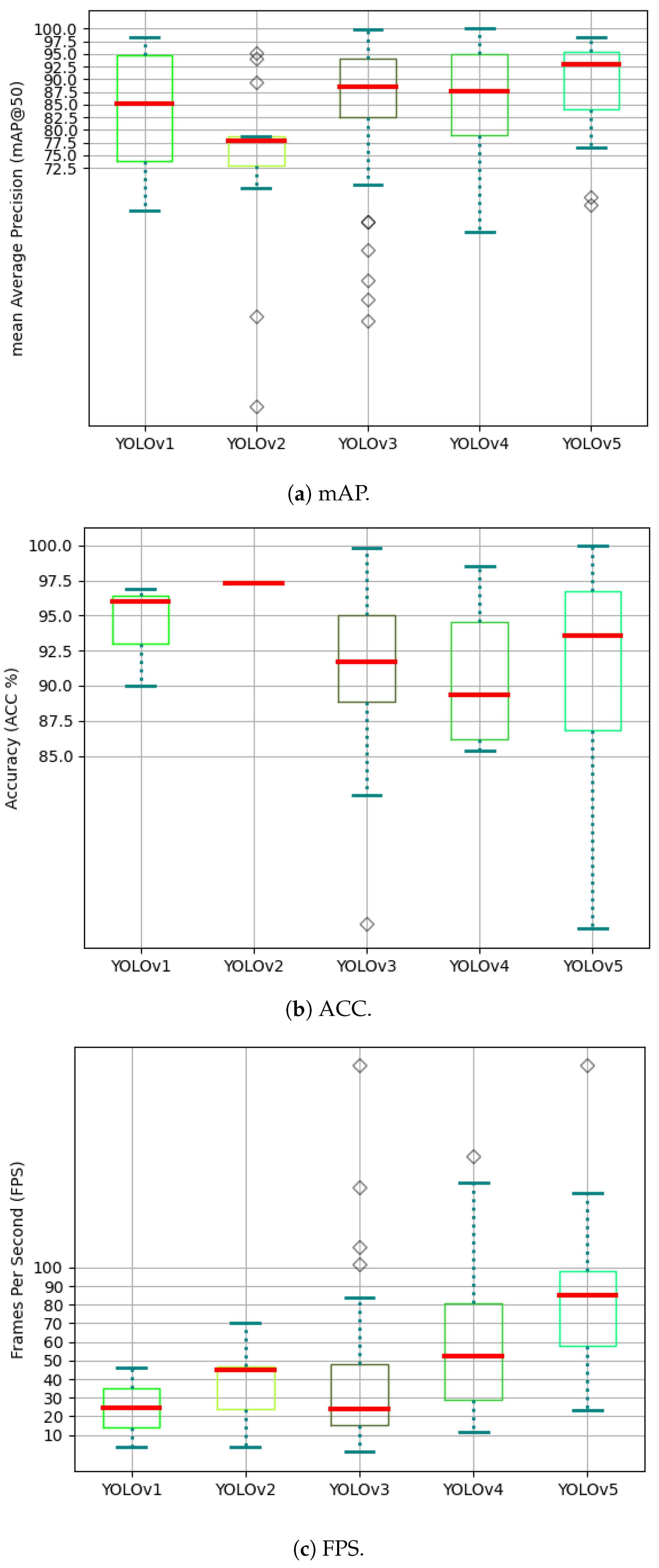

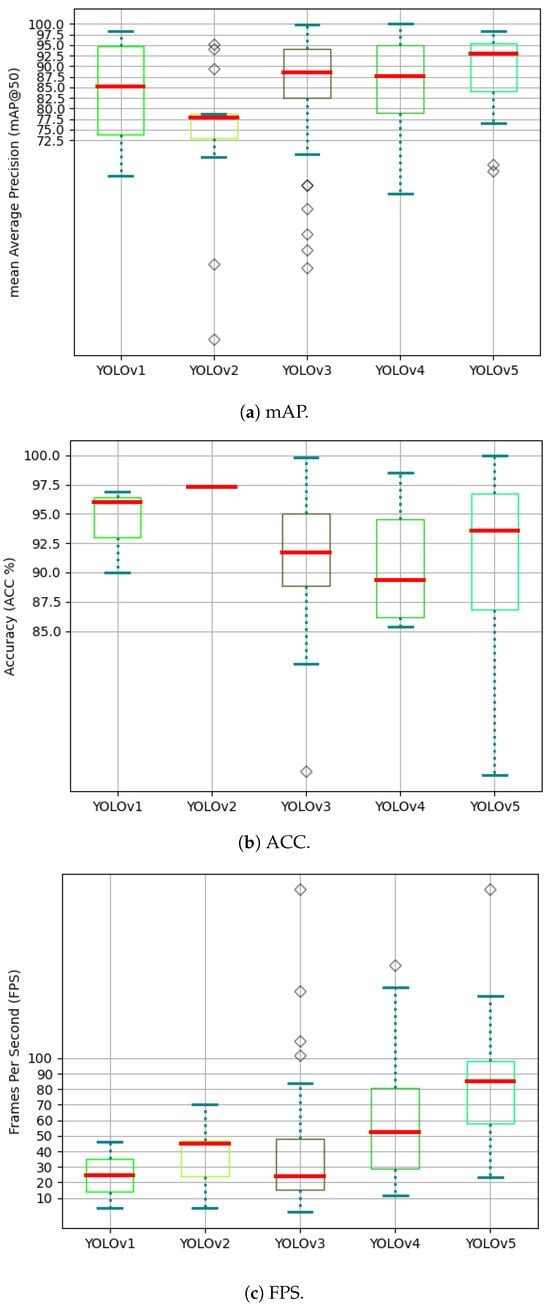

From another perspective, Figure 3a–c shows the box plots of the mAP, ACC, and FPS metrics for the different versions of YOLO. YOLOv5 consistently outperforms the other versions in the mAP metric, while YOLOv2 lags behind. In terms of ACC, YOLOv1 exhibits the highest overall score, closely followed by YOLOv5. For the FPS metric, YOLOv5 again shows superior performance.

Figure 3.

Box plots depicting the mAP, ACC, and FPS metrics extracted from scientific papers (2016–2022), which evaluate the performance of traffic sign detection and recognition systems.

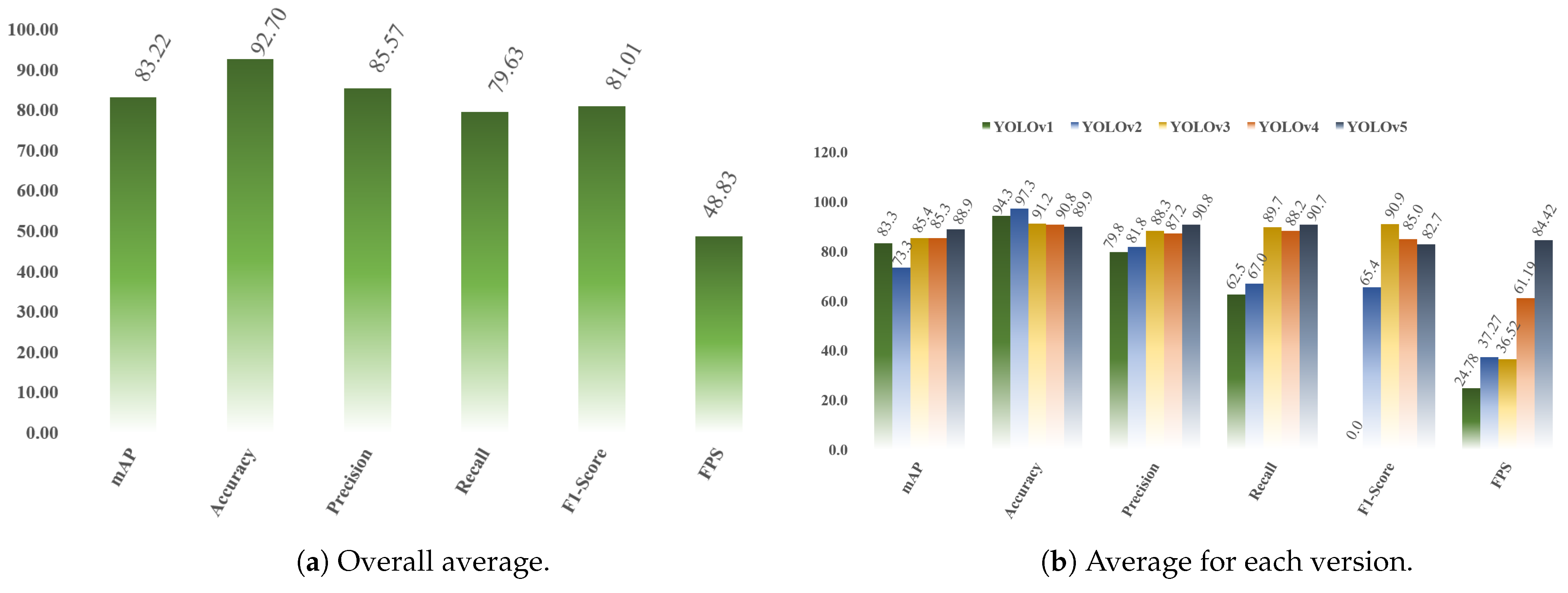

Figure 4a shows the average value of the mAP, ACC, precision, recall, and F1 score metrics, grouped across all YOLO versions. All values are high, above 79%. The best precision and recall values correspond to YOLOv5, and the best F1 score value is from YOLOv3.

Figure 4.

Bar charts of evaluation metrics used in scientific papers to assess the quality of traffic sign detection and recognition systems using YOLO in the period 2016–2022.

Figure 4b then details all the metrics for each YOLO version. YOLOv4 presents the best ACC value, followed by YOLOv2. For the mAP metric, YOLOv2 has the best performance. However, this is an isolated result because only one article reports it.

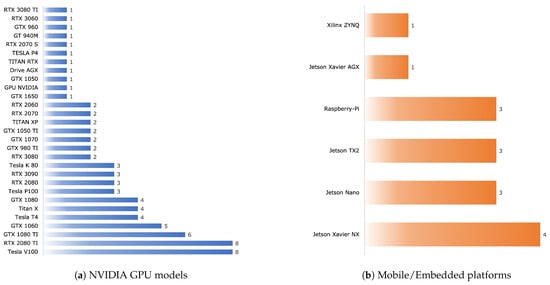

3.5. RQ4 [Hardware]: What Hardware Is Used to Implement Traffic Sign Recognition and Detection Systems Based on YOLO?

The implementation of traffic sign detection and recognition systems using the YOLO framework often necessitates hardware configurations that achieve a balance between computing capacity and efficiency. The selection and arrangement of hardware components may differ based on the specific application and its corresponding requirements. Graphics Processing Units (GPUs) play a crucial role in expediting the inference procedure of deep learning models such as YOLO. High-performance GPUs manufactured by prominent corporations such as Nvidia are frequently employed for the purpose of real-time or near-real-time inference. In certain instances, the precise GPUs employed may not be expressly stated or readily discernible based on the accessible information. This underscores the importance of thorough and precise reporting in both research and practical applications. The inclusion of detailed hardware specifications is essential for the replication of results and the effective implementation of these systems in diverse contexts.

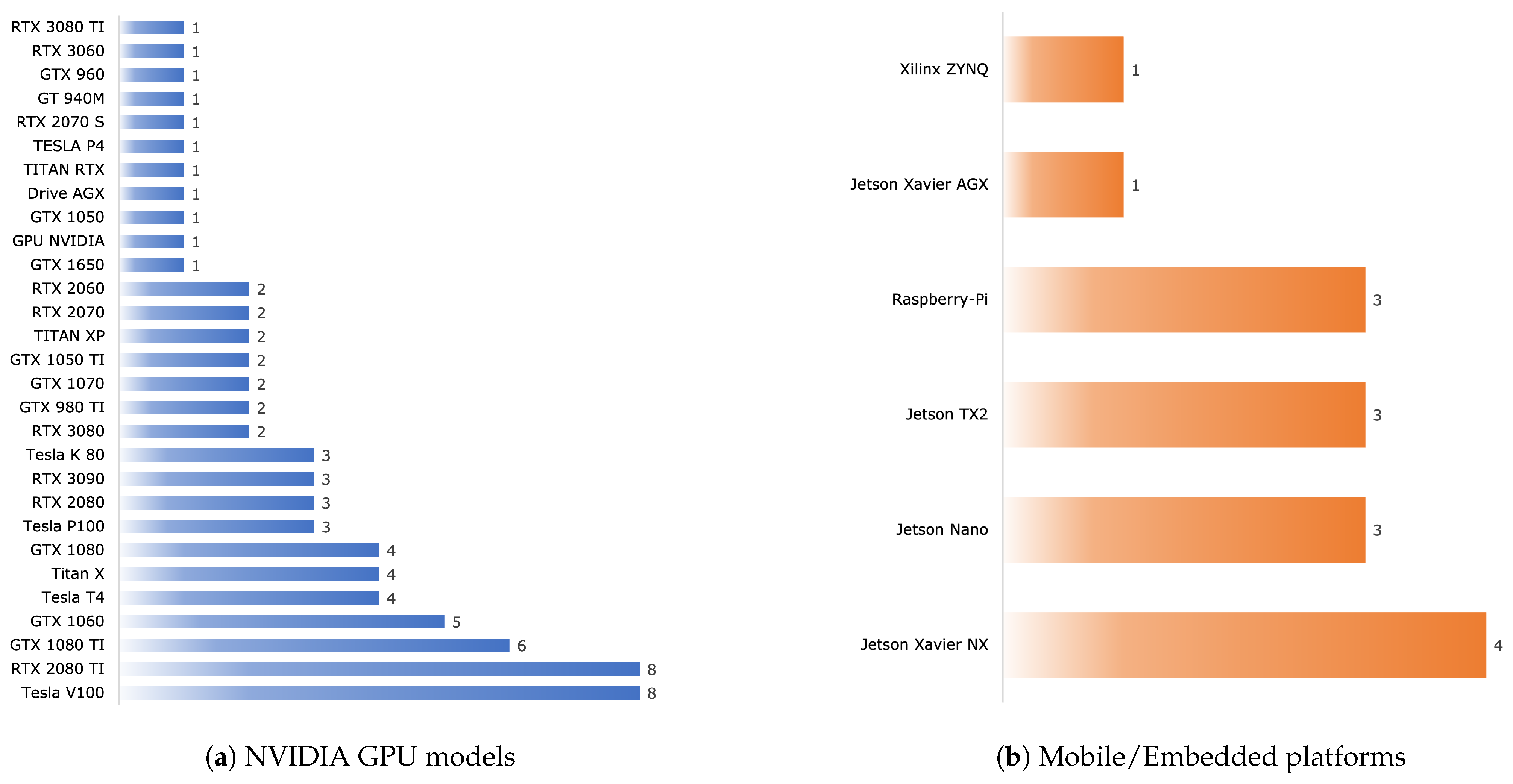

Figure 5a shows how frequently specific Nvidia GPUs appear across the reviewed research articles. Each bar corresponds to a distinct Nvidia GPU model, and its length indicates how often that model is used in these articles. These data provide valuable insight into the prevalent usage of Nvidia GPUs in traffic sign detection and recognition.

Figure 5.

Horizontal bar charts of the frequency of computer systems present in scientific papers focused on traffic sign detection and recognition systems by using YOLO in the period 2016–2022.

Embedded boards such as Jetson, Raspberry Pi, and Xilinx Zynq have emerged as crucial solutions in traffic sign detection and recognition. These embedded systems strike a remarkable balance between energy efficiency and processing capability, which makes them indispensable in road safety and intelligent transportation applications. Their compact size and high computational capability allow sophisticated CV algorithms to run in real time, enabling accurate identification and classification of traffic lights, signage, and road conditions. Moreover, their adaptability and integrated connectivity make them highly suitable for edge computing and industrial automation, so they play a significant role in improving road safety and traffic optimization in urban as well as rural environments.

Figure 5b shows the prevalence of embedded GPU boards in the papers analyzed in this study. Each bar corresponds to a unique model of mobile/embedded GPU board, and its length is proportional to the frequency with which that model is mentioned.

Jetson Xavier NX is the most frequently used embedded system [63,68,77,135]. It is followed closely by Jetson Nano [63,77,129] and Jetson TX2 [41,79,140], and then by Jetson Xavier AGX, which is mentioned in at least one study [77]. All of these Jetson boards are Nvidia products. Moreover, the use of Raspberry Pi [37,46,98] and Xilinx ZYNQ [18] is also observed within the scope of this investigation.
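The ranking in this paragraph can be reproduced by tallying the references cited for each platform. The sketch below uses only the citation numbers given in the paragraph above, so its counts may differ from those plotted in Figure 5b. Breaking ties alphabetically is an assumption made for a deterministic ordering.

```python
# Reference numbers cited for each embedded platform in the paragraph above.
embedded_platform_citations = {
    "Jetson Xavier NX": [63, 68, 77, 135],
    "Jetson Nano": [63, 77, 129],
    "Jetson TX2": [41, 79, 140],
    "Jetson Xavier AGX": [77],
    "Raspberry Pi": [37, 46, 98],
    "Xilinx ZYNQ": [18],
}

# Number of citing studies per platform.
usage = {name: len(refs) for name, refs in embedded_platform_citations.items()}

# Most used first; ties are broken alphabetically (assumed convention).
ranking = sorted(usage.items(), key=lambda item: (-item[1], item[0]))

for name, count in ranking:
    print(f"{name}: {count}")
```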

The fundamental differentiation between conventional GPU graphic cards and embedded systems resides in their physical structure, operational capabilities, and appropriateness for particular use cases. Conventional GPUs, which are primarily intended for producing high-performance graphics, possess considerable computational capabilities. However, they exhibit significant drawbacks, such as their bulky size, high power consumption, and limited adaptability in power-constrained or space-restricted settings. On the other hand, embedded systems, which are characterized by their compactness and energy efficiency, are specifically designed to excel in edge computing applications, such as the detection of traffic signs. These systems prioritize real-time processing capabilities and low power consumption as critical factors for optimal performance. Integrated sensors and numerous communication possibilities are frequently included in these devices, hence augmenting their use for many applications, such as intelligent transportation systems and road safety.

3.6. RQ5 [Challenges]: What Are the Challenges Encountered in the Detection and Recognition of Traffic Signs Using YOLO?

The analysis of the traffic sign detection and recognition literature revealed seven noteworthy challenges: variations in lighting conditions, adverse weather conditions, partial occlusion, sign damage, complex scenarios, insufficient image quality, and concerns pertaining to geographical regions. To address and classify these challenges efficiently, they have been assigned the designations CH1 through CH7.

[CH1] Fluctuation in Lighting Conditions. The fluctuation of lighting conditions in road environments poses a significant obstacle to the accurate detection and recognition of traffic signs. Illumination levels on roadways change constantly, which directly affects the legibility of traffic signs. In low light, signs are less visible, which increases the risk of accidents. Conversely, under extreme illumination, such as direct sunlight or the headlights of other cars, glare may prevent the observer from accurately discerning and interpreting signs. Lighting variability can also cast unpredictable shadows and contrasts on signs, obscuring crucial details and complicating their identification. Automatic sign identification systems that rely on CV may therefore struggle in environments with varying illumination, particularly in the context of autonomous driving. To tackle these issues, researchers have developed image enhancement algorithms, night vision systems, and adaptive image processing approaches. Sign technology has also advanced, notably through retroreflective signs that are easier to detect in low light. These solutions aim to alleviate the effects of lighting variability on the safety of road users.
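As one simple example of the image enhancement approaches mentioned above, the sketch below applies gamma correction to 8-bit grayscale pixel values, which brightens dark regions when the exponent is below 1. Gamma correction is chosen here only for illustration and is not attributed to any particular reviewed study. The function name and the pixel values are hypothetical.

```python
def gamma_correct(pixels, gamma):
    """Apply gamma correction to rows of 8-bit grayscale values (0 to 255).

    A gamma below 1 brightens the image; a gamma above 1 darkens it.
    """
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    # Precompute the mapping once for all 256 possible intensities.
    lookup = [round(255 * (value / 255) ** gamma) for value in range(256)]
    return [[lookup[value] for value in row] for row in pixels]


# Hypothetical 2 x 2 patch taken from an underexposed sign.
dark_patch = [[10, 20], [40, 80]]
brightened = gamma_correct(dark_patch, gamma=0.5)
print(brightened)
```

A fixed gamma is a global adjustment; the adaptive approaches mentioned in the text would instead choose the correction per image or per region.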

[CH2] Adverse Weather Conditions. One of the significant challenges in traffic sign detection and recognition is coping with adverse weather conditions, including heavy rainfall, dense fog, snowfall, and dust storms, among other weather phenomena. These conditions reduce visibility and consequently degrade the quality of the images acquired by the sensors of these systems. As a result, traffic signs are detected and recognized less reliably, which has significant ramifications for road safety and for the operational efficiency of autonomous driving systems. It is therefore crucial to develop robust methods that operate well in all weather situations.

[CH3] Partial Occlusion. The issue of partial occlusion in the field of traffic sign recognition and detection pertains to the circumstance in which a notable portion of a traffic sign becomes occluded or concealed by other items within the scene. The presence of parked vehicles, trees, buildings, or other features in the road environment can contribute to this phenomenon. The presence of partial occlusion poses a significant obstacle for CV systems that are responsible for accurately detecting and categorizing traffic signs. This is due to the fact that the available visual data may be inadequate or corrupted. The resolution of this issue necessitates the creation of algorithms and methodologies with the ability to identify and categorize traffic signs, even in situations where they are partially obscured. This is of utmost importance in enhancing both road safety and the efficacy of driver assistance systems.

[CH4] Sign Damage. The issue of sign damage within the domain of traffic sign recognition and detection pertains to the physical degradation or deterioration of road signs. This degradation can impede the accurate identification and categorization of signs by automated systems. These phenomena can be attributed to a range of circumstances, encompassing severe weather conditions, acts of vandalism, vehicular crashes, and other manifestations of natural deterioration. The existence of signs that are damaged or illegible is a notable obstacle for algorithms designed to recognize traffic signs as their capacity to comprehend and analyze the information conveyed by the sign is undermined. Hence, it is imperative to address the issue of sign degradation in order to guarantee the efficiency and dependability of driving assistance and road safety systems that rely on automatic traffic sign identification.

[CH5] Complex Scenarios. Traffic sign detection and recognition in complex settings is difficult because of the presence of numerous signs, backgrounds from which signs are hard to distinguish, and instances in which multiple signs appear to be a single entity. These circumstances present a significant challenge for CV systems designed to read traffic signs automatically in dynamic and diverse settings. The presence of many traffic signs within the visual range of a detection system is a first obstacle. In scenarios with heavy traffic or intricate crossings, multiple signs commonly overlap or are situated close to one another. This overlap can confuse the detection system, leading to the misidentification or omission of certain signs. Moreover, intricate backgrounds, such as densely vegetated areas or other objects in the surrounding environment, can cause visual interference that makes it hard to distinguish the sign from the background. Another significant problem is the system’s capacity to differentiate between signs that initially appear to be a single entity. This may occur as a result of physical overlap or the simultaneous presence of signs with identical form and color patterns. Misinterpreting merged signs can have significant ramifications for both the safety of road users and the overall efficiency of traffic flow.

[CH6] Inadequate Image Quality. Inadequate image quality is a substantial constraint in traffic sign detection and recognition. This challenge concerns images that exhibit insufficient resolution, noise, or visual deterioration, which impedes the accurate identification and categorization of traffic signs. Poor-quality images can be attributed to a range of circumstances, including unfavorable lighting conditions, obstructions, large distances between the camera and the sign, or inherent constraints of the capture technology employed. These adverse conditions can undermine the accuracy and dependability of recognition algorithms, which highlights the importance of developing robust strategies that can effectively handle such images.

[CH7] Geographic Regions. The study of traffic sign recognition and detection is influenced by various complexities that are inherent to different geographic regions. The intricacies arise from the variety of approaches to road infrastructure design and development, as well as the cultural disparities deeply rooted in various communities.

One major challenge is the presence of disparities in the placement and design of traffic signs. Different geographic regions may adopt distinct conventions for the placement, dimensions, and color schemes of signs. This heterogeneity can range from minor variations in typography to significant changes in the pictograms themselves. Hence, it is crucial that recognition systems possess the necessary flexibility to handle such variability and to read a wide range of signs effectively.

The existence of divergent traffic laws and regulations across different locations presents significant issues. There can be variations in traffic regulations and signaling rules, which can have a direct impact on the interpretation and enforcement of traffic signs. Hence, it is imperative for recognition systems to possess the capability of integrating a wide range of regulations and to be developed in a manner that can adapt to requirements specific to different regions.

Table 3 presents a full summary of each challenge, along with the related articles that address it. After a comprehensive analysis, a range of solutions employed by different authors has been identified. The studies can be categorized into three primary groups: SOL1 studies, which actively propose solutions to the identified problems; SOL2 studies, which acknowledge the issues but do not provide specific remedies; and SOL3 studies, which provide information about datasets without directly addressing the challenges at hand.

Table 3.

Thorough analysis of challenges and corresponding solutions explored in scientific papers addressing traffic sign detection systems in the period 2016–2022.

From Table 3, it can be seen that challenges Ch1, Ch2, and Ch2 are the most addressed with direct and specific solutions. In contrast, challenges Ch2 to Ch7 are not addressed by any researcher.

3.7. Discussion

This study presents an SLR on the utilization of YOLO technology for the purpose of object detection within the domain of traffic sign detection and recognition. This review offers a detailed examination of prospective applications, datasets, essential metrics, hardware considerations, and challenges associated with this technology. This section provides an analysis of the primary discoveries and implications of the SLR.

The measurement of FPS holds significant importance, particularly in dynamic settings where prompt detection plays a vital role in alerting drivers to potential hazards or infractions. It is imperative to strike a balance between accuracy and speed in real-time applications.
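As an illustration of how the FPS figure discussed above is commonly obtained, the following is a minimal sketch that times a detector over a sequence of frames after a short warm-up. It is not taken from any of the reviewed studies: the function name `measure_fps`, the `warmup` parameter, and the `dummy_detector` stand-in (which merely sleeps for 5 ms per frame) are assumptions made for this example, and a real YOLO model would take the place of the dummy callable.

```python
import time
from typing import Any, Callable, Iterable


def measure_fps(detect: Callable[[Any], Any], frames: Iterable[Any], warmup: int = 2) -> float:
    """Average frames per second of `detect` over `frames`, excluding warm-up frames."""
    frames = list(frames)
    if len(frames) <= warmup:
        raise ValueError("need more frames than warm-up iterations")

    # Warm-up calls are not timed (first inferences are often slower than steady state).
    for frame in frames[:warmup]:
        detect(frame)

    timed = frames[warmup:]
    start = time.perf_counter()
    for frame in timed:
        detect(frame)
    elapsed = time.perf_counter() - start

    # Guard against a zero interval for trivially fast callables.
    return len(timed) / elapsed if elapsed > 0 else float("inf")


def dummy_detector(frame: Any) -> list:
    """Hypothetical stand-in for a YOLO model: pretends inference takes 5 ms."""
    time.sleep(0.005)
    return []


fps = measure_fps(dummy_detector, range(12))
print(f"average speed: {fps:.1f} FPS")
```

Reporting this value together with a detection metric such as mAP, rather than on its own, makes the trade-off between accuracy and speed explicit.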

The accessibility and quality of datasets play a crucial role in the training and assessment of object detection algorithms. The review highlights the difficulties encountered in data normalization, the discrepancies in data quality across different authors, and the insufficient amount of information available for certain datasets. The TT100K dataset from China is notable for its comprehensive representation of traffic signs, categories, and authentic driving scenarios. The BDD100K dataset from the USA provides a comprehensive compilation of traffic signs, encompassing a diverse array of lighting situations. Nevertheless, the analysis of these datasets requires substantial computational resources.

From a pragmatic perspective, the German datasets, although moderately sized, are extensively employed. These datasets provide a diverse range of traffic signs and categories, which enhances their suitability for experimental purposes.

In the realm of hardware considerations, the fundamental importance lies in the utilization of efficient algorithms that exhibit lower energy consumption and robustness in contexts with limited memory capacity. It is advisable to minimize redundancy in the quantity of layers inside neural networks. The effective dissipation of heat is of utmost importance in mitigating the risk of overheating, particularly in the context of mobile graphics processing unit (GPU) systems. It is worth mentioning that NVIDIA presently holds a dominant position in the market for mobile GPU systems.

Examining the challenges, this review emphasizes the necessity of conducting research that focuses on sign degradation, which is a crucial but frequently disregarded element in the field of traffic sign detection and recognition. The failure to consider the presence of damaged signs may result in the generation of incorrect detections and the probable occurrence of hazardous circumstances on the roadway. Tackling this difficulty not only improves the resilience of algorithms but also contributes to the broader goal of developing more reliable traffic sign recognition systems.

Another notable difficulty that has been emphasized is the lack of research studies that specifically examine the geographical disparities in the detection and recognition of traffic signs. Geographical variations can introduce unique complexities, including diverse traffic sign designs, language differences, and local regulations. Failing to account for these nuances can severely limit the effectiveness and applicability of traffic sign detection systems across diverse regions. This observation underscores the pressing need for targeted research efforts in this area. By addressing this challenge, we not only enhance the robustness and adaptability of our detection systems but also contribute to safer and more reliable transportation networks on a global scale.

Lastly, the SLR emphasizes the broad applications of YOLO technology in traffic-related contexts. It serves as a pivotal tool in preventing traffic accidents by providing early warnings of potential issues during driving. Additionally, it can be integrated with GPS systems for infrastructure maintenance, allowing for real-time assessment of road sign quality. Moreover, YOLO technology plays a foundational role in the development of autonomous vehicles.

3.8. Possible Threats to SLR Validation

This section discusses the threats that may affect this SLR and how they have been addressed.

3.9. Construct Validity Threat

During our comprehensive investigation of research papers centered on YOLO for the detection and recognition of traffic signs, we recognized a number of possible challenges to the soundness of our findings, mostly emanating from the presumptions made throughout the evaluation procedure. In order to ensure the trustworthiness and appropriate interpretation of the presented findings, it is crucial to acknowledge and tackle these constraints.

3.9.1. The Influence of Version Bias on Metrics

Threat: The potential bias in the metrics arises from the inclusion of different versions of YOLO in the collected research, with the assumption that newer versions of YOLO are intrinsically more efficient.

Mitigation: This review acknowledges the variations in YOLO versions but assumes that the metrics presented in different studies may be compared, regardless of the specific YOLO version used. Nevertheless, it is imperative to recognize the potential impact of version differences on the reported performance measures.

3.9.2. Geographic Diversity in Sign Datasets

Threat: The inclusion of traffic sign datasets from various geographical locations may introduce variability in the reported performance of the YOLO algorithm, thereby affecting the applicability of the findings.

Mitigation: Despite acknowledging the constraint of dataset origin diversity, we assume that performance indicators are comparable across different studies. Nevertheless, it is important to acknowledge the potential influence of geographical variations on the performance of YOLO, which underscores the need for careful interpretation.

3.9.3. Hardware Discrepancies

Threat: Disparities in the hardware used across studies may result in variations in the time performance of the detection and recognition systems. This threat rests on the assumptions that newer versions of YOLO use greater hardware resources and that more advanced hardware leads to quicker response times.

Mitigation: The underlying premise of our study is that results remain comparable across experiments conducted with different hardware setups. However, it is important to identify and take into consideration the potential impact of hardware differences on the time performance reported in this assessment.

3.9.4. Limitations of Statistical Metrics

Threat: Relying on a single statistical summary metric to measure YOLO’s capability for traffic sign detection may not fully capture the system’s performance.

Mitigation: Despite this limitation, our main premise argues that the selected metrics enable the comparability of various YOLO systems and adequately assess their overall effectiveness. Acknowledging the inherent limitations of statistical summaries is imperative when attempting to conduct a thorough assessment of detection and identification systems.
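The limitation described above can be made concrete with a small worked example. The sketch below computes per-class precision, recall, and F1 from detection counts and compares them with the macro-averaged F1. All class names and counts are hypothetical values chosen for illustration; they are not results from any reviewed study.

```python
from dataclasses import dataclass


@dataclass
class ClassCounts:
    tp: int  # true positives
    fp: int  # false positives
    fn: int  # false negatives


def precision(c: ClassCounts) -> float:
    return c.tp / (c.tp + c.fp) if (c.tp + c.fp) else 0.0


def recall(c: ClassCounts) -> float:
    return c.tp / (c.tp + c.fn) if (c.tp + c.fn) else 0.0


def f1(c: ClassCounts) -> float:
    p, r = precision(c), recall(c)
    return 2 * p * r / (p + r) if (p + r) else 0.0


# Hypothetical counts: two well-detected classes and one poorly detected class.
counts = {
    "speed_limit": ClassCounts(tp=95, fp=5, fn=5),
    "stop": ClassCounts(tp=90, fp=10, fn=10),
    "damaged_sign": ClassCounts(tp=30, fp=20, fn=70),
}

per_class_f1 = {name: f1(c) for name, c in counts.items()}
macro_f1 = sum(per_class_f1.values()) / len(per_class_f1)
worst_class = min(per_class_f1, key=per_class_f1.get)

print(f"macro F1: {macro_f1:.2f}")
for name, score in per_class_f1.items():
    print(f"  {name}: F1 = {score:.2f}")
print(f"weakest class: {worst_class}")
```

With these hypothetical counts, the macro-averaged F1 is 0.75, while the weakest class reaches only 0.40. The single summary figure therefore hides a class on which the detector performs poorly, which is why per-class results are a useful complement when systems are compared.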

Our goal is to improve the clarity and reliability of our review’s interpretation by openly acknowledging these potential threats to construct validity. This provides a more nuanced understanding of the limitations associated with the diverse aspects of YOLO techniques for traffic sign detection and recognition.

3.9.5. Threats to Internal Validation

The greatest threat that this SLR has faced is the number of studies collected. A total of 115 articles published in the period from 2016 to 2022, all in the “Journal” or “Conference” categories, have been analyzed. However, articles published or about to be published in 2023 have not yet been analyzed.

3.9.6. Threats to External Validation

In this study, only the data contained in the previously selected articles have been collected and analyzed; that is, no experimentation has been carried out with traffic sign detection, recognition, and identification systems, so our own experimental data have not been compared with those provided by the different researchers.

3.9.7. Threats to the Validation of the Conclusions

The documents studied have been compiled from nine world-renowned publishers, but valuable information was available from only seven of them. Likewise, works from other publishers (ACM), pre-print servers (arXiv), patent databases (EPO, Google Patents), and technology companies (VisLab, Continental) have been omitted, so no literature has been collected from these sources. Ignoring this literature means that traffic sign detection and recognition systems may be more advanced than presented in this study. Therefore, conclusions based on 115 studies may not adequately represent the progress of traffic sign detection, recognition, and identification systems using YOLO in the period 2016 to 2022.

3.10. Future Research Directions

This study suggests that, although traffic sign detection and recognition systems have the potential to reduce traffic accidents and contribute to the development of autonomous vehicles, specific barriers such as extreme weather conditions, the lack of standardized databases, and insufficient criteria for choosing the object detector are the main problems that need to be addressed. Thus, the following questions arise:

- What is the performance of traffic sign detection and recognition systems under extreme weather conditions?

- How could datasets for the development of traffic sign detection and recognition systems be standardized?

- What is the best object detector to be used in traffic sign detection and recognition systems?

Future research will be initiated based on the answers to these questions, which will include new versions of YOLO, e.g., v6, v7, v8, and NAS.

4. Conclusions

This study presents a comprehensive and up-to-date analysis of the utilization of the YOLO object detection algorithm in the field of traffic sign detection and recognition for the period from 2016 to 2022. The review also offers an analysis of applications, sign datasets, effectiveness assessment measures, hardware technologies, challenges faced in this field, and their potential solutions.

The results emphasize the extensive use of the YOLO object detection algorithm as a popular method for detecting and identifying traffic signs, particularly in fields such as vehicular security and intelligent and autonomous vehicles.

The accessibility and usefulness of the YOLO object detection algorithm are underscored by the existence of publicly accessible datasets that may be used for training, testing, and validating YOLO-based systems.