Review on System Identification, Control, and Optimization Based on Artificial Intelligence

Abstract

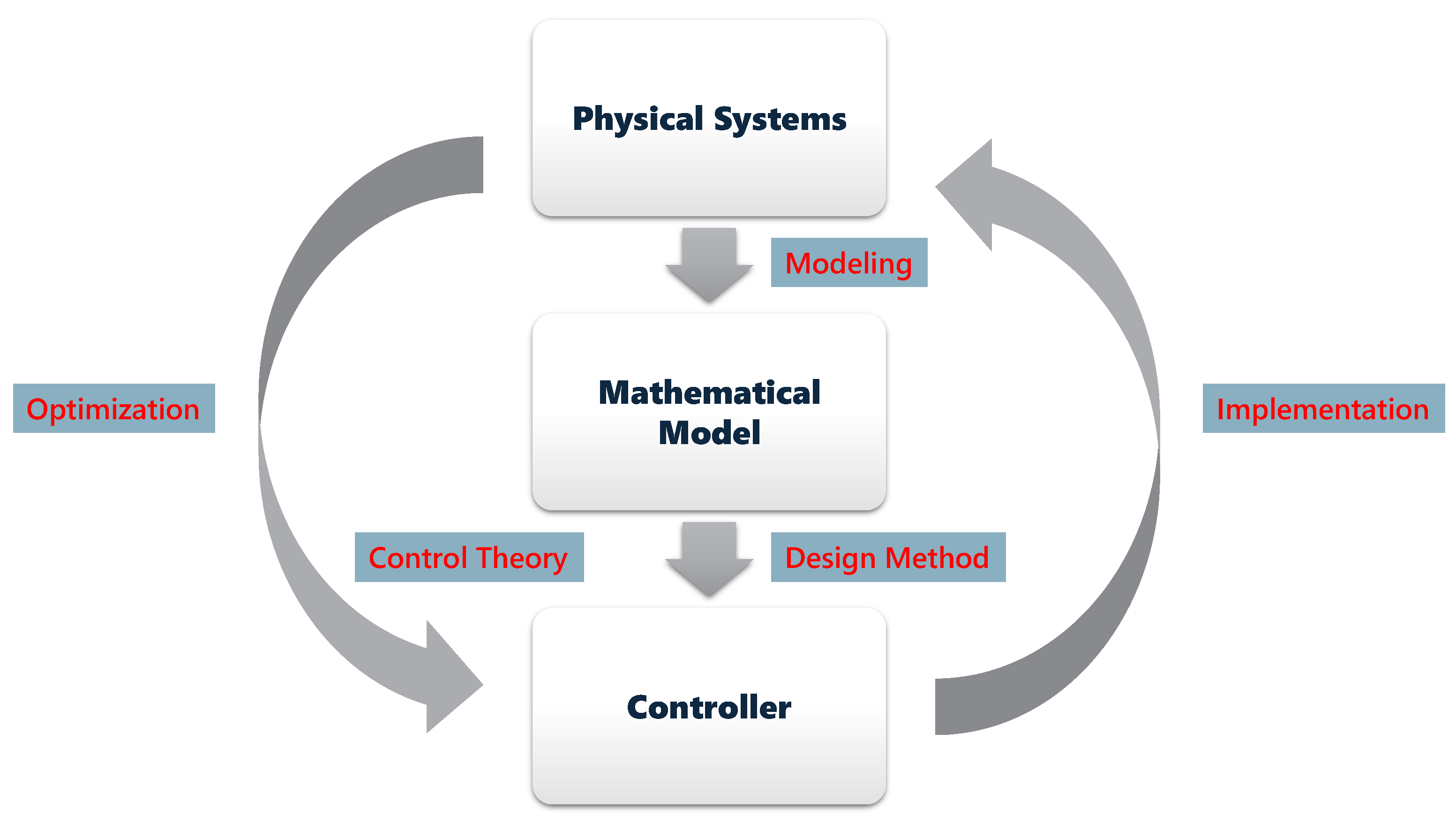

1. Introduction

2. AI-Based System Identification

2.1. Parameter Estimation

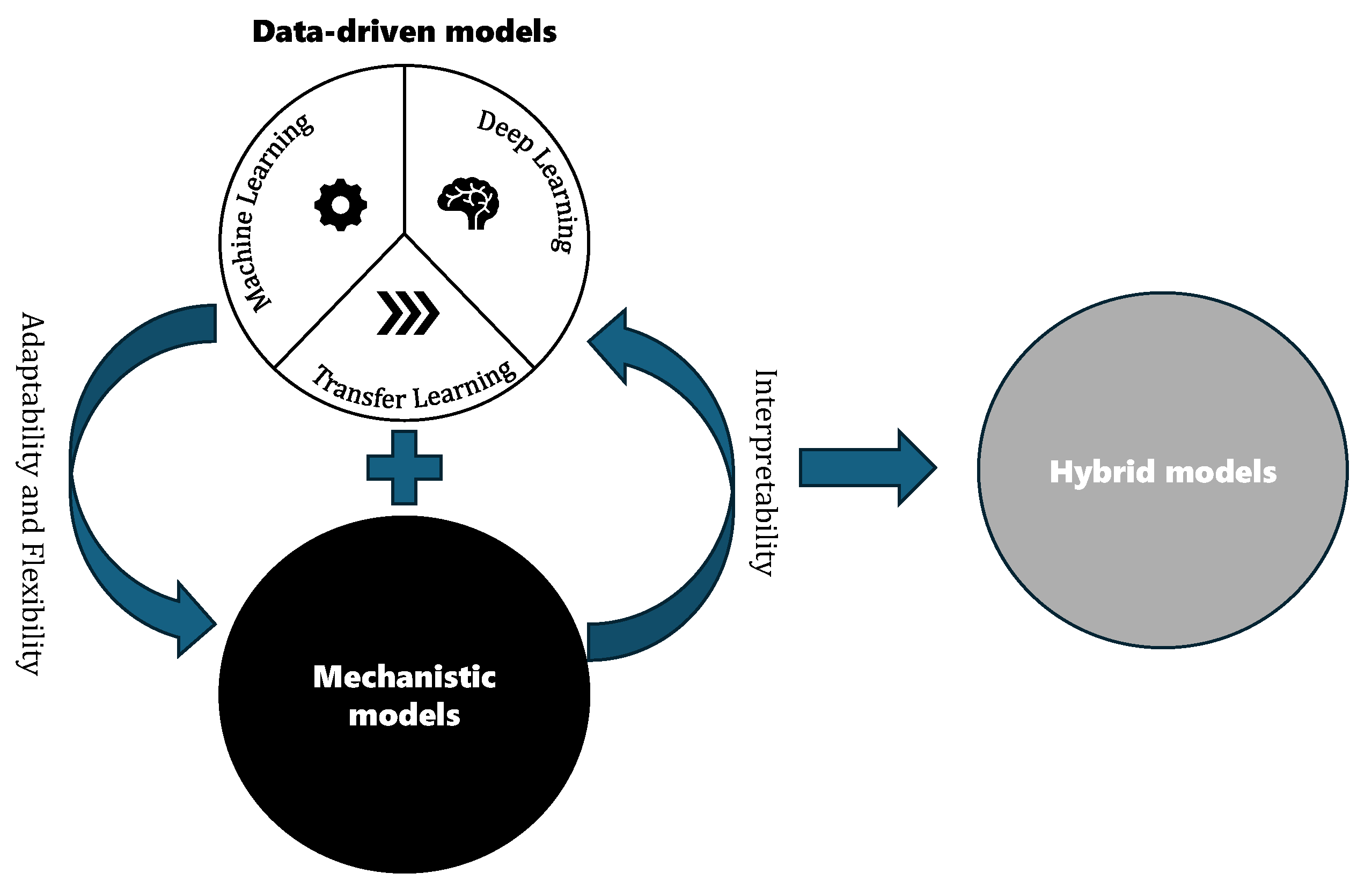

2.2. Data-Driven Modeling

2.2.1. Machine Learning

2.2.2. Deep Learning

2.2.3. Transfer Learning

2.3. Hybrid Modeling

2.3.1. Parallel Structures

2.3.2. Serial Structures

3. AI-Based Control Methods

3.1. Neural Network Control

3.1.1. Neural Network-Based Adaptive Control

3.1.2. Neural Network-Based Sliding-Mode Control

3.1.3. Neural Network-Based Backstepping Control

3.1.4. Neural Network-Based Iterative Learning Control

3.1.5. Summary

3.2. Model Prediction Control

3.2.1. Model Prediction

3.2.2. Fast Optimization

3.3. Reinforcement Learning Control

3.3.1. Approximate Dynamic Programming

3.3.2. Model-Based RL Control

3.3.3. Model-Free RL Control

4. Performance Optimization

4.1. Gradient-Based Optimization

4.2. Metaheuristic Optimization

5. Challenges and Prospects

5.1. Challenges

- (1) AI models and algorithms usually rely on large amounts of high-quality training data. Obtaining such data, especially in dynamic and complex environments, is a significant challenge.

- (2) Data-driven techniques for system identification rely on patterns extracted from historical or experimental data. Their reasoning mechanisms have yet to be fully understood or explained by physics and may thus be opaque to engineers. In other words, interpretability is a concern because of the black-box nature of these techniques.

- (3) Since the mathematical foundations of AI are yet to be fully established, a practical guide to the architecture design and implementation of AI models or algorithms for system identification, control, and optimization is still lacking.

- (4) Although AI models or algorithms can improve the modeling accuracy or control performance of dynamical systems, computational complexity increases correspondingly. Managing this trade-off is also a crucial challenge.

5.2. Future Directions

- (1) More models and learning methods can be used to improve the generalization ability of deep learning. Future efforts should prioritize multi-modal learning architectures that integrate heterogeneous data sources (e.g., fusion of vision, LiDAR, and inertial measurement unit data) to create more comprehensive system representations. Meta-learning approaches, particularly few-shot and zero-shot learning paradigms, could enable control systems to adapt rapidly to novel scenarios with minimal retraining. Foundation-scale models pre-trained on multi-domain physical system datasets demonstrate transformative potential. They can serve as universal priors for low-level control tasks while retaining domain-specific knowledge through lightweight fine-tuning. For instance, a physics-informed large language model could interpret system dynamics from textual maintenance logs and simultaneously process numerical sensor data, enabling cross-domain knowledge transfer in industrial control engineering applications.

- (2) Developing a new, effective, and more interpretable architecture to implement AI models or algorithms in feedback control systems could make the decision-making process of control systems more transparent while ensuring stability and real-time guarantees. Neural ordinary differential equation networks could be integrated with traditional MPC frameworks, where the neural component learns residual dynamics while the MPC core provides stability guarantees through convex optimization constraints. Furthermore, attention mechanisms and symbolic regression layers should be incorporated into control strategies to produce human-understandable explanations for control actions. Moreover, the integration of performance assessment tools, such as reachability analysis and Lyapunov functional synthesis, with neural network-based controllers will be essential for safety-critical applications like autonomous vehicles and medical robotics.

- (3) Exploring how machine learning can be used to model and handle uncertainties could lead to more robust control. For most mechanical systems, the dominant dynamics can be obtained from first principles. Uncertainties, including parameter variations (e.g., the moment of inertia of robotic arms) and unmodeled dynamics (e.g., the aerodynamic disturbances of unmanned aerial vehicles), are the main causes of performance deterioration. A hierarchical learning framework could be developed in which Bayesian neural networks quantify uncertainty distributions, while adversarial reinforcement learning agents train controllers to cope with worst-case uncertainty scenarios. Physics-guided uncertainty propagation methods should be investigated, whereby learned uncertainty bounds are systematically incorporated into robust control strategies such as H∞ control and sliding-mode control (SMC). For distributed systems, federated learning architectures could enable collaborative uncertainty modeling across fleets of cyber-physical systems while preserving data privacy.

- (4) Most works focus on uncertainty estimation and compensation without considering the learning performance. Utilizing AI-based optimization algorithms to calculate the control input could be a more efficient and direct control method. Implicit neural representation networks could parameterize entire families of optimal control laws, enabling real-time solutions for nonlinear MPC problems without iterative computations. Evolutionary strategies enhanced by neural surrogates may discover non-conventional control strategies that outperform conventional PID or linear quadratic Gaussian designs in complex multi-objective scenarios. For large-scale systems, graph neural networks could solve distributed optimization problems by learning message-passing mechanisms between subsystems. Crucially, these approaches must address the tension between learning performance and control stability through novel loss functions that penalize Lyapunov function derivatives or contraction metric violations.
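As an illustration of the last point in item (4), a loss term that penalizes violations of a Lyapunov decrease condition might look like the sketch below. It is not taken from the reviewed literature. The quadratic candidate V(x) = xᵀPx with symmetric P, the decay rate `alpha`, the hinge form of the penalty, and the function name `lyapunov_penalty` are all assumptions made here for illustration. In a learning setting, such a term would typically be added to the task loss and evaluated on sampled states of the closed-loop system.

```python
from typing import Callable, List, Sequence


def lyapunov_penalty(
    dynamics: Callable[[Sequence[float]], List[float]],
    P: Sequence[Sequence[float]],
    states: Sequence[Sequence[float]],
    alpha: float = 0.1,
) -> float:
    """Mean hinge penalty on violations of dV/dt <= -alpha * V.

    Assumes V(x) = x^T P x with a symmetric matrix P (an illustrative
    choice), and that `dynamics` returns the closed-loop state
    derivative dx/dt at a given state x.
    """
    total = 0.0
    for x in states:
        n = len(x)
        dx = dynamics(x)
        # P x, reused for both V and its time derivative.
        Px = [sum(P[i][j] * x[j] for j in range(n)) for i in range(n)]
        V = sum(x[i] * Px[i] for i in range(n))
        # For symmetric P: dV/dt = 2 x^T P (dx/dt).
        V_dot = 2.0 * sum(Px[i] * dx[i] for i in range(n))
        # Penalize only where the decrease condition is violated.
        total += max(0.0, V_dot + alpha * V)
    return total / len(states)


# Hypothetical closed-loop systems used only to exercise the penalty.
identity = [[1.0, 0.0], [0.0, 1.0]]
samples = [[1.0, 0.0], [0.0, 2.0]]

stable = lambda x: [-x[0], -x[1]]    # dx/dt = -x
unstable = lambda x: [x[0], x[1]]    # dx/dt = +x

penalty_stable = lyapunov_penalty(stable, identity, samples)
penalty_unstable = lyapunov_penalty(unstable, identity, samples)
```

For the stable system the decrease condition holds at every sample, so the penalty is zero. For the unstable system the penalty is positive and would push a learned controller away from such behavior. A zero penalty on sampled states does not by itself certify stability, so a formal guarantee would still require verification over the whole region of interest.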

Author Contributions

Funding

Conflicts of Interest


- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 2018. [Google Scholar]

- Li, S.E. Reinforcement Learning for Sequential Decision and Optimal Control; Springer: Berlin/Heidelberg, Germany, 2023. [Google Scholar]

- Bellman, R.E. Dynamic Programming; Princeton University Press: Princeton, NJ, USA, 1957. [Google Scholar]

- Werbos, P. Consistency of HDP applied to a simple reinforcement learning problem. Neural Netw. 1990, 3, 179–189. [Google Scholar] [CrossRef]

- Werbos, P. Approximate dynamic programming for real-time control and neural modeling. In Handbook of Intelligent Control; Van Nostrand Reinhold: New York, NY, USA, 1992. [Google Scholar]

- Sutton, R.S. Learning to predict by the methods of temporal differences. Mach. Learn. 1988, 3, 9–44. [Google Scholar] [CrossRef]

- Watkins, C. Learning from Delayed Rewards. Ph.D. Thesis, University of Cambridge, Cambridge, UK, 1989. [Google Scholar]

- Wang, Z.H.; Justin, S. Continuous-time stochastic gradient descent for optimizing over the stationary distribution of stochastic differential equations. Math. Financ. 2023, 34, 348–424. [Google Scholar] [CrossRef]

- Moerl, T.M.; Broekens, J.; Plaat, A. Model-based reinforcement learning: A survey. Found. Trends Mach. Learn. 2023, 16, 1–118. [Google Scholar]

- Vamvoudakis, K.G.; Lewis, F.L. Online actor-critic algorithm to solve the continuous-time infinite horizon optimal control problem. Automatica 2010, 46, 878–888. [Google Scholar] [CrossRef]

- Liu, D.; Wei, Q. Finite-approximation-error-based optimal control approach for discrete-time nonlinear systems. IEEE Trans. Cybern. 2013, 43, 779–789. [Google Scholar] [PubMed]

- Abu-Khalaf, M.; Lewis, F.L.; Huang, J. Neuro dynamic programming and zero-sum games for constrained control systems. IEEE Trans. Neural Netw. 2008, 19, 1243–1252. [Google Scholar] [CrossRef]

- Feng, Y.; Anderson, B.D.O.; Rotkowitz, M. A game theoretic algorithm to compute local stabilizing solutions to HJBI equations in nonlinear H∞ control. Automatica 2009, 45, 881–888. [Google Scholar] [CrossRef]

- Wang, D.; Liu, D.; Wei, Q. Finite-horizon neuro-optimal tracking control for a class of discrete-time nonlinear systems using adaptive dynamic programming approach. Neurocomputing 2012, 78, 14–22. [Google Scholar] [CrossRef]

- Garaffa, L.C.; Basso, M.; Konzen, A.A.; de Freitas, E.P. Reinforcement Learning for Mobile Robotics Exploration: A Survey. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 3796–3810. [Google Scholar] [CrossRef]

- Liu, D.; Zhang, H. A neural dynamic programming approach for learning control of failure avoidance problems. Int. J. Intell. Control Syst. 2005, 10, 21–32. [Google Scholar]

- Si, J.; Wang, Y.T. Online learning control by association and reinforcement. IEEE Trans. Neural Netw. 2001, 12, 264–276. [Google Scholar] [CrossRef]

- Long, M.; Su, H.; Zeng, Z. Output-feedback global consensus of discrete-time multiagent systems subject to input saturation via Q-Learning method. IEEE Trans. Cybern. 2022, 52, 1661–1670. [Google Scholar] [CrossRef]

- Song, S.; Zhu, M.; Dai, X.; Gong, D. Model-free optimal tracking control of nonlinear input-affine discrete-time systems via an iterative deterministic Q-learning algorithm. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 999–1012. [Google Scholar] [CrossRef]

- Liu, F.; Liu, Q.; Tao, Q. Deep reinforcement learning based energy storage management strategy considering prediction intervals of wind power. Int. J. Electr. Power Energy Syst. 2023, 145, 108608. [Google Scholar] [CrossRef]

- Xu, X.; Hu, D.; Lu, X. Kernel-based least squares policy iteration for reinforcement learning. IEEE Trans. Neural Netw. 2007, 18, 973–992. [Google Scholar] [CrossRef] [PubMed]

- Sartoretti, G.; Paivine, W.; Shi, Y.; Wu, Y.; Choset, H. Distributed learning of decentralized control policies for articulated mobile robots. IEEE Trans. Robot. 2019, 35, 1109–1122. [Google Scholar] [CrossRef]

- Zhang, M.; Geng, X.; Bruce, J.; Caluwaerts, K.; Vespignani, M.; SunSpiral, V.; Abbeel, P.; Levine, S. Deep reinforcement learning for tensegrity robot locomotion. In Proceedings of the IEEE International Conference on Robotics and Automation, Singapore, 29 May–3 June 2017; pp. 634–641. [Google Scholar]

- Wang, X.; Deng, H.; Ye, X. Model-free nonlinear robust control design via online critic learning. ISA Trans. 2022, 129, 446–459. [Google Scholar] [CrossRef]

- Xia, L.; Li, Q.; Song, R.; Modares, H. Optimal synchronization control of heterogeneous asymmetric input constrained unknown nonlinear MASs via reinforcement learning. IEEE/CAA J. Autom. Sin. 2022, 9, 520–532. [Google Scholar] [CrossRef]

- Yong, H.; Seo, J.; Kim, J.; Kim, M.; Choi, J. Suspension control strategies using switched soft actor-critic models for real roads. IEEE Trans. Ind. Electron. 2023, 70, 824–832. [Google Scholar] [CrossRef]

- Nassef, A.M.; Abdelkareem, M.A.; Maghrabie, H.M.; Baroutaji, A. Review of metaheuristic optimization algorithms for power systems problems. Sustainability 2023, 15, 9434. [Google Scholar] [CrossRef]

- Sehgal, A.; Ward, N.; La, H.; Louis, S. Automatic parameter optimization using genetic algorithm in deep reinforcement learning for robotic manipulation tasks. arXiv 2022, arXiv:2204.03656. [Google Scholar]

- Erodotou, P.; Voutsas, E.; Sarimveis, H. A genetic algorithm approach for parameter estimation in vapour-liquid thermodynamic modelling problems. Comput. Chem. Eng. 2020, 134, 106684. [Google Scholar] [CrossRef]

- Qian, S.; Ye, Y.; Liu, Y.; Xu, G. An improved binary differential evolution algorithm for optimizing PWM control laws of power inverters. Optim. Eng. 2018, 19, 271–296. [Google Scholar] [CrossRef]

- Godoy, R.B.; Pinto, J.O.; Canesin, C.A.; Coelho, E.A.; Pinto, A.M. Differential-evolution-based optimization of the dynamic response for parallel operation of inverters with no controller interconnection. IEEE Trans. Ind. Electron. 2011, 59, 2859–2866. [Google Scholar] [CrossRef]

- Wang, D.; Sun, X.; Kang, H.; Shen, Y.; Chen, Q. Heterogeneous differential evolution algorithm for parameter estimation of solar photovoltaic models. Energy Rep. 2022, 8, 4724–4746. [Google Scholar] [CrossRef]

- Shi, J.; Mi, Q.; Cao, W.; Zhou, L. Optimizing BLDC motor drive performance using particle swarm algorithm-tuned fuzzy logic controller. SN Appl. Sci. 2022, 4, 293. [Google Scholar] [CrossRef]

- Hafez, I.; Dhaouadi, R. Parameter identification of DC motor drive systems using particle swarm optimization. In Proceedings of the 2021 International Conference on Engineering and Emerging Technologies, Istanbul, Turkey, 27–28 October 2021; pp. 1–6. [Google Scholar]

- Tungadio, D.H.; Numbi, B.P.; Siti, M.W.; Jimoh, A.A. Particle swarm optimization for power system state estimation. Neurocomputing 2015, 148, 175–180. [Google Scholar] [CrossRef]

- Foong, W.K.; Maier, H.R.; Simpson, A.R. Ant colony optimization for power plant maintenance scheduling optimization. In Proceedings of the 7th Annual Conference on Genetic and Evolutionary Computation, Washington, DC, USA, 25–29 June 2005; pp. 249–256. [Google Scholar]

- Lee, K.Y.; Vlachogiannis, J.G. Optimization of power systems based on ant colony system algorithms: An overview. In Proceedings of the 13th International Conference on Intelligent Systems Application to Power Systems, Arlington, VA, USA, 6–10 November 2005; pp. 22–35. [Google Scholar]

- Sitarz, P.; Powałka, B. Modal parameters estimation using ant colony optimisation algorithm. Mech. Syst. Signal Process. 2016, 76, 531–554. [Google Scholar] [CrossRef]

- Zao, R.Z. Simulated annealing based multi-object optimal planning of passive power filters. In Proceedings of the 2005 IEEE/PES Transmission and Distribution Conference and Exposition: Asia and Pacific, Dalian, China, 18 August 2005; pp. 1–5. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yu, P.; Wan, H.; Zhang, B.; Wu, Q.; Zhao, B.; Xu, C.; Yang, S. Review on System Identification, Control, and Optimization Based on Artificial Intelligence. Mathematics 2025, 13, 952. https://doi.org/10.3390/math13060952