Abstract

We present three progressively refined pseudorandom function (PRF) constructions based on the learning Burnside homomorphisms with noise ($B_n$-LHN) assumption. A key challenge in this approach is error management, which we address by extracting errors from the secret key. Our first design, a direct pseudorandom generator (PRG), leverages the lower entropy of the error set ($E$) compared to the Burnside group ($B_r$). The second, a parameterized PRG, derives its function description from public parameters and the secret key, aligning with the relaxed PRG requirements in the Goldreich–Goldwasser–Micali (GGM) PRF construction. The final design, an indexed PRG, introduces public parameters and an index to improve efficiency. To optimize computations in Burnside groups, we enhance concatenation operations and homomorphisms from $B_n$ to $B_r$. Additionally, we explore algorithmic improvements and parallel computation strategies to improve efficiency.

Keywords: post-quantum cryptography; Burnside group; pseudorandom function; learning homomorphisms with noise

MSC: 20D05

1. Introduction

In modern cryptography, researchers are increasingly exploring non-abelian group-based cryptosystems, due to their intricate algebraic structures and the perceived potential for heightened security against quantum computational methods. This exploration extends beyond traditional cryptographic assumptions like factorization and discrete logarithm problems (DLP), addressing one-way trapdoor functions in non-abelian groups. The emergence of Shor’s algorithm, capable of efficiently factoring integers and computing discrete logarithms, underscores the vulnerability of classical hardness problems to quantum attacks. This motivates the pursuit of post-quantum hardness assumptions in cryptosystems.

Baumslag et al. presented a group-theoretic learning challenge termed learning homomorphisms with noise (LHN), generalizing established hardness assumptions, notably learning parity with noise (LPN) and learning with errors (LWE). LWE establishes a quantum hardness assumption rooted in lattice-based cryptography, forming the foundation for diverse constructions in modern cryptographic systems. It asserts the computational hardness of learning a random linear relationship between secret information and noisy data within this lattice-based cryptographic paradigm [1,2,3,4,5,6,7]. The LWE hardness assumption is fundamentally based on abelian integer groups. However, our study centers on the LHN associated with the non-abelian Burnside groups $B_n$ and $B_r$, commonly referred to as learning Burnside homomorphisms with noise ($B_n$-LHN) [8,9]. In this context, the $B_n$-LHN hardness problem focuses on recovering the homomorphism between the Burnside groups $B_n$ and $B_r$ from polynomially many sample pairs of a preimage and its distorted image. Several aspects related to the security and cryptography of the $B_n$-LHN problem, such as random self-reducibility, error distribution, and a symmetric cryptosystem, have already been extensively studied. The paper by Pandey et al. [10] extended existing research by introducing a derandomization of the $B_n$-LHN assumption, resulting in a new assumption, termed the $B_n$-LHR assumption. Furthermore, that paper discussed the design of a length-preserving weak PRF based on the $B_n$-LHR assumption, leading to a PRF construction. However, the construction of a PRF from the derandomization of the $B_n$-LHN assumption appears to be less efficient, in terms of both secret-key size and performance, than the direct PRF construction from the $B_n$-LHN assumption proposed in this study.

The pseudorandom function (PRF) and pseudorandom generator (PRG) constitute fundamental constructs in theoretical computer science, with implications spanning cryptography, computational complexity theory, and related domains. A PRG is a deterministic algorithm that takes a uniformly sampled seed as input and extends it into a longer sequence that is indistinguishable from a truly random sequence for any probabilistic polynomial time (PPT) adversary. Formally, a deterministic function $G: \{0,1\}^{\lambda} \to \{0,1\}^{\ell(\lambda)}$ with a sufficiently large security parameter $\lambda$ and $\ell(\lambda) > \lambda$ is considered a PRG if no efficient adversary can distinguish polynomially many outputs of $G$ from truly random outputs [11]. Similarly, a PRF is a deterministic function defined by a uniformly sampled secret key, producing outputs with random-like characteristics. Despite its deterministic nature, the PRF output depends on both the uniformly sampled secret key and the adaptively chosen input. For a PRF defined by a uniformly sampled secret key, it is computationally infeasible for a PPT adversary to distinguish between an oracle with pseudorandom outputs and an oracle with truly random outputs. A well-defined PRF family facilitates easy sampling of functions and efficient evaluation for a given secret key and adaptive input. The adaptive power conferred on the adversary makes designing a PRF challenging. Our primary objective is to construct a PRF family based on a post-quantum hardness assumption, specifically the $B_n$-LHN. This study adheres to the standard PRF definition from [12,13,14] for PRF constructions based on the $B_n$-LHN assumption. Consider a PRF family denoted by $\mathcal{F}$ with a sufficiently large security parameter $\lambda$. A function $F_k$ in $\mathcal{F}$ is defined by a secret key $k$. For a uniformly sampled secret key $k$, the function $F_k$ is deemed a PRF if no PPT adversary can distinguish polynomially many outputs $F_k(x)$ from truly random outputs. The adversary is granted the ability to make adaptive queries on the inputs $x$.

PRF designs can be broadly categorized into two approaches: theory-based and heuristic-based. The heuristic-based approach relies on practical heuristics to design a PRF family, exemplified in the construction of Rijndael’s AES [15,16]. While heuristic-based designs are often efficient and practical, their security lacks rigorous justification. Conversely, the theory-based approach employs well-established hardness assumptions to construct a PRF family with justified security. The foundational exploration of PRF concepts began in the seminal work of Goldreich, Goldwasser, and Micali (GGM) [12]. GGM significantly contributed to pseudorandomness by establishing a critical link between PRGs and PRFs. They introduced the use of a length-doubling PRG as an intermediate function in constructing a PRF.

The outline of the PRF construction proposed by GGM is as follows: Let $G: \{0,1\}^{\lambda} \to \{0,1\}^{2\lambda}$ be a length-doubling PRG, where $\lambda$ is a sufficiently large security parameter. The output $G(s)$ is split into two equal halves, denoted as $G_0(s)$ and $G_1(s)$, representing the left and right halves, respectively. For a PRF family $\mathcal{F}$ with a sufficiently large security parameter $\lambda$, a PRF $F_k$ in $\mathcal{F}$ with secret key $k \in \{0,1\}^{\lambda}$ and an m-bit input $x = x_1 x_2 \cdots x_m$ is defined as in Equation (1):

$$F_k(x) = G_{x_m}\bigl(\cdots G_{x_2}\bigl(G_{x_1}(k)\bigr)\cdots\bigr). \tag{1}$$

The PRF construction in GGM follows a sequential approach employing PRGs. It necessitates m invocations of the PRG to calculate the output $F_k(x)$. The primary advantage of GGM's PRG-based PRF construction lies in the use of the secret key as the seed in the initial PRG invocation.
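The sequential evaluation described above can be sketched in a few lines. This is a minimal illustration, not the construction proposed in this paper: the function names `prg` and `ggm_prf`, the 16-byte seed length, and the use of SHA-256 as a stand-in length-doubling generator are all assumptions made only so that the tree walk can be run.

```python
import hashlib

LAMBDA_BYTES = 16  # toy seed length (128 bits); an assumption for this sketch


def prg(seed):
    """Stand-in length-doubling generator: 16 bytes in, 32 bytes out.

    SHA-256 is only a placeholder for G; it is not the PRG studied here.
    """
    assert len(seed) == LAMBDA_BYTES
    return hashlib.sha256(b"ggm-prg" + seed).digest()


def ggm_prf(key, bits):
    """Evaluate F_k(x) by walking the GGM tree, one PRG call per input bit."""
    state = key
    for bit in bits:
        out = prg(state)
        # G_0 is the left half of the PRG output, G_1 the right half.
        state = out[:LAMBDA_BYTES] if bit == "0" else out[LAMBDA_BYTES:]
    return state
```

For an m-bit input string such as `"0110"`, the loop performs exactly m PRG invocations, and the secret key is consumed only as the seed of the first one.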

In [17], Naor and Reingold (NR) proposed a groundbreaking design for a length-doubling PRG based on the decisional Diffie–Hellman (DDH) assumption. The design has a useful property that can also be exploited in other constructions. The length-doubling PRG based on the DDH assumption is defined as follows: For sufficiently large primes P and Q, where Q divides $P-1$, let g be a generator of the subgroup of $\mathbb{Z}_P^{*}$ (the multiplicative group modulo P) of order Q. The DDH assumption holds if no PPT adversary, given $(P, Q, g, g^a, g^b)$, distinguishes $g^{ab}$ from $g^c$ with non-negligible advantage. Here, the exponents a, b, and c are sampled uniformly from $\mathbb{Z}_Q$. Utilizing the DDH assumption, NR designed an indexed length-doubling PRG $G$, as in Equation (2).

Upon initial examination, an apparent paradox arises, as $G$ appears to defy efficiency due to the presumed computational complexity of the Diffie–Hellman (DH) problem. If the function $G$ were indeed efficiently computable by everyone, it would not qualify as a PRG, given the assumed complexity of the DH problem. However, a distinctive attribute of $G$ renders it suitable for incorporation into the GGM construction of a PRF. Specifically, $G$ is efficiently computable when either exponent a or exponent b is known. The key idea here is to use the exponent a, taken from the secret key of the resultant PRF, as an index of $G$. A PRF utilizing the length-doubling PRG $G$ is defined as shown in Equation (3).

Here, the secret-key k is defined as for . The construction of these length-doubling PRGs relies on the DDH assumption, where the function is defined based on the secret-key components of a PRF. It is important to note that the security of the length-doubling PRG is inherently tied to the security of the DDH assumption. Independently, if not used in the PRF construction, the length-doubling PRG is not efficient unless the DDH problem is easy. However, if used as an intermediate function in a PRF construction, the function acts like a PRG and can be used to construct a PRF.
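To make the role of the index concrete, the following sketch evaluates a Naor–Reingold style PRF in two ways: through GGM-style calls to an indexed generator, and through the usual closed form. It assumes the standard forms $G_a(g^b) = (g^b, g^{ab})$ and $F_k(x) = g^{a_0 \prod_{x_i = 1} a_i}$ with key $k = (a_0, a_1, \ldots, a_m)$, which may differ in presentation from Equations (2) and (3). The parameters $P = 23$, $Q = 11$, $g = 2$ are toy values chosen only for illustration, and all function names are hypothetical.

```python
P, Q, G = 23, 11, 2  # toy parameters: Q divides P - 1 and 2 has order 11 mod 23


def nr_prg(a, h):
    """Indexed generator G_a(g^b) = (g^b, g^(ab)); needs only a and h = g^b."""
    return h, pow(h, a, P)


def nr_prf_ggm(key, bits):
    """GGM-style evaluation with key = [a_0, a_1, ..., a_m], one call per bit."""
    state = pow(G, key[0], P)
    for a_i, bit in zip(key[1:], bits):
        left, right = nr_prg(a_i, state)
        # Bit 0 keeps the left half g^b, bit 1 takes the right half g^(a_i * b).
        state = right if bit == "1" else left
    return state


def nr_prf_closed(key, bits):
    """Closed form g^(a_0 * product of a_i over positions with x_i = 1) mod P."""
    exponent = key[0]
    for a_i, bit in zip(key[1:], bits):
        if bit == "1":
            exponent = (exponent * a_i) % Q
    return pow(G, exponent, P)
```

The holder of the exponents $a_i$ evaluates each generator call with a single modular exponentiation, while a party who sees only group elements would have to solve a DH instance to do the same.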

Contribution In this study, we make the following contributions to the field of cryptography: First and foremost, to address the efficiency of cryptographic protocols based on the -LHN assumption, we introduce an optimized and parallelizable concatenation operation tailored for Burnside groups. Moreover, we introduce and formulate three progressively refined designs for constructing a PRF family using the GGM approach, rooted in the -LHN assumption. In the first attempt, a PRG , for the given homomorphism from to , , and , was defined as

For , with and . The design above, termed as a direct PRG, applies the -LHN assumption by capitalizing on the lower entropy of a set of errors E compared to a Burnside group . Moreover, we introduce an adjustment to the direct PRG design, leading to a significant decrease in the secret-key size of a corresponding PRF. We call the design parameterized PRG. However, the modified construction of parameterized PRG introduces extra public parameters. In the second attempt, a PRG was defined as

For , for . Furthermore, is a public parameter associated with an error . We further propose a modification to the parameterized PRG that yields a significant decrease in the secret-key size of a PRF. The construction is detailed as follows: Let be a homomorphism from to . An indexed PRG with index is constructed as

where and e is sampled from a set of errors E. Furthermore, a is sampled from a Burnside group and is a public parameter associated with the input seed e. Here, the input and output bit size to the function are the entropies of a set of errors E and a Burnside group , respectively.

Following the GGM construction, we design a PRG-based PRF construction from the aforementioned PRG family, as follows: Let be a secret-key, where , . Let be an indexed PRG, as defined above. For , let represent a set of public parameters, where is sampled uniformly from a Burnside group . A PRF for input string and secret-key k is defined as

where the ith iteration of a function call uses an associated public parameter for . Note, and represent the equal left and right half of the output . Finally, we establish the security of a PRF construction where an indexed PRG is used as an intermediate function.

Outline Section 2 introduces the concept of a relatively free group, with a specific focus on the Burnside group. The section explores the $B_n$-LHN hardness assumption in depth and explains its significance. Furthermore, it outlines the construction framework for minicrypt, incorporating Burnside learning problems. The section also clarifies the error distribution, a pivotal component for establishing the post-quantum hardness assumption known as $B_n$-LHN. We reference a derandomization technique for the $B_n$-LHN assumption and the construction of a pseudorandom function (PRF) utilizing the $B_n$-LHR assumption from [10]. Section 3 presents an optimized concatenation operation within the Burnside groups $B_n$ and $B_r$, emphasizing parallel efficiency. Section 4 explores three distinct approaches to constructing a PRF from the original $B_n$-LHN assumption without derandomization. Within this context, the section introduces designs for the fundamental primitive, the pseudorandom generator (PRG). The section investigates how the PRG-based PRF design significantly reduces the secret-key size compared to alternative designs from the modified $B_n$-LHR assumption. Section 5 provides a comprehensive analysis of the security and efficiency characteristics of our proposed PRG and PRF schemes.

2. Background

Burnside [18], in 1902, put forward the question of whether a finitely generated group all of whose elements have finite order is necessarily finite. Six decades later, Golod and Shafarevich answered the question by constructing an infinite finitely generated group all of whose elements have finite order [19]. Novikov and Adian subsequently showed that the free Burnside group on at least two generators is infinite for every odd exponent greater than 4380. In 1975, Adian improved the result, showing that the group is infinite for every odd exponent greater than 664 [20]. A free Burnside group with n generators and exponent m, denoted by $B(n,m)$, is a group where $w^m = 1$ for all elements w. The group $B(n,2)$ is abelian and has order $2^n$. In the original paper, Burnside proved that the order of $B(n,3)$ is finite. Later, Levi and van der Waerden showed the exact order of $B(n,3)$ to be $3^{s}$, where $s = n + \binom{n}{2} + \binom{n}{3}$ [21]. Furthermore, Burnside also showed that the order of $B(2,4)$ is $2^{12}$, and later Sanov extended the result by showing that $B(n,4)$ is finite in general, although its order is not known [22]. Similarly, for exponent 6, Marshall Hall showed that $B(n,6)$ is finite and that the order of $B(n,6)$ is $2^{a} 3^{b + \binom{b}{2} + \binom{b}{3}}$, where $a = 1 + (n-1)3^{c}$, $b = 1 + (n-1)2^{n}$, and $c = n + \binom{n}{2} + \binom{n}{3}$ [23]. For an exponent m other than 2, 3, 4, and 6, it is unknown whether $B(n,m)$ is finite for all numbers of generators n.

Notation Throughout our discussion, the following conventions are consistently applied: The symbols and signify a security parameter and the set of natural numbers, respectively. The term log is used to denote the binary logarithm. For a set S, the notation indicates that a is sampled uniformly from S. Similarly, for a distribution over a set S, denotes that a is an element in S sampled according to the distribution . The notation represents the bit-strings resulting from the concatenation of strings , which may have different lengths. However, in an algebraic context, signifies a (relatively) free group G generated by a set of generators X. For some polynomial function and the security parameter , the set denotes a set where is the ith element for .

2.1. Relatively Free Group: Burnside Group

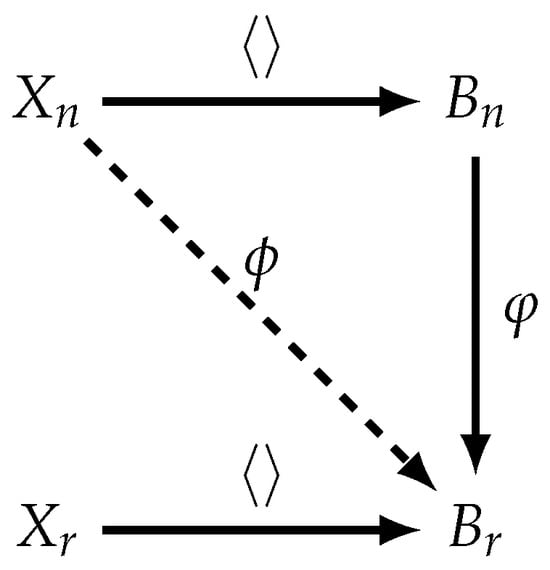

Let represent an arbitrary set of symbols, where . Within , each element and its inverse (or equivalently, ) are denoted as literals. A word w signifies a finite sequence of literals from . A word w is considered reduced if all occurrences of sub-words or are eliminated. A group G is termed a free group with a generating set , denoted as , if every nontrivial element in G can be expressed as a reduced word in . If N is a normal subgroup of a free group G, then the factor group is relatively free if N is fully invariant. That is, for any endomorphism of G. A Burnside group is a (relatively) free group with a generating set , where the order of all the words in is 3 [23,24,25,26]. For the (relatively) free groups and where , the universal property holds as follows: for every mapping , for some (relatively) free group , there exists a unique homomorphism (Figure 1).

Figure 1.

Universal property of a free group.

The group operation, we shall refer to this as a concatenation operation (·), between words is to write and side by side and generate the reduced word in . This is denoted by (or ) for any . Since the order of is 3, for all . The empty word is the identity in and is represented by 1. Each word in can also be represented in normal form, as in Equation (4) [8,9]. More comprehensive details are provided in the literature [18,20,22,23,25,27,28].

In the normal representation of a word w in a Burnside group , , , are the exponents of generators , 2-commutators , and 3-commutators , respectively. The following example illustrates the transformation of a word in a Burnside group .

Example 1.

This is an example of transforming a word in a Burnside group with a generating set to a corresponding normal representation. Properties associated with commutator words in a Burnside group are discussed in Appendix A. The transformation is as follows, where at each step the bold expression from the previous line is simplified using the underlined transformation in the next line:

The order of a group is where . The abelianization operation, denoted by , is defined in Equation (5), which collects all the generators and corresponding exponents in a word from Equation (4).

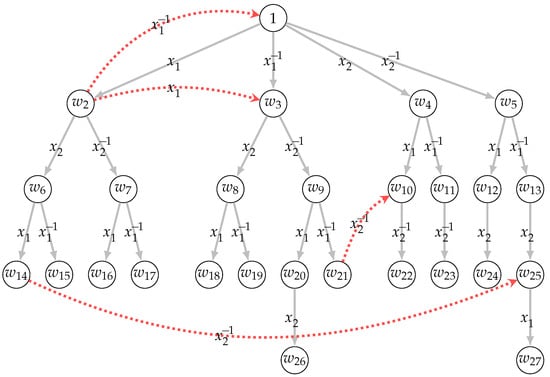

Finitely generated Burnside groups can be geometrically represented using Cayley graphs. The Cayley graph of a Burnside group , defined with respect to a generator set , depicts group words as vertices. Edges connect two vertices if a generator’s (or its inverse) multiplication transforms one into the other. The Cayley distance between two words is the shortest path length between their corresponding vertices in the Cayley graph. The Cayley norm of a word is defined as its distance from the identity word in the Cayley graph. Figure 2 illustrates the partial Cayley graph with essential edges connecting all words in a Burnside group with a generating set in breadth-first order.

Figure 2.

A (partial) Cayley graph of a Burnside group.

2.2. Learning Burnside Homomorphisms with Noise

There exists a homomorphism for any random mapping from a generating set to a Burnside group . denotes a set of homomorphisms from to . For each generator in the generating set , there are possible mappings where . The order of a set of all homomorphisms is . The distribution represents the error distribution in a set of errors (Details are illustrated in Section 2.4). For , the distribution defines the outputs , where is randomly chosen from and with . On the other hand, the corresponding random distribution defines the outputs , where both and are chosen uniformly from and , respectively. Similarly, and represent the oracles with distributions and , respectively. The decisional -LHN problem is to distinguish the oracles and with a non-negligible advantage from given polynomial samples. By setting the value of n, a level of security of bits can be achieved from the decisional -LHN problem. The security parameter is defined as , where . Therefore, the decisional -LHN assumption is formally stated as follows:

Definition 1 (Decisional Bn-LHN Assumption).

For any adversary and sufficiently large security parameter λ, there exists a negligible function , such that

2.3. Minicrypt Using Burnside Learning Problem

A secret-key cryptosystem utilizes a single secret-key for both encryption and decryption tasks. This shared key is exclusive to the communicating entities and necessitates a secure channel for its distribution. Mathematical functions within symmetric key algorithms facilitate the transformation of plaintext into ciphertext and vice versa. The utilization of a symmetric cryptosystem based on the decisional hardness of the -LHN problem is explored in [8]. To encrypt t-bit message m, we define independent words in . Words and are independent if the sets and are disjoint, for all . To encrypt the decimal number m that represents a t-bit message, ciphertext is generated, where and for error . A homomorphism sampled uniformly from represents a shared secret-key. To decrypt a ciphertext , we compute . The plaintext is recovered as m if the word is in the set .

2.4. Error Distribution

The security of the -LHN learning problem relies on the assumed hardness of group theoretic problems and introduced errors. The introduction of errors contributes to making these problems computationally hard, forming the foundation of the security assumptions. Recall that in the context of the hardness of the -LHN problem, we define two Burnside groups and such that . The error distribution in a Burnside group is generated by concatenating generators from in random order, accompanied by random exponents from ternary set [8,9,10].

The probability mass function of errors is precisely defined as follows [8]:

In Equation (7), is the ith component of a vector sampled uniformly from a field . is the set of all permutations of a set . The probability mass function in Equation (7) generates a multiset with possible errors in . The abelianization operation () extracts the generators and their corresponding exponents from a word, while discarding any other exponents, as shown in Equation (5). In a set of samples with , represents an error generated according to the distribution from a set E. For randomness in abelianized samples , an error distribution is required. The computation ensures randomness, emphasizing the importance of establishing the error distribution as defined in Equation (7) to prevent abelianization attacks on the -LHN hardness assumption [8].
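The sampling step behind this distribution can be sketched as follows, reading the description above as: pick a uniform permutation $\sigma$ of the $r$ generator indices and a uniform vector $v \in \mathbb{F}_3^{r}$, then form the unreduced word $x_{\sigma(1)}^{v_1} \cdots x_{\sigma(r)}^{v_r}$. This reading, the function name `sample_error_word`, and the list-of-pairs encoding are assumptions for illustration; reducing the word to normal form still requires the concatenation operation of the Burnside group.

```python
import random


def sample_error_word(r, rng):
    """Return an unreduced error word as a list of (generator index, exponent)."""
    sigma = list(range(1, r + 1))
    rng.shuffle(sigma)                        # uniform permutation of {1, ..., r}
    v = [rng.randrange(3) for _ in range(r)]  # uniform vector in F_3^r
    # Position i of the word carries generator x_{sigma(i)} with exponent v_i.
    return [(sigma[i], v[i]) for i in range(r)]
```

Under this reading there are $3^{r} \cdot r!$ equally likely (permutation, vector) pairs, and many of them reduce to the same group element, which is why the text speaks of a multiset of errors.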

Let , denotes a multiset of errors defined in Equation (7). Here, represents a set of errors with Cayley norm l. Correspondingly, let , where is the associated underlying set of the multiset . The function is defined by simplifying an error in M through multiple concatenation operations in the Burnside group . The order of the multiset M is . Similarly, the order of the subsets and is and , respectively. Since the function f maps an error from to , has precisely preimages for an error in . In other words, errors in constitute different representations for an error in . Considering identical errors in as a cluster, there are such clusters in . The straightforward approach to sample errors according to distribution is to determine , as indices and exponents, respectively. However, this approach requires multiple concatenation operations to obtain the simplified error. A bottleneck for cryptosystems based on the -LHN assumption arises due to the multiple concatenations for simplifying an error. However, achieving a distribution of errors within an error set E can be realized through two distinct methods. In the initial approach, we establish a mapping from a multiset M to an error set E using multiple concatenation operations. This constitutes a one-time precomputation, serving for all subsequent error computations from set E based on the distribution . As a second approach, we assign the subset an appropriate weight, ensuring that the induced distribution in M is uniform, a requirement for the -LHN cryptosystem. By assigning a distribution weight to the subset as , we can achieve a uniform distribution of M, representing the distribution of E [10].

3. Bn Optimization

The cryptographic primitives developed in this study are constructed based on the -LHN hardness assumption. The -LHN hardness assumption assures that no adversary distinguishes the outputs from random outputs with non-negligible advantage, see Definition (1). Here, and is a secret-homomorphism sampled uniformly from . The error is sampled from a set of errors E according to the distribution and (·) is the concatenation operation. Cryptographic primitives leveraging the -LHN problem require an optimized design for two key operations: 1. Concatenation operations within the Burnside groups and . 2. Homomorphisms from a Burnside group to a Burnside group for . In this section, an efficient concatenation operation is introduced within the Burnside groups and for , highlighting a parallel approach.

3.1. Representation of a Word

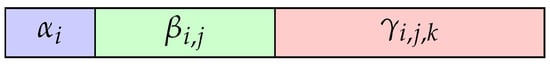

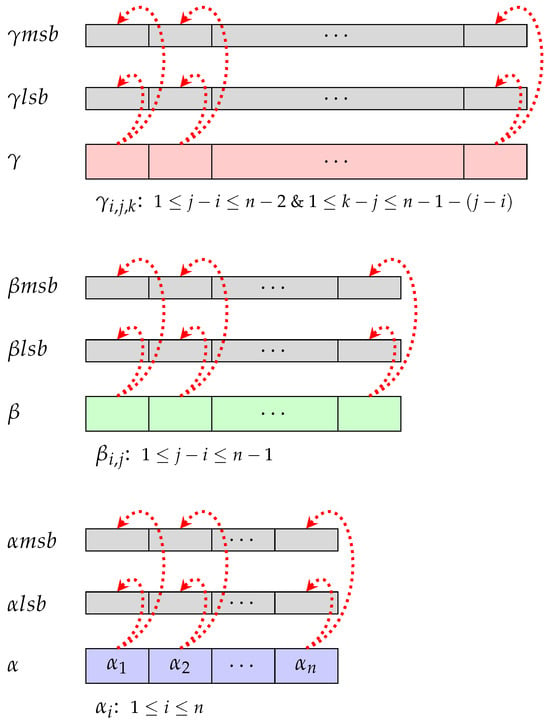

The most fundamental operation within the Burnside groups is the concatenation operation. To achieve an efficient concatenation operation, we utilize the normal representation of a word w in a Burnside group $B_n$, as displayed in Equation (4). The normal representation of a word w in $B_n$ comprises $n$ generators, $\binom{n}{2}$ 2-commutators, and $\binom{n}{3}$ 3-commutators alongside their respective exponents. To execute the concatenation operation, it suffices to store the exponents of generators, 2-commutators, and 3-commutators of a word w as a sequence. Figure 3 shows a word w in a Burnside group $B_n$. In memory, a word w in a Burnside group $B_n$ requires space for $n$ exponents for generators, $\binom{n}{2}$ exponents for 2-commutators, and $\binom{n}{3}$ exponents for 3-commutators.

Figure 3.

Normal representation of a word $w$ in $B_n$.

The first block of a word w in Figure 3 sequentially holds the exponents $\alpha_i$ for $1 \le i \le n$. We shall refer to this as the alpha-block of a word w for future convenience. The second block of a word w, referred to as the beta-block, sequentially stores the exponents $\beta_{i,j}$ for $1 \le i < j \le n$. The beta-block contains $\binom{n}{2}$ exponents. The third block of a word w, known as the gamma-block, sequentially contains the exponents $\gamma_{i,j,k}$ for $1 \le i < j < k \le n$. The gamma-block encompasses $\binom{n}{3}$ exponents. Collectively, we represent a word as $w = (\alpha_i, \beta_{i,j}, \gamma_{i,j,k})$ for $1 \le i < j < k \le n$.
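The three blocks can be held in memory in many ways. The sketch below uses plain dictionaries keyed by index tuples, which is an assumption made for readability rather than the packed layout used later in this section; the helper names `empty_word`, `exponent_count`, and `group_order` are hypothetical.

```python
from itertools import combinations
from math import comb


def empty_word(n):
    """Identity word of B_n as three exponent maps (alpha, beta, gamma)."""
    alpha = {i: 0 for i in range(1, n + 1)}
    beta = {p: 0 for p in combinations(range(1, n + 1), 2)}
    gamma = {t: 0 for t in combinations(range(1, n + 1), 3)}
    return alpha, beta, gamma


def exponent_count(n):
    """Number of stored exponents: n + C(n, 2) + C(n, 3)."""
    return n + comb(n, 2) + comb(n, 3)


def group_order(n):
    """Order of B_n: each stored exponent ranges over {0, 1, 2}."""
    return 3 ** exponent_count(n)
```

For example, a word in the group on four generators stores 4 + 6 + 4 = 14 ternary exponents, so each word fits in fewer than 23 bits of entropy.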

3.2. Computing the Group Operation: The Collecting Process

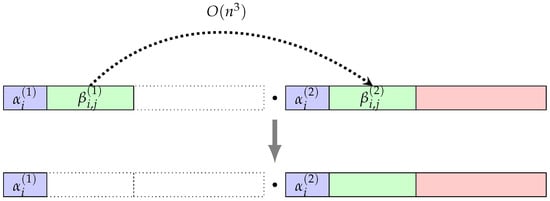

To concatenate words and in a Burnside group , represented as , we utilize a three-stage collecting process. For , let , , and be the corresponding exponents in the alpha-block, beta-block, and gamma-block in the resultant word , respectively. Furthermore, let the words and be defined as and , respectively.

In stage 1 of the collecting process, the exponent in the gamma-block of merges with the corresponding exponent in the gamma-block of , as shown in Figure 4. Since the 3-commutator commutes with everything, as in Property (4), the time complexity to merge an exponent with the corresponding exponent is . Since there are exponents in the gamma-block of , the time complexity to merge the gamma-block of with the gamma-block of is .

Figure 4.

Collecting process (stage 1).

In stage 2, the exponent in the beta-block of merges with the corresponding exponent in the beta-block of , as shown in Figure 5. During the merge, the exponent swaps with the exponents in the alpha block of . Each swap between and generates a 3-commutator exponent, as in Property (2), which can freely move towards the gamma-block of the resulting word w with complexity. Since there are exponents in the beta-block of that swap with the exponents in the alpha-block of , the time complexity for stage 2 is .

Figure 5.

Collecting process (stage 2).

In the final stage, the lexicographical order among exponents in the alpha-block of and alpha-block of is restored, as shown in Figure 6. The swapping among the exponents of the alpha-blocks of and creates a 2-commutator exponent, as in Property (1). The generated 2-commutator exponent crosses the exponents in the remaining alpha-block of to merge into the beta-block of the resultant word w. Since there are swaps among generators in the alpha-blocks of and , and as there are at most crossings for each generated 2-commutator exponent, the time complexity for stage 3 is .

Figure 6.

Collecting process (stage 3).

Example 2 illustrates the collecting process of a concatenation operation in a Burnside group .

Example 2.

In a Burnside group with a generating set , let and be two words in . The concatenation operation using the three-stage collecting process in is computed as follows, where at each step the bold expression from the previous line is simplified using the underlined transformation in the next line:

3.3. Computing the Group Operation: A Direct Approach

For the subsequent discussions, we use the following properties associated with exponents of generators, 2-commutators, and 3-commutators. The properties follow from the details as discussed in Appendix A.

Property 1 (Swapping Generators).

Let and be words in a Burnside group , defined as and , respectively. For , the collecting process in allows the swapping of exponents and , updating the resulting exponent as follows: .

Property 2 (Swapping 2-Commutator and Generator).

Let and be words in a Burnside group , defined as and , respectively. For and with and , the collecting process in allows the swapping of exponents and . The swap updates the resultant exponent in two scenarios:

- Case I:

- If or , .

- Case II:

- If , .

Note: If or , the collecting process in allows the swapping of exponents and without necessitating updates to the resultant exponent .

Property 3 (Swapping 2-Commutators).

Let and be words in a Burnside group , defined as and , respectively. For and , the collecting process in allows the swapping of exponents and without requiring updates to the resultant exponents.

Property 4 (Swapping 3-Commutator with Everything).

Let the words and in a Burnside group be defined as and , respectively. For and , the collecting process in allows the swapping of exponents and without requiring updates to the resultant exponents. Similarly, for and , the collecting process in allows the swapping between exponents and without requiring updates to the resultant exponents. Likewise, for and , the collecting process in allows the swapping between exponents and without requiring updates to the resultant exponents.

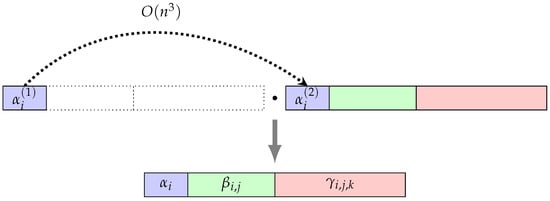

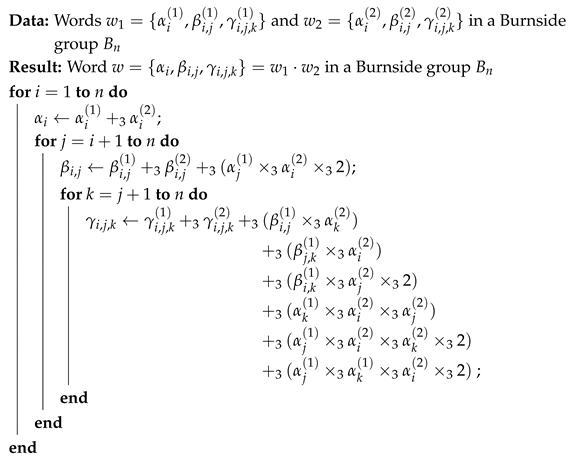

Utilizing the idea of the collecting process, we design a direct concatenation operation to generate the resultant word for , as shown in Algorithm 1. Note: and are addition and multiplication mod 3 operations, respectively.

Algorithm 1: Direct concatenation operation (·)
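A closed-form version of the collecting process can be sketched as below. It is not a transcription of Algorithm 1: the update rules were worked out for this sketch from the standard identities of exponent-3 groups, under the conventions $[x,y] = x^{-1}y^{-1}xy$ and $[x,y,z] = [[x,y],z]$, so signs may differ from those of Appendix A if other conventions are used there. Words are triples of dictionaries `(alpha, beta, gamma)` keyed by `i`, `(i, j)`, and `(i, j, k)`; this layout and all function names are assumptions.

```python
import random
from itertools import combinations


def identity(n):
    """Empty word of B_n: all exponents zero."""
    idx = range(1, n + 1)
    return ({i: 0 for i in idx},
            {p: 0 for p in combinations(idx, 2)},
            {t: 0 for t in combinations(idx, 3)})


def generator(i, n):
    """The word consisting of the single generator x_i."""
    alpha, beta, gamma = identity(n)
    alpha[i] = 1
    return alpha, beta, gamma


def random_word(n, rng):
    """Uniformly random normal-form word of B_n."""
    return tuple({key: rng.randrange(3) for key in part} for part in identity(n))


def concat(w1, w2, n):
    """Normal form of w1 * w2 in B_n, all exponents reduced mod 3."""
    a, b, g = w1
    a2, b2, g2 = w2
    idx = range(1, n + 1)
    alpha = {i: (a[i] + a2[i]) % 3 for i in idx}
    # Moving x_i of w2 left across x_j of w1 (i < j) leaves [x_i, x_j]^(-1).
    beta = {(i, j): (b[i, j] + b2[i, j] - a2[i] * a[j]) % 3
            for i, j in combinations(idx, 2)}
    gamma = {}
    for i, j, k in combinations(idx, 3):
        # 2-commutators of w1 crossing generators of w2.
        cross = b[i, j] * a2[k] - b[i, k] * a2[j] + b[j, k] * a2[i]
        # Newly created 2-commutators crossing the remaining generators.
        fresh = a2[i] * (a2[j] * a[k] - a[j] * a[k] - a[j] * a2[k])
        gamma[i, j, k] = (g[i, j, k] + g2[i, j, k] + cross + fresh) % 3
    return alpha, beta, gamma
```

The loop over index triples dominates the cost, so the sketch performs a number of arithmetic steps cubic in n. Two properties are easy to check on random inputs: the operation is associative, and every word cubed gives the identity.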

3.4. Computing the Group Operation: A Parallelizable Direct Approach

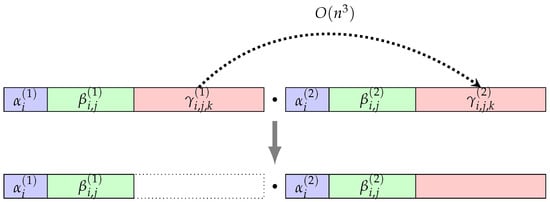

The direct concatenation operation defined for a Burnside group that utilizes the collecting process requires time complexity, as illustrated in Algorithm 1. In this section, we enhance the design of the direct concatenation operation in Algorithm 1, where we can utilize parallel computation. We shall refer to this as a parallelizable direct concatenation operation. To achieve a parallelizable direct concatenation operation, we further extend the memory representation of the alpha-block, beta-block, and gamma-block of a word in a Burnside group . We employ the Type I and Type II representations proposed by [29].

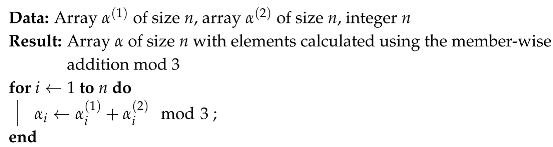

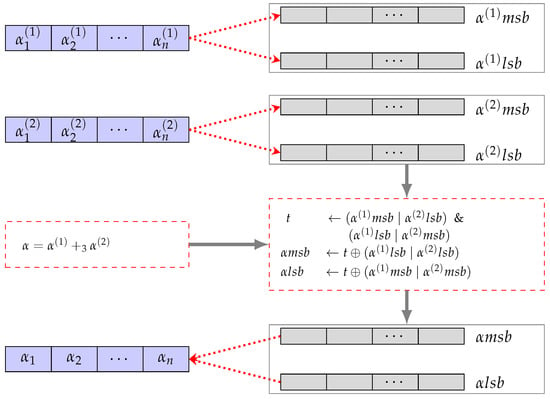

3.5. Parallel Addition and Multiplication mod 3

For the subsequent discussion, we introduce a parallel design for member-wise addition modulo 3 and multiplication modulo 3, crucial for implementing the parallelizable concatenation operation. To illustrate, let us examine the initial computation from Algorithm 1. With the naive approach, we can formulate the algorithm, as depicted in Algorithm 2.

Algorithm 2: Addition modulo 3 operation

For the parallel design of the addition modulo 3 and multiplication modulo 3, we employ two binary arrays of size n, namely and , in lieu of a ternary array of the same size. This is denoted as . The transformation from the ternary array to its equivalent binary representations and is conducted as follows: If is 0, both corresponding bits and are set to 0. In the case where is 1, the bit is set to 0, and the bit is set to 1. Finally, if is 2, the bit is set to 1, and the bit is set to 0. Similarly, the binary representations for an alpha-block (), beta-block (), and gamma-block () for a word w are shown in Figure 7.

Figure 7.

Memory representation of a word in $B_n$.
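The ternary-to-binary transformation described above can be sketched with Python integers acting as bit arrays. The names `to_planes`, `from_planes`, `hi`, and `lo` are hypothetical; the encoding itself follows the text, with 0 stored as (0, 0), 1 as (0, 1), and 2 as (1, 0).

```python
def to_planes(ternary):
    """Pack a ternary array into two bit-planes (hi, lo) stored as integers.

    Position i of the array becomes bit i of each integer.
    """
    hi = lo = 0
    for i, t in enumerate(ternary):
        if t == 1:
            lo |= 1 << i
        elif t == 2:
            hi |= 1 << i
    return hi, lo


def from_planes(hi, lo, n):
    """Inverse of to_planes for an array of length n."""
    return [2 * ((hi >> i) & 1) + ((lo >> i) & 1) for i in range(n)]
```

With this layout a whole block of exponents occupies two machine words (or two big integers), so one bitwise instruction acts on all positions at once.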

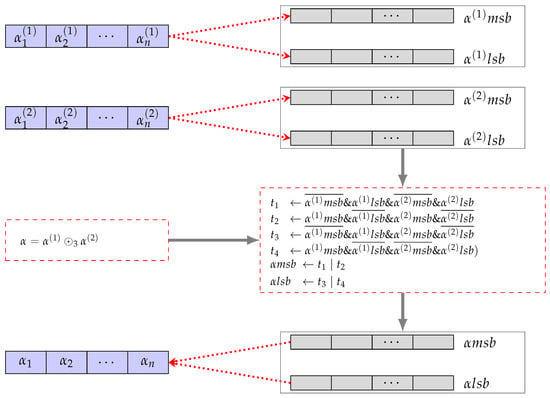

Utilizing Algorithm 3, we achieve time complexity in a parallel setting, a significant improvement compared to Algorithm 2, for the computation for . This computation can be implemented with just seven machine instructions. Similarly, we achieve the time complexity in a parallel setting for for using Algorithm 4. This provides a streamlined and efficient approach for both addition and multiplication operations in comparison to the previously mentioned Algorithm 2. From now on, we represent the parallel designs for and for as and , respectively, without using the indices. This simplification enhances the clarity and conciseness in our notation. Equivalent parallel designs are depicted in Figure 8 and Figure 9.

| Algorithm 3: Parallel addition mod 3 operation, |

Data: Ternary arrays and of size n Result: Ternary array of size n for the member-wise addition mod 3, where |

Figure 8. Parallel addition mod 3 operation.

Figure 9. Parallel multiplication mod 3 operation.

| Algorithm 4: Parallel multiplication mod 3 operation, |

Data: Ternary arrays and of size n Result: Ternary array of size n for the member-wise multiplication mod 3, where |

3.6. Multi-Block Representation of a Word

The extended memory representation of a word in a Burnside group , designed to achieve time complexity, is organized as follows. Note: One block is an n-sized array filled from left to right in index order.

3.6.1. Representation of Alpha-Block

To represent an alpha-block of a word , we define a block that stores all exponents for . That is, the block stores the entries .

3.6.2. Representation of Beta-Block

To represent a beta-block of a word , we define n-sized blocks for . The block stores all exponents , such that for from left to right. The remaining entries are set to zero. For instance, the block stores the exponents . The last entry in the block is set to zero. Similarly, the last block stores only exponent as . All other entries except the first in the block are set to zero.

3.6.3. Representation of Gamma-Block

To represent a gamma-block of a word , we define n-sized blocks for and . The block stores all exponents , such that and for , from left to right in index order. The remaining entries are set to zero. For instance, the block stores the exponents . The last two entries in the block are set to zero. Similarly, the last block stores only exponent as . All other entries except the first in the block are set to zero.
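The block layout of Sections 3.6.1 to 3.6.3 can be sketched as follows. The function names and the dictionary-based inputs are hypothetical, and the index conventions are our reading of the text: generators are numbered 1 to n, beta exponents are indexed by pairs i < j, and gamma exponents by triples i < j < k.

```python
from itertools import combinations


def alpha_block(alpha, n):
    """One n-sized block holding the exponents of the n generators."""
    return [alpha[i] for i in range(1, n + 1)]


def beta_blocks(beta, n):
    """n - 1 blocks; block i holds beta[(i, j)] for j = i+1..n, zero-padded to size n."""
    blocks = {}
    for i in range(1, n):
        row = [beta[(i, j)] for j in range(i + 1, n + 1)]
        blocks[i] = row + [0] * (n - len(row))
    return blocks


def gamma_blocks(gamma, n):
    """One block per pair i < j < n; block (i, j) holds gamma[(i, j, k)] for k = j+1..n."""
    blocks = {}
    for i in range(1, n - 1):
        for j in range(i + 1, n):
            row = [gamma[(i, j, k)] for k in range(j + 1, n + 1)]
            blocks[(i, j)] = row + [0] * (n - len(row))
    return blocks


# Illustrative word on n = 4 generators with nonzero exponents,
# so that the zero padding is easy to see.
n = 4
alpha = {i: 1 + i % 2 for i in range(1, n + 1)}
beta = {(i, j): 1 + (i + j) % 2 for i, j in combinations(range(1, n + 1), 2)}
gamma = {(i, j, k): 1 + (i + j + k) % 2 for i, j, k in combinations(range(1, n + 1), 3)}

A = alpha_block(alpha, n)    # [2, 1, 2, 1]
B = beta_blocks(beta, n)     # B[1] == [2, 1, 2, 0], B[3] == [2, 0, 0, 0]
G = gamma_blocks(gamma, n)   # G[(1, 2)] == [1, 2, 0, 0], G[(2, 3)] == [2, 0, 0, 0]
```

Padding every block to the same size n is what allows the block-wise addition and multiplication mod 3 to be applied uniformly to alpha-, beta-, and gamma-blocks.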

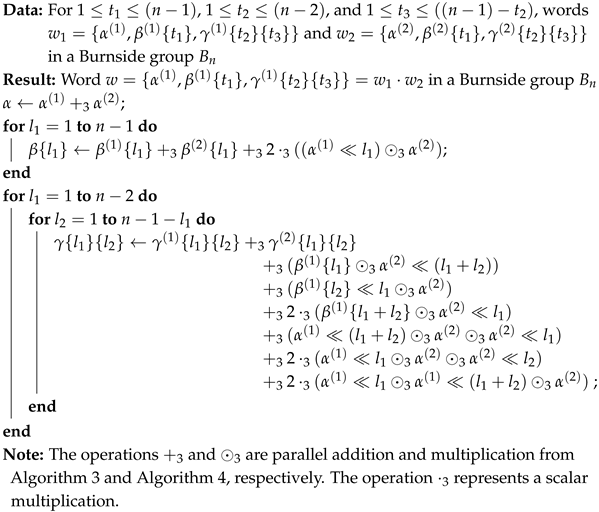

Utilizing the multi-block structure, we can represent a word in a Burnside group as , where , , and . Similarly, we represent words and in a multi-block structure as and for , , and . Algorithm 5 demonstrates the parallelizable direct concatenation operation in a Burnside group using the parallel block-addition and block-multiplication from Algorithm 3 and Algorithm 4, respectively.

| Algorithm 5: Parallelizable direct concatenation operation (·) |

|

3.7. Homomorphism Optimization

A homomorphism from a Burnside group , where , to another Burnside group is defined by mapping each generator to a word . Consequently, a homomorphism is represented as , where . Consider a word in a Burnside group with exponents , , and in the alpha-block, beta-block, and gamma-block, respectively, for . The computation of the homomorphism on a word , denoted as , is given by Equation (8).

As a naive approach, a 2-commutator and a 3-commutator require 3 and 9 concatenation operations in , respectively. Note: We calculate the inverse of a word as . While computing the homomorphism, however, many computations are duplicated. For example, we can reuse the 2-commutator in the computation . Thus, to optimize the homomorphism computation, we generate weight-2 and weight-3 look-up tables that store the 2-commutators () and 3-commutators (), respectively. For a given homomorphism , generating the weight-2 and weight-3 look-up tables is a one-time precomputation whose results can be reused in all subsequent computations of .
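The look-up-table idea can be sketched as follows. As a stand-in for the target Burnside group, the sketch uses the group of 3×3 upper unitriangular matrices over the integers mod 3 (a small group of exponent 3), so that the inverse of a word can be computed by squaring. The function names, the commutator convention [u, v] = u⁻¹v⁻¹uv, and the left-nested 3-commutator [[u, v], w] are assumptions made for illustration.

```python
from itertools import combinations


def mul(u, v):
    """Toy exponent-3 group: (a, b, c) encodes the matrix [[1, a, c], [0, 1, b], [0, 0, 1]] mod 3."""
    return ((u[0] + v[0]) % 3, (u[1] + v[1]) % 3, (u[2] + v[2] + u[0] * v[1]) % 3)


def inv(w):
    """In a group of exponent 3, w * w * w is the identity, so the inverse is w * w."""
    return mul(w, w)


def comm(u, v):
    """2-commutator, assuming the convention [u, v] = u^-1 v^-1 u v."""
    return mul(mul(inv(u), inv(v)), mul(u, v))


def build_tables(images):
    """One-time precomputation of weight-2 and weight-3 commutator tables.

    images[i] is the image of the i-th generator under the homomorphism.
    """
    idx = range(len(images))
    weight2 = {(i, j): comm(images[i], images[j]) for i, j in combinations(idx, 2)}
    # Each 3-commutator reuses the stored 2-commutator instead of recomputing it.
    weight3 = {(i, j, k): comm(weight2[(i, j)], images[k])
               for i, j, k in combinations(idx, 3)}
    return weight2, weight3


# Hypothetical images of three generators in the toy group.
images = [(1, 0, 0), (0, 1, 0), (1, 1, 0)]
weight2, weight3 = build_tables(images)
```

After the tables are built, evaluating the homomorphism on a word only needs table look-ups and exponentiation by the stored beta and gamma exponents, rather than fresh commutator computations for every word.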

4. PRF Construction

In this section, we design three progressively refined constructions of a PRF family using the GGM approach, grounded in the -LHN assumption. The primary challenge in designing a PRF based on the -LHN assumption lies in managing the associated errors. The key idea in our PRF designs is to extract the errors from the secret-key of the underlying PRF. The first PRG design, termed the direct PRG, applies the -LHN assumption by capitalizing on the lower entropy of the set of errors E compared to a Burnside group . In the second approach, the parameterized PRG, the function description of the PRG is derived from the public parameters and the secret-key of the underlying PRF. Although this may seem counterintuitive at first, the intermediate PRG used in the GGM PRG-based PRF construction is subject to a less strict requirement, as discussed in the outlines that follow. In the final approach, we propose the indexed PRG, which is associated with a set of public parameters and an index.

Construction 1 (Direct PRG).

Let be a homomorphism in . For some , we define a function with as follows:

where each is computed as for . Here, the values are sampled from the Burnside group , while are chosen from the error set E.

The input and output bit sizes of G are given by and , respectively. Here, p and denote the entropy of the Burnside groups and , respectively, while represents the entropy of the homomorphism set , and characterizes the entropy of the error set .

Theorem 1.

If the -LHN assumption holds and , then the function G from Construction 1 is a PRG.

Proof.

The pseudorandomness of the output of G follows directly from the -LHN assumption. It therefore remains to show that G is length-expanding, that is, that its output bit length exceeds its input bit length.

The input bit size of G is given by , while the output bit size is . For G to be a PRG, it must satisfy , which simplifies to

Rearranging the inequality, we obtain

Solving for t, we obtain the required bound:

Since the entropy of the set of errors E (denoted by ) is smaller than the entropy of the Burnside group (denoted by ), we can select a sufficiently large t to overcome the entropy in the input of G. This ensures that the entropy of the output of G exceeds that of its input, thereby establishing G as a PRG. □
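The counting argument in the proof can be illustrated numerically. The sketch below assumes the following accounting, which is our reading of Construction 1 rather than a formula quoted from it: the seed consists of one homomorphism (λ bits), t preimages (p bits each), and t errors (`eps` bits each), and the output consists of t preimage/image pairs (p + `q` bits each). The names `q` and `eps` and the numeric values are illustrative only.

```python
import math


def prg_sizes(lam, p, q, eps, t):
    """Input and output bit sizes of G under the assumed accounting."""
    in_bits = lam + t * (p + eps)
    out_bits = t * (p + q)
    return in_bits, out_bits


def min_samples(lam, q, eps):
    """Smallest integer t with t * (q - eps) > lam, i.e. output longer than input."""
    if q <= eps:
        raise ValueError("the error entropy must be below the target-group entropy")
    return math.floor(lam / (q - eps)) + 1


# Illustrative values, not taken from the paper's tables.
lam, p, q, eps = 100, 50, 30, 10
t = min_samples(lam, q, eps)
in_bits, out_bits = prg_sizes(lam, p, q, eps, t)
```

Under this accounting the preimage bits cancel on both sides, so the stretch grows by `q - eps` bits per sample, which is why a smaller error entropy permits a smaller t.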

Construction 2 (PRF from Direct PRG).

(Outline) We construct a PRF by utilizing a length-stretching PRG G from Construction 1 as follows: First, we construct a length-doubling PRG from a length-stretching PRG G by cascading multiple PRGs in series. Second, we define a PRF , for any input and secret-key k, using the GGM approach as follows:

The secret-key k is where is sampled from a set of homomorphisms . Moreover, , for . The size of the secret-key k is where . However, a disadvantage of this construction appears to be a notably large secret-key size, even for small values of n.
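The GGM step of this outline can be sketched generically. The length-doubling PRG below is a SHA-256-based stand-in chosen only to make the sketch runnable. It is not the direct PRG of Construction 1, and the function names are hypothetical.

```python
import hashlib


def prg(seed: bytes) -> bytes:
    """Stand-in length-doubling PRG: 32-byte seed in, 64 bytes out (NOT the LHN-based G)."""
    left = hashlib.sha256(b"L" + seed).digest()
    right = hashlib.sha256(b"R" + seed).digest()
    return left + right


def ggm_prf(key: bytes, x: str) -> bytes:
    """GGM tree walk: at each input bit keep the left (0) or right (1) half of the PRG output."""
    state = key
    for bit in x:
        if bit not in "01":
            raise ValueError("input must be a bit string")
        out = prg(state)
        half = len(out) // 2
        state = out[:half] if bit == "0" else out[half:]
    return state


key = bytes(range(32))
y = ggm_prf(key, "1011")
```

An m-bit input costs m PRG invocations, one per level of the binary tree, which matches the computation count used in the performance discussion.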

We suggest an adjustment to the direct PRG that significantly decreases the secret-key size of the PRF, particularly for large n. This modified construction introduces extra public parameters and is called the parameterized PRG. The construction is detailed as follows:

Construction 3 (Parameterized PRG).

Let be a homomorphism in . For some , a function with is defined as

For , and . Furthermore, is a public parameter associated with an error . Here, the input and output bit size of a function G are and , respectively.

Claim 1.

A length-doubling parameterized PRG is sufficient for the GGM PRG-based PRF approach, as demonstrated in Construction 3 and Theorem 2.

Theorem 2.

Let the -LHN assumption hold and . A function G, as in Construction 3, is a PRG if the following holds: for the input seed to a function G, we generate an associated public parameter sampled uniformly from for .

Proof.

The proof is straightforward once we observe that the theorem restates the -LHN assumption from a different perspective. To illustrate the proof, let us consider a scenario where an adversary aims to distinguish the oracles with distributions and .

Consider a function G obtained from Construction 3. With G, a distribution produces an output from a secret input for . The secret input is uniformly sampled, that is and for . Additionally, an adversary having access to from its oracle also has access to a set of public parameters sampled uniformly from . Here, for all and . Similarly, the corresponding random distribution is identical to , except that the output is replaced with a randomly generated output for . By utilizing the hybrid argument, the proof is simplified and becomes straightforward from the -LHN assumption. □

Construction 4 (PRF from parameterized PRG).

Let be a secret-key where , for , and . Let G be a parameterized PRG, as defined in Construction 3, that uses a set of public parameters for . A pseudorandom function (PRF) , for input string and secret-key k is defined as

where the ith iteration of a function call uses a set of public parameters for . Note: and represent an equal left and right half of the output .

We further propose a modification to the parameterized PRG that yields a significant decrease in the secret-key size of a PRF. The construction is detailed as follows:

Construction 5 (Indexed PRG).

Let be a homomorphism in . An indexed PRG with index is constructed as

where and e is sampled from a set of errors E. Furthermore, a is sampled from a Burnside group and is a public parameter associated with the input seed e. Here, the input and output bit size for a function are the entropies of a set of errors E and a Burnside group , respectively.

Claim 2.

In particular, for a Burnside group with , an indexed PRG is a length-doubling PRG because the entropy of a Burnside group is roughly twice the entropy of a set of errors E. Furthermore, indexed PRG is sufficient for a GGM-based PRF construction as demonstrated in Construction 6 and Theorem 3.

Theorem 3.

Let the -LHN assumption hold, and let φ be a homomorphism sampled uniformly from . A function , as in Construction 5, is a PRG if it is used as an intermediate function in a PRF from Construction 6.

Proof.

The proof is similar to that of Theorem 2. Moreover, if is used as an intermediate function, as in Construction 6, the following holds: for the input seed to a function , we generate an associated public parameter a sampled uniformly from a Burnside group . □

Construction 6 (PRF from indexed PRG).

Let be a secret-key where , . Let be an indexed PRG as defined in Construction 5. For , let represent a set of public parameters, where is sampled uniformly from a Burnside group . A PRF for input string and secret-key k is defined as

where the ith iteration of a function call uses an associated public parameter for . Note: and represent an equal left and right half of the output .
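The control flow of this construction, in which the ith level of the GGM walk uses its own public parameter, can be sketched as follows. The sketch assumes that the secret-key consists of a fixed homomorphism-like secret together with an initial seed, which is our reading of the construction. The function `indexed_prg` is a hash-based placeholder for the indexed PRG, not an implementation of it, and all names and sizes are hypothetical.

```python
import hashlib


def indexed_prg(phi: bytes, public_a: bytes, seed: bytes) -> bytes:
    """Placeholder for the indexed PRG: 16-byte seed in, 32 bytes out (length-doubling)."""
    return hashlib.sha256(phi + public_a + seed).digest()


def indexed_prf(phi: bytes, seed: bytes, public: list, x: str) -> bytes:
    """GGM walk in which level i uses the public parameter public[i]."""
    if len(x) != len(public):
        raise ValueError("need exactly one public parameter per input bit")
    state = seed
    for a_i, bit in zip(public, x):
        out = indexed_prg(phi, a_i, state)
        half = len(out) // 2
        state = out[:half] if bit == "0" else out[half:]
    return state


# Fixed illustrative values; in the construction these are sampled at random.
phi = b"\x01" * 32                              # stands in for the secret homomorphism
seed = b"\x02" * 16                             # stands in for the secret error seed
public = [bytes([i]) * 16 for i in range(4)]    # one public parameter per level
tag = indexed_prf(phi, seed, public, "0110")
```

Only the seed changes from level to level, while the secret indexing the PRG stays fixed, which is what keeps the secret-key small in this design.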

5. Performance Evaluation

In all PRF constructions, the universal property of relatively free groups, specifically Burnside groups and (), is utilized. The security parameter is tied to a homomorphism , where each generator has possible mappings, with . Thus, the number of homomorphisms is , yielding

Similarly, the entropies of the Burnside groups and are denoted by p and , respectively. For a constant in the target Burnside group , Table 1 illustrates the entropies and associated security bits for different values of n, where . These entropies quantify the complexity and security level of cryptographic operations involving Burnside groups, providing a foundation for evaluating various cryptographic applications.
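The entropies discussed here can be computed from the known order of the free Burnside group of exponent 3 on n generators, which is 3 raised to the power n + C(n, 2) + C(n, 3). The sketch below uses this formula together with the counting argument above (each of the n generators can be mapped to any element of the target group). The function names and the parameter name `r` for the number of generators of the target group are assumptions.

```python
from math import comb, log2


def normal_form_length(n: int) -> int:
    """Number of ternary exponents in the normal form of a word on n generators."""
    return n + comb(n, 2) + comb(n, 3)


def group_entropy_bits(n: int) -> float:
    """Entropy in bits of a uniform element of the exponent-3 Burnside group on n generators."""
    return normal_form_length(n) * log2(3)


def hom_entropy_bits(n: int, r: int) -> float:
    """Entropy in bits of a uniform homomorphism: n generators, each mapped into the target group."""
    return n * group_entropy_bits(r)


for n in (4, 6, 8):
    print(n, normal_form_length(n), round(group_entropy_bits(n), 2),
          round(hom_entropy_bits(n, 4), 2))
```

The cubic growth of the normal-form length in n is what makes the key sizes of the earlier constructions large even for moderate n.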

Table 1. Entropies (in bits) associated to and .

5.1. Efficiency of Burnside Group Operation and Homomorphism

Table 2 compares concatenation times in Burnside groups for varying n (with ) using Algorithms 1 and 5. Similarly, Table 3 analyzes the efficiency in (, ), showing the computation time (ns) and ops/sec for . The results reveal how n affects complexity, crucial for cryptographic applications of .

Table 2. Average time * for concatenation operation in and .

Table 3. Average time * for where , with .

5.2. Performance Metrics of PRG and PRF Constructions

Table 4 and Table 5 compare the input and output sizes, in bits, for the two PRG constructions. Table 4 analyzes Construction 1 with , where is the error set entropy. Table 5 evaluates Construction 3, showing p, , and public parameter (pp) size for various n.

Table 4. Input/output (in bits) for direct PRG for .

Table 5. Input/output (in bits) for parameterized PRG for .

For a PRF constructed from the indexed PRG (Construction 5), the details are as follows:

- Secret-key size = bits.

- Input size = m bits.

- Output size = bits.

- Public parameter size = bits.

- Number of computations (for all m invocations of ) = m.

Finally, for a 1-bit output, the PRF requires an average of computations, which equals the total number of computations divided by the output size.

6. Conclusions

In conclusion, this paper explored foundational concepts related to relatively free groups, with a specific focus on the Burnside group. The -LHN hardness assumption, together with its error distribution, provides the post-quantum hardness assumption underlying our constructions. The streamlined concatenation operation within Burnside groups and shows that these operations can be parallelized efficiently. The paper presented three distinct approaches for constructing a pseudorandom function (PRF) based on the original -LHN assumption without derandomization. Notably, the PRG-based PRF design demonstrated a significant reduction in secret-key size compared to alternatives stemming from the modified -LHR assumption.

Author Contributions

Conceptualization, D.K.P.; Formal analysis, D.K.P.; Supervision, A.R.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author(s).

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Properties of Commutators in a Burnside Group

A 2-commutator is a result of commuting two words in a Burnside group , as in Definition A1. Similarly, an l-commutator is defined as in Definition A2.

Definition A1

(2-Commutator). For and , a 2-commutator in is defined as:

In general, an l-commutator in , for all , is defined as

Definition A2

(l-Commutator). Let , an l-commutator in is defined as

Some properties associated with 2-commutators include

- In a 2-commutator, the interchange of two elements yields the inverse of the original 2-commutator.

- 2-commutators commute among themselves.

Some properties associated with 3-commutators include

- In the case where two elements within a 3-commutator are identical, the resulting 3-commutator is the identity element.

- The value of a 3-commutator remains unaffected by cyclic rotation.

- In a 3-commutator, the interchange of two elements yields the inverse of the original 3-commutator.

- The 3-commutator exhibits commutativity with all elements.

For all , the following holds for l-commutators:

- •

References

- Ajtai, M. Generating hard instances of lattice problems (extended abstract). In Proceedings of the Twenty-Eighth Annual ACM Symposium on Theory of Computing, Philadelphia, PA, USA, 22–24 May 1996; pp. 99–108.

- Brakerski, Z.; Langlois, A.; Peikert, C.; Regev, O.; Stehlé, D. Classical hardness of learning with errors. In Proceedings of the Forty-Fifth Annual ACM Symposium on Theory of Computing, Grand Rapids, MN, USA, 1–4 June 2013; pp. 575–584.

- Micciancio, D.; Regev, O. Lattice-based cryptography. In Post-Quantum Cryptography; Springer: Berlin/Heidelberg, Germany, 2009; pp. 147–191.

- Regev, O. New lattice-based cryptographic constructions. J. ACM (JACM) 2004, 51, 899–942.

- Regev, O. Lattice-based cryptography. In Proceedings of the Advances in Cryptology-CRYPTO 2006: 26th Annual International Cryptology Conference, Santa Barbara, CA, USA, 20–24 August 2006; Proceedings 26. Springer: Berlin/Heidelberg, Germany, 2006; pp. 131–141.

- Regev, O. On lattices, learning with errors, random linear codes, and cryptography. J. ACM (JACM) 2009, 56, 1–40.

- Regev, O. The learning with errors problem (invited survey). In Proceedings of the 2010 IEEE 25th Annual Conference on Computational Complexity, Boston, MA, USA, 9–12 June 2010; pp. 191–204.

- Baumslag, G.; Fazio, N.; Nicolosi, A.R.; Shpilrain, V.; Skeith, W.E. Generalized learning problems and applications to non-commutative cryptography. In International Conference on Provable Security; Springer: Berlin/Heidelberg, Germany, 2011; pp. 324–339.

- Fazio, N.; Iga, K.; Nicolosi, A.R.; Perret, L.; Skeith, W.E. Hardness of learning problems over Burnside groups of exponent 3. Des. Codes Cryptogr. 2015, 75, 59–70.

- Pandey, D.K.; Nicolosi, A.R. Learning Burnside Homomorphisms with Rounding and Pseudorandom Function. Secur. Inf. Technol. Commun. (SecITC2023) 2024, 14534, 1–19.

- Blum, M.; Micali, S. How to generate cryptographically strong sequences of pseudorandom bits. SIAM J. Comput. 1984, 13, 850–864.

- Goldreich, O.; Goldwasser, S.; Micali, S. How to construct random functions. J. ACM 1986, 33, 792–807.

- Luby, M. Pseudorandomness and Cryptographic Applications; Princeton University Press: Princeton, NJ, USA, 1996; Volume 1.

- Levin, L.A. The tale of one-way functions. Probl. Inf. Transm. 2003, 39, 92–103.

- Daemen, J.; Rijmen, V. AES Proposal: Rijndael; Report; AES submission; National Institute of Standards and Technology (NIST): Gaithersburg, MD, USA, 1999.

- Daemen, J.; Rijmen, V. The design of Rijndael: AES-the advanced encryption standard. In Information Security and Cryptography; Springer: Berlin/Heidelberg, Germany, 2002.

- Naor, M.; Reingold, O. Number-theoretic constructions of efficient pseudo-random functions. J. ACM 2004, 51, 231–262.

- Burnside, W. On an unsettled question in the theory of discontinuous groups. Quart. J. Pure Appl. Math. 1902, 33, 230–238.

- Golod, E.S.; Shafarevich, I.R. On the class field tower. Izv. Ross. Akad. Nauk. Seriya Mat. 1964, 28, 261–272.

- Adian, S.I. The Burnside Problem and Identities in Groups; Springer: Berlin/Heidelberg, Germany, 1979.

- Levi, F.; van der Waerden, B.L. Über eine besondere Klasse von Gruppen. In Abhandlungen aus dem Mathematischen Seminar der Universität Hamburg; Springer: Berlin/Heidelberg, Germany, 1933; Volume 9, pp. 154–158.

- Shanov, I. Solution of the Burnside’s problem for exponent 4. Leningr. State Univ. Ann. (Uchenye Zap.) Mat. Ser. 1940, 10, 166–170.

- Hall, M. Solution of the Burnside Problem for Exponent 6. Proc. Natl. Acad. Sci. USA 1957, 43, 751–753.

- Gupta, N. On groups in which every element has finite order. Am. Math. Mon. 1989, 96, 297–308.

- Hall, M. The Theory of Groups; Macmillan Company: New York, NY, USA, 1959.

- Ivanov, S.V. The free Burnside groups of sufficiently large exponents. Int. J. Algebra Comput. 1994, 4, 1–308.

- Adian, S.I. The Burnside problem and related topics. Russ. Math. Surv. 2010, 65, 805.

- Burnside, W. The Collected Papers of William Burnside: Commentary on Burnside’s Life and Work; Papers 1883–1899; Oxford University Press: Oxford, UK, 2004; Volume 1.

- Harrison, K.; Page, D.; Smart, N.P. Software implementation of finite fields of characteristic three, for use in pairing-based cryptosystems. LMS J. Comput. Math. 2002, 5, 181–193.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).