Finite Element Method for Solving the Screened Poisson Equation with a Delta Function

Abstract

1. Introduction

- We propose a finite element method using integrated Legendre basis functions to solve the screened Poisson equation with Dirac delta forcing, preserving sparsity and capturing singular behavior.

- We develop a structured discretization approach that leads to Banded-Block-Banded Arrowhead matrices, and apply it in 1D, 2D, and 3D domains.

- We employ and adapt the alternating direction implicit (ADI) method to solve the resulting systems efficiently.

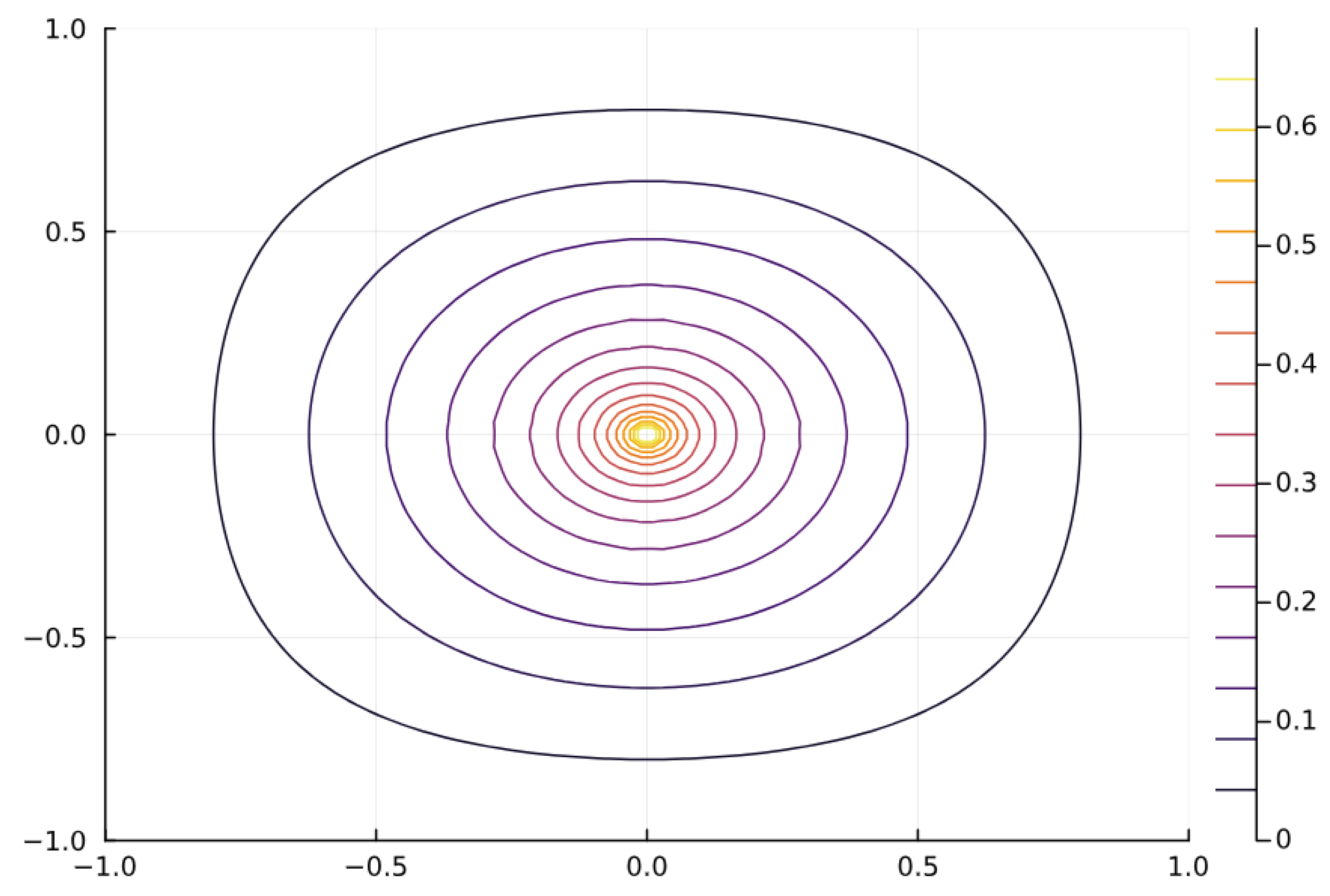

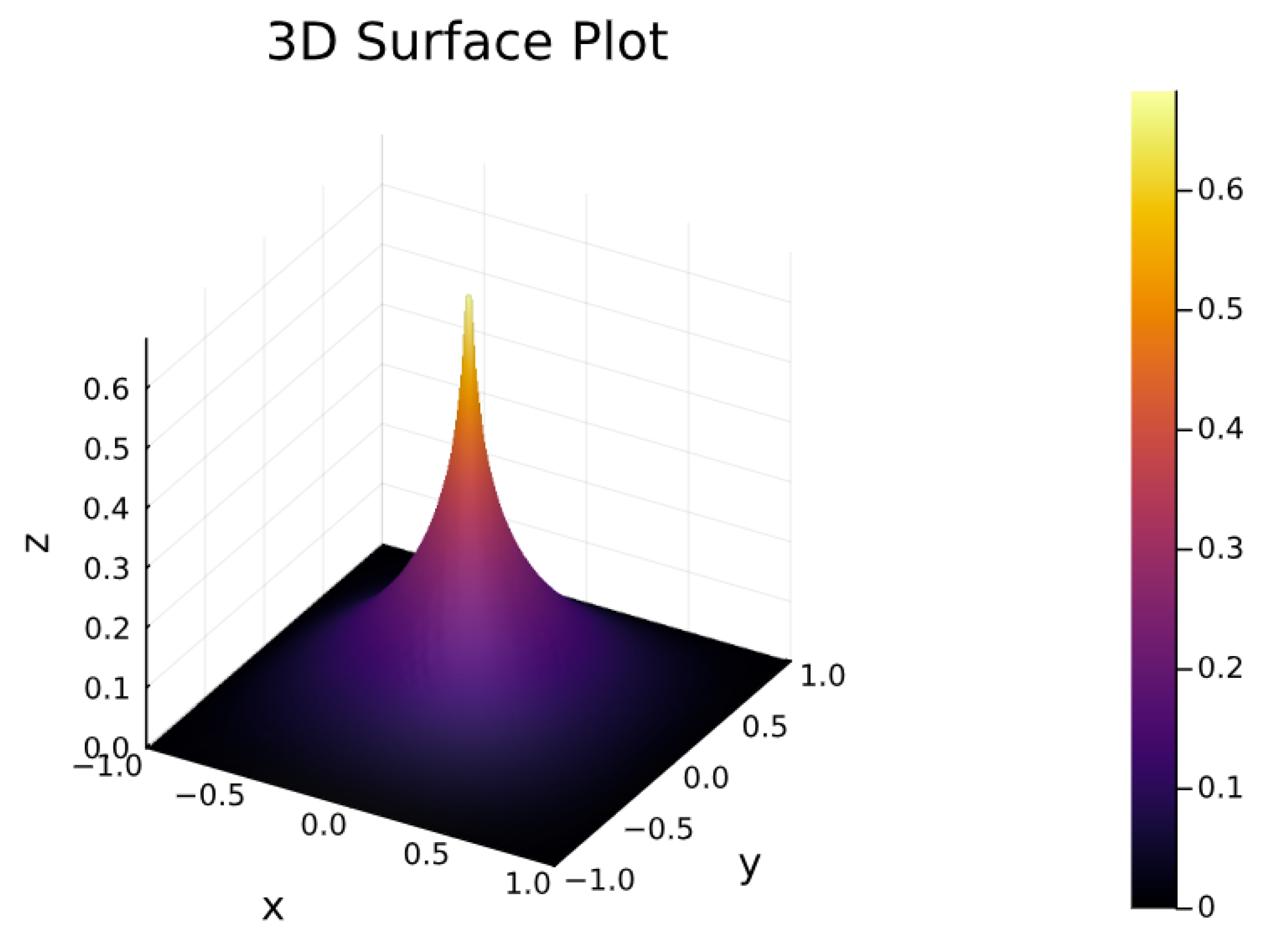

- We present detailed numerical results that confirm the expected Green’s function behavior and validate the accuracy of our method for singular sources.

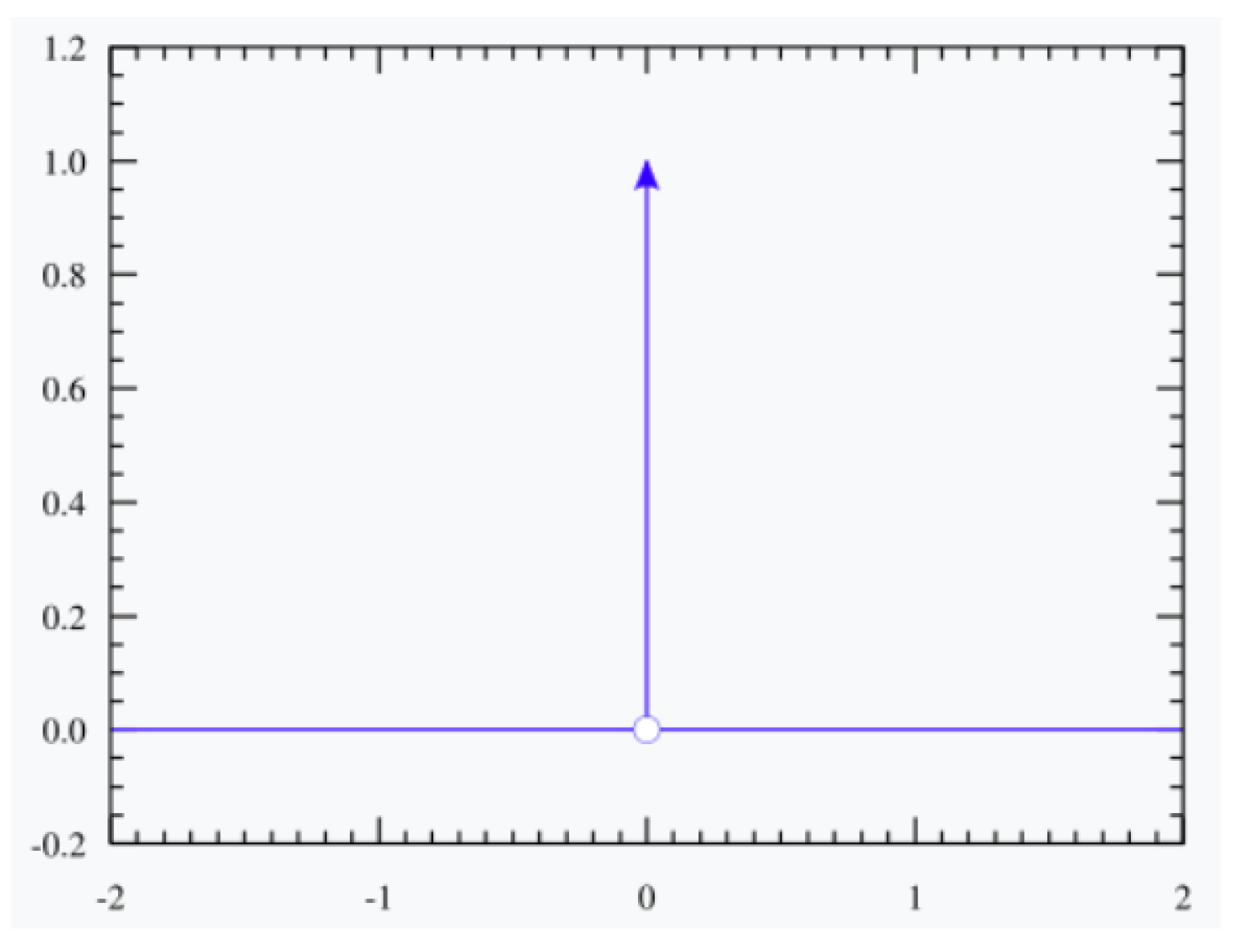

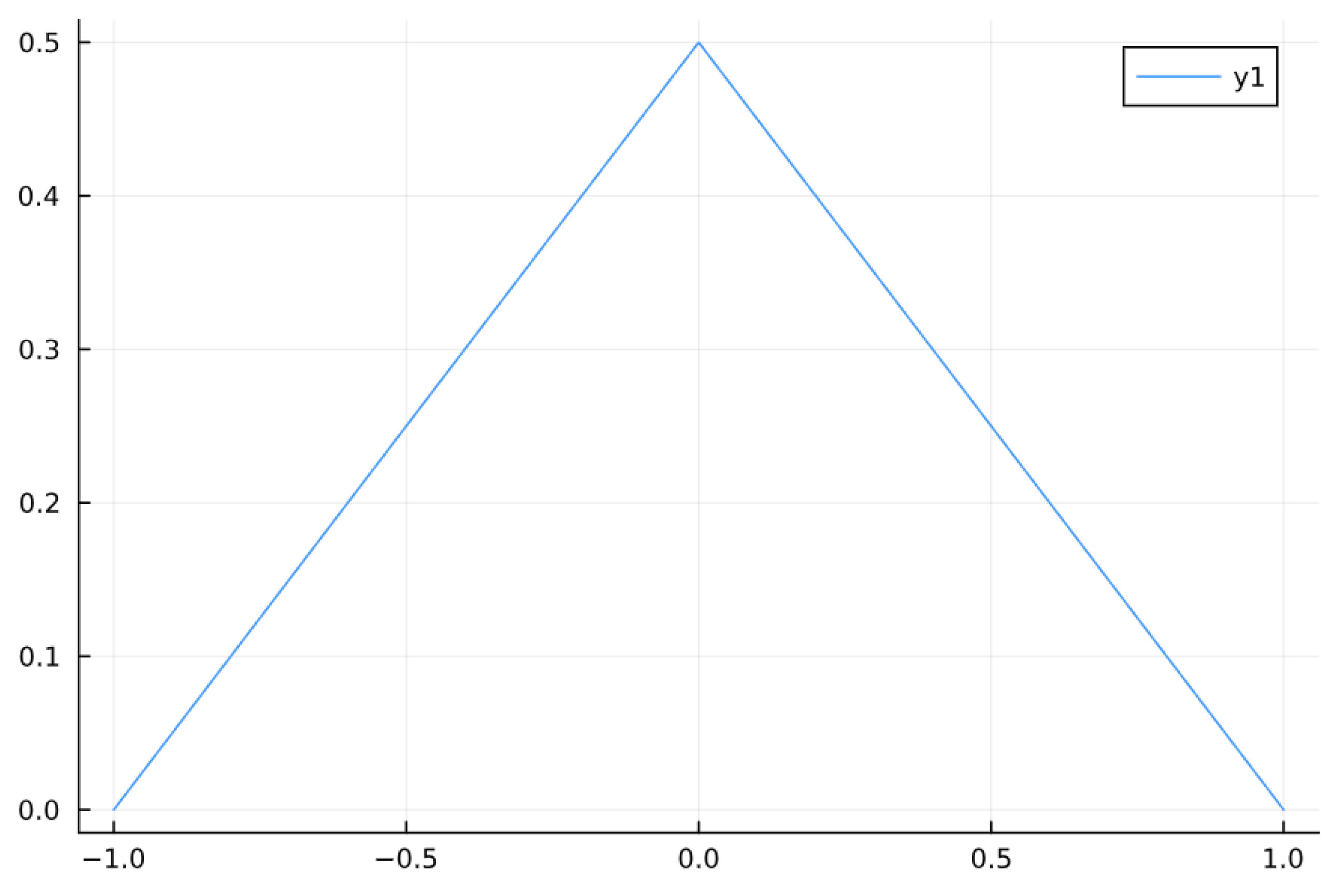

2. Delta Function

2.1. Definitions

2.2. As a Measure
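In the measure view, $\delta_{x_0}$ acts on a continuous test function by point evaluation. Assuming the sign convention $-\Delta u + \omega^{2} u = \delta_{x_0}$ with homogeneous Dirichlet data (the paper's convention may differ), the Galerkin problem in a finite element space $V_h = \operatorname{span}\{\varphi_k\}$ can be sketched as:

$$
\langle \delta_{x_0}, v \rangle = \int_{\Omega} v \,\mathrm{d}\delta_{x_0} = v(x_0),
\qquad
\int_{\Omega} \left( \nabla u_h \cdot \nabla v + \omega^{2} u_h v \right) \mathrm{d}x = v(x_0) \quad \forall\, v \in V_h .
$$

Writing $u_h = \sum_j c_j \varphi_j$ and taking $v = \varphi_k$ gives $(K + \omega^{2} M)\,\mathbf{c} = \mathbf{b}$ with $b_k = \varphi_k(x_0)$, where $K$ and $M$ are the weak Laplacian and mass matrix. Because functions in $V_h$ are continuous, this point evaluation is well defined, even though in two and three dimensions $\delta_{x_0}$ is not a bounded functional on $H_0^1(\Omega)$.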

2.3. Generalizations

3. Integrated Legendre Functions

3.1. A Set of Basis Functions Defined over an Interval
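A minimal sketch of the basis on the reference interval $[-1, 1]$, assuming the common convention $\varphi_k(t) = \int_{-1}^{t} P_k(s)\,\mathrm{d}s$ for $k \ge 1$ (the paper's normalization may differ). It uses NumPy's `numpy.polynomial.Legendre` class; the helper names `integrated_legendre` and `closed_form` are hypothetical. The check confirms that each $\varphi_k$ vanishes at both endpoints, which is what makes these functions natural interior ("bubble") functions.

```python
import numpy as np
from numpy.polynomial import Legendre


def integrated_legendre(k: int) -> Legendre:
    """Return phi_k(t) = int_{-1}^t P_k(s) ds on [-1, 1] (assumed normalization).

    For k >= 1, phi_k = (P_{k+1} - P_{k-1}) / (2k + 1), a polynomial of degree
    k + 1 that vanishes at t = -1 and t = 1 (an interior 'bubble' function).
    """
    if k < 1:
        raise ValueError("bubble functions start at k = 1")
    return Legendre.basis(k).integ(lbnd=-1)


def closed_form(k: int) -> Legendre:
    """The same function written via the identity (2k+1) P_k = P'_{k+1} - P'_{k-1}."""
    return (Legendre.basis(k + 1) - Legendre.basis(k - 1)) * (1.0 / (2 * k + 1))


if __name__ == "__main__":
    t = np.linspace(-1.0, 1.0, 101)
    for k in range(1, 6):
        phi = integrated_legendre(k)
        # Endpoint values (should be ~0) and agreement with the closed form.
        print(k, phi(-1.0), phi(1.0), np.max(np.abs(phi(t) - closed_form(k)(t))))
```

Because $\varphi_k' = P_k$, derivatives of distinct bubbles are orthogonal, which is the source of the sparsity exploited later.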

3.2. Multiple Intervals

3.2.1. Mass Matrix

3.2.2. Weak Laplacian
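On a single interval of length $h$, the integrated Legendre bubbles give a diagonal weak Laplacian (their derivatives are the orthogonal $P_k$) and a mass matrix whose only nonzeros satisfy $|j - k| \in \{0, 2\}$. The sketch below, assuming the normalization $\varphi_k = \int_{-1}^{t} P_k$ and an affine map from $[-1, 1]$ to the interval, computes both with Gauss–Legendre quadrature (`numpy.polynomial.legendre.leggauss`); `element_matrices` is a hypothetical helper.

```python
import numpy as np
from numpy.polynomial import Legendre
from numpy.polynomial.legendre import leggauss


def element_matrices(p: int, h: float):
    """Mass M and weak Laplacian K of the bubbles phi_1..phi_{p-1} on one interval of length h.

    The map x = x_e + h (t + 1) / 2 gives dx = (h/2) dt and d/dx = (2/h) d/dt.
    """
    t, w = leggauss(p + 1)                           # exact up to degree 2p + 1
    phis = [Legendre.basis(k).integ(lbnd=-1) for k in range(1, p)]
    V = np.array([phi(t) for phi in phis])           # values at quadrature nodes
    D = np.array([phi.deriv()(t) for phi in phis])   # t-derivatives, i.e. P_k(t)
    M = (h / 2.0) * (V * w) @ V.T                    # int phi_j phi_k dx
    K = (2.0 / h) * (D * w) @ D.T                    # int phi_j' phi_k' dx
    return M, K


if __name__ == "__main__":
    M, K = element_matrices(p=8, h=0.5)
    np.set_printoptions(precision=3, suppress=True)
    print("weak Laplacian (diagonal):\n", K)
    print("mass (nonzero only for |j - k| in {0, 2}):\n", M)
```

For reference, the entries match the closed forms $K_{kk} = \tfrac{2}{h}\tfrac{2}{2k+1}$, $M_{kk} = \tfrac{h}{2}\,\tfrac{1}{(2k+1)^2}\big(\tfrac{2}{2k+3} + \tfrac{2}{2k-1}\big)$ and $M_{k,k+2} = -\tfrac{h}{2}\,\tfrac{2}{(2k+1)(2k+3)(2k+5)}$.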

3.3. Homogeneous Dirichlet Boundary

- (1) It is a matrix with a block-banded structure, where the block-bandwidths are .

- (2) The top-left block follows a banded pattern with bandwidths .

- (3) The blocks in the first row, denoted as , have bandwidths .

- (4) The blocks in the first column, represented as , exhibit bandwidths .

- (5) All other blocks, labeled as , are diagonal.
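A structure of this kind can be reproduced in a small experiment. The sketch below assumes a 1D mesh with interior hat functions plus bubbles $\varphi_k = \int_{-1}^{t} P_k$ on every interval, the operator $-u'' + \omega^2 u$ with $u = 0$ at both ends, and unknowns ordered as [hats | degree-2 bubbles | degree-3 bubbles | ...]. That ordering, and the helper names `assemble` and `block_pattern`, are illustrative assumptions rather than the paper's exact construction.

```python
import numpy as np
from numpy.polynomial import Legendre
from numpy.polynomial.legendre import leggauss


def assemble(nodes, p, omega):
    """Hypothetical helper: K + omega^2 M for -u'' + omega^2 u, u = 0 at both ends.

    Basis: interior hats plus bubbles phi_k = int_{-1}^t P_k (k = 1..p-1) on each
    interval, ordered block-wise as [hats | k=1 bubbles | k=2 bubbles | ...].
    """
    n = len(nodes) - 1                       # number of intervals
    nh = n - 1                               # interior hat functions
    A = np.zeros((nh + (p - 1) * n, nh + (p - 1) * n))
    t, w = leggauss(p + 1)                   # exact for integrands of degree <= 2p + 1
    bubbles = [Legendre.basis(k).integ(lbnd=-1) for k in range(1, p)]
    # Local basis on [-1, 1]: left hat, right hat, then the bubbles.
    V = np.array([(1 - t) / 2, (1 + t) / 2] + [phi(t) for phi in bubbles])
    D = np.array([np.full_like(t, -0.5), np.full_like(t, 0.5)]
                 + [phi.deriv()(t) for phi in bubbles])
    for e in range(n):
        h = nodes[e + 1] - nodes[e]
        # Affine map: dx = (h/2) dt and d/dx = (2/h) d/dt.
        Ae = (2.0 / h) * (D * w) @ D.T + omega**2 * (h / 2.0) * (V * w) @ V.T
        # Global indices; None marks a hat on a boundary node (eliminated by u = 0).
        idx = [e - 1 if e > 0 else None, e if e < n - 1 else None]
        idx += [nh + (k - 1) * n + e for k in range(1, p)]
        for a, ga in enumerate(idx):
            for b, gb in enumerate(idx):
                if ga is not None and gb is not None:
                    A[ga, gb] += Ae[a, b]
    return A


def block_pattern(A, n, p, tol=1e-12):
    """0/1 array marking nonzero blocks (block 0 = hats, block k = degree-(k+1) bubbles)."""
    nh = n - 1
    edges = [0, nh] + [nh + k * n for k in range(1, p)]
    P = np.zeros((p, p), dtype=int)
    for I in range(p):
        for J in range(p):
            blk = A[edges[I]:edges[I + 1], edges[J]:edges[J + 1]]
            P[I, J] = int(blk.size > 0 and np.max(np.abs(blk)) > tol)
    return P


if __name__ == "__main__":
    A = assemble(np.linspace(-1.0, 1.0, 5), p=5, omega=1.0)   # four intervals
    print(block_pattern(A, n=4, p=5))
```

In this ordering the hat block is tridiagonal, hats couple only to the first two bubble blocks (a linear function is orthogonal to $\varphi_k$ for $k \ge 3$), bubble blocks couple only to blocks two degrees away, and every bubble-bubble block is diagonal because bubbles on different intervals do not overlap.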

4. Discretizations of the Screened Poisson Equation

4.1. Screened Poisson in 1D
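As a 1D check against the Green's function, the sketch below uses piecewise-linear elements only (the lowest-order case, not the full integrated Legendre discretization) and places the source on a mesh node so that the kink of the solution is resolved. It assumes $-u'' + \omega^2 u = \delta(x - x_0)$ on $(-1, 1)$ with $u(\pm 1) = 0$, whose exact solution is $G(x) = \sinh(\omega(x_< + 1))\,\sinh(\omega(1 - x_>)) / (\omega \sinh 2\omega)$ with $x_< = \min(x, x_0)$ and $x_> = \max(x, x_0)$. The names `green_1d` and `p1_delta_solve` are hypothetical.

```python
import numpy as np


def green_1d(x, x0, omega):
    """Exact solution of -u'' + omega^2 u = delta(x - x0) on (-1, 1) with u(-1) = u(1) = 0."""
    lo, hi = np.minimum(x, x0), np.maximum(x, x0)
    return np.sinh(omega * (lo + 1)) * np.sinh(omega * (1 - hi)) / (omega * np.sinh(2 * omega))


def p1_delta_solve(n, j0, omega):
    """Piecewise-linear Galerkin solve on n uniform intervals, point source on node j0.

    Returns the interior nodes and the nodal values of the discrete solution.
    """
    x = np.linspace(-1.0, 1.0, n + 1)
    h, m = 2.0 / n, n - 1
    I, E = np.eye(m), np.eye(m, k=1) + np.eye(m, k=-1)
    K = (2 * I - E) / h          # weak Laplacian: int u' v'
    M = h / 6 * (4 * I + E)      # mass matrix:    int u v
    b = np.zeros(m)
    b[j0 - 1] = 1.0              # b_i = phi_i(x0): only the hat centred at x0 is nonzero there
    u = np.linalg.solve(K + omega**2 * M, b)
    return x[1:-1], u


if __name__ == "__main__":
    omega, x0 = 3.0, -0.5
    for n in (32, 64, 128):
        xi, u = p1_delta_solve(n, j0=n // 4, omega=omega)   # node n/4 sits at x = -0.5
        print(n, np.max(np.abs(u - green_1d(xi, x0, omega))))
```

Halving $h$ should reduce the maximum nodal error by roughly a factor of four, consistent with second-order accuracy for this lowest-order discretization.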

4.2. Screened Poisson in 2D

Algorithm 1: Generalized ADI Solver
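The steps of Algorithm 1 are not reproduced here. As an illustration only, the sketch below applies a textbook two-sided ADI iteration to a generalized Sylvester form of the 2D problem. With a tensor-product basis $\varphi_i(x)\varphi_j(y)$ and the assumed convention $-\Delta u + \omega^2 u = \delta$, the Galerkin system is $K X M + M X K + \omega^2 M X M = F$, which equals $A X M + M X A = F$ with $A = K + \tfrac{\omega^2}{2} M$, and a point source gives a rank-one $F$. Piecewise-linear $K$, $M$ stand in for the paper's matrices; shifts are log-spaced over the pencil's spectrum (a simple heuristic, not Wachspress's optimal parameters); all function names are hypothetical.

```python
import numpy as np


def p1_matrices(n):
    """P1 weak Laplacian K and mass M on n uniform intervals of [-1, 1] (interior nodes)."""
    h, m = 2.0 / n, n - 1
    I, E = np.eye(m), np.eye(m, k=1) + np.eye(m, k=-1)
    return (2 * I - E) / h, h / 6 * (4 * I + E)


def generalized_adi(A, M, F, shifts, tol=1e-12, max_cycles=100):
    """Textbook ADI sketch for A X M + M X A = F, with A and M symmetric positive definite.

    For each shift p, two half-steps solve with the pencil A + p M:
        (A + p M) X_half M = F - M X (A - p M)
        M X_new (A + p M)  = F - (A - p M) X_half M
    Dense solves are used for brevity; banded solvers would be used in practice.
    """
    X = np.zeros_like(F)
    norm_f = np.linalg.norm(F)
    for _ in range(max_cycles):
        for p in shifts:
            Ap, Am = A + p * M, A - p * M
            # X_half = (A + pM)^{-1} [F - M X (A - pM)] M^{-1}   (M is symmetric)
            Y = np.linalg.solve(Ap, F - M @ X @ Am)
            X_half = np.linalg.solve(M, Y.T).T
            # X_new = M^{-1} [F - (A - pM) X_half M] (A + pM)^{-1}   (A + pM is symmetric)
            Z = np.linalg.solve(M, F - Am @ X_half @ M)
            X = np.linalg.solve(Ap, Z.T).T
        if np.linalg.norm(A @ X @ M + M @ X @ A - F) <= tol * norm_f:
            break
    return X


def solve_point_source_2d(n, omega, num_shifts=8):
    """-Δu + ω²u = δ at the centre of [-1, 1]^2 with a tensor-product P1 basis (n even)."""
    K, M = p1_matrices(n)
    A = K + 0.5 * omega**2 * M          # K X M + M X K + ω² M X M = A X M + M X A
    e = np.zeros(n - 1)
    e[n // 2 - 1] = 1.0                 # hats evaluated at x0 = 0 (node n/2)
    F = np.outer(e, e)                  # δ(x - x0) δ(y - y0) gives a rank-one load
    # Spectrum of the pencil (A, M), computed directly for this small demo.
    lam = np.linalg.eigvals(np.linalg.solve(M, A)).real
    shifts = np.geomspace(lam.min(), lam.max(), num_shifts)
    return A, M, F, generalized_adi(A, M, F, shifts)


if __name__ == "__main__":
    A, M, F, X = solve_point_source_2d(n=32, omega=2.0)
    # Dense Kronecker reference (column-major vec): vec(A X M) = (M ⊗ A) vec(X).
    L = np.kron(M, A) + np.kron(A, M)
    X_ref = np.linalg.solve(L, F.ravel(order="F")).reshape(F.shape, order="F")
    print("relative error vs direct solve:", np.max(np.abs(X - X_ref)) / np.max(np.abs(X_ref)))
```

Each half-step only involves one-dimensional operators, so the cost per iteration scales with the 1D solves rather than with the full two-dimensional system.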

4.3. Screened Poisson in 3D
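Assuming the same tensor-product construction in three dimensions (basis $\varphi_i(x)\varphi_j(y)\varphi_k(z)$, the same one-dimensional weak Laplacian $K$ and mass matrix $M$ in each direction, and the convention $-\Delta u + \omega^2 u = \delta$), the Galerkin system would take the Kronecker form below, with the $x$ index varying fastest in $\mathbf{u}$. This is a sketch, not the paper's stated formulation.

$$
\left( M \otimes M \otimes K + M \otimes K \otimes M + K \otimes M \otimes M + \omega^{2}\, M \otimes M \otimes M \right) \mathbf{u}
= \boldsymbol{\varphi}(z_0) \otimes \boldsymbol{\varphi}(y_0) \otimes \boldsymbol{\varphi}(x_0),
$$

where $\boldsymbol{\varphi}(s) = (\varphi_1(s), \varphi_2(s), \dots)^{\top}$. The separable point source $\delta(x - x_0)\,\delta(y - y_0)\,\delta(z - z_0)$ therefore yields a rank-one (Kronecker-product) right-hand side, and the operator is a sum of Kronecker products that ADI-type splittings can treat one direction at a time.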

5. Computational Cost Analysis

5.1. Sparse Matrix Construction

5.2. ADI Iterative Algorithm

5.3. Scalability and Parallelization

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| FEM | Finite Element Method |
| ADI | Alternating Direction Implicit |



© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tang, L.; Tang, Y. Finite Element Method for Solving the Screened Poisson Equation with a Delta Function. Mathematics 2025, 13, 1360. https://doi.org/10.3390/math13081360
