In this section, we adapt the Pareto Explorer to perform, starting from a given Pareto optimal solution, a movement along the Pareto set/front toward a knee solution. Since we consider problems with many objectives, we first have to (slightly) modify the definition of the knee, because for such problems the knee might not be located in the “center” of the Pareto front. Next, we characterize such knee solutions. From Theorem 2 it follows that the normal of the CHIM can be used to steer the search in objective space, and that it also provides a possible stopping criterion for the algorithm we present afterwards.

3.1. The Problem

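For a given $\beta \in \mathbb{R}^k$ with $\beta_i \geq 0$, $i = 1, \dots, k$, and $\sum_{i=1}^k \beta_i = 1$, the NBI sub-problem [50] is defined as

$$\max_{x,t}\; t \quad \text{s.t.} \quad \Phi\beta + t\eta = F(x), \quad h(x) = 0, \quad g(x) \leq 0,$$

where the pay-off matrix $\Phi$ and the CHIM normal $\eta$ are as in Problem (12).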

It is well known that for problems with $k > 2$ objectives not all points on the Pareto front can be obtained by solving an NBI sub-problem for a $\beta$ with the restriction that $\beta_i \geq 0$, $i = 1, \dots, k$. As an example, consider the unconstrained three-objective problem

where

Figure 3 shows the Pareto front (in yellow) for this problem, where we have chosen

,

,

, and

. The blue circles represent the CHIM for this problem, and the black stars the resulting solutions obtained by solving (13) (note that every blue circle can be expressed as $\Phi\beta$ for a $\beta \in \mathbb{R}^3$ with $\beta_i \geq 0$, $i = 1, 2, 3$, and $\sum_{i=1}^3 \beta_i = 1$).
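The CHIM and its normal can be illustrated numerically. The following NumPy sketch assumes that the ideal point has been shifted to the origin, so that the CHIM consists of the points $\Phi\beta$ with $\beta_i \geq 0$ and $\sum_i \beta_i = 1$. It computes $\eta$ as the unit vector orthogonal to the affine hull of the columns of $\Phi$ (i.e., $\Phi^\top\eta$ is a constant vector), oriented toward the origin. This is one possible construction and not necessarily the one used in Problem (12); the function names `chim_normal` and `chim_points` are hypothetical.

```python
import itertools
import numpy as np

def chim_normal(Phi):
    """Unit normal of the CHIM, oriented toward the origin.

    The CHIM lies in the affine hull of the columns of Phi, so a normal eta
    satisfies Phi.T @ eta = c * ones. Assumes Phi is nonsingular.
    """
    w = np.linalg.solve(Phi.T, np.ones(Phi.shape[0]))
    eta = w / np.linalg.norm(w)
    # orientation heuristic: make the entries negative (pointing toward the origin)
    return -eta if eta.sum() > 0 else eta

def chim_points(Phi, n_div):
    """CHIM points Phi @ beta on a simplex lattice: beta_i >= 0, sum(beta) = 1."""
    k = Phi.shape[1]
    lattice = [b for b in itertools.product(range(n_div + 1), repeat=k) if sum(b) == n_div]
    betas = np.array(lattice, dtype=float) / n_div
    return betas, betas @ Phi.T              # row j equals Phi @ betas[j]

Phi = np.ones((3, 3)) - np.eye(3)            # toy pay-off matrix, ideal point at 0
eta = chim_normal(Phi)
betas, pts = chim_points(Phi, n_div=2)
print(np.round(eta, 4))                      # [-0.5774 -0.5774 -0.5774]
print(len(pts))                              # 6
```

All CHIM points have the same inner product with $\eta$, which is what makes $\eta$ a normal of the CHIM.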

Hence, by imposing non-negativity on the $\beta_i$ in (12), the search for the “knee” solution does not take the entire solution set of a given multi- (or many-) objective optimization problem into account. We therefore suggest dropping the non-negativity restriction, which leads to the following problem:

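Let $\Phi$ and $\eta$ be defined as in Problem (12). We then define the knee as the solution of

$$\max_{x,t,\beta}\; t \quad \text{s.t.} \quad \Phi\beta + t\eta = F(x), \quad \sum_{i=1}^k \beta_i = 1, \quad h(x) = 0, \quad g(x) \leq 0. \tag{16}$$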

The only difference between problems (12) and (16) is that in the latter the non-negativity conditions $\beta_i \geq 0$, $i = 1, \dots, k$, are omitted. Omitting non-negativity was first discussed in the original NBI work and was later used in implementations (e.g., References [51,52]) in the context of Pareto front approximation. For the definition of the knee, however, non-negativity has been assumed in the literature. The rationale behind this is that the author expected the knee solution (obtained by solving (12)) to be typically located near the “center” of the Pareto front. While this is indeed the case for problems with few objectives, it does not have to hold if many objectives are considered. In the following, we show a counterexample using a MOP with only three objectives. Let

where the objectives

are given by

where

, and

,

, are defined as for MOP (14).

Figure 4 shows the Pareto front of the problem (black points), as well as the CHIM (yellow) and the Pareto front approximation obtained by NBI (blue circles). The knee according to (12) is restricted to the latter set and is indicated by the green diamond. For this solution

we obtain

and

. When omitting the non-negativity restriction, one obtains

with

(red diamond) and

.
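For a sampled Pareto front, the difference between (12) and (16) can be made concrete: each image $y$ determines $\beta$ and $t$ through the linear system $\Phi\beta + t\eta = y$, $\sum_i \beta_i = 1$, and the knee is the sample with the largest $t$, with or without the sign restriction on $\beta$. The sketch below assumes the ideal point is at the origin and uses a hypothetical toy front $y = e - s$ with $\|s\|_2 = 1$, $s \geq 0$ (not the MOP of Figure 4); the helper names are illustrative only.

```python
import numpy as np

def nbi_coordinates(Phi, eta, y):
    """Solve Phi @ beta + t * eta = y together with sum(beta) = 1 for (beta, t)."""
    k = Phi.shape[0]
    A = np.zeros((k + 1, k + 1))
    A[:k, :k] = Phi
    A[:k, k] = eta
    A[k, :k] = 1.0
    sol = np.linalg.solve(A, np.append(y, 1.0))
    return sol[:k], sol[k]

def knee_on_sample(Phi, eta, Y, nonneg):
    """Sample index maximizing t; with nonneg=True only beta >= 0 is admitted, as in (12)."""
    best_idx, best_t = None, -np.inf
    for j, y in enumerate(Y):
        beta, t = nbi_coordinates(Phi, eta, y)
        if nonneg and np.any(beta < -1e-12):
            continue
        if t > best_t:
            best_idx, best_t = j, t
    return best_idx, best_t

# hypothetical toy front y = e - s with ||s|| = 1, s >= 0 (ideal point at the origin)
Phi = np.ones((3, 3)) - np.eye(3)          # images of the individual minima as columns
eta = -np.ones(3) / np.sqrt(3)             # CHIM normal for this Phi
th, ph = np.meshgrid(np.linspace(0, np.pi / 2, 21), np.linspace(0, np.pi / 2, 21))
S = np.stack([np.sin(th) * np.cos(ph), np.sin(th) * np.sin(ph), np.cos(th)], axis=-1)
Y = 1.0 - S.reshape(-1, 3)
i_free, t_free = knee_on_sample(Phi, eta, Y, nonneg=False)   # knee as in (16)
i_nn, t_nn = knee_on_sample(Phi, eta, Y, nonneg=True)        # knee as in (12)
```

For this symmetric toy front both variants return the same central sample, but front points near the boundary, such as $s = (1/\sqrt{2}, 1/\sqrt{2}, 0)$, have a negative $\beta_3$ and are therefore invisible to (12).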

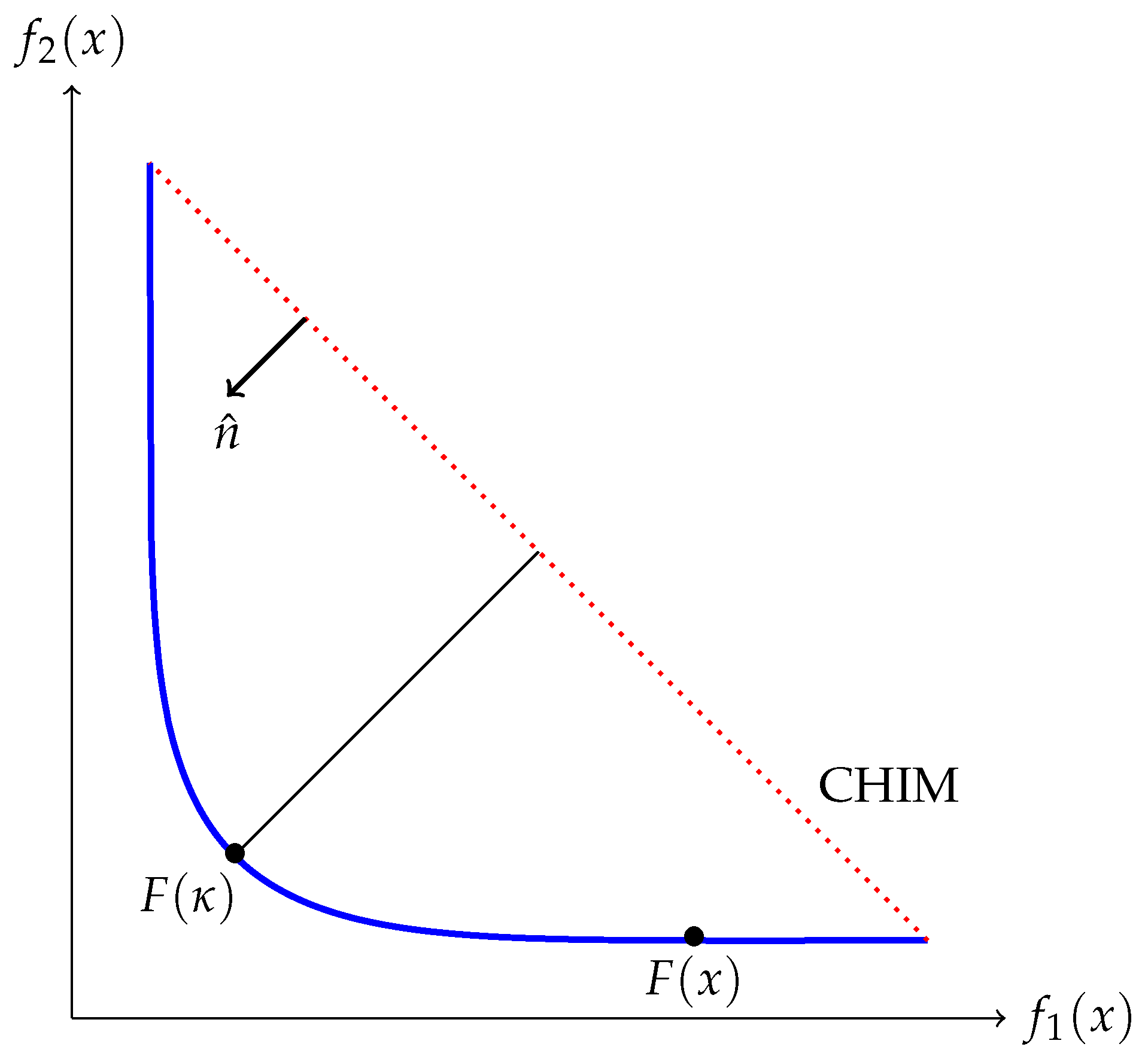

3.2. Characterization of the Knee

Figure 5 shows the Pareto front of a hypothetical BOP together with the image of a hypothetical starting point $x_0$. Since we focus here on the second phase of the Pareto Explorer, we assume that $x_0$ is Pareto optimal (and hence that $F(x_0)$ is located on the Pareto front). The figure already indicates that a movement in objective space can be used to compute a sequence of points leading toward knee solutions. More precisely, one can choose the “best fit” direction of $\eta$ that points along the linearized Pareto front at $F(x_0)$ and perform a movement in this direction. The question in this context, however, is when to stop the search.

For this question, too, Figure 5 gives a hint: denote by $F(x^*)$ the image of the solution $x^*$ of (16). Note that at this point the weight vector $\alpha^*$ and the desired direction vector $\eta$ point in opposite directions. The following result makes this observation precise.

Theorem 2. Let $x^*$ be a KKT point of (1), and let $\alpha^*$ be its associated Lagrange vector as specified in Theorem 1. Further, let $\eta$ be the normal vector of the CHIM as specified in (16). If $\alpha^*$ is anti-parallel to $\eta$, then there exist a vector $\beta^* \in \mathbb{R}^k$ and a value $t^* \in \mathbb{R}$ such that the tuple $(x^*, t^*, \beta^*)$ is a KKT point of Problem (16).

Proof. If $(x^*, t^*, \beta^*)$ is a KKT point of Problem (16) associated with the MOP (1), then there exist Lagrange multipliers

and

, where

,

,

, and

, such that the following conditions are satisfied:

We have to show that all of these conditions hold. For this, observe first that $x^*$ is a KKT point of (1), and hence that $F(x^*)$ is located on the boundary of the image of the feasible region. Thus, by construction of the NBI subproblems, there exist a vector $\beta^*$ and a value $t^*$ such that Equations (20) and (21) are satisfied. In addition, notice that conditions (5) and (6) are equivalent to (22) and (23), respectively (since $x^*$ is feasible). Further, when choosing

, where $c$ is any positive scalar, we also have equivalence of conditions (9) and (10) with (24) and (25), respectively.

It remains to show that condition (19) is also satisfied for a vector $x^*$ that is a KKT point of (1) with

.

Since $x^*$ satisfies (4), we have

Choosing

and multiplying the above equation by this value leads to

We see that the first equation of (19) is satisfied when choosing

,

, and

(as already done before). If we choose

, the second equation of (19) is also satisfied. It remains to show the third equation. Since $\alpha^*$ and $\eta$ are anti-parallel, it holds

(note that $\eta$ is already normalized). Since

this equation is also satisfied, and the claim follows. □
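Theorem 2 suggests a simple numerical test for the stopping criterion. The sketch below measures how far $\alpha$ is from being anti-parallel to $\eta$ via $1 + \cos\angle(\alpha, \eta)$, which is zero exactly in the anti-parallel case. The function names and the tolerance are hypothetical choices, not part of the method as stated.

```python
import numpy as np

def anti_parallel_gap(alpha, eta):
    """1 + cos of the angle between alpha and eta; zero iff they are anti-parallel."""
    cos = alpha @ eta / (np.linalg.norm(alpha) * np.linalg.norm(eta))
    return 1.0 + cos

def near_knee(alpha, eta, tol=1e-3):
    """Stopping test motivated by Theorem 2 (tol is a user choice)."""
    return anti_parallel_gap(alpha, eta) <= tol

eta = -np.ones(3) / np.sqrt(3)
print(near_knee(np.array([1 / 3, 1 / 3, 1 / 3]), eta))   # True: alpha is anti-parallel to eta
print(near_knee(np.array([0.6, 0.2, 0.2]), eta))         # False: the gap is about 0.13
```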

3.3. The Algorithm

Based on the insights from the previous section, we are now in a position to adapt the Pareto Explorer to perform a movement from a given initial solution along the Pareto front toward a knee of the given MOP.

Assume we are given a Pareto optimal point $x$ of an equality constrained MOP (where inequalities that are active at $x$ are treated as equalities). By Theorem 1, we can thus assume that there exist vectors $\alpha$ and $\lambda$ such that the tuple $(x, \alpha, \lambda)$ satisfies the KKT equations.

In order to compute the next candidate solution along the Pareto set/front, we see from Figure 5 that we can choose the “steering in objective space” of the Pareto Explorer as follows: the desired direction in objective space is given by the vector $\eta$ that is normal to the CHIM and that points toward the origin.

Since this is not a feasible direction (note that $x$ is already optimal and all entries of $\eta$ are negative), we next have to compute the “best fit” direction that points along the Pareto front. To this end, we first compute the weight vector $\alpha$ associated with $x$. This can be done by solving the following scalar optimization problem, which follows directly from the KKT equations:

$$\min_{\alpha, \lambda}\; \left\| J^\top \alpha + H^\top \lambda \right\|_2^2 \quad \text{s.t.} \quad \alpha_i \geq 0,\ i = 1, \dots, k, \quad \sum_{i=1}^k \alpha_i = 1,$$

where $J$ is as defined in (11), and $H$ denotes the Jacobian of the equality constraints at $x$.

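Since $\alpha$ is perpendicular to the linearized Pareto front at $F(x)$, we can obtain the desired search direction via an orthogonal projection of $\eta$ onto the orthogonal complement of $\alpha$. To get this vector, we first compute a $QR$-factorization of $\alpha$,

$$\alpha = QR,$$

where $Q = (q_1, \dots, q_k) \in \mathbb{R}^{k \times k}$ is an orthogonal matrix and $R \in \mathbb{R}^{k \times 1}$ is upper triangular. The orthogonal complement of $\alpha$ is hence spanned by the column vectors of the matrix

$$Q_N = (q_2, \dots, q_k) \in \mathbb{R}^{k \times (k-1)},$$

and the orthogonal projection (and thus the best-fit direction) is given by

$$d = Q_N Q_N^\top \eta.$$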
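The two steps above (computing $\alpha$ and projecting $\eta$) can be sketched in NumPy. Two assumptions apply: the weight problem is taken to be $\min \|J^\top\alpha + H^\top\lambda\|_2^2$ subject to $\sum_i \alpha_i = 1$, and the sketch drops the sign constraints $\alpha_i \geq 0$, so that the problem reduces to an equality-constrained least-squares problem with a closed-form KKT system. If the null space of $(J^\top\ H^\top)$ is one-dimensional, its solution coincides with the KKT weight. The function names are hypothetical.

```python
import numpy as np

def weight_vector(J, H=None):
    """alpha, lam minimizing ||J^T alpha + H^T lam||^2 s.t. sum(alpha) = 1.

    Simplification: the sign constraints alpha_i >= 0 are dropped.
    J has the objective gradients as rows (k x n); H the constraint gradients (p x n).
    """
    k, n = J.shape
    H = np.zeros((0, n)) if H is None else H
    p = H.shape[0]
    M = np.hstack([J.T, H.T])                       # n x (k + p)
    c = np.concatenate([np.ones(k), np.zeros(p)])   # sum constraint acts on alpha only
    K = np.block([[M.T @ M, c[:, None]], [c[None, :], np.zeros((1, 1))]])
    rhs = np.concatenate([np.zeros(k + p), [1.0]])
    z = np.linalg.lstsq(K, rhs, rcond=None)[0]
    return z[:k], z[k:k + p]

def best_fit_direction(alpha, eta):
    """Project eta onto the orthogonal complement of alpha via a complete QR factorization."""
    Q, _ = np.linalg.qr(alpha.reshape(-1, 1), mode="complete")
    Q_N = Q[:, 1:]                                  # columns span the complement of alpha
    return Q_N @ (Q_N.T @ eta)

# toy problem: f1 = ||x||^2, f2 = ||x - (1, 0)||^2 at the Pareto point x = (0.25, 0)
J = np.array([[0.5, 0.0], [-1.5, 0.0]])             # rows: gradients of f1 and f2
alpha, _ = weight_vector(J)                         # approx. [0.75, 0.25]
eta = -np.ones(2) / np.sqrt(2)
d = best_fit_direction(alpha, eta)                  # orthogonal to alpha
```

The QR route gives the same vector as $\eta - \frac{\alpha^\top\eta}{\alpha^\top\alpha}\alpha$; it is shown because it mirrors the construction with $Q_N$.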

Next, we perform a step in direction $d$. Note that $d$ is defined in objective space. To realize such a movement, the respective direction in decision variable space is given by the vector $v$ that solves [49]:

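Here, $W_{\alpha,\lambda}$ denotes the matrix

$$W_{\alpha,\lambda} = \sum_{i=1}^k \alpha_i \nabla^2 f_i(x) + \sum_{j=1}^p \lambda_j \nabla^2 h_j(x),$$

where $p$ is the number of equality constraints, and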

is the vector that solves

where

and

denotes, for the sake of a simpler formulation, the direction vector $d$. The resulting search direction $v$ is tangential to the Pareto set at $x$. Alternatively, and as done in our implementations, one can use the matrix

$$W_\alpha = \sum_{i=1}^k \alpha_i \nabla^2 f_i(x)$$

and solve the system (36) to obtain $v$. The only difference of (36) compared to (32) is the use of $W_\alpha$ instead of the matrix $W_{\alpha,\lambda}$ used in (32), which saves the computation of the Hessians of the equality constraints while still yielding satisfactory results.
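The following sketch does not implement (32) or (36); it is a simpler, first-order substitute, named here as such. It computes the minimum-norm $v$ with $Jv \approx d$ subject to $Hv = 0$, using an orthonormal null-space basis of $H$ from an SVD. Because no Hessians enter, $v$ is only guaranteed to respect the linearized constraints, not to be tangent to the Pareto set; `decision_direction` is a hypothetical name.

```python
import numpy as np

def decision_direction(J, d, H=None, tol=1e-12):
    """First-order surrogate: minimum-norm v minimizing ||J v - d|| subject to H v = 0."""
    n = J.shape[1]
    if H is None or H.shape[0] == 0:
        N = np.eye(n)
    else:
        _, s, Vt = np.linalg.svd(H)
        rank = int(np.sum(s > tol * max(1.0, s[0])))
        N = Vt[rank:].T                          # orthonormal basis of null(H)
    w = np.linalg.lstsq(J @ N, d, rcond=None)[0]
    return N @ w

J = np.array([[0.5, 0.0], [-1.5, 0.0]])          # Jacobian of the toy problem at x = (0.25, 0)
d = np.sqrt(2) / 10 * np.array([1.0, -3.0])      # best-fit direction for eta = -(1, 1)/sqrt(2)
v = decision_direction(J, d)                     # approx. [0.2828, 0]
v_c = decision_direction(J, d, H=np.array([[0.0, 1.0]]))   # additionally keep x_2 fixed
```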

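It remains to determine the step size. For this, we follow the suggestion of [42] and choose $t$ such that, for the iterate

$$y_{i+1} = x_i + t\, v_i,$$

it holds

$$\| F(y_{i+1}) - F(x_i) \| \approx \tau$$

for a user-specified value of $\tau$. Note that $y_{i+1}$ is not necessarily a Pareto optimal solution. Since the aim is to perform a movement along the Pareto set/front of the given MOP, the last step to obtain the new iterate $x_{i+1}$ is to compute a corrector step starting from $y_{i+1}$, which can be realized, e.g., via a multi-objective Newton method [30,53]. The Newton direction at a point $x$ for an equality constrained MOP can be obtained by solving the following problem:

$$\min_{v, \delta}\; \delta \quad \text{s.t.} \quad \nabla f_i(x)^\top v + \tfrac{1}{2} v^\top \nabla^2 f_i(x)\, v \leq \delta,\ i = 1, \dots, k, \qquad h(x) + H v = 0,$$

where $H$ is the Jacobian of $h$ at $x$.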
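The step-size rule can be realized by linearizing $F$ around $x_i$: since $F(x_i + t v_i) \approx F(x_i) + t J v_i$, choosing $t = \tau / \|J v_i\|$ gives $\|F(y_{i+1}) - F(x_i)\| \approx \tau$. Whether [42] uses exactly this formula is an assumption here. The sketch checks the rule on a toy bi-objective problem; the corrector step is not included, and the input direction `v` is a hypothetical value taken from the toy example.

```python
import numpy as np

def step_size(J, v, tau):
    """t such that ||F(x + t v) - F(x)|| is approximately tau (first-order model)."""
    return tau / np.linalg.norm(J @ v)

b = np.array([1.0, 0.0])

def F(x):
    """Toy bi-objective problem: f1 = ||x||^2, f2 = ||x - b||^2."""
    return np.array([x @ x, (x - b) @ (x - b)])

x = np.array([0.25, 0.0])                   # Pareto optimal point of the toy problem
J = np.vstack([2 * x, 2 * (x - b)])         # rows are the objective gradients
v = np.array([0.2 * np.sqrt(2), 0.0])       # decision-space direction (hypothetical input)
tau = 0.05
t = step_size(J, v, tau)
y = x + t * v                               # predictor; not necessarily Pareto optimal
print(np.linalg.norm(F(y) - F(x)))          # close to tau, since F is nearly linear on this step
```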

Algorithm 1 shows the pseudocode of the complete algorithm to compute steps toward the knee solution. Its basis is the iteration step described above. The process has to be stopped either if a corner of the Pareto front is reached or if the iterates are sufficiently close to the knee solution. Since the idea of the Pareto Explorer is to present all steps to the decision-maker, a fixed step size is used as described above. At some point, however, oscillatory behavior will be observed near the knee solution, since the step size $\tau$ is not chosen adaptively. To address this issue, we start with a step size $\tau_1$. If a first oscillation occurs, the step size is replaced by a smaller one, $\tau_2 < \tau_1$. The iteration terminates if oscillation also occurs with this smaller step size. By Theorem 2, it also follows that a (local) knee solution is found if $\alpha_i$ is anti-parallel to $\eta$, and that one can expect $x_i$ to be near such a solution if $\alpha_i$ is almost anti-parallel to $\eta$. We hence have to stop the iteration in both cases (since the first case will never be observed in computations, we have left this step out of Algorithm 1).
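The step-size control described above can be sketched as a small state update. The oscillation test used here (the newest image returns to within `tol` of the image two iterations earlier) is a hypothetical criterion chosen for illustration, since the exact test of Algorithm 1 is not given in this text; the function name and the step-size values are also hypothetical.

```python
import numpy as np

def update_step_control(images, reduced, tol):
    """Decide how to continue after a new image F(x_i) was appended to `images`.

    Returns (reduced, stop). Hypothetical oscillation test: the newest image is
    within `tol` of the image two iterations earlier (back-and-forth motion).
    """
    if len(images) < 3:
        return reduced, False
    oscillating = np.linalg.norm(images[-1] - images[-3]) < tol
    if not oscillating:
        return reduced, False
    if not reduced:
        return True, False       # first oscillation: switch from tau_1 to tau_2
    return True, True            # oscillation with tau_2: stop (knee reached)

tau_1, tau_2 = 0.1, 0.02         # example step sizes with tau_2 < tau_1
images = [np.array([0.0, 1.0]), np.array([0.5, 0.5]), np.array([0.0, 1.0])]
reduced, stop = update_step_control(images, reduced=False, tol=1e-6)
tau = tau_2 if reduced else tau_1
```

Keeping only a boolean flag makes the two-stage rule explicit: the first oscillation switches to $\tau_2$, and a second one terminates the iteration.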

Special attention has to be paid in case the algorithm terminates because the iterates have reached a corner of the Pareto front (lines 5 and 6 of Algorithm 1). If the Pareto front is concave, each corner point is indeed a knee solution. If the Pareto front is linear, then every point is a knee solution by definition. Hence, the algorithm will stop at

. Else (and if

is not a corner solution), the corner will only be reached if an error occurred in the computation of

. This is the case if the MaOP is degenerate, which can be checked numerically by considering the condition number of the matrix

: in case the Pareto front is degenerate at

, the matrix

is singular. In that case, computing the CHIM and using the resulting direction vector $\eta$ makes no sense. Note, however, that the PE approach can be realized without explicitly computing the CHIM; instead, an alternative “suitable” direction vector has to be defined. If, for instance, the values of all individual objectives are roughly in the same range, one can choose the direction $\eta = -\frac{1}{\sqrt{k}}(1, \dots, 1)^\top \in \mathbb{R}^k$, and adjust it accordingly if the ranges differ. We will see examples of this in the next section.
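The degeneracy check and the fallback direction can be combined as follows. The threshold `cond_max` is a hypothetical choice, and the CHIM normal is computed as the unit vector orthogonal to the affine hull of the columns of $\Phi$ (assuming the ideal point is at the origin), which may differ from the construction used in (12).

```python
import numpy as np

def steering_direction(Phi, cond_max=1e8):
    """Direction eta for the PE steering: CHIM normal, or a fallback if Phi is ill-conditioned."""
    k = Phi.shape[0]
    if np.linalg.cond(Phi) > cond_max:
        # degenerate case: the CHIM is not meaningful; assumes objectives of similar range
        return -np.ones(k) / np.sqrt(k)
    w = np.linalg.solve(Phi.T, np.ones(k))   # Phi.T @ w = 1, so w is normal to the CHIM
    eta = w / np.linalg.norm(w)
    return -eta if eta.sum() > 0 else eta    # orient toward the origin

print(steering_direction(np.ones((3, 3))))   # singular Phi: fallback direction
print(steering_direction(np.array([[0.0, 2.0, 1.0], [1.0, 0.0, 1.0], [1.0, 1.0, 0.0]])))
```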

Algorithm 1 Pareto Explorer for finding the knee.

- Require: $x_0$: initial Pareto optimal solution, $\eta$: normal vector of the CHIM, step sizes $\tau_1 > \tau_2$, tolerances, maximal number $M$ of iterations
- Ensure: set of candidate solutions around $x_0$ whose images are a best-fit movement in $\eta$-direction along the Pareto front, where ideally the last candidate is the knee solution
- 1: …
- 2: …
- 3: for … do
- 4: compute $\alpha_i$ as solution to (28)
- 5: if … then
- 6: return … ▹ corner of Pareto front reached
- 7: end if
- 8: compute $d_i$ as in (29)
- 9: set …
- 10: set …
- 11: if … then
- 12: return … ▹ no further movement in $\eta$-direction can be performed (knee reached)
- 13: end if
- 14: if … then ▹ oscillation of candidate solutions
- 15: if … then
- 16: … ▹ reduce step size
- 17: else
- 18: return … ▹ oscillation with $\tau_2$ (knee reached)
- 19: end if
- 20: end if
- 21: compute … via solving (34)
- 22: compute $v_i$ via solving (36)
- 23: set …
- 24: set …
- 25: compute a solution $x_{i+1}$ of (1) near $y_{i+1}$ using a corrector step
- 26: end for
- 27: return … ▹ more iterations needed to reach the knee