The Singular Value Decomposition over Completed Idempotent Semifields

Abstract

1. Introduction

1.1. Motivation

- If we can guarantee the existence of additive inverses, several further observations might eventually lead us to work in the familiar landscape of the groups such as and modules.

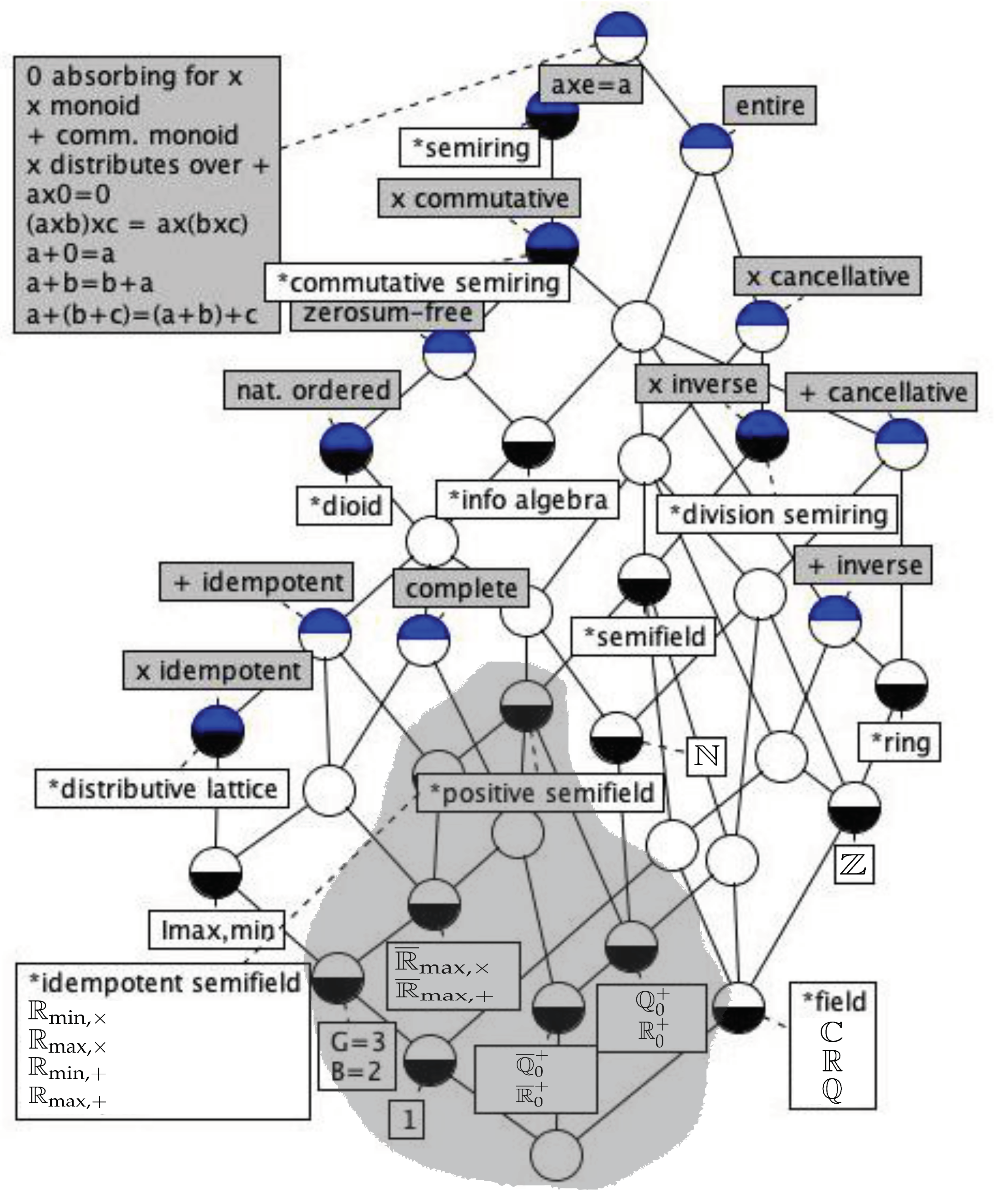

- On the other hand, if we cannot guarantee the existence of additive inverses, the toolset is quite scarce. It is first improved by demanding a natural order compatible with the operations in the semiring, leading to dioids (for double monoids), or entireness, leading to so-called information algebras.

- Adding the semifield structure to a group eventually leads us to fields such as $\mathbb{R}$ or $\mathbb{C}$ and the vector spaces of standard algebra. In this case, the SVD is a well-known decomposition scheme for matrices over a standard field, e.g., the real or complex numbers [4], which greatly clarifies the action of the linear form (Section 1.2).

- On the other hand, adding the semifield structure to information algebras that are dioids leads us to positive semifields and their positive semimodules, which are quite varied.

1.2. The Singular Value Decomposition

- The image, range or column space of A, as ,

- The kernel or null-space of A, , as ,

- The co-image or row space of A, , as ,

- The co-kernel or left null-space of A, , as .

- 1.

- From to , the matrix yields an invertible transformation wherefore they have the same dimension, the rank,

- 2.

- In , the kernel is the orthogonal complement of the co-image, .

- 3.

- In , the co-kernel is the orthogonal complement of the image,

- 4.

- (Rank-nullity theorem) The dimension of the domain space decomposes as: .

- 5.

- (Rank-co-nullity) The dimension of the range space decomposes as: .

- 6.

- There is a factorization given in terms of three matrices

- (a)

- is a unitary matrix of left singular vectors.

- (b)

- is a diagonal matrix of non-negative real values called the singular values.

- (c)

- is a unitary matrix of right singular vectors, and they can be structured as: where is a zero matrix and and are the left and right singular vectors, respectively, of the null singular value, if it exists.

- 7.

- And conjugate-dually, there is a factorization of in terms of the same three matrices, where image and co-image, kernel and co-kernel swap roles.
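As a numerical illustration of items 1 to 7 above, the following is a minimal sketch that assumes NumPy is available. The matrix `A`, the tolerance `tol` and all variable names are hypothetical choices made for this example, not notation from the text. It reads orthonormal bases of the four fundamental subspaces off the full SVD and checks the two dimension formulas.

```python
import numpy as np

# Hypothetical 3x4 real matrix of rank 2 (third row = first row + second row).
A = np.array([[1.0, 0.0, 2.0, 1.0],
              [0.0, 1.0, 1.0, 1.0],
              [1.0, 1.0, 3.0, 2.0]])
m, n = A.shape

# Full SVD: A = U @ Sigma @ Vh, with U (m x m) and Vh (n x n) unitary.
U, s, Vh = np.linalg.svd(A, full_matrices=True)

tol = 1e-10                      # assumed threshold for "zero" singular values
r = int((s > tol).sum())         # numerical rank

image = U[:, :r]                 # orthonormal basis of the column space
cokernel = U[:, r:]              # orthonormal basis of the left null space
coimage = Vh[:r, :].T            # orthonormal basis of the row space
kernel = Vh[r:, :].T             # orthonormal basis of the null space

# Rank-nullity and rank-co-nullity.
assert r + kernel.shape[1] == n
assert r + cokernel.shape[1] == m

# Rebuild A from the three factors.
Sigma = np.zeros((m, n))
Sigma[:len(s), :len(s)] = np.diag(s)
reconstruction = U @ Sigma @ Vh
```

Under these assumptions, `A @ kernel` and `cokernel.T @ A` vanish up to rounding, which is the orthogonality stated in items 2 and 3.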

1.3. Formal Concept Analysis and Boolean Matrix Factorization

- 1.

- The context analysis phase.

- (a)

- The polar operators of the context and form a Galois connection whose formal concepts are the pairs of closed elements such that , whence the set of formal concepts is

- (b)

- Formal concepts are partially ordered with the hierarchical order and the set of formal concepts with this order is a complete lattice called the concept lattice of .

- (c)

- In infima and suprema are given by:

- (d)

- The basic functions and are mappings such that is supremum-dense in , is infimum-dense in .

- 2.

- The context synthesis phase.

- (a)

- A complete lattice is isomorphic to (read can be built as) the concept lattice if and only if there are mappings and such that

- is supremum-dense in , is infimum-dense in , and

- is equivalent to for all and all .

- (b)

- In particular, consider the doubling context of , , and the standard context of , where and are the sets of join- and meet-irreducibles, respectively, of , then

- The operations invoked on the matrix depend on the underlying algebra carried by , e.g., a Boolean algebra, which suggests there might be a reconstruction for every algebra definable on that carrier set. Since it is well known that the most abstract structure to support matrix algebra is that of a semiring, we review in Section 2.1 several concepts around semirings. The Boolean algebra is embedded in every idempotent semifield, a special kind of semiring with an idempotent addition (Sections 2.1–2.1.2), and similar results for the reconstruction of matrices have been proven [26]. This paper is a natural extension of those results.

- Clearly, and are the extent and intent of formal concepts encoded in correlative columns of and rows of , and the ∘ operation is an external product, which suggests that extents and intents are being used as column and row vectors, respectively; if both were used as column vectors, the factorization would have to be written as . This indicates that the concepts of linear algebra over semirings in general are important for Theorem 3.
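The Boolean reconstruction discussed in this section can be sketched in plain Python. The context `I` and the helper names `up`, `down`, `concepts` and `boolean_product` are illustrative assumptions, and the brute-force enumeration over attribute subsets is only practical for tiny contexts; it is not presented as the algorithm of the cited works.

```python
from itertools import combinations

# Hypothetical Boolean context: rows are objects, columns are attributes.
I = [[1, 1, 0],
     [1, 1, 1],
     [0, 1, 1],
     [0, 0, 1]]
G = range(len(I))        # object indices
M = range(len(I[0]))     # attribute indices

def up(A):
    """Polar of a set of objects: the attributes shared by all of them."""
    return frozenset(m for m in M if all(I[g][m] for g in A))

def down(B):
    """Polar of a set of attributes: the objects having all of them."""
    return frozenset(g for g in G if all(I[g][m] for m in B))

def concepts():
    """All (extent, intent) pairs, found by closing every attribute subset."""
    found = set()
    for k in range(len(M) + 1):
        for B in combinations(M, k):
            extent = down(B)
            found.add((extent, up(extent)))
    return found

def boolean_product(cs):
    """Boolean sum of the outer products extent x intent of the concepts."""
    R = [[0] * len(M) for _ in G]
    for extent, intent in cs:
        for g in extent:
            for m in intent:
                R[g][m] = 1
    return R

all_concepts = concepts()
rebuilt = boolean_product(all_concepts)
```

Each concept contributes a rectangle of ones that lies inside the incidence, and every incidence pair is covered by at least one concept, so `rebuilt` coincides with `I` for this example.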

1.4. Formal Concept Analysis as Linear Algebra over Idempotent Semifields

1.5. Previous Results for the SVD over Idempotent Semifields

1.6. Reading Guide

- (1)

- An idempotent analogue of the SVD based on the irreducibles of a Galois connection,

- (2)

- What are the implications of a change of bias, i.e., using for the analysis, and

- (3)

- Whether there is an analogue of a Fundamental Theorem of Linear Algebra for matrices over idempotent semifields.

2. Preliminaries

2.1. Linear Algebra over Complete Idempotent and Positive Semifields

2.1.1. A Short Systemization

- The Boolean lattice

- All fuzzy semirings, e.g.,

- The min-plus semiring or tropical algebra also called the optimization algebra.

- The max-plus semiring or polar algebra also called the schedule algebra.

- 1.

- The non-negative rationals and non-negative reals are two positive semifields.

- 2.

- The min-plus and max-plus algebras of Example 2 are idempotent semifields.

- 3.

- The min-times algebra and the max-times algebra are also idempotent semifields.
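To make the examples above concrete, here is a minimal sketch of matrix products in the max-plus and min-plus semirings, using Python floats. The function names and the sample matrices are assumptions made for illustration. Only the non-completed semirings are modelled, so the two infinities never meet in a sum.

```python
NEG_INF = float("-inf")   # additive zero of the max-plus semiring
POS_INF = float("inf")    # additive zero of the min-plus semiring

def maxplus_matmul(A, B):
    """Max-plus product: result[i][j] = max_k (A[i][k] + B[k][j])."""
    return [[max(a + b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

def minplus_matmul(A, B):
    """Min-plus product: result[i][j] = min_k (A[i][k] + B[k][j])."""
    return [[min(a + b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

# Min-plus ("optimization algebra"): W holds hypothetical edge costs, with
# POS_INF for a missing edge; W (x) W gives cheapest paths of at most 2 steps.
W = [[0, 3, POS_INF],
     [POS_INF, 0, 1],
     [2, POS_INF, 0]]
W2 = minplus_matmul(W, W)

# Max-plus ("schedule algebra"): a matrix acting on a column vector.
A = [[0, NEG_INF],
     [2, 1]]
x = [[1],
     [3]]
y = maxplus_matmul(A, x)
```

In both cases the addition is idempotent, since `max(a, a) == a` and `min(a, a) == a`, which is the defining property of the idempotent semifields listed above.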

2.1.2. Semifield Completions

- 1.

- There is a pair of completed semifields over where and by definition,

- 2.

- In addition to the individual laws as positive semifields, we have the modular laws: the analogues of the De Morgan laws: and the self-dual inequality in the natural order

- 3.

- Furthermore, if is a positive dioid, then the inversion operation is a dual order isomorphism between the dual order structures and with the natural order of the original semifield a suborder of the first structure.

- The issue of notation is contentious: so as not to derail the exposition, in Appendix A we discuss the problem and our decisions, which essentially amount to using a notation that maximally resembles that of linear algebra but also accommodates Boolean algebra.

- Please also note that in complete semifields which distinguishes them from inclines, and also that the inverse for the null is prescribed as . On a practical note, residuation in complete commutative idempotent semifields can be expressed in terms of inverses, and this extends to eigenspaces, examples of which will be given in the following sections.

- In fact, complete idempotent semifields , appear as enriched structures, the advantage of working with them being that meets can be expressed by means of joins and inversion as .

- 1.

- The “smallest” example of complete idempotent semifield is , but it lacks a common neutral element for multiplications and .

- 2.

- The next smallest is is embedded in , and is embedded in any bigger complete semifield.

- 3.

- The complete max-times semifield

- 4.

- The complete min-times semifield

- 5.

- The complete min-plus semifield .

- 6.

- The complete max-plus semifield .
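The following sketch models the completed max-plus semifield on Python floats. It assumes the usual convention that the lower multiplication sends the product of bottom and top to bottom while the upper multiplication sends it to top, and that inversion is arithmetic negation. The names `lower_times`, `upper_times`, `inv`, `join` and `meet` are hypothetical.

```python
BOT = float("-inf")   # bottom of the completed max-plus semifield
TOP = float("inf")    # top of the completed max-plus semifield

def lower_times(a, b):
    """Lower multiplication: bottom is absorbing, so BOT (x) TOP = BOT."""
    if a == BOT or b == BOT:
        return BOT
    return a + b

def upper_times(a, b):
    """Upper multiplication: top is absorbing, so BOT (x') TOP = TOP."""
    if a == TOP or b == TOP:
        return TOP
    return a + b

def inv(a):
    """Inversion; it swaps BOT and TOP and reverses the natural order."""
    return -a

def join(a, b):
    """Lower addition, i.e., the join in the natural order."""
    return max(a, b)

def meet(a, b):
    """Upper addition expressed through join and inversion only."""
    return inv(join(inv(a), inv(b)))

samples = [BOT, -2.0, 0.0, 3.5, TOP]
```

With these definitions, `meet(a, b)` agrees with `min(a, b)` on all samples, `inv(lower_times(a, b))` equals `upper_times(inv(a), inv(b))`, and `lower_times(a, b) <= upper_times(a, b)`, mirroring the De Morgan analogues and the self-dual inequality mentioned above.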

2.1.3. Idempotent Semimodules

- The (pointwise) inverse [30], is a right sub-semimodule of the inverse semifield such that if , then . This duality is the order duality in partial orders and inverts the natural order: if then .

- The transpose, is a left sub-semimodule of the same semifield such that if x is a right (column) vector, then is a left (row) vector. These two dualities commute and allow us the definition of the conjugate [30] (when applied on or we call it the Cuninghame-Green conjugate), is a left sub-semimodule of the inverse semifield such that if , then , and x is a row vector. This duality at the same time inverts the natural order and the row-column nature of the semimodule. In fact, we can choose any two of the previous three dualities as independent and have the other defined from it:

- Using residuation [44], another dual can be defined [45] (The original name for this dual was “opposite” in [45], and so it was adopted in [29]. The authors in [45] now prefer to call this concept the “dual” [46] due to the order dualization of the construction, we surmise.): given a complete idempotent semifield and a right semimodule over it define the (residuation) dual of as the left -semimodule with addition related to the original addition (which is the join in the natural order) and action . Note that and that this dual commutes with inversion. One of the advantages of operating on completed idempotent semifields is that residuation can be expressed in terms of the original operations of the semimodule:

- 1.

- Alternating A- products of 3 matrices can be shortened as in:

- 2.

- Alternating A- products of 4 matrices can be shortened as in:

- 3.

- Alternating A- products of 3 matrices and another terminal, arbitrary matrix can be shortened as in:

- 4.

- The following inequalities apply:

- 1.

- Set the vectors up as matrix with .

- 2.

- Find the matrix . This annihilates the diagonal components of .

- 3.

- Lastly, form the product .

- For each the vector if and only if is a -linear combination of the other vectors of A.

- In such case, the elements of the j-th column of provide the coefficients of the combination.

- S is a minimal set of generators of .

- and .

- S is a basis for .
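The three-step dependence test earlier in this subsection can be sketched in max-plus arithmetic. Since the formulas are missing from the text, this example assumes the classical Cuninghame-Green construction: step 2 forms the conjugate product whose entry (k, j) is the minimum over i of `A[i][j] - A[i][k]`, with the diagonal overwritten by the bottom element, and step 3 multiplies `A` by that matrix. The function name and the sample matrix are hypothetical, and all entries are assumed finite.

```python
NEG_INF = float("-inf")

def dependent_columns(A):
    """Return {j: coefficients} for every column j of A that is a max-plus
    linear combination of the other columns (finite entries assumed)."""
    m, n = len(A), len(A[0])
    # Step 2: C[k][j] is the largest c with c + column_k <= column_j;
    # the diagonal is annihilated so a column cannot generate itself.
    C = [[NEG_INF if k == j else min(A[i][j] - A[i][k] for i in range(m))
          for j in range(n)]
         for k in range(n)]
    # Step 3: max-plus product of A with C.
    P = [[max(A[i][k] + C[k][j] for k in range(n)) for j in range(n)]
         for i in range(m)]
    # Column j is dependent iff the product reproduces it exactly.
    return {j: [C[k][j] for k in range(n)]
            for j in range(n)
            if all(P[i][j] == A[i][j] for i in range(m))}

# Step 1: set the vectors up as the columns of a matrix. Here the third
# column is max(first column, second column + 1), entry by entry.
A = [[0, 2, 3],
     [3, 0, 3],
     [1, 0, 1]]
result = dependent_columns(A)
```

For this matrix only the third column is reported as dependent, with coefficients `0` and `1` on the first two columns and the bottom element in its own position.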

2.1.4. Matrices as Linear Transformations between Idempotent Semimodules

- 1.

- is an idempotent semimodule generated by the columns of A, and row-dually for .

- 2.

- The nullspace of A is an idempotent semimodule generated by the columns of the identity matrix that are co-indexed with the empty columns of A, and row-column-dually for the nullspace of . Furthermore, if A is column--astic then its nullspace is reduced to and row-column-dually for .

- 1.

- If , then A has two columns such that every other column is a linear combination of them.

- 2.

- If , there exist m vectors in none of which is a linear combination of the others.

- 3.

- If and , we can find (at least) a set of linearly independent vectors.

2.1.5. One-Sided Systems of Equations over Idempotent Semifields

- The algebraic approach, based on relaxing the equations to inequalities and studying lower or upper bounds to the solution by means of residuation, available in every complete, naturally ordered semiring.

- The combinatorial approach, which involves considering a trisection or partition of the values in the carrier set and studying the cases made evident by the consideration of such trisection both in the matrix and the given vectors and [30]. Since the trisection can easily be seen to contain an embedded copy of this approach extends, at least partially, to every positive semifield.
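The algebraic approach can be illustrated for a max-plus system with finite entries. This is a minimal sketch under that assumption: the greatest solution of the relaxed inequality is obtained by residuation, and the equation is solvable exactly when that candidate attains equality. The function names and the sample data are hypothetical.

```python
def maxplus_apply(A, x):
    """Max-plus matrix-vector product: y[i] = max_j (A[i][j] + x[j])."""
    return [max(a + xj for a, xj in zip(row, x)) for row in A]

def greatest_subsolution(A, b):
    """Greatest x with A (x) x <= b, by residuation:
    x[j] = min_i (b[i] - A[i][j])."""
    rows, cols = len(A), len(A[0])
    return [min(b[i] - A[i][j] for i in range(rows)) for j in range(cols)]

def solve(A, b):
    """Return the greatest solution of A (x) x = b, or None if unsolvable."""
    x = greatest_subsolution(A, b)
    return x if maxplus_apply(A, x) == b else None

A = [[0, 2],
     [3, 0]]
solvable = solve(A, [3, 4])      # the residual attains equality
unsolvable = solve(A, [5, 0])    # the residual only gives a lower bound
```

When `solve` returns `None`, the vector from `greatest_subsolution` is still the best approximation of `b` from below within the column space of `A`.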

2.1.6. Complete Congruences of Idempotent Semimodules

- The orthogonal of a semimodule is the congruence

- The orthogonal of a congruence (as a semimodule) is the semimodule

2.2. Generalized -Formal Concept Analysis

2.2.1. Galois Connections between Idempotent Semivector Spaces

- Several results stem from using one of the polars or projectors in an argument: when a similar result follows from using the alternate polar, projector or bikernel, we will say that it holds GC-dually. This is licensed by the combination of row-column, and domain-range duality, between the polars.

2.2.2. -Formal Concept Analysis

- the triple is a (formal) -context, and

- the isomorphic pairs of elements of Proposition 6 such that and are (formal) φ-concepts of the -context , in which case a is called its φ-extent, and b its φ-intent. When the value of φ is clear we will omit it. The concept generating functions are:

- It is customary to use the notation to refer to the set of formal -concepts of context , and we will also be using the notation and to refer to the sets of φ-extents and φ-intents, respectively, sometimes even without explicit mention of the context itself as and .

- Please note that since the upper and lower multiplication only differ in the behaviour of the extreme (non-invertible) elements, it is not necessary to use the dotted convention in scalings.

- Using ⊥ in a semifield for scaling would annul every coordinate, so .

- Using ⊤ would render any non-zero coordinate of x to ⊤ and we say that is saturated. This is the way Boolean spaces are embedded into semivector spaces (see [34], § 3.8, for an example).

- Since most of the calculations below are already scaled, we will not usually use this cumbersome notation, but only when we have to mix both scaled and un-scaled magnitudes in expressions or procedures.

- 1.

- If , then:

- 2.

- If , then:

- 3.

- Furthermore, if , then:

2.2.3. Dual -Formal Concept Analysis or -Formal Concept Analysis

- Equation (51) describes closure operators for , but not those found previously for . In fact, since the semifield is order-dual, they are interior operators in the original semifield .

- The dual special elements whose direct forms were found at the end of Section 2.2.2, can also be worked out. They appear in the right column of Table 1.

- We can also invoke the dual of Proposition 6.

- Since we will need results from the dual Theorems 5 and 6 to coexist, we must supply more notation. For the purpose of simplifying glyphs and as an easy mnemonic, we propose to mark the formal extents and intents of -FCA with underlines as and those of -FCA with overlines as

3. Results: The SVD Based on -FCA

3.1. Approximating the Incidence with Formal Concepts

- 1.

- For , define the lower conceptual -factor as , and

- 2.

- For , define the upper conceptual -factor , as .

- 1.

- If (dually ) the lower (upper) bound is the worst possible.

- 2.

- If (dually ) the upper (lower) bound is only tight in the saturated (empty) lines.

3.2. Finding Minimal Sets of Factors

- If with finite components, then the ray has as many elements as the cardinality of k. Let take the following values . Since multiplication is compatible with the order, we have . Since we have where , whence , and so: For finite we have , wherefore applying the polar of intents we obtain: By applying the polar of extents and pairing up we finally obtain the following structure for the ray:

- When , since for , the ray has a maximum of three elements:

- For we have the concept which reconstructs the saturated columns of R as , from (55). Recall for later that similarly reconstructs the saturated rows, and they are both lower approximations to R, by Proposition 1.4.

- It is easy to see what concept is . Recall that, since is an intent it rewrites as for some , but since it is the extent due to , we must have , whence we can choose . We have , a closure, so that which shows that it is already an extent. So .

- Let be a set of -independent columns and be a set of -independent rows of R, respectively. Then, where the operators are those of .

- Order-dually, let be a set of -independent columns and be a set of -independent rows of R, respectively. Then, where the operators are those of .

3.3. Towards a Fundamental Theorem of Linear Algebra over Idempotent Semifields

- The set of extents:

- The set of intents:

- The bikernel of extents:

- The bikernel of intents:

- 1.

- From to the polars yield an invertible transformation, .

- 2.

- The set of extents: is generated by the columns of R, and the set of intents is generated by the columns of ,

- 3.

- In the bikernel of extents is the “orthogonal” of the system of extents, , that is the blocks of intersect at precisely one point, .

- 4.

- In the bikernel of intents is the “orthogonal” of the system of intents, , that is the blocks of intersect at precisely one point, .

- 5.

- If is a -independent set of columns with vectors and a -independent set of rows with vectors, then

- 6.

- There are two “minimal” factorizations of the matrix, one using the join-irreducible and another the meet-irreducible -concepts:

4. Discussion

4.1. Contributions

- The ranges of linear forms and the other related types of Galois connections between idempotent spaces are complete lattices, i.e., the technique is computing lattices.

- These spaces, their fixpoints, and pairs thereof (the -concepts) can all be computed algebraically via the entwined operators of a complete idempotent semifield and its dual. These operators are reminiscent of, and generalize, Boolean algebra, which lends a familiarity to computing in idempotent semifields. Since complete idempotent semifields are complete lattices, we say that in using the theorem we are computing in lattices.

- -formal concepts in these different types of Galois connections allow us to reconstruct the initial matrix of the linear form (Lemma 8). Since the objects and notions of (Concept) Lattice Theory are relevant for this application, we say that we are computing with lattices.

- Finally, independent subsets of join- and meet-irreducibles of the range lattices of the connection provide two perfect reconstructions and analogues of the SVD for matrices with values in an idempotent semifield (Proposition 9), also an instance of computing in lattices with lattices.

4.2. On the Possibility of a Fundamental Theorem of Idempotent Algebra

4.3. On the Relationship of the i-SVD with the Spectral Theorem

4.4. Using other Types of Galois Connections to Base the i-SVD

4.5. The i-SVD and Matrix Factorization

4.6. The i-SVD as a Lattice Computing Technique

- Since there is a proper embedding of the boolean semiring into complete idempotent semifields as abstract algebras, while at the same time there are concrete instances of those algebras supported in the extended reals and even the extended positive reals, the tools we present conform to the LC tenet of homogeneously processing discrete (boolean) and continuous data with the same tools. This is what we called in the introduction computing in lattices.

- Furthermore, this procedure extends through products and sums to several constructions for weighted sequences, formal series, etc. [10] that strictly formalize many objects relevant to Computer Science, which can themselves be partially ordered. As an instance of this, matrices being partially ordered with the entrywise order, NMF and BMF are seamlessly integrated in the theory we develop here.

- As a consequence of matrices over idempotent semifields being considered linear forms between idempotent spaces, and since the images of these linear forms are (-FCA) lattices, a shift in concern is introduced from orthonormal bases to dense sets of meet and join-generators, from vectors and their images to formal concepts, etc., which we called computing with lattices above, since the concerns of the latter seem more relevant.

- Finally, the programme of LC to emerge as an “information processing paradigm” is fostered since complete idempotent semifields—in particular the completed max-plus and min-plus semifields—are the limits of algebras that “process information” in the Information Theory meaning of the expression [9].

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| BMF | Boolean Matrix Factorization |
| FCA | Formal Concept Analysis |
| i-SVD | Idempotent Singular Value Decomposition |
| -FCA | Semifield-Valued Formal Concept Analysis |
| LC | Lattice Computing |
| NMF | Non-negative Matrix Factorization |
| SVD | Singular Value Decomposition |

Appendix A. The Problem with Notation of Completed Semifields and Their Semimodules

| Origin/Operation | Max Addition | Min Addition | Max Product | Min Product | e | Conjugate | ||

|---|---|---|---|---|---|---|---|---|

| standard algebra | [35] | [35] | 0 | ∞ | ||||

| this paper [29] | ⊥ | e | ⊤ | |||||

| Cuninghame-Green [7,30] | ⊕ | ⊗ | 0 | ∞ | ||||

| lattice algebra (scalar) [38] (matricial) | ∨ | ∧ | + | 0 | ∞ | |||

| ∨ | ∧ | |||||||

| Maragos (scalar) [32] (matricial) | ∨ | ∧ | + | ∞ | ||||

| ∨ | ∧ | ⊞ |

Appendix B. Residuated Maps, Adjunctions and Galois Connections

- A map is residuated if inverse images of principal (order) ideals of Q under f are again principal ideals. Its residual map or simply residual, is.

- A map is dually residuated if the inverse images of principal dual (order) ideals under g are again dual ideals. Its dual residual map or simply dual residual, is.

- 1.

- is an adjunction on the left or simply a left adjunction, and we write iff: , that is, the functions are covariant, and we say that λ is the lower or left adjoint while ρ is the upper or right adjoint.

- 2.

- is an adjunction on the right or simply a right adjunction iff: , both functions are covariant, ρ is the upper adjoint, and λ the lower adjoint.

- 3.

- is a Galois Connection (proper), of two dual adjoints iff: , that is, both functions are contravariant. For that reason they are sometimes named contravariant or symmetric adjunctions on the right.

- 4.

- is a co-Galois connection, of dual adjoints iff: , that is, both functions are contravariant. For that reason they are sometimes named contravariant or symmetric adjunctions on the left. is also a co-Galois connection.

- A closure system, , the closure range of the right adjoint (see below).

- An interior system, , the kernel range of the left adjoint (see below).

- A closure function [64] (suggest “closure operator”) , from P to the closure range , with adjoint inclusion map , where denotes the identity over P.

- A kernel function [64] (also “interior operator”, “kernel operator”) , from Q to the range of , with adjoint inclusion map , where denotes the identity over Q.

- a perfect adjunction , i.e., a dual order isomorphism between the closure and kernel ranges and .

- if form a left adjunction, then is residuated, preserves existing least upper bounds (for lattices, joins) and preserves existing greatest lower bounds (for lattices, meets).

- if form a Galois connection, then both and invert existing least upper bounds (for lattices, they transform joins into meets).

- if form a right adjunction, then preserves existing greatest lower bounds (meets for lattices) and is residuated, preserves existing least upper bounds (joins for lattices).

- if form a co-Galois connection, then both and invert existing greatest lower bounds (for lattices, they transform meets into joins).
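The properties listed above can be checked exhaustively on a small example. The sketch below uses the polars of a hypothetical Boolean context between two powersets ordered by inclusion, which form a Galois connection (type oi) in the sense of item 3. All names are illustrative assumptions, and the exhaustive loops are only feasible for very small sets.

```python
from itertools import chain, combinations

# Hypothetical Boolean context: rows are objects, columns are attributes.
I = [[1, 1, 0],
     [1, 1, 1],
     [0, 1, 1],
     [0, 0, 1]]
G = range(len(I))
M = range(len(I[0]))

def powerset(s):
    """All subsets of s as frozensets."""
    s = list(s)
    subsets = chain.from_iterable(combinations(s, k) for k in range(len(s) + 1))
    return [frozenset(c) for c in subsets]

def up(A):
    """Polar of a set of objects."""
    return frozenset(m for m in M if all(I[g][m] for g in A))

def down(B):
    """Polar of a set of attributes."""
    return frozenset(g for g in G if all(I[g][m] for m in B))

PG, PM = powerset(G), powerset(M)

# Defining condition of a Galois connection: B <= up(A) iff A <= down(B).
is_galois = all((B <= up(A)) == (A <= down(B)) for A in PG for B in PM)

# Both polars turn joins (unions) into meets (intersections).
inverts_joins = all(up(A1 | A2) == up(A1) & up(A2) for A1 in PG for A2 in PG)

# The composition down(up(.)) is extensive, monotone and idempotent.
def closure(A):
    return down(up(A))

is_closure = all(A <= closure(A) and closure(closure(A)) == closure(A)
                 for A in PG)
is_monotone = all(closure(A1) <= closure(A2)
                  for A1 in PG for A2 in PG if A1 <= A2)
```

All four flags evaluate to true for this context, in line with the statement that the compositions of the two adjoints are closure functions on the respective orders.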

| Left Adjunction (type oo): | Galois Connection (type oi): |

|---|---|

| and | and |

| and | and |

| monotone, residuated | antitone |

| monotone, residual | antitone |

| join-preserving, meet-preserving | join-inverting, join-inverting |

| co-Galois connection (type io): | Right Adjunction (type ii): |

| and | and |

| and | and |

| antitone | monotone, residual |

| antitone | monotone, residuated |

| meet-inverting, meet-inverting | meet-preserving, join-preserving |

A Naming Convention for Galois Connections

- We take the type oo Galois connection to be a basic adjunction composed with an even number of anti-isomorphisms on the domain and range orders.

- To obtain a type oi Galois connection compose a basic adjunction with an odd number of anti-isomorphisms on the range.

- To get a type io Galois connection we compose a basic adjunction with an odd number of anti-isomorphisms on the domain.

- Finally, a type ii Galois connection, is a basic adjunction with an odd number of anti-isomorphisms composed on both the domain and range.

References

- Kaburlasos, V.G. The Lattice Computing (LC) Paradigm. In Proceedings of the 15th International Conference on Concept Lattices and Their Applications CLA, Tallinn, Estonia, 29 June–1 July 2020; Valverde-Albacete, F.J., Trnecka, M., Eds.; Tallinn University of Technology: Tallinn, Estonia, 2020; pp. 1–7.

- Kaburlasos, V.G.; Ritter, G.X. (Eds.) Computational Intelligence Based on Lattice Theory; Springer: Berlin/Heidelberg, Germany, 2010.

- Platzer, A. Logical Foundations of Cyber-Physical Systems; Springer: Berlin/Heidelberg, Germany, 2018.

- Golub, G.H.; Van Loan, C.F. Matrix Computations, 3rd ed.; JHU Press: Baltimore, MD, USA, 2012.

- Mirkin, B. Mathematical Classification and Clustering; Nonconvex Optimization and its Applications; Kluwer Academic Publishers: Dordrecht, The Netherlands; Springer: Berlin/Heidelberg, Germany, 1996.

- Baccelli, F.; Cohen, G.; Olsder, G.; Quadrat, J. Synchronization and Linearity; Wiley: Hoboken, NJ, USA, 1992.

- Butkovič, P. Max-Linear Systems. Theory and Algorithms; Monographs in Mathematics; Springer: Berlin/Heidelberg, Germany, 2010.

- Ganter, B.; Wille, R. Formal Concept Analysis: Mathematical Foundations; Springer: Berlin/Heidelberg, Germany, 1999.

- Valverde-Albacete, F.J.; Peláez-Moreno, C. The Rényi Entropies Operate in Positive Semifields. Entropy 2019, 21, 780.

- Golan, J.S. Semirings and Their Applications; Springer: Berlin/Heidelberg, Germany, 1999.

- Gondran, M.; Minoux, M. Graphs, Dioids and Semirings. New Models and Algorithms; Operations Research Computer Science Interfaces Series; Springer: Berlin/Heidelberg, Germany, 2008.

- Denecke, K.; Erné, M.; Wismath, S. (Eds.) Galois Connections and Applications; Number 565 in Mathematics and Its Applications; Kluwer Academic: Dordrecht, The Netherlands; Boston, MA, USA; London, UK, 2004.

- Lanczos, C. Linear Differential Operators; Dover Publications: Mineola, NY, USA, 1997.

- Strang, G. The fundamental theorem of linear algebra. Am. Math. Mon. 1993, 100, 848–855.

- Deerwester, S.; Dumais, S.; Furnas, G.W.; Landauer, T.K.; Harshman, R. Indexing by latent semantic analysis. J. Am. Soc. Inf. Sci. 1990, 41, 391–407.

- Pearson, K. On Lines and Planes of Closest Fit to Systems of Points in Space. Philos. Mag. 1901, 2, 559–572.

- Landauer, T.K.; McNamara, D.S.; Dennis, S.; Kintsch, W. Handbook of Latent Semantic Analysis; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 2007.

- Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning. Data Mining, Inference and Prediction, 2nd ed.; Springer Series in Statistics; Springer: Berlin/Heidelberg, Germany, 2008; pp. 1–764.

- Wille, R. Finite distributive lattices as concept lattices. Log. Math. 1985, 2, 635–648.

- Davey, B.; Priestley, H. Introduction to Lattices and Order, 2nd ed.; Cambridge University Press: Cambridge, UK, 2002.

- Birkhoff, G. Lattice Theory, 3rd ed.; American Mathematical Society: Providence, RI, USA, 1967.

- Ore, O. Theory of Graphs. American Mathematical Society Colloquium Publications; American Mathematical Society: Providence, RI, USA, 1962; Volume XXXVIII.

- Barbut, M.; Monjardet, B. Ordre et Classification. Algèbre et Combinatoire, Tome I; Méthodes Mathématiques des Sciences de l’Homme; Hachette: New York, NY, USA, 1970.

- Barbut, M.; Monjardet, B. Ordre et Classification. Algèbre et Combinatoire, Tome II; Méthodes Mathématiques des Sciences de l’Homme; Hachette: New York, NY, USA, 1970.

- Belohlavek, R.; Vychodil, V. Formal concepts as optimal factors in Boolean factor analysis: Implications and experiments. In Proceedings of the 5th International Conference on Concept Lattices and their Applications, (CLA07), Montpellier, France, 24–26 October 2007.

- Valverde-Albacete, F.J.; Peláez-Moreno, C. On the Relation between Semifield-Valued FCA and the Idempotent Singular Value Decomposition. In Proceedings of the IEEE International Conference on Fuzzy Systems (FUZZ IEEE 2018), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–8.

- Valverde-Albacete, F.J.; Peláez-Moreno, C. Towards a Generalisation of Formal Concept Analysis for Data Mining Purposes. In Formal Concept Analysis; Springer: Berlin/Heidelberg, Germany, 2006; Volume LNAI 3874, pp. 161–176.

- Valverde-Albacete, F.J.; Peláez-Moreno, C. Further Galois connections between Semimodules over Idempotent Semirings. In Proceedings of the Fifth International Conference on Concept Lattices and Their Applications, CLA 2007, Montpellier, France, 24–26 October 2007; pp. 199–212.

- Valverde-Albacete, F.J.; Peláez-Moreno, C. Extending conceptualisation modes for generalised Formal Concept Analysis. Inf. Sci. 2011, 181, 1888–1909.

- Cuninghame-Green, R. Minimax Algebra; Number 166 in Lecture notes in Economics and Mathematical Systems; Springer: Berlin/Heidelberg, Germany, 1979.

- Ritter, G.X.; Sussner, P. An Introduction to Morphological Neural Networks. In Proceedings of the International Conference on Pattern Recognition (ICPR’96), Vienna, Austria, 25–29 August 1996; pp. 709–717.

- Maragos, P. Dynamical systems on weighted lattices: General theory. Math. Control Signals Syst. 2017, 29, 1–49.

- Valverde-Albacete, F.J.; Peláez-Moreno, C. The Linear Algebra in Formal Concept Analysis over Idempotent Semifields. In Formal Concept Analysis; Number 9113 in LNAI; Springer: Berlin/Heidelberg, Germany, 2015; pp. 97–113.

- Valverde-Albacete, F.J.; Peláez-Moreno, C. K-Formal Concept Analysis as linear algebra over idempotent semifields. Inf. Sci. 2018, 467, 579–603.

- Moreau, J.J. Inf-convolution, Sous-additivité, convexité des fonctions Numériques. J. Math. Pures Appl. 1970, 49, 109–154.

- Valverde-Albacete, F.J.; Peláez-Moreno, C. The Linear Algebra in Extended Formal Concept Analysis Over Idempotent Semifields. In Formal Concept Analysis; Bertet, K., Borchmann, D., Cellier, P., Ferré, S., Eds.; Springer: Berlin/Heidelberg, Germany, 2017; pp. 211–227.

- Ritter, G.X.; Sussner, P. The minimax eigenvalue transform. In Image Algebra and Morphological Image Processing, III (San Diego, CA, 1992); Gader, P.D., Dougherty, E.R., Serra, J.C., Eds.; SPIE: Bellingham, WA, USA, 1992; pp. 276–282.

- Ritter, G.X.; Diaz-de Leon, J.; Sussner, P. Morphological bidirectional associative memories. Neural Netw. 1999, 12, 851–867.

- Ritter, G.X.; Sussner, P.; Diaz-de Leon, J. Morphological Associative Memories. IEEE Trans. Neural Netw. 1998, 9, 281–293. [Google Scholar] [CrossRef] [PubMed]

- Schutter, B.D.; Moor, B.D. The Singular-Value Decomposition in the Extended Max Algebra. Linear Algebra Its Appl. 1997, 250, 143–176. [Google Scholar] [CrossRef] [Green Version]

- Schutter, B.D.; Moor, B.D. The QR Decomposition and the Singular Value Decomposition in the Symmetrized Max-Plus Algebra Revisited. SIAM Rev. 2002, 44, 417–454. [Google Scholar] [CrossRef]

- Hook, J. Max-plus singular values. Linear Algebra Its Appl. 2015, 486, 419–442.

- Ronse, C. Why mathematical morphology needs complete lattices. Signal Process. 1990, 21, 129–154.

- Blyth, T.; Janowitz, M. Residuation Theory; Pergamon Press: Oxford, UK, 1972.

- Cohen, G.; Gaubert, S.; Quadrat, J.P. Duality and separation theorems in idempotent semimodules. Linear Algebra Its Appl. 2004, 379, 395–422.

- Cohen, G.; Gaubert, S.; Quadrat, J.P. Projection and aggregation in maxplus algebra. In Current Trends in Nonlinear Systems and Control; Birkhäuser Boston: Boston, MA, USA, 2006; pp. 443–454.

- Gaubert, S.; Katz, R.D. The tropical analogue of polar cones. Linear Algebra Its Appl. 2009, 431, 608–625.

- Di Loreto, M.; Gaubert, S.; Katz, R.D.; Loiseau, J.J. Duality Between Invariant Spaces for Max-Plus Linear Discrete Event Systems. SIAM J. Control Optim. 2010, 48, 5606–5628.

- Gaubert, S. Théorie des Systèmes Linéaires Dans les Dioïdes. Ph.D. Thesis, École des Mines de Paris, Paris, France, 1992.

- Cohen, G.; Gaubert, S.; Quadrat, J.P. Kernels, images and projections in dioids. In Proceedings of the Workshop on Discrete Event Systems (WODES), Edinburgh, Scotland, UK, 19–21 August 1996; pp. 1–8.

- Valverde-Albacete, F.J.; Peláez-Moreno, C. Galois Connections between Semimodules and Applications in Data Mining. In Formal Concept Analysis, Proceedings of the 5th International Conference on Formal Concept Analysis, ICFCA 2007, Clermont-Ferrand, France, 12–16 February 2007; Number 4390 in LNAI; Kuznetsov, S.O., Schmidt, S., Eds.; Springer: Berlin/Heidelberg, Germany, 2007; pp. 181–196.

- Valverde-Albacete, F.J.; Peláez-Moreno, C. Idempotent Semifield-Valued Formal Concept Analysis and the 4-fold Galois Connection. 2020; in preparation.

- Valverde-Albacete, F.J.; Peláez-Moreno, C. Towards Galois Connections over Positive Semifields. In Information Processing and Management of Uncertainty in Knowledge-Based Systems; Springer: Berlin/Heidelberg, Germany, 2016; Volume 611 CCIS, pp. 81–92.

- Bloch, I. On links between mathematical morphology and rough sets. Pattern Recognit. 2000, 33, 1487–1496.

- Valverde-Albacete, F.J.; Peláez-Moreno, C. The spectra of irreducible matrices over completed idempotent semifields. Fuzzy Sets Syst. 2015, 271, 46–69.

- Valverde-Albacete, F.J.; Peláez-Moreno, C. The spectra of reducible matrices over complete commutative idempotent semifields and their spectral lattices. Int. J. Gen. Syst. 2016, 45, 86–115.

- Kleinberg, J.M. Authoritative sources in a hyperlinked environment. J. ACM 1999, 46, 604–632.

- Valverde-Albacete, F.J.; Peláez-Moreno, C. A Formal Concept Analysis Look at the Analysis of Affiliation Networks. In Formal Concept Analysis of Social Networks; Missaoui, R., Kuznetsov, S.O., Obiedkov, S., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 171–195.

- Belohlavek, R.; Vychodil, V. Factor Analysis of Incidence Data via Novel Decomposition of Matrices. In Proceedings of the International Conference on Formal Concept Analysis (ICFCA09), Darmstadt, Germany, 12 May 2009; Springer: Berlin/Heidelberg, Germany, 2009; pp. 83–97.

- Belohlavek, R. Optimal decompositions of matrices with entries from residuated lattices. J. Log. Comput. 2012, 22, 1405–1425.

- Belohlavek, R.; Trnecka, M. From-below approximations in Boolean matrix factorization: Geometry and new algorithm. J. Comput. Syst. Sci. 2015, 81, 1678–1697.

- Belohlavek, R.; Outrata, J.; Trnecka, M. Toward quality assessment of Boolean matrix factorizations. Inf. Sci. 2018, 459, 71–85.

- Vorobjev, N. The extremal matrix algebra. Dokl. Akad. Nauk SSSR 1963, 4, 1220–1223.

- Düntsch, I.; Gediga, G. Approximation operators in qualitative data analysis. In Theory and Applications of Relational Structures as Knowledge Instruments; Lecture Notes in Computer Science; de Swart, H., Orłowska, E., Schmidt, G., Roubens, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2003; Volume 2929, pp. 214–230.

- Erné, M. Adjunctions and Galois connections: Origins, History and Development. In Galois Connections and Applications; Springer: Amsterdam, The Netherlands, 2004; pp. 1–138.

- Erné, M.; Koslowski, J.; Melton, A.; Strecker, G. A primer on Galois Connections. Ann. N. Y. Acad. Sci. 1993, 704, 103–125.

| Special Element | BIAS in | Bias in |
|---|---|---|
| top | | |
| attribute-concepts | | |
| object-concepts | | |
| bottom | | |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Valverde-Albacete, F.J.; Peláez-Moreno, C. The Singular Value Decomposition over Completed Idempotent Semifields. Mathematics 2020, 8, 1577. https://doi.org/10.3390/math8091577
