Abstract

This paper is devoted to the approximation of matrix pth roots. We present and analyze a family of inverse-free algorithms obtained by combining two families of iterative methods. The first family gives an approximation of the matrix inverse. The second family uses this approximation to compute an approximation of the matrix pth root. We analyze the computational cost and the convergence of the combined family. Finally, we present several numerical examples to check its performance. We conclude that the inverse-free method is a good alternative, since it shows similar numerical behavior at a smaller computational cost.

Keywords:

matrix pth root; inverse operator; iterative method; order of convergence; stability; semilocal convergence

MSC:

49N45; 65J22

1. Introduction

The computation of matrix functions appears in many applications, and iterative methods have emerged as a useful technique for approximating them. This paper is devoted to the approximation of the matrix pth root. We recall that the principal pth root of a square complex matrix $A$ with no eigenvalues on the closed negative real axis $\mathbb{R}^-$ is defined as the unique solution $X$ of

$$X^p = A$$

whose eigenvalues have arguments of modulus lower than $\pi/p$.

For this kind of problem, the well-known Newton method takes the following form

$$X_{n+1} = \frac{1}{p}\left[(p-1)X_n + A X_n^{1-p}\right], \quad n \ge 0.$$

This formula is a direct extension of the method applied to the scalar equation $x^p - a = 0$. In this form, the method achieves quadratic convergence but, unfortunately, it is unstable [1]. However, as can be seen in [2], a stable version can be found.
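As a minimal sketch of the plain Newton iteration just described, the following NumPy code applies $X \leftarrow \frac{1}{p}[(p-1)X + A X^{1-p}]$ starting from the identity. The function name `newton_pth_root`, the starting guess, the stopping rule, and the test matrix are illustrative assumptions and are not taken from the paper. The example matrix is small and well conditioned, so the instability mentioned above is not expected to show up here.

```python
import numpy as np

def newton_pth_root(A, p, max_iter=50, tol=1e-12):
    """Plain Newton iteration X <- ((p-1) X + A X^(1-p)) / p, started at X = I."""
    X = np.eye(A.shape[0])
    for _ in range(max_iter):
        # X^(1-p) is formed as (X^{-1})^(p-1); this explicit inverse of the
        # iterate is what inverse-free variants are designed to avoid.
        X_inv = np.linalg.inv(X)
        X = ((p - 1) * X + A @ np.linalg.matrix_power(X_inv, p - 1)) / p
        # Stop once the relative residual of X^p = A is small enough.
        residual = np.linalg.norm(np.linalg.matrix_power(X, p) - A)
        if residual <= tol * np.linalg.norm(A):
            break
    return X

# Illustrative symmetric positive definite matrix (eigenvalues 1 and 3).
A = np.array([[2.0, 1.0], [1.0, 2.0]])
X = newton_pth_root(A, p=3)
print(np.linalg.norm(np.linalg.matrix_power(X, 3) - A))
```

Each step inverts the current iterate, which is the cost that the rest of the paper sets out to remove.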

In fact, several applications of the Newton method exist for finding the matrix pth root; see, for instance, [1,2,3,4,5,6].

Similarly, we can derive the third-order Chebyshev method as

where s.t. B has no nonpositive real eigenvalues.

In our paper [7], we proposed stable versions of this algorithm and presented some of its numerical advantages with respect to Newton’s method and other third-order methods such as Halley’s method [8,9]. Finally, we developed a general family of any order that includes both the Newton and Chebyshev methods.

In order to develop a method avoiding the use of inverses of the different iterates, we can consider the approximation of this less natural equation . In this case, the method presented in [7] has the form

where

This method has order m, and in particular, for $m = 2$ and $m = 3$, we recover the Newton and Chebyshev methods, respectively.

In the above Formula (2), we only need to compute the inverse of A. In the present paper, we propose to approximate the inverse of A by another iterative method. We can use our family introduced in [10] that has the form
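To illustrate how an inverse can be approximated using only matrix products, here is a minimal sketch based on the classical hyperpower iteration $Y \leftarrow Y\,(I + R + \dots + R^{q-1})$ with $R = I - AY$, which has order $q$ because the residual satisfies $I - AY_{k+1} = (I - AY_k)^q$. This is an assumption used as a stand-in: the exact form of the family from [10] is not reproduced in this text and may differ. The function name `hyperpower_inverse`, the starting guess, and the test matrix are likewise illustrative.

```python
import numpy as np

def hyperpower_inverse(A, q=3, max_iter=100, tol=1e-13):
    """Approximate the inverse of a real nonsingular A with an order-q hyperpower iteration."""
    I = np.eye(A.shape[0])
    # Classical starting guess: A^T scaled so that the spectral radius of
    # I - A Y is below 1 for any real nonsingular A.
    Y = A.T / (np.linalg.norm(A, 1) * np.linalg.norm(A, np.inf))
    for _ in range(max_iter):
        R = I - A @ Y                      # current residual
        if np.linalg.norm(R) <= tol:
            break
        # Horner evaluation of I + R + ... + R^(q-1) using q - 2 products.
        S = I.copy()
        for _k in range(q - 1):
            S = I + R @ S
        Y = Y @ S
    return Y

# Illustrative nonsymmetric test matrix.
A = np.array([[4.0, 1.0], [2.0, 3.0]])
Y = hyperpower_inverse(A, q=3)
print(np.linalg.norm(A @ Y - np.eye(2)))
```

With `q=2` the sketch reduces to the Newton-Schulz iteration $Y \leftarrow Y(2I - AY)$.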

Our goal is to see that the new approach yields a similar numerical behavior but with the advantage that it is free of inverse operators, and in particular, has a smaller computational cost, which means that it requires fewer operations.

The computation of the pth root of a matrix appears, for example, in fractional differential equations, discrete representations of norms corresponding to finite element discretizations of fractional Sobolev spaces, and the computation of geodesic-midpoints in neural networks (see [11] and the references therein).

2. A General Method for Approximating the Matrix pth Root Free of Inverse Operators

As we mentioned in the introduction, for approximating $A^{1/p}$, we propose combining two families: one for the approximation of $A^{-1}$ and another that uses this approximation to approximate the matrix pth root.

First step: for approximating $A^{-1}$

Second step: for approximating $A^{1/p}$

where denotes the final iteration computed by the above method (4) in the first step.
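The two-step structure can be sketched as follows. Since the displayed formulas are not reproduced in this text, the code rests on stated assumptions: the first step uses the classical hyperpower iteration as a stand-in for the family of [10], and the second step uses $X \leftarrow X \sum_{k=0}^{m-1} c_k R^k$ with $R = I - Y X^p$, where $Y \approx A^{-1}$ is kept fixed and $c_k$ are the Taylor coefficients of $(1-t)^{-1/p}$. For $m = 2$ this gives a Newton-type step $X(I + R/p)$. All function names, starting guesses, and the test matrix are hypothetical.

```python
import numpy as np

def approx_inverse(A, q=2, max_iter=100, tol=1e-14):
    """First step (assumed stand-in): hyperpower iteration of order q for A^{-1}."""
    I = np.eye(A.shape[0])
    Y = A.T / (np.linalg.norm(A, 1) * np.linalg.norm(A, np.inf))
    for _ in range(max_iter):
        R = I - A @ Y
        if np.linalg.norm(R) <= tol:
            break
        S = I.copy()
        for _k in range(q - 1):            # Horner: I + R + ... + R^(q-1)
            S = I + R @ S
        Y = Y @ S
    return Y

def inverse_free_pth_root(A, p, m=3, q=2, max_iter=50, tol=1e-12):
    """Second step (assumed form): order-m iteration using only products (needs m >= 2)."""
    I = np.eye(A.shape[0])
    Y = approx_inverse(A, q)               # fixed approximation of A^{-1}
    # Taylor coefficients of (1 - t)^(-1/p): c_0 = 1, c_k = c_{k-1} (1/p + k - 1) / k.
    c = [1.0]
    for k in range(1, m):
        c.append(c[-1] * (1.0 / p + k - 1) / k)
    X = I.copy()
    for _ in range(max_iter):
        R = I - Y @ np.linalg.matrix_power(X, p)
        if np.linalg.norm(R) <= tol:
            break
        # Horner evaluation of c_0 I + c_1 R + ... + c_{m-1} R^(m-1).
        S = c[-1] * I
        for ck in reversed(c[:-1]):
            S = ck * I + R @ S
        X = X @ S
    return X

# Illustrative symmetric positive definite matrix (eigenvalues 1 and 3).
A = np.array([[2.0, 1.0], [1.0, 2.0]])
X = inverse_free_pth_root(A, p=3, m=3, q=2)
print(np.linalg.norm(np.linalg.matrix_power(X, 3) - A))
```

No call to a matrix inverse or linear solver appears in either step. The starting guess $X_0 = I$ is only suitable when the initial residual $I - Y$ is small enough, which holds for this example but is not guaranteed in general.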

2.1. Convergence

To establish the convergence of the general method (5), we observe that

Now, suppose that, from method (4), we consider such that . Then, for the iterative process (4), using Theorem 3.2 in [10], we obtain

Finally, fixed we take such that . Then, from Theorem 4.1 in [7], the sequence , given by the general method (5), converges to ; therefore, is bounded. So, there exists such that for all .

Theorem 1.

Suppose with and such that, from (4), we consider . If is such that , then for all tolerance given, there exists such that for .

2.2. Computational Cost and Efficiency

If A is a matrix , and taking into account that the computational cost of the product of two matrices is and that adding or subtracting two matrices has a computational cost of , then the computational cost of

- the computation of matrix , given by means of using (4), is and its computational efficiency is

- the computation of method (2) has a computational cost of and its computational efficiency is

- the computational cost of method (5) is obtained directly by and its computational efficiency is

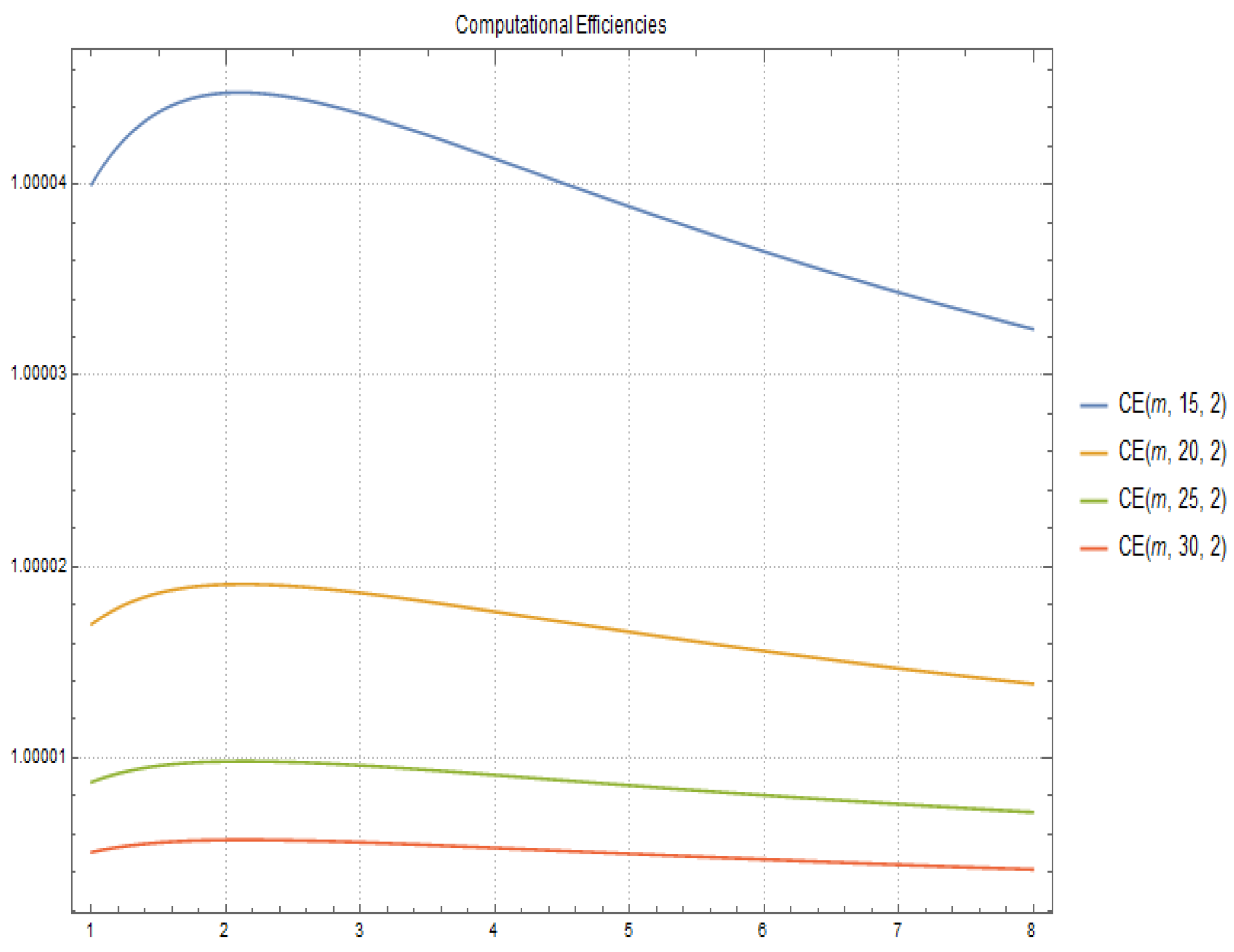

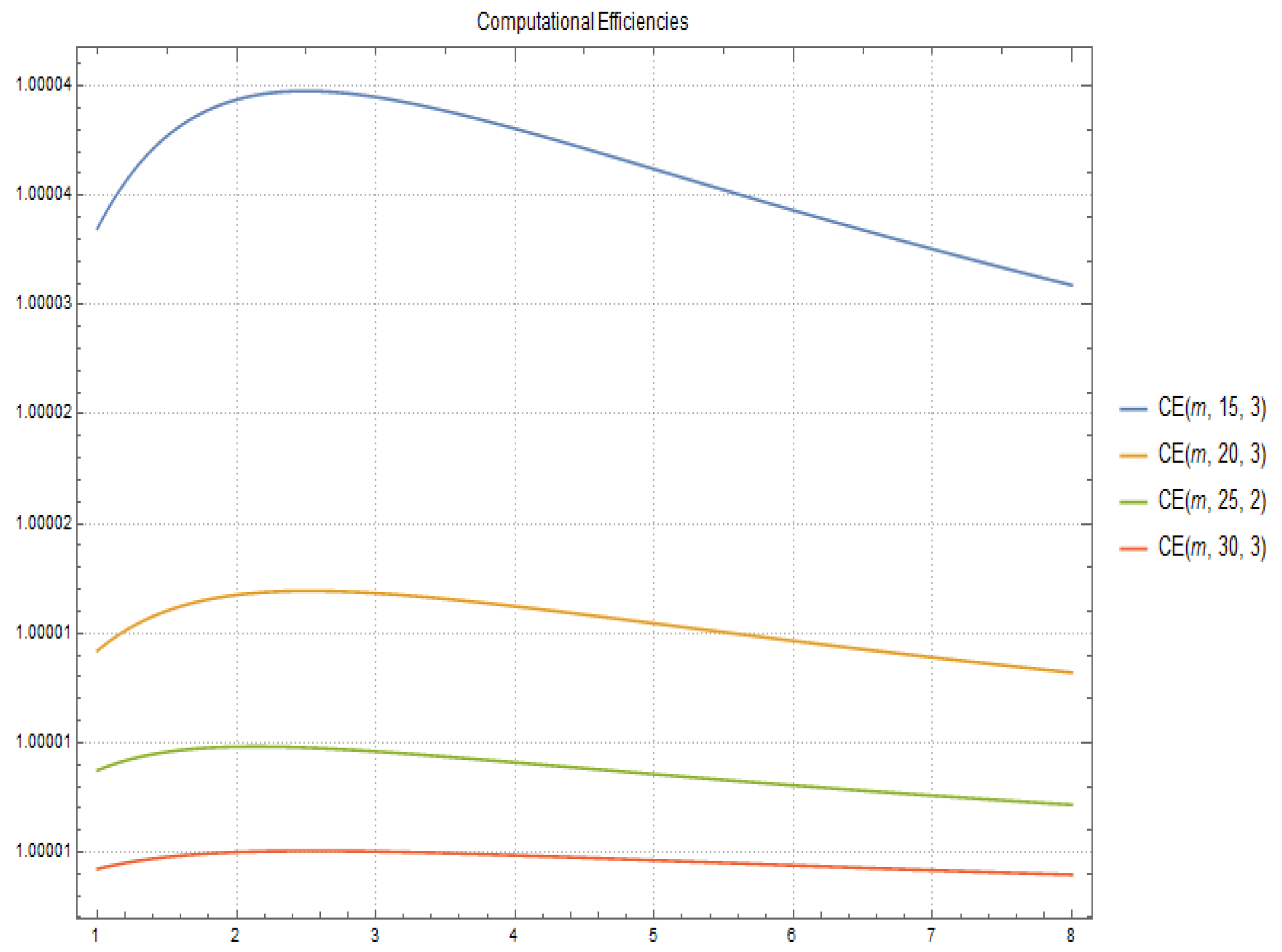

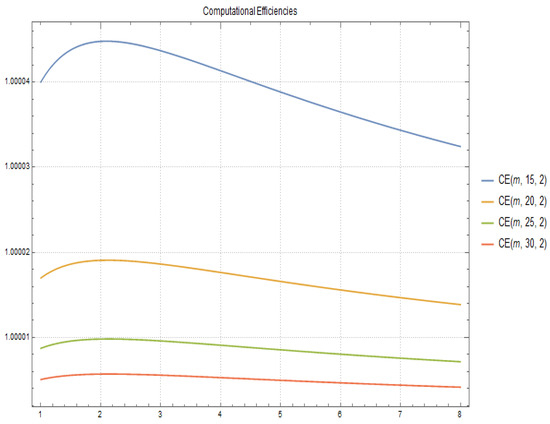

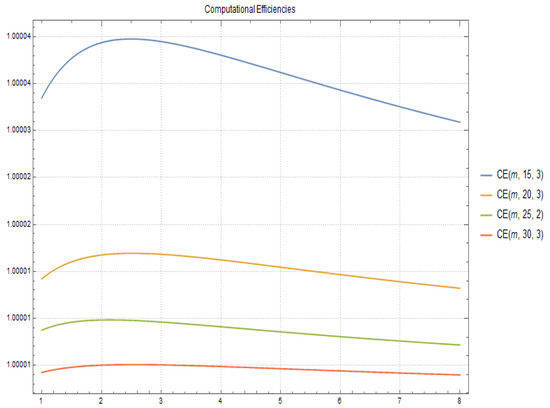

In Figure 1 and Figure 2 the computational efficiency for fixed and respectively and different values of q and m are shown.

Figure 1.

Computational Efficiencies for and different values of q and m.

Figure 2.

Computational Efficiencies for and different values of q and m.

When working with a family of methods, the best member generally depends on the problem considered (see the next figures) and on the approach used to solve it. For example, the most efficient method (in terms of computation) for solving sparse problems, which appear when discretizing differential equations, is Chebyshev’s method [7,10].

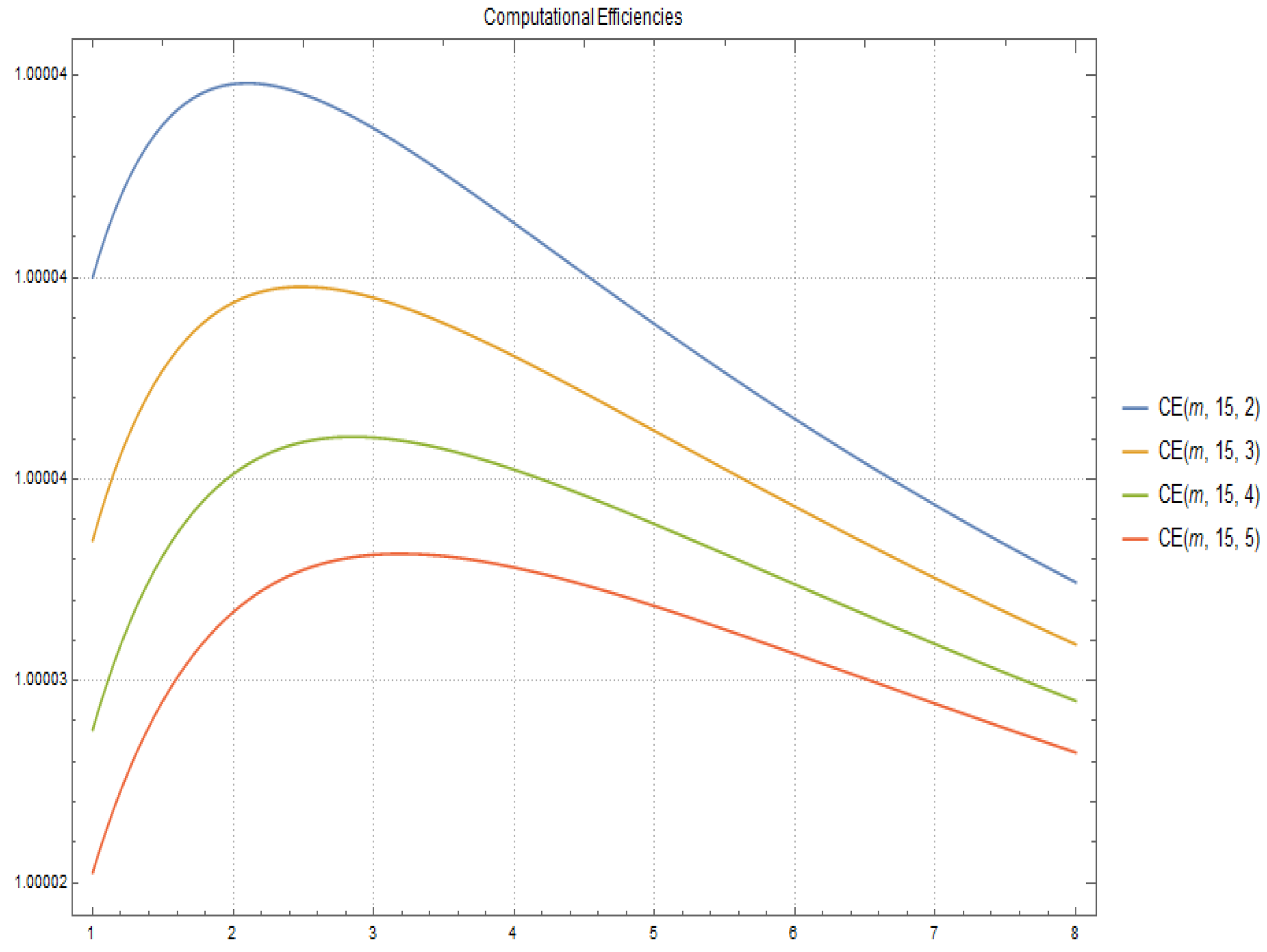

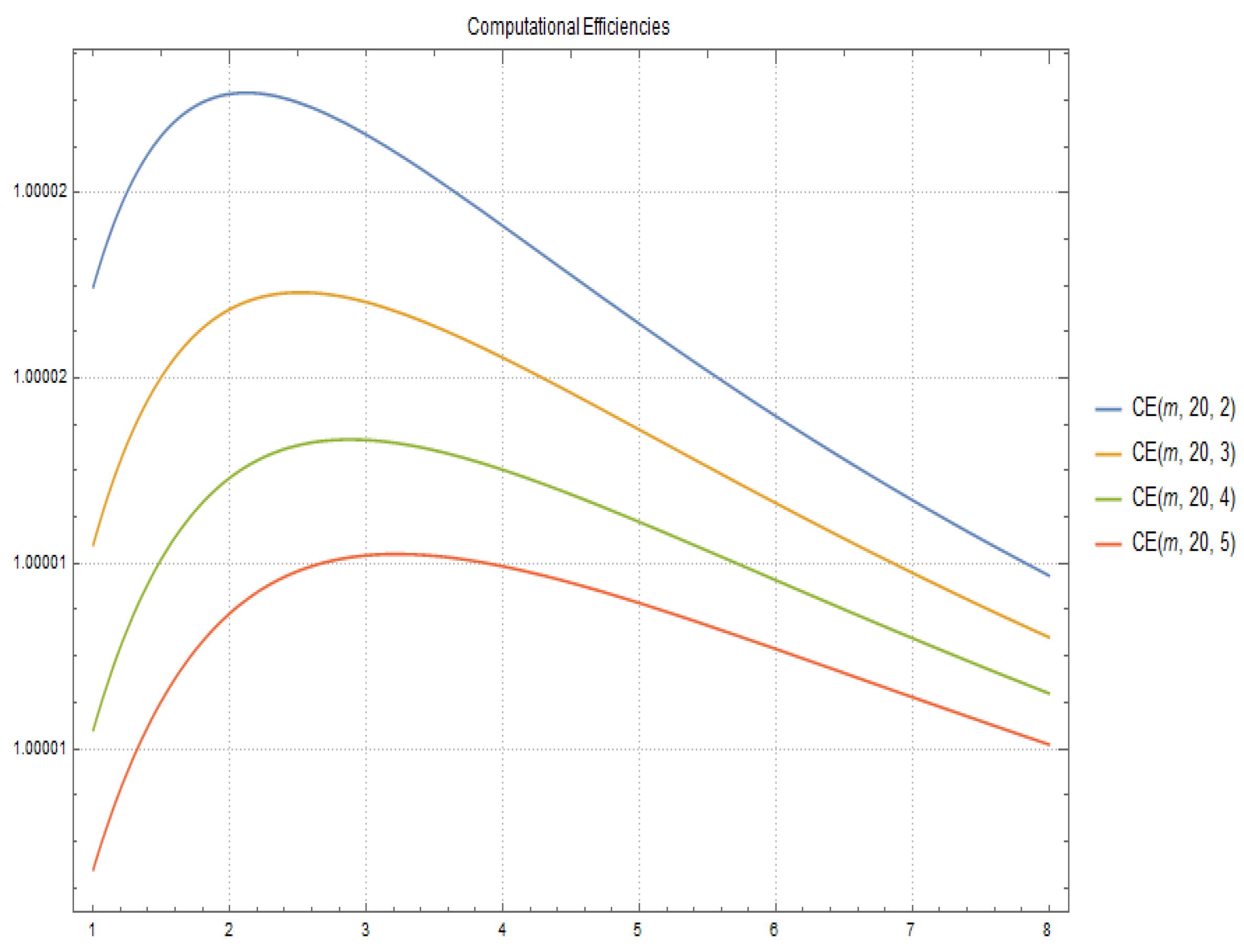

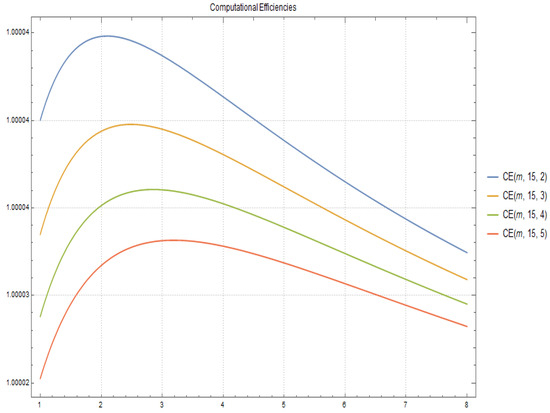

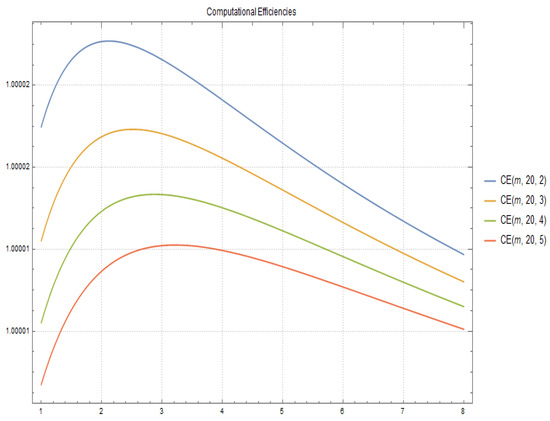

In Figure 3 and Figure 4 the computational efficiency for fixed and respectively and different values of p and m are shown.

Figure 3.

Computational Efficiencies for and different values of p and m.

Figure 4.

Computational Efficiencies for and different values of p and m.

3. Applications Related to Differential Equations

In this section, we present a comparison of the original method (2), which uses the inverse of $A$, and the new proposal (5), which avoids the use of any inverse operator. We refer to our paper [7] for the advantages of the original method in comparison with other methods that appear in the literature.

We consider the approximation of the pth root of two matrices related to the discretization of differential equations. This type of matrix operation appears in the approximation of space-fractional diffusion problems [12].

We start with the following matrix

where and .

The matrix (9) can be seen as the result of applying a discretization process, using finite differences to the boundary value problem defined as

where

Since the matrix A is sparse, the most efficient methods in both families are Chebyshev-like methods.

In Table 1, we compare our original method using (2) and the new combination using the approximation of the inverse (5). We observe a similar numerical behavior of both methods. Thus, the approximation of the inverse seems a good alternative, since it yields similar errors at a smaller computational cost.

Table 1.

Error for the approximation of , taking 20,000, ) and four iterations.

Finally, we compute the pth matrix roots for the matrix

This matrix is related to the discretization, using finite differences, of the Laplacian operator, which appears in many mathematical models. In Table 2, we observe again a similar numerical behavior of both schemes.
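For readers who want to experiment with a matrix of this kind, the sketch below builds the standard second-difference matrix $\mathrm{tridiag}(-1, 2, -1)$ and computes a reference pth root through a symmetric eigendecomposition. This is an assumption: the exact matrix, size, and scaling used in the paper are not reproduced in this text, and the helper names `laplacian_1d` and `pth_root_spd` are hypothetical. Such a reference root can be used to measure the error of an iterative approximation.

```python
import numpy as np

def laplacian_1d(n):
    """Tridiagonal second-difference matrix tridiag(-1, 2, -1) of size n x n."""
    return 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)

def pth_root_spd(A, p):
    """Reference principal pth root of a symmetric positive definite matrix."""
    w, V = np.linalg.eigh(A)               # A = V diag(w) V^T with w > 0
    return (V * w ** (1.0 / p)) @ V.T      # V diag(w^(1/p)) V^T

A = laplacian_1d(10)
X = pth_root_spd(A, p=3)
print(np.linalg.norm(np.linalg.matrix_power(X, 3) - A))
```

The eigendecomposition is affordable only for moderate sizes; it serves here as a check, not as a replacement for the iterative schemes compared in the tables.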

Table 2.

Error for the approximation of taking , , and three iterations.

4. Conclusions

The evaluation of matrix functions appears in a large and growing number of applications. We have presented and studied a general family of iterative methods, free of inverse operators, for the approximation of the matrix pth root. The family incorporates the approximation of $A^{-1}$, by an iterative method, into another iterative method for approximating $A^{1/p}$. The family includes methods of every order of convergence.

As shown in [7,10], the most efficient method, in computational terms, for solving the sparse problems that arise when discretizing differential equations is Chebyshev’s method.

Some numerical examples related to the discretization of differential equations have been presented. We have concluded that the new approach (5) has a numerical behavior similar to that of (2), with the advantage that it is free of inverse operators and, in particular, has a lower computational cost. Finally, we note the existence of other strategies for computing fractional powers of a matrix that do not use matrix iterations [13,14], as well as other techniques such as those appearing in [15].

Author Contributions

Conceptualization, S.A., S.B., M.Á.H.-V. and Á.A.M.; methodology, S.A., S.B., M.Á.H.-V. and Á.A.M.; software, S.A., S.B., M.Á.H.-V. and Á.A.M.; validation, S.A., S.B., M.Á.H.-V. and Á.A.M.; formal analysis, S.A., S.B., M.Á.H.-V. and Á.A.M.; investigation, S.A., S.B., M.Á.H.-V. and Á.A.M.; resources, S.A., S.B., M.Á.H.-V. and Á.A.M.; data curation, S.A., S.B., M.Á.H.-V. and Á.A.M.; writing original draft preparation, S.A., S.B., M.Á.H.-V. and Á.A.M.; writing review and editing, S.A., S.B., M.Á.H.-V. and Á.A.M.; visualization, S.A., S.B., M.Á.H.-V. and Á.A.M.; supervision, S.A., S.B., M.Á.H.-V. and Á.A.M.; project administration, S.A., S.B., M.Á.H.-V. and Á.A.M.; funding acquisition, S.A., S.B., M.Á.H.-V. and Á.A.M. All authors have read and agreed to the published version of the manuscript.

Funding

The research of the authors S.A. and S.B. was funded in part by Programa de Apoyo a la investigación de la fundación Séneca-Agencia de Ciencia y Tecnología de la Región de Murcia 20928/PI/18 and by PID2019-108336GB-100 (MINECO/FEDER). The research of the author M.Á.H.-V. was supported in part by Spanish MCINN PGC2018-095896-B-C21. The research of the author Á.A.M. was funded in part by Programa de Apoyo a la investigación de la fundación Séneca-Agencia de Ciencia y Tecnología de la Región de Murcia 20928/PI/18 and by Spanish MCINN PGC2018-095896-B-C21.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Higham, N.J. Newton’s method for the matrix square root. Math. Comp. 1986, 46, 537–549.

- Iannazzo, B. On the Newton method for the matrix pth root. SIAM J. Matrix Anal. Appl. 2006, 28, 503–523.

- Guo, C.-H.; Higham, N.J. A Schur-Newton method for the matrix pth root and its inverse. SIAM J. Matrix Anal. Appl. 2006, 28, 788–804.

- Iannazzo, B. A note on computing the matrix square root. Calcolo 2003, 40, 273–283.

- Petkov, M.; Bors̆ukova, S. A modified Newton method for obtaining roots of matrices. Annu. Univ. Sofia Fac. Math. 1974, 66, 341–347. (In Bulgarian)

- Smith, M.I. A Schur algorithm for computing matrix pth roots. SIAM J. Matrix Anal. Appl. 2003, 24, 971–989.

- Amat, S.; Ezquerro, J.A.; Hernández-Verón, M.A. On a new family of high-order iterative methods for the matrix pth root. Numer. Linear Algebra Appl. 2015, 22, 585–595.

- Guo, C.-H. On Newton’s method and Halley’s method for the principal pth root of a matrix. Linear Algebra Appl. 2010, 432, 1905–1922.

- Iannazzo, B. A family of rational iterations and its application to the computation of the matrix pth root. SIAM J. Matrix Anal. Appl. 2008, 30, 1445–1462.

- Amat, S.; Ezquerro, J.A.; Hernández-Verón, M.A. Approximation of inverse operators by a new family of high-order iterative methods. Numer. Linear Algebra Appl. 2014, 21, 629–644.

- Higham, N.J.; Al-Mohy, A.H. Computing Matrix Functions; The University of Manchester, MIMS EPrint: Manchester, UK, 2010; Volume 18.

- Szekeres, B.J.; Izsák, F. Finite difference approximation of space-fractional diffusion problems: The matrix transformation method. Comput. Math. Appl. 2017, 73, 261–269.

- Iannazzo, B.; Manasse, C. A Schur logarithmic algorithm for fractional powers of matrices. SIAM J. Matrix Anal. Appl. 2013, 34, 794–813.

- Higham, N.J.; Lin, L. An improved Schur–Padé algorithm for fractional powers of a matrix and their Fréchet derivatives. SIAM J. Matrix Anal. Appl. 2013, 34, 1341–1360.

- Marino, G.; Scardamaglia, B.; Karapinar, E. Strong convergence theorem for strict pseudo-contractions in Hilbert spaces. J. Inequalities Appl. 2016, 2016, 134.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).