Abstract

The goal of this article is to investigate a new solver in the form of an iterative method to solve as an important nonlinear matrix equation (NME), where are appropriate matrices. The minimal and maximal solutions of this NME are discussed as Hermitian positive definite (HPD) matrices. The convergence of the scheme is given. Several numerical tests are also provided to support the theoretical discussions.

MSC:

65F30; 15A24

1. Subject, Literature, Motivation and Progress

1.1. Problem Statement

In this article, we take into account the nonlinear matrix equation (NME) [1]:

where is its Hermitian positive definite (HPD) solution, I is an identity matrix of the appropriate size, A is an invertible real or complex matrix and is the conjugate transpose of the matrix A. The existence of the solution for this NME was discussed in [2] and it was found that if one HPD solution was available, then all its Hermitian solutions would be positive definite. This problem for any HPD solution possesses the minimal solution and the maximal solution such that , for more details see [3].

The maximal solution for (1) can be derived via

where is the minimal solution of the dual NME as follows:

The NME in (1) is an important member of the general NME of the following form:

where , are arbitrary matrices [4,5].

In several disciplines such as control theory, stochastic filtering, queuing theory, etc., this type of nonlinear equation typically appears, see [6,7,8] for further discussions. On the other hand, it is always a daunting task to find a positive definite solution (PDS) to an NME [9,10]. This is because most of the existing methods are computationally expensive due to presence of the inverse part in each loop, see the discussions given in [11,12].

1.2. Literature

One of the pioneer solvers for computing the maximal solutions of (1) is the iterative expression (FPI) below which is based on a fixed-point strategy [13]:

Here, for each computing cycle, one matrix inverse must be computed. The authors in [14] presented another iteration scheme (SM) to compute the minimal solution as follows:

This scheme is categorized as a method without the computation of an inverse for each computing step, since is calculated once only by noticing that .

The main challenge in employing iterative schemes such as (6) is their slow rate of convergence specially at the initial stage of the process. To overcome this, author in [15] proposed to apply the multiple second-order Newton’s scheme for finding the HPD solution at the initial phase of convergence. This was pursued as follows:

for any .

Another well-known iteration scheme is the method proposed in [16,17], here denoted by EAM, which is as follows:

Authors in [18] proposed another iteration scheme as a generalization of the Chebyshev’s method (SOM) to solve (1) as follows:

1.3. Our Result

Our major result is a new iteration scheme similar to the form of SM for extreme solutions of (1). The method needs only the calculation of one inversion at the initial stage of the process and because of this, is categorized under the inversion-free iterative methods. Under mild conditions, the convergence of the scheme as well as some theoretical investigations to show how the method produces HPD solutions are given. In conclusion, we accelerate the long-standing algorithms for an important problem of numerical linear algebra. The experiments considered in this work reconfirm the competitiveness of the proposed iteration scheme compared to known algorithms. These will make this work interesting both practically and theoretically.

1.4. Organization of the Paper

After having a short introductory discussion regarding the issues with iterative methods for NMEs in this section, the remaining parts of this article are structured as follows. In Section 2, the solution of this NME as the zero of a nonlinear operator equation is introduced. In Section 2, several numerical experiments are investigated with implementation details given in Section 3 to confirm its applicability and to compare with several well-known and state-of-the-art methods from the literature. It is shown by way of illustration that the proposed solution method is useful and provides promising results. Lastly, some concluding remarks are made in Section 4.

2. Novel Iteration Method

2.1. An Equivalent NME

Clearly we can now impose a transformation as follows

to further simplify the nonlinear operator equation:

To obtain an iteration scheme to find the minimal HPD solutions, now it is necessary to solve the following problem:

where

2.2. Our Method

We employ (14) and (15) and view this NME as an inverse-finding problem via the Schulz-type iterative schemes (see, e.g., [19]), and accordingly we propose the following method:

The matrix iteration (16) requires to compute once specially at the initial stage of the implementation and it provides the iteration scheme considered an inversion-free method to solve (1).

Moreover, it is straightforward to state that the zeros of the map are equal to the zeros of the map , wherein . To discuss further, we find the inverse of H, which corresponds to the minimal HPD solution of (1).

The derivation of (16) can mainly be viewed as a matrix inverse finder. In fact, the last step of (16) is a method that can be used for finding generalized inverses. It is a member of the family of hyperpower iteration schemes employing several matrix products to compute the inverse of a given matrix. The iterative method can be rearranged to be written in its Horner form in order to reduce the rounding and cancellation errors when facing big matrices.

2.3. Theoretical Investigations

Lemma 1.

Employing the Hermitian matrix , the iteration scheme (16) provides a sequence of Hermitian matrices.

Proof.

It is obvious that the seed is Hermitian, and . Therefore, is also Hermitian, i.e., . Using an inductive argument, we have:

By considering , (), we then show that:

Note that has been employed in (18). The conclusion is valid for any . □

Theorem 1.

Assume that and A are invertible matrices. Then the sequence produced by (16) tends to the minimal solution of the NME (1) by having .

Proof.

We start by denoting that . Thus, we can write:

Then, by using (19) and taking a norm on both sides, one obtains:

Therefore, the inequality (20) can lead to convergence as long as the initial approximation reads . This yields to the following condition:

Then, by employing the HPD initial approximation , one obtains that

which holds as long as is the minimal HPD solution of (1). Furthermore, due to

we obtain that , thus . Hence, by mathematical induction, it is seen that converges to . □

It is required to discuss the rate of convergence. Although the proposed method (16) converges to the HPD solution under mild conditions, its q order of convergence is just linear. As will be seen later, however, due to its structure and a higher rate for computing matrix inverses, it converges faster because it comes to the convergence phase more quickly. This linear rate of convergence can be improved by employing an accelerator such as (7), in the initial phase of convergence.

3. Experiments

The computational efficiency and convergence behavior of PM (16) for the NME (1) are hereby illustrated on some numerical problems. All the compared methods were written in the programming package Mathematica 12.0 [20].

All computations were performed in standard floating point arithmetic without applying any compilations. In addition, computations were done on a laptop with 16.0 GB of RAM and Intel® Core™ i7-9750H. The matrix inversions whenever required were done using Mathematica 12.0 built-in commands. We found the number of iterations required to observe convergence. In the code we wrote to implement different schemes, we stopped all the applied schemes when two successive iterations in the infinity norm was less than a tolerance, as follows:

Here PM and SOM were employed along with (7) with and iterations and then (16) was used. Without imposing (7) the iteration schemes PM and SOM were faster than the other competitive ones, but the superiority was not tangible. Because of this, a multiple Newton’s method was imposed at the beginning of the iterations to accelerate the convergence as much as possible. The impact of the multiple Newton’s method is that it is useful when there is a clear smoothness around the zero of the nonlinear operator equation. It helps arrive at the convergence region much faster than simple root finders.

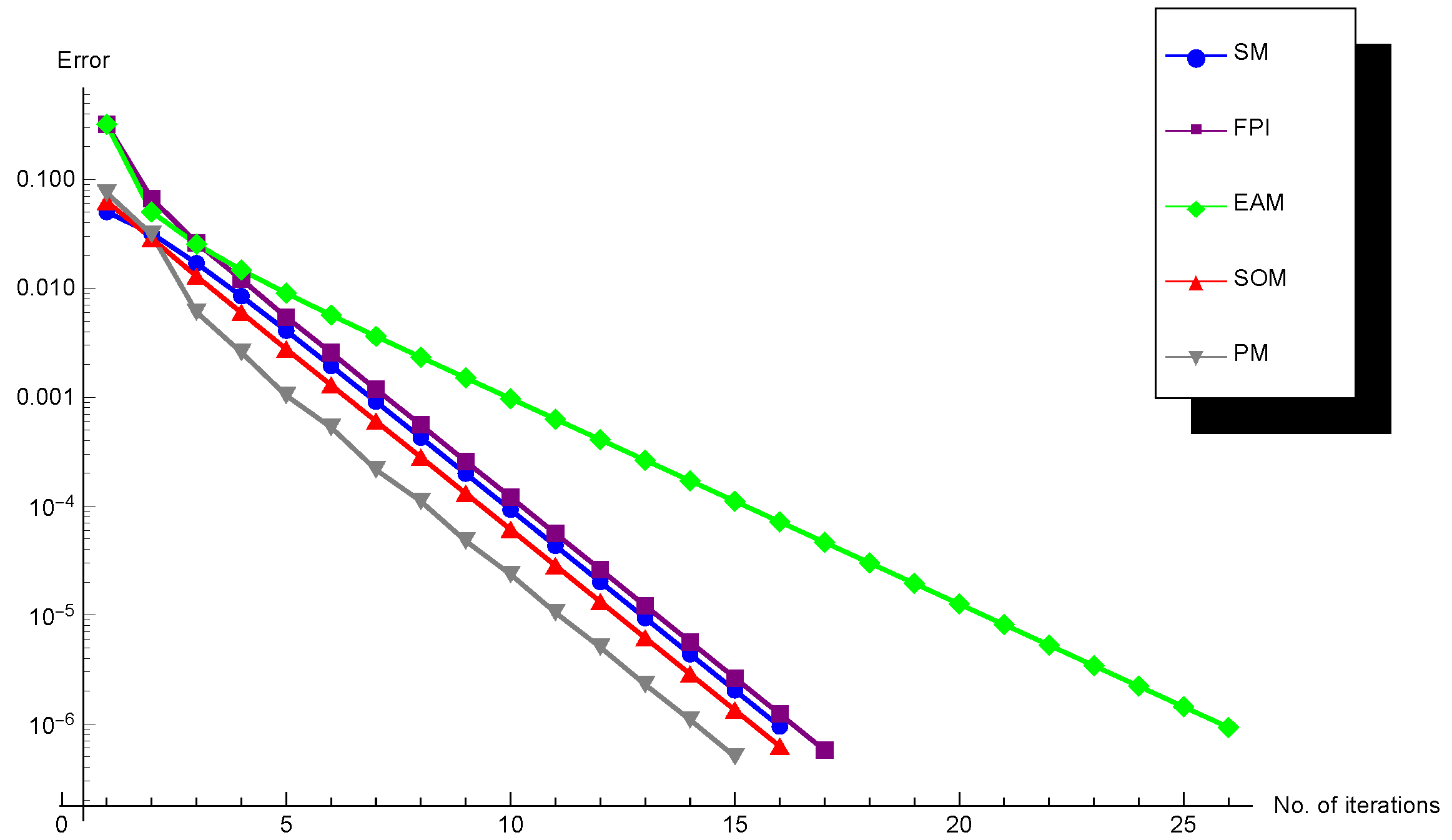

Example 1.

This example tests the effectiveness of PM on a real matrix A defined as follows:

to find the minimal solution of (1) which is given by

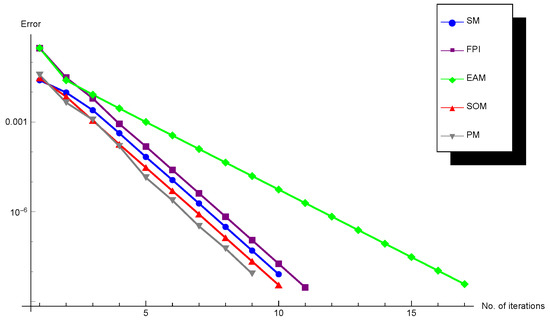

The results are given in Figure 1 using .

Figure 1.

Convergence history for Example 1.

Note that PM converges to , therefore we employed other methods to compute the maximal solution of the dual problem in our written implementations to have fair comparisons.

Numerical evidence of Example 1 reveals that PM converges much faster than existing solvers for the same purpose. Our method requires fewer numbers of iterations to reach the stopping criterion. In fact, under mild conditions, the error analysis, convergence and stability of the scheme were observed based on the expected norm of the error.

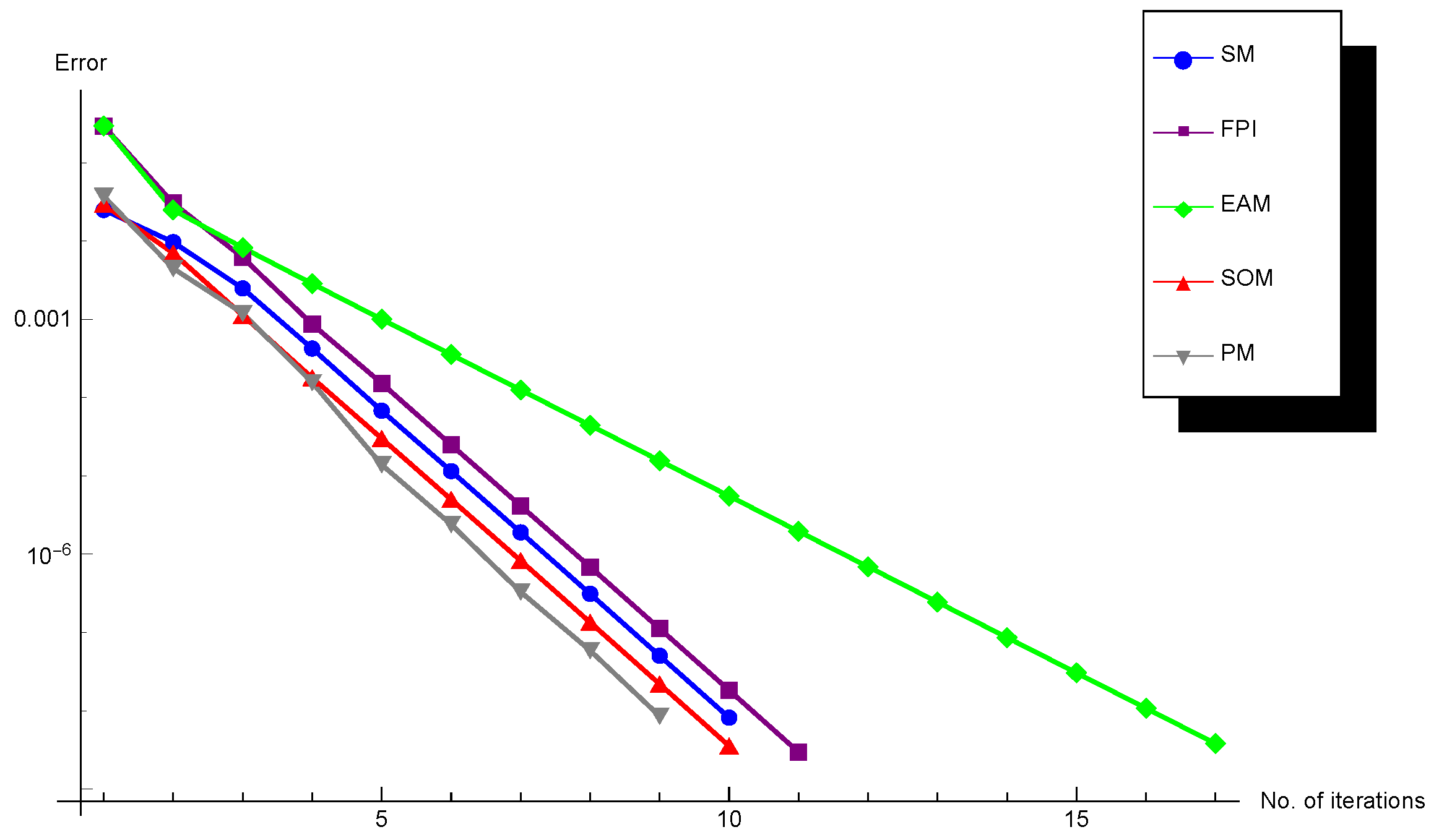

Example 2.

Using , we compare different iteration schemes to find the minimal solution of (1) when A is a complex matrix as follows:

and the solution is:

The numerical evidence is given in Figure 2. It too reveals that the proposed method is efficient in solving NME (1). Note that the CPU time for all methods is low, though PM converges in less than a second for the given examples and performs faster than the other solvers.

Figure 2.

Convergence history for Example 2.

4. Concluding Remarks

We have studied the minimal HPD solution of (1). The proposed solver needs the calculation of one matrix inversion at the initial stage of the process and then it is an inversion-free scheme. In this paper, a novel iterative scheme was developed. Several computational tests were given and found in agreement with the theoretical findings. Lastly, based on the computational evidence, we can conclude that the novel scheme is useful and effective in computing the numerical solutions of a broad range of NMEs.

Funding

The Deanship of Scientific Research (DSR), King Abdulaziz University, Jeddah, under Grant No. (FP-163-43).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No special data were used in this study.

Acknowledgments

The Deanship of Scientific Research (DSR) at King Abdulaziz University, Jeddah, Saudi Arabia has funded this project, under grant no. FP-163-43.

Conflicts of Interest

The author declares that he has no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- El-Sayed, S.M. An algorithm for computing positive definite solutions of the nonlinear matrix equation X + A*X−1A = I. Int. J. Comput. Math. 2003, 80, 1527–1534. [Google Scholar] [CrossRef]

- Engwerda, J.C.; Ran, C.M.A.; Rijkeboer, A.L. Necessary and sufficient conditions for the existence of a positive definite solution of the matrix equation X + A*X−1A = Q. Linear Algebra Its Appl. 1993, 186, 255–275. [Google Scholar] [CrossRef]

- Ivanov, I.G.; Hasanov, V.I.; Minchev, B.V. On matrix equations X ± A*X−2A = I. Linear Algebra Its Appl. 2001, 326, 27–44. [Google Scholar] [CrossRef]

- Huang, N.; Ma, C.; Tang, J. The inversion-free iterative methods for a system of nonlinear matrix equations. Int. J. Comput. Math. 2016, 93, 1470–1483. [Google Scholar] [CrossRef]

- Shil, S.; Nashine, H.K. Latest inversion-free iterative scheme for solving a pair of nonlinear matrix equations. J. Math. 2021, 2021, 2667885. [Google Scholar] [CrossRef]

- Bougerol, P. Kalman filtering with random coefficients and contractions. SIAM J. Control Optim. 1993, 31, 942–959. [Google Scholar] [CrossRef]

- Soheili, A.R.; Toutounian, F.; Soleymani, F. A fast convergent numerical method for matrix sign function with application in SDEs. J. Comput. Appl. Math. 2015, 282, 167–178. [Google Scholar] [CrossRef]

- Tian, Y.; Xia, C. On the low-degree solution of the Sylvester matrix polynomial equation. J. Math. 2021, 2021, 4612177. [Google Scholar] [CrossRef]

- Guo, C.H.; Lancaster, P. Iterative solution of two matrix equations. Math. Comput. 1999, 68, 1589–1603. [Google Scholar] [CrossRef]

- Liu, A.; Chen, G. On the Hermitian positive definite solutions of nonlinear matrix equation Xs + A*X−t1A + B*X−t2B = Q. Math. Prob. Eng. 2011, 2011, 163585. [Google Scholar] [CrossRef]

- El-Sayed, S.M.; Ran, A.C.M. On an iteration method for solving a class of nonlinear matrix equations. SIAM J. Matrix Anal. Appl. 2002, 23, 632–645. [Google Scholar] [CrossRef]

- Li, J. Solutions and improved perturbation analysis for the matrix equation X + A*X−pA = Q. Abst. Appl. Anal. 2013, 2013, 575964. [Google Scholar]

- Engwerda, J.C. On the existence of a positive definite solution of the matrix equation X + A*X−1A = I. Linear Algebra Its Appl. 1993, 194, 91–108. [Google Scholar] [CrossRef]

- Monsalve, M.; Raydan, M. A new inversion-free method for a rational matrix equation. Linear Algebra Its Appl. 2010, 433, 64–71. [Google Scholar] [CrossRef][Green Version]

- Zhang, L. An improved inversion-free method for solving the matrix equation X + A*X−αA = Q. J. Comput. Appl. Math. 2013, 253, 200–203. [Google Scholar] [CrossRef]

- El-Sayed, S.M.; Al-Dbiban, A.M. A new inversion free iteration for solving the equation X + A*X−1A = Q. J. Comput. Appl. Math. 2005, 181, 148–156. [Google Scholar] [CrossRef]

- Zhan, X. Computing the extremal positive definite solutions of a matrix equation. SIAM J. Sci. Comput. 1996, 17, 1167–1174. [Google Scholar] [CrossRef]

- Soleymani, F.; Sharifi, M.; Vanani, S.K.; Haghani, F.K.; Kiliçman, A. An inversion-free method for finding positive definite solution of a rational matrix equation. Sci. World J. 2014, 2014, 560931. [Google Scholar] [CrossRef] [PubMed]

- Soleymani, F.; Sharifi, M.; Shateyi, S.; Haghani, F.K. An algorithm for computing geometric mean of two Hermitian positive definite matrices via matrix sign. Abst. Appl. Anal. 2014, 2014, 978629. [Google Scholar] [CrossRef]

- Trott, M. The Mathematica Guide-Book for Numerics; Springer: New York, NY, USA, 2006. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).