Abstract

Transparent and accurate reporting is essential to evaluate the validity and applicability of risk prediction models. Our aim was to evaluate the reporting quality of studies developing and validating risk prediction models for melanoma according to the TRIPOD (Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis) checklist. We included studies that were identified by a recent systematic review and updated the literature search to ensure that our TRIPOD rating included all relevant studies. Six reviewers assessed compliance with all 37 TRIPOD components for each study using the published “TRIPOD Adherence Assessment Form”. We further examined whether reporting quality changed over time. Altogether, 42 studies were assessed, including 35 studies reporting the development of a prediction model and seven studies reporting both development and validation. The median adherence to TRIPOD was 57% (range 29% to 78%). The study components least likely to be fully reported were those related to model specification, title and abstract. Although reporting quality has slightly increased over the past 35 years, there is still much room for improvement. Adherence to reporting guidelines such as TRIPOD must become a matter of course in the publication of study results to achieve the level of reporting quality necessary to foster the use of prediction models in practice.

1. Introduction

Guidelines are a ubiquitous tool in all areas of healthcare, providing evidence-based guidance on how to act appropriately according to current knowledge. The cornerstone of healthcare guidelines, which allows for the synthesis of empirical evidence from the scientific literature on a specific topic, is the proper publication of studies that address the topic in question. For a study to be evaluated appropriately during research synthesis and included in the body of evidence that informs a guideline, two prerequisites must be met: first, the publication has to describe all relevant aspects of the study design, conduct, and analysis in the necessary detail; second, the results have to be presented comprehensively. A specific subtype of guidelines for researchers has therefore emerged, namely reporting guidelines, which aim to improve the quality of reporting of scientific studies in the medical literature by specifying which items should be included in a publication.
In 1994, the first version of the CONSORT (CONsolidated Standards Of Reporting Trials) statement, a reporting guideline for randomized parallel group clinical trials, was published [1]. It triggered a lasting development in which reporting guidelines were created for more and more types and areas of medical research. Among the best known are STROBE (STrengthening the Reporting of OBservational studies in Epidemiology) for observational studies in epidemiology [2], PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) for systematic reviews and meta-analyses [3], STARD (STAndards for Reporting of Diagnostic accuracy) for diagnostic accuracy studies [4], SPIRIT (Standard Protocol Items: Recommendations for Interventional Trials) for clinical trial protocols [5] and COREQ (COnsolidated criteria for REporting Qualitative research) for qualitative research [6].

In 2015, the TRIPOD (Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis) statement was published, a reporting guideline for studies describing diagnostic and prognostic prediction models [7]. TRIPOD consists of 22 items that are considered essential for informative reporting of studies developing and/or validating multivariable prediction models. Transparent reporting of these items enables other researchers to assess the generalizability and risk of bias of the published models and to replicate them. To enhance objectivity and ensure consistent measurement of reporting quality, a TRIPOD adherence assessment form was developed in 2019 [8]. It provides guidance for extracting the relevant information and calculating summary scores that quantify the degree of adherence to TRIPOD.

One clinical domain in which a large number of studies reporting prediction models have been published during the last 35 years is cutaneous melanoma. As melanoma is an aggressive malignancy that tends to metastasize beyond its primary site, detection at an early stage is essential for successful treatment [9]. The importance of early diagnosis combined with the rising incidence of melanoma over the last decades [10] has fueled the demand for melanoma risk prediction. Our objective was to investigate reporting quality and compliance with TRIPOD in this specific segment of prediction studies.

2. Materials and Methods

2.1. Study Selection and Eligibility Criteria

In our TRIPOD rating, we included all studies developing and validating models for predicting the individual risk of occurrence of cutaneous melanoma. As the basis for the set of studies to be assessed, we used a recent systematic review on melanoma prediction modeling [11], which updated two earlier systematic reviews on the topic [12,13]. One eligibility criterion of that systematic review was that included studies provide either absolute risks or risk scores, or report mutually adjusted relative risks for primary cutaneous melanoma. Furthermore, the studies had to use a multivariable prediction model and a well-defined statistical method for model development. More details on the search strategy are given in [11].

As the search period of the systematic review ended on 31 January 2020, we updated the literature search to ensure that our TRIPOD rating included all relevant studies. Specifically, forward snowballing was applied to all three systematic reviews on the topic [11,12,13] for the period since the most recent review, that is, February 2020 to August 2021. Forward snowballing is an efficient search strategy that screens the publications citing specific reference papers and thus searches forward in time from those papers [14]. In addition, an electronic literature search in PubMed using the same search string as in [11] was conducted for the same period.

2.2. TRIPOD Rating

The reporting quality of each study was assessed by six independent reviewers (I.K., K.D., M.V.H., S.M., T.S., O.G.) using the “TRIPOD Adherence Assessment Form” (www.tripod-statement.org/ (accessed on 21 December 2021)) to ensure uniform rating and scoring. The reviewer panel was multidisciplinary and consisted of reviewers with methodological, clinical and public health backgrounds and different levels of experience. For data collection, a web-based input tool was created using the software SoSci Survey [15]. All six reviewers rated all 42 studies. Disagreements between the reviewers regarding the assessment of individual items were resolved in ten consensus meetings. In case of persistent disagreement, two independent referees (A.B.P. and W.U.) made the final decision.

In total, the TRIPOD checklist contains 22 items related to different parts of the publication: title and abstract (items 1 and 2), introduction (item 3), methods (items 4 through 12), results (items 13 through 17), discussion (items 18 through 20) and other information (items 21 and 22). Ten of the 22 items are split into subitems, resulting in a total of 37 TRIPOD components (the 12 items without subitems plus 25 subitems), see Table 1. Each component contains one or more elements, which are mostly scored as either “yes” or “no”. For some elements, the response option “referenced” is also available if the requested information is contained in another publication and the authors cite that publication. For specific elements, a further response option is “not applicable” if the element is deemed not applicable to a particular situation.

Table 1.

Components of the Transparent Reporting of a multivariable prediction model for Individual Prognosis or Diagnosis (TRIPOD) statement adapted from www.tripod-statement.org/ (accessed on 21 December 2021). Items are numbered and subitems are marked with letters.

2.3. Calculation of TRIPOD Adherence Scores

We used the published scoring algorithms as provided in [8] to quantify adherence to TRIPOD. If all elements of a particular TRIPOD component are adequately addressed, meaning they are answered with either “yes” or “referenced”, adherence to this component is scored as “1”; otherwise, non-adherence is scored as “0”. An overall TRIPOD adherence score was calculated as the number of components adhered to divided by the total number of applicable TRIPOD components for the corresponding study report. Item 21, which concerns the availability of supplementary material for the publication, is not taken into account in the score calculation [8]. Furthermore, the number of applicable components depends on the study type, as some components do not apply to all study types. The TRIPOD statement covers three types of studies: (i) those that solely report model development, (ii) those that combine development and external validation of a prediction model, and (iii) those that solely describe external validation of an already published model [7]. For studies solely describing model development, sub-/items 10c, 10e, 12, 13c, 17 and 19a do not apply. Therefore, the maximum number of applicable TRIPOD components for the score calculation is 30 for development studies. The subitems not rated for studies solely describing external validation are 10a, 10b, 14a, 14b, 15a and 15b, which again results in a total of 30 applicable TRIPOD components. For studies describing both development and external validation, all 36 scored sub-/items apply. Additionally, five sub-/items (5c, 10a, 11, 14b, 17) can be rated as “not applicable” by the reviewers, which reduces the denominator of the TRIPOD adherence score in these cases.
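The scoring rules above can be expressed compactly in code. The following is a minimal sketch in Python, not the published assessment form or the authors' software. It assumes a hypothetical data layout in which each component ID maps to the list of its element ratings (“yes”, “no”, “referenced”, “not applicable”), and it assumes that a component whose elements are all rated “not applicable” drops out of the denominator. The component IDs are the 37 TRIPOD components, and the applicability sets are taken from the text; the example ratings are invented for illustration.

```python
# Sketch of the TRIPOD overall adherence score (hypothetical data layout).

# The 37 TRIPOD components: 12 items without subitems plus 25 subitems.
COMPONENTS = (
    "1", "2", "3a", "3b", "4a", "4b", "5a", "5b", "5c", "6a", "6b",
    "7a", "7b", "8", "9", "10a", "10b", "10c", "10d", "10e", "11", "12",
    "13a", "13b", "13c", "14a", "14b", "15a", "15b", "16", "17", "18",
    "19a", "19b", "20", "21", "22",
)

ALWAYS_EXCLUDED = {"21"}  # item 21 (supplementary material) is never scored

# Components that do not apply to a given study type (as listed in the text).
NOT_SCORED = {
    "development": {"10c", "10e", "12", "13c", "17", "19a"},
    "validation": {"10a", "10b", "14a", "14b", "15a", "15b"},
    "development+validation": set(),
}

ADHERENT = {"yes", "referenced"}


def max_components(study_type):
    """Maximum number of scorable components for a study type."""
    excluded = ALWAYS_EXCLUDED | NOT_SCORED[study_type]
    return sum(1 for c in COMPONENTS if c not in excluded)


def component_score(elements):
    """1 if every applicable element is 'yes' or 'referenced', else 0.

    Returns None if all elements are 'not applicable' (assumption: the
    component then drops out of the denominator).
    """
    applicable = [e for e in elements if e != "not applicable"]
    if not applicable:
        return None
    return int(all(e in ADHERENT for e in applicable))


def overall_adherence(ratings, study_type):
    """Adhered components divided by applicable components for one study."""
    excluded = ALWAYS_EXCLUDED | NOT_SCORED[study_type]
    scores = [component_score(elements)
              for component, elements in ratings.items()
              if component not in excluded]
    applicable = [s for s in scores if s is not None]
    return sum(applicable) / len(applicable)


# Hypothetical ratings for a single development study (illustration only).
ratings = {
    "1": ["yes", "yes", "no", "yes"],  # one title element missing -> 0
    "3a": ["yes"],
    "4a": ["referenced"],
    "5c": ["not applicable"],          # drops out of the denominator
    "10c": ["yes"],                    # not scored for development studies
    "21": ["yes"],                     # never scored
}
print(overall_adherence(ratings, "development"))  # 2 of 3 scored components adhered
```

The all-or-nothing rule in `component_score` is what makes long items such as the abstract (item 2) hard to satisfy: a single “no” among otherwise complete elements sets the whole component to 0.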

The overall adherence per TRIPOD sub-/item is defined as the number of studies that adhered to a specific item divided by the number of studies in which the item is applicable.
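The per-item adherence is a simple ratio of two counts. The sketch below is a hypothetical Python helper, not part of the assessment form; it assumes each study has already been reduced to a mapping from component ID to 1 (adhered), 0 (not adhered) or None (not applicable). It returns the pair (n, N) used in Table 2 together with the resulting share, and no share when the component applies to no study.

```python
def item_adherence(studies, component):
    """Adherence to `component` among the studies in which it applies.

    Each study is a dict mapping component ID -> 1 (adhered), 0 (not adhered)
    or None (not applicable); missing keys are treated as not applicable.
    Returns (n, N, share), where share is None if N == 0.
    """
    applicable = [study[component] for study in studies
                  if study.get(component) is not None]
    n, N = sum(applicable), len(applicable)
    return n, N, (n / N if N else None)


# Hypothetical ratings for three studies (illustration only).
studies = [
    {"3b": 1, "17": None, "5c": None},
    {"3b": 1, "17": 0, "5c": None},
    {"3b": 0, "17": 0, "5c": None},
]
print(item_adherence(studies, "3b"))  # n = 2, N = 3
print(item_adherence(studies, "17"))  # n = 0, N = 2
print(item_adherence(studies, "5c"))  # not applicable anywhere: no share
```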

2.4. Statistical Analysis

All adherence scores are reported as percentages. Descriptive statistical analyses were performed to describe the score distribution per study and per sub-/item. We further examined whether the reporting quality of risk prediction studies changed over time. For this purpose, we used beta regression, as the adherence scores are restricted to the interval (0,1) [16]. Beta regression models the outcome via a mean and a precision parameter. In a first step, we modeled the relationship between completeness of reporting as the dependent variable and year of publication as the only independent variable. In a second step, we added journal subject category (categorical) and journal impact factor (continuous) as independent variables in the mean submodel of the multivariable regression model. To illustrate the impact of the subject categories, we calculated model-adjusted mean TRIPOD overall adherence scores at the mean pattern of the other variables in the model. Journal subject categories and 2020 impact factors were extracted from the 2021 Journal Citation Reports® [17]. For journals with multiple categories, we selected the subject category listed first (e.g., for the journal “Melanoma Research”, which has been assigned to the categories “Oncology”, “Dermatology” and “Medicine, Research & Experimental”, we used the category “Oncology”).
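The mean and precision parametrization of beta regression can be written out directly. The sketch below uses only the Python standard library and is not the `betareg` implementation; all function names are hypothetical. It shows how a mean mu and precision phi map to the beta shape parameters a = mu·phi and b = (1 − mu)·phi, the implied variance mu(1 − mu)/(1 + phi), the log-likelihood under a logit link for the mean with a single constant precision (a simplifying assumption; a precision submodel would replace the constant phi), and a model-adjusted mean at a chosen covariate pattern. In practice, the coefficients would be estimated by maximizing this log-likelihood numerically, which is what `betareg` does by maximum likelihood.

```python
import math


def inv_logit(eta):
    """Inverse logit link: maps a linear predictor to a mean in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-eta))


def beta_shapes(mu, phi):
    """Mean mu in (0, 1) and precision phi > 0 -> shape parameters (a, b)."""
    return mu * phi, (1.0 - mu) * phi


def beta_variance(mu, phi):
    """Var(y) = mu (1 - mu) / (1 + phi); a larger phi means less spread."""
    return mu * (1.0 - mu) / (1.0 + phi)


def beta_logpdf(y, mu, phi):
    """Log-density of Beta(mu * phi, (1 - mu) * phi) at y in (0, 1)."""
    a, b = beta_shapes(mu, phi)
    # lgamma(phi) equals lgamma(a + b), the normalizing constant's numerator.
    return (math.lgamma(phi) - math.lgamma(a) - math.lgamma(b)
            + (a - 1.0) * math.log(y) + (b - 1.0) * math.log1p(-y))


def log_likelihood(y, X, coef, log_phi):
    """Beta regression log-likelihood: logit mean link, constant precision.

    X holds one row of covariates per study (e.g. publication year, impact
    factor, category dummies); coef[0] is the intercept.
    """
    phi = math.exp(log_phi)
    total = 0.0
    for yi, xi in zip(y, X):
        eta = coef[0] + sum(c * x for c, x in zip(coef[1:], xi))
        total += beta_logpdf(yi, inv_logit(eta), phi)
    return total


def adjusted_mean(coef, covariate_pattern):
    """Model-adjusted mean score at one covariate pattern, e.g. the mean
    values of the other covariates with one category dummy switched on."""
    eta = coef[0] + sum(c * x for c, x in zip(coef[1:], covariate_pattern))
    return inv_logit(eta)
```

Because the mean enters through the logit link, a model-adjusted mean is always obtained by back-transforming the linear predictor at the chosen covariate pattern, not by averaging the raw scores.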

Group comparisons, e.g., between studies reporting solely model development and studies reporting both model development and external validation, were performed using the exact version of the non-parametric Mann–Whitney U test at a significance level of 0.05. All statistical analyses were performed using the R software [18]. Beta regression models were fitted using the R package “betareg” [19].
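One way to obtain an exact p-value for the Mann–Whitney U test, also when adherence scores are tied, is to enumerate the permutation distribution of the rank sum of one group (the Wilcoxon form of the statistic, which differs from U only by a constant shift). The sketch below is an illustrative Python version, not the R code used in the analysis; the function names are hypothetical. It counts group assignments by dynamic programming instead of listing all of them, and defines the two-sided p-value as the probability of a rank sum at least as far from its null mean as the observed one.

```python
from math import comb


def midranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # mean of positions i+1 .. j+1
        i = j + 1
    return ranks


def exact_rank_sum_test(x, y):
    """Two-sided exact p-value of the Wilcoxon-Mann-Whitney test.

    Uses the permutation distribution of the rank sum of `y`, conditional
    on the observed ties.
    """
    pooled = list(x) + list(y)
    N, n = len(pooled), len(y)
    # Midranks are multiples of 0.5, so doubling them gives integers.
    doubled = [round(2 * r) for r in midranks(pooled)]
    max_sum = sum(doubled)
    # counts[k][s]: number of k-element subsets whose doubled ranks sum to s
    counts = [[0] * (max_sum + 1) for _ in range(n + 1)]
    counts[0][0] = 1
    for r in doubled:
        for k in range(n, 0, -1):  # descending k: each observation used once
            prev, row = counts[k - 1], counts[k]
            for s in range(r, max_sum + 1):
                row[s] += prev[s - r]
    observed = sum(doubled[len(x):])
    expected = n * (N + 1)  # twice the null mean n (N + 1) / 2
    threshold = abs(observed - expected)
    extreme = sum(c for s, c in enumerate(counts[n])
                  if abs(s - expected) >= threshold)
    return extreme / comb(N, n)
```

For the comparison reported in the results, `x` would hold the scores of the development studies and `y` those of the development and validation studies.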

3. Results

3.1. Description of Studies

We included 42 studies in our TRIPOD rating. Forty studies [20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59] were adopted from the systematic review on risk prediction models for melanoma published in 2020, whereas the remaining two studies [60,61] were identified by the updated literature search. Details on the information extracted from the studies are summarized in Table A1. Of the 42 studies, 35 (83%) reported the development of a melanoma risk prediction model, while seven (17%) described both the development and external validation of a risk prediction model. None of the studies belonged to the third study type covered by TRIPOD, namely studies that exclusively describe the external validation of an already published model. The studies were published between 1988 and 2021, with a marked increase in the number of studies in the last decade of this interval. The study designs were mainly case–control (n = 30) and cohort (n = 10); two studies used published material from meta-analyses to determine their risk estimates. The median journal impact factor was 12.5 (range 0–26). The journals in which the studies were published belong to the following subject categories: “Oncology” (N = 15), “Dermatology” (N = 12), “Medicine, General & Internal” (N = 4), “Multidisciplinary Sciences” (N = 4), “Public, Environmental & Occupational Health” (N = 2) and “Other” (N = 3). The category “Other” includes one study [57] published in a conference proceeding, one study [41] published in a journal not included in the Web of Science Core Collection and one study [53] published in a journal of the category “Biochemistry & Molecular Biology”.

3.2. Reporting Completeness per Study in TRIPOD

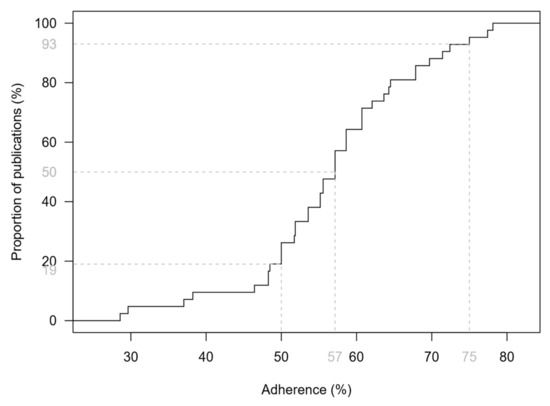

Figure 1 shows the empirical distribution function of the TRIPOD adherence score. The median adherence to TRIPOD was 57%, with a range from 29% to 78%. No study satisfied all requirements of transparent reporting. In total, 34 studies (81%) fulfilled at least 50% of the TRIPOD components, whereas only 3 studies (7%) reached an adherence of 75% or more. Reporting was more complete for studies combining model development and external validation (median: 64%; range: 38–78%) than for development studies (median: 56%; range: 29–75%). However, this difference was not statistically significant (p = 0.11). Studies that claimed to report according to the TRIPOD statement (N = 3) [44,47,55] achieved a significantly higher adherence than studies that did not (75% vs. 56%, p < 0.05). The lowest adherence to TRIPOD (29% and 30%) was observed in two studies [40,58] that were not published as regular original articles.

Figure 1.

Empirical distribution function of the TRIPOD adherence score based on 42 studies addressing melanoma risk prediction models and their validation. Dashed lines indicate the median, as well as the proportions of studies that achieved a score of less than 50% and less than 75%.
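The summary quantities read off Figure 1 (median, and the shares of studies below or above the 50% and 75% thresholds) follow directly from the empirical distribution function. The sketch below uses only the Python standard library; the helper names and the example scores are hypothetical and are not the study data.

```python
from bisect import bisect_left, bisect_right
from statistics import median


def ecdf(scores):
    """Empirical distribution function F(t) = share of scores <= t."""
    data = sorted(scores)
    return lambda t: bisect_right(data, t) / len(data)


def share_at_least(scores, threshold):
    """Share of studies with an adherence score >= threshold."""
    data = sorted(scores)
    return (len(data) - bisect_left(data, threshold)) / len(data)


# Hypothetical adherence scores (illustration only, not the study data).
scores = [0.30, 0.45, 0.50, 0.57, 0.60, 0.78]
F = ecdf(scores)
print(median(scores))                # midpoint of 0.50 and 0.57
print(F(0.5))                        # 3 of 6 scores are <= 0.5
print(share_at_least(scores, 0.5))   # 4 of 6 scores are >= 0.5
print(share_at_least(scores, 0.75))  # 1 of 6 scores is >= 0.75
```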

3.3. Reporting of Individual TRIPOD Components

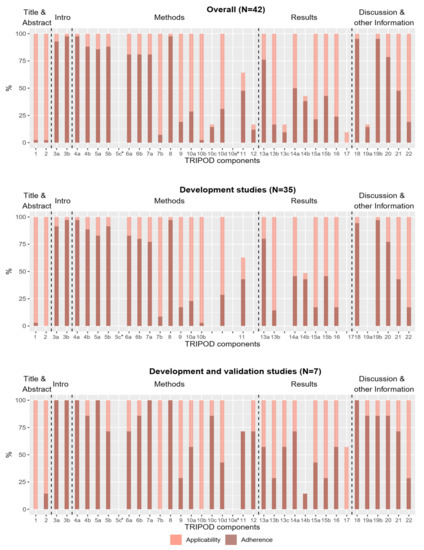

Completeness of reporting of individual TRIPOD components over all 42 studies and per study type is shown in Figure 2. In total, 15 of the 37 sub-/items were fulfilled in less than half of the studies for which they were applicable. Two subitems (5c and 10e) were rated as “not applicable” in all studies for which they were rated (subitem 10e has to be rated only for studies reporting development and validation). Consequently, no adherence score could be determined for these subitems. No sub-/item was reported in all studies, but several sub-/items (3a, 3b, 4a, 5a, 7a and 18) were reported in all seven development and validation studies.

Figure 2.

Applicability and reporting of TRIPOD components in the total group of studies (N = 42), in development studies (N = 35) and in development and validation studies (N = 7). Light bars represent the percentage of studies for which the components were applicable. Dark bars represent the percentage of studies in which the TRIPOD component is fulfilled. * The subitems were rated as “not applicable” in all studies. (Subitem 10e does not apply to development studies, so in this case “all studies” means all development and external validation studies (N = 7)).

The most and least frequently reported sub-/items are shown in Table 2. Six sub-/items were reported in 90% or more of the studies in which they were applicable. The most frequently reported sub-/items, each with a relative frequency of 98%, were related to model objectives (subitem 3b), study design and source of data (subitem 4a), and sample size (item 8). The least reported sub-/items, with relative frequencies below 10%, were those related to blinding methods for predictor assessment (subitem 7b), title and abstract (items 1 and 2), model development procedure (subitem 10b) and model updating (item 17). Item 17 was not reported by any study, but it should be noted that it was applicable in only four studies.

Table 2.

TRIPOD components reported in 90% or more and in less than 10% of the studies. Completeness of reporting of the sub-/items is given as a percentage. Additionally, the number of studies that adhered to the specific sub-/item (n) and the number of studies in which the sub-/item is applicable (N) are provided.

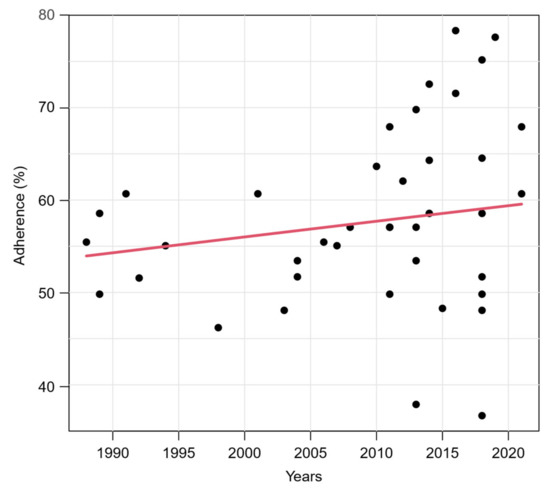

3.4. Temporal Analysis and Multivariable Regression

To avoid bias, only original articles were retained for the following analyses; the two studies not published as regular original articles [40,58] were excluded, as their low adherence is due to their publication type. The relationship between adherence to TRIPOD and the publication year of the study is illustrated in Figure 3. A slight increase in the score over the years can be seen. However, the association was not significant (p = 0.078) in a simple beta regression model containing publication year as the only explanatory variable. The variance of the TRIPOD score was considerably larger among studies published after 2010 than among studies published before. Supplementary Figure S1 shows the relationship between TRIPOD adherence and publication year in different subgroups. The temporal relationship is stronger when only studies in the journal subject categories “Dermatology” and/or “Oncology” are considered in the model.

Figure 3.

Relationship between TRIPOD adherence and publication year of studies. Red line represents predicted mean curve from a beta regression model based on 40 studies (two studies were excluded, see text).

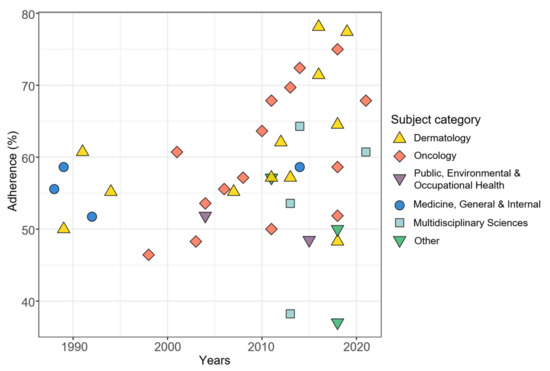

When the impact factor and the journal subject category were added as independent variables, multivariable beta regression revealed a significant influence of the publication year on the adherence score (p < 0.001) and on the variability of the score (p < 0.001). In addition, the journal subject category narrowly missed statistical significance (p = 0.065). The categories “Dermatology” and “Oncology” were associated with higher adherence scores than the categories “Multidisciplinary Sciences”, “Public, Environmental & Occupational Health” and the combined category “Other”. This is shown by the model-adjusted mean TRIPOD overall adherence scores calculated at the mean pattern of the other variables in Table 3 and further illustrated in Figure 4, in which the journal subject categories have been added to the scatter plot of Figure 3. The subject category “Dermatology” has a model-adjusted mean score of 62%, whereas the subject category “Other” has only 48%. The journal impact factor had a negligible influence on reporting quality (p = 0.72). The relationship between adherence to TRIPOD and the impact factor is illustrated in Supplementary Figure S2.

Table 3.

Model adjusted mean TRIPOD overall adherence scores for each journal subject category using the mean pattern of other variables.

Figure 4.

Relationship between TRIPOD adherence and publication year of studies with journal subject categories added. N = 40 (two studies were excluded, see text).

4. Discussion

Our results show substantial deficits in the reporting of risk prediction models for cutaneous melanoma. In the median study, more than 40% of the components deemed essential for good reporting of prediction models according to the TRIPOD statement were insufficiently reported. Yet transparent and accurate reporting is essential to interpret the results, appraise study validity, replicate the model, and evaluate its applicability.

4.1. Interpretation of Results

Of note, none of the 42 studies included in the analysis satisfied all required TRIPOD components. The maximum adherence score, achieved by the study of Vuong et al. [43], was 78%. Elements of the introduction related to the background and objectives of the study (subitems 3a and 3b) were adequately reported by almost all studies (93% and 98%). The description of the source of data and key study dates (subitems 4a and 4b) was also reported at a high level overall (98% and 88%), as were the discussion of limitations and the interpretation of results (item 18 and subitem 19b, 95% each). At the same time, several sub-/items were missing in a large proportion of the studies. These include the items related to title and abstract (items 1 and 2), which at first glance seem easy to fulfill but comprise a long list of precisely defined elements that all have to be met. For item 1, the title must contain (1) the terms “development” or “validation” (or synonyms), depending on the study type, (2) terms such as “risk prediction/risk model/risk score” (or synonyms), (3) the target population, and (4) the outcome that is predicted. In fact, only one of the 42 studies [54] fulfilled all four elements. Other sparsely reported components, especially in development studies, were sub-/items related to missing data (item 9) and statistical analysis methods (10a–e), as well as details of model specification and model performance (subitems 15a and 15b and item 16). Instructions on how to use the model, for example, were found in less than half of the studies (43%). Reporting in these domains needs to be improved; otherwise, re-validating and recalibrating the developed models in future research and using them in clinical settings will be nearly impossible.

Our results indicate that the mean reporting quality has slightly improved over the past 35 years. However, the variance has also increased sharply since 2010. While some recent studies achieved relatively high scores above 70% [43,44,47,49,54], a few recent studies scored well below average (37% and 38%) [52,57]. Although it seems conceivable that studies published in methodologically oriented journals place greater emphasis on complete reporting of their methods and results, this could not be confirmed. However, we identified only two studies published in journals of the subject category “Public, Environmental & Occupational Health”, our surrogate for methodologically oriented journals, which limits the assessment of this hypothesis. On the positive side, studies stating that they reported according to TRIPOD had significantly better adherence than the remaining studies. Of the 12 studies that appeared after the publication of the TRIPOD statement and could have used the guideline for their reporting, only three explicitly stated compliance with it. This shows that the use of established reporting guidelines like TRIPOD needs to increase among researchers conducting risk prediction studies. Ultimately, all publishing researchers should adopt existing reporting guidelines as a matter of course. Scientific journals play a key role in achieving this goal. If journals required a completed TRIPOD checklist with each submitted manuscript describing the development and/or validation of prediction models, this would increase awareness of TRIPOD and have a positive impact on the quality of reporting. The experience gained in implementing reporting guidelines such as CONSORT [1] and PRISMA [3] can serve as a model [62,63].

4.2. Comparison with Other Studies

Even though this analysis relates to a specific segment of prediction studies, our finding of inadequate reporting in the field of melanoma risk prediction is comparable to those of other studies [64,65,66,67]. Heus et al. [65] included 146 publications across 37 clinical domains and reported a median TRIPOD adherence of 44%, which is even lower than the median of the studies included in our analysis (57%). Other publications [64,67] found poor reporting quality of prognostic models in oral health and for COVID-19. These results indicate that incomplete and non-transparent reporting is a cross-disciplinary problem present in most areas of medicine.

One previous study [66] already focused on melanoma prediction studies and claimed to assess TRIPOD adherence in this area. However, owing to a less sensitive search strategy combined with broad eligibility criteria, this study is not comparable to ours. The set of studies assessed by Yiang et al. [66] comprised a mixed bag of investigations devoted to predicting (i) occurrence of melanoma in population settings, (ii) progression of melanoma in clinical settings, (iii) survival of melanoma patients, (iv) lymph node positivity of melanoma patients, and (v) correct identification of melanoma in diagnostic settings. Given this broader definition of melanoma prediction, Yiang et al. [66] would be expected to find many more publications for their TRIPOD assessment than we did, but in fact they identified only 27 studies in their literature search. Altogether, 34 of the 42 studies in our evaluation were not included in their set, while 19 of their studies were intentionally not covered by us, as they did not address prediction models for melanoma occurrence. Interestingly, although the scope of the two investigations of TRIPOD adherence in melanoma prediction studies differed, the results were similar: the median TRIPOD adherence scores were nearly identical (61% vs. 57%).

Only one study reported a relatively good TRIPOD compliance (median 74%) related to prediction models for hepatocellular carcinoma [68]. Nevertheless, the study also highlighted several sub-/items that were poorly reported like item 2 (abstract), subitem 10d (specification of measures used to assess model performance in methods section) and subitem 13b (description of the characteristics of the participants).

The item-specific frequency of reporting varied among studies. Items that were very poorly reported in our analysis were very well presented in other studies and vice versa. Nevertheless, the basic statement that reporting of studies about prediction models needs to be improved was the same across all clinical domains.

4.3. Evaluation of TRIPOD Feasibility

During the rating process, we identified some limitations of the TRIPOD assessment form. The main feasibility problem was that TRIPOD is primarily designed for longitudinal studies, whereas the studies in our setting were mostly case–control studies (N = 30, 71%), which is typical for studies developing prediction models for the occurrence of specific cancer entities. Some elements of the assessment form are not applicable to case–control studies in their current form. This includes the question about the time point of outcome assessment (element of subitem 6a). Since the outcome of case–control studies is already known at the time of recruitment, this element should be rated as “not applicable”, but the assessment form does not provide this response option for it. The same applies to the questions about reporting of follow-up time (element of subitem 13a) and the number of participants with missing data for the outcome (element of subitem 13b), for which “not applicable” is again missing as a response option. Furthermore, reporting of participant flow (element of subitem 13a) is very uncommon for case–control studies as opposed to cohort studies. The TRIPOD assessment form in its current form is thus not optimal for all study designs.

Furthermore, TRIPOD appears less suitable for publications that do not follow the typical structure of introduction, methods, results and discussion. The two studies that were not published as regular original articles [40,58] achieved the lowest adherence scores of the 42 included studies (29% and 30%); because of their different structure, neither could report on all components required by TRIPOD. Extensions of the TRIPOD statement can improve the rating of different publication and study types. In 2020, the authors of TRIPOD published an additional checklist of 12 items that applies explicitly to journal and conference abstracts [69]. In addition, an extension for clustered data, e.g., datasets comprising multiple centers or countries, will appear in Spring 2022 [70]. For the reasons given in the previous paragraph, an extension or modification of TRIPOD for case–control studies would also be useful.

Another aspect is that the TRIPOD score may misrepresent gaps in reporting because all components are weighted equally in its calculation, although reporting or not reporting has different consequences depending on the sub-/item. Regarding item 1, omitting terms such as “development” or “validation” from the title affects how easily the study is found in literature searches. If a study does not report all predictors it used (element of subitem 7a), it is no longer possible to apply, replicate or validate the model. Not reporting the source of funding and the role of the funders (item 22) reduces the adherence score in the same way as the two previous examples, but its impact is completely different. In addition, some TRIPOD components consist of many individual elements, whereas others comprise only one or two, so they take different amounts of time and effort to fulfill. For example, item 2 (abstract) includes 12 elements. If a single element, e.g., the specification of a calibration measure, is not fulfilled, the whole item is considered not fulfilled even if all other eleven elements are present in the abstract. In contrast, only one element (description of study design/data source) is needed to obtain the adherence point for subitem 4a. Moreover, the assessment does not take into account whether the missing element could have been reported at all. When a study does not evaluate the calibration of its model, a calibration measure evidently cannot be reported in the abstract; yet, according to the assessment form, the element cannot be rated “not applicable”. In this way, a lack of calibration is penalized twice, in item 2 (abstract) and in item 16 (performance measures).

Although TRIPOD explicitly does not aim to measure the quality of studies [7], some items are nevertheless related to methodological quality, as the example of the calibration measure already shows. Another example is whether internal validation was reported (element of subitem 10b). If this element is not reported, it must be rated “no”, regardless of whether internal validation was actually performed and could thus have been reported. To make the score more independent of the methodological quality of the studies, it would be necessary to allow “not applicable” ratings especially for items related to the analysis and the results. In fact, it is impossible to separate reporting quality completely from methodological quality, as this would require identical conditions: all studies would need to have adopted the same methodological concept and the same statistical analyses, and only then would TRIPOD purely evaluate which study reports more carefully and more completely. Therefore, although TRIPOD is intended to reflect only reporting quality, it is also an implicit indicator of study quality.

If journals required a TRIPOD checklist for all studies describing development and/or validation of prediction models, indicating which items were fulfilled, not applicable or not relevant to the study type, and published the checklist together with the study report after review, it would be easier for other researchers or users of the prediction model to evaluate and interpret the results of the study.

4.4. Limitations

The set of studies assessed in our rating comprised the scientific literature on risk prediction models for melanoma identified by three systematic reviews on the topic and by our literature update. However, owing to the eligibility criteria of the systematic reviews, only studies describing model development alone or development combined with external validation of risk prediction models for melanoma were included in our assessment. Publications describing exclusively the external validation of preexisting models were therefore not part of our investigation. Consequently, our results do not allow conclusions about the reporting quality of external validation studies of risk prediction models for melanoma.

Although we felt that defining the overall TRIPOD adherence score as a simple sum score with equally weighted components had its shortcomings and did not adequately reflect reporting quality, we refrained from using a modified score version in which more important components receive a higher weight than less important components. This decision was made to allow for better comparability of our assessment results with other TRIPOD assessments.
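
To illustrate this trade-off, the hypothetical Python sketch below contrasts the equally weighted score with a weighted variant of the kind we decided against. The four-item subset and the weight of 3 for subitem 7a are arbitrary assumptions for illustration only, not a proposed weighting scheme.

```python
from typing import Dict


def weighted_adherence(fulfilled: Dict[str, bool], weights: Dict[str, float]) -> float:
    """Share of the total weight achieved by the fulfilled items."""
    total = sum(weights[item] for item in fulfilled)
    achieved = sum(weights[item] for item, ok in fulfilled.items() if ok)
    return achieved / total


# Two hypothetical studies that each miss exactly one of four items.
study_a = {"1": True, "4b": True, "7a": False, "22": True}  # predictors incompletely reported
study_b = {"1": True, "4b": True, "7a": True, "22": False}  # funding not reported

equal = {item: 1.0 for item in study_a}  # equal weights, as in the standard score
hypothetical = {**equal, "7a": 3.0}      # illustrative weights only

for name, study in (("A", study_a), ("B", study_b)):
    print(name, weighted_adherence(study, equal),
          round(weighted_adherence(study, hypothetical), 3))
```

With equal weights both studies score 0.75, although omitting predictors prevents application of the model whereas omitting funding information does not; the weighted variant separates the two studies, but any particular choice of weights would make the results harder to compare with other TRIPOD assessments.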

Some parts of the TRIPOD assessment form do not lend themselves to a clearly objective rating but involve an element of subjective judgment. Different raters may thus come to different conclusions on how to rate the corresponding TRIPOD sub-/item. Therefore, it cannot be ruled out that another group of raters would have obtained a different distribution of TRIPOD adherence scores in our set of studies. We tried to minimize this rater dependence by holding consensus meetings to resolve discrepant ratings and by involving two independent referees in case of persisting disagreement.

5. Conclusions

In conclusion, the current level of reporting of risk prediction models for cutaneous melanoma is insufficient, especially with regard to details of the title, abstract, blinding, model-building procedures and model performance. Even though completeness of reporting has increased slightly over the years, there is still much room for improvement. One point that needs to be addressed in order to improve the reporting quality in future research is the more frequent use of the TRIPOD guideline, which is currently rather rare. Otherwise, potentially valuable risk prediction models may be less useful in clinical practice simply because of inadequacies in their reporting.

Supplementary Materials

The following are available online at https://www.mdpi.com/article/10.3390/healthcare10020238/s1, Figure S1: Relationship between TRIPOD adherence and publication year based on studies from different subject categories, Figure S2: Relationship between TRIPOD adherence and impact factor.

Author Contributions

Conceptualization, O.G.; methodology, I.K., K.D., M.V.H., S.M., T.S. and O.G.; software, I.K.; validation, A.B.P., W.U. and O.G.; formal analysis, I.K.; investigation, I.K., K.D., M.V.H., S.M., T.S. and O.G.; resources, O.G.; data curation, A.B.P. and I.K.; writing—original draft preparation, I.K.; writing—review and editing, K.D., M.V.H., S.M., A.B.P., T.S., W.U. and O.G.; visualization, I.K.; supervision, O.G.; project administration, I.K.; funding acquisition, O.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on reasonable request from the first author (I.K.).

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

Basic characteristics of studies reporting risk prediction models for melanoma. Studies are ordered according to study type and year of publication. Within studies of the same study type and year of publication, the studies are sorted by the last name of the first author. (N = 42 studies). Study type: D, development only; D + V, development and external validation.

| Authors | Study Type | Publication Year | Journal | Journal Subject Category | Impact Factor 2020 | Study Design |
|---|---|---|---|---|---|---|
| English and Armstrong [24] | D | 1988 | British Medical Journal | Medicine, Research and Experimental | 39.890 | Case–control |
| Garbe et al. [29] | D | 1989 | International Journal of Dermatology | Dermatology | 2.736 | Case–control |
| MacKie et al. [34] | D | 1989 | Lancet | Medicine, Research and Experimental | 79.323 | Case–control |
| Augustsson et al. [59] | D | 1991 | Acta Dermato-Venereologica | Dermatology | 4.437 | Case–control |
| Marrett et al. [36] | D | 1992 | Canadian Medical Association Journal | Medicine, Research and Experimental | 8.262 | Case–control |
| Garbe et al. [28] | D | 1994 | Journal of Investigative Dermatology | Dermatology | 8.551 | Case–control |
| Barbini et al. [21] | D | 1998 | Melanoma Research | Oncology | 3.599 | Case–control |
| Landi et al. [33] | D | 2001 | British Journal of Cancer | Oncology | 7.640 | Case–control |
| Harbauer et al. [32] | D | 2003 | Melanoma Research | Oncology | 3.599 | Case–control |
| Dwyer et al. [23] | D | 2004 | American Journal of Epidemiology | Public, Environmental and Occupational Health | 4.897 | Case–control |
| Fargnoli et al. [25] | D | 2004 | Melanoma Research | Oncology | 3.599 | Case–control |
| Cho et al. [22] | D | 2005 | Journal of Clinical Oncology | Oncology | 44.544 | Cohort |
| Whiteman and Green [40] | D | 2005 | Cancer Epidemiology, Biomarkers and Prevention | Public, Environmental and Occupational Health | 4.254 | Published case–control studies |
| Fears et al. [26] | D | 2006 | Journal of Clinical Oncology | Oncology | 44.544 | Case–control |
| Goldberg et al. [30] | D | 2007 | Journal of the American Academy of Dermatology | Dermatology | 11.527 | Cohort |
| Mar et al. [35] | D | 2011 | Australasian Journal of Dermatology | Dermatology | 2.875 | Published meta-analysis and registry data |
| Nielsen et al. [37] | D | 2011 | International Journal of Cancer | Oncology | 7.396 | Cohort |
| Quéreux et al. [42] | D | 2011 | European Journal of Cancer Prevention | Oncology | 2.497 | Case–control |
| Williams et al. [41] | D | 2011 | Journal of Clinical and Experimental Dermatology Research | NA | NA | Case–control |
| Guther et al. [31] | D | 2012 | Journal of the European Academy of Dermatology and Venereology | Dermatology | 6.166 | Cohort |
| Smith et al. [58] | D | 2012 | Journal of Clinical Oncology | Oncology | 44.544 | Case–control |
| Bakos et al. [20] | D | 2013 | Anais Brasileiros de Dermatologia | Dermatology | 1.896 | Case–control |
| Stefanaki et al. [39] | D | 2013 | PLOS ONE | Multidisciplinary Sciences | 3.240 | Case–control |
| Nikolic et al. [45] | D | 2014 | Vojnosanitetski pregled | Medicine, Research and Experimental | 0.168 | Case–control |
| Penn et al. [38] | D | 2014 | PLOS ONE | Multidisciplinary Sciences | 3.240 | Case–control |
| Sneyd et al. [54] | D | 2014 | BMC Cancer | Oncology | 4.430 | Case–control |
| Kypreou et al. [49] | D | 2016 | Journal of Investigative Dermatology | Dermatology | 8.551 | Case–control |
| Cho et al. [51] | D | 2018 | Journal of the American Academy of Dermatology | Dermatology | 11.527 | Cohort |
| Gu et al. [53] | D | 2018 | Human Molecular Genetics | Biochemistry and Molecular Biology | 6.150 | Case–control |
| Hübner et al. [48] | D | 2018 | European Journal of Cancer Prevention | Oncology | 2.497 | Cohort study based on data from SCREEN project |
| Olsen et al. [47] | D | 2018 | Journal of the National Cancer Institute | Oncology | 13.506 | Cohort study |
| Richter and Khoshgoftaar [57] | D | 2018 | Proceedings of ACM-BCB’18 | NA | NA | Cohort study based on EHR data |
| Tagliabue et al. [50] | D | 2018 | Cancer Management and Research | Oncology | 3.989 | Case–control |
| Bakshi et al. [61] | D | 2021 | Journal of the National Cancer Institute | Oncology | 13.506 | Cohort |
| Fontanillas et al. [60] | D | 2021 | Nature Communications | Multidisciplinary Sciences | 14.919 | Cohort |
| Fortes et al. [27] | D + V | 2010 | European Journal of Cancer Prevention | Oncology | 2.497 | Case–control |
| Cust et al. [56] | D + V | 2013 | BMC Cancer | Oncology | 4.430 | Case–control |
| Fang et al. [52] | D + V | 2013 | PLOS ONE | Multidisciplinary Sciences | 3.240 | Multiple case–control studies |
| Davies et al. [46] | D + V | 2015 | Cancer Epidemiology, Biomarkers and Prevention | Public, Environmental and Occupational Health | 4.254 | Multiple case–control |
| Vuong et al. [43] | D + V | 2016 | JAMA Dermatology | Dermatology | 10.282 | Case–control |
| Cust et al. [55] | D + V | 2018 | Journal of Investigative Dermatology | Dermatology | 8.551 | Case–control |
| Vuong et al. [44] | D + V | 2019 | British Journal of Dermatology | Dermatology | 9.302 | Case–control |

References

1. Begg, C.; Cho, M.; Eastwood, S.; Horton, R.; Moher, D.; Olkin, I.; Pitkin, R.; Rennie, D.; Schulz, K.F.; Simel, D.; et al. Improving the quality of reporting of randomized controlled trials. The CONSORT statement. JAMA 1996, 276, 637–639.

2. Vandenbroucke, J.P.; von Elm, E.; Altman, D.G.; Gøtzsche, P.C.; Mulrow, C.D.; Pocock, S.J.; Poole, C.; Schlesselman, J.J.; Egger, M.; STROBE Initiative. Strengthening the Reporting of Observational Studies in Epidemiology (STROBE): Explanation and Elaboration. PLoS Med. 2007, 4, e297.

3. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. J. Clin. Epidemiol. 2021, 134, 178–189.

4. Bossuyt, P.M.; Reitsma, J.B.; Bruns, D.E.; Gatsonis, C.A.; Glasziou, P.; Irwig, L.; Lijmer, J.G.; Moher, D.; Rennie, D.; De Vet, H.C.W.; et al. STARD 2015: An updated list of essential items for reporting diagnostic accuracy studies. BMJ 2015, 351, h5527.

5. Chan, A.-W.; Tetzlaff, J.M.; Altman, D.G.; Laupacis, A.; Gøtzsche, P.C.; Krleža-Jerić, K.; Hróbjartsson, A.; Mann, H.; Dickersin, K.; Berlin, J.A.; et al. SPIRIT 2013 Statement: Defining Standard Protocol Items for Clinical Trials. Ann. Intern. Med. 2013, 158, 200–207.

6. Tong, A.; Sainsbury, P.; Craig, J. Consolidated criteria for reporting qualitative research (COREQ): A 32-item checklist for interviews and focus groups. Int. J. Qual. Health Care 2007, 19, 349–357.

7. Collins, G.S.; Reitsma, J.B.; Altman, D.G.; Moons, K.G.M. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): The TRIPOD statement. BMJ 2014, 350, g7594.

8. Heus, P.; Damen, J.A.A.G.; Pajouheshnia, R.; Scholten, R.J.P.M.; Reitsma, J.B.; Collins, G.; Altman, D.G.; Moons, K.G.M.; Hooft, L. Uniformity in measuring adherence to reporting guidelines: The example of TRIPOD for assessing completeness of reporting of prediction model studies. BMJ Open 2019, 9, e025611.

9. Matthews, N.H.; Li, W.Q.; Qureshi, A.A.; Weinstock, M.A.; Cho, E. Epidemiology of Melanoma. In Cutaneous Melanoma: Etiology and Therapy; Ward, W.H., Farma, J.M., Eds.; Codon Publications: Brisbane, Australia, 2017.

10. Garbe, C.; Keim, U.; Gandini, S.; Amaral, T.; Katalinic, A.; Hollezcek, B.; Martus, P.; Flatz, L.; Leiter, U.; Whiteman, D. Epidemiology of cutaneous melanoma and keratinocyte cancer in white populations 1943–2036. Eur. J. Cancer 2021, 152, 18–25.

11. Kaiser, I.; Pfahlberg, A.B.; Uter, W.; Heppt, M.V.; Veierød, M.B.; Gefeller, O. Risk Prediction Models for Melanoma: A Systematic Review on the Heterogeneity in Model Development and Validation. Int. J. Environ. Res. Public Health 2020, 17, 7919.

12. Usher-Smith, J.A.; Emery, J.; Kassianos, A.; Walter, F. Risk Prediction Models for Melanoma: A Systematic Review. Cancer Epidemiol. Biomark. Prev. 2014, 23, 1450–1463.

13. Vuong, K.; McGeechan, K.; Armstrong, B.K.; Cust, A. Risk Prediction Models for Incident Primary Cutaneous Melanoma: A systematic review. JAMA Dermatol. 2014, 150, 434–444.

14. Wohlin, C. Guidelines for snowballing in systematic literature studies and a replication in software engineering. In Proceedings of the 18th International Conference on Evaluation and Assessment in Software Engineering, London, UK, 13–14 May 2014; pp. 1–10.

15. Leiner, D.J. SoSci Survey, Version 3.2.21; Computer Software; SoSci Survey GmbH: München, Germany, 2019. Available online: https://www.soscisurvey.de (accessed on 14 October 2021).

16. Ferrari, S.; Cribari-Neto, F. Beta Regression for Modelling Rates and Proportions. J. Appl. Stat. 2004, 31, 799–815.

17. Clarivate Analytics. 2021 Journal Citation Reports®. Available online: https://jcr.clarivate.com/jcr/home (accessed on 15 December 2021).

18. R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2021.

19. Cribari-Neto, F.; Zeileis, A. Beta Regression in R. J. Stat. Softw. 2010, 34, 1–24.

20. Bakos, L.; Mastroeni, S.; Bonamigo, R.R.; Melchi, F.; Pasquini, P.; Fortes, C. A melanoma risk score in a Brazilian population. An. Bras. Dermatol. 2013, 88, 226–232.

21. Barbini, P.; Cevenini, G.; Rubegni, P.; Massai, M.; Flori, M.; Carli, P.; Andreassi, L. Instrumental measurement of skin colour and skin type as risk factors for melanoma: A statistical classification procedure. Melanoma Res. 1998, 8, 439–447.

22. Cho, E.; Rosner, B.A.; Feskanich, D.; Colditz, G.A. Risk Factors and Individual Probabilities of Melanoma for Whites. J. Clin. Oncol. 2005, 23, 2669–2675.

23. Dwyer, T.; Stankovich, J.; Blizzard, L.; FitzGerald, L.; Dickinson, J.; Reilly, A.; Williamson, J.; Ashbolt, R.; Berwick, M.; Sale, M.M. Does the Addition of Information on Genotype Improve Prediction of the Risk of Melanoma and Nonmelanoma Skin Cancer beyond That Obtained from Skin Phenotype? Am. J. Epidemiol. 2004, 159, 826–833.

24. English, D.R.; Armstrong, B.K. Identifying people at high risk of cutaneous malignant melanoma: Results from a case-control study in Western Australia. BMJ 1988, 296, 1285–1288.

25. Fargnoli, M.C.; Piccolo, D.; Altobelli, E.; Formicone, F.; Chimenti, S.; Peris, K. Constitutional and environmental risk factors for cutaneous melanoma in an Italian population. A case–control study. Melanoma Res. 2004, 14, 151–157.

26. Fears, T.R.; Guerry, D.; Pfeiffer, R.M.; Sagebiel, R.W.; Elder, D.E.; Halpern, A.; Holly, E.A.; Hartge, P.; Tucker, M.A. Identifying Individuals at High Risk of Melanoma: A Practical Predictor of Absolute Risk. J. Clin. Oncol. 2006, 24, 3590–3596.

27. Fortes, C.; Mastroeni, S.; Bakos, L.; Antonelli, G.; Alessandroni, L.; Pilla, M.A.; Alotto, M.; Zappalà, A.; Manoorannparampill, T.; Bonamigo, R.; et al. Identifying individuals at high risk of melanoma: A simple tool. Eur. J. Cancer Prev. 2010, 19, 393–400.

28. Garbe, C.; Krüger, S.; Orfanos, C.E.; Büttner, P.; Weiß, J.; Soyer, H.P.; Stocker, U.; Roser, M.; Weckbecker, J.; Panizzon, R.; et al. Risk Factors for Developing Cutaneous Melanoma and Criteria for Identifying Persons at Risk: Multicenter Case-Control Study of the Central Malignant Melanoma Registry of the German Dermatological Society. J. Investig. Dermatol. 1994, 102, 695–699.

29. Garbe, C.; Krüger, S.; Stadler, R.; Guggenmoos-Holzmann, I.; Orfanos, C.E. Markers and Relative Risk in a German Population for Developing Malignant Melanoma. Int. J. Dermatol. 1989, 28, 517–523.

30. Goldberg, M.S.; Doucette, J.T.; Lim, H.W.; Spencer, J.; Carucci, J.; Rigel, D.S. Risk factors for presumptive melanoma in skin cancer screening: American Academy of Dermatology National Melanoma/Skin Cancer Screening Program experience 2001–2005. J. Am. Acad. Dermatol. 2007, 57, 60–66.

31. Guther, S.; Ramrath, K.; Dyall-Smith, D.; Landthaler, M.; Stolz, W. Development of a targeted risk-group model for skin cancer screening based on more than 100 000 total skin examinations. J. Eur. Acad. Dermatol. Venereol. 2011, 26, 86–94.

32. Harbauer, A.; Binder, M.; Pehamberger, H.; Wolff, K.; Kittler, H. Validity of an unsupervised self-administered questionnaire for self-assessment of melanoma risk. Melanoma Res. 2003, 13, 537–542.

33. Landi, M.T.; Baccarelli, A.; Calista, D.; Pesatori, A.; Fears, T.R.; Tucker, M.A.; Landi, G. Combined risk factors for melanoma in a Mediterranean population. Br. J. Cancer 2001, 85, 1304–1310.

34. Mackie, R.; Freudenberger, T.; Aitchison, T. Personal risk-factor chart for cutaneous melanoma. Lancet 1989, 334, 487–490.

35. Mar, V.; Wolfe, R.; Kelly, J.W. Predicting melanoma risk for the Australian population. Australas. J. Dermatol. 2011, 52, 109–116.

36. Marrett, L.D.; King, W.D.; Walter, S.D.; From, L. Use of host factors to identify people at high risk for cutaneous malignant melanoma. Can. Med. Assoc. J. 1992, 147, 445–453.

37. Nielsen, K.; Måsbäck, A.; Olsson, H.; Ingvar, C. A prospective, population-based study of 40,000 women regarding host factors, UV exposure and sunbed use in relation to risk and anatomic site of cutaneous melanoma. Int. J. Cancer 2011, 131, 706–715.

38. Penn, L.A.; Qian, M.; Zhang, E.; Ng, E.; Shao, Y.; Berwick, M.; Lazovich, D.; Polsky, D. Development of a Melanoma Risk Prediction Model Incorporating MC1R Genotype and Indoor Tanning Exposure: Impact of Mole Phenotype on Model Performance. PLoS ONE 2014, 9, e101507.

39. Stefanaki, I.; Panagiotou, O.A.; Kodela, E.; Gogas, H.; Kypreou, K.P.; Chatzinasiou, F.; Nikolaou, V.; Plaka, M.; Kalfa, I.; Antoniou, C.; et al. Replication and Predictive Value of SNPs Associated with Melanoma and Pigmentation Traits in a Southern European Case-Control Study. PLoS ONE 2013, 8, e55712.

40. Whiteman, D. A Risk Prediction Tool for Melanoma? Cancer Epidemiol. Biomark. Prev. 2005, 14, 761–763.

41. Williams, L.H.; Shors, A.R.; Barlow, W.E.; Solomon, C.; White, E. Identifying Persons at Highest Risk of Melanoma Using Self-Assessed Risk Factors. J. Clin. Exp. Dermatol. Res. 2011, 2, 1000129.

42. Quéreux, G.; Moyse, D.; Lequeux, Y.; Jumbou, O.; Brocard, A.; Antonioli, D.; Dréno, B.; Nguyen, J.-M. Development of an individual score for melanoma risk. Eur. J. Cancer Prev. 2011, 20, 217–224.

43. Vuong, K.; Armstrong, B.K.; Weiderpass, E.; Lund, E.; Adami, H.-O.; Veierød, M.B.; Barrett, J.H.; Davies, J.R.; Bishop, T.; Whiteman, D.; et al. Development and External Validation of a Melanoma Risk Prediction Model Based on Self-assessed Risk Factors. JAMA Dermatol. 2016, 152, 889–896.

44. Vuong, K.; Armstrong, B.; Drummond, M.; Hopper, J.; Barrett, J.; Davies, J.; Bishop, T.; Newton-Bishop, J.; Aitken, J.; Giles, G.; et al. Development and external validation study of a melanoma risk prediction model incorporating clinically assessed naevi and solar lentigines. Br. J. Dermatol. 2019, 182, 1262–1268.

45. Nikolic, J.; Loncar-Turukalo, T.; Sladojevic, S.; Marinkovic, M.; Janjic, Z. Melanoma risk prediction models. Vojn. Pregl. 2014, 71, 757–766.

46. Davies, J.R.; Chang, Y.-M.; Bishop, T.; Armstrong, B.K.; Bataille, V.; Bergman, W.; Berwick, M.; Bracci, P.M.; Elwood, J.M.; Ernstoff, M.S.; et al. Development and Validation of a Melanoma Risk Score Based on Pooled Data from 16 Case–Control Studies. Cancer Epidemiol. Biomark. Prev. 2015, 24, 817–824.

47. Olsen, C.; Pandeya, N.; Thompson, B.; Dusingize, J.C.; Webb, P.M.; Green, A.C.; Neale, R.E.; Whiteman, D.C.; QSkin Study. Risk Stratification for Melanoma: Models Derived and Validated in a Purpose-Designed Prospective Cohort. JNCI: J. Natl. Cancer Inst. 2018, 110, 1075–1083.

48. Hübner, J.; Waldmann, A.; Eisemann, N.; Noftz, M.; Geller, A.C.; Weinstock, M.A.; Volkmer, B.; Greinert, R.; Breitbart, E.W.; Katalinic, A. Association between risk factors and detection of cutaneous melanoma in the setting of a population-based skin cancer screening. Eur. J. Cancer Prev. 2018, 27, 563–569.

49. Kypreou, K.P.; Stefanaki, I.; Antonopoulou, K.; Karagianni, F.; Ntritsos, G.; Zaras, A.; Nikolaou, V.; Kalfa, I.; Chasapi, V.; Polydorou, D.; et al. Prediction of Melanoma Risk in a Southern European Population Based on a Weighted Genetic Risk Score. J. Investig. Dermatol. 2016, 136, 690–695.

50. Tagliabue, E.; Gandini, S.; Bellocco, R.; Maisonneuve, P.; Newton-Bishop, J.; Polsky, D.; Lazovich, D.; A Kanetsky, P.; Ghiorzo, P.; A Gruis, N.; et al. MC1R variants as melanoma risk factors independent of at-risk phenotypic characteristics: A pooled analysis from the M-SKIP project. Cancer Manag. Res. 2018, 10, 1143–1154.

51. Cho, H.G.; Ransohoff, K.J.; Yang, L.; Hedlin, H.; Assimes, T.; Han, J.; Stefanick, M.; Tang, J.Y.; Sarin, K.Y. Melanoma risk prediction using a multilocus genetic risk score in the Women’s Health Initiative cohort. J. Am. Acad. Dermatol. 2018, 79, 36–41.

52. Fang, S.; Han, J.; Zhang, M.; Wang, L.-E.; Wei, Q.; Amos, C.I.; Lee, J.E. Joint Effect of Multiple Common SNPs Predicts Melanoma Susceptibility. PLoS ONE 2013, 8, e85642.

53. Gu, F.; Chen, T.-H.; Pfeiffer, R.M.; Fargnoli, M.C.; Calista, N.; Ghiorzo, P.; Peris, K.; Puig, S.; Menin, C.; De Nicolo, A.; et al. Combining common genetic variants and non-genetic risk factors to predict risk of cutaneous melanoma. Hum. Mol. Genet. 2018, 27, 4145–4156.

54. Sneyd, M.J.; Cameron, C.; Cox, B. Individual risk of cutaneous melanoma in New Zealand: Developing a clinical prediction aid. BMC Cancer 2014, 14, 359.

55. Cust, A.E.; Drummond, M.; Kanetsky, P.A.; Australian Melanoma Family Study Investigators; Leeds Case-Control Study Investigators; Goldstein, A.M.; Barrett, J.H.; MacGregor, S.; Law, M.H.; Iles, M.; et al. Assessing the Incremental Contribution of Common Genomic Variants to Melanoma Risk Prediction in Two Population-Based Studies. J. Investig. Dermatol. 2018, 138, 2617–2624.

56. Cust, A.E.; Goumas, C.; Vuong, K.; Davies, J.R.; Barrett, J.H.; Holland, E.A.; Schmid, H.; Agha-Hamilton, C.; Armstrong, B.K.; Kefford, R.F.; et al. MC1R genotype as a predictor of early-onset melanoma, compared with self-reported and physician-measured traditional risk factors: An Australian case-control-family study. BMC Cancer 2013, 13, 406.

57. Richter, A.; Khoshgoftaar, T. Melanoma Risk Prediction with Structured Electronic Health Records. In Proceedings of the ACM-BCB’18: 9th ACM International Conference on Bioinformatics, Computational Biology and Health Informatics, Washington, DC, USA, 29 August–1 September 2018.

58. Smith, L.A.; Qian, M.; Ng, E.; Shao, Y.; Berwick, M.; Lazovich, D.; Polsky, D. Development of a melanoma risk prediction model incorporating MC1R genotype and indoor tanning exposure. J. Clin. Oncol. 2012, 30, 8574.

59. Augustsson, A. Melanocytic naevi, melanoma and sun exposure. Acta Derm. Venereol. Suppl. (Stockh.) 1991, 166, 1–34.

60. Fontanillas, P.; Alipanahi, B.; Furlotte, N.A.; Johnson, M.; Wilson, C.H.; 23andMe Research Team; Pitts, S.J.; Gentleman, R.; Auton, A. Disease risk scores for skin cancers. Nat. Commun. 2021, 12, 1–13.

61. Bakshi, A.; Yan, M.; Riaz, M.; Polekhina, G.; Orchard, S.G.; Tiller, J.; Wolfe, R.; Joshi, A.; Cao, Y.; McInerney-Leo, A.M.; et al. Genomic Risk Score for Melanoma in a Prospective Study of Older Individuals. J. Natl. Cancer Inst. 2021, 113, 1379–1385.

62. Liu, H.; Zhou, X.; Yu, G.; Sun, X. The effects of the PRISMA statement to improve the conduct and reporting of systematic reviews and meta-analyses of nursing interventions for patients with heart failure. Int. J. Nurs. Pr. 2019, 25, e12729.

63. Ghimire, S.; Kyung, E.; Lee, H.; Kim, E. Oncology trial abstracts showed suboptimal improvement in reporting: A comparative before-and-after evaluation using CONSORT for Abstract guidelines. J. Clin. Epidemiol. 2014, 67, 658–666.

64. Du, M.; Haag, D.; Song, Y.; Lynch, J.; Mittinty, M. Examining Bias and Reporting in Oral Health Prediction Modeling Studies. J. Dent. Res. 2020, 99, 374–387.

65. Heus, P.; Damen, J.A.A.G.; Pajouheshnia, R.; Scholten, R.J.P.M.; Reitsma, J.B.; Collins, G.S.; Altman, D.G.; Moons, K.G.M.; Hooft, L. Poor reporting of multivariable prediction model studies: Towards a targeted implementation strategy of the TRIPOD statement. BMC Med. 2018, 16, 120.

66. Jiang, M.Y.; Dragnev, N.C.; Wong, S.L. Evaluating the quality of reporting of melanoma prediction models. Surgery 2020, 168, 173–177.

67. Yang, L.; Wang, Q.; Cui, T.; Huang, J.; Shi, N.; Jin, H. Reporting of coronavirus disease 2019 prognostic models: The transparent reporting of a multivariable prediction model for individual prognosis or diagnosis statement. Ann. Transl. Med. 2021, 9, 421.

68. Yang, L.; Wang, Q.; Cui, T.; Huang, J.; Jin, H. Reporting and Performance of Hepatocellular Carcinoma Risk Prediction Models: Based on TRIPOD Statement and Meta-Analysis. Can. J. Gastroenterol. Hepatol. 2021, 2021, 9996358.

69. Heus, P.; Reitsma, J.B.; Collins, G.; Damen, J.A.; Scholten, R.J.; Altman, D.G.; Moons, K.G.; Hooft, L. Transparent Reporting of Multivariable Prediction Models in Journal and Conference Abstracts: TRIPOD for Abstracts. Ann. Intern. Med. 2020, 173, 42–47.

70. TRIPOD. TRIPOD Clustered Data. Available online: https://www.tripod-statement.org/clustered/ (accessed on 16 December 2021).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).