Deep Learning on Ultrasound Images Visualizes the Femoral Nerve with Good Precision

Abstract

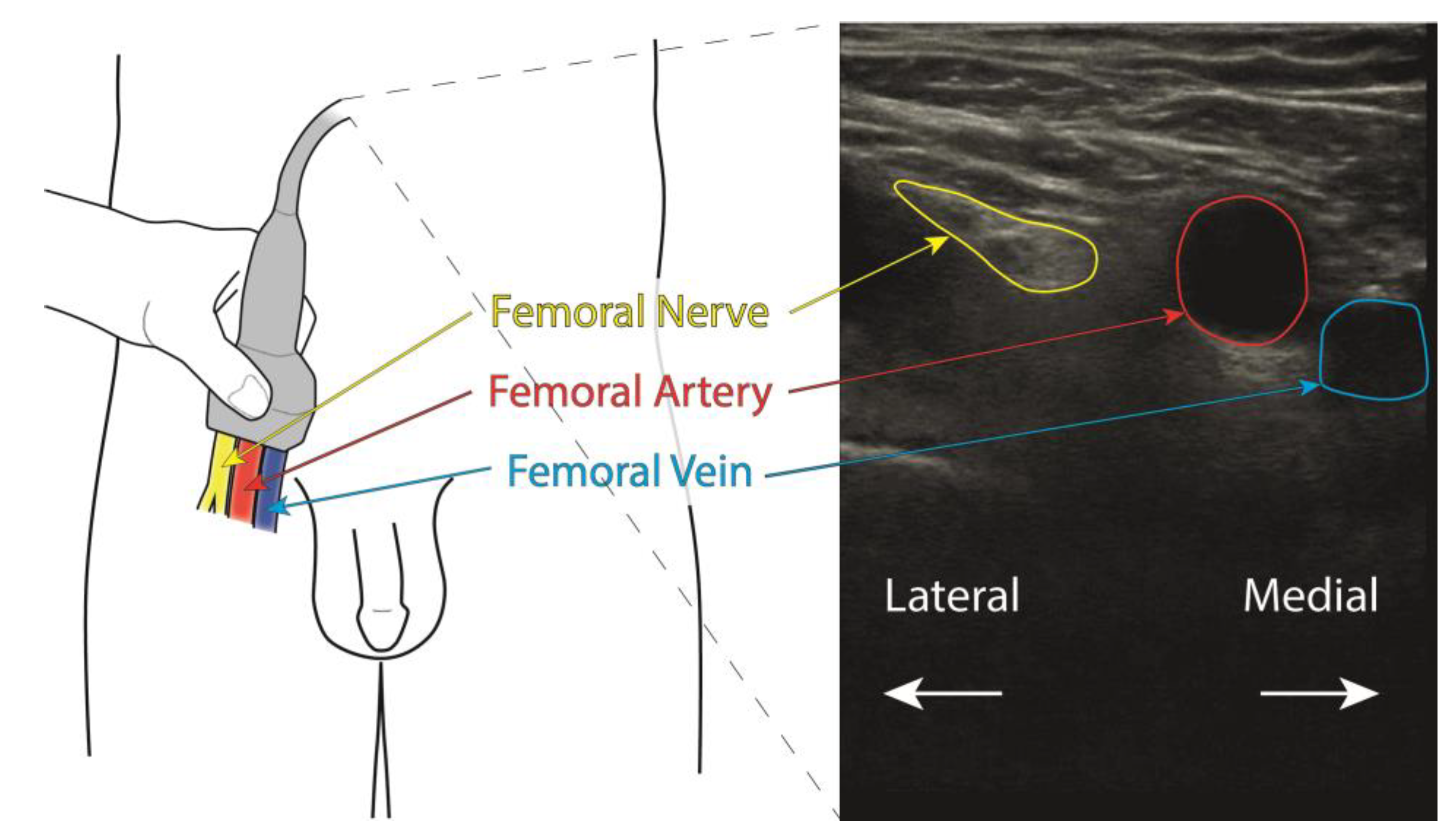

1. Introduction

1.1. Hip Fracture

1.2. Advantage of Regional Blockade

2. Materials and Methods

2.1. Setting

2.2. Ethics Approval and Consent to Participate

2.3. Design

2.4. Participants

2.5. Model Evaluation

3. Results

3.1. Participant Demographics

3.2. IoU Processing

4. Discussion

4.1. Study Design

4.2. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Patients | n = 48 | p-Value |
|---|---|---|
| Gender (men/women) | | |
| n (%) | 25 (52)/23 (48) | 0.083 c |
| Height (m; mean ± SD, min–max) | | |
| Overall | 1.72 ± 0.09, 1.58–1.92 | |
| Men | 1.78 ± 0.75, 1.66–1.92 | 0.001 t * |
| Women | 1.66 ± 0.44, 1.58–1.74 | |
| Weight (kg; mean ± SD, min–max) | | |
| Overall | 82 ± 13, 58–110 | |
| Men | 90 ± 10, 70–110 | 0.001 t * |
| Women | 74 ± 8, 58–88 | |
| Age (years; mean ± SD, min–max) | | |
| Overall | 66 ± 11, 46–88 | |
| Men | 65 ± 10, 51–88 | 0.434 t |
| Women | 67 ± 11, 46–87 | |
| BMI (kg/m²; mean ± SD, min–max) | | |
| Overall | 28.5 ± 2.2, 23.2–30.0 | |
| Men | 28.9 ± 1.8, 24.6–30.0 | 0.026 t * |
| Women | 26.8 ± 2.4, 23.2–29.8 | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Berggreen, J.; Johansson, A.; Jahr, J.; Möller, S.; Jansson, T. Deep Learning on Ultrasound Images Visualizes the Femoral Nerve with Good Precision. Healthcare 2023, 11, 184. https://doi.org/10.3390/healthcare11020184
