Abstract

Machine learning (ML) can enhance a dermatologist’s work, from diagnosis to personalized care. The development of ML algorithms in dermatology has recently been supported by advances in digital data processing (e.g., electronic medical records, image archives, omics), faster computing, and cheaper data storage. This article describes the fundamentals of ML-based implementations, as well as future limitations and concerns for the development of skin cancer detection and classification systems. We also explored five fields of dermatology that use deep learning applications, including (1) the classification of diseases from clinical photos, (2) the visual classification of cancer in dermatopathology, and (3) the measurement of skin diseases with smartphone applications and personal tracking systems. This analysis aims to give dermatologists a guide that demystifies the basics of ML and its applications and helps them correctly identify the associated challenges. This paper surveyed studies on skin cancer detection using deep learning to assess the features and advantages of different techniques. Moreover, it defines the basic requirements for creating a skin cancer detection application, which revolve around two main issues: full-image segmentation and tracking of the lesion on the skin using deep learning. Most of the techniques found in this survey address these two problems, and some of them also classify the type of cancer.

1. Introduction

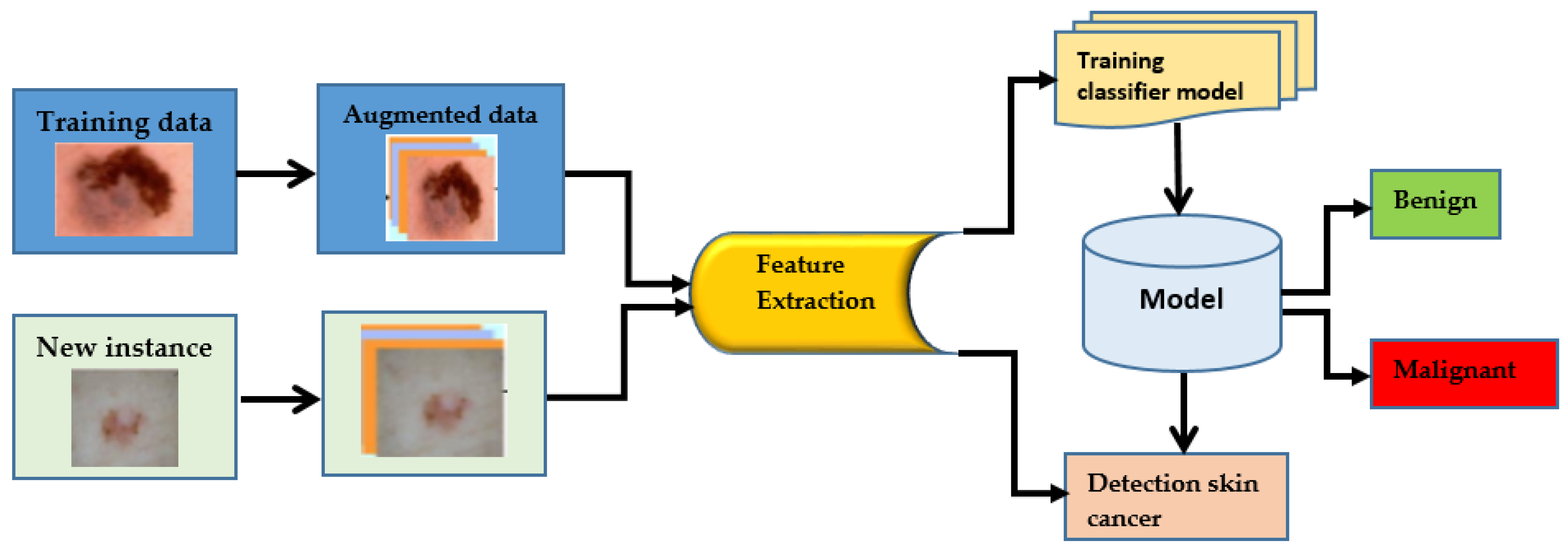

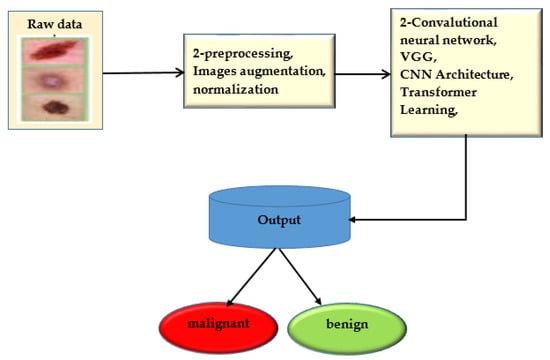

Skin cancer is a term used to describe a group of diseases in which abnormal skin cells grow uncontrollably and form tumors. These cancers are primarily caused by damage from unprotected exposure of the skin to ultraviolet (UV) radiation [1]. Melanomas make up just one percent of skin cancers; non-melanoma skin cancers, such as basal cell carcinomas, make up the remaining cases [2]. Due to its widespread occurrence and potentially serious consequences, skin cancer is a significant health concern in the United States, where about five million cases are thought to be diagnosed annually. The rate of skin cancer has increased since the 1970s. Specialists use a variety of methods to find skin cancer [3]. To determine whether a lesion is malignant, a trained physician frequently follows a predefined sequence of steps: looking for concerning lesions, performing dermoscopy, and then performing a biopsy. Progressing from one step to the next can take time. Dermoscopy has improved the accuracy of lesion identification, which reaches 75% to 84%. A doctor’s skills are also essential to making an appropriate diagnosis, and diagnosing skin conditions manually is challenging and time-consuming [4]. When a certified expert is not available, dermoscopy images can be analyzed using computer-assisted diagnosis. Automatic categorization can also minimize the variability between samples and between observers. The most effective computer-assisted skin image categorization systems have faced two major problems: insufficient data and the way the photos were taken. With conventional methodologies, significant preprocessing, segmentation, and feature extraction were necessary before skin image classification. The changes brought about by AI are similar to those brought about by other widespread technological adoption.
Machine learning (ML) can perform classification directly, without manual feature extraction, which saves time and effort. Using ML methods to diagnose cancer is a growing trend, and ML algorithms have improved the accuracy of cancer prediction by 15% to 20% over the last two decades. Deep learning, a subfield of artificial intelligence, is growing fast and has many possible applications. Deep learning with convolutional neural networks (CNNs), powered by state-of-the-art computational algorithms and massive datasets, is one of the most powerful and widely used ML techniques for recognizing and categorizing images. Traditional ML techniques, which demand a lot of background knowledge and lengthy preparation stages, are no longer frequently used. Deep learning-based classifiers can identify skin cancer in images as effectively as dermatologists, and CNNs can support the development of computer-aided skin lesion classification systems for dermatologists. However, there are not enough high-quality medical images for training, mostly because photos of rare classes are seldom sufficiently annotated and described, and standard CNNs are less likely to work well with small datasets. Some researchers use exceptionally deep CNN models (ResNet152, for example, has 152 layers) to improve classification performance, but this creates significant processing costs for clinical applications. In addition, researchers use CNNs pretrained on large real-world photo datasets (such as ImageNet) and adapt them to classify skin lesions, which helps avoid overfitting.
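The transfer-learning idea described above (reusing a network trained on a large natural-image dataset and training only the part specific to skin lesions) can be sketched in a few lines. This is a minimal, self-contained illustration under stated assumptions, not the method of any cited study: the "pretrained backbone" is a hypothetical fixed random projection with ReLU standing in for an ImageNet-trained CNN such as ResNet, the images are synthetic 64-value vectors, and only a new logistic-regression head is trained by gradient descent.

```python
import math
import random

rng = random.Random(0)
N_PIXELS, N_FEATURES = 64, 16

# Hypothetical stand-in for an ImageNet-pretrained backbone: a fixed random
# projection followed by ReLU. These weights are never updated (frozen).
W_FROZEN = [[rng.gauss(0.0, 1.0) for _ in range(N_FEATURES)] for _ in range(N_PIXELS)]


def frozen_features(image):
    """Map a flattened image (64 values) to 16 features without any training."""
    return [
        max(0.0, sum(image[i] * W_FROZEN[i][j] for i in range(N_PIXELS)))
        for j in range(N_FEATURES)
    ]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(features, labels, lr=0.1, epochs=300):
    """Fit only a new logistic-regression head on standardized frozen features."""
    n = len(features)
    means = [sum(f[j] for f in features) / n for j in range(N_FEATURES)]
    stds = [
        math.sqrt(sum((f[j] - means[j]) ** 2 for f in features) / n) + 1e-8
        for j in range(N_FEATURES)
    ]
    x = [[(f[j] - means[j]) / stds[j] for j in range(N_FEATURES)] for f in features]
    w, b = [0.0] * N_FEATURES, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * N_FEATURES, 0.0
        for xi, yi in zip(x, labels):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(N_FEATURES):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b, means, stds


def predict(image, w, b, means, stds):
    f = frozen_features(image)
    x = [(f[j] - means[j]) / stds[j] for j in range(N_FEATURES)]
    return int(sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= 0.5)


# Synthetic toy data (an assumption): "malignant" images are brighter on average.
images = [[rng.gauss(0.0, 1.0) for _ in range(N_PIXELS)] for _ in range(100)]
images += [[rng.gauss(0.8, 1.0) for _ in range(N_PIXELS)] for _ in range(100)]
labels = [0] * 100 + [1] * 100

w, b, means, stds = train_head([frozen_features(im) for im in images], labels)
accuracy = sum(predict(im, w, b, means, stds) == y for im, y in zip(images, labels)) / len(labels)
print(f"training accuracy of the new head: {accuracy:.2f}")
```

In practice the frozen part would be a real pretrained CNN, and its last layers might also be fine-tuned; keeping most of the network fixed is what limits the number of trainable parameters and, with it, the risk of overfitting a small dataset.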
Skin lesions were initially identified visually, aided by clinical screening, dermoscopy image processing, computed tomography (CT), and other methods. Less experienced dermatologists are more likely to misdiagnose skin lesions. The procedures doctors use to review and interpret images of lesions are time-consuming and often subjective and inaccurate, mostly because it is difficult to capture skin problems fully on camera. Knowing precisely which pixels belong to a skin lesion is crucial to analyzing images and diagnosing lesions [5]. The ability of CADI and CPS systems to identify skin cancer has increased considerably with the integration of machine learning approaches into computer vision. Image preprocessing and lesion image categorization are two of the most important elements in cancer detection and diagnosis. The outcome and mortality rates of skin cancer must be improved through early detection. For diagnostic tests or assessments of patient response to therapy (such as for cancer), however, this approach is unsuitable. To enhance and speed up the diagnostic decision-making process, many doctors are using AI in medicine [6]. Although there have been encouraging signs of progress in this field, most AI research for clinical diagnosis has either ignored or inadequately addressed the need for accurate assessment and reporting of potential problems. Numerous diseases can be recognized quickly, precisely, and consistently using computer-aided diagnosis (CAD) [7]. Additionally, CAD makes identifying and treating tumor diseases easier and more cost-effective. Imaging methods such as MRI, PET, and X-rays are often used to diagnose organ problems.
One approach to skin cancer diagnosis is shown in Figure 1.

Figure 1.

Process of cancer detection.
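The stages of such a detection process can be sketched as a chain of small functions: preprocessing, segmentation, feature extraction, and classification. This is a toy sketch under stated assumptions: Otsu thresholding is used as a simple stand-in segmentation method, the lesion is assumed to be darker than the surrounding skin, the two features (area fraction and mean intensity) are illustrative, and `toy_classifier` is a hypothetical placeholder for a trained model.

```python
from typing import Callable, Dict, List

Image = List[List[float]]
Mask = List[List[bool]]


def preprocess(img: Image) -> Image:
    """Min-max normalize pixel intensities to the range [0, 1]."""
    flat = [v for row in img for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid division by zero on a flat image
    return [[(v - lo) / span for v in row] for row in img]


def otsu_threshold(img: Image, bins: int = 64) -> float:
    """Pick the threshold that maximizes between-class variance (Otsu's method)."""
    hist = [0] * bins
    for row in img:
        for v in row:
            hist[min(int(v * bins), bins - 1)] += 1
    total = sum(hist)
    weighted_total = sum(i * h for i, h in enumerate(hist))
    best_bin, best_var = 0, -1.0
    count_below, weighted_below = 0, 0
    for t in range(bins):
        count_below += hist[t]
        weighted_below += t * hist[t]
        count_above = total - count_below
        if count_below == 0 or count_above == 0:
            continue
        mean_below = weighted_below / count_below
        mean_above = (weighted_total - weighted_below) / count_above
        var = count_below * count_above * (mean_below - mean_above) ** 2
        if var > best_var:
            best_bin, best_var = t, var
    return (best_bin + 1) / bins  # upper edge of the last "dark" bin


def segment(img: Image, threshold: float) -> Mask:
    """Assumption: lesion pixels are darker than the surrounding skin."""
    return [[v < threshold for v in row] for row in img]


def extract_features(img: Image, mask: Mask) -> Dict[str, float]:
    lesion = [v for row, m_row in zip(img, mask) for v, inside in zip(row, m_row) if inside]
    n_pixels = sum(len(row) for row in img)
    return {
        "area_fraction": len(lesion) / n_pixels,
        "mean_intensity": sum(lesion) / len(lesion) if lesion else 0.0,
    }


def detect(img: Image, classifier: Callable[[Dict[str, float]], str]) -> str:
    norm = preprocess(img)
    mask = segment(norm, otsu_threshold(norm))
    return classifier(extract_features(norm, mask))


def toy_classifier(features: Dict[str, float]) -> str:
    """Hypothetical placeholder for a trained model."""
    return "suspicious" if features["area_fraction"] > 0.05 else "benign-looking"


# Toy 6x6 "image": bright skin (200) with a dark 2x2 lesion (60) in the middle.
example = [[200.0] * 6 for _ in range(6)]
for r in (2, 3):
    for c in (2, 3):
        example[r][c] = 60.0

print(detect(example, toy_classifier))  # suspicious
```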

Dermatology, and medicine at large, draw on a variety of data that can revolutionize personalized healthcare, such as clinical records, patient population data, and imaging test and evaluation data [1,2]. Fields of study collectively referred to as omics, which use evidence from genomes, epigenomes, transcriptomes, proteomes, and microbiomes, are now prevalent in translational dermatology research [3]. Recent developments in faster computing and cheaper storage have facilitated the creation of intelligent, human-like ML algorithms for various dermatology applications. Dermatologists must have a basic understanding of artificial intelligence and ML to test the usefulness of these new technologies. Skin cancer, with over five million cases diagnosed annually, is the most prevalent cancer in the U.S. [1]. With over 100,000 new cases and about 9000 deaths yearly in the United States, melanoma is the deadliest type of skin cancer [2]. Skin cancer costs the U.S. health care system more than USD 8 billion [4]. Skin cancer is also a massive danger to public health worldwide. Over 13,000 new cases of melanoma arise annually in Australia, resulting in over 1200 deaths [5]. Melanoma causes more than 20,000 deaths yearly in Europe [6]. Early diagnosis is essential to combat the high mortality of melanoma. Reliable, early diagnosis of melanoma requires highly trained dermoscopic clinicians, but the number of experts has not met the need [7]. Dermoscopy is a high-resolution skin imaging technique that reduces reflection from the skin surface, enabling clinicians to see deeper underlying structures. In the hands of specially trained clinicians, it achieves a diagnostic accuracy of 75% to 84% [8]. However, diagnostic performance declines dramatically when physicians are not well trained.

2. Literature Review

The use of technology in early cancer diagnosis has shown that the limitations of the manual process can be overcome, opening up a new field of study. To help the reader understand the topic and the current state of knowledge, this section summarizes various pertinent studies. Deep learning techniques have outperformed traditional machine learning techniques in many situations, and over the past couple of decades, deep learning has fundamentally changed machine learning. Its novel feature is the use of artificial neural networks, an approach inspired by how the brain works and is organized [9]. Researchers have assessed the accuracy of computerized methods [10]. Researchers also proposed a more accurate way to identify melanoma skin cancer [11]: a non-linear embedding manifold was used to generate synthetic images of melanoma, and data augmentation was used to create a new skin melanoma dataset from dermoscopy images in the publicly accessible PH2 dataset. The SqueezeNet deep learning model was trained on these images, and the study showed a major improvement in melanoma detection. The VGG-SegNet technique was proposed to extract the skin melanoma (SM) region from a dermatoscopy image [12]. Important performance metrics were computed by comparing the segmented SM with the ground truth, and the method was validated on the industry-recognized ISIC2016 database. Other researchers classified skin cancer by combining machine and human intelligence: a CNN and 112 German dermatologists categorized 300 biopsy-confirmed skin lesions into five types, and the two separate sets of diagnoses were integrated into a single classifier using boosting. The combined human and machine classifier was correct 82.95% of the time in this multiclass task. Deep learning-based technology can also distinguish benign from malignant tumors [13].
The method was tested in a typical laboratory setting using HAM10000 images from ISIC 2018, ISIC 2019, and ISIC 2020. The InSiNet framework outperformed the other methods, with accuracy rates of 94.59%, 91.89%, and 90.549% on the ISIC 2018, 2019, and 2020 datasets, respectively. Researchers created a deep learning method to identify early-stage melanoma that uses a region-based convolutional neural network and fuzzy k-means clustering [14]. Its effectiveness in helping dermatologists recognize this potentially lethal condition early was assessed on a variety of clinical images from the ISIC-2017, PH2, and ISBI-2016 datasets, where it achieved average accuracies of 95.40%, 93.11%, and 95.60%, respectively, outperforming the best methods at the time. Convolutional neural networks are a type of deep learning model that has been found to be more effective than conventional techniques for detecting images and features [15]. They have also been applied successfully in the medical field, and many DL-based medical imaging systems are now available that clinicians and specialists can use to identify, treat, and track the progress of cancer patients. To distinguish between melanoma and non-melanoma skin lesions, researchers developed the Lesion classifier, which was evaluated on the ISIC 2017 (ISBI 2017) and PH2 skin lesion databases and achieved 95% accuracy. To determine whether 100 skin lesions were benign or malignant, researchers created a set of deep learning algorithms using data from the International Skin Imaging Collaboration (ISIC) 2016 dataset [16]. Overall, the algorithms were more accurate than specialists: they had a 76% accuracy rate and a 62% specificity rate, compared with 70.5% and 59%, respectively, for the specialists.
The InceptionV4 deep learning algorithm was trained on a dermoscopic dataset of over 100,000 images of benign lesions and melanoma, and its results were compared with those of 58 dermatologists, who diagnosed at two levels [17]. At level I, only dermoscopy images were used; at level II, dermoscopy was combined with clinical patient data and images. At level I, the dermatologists’ median sensitivity was 86.6% and their median specificity was 71.3%; at level II, these rose to 88.9% and 75.7%, respectively. The rise in specificity was statistically significant (p < 0.05), whereas the increase in sensitivity was not (p = 0.19). The deep learning CNN achieved a significantly larger area under the receiver operating characteristic (ROC) curve than the dermatologists (p < 0.01) and performed better than most of the dermatologists in this study, suggesting that CNNs could help with melanoma detection in dermoscopy images. In another study (MClass-D), the performance of 157 dermatologists on 100 dermoscopic images was compared with that of a convolutional neural network (ResNet50). The dermatologists’ sensitivity and specificity were lower than those of the deep learning model, which had a sensitivity of 84.2% and a specificity of 69.2%. In a head-to-head comparison, the CNN outperformed 86.6% of the dermatologists in the study. The performance of each subgroup of dermatologists depended on their experience in categorizing dermoscopy images of melanoma, and the CNN might help dermatologists make accurate melanoma diagnoses. Deep learning algorithms exceeded the groups of beginner and intermediate human raters, and the trained combined CNN had a significantly larger area under the ROC curve than the dermatologists.
Compared with general dermatologists, it correctly identified more cases, but it identified fewer than experts with more than ten years of experience [18]. The sensitivity and specificity of the ResNet50 deep learning system in categorizing skin lesions into specific groups were assessed and compared with those of 112 German dermatologists. The dermatologists classified skin lesions correctly with a sensitivity of 74.4% and a specificity of 59.0%; at the same sensitivity, the algorithm achieved a specificity of 91.3%. The dermatologists classified images into one of the five diagnostic categories with a sensitivity of 56.5% and a specificity of 89.0%; at the same sensitivity, the algorithm achieved a specificity of 98.8%. The deep learning algorithm generally performed better on the primary outcome than the dermatologists (p < 0.001), and the secondary outcome comparison revealed that, except for basal cell carcinoma, the algorithm consistently outperformed them [19]. An InceptionV4-based deep learning architecture was also tested against dermatologists on 100 dermoscopy images. Level I consisted of dermoscopic images, while Level II consisted of clinical details, a dermoscopic image, and a clinical close-up image. The deep learning system’s sensitivity and specificity were both 95% when compared with Level I dermatologists. With Level II information, the dermatologists’ average sensitivity increased to 94.1% while their average specificity remained constant [20]. An open, web-based study was used to diagnose dermatoscopic images: the researchers compared the average performance of 139 AI algorithms with that of 511 human readers on a set of 1511 images from the ISIC 2018 challenge. Human and algorithm diagnoses, each assigned to one of seven predefined categories, were contrasted to evaluate the effectiveness of the different diagnostic approaches.
The participants included 55.4% board-certified dermatologists, 23.1% dermatology residents, and 16.2% family physicians. On average, the algorithms outperformed the human readers by 2.01 points, a statistically significant difference (p < 0.0001), suggesting that AI algorithms could diagnose illnesses more precisely than people [21].
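Several of the comparisons above report sensitivity and specificity, the area under the ROC curve, and the algorithm’s specificity at the dermatologists’ sensitivity. A minimal sketch of how these quantities are computed follows; the labels, scores, and "clinician" predictions are toy values invented for illustration, not data from any cited study, and melanoma is treated as the positive class (1).

```python
from typing import Sequence, Tuple


def confusion_counts(labels: Sequence[int], preds: Sequence[int]) -> Tuple[int, int, int, int]:
    """Return (TP, TN, FP, FN) for binary labels where 1 = melanoma."""
    tp = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 1)
    tn = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 0)
    fp = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 0)
    return tp, tn, fp, fn


def sensitivity_specificity(labels, preds) -> Tuple[float, float]:
    tp, tn, fp, fn = confusion_counts(labels, preds)
    return tp / (tp + fn), tn / (tn + fp)


def roc_auc(labels, scores) -> float:
    """AUC = probability that a random positive scores above a random negative (ties = 1/2)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def specificity_at_sensitivity(labels, scores, target: float) -> float:
    """Best specificity over all score thresholds whose sensitivity is >= target."""
    best = 0.0
    for t in sorted(set(scores)):
        preds = [1 if s >= t else 0 for s in scores]
        sens, spec = sensitivity_specificity(labels, preds)
        if sens >= target:
            best = max(best, spec)
    return best


# Toy data (not from any cited study).
labels = [1, 1, 1, 0, 0, 0, 0, 1]
algo_scores = [0.9, 0.8, 0.35, 0.4, 0.2, 0.1, 0.3, 0.7]
clinician_preds = [1, 1, 0, 1, 0, 0, 0, 1]

sens, spec = sensitivity_specificity(labels, clinician_preds)
print(f"clinician: sensitivity={sens:.2f}, specificity={spec:.2f}")  # 0.75, 0.75
print(f"algorithm AUC={roc_auc(labels, algo_scores):.4f}")  # 0.9375
print("algorithm specificity at the clinician's sensitivity:",
      specificity_at_sensitivity(labels, algo_scores, sens))  # 1.0
```

Matching the operating point this way is what makes the comparison fair: the algorithm’s continuous score is thresholded so that its sensitivity is at least the clinicians’, and only then are the specificities compared.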

3. Methodology

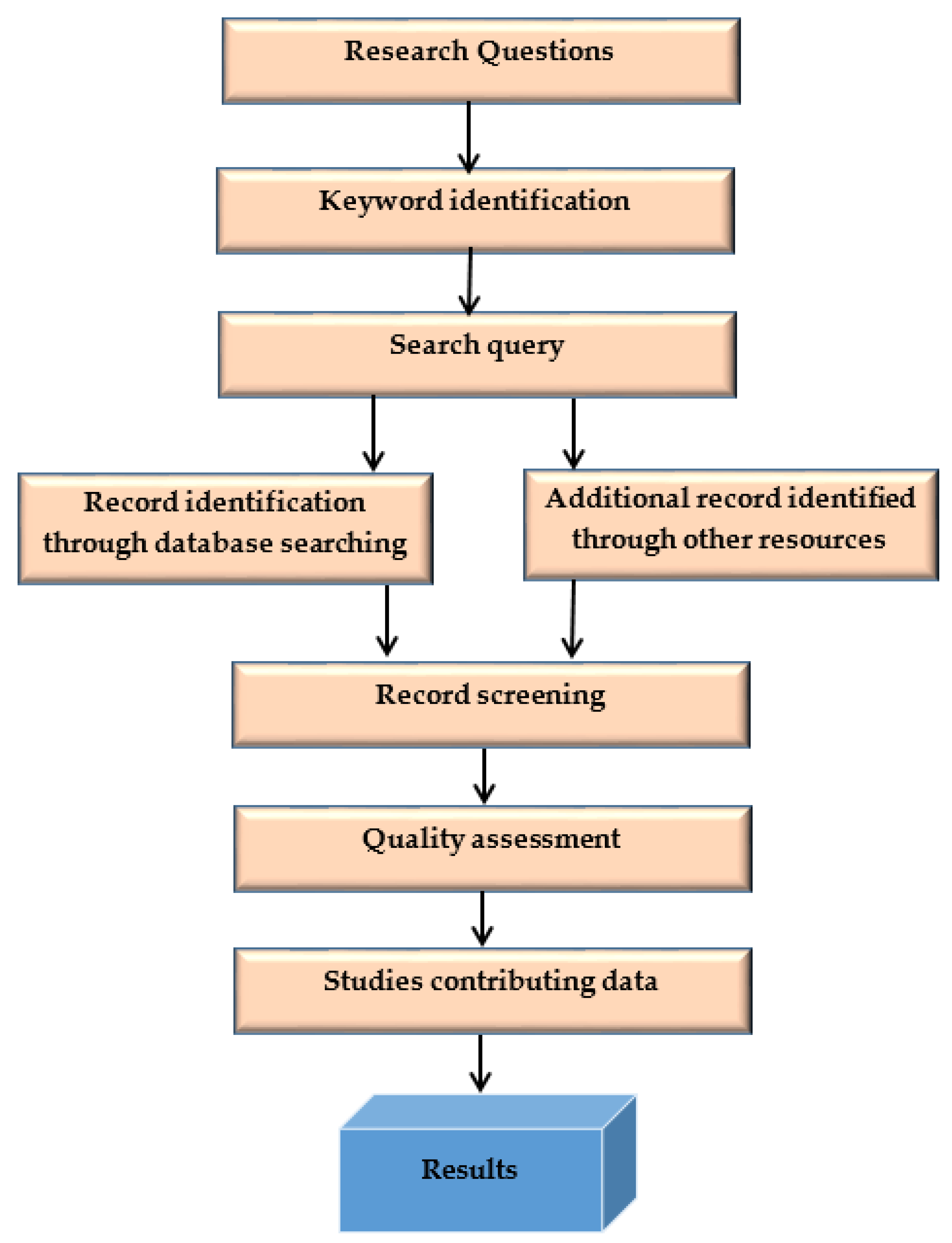

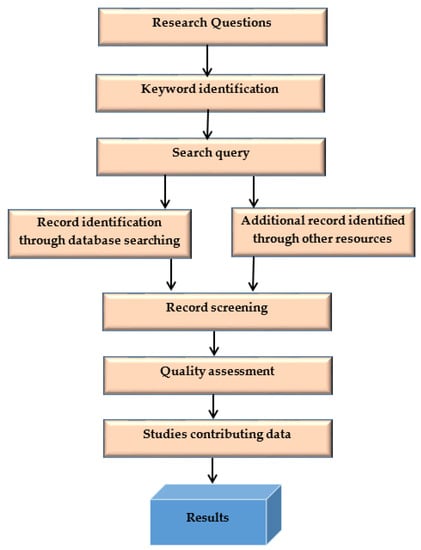

The methodology of this systematic literature review (SLR) is shown in Figure 2.

Figure 2.

SLR methodology process.

3.1. Problem Statement

The primary problem is to outline the pros and cons of the methods used to identify and classify skin cancer from medical images and to compare their efficiency.

3.2. Research Question

The research questions are listed in Table 1.

Table 1.

The research questions.

This study follows the guidelines for systematic literature reviews in software engineering defined by Kitchenham and Charters [8]. A systematic literature review uses a disciplined and repeatable technique to give a reliable and objective analysis of a research topic. The guidelines are high-level and do not consider how the research question affects the evaluation process. The review strategy, depicted in Figure 2, consists of a set of activities that help manage the review process; the protocol was defined by the authors. The following sections describe each step summarized in Figure 2. To identify proper, efficient deep learning methods for skin cancer classification, we conducted a large-scale survey of studies of machine learning methods. This survey aims to understand the specific requirements and performance characteristics of different algorithms. We compared different categories of algorithms, referred to as machine learning and deep learning methods. The research questions formulated to carry out this SLR are listed in Table 1.

3.3. Keyword Identification

Primary and secondary keywords are presented in Table 2.

Table 2.

Primary and secondary keywords.

3.4. Search Query

Keywords are important words used to describe the subject and field of study in the titles and abstracts of research articles. The literature is searched with keywords to find answers to the research questions, and it is retrieved from databases and other sources using these keywords. The keywords used to discover the primary studies for this SLR are listed in the “Abstract” section. Identifying the most commonly used keywords is the first step in developing a search strategy. To locate the primary keywords, the search begins by examining the papers of well-known scholars. These keywords, together with similar phrases, make up the search queries. Using appropriate keywords makes the search process more efficient and considerably faster. The search query process is presented in Table 3.
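A search query combining primary and secondary keywords can be sketched as follows. The combination rule (OR within a keyword group, AND between groups) and the example keywords are assumptions for illustration; the actual keywords are those in Table 2, and each database uses its own query syntax.

```python
from typing import Sequence


def or_group(terms: Sequence[str]) -> str:
    """Quote each term and join the alternatives with OR."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(primary: Sequence[str], secondary: Sequence[str]) -> str:
    """Require at least one primary AND at least one secondary keyword."""
    return f"{or_group(primary)} AND {or_group(secondary)}"


# Hypothetical keyword lists, not the ones used in this SLR.
query = build_query(["skin cancer", "melanoma"], ["deep learning", "CNN"])
print(query)
# ("skin cancer" OR "melanoma") AND ("deep learning" OR "CNN")
```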

Table 3.

The search query process.

3.4.1. Year-Wise Papers Selection

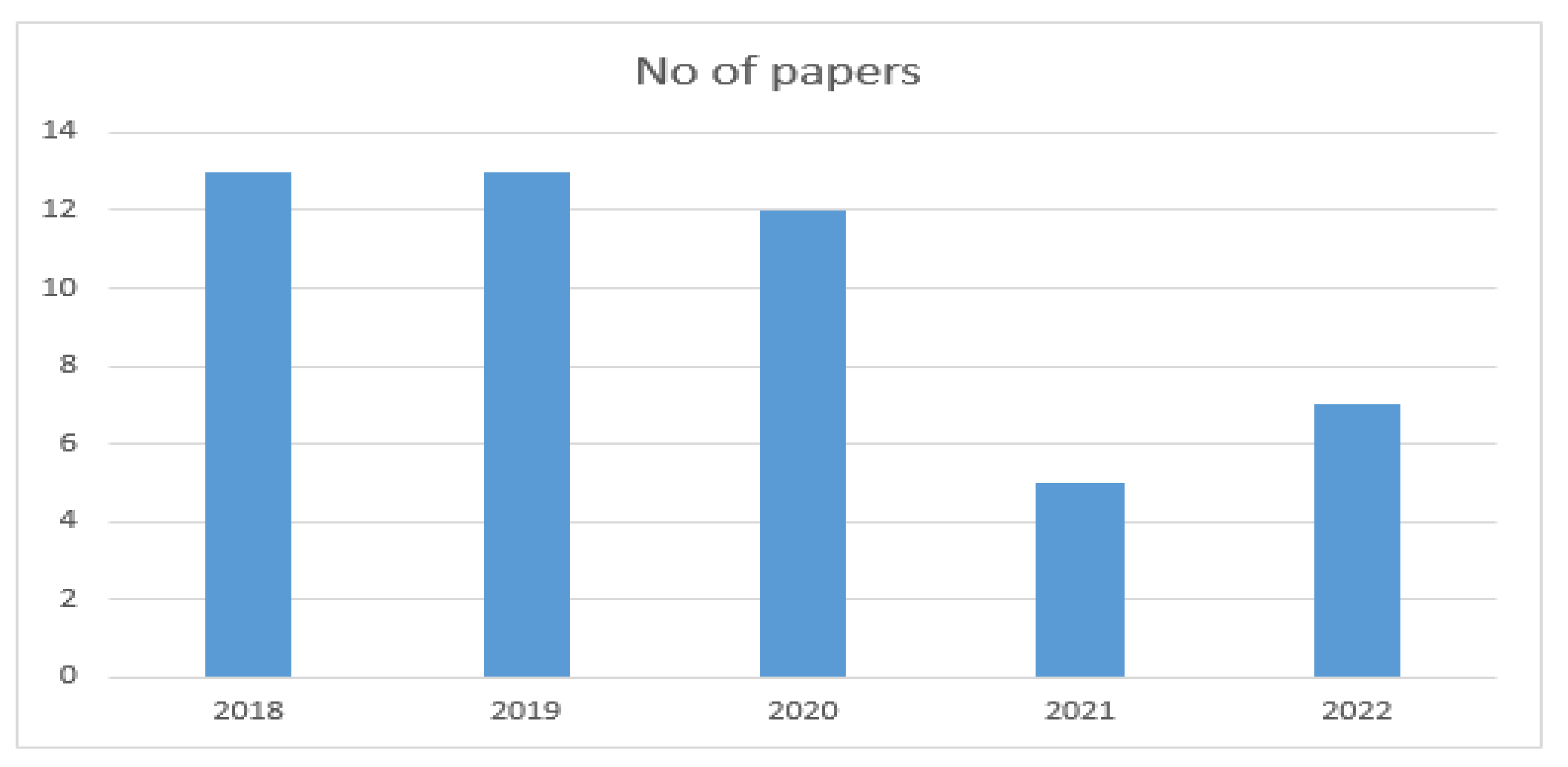

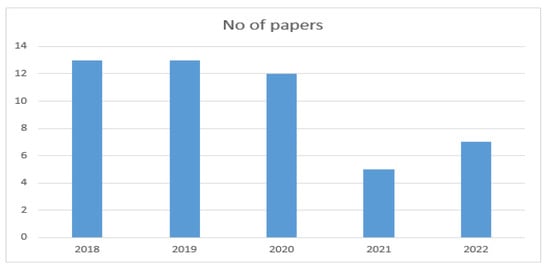

The final papers selected from 2018 to 2022 are presented in Table 4.

Table 4.

Year-wise selection of papers.

The year-wise selection of papers is shown in Figure 3.

Figure 3.

Year-wise selection of papers.

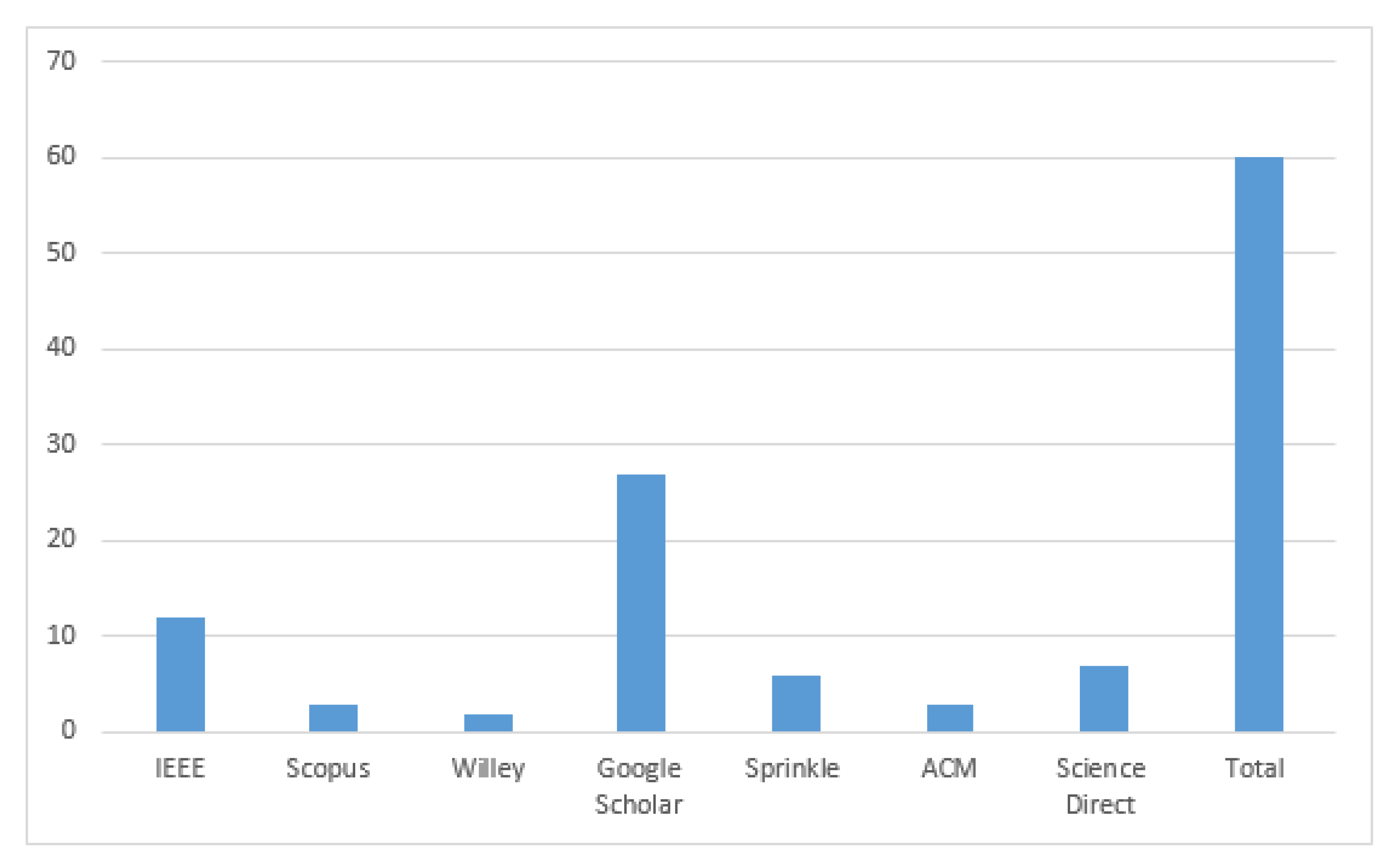

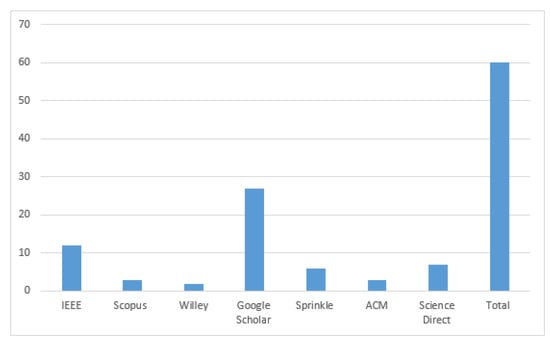

3.4.2. Final Paper Selection

Table 5.

Final paper selection.

Figure 4.

Final paper selection.

3.5. Inclusion/Exclusion Criteria and Quality Assessment

The following steps are followed for the quality assessment of papers:

- Initially, an extensive list of papers is obtained through a keyword search.

- Publisher-based filtering is performed. Only journal/workshop papers are included.

- Only papers from renowned publishers, such as Springer, IEEE, ACM, and Wiley, are included.

- Papers from Indian journals are excluded.

- Title-based filtering is performed, and papers without the keywords in their titles are excluded.

- Next, the abstracts are read, and papers that compare the performance of deep learning methods in classifying skin cancer are included.

- Finally, the remaining papers are searched for answers to our research questions. An article is included if it answers at least one question; otherwise, it is excluded.

The following factors are taken into account when sorting the papers:

- If the title contains at least one keyword, the mark is 1; otherwise, it is 0.

- If the abstract defines a performance evaluation metric, the mark is 1; otherwise, the mark is 0.

- If the introduction and conclusion discuss performance measurements, the mark is 1; otherwise, it is 0.

- If the work describes a comparison with at least one earlier study, the mark is 1; otherwise, it is 0.

- In the end, papers with a score of 3 or more are included in the results.

- Workshop papers are marked A, conference papers B, and journal papers C.

- The quality assessment of the papers is presented in Table 6.
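The scoring rules above can be expressed as a small function. This is a sketch: the `Paper` record and its field names are hypothetical, and the two example papers are invented only to exercise the threshold of 3 marks and the A/B/C venue codes.

```python
from dataclasses import dataclass

VENUE_CODES = {"workshop": "A", "conference": "B", "journal": "C"}


@dataclass
class Paper:
    title: str
    title_has_keyword: bool
    abstract_defines_metric: bool
    intro_and_conclusion_discuss_metrics: bool
    compares_with_earlier_study: bool
    venue: str  # "workshop", "conference", or "journal"


def quality_score(paper: Paper) -> int:
    """Sum of the four binary criteria, each worth 1 mark."""
    criteria = (
        paper.title_has_keyword,
        paper.abstract_defines_metric,
        paper.intro_and_conclusion_discuss_metrics,
        paper.compares_with_earlier_study,
    )
    return sum(int(c) for c in criteria)


def is_included(paper: Paper, min_score: int = 3) -> bool:
    return quality_score(paper) >= min_score


# Hypothetical papers used only to exercise the rules.
papers = [
    Paper("Paper X", True, True, False, True, "journal"),
    Paper("Paper Y", True, False, False, True, "conference"),
]
for p in papers:
    print(p.title, VENUE_CODES[p.venue], quality_score(p), is_included(p))
# Paper X C 3 True
# Paper Y B 2 False
```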

Table 6. The quality assessment of the papers.


Cancer develops when cells in a particular organ or body part grow out of control. Skin cancer is one of the fastest-spreading cancers worldwide.

Skin cancer can develop due to the uncontrolled, rapid growth of abnormal skin cells. Treatments are likely to succeed only if cancer is identified early and diagnosed correctly. Most skin cancer deaths in developed countries are caused by melanoma, the deadliest type of the disease. Deep learning has significantly outperformed human experts at early skin cancer detection in several computer vision competitions, which could help lower mortality rates. Combining powerful formulations with deep learning methods can achieve excellent processing and classification precision. A deep hybrid learning model for classification and prediction is used to identify early cancer indicators in lesion images. Preprocessing and classification are the essential stages of the system under consideration. During preprocessing, the overall image intensity is increased to reduce contrast differences between images, and each image is normalized and scaled to match the input size of the training model. The proposed model’s performance was assessed in comparative studies using several metrics: precision, recall, F1 score, and the area under the curve (AUC).
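The normalization and scaling step can be sketched as follows. This is an illustration under stated assumptions, not the cited system’s code: it uses nearest-neighbour resizing (each output pixel copies the input pixel at its scaled, floored position) on a grayscale image stored as nested lists, assumes 8-bit intensities, uses 224 x 224 as a hypothetical model input size (common for CNNs, but not stated in the source), and omits the intensity-adjustment step.

```python
from typing import List, Tuple

Image = List[List[float]]


def resize_nearest(img: Image, out_h: int, out_w: int) -> Image:
    """Nearest-neighbour resize: copy the input pixel at the floored scaled position."""
    in_h, in_w = len(img), len(img[0])
    return [
        [img[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def scale_to_unit(img: Image, max_value: float = 255.0) -> Image:
    """Map 8-bit intensities to the range [0, 1]."""
    return [[v / max_value for v in row] for row in img]


def preprocess_for_model(img: Image, size: Tuple[int, int] = (224, 224)) -> Image:
    """Resize to the model's (assumed) input size, then normalize intensities."""
    out_h, out_w = size
    return scale_to_unit(resize_nearest(img, out_h, out_w))


example = [
    [0, 10, 20, 30],
    [40, 50, 60, 70],
    [80, 90, 100, 110],
    [120, 130, 140, 150],
]
small = preprocess_for_model(example, size=(2, 2))
print(small)
```

Downsampling the 4 x 4 example to 2 x 2 keeps the pixels 0, 20, 80, and 100 before scaling; in practice, libraries use smoother interpolation, but the shape contract (every image ends up at the model’s input size) is the same.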

Skin cancer has increased in occurrence over the past ten years. Since the skin is the body’s largest organ, skin cancer is probably the most common cancer in humans. Skin cancer can be treated effectively only when it is found early, and computational methods now make its earliest signs easier to find than ever. According to one report, there are several non-invasive ways to examine skin cancer symptoms, and the shortcomings of various methods for segmenting and classifying skin lesions were examined. An improved method for melanoma skin cancer diagnosis is described in [39]. A non-linear embedding manifold was used to create synthetic images of melanoma, and a data augmentation strategy was used to build a new skin melanoma dataset from dermoscopic scans in the public PH2 dataset. The SqueezeNet deep learning model was trained on the augmented images, and experiments showed a significant improvement in melanoma detection accuracy (92.18%). A skin melanoma (SM) region could be extracted from a digital dermatoscopy image using the VGG-SegNet algorithm.

Critical performance parameters were obtained by comparing the segmented SM with the ground truth (GT), and the accuracy of the proposed method was verified on the industry-recognized ISIC2016 database. The use of machines to assist in early cancer detection has exposed the shortcomings of the manual method and given rise to a new area of study. This section summarizes a few pertinent studies to give readers a sense of the subject and of the current situation. In many instances, deep learning techniques have outperformed more traditional machine learning methods, and over the past several decades, deep learning has significantly changed the field of machine learning. Artificial neural networks, which are modeled on how the human brain works and is organized, are among the most advanced technologies in machine learning. In several fields, CNNs and other deep learning models have been shown to outperform traditional approaches, for example in recognizing features and images. They have also achieved outstanding results in the medical field.
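The data augmentation used to enlarge the PH2-derived melanoma dataset [39] can be illustrated with simple geometric transforms. Note that this is a swapped-in, simpler technique: the cited work generated synthetic images with a non-linear manifold embedding, whereas this sketch only produces the four rotations of each image and the mirror image of each rotation, which preserve the lesion’s appearance while changing its orientation. The toy 2 x 2 "image" is hypothetical.

```python
from typing import List

Image = List[List[int]]


def rotate90(img: Image) -> Image:
    """Rotate an image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def hflip(img: Image) -> Image:
    """Mirror an image left to right."""
    return [row[::-1] for row in img]


def augment(img: Image) -> List[Image]:
    """Return the 4 rotations of the image and the mirror image of each (8 variants)."""
    variants = []
    current = img
    for _ in range(4):
        variants.append(current)
        variants.append(hflip(current))
        current = rotate90(current)
    return variants


# Toy dataset; every variant becomes a new training sample with the same label.
dataset = [([[1, 2], [3, 4]], "melanoma")]
augmented = [(v, label) for img, label in dataset for v in augment(img)]
print(len(augmented))  # 8
```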

A wide range of DL-based medical imaging systems are now available to medical professionals, which can help with cancer diagnosis, treatment planning, and treatment efficacy assessment. With the help of computer-aided diagnosis (CAD) software, the diagnosis of various diseases can be made faster, more uniform, and more precise. Additionally, CAD makes detecting and preventing cancer simpler and more affordable. Imaging methods such as magnetic resonance imaging (MRI), positron emission tomography (PET), and X-rays are used to examine organ issues in people. In the past, clinical screening, image analysis with computed tomography (CT), dermatoscopy, and other techniques were used to find and diagnose skin lesions. The difficulty in diagnosing and reporting non-melanoma skin cancer (NMSC), on the other hand, may cause the reported number of NMSC cases to be significantly lower than the actual number. Numerous factors contribute to the high death rate from skin cancer, including late diagnosis brought on by ambiguous symptoms, ineffective screening techniques, a lack of sensitive and specific biomarkers for early diagnosis, prognosis, and treatment monitoring, and an incomplete understanding of the mechanisms by which these tumors develop drug resistance. In addition, the COVID-19 pandemic has taken center stage in daily clinical practice. Due to the difficulty of treating patients with cutaneous melanoma (CM) and other skin cancers and the delay in diagnosis, rates of illness and death have increased, as has the financial burden on the healthcare system. Future cancer treatments may benefit from personalized medicine, which determines the best course of action for each cancer patient based on their particular molecular characteristics. The personalized approach uses a multidimensional biochemical analysis of several biological endpoints to determine the likelihood that the tumor will spread and the best course of treatment.
Droplet digital polymerase chain reaction (DDPCR) has recently become a popular omics technology due to its ability to identify and measure minuscule amounts of nucleic acids in various biological samples. This is crucial for subtyping cancer, predicting outcomes, and keeping track of the disease’s progression.
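The quantification that makes DDPCR attractive rests on Poisson statistics: because a positive droplet may contain more than one target molecule, the mean number of copies per droplet is estimated as λ = -ln(1 - p), where p is the fraction of positive droplets. A minimal sketch follows; the 0.85 nL droplet volume is an assumed instrument parameter, and the droplet counts are hypothetical.

```python
import math


def copies_per_droplet(positive: int, total: int) -> float:
    """Poisson-corrected mean target copies per droplet: -ln(1 - p)."""
    if not 0 <= positive < total:
        raise ValueError("need 0 <= positive < total droplets")
    return -math.log(1.0 - positive / total)


def concentration_per_ul(positive: int, total: int, droplet_volume_nl: float = 0.85) -> float:
    """Copies per microlitre; the droplet volume is an assumed instrument parameter."""
    return copies_per_droplet(positive, total) / (droplet_volume_nl / 1000.0)


# Hypothetical run: 4000 of 15000 droplets are positive.
lam = copies_per_droplet(4000, 15000)
print(f"{lam:.4f} copies/droplet, {concentration_per_ul(4000, 15000):.1f} copies/uL")
```

If every droplet were positive, the estimate would be unbounded, which is why the function rejects that case instead of returning infinity.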

The fact that skin cancer is frequently detected too late is a major contributor to its high mortality rate. There are not enough trustworthy screening techniques or sensitive and precise biomarkers for early diagnosis and for predicting the course of these tumors, and it is unclear how drugs lose their effectiveness. In Australia, nearly all patients whose thin lesions were surgically removed are still alive after five years (depending on the presence of ulceration). However, survival rates decrease drastically as tumor thickness increases: only 54% of people with CM tumors thicker than 4 mm will survive. The status of the lymph nodes in the affected area can predict outcomes even in the earliest stages of melanoma. Occult lymph node metastases can be found using a sentinel lymph node (SLN) biopsy, which makes it possible to remove affected lymph nodes early to stop the cancer’s spread. Meanwhile, the promising outcomes of DDPCR assays for circulating miRNAs in other tumor types have made it possible to identify biomarkers for the clinical management of oral, ovarian, and esophagogastric cancer. There is a great deal of optimism that more efficient and individualized treatments will be found for these patients when DDPCR techniques are used in biomedical and translational research on skin cancer.

4. Results

4.1. RQ1: What Are the Features and Advantages of Recently Developed DL Methods for Skin Cancer Classification?

Initially, most articles performed two operations on the skin lesion and graded it into melanoma and the initial stage. Most studies analyzed in this report centered on an evaluation based on differential disease classification. Once a cancer such as melanoma was diagnosed, its specific type was further tested. Melanoma comprises four significant forms: superficial spreading melanoma, nodular melanoma, acral lentiginous melanoma, and lentigo maligna melanoma. Deep learning methods were trained on lesion images to detect a specific type. These forms vary in position, lesion proportions, and color. Superficial spreading melanoma appears on the skin as a dark patch with an irregular border.

In comparison, lentigo maligna and other lentiginous lesions are irregular in both size and color. If a lesion has not been classified as malignant, it is graded into one of three distinct forms: dermal, melanocytic, or epidermal. These forms fall under the heading of non-cancerous skin conditions similar to melanoma. Moreover, the most used technique is the deep convolutional neural network (DCNN), followed by FrCN and transfer learning, which is reported for almost every dataset. A comparison of different DL methods is presented in Table 7.
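The two-stage grading described above (first melanoma versus benign, then the melanoma subtype or the benign form) can be sketched as a hierarchical classifier. The stage models here are hypothetical stand-ins that simply pick the highest of some made-up scores; in a real system, each stage would be a trained network.

```python
from typing import Callable, Dict, Tuple

Features = Dict[str, float]

MELANOMA_SUBTYPES = ("superficial spreading", "nodular", "acral lentiginous", "lentigo maligna")
BENIGN_FORMS = ("dermal", "melanocytic", "epidermal")


def classify_lesion(
    features: Features,
    is_malignant: Callable[[Features], bool],
    subtype_of: Callable[[Features], str],
    benign_form_of: Callable[[Features], str],
) -> Tuple[str, str]:
    """Stage 1: malignant vs benign. Stage 2: melanoma subtype or benign form."""
    if is_malignant(features):
        return "melanoma", subtype_of(features)
    return "benign", benign_form_of(features)


# Hypothetical stand-ins for trained models: pick the highest made-up score.
def toy_is_malignant(f: Features) -> bool:
    return f.get("malignancy", 0.0) > 0.5


def toy_subtype(f: Features) -> str:
    return max(MELANOMA_SUBTYPES, key=lambda s: f.get(s, 0.0))


def toy_benign_form(f: Features) -> str:
    return max(BENIGN_FORMS, key=lambda s: f.get(s, 0.0))


lesion = {"malignancy": 0.9, "nodular": 0.7, "superficial spreading": 0.2}
print(classify_lesion(lesion, toy_is_malignant, toy_subtype, toy_benign_form))
# ('melanoma', 'nodular')
```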

Table 7.

Comparison of different DL methods.

4.2. RQ2: What Data Sets Are Used in Skin Cancer Detection Methods Evaluations?

Several computer-based methods have been proposed to detect skin cancer. A strong and reliable collection of images is necessary for assessing diagnostic performance and ensuring expected outcomes. Many currently available skin cancer databases contain only images of nevi and melanoma lesions. Insufficient lesion types and small datasets make it challenging to train artificial neural networks to classify skin lesions. Although patients frequently have a wide range of non-melanocytic lesions, previous research on automated skin cancer diagnosis has mainly focused on melanocytic lesions.
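One common way to soften the class imbalance described above is to weight each class inversely to its frequency when computing the training loss. The sketch below uses the "balanced" heuristic n_samples / (n_classes x count); it is a general technique, not one attributed to the surveyed studies, and the label counts are hypothetical.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence


def balanced_class_weights(labels: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical training labels: benign nevi vastly outnumber melanomas.
labels = ["nevus"] * 8 + ["melanoma"] * 2
weights = balanced_class_weights(labels)
print(weights)  # {'nevus': 0.625, 'melanoma': 2.5}
```

Multiplying each sample’s loss by its class weight makes both classes contribute equally in total (8 x 0.625 = 2 x 2.5 = 5), so the rare class is not drowned out during training.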

The datasets used for skin cancer detection are listed in Table 8.

Table 8.

A list of publicly available datasets for skin cancer detection.

Other datasets are not publicly and freely available, which is why they are considered private and not included in this SLR.

4.3. RQ3: What Are Future Challenges Reported in This Domain?

4.3.1. Extensive Training

One of the most important problems is that neural-network-based skin cancer detection is computationally inefficient. Before it can accurately extract and interpret features from image data, the system must undergo extensive training, which takes a long time and requires very powerful hardware.

4.3.2. Variation in Lesion Sizes

Another issue is that lesions vary in size. In the 1990s, an Italian and Austrian research team collected many images of benign and malignant melanoma lesions [55]. Doctors who diagnosed these lesions were accurate 95% to 96% of the time. However, diagnosis was more challenging and error-prone when the lesions were only 1 mm or 2 mm in size [56].

4.3.3. Images of Light-Skinned People in Standard Datasets

Standard datasets mainly contain images of fair-skinned people, such as Caucasians, Europeans, and fair-skinned Australians. A neural network can be trained to take skin color into account so that it identifies skin cancer in people of color more accurately [14]. However, this is only feasible if the training data include enough images of people of color. Lesion images from enough people with both dark and light skin are needed to improve the precision of skin cancer detection systems [57].

4.3.4. Small Interclass Variation in Images of Skin Cancer

Unlike many everyday image classification tasks, skin cancer images show small interclass variation: the differences between images of melanoma and non-melanoma skin cancer lesions are much smaller than, for example, the differences between images of cats and dogs [58]. It can be difficult to tell a pimple from melanoma, and the lesions of some diseases are hardly distinguishable from one another. This lack of visible differences makes image analysis and classification extremely difficult [59].

4.3.5. Use of Various Optimization Techniques

For preprocessing and automatic skin cancer detection, the boundaries of the lesion must first be identified. Automated skin cancer diagnosis systems are expected to perform better when they use optimization techniques such as the artificial bee colony algorithm, ant colony optimization, social spider optimization, and particle swarm optimization.
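Particle swarm optimization (PSO), one of the techniques listed above, can be illustrated on a simple lesion-boundary problem. The sketch below is an illustration under stated assumptions, not a method from the reviewed studies: it uses PSO to search for the grey-level threshold that maximizes Otsu's between-class variance, treating pixels darker than the threshold as lesion. The function names and parameter values (`pso_threshold`, inertia `w=0.7`, `c1 = c2 = 1.5`, 20 particles) are hypothetical choices.

```python
import numpy as np


def between_class_variance(hist, t):
    """Otsu's criterion for splitting a 256-bin grey-level histogram at threshold t."""
    t = int(np.clip(round(float(t)), 1, 255))
    p = hist / hist.sum()
    levels = np.arange(256)
    w0, w1 = p[:t].sum(), p[t:].sum()  # class probabilities (lesion, background)
    if w0 == 0 or w1 == 0:
        return 0.0
    mu0 = (levels[:t] * p[:t]).sum() / w0  # mean grey level below t
    mu1 = (levels[t:] * p[t:]).sum() / w1  # mean grey level at or above t
    return float(w0 * w1 * (mu0 - mu1) ** 2)


def pso_threshold(hist, n_particles=20, n_iter=50, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Search for the threshold maximizing Otsu's criterion with a basic 1-D PSO."""
    rng = np.random.default_rng(seed)
    pos = rng.uniform(1, 255, n_particles)  # candidate thresholds
    vel = np.zeros(n_particles)
    pbest = pos.copy()  # best position seen by each particle
    pbest_val = np.array([between_class_variance(hist, x) for x in pos])
    gbest = pbest[pbest_val.argmax()]  # best position seen by the whole swarm
    for _ in range(n_iter):
        r1 = rng.random(n_particles)
        r2 = rng.random(n_particles)
        # Inertia + pull toward each particle's best + pull toward the swarm's best.
        vel = w * vel + c1 * r1 * (pbest - pos) + c2 * r2 * (gbest - pos)
        pos = np.clip(pos + vel, 1, 255)
        vals = np.array([between_class_variance(hist, x) for x in pos])
        improved = vals > pbest_val
        pbest[improved] = pos[improved]
        pbest_val[improved] = vals[improved]
        gbest = pbest[pbest_val.argmax()]
    return int(round(float(gbest)))


# Hypothetical bimodal histogram: dark lesion pixels near 60, skin near 190.
levels = np.arange(256)
hist = np.exp(-((levels - 60.0) ** 2) / 450.0) + np.exp(-((levels - 190.0) ** 2) / 450.0)
threshold = pso_threshold(hist)
print(threshold)
```

For a real 8-bit grey-scale image `gray`, the histogram could be obtained with `np.bincount(gray.ravel(), minlength=256)`. For a single threshold an exhaustive search over 255 values is cheaper; PSO becomes attractive when the search space is larger, for example with several thresholds or continuous contour parameters.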

4.3.6. Unbalanced Skin Cancer Datasets

Real-world skin cancer data are highly imbalanced, which makes identifying skin cancer challenging. In unbalanced datasets, the number of images for each type of skin cancer differs widely: there are many images of common types but few images of rare types, which makes it difficult to draw reliable conclusions about skin cancer from dermoscopy images [60].
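One common mitigation, not taken from the cited study, is to weight each class inversely to its frequency during training so that rare lesion types contribute as much to the loss as common ones. The sketch below uses the same formula as scikit-learn's `class_weight="balanced"` option, n_samples / (n_classes × count); the labels are hypothetical.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight for each class: n_samples / (n_classes * count_of_class)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * cnt) for cls, cnt in counts.items()}


def class_weight_totals(labels, weights):
    """Total weight per class; equal totals mean each class contributes equally to the loss."""
    totals = Counter()
    for y in labels:
        totals[y] += weights[y]
    return dict(totals)


# Hypothetical training labels: 8 common nevus images, 2 rare melanoma images.
labels = ["nevus"] * 8 + ["melanoma"] * 2
weights = inverse_frequency_weights(labels)
print(weights)                               # {'nevus': 0.625, 'melanoma': 2.5}
print(class_weight_totals(labels, weights))  # {'nevus': 5.0, 'melanoma': 5.0}
```

The weights would then multiply each sample's loss term; oversampling rare classes or augmenting them are alternative remedies.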

4.3.7. Lack of Availability of Powerful Hardware

Neural networks need substantial hardware resources, including a powerful graphics processing unit, to extract the specific regions of a lesion image that a more precise skin cancer diagnosis requires. With limited processing power, deep learning models for skin cancer detection are difficult to train.

4.3.8. Lack of Availability of Age-Wise Division of Images in Standard Datasets

Merkel cell carcinoma, basal cell carcinoma, and squamous cell carcinoma are common after age 65. The dermoscopy databases currently available follow industry standards and include pictures of children, but they are not divided by age. Until neural networks have seen enough images of people over 50, they will not be able to diagnose skin cancer in people over 50 correctly [61].

4.3.9. Analysis of Genetic and Environmental Factors

People with fair skin, light eyes, red hair, many moles, and a family history of melanoma are genetically more likely to develop melanoma. The likelihood of developing skin cancer rises dramatically when these genetic risk factors are combined with environmental risk factors, such as extended exposure to UV light. Deep learning methods could be enhanced by including these factors [62].
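A minimal sketch of how such risk factors could be combined with image features, assuming a simple late-fusion design that is not taken from [62]: a binary risk-factor vector is concatenated with a CNN image embedding and passed to a logistic output layer. The factor names, the embedding, and the weights are all hypothetical; in practice the weights would be learned jointly with the network.

```python
import numpy as np

# Hypothetical binary risk factors, following the genetic and environmental factors listed above.
RISK_FACTORS = ["fair_skin", "light_eyes", "red_hair", "many_moles",
                "family_history", "high_uv_exposure"]


def encode_risk_factors(patient):
    """Map a dict of patient attributes to a 0/1 vector in RISK_FACTORS order."""
    return np.array([1.0 if patient.get(name, False) else 0.0 for name in RISK_FACTORS])


def fuse_features(image_embedding, risk_vector):
    """Late fusion: concatenate the image embedding with the risk-factor vector."""
    return np.concatenate([image_embedding, risk_vector])


def predict_probability(fused, weights, bias):
    """Logistic output layer applied to the fused feature vector."""
    z = float(fused @ weights + bias)
    return 1.0 / (1.0 + np.exp(-z))


# Hypothetical 4-D embedding from a CNN and a patient with two risk factors.
embedding = np.array([0.2, -0.1, 0.4, 0.0])
risk = encode_risk_factors({"red_hair": True, "family_history": True})
fused = fuse_features(embedding, risk)
weights = np.array([0.5, -0.3, 0.8, 0.1, 0.2, 0.1, 0.6, 0.1, 0.7, 0.4])  # placeholder values
print(predict_probability(fused, weights, bias=-1.0))
```

Late fusion keeps the image network unchanged and simply widens its final layer, which is one reason it is a common first choice for adding tabular patient data.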

Different studies have identified future challenges in this domain, as shown in Table 9.

Table 9.

The future challenges reported in this domain.

4.4. RQ4: What Are the Machine Learning and Deep Learning Approaches for Skin Cancer Detection?

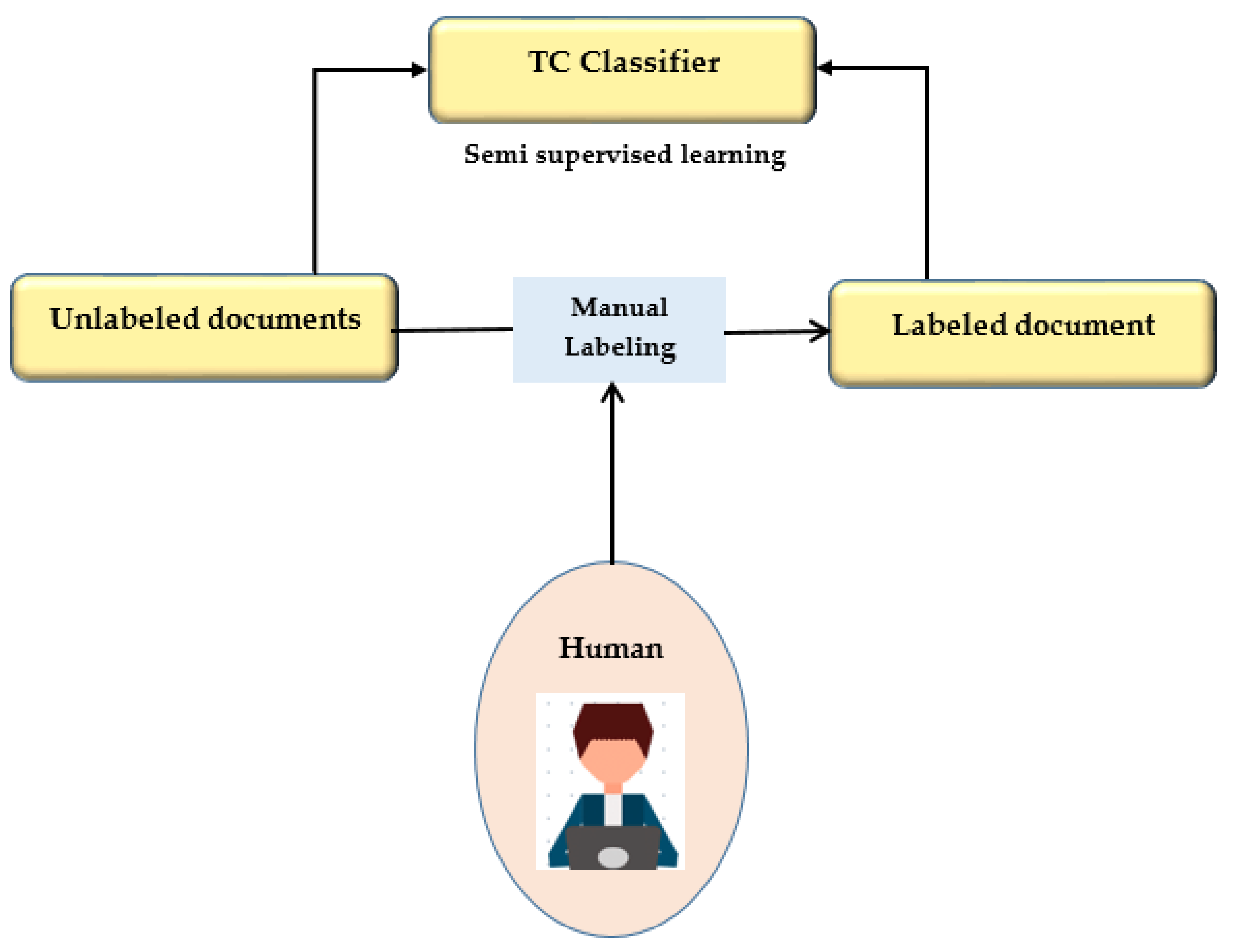

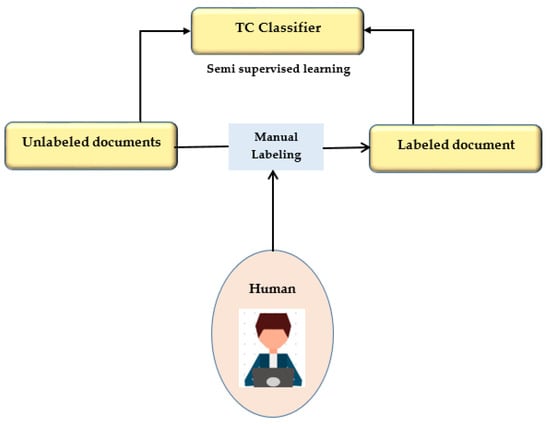

One study presents a semi-supervised learning technique that uses two iterations of preprocessing and segmentation to separate lesions from dermoscopy images automatically. During preprocessing, non-linear regression and the CLAHE (contrast-limited adaptive histogram equalization) algorithm are used to correct uneven illumination in the image [52]. K-means clustering, which groups pixels according to their values in RGB color space, is then used to increase the accuracy of lesion prediction. A novel strategy is also suggested for finding lesions that combines deep learning with local descriptor encoding; this model can quickly generate a wide range of feature values that apply to different lesions. The proposed model was evaluated on the publicly available ISBI 2016 dataset [32]. Semi-supervised learning is presented in Figure 5.

Figure 5.

Semi-supervised learning.
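The k-means step described above can be sketched as follows. This is a generic illustration under stated assumptions rather than the cited study's implementation: it clusters pixels by their RGB values into two groups, uses a deterministic farthest-point initialization, and assumes (hypothetically) that the lesion is the darker cluster. The CLAHE and regression-based illumination correction are omitted.

```python
import numpy as np


def kmeans_rgb(image, k=2, n_iter=20):
    """Cluster the pixels of an H x W x 3 RGB image by colour; return (label map, centres)."""
    pixels = image.reshape(-1, 3).astype(float)
    # Farthest-point initialization: start at the first pixel, then repeatedly
    # add the pixel farthest from all centres chosen so far.
    centers = [pixels[0]]
    for _ in range(1, k):
        d = ((pixels[:, None, :] - np.array(centers)[None, :, :]) ** 2).sum(axis=2).min(axis=1)
        centers.append(pixels[d.argmax()])
    centers = np.array(centers)
    for _ in range(n_iter):
        # Squared Euclidean distance from every pixel to every centre: shape (N, k).
        dists = ((pixels[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        new_centers = np.array([
            pixels[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels.reshape(image.shape[:2]), centers


def lesion_mask(image):
    """Boolean lesion mask, assuming the lesion is the darker of two colour clusters."""
    labels, centers = kmeans_rgb(image, k=2)
    lesion_cluster = centers.sum(axis=1).argmin()
    return labels == lesion_cluster


# Hypothetical 10 x 10 image: skin-coloured background with a dark 4 x 4 lesion.
img = np.zeros((10, 10, 3), dtype=np.uint8)
img[:, :] = (220, 180, 160)
img[3:7, 3:7] = (60, 40, 30)
print(lesion_mask(img).sum())  # 16
```

Real dermoscopy images contain hair, reflections, and gradual borders, which is why the cited pipeline applies illumination correction before clustering.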

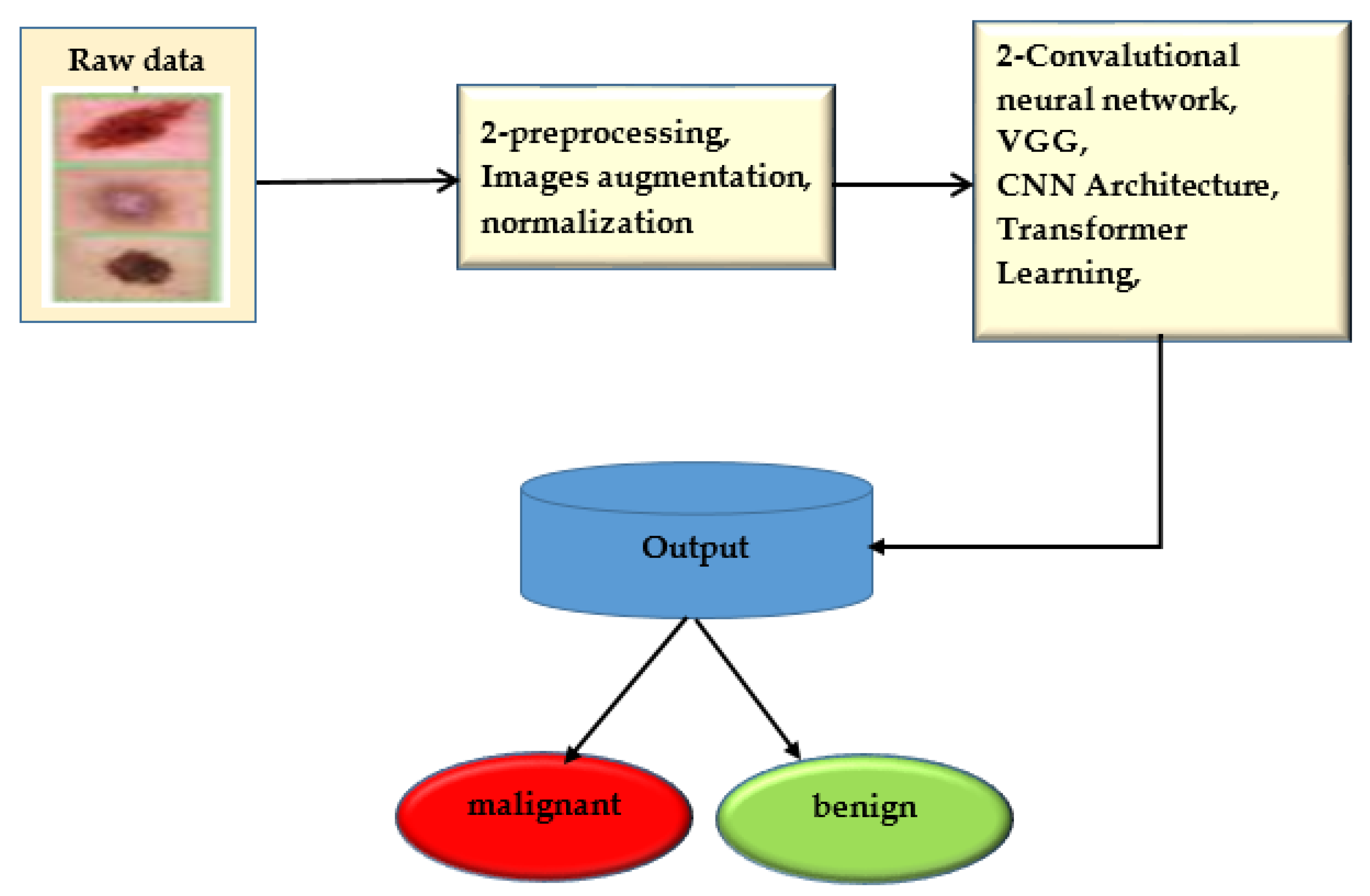

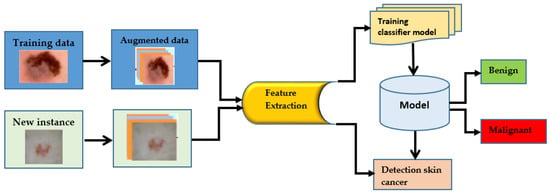

In another study, a convolutional neural network was trained on dermoscopy images to detect subtle skin changes. In clinical practice, skin cancer is often diagnosed through an initial dermoscopy screening, followed by a biopsy and histopathological analysis. The proposed framework categorizes lesions using a novel regularized binary classifier [39]. Performance is assessed by computing the area under the ROC curve (AUC) for nevus images and comparing it with the AUC for other lesion images. The convolutional neural network is presented in Figure 6.

Figure 6.

Convolutional neural network.
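The area under the curve mentioned above can be computed directly from classifier scores: it equals the probability that a randomly chosen positive image receives a higher score than a randomly chosen negative one, with ties counting one half. The labels and scores in this sketch are hypothetical, and the quadratic pairwise loop is chosen for clarity rather than speed.

```python
def roc_auc(labels, scores):
    """AUC as the probability that a positive outranks a negative (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical classifier scores: 1 = lesion of interest, 0 = nevus.
labels = [1, 1, 0, 0]
scores = [0.9, 0.4, 0.6, 0.1]
print(roc_auc(labels, scores))  # 0.75
```

An AUC of 1.0 means every positive outranks every negative, while 0.5 corresponds to random ranking.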

In manual dermoscopy analysis, diagnostic effectiveness depends on the examiner's knowledge and skill level, and automated approaches aim to make this analysis less subjective and less variable. Researchers created a deep learning model based on lesion patterns for automatic melanoma recognition and lesion segmentation from images of skin lesions, combining the predictions of several deep learning algorithms into a single evaluation [53]. Relying on a single model is problematic, just as a single doctor frequently consults other specialists to confirm a diagnosis before informing the patient. Several deep learning models were trained on the same dataset with extensive data augmentation. Convolutional neural networks including Inception-v4, ResNet-152, and DenseNet-161 were trained to distinguish melanoma from seborrheic keratosis images, and U-Net and U-Net with a VGG-16 encoder were trained to produce lesion segmentation masks.
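Combining several networks into one evaluation, as described above, is often done by soft voting: averaging the predicted class probabilities of the individual models. The sketch below assumes each model outputs an (n_images × n_classes) probability matrix; the three model outputs are hypothetical, and the cited study's exact combination rule may differ.

```python
import numpy as np


def soft_vote(prob_list, weights=None):
    """Average (n_images x n_classes) probability matrices from several models.

    Returns the averaged probabilities and the index of the winning class per image.
    Optional per-model weights let stronger models count more.
    """
    probs = np.stack(prob_list)  # shape: (n_models, n_images, n_classes)
    avg = np.average(probs, axis=0, weights=weights)
    return avg, avg.argmax(axis=1)


# Hypothetical outputs of three CNNs for two images; columns = [melanoma, seborrheic keratosis].
model_a = np.array([[0.7, 0.3], [0.4, 0.6]])
model_b = np.array([[0.6, 0.4], [0.3, 0.7]])
model_c = np.array([[0.4, 0.6], [0.5, 0.5]])
avg, predicted = soft_vote([model_a, model_b, model_c])
print(predicted)  # [0 1]
```

Averaging probabilities rather than counting hard votes keeps information about each model's confidence, which matters when the models disagree.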

Another study used a CNN model to identify skin cancer in dermoscopy images from the HAM10000 dataset; the CNN achieved excellent accuracy when trained and tested on this dataset. In a different study, the authors assessed the effectiveness of various machine learning (ML) algorithms for breast cancer diagnosis using data from the UCI Machine Learning Repository [54]. They used information gain and Relief for feature selection and then fed the selected features into algorithms including SVM, RF, RNN, and CNN to improve classification accuracy. The results showed that RNN and other deep learning algorithms identified cancer more effectively than earlier methods.
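Information gain, one of the feature-selection criteria mentioned above, measures how much knowing a feature reduces the entropy of the class label. A minimal sketch for discrete features follows; the feature values and labels are hypothetical, and Relief is not shown.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(feature_values, labels):
    """Entropy of the labels minus their expected entropy after splitting on the feature."""
    n = len(labels)
    groups = {}
    for value, label in zip(feature_values, labels):
        groups.setdefault(value, []).append(label)
    conditional = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy(labels) - conditional


# Hypothetical discretized features for four cases.
labels = ["malignant", "malignant", "benign", "benign"]
irregular_border = ["yes", "yes", "no", "no"]  # separates the classes perfectly
left_side = ["yes", "no", "yes", "no"]         # carries no class information
print(information_gain(irregular_border, labels))  # 1.0
print(information_gain(left_side, labels))         # 0.0
```

Features are then ranked by their gain and only the top-ranked ones are passed to the classifier.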

To improve classification effectiveness and the precision of melanoma detection, one study used two techniques that can also be applied independently: a CNN that identifies different aspects of the lesion, and a U-Net-based deep learning model that separates the lesion area from dermoscopy images. Melanomas are then categorized, and the malignancy of the tumor is determined, using VGG16. Results are reported for both segmented and non-segmented images; on the ISIC 2016 dataset, deep learning-based classification was more accurate when segmented images were used [55].

Another line of work finds skin lesions more accurately by integrating probabilistic graphical models into deep learning-based techniques, such as neural networks, and into feature-based algorithms [56]. The network is trained on PH2 and ISIC 2019 data, and the procedure concludes with an analysis of the network architecture and of metrics such as accuracy, specificity, sensitivity, and the Dice coefficient. Transfer learning based on a CNN design is then used to identify the different types of lesions.

A further proposed system follows a traditional approach: it extracts a small set of features from the image and then classifies the lesion with support vector machines. The authors argue that such a technique is essential because of the high incidence of skin cancer in Western countries, especially the United States, where an additional million cases of skin cancer were anticipated to be diagnosed by 2020. They note that there are 12 million people with cancer and report that the suggested method provides more precise results. A dermatologist's skilled eye is the best way to identify malignant cells and skin cancer, but such expertise is scarce. As a result, it is challenging to provide everyone with high-quality dermatological care, and many people wait until their disease has worsened before seeking it.
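The evaluation metrics named above (accuracy, sensitivity, specificity, and the Dice coefficient) can all be derived from the confusion counts of a binary prediction. The sketch below is a generic illustration that works for both per-image labels and segmentation masks of any shape; the example arrays are hypothetical.

```python
import numpy as np


def confusion_counts(y_true, y_pred):
    """Return (TP, TN, FP, FN) for binary labels or masks of any shape."""
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    tp = int(np.sum(y_true & y_pred))
    tn = int(np.sum(~y_true & ~y_pred))
    fp = int(np.sum(~y_true & y_pred))
    fn = int(np.sum(y_true & ~y_pred))
    return tp, tn, fp, fn


def evaluate(y_true, y_pred):
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    nan = float("nan")
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": tp / (tp + fn) if tp + fn else nan,  # recall on malignant / lesion pixels
        "specificity": tn / (tn + fp) if tn + fp else nan,  # recall on benign / background pixels
        # Dice = 2|A ∩ B| / (|A| + |B|); defined here as 1.0 when both masks are empty.
        "dice": 2 * tp / (2 * tp + fp + fn) if 2 * tp + fp + fn else 1.0,
    }


# Hypothetical flattened ground-truth and predicted masks (1 = lesion pixel).
truth = [1, 1, 1, 0, 0, 0]
pred = [1, 1, 0, 1, 0, 0]
print(evaluate(truth, pred))
```

For segmentation, the Dice coefficient is usually preferred over pixel accuracy because a small lesion on a large background can yield high accuracy even when the mask is poor.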
A biopsy is a common procedure for making a variety of diagnoses: a small sample of skin is removed and submitted to a laboratory for analysis. This process is time-consuming and often frustrating for patients. Computer-assisted screening can now detect skin cancer in its earliest stages. Ordinary digital cameras and video recorders can capture images of small lesions, and these images can then be converted into quantitative measurements suitable for computer processing. Poor lighting and artefacts, including skin lines, highlights, reflections, and hair, are potential problems in medical photography and make the analysis of skin lesions exceedingly challenging. Computationally, the task is divided into preprocessing, pattern detection, feature selection, feature extraction, and skin cancer detection.

Malignant melanoma, the most dangerous type of skin cancer, is on the rise. Because of artefacts, low contrast, and its resemblance to moles, scars, and similar marks, melanoma can be difficult to distinguish from other skin lesions. Hence, accurate, efficient, and effective lesion detection systems are needed for automated skin lesion identification [57]. In one such system, early skin lesion detection is performed with ABCD-rule, GLCM, and HOG feature extraction. Preprocessing improves the clarity and quality of the lesion image and removes artefacts such as hair and variations in skin color. The lesion is segmented into regions using geodesic active contours (GAC), and each region can be used separately for feature extraction. The ABCD scoring system is used to compute asymmetry, border, color, and diameter features, while HOG and GLCM descriptors capture the texture of the lesion. Machine learning classifiers, such as support vector machines, k-nearest neighbors, and naive Bayes, then use these features to assess whether a skin lesion is benign or cancerous.
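The GLCM texture features mentioned above can be sketched in a few lines. This is an illustrative implementation under stated assumptions (grey levels quantized to 8 bins, a single horizontal pixel offset, a symmetric normalized matrix), not the cited system's code; libraries such as scikit-image provide tested GLCM routines.

```python
import numpy as np


def glcm(gray, levels=8, dx=1, dy=0):
    """Symmetric, normalized grey-level co-occurrence matrix for one offset (dx, dy >= 0)."""
    # Quantize 8-bit intensities 0..255 into `levels` bins.
    q = np.clip((np.asarray(gray, dtype=float) * levels / 256).astype(int), 0, levels - 1)
    h, w = q.shape
    a = q[: h - dy, : w - dx]  # reference pixels
    b = q[dy:, dx:]            # neighbours at offset (dy, dx)
    m = np.zeros((levels, levels))
    np.add.at(m, (a.ravel(), b.ravel()), 1)  # count each co-occurring grey-level pair
    m = m + m.T                               # count both directions
    return m / m.sum()


def glcm_contrast(p):
    """High when neighbouring pixels differ strongly in grey level."""
    i, j = np.indices(p.shape)
    return float(np.sum(p * (i - j) ** 2))


def glcm_homogeneity(p):
    """High (up to 1.0) when neighbouring pixels have similar grey levels."""
    i, j = np.indices(p.shape)
    return float(np.sum(p / (1.0 + np.abs(i - j))))


# Hypothetical 8-bit patches: a smooth region and a sharply alternating one.
smooth = np.full((5, 5), 128)
stripes = np.tile([0, 255], (4, 2))
for name, patch in [("smooth", smooth), ("stripes", stripes)]:
    p = glcm(patch)
    print(name, glcm_contrast(p), glcm_homogeneity(p))
```

In practice several offsets (for example 0°, 45°, 90°, and 135°) are usually combined so that the texture features are less sensitive to lesion orientation.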
One study used 328 benign and 672 malignant skin lesion images from the International Skin Imaging Collaboration (ISIC) archive. With SVM classifiers, it reported an AUC of 0.94 and a classification accuracy of 97.8%; the test accuracy was 86.2%, with 85% confidence in the results [55].

Most skin cancer research aims to distinguish benign from malignant tumors, and melanoma subtypes have so far drawn little attention. One study examined whether dermoscopy combined with deep learning could help detect acral melanoma (AM) and other types of melanoma, using deep learning to classify skin lesions in a collection of dermoscopy images. Several image processing and data augmentation methods made AM identification easier. The authors built a seven-layer deep convolutional network, evaluated it on two different datasets, and compared it with transfer learning using AlexNet and ResNet-18. Their model achieved accuracy above 90% for benign nevi and AM while being about 97% smaller than the transfer learning models. The authors concluded that their skin cancer classification system was reliable and accurate and that dermatologists could use it to identify AM, which is essential for patient therapy. Different machine and deep learning approaches and their results are shown in Table 10.

Table 10.

Machine learning and deep learning approaches.

5. Findings

This systematic review discusses various deep learning algorithms for skin cancer detection and classification, all of which are non-invasive. To detect skin cancer, preprocessing and image segmentation are followed by feature extraction and classification. For the classification of lesion images, this review focused on ANNs, CNNs, KNNs, and RBFNs. Each algorithm has its own benefits and drawbacks, and choosing the right classification technique is the most important factor in achieving the best results. However, for image data, CNNs outperform other types of neural networks because their convolutional layers exploit the spatial structure of images, which ties them more closely to computer vision.

6. Conclusions

This paper reviews state-of-the-art investigations of the techniques proposed for the diagnosis of melanoma and identifies open problems and difficulties. Deep learning-based strategies for detecting melanoma, such as convolutional neural networks, pre-trained models, transfer learning, and hybrid methods, were also studied. Deep learning techniques were found to depend on preprocessing steps such as image resizing, cropping, and pixel-value normalization. The analysis also describes the critical limitations of existing approaches as the areas where further improvements are required. Handcrafted techniques were reported to perform better than standard deep learning methods, and many studies have used designed features for preprocessing, segmentation, and feature extraction. In medical image libraries, annotating images is considered the most critical task. Researchers have a broad range of established benchmarks for testing their work, including PH2, ISBI, DermIS, DermQuest, MED-NODE, and open-access datasets. Melanoma diagnosis has also been studied on unpublished or unlisted datasets, but results on such data are hard to compare. Various databases are available for melanoma classification; some are freely accessible, while others are not, and the number of images differs considerably between datasets. Moreover, some articles used self-collected image datasets gathered from websites. Finally, the challenges and directions for future work are discussed.
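As an illustration of the preprocessing steps mentioned above (cropping, resizing, and pixel-value normalization), the sketch below center-crops an RGB image to a square, resizes it with nearest-neighbour sampling, and normalizes each channel. The 224 × 224 output size and the ImageNet channel statistics are assumptions commonly used with pre-trained models, not values reported by the reviewed studies.

```python
import numpy as np

# Assumed ImageNet channel statistics, commonly paired with pre-trained CNNs.
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def center_crop(img, size):
    """Crop a size x size square from the centre of an H x W (x C) image."""
    h, w = img.shape[:2]
    top, left = (h - size) // 2, (w - size) // 2
    return img[top:top + size, left:left + size]


def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize using integer row and column index maps."""
    h, w = img.shape[:2]
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    return img[rows[:, None], cols[None, :]]


def normalize(img, mean=IMAGENET_MEAN, std=IMAGENET_STD):
    """Scale 8-bit pixels to [0, 1], then standardize each channel."""
    x = img.astype(np.float32) / 255.0
    return (x - np.asarray(mean, dtype=np.float32)) / np.asarray(std, dtype=np.float32)


def preprocess(img, out_size=224):
    """Center-crop to the largest square, resize, and normalize."""
    side = min(img.shape[:2])
    return normalize(resize_nearest(center_crop(img, side), out_size, out_size))


# Hypothetical 300 x 400 RGB dermoscopy image with random pixel values.
image = np.random.default_rng(0).integers(0, 256, size=(300, 400, 3)).astype(np.uint8)
x = preprocess(image)
print(x.shape, x.dtype)  # (224, 224, 3) float32
```

Nearest-neighbour sampling keeps the sketch dependency-free; image libraries typically use bilinear or area interpolation, which produces smoother downsampled images.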

Author Contributions

Conceptualization, L.P.W.G. and I.H.; methodology, F.R.; software, I.H. and A.D.; validation, A.D. and F.R.; formal analysis, A.D.; investigation, I.Z.; resources, I.Z.; writing—original draft, T.M. and S.A.H.M.; writing—review and editing, T.M., S.A.H.M. and J.A.G.; project administration, J.A.G. and L.P.W.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Nawaz, M.; Mehmood, Z.; Nazir, T.; Naqvi, R.A.; Rehman, A.; Iqbal, M.; Saba, T. Skin cancer detection from dermoscopic images using deep learning and fuzzy k-means clustering. Microsc. Res. Tech. 2021, 85, 339–351.

- Naqvi, R.A.; Hussain, D.; Loh, W.-K. Artificial Intelligence-based Semantic Segmentation of Ocular Regions for Biometrics and Healthcare Applications. Comput. Mater. Contin. 2020, 66, 715–732.

- Hassan, S.S.U.; Abbas, S.Q.; Ali, F.; Ishaq, M.; Bano, I.; Hassan, M.; Jin, H.Z.; Bungau, S.G. A Comprehensive in silico exploration of pharmacological properties, bioactivities, molecular docking, and anticancer potential of vieloplain F from Xylopia vielana Targeting B-Raf Kinase. Molecules 2022, 27, 917.

- Naeem, A.; Anees, T.; Naqvi, R.A.; Loh, W.-K. A Comprehensive Analysis of Recent Deep and Federated-Learning-Based Methodologies for Brain Tumor Diagnosis. J. Pers. Med. 2022, 12, 275.

- Hassan, S.S.U.; Abbas, S.Q.; Hassan, M.; Jin, H.-Z. Computational Exploration of Anti-Cancer Potential of Guaiane Dimers from Xylopia vielana by Targeting B-Raf Kinase Using Chemo-Informatics, Molecular Docking and MD Simulation Studies. Anti-Cancer Agents Med. Chem. 2021, 22, 731–746.

- Hassan, S.S.U.; Zhang, W.D.; Jin, H.Z.; Basha, S.H.; Priya, S.S. In-silico anti-inflammatory potential of guaiane dimers from Xylopia vielana targeting COX-2. J. Biomol. Struct. Dyn. 2022, 40, 484–498.

- Hassan, S.S.U.; Muhammad, I.; Abbas, S.Q.; Hassan, M.; Majid, M.; Jin, H.-Z.; Bungau, S. Stress Driven Discovery of Natural Products from Actinobacteria with Anti-Oxidant and Cytotoxic Activities Including Docking and ADMET Properties. Int. J. Mol. Sci. 2021, 22, 11432.

- Codella, N.C.F.; Gutman, D.; Celebi, M.E.; Helba, B.; Marchetti, M.A.; Dusza, S.W.; Kalloo, A.; Liopyris, K.; Mishra, N.; Kittler, H.; et al. Skin lesion analysis toward melanoma detection: A challenge at the 2017 international symposium on biomedical imaging (ISBI), Hosted by the international skin imaging collaboration (ISIC). In Proceedings of the IEEE 15th International Symposium on Biomedical Imaging (ISBI), Washington, DC, USA, 4–7 April 2018; pp. 168–172.

- Mendonça, T.; Ferreira, P.M.; Marques, J.S.; Marcal, A.R.; Rozeira, J. PH 2-A dermoscopic image database for research and benchmarking. In Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013.

- Bisla, D.; Choromanska, A.; Berman, R.S.; Stein, J.A.; Polsky, D. Towards automated melanoma detection with deep learning: Data purification and augmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

- Rathee, G.; Sharma, A.; Saini, H.; Kumar, R.; Iqbal, R. A hybrid framework for multimedia data processing in IoT-healthcare using blockchain technology. Multimed. Tools Appl. 2020, 79, 9711–9733.

- Codella, N.; Rotemberg, V.; Tschandl, P.; Celebi, M.E.; Dusza, S.; Gutman, D.; Helba, B.; Kalloo, A.; Liopyris, K.; Marchetti, M.; et al. Skin lesion analysis toward melanoma detection 2018: A challenge hosted by the international skin imaging collaboration (isic). arXiv 2019, arXiv:1902.03368.

- Brinker, T.J.; Hekler, A.; Utikal, J.S.; Grabe, N.; Schadendorf, D.; Klode, J.; Berking, C.; Steeb, T.; Enk, A.H.; von Kalle, C. Skin Cancer Classification Using Convolutional Neural Networks: Systematic Review. J. Med. Internet Res. 2018, 20, e11936.

- Mukherjee, S.; Adhikari, A.; Roy, M. Malignant melanoma classification using cross-platform dataset with deep learning CNN architecture. In Recent Trends in Signal and Image Processing; Springer: Berlin/Heidelberg, Germany, 2019; pp. 31–41.

- Guidelines for Performing Systematic Literature Reviews in Software Engineering. Available online: https://www.elsevier.com/__data/promis_misc/525444systematicreviewsguide.pdf (accessed on 23 December 2022).

- Hosny, K.M.; Kassem, M.A.; Foaud, M.M. Classification of skin lesions using transfer learning and augmentation with Alex-net. PLoS ONE 2019, 14, e0217293.

- Interactive Dermatology Atlas. Available online: https://resourcelibrary.stfm.org/resourcelibrary/viewdocument/interactive-dermatology-atlas (accessed on 25 December 2022).

- Hosny, K.M.; Kassem, M.A.; Foaud, M.M. Skin cancer classification using deep learning and transfer learning. In Proceedings of the 2018 9th Cairo International Biomedical Engineering Conference (CIBEC), Cairo, Egypt, 20–22 December 2018.

- Naeem, A.; Farooq, M.S.; Khelifi, A.; Abid, A. Malignant Melanoma Classification Using Deep Learning: Datasets, Performance Measurements, Challenges and Opportunities. IEEE Access 2020, 8, 110575–110597.

- Jianu, S.R.S.; Ichim, L.; Popescu, D. Automatic diagnosis of skin cancer using neural networks. In Proceedings of the 2019 11th International Symposium on Advanced Topics in Electrical Engineering (ATEE), Bucharest, Romania, 28–30 March 2019.

- Al-Masni, M.A.; Al-Antari, M.A.; Choi, M.-T.; Han, S.-M.; Kim, T.-S. Skin lesion segmentation in dermoscopy images via deep full resolution convolutional networks. Comput. Methods Programs Biomed. 2018, 162, 221–231.

- Xie, F.; Yang, J.; Liu, J.; Jiang, Z.; Zheng, Y.; Wang, Y. Skin lesion segmentation using high-resolution convolutional neural network. Comput. Methods Programs Biomed. 2019, 186, 105241.

- Warsi, F.; Khanam, R.; Kamya, S.; Suárez-Araujo, C.P. An efficient 3D color-texture feature and neural network technique for melanoma detection. Inform. Med. Unlocked 2019, 17, 100176.

- Sarkar, R.; Chatterjee, C.C.; Hazra, A. Diagnosis of melanoma from dermoscopic images using a deep depthwise separable residual convolutional network. IET Image Process. 2019, 13, 2130–2142.

- El-Khatib, H.; Popescu, D.; Ichim, L. Deep Learning–Based Methods for Automatic Diagnosis of Skin Lesions. Sensors 2020, 20, 1753.

- Adegun, A.; Viriri, S. Deep convolutional network-based framework for melanoma lesion detection and segmentation. In International Conference on Advanced Concepts for Intelligent Vision Systems; Springer: Berlin/Heidelberg, Germany, 2020.

- Soudani, A.; Barhoumi, W. An image-based segmentation recommender using crowdsourcing and transfer learning for skin lesion extraction. Expert Syst. Appl. 2018, 118, 400–410.

- Khan, M.A.; Javed, M.Y.; Sharif, M.; Saba, T.; Rehman, A. Multi-model deep neural network based features extraction and optimal selection approach for skin lesion classification. In Proceedings of the 2019 international conference on computer and information sciences (ICCIS), Sakaka, Saudi Arabia, 3–4 April 2019.

- Abbas, Q.; Celebi, M. DermoDeep-A classification of melanoma-nevus skin lesions using multi-feature fusion of visual features and deep neural network. Multimed. Tools Appl. 2019, 78, 23559–23580.

- Khan, M.A.; Sharif, M.I.; Raza, M.; Anjum, A.; Saba, T.; Shad, S.A. Skin lesion segmentation and classification: A unified framework of deep neural network features fusion and selection. Expert Syst. 2019, 39, e12497.

- Yu, Z.; Jiang, X.; Zhou, F.; Qin, J.; Ni, D.; Chen, S.; Lei, B.; Wang, T. Melanoma Recognition in Dermoscopy Images via Aggregated Deep Convolutional Features. IEEE Trans. Biomed. Eng. 2018, 66, 1006–1016.

- Jayapriya, K.; Jacob, I.J. Hybrid fully convolutional networks-based skin lesion segmentation and melanoma detection using deep feature. Int. J. Imaging Syst. Technol. 2019, 30, 348–357.

- Majtner, T.; Yildirim-Yayilgan, S.; Hardeberg, J.Y. Optimised deep learning features for improved melanoma detection. Multimed. Tools Appl. 2018, 78, 11883–11903.

- Namozov, A.; Cho, Y.I. Convolutional neural network algorithm with parameterized activation function for melanoma classification. In Proceedings of the 2018 International Conference on Information and Communication Technology Convergence (ICTC), Jeju, Republic of Korea, 17–19 October 2018.

- Pham, T.C.; Luong, C.M.; Visani, M.; Hoang, V.D. Deep CNN and data augmentation for skin lesion classification. In Asian Conference on Intelligent Information and Database Systems; Springer: Berlin/Heidelberg, Germany, 2018.

- Yang, J.; Xie, F.; Fan, H.; Jiang, Z.; Liu, J. Classification for Dermoscopy Images Using Convolutional Neural Networks Based on Region Average Pooling. IEEE Access 2018, 6, 65130–65138.

- Aldwgeri, A.; Abubacker, N. Ensemble of deep convolutional neural network for skin lesion classification in dermoscopy images. In International Visual Informatics Conference; Springer: Berlin/Heidelberg, Germany, 2019.

- Albahar, M.A. Skin Lesion Classification Using Convolutional Neural Network with Novel Regularizer. IEEE Access 2019, 7, 38306–38313.

- Dorj, U.-O.; Lee, K.-K.; Choi, J.-Y.; Lee, M. The skin cancer classification using deep convolutional neural network. Multimed. Tools Appl. 2018, 77, 9909–9924.

- Amin, J.; Sharif, A.; Gul, N.; Anjum, M.A.; Nisar, M.W.; Azam, F.; Bukhari, S.A.C. Integrated design of deep features fusion for localization and classification of skin cancer. Pattern Recognit. Lett. 2019, 131, 63–70.

- Zhang, N.; Cai, Y.-X.; Wang, Y.-Y.; Tian, Y.-T.; Wang, X.-L.; Badami, B. Skin cancer diagnosis based on optimized convolutional neural network. Artif. Intell. Med. 2020, 102, 101756.

- Fuzzell, L.N.; Perkins, R.B.; Christy, S.M.; Lake, P.W.; Vadaparampil, S.T. Cervical cancer screening in the United States: Challenges and potential solutions for underscreened groups. Prev. Med. 2021, 144, 106400.

- Adegun, A.; Viriri, S. Deep learning techniques for skin lesion analysis and melanoma cancer detection: A survey of state-of-the-art. Artif. Intell. Rev. 2020, 54, 811–841.

- Vaishnavi, K.P.; Ramadas, M.A.; Chanalya, N.; Manoj, A.; Nair, J.J. Deep learning approaches for detection of covid-19 using chest x-ray images. In Proceedings of the 2021 Fourth International Conference on Electrical, Computer and Communication Technologies (ICECCT), Erode, India, 15–17 September 2021.

- Abayomi-Alli, O.; Damaševičius, R.; Misra, S.; Maskeliunas, R.; Abayomi-Alli, A. Malignant skin melanoma detection using image augmentation by oversampling in non-linear lower-dimensional embedding manifold. Turk. J. Electr. Eng. Comput. Sci. 2021, 8, 2600–2614.

- Kadry, S.; Taniar, D.; Damaševičius, R.; Rajinikanth, V.; Lawal, I.A. Extraction of abnormal skin lesion from dermoscopy image using VGG-SegNet. In Proceedings of the 2021 Seventh International conference on Bio Signals, Images, and Instrumentation (ICBSII), Chennai, India, 25–27 March 2021.

- Duc, N.T.; Lee, Y.-M.; Park, J.H.; Lee, B. An ensemble deep learning for automatic prediction of papillary thyroid carcinoma using fine needle aspiration cytology. Expert Syst. Appl. 2021, 188, 115927.

- Humayun, M.; Sujatha, R.; Almuayqil, S.N.; Jhanjhi, N.Z. A Transfer Learning Approach with a Convolutional Neural Network for the Classification of Lung Carcinoma. Healthcare 2022, 10, 1058.

- Gouda, W.; Sama, N.U.; Al-Waakid, G.; Humayun, M.; Jhanjhi, N.Z. Detection of Skin Cancer Based on Skin Lesion Images Using Deep Learning. Healthcare 2022, 10, 1183.

- Trager, M.H.; Geskin, L.J.; Samie, F.H.; Liu, L. Biomarkers in melanoma and non-melanoma skin cancer prevention and risk stratification. Exp. Dermatol. 2020, 31, 4–12.

- Kim, G.; Lee, S.K.; Suh, D.H.; Kim, K.; No, J.H.; Kim, Y.B.; Kim, H. Clinical evaluation of a droplet digital PCR assay for detecting POLE mutations and molecular classification of endometrial cancer. J. Gynecol. Oncol. 2022, 33, e15.

- Dobre, E.-G.; Constantin, C.; Neagu, M. Skin Cancer Research Goes Digital: Looking for Biomarkers within the Droplets. J. Pers. Med. 2022, 12, 1136.

- Shoji, Y.; Bustos, M.A.; Gross, R.; Hoon, D.S.B. Recent Developments of Circulating Tumor Cell Analysis for Monitoring Cutaneous Melanoma Patients. Cancers 2022, 14, 859.

- Argenziano, G.; Soyer, H.P.; De Giorgio, V.; Piccolo, D.; Carli, P.; Delfino, M.; Ferrari, A.; Hofmann-Wellenhof, R.; Massi, D.; Mazzocchetti, G.; et al. Interactive Atlas of Dermoscopy; Edra Medical Publishing & New Media: Milan, Italy, 2000.

- Tizhoosh, H.R.; Pantanowitz, L. Artificial Intelligence and Digital Pathology: Challenges and Opportunities. J. Pathol. Inform. 2018, 9, 38.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Yu, L.; Chen, H.; Dou, Q.; Qin, J.; Heng, P.A. Automated Melanoma Recognition in Dermoscopy Images via Very Deep Residual Networks. IEEE Trans. Med. Imaging 2017, 36, 994–1004.

- Fleet, D.; Pajdla, T.; Schiele, B.; Tuytelaars, T. Microsoft coco: Common objects in context. In Computer Vision–ECCV 2014, Proceedings of the 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part V 13; Springer: Berlin/Heidelberg, Germany, 2014.

- Milton, M.A.A. Automated skin lesion classification using ensemble of deep neural networks in isic 2018: Skin lesion analysis towards melanoma detection challenge. arXiv 2019, arXiv:1901.10802.

- Garcovich, S.; Colloca, G.; Sollena, P.; Andrea, B.; Balducci, L.; Cho, W.C.; Bernabei, R.; Peris, K. Skin Cancer Epidemics in the Elderly as An Emerging Issue in Geriatric Oncology. Aging Dis. 2017, 8, 643–661.

- Sturm, R.A. Skin colour and skin cancer–MC1R, the genetic link. Melanoma Res. 2002, 12, 405–416.

- Zafar, K.; Gilani, S.O.; Waris, A.; Ahmed, A.; Jamil, M.; Khan, M.N.; Sohail Kashif, A. Skin Lesion Segmentation from Dermoscopic Images Using Convolutional Neural Network. Sensors 2020, 20, 1601.

- Sun, Y.; Thakor, N. Photoplethysmography Revisited: From Contact to Noncontact, From Point to Imaging. IEEE Trans. Biomed. Eng. 2015, 63, 463–477.

- Pham, H.N.; Koay, C.Y.; Chakraborty, T.; Gupta, S.; Tan, B.L.; Wu, H.; Vardhan, A.; Nguyen, Q.H.; Palaparthi, N.R.; Nguyen, B.P.; et al. Lesion segmentation and automated melanoma detection using deep convolutional neural networks and XGBoost. In Proceedings of the 2019 International Conference on System Science and Engineering (ICSSE), Dong Hoi, Vietnam, 20–21 July 2019.

- Nugroho, A.A.; Slamet, I.; Sugiyanto. Skins cancer identification system of HAMl0000 skin cancer dataset using convolutional neural network. AIP Conf. Proc. 2019, 2202, 020039.

- Shirke, P.P. A reviewed study of deep learning techniques for the early detection of skin cancer. J. Tianjin Univ. Sci. Technol. 2022, 55, 2022.

- Gopalakrishnan, S.; Ebenezer, A.; Vijayalakshmi, A. An erythemato squamous disease (esd) detection using dbn technique. In Proceedings of the 2022 International Conference on Communication, Computing and Internet of Things (IC3IoT), Chennai, India, 10–11 March 2022.

- Nahata, H.; Singh, S. Deep learning solutions for skin cancer detection and diagnosis. In Machine Learning with Health Care Perspective; Springer: Berlin/Heidelberg, Germany, 2020; pp. 159–182.

- Skin Lesion Detection in Dermatological Images Using Deep Learning. Available online: http://lapi.fi-p.unam.mx/wp-content/uploads/Jose-Carlos_AISIS_2019_compressed.pdf (accessed on 25 December 2022).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).