Morton Filter-Based Security Mechanism for Healthcare System in Cloud Computing

Abstract

1. Introduction

2. Motivation

2.1. Objectives of the Mechanism

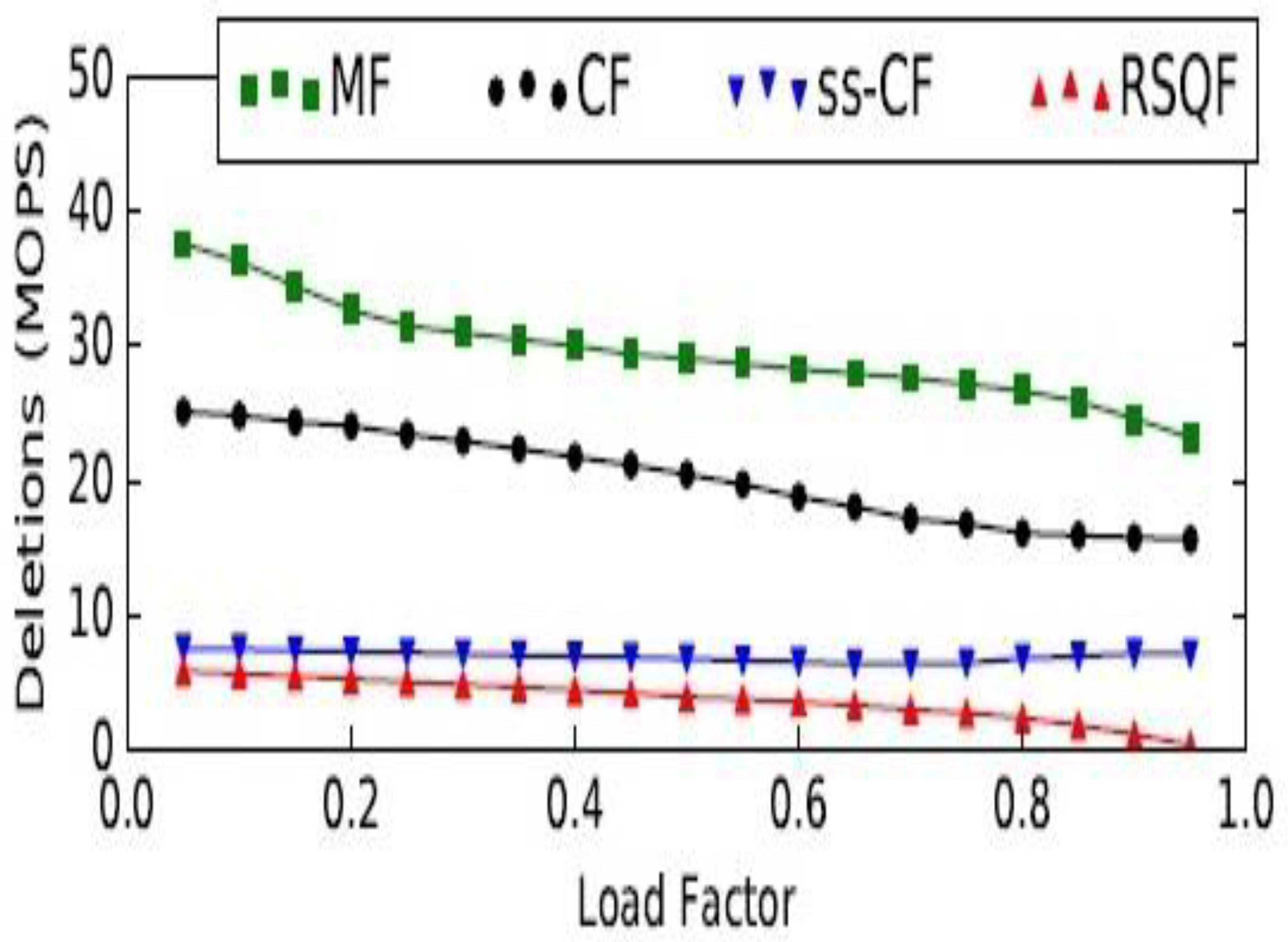

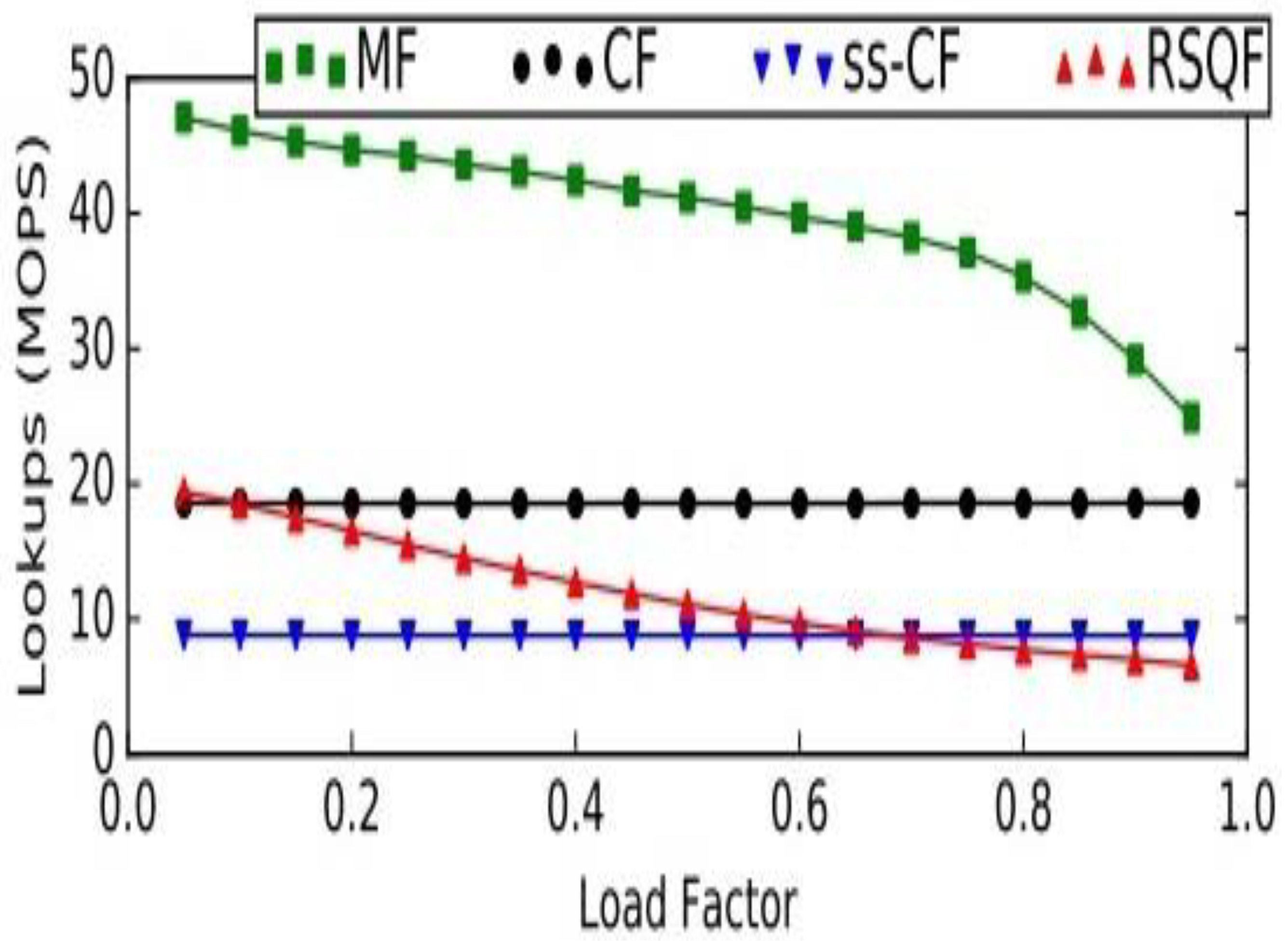

- Various secure cloud-based healthcare systems have been designed with probabilistic data structures, such as cuckoo [12], Bloom, and attribute Bloom filters. The Morton filter is the most advanced and efficient of these filters, and its higher throughput compared with other probabilistic data structures makes it the best basis for designing a cloud security mechanism.

- Designing a security mechanism that provides secure data storage in cloud-based healthcare systems.

- Employing a fragile watermark capable of detecting any tampering with data and digital artifacts.

- Designing a generic security mechanism that can be applied to various cloud-based healthcare systems.

- Evaluating the performance of the proposed security mechanism with metrics such as lookup, insertion, and deletion throughput and load factor, and analyzing and assessing its security and privacy in comparison with similar approaches.
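The objectives above rest on three filter operations (insertion, lookup, and deletion) and on the load factor. The following is a minimal sketch of those operations, assuming a plain cuckoo filter with partial-key cuckoo hashing as a stand-in. It is not a Morton filter: Morton filters, as described by Breslow and Jayasena [8], are compressed, block-based cuckoo filters, and their block layout (fingerprint storage, fullness counters, overflow tracking) is omitted here. The class name `CuckooFilter`, the SHA-256-based hashing, the random-walk eviction, and the `max_kicks` limit are illustrative assumptions; the defaults m = 8, b = 8, f = 36 simply mirror the parameter tables in this article.

```python
import hashlib
import random


class CuckooFilter:
    """Simplified cuckoo filter with partial-key cuckoo hashing.

    Hypothetical stand-in for the Morton filter: the Morton filter's compressed
    block layout (fingerprint storage, fullness counters, overflow tracking)
    is not modeled here.
    """

    def __init__(self, m=8, b=8, f=36, max_kicks=500, seed=0):
        # m must be a power of two so the XOR-based alternate index is an involution.
        assert m > 0 and m & (m - 1) == 0
        self.m, self.b, self.f, self.max_kicks = m, b, f, max_kicks
        self.buckets = [[] for _ in range(m)]
        self.count = 0
        self.rng = random.Random(seed)

    @staticmethod
    def _hash(data: bytes) -> int:
        # 64-bit hash taken from SHA-256 (an illustrative choice).
        return int.from_bytes(hashlib.sha256(data).digest()[:8], "big")

    def _fingerprint(self, item: bytes) -> int:
        fp = self._hash(b"fp:" + item) & ((1 << self.f) - 1)
        return fp or 1  # reserve 0 so every fingerprint is non-zero

    def _alt(self, i: int, fp: int) -> int:
        # The alternate bucket depends only on the current bucket and the fingerprint.
        return (i ^ self._hash(fp.to_bytes(8, "big"))) % self.m

    def _buckets_of(self, item: bytes):
        fp = self._fingerprint(item)
        i1 = self._hash(item) % self.m
        return fp, i1, self._alt(i1, fp)

    def insert(self, item: bytes) -> bool:
        fp, i1, i2 = self._buckets_of(item)
        for i in (i1, i2):
            if len(self.buckets[i]) < self.b:
                self.buckets[i].append(fp)
                self.count += 1
                return True
        # Both buckets full: relocate resident fingerprints along a random walk.
        path, i = [], self.rng.choice((i1, i2))
        for _ in range(self.max_kicks):
            j = self.rng.randrange(self.b)
            path.append((i, j))
            fp, self.buckets[i][j] = self.buckets[i][j], fp
            i = self._alt(i, fp)
            if len(self.buckets[i]) < self.b:
                self.buckets[i].append(fp)
                self.count += 1
                return True
        # Give up: undo the swaps so no stored fingerprint is lost.
        for i, j in reversed(path):
            fp, self.buckets[i][j] = self.buckets[i][j], fp
        return False

    def lookup(self, item: bytes) -> bool:
        fp, i1, i2 = self._buckets_of(item)
        return fp in self.buckets[i1] or fp in self.buckets[i2]

    def delete(self, item: bytes) -> bool:
        # Only delete items that were inserted; deleting others may cause false negatives.
        fp, i1, i2 = self._buckets_of(item)
        for i in (i1, i2):
            if fp in self.buckets[i]:
                self.buckets[i].remove(fp)
                self.count -= 1
                return True
        return False

    def load_factor(self) -> float:
        return self.count / (self.m * self.b)


if __name__ == "__main__":
    cf = CuckooFilter()
    cf.insert(b"patient-001")
    print(cf.lookup(b"patient-001"), cf.load_factor())  # True 0.015625
    cf.delete(b"patient-001")
    print(cf.lookup(b"patient-001"))  # False
```

Undoing the eviction path on failure is a design choice made for this sketch: it keeps a failed insertion from silently dropping a fingerprint that was already stored, so the filter never returns a false negative for an inserted item.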

2.2. Related Works

2.3. Major Research Gaps

3. Discussion

4. Materials and Methods

| Algorithm 1. Identify positions. |
|---|
| 1. Procedure: identify_positions of the modified data |
| 2. Start |
| 3. Input: The data class Ci in the data stream DS, the Morton filter MF, stack TK, counter i. |
| 4. MPS ← ø; initial (MF); initial (TK); |
| 5. i ← 0 |
| 6. while Ci ← DS.chkcls ( ) do |
| 7. i++; |
| 8. switch Ci.type do |
| 9. case “€” |
| 10. h ← DS (Ci) |
| 11. if MF.involve (DSi) == false |
| 12. then TK.push (h); |
| 13. MF.inst (h); |
| 14. case “£” |
| 15. if MF.lookup (DSi) == true |
| 16. then h ← TK.pop ( ); |
| 17. MF.del (h); |
| 18. case “Data” |
| 19. if detect (Ci) == true |
| 20. then Temp_DS ← peek (TK) |
| 21. if MF.involve (Temp_DS) == true |
| 22. then |
| 23. Dj ← stacktraceback ( ); |
| 24. MPS ← MPS ∪ {Dj} |
| 25. End |
| Output: The set MPS of modified positions; the modified data is detected. |
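Algorithm 1 can be sketched in Python as follows. This is a hedged interpretation, not the authors' implementation, and every helper name is hypothetical: an exact multiset (`ExactFilter`) stands in for the Morton filter MF, a Python list serves as the stack TK, the stream is assumed to be a list of `(type, value)` tokens using the “€”, “£”, and “Data” types from the algorithm, `detect` is modeled as a fragile-watermark check that compares a truncated SHA-256 tag (`watermark`) with its payload, and `stacktraceback` is approximated by recording the data position together with the segment label at the top of the stack.

```python
import hashlib
from collections import Counter

OPEN, CLOSE, DATA = "€", "£", "Data"  # token types used in Algorithm 1


def label_hash(label: str) -> str:
    # h: hash of a segment label (assumed interpretation of line 10).
    return hashlib.sha256(label.encode("utf-8")).hexdigest()


def watermark(payload: str) -> str:
    # Hypothetical fragile watermark: truncated SHA-256 digest of the payload.
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:16]


class ExactFilter:
    """Exact multiset standing in for the Morton filter MF (no false positives)."""

    def __init__(self):
        self.items = Counter()

    def insert(self, x):
        self.items[x] += 1

    def lookup(self, x) -> bool:
        return self.items[x] > 0

    def delete(self, x):
        if self.items[x] > 0:
            self.items[x] -= 1


def detect(payload: str, mark: str) -> bool:
    # detect(Ci): True when the payload no longer matches its watermark.
    return watermark(payload) != mark


def identify_positions(stream):
    """Return MPS as a set of (position, enclosing segment label) pairs."""
    mf, tk, mps = ExactFilter(), [], set()
    for i, (kind, value) in enumerate(stream, start=1):  # i counts tokens from 1
        if kind == OPEN:
            hv = label_hash(value)
            if not mf.lookup(hv):          # only track segments not already in MF
                tk.append((hv, value))
                mf.insert(hv)
        elif kind == CLOSE:
            if mf.lookup(label_hash(value)):
                hv, _ = tk.pop()           # pop the most recently opened segment
                mf.delete(hv)
        elif kind == DATA:
            payload, mark = value
            if detect(payload, mark) and tk:   # guard: ignore data outside any segment
                top_hash, segment = tk[-1]     # peek(TK)
                if mf.lookup(top_hash):
                    mps.add((i, segment))      # Dj traced back via the stack top
    return mps


if __name__ == "__main__":
    stream = [
        (OPEN, "record-A"),
        (DATA, ("bp=120/80", watermark("bp=120/80"))),
        (DATA, ("hr=72", watermark("hr=70"))),  # payload altered after watermarking
        (CLOSE, "record-A"),
        (OPEN, "record-B"),
        (DATA, ("spo2=98", watermark("spo2=98"))),
        (CLOSE, "record-B"),
    ]
    print(identify_positions(stream))  # {(3, 'record-A')}
```

In this sketch the stack supplies the enclosing segment of a tampered data element, while the filter provides the constant-time membership check on lines 11, 15, and 21; a real Morton filter would add a small false-positive probability to those checks.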

5. Functionality of Algorithm

6. Benefits of Implementing the Morton Filter

7. Results

- Efficient memory utilization is one of the main advantages of the Morton filter.

- It outperforms the cuckoo filter in insertion, deletion, and lookup throughput [60].

- Its implementation is not as complex and tedious as that of earlier filters, such as the Bloom, quotient, and cuckoo filters.

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Ko, R.; Choo, K.-K.R. The Cloud Security Ecosystem: Technical, Legal, Business and Management Issues; Syngress Is an Imprint of Elsevier: Waltham, MA, USA, 2015.

2. Park, S.; Kim, Y.; Park, G.; Na, O.; Chang, H. Research on Digital Forensic Readiness Design in a Cloud Computing-Based Smart Work Environment. Sustainability 2018, 10, 1203.

3. Kim, P.; Jo, E.; Lee, Y. An Efficient Search Algorithm for Large Encrypted Data by Homomorphic Encryption. Electronics 2021, 10, 484.

4. Fan, B.; Andersen, D.G.; Kaminsky, M.; Mitzenmacher, M.D. Cuckoo Filter. In Proceedings of the 10th ACM International on Conference on emerging Networking Experiments and Technologies, Sydney, Australia, 2–5 December 2014.

5. Kävrestad, J. Fundamentals of Digital Forensics; Springer: Berlin/Heidelberg, Germany, 2018.

6. Ray, B.R.; Chowdhury, M.; Abawajy, J. Hybrid Approach to Ensure Data Confidentiality and Tampered Data Recovery for RFID Tag. Int. J. Networked Distrib. Comput. 2013, 1, 79–88.

7. Chun, Y.; Han, K.; Hong, Y. High-Performance Multi-Stream Management for SSDs. Electronics 2021, 10, 486.

8. Breslow, A.D.; Jayasena, N.S. Morton filters: Fast, compressed sparse cuckoo filters. VLDB J. 2020, 29, 731–754.

9. Yang, H.; Kim, Y. Design and Implementation of High-Availability Architecture for IoT-Cloud Services. Sensors 2019, 19, 3276.

10. Bhatia, S.; Malhotra, J. CFRF: Cloud Forensic Readiness Framework—A Dependable Framework for Forensic Readiness in Cloud Computing Environment. Lect. Notes Data Eng. Commun. Technol. 2020, 765–775.

11. Islam, S.; Ouedraogo, M.; Kalloniatis, C.; Mouratidis, H.; Gritzalis, S. Assurance of Security and Privacy Requirements for Cloud Deployment Models. IEEE Trans. Cloud Comput. 2015, 6, 387–400.

12. Cui, J.; Zhang, J.; Zhong, H.; Xu, Y. SPACF: A Secure Privacy-Preserving Authentication Scheme for VANET with Cuckoo Filter. IEEE Trans. Veh. Technol. 2017, 66, 10283–10295.

13. Urban, T.; Tatang, D.; Holz, T.; Pohlmann, N. Towards Understanding Privacy Implications of Adware and Potentially Unwanted Programs. In Computer Security; Springer International Publishing: Cham, Switzerland, 2018; pp. 449–469.

14. Zawoad, S.; Hasan, R. I Have the Proof: Providing Proofs of Past Data Possession in Cloud Forensics. In Proceedings of the 2012 International Conference on Cyber Security, Alexandria, VA, USA, 14–16 December 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 75–82.

15. Jiang, M.; Zhao, C.; Mo, Z.; Wen, J. An improved algorithm based on Bloom filter and its application in bar code recognition and processing. EURASIP J. Image Video Process. 2018, 2018, 139.

16. Najafimehr, M.; Ahmadi, M. SLCF: Single-hash lookup cuckoo filter. J. High Speed Netw. 2019, 25, 413–424.

17. Huang, K.; Zhang, J.; Zhang, D.; Xie, G.; Salamatian, K.; Liu, A.X.; Li, W. A Multi-partitioning Approach to Building Fast and Accurate Counting Bloom Filters. In Proceedings of the 2013 IEEE 27th International Symposium on Parallel and Distributed Processing, Cambridge, MA, USA, 20–24 May 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 1159–1170.

18. Sivan, R.; Zukarnain, Z. Security and Privacy in Cloud-Based E-Health System. Symmetry 2021, 13, 742.

19. Butt, U.A.; Mehmood, M.; Shah, S.B.H.; Amin, R.; Shaukat, M.W.; Raza, S.M.; Suh, D.Y.; Piran, J. A Review of Machine Learning Algorithms for Cloud Computing Security. Electronics 2020, 9, 1379.

20. Wu, Z.-Y. A Secure and Efficient Digital-Data-Sharing System for Cloud Environments. Sensors 2019, 19, 2817.

21. Amanowicz, M.; Jankowski, D. Detection and Classification of Malicious Flows in Software-Defined Networks Using Data Mining Techniques. Sensors 2021, 21, 2972.

22. Wang, Q.; Su, M.; Zhang, M.; Li, R. Integrating Digital Technologies and Public Health to Fight COVID-19 Pandemic: Key Technologies, Applications, Challenges and Outlook of Digital Healthcare. Int. J. Environ. Res. Public Heal. 2021, 18, 6053.

23. Waseem, Q.; Alshamrani, S.; Nisar, K.; Din, W.W.; Alghamdi, A. Future Technology: Software-Defined Network (SDN) Forensic. Symmetry 2021, 13, 767.

24. Han, S.; Wu, Q.; Zhang, H.; Qin, B.; Hu, J.; Shi, X.; Liu, L.; Yin, X. Log-Based Anomaly Detection with Robust Feature Extraction and Online Learning. IEEE Trans. Inf. Forensics Secur. 2021, 16, 2300–2311.

25. Tahirkheli, A.; Shiraz, M.; Hayat, B.; Idrees, M.; Sajid, A.; Ullah, R.; Ayub, N.; Kim, K.-I. A Survey on Modern Cloud Computing Security over Smart City Networks: Threats, Vulnerabilities, Consequences, Countermeasures, and Challenges. Electronics 2021, 10, 1811.

26. Chenthara, S.; Ahmed, K.; Wang, H.; Whittaker, F. Security and Privacy-Preserving Challenges of e-Health Solutions in Cloud Computing. IEEE Access 2019, 7, 74361–74382.

27. Ogiela, L.; Ogiela, M.R.; Ko, H. Intelligent Data Management and Security in Cloud Computing. Sensors 2020, 20, 3458.

28. Seh, A.H.; Zarour, M.; Alenezi, M.; Sarkar, A.K.; Agrawal, A.; Kumar, R.; Ahmad Khan, R. Healthcare Data Breaches: Insights and Implications. Healthcare 2020, 8, 133.

29. Liu, H.; Crespo, R.G.; Martínez, O. Enhancing Privacy and Data Security across Healthcare Applications Using Blockchain and Distributed Ledger Concepts. Healthcare 2020, 8, 243.

30. Chadwick, D.W.; Fan, W.; Costantino, G.; de Lemos, R.; Di Cerbo, F.; Herwono, I.; Manea, M.; Mori, P.; Sajjad, A.; Wang, X.-S. A cloud-edge based data security architecture for sharing and analysing cyber threat information. Futur. Gener. Comput. Syst. 2020, 102, 710–722.

31. Ying, Z.; Jiang, W.; Liu, X.; Xu, S.; Deng, R. Reliable Policy Updating under Efficient Policy Hidden Fine-grained Access Control Framework for Cloud Data Sharing. IEEE Trans. Serv. Comput. 2021, 1.

32. Xie, G.; Liu, Y.; Xin, G.; Yang, Q. Blockchain-Based Cloud Data Integrity Verification Scheme with High Efficiency. Secur. Commun. Netw. 2021, 2021, 1–15.

33. Kumar, G.S.; Krishna, A.S. Data Security for Cloud Datasets with Bloom Filters on Ciphertext Policy Attribute Based Encryption. Res. Anthol. Artif. Intell. Appl. Secur. 2021, 1431–1447.

34. Cano, M.-D.; Cañavate-Sanchez, A. Preserving Data Privacy in the Internet of Medical Things Using Dual Signature ECDSA. Secur. Commun. Netw. 2020, 2020, 1–9.

35. Breidenbach, U.; Steinebach, M.; Liu, H. Privacy-Enhanced Robust Image Hashing with Bloom Filters. In Proceedings of the 15th International Conference on Availability, Reliability and Security, Dublin, Ireland, 25–28 August 2020.

36. Shi, S.; He, D.; Li, L.; Kumar, N.; Khan, M.K.; Choo, K.-K.R. Applications of blockchain in ensuring the security and privacy of electronic health record systems: A survey. Comput. Secur. 2020, 97, 101966.

37. Adamu, J.; Hamzah, R.; Rosli, M.M. Security issues and framework of electronic medical record: A review. Bull. Electr. Eng. Inform. 2020, 9, 565–572.

38. Jeong, J.; Joo, J.W.J.; Lee, Y.; Son, Y. Secure Cloud Storage Service Using Bloom Filters for the Internet of Things. IEEE Access 2019, 7, 60897–60907.

39. Patgiri, R.; Nayak, S.; Borgohain, S.K. Hunting the Pertinency of Bloom Filter in Computer Networking and Beyond: A Survey. J. Comput. Netw. Commun. 2019, 2019, 1–10.

40. Ming, Y.; Zhang, T. Efficient Privacy-Preserving Access Control Scheme in Electronic Health Records System. Sensors 2018, 18, 3520.

41. Decouchant, J.; Fernandes, M.; Völp, M.; Couto, F.M.; Esteves-Veríssimo, P. Accurate filtering of privacy-sensitive information in raw genomic data. J. Biomed. Inform. 2018, 82, 1–12.

42. Ramu, G. A secure cloud framework to share EHRs using modified CP-ABE and the attribute bloom filter. Educ. Inf. Technol. 2018, 23, 2213–2233.

43. Brown, A.P.; Ferrante, A.M.; Randall, S.M.; Boyd, J.; Semmens, J.B. Ensuring Privacy When Integrating Patient-Based Datasets: New Methods and Developments in Record Linkage. Front. Public Health 2017, 5, 34.

44. Vatsalan, D.; Christen, P.; Rahm, E. Scalable Privacy-Preserving Linking of Multiple Databases Using Counting Bloom Filters. In Proceedings of the 2016 IEEE 16th International Conference on Data Mining Workshops (ICDMW), Barcelona, Spain, 12–15 December 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 882–889.

45. Sarkar, U.; Chakrabarti, P.; Ghose, S.; De Sarkar, S. Effective use of memory in iterative deepening search. Inf. Process. Lett. 1992, 42, 47–52.

46. Roy, S.S.; Basu, A.; Das, M.; Chattopadhyay, A. FPGA implementation of an adaptive LSB replacement based digital watermarking scheme. In Proceedings of the 2018 International Symposium on Devices, Circuits and Systems (ISDCS), Howrah, India, 29–31 March 2018.

47. Kricha, Z.; Kricha, A.; Sakly, A. Accommodative extractor for QIM-based watermarking schemes. IET Image Process. 2019, 13, 89–97.

48. Li, M.; Yuan, X.; Chen, H.; Li, J. Quaternion Discrete Fourier Transform-Based Color Image Watermarking Method Using Quaternion QR Decomposition. IEEE Access 2020, 8, 72308–72315.

49. Wang, D.; Jiang, Y.; Song, H.; He, F.; Gu, M.; Sun, J. Verification of Implementations of Cryptographic Hash Functions. IEEE Access 2017, 5, 7816–7825.

50. Na, D.; Park, S. Fusion Chain: A Decentralized Lightweight Blockchain for IoT Security and Privacy. Electronics 2021, 10, 391.

51. Su, G.-D.; Chang, C.-C.; Lin, C.-C. Effective Self-Recovery and Tampering Localization Fragile Watermarking for Medical Images. IEEE Access 2020, 8, 160840–160857.

52. Naz, F.; Khan, A.; Ahmed, M.; Khan, M.I.; Din, S.; Ahmad, A.; Jeon, G. Watermarking as a service (WaaS) with anonymity. Multimedia Tools Appl. 2019, 79, 16051–16075.

53. Harfoushi, O.; Obiedat, R. Security in Cloud Computing Using Hash Algorithm: A Neural Cloud Data Security Model. Mod. Appl. Sci. 2018, 12, 143.

54. Nandhini, K.; Balasubramaniam, R. Malicious Website Detection Using Probabilistic Data Structure Bloom Filter. In Proceedings of the 2019 3rd International Conference on Computing Methodologies and Communication (ICCMC), Erode, India, 27–29 March 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 311–316.

55. Reviriego, P.; Pontarelli, S.; Maestro, J.A.; Ottavi, M. A Synergetic Use of Bloom Filters for Error Detection and Correction. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2014, 23, 584–587.

56. Ho, T.; Cho, S.; Oh, S. Parallel multiple pattern matching schemes based on cuckoo filter for deep packet inspection on graphics processing units. IET Inf. Secur. 2018, 12, 381–388.

57. Li, G.; Wang, J.; Liang, J.; Yue, C. Application of Sliding Nest Window Control Chart in Data Stream Anomaly Detection. Symmetry 2018, 10, 113.

58. Zhang, T.; Zhang, T.; Ji, X.; Xu, W. Cuckoo-RPL: Cuckoo Filter Based RPL for Defending AMI Network from Blackhole Attacks. In Proceedings of the 2019 Chinese Control Conference (CCC), Guangzhou, China, 27–30 July 2019.

59. Bhattacharjee, M.; Dhar, S.K.; Subramanian, S. Recent Advances in Biostatistics: False Discovery Rates, Survival Analysis, and Related Topics; World Scientific Pub. Co.: Singapore, 2011.

60. Li, D.; Chen, P. Optimized Hash Lookup for Bloom Filter Based Packet Routing. In Proceedings of the 2013 16th International Conference on Network-Based Information Systems, Gwangju, Korea, 4–6 September 2013.

61. Roussev, V.; McCulley, S. Forensic analysis of cloud-native artifacts. Digit. Investig. 2016, 16, S104–S113.

| Reference | Data Structure/Technology Used | Cloud-Based Healthcare System | Contribution |
|---|---|---|---|
| Ying et al. (2021) [31] | Cuckoo Filter | No | Proposed a security-enhanced attribute cuckoo filter to hide the access policy and designed a ciphertext-policy attribute-based encryption scheme |
| Xie et al. (2021) [32] | Cuckoo Filter | No | Proposed a lattice signature method with a cuckoo filter that reduces the computational overhead |
| Kumar et al. (2021) [33] | Bloom Filter | Yes | Explained a technique to protect cloud datasets with Bloom filter-based ciphertext-policy attribute-based encryption |
| Cano et al. (2020) [34] | Elliptic Curve Cryptography | Yes | Presented a solution for security and data-privacy preservation in the Internet of Medical Things and the cloud |
| Breidenbach et al. (2020) [35] | Bloom Filter | No | Discussed a privacy-preserving concept using Bloom filters and cryptographic functions |
| Shi et al. (2020) [36] | Blockchain | Yes | Surveyed blockchain-based approaches to electronic health records and proposed various healthcare applications of blockchain |
| Adamu et al. (2020) [37] | Laravel Security Features | Yes | Proposed a framework for applying security and privacy to electronic medical records |
| Breslow et al. (2019) [8] | Morton Filter | No | Designed the Morton filter and demonstrated that it improves on the cuckoo filter |
| Jeong et al. (2019) [38] | Bloom Filter | No | Proposed a secure cloud storage service based on a Bloom filter and the provable data possession model |
| Patgiri et al. (2019) [39] | Bloom Filter | No | Explored the adaptation of Bloom filters to network security, packet filtering, and IP address lookup |
| Ming et al. (2018) [40] | Cuckoo Filter | Yes | Designed an attribute-based signcryption (ABSC) scheme for privacy preservation in electronic health records |
| Decouchant et al. (2018) [41] | Bloom Filter | No | Presented a novel Bloom filter-based filtering method that can be applied to reads of any length |
| Ramu (2018) [42] | Attribute Bloom Filter | Yes | Proposed a secure cloud mechanism for sharing health records among users, using ciphertext-policy attribute-based encryption and an attribute Bloom filter |
| Brown et al. (2017) [43] | Bloom Filter | Yes | Discussed the privacy-preserving record linkage (PPRL) model with Bloom filters to overcome data-integration and privacy problems |
| Vatsalan et al. (2016) [44] | Counting Bloom Filter | Yes | Proposed a novel method for multi-party privacy-preserving record linkage using counting Bloom filters |

| Parameter | α (Load Factor) | m (Number of Buckets) | b (Entries per Bucket) | f (Fingerprint Length, bits) |
|---|---|---|---|---|
| Value | 0.8 | 8 | 8 | 36 |

| Parameter | α (Load Factor) | m (Number of Buckets) | b (Entries per Bucket) | f (Fingerprint Length, bits) |
|---|---|---|---|---|
| Value | 0.9 | 8 | 8 | 36 |
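The two parameter tables can be turned into concrete capacity figures. The helper below (hypothetical name `filter_stats`) computes the number of slots m·b, the number of items held at load factor α, the fingerprint storage in bits, the bits spent per stored item (f/α), and the standard cuckoo-filter upper bound 2b/2^f on the false-positive rate. Two assumptions apply: per-block metadata is ignored, and the cuckoo-filter bound is used as an approximation, since the Morton filter's block layout changes the exact figure.

```python
def filter_stats(alpha: float, m: int, b: int, f: int) -> dict:
    """Capacity figures for a cuckoo-style filter with m buckets of b f-bit slots."""
    slots = m * b                        # total fingerprint slots
    return {
        "slots": slots,
        "items": int(alpha * slots),     # items stored at load factor alpha
        "fingerprint_bits": slots * f,   # ignores per-block metadata (assumption)
        "bits_per_item": f / alpha,      # storage cost per stored item
        # Cuckoo-filter bound: a lookup compares against at most 2b fingerprints,
        # each matching by chance with probability 2^-f.
        "fpr_bound": 2 * b / 2 ** f,
    }


if __name__ == "__main__":
    for alpha in (0.8, 0.9):
        print(alpha, filter_stats(alpha, m=8, b=8, f=36))
```

For both configurations this gives 64 slots and 2,304 fingerprint bits; α = 0.8 holds 51 items at 45 bits per item, α = 0.9 holds 57 items at 40 bits per item, and the false-positive bound is 2^-32 ≈ 2.3 × 10^-10.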

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bhatia, S.; Malhotra, J. Morton Filter-Based Security Mechanism for Healthcare System in Cloud Computing. Healthcare 2021, 9, 1551. https://doi.org/10.3390/healthcare9111551
